Integration of EHR and ECG Data for Predicting Paroxysmal Atrial Fibrillation in Stroke Patients

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design and Data Collection

2.2. Inclusion/Exclusion

2.3. Data Preprocessing

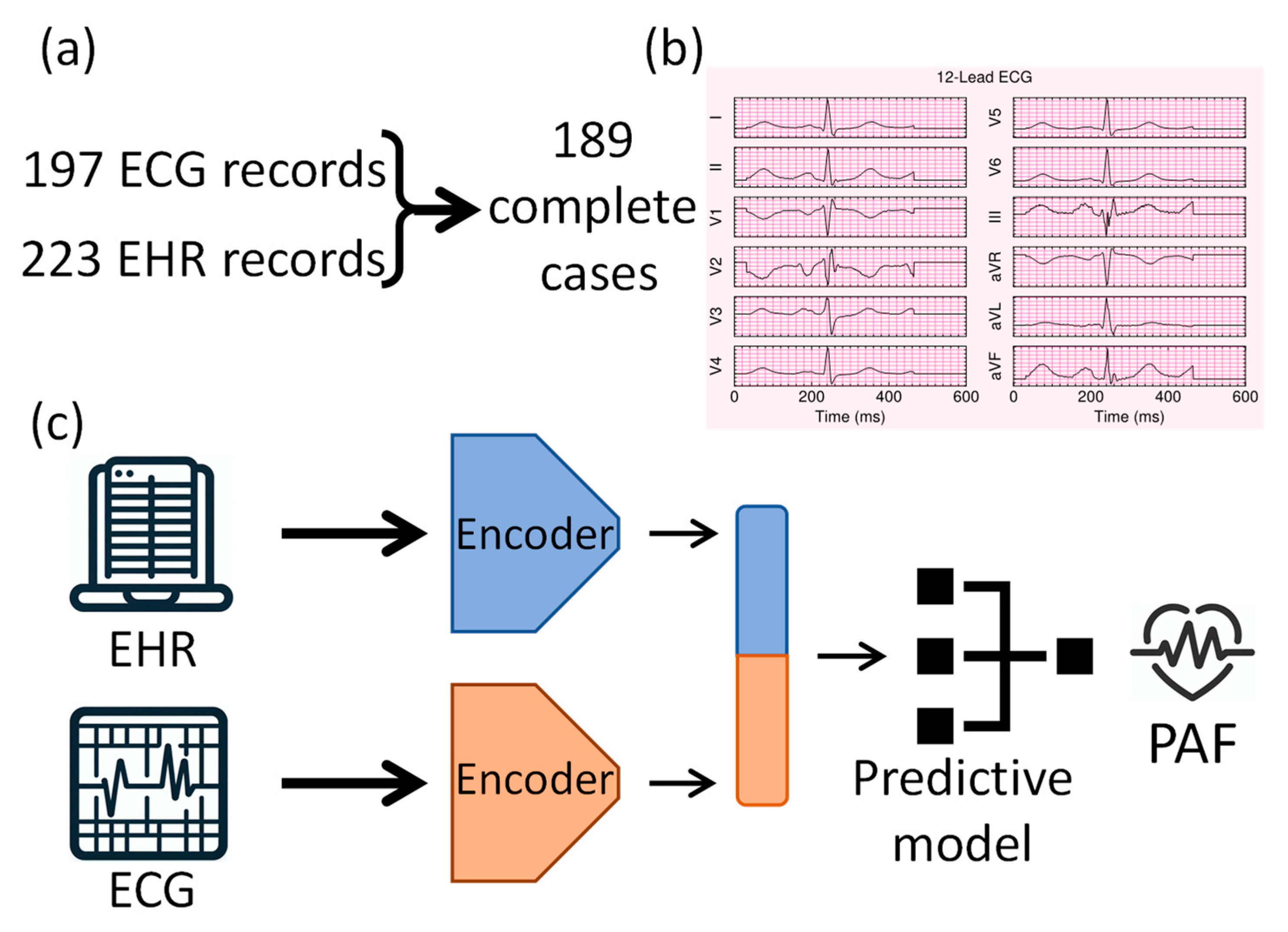

2.4. Deep Learning Model Architecture

2.5. Training and Validation

2.6. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AUROC | Area under the receiver operating characteristic curve |
| CNN | Convolutional neural network |
| ECG | Electrocardiogram |
| EHR | Electronic health record |
| PAF | Paroxysmal atrial fibrillation |

Appendix A. Additional Evaluation and Interpretability

Appendix B

| Item | Evidence in Manuscript |

|---|---|

| Title/Abstract identify model type and purpose | Title and abstract specify multimodal deep learning to predict PAF in stroke patients; metrics reported. |

| Background and objectives (intended use/clinical context) | Rationale for combining ECG+EHR; objective to develop a multimodal model and examine relative contributions. |

| Source of data and study design/setting | Single-center retrospective cohort; Penn State COM; accrual Jan 2017–May 2023; cardiologist and stroke neurologist validated data. |

| Participants (eligibility, selection, numbers) | Cryptogenic stroke patients; flow from 223 reviewed → 197 with ECG → 189 final. |

| Outcome (definition, timing, how assessed, blinding) | Outcome is PAF; data validated by specialists. |

| Predictors (definitions, timing, measurement) | 47 EHR variables (demographics, labs, comorbidities); 12-lead ECG; preprocessing described. |

| Sample size (rationale) | n = 189; 49 events (26%). |

| Handling of missing data | No imputation; chart-adjudicated binary comorbidities coded 0. |

| Bias, drift, data splits (leakage prevention) | 5-fold CV; repeated with different seeds. |

| Modeling details (algorithms, hyperparameters, class imbalance) | Hybrid CNN + attention for ECG; MLP for EHR; concatenation; tuned heads 4–8, learning rate 1 × 10−4–1 × 10−3; augmentation probability 0.1; compression dimensions varied. |

| Internal validation | 5-fold cross-validation; 10 repeats; test-time augmentation. |

| Performance measures (discrimination, calibration, CIs) | Accuracy, AUROC, sensitivity, specificity, precision, F1; mean ± SD and p-values; AUROC/AUPRC reported/best values. |

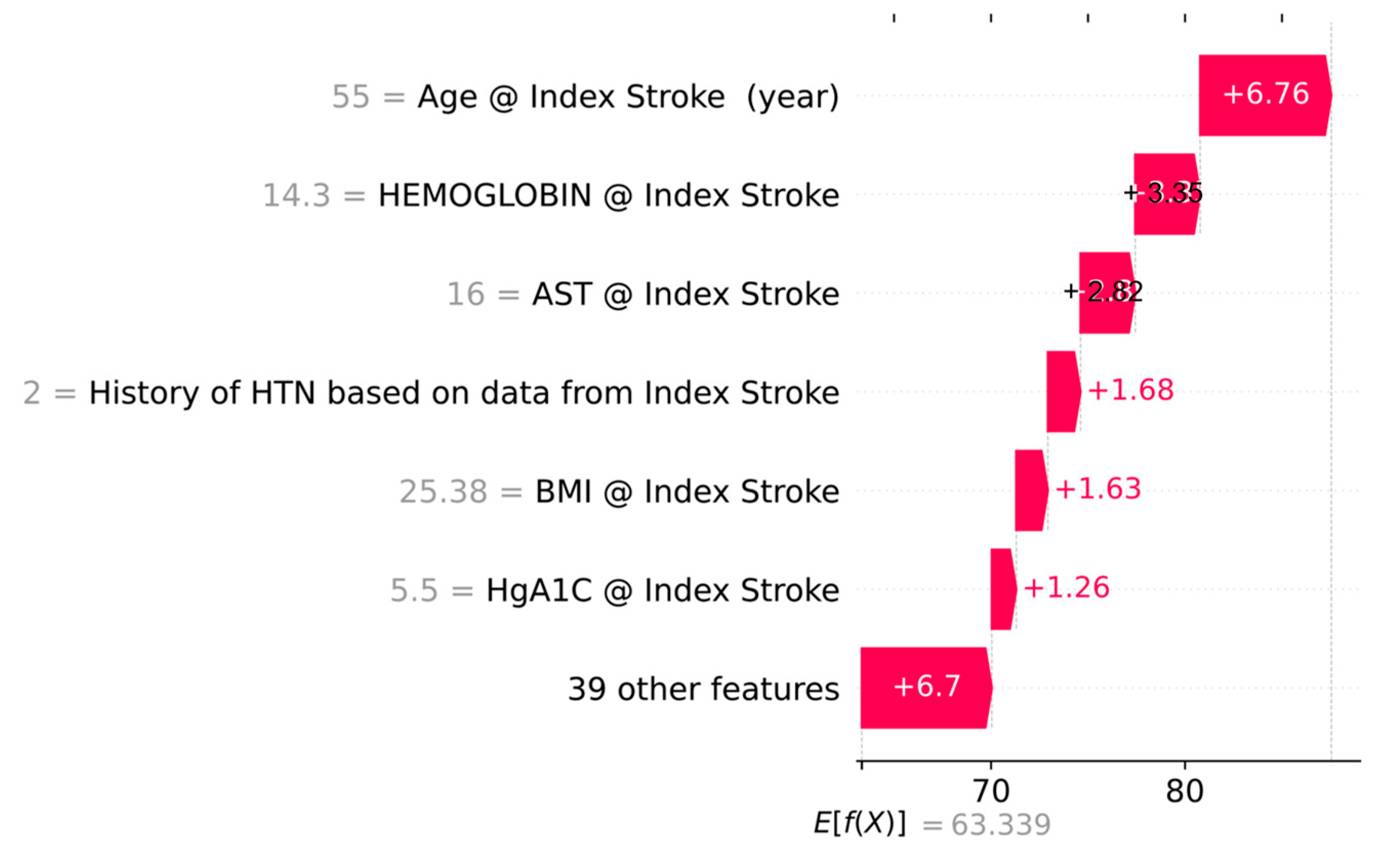

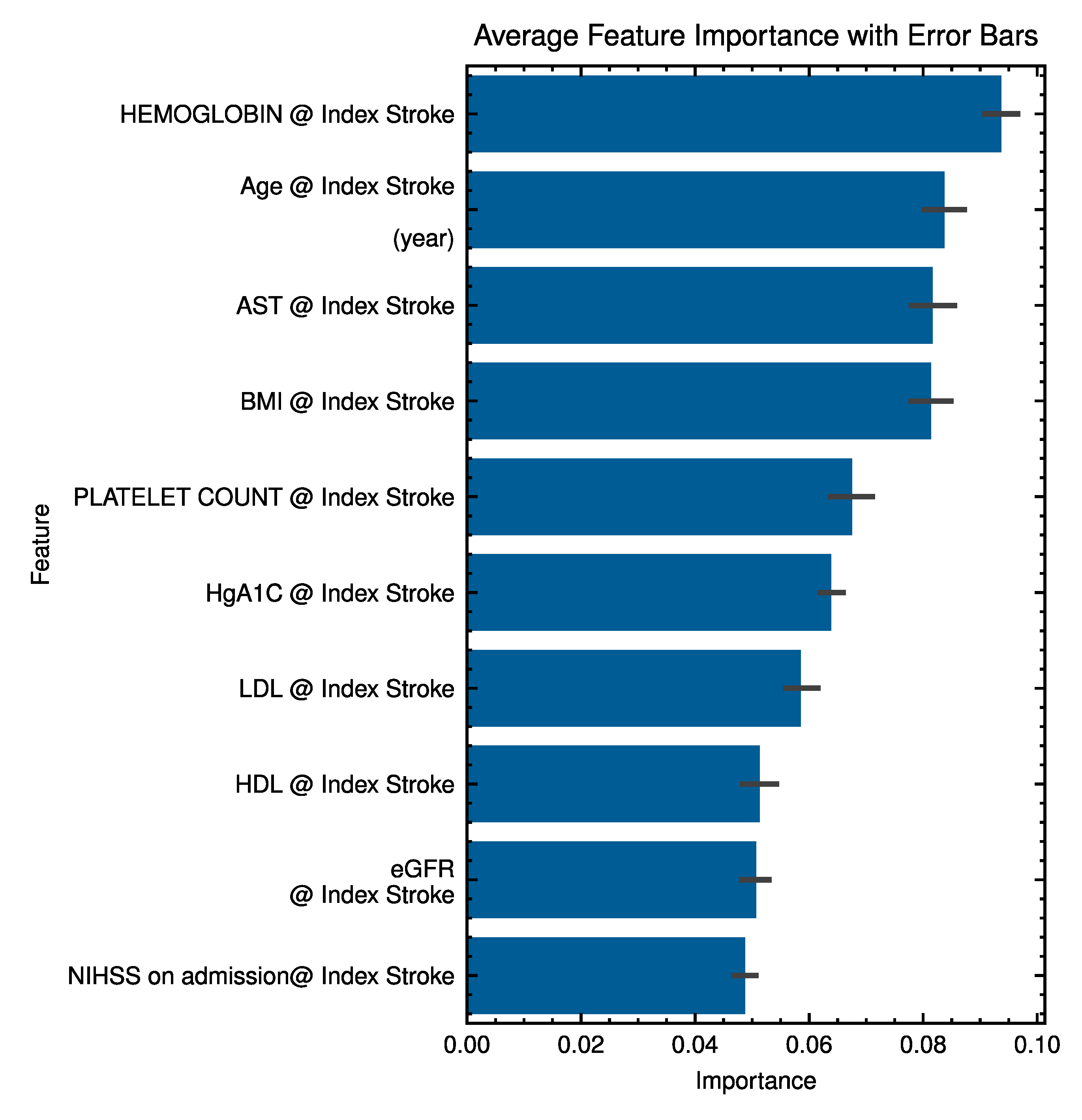

| Explainability | Global EHR feature importance (Random Forest); figures. |

| Subgroups/fairness | Appendix D Table A3 |

| Model presentation (final model, thresholds, access) | All codes are shared at https://github.com/TheDecodeLab/AFib-multimodal.git. (accessed on 4 September 2025) |

| Clinical utility | Not assessed (pilot; limited events). |

| Reproducibility (software, versions) | Python 3.11, TensorFlow 2.20. |

| Data availability | Data not publicly available due to privacy. |
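
The threshold-based measures named in the checklist (accuracy, sensitivity, specificity, precision, F1) all follow from the four confusion-matrix counts. The block below is a minimal sketch of that arithmetic, not the authors' evaluation code. It assumes a decision threshold of 0.5, which the source does not state; the function names are hypothetical, and the example labels and probabilities are made up for illustration and are not study data.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # PAF cases predicted as PAF
    fp: int  # non-PAF cases predicted as PAF
    tn: int  # non-PAF cases predicted as non-PAF
    fn: int  # PAF cases predicted as non-PAF


def confusion(y_true, y_prob, threshold=0.5):
    """Count outcomes after thresholding predicted probabilities.

    The 0.5 default is an assumption, not a value taken from the manuscript.
    """
    tp = fp = tn = fn = 0
    for truth, prob in zip(y_true, y_prob):
        pred = 1 if prob >= threshold else 0
        if truth == 1 and pred == 1:
            tp += 1
        elif truth == 0 and pred == 1:
            fp += 1
        elif truth == 0 and pred == 0:
            tn += 1
        else:
            fn += 1
    return Confusion(tp, fp, tn, fn)


def _ratio(num, den):
    """Divide, returning 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def threshold_metrics(c):
    """Derive the checklist's threshold metrics from confusion counts."""
    sensitivity = _ratio(c.tp, c.tp + c.fn)
    specificity = _ratio(c.tn, c.tn + c.fp)
    precision = _ratio(c.tp, c.tp + c.fp)
    accuracy = _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn)
    f1 = _ratio(2 * precision * sensitivity, precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


# Illustrative inputs only (not study data): 3 positives, 5 negatives.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_prob = [0.9, 0.6, 0.2, 0.7, 0.1, 0.3, 0.4, 0.2]
counts = confusion(y_true, y_prob)
metrics = threshold_metrics(counts)
print(counts, metrics)
```

With roughly one event per four patients, accuracy alone can look favorable for a model that rarely predicts PAF, which is why sensitivity and F1 are reported alongside it.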

Appendix C

| Category | Group | n | Accuracy (%) | SD (%) | p-Value |
|---|---|---|---|---|---|
| Age | Old (≥52) | 178 | 0.66 | 0.27 | 0.6413 |
| | Young (<52) | 11 | 0.71 | 0.24 | |
| Sex | Male | 80 | 0.69 | 0.28 | 0.0997 |
| | Female | 109 | 0.65 | 0.26 | |
| Race | White | 156 | 0.67 | 0.27 | 0.6539 |
| | Black | 17 | 0.63 | 0.27 | |
| | Asian | 1 | 0.22 | - | |
| | Others | 15 | 0.62 | 0.27 | |
| Ethnicity | Hispanic | 3 | 0.62 | 0.33 | 0.98 |
| | Non-Hispanic | 186 | 0.67 | 0.27 | |

Appendix D

| Component | Setting (Value) | Notes |

|---|---|---|

| Architecture | CNN encoder → Multi-Head Attention × 1 → latent compressors → concat → classifier | Transformer-style attention stack |

| Attention heads | 8 | — |

| ECG representation | Denoised + band-pass filtered 12-lead ECG (600 × 12) | Final choice used for the best model |

| EHR feature set | 47 structured predictors at index encounter | Demographics, comorbidities, labs, echo, stroke features |

| Fusion latent (ECG) | 32 | |

| Fusion latent (EHR) | 16 | ~33% EHR: 67% ECG contribution |

| Augmentation (train) | 0.1 | None used in the final model |

| Test-time augmentation | 5 replicates (averaged) | — |

| Optimizer/Loss | Adam, binary cross-entropy | — |

| Initial learning rate | 1 × 10−4 | Fixed across folds |

| Epochs/Batch size | 100/32 | Early stopping as implemented |

| Class imbalance handling | Minority oversampling in training folds | Evaluation on the original class mix |

| Cross-validation | 5-fold × 2 repeats | Model selection by mean CV AUROC |

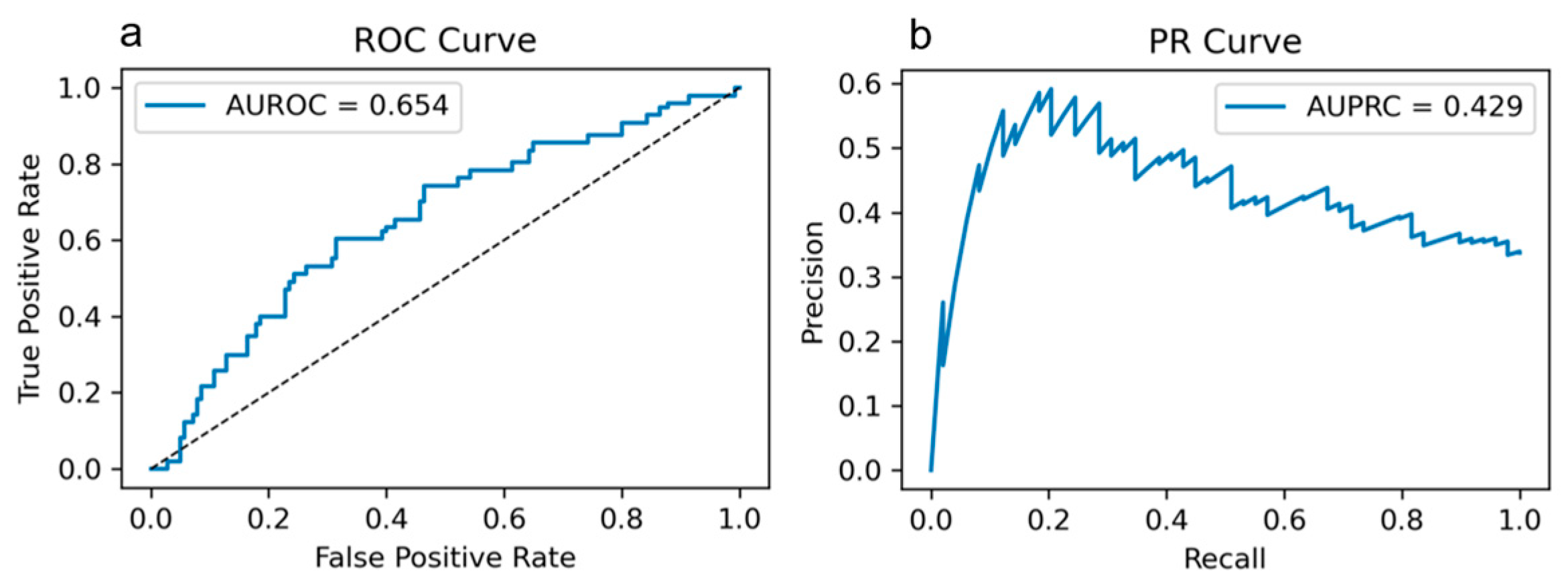

| Performance (CV mean) | AUROC = 0.654 ± 0.071; Accuracy = 0.751 ± 0.092; Specificity = 0.859 ± 0.105; F1 = 0.487 ± 0.106 | Threshold metrics from CV; full ROC/PR in Figure A1 |
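
The table lists minority oversampling in the training folds, evaluation on the original class mix, and 5-fold × 2 repeated cross-validation. The block below sketches one way these three settings fit together without leaking duplicated patients into a test fold. It is an illustration under stated assumptions rather than the authors' pipeline: the oversampling is taken to be random duplication with replacement until the classes balance, the stratified splitter is a simple round-robin deal, and all function names and the seed are hypothetical. Only the class counts (49 PAF events among 189 patients) come from the manuscript.

```python
import random
from collections import Counter


def stratified_folds(labels, k, rng):
    """Split indices into k folds, dealing each class out round-robin."""
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            folds[pos % k].append(i)
    return folds


def oversample_minority(train_idx, labels, rng):
    """Duplicate minority-class indices at random until classes balance.

    Random duplication is an assumption; the source only says
    "minority oversampling in training folds".
    """
    counts = Counter(labels[i] for i in train_idx)
    minority = min(counts, key=counts.get)
    majority_n = max(counts.values())
    pool = [i for i in train_idx if labels[i] == minority]
    extra = [rng.choice(pool) for _ in range(majority_n - counts[minority])]
    return train_idx + extra


def repeated_cv(labels, k=5, repeats=2, seed=0):
    """Yield (repeat, fold, train_idx, test_idx) for each split.

    Oversampling is applied to the training indices only, so every test
    fold keeps the original class mix.
    """
    for r in range(repeats):
        rng = random.Random(seed + r)  # a different shuffle per repeat
        folds = stratified_folds(labels, k, rng)
        for f, test_idx in enumerate(folds):
            train_idx = [i for g, fold in enumerate(folds) if g != f for i in fold]
            yield r, f, oversample_minority(train_idx, labels, rng), test_idx


labels = [1] * 49 + [0] * 140  # class counts reported in the manuscript
splits = list(repeated_cv(labels))
for r, f, train_idx, test_idx in splits[:2]:
    train_pos = sum(labels[i] for i in train_idx)
    test_pos = sum(labels[i] for i in test_idx)
    print(f"repeat {r} fold {f}: train {train_pos}/{len(train_idx)} PAF, "
          f"test {test_pos}/{len(test_idx)} PAF")
```

Splitting before oversampling is the design point: if duplicates were created first, copies of the same patient could land on both sides of a split and inflate the cross-validated metrics.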

Appendix E

| Comparison with 33% EHR | AUROC | Accuracy | Sensitivity | Specificity | F1 Score |
|---|---|---|---|---|---|
| 0% | ≤0.05 | ≤0.05 | ≤0.05 | ≤0.05 | ≤0.05 |
| 11% | ≤0.05 | ≤0.05 | ≤0.05 | ≤0.05 | ≤0.05 |
| 20% | ≤0.05 | ≤0.05 | ≤0.05 | ≤0.05 | ≤0.05 |
| 50% | ≤0.05 | ≤0.05 | ≤0.05 | ≤0.05 | ≤0.05 |
| 67% | ≤0.05 | 0.33 | ≤0.05 | ≤0.05 | ≤0.05 |
| 80% | ≤0.05 | ≤0.05 | ≤0.05 | ≤0.05 | ≤0.05 |
| 100% | ≤0.05 | ≤0.05 | ≤0.05 | ≤0.05 | ≤0.05 |
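
The row labels above are EHR shares of the fused representation, and the hyperparameter table gives the reference configuration as a 16-dimensional EHR latent alongside a 32-dimensional ECG latent, described as roughly 33% EHR to 67% ECG. The short sketch below shows the arithmetic that reproduces that figure, assuming the share is simply the EHR latent width divided by the total concatenated width. That definition is an inference from the reported numbers, and the function name is hypothetical. The swapped pair is a hypothetical example only; the source does not list the latent sizes used for the other rows.

```python
def ehr_share(ehr_dim, ecg_dim):
    """Fraction of the concatenated latent vector that comes from the EHR branch.

    Assumes share = ehr_dim / (ehr_dim + ecg_dim); this definition is
    inferred from the reported 16/32 split, not stated in the source.
    """
    total = ehr_dim + ecg_dim
    if total <= 0:
        raise ValueError("at least one branch must have a positive latent size")
    return ehr_dim / total


# Reported configuration: EHR latent 16, ECG latent 32.
reported = ehr_share(16, 32)
print(f"reported configuration: {reported:.0%} EHR")

# Hypothetical example: swapping the two widths would give an EHR-heavy model.
swapped = ehr_share(32, 16)
print(f"swapped (hypothetical): {swapped:.0%} EHR")
```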

| Variable | Total (n = 189) | No PAF (n = 140) | PAF (n = 49) | p-Value |
|---|---|---|---|---|
| Age (years) | 71.4 ± 11.4 | 70.0 ± 11.6 | 75.4 ± 9.6 | 0.004 |
| Sex, n (%) | | | | 0.452 |
| Male | 80 (42.3) | 62 (44.3) | 18 (36.7) | |
| Female | 109 (57.7) | 78 (55.7) | 31 (63.3) | |
| Race, n (%) | | | | 0.205 |
| White | 156 (82.5) | 116 (82.9) | 40 (81.6) | |
| Black | 17 (9.0) | 13 (9.3) | 4 (8.2) | |
| Asian | 1 (0.5) | 0 (0.0) | 1 (2.0) | |
| Others | 14 (7.4) | 11 (7.9) | 3 (6.1) | |
| Ethnicity, n (%) | | | | 0.999 |
| Hispanic | 3 (1.6) | 2 (1.4) | 1 (2.0) | |
| Non-Hispanic | 186 (98.4) | 138 (98.6) | 48 (98.0) | |
| Monitoring (months) | 19.4 ± 13.9 | 18.3 ± 13.6 | 22.6 ± 14.5 | 0.064 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vafaei Sadr, A.; Mareboina, M.; Orabueze, D.; Sarkar, N.; Hejazian, S.S.; Vemuri, A.; Shah, R.; Maheshwari, A.; Zand, R.; Abedi, V. Integration of EHR and ECG Data for Predicting Paroxysmal Atrial Fibrillation in Stroke Patients. Bioengineering 2025, 12, 961. https://doi.org/10.3390/bioengineering12090961
