The Evaluation of a Deep Learning Approach to Automatic Segmentation of Teeth and Shade Guides for Tooth Shade Matching Using the SAM2 Algorithm

Abstract

1. Introduction

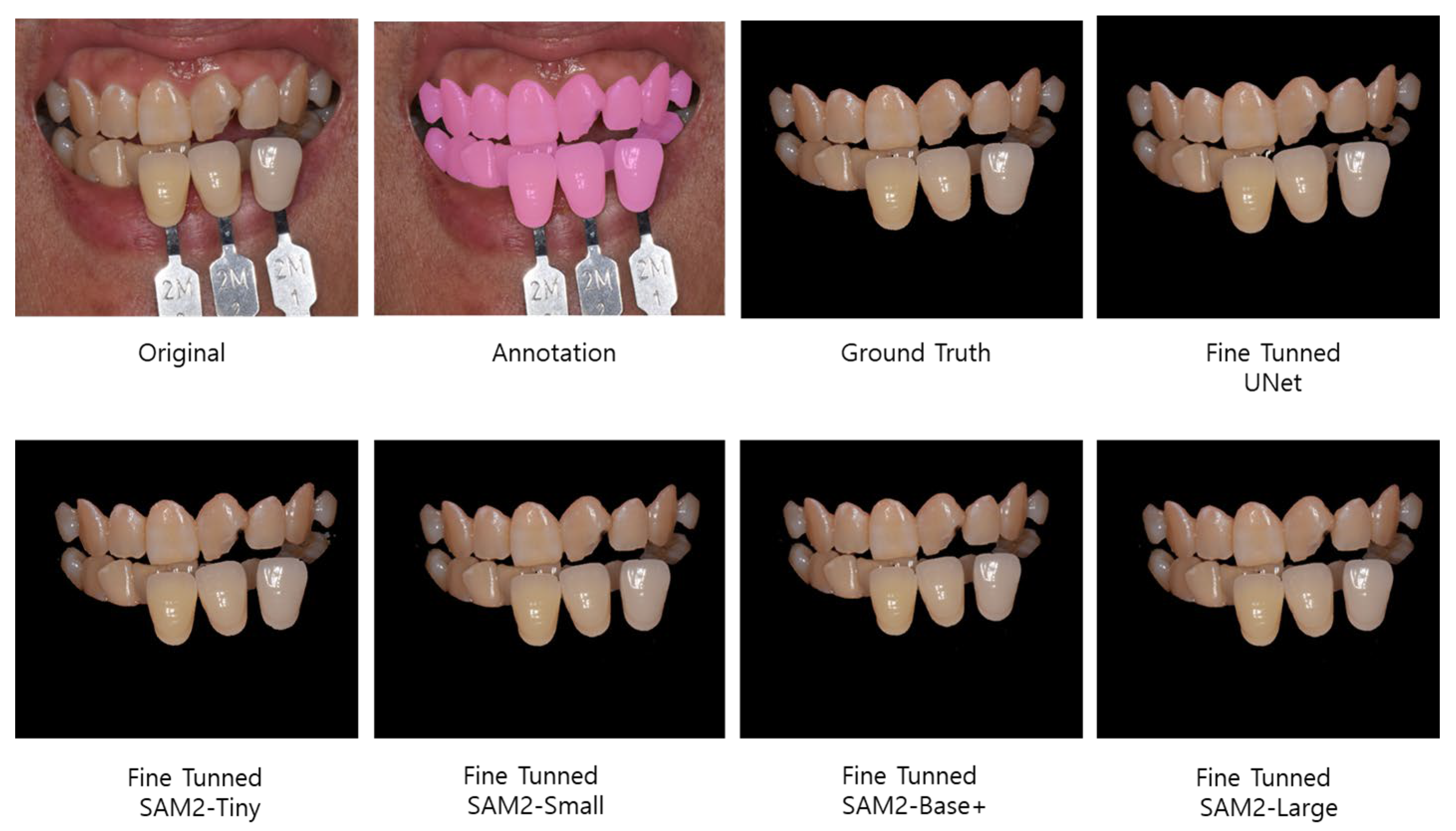

2. Materials and Methods

2.1. The Experimental Design

2.2. Data Collection

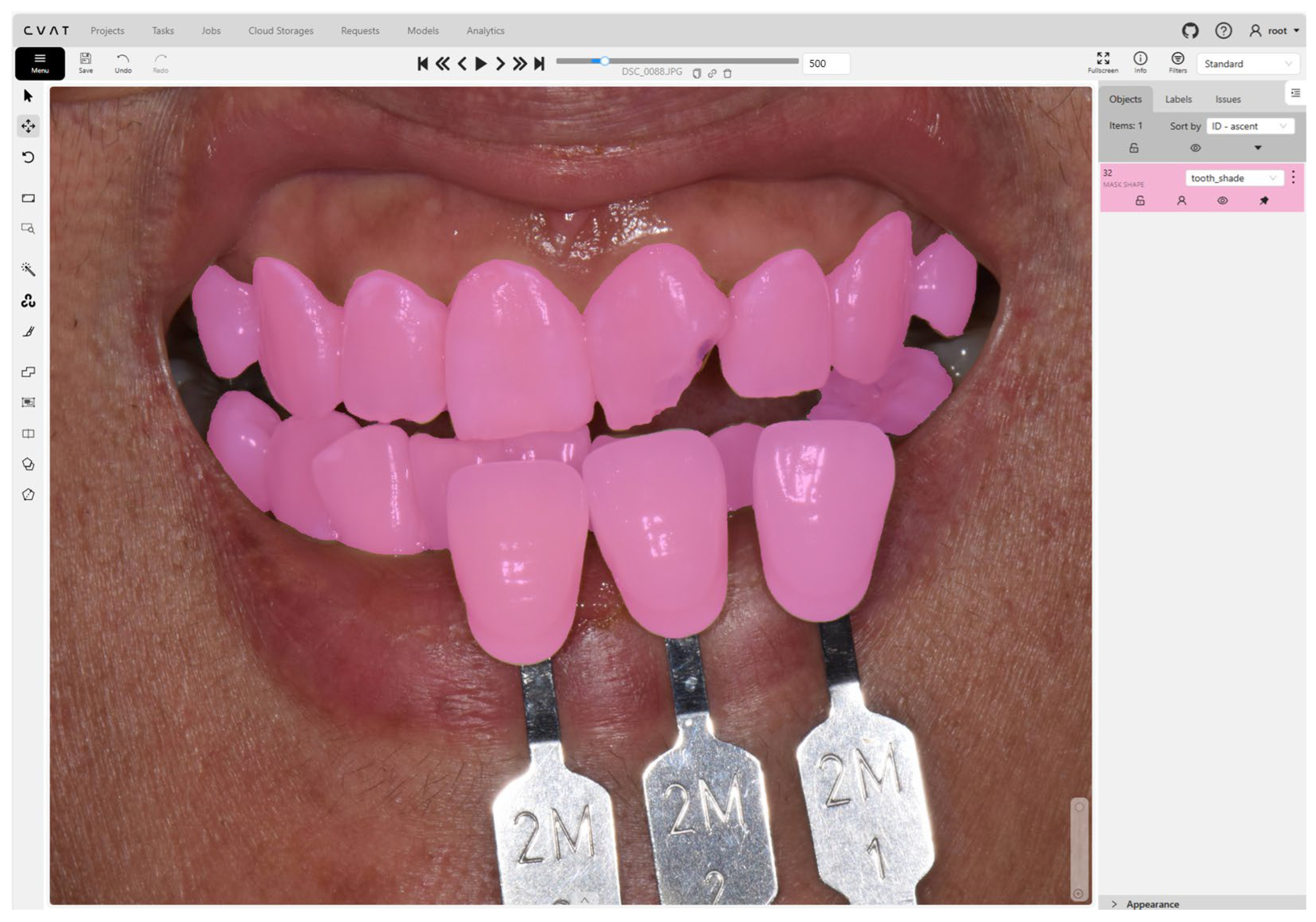

2.3. Data Annotation

2.4. Model Training and Fine-Tuning

2.5. Evaluation

2.5.1. Segmentation Performance
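Segmentation performance is commonly scored with overlap metrics such as the Dice similarity coefficient and intersection over union (IoU). This section does not list the exact metric set used in the study, so the sketch below is illustrative only. It assumes binary masks flattened into equal-length sequences of 0/1 values. The helper names are hypothetical, and returning 1.0 when both masks are empty is a convention chosen here.

```python
from typing import Sequence


def confusion_counts(pred: Sequence[int], truth: Sequence[int]) -> tuple[int, int, int]:
    """Return (TP, FP, FN) for two flattened binary masks of equal length."""
    if len(pred) != len(truth):
        raise ValueError("masks must contain the same number of pixels")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if t and not p)
    return tp, fp, fn


def dice(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice similarity coefficient: 2|A ∩ B| / (|A| + |B|)."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom  # both masks empty -> perfect agreement


def iou(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Intersection over union (Jaccard index): |A ∩ B| / |A ∪ B|."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = tp + fp + fn
    return 1.0 if denom == 0 else tp / denom


# Example: one true-positive pixel and one false-positive pixel.
pred = [1, 1, 0, 0]
truth = [1, 0, 0, 0]
print(round(dice(pred, truth), 4), iou(pred, truth))  # 0.6667 0.5
```

For any pair of masks the two scores are related by Dice = 2·IoU / (1 + IoU). They therefore rank models identically, and reporting both mainly eases comparison with other studies.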

2.5.2. Color Uniformity and Perceptual Difference Evaluation
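Perceptual color differences in dentistry are usually expressed with the CIEDE2000 formula (Luo et al.; Sharma et al.). The function below is a sketch, not the study's own implementation. It follows the step-by-step formulation in Sharma et al.'s implementation notes, uses parametric factors kL = kC = kH = 1 by default, and assumes both colors are already in CIELAB. The function name is hypothetical.

```python
import math


def ciede2000(lab1, lab2, kL=1.0, kC=1.0, kH=1.0):
    """CIEDE2000 color difference between two CIELAB triples (L*, a*, b*)."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2

    # Step 1: rescale a* by the chroma-dependent factor G, then compute C' and h'.
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2.0
    g = 0.5 * (1.0 - math.sqrt(c_bar**7 / (c_bar**7 + 25.0**7)))
    a1p, a2p = (1.0 + g) * a1, (1.0 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360.0 if c1p else 0.0  # hue is 0 for neutral colors
    h2p = math.degrees(math.atan2(b2, a2p)) % 360.0 if c2p else 0.0

    # Step 2: lightness, chroma, and hue differences.
    d_l = L2 - L1
    d_c = c2p - c1p
    if c1p * c2p == 0.0:
        d_h_angle = 0.0
    else:
        d_h_angle = h2p - h1p
        if d_h_angle > 180.0:
            d_h_angle -= 360.0
        elif d_h_angle < -180.0:
            d_h_angle += 360.0
    d_h = 2.0 * math.sqrt(c1p * c2p) * math.sin(math.radians(d_h_angle / 2.0))

    # Step 3: mean values, weighting functions, and the rotation term.
    l_bar = (L1 + L2) / 2.0
    c_bar_p = (c1p + c2p) / 2.0
    if c1p * c2p == 0.0:
        h_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180.0:
        h_bar = (h1p + h2p) / 2.0
    elif h1p + h2p < 360.0:
        h_bar = (h1p + h2p + 360.0) / 2.0
    else:
        h_bar = (h1p + h2p - 360.0) / 2.0

    t = (1.0
         - 0.17 * math.cos(math.radians(h_bar - 30.0))
         + 0.24 * math.cos(math.radians(2.0 * h_bar))
         + 0.32 * math.cos(math.radians(3.0 * h_bar + 6.0))
         - 0.20 * math.cos(math.radians(4.0 * h_bar - 63.0)))
    d_theta = 30.0 * math.exp(-(((h_bar - 275.0) / 25.0) ** 2))
    r_c = 2.0 * math.sqrt(c_bar_p**7 / (c_bar_p**7 + 25.0**7))
    s_l = 1.0 + 0.015 * (l_bar - 50.0) ** 2 / math.sqrt(20.0 + (l_bar - 50.0) ** 2)
    s_c = 1.0 + 0.045 * c_bar_p
    s_h = 1.0 + 0.015 * c_bar_p * t
    r_t = -math.sin(math.radians(2.0 * d_theta)) * r_c

    dl = d_l / (kL * s_l)
    dc = d_c / (kC * s_c)
    dh = d_h / (kH * s_h)
    return math.sqrt(dl**2 + dc**2 + dh**2 + r_t * dc * dh)


print(round(ciede2000((50.0, 0.0, 0.0), (50.0, -1.0, 2.0)), 4))  # 2.3669
```

Sharma et al. also publish test pairs with reference ΔE00 values. Checking a new implementation against those pairs is the usual safeguard, because the hue-averaging branches are easy to get wrong.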

3. Results

3.1. Segmentation Performance Evaluation

3.2. Color Uniformity and Perceptual Difference Evaluation

3.2.1. Color Uniformity Evaluation
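Color uniformity within a segmented tooth or shade-tab region can be summarized by the spread of CIELAB coordinates across that region's pixels. The sketch below shows one way to compute this. It assumes 8-bit sRGB pixels and a D65 reference white. Real clinical photographs would normally need camera color calibration first, and this section does not state the exact uniformity statistic used in the study. Both function names are hypothetical.

```python
import statistics

# Assumed reference white: CIE D65, normalized so that Yn = 1.
D65_WHITE = (0.95047, 1.0, 1.08883)


def srgb_to_lab(rgb):
    """Convert an 8-bit sRGB triple to CIELAB (D65), assuming standard sRGB encoding."""
    def to_linear(c):
        c /= 255.0  # undo the sRGB transfer curve on a 0..1 scale
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (to_linear(float(v)) for v in rgb)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b

    def f(t):
        delta = 6.0 / 29.0
        return t ** (1.0 / 3.0) if t > delta**3 else t / (3.0 * delta**2) + 4.0 / 29.0

    fx, fy, fz = (f(v / w) for v, w in zip((x, y, z), D65_WHITE))
    return (116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz))


def region_uniformity(pixels):
    """Mean and population standard deviation of L*, a*, b* over one segmented region."""
    labs = [srgb_to_lab(p) for p in pixels]
    if not labs:
        raise ValueError("region contains no pixels")
    channels = list(zip(*labs))  # regroup as (all L*, all a*, all b*)
    means = tuple(statistics.fmean(ch) for ch in channels)
    stds = tuple(statistics.pstdev(ch) for ch in channels)
    return means, stds


# Hypothetical pixel values sampled from one segmented region.
means, stds = region_uniformity([(230, 215, 190), (228, 214, 188), (232, 217, 193)])
```

A smaller standard deviation indicates a more uniform region. A mask that leaks onto gingiva or background would typically inflate these values.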

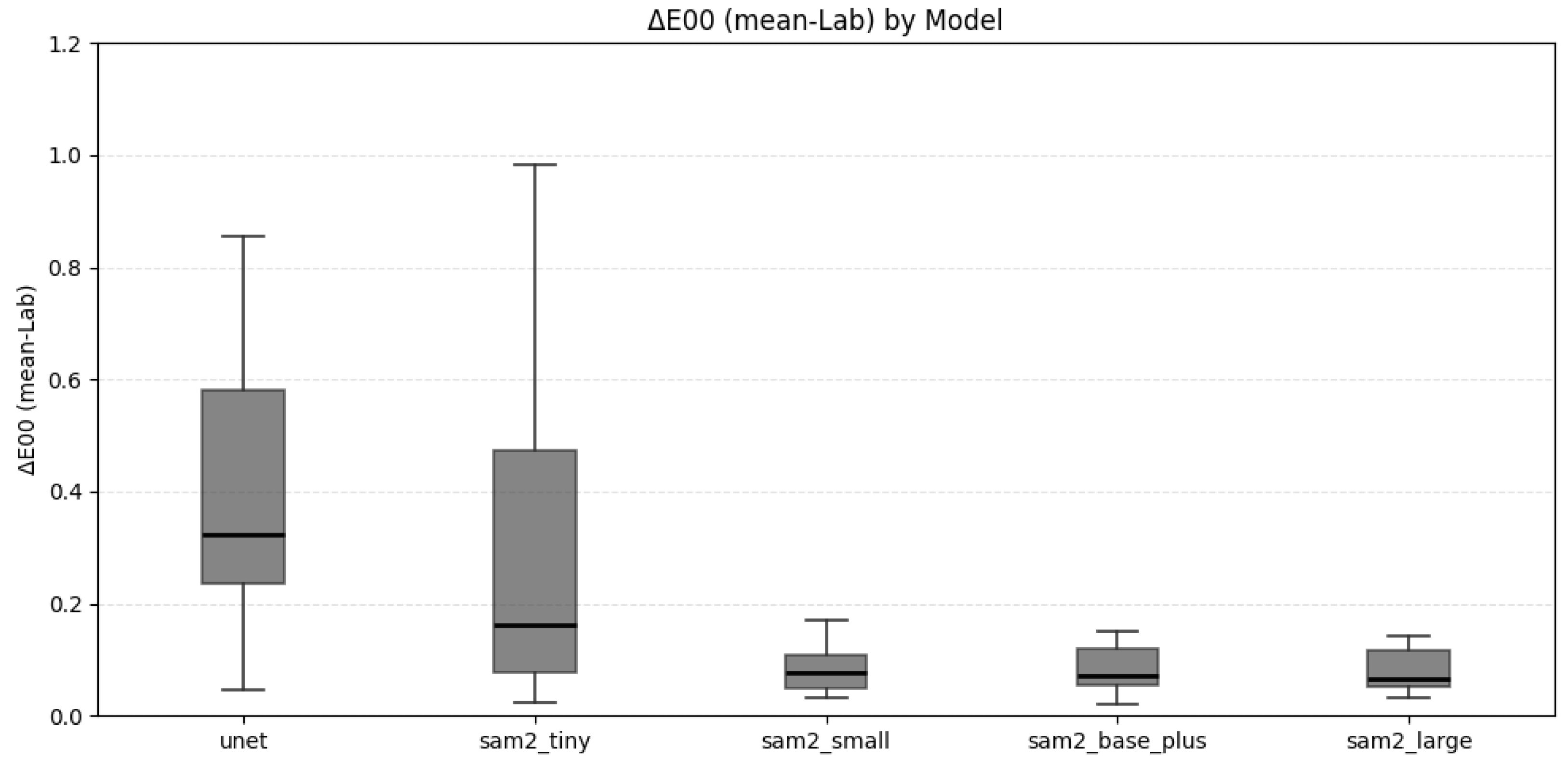

3.2.2. Perceptual Color Difference Evaluation

4. Discussion

4.1. The Segmentation Performance

4.2. Color Uniformity and Implications for Shade Matching
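When CIEDE2000 values are used for shade matching, they are commonly interpreted against the dental color-difference thresholds of Paravina et al.: a 50:50% perceptibility threshold of ΔE00 = 0.8 and a 50:50% acceptability threshold of ΔE00 = 1.8. The sketch below assumes ΔE00 values have already been computed between a tooth region and each candidate shade tab. Assigning a value exactly at a threshold to the lower category is a choice made here. The function names, shade labels, and numbers in the example are hypothetical.

```python
# 50:50% CIEDE2000 thresholds for dentistry reported by Paravina et al. (2015).
PERCEPTIBILITY_THRESHOLD = 0.8
ACCEPTABILITY_THRESHOLD = 1.8


def classify_delta_e00(delta_e: float) -> str:
    """Map a ΔE00 value to a perceptibility/acceptability category."""
    if delta_e < 0:
        raise ValueError("a color difference cannot be negative")
    if delta_e <= PERCEPTIBILITY_THRESHOLD:
        return "below perceptibility threshold"
    if delta_e <= ACCEPTABILITY_THRESHOLD:
        return "perceptible but acceptable"
    return "unacceptable"


def best_shade(delta_e_by_shade: dict) -> tuple:
    """Return (shade, ΔE00, category) for the shade tab closest to the tooth."""
    if not delta_e_by_shade:
        raise ValueError("no shade tabs to compare")
    shade, delta_e = min(delta_e_by_shade.items(), key=lambda item: item[1])
    return shade, delta_e, classify_delta_e00(delta_e)


# Hypothetical ΔE00 values between one tooth region and three shade tabs.
print(best_shade({"A1": 2.3, "A2": 1.1, "A3": 1.9}))
# ('A2', 1.1, 'perceptible but acceptable')
```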

4.3. Clinical and Technical Implications

4.4. Limitations and Future Work

4.5. The Computational Cost, Inference Time, and Deployment Feasibility
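Inference time is typically reported as wall-clock latency per image, measured after a few warm-up runs. The harness below is a generic sketch under that assumption. Here `fn` stands for any hypothetical model call, such as a wrapper around a SAM2 or U-Net forward pass, and `sample` stands for one preprocessed image. PyTorch launches CUDA kernels asynchronously, so a GPU wrapper should call `torch.cuda.synchronize()` before returning. Otherwise the timer measures little more than kernel launch.

```python
import statistics
import time
from typing import Any, Callable


def measure_latency(fn: Callable[[Any], Any], sample: Any, warmup: int = 3, runs: int = 20) -> dict:
    """Wall-clock latency of fn(sample) in milliseconds, excluding warm-up calls."""
    if runs < 2:
        raise ValueError("need at least two timed runs for percentile estimates")
    for _ in range(warmup):
        fn(sample)  # untimed: lets lazy initialization and caching settle
    timings_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(sample)
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return {
        "mean_ms": statistics.fmean(timings_ms),
        "median_ms": statistics.median(timings_ms),
        # 19 cut points for n=20; the last one is the 95th percentile.
        "p95_ms": statistics.quantiles(timings_ms, n=20, method="inclusive")[-1],
    }


stats = measure_latency(lambda n: sum(range(n)), 100_000)
print(sorted(stats))  # ['mean_ms', 'median_ms', 'p95_ms']
```

Reporting the median and 95th percentile alongside the mean makes occasional slow frames visible. This matters more for chairside or mobile deployment than average throughput does.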

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Clary, J.A.; Ontiveros, J.C.; Cron, S.G.; Paravina, R.D. Influence of light source, polarization, education, and training on shade matching quality. J. Prosthet. Dent. 2016, 116, 91–97.

- Igiel, C.; Lehmann, K.M.; Ghinea, R.; Weyhrauch, M.; Hangx, Y.; Scheller, H.; Paravina, R.D. Reliability of visual and instrumental color matching. J. Esthet. Restor. Dent. 2017, 29, 303–308.

- Rodrigues, S.; Shetty, S.R.; Prithviraj, D. An evaluation of shade differences between natural anterior teeth in different age groups and gender using commercially available shade guides. J. Indian Prosthodont. Soc. 2012, 12, 222–230.

- Pohlen, B.; Hawlina, M.; Šober, K.; Kopač, I. Tooth Shade-Matching Ability Between Groups of Students with Different Color Knowledge. Int. J. Prosthodont. 2016, 29, 487–492.

- Jouhar, R. Comparison of Shade Matching ability among Dental students under different lighting conditions: A cross-sectional study. Int. J. Environ. Res. Public Health 2022, 19, 11892.

- Gurrea, J.; Gurrea, M.; Bruguera, A.; Sampaio, C.S.; Janal, M.; Bonfante, E.; Coelho, P.G.; Hirata, R. Evaluation of Dental Shade Guide Variability Using Cross-Polarized Photography. Int. J. Periodontics Restor. Dent. 2016, 36, e76.

- Özat, P.; Tuncel, İ.; Eroğlu, E. Repeatability and reliability of human eye in visual shade selection. J. Oral Rehabil. 2013, 40, 958–964.

- AlSaleh, S.; Labban, M.; AlHariri, M.; Tashkandi, E. Evaluation of self shade matching ability of dental students using visual and instrumental means. J. Dent. 2012, 40, e82–e87.

- Geetha, A.S.; Hussain, M. From SAM to SAM 2: Exploring Improvements in Meta’s Segment Anything Model. arXiv 2024, arXiv:2408.06305.

- Ravi, N.; Gabeur, V.; Hu, Y.-T.; Hu, R.; Ryali, C.; Ma, T.; Khedr, H.; Rädle, R.; Rolland, C.; Gustafson, L. SAM 2: Segment anything in images and videos. arXiv 2024, arXiv:2408.00714.

- Jiaxing, Z.; Hao, T. SAM2 for Image and Video Segmentation: A Comprehensive Survey. arXiv 2025, arXiv:2503.12781.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.-Y. Segment anything. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 4015–4026.

- Ma, J.; He, Y.; Li, F.; Han, L.; You, C.; Wang, B. Segment anything in medical images. Nat. Commun. 2024, 15, 654.

- Mazurowski, M.A.; Dong, H.; Gu, H.; Yang, J.; Konz, N.; Zhang, Y. Segment anything model for medical image analysis: An experimental study. Med. Image Anal. 2023, 89, 102918.

- Koch, T.L.; Perslev, M.; Igel, C.; Brandt, S.S. Accurate segmentation of dental panoramic radiographs with U-Nets. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019; pp. 15–19.

- Oktay, O.; Schlemper, J.; Le Folgoc, L. Attention U-Net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241.

- Ma, J.; Yang, Z.; Kim, S.; Chen, B.; Baharoon, M.; Fallahpour, A.; Asakereh, R.; Lyu, H.; Wang, B. MedSAM2: Segment anything in 3D medical images and videos. arXiv 2025, arXiv:2504.03600.

- Sengupta, S.; Chakrabarty, S.; Soni, R. Is SAM 2 better than SAM in medical image segmentation? In Proceedings of the Medical Imaging 2025: Image Processing, San Diego, CA, USA, 17–20 February 2025; pp. 666–672.

- Taha, A.A.; Hanbury, A. Metrics for evaluating 3D medical image segmentation: Analysis, selection, and tool. BMC Med. Imaging 2015, 15, 29.

- Reinke, A.; Tizabi, M.D.; Sudre, C.H.; Eisenmann, M.; Rädsch, T.; Baumgartner, M.; Acion, L.; Antonelli, M.; Arbel, T.; Bakas, S. Common limitations of image processing metrics: A picture story. arXiv 2021, arXiv:2104.05642.

- Kusayanagi, T.; Maegawa, S.; Terauchi, S.; Hashimoto, W.; Kaneda, S. A smartphone application for personalized tooth shade determination. Diagnostics 2023, 13, 1969.

- Luo, M.R.; Cui, G.; Rigg, B. The development of the CIE 2000 colour-difference formula: CIEDE2000. Color Res. Appl. 2001, 26, 340–350.

- Paravina, R.D.; Ghinea, R.; Herrera, L.J.; Bona, A.D.; Igiel, C.; Linninger, M.; Sakai, M.; Takahashi, H.; Tashkandi, E.; Mar Perez, M.d. Color difference thresholds in dentistry. J. Esthet. Restor. Dent. 2015, 27, S1–S9.

- Huang, Y.; Yang, X.; Liu, L.; Zhou, H.; Chang, A.; Zhou, X.; Chen, R.; Yu, J.; Chen, J.; Chen, C. Segment anything model for medical images? Med. Image Anal. 2024, 92, 103061.

- Sirintawat, N.; Leelaratrungruang, T.; Poovarodom, P.; Kiattavorncharoen, S.; Amornsettachai, P. The accuracy and reliability of tooth shade selection using different instrumental techniques: An in vitro study. Sensors 2021, 21, 7490.

- Sharma, G.; Wu, W.; Dalal, E.N. The CIEDE2000 color-difference formula: Implementation notes, supplementary test data, and mathematical observations. Color Res. Appl. 2005, 30, 21–30.

- Li, X.; Chen, G.; Wu, Y.; Yang, J.; Zhou, T.; Zhou, Y.; Zhu, W. MedSegViG: Medical Image Segmentation with a Vision Graph Neural Network. In Proceedings of the 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Lisbon, Portugal, 3–6 December 2024; pp. 3408–3411.

- Chen, G.; Qin, J.; Amor, B.B.; Zhou, W.; Dai, H.; Zhou, T.; Huang, H.; Shao, L. Automatic detection of tooth-gingiva trim lines on dental surfaces. IEEE Trans. Med. Imaging 2023, 42, 3194–3204.

| Model Variant | Backbone | Number of Parameters | Input Resolution | Recommended Use Case |
|---|---|---|---|---|
| UNet | CNN encoder–decoder | ~40 million | 512 × 512 (configurable) | Classical baseline; fast training/inference; robust with limited data |
| SAM2-tiny | Hiera-T (hierarchical ViT) | ~38.9 million | 1024 × 1024 | Lightweight applications, mobile deployment |
| SAM2-small | Hiera-S (hierarchical ViT) | ~46 million | 1024 × 1024 | Real-time inference with efficiency–accuracy balance |
| SAM2-base+ | Hiera-B+ (hierarchical ViT) | ~80.8 million | 1024 × 1024 | Balanced trade-off between performance and cost |
| SAM2-large | Hiera-L (hierarchical ViT) | ~224.4 million | 1024 × 1024 | High-precision medical or scientific segmentation |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Han, K.; Lim, J.; Ahn, J.-S.; Lee, K.-S. The Evaluation of a Deep Learning Approach to Automatic Segmentation of Teeth and Shade Guides for Tooth Shade Matching Using the SAM2 Algorithm. Bioengineering 2025, 12, 959. https://doi.org/10.3390/bioengineering12090959
