ACM-Assessor: An Artificial Intelligence System for Assessing Angle Closure Mechanisms in Ultrasound Biomicroscopy

Abstract

1. Introduction

2. Materials and Methods

2.1. Datasets and Labeling

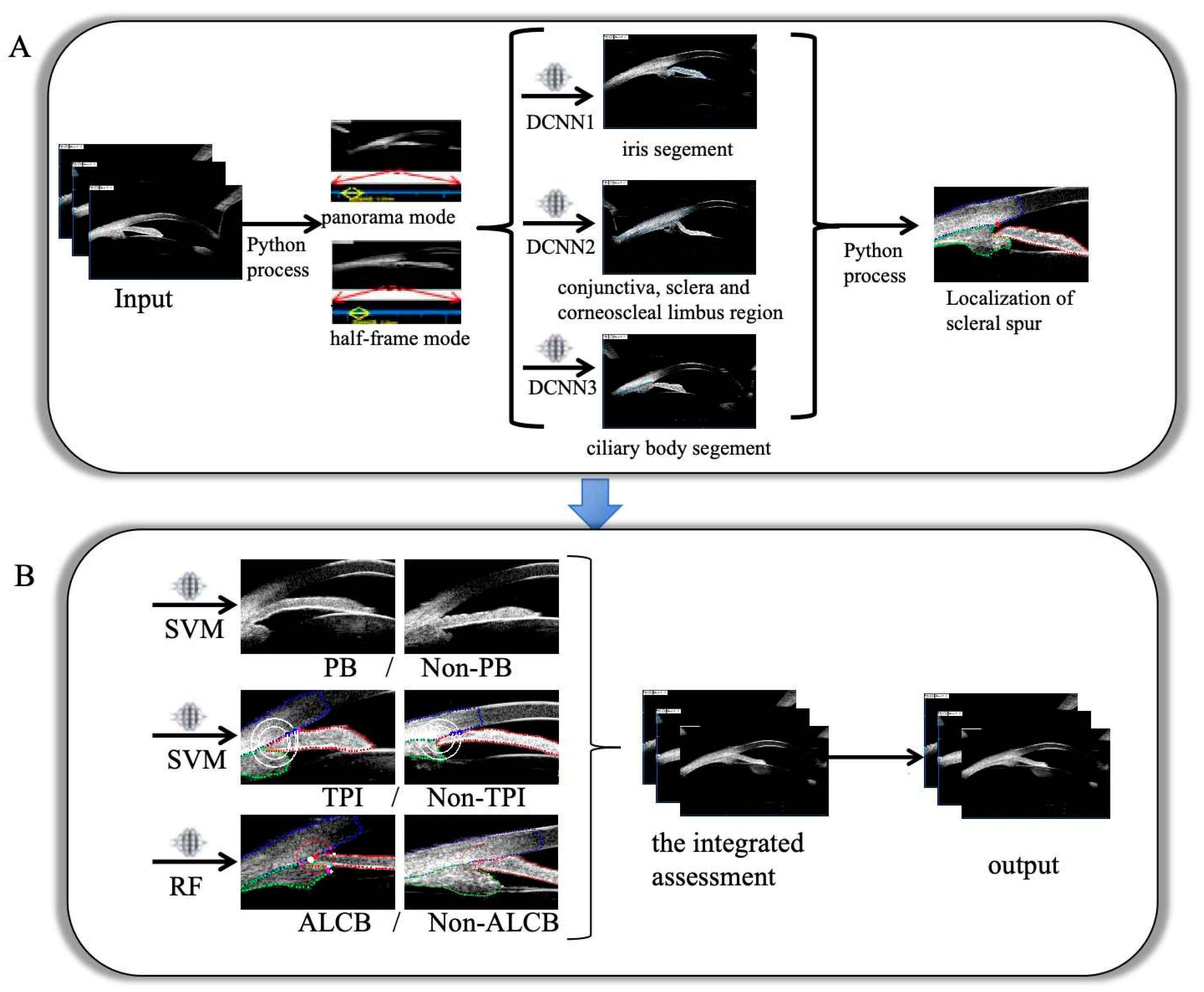

2.2. Development of the Model

2.3. Evaluation and Experiments

3. Results

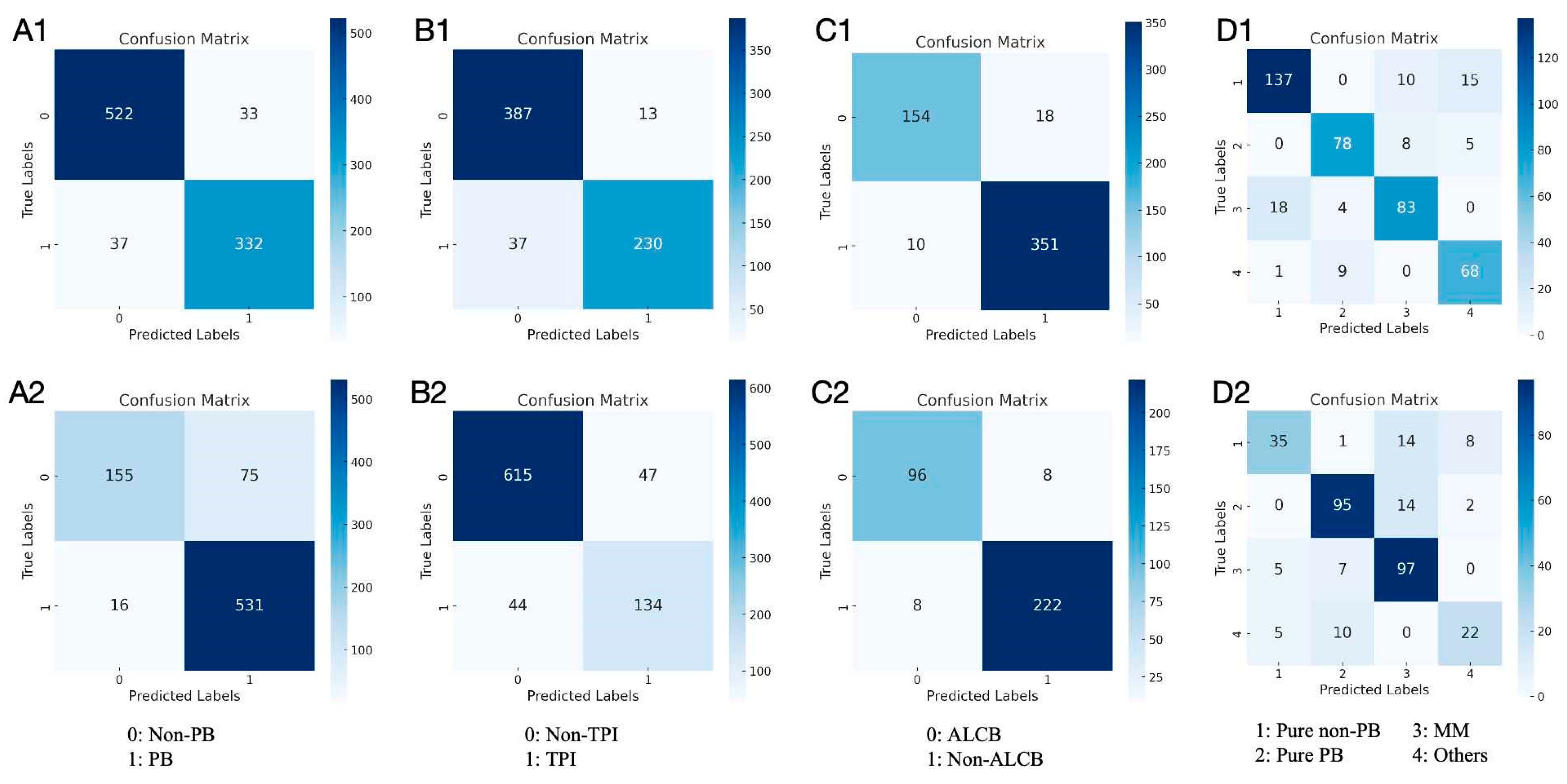

3.1. Results in Both Internal and External Test Datasets

3.2. Performance of the Models and Experts

3.3. Comparison of the Performance of Beginners with and Without the Model Assistance

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Classification | Algorithm | Accuracy (95% CI) | Sensitivity (95% CI) | Specificity (95% CI) | PPV (95% CI) | NPV (95% CI) | F1 Score | MCC | AUC |
|---|---|---|---|---|---|---|---|---|---|
| PB/Non-PB | RF | 0.921 (0.901–0.937) | 0.900 (0.863–0.928) | 0.935 (0.910–0.954) | 0.902 (0.866–0.930) | 0.933 (0.909–0.952) | 0.901 | 0.835 | 0.970 |
| PB/Non-PB | GBDT | 0.916 (0.895–0.932) | 0.891 (0.854–0.920) | 0.932 (0.906–0.951) | 0.896 (0.859–0.925) | 0.928 (0.903–0.948) | 0.894 | 0.824 | 0.969 |
| PB/Non-PB | XGB | 0.916 (0.895–0.932) | 0.889 (0.851–0.918) | 0.933 (0.908–0.952) | 0.899 (0.862–0.927) | 0.927 (0.901–0.946) | 0.894 | 0.824 | 0.970 |
| PB/Non-PB | SVM | 0.924 (0.905–0.940) | 0.900 (0.863–0.928) | 0.941 (0.917–0.958) | 0.910 (0.874–0.936) | 0.934 (0.909–0.952) | 0.905 | 0.842 | 0.970 |
| PB/Non-PB | LR | 0.921 (0.901–0.937) | 0.878 (0.839–0.909) | 0.950 (0.927–0.966) | 0.920 (0.886–0.946) | 0.921 (0.895–0.941) | 0.899 | 0.835 | 0.971 |
| TPI/Non-TPI | RF | 0.915 (0.890–0.934) | 0.843 (0.792–0.883) | 0.963 (0.938–0.978) | 0.938 (0.897–0.963) | 0.902 (0.868–0.927) | 0.888 | 0.822 | 0.958 |
| TPI/Non-TPI | GBDT | 0.915 (0.890–0.934) | 0.831 (0.780–0.873) | 0.970 (0.947–0.984) | 0.949 (0.910–0.972) | 0.896 (0.863–0.922) | 0.886 | 0.823 | 0.964 |
| TPI/Non-TPI | XGB | 0.922 (0.898–0.941) | 0.854 (0.805–0.893) | 0.968 (0.944–0.982) | 0.946 (0.907–0.970) | 0.908 (0.876–0.933) | 0.898 | 0.838 | 0.970 |
| TPI/Non-TPI | SVM | 0.925 (0.902–0.943) | 0.861 (0.813–0.899) | 0.968 (0.944–0.982) | 0.947 (0.908–0.970) | 0.913 (0.881–0.937) | 0.902 | 0.844 | 0.971 |
| TPI/Non-TPI | LR | 0.922 (0.898–0.941) | 0.854 (0.805–0.893) | 0.968 (0.944–0.982) | 0.946 (0.907–0.970) | 0.908 (0.876–0.933) | 0.898 | 0.838 | 0.963 |
| ALCB/Non-ALCB | RF | 0.947 (0.924–0.964) | 0.895 (0.837–0.935) | 0.972 (0.948–0.986) | 0.939 (0.888–0.969) | 0.951 (0.923–0.970) | 0.917 | 0.879 | 0.977 |
| ALCB/Non-ALCB | GBDT | 0.921 (0.894–0.942) | 0.826 (0.759–0.878) | 0.967 (0.941–0.982) | 0.922 (0.965–0.957) | 0.921 (0.888–0.945) | 0.871 | 0.817 | 0.973 |
| ALCB/Non-ALCB | XGB | 0.938 (0.913–0.956) | 0.884 (0.824–0.926) | 0.964 (0.938–0.980) | 0.921 (0.866–0.956) | 0.946 (0.916–0.966) | 0.902 | 0.857 | 0.972 |
| ALCB/Non-ALCB | SVM | 0.880 (0.849–0.906) | 0.645 (0.568–0.716) | 0.992 (0.974–0.998) | 0.974 (0.919–0.993) | 0.854 (0.816–0.886) | 0.776 | 0.726 | 0.954 |
| ALCB/Non-ALCB | LR | 0.921 (0.894–0.942) | 0.820 (0.752–0.873) | 0.970 (0.945–0.984) | 0.928 (0.871–0.962) | 0.919 (0.885–0.943) | 0.870 | 0.817 | 0.952 |
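For readers less familiar with the metrics reported in the table above, the following is a minimal sketch of how accuracy, sensitivity, specificity, PPV, NPV, F1 score, and MCC follow from the four cells of a binary confusion matrix. The counts in the usage lines are hypothetical and are not taken from the study. The interval function uses the Wilson score method purely as an illustrative assumption, since the method used for the reported 95% CIs is not stated here.

```python
from math import sqrt


def binary_metrics(tp, fp, tn, fn):
    """Compute common binary-classification metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)          # true positive rate (recall)
    specificity = tn / (tn + fp)          # true negative rate
    ppv = tp / (tp + fp)                  # positive predictive value (precision)
    npv = tn / (tn + fn)                  # negative predictive value
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * ppv * sensitivity / (ppv + sensitivity)
    # Matthews correlation coefficient: balanced even when classes are unequal
    mcc = (tp * tn - fp * fn) / sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": ppv,
        "npv": npv,
        "f1": f1,
        "mcc": mcc,
    }


def wilson_ci(successes, total, z=1.96):
    """Wilson score interval for a proportion (assumed method, for illustration)."""
    p = successes / total
    denom = 1 + z ** 2 / total
    centre = (p + z ** 2 / (2 * total)) / denom
    half = z * sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return centre - half, centre + half


# Hypothetical counts, chosen only to make the arithmetic easy to follow
metrics = binary_metrics(tp=90, fp=10, tn=180, fn=20)
sens_low, sens_high = wilson_ci(successes=90, total=110)  # interval for sensitivity
print({name: round(value, 3) for name, value in metrics.items()})
print(round(sens_low, 3), round(sens_high, 3))
```

AUC is not included because it depends on the predicted scores across all thresholds rather than on a single confusion matrix.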

| Rater | PB/Non-PB Accuracy (95% CI) | TPI/Non-TPI Accuracy (95% CI) | ALCB/Non-ALCB Accuracy (95% CI) | Integrated Assessment Accuracy (95% CI) | Time (s) |
|---|---|---|---|---|---|
| Model | 0.867 (0.831–0.897) | 0.828 (0.789–0.862) | 0.897 (0.863–0.923) | 0.839 (0.800–0.872) | 784.80 |
| Expert 1 | 0.812 (0.771–0.867) | 0.888 (0.853–0.915) | 0.867 (0.831–0.897) | 0.729 (0.685–0.770) | 2940.02 |
| Expert 2 | 0.837 (0.798–0.870) | 0.755 (0.711–0.793) | 0.862 (0.826–0.893) | 0.798 (0.757–0.834) | 3360.50 |
| Expert 3 | 0.929 (0.900–0.950) | 0.853 (0.816–0.884) | 0.908 (0.876–0.933) | 0.849 (0.811–0.880) | 2760.66 |
| Expert average | 0.860 (0.823–0.891) | 0.833 (0.794–0.866) | 0.878 (0.843–0.907) | 0.791 (0.750–0.828) | 3020.39 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cong, Y.; Jiang, W.; Dong, Z.; Zhu, J.; Yang, Y.; Wang, Y.; Deng, Q.; Yan, Y.; Mao, J.; Shi, X.; Pan, J.; Yang, Z.; Wang, Y.; Fang, J.; Zheng, B.; Yang, Y. ACM-Assessor: An Artificial Intelligence System for Assessing Angle Closure Mechanisms in Ultrasound Biomicroscopy. Bioengineering 2025, 12, 415. https://doi.org/10.3390/bioengineering12040415