PatientEase—Domain-Aware RAG for Rehabilitation Instruction Simplification

Abstract

1. Introduction

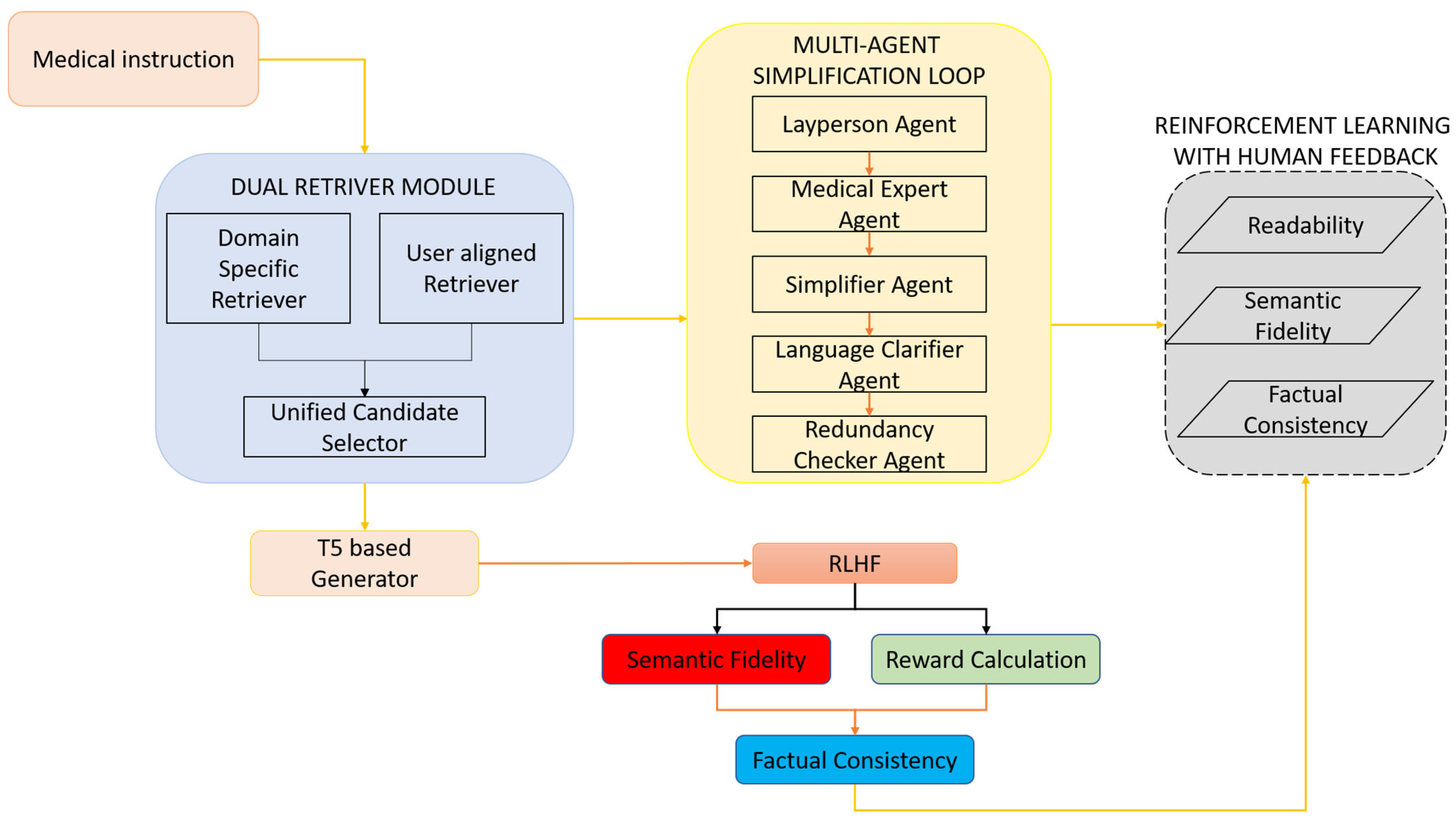

- A dual-retrieval engine combining a domain-specific retriever and a user-aligned retriever.

- A multi-agent simplification loop that distributes rewriting tasks among specialized transformer agents responsible for lay translation, domain validation, and syntactic restructuring.

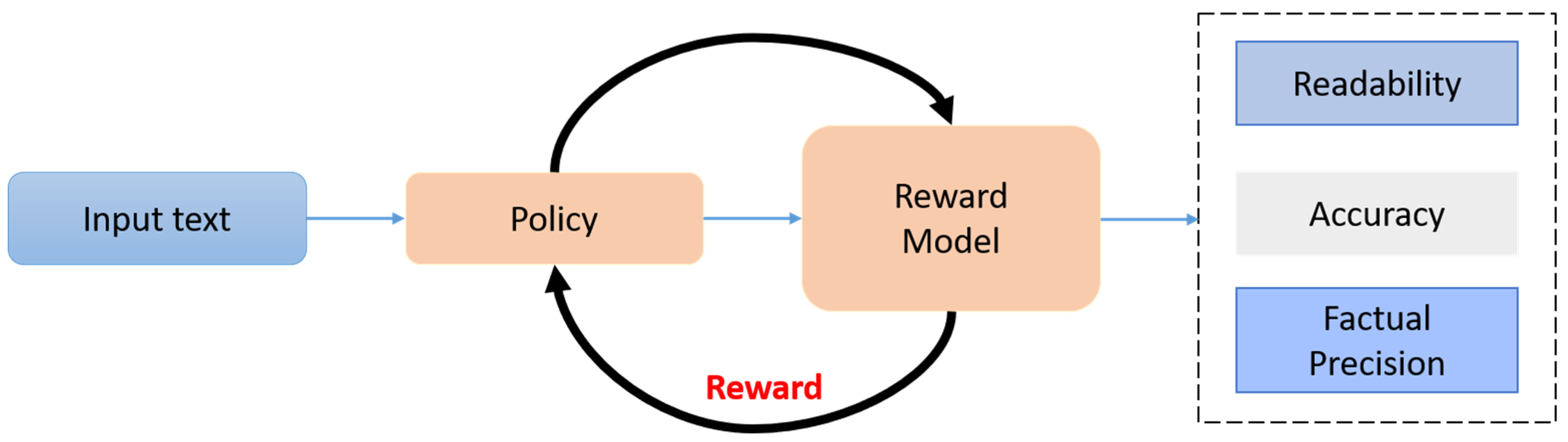

- A reinforcement learning optimization phase, guided by human and simulated clinical feedback, that targets readability, factual accuracy, and clinician-preferred style.
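The reinforcement learning phase in the last contribution has to turn three goals (readability, factual accuracy, clinician-preferred style) into one scalar reward. One simple way to do this is a weighted sum of per-goal scores. The sketch below assumes each goal is already scored in [0, 1]. The weights, the linear FKGL-to-readability mapping, and all function names are hypothetical illustrations, not the reward used by PatientEase.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardWeights:
    # Hypothetical weights; the paper's balance between objectives is not given here.
    readability: float = 0.4
    factuality: float = 0.4
    style: float = 0.2


def readability_score(fkgl: float, target: float = 6.0, worst: float = 14.0) -> float:
    """Map a Flesch-Kincaid grade level to [0, 1].

    Returns 1.0 at or below `target` and falls linearly to 0.0 at `worst`
    (an assumed, illustrative scaling).
    """
    if fkgl <= target:
        return 1.0
    if fkgl >= worst:
        return 0.0
    return (worst - fkgl) / (worst - target)


def composite_reward(fkgl: float, entailment_prob: float, style_pref: float,
                     weights: RewardWeights = RewardWeights()) -> float:
    """Weighted sum of readability, factual consistency, and style preference.

    entailment_prob: probability in [0, 1] that the source entails the
        simplification, e.g. from an NLI model (assumed input).
    style_pref: clinician or simulated-judge preference in [0, 1] (assumed input).
    """
    for name, value in (("entailment_prob", entailment_prob), ("style_pref", style_pref)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return (weights.readability * readability_score(fkgl)
            + weights.factuality * entailment_prob
            + weights.style * style_pref)


if __name__ == "__main__":
    # Same factuality and style, different reading level: the easier text earns more reward.
    print(composite_reward(fkgl=5.9, entailment_prob=0.92, style_pref=0.9))
    print(composite_reward(fkgl=10.0, entailment_prob=0.92, style_pref=0.9))
```

A weighted sum makes each objective's contribution easy to inspect. Its weakness is that a large readability gain can mask a factual slip. One alternative is to gate the reward on a minimum entailment score.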

2. Related Works

3. Materials and Methods

3.1. Dual Retrieval Module
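The introduction describes this module as combining a domain-specific retriever with a user-aligned retriever. However, the rule for merging their results is not given in this text. For illustration only, the sketch below merges the two ranked lists with reciprocal rank fusion (RRF), a standard rank-fusion method substituted here. The constant k = 60, the optional per-retriever weights, and the passage IDs are assumptions.

```python
from __future__ import annotations

from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60,
                           weights: list[float] | None = None) -> list[tuple[str, float]]:
    """Fuse ranked lists of passage IDs with reciprocal rank fusion.

    Each passage scores sum_i weights[i] / (k + rank_i), with ranks starting at 1;
    a passage absent from a list gets nothing from that list.
    """
    if weights is None:
        weights = [1.0] * len(rankings)
    if len(weights) != len(rankings):
        raise ValueError("need exactly one weight per ranking")
    scores: defaultdict[str, float] = defaultdict(float)
    for ranking, weight in zip(rankings, weights):
        for rank, passage_id in enumerate(ranking, start=1):
            scores[passage_id] += weight / (k + rank)
    # Highest fused score first; ties broken by ID so the output is deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Hypothetical IDs: a clinical-knowledge index vs. a plain-language index.
    domain_hits = ["guideline:vte-prophylaxis", "glossary:anticoagulant", "guideline:early-mobilization"]
    user_hits = ["plain:blood-thinners", "glossary:anticoagulant", "plain:walking-after-surgery"]
    for passage_id, score in reciprocal_rank_fusion([domain_hits, user_hits])[:3]:
        print(f"{passage_id}: {score:.4f}")
```

RRF uses only ranks, so the two retrievers' raw similarity scores never need to be calibrated against each other. This helps when one index holds clinical text and the other holds plain-language material.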

3.2. Multi-Agent Simplification Loop

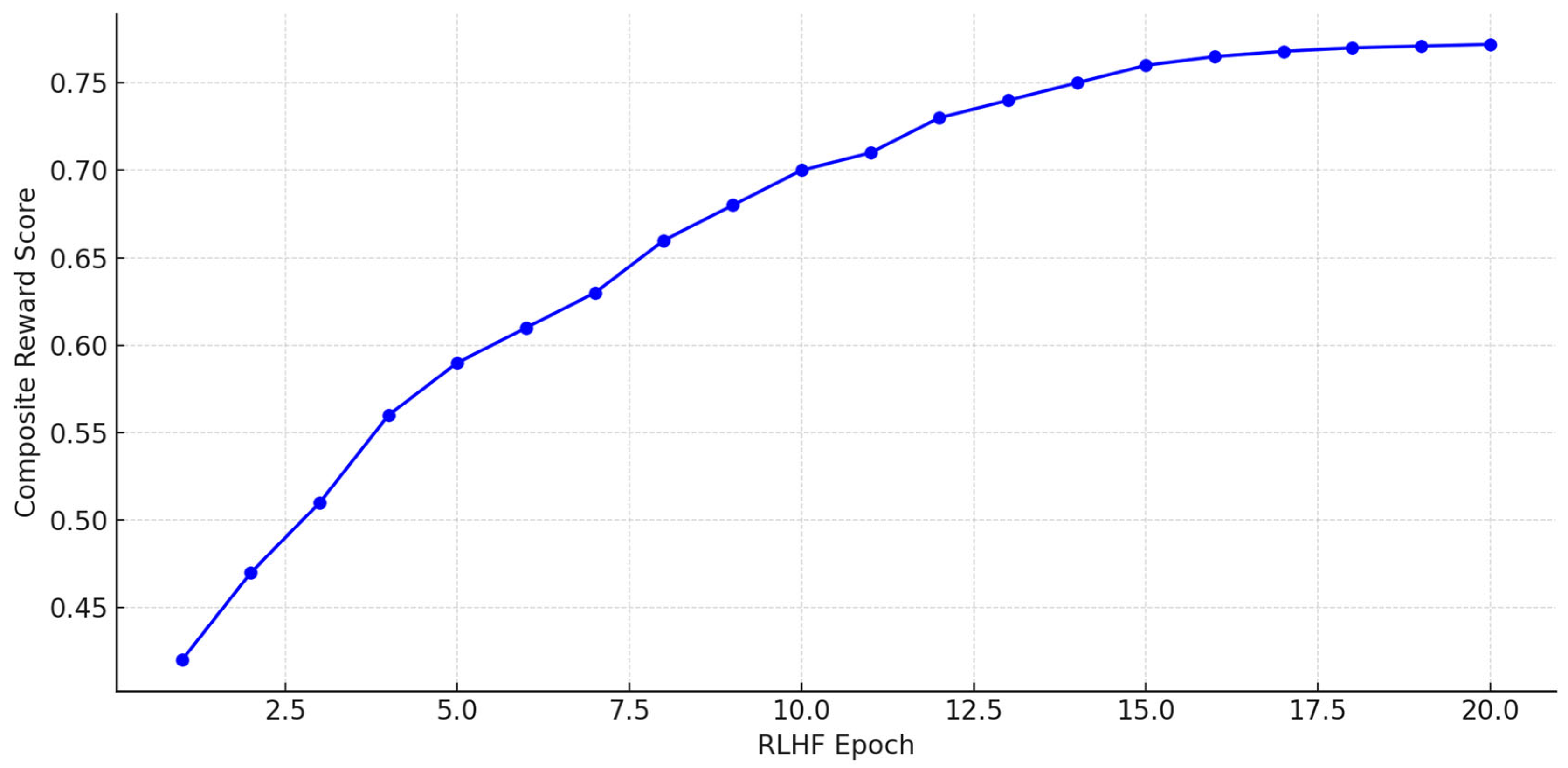
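The introduction gives the agents in this loop three roles: lay translation, domain validation, and syntactic restructuring. The sketch below shows only the control flow. It assumes each rewriting agent is a callable that maps text to text, and that the validator can veto a draft. The rule-based toy agents, the glossary, and the round limit are hypothetical stand-ins for the transformer agents described in the paper.

```python
from __future__ import annotations

import re
from typing import Callable

Agent = Callable[[str], str]

# Hypothetical jargon-to-lay glossary; some entries echo the paper's example outputs.
LAY_TERMS = {
    "administer": "give",
    "anticoagulants": "blood thinners",
    "prophylactically": "early",
    "mitigate": "lower",
    "venous thromboembolism": "blood clot",
}


def lay_translator(text: str) -> str:
    """Toy lay-translation agent: replace glossary jargon, ignoring case."""
    for term, lay in LAY_TERMS.items():
        text = re.sub(rf"\b{re.escape(term)}\b", lay, text, flags=re.IGNORECASE)
    return text


def syntactic_restructurer(text: str) -> str:
    """Toy restructuring agent: split ';' and ', and' joins into short sentences."""
    parts = [p.strip().rstrip(".") for p in re.split(r";|,\s+and\s+", text)]
    return " ".join(p[0].upper() + p[1:] + "." for p in parts if p)


def domain_validator(source: str, draft: str) -> list[str]:
    """Toy validation agent: flag numbers or clinical concepts lost from the source."""
    problems = [f"missing number: {n}"
                for n in re.findall(r"\d+(?:\.\d+)?", source) if n not in draft]
    src, out = source.lower(), draft.lower()
    for term, lay in LAY_TERMS.items():
        if term in src and term not in out and lay not in out:
            problems.append(f"lost concept: {term}")
    return problems


def run_loop(source: str, agents: list[Agent],
             max_rounds: int = 3) -> tuple[str, int, list[str]]:
    """Run every rewriting agent each round, then let the validator accept or veto."""
    draft = source
    for round_no in range(1, max_rounds + 1):
        candidate = draft
        for agent in agents:
            candidate = agent(candidate)
        problems = domain_validator(source, candidate)
        if problems:
            return draft, round_no, problems  # veto: keep the last accepted draft
        if candidate == draft:
            return candidate, round_no, []  # converged: no agent changed the text
        draft = candidate
    return draft, max_rounds, []


if __name__ == "__main__":
    src = ("Administer anticoagulants prophylactically to mitigate "
           "venous thromboembolism risk; reassess after 7 days.")
    print(run_loop(src, [lay_translator, syntactic_restructurer]))
```

Keeping validation as a separate veto step, rather than folding it into the rewriters, means a faulty rewrite is rejected rather than propagated to later rounds.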

3.3. RLHF Optimization

4. Experimental Results

4.1. Results

4.2. Ablation Study

5. Discussion

6. Conclusions

Ethical and Societal Considerations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Model | SARI ↑ | FKGL ↓ | BERTScore ↑ | MedEntail (%) ↑ | Human Simplicity Rating (/5) ↑ |
|---|---|---|---|---|---|
| PatientEase | 52.7 | 5.9 | 91.4 | 92.1 | 4.6 |
| Lay-SciFive-RLHF | 47.3 | 6.6 | 90.8 | 90.5 | 4.3 |
| GPT-4 Prompted | 43.1 | 8.9 | 91.8 | 89.1 | 3.9 |
| BART-CT (PLABA) | 44.5 | 7.2 | 88.7 | 85.5 | 3.7 |
| T5-MedSimplify | 43.2 | 6.9 | 89.3 | 86.7 | 3.8 |
| SciFive-Base | 41.8 | 7.4 | 88.1 | 83.9 | 3.6 |
| SciBERT-T5 | 40.3 | 7.9 | 87.6 | 82.5 | 3.4 |
| PEGASUS-CLS | 38.7 | 7.1 | 85.2 | 81.4 | 3.3 |
| LexRank-Simplify | 35.9 | 6.5 | 80.1 | 75.7 | 2.9 |
| GPT-3.5 Prompted | 40.7 | 7.6 | 88.5 | 84.3 | 3.5 |
| XLNet-TransSimplify | 39.4 | 7.8 | 87.0 | 83.1 | 3.2 |
| AutoMeTS | 36.2 | 6.0 | 79.4 | 73.9 | 3.0 |
| GPT-NeoX-MedPrompt | 41.1 | 7.3 | 86.5 | 83.7 | 3.6 |
| CTRLsum-Simplify | 37.8 | 7.5 | 84.6 | 80.9 | 3.1 |
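FKGL in the tables is the Flesch-Kincaid Grade Level, 0.39 × (words/sentences) + 11.8 × (syllables/words) − 15.59. Lower values indicate text that is easier to read. The sketch below implements that formula. Its vowel-group syllable counter is a crude heuristic assumed here for illustration. Its scores will therefore differ somewhat from dictionary-based tools, and it is not meant to reproduce the paper's numbers.

```python
import re


def count_syllables(word: str) -> int:
    """Crude heuristic: count vowel groups, drop a silent final 'e', minimum one."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def fkgl(text: str) -> float:
    """Flesch-Kincaid Grade Level = 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


if __name__ == "__main__":
    # One original/simplified pair from the paper's example table.
    original = "Administer anticoagulants prophylactically to mitigate venous thromboembolism risk."
    simplified = "Give blood thinners early to prevent clots."
    print(f"original:   {fkgl(original):.1f}")
    print(f"simplified: {fkgl(simplified):.1f}")
```

Single sentences exaggerate the spread between texts. Corpus-level FKGL figures such as those in the table are normally computed over many sentences, so they sit in a narrower range.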

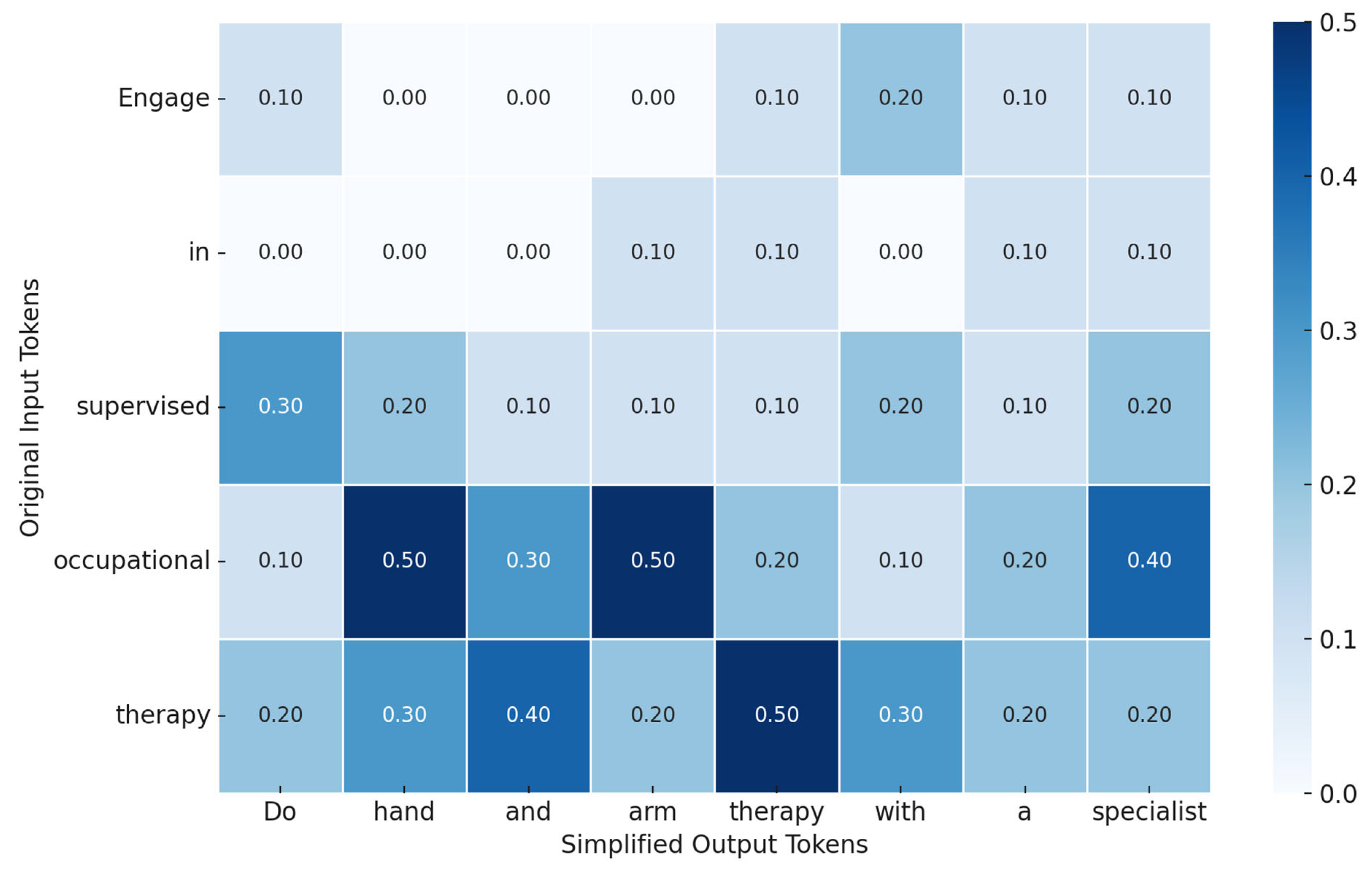

| Original Instruction | Simplified Output by PatientEase |
|---|---|
| Engage in supervised occupational therapy to optimize upper limb function post-stroke. | Do hand and arm therapy with a specialist after stroke. |
| Encourage diaphragmatic breathing techniques to reduce dyspnea during exertion. | Teach belly breathing to help with shortness of breath. |
| Initiate early mobilization protocols to reduce the risk of pulmonary complications. | Get the patient moving soon to avoid lung problems. |
| Administer anticoagulants prophylactically to mitigate venous thromboembolism risk. | Give blood thinners early to prevent clots. |
| Apply cryotherapy intermittently to the affected joint to minimize localized inflammation and neurogenic pain signaling. | Use an ice pack on the joint now and then to reduce swelling and pain. |
| Commence ambulation exercises bi-daily to promote post-operative circulation and prevent thromboembolic events. | Walk twice a day to improve blood flow and prevent clots after surgery. |
| Initiate a graduated resistance training protocol to counteract sarcopenic deterioration post-discharge. | Start light strength exercises after leaving the hospital to help rebuild weak muscles. |
| Adhere to scheduled wound inspection to monitor for signs of infection or dehiscence. | Check the wound regularly for infection or opening. |
| Patient education is recommended to enhance adherence to modified activities of daily living following total hip arthroplasty. | Teach the patient how to safely do daily tasks after hip surgery. |
| Initiate compensatory swallowing strategies under SLP supervision to mitigate aspiration risk during the oral phase of deglutition. | Practice safe swallowing techniques with a speech therapist to avoid choking. |


| Model Variant | SARI ↑ | FKGL ↓ | BERTScore ↑ | MedEntail (%) ↑ | Human Rating (/5) ↑ |
|---|---|---|---|---|---|
| Full PatientEase | 52.7 | 5.9 | 91.4 | 92.1 | 4.6 |
| –User-Aligned Retriever | 46.8 | 7.1 | 89.2 | 87.4 | 4.1 |
| –Multi-Agent Loop | 45.3 | 6.4 | 87.9 | 86.4 | 4.0 |
| –RLHF Optimization | 44.2 | 7.6 | 88.5 | 85.1 | 3.9 |
| –User Retriever + RLHF | 41.7 | 7.8 | 86.3 | 82.7 | 3.5 |
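The ablation results are easier to compare when each variant is expressed as a drop relative to the full model. The snippet below only repeats that subtraction on the reported numbers and produces no new results. For FKGL, where lower is better, the sign is flipped so that a positive value always means the variant is worse. The dictionary keys are ASCII renderings of the table's row labels.

```python
# Values copied from the ablation table, in column order.
METRICS = ("SARI", "FKGL", "BERTScore", "MedEntail", "Human")
HIGHER_IS_BETTER = {"SARI": True, "FKGL": False, "BERTScore": True, "MedEntail": True, "Human": True}

ABLATION = {
    "Full PatientEase": (52.7, 5.9, 91.4, 92.1, 4.6),
    "-User-Aligned Retriever": (46.8, 7.1, 89.2, 87.4, 4.1),
    "-Multi-Agent Loop": (45.3, 6.4, 87.9, 86.4, 4.0),
    "-RLHF Optimization": (44.2, 7.6, 88.5, 85.1, 3.9),
    "-User Retriever + RLHF": (41.7, 7.8, 86.3, 82.7, 3.5),
}


def degradation(full: tuple[float, ...], variant: tuple[float, ...]) -> dict[str, float]:
    """Per-metric degradation vs. the full model; positive means the variant is worse."""
    out = {}
    for name, f, v in zip(METRICS, full, variant):
        diff = f - v if HIGHER_IS_BETTER[name] else v - f
        out[name] = round(diff, 2)  # round away floating-point noise from subtraction
    return out


if __name__ == "__main__":
    full = ABLATION["Full PatientEase"]
    for variant, scores in ABLATION.items():
        if variant != "Full PatientEase":
            print(variant, degradation(full, scores))
```

On these numbers, removing RLHF causes the largest single-component drop on every metric except BERTScore. On BERTScore, removing the multi-agent loop costs more (3.5 vs. 2.9 points). Removing the user-aligned retriever and RLHF together lowers SARI by 11.0 points. That is less than the 14.4 points obtained by adding the two individual drops, which suggests the two components' contributions partly overlap.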

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nasimov, R.; Abdusalomov, A.; Khidirova, C.; Temirova, K.; Kutlimuratov, A.; Sadikova, S.; Jeong, W.; Choi, H.; Whangbo, T.K. PatientEase—Domain-Aware RAG for Rehabilitation Instruction Simplification. Bioengineering 2025, 12, 1204. https://doi.org/10.3390/bioengineering12111204
