Stimulus-Evoked Brain Signals for Parkinson’s Detection: A Comprehensive Benchmark Performance Analysis on Cross-Stimulation and Channel-Wise Experiments

Abstract

1. Introduction

- Investigate a cross-stimulation evaluation framework to assess the generalizability and robustness of Parkinson’s disease detection algorithms across varying stimulus conditions, addressing a key shortcoming of prior work that primarily relies on intra-stimulus (within-stimulus) evaluations.

- Conduct a channel-wise performance analysis, evaluating classification accuracy at individual EEG channels to identify the most discriminative brain regions for PD detection across different stimulus conditions.

- Introduce the newly constructed ParEEG database, comprising 203,520 EEG samples from 60 subjects (30 healthy controls and 30 individuals with Parkinson’s disease), capturing EEG responses to diverse emotional states induced by Resting-State Visual Evoked Potential (RSVEP) and Steady-State Visually Evoked Potential (SSVEP) stimuli. The ParEEG dataset will be made publicly available to support reproducible research.

- Present a comprehensive experimental analysis of PD detection algorithms within a cross-stimulation evaluation framework, benchmarking classification accuracy at the individual EEG channel level. The evaluation includes two handcrafted feature-based methods and two deep learning-based approaches, enabling an in-depth comparison of how well each handles variability across stimulus conditions.
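The cross-stimulation, channel-wise protocol outlined in these contributions can be sketched as follows: a classifier is trained on epochs recorded under one stimulus and tested on epochs recorded under another, separately for each EEG channel. This is a minimal sketch under stated assumptions: each epoch is already reduced to a small per-channel feature vector, labels are 0 for healthy controls and 1 for PD, and a nearest-centroid rule stands in for the SVM, CRC, LSTM and CNN models actually benchmarked. All function names and the synthetic data are hypothetical.

```python
import numpy as np

def nearest_centroid_fit(X, y):
    """Return the class labels and one mean feature vector per class (simple stand-in classifier)."""
    classes = np.unique(y)
    centroids = np.stack([X[y == c].mean(axis=0) for c in classes])
    return classes, centroids

def nearest_centroid_predict(model, X):
    classes, centroids = model
    # Euclidean distance from every test sample to every class centroid
    d = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return classes[np.argmin(d, axis=1)]

def cross_stimulus_channel_accuracy(train, test):
    """Train on one stimulus and test on another, one model per EEG channel.

    train, test: (X, y) with X shaped (n_epochs, n_channels, n_features)
    and y holding 0 = healthy control, 1 = PD.
    Returns per-channel accuracies in percent.
    """
    X_tr, y_tr = train
    X_te, y_te = test
    acc = np.empty(X_tr.shape[1])
    for ch in range(X_tr.shape[1]):
        model = nearest_centroid_fit(X_tr[:, ch, :], y_tr)
        pred = nearest_centroid_predict(model, X_te[:, ch, :])
        acc[ch] = 100.0 * np.mean(pred == y_te)
    return acc

rng = np.random.default_rng(0)

def make_stimulus(n_per_class, offset):
    # Hypothetical synthetic epochs: 32 channels x 4 features; only channel 0 carries a PD effect,
    # and `offset` mimics a stimulus-related shift shared by both groups.
    X = rng.normal(size=(2 * n_per_class, 32, 4)) + offset
    y = np.repeat([0, 1], n_per_class)
    X[y == 1, 0, :] += 5.0
    return X, y

relax = make_stimulus(100, offset=0.0)   # training stimulus
horror = make_stimulus(100, offset=0.5)  # unseen test stimulus
acc = cross_stimulus_channel_accuracy(relax, horror)
print(acc[:3])
```

Because each channel gets its own model, the returned vector maps directly onto a per-channel accuracy table; swapping in another classifier only requires replacing the two `nearest_centroid_*` functions.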

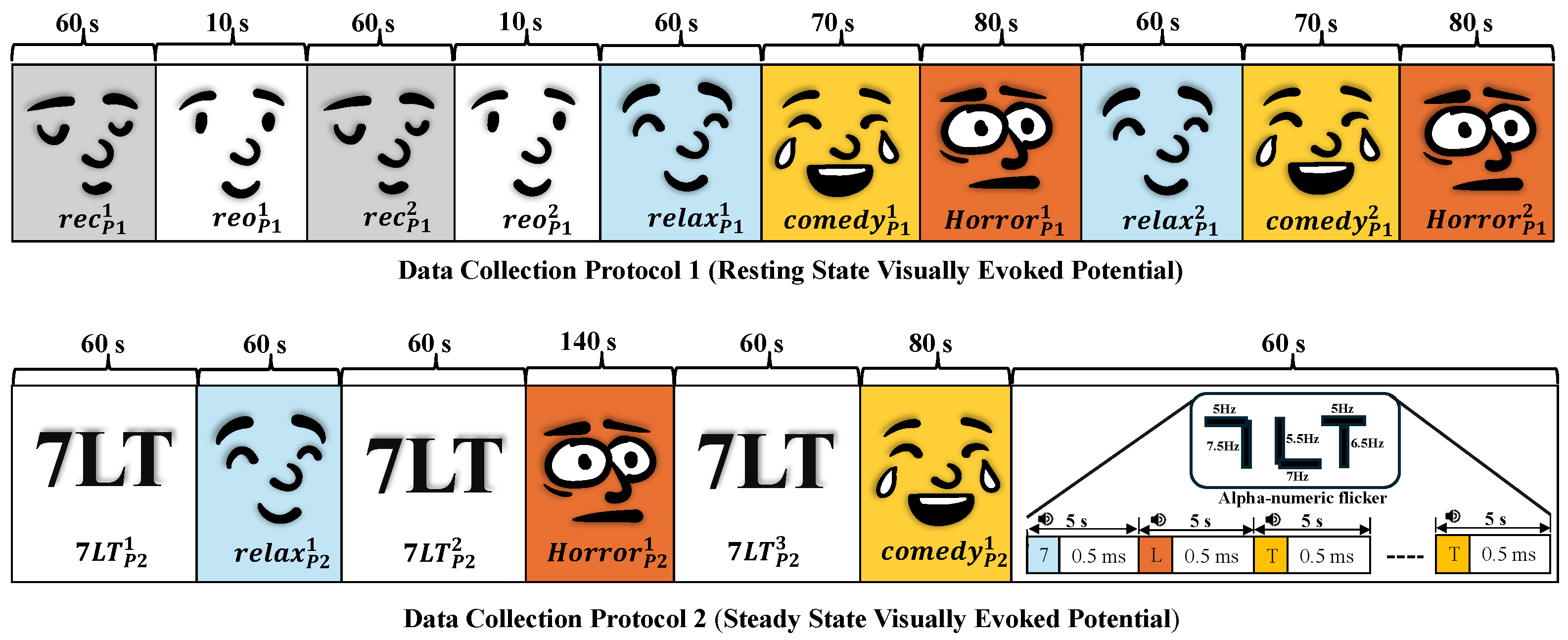

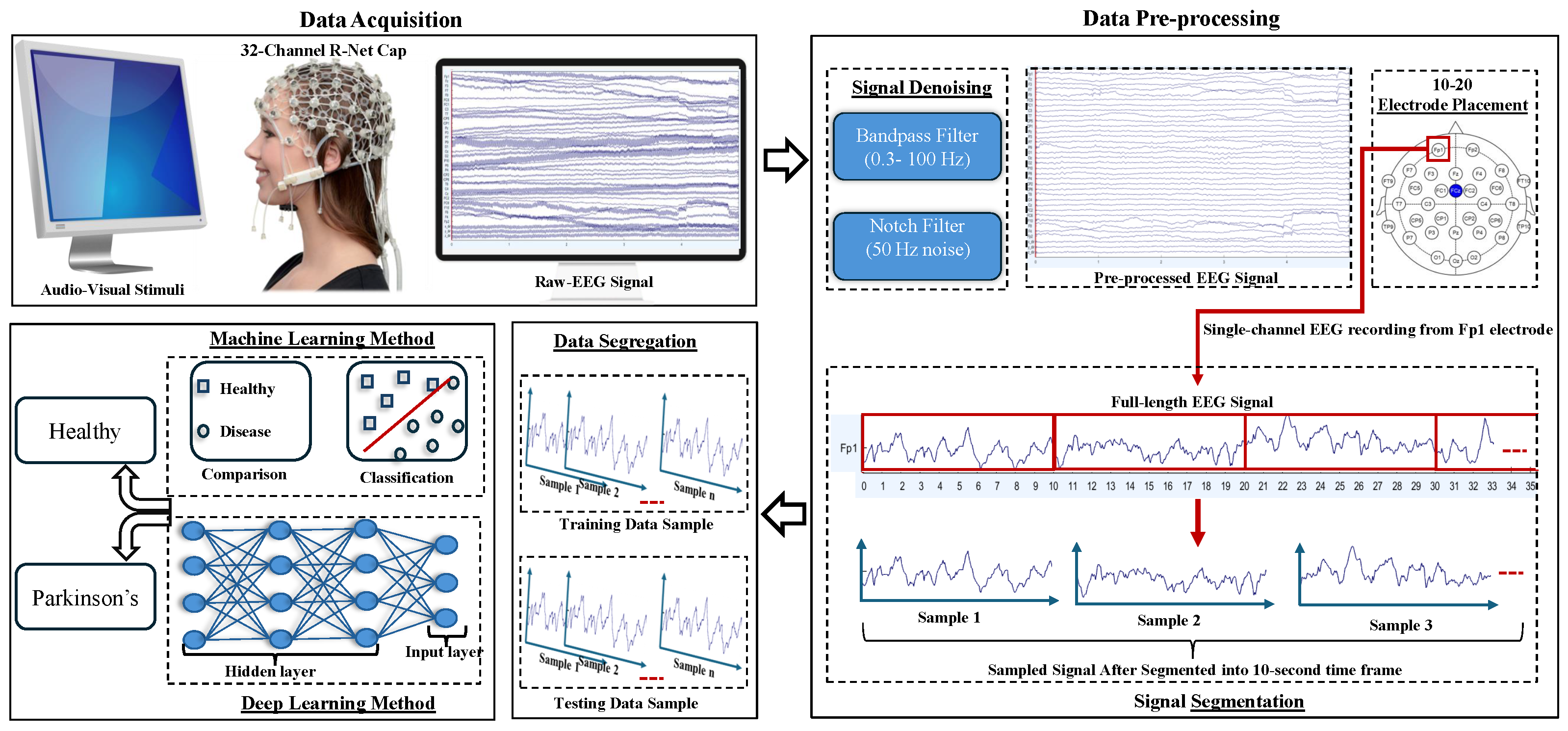

2. Materials and Methods

2.1. Database Description

2.1.1. Data Collection Protocol 1 (DCP 1)

2.1.2. Data Collection Protocol 2 (DCP 2)

2.2. Pre-Processing

2.3. Classification Methods

2.3.1. Support Vector Machine (SVM)

2.3.2. Collaborative Representation Classifier (CRC)

2.3.3. Long Short-Term Memory (LSTM)

2.3.4. One-Dimensional Convolutional Neural Network (1D-CNN)

2.4. Evaluation Method

3. Results

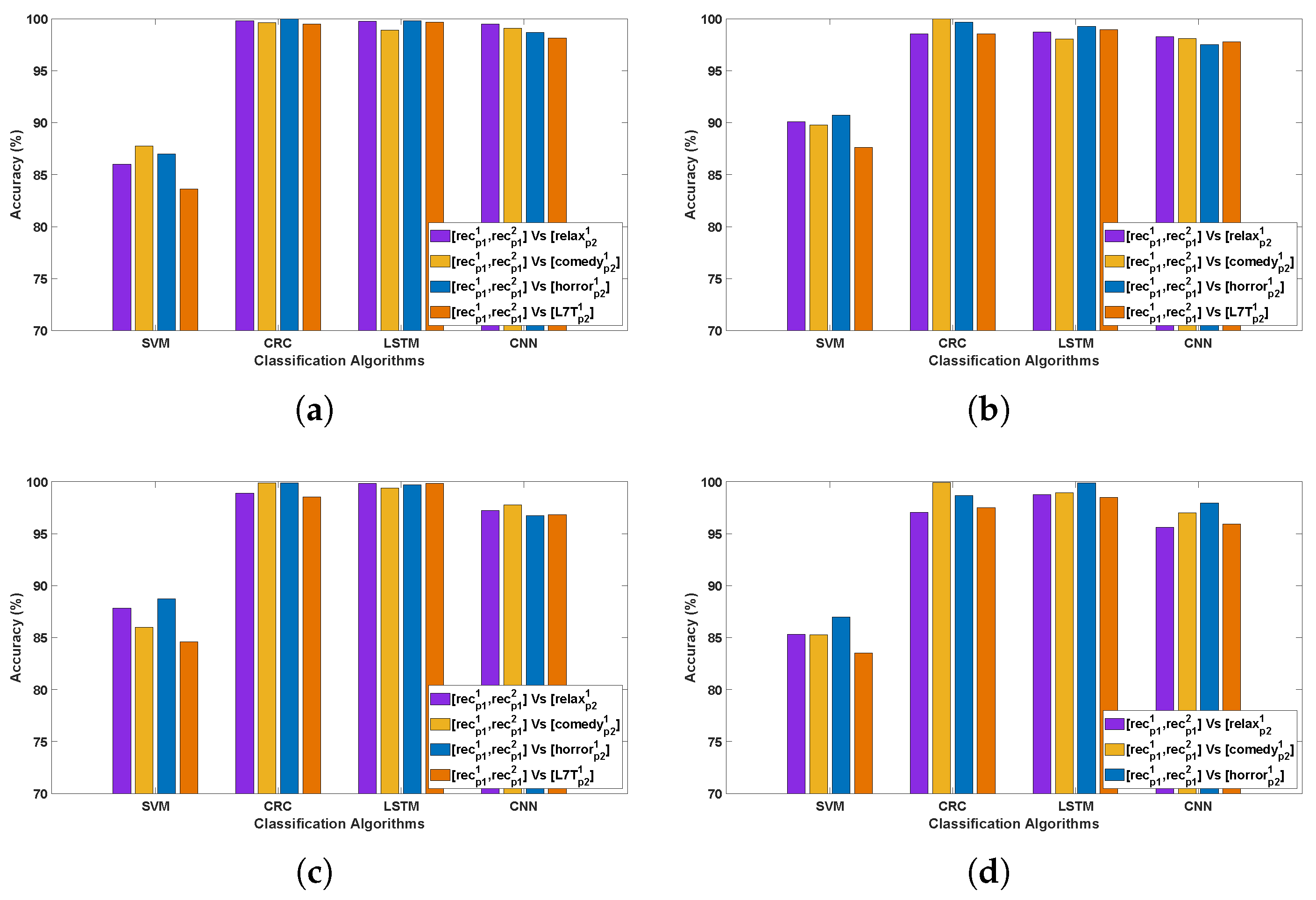

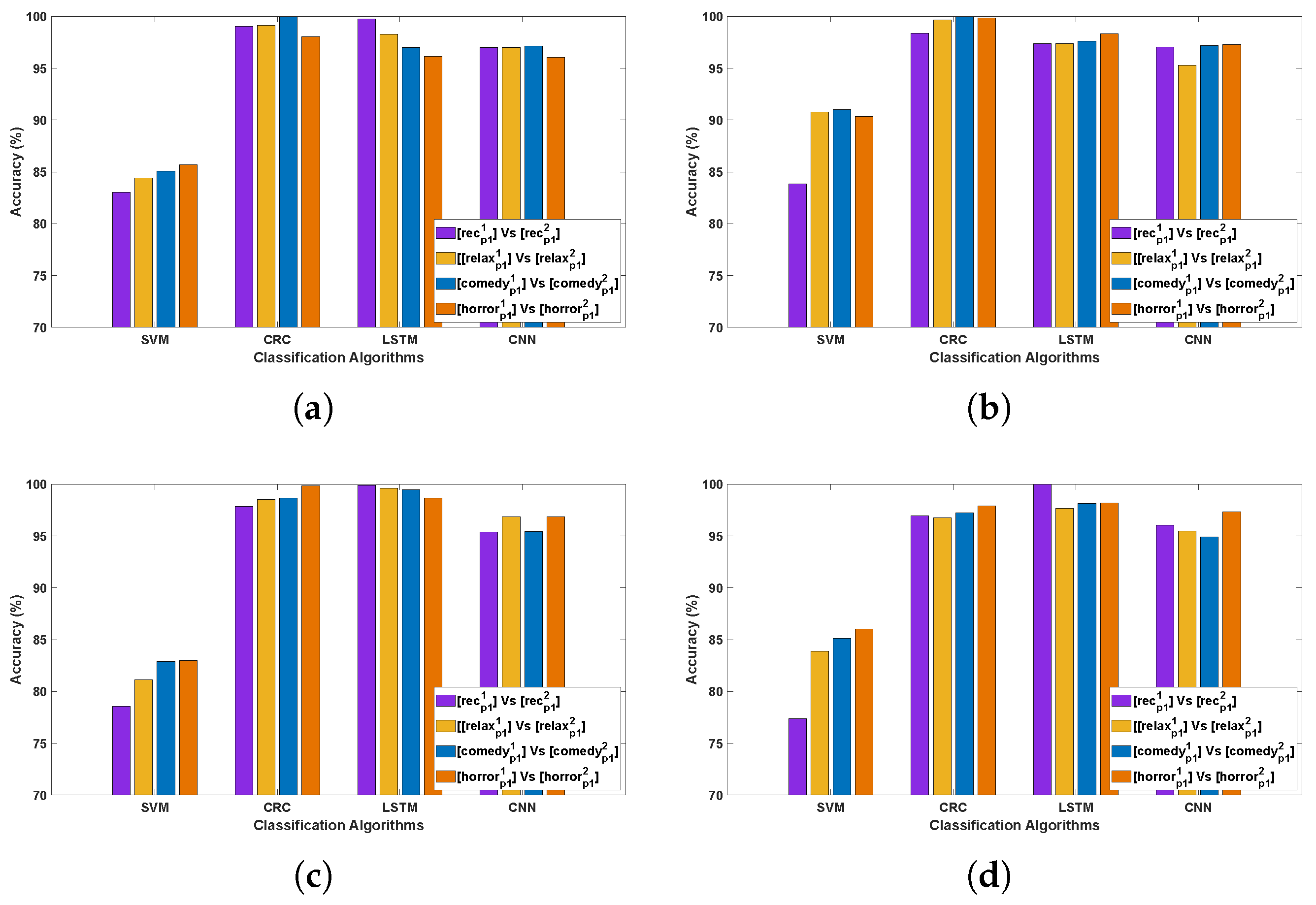

3.1. Evaluation 1

3.1.1. Observations Related to Evaluation 1 Based on DCP 1

- The CRC and LSTM algorithms achieved the highest accuracies, which may be attributable to the robustness of CRC to EEG signal variability and to the capacity of LSTMs to capture long-term temporal dependencies in Parkinson’s EEG data. CNN also performed consistently well, whereas SVM yielded comparatively lower accuracy, likely due to its reliance on manually extracted features that may not adequately represent the complex, nonlinear characteristics of EEG signals.

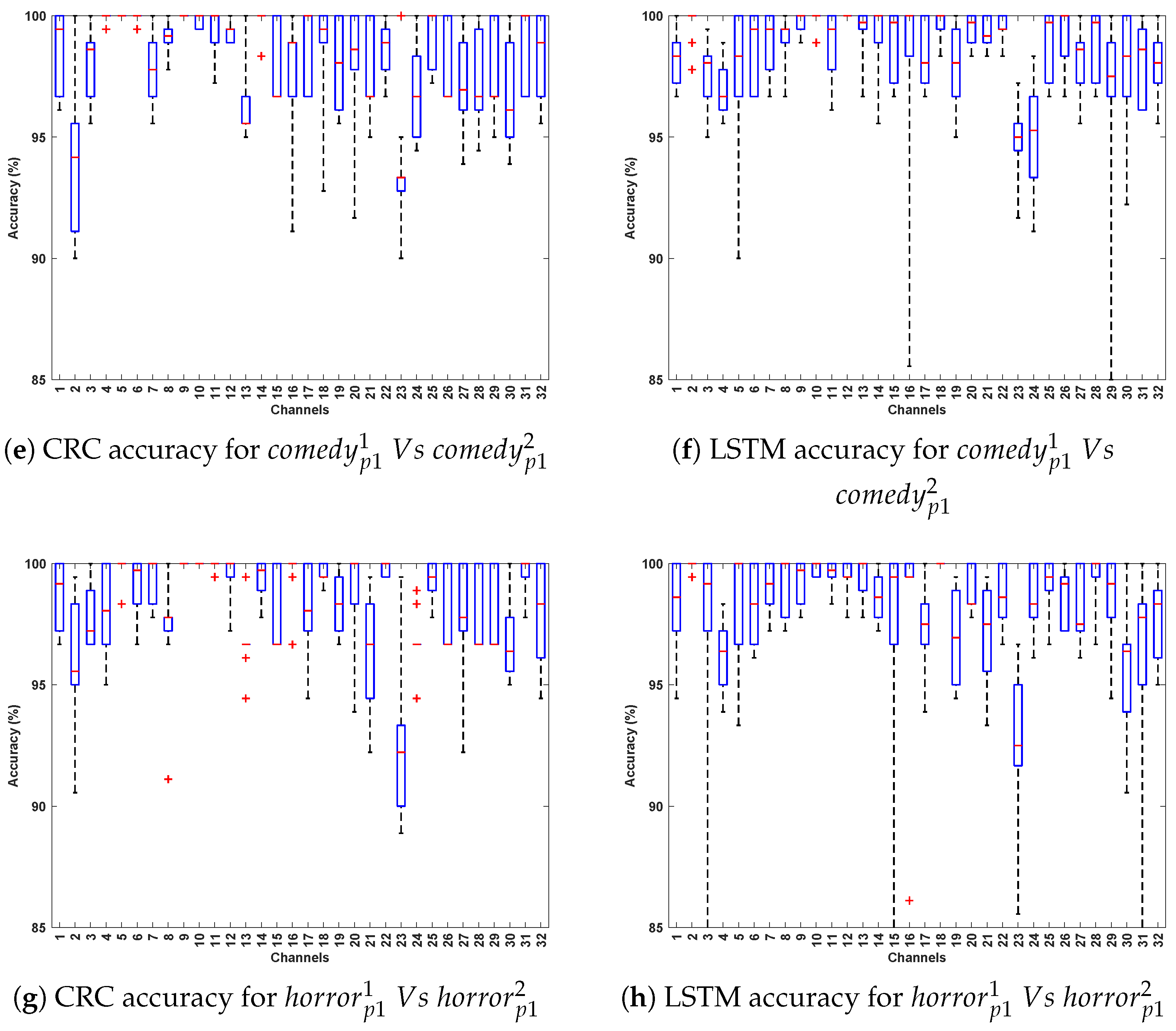

- Across the DCP 1 stimuli, the horror and comedy stimuli yielded comparatively higher accuracy for most algorithms, suggesting that they may evoke stronger neural responses and thereby enable the models to differentiate Parkinson’s-affected EEG patterns from healthy ones more effectively.

- The best-performing EEG channels across all algorithms were frontal (Fp1, F9, F7), fronto-central (Fc5, Fc1, Fc2), central–parietal (Cp2), and parietal (P8), with average classification accuracies ranging from 80% to 95%. This may be because Parkinson’s disease is associated with widespread alterations in EEG spectral power and functional connectivity, particularly in the frontal and parietal regions [33,34]. In the eyes-closed resting state, healthy individuals typically exhibit dominant alpha rhythms over posterior regions, which are often reduced or disrupted in PD patients [35]; such disruptions may appear as altered activity in parietal and central–parietal channels. Furthermore, during relaxed wakefulness, frontal and fronto-central regions often show significant changes in EEG power and coherence in individuals with PD [36], producing distinguishable patterns that can support classification. These findings suggest that channel-wise EEG analysis is a valuable approach for identifying informative features and optimizing electrode selection in PD detection systems.
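The spectral alterations discussed above, such as the reduced posterior alpha rhythm in PD, are commonly quantified as relative band power per channel, which is also a typical handcrafted feature for classifiers such as SVM. A minimal sketch follows; the 256 Hz sampling rate, the 8–13 Hz alpha band, the 1–45 Hz reference band and the synthetic signals are assumptions, not the paper's reported feature pipeline.

```python
import numpy as np

def relative_band_power(epochs, fs, band=(8.0, 13.0), total=(1.0, 45.0)):
    """Relative power of `band` within `total` for each channel.

    epochs: array of shape (n_channels, n_times); fs: sampling rate in Hz.
    A Hann-windowed periodogram is used as a simple stand-in for Welch's method.
    """
    n_times = epochs.shape[-1]
    centered = epochs - epochs.mean(axis=-1, keepdims=True)  # remove DC offset per channel
    power = np.abs(np.fft.rfft(centered * np.hanning(n_times), axis=-1)) ** 2
    freqs = np.fft.rfftfreq(n_times, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    in_total = (freqs >= total[0]) & (freqs <= total[1])
    return power[:, in_band].sum(axis=-1) / power[:, in_total].sum(axis=-1)

# Hypothetical 2 s epoch at 256 Hz: channel 0 carries a 10 Hz (alpha) rhythm,
# channel 1 a 20 Hz (beta) rhythm, both with weak white noise.
fs = 256
t = np.arange(2 * fs) / fs
rng = np.random.default_rng(0)
epochs = np.vstack([np.sin(2 * np.pi * 10 * t), np.sin(2 * np.pi * 20 * t)])
epochs += 0.1 * rng.normal(size=epochs.shape)
rel_alpha = relative_band_power(epochs, fs)
print(rel_alpha)  # channel 0 close to 1, channel 1 close to 0
```

A per-channel vector of this kind, computed for several bands, is one way to obtain the channel-level features on which a reduced posterior alpha rhythm would show up as a lower relative alpha value.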

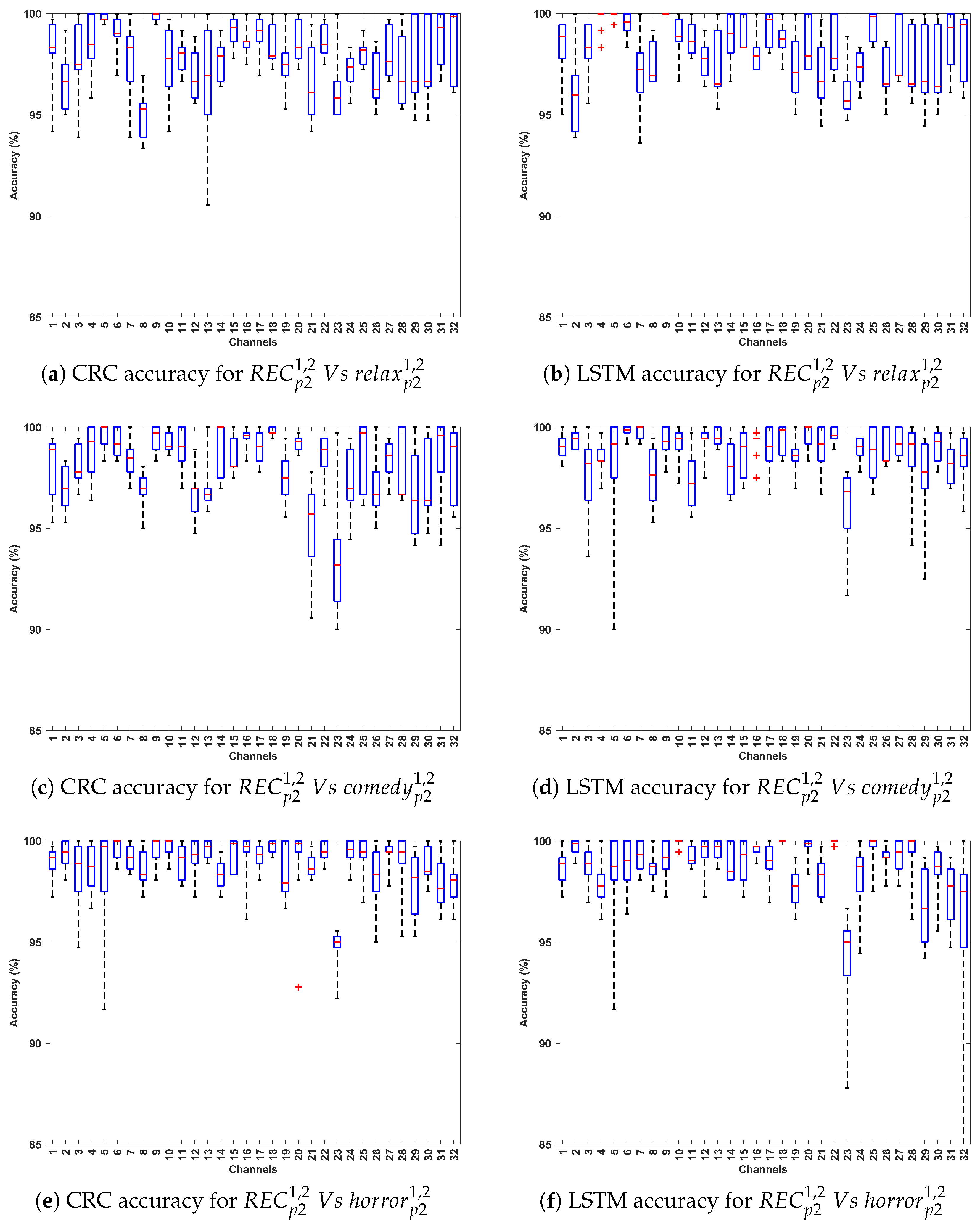

3.1.2. Observations Related to Evaluation 1 Based on DCP 2

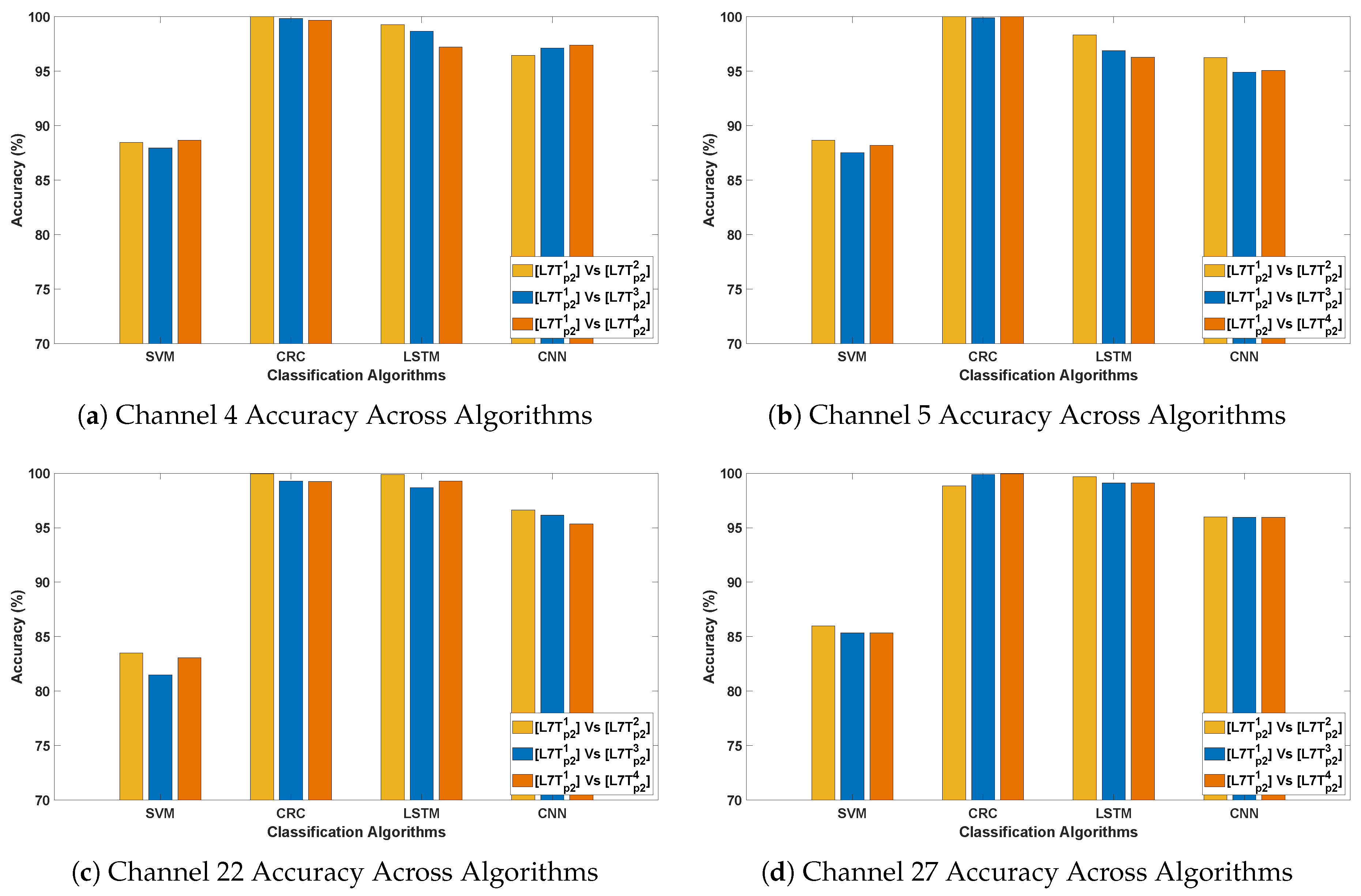

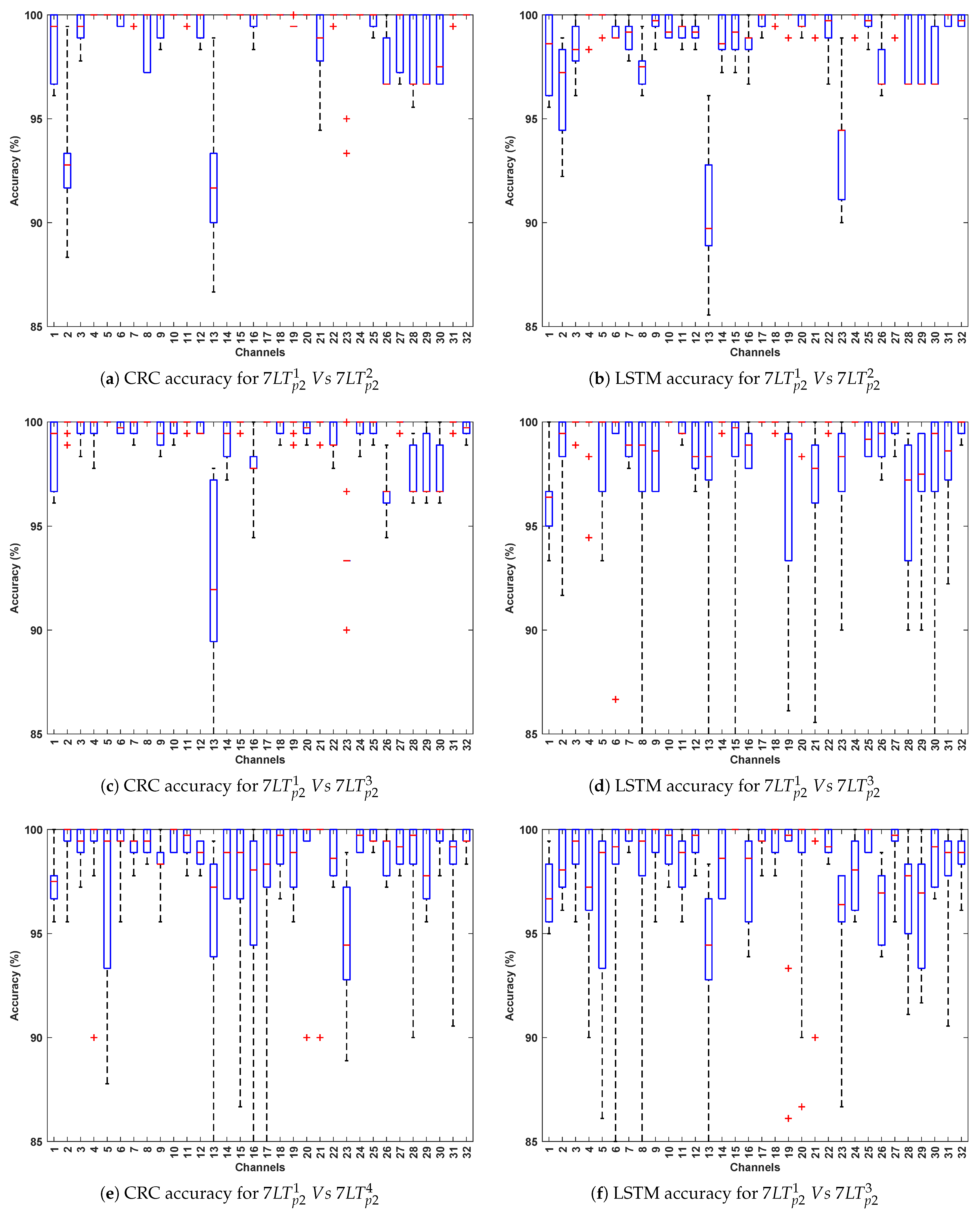

- In DCP 2, the highest classification accuracy (100%) was achieved by the CRC and LSTM classifiers. Across all algorithms, classification accuracy was highest for the evaluation, while it was relatively lower in and evaluations. This suggests that the flickering pattern induces strong and consistent neural responses, particularly in short-term sequential comparisons, which facilitates more accurate differentiation of PD signals from those of healthy controls.

- The best-performing channels (F7, F9, Fc1, C3, P4, Cp2, Fc2, and Fp2) span frontal, fronto-central, central, and parietal scalp regions, and their consistently high accuracy indicates strong discriminative potential for Parkinson’s disease detection. The flickering pattern, designed to elicit steady-state visual responses, may enhance neural activity that fronto-central and parietal channels capture effectively; the resulting stimulus-induced signal variations across these regions likely contribute to the higher classification performance observed under visual stimulation.
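A steady-state visual response of the kind attributed to the flickering stimulus appears as a narrow spectral peak at the flicker frequency. One common way to check a channel for such a peak is a spectral signal-to-noise ratio, sketched below under the assumption of a hypothetical 12 Hz flicker and 256 Hz sampling; the actual flicker and sampling rates of DCP 2 are not stated in this section, and `ssvep_snr` is an illustrative helper, not part of the paper's pipeline.

```python
import numpy as np

def ssvep_snr(signal, fs, stim_freq, n_neighbors=5):
    """Power at the FFT bin nearest `stim_freq` divided by the mean power of the
    `n_neighbors` bins on each side (the target bin itself is excluded).
    Assumes stim_freq lies well inside the spectrum so neighbors exist on both sides."""
    power = np.abs(np.fft.rfft(signal - signal.mean())) ** 2
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    k = int(np.argmin(np.abs(freqs - stim_freq)))
    neighbors = np.concatenate([power[k - n_neighbors:k], power[k + 1:k + 1 + n_neighbors]])
    return power[k] / neighbors.mean()

# Hypothetical 4 s single-channel recording at 256 Hz with a 12 Hz flicker
fs, stim_freq = 256, 12.0
t = np.arange(4 * fs) / fs
rng = np.random.default_rng(0)
noise = rng.normal(size=t.size)
responding = noise + 0.5 * np.sin(2 * np.pi * stim_freq * t)

snr_response = ssvep_snr(responding, fs, stim_freq)  # large: clear steady-state peak
snr_noise = ssvep_snr(noise, fs, stim_freq)          # no consistent peak at 12 Hz
print(snr_response, snr_noise)
```

Comparing the neighbor bins rather than the whole spectrum keeps the ratio insensitive to the overall 1/f shape of EEG power, which is why this local definition is a common choice.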

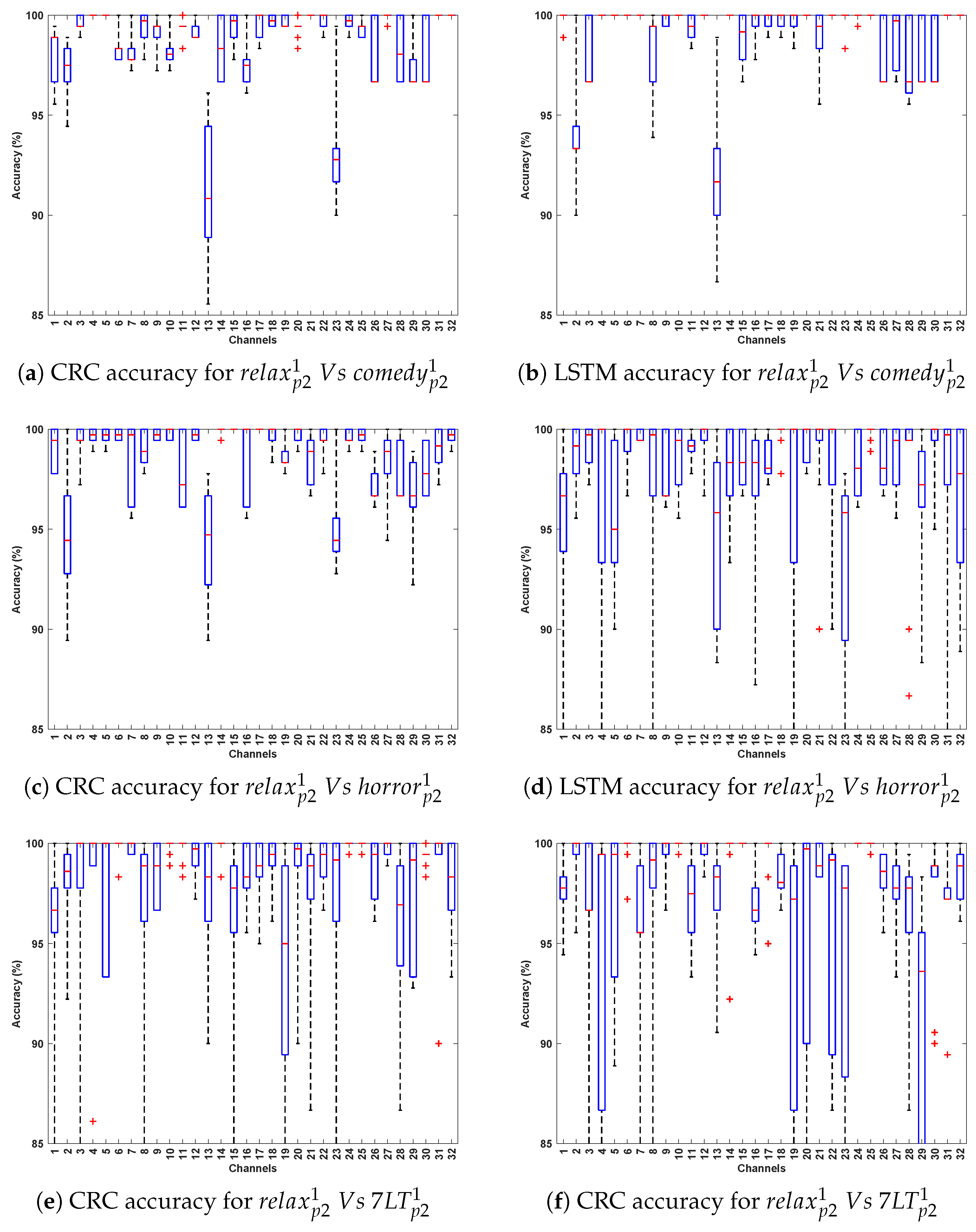

3.2. Evaluation 2

3.2.1. Observations Related to Evaluation 2 Based on DCP 1

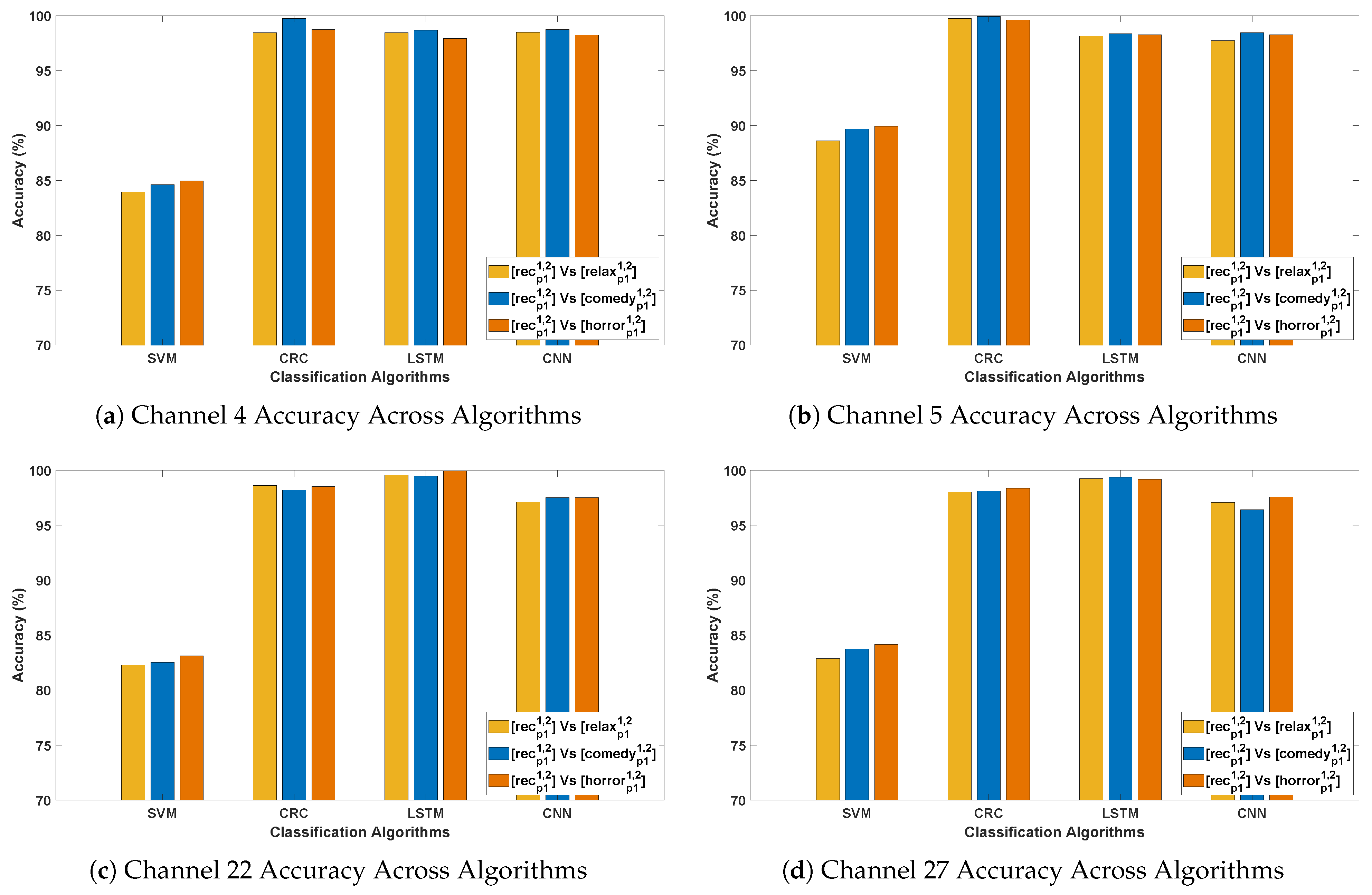

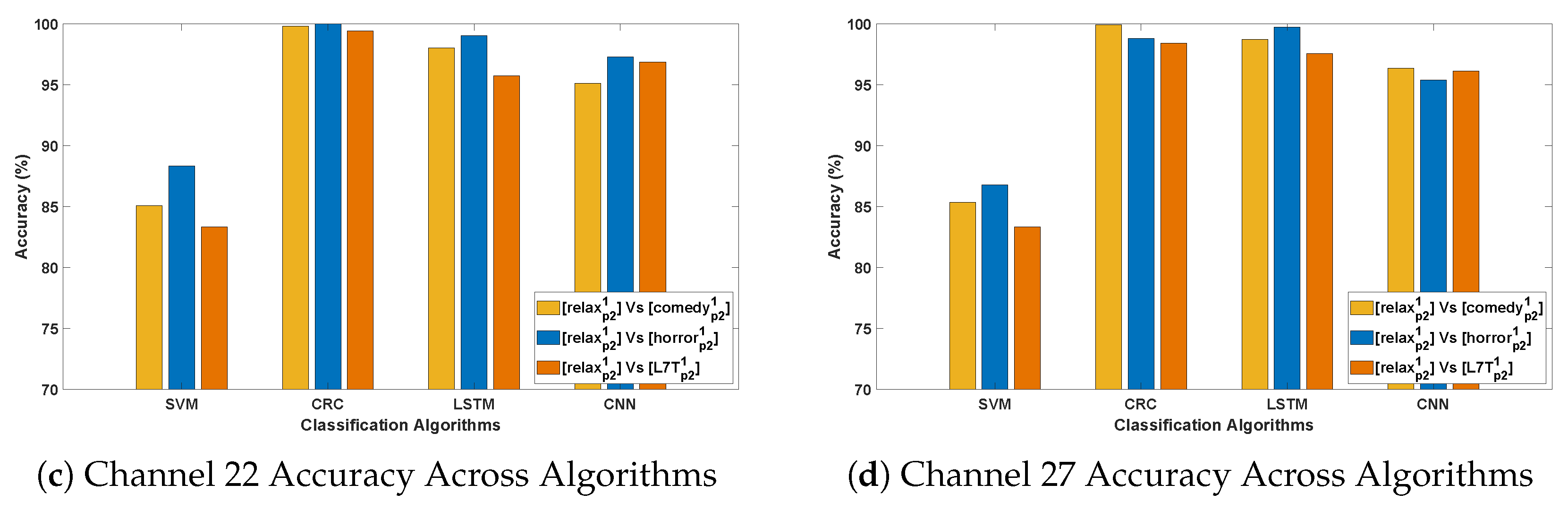

- CRC and LSTM performed best, attaining the highest average classification accuracy (99–100%), which suggests that they capture the spatial and temporal structure of Parkinson’s EEG signals well. CNN also performed strongly in PD detection, while SVM yielded the lowest accuracy among the evaluated methods.

- Emotional processing and cognitive engagement involve frontal and limbic regions, which are often affected by neurodegeneration in Parkinson’s disease. This may make PD-related EEG differences more pronounced under the Horror and Comedy stimuli than under the Relax stimulus, resulting in better classification accuracy. Such differences could reflect stronger neural activation in response to emotionally and cognitively engaging stimuli, leading to clearer differentiation between Parkinson’s and healthy EEG patterns. In contrast, the Relax stimulus might evoke weaker cortical responses, making classification more challenging.

- The best-performing channels across all algorithms were F7, F9, Fc5, Fc2, C3, Cp2, P3, and Fp2. The frontal and fronto-central sites (F7, F9, Fc5, and Fc2) are linked to cognitive processing and attention, which are likely heightened during emotional stimuli such as Horror and Comedy and may thus contribute to the high classification accuracy.
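For reference, the standard collaborative representation classifier with regularized least squares (CRC_RLS) of Zhang et al. [32] codes each test sample y over all training samples jointly, α̂ = (DᵀD + λI)⁻¹Dᵀy, and assigns the class c whose training samples give the smallest normalized residual ‖y − D_c α̂_c‖₂ / ‖α̂_c‖₂. The sketch below follows that formulation; the value of λ, the feature dimensionality and the synthetic data are assumptions, and the configuration used in this study may differ.

```python
import numpy as np

class CRC:
    """Collaborative representation classifier (CRC_RLS), sketched after Zhang et al. [32]."""

    def __init__(self, lam=1e-3):
        self.lam = lam  # ridge parameter lambda (hypothetical default)

    def fit(self, X, y):
        # Columns of D are L2-normalized training samples (dictionary atoms)
        D = X.T / np.linalg.norm(X.T, axis=0, keepdims=True)
        n = D.shape[1]
        # Projection matrix P = (D^T D + lam I)^-1 D^T, computed once from the training set
        self.P = np.linalg.solve(D.T @ D + self.lam * np.eye(n), D.T)
        self.D, self.y = D, np.asarray(y)
        self.classes = np.unique(self.y)
        return self

    def predict(self, X):
        Q = X.T / np.linalg.norm(X.T, axis=0, keepdims=True)  # test samples as unit columns
        A = self.P @ Q  # coding coefficients, one column per test sample
        scores = []
        for c in self.classes:
            idx = self.y == c
            # Class-specific reconstruction residual, normalized by the class coefficient norm
            resid = np.linalg.norm(Q - self.D[:, idx] @ A[idx], axis=0)
            scores.append(resid / np.linalg.norm(A[idx], axis=0))
        return self.classes[np.argmin(np.vstack(scores), axis=0)]

rng = np.random.default_rng(1)

def sample(n_per_class):
    # Hypothetical 64-dimensional features; the classes differ in which block is elevated
    X = rng.normal(size=(2 * n_per_class, 64))
    y = np.repeat([0, 1], n_per_class)
    X[y == 0, :16] += 3.0
    X[y == 1, 16:32] += 3.0
    return X, y

X_tr, y_tr = sample(20)
X_te, y_te = sample(50)
crc_acc = np.mean(CRC().fit(X_tr, y_tr).predict(X_te) == y_te)
print(crc_acc)
```

Because the projection matrix P depends only on the training set, it is computed once in `fit`; classifying a new sample then costs one matrix-vector product plus one residual per class.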

3.2.2. Observations Related to Evaluation 2 Based on DCP 2

- Again, CRC and LSTM consistently outperformed the other models, reinforcing their robustness in classifying Parkinson’s EEG signals. Accuracy peaked for Relax vs. Horror, reaching 93–100% for CRC and 93–99% for LSTM, which suggests a strong neural contrast between these conditions.

- The higher classification accuracy for Relax vs. Horror suggests that the horror stimulus elicits stronger neural activity than the Relax condition, making the EEG patterns more distinguishable.

- The best-performing channels are located in the frontal (F7, F9), fronto-central (Fc1), central (C3), parietal (P4), central–parietal (Cp2), and occipital regions. These regions are involved in emotion processing, motor control, and sensory integration, functions affected in Parkinson’s disease, which may explain their contribution to the high classification accuracy.

3.3. Evaluation 3

- This evaluation introduces greater variability by training on from Protocol 1 and testing on independent instances such as , , , and from Protocol 2. Because recording conditions differ between the protocols, this setting assesses how well the models generalize across stimulus and temporal conditions. As expected, CRC and LSTM achieve the highest accuracy, reaffirming their ability to capture spatial and temporal EEG patterns. CNN maintains consistent performance, whereas SVM lags, highlighting its sensitivity to cross-stimulation evaluation.

- Among the test stimuli, the pairing tested on the Protocol 2 horror stimulus achieves the highest classification accuracy across all algorithms. This suggests that horror stimuli evoke distinct EEG responses that enhance PD classification relative to other conditions. The stronger emotional and cognitive engagement associated with horror may lead to more pronounced neural differences between Parkinson’s and healthy subjects, thereby improving classification performance.

- The most effective channels (F7, F9, Fc1, Fc2, Fc5, C4, Cp2, and P8) are located primarily in the frontal, fronto-central, and central regions, which are involved in motor control, cognitive processing, and sensorimotor integration. These regions, often affected in Parkinson’s disease, consistently yield reliable classification performance across evaluations, even under varying stimulus and protocol conditions.
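The section does not state how the best-performing channels were selected. One simple, hypothetical criterion is to rank channels by their accuracy averaged over the four classifiers; the sketch below applies it to channels 1–5 of the first SVM/CRC/LSTM/CNN column group in the channel-wise table that follows, whose stimulus-pair labels are not recoverable here. The mapping from channel index to electrode name is not given in this section, so channels are identified by index.

```python
import numpy as np

# Channel-wise accuracies (%) for channels 1-5, copied from the first column group
# of the channel-wise table; columns are SVM, CRC, LSTM, CNN.
acc = np.array([
    [80.6, 97.7, 95.8, 98.4],
    [72.2, 92.4, 97.8, 95.1],
    [79.8, 99.9, 99.7, 98.3],
    [86.0, 99.8, 99.8, 99.5],
    [90.1, 98.6, 98.7, 98.3],
])
channels = np.arange(1, acc.shape[0] + 1)

def rank_channels(acc, channels, top_k=3):
    """Hypothetical selection rule: rank channels by mean accuracy across classifiers."""
    mean_acc = acc.mean(axis=1)
    order = np.argsort(-mean_acc, kind="stable")  # descending, ties keep channel order
    return channels[order[:top_k]], mean_acc[order[:top_k]]

top, top_means = rank_channels(acc, channels)
print(top, top_means)  # [5 4 3] with means of about 96.43, 96.28, 94.43
```

Averaging over classifiers lets the weaker SVM pull a channel down, so a rule that ranks by the best classifier per channel could select different electrodes.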

| Ch | SVM | CRC | LSTM | CNN | SVM | CRC | LSTM | CNN | SVM | CRC | LSTM | CNN | SVM | CRC | LSTM | CNN |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 80.6 ± 4.5 | 97.7 ± 2.8 | 95.8 ± 3.8 | 98.4 ± 1.1 | 79.9 ± 2.5 | 97.8 ± 2.1 | 97.3 ± 1.9 | 97.1 ± 1.6 | 81.5 ± 4.7 | 99.9 ± 0.2 | 98.3 ± 1.6 | 98.7 ± 1.1 | 79.0 ± 3.7 | 98.8 ± 1.3 | 98.4 ± 1.2 | 96.5 ± 0.9 |
| 2 | 72.2 ± 3.3 | 92.4 ± 3.5 | 97.8 ± 2.2 | 95.1 ± 3.3 | 71.2 ± 3.1 | 96.8 ± 2.1 | 98.8 ± 1.5 | 97.5 ± 1.3 | 72.4 ± 3.2 | 93.4 ± 3.0 | 97.7 ± 1.2 | 93.3 ± 2.0 | 70.5 ± 3.5 | 93.2 ± 3.2 | 98.7 ± 1.4 | 97.7 ± 1.5 |
| 3 | 79.8 ± 3.8 | 99.9 ± 0.2 | 99.7 ± 0.4 | 98.3 ± 1.3 | 78.5 ± 4.6 | 99.1 ± 1.2 | 98.0 ± 1.9 | 98.7 ± 0.7 | 80.6 ± 4.5 | 98.3 ± 1.5 | 99.8 ± 0.4 | 98.5 ± 1.4 | 78.6 ± 4.2 | 99.2 ± 0.8 | 98.3 ± 1.2 | 97.9 ± 1.7 |
| 4 | 86.0 ± 4.2 | 99.8 ± 0.3 | 99.8 ± 0.4 | 99.5 ± 0.4 | 87.8 ± 3.6 | 99.6 ± 1.1 | 98.9 ± 1.4 | 99.1 ± 0.8 | 87.0 ± 4.0 | 100.0 ± 0.0 | 99.8 ± 0.5 | 98.7 ± 0.8 | 83.6 ± 3.1 | 99.5 ± 0.4 | 99.7 ± 0.4 | 98.2 ± 1.5 |
| 5 | 90.1 ± 3.3 | 98.6 ± 1.5 | 98.7 ± 2.5 | 98.3 ± 2.4 | 89.8 ± 2.8 | 100.0 ± 0.0 | 98.1 ± 2.9 | 98.1 ± 3.5 | 90.7 ± 3.1 | 99.7 ± 0.4 | 99.3 ± 2.0 | 97.5 ± 2.2 | 87.6 ± 3.2 | 98.6 ± 1.4 | 98.9 ± 3.0 | 97.8 ± 2.9 |
| 6 | 83.4 ± 3.4 | 99.8 ± 0.3 | 99.9 ± 0.2 | 98.0 ± 2.0 | 81.2 ± 3.0 | 98.3 ± 1.4 | 98.8 ± 1.8 | 97.9 ± 1.0 | 83.7 ± 4.1 | 100.0 ± 0.0 | 99.9 ± 0.3 | 98.6 ± 0.9 | 83.3 ± 4.1 | 99.6 ± 0.5 | 99.9 ± 0.2 | 98.6 ± 1.0 |
| 7 | 84.4 ± 3.8 | 99.9 ± 0.2 | 98.7 ± 1.2 | 98.2 ± 1.7 | 82.8 ± 3.7 | 98.9 ± 1.1 | 98.8 ± 1.3 | 97.9 ± 1.0 | 85.3 ± 3.9 | 100.0 ± 0.0 | 98.9 ± 0.9 | 96.7 ± 1.8 | 83.0 ± 3.3 | 98.8 ± 1.6 | 98.2 ± 1.9 | 97.9 ± 0.7 |
| 8 | 81.9 ± 3.3 | 99.1 ± 1.2 | 99.4 ± 1.0 | 95.7 ± 1.7 | 83.2 ± 3.8 | 99.6 ± 1.1 | 98.4 ± 1.6 | 96.6 ± 2.2 | 83.2 ± 3.4 | 98.6 ± 1.4 | 99.5 ± 1.0 | 96.4 ± 2.2 | 77.6 ± 3.1 | 98.8 ± 0.9 | 99.7 ± 0.5 | 97.2 ± 1.8 |
| 9 | 74.0 ± 3.1 | 98.3 ± 1.9 | 99.4 ± 1.0 | 99.0 ± 0.9 | 73.2 ± 2.8 | 100.0 ± 0.0 | 97.3 ± 1.6 | 98.7 ± 1.3 | 74.4 ± 3.6 | 99.3 ± 0.8 | 98.4 ± 1.2 | 98.7 ± 1.1 | 73.3 ± 2.7 | 98.8 ± 1.2 | 99.2 ± 1.1 | 98.3 ± 1.2 |
| 10 | 58.9 ± 3.6 | 99.7 ± 0.7 | 99.9 ± 0.3 | 97.9 ± 1.3 | 59.5 ± 3.6 | 98.7 ± 1.4 | 98.9 ± 1.4 | 97.9 ± 1.0 | 58.8 ± 2.9 | 100.0 ± 0.0 | 100.0 ± 0.0 | 97.3 ± 2.4 | 59.2 ± 4.8 | 99.8 ± 0.5 | 99.8 ± 0.5 | 98.1 ± 1.5 |
| 11 | 66.7 ± 4.3 | 98.9 ± 0.9 | 99.6 ± 0.3 | 96.1 ± 2.2 | 68.5 ± 5.8 | 99.4 ± 0.4 | 99.0 ± 0.9 | 95.8 ± 1.4 | 66.9 ± 4.5 | 99.4 ± 0.8 | 99.8 ± 0.3 | 96.1 ± 3.1 | 67.0 ± 4.1 | 96.6 ± 2.4 | 97.9 ± 1.1 | 94.3 ± 1.1 |
| 12 | 75.8 ± 4.3 | 97.6 ± 2.5 | 100.0 ± 0.0 | 96.7 ± 1.8 | 76.3 ± 4.0 | 99.3 ± 0.4 | 99.6 ± 0.7 | 95.3 ± 2.2 | 77.4 ± 5.5 | 100.0 ± 0.0 | 99.8 ± 0.4 | 95.4 ± 2.7 | 74.1 ± 2.9 | 98.1 ± 2.0 | 99.9 ± 0.2 | 96.5 ± 1.5 |
| 13 | 69.6 ± 2.6 | 94.0 ± 3.5 | 99.1 ± 1.2 | 96.2 ± 2.4 | 68.7 ± 4.1 | 93.0 ± 4.2 | 94.1 ± 2.8 | 93.3 ± 4.6 | 69.7 ± 2.9 | 92.3 ± 3.9 | 98.9 ± 1.7 | 88.7 ± 4.0 | 68.4 ± 3.2 | 96.3 ± 3.5 | 98.3 ± 1.0 | 95.1 ± 2.6 |
| 14 | 80.0 ± 4.4 | 100.0 ± 0.0 | 99.4 ± 1.2 | 98.1 ± 2.0 | 79.6 ± 5.1 | 98.3 ± 1.8 | 97.2 ± 3.0 | 96.6 ± 2.0 | 80.0 ± 4.4 | 100.0 ± 0.0 | 99.6 ± 0.9 | 97.7 ± 1.4 | 80.0 ± 4.4 | 99.8 ± 0.5 | 99.6 ± 0.8 | 97.4 ± 2.4 |
| 15 | 77.1 ± 5.2 | 99.4 ± 0.8 | 98.9 ± 1.4 | 98.4 ± 1.2 | 79.7 ± 5.1 | 99.8 ± 0.3 | 98.7 ± 1.3 | 97.9 ± 1.1 | 77.2 ± 5.4 | 99.8 ± 0.4 | 97.9 ± 1.4 | 98.3 ± 1.0 | 76.8 ± 5.1 | 100.0 ± 0.0 | 99.1 ± 1.3 | 98.7 ± 1.0 |
| 16 | 77.7 ± 5.5 | 99.4 ± 0.7 | 99.9 ± 0.2 | 96.7 ± 1.9 | 76.4 ± 4.3 | 98.1 ± 1.6 | 96.7 ± 2.8 | 95.5 ± 2.0 | 78.6 ± 5.4 | 99.7 ± 0.5 | 98.3 ± 1.2 | 96.1 ± 4.1 | 76.0 ± 5.4 | 98.8 ± 1.6 | 99.7 ± 0.5 | 96.3 ± 1.5 |
| 17 | 74.8 ± 4.1 | 99.7 ± 0.7 | 99.7 ± 0.4 | 98.6 ± 1.5 | 76.5 ± 4.2 | 99.4 ± 1.1 | 98.7 ± 0.9 | 97.3 ± 1.0 | 75.5 ± 4.8 | 100.0 ± 0.0 | 99.2 ± 0.7 | 98.4 ± 1.4 | 73.9 ± 4.3 | 99.3 ± 0.7 | 99.1 ± 1.2 | 98.3 ± 1.2 |
| 18 | 73.5 ± 4.2 | 99.7 ± 0.4 | 99.7 ± 0.5 | 96.7 ± 2.5 | 73.1 ± 5.2 | 99.9 ± 0.2 | 98.5 ± 1.6 | 97.4 ± 1.6 | 74.5 ± 4.9 | 99.9 ± 0.2 | 100.0 ± 0.0 | 97.4 ± 2.0 | 71.8 ± 4.4 | 99.7 ± 0.4 | 98.9 ± 1.0 | 97.7 ± 0.9 |
| 19 | 76.4 ± 9.6 | 97.1 ± 2.6 | 96.7 ± 1.9 | 96.2 ± 2.4 | 75.8 ± 8.0 | 99.7 ± 0.3 | 96.1 ± 2.0 | 98.2 ± 1.2 | 76.8 ± 9.3 | 99.3 ± 0.8 | 99.1 ± 0.9 | 96.1 ± 2.4 | 75.2 ± 8.3 | 96.9 ± 2.4 | 97.3 ± 1.6 | 96.1 ± 2.5 |
| 20 | 85.7 ± 2.5 | 98.4 ± 1.5 | 99.2 ± 0.6 | 96.6 ± 2.6 | 85.2 ± 2.8 | 99.6 ± 0.4 | 98.8 ± 2.0 | 95.7 ± 1.7 | 86.7 ± 3.1 | 99.6 ± 0.5 | 99.8 ± 0.5 | 96.4 ± 1.7 | 84.9 ± 2.2 | 99.4 ± 0.5 | 99.9 ± 0.2 | 96.2 ± 1.7 |
| 21 | 77.2 ± 8.1 | 95.2 ± 3.1 | 99.6 ± 1.0 | 98.4 ± 1.4 | 80.9 ± 7.5 | 100.0 ± 0.0 | 99.4 ± 1.5 | 98.1 ± 1.1 | 79.0 ± 8.8 | 97.6 ± 1.6 | 99.1 ± 1.0 | 97.9 ± 0.8 | 76.2 ± 7.3 | 95.8 ± 1.5 | 99.7 ± 0.4 | 96.6 ± 1.8 |
| 22 | 87.8 ± 2.7 | 98.9 ± 1.7 | 99.8 ± 0.4 | 97.2 ± 2.6 | 86.0 ± 3.7 | 99.9 ± 0.2 | 99.4 ± 0.8 | 97.8 ± 1.2 | 88.7 ± 3.8 | 99.9 ± 0.4 | 99.7 ± 0.7 | 96.7 ± 2.5 | 84.6 ± 3.7 | 98.6 ± 1.5 | 99.8 ± 0.3 | 96.8 ± 1.0 |
| 23 | 63.8 ± 2.9 | 98.8 ± 1.8 | 97.2 ± 2.4 | 97.8 ± 2.4 | 67.5 ± 4.2 | 93.4 ± 3.0 | 91.6 ± 2.3 | 94.9 ± 1.2 | 65.3 ± 3.3 | 96.7 ± 3.3 | 94.6 ± 3.2 | 98.5 ± 1.1 | 64.0 ± 2.6 | 95.1 ± 2.4 | 94.5 ± 3.5 | 95.8 ± 2.6 |
| 24 | 77.0 ± 4.6 | 97.3 ± 1.4 | 100.0 ± 0.0 | 98.8 ± 0.7 | 79.3 ± 4.4 | 99.6 ± 0.5 | 98.3 ± 1.5 | 98.9 ± 1.0 | 78.1 ± 4.7 | 99.3 ± 0.8 | 99.9 ± 0.2 | 98.6 ± 1.3 | 76.6 ± 4.1 | 99.4 ± 0.7 | 99.9 ± 0.2 | 98.8 ± 0.8 |
| 25 | 84.5 ± 4.4 | 97.5 ± 3.0 | 99.4 ± 1.0 | 96.2 ± 2.7 | 84.2 ± 4.8 | 99.6 ± 0.4 | 98.4 ± 2.5 | 98.4 ± 1.1 | 86.0 ± 3.8 | 99.4 ± 0.6 | 99.3 ± 1.1 | 97.3 ± 2.1 | 82.3 ± 4.0 | 98.2 ± 2.4 | 99.3 ± 1.0 | 96.3 ± 2.7 |
| 26 | 69.0 ± 3.5 | 95.4 ± 2.3 | 98.5 ± 1.3 | 97.7 ± 1.8 | 72.7 ± 6.0 | 97.8 ± 1.7 | 98.8 ± 1.5 | 97.4 ± 1.5 | 70.3 ± 4.4 | 97.6 ± 1.6 | 99.1 ± 0.9 | 97.5 ± 1.9 | 68.9 ± 3.3 | 96.2 ± 1.4 | 99.0 ± 0.7 | 97.3 ± 1.5 |
| 27 | 85.3 ± 1.9 | 97.1 ± 2.1 | 98.8 ± 0.9 | 95.6 ± 2.2 | 85.3 ± 2.3 | 99.9 ± 0.2 | 98.9 ± 1.8 | 97.0 ± 1.6 | 87.0 ± 1.9 | 98.7 ± 1.4 | 99.9 ± 0.3 | 97.9 ± 1.5 | 83.5 ± 1.9 | 97.5 ± 1.5 | 98.5 ± 1.3 | 95.9 ± 2.8 |
| 28 | 79.6 ± 3.4 | 96.6 ± 1.9 | 98.4 ± 1.8 | 97.6 ± 1.5 | 82.6 ± 3.9 | 98.1 ± 1.6 | 98.9 ± 1.5 | 97.3 ± 1.6 | 80.4 ± 3.7 | 97.7 ± 1.7 | 99.4 ± 1.0 | 97.2 ± 2.6 | 78.3 ± 3.4 | 97.9 ± 1.4 | 98.8 ± 2.1 | 98.3 ± 1.9 |
| 29 | 73.6 ± 3.4 | 96.6 ± 2.1 | 97.6 ± 1.4 | 97.3 ± 1.3 | 75.0 ± 5.9 | 97.9 ± 1.7 | 97.9 ± 1.3 | 97.4 ± 1.2 | 73.6 ± 4.0 | 97.7 ± 1.7 | 99.1 ± 1.1 | 96.6 ± 1.4 | 73.0 ± 3.6 | 96.0 ± 2.3 | 95.7 ± 2.4 | 96.4 ± 2.1 |
| 30 | 73.8 ± 4.1 | 96.6 ± 2.6 | 99.1 ± 1.0 | 97.8 ± 1.5 | 74.7 ± 2.8 | 98.8 ± 1.4 | 99.2 ± 1.3 | 97.2 ± 1.5 | 75.8 ± 4.6 | 97.6 ± 1.6 | 99.7 ± 0.5 | 95.7 ± 3.9 | 72.7 ± 3.2 | 97.3 ± 2.1 | 99.1 ± 0.7 | 98.7 ± 0.9 |
| 31 | 79.6 ± 2.7 | 97.9 ± 3.3 | 96.9 ± 2.0 | 98.0 ± 1.1 | 81.0 ± 5.1 | 100.0 ± 0.0 | 96.1 ± 3.4 | 98.4 ± 1.2 | 80.6 ± 3.3 | 99.2 ± 1.4 | 99.1 ± 1.1 | 97.7 ± 1.2 | 77.6 ± 2.7 | 98.9 ± 1.4 | 96.2 ± 2.5 | 97.1 ± 1.3 |
| 32 | 80.7 ± 4.6 | 99.8 ± 0.3 | 98.6 ± 0.9 | 98.9 ± 1.0 | 82.7 ± 4.1 | 99.7 ± 1.1 | 99.2 ± 2.0 | 98.7 ± 0.7 | 82.8 ± 4.0 | 100.0 ± 0.0 | 98.3 ± 3.4 | 98.1 ± 1.2 | 80.1 ± 4.4 | 99.4 ± 0.6 | 99.4 ± 0.8 | 98.8 ± 0.7 |

4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Philipe de Souza Ferreira, L.; André da Silva, R.; Marques Mesquita da Costa, M.; Moraes de Paiva Roda, V.; Vizcaino, S.; Janisset, N.R.L.L.; Ramos Vieira, R.; Marcos Sanches, J.; Maria Soares Junior, J.; de Jesus Simões, M. Sex differences in Parkinson’s Disease: An emerging health question. Clinics 2022, 77, 100121.

2. Zirra, A.; Rao, S.C.; Bestwick, J.; Rajalingam, R.; Marras, C.; Blauwendraat, C.; Mata, I.F.; Noyce, A.J. Gender differences in the prevalence of Parkinson’s disease. Mov. Disord. Clin. Pract. 2023, 10, 86–93.

3. Marsili, L.; Rizzo, G.; Colosimo, C. Diagnostic criteria for Parkinson’s disease: From James Parkinson to the concept of prodromal disease. Front. Neurol. 2018, 9, 156.

4. Postuma, R.B.; Berg, D.; Stern, M.; Poewe, W.; Olanow, C.W.; Oertel, W.; Obeso, J.; Marek, K.; Litvan, I.; Lang, A.E.; et al. MDS clinical diagnostic criteria for Parkinson’s disease. Mov. Disord. 2015, 30, 1591–1601.

5. Narayanan, N.S.; Rodnitzky, R.L.; Uc, E.Y. Prefrontal dopamine signaling and cognitive symptoms of Parkinson’s disease. Rev. Neurosci. 2013, 24, 267–278.

6. Ye, Z. Mapping neuromodulatory systems in Parkinson’s disease: Lessons learned beyond dopamine. Curr. Med. 2022, 1, 15.

7. Buddhala, C.; Loftin, S.K.; Kuley, B.M.; Cairns, N.J.; Campbell, M.C.; Perlmutter, J.S.; Kotzbauer, P.T. Dopaminergic, serotonergic, and noradrenergic deficits in Parkinson disease. Ann. Clin. Transl. Neurol. 2015, 2, 949–959.

8. Beach, T.G.; Adler, C.H. Importance of low diagnostic accuracy for early Parkinson’s disease. Mov. Disord. 2018, 33, 1551–1554.

9. Sheng, J.; Wang, B.; Zhang, Q.; Liu, Q.; Ma, Y.; Liu, W.; Shao, M.; Chen, B. A novel joint HCPMMP method for automatically classifying Alzheimer’s and different stage MCI patients. Behav. Brain Res. 2019, 365, 210–221.

10. Raghavendra, U.; Acharya, U.R.; Adeli, H. Artificial intelligence techniques for automated diagnosis of neurological disorders. Eur. Neurol. 2019, 82, 41–64.

11. Bigdely-Shamlo, N.; Mullen, T.; Kothe, C.; Su, K.M.; Robbins, K.A. The PREP pipeline: Standardized preprocessing for large-scale EEG analysis. Front. Neuroinform. 2015, 9, 16.

12. Cole, S.; Voytek, B. Cycle-by-cycle analysis of neural oscillations. J. Neurophysiol. 2019, 122, 849–861.

13. Gimenez-Aparisi, G.; Guijarro-Estelles, E.; Chornet-Lurbe, A.; Diaz-Roman, M.; Hao, D.; Li, G.; Ye-Lin, Y. Early detection of Parkinson’s disease based on beta dynamic features and beta-gamma coupling from non-invasive resting state EEG: Influence of the eyes. Biomed. Signal Process. Control 2025, 107, 107868.

14. Victor Paul M, A.; Shankar, S. Deep learning-based method for detecting Parkinson using 1D convolutional neural networks and improved jellyfish algorithms. Int. J. Electr. Comput. Eng. Syst. 2024, 15, 515–522.

15. Loh, H.W.; Ooi, C.P.; Palmer, E.; Barua, P.D.; Dogan, S.; Tuncer, T.; Baygin, M.; Acharya, U.R. GaborPDNet: Gabor Transformation and Deep Neural Network for Parkinson’s Disease Detection Using EEG Signals. Electronics 2021, 10, 1740.

16. de Oliveira, A.P.S.; de Santana, M.A.; Andrade, M.K.S.; Gomes, J.C.; Rodrigues, M.C.A.; dos Santos, W.P. Early diagnosis of Parkinson’s disease using EEG, machine learning and partial directed coherence. Res. Biomed. Eng. 2020, 36, 311–331.

17. Ezazi, Y.; Ghaderyan, P. Textural feature of EEG signals as a new biomarker of reward processing in Parkinson’s disease detection. Biocybern. Biomed. Eng. 2022, 42, 950–962.

18. Hassin-Baer, S.; Cohen, O.S.; Israeli-Korn, S.; Yahalom, G.; Benizri, S.; Sand, D.; Issachar, G.; Geva, A.B.; Shani-Hershkovich, R.; Peremen, Z. Identification of an early-stage Parkinson’s disease neuromarker using event-related potentials, brain network analytics and machine-learning. PLoS ONE 2022, 17, e0261947.

19. Shah, S.A.A.; Zhang, L.; Bais, A. Dynamical system based compact deep hybrid network for classification of Parkinson disease related EEG signals. Neural Netw. 2020, 130, 75–84.

20. Wang, X.; Huang, J.; Chatzakou, M.; Medijainen, K.; Taba, P.; Toomela, A.; Nomm, S.; Ruzhansky, M. A light-weight CNN model for efficient Parkinson’s disease diagnostics. In Proceedings of the 2023 IEEE 36th International Symposium on Computer-Based Medical Systems (CBMS), L’Aquila, Italy, 22–24 June 2023; pp. 616–621.

21. Vanegas, M.I.; Ghilardi, M.F.; Kelly, S.P.; Blangero, A. Machine learning for EEG-based biomarkers in Parkinson’s disease. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; IEEE: New York, NY, USA, 2018.

22. Aslam, A.R.; Altaf, M.A.B. An On-Chip Processor for Chronic Neurological Disorders Assistance Using Negative Affectivity Classification. IEEE Trans. Biomed. Circuits Syst. 2020, 14, 838–851.

23. Dar, M.N.; Akram, M.U.; Yuvaraj, R.; Gul Khawaja, S.; Murugappan, M. EEG-based emotion charting for Parkinson’s disease patients using Convolutional Recurrent Neural Networks and cross dataset learning. Comput. Biol. Med. 2022, 144, 105327.

24. He, S.B.; Liu, C.Y.; Chen, L.D.; Ye, Z.N.; Zhang, Y.P.; Tang, W.G.; Wang, B.d.; Gao, X. Meta-analysis of visual evoked potential and Parkinson’s disease. Park. Dis. 2018, 2018, 3201308.

25. Molcho, L.; Maimon, N.B.; Hezi, N.; Zeimer, T.; Intrator, N.; Gurevich, T. Evaluation of Parkinson’s Disease Early Diagnosis Using Single-Channel EEG Features and Auditory Cognitive Assessment. Front. Neurol. 2023, 14, 1273458.

26. Wu, H.; Qi, J.; Purwanto, E.; Zhu, X.; Yang, P.; Chen, J. Multi-Scale Feature and Multi-Channel Selection toward Parkinson’s Disease Diagnosis with EEG. arXiv 2024, arXiv:382338819.

27. Maitín, A.M.; García-Tejedor, A.J.; Muñoz, J.P.R. Machine learning approaches for detecting Parkinson’s disease from EEG analysis: A systematic review. Appl. Sci. 2020, 10, 8662.

28. Belyaev, M.; Murugappan, M.; Velichko, A.; Korzun, D. Entropy-based machine learning model for fast diagnosis and monitoring of Parkinson’s disease. Sensors 2023, 23, 8609.

29. Guo, D.; Guo, F.; Zhang, Y.; Li, F.; Xia, Y.; Xu, P.; Yao, D. Periodic visual stimulation induces resting-state brain network reconfiguration. Front. Comput. Neurosci. 2018, 12, 21.

30. Petro, N.M.; Ott, L.R.; Penhale, S.H.; Rempe, M.P.; Embury, C.M.; Picci, G.; Wang, Y.P.; Stephen, J.M.; Calhoun, V.D.; Wilson, T.W. Eyes-closed versus eyes-open differences in spontaneous neural dynamics during development. Neuroimage 2022, 258, 119337.

31. İşcan, Z.; Nikulin, V.V. Steady state visual evoked potential (SSVEP) based brain-computer interface (BCI) performance under different perturbations. PLoS ONE 2018, 13, e0191673.

32. Zhang, L.; Yang, M.; Feng, X. Sparse representation or collaborative representation: Which helps face recognition? In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 471–478.

33. Dubbelink, K.T.O.; Hillebrand, A.; Twisk, J.W.; Deijen, J.B.; Stoffers, D.; Scherder, E.J.; Stam, C.J. Predicting dementia in Parkinson disease by combining neurophysiologic and cognitive markers. Neurology 2014, 82, 263–270.

34. Babiloni, C.; De Pandis, M.F.; Vecchio, F.; Buffo, P.; Sorpresi, F.; Frisoni, G.B.; Rossini, P.M. Cortical sources of resting state EEG rhythms in Parkinson’s disease related dementia and Alzheimer’s disease. Clin. Neurophysiol. 2011, 122, 2355–2364.

35. Caviness, J.N.; Utianski, R.L.; Hentz, J.G.; Beach, T.G.; Dugger, B.N.; Shill, H.A.; Adler, C.H. Spectral EEG abnormalities in Parkinson’s disease without dementia. Clin. Neurophysiol. 2007, 118, 2510–2515.

36. Bosboom, J.L.; Stoffers, D.; Wolters, E.C.; Stam, C.J.; Berendse, H.W. MEG resting state functional connectivity in Parkinson’s disease related dementia. J. Neural Transm. 2006, 113, 593–598.

37. Shaban, M.; Amara, A.W. Resting-state electroencephalography based deep-learning for the detection of Parkinson’s disease. PLoS ONE 2022, 17, e0263159.

38. Geraedts, V.J.; Boon, L.I.; Marinus, J.; Gouw, A.A.; van Hilten, J.J.; Stam, C.J.; Tannemaat, M.R.; Contarino, M.F. Clinical correlates of quantitative EEG in Parkinson disease. Neurology 2018, 91, 871–883.

39. Gérard, M.; Bayot, M.; Derambure, P.; Dujardin, K.; Defebvre, L.; Betrouni, N.; Delval, A. EEG-based functional connectivity and executive control in patients with Parkinson’s disease and freezing of gait. Clin. Neurophysiol. 2022, 137, 207–215.

40. Little, S.; Pogosyan, A.; Kuhn, A.A.; Brown, P. β band stability over time correlates with Parkinsonian rigidity and bradykinesia. Exp. Neurol. 2012, 236, 383–388.

41. Morita, A.; Kamei, S.; Mizutani, T. Relationship between slowing of the EEG and cognitive impairment in Parkinson disease. J. Clin. Neurophysiol. 2011, 28, 384–387.

42. Aljalal, M.; Aldosari, S.A.; AlSharabi, K.; Abdurraqeeb, A.M.; Alturki, F.A. Parkinson’s disease detection from resting-state EEG signals using common spatial pattern, entropy, and Machine Learning Techniques. Diagnostics 2022, 12, 1033.

43. Siuly, S.; Khare, S.K.; Kabir, E.; Sadiq, M.T.; Wang, H. An efficient Parkinson’s disease detection framework: Leveraging time-frequency representation and AlexNet Convolutional Neural Network. Comput. Biol. Med. 2024, 174, 108462.

44. Qiu, L.; Li, J.; Zhong, L.; Feng, W.; Zhou, C.; Pan, J. A novel EEG-based Parkinson’s disease detection model using multiscale convolutional Prototype Networks. IEEE Trans. Instrum. Meas. 2024, 73, 1–14.

| Data Collection Protocol | Stimulus | Notation | No. of Subjects (30 HC & 30 PD) | Samples per Subject (Ch × Samples per Channel) | Total Samples (Samples × Subjects) |
|---|---|---|---|---|---|
| Data Collection Protocol 1 | Resting State, Eyes Closed | | 60 | 192 (32 × 6) | 103,680 (1728 × 60) |
| | Resting State, Eyes Closed | | 60 | 192 (32 × 6) | |
| | Relax State | | 60 | 192 (32 × 6) | |
| | Relax State | | 60 | 192 (32 × 6) | |
| | Comedy State | | 60 | 224 (32 × 7) | |
| | Comedy State | | 60 | 224 (32 × 7) | |
| | Horror State | | 60 | 256 (32 × 8) | |
| | Horror State | | 60 | 256 (32 × 8) | |
| Data Collection Protocol 2 | Alphanumeric Flickering | | 60 | 192 (32 × 6) | 99,840 (1664 × 60) |
| | Alphanumeric Flickering | | 60 | 192 (32 × 6) | |
| | Alphanumeric Flickering | | 60 | 192 (32 × 6) | |
| | Alphanumeric Flickering | | 60 | 192 (32 × 6) | |
| | Relax State | | 60 | 192 (32 × 6) | |
| | Comedy State | | 60 | 256 (32 × 8) | |
| | Horror State | | 60 | 448 (32 × 14) | |
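The sample counts in the database table can be checked with a short calculation: each per-subject entry is 32 channels multiplied by a per-recording factor (read here as the number of samples per channel, an interpretation rather than a stated definition), and each protocol total is the per-subject sum multiplied by the 60 subjects. The dictionary keys below are descriptive placeholders, since the original stimulus notations are not recoverable.

```python
# Per-recording factors (samples per channel) taken from the database table;
# dictionary keys are descriptive placeholders for the lost stimulus notations.
N_CHANNELS = 32
N_SUBJECTS = 60  # 30 HC + 30 PD

dcp1 = {"resting eyes closed": [6, 6], "relax": [6, 6], "comedy": [7, 7], "horror": [8, 8]}
dcp2 = {"alphanumeric flickering": [6, 6, 6, 6], "relax": [6], "comedy": [8], "horror": [14]}

def protocol_totals(recordings):
    """Return (samples per subject, total samples) for one data collection protocol."""
    per_subject = sum(N_CHANNELS * k for factors in recordings.values() for k in factors)
    return per_subject, per_subject * N_SUBJECTS

p1 = protocol_totals(dcp1)
p2 = protocol_totals(dcp2)
print(p1, p2, p1[1] + p2[1])  # (1728, 103680) (1664, 99840) 203520
```

Summing both protocols reproduces the 203,520 samples reported for ParEEG in the Introduction.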

| Ch | SVM | CRC | LSTM | CNN | SVM | CRC | LSTM | CNN | SVM | CRC | LSTM | CNN | SVM | CRC | LSTM | CNN |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 79.0 ± 6.2 | 97.8 ± 2.0 | 99.3 ± 1.1 | 96.6 ± 1.4 | 78.1 ± 4.2 | 97.6 ± 1.7 | 98.8 ± 1.2 | 93.7 ± 2.4 | 78.6 ± 4.12 | 98.5 ± 1.8 | 98.2 ± 1.1 | 95.7 ± 2.7 | 78.9 ± 4.0 | 98.6 ± 1.5 | 98.3 ± 1.8 | 95.6 ± 2.9 |
| 2 | 69.1 ± 4.2 | 97.4 ± 1.5 | 98.9 ± 0.8 | 96.5 ± 1.1 | 68.3 ± 3.9 | 95.1 ± 2.8 | 98.8 ± 1.6 | 96.2 ± 1.8 | 68.7 ± 3.68 | 93.8 ± 3.1 | 99.7 ± 0.7 | 96.6 ± 2.4 | 70.1 ± 4.0 | 96.1 ± 2.7 | 100.0 ± 0.2 | 95.7 ± 1.9 |
| 3 | 78.9 ± 3.0 | 98.9 ± 1.4 | 97.8 ± 1.6 | 97.8 ± 1.0 | 80.3 ± 3.9 | 97.8 ± 2.0 | 97.8 ± 2.5 | 93.3 ± 3.0 | 80.3 ± 3.99 | 98.0 ± 1.5 | 95.7 ± 6.3 | 93.3 ± 7.7 | 79.7 ± 4.6 | 97.9 ± 1.3 | 94.8 ± 8.8 | 93.1 ± 7.3 |
| 4 | 83.1 ± 4.7 | 99.1 ± 1.3 | 99.7 ± 0.2 | 97.0 ± 1.3 | 84.4 ± 5.1 | 99.1 ± 1.1 | 98.3 ± 1.8 | 97.0 ± 2.1 | 85.1 ± 4.59 | 100.0 ± 0.2 | 97.0 ± 1.2 | 97.1 ± 2.1 | 85.7 ± 3.9 | 98.1 ± 1.9 | 96.1 ± 1.3 | 96.1 ± 1.9 |
| 5 | 83.8 ± 6.6 | 98.4 ± 1.4 | 97.4 ± 3.0 | 97.1 ± 3.2 | 90.8 ± 1.8 | 99.7 ± 0.3 | 97.4 ± 3.2 | 95.3 ± 3.7 | 91.0 ± 1.61 | 100.0 ± 0.0 | 97.6 ± 2.9 | 97.2 ± 2.8 | 90.3 ± 1.9 | 99.8 ± 0.5 | 98.3 ± 2.7 | 97.3 ± 1.7 |
| 6 | 79.5 ± 4.5 | 96.8 ± 2.5 | 99.6 ± 0.7 | 96.8 ± 1.8 | 82.0 ± 3.9 | 99.1 ± 0.4 | 99.5 ± 0.7 | 98.1 ± 1.3 | 82.8 ± 4.31 | 99.9 ± 0.2 | 98.7 ± 1.5 | 95.7 ± 3.2 | 83.2 ± 4.5 | 99.1 ± 1.3 | 98.3 ± 1.7 | 97.6 ± 2.1 |
| 7 | 76.6 ± 6.1 | 98.2 ± 1.7 | 99.1 ± 1.0 | 95.5 ± 1.5 | 78.1 ± 4.5 | 97.0 ± 2.4 | 99.6 ± 0.5 | 95.4 ± 1.9 | 78.5 ± 4.60 | 97.7 ± 1.3 | 98.8 ± 1.3 | 95.1 ± 1.6 | 78.8 ± 3.4 | 99.5 ± 0.9 | 99.0 ± 1.0 | 95.2 ± 2.7 |
| 8 | 77.6 ± 4.6 | 96.8 ± 1.9 | 97.7 ± 1.9 | 95.2 ± 2.6 | 77.7 ± 4.7 | 96.5 ± 1.9 | 97.8 ± 0.9 | 93.2 ± 2.1 | 78.2 ± 4.19 | 99.1 ± 0.7 | 99.0 ± 1.0 | 92.3 ± 2.5 | 79.0 ± 3.5 | 97.3 ± 2.5 | 99.2 ± 1.1 | 95.2 ± 3.4 |
| 9 | 67.6 ± 4.1 | 99.2 ± 0.7 | 98.7 ± 1.2 | 96.5 ± 1.2 | 74.3 ± 3.4 | 100.0 ± 0.1 | 99.7 ± 0.4 | 98.0 ± 1.3 | 74.7 ± 3.58 | 100.0 ± 0.0 | 99.8 ± 0.4 | 96.7 ± 1.6 | 74.7 ± 3.6 | 100.0 ± 0.0 | 99.2 ± 0.9 | 96.9 ± 2.9 |
| 10 | 56.9 ± 4.3 | 95.5 ± 2.9 | 98.5 ± 1.5 | 96.4 ± 2.1 | 59.0 ± 3.6 | 99.1 ± 1.3 | 99.6 ± 0.7 | 95.2 ± 1.7 | 59.0 ± 3.82 | 99.8 ± 0.3 | 99.8 ± 0.5 | 92.8 ± 2.9 | 58.9 ± 4.4 | 100.0 ± 0.0 | 99.8 ± 0.3 | 96.8 ± 2.2 |
| 11 | 68.2 ± 3.7 | 95.5 ± 1.8 | 99.3 ± 0.6 | 94.8 ± 2.0 | 67.8 ± 3.3 | 98.1 ± 1.3 | 97.6 ± 2.0 | 95.5 ± 1.5 | 68.3 ± 3.68 | 99.4 ± 1.0 | 98.7 ± 1.4 | 94.8 ± 2.6 | 68.2 ± 4.0 | 100.0 ± 0.2 | 99.5 ± 0.6 | 95.8 ± 2.2 |
| 12 | 75.2 ± 5.5 | 97.5 ± 2.1 | 99.0 ± 1.0 | 93.3 ± 2.3 | 76.5 ± 4.3 | 96.7 ± 1.8 | 99.4 ± 0.4 | 96.7 ± 2.0 | 76.8 ± 4.46 | 99.3 ± 0.4 | 100.0 ± 0.0 | 94.1 ± 2.6 | 77.1 ± 4.4 | 99.6 ± 0.9 | 99.4 ± 0.6 | 95.2 ± 1.7 |
| 13 | 69.7 ± 4.0 | 96.1 ± 4.3 | 98.3 ± 0.8 | 92.8 ± 4.3 | 69.3 ± 3.4 | 96.8 ± 2.3 | 99.3 ± 0.7 | 95.7 ± 2.1 | 69.4 ± 3.11 | 96.5 ± 1.8 | 99.3 ± 1.1 | 91.5 ± 1.6 | 68.7 ± 3.6 | 96.7 ± 1.2 | 99.5 ± 0.8 | 94.7 ± 1.6 |
| 14 | 78.0 ± 3.2 | 97.1 ± 1.9 | 98.0 ± 1.7 | 95.3 ± 1.3 | 79.0 ± 4.1 | 98.8 ± 1.6 | 99.0 ± 1.4 | 96.2 ± 1.4 | 79.6 ± 4.23 | 99.8 ± 1.8 | 99.2 ± 1.3 | 95.6 ± 1.5 | 79.1 ± 5.0 | 99.3 ± 0.8 | 98.7 ± 1.0 | 97.1 ± 1.7 |
| 15 | 74.7 ± 6.2 | 100.0 ± 0.0 | 99.2 ± 0.9 | 97.2 ± 1.9 | 77.5 ± 5.6 | 98.0 ± 1.7 | 97.0 ± 5.7 | 96.3 ± 1.2 | 77.6 ± 6.17 | 98.0 ± 1.7 | 99.0 ± 1.3 | 97.5 ± 1.1 | 76.6 ± 6.3 | 98.0 ± 1.7 | 96.7 ± 5.8 | 94.8 ± 1.9 |
| 16 | 73.2 ± 4.8 | 97.8 ± 1.6 | 99.6 ± 0.9 | 94.6 ± 2.1 | 72.2 ± 4.7 | 97.6 ± 1.0 | 97.3 ± 4.7 | 94.8 ± 2.3 | 73.3 ± 4.56 | 97.8 ± 2.6 | 97.9 ± 4.3 | 94.7 ± 1.7 | 74.3 ± 3.7 | 99.6 ± 1.0 | 98.3 ± 4.1 | 96.7 ± 2.2 |
| 17 | 72.3 ± 5.2 | 97.7 ± 1.9 | 97.4 ± 1.6 | 94.5 ± 2.2 | 73.3 ± 4.9 | 99.1 ± 1.0 | 98.1 ± 1.5 | 97.3 ± 1.5 | 73.1 ± 4.92 | 99.0 ± 1.6 | 98.3 ± 1.3 | 96.5 ± 1.7 | 73.1 ± 4.9 | 98.2 ± 1.9 | 97.6 ± 1.6 | 94.6 ± 1.9 |
| 18 | 70.5 ± 4.8 | 96.3 ± 1.9 | 100.0 ± 0.0 | 93.8 ± 2.8 | 68.5 ± 4.2 | 98.5 ± 0.9 | 98.8 ± 1.5 | 95.8 ± 2.2 | 69.7 ± 4.44 | 98.7 ± 2.2 | 99.7 ± 0.6 | 95.4 ± 2.0 | 69.3 ± 4.4 | 99.6 ± 0.4 | 100.0 ± 0.0 | 96.6 ± 1.9 |
| 19 | 72.0 ± 9.2 | 95.5 ± 3.6 | 99.1 ± 0.7 | 92.1 ± 2.3 | 73.3 ± 9.1 | 96.8 ± 2.6 | 94.2 ± 6.2 | 96.9 ± 1.8 | 74.6 ± 8.93 | 97.8 ± 1.9 | 98.0 ± 1.5 | 97.1 ± 1.6 | 74.1 ± 8.5 | 98.4 ± 1.2 | 97.0 ± 1.9 | 96.0 ± 2.0 |
| 20 | 81.6 ± 6.3 | 95.8 ± 2.9 | 99.4 ± 1.6 | 93.7 ± 1.1 | 84.7 ± 3.7 | 97.7 ± 1.8 | 98.1 ± 4.4 | 98.6 ± 1.4 | 85.3 ± 4.27 | 98.2 ± 2.4 | 99.5 ± 0.7 | 94.4 ± 2.6 | 85.0 ± 2.8 | 99.1 ± 1.9 | 98.8 ± 0.9 | 96.7 ± 2.2 |
| 21 | 68.0 ± 6.1 | 96.3 ± 2.9 | 99.5 ± 1.1 | 93.6 ± 2.6 | 75.2 ± 5.7 | 96.1 ± 2.6 | 98.6 ± 1.1 | 96.1 ± 1.5 | 76.2 ± 5.16 | 97.8 ± 1.9 | 99.3 ± 0.7 | 95.0 ± 2.4 | 77.5 ± 4.6 | 96.3 ± 2.4 | 97.0 ± 2.1 | 95.4 ± 1.8 |
| 22 | 78.6 ± 5.2 | 97.8 ± 1.5 | 99.9 ± 0.2 | 95.4 ± 2.0 | 81.1 ± 4.5 | 98.5 ± 1.6 | 99.6 ± 0.3 | 96.8 ± 1.3 | 82.9 ± 4.57 | 98.7 ± 1.2 | 99.5 ± 0.5 | 95.5 ± 2.4 | 83.0 ± 4.6 | 99.8 ± 0.3 | 98.7 ± 1.1 | 96.8 ± 1.5 |
| 23 | 58.7 ± 3.6 | 100.0 ± 0.1 | 99.4 ± 0.3 | 97.2 ± 1.4 | 64.1 ± 3.0 | 92.3 ± 3.1 | 91.8 ± 4.0 | 89.5 ± 3.7 | 65.0 ± 2.40 | 93.4 ± 2.8 | 94.8 ± 1.4 | 91.0 ± 3.7 | 66.1 ± 2.5 | 92.3 ± 2.9 | 92.6 ± 3.1 | 92.3 ± 3.3 |
| 24 | 69.8 ± 5.3 | 98.6 ± 1.3 | 99.7 ± 0.5 | 96.5 ± 1.1 | 77.4 ± 3.4 | 94.7 ± 2.3 | 97.0 ± 1.8 | 97.0 ± 2.7 | 77.7 ± 3.86 | 96.8 ± 2.1 | 94.9 ± 2.3 | 95.6 ± 1.8 | 77.7 ± 3.9 | 96.8 ± 1.2 | 98.5 ± 1.4 | 96.2 ± 1.8 |
| 25 | 80.6 ± 3.3 | 98.2 ± 1.6 | 99.2 ± 1.2 | 95.7 ± 1.2 | 79.6 ± 4.4 | 96.9 ± 1.3 | 97.8 ± 2.2 | 96.2 ± 1.6 | 78.7 ± 4.05 | 99.2 ± 1.3 | 98.8 ± 1.4 | 95.8 ± 2.2 | 78.8 ± 4.7 | 99.3 ± 0.7 | 99.1 ± 1.1 | 96.6 ± 1.7 |
| 26 | 72.4 ± 3.0 | 96.0 ± 2.8 | 99.2 ± 1.8 | 95.8 ± 1.7 | 69.0 ± 1.9 | 96.7 ± 1.2 | 99.5 ± 0.9 | 95.6 ± 2.0 | 68.9 ± 1.57 | 98.0 ± 1.7 | 99.2 ± 1.1 | 94.9 ± 2.5 | 69.3 ± 3.1 | 98.0 ± 1.7 | 98.7 ± 1.1 | 96.6 ± 2.0 |
| 27 | 77.4 ± 5.8 | 97.0 ± 2.2 | 100.0 ± 0.0 | 96.1 ± 2.2 | 83.9 ± 4.1 | 96.8 ± 2.7 | 97.7 ± 1.9 | 95.5 ± 1.3 | 85.1 ± 3.99 | 97.2 ± 1.9 | 98.1 ± 1.4 | 94.9 ± 2.0 | 86.0 ± 3.1 | 97.9 ± 2.4 | 98.2 ± 1.4 | 97.3 ± 1.8 |
| 28 | 79.2 ± 4.3 | 96.5 ± 2.7 | 99.4 ± 1.1 | 93.8 ± 2.6 | 77.0 ± 5.0 | 96.2 ± 2.0 | 97.0 ± 3.8 | 95.0 ± 1.9 | 79.0 ± 5.19 | 97.3 ± 1.9 | 99.0 ± 1.3 | 97.0 ± 1.4 | 80.5 ± 4.6 | 97.8 ± 1.6 | 99.4 ± 1.1 | 97.0 ± 1.5 |

| 29 | 68.2 ± 4.5 | 97.4 ± 1.8 | 98.8 ± 1.1 | 95.4 ± 1.7 | 72.3 ± 4.4 | 96.2 ± 2.1 | 95.2 ± 5.8 | 93.1 ± 2.8 | 73.0 ± 3.99 | 97.5 ± 2.0 | 96.5 ± 4.1 | 94.6 ± 1.4 | 73.1 ± 4.1 | 97.8 ± 1.6 | 98.4 ± 1.9 | 93.2 ± 2.1 |

| 30 | 70.5 ± 3.8 | 95.0 ± 3.9 | 98.7 ± 1.1 | 94.7 ± 1.4 | 74.1 ± 4.8 | 96.3 ± 2.0 | 98.1 ± 1.2 | 94.6 ± 2.2 | 74.5 ± 4.69 | 96.8 ± 2.3 | 97.7 ± 2.7 | 96.5 ± 1.9 | 75.9 ± 4.4 | 96.8 ± 1.6 | 95.8 ± 2.5 | 97.0 ± 2.6 |

| 31 | 75.2 ± 4.9 | 98.7 ± 1.7 | 98.0 ± 1.2 | 97.8 ± 1.9 | 77.2 ± 4.2 | 98.7 ± 1.7 | 97.6 ± 1.1 | 95.2 ± 2.1 | 78.3 ± 4.49 | 98.7 ± 1.7 | 98.1 ± 1.6 | 94.5 ± 2.8 | 78.6 ± 4.5 | 99.6 ± 0.7 | 95.5 ± 5.3 | 97.0 ± 1.1 |

| 32 | 81.0 ± 5.2 | 99.3 ± 1.1 | 99.1 ± 0.5 | 97.8 ± 1.0 | 81.3 ± 4.8 | 98.4 ± 1.7 | 99.1 ± 0.7 | 96.1 ± 2.4 | 81.5 ± 5.30 | 98.3 ± 1.7 | 98.1 ± 1.3 | 96.7 ± 1.8 | 81.0 ± 5.4 | 97.8 ± 2.3 | 97.9 ± 1.6 | 96.2 ± 1.9 |

| Ch | SVM | CRC | LSTM | CNN | SVM | CRC | LSTM | CNN | SVM | CRC | LSTM | CNN |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 82.0 ± 4.8 | 98.5 ± 1.7 | 96.5 ± 2.1 | 93.6 ± 2.3 | 80.3 ± 2.9 | 98.1 ± 1.9 | 97.6 ± 1.3 | 93.6 ± 2.5 | 83.5 ± 3.2 | 98.5 ± 1.7 | 96.8 ± 1.4 | 94.2 ± 2.5 |

| 2 | 72.0 ± 3.3 | 92.8 ± 3.0 | 98.5 ± 2.4 | 91.9 ± 4.1 | 70.3 ± 3.0 | 96.5 ± 2.6 | 99.5 ± 1.3 | 95.2 ± 1.8 | 72.3 ± 3.0 | 99.8 ± 0.4 | 98.4 ± 1.4 | 95.8 ± 2.1 |

| 3 | 80.2 ± 5.3 | 99.3 ± 0.7 | 99.8 ± 0.4 | 96.2 ± 2.3 | 77.7 ± 4.4 | 98.5 ± 1.1 | 99.2 ± 0.8 | 96.8 ± 1.3 | 77.8 ± 4.6 | 99.7 ± 0.5 | 99.0 ± 1.3 | 98.1 ± 0.6 |

| 4 | 88.5 ± 2.9 | 100.0 ± 0.0 | 99.3 ± 1.7 | 96.5 ± 1.8 | 88.0 ± 3.4 | 99.8 ± 0.5 | 98.7 ± 3.0 | 97.1 ± 1.7 | 88.7 ± 3.7 | 99.7 ± 0.7 | 97.2 ± 2.9 | 97.4 ± 2.3 |

| 5 | 88.7 ± 3.6 | 100.0 ± 0.0 | 98.3 ± 2.7 | 96.2 ± 3.0 | 87.5 ± 3.1 | 99.9 ± 0.4 | 96.9 ± 4.5 | 94.9 ± 3.7 | 88.2 ± 2.9 | 100.0 ± 0.0 | 96.3 ± 4.6 | 95.1 ± 3.9 |

| 6 | 81.4 ± 3.7 | 99.8 ± 0.3 | 98.6 ± 4.0 | 96.1 ± 1.8 | 79.5 ± 3.7 | 99.2 ± 0.5 | 99.1 ± 1.3 | 95.6 ± 3.0 | 80.2 ± 3.1 | 99.7 ± 0.3 | 97.7 ± 4.4 | 97.1 ± 1.5 |

| 7 | 84.9 ± 3.4 | 100.0 ± 0.2 | 99.1 ± 0.7 | 96.1 ± 2.2 | 82.0 ± 3.0 | 99.0 ± 0.8 | 99.2 ± 0.6 | 97.3 ± 1.4 | 83.1 ± 3.4 | 99.7 ± 0.4 | 99.8 ± 0.4 | 97.5 ± 1.3 |

| 8 | 83.3 ± 4.1 | 99.2 ± 1.3 | 97.0 ± 5.1 | 94.2 ± 5.9 | 82.3 ± 3.2 | 97.5 ± 1.0 | 97.5 ± 5.8 | 94.8 ± 6.3 | 83.6 ± 2.7 | 100.0 ± 0.0 | 97.3 ± 5.5 | 93.6 ± 5.9 |

| 9 | 75.2 ± 3.8 | 99.6 ± 0.6 | 98.4 ± 1.6 | 96.5 ± 2.4 | 73.9 ± 3.2 | 99.6 ± 0.6 | 98.5 ± 1.2 | 96.2 ± 2.3 | 74.0 ± 3.3 | 99.5 ± 0.6 | 99.1 ± 1.5 | 95.9 ± 2.4 |

| 10 | 60.7 ± 3.4 | 100.0 ± 0.0 | 100.0 ± 0.0 | 96.3 ± 1.3 | 59.7 ± 4.3 | 99.3 ± 0.5 | 99.7 ± 0.5 | 96.7 ± 1.4 | 59.6 ± 4.5 | 99.8 ± 0.4 | 99.3 ± 0.9 | 95.8 ± 1.9 |

| 11 | 65.0 ± 2.8 | 99.9 ± 0.2 | 99.6 ± 0.4 | 92.7 ± 2.7 | 65.1 ± 2.8 | 99.3 ± 0.5 | 99.4 ± 0.8 | 93.5 ± 3.0 | 65.7 ± 2.3 | 99.9 ± 0.2 | 98.3 ± 1.5 | 93.3 ± 2.0 |

| 12 | 75.3 ± 7.0 | 99.5 ± 0.7 | 98.6 ± 1.1 | 94.2 ± 4.1 | 72.4 ± 4.9 | 99.2 ± 0.5 | 98.8 ± 0.6 | 96.4 ± 1.7 | 74.1 ± 4.9 | 99.6 ± 0.3 | 99.5 ± 0.7 | 94.0 ± 1.8 |

| 13 | 69.6 ± 2.8 | 92.2 ± 3.6 | 96.8 ± 4.8 | 89.2 ± 5.5 | 67.8 ± 2.9 | 90.6 ± 3.5 | 95.1 ± 4.9 | 91.7 ± 4.2 | 69.1 ± 3.7 | 92.2 ± 4.8 | 93.8 ± 4.0 | 93.5 ± 3.3 |

| 14 | 80.0 ± 4.4 | 100.0 ± 0.0 | 100.0 ± 0.2 | 97.2 ± 1.1 | 80.0 ± 4.4 | 98.8 ± 1.0 | 98.5 ± 1.5 | 95.3 ± 1.4 | 80.0 ± 4.4 | 99.1 ± 1.0 | 98.3 ± 1.5 | 95.8 ± 2.7 |

| 15 | 78.0 ± 5.9 | 100.0 ± 0.0 | 97.6 ± 4.9 | 96.3 ± 2.2 | 78.2 ± 6.2 | 99.0 ± 1.1 | 97.4 ± 3.8 | 96.2 ± 2.0 | 80.3 ± 6.6 | 100.0 ± 0.2 | 100.0 ± 0.0 | 96.7 ± 2.2 |

| 16 | 73.6 ± 5.0 | 99.6 ± 0.6 | 98.8 ± 0.8 | 93.8 ± 2.9 | 71.3 ± 2.8 | 98.6 ± 1.0 | 96.4 ± 4.6 | 95.2 ± 2.1 | 71.5 ± 3.0 | 97.7 ± 1.5 | 97.8 ± 2.0 | 95.0 ± 2.7 |

| 17 | 76.0 ± 4.6 | 100.0 ± 0.0 | 100.0 ± 0.0 | 97.5 ± 2.1 | 76.3 ± 5.1 | 99.8 ± 0.4 | 97.1 ± 4.7 | 97.8 ± 1.2 | 77.0 ± 4.9 | 100.0 ± 0.0 | 99.4 ± 0.7 | 94.1 ± 3.0 |

| 18 | 70.1 ± 5.1 | 100.0 ± 0.0 | 100.0 ± 0.2 | 95.5 ± 2.6 | 67.9 ± 4.3 | 99.9 ± 0.2 | 99.0 ± 1.3 | 97.3 ± 1.6 | 68.2 ± 4.6 | 99.8 ± 0.4 | 99.5 ± 0.7 | 95.6 ± 2.5 |

| 19 | 67.8 ± 8.7 | 99.6 ± 0.2 | 96.7 ± 4.3 | 94.6 ± 1.8 | 66.8 ± 6.8 | 99.8 ± 0.5 | 98.5 ± 1.5 | 97.6 ± 1.6 | 67.2 ± 7.4 | 99.8 ± 0.4 | 97.8 ± 4.3 | 96.4 ± 1.8 |

| 20 | 83.0 ± 5.6 | 100.0 ± 0.0 | 99.8 ± 0.5 | 96.9 ± 1.7 | 80.8 ± 3.4 | 99.6 ± 0.4 | 98.9 ± 3.0 | 98.1 ± 1.2 | 81.8 ± 3.9 | 99.7 ± 0.4 | 97.5 ± 4.6 | 95.8 ± 2.6 |

| 21 | 85.3 ± 5.1 | 98.6 ± 1.8 | 96.3 ± 4.0 | 91.6 ± 4.9 | 85.6 ± 5.0 | 99.9 ± 0.4 | 99.0 ± 3.0 | 95.8 ± 3.7 | 86.3 ± 7.0 | 99.9 ± 0.4 | 99.0 ± 3.0 | 95.1 ± 3.9 |

| 22 | 83.5 ± 7.3 | 100.0 ± 0.2 | 99.9 ± 0.2 | 96.6 ± 1.6 | 81.5 ± 4.6 | 99.3 ± 1.1 | 98.7 ± 1.1 | 96.2 ± 1.5 | 83.1 ± 3.8 | 99.2 ± 0.7 | 99.3 ± 0.7 | 95.3 ± 2.8 |

| 23 | 67.2 ± 5.1 | 98.8 ± 2.5 | 97.5 ± 2.8 | 95.2 ± 2.1 | 71.1 ± 4.6 | 93.8 ± 3.3 | 94.5 ± 3.2 | 96.1 ± 2.1 | 73.0 ± 4.2 | 93.7 ± 2.9 | 95.5 ± 3.2 | 96.3 ± 3.3 |

| 24 | 81.3 ± 4.2 | 100.0 ± 0.0 | 100.0 ± 0.0 | 96.0 ± 2.1 | 81.1 ± 3.3 | 99.9 ± 0.4 | 99.6 ± 0.5 | 96.8 ± 1.6 | 81.2 ± 4.3 | 99.7 ± 0.6 | 97.9 ± 1.7 | 96.6 ± 2.2 |

| 25 | 84.2 ± 3.3 | 99.7 ± 0.4 | 99.2 ± 0.8 | 95.9 ± 2.7 | 82.1 ± 4.4 | 99.6 ± 0.5 | 99.6 ± 0.3 | 97.5 ± 1.3 | 86.0 ± 3.0 | 99.8 ± 0.4 | 99.7 ± 0.5 | 95.3 ± 2.8 |

| 26 | 67.5 ± 4.7 | 97.8 ± 1.5 | 99.2 ± 0.9 | 95.8 ± 2.4 | 68.1 ± 5.9 | 97.5 ± 1.5 | 98.7 ± 1.0 | 96.9 ± 2.3 | 71.5 ± 4.8 | 96.5 ± 1.3 | 96.5 ± 1.8 | 93.3 ± 3.1 |

| 27 | 86.0 ± 2.1 | 98.8 ± 1.5 | 99.7 ± 0.5 | 96.0 ± 2.1 | 85.3 ± 2.3 | 99.9 ± 0.4 | 99.1 ± 0.8 | 96.0 ± 1.8 | 85.3 ± 2.3 | 100.0 ± 0.2 | 99.1 ± 1.4 | 96.0 ± 1.2 |

| 28 | 82.0 ± 3.8 | 97.8 ± 1.9 | 96.1 ± 3.0 | 95.7 ± 3.4 | 83.6 ± 4.4 | 98.0 ± 1.7 | 98.5 ± 2.9 | 95.8 ± 3.6 | 86.3 ± 4.2 | 97.4 ± 1.3 | 97.0 ± 2.5 | 96.2 ± 2.6 |

| 29 | 76.0 ± 4.7 | 98.0 ± 1.6 | 97.2 ± 2.6 | 95.3 ± 1.7 | 77.4 ± 6.2 | 98.0 ± 1.7 | 98.1 ± 1.6 | 95.6 ± 1.8 | 78.9 ± 5.9 | 97.7 ± 1.5 | 96.4 ± 2.7 | 95.5 ± 1.6 |

| 30 | 78.7 ± 4.8 | 98.2 ± 1.6 | 96.8 ± 5.1 | 97.4 ± 1.4 | 77.5 ± 3.7 | 97.7 ± 1.4 | 99.5 ± 0.8 | 97.7 ± 1.2 | 79.2 ± 3.6 | 97.6 ± 1.3 | 98.7 ± 1.3 | 96.3 ± 2.2 |

| 31 | 82.7 ± 3.6 | 100.0 ± 0.2 | 97.6 ± 2.6 | 96.5 ± 2.0 | 84.5 ± 4.3 | 99.8 ± 0.3 | 98.0 ± 2.8 | 96.1 ± 2.2 | 85.1 ± 4.1 | 100.0 ± 0.2 | 97.7 ± 2.7 | 96.7 ± 1.5 |

| 32 | 83.2 ± 3.9 | 100.0 ± 0.0 | 99.7 ± 0.5 | 97.0 ± 2.0 | 84.1 ± 4.0 | 99.7 ± 0.3 | 99.6 ± 0.5 | 95.5 ± 1.8 | 85.3 ± 3.3 | 99.6 ± 0.5 | 98.7 ± 1.1 | 96.0 ± 2.3 |

| Ch | SVM | CRC | LSTM | CNN | SVM | CRC | LSTM | CNN | SVM | CRC | LSTM | CNN |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 79.2 ± 5.1 | 98.1 ± 1.9 | 99.0 ± 0.6 | 97.5 ± 1.4 | 79.8 ± 5.0 | 98.1 ± 1.7 | 96.9 ± 6.6 | 97.4 ± 0.9 | 79.6 ± 4.8 | 97.9 ± 1.7 | 97.1 ± 5.0 | 97.6 ± 1.6 |

| 2 | 69.0 ± 4.0 | 96.6 ± 1.4 | 99.4 ± 0.4 | 97.8 ± 0.8 | 69.0 ± 4.0 | 96.1 ± 2.0 | 99.3 ± 0.6 | 97.8 ± 0.9 | 69.6 ± 3.7 | 97.0 ± 1.1 | 99.7 ± 0.4 | 98.4 ± 0.9 |

| 3 | 80.3 ± 3.1 | 97.6 ± 1.9 | 97.6 ± 1.8 | 96.3 ± 1.6 | 80.3 ± 3.1 | 98.2 ± 1.2 | 98.2 ± 1.7 | 97.6 ± 1.4 | 80.8 ± 3.4 | 98.1 ± 1.0 | 98.8 ± 0.9 | 97.8 ± 1.3 |

| 4 | 84.0 ± 4.9 | 98.5 ± 1.4 | 98.5 ± 0.7 | 98.5 ± 1.2 | 84.6 ± 4.5 | 99.8 ± 0.6 | 98.7 ± 1.0 | 98.7 ± 0.6 | 85.0 ± 4.2 | 98.7 ± 1.4 | 97.9 ± 1.2 | 98.3 ± 1.1 |

| 5 | 88.6 ± 3.8 | 99.8 ± 0.2 | 98.1 ± 2.9 | 97.8 ± 2.7 | 89.7 ± 2.9 | 100.0 ± 0.2 | 98.4 ± 2.5 | 98.5 ± 2.3 | 89.9 ± 3.0 | 99.6 ± 0.6 | 98.3 ± 2.4 | 98.3 ± 2.5 |

| 6 | 83.0 ± 3.1 | 99.0 ± 0.9 | 99.8 ± 0.3 | 98.4 ± 0.4 | 83.5 ± 3.2 | 99.5 ± 0.6 | 99.6 ± 0.5 | 98.6 ± 0.9 | 83.5 ± 3.6 | 99.3 ± 0.7 | 98.9 ± 1.2 | 99.1 ± 0.7 |

| 7 | 78.3 ± 4.3 | 97.9 ± 1.8 | 99.7 ± 0.4 | 98.2 ± 1.4 | 79.1 ± 4.1 | 97.1 ± 1.9 | 99.1 ± 0.6 | 97.8 ± 1.2 | 79.8 ± 3.7 | 98.4 ± 0.9 | 99.2 ± 0.7 | 98.4 ± 1.0 |

| 8 | 77.6 ± 4.7 | 95.0 ± 1.1 | 97.6 ± 1.3 | 95.0 ± 0.9 | 77.9 ± 4.5 | 97.5 ± 1.0 | 98.6 ± 0.9 | 96.5 ± 0.7 | 78.3 ± 4.1 | 96.9 ± 0.9 | 98.7 ± 0.6 | 97.0 ± 2.0 |

| 9 | 72.0 ± 3.0 | 99.8 ± 0.2 | 99.3 ± 0.7 | 98.6 ± 0.8 | 72.4 ± 3.0 | 100.0 ± 0.0 | 99.6 ± 0.6 | 98.8 ± 0.7 | 73.3 ± 3.5 | 99.5 ± 0.7 | 99.1 ± 0.8 | 98.6 ± 0.8 |

| 10 | 58.4 ± 3.7 | 97.6 ± 1.8 | 99.3 ± 0.8 | 97.8 ± 1.0 | 58.7 ± 4.0 | 98.8 ± 1.2 | 99.7 ± 0.5 | 98.2 ± 1.4 | 58.8 ± 4.1 | 99.2 ± 0.5 | 100.0 ± 0.2 | 98.4 ± 1.1 |

| 11 | 68.2 ± 3.3 | 97.8 ± 0.8 | 97.5 ± 1.4 | 96.8 ± 1.4 | 68.3 ± 3.8 | 98.8 ± 0.8 | 99.0 ± 0.8 | 96.2 ± 2.0 | 68.5 ± 3.9 | 99.0 ± 1.0 | 99.2 ± 0.5 | 96.7 ± 1.7 |

| 12 | 76.2 ± 4.8 | 97.0 ± 1.2 | 99.4 ± 0.7 | 97.1 ± 2.0 | 76.5 ± 5.0 | 97.7 ± 0.9 | 99.1 ± 0.9 | 96.3 ± 2.4 | 77.0 ± 5.0 | 96.8 ± 1.2 | 99.4 ± 0.8 | 96.8 ± 1.5 |

| 13 | 70.2 ± 3.6 | 96.7 ± 3.0 | 99.6 ± 0.4 | 96.5 ± 2.4 | 69.9 ± 3.5 | 97.6 ± 1.8 | 99.6 ± 0.4 | 95.4 ± 1.6 | 69.6 ± 3.5 | 97.2 ± 1.5 | 99.5 ± 0.5 | 96.2 ± 1.5 |

| 14 | 79.0 ± 3.9 | 97.7 ± 1.1 | 98.0 ± 1.1 | 97.9 ± 1.3 | 79.5 ± 3.8 | 98.9 ± 1.1 | 98.3 ± 0.7 | 97.9 ± 1.6 | 79.2 ± 4.1 | 99.0 ± 1.4 | 99.0 ± 0.9 | 98.4 ± 1.0 |

| 15 | 76.9 ± 6.1 | 99.1 ± 0.8 | 98.7 ± 1.1 | 98.4 ± 0.6 | 77.3 ± 5.6 | 99.0 ± 0.8 | 99.4 ± 0.7 | 98.4 ± 0.8 | 77.4 ± 5.8 | 98.6 ± 1.0 | 99.0 ± 1.0 | 98.2 ± 1.0 |

| 16 | 74.1 ± 5.3 | 98.6 ± 0.6 | 99.2 ± 0.6 | 96.1 ± 2.3 | 74.3 ± 5.4 | 98.0 ± 0.9 | 99.4 ± 1.1 | 96.0 ± 2.4 | 75.4 ± 5.6 | 99.5 ± 0.5 | 99.5 ± 0.3 | 96.4 ± 1.4 |

| 17 | 73.0 ± 4.9 | 99.0 ± 1.0 | 98.9 ± 1.0 | 98.7 ± 0.7 | 73.2 ± 4.9 | 99.3 ± 0.8 | 99.3 ± 0.5 | 96.9 ± 1.5 | 73.5 ± 4.7 | 99.0 ± 0.8 | 98.8 ± 1.0 | 98.1 ± 1.0 |

| 18 | 69.9 ± 4.6 | 98.3 ± 1.0 | 99.5 ± 0.7 | 98.1 ± 1.1 | 70.2 ± 4.5 | 98.8 ± 0.9 | 99.7 ± 0.3 | 97.3 ± 1.0 | 70.6 ± 4.9 | 99.8 ± 0.2 | 100.0 ± 0.0 | 96.4 ± 1.6 |

| 19 | 73.1 ± 9.9 | 97.6 ± 1.3 | 98.6 ± 0.9 | 97.4 ± 1.1 | 73.5 ± 10.0 | 97.4 ± 1.8 | 98.4 ± 1.2 | 96.6 ± 1.4 | 74.0 ± 9.6 | 97.5 ± 1.1 | 97.8 ± 0.9 | 97.2 ± 1.5 |

| 20 | 85.6 ± 3.8 | 98.6 ± 1.1 | 99.6 ± 0.6 | 96.4 ± 1.5 | 85.8 ± 4.4 | 98.5 ± 1.4 | 99.0 ± 2.1 | 97.3 ± 1.5 | 86.0 ± 4.0 | 99.2 ± 0.4 | 99.7 ± 0.5 | 96.3 ± 1.1 |

| 21 | 72.5 ± 6.7 | 96.6 ± 1.8 | 99.0 ± 1.0 | 97.1 ± 1.5 | 73.5 ± 6.4 | 96.9 ± 1.6 | 98.8 ± 0.5 | 97.4 ± 1.8 | 74.2 ± 6.3 | 95.0 ± 2.4 | 98.2 ± 0.9 | 96.9 ± 1.5 |

| 22 | 82.3 ± 3.7 | 98.6 ± 0.8 | 99.6 ± 0.4 | 97.1 ± 1.9 | 82.5 ± 4.5 | 98.2 ± 1.3 | 99.5 ± 0.4 | 97.5 ± 1.8 | 83.1 ± 4.6 | 98.5 ± 1.1 | 100.0 ± 0.1 | 97.5 ± 1.3 |

| 23 | 63.0 ± 3.7 | 96.2 ± 1.6 | 96.1 ± 1.8 | 95.1 ± 1.6 | 63.5 ± 3.0 | 96.0 ± 1.2 | 94.7 ± 0.9 | 94.8 ± 1.8 | 64.3 ± 2.6 | 93.5 ± 2.9 | 94.1 ± 2.4 | 95.8 ± 2.0 |

| 24 | 75.6 ± 4.1 | 97.1 ± 0.9 | 98.9 ± 0.5 | 98.2 ± 0.8 | 76.5 ± 4.4 | 97.3 ± 0.8 | 99.5 ± 0.6 | 98.1 ± 1.1 | 76.8 ± 4.1 | 97.2 ± 1.5 | 98.3 ± 1.6 | 98.2 ± 0.7 |

| 25 | 81.0 ± 3.6 | 98.1 ± 0.6 | 98.6 ± 1.4 | 96.3 ± 1.3 | 81.0 ± 3.5 | 99.5 ± 0.7 | 99.2 ± 1.0 | 97.0 ± 2.0 | 81.3 ± 4.0 | 98.8 ± 1.7 | 99.6 ± 0.8 | 96.3 ± 2.4 |

| 26 | 70.8 ± 2.0 | 96.8 ± 1.3 | 99.0 ± 0.8 | 97.0 ± 1.3 | 71.0 ± 2.1 | 97.0 ± 1.2 | 98.3 ± 1.5 | 97.6 ± 1.0 | 70.0 ± 2.0 | 97.0 ± 1.4 | 99.1 ± 0.6 | 97.3 ± 1.1 |

| 27 | 82.9 ± 3.8 | 98.0 ± 1.3 | 99.3 ± 0.7 | 97.1 ± 0.7 | 83.8 ± 3.8 | 98.1 ± 1.6 | 99.4 ± 0.6 | 96.4 ± 1.5 | 84.2 ± 3.3 | 98.4 ± 1.0 | 99.2 ± 0.8 | 97.6 ± 0.9 |

| 28 | 78.6 ± 4.9 | 97.1 ± 1.8 | 98.6 ± 1.7 | 97.1 ± 1.6 | 79.2 ± 4.4 | 97.5 ± 1.7 | 99.1 ± 1.4 | 97.5 ± 1.4 | 78.7 ± 4.3 | 97.9 ± 1.6 | 99.5 ± 1.1 | 97.3 ± 1.5 |

| 29 | 71.5 ± 4.0 | 97.4 ± 2.1 | 97.5 ± 2.0 | 95.9 ± 0.8 | 72.4 ± 4.1 | 97.4 ± 2.0 | 97.7 ± 1.5 | 96.4 ± 1.4 | 72.8 ± 3.9 | 97.0 ± 2.2 | 96.9 ± 1.7 | 96.4 ± 1.2 |

| 30 | 73.3 ± 4.3 | 97.4 ± 2.0 | 99.1 ± 0.7 | 97.1 ± 1.3 | 73.8 ± 4.5 | 97.5 ± 2.0 | 98.9 ± 0.9 | 98.3 ± 1.2 | 74.4 ± 4.4 | 97.2 ± 2.0 | 98.4 ± 1.4 | 97.2 ± 1.6 |

| 31 | 77.0 ± 4.3 | 98.8 ± 1.3 | 98.2 ± 0.9 | 97.6 ± 1.3 | 77.3 ± 4.0 | 98.6 ± 1.6 | 98.0 ± 1.2 | 98.4 ± 1.4 | 77.7 ± 4.1 | 98.5 ± 2.1 | 97.4 ± 1.4 | 98.6 ± 0.7 |

| 32 | 81.0 ± 5.3 | 98.5 ± 1.8 | 97.0 ± 4.9 | 98.6 ± 0.7 | 81.5 ± 5.2 | 98.4 ± 1.8 | 98.1 ± 1.1 | 98.2 ± 1.4 | 81.1 ± 5.4 | 98.1 ± 1.9 | 95.7 ± 5.6 | 97.6 ± 1.0 |

| Ch | SVM | CRC | LSTM | CNN | SVM | CRC | LSTM | CNN | SVM | CRC | LSTM | CNN |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 82.8 ± 2.7 | 97.8 ± 1.5 | 95.3 ± 4.4 | 94.9 ± 1.7 | 82.4 ± 4.7 | 99.8 ± 0.5 | 95.9 ± 4.1 | 96.3 ± 1.6 | 79.5 ± 4.2 | 98.9 ± 1.0 | 97.6 ± 1.5 | 94.9 ± 2.0 |

| 2 | 70.8 ± 3.4 | 97.3 ± 1.4 | 98.7 ± 1.5 | 95.7 ± 1.8 | 72.0 ± 3.2 | 93.8 ± 2.9 | 98.2 ± 2.2 | 90.0 ± 3.1 | 70.4 ± 3.7 | 94.6 ± 3.1 | 99.4 ± 1.3 | 95.1 ± 3.1 |

| 3 | 78.4 ± 4.3 | 99.6 ± 0.4 | 96.4 ± 8.7 | 94.7 ± 7.6 | 81.1 ± 5.2 | 98.0 ± 1.7 | 97.2 ± 6.5 | 95.4 ± 6.2 | 77.7 ± 4.8 | 99.2 ± 1.0 | 95.8 ± 6.7 | 94.6 ± 7.0 |

| 4 | 88.2 ± 3.2 | 100.0 ± 0.0 | 95.6 ± 6.2 | 94.7 ± 5.7 | 88.6 ± 2.5 | 100.0 ± 0.0 | 97.1 ± 5.5 | 93.6 ± 6.3 | 85.4 ± 4.3 | 99.6 ± 0.5 | 95.1 ± 6.9 | 95.3 ± 5.1 |

| 5 | 88.3 ± 2.8 | 100.0 ± 0.0 | 95.4 ± 3.7 | 96.0 ± 4.0 | 90.0 ± 2.7 | 100.0 ± 0.0 | 97.9 ± 3.0 | 96.3 ± 2.8 | 85.7 ± 2.9 | 99.7 ± 0.4 | 96.7 ± 4.2 | 94.9 ± 4.5 |

| 6 | 79.3 ± 3.8 | 98.4 ± 0.9 | 99.3 ± 1.1 | 96.5 ± 1.2 | 81.3 ± 3.6 | 100.0 ± 0.0 | 99.8 ± 0.5 | 97.2 ± 1.5 | 80.1 ± 4.0 | 99.7 ± 0.3 | 99.7 ± 0.8 | 97.3 ± 1.0 |

| 7 | 84.0 ± 2.9 | 98.2 ± 0.9 | 95.7 ± 8.1 | 94.9 ± 7.0 | 86.0 ± 3.4 | 100.0 ± 0.0 | 96.6 ± 6.6 | 95.3 ± 4.5 | 82.7 ± 2.7 | 98.6 ± 1.9 | 93.3 ± 8.0 | 94.3 ± 6.0 |

| 8 | 83.3 ± 3.1 | 99.4 ± 0.8 | 97.2 ± 5.9 | 95.0 ± 5.9 | 84.2 ± 3.6 | 98.2 ± 2.3 | 96.4 ± 5.7 | 93.6 ± 6.2 | 78.4 ± 2.8 | 98.9 ± 0.9 | 96.8 ± 6.8 | 93.9 ± 6.7 |

| 9 | 74.0 ± 3.4 | 99.2 ± 0.8 | 97.8 ± 1.6 | 96.1 ± 1.5 | 75.3 ± 3.9 | 99.8 ± 0.3 | 98.4 ± 1.6 | 97.3 ± 2.2 | 74.0 ± 3.4 | 99.7 ± 0.3 | 99.5 ± 1.0 | 97.2 ± 1.4 |

| 10 | 60.7 ± 3.1 | 98.3 ± 0.9 | 98.7 ± 1.6 | 96.8 ± 1.5 | 61.3 ± 2.8 | 100.0 ± 0.0 | 99.8 ± 0.4 | 95.8 ± 2.1 | 61.1 ± 3.3 | 99.8 ± 0.3 | 99.9 ± 0.2 | 96.9 ± 1.3 |

| 11 | 66.1 ± 4.8 | 99.3 ± 0.5 | 99.1 ± 0.7 | 95.8 ± 2.0 | 66.0 ± 4.7 | 99.4 ± 0.7 | 99.7 ± 0.6 | 96.6 ± 1.4 | 65.4 ± 3.3 | 97.7 ± 1.7 | 97.2 ± 2.0 | 92.8 ± 1.4 |

| 12 | 74.3 ± 4.5 | 99.2 ± 0.5 | 99.3 ± 1.2 | 98.1 ± 1.7 | 77.6 ± 5.8 | 100.0 ± 0.0 | 99.3 ± 0.9 | 96.1 ± 1.8 | 73.7 ± 2.6 | 99.7 ± 0.3 | 99.7 ± 0.5 | 96.8 ± 1.5 |

| 13 | 69.2 ± 4.1 | 91.4 ± 3.5 | 94.7 ± 4.1 | 92.3 ± 2.9 | 70.7 ± 3.1 | 92.1 ± 3.5 | 97.3 ± 3.2 | 87.5 ± 3.0 | 69.6 ± 3.2 | 94.3 ± 3.1 | 97.4 ± 2.6 | 94.4 ± 1.9 |

| 14 | 80.0 ± 4.4 | 98.3 ± 1.8 | 98.0 ± 2.2 | 94.8 ± 2.9 | 80.0 ± 4.4 | 100.0 ± 0.0 | 99.8 ± 0.5 | 96.5 ± 1.6 | 79.7 ± 4.0 | 99.9 ± 0.2 | 99.2 ± 2.3 | 97.8 ± 1.7 |

| 15 | 79.6 ± 6.0 | 99.3 ± 0.8 | 98.5 ± 1.4 | 97.0 ± 2.5 | 78.3 ± 5.9 | 98.9 ± 1.1 | 95.3 ± 6.0 | 95.8 ± 2.5 | 76.4 ± 6.1 | 100.0 ± 0.0 | 96.7 ± 6.7 | 97.4 ± 0.7 |

| 16 | 72.0 ± 3.2 | 97.5 ± 1.2 | 97.1 ± 3.7 | 96.9 ± 1.9 | 74.4 ± 4.7 | 99.6 ± 0.7 | 98.5 ± 1.5 | 96.4 ± 2.5 | 71.1 ± 3.4 | 98.7 ± 1.9 | 97.1 ± 1.7 | 95.1 ± 1.0 |

| 17 | 76.9 ± 5.3 | 99.6 ± 0.7 | 98.3 ± 0.9 | 97.0 ± 1.7 | 76.2 ± 5.1 | 99.7 ± 0.5 | 98.7 ± 1.5 | 96.8 ± 1.7 | 74.7 ± 5.7 | 100.0 ± 0.0 | 99.3 ± 1.5 | 96.4 ± 1.8 |

| 18 | 70.1 ± 5.1 | 99.7 ± 0.3 | 99.7 ± 0.7 | 96.8 ± 2.4 | 71.8 ± 5.0 | 99.7 ± 0.5 | 98.9 ± 1.4 | 95.4 ± 2.3 | 69.4 ± 5.0 | 99.7 ± 0.5 | 98.4 ± 1.1 | 96.1 ± 1.5 |

| 19 | 68.7 ± 7.8 | 99.7 ± 0.3 | 96.1 ± 6.3 | 97.4 ± 2.0 | 69.1 ± 9.2 | 99.7 ± 0.6 | 93.9 ± 5.4 | 96.9 ± 1.5 | 68.7 ± 8.2 | 98.6 ± 0.7 | 93.7 ± 7.0 | 96.5 ± 2.0 |

| 20 | 82.0 ± 2.7 | 99.3 ± 0.4 | 99.3 ± 0.9 | 97.1 ± 1.2 | 84.7 ± 3.3 | 100.0 ± 0.0 | 97.8 ± 3.8 | 97.2 ± 1.9 | 82.1 ± 3.1 | 99.8 ± 0.4 | 95.8 ± 6.2 | 96.4 ± 1.7 |

| 21 | 86.2 ± 5.7 | 100.0 ± 0.0 | 98.6 ± 3.0 | 94.2 ± 4.3 | 87.0 ± 3.7 | 98.9 ± 1.5 | 97.6 ± 3.8 | 94.4 ± 3.8 | 82.5 ± 2.7 | 98.6 ± 1.2 | 97.2 ± 5.8 | 93.9 ± 6.0 |

| 22 | 85.1 ± 2.9 | 99.8 ± 0.4 | 98.0 ± 3.5 | 95.1 ± 2.4 | 88.3 ± 3.6 | 100.0 ± 0.0 | 99.0 ± 1.1 | 97.3 ± 1.6 | 83.3 ± 4.2 | 99.4 ± 0.8 | 95.7 ± 5.1 | 96.8 ± 2.7 |

| 23 | 74.2 ± 5.3 | 93.2 ± 2.9 | 93.2 ± 5.5 | 92.8 ± 5.3 | 71.3 ± 8.1 | 99.8 ± 0.5 | 95.9 ± 6.4 | 93.9 ± 5.6 | 70.0 ± 7.4 | 95.1 ± 2.1 | 94.4 ± 6.4 | 93.7 ± 5.4 |

| 24 | 82.8 ± 4.4 | 99.7 ± 0.4 | 98.3 ± 1.5 | 97.1 ± 1.7 | 81.9 ± 4.5 | 99.9 ± 0.2 | 99.9 ± 0.2 | 95.6 ± 1.2 | 80.2 ± 4.9 | 99.6 ± 0.4 | 100.0 ± 0.0 | 97.1 ± 1.9 |

| 25 | 84.6 ± 5.7 | 99.3 ± 0.4 | 99.8 ± 0.4 | 96.6 ± 1.8 | 86.1 ± 5.0 | 100.0 ± 0.0 | 99.9 ± 0.2 | 96.4 ± 1.7 | 82.7 ± 5.4 | 99.6 ± 0.5 | 99.9 ± 0.2 | 96.4 ± 1.7 |

| 26 | 72.1 ± 7.3 | 97.9 ± 1.7 | 98.4 ± 1.3 | 96.7 ± 2.0 | 71.1 ± 7.7 | 98.0 ± 1.7 | 98.7 ± 1.5 | 96.3 ± 1.9 | 69.8 ± 4.6 | 97.1 ± 0.9 | 98.3 ± 1.2 | 95.9 ± 1.2 |

| 27 | 85.3 ± 2.3 | 99.9 ± 0.2 | 98.7 ± 1.6 | 96.3 ± 1.3 | 86.8 ± 1.8 | 98.8 ± 1.5 | 99.7 ± 0.5 | 95.4 ± 2.1 | 83.3 ± 2.2 | 98.4 ± 1.6 | 97.6 ± 2.0 | 96.1 ± 2.5 |

| 28 | 85.8 ± 4.4 | 98.3 ± 1.7 | 97.4 ± 4.6 | 95.6 ± 5.2 | 82.7 ± 3.3 | 97.8 ± 1.9 | 95.6 ± 4.2 | 93.8 ± 6.1 | 80.7 ± 3.4 | 97.8 ± 1.5 | 95.9 ± 4.2 | 95.3 ± 4.1 |

| 29 | 78.8 ± 5.8 | 97.6 ± 1.4 | 96.1 ± 4.0 | 95.9 ± 1.9 | 76.9 ± 4.8 | 98.0 ± 1.7 | 97.4 ± 3.0 | 95.8 ± 1.7 | 75.5 ± 3.6 | 96.4 ± 2.1 | 90.4 ± 6.5 | 94.4 ± 1.7 |

| 30 | 78.1 ± 3.2 | 97.9 ± 1.7 | 99.3 ± 1.5 | 98.1 ± 1.6 | 79.1 ± 4.9 | 97.9 ± 1.7 | 99.4 ± 0.5 | 97.6 ± 1.8 | 75.8 ± 3.4 | 97.9 ± 1.3 | 97.2 ± 3.5 | 97.3 ± 1.5 |

| 31 | 85.7 ± 2.3 | 100.0 ± 0.0 | 96.4 ± 6.3 | 96.6 ± 2.0 | 83.9 ± 2.9 | 100.0 ± 0.0 | 97.1 ± 5.9 | 97.2 ± 1.9 | 80.9 ± 2.8 | 99.0 ± 1.0 | 95.1 ± 6.5 | 95.9 ± 3.2 |

| 32 | 85.5 ± 2.7 | 100.0 ± 0.0 | 96.3 ± 4.1 | 98.2 ± 1.1 | 85.4 ± 3.3 | 100.0 ± 0.0 | 97.9 ± 2.0 | 97.4 ± 1.8 | 80.9 ± 3.7 | 99.6 ± 0.5 | 98.4 ± 1.4 | 97.1 ± 1.2 |
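Across these channel-wise tables, the SVM columns vary far more from channel to channel (roughly 58% to 90%) than the CRC, LSTM, and CNN columns, which stay in the high-80s to 100% range, so the SVM results are the most useful for ranking channels by discriminative power. The following minimal Python sketch shows one way to parse the "mean ± std" cells and rank channels. It assumes the cells are copied verbatim as strings; the dictionary holds only a hand-picked subset of channels from the first SVM column of the table above, and the helper names `parse_cell` and `rank_channels` are hypothetical, not taken from the authors' code.

```python
import re

# Hypothetical subset: first SVM column of the last channel-wise table,
# copied verbatim for a few channels (Ch -> "mean ± std").
svm_cells = {
    4: "88.2 ± 3.2",
    5: "88.3 ± 2.8",
    10: "60.7 ± 3.1",
    21: "86.2 ± 5.7",
    28: "85.8 ± 4.4",
}

# Matches cells such as "88.3 ± 2.8" and captures mean and std.
CELL = re.compile(r"^\s*([0-9.]+)\s*±\s*([0-9.]+)\s*$")


def parse_cell(cell: str) -> tuple[float, float]:
    """Split a 'mean ± std' table cell into (mean, std) floats."""
    m = CELL.match(cell)
    if m is None:
        raise ValueError(f"unrecognised cell: {cell!r}")
    return float(m.group(1)), float(m.group(2))


def rank_channels(cells: dict[int, str], top_k: int = 3) -> list[tuple[int, float, float]]:
    """Return the top_k (channel, mean, std) rows, sorted by mean accuracy, highest first."""
    parsed = [(ch, *parse_cell(c)) for ch, c in cells.items()]
    parsed.sort(key=lambda row: row[1], reverse=True)
    return parsed[:top_k]


for ch, mean, std in rank_channels(svm_cells):
    print(f"Ch {ch}: {mean:.1f} ± {std:.1f}")
```

Sorting on the mean alone is the simplest choice. A stricter ranking could also penalise channels with a large standard deviation, such as Ch 21 here (± 5.7).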

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Patel, K.; Gad, R.; Lourdes de Ataide, M.; Vetrekar, N.; Ferreira, T.; Ramachandra, R. Stimulus-Evoked Brain Signals for Parkinson’s Detection: A Comprehensive Benchmark Performance Analysis on Cross-Stimulation and Channel-Wise Experiments. Bioengineering 2025, 12, 1185. https://doi.org/10.3390/bioengineering12111185
