Spectral Analysis of Light-Adapted Electroretinograms in Neurodevelopmental Disorders: Classification with Machine Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Electrophysiology

2.2. Electroretinogram Analysis

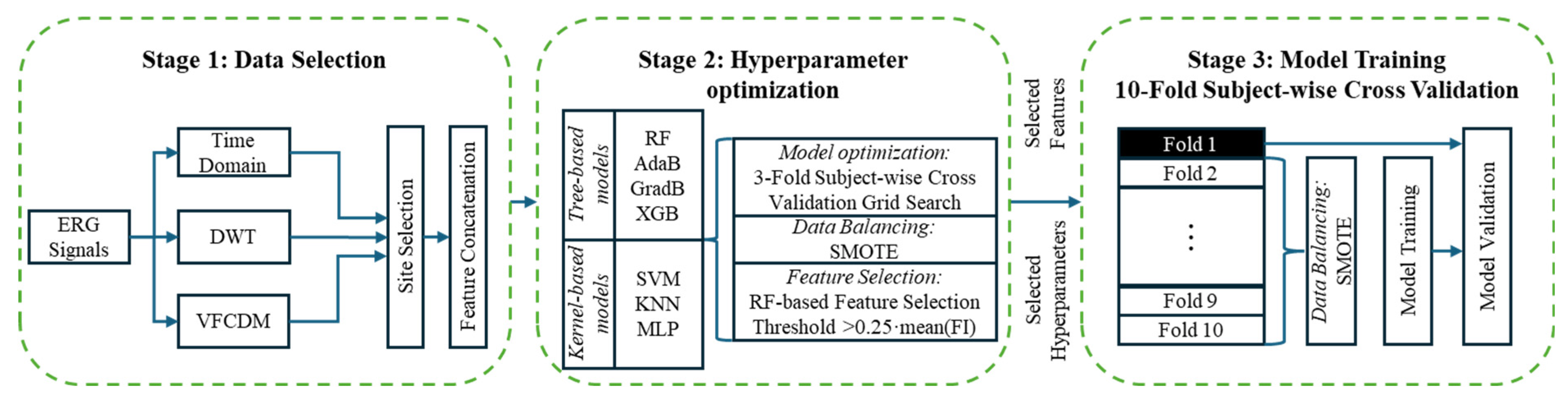

2.3. Machine Learning

2.4. Model Refinement

2.5. Statistics

3. Results

3.1. Participants

3.2. Medication Effects

3.3. Between-Group Classification

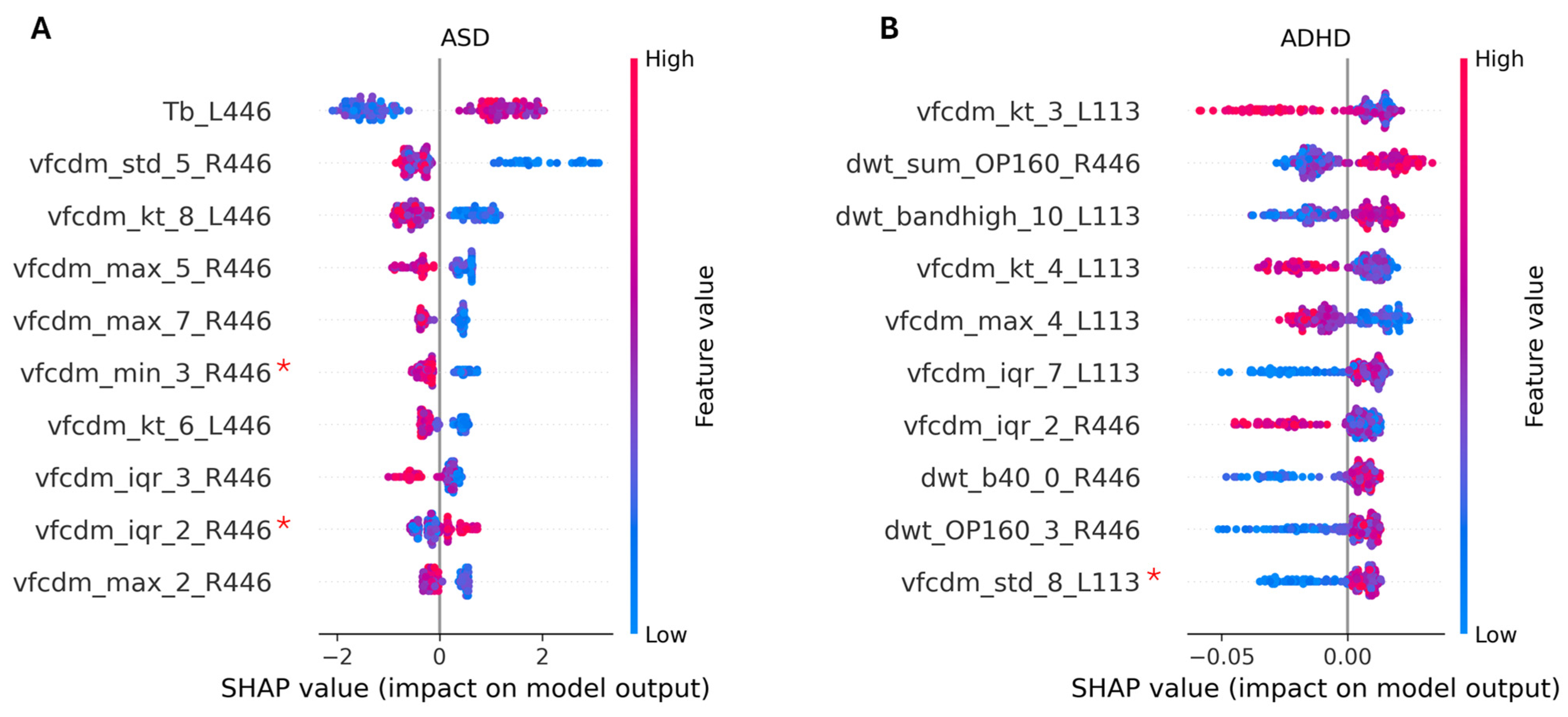

3.3.1. Two-Group Classification: ASD vs. Control

3.3.2. Two-Group Classification: ADHD vs. Control

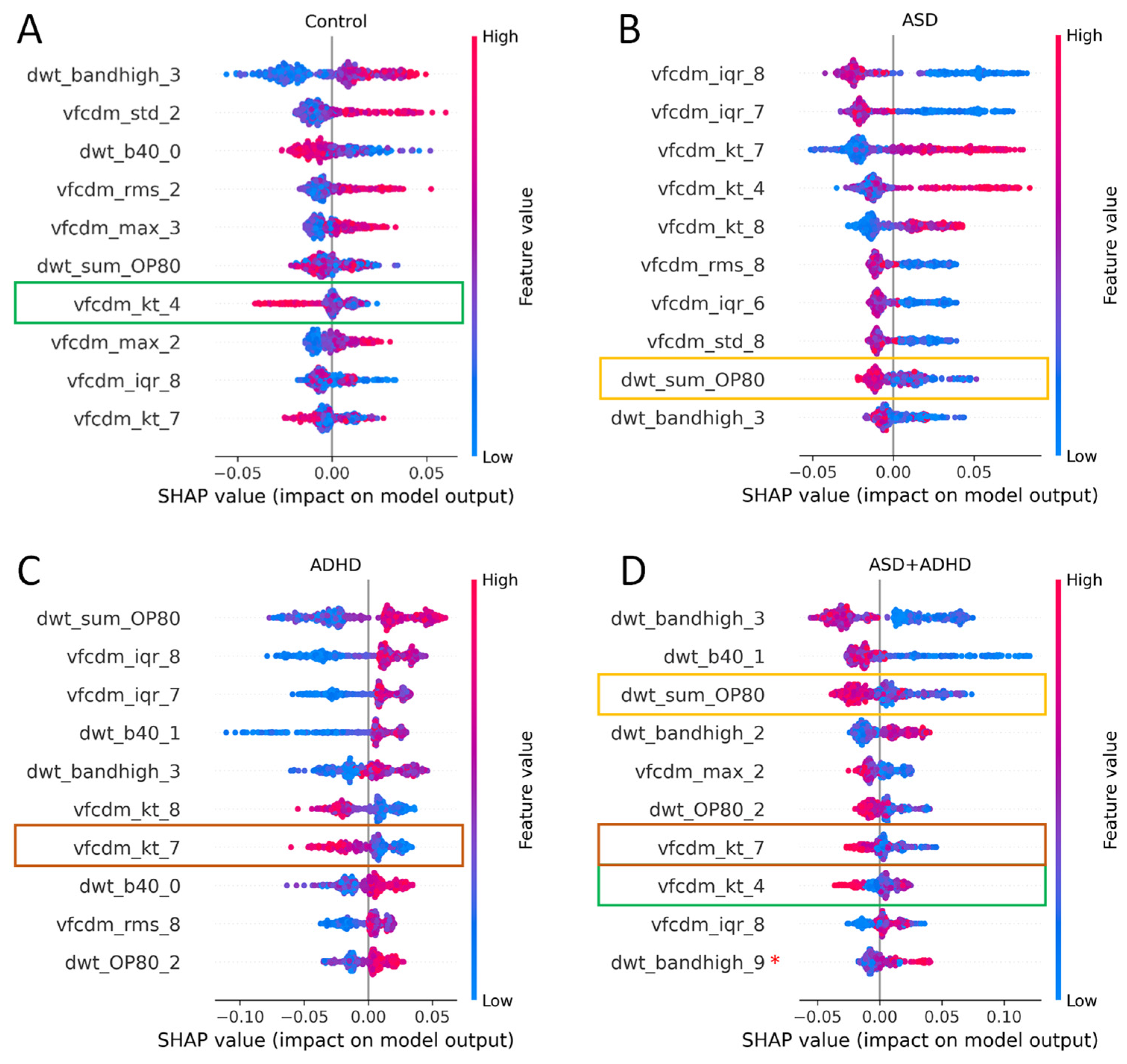

3.3.3. Three-Group Classification: ASD vs. ADHD vs. Control

3.3.4. Four-Group Classification: ASD vs. ADHD vs. ASD + ADHD vs. Control

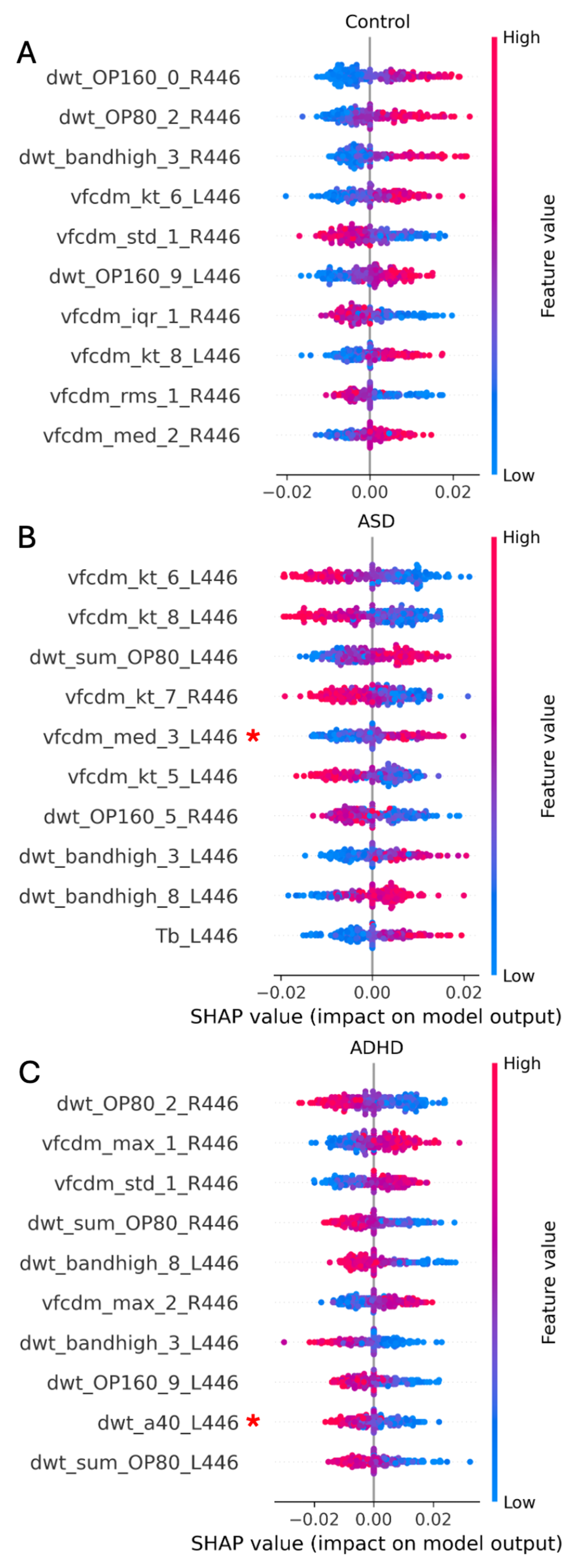

3.4. Effects of Medication

3.5. Time Domain Feature Effects

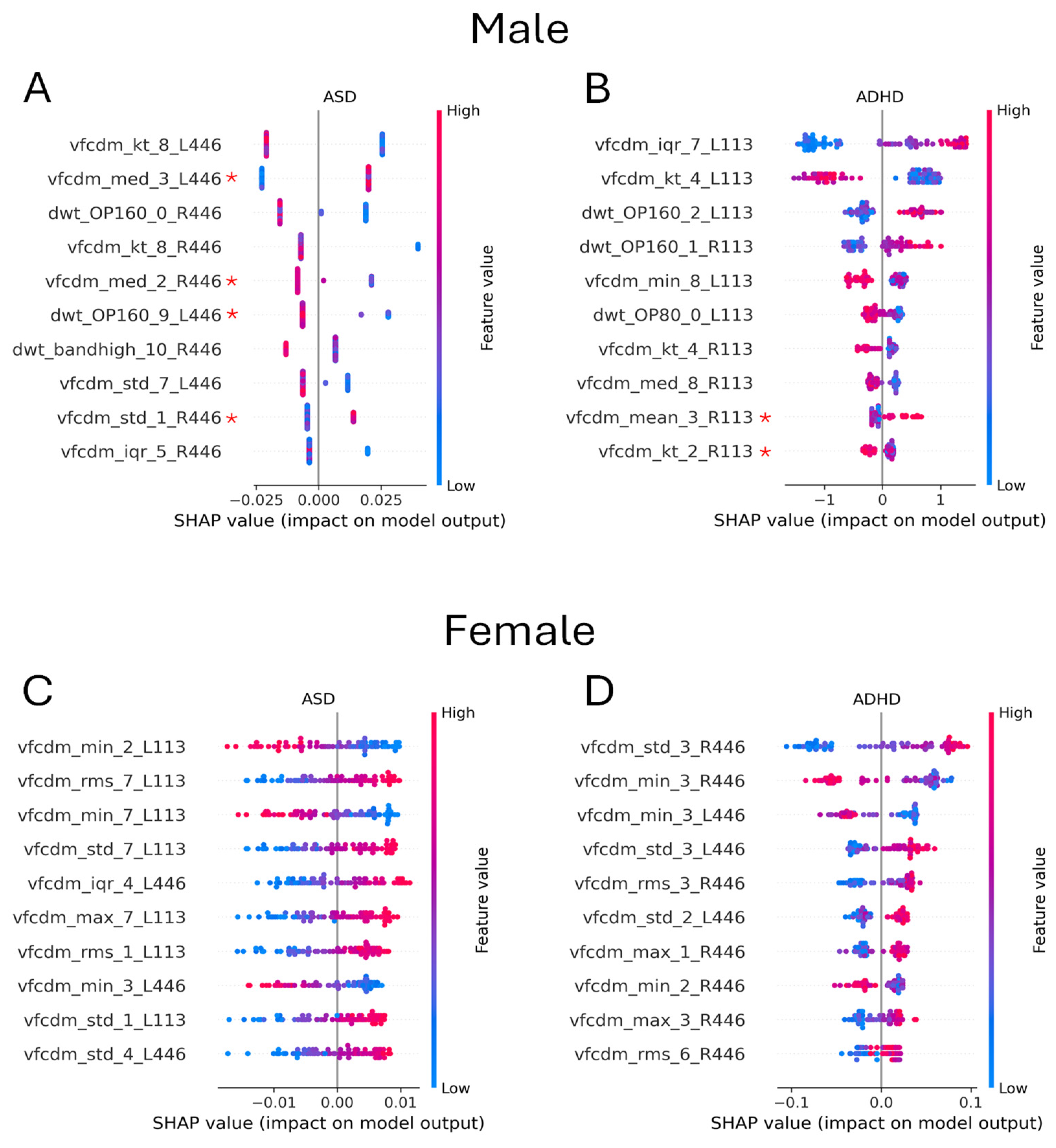

3.6. Effect of Sex

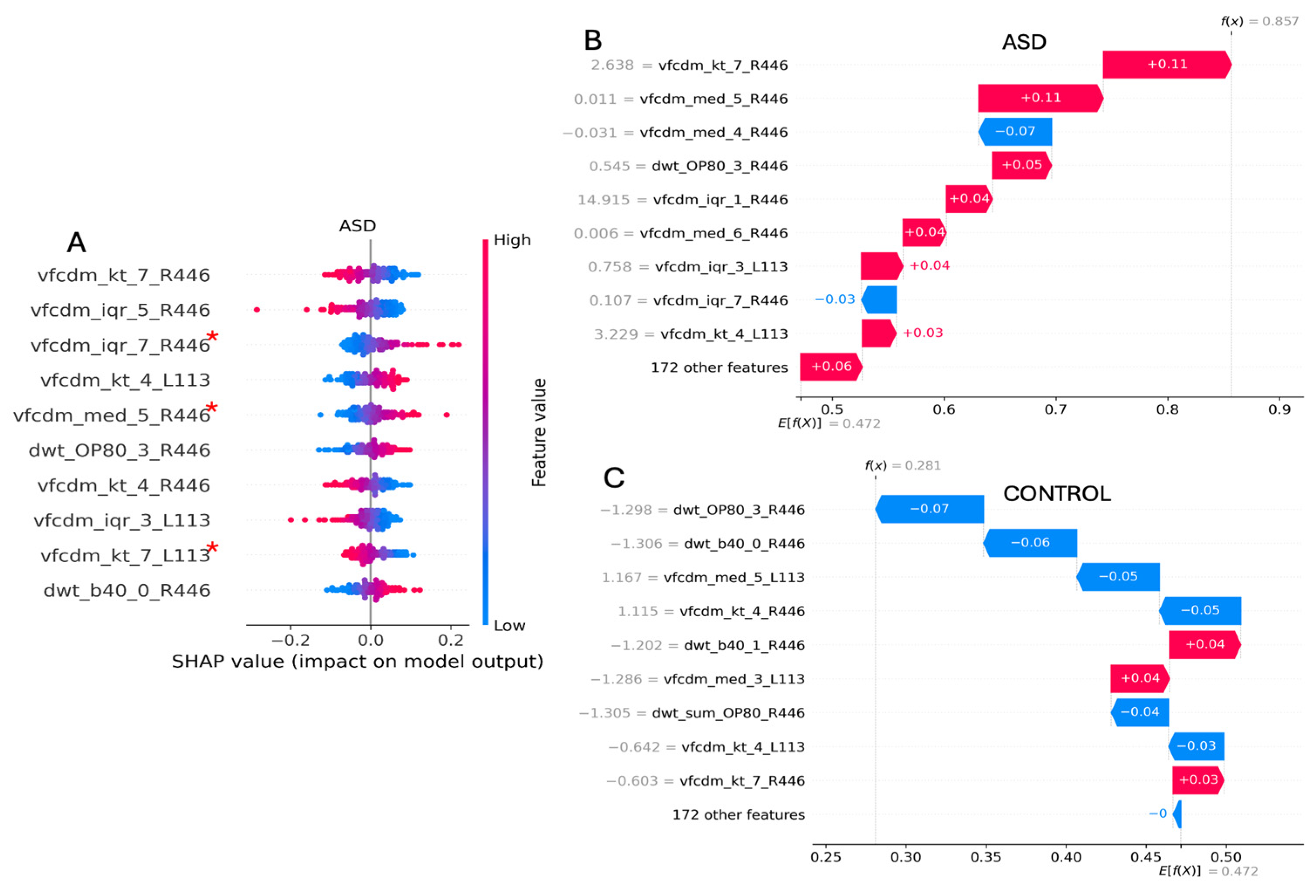

3.7. Individual Case Analysis

4. Discussion

4.1. Medications

4.2. Inter-Site Variability

4.3. Sex

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

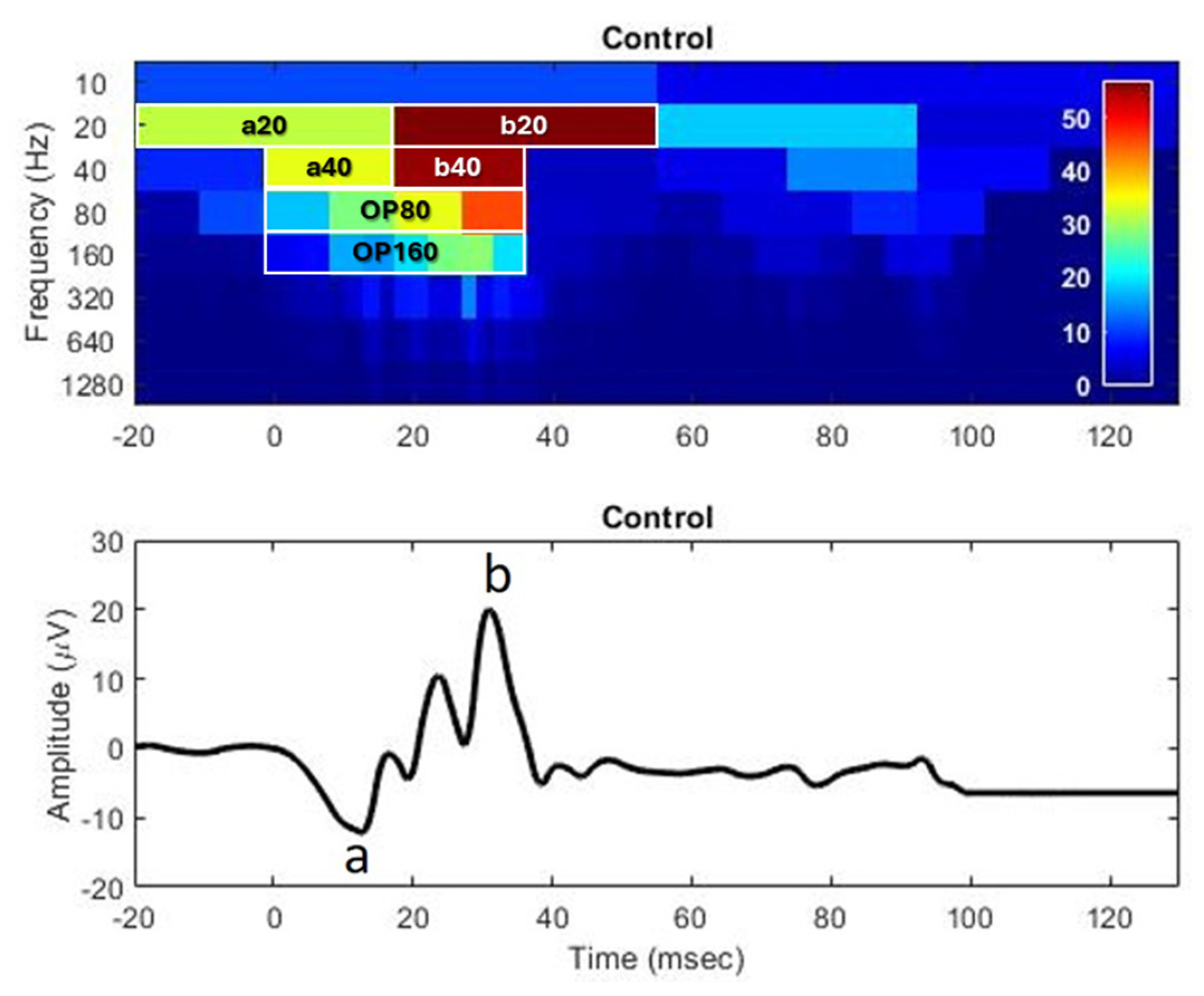

The Electroretinogram

Appendix B

Representative Time Domain Traces

- Analysis 1: Time Domain

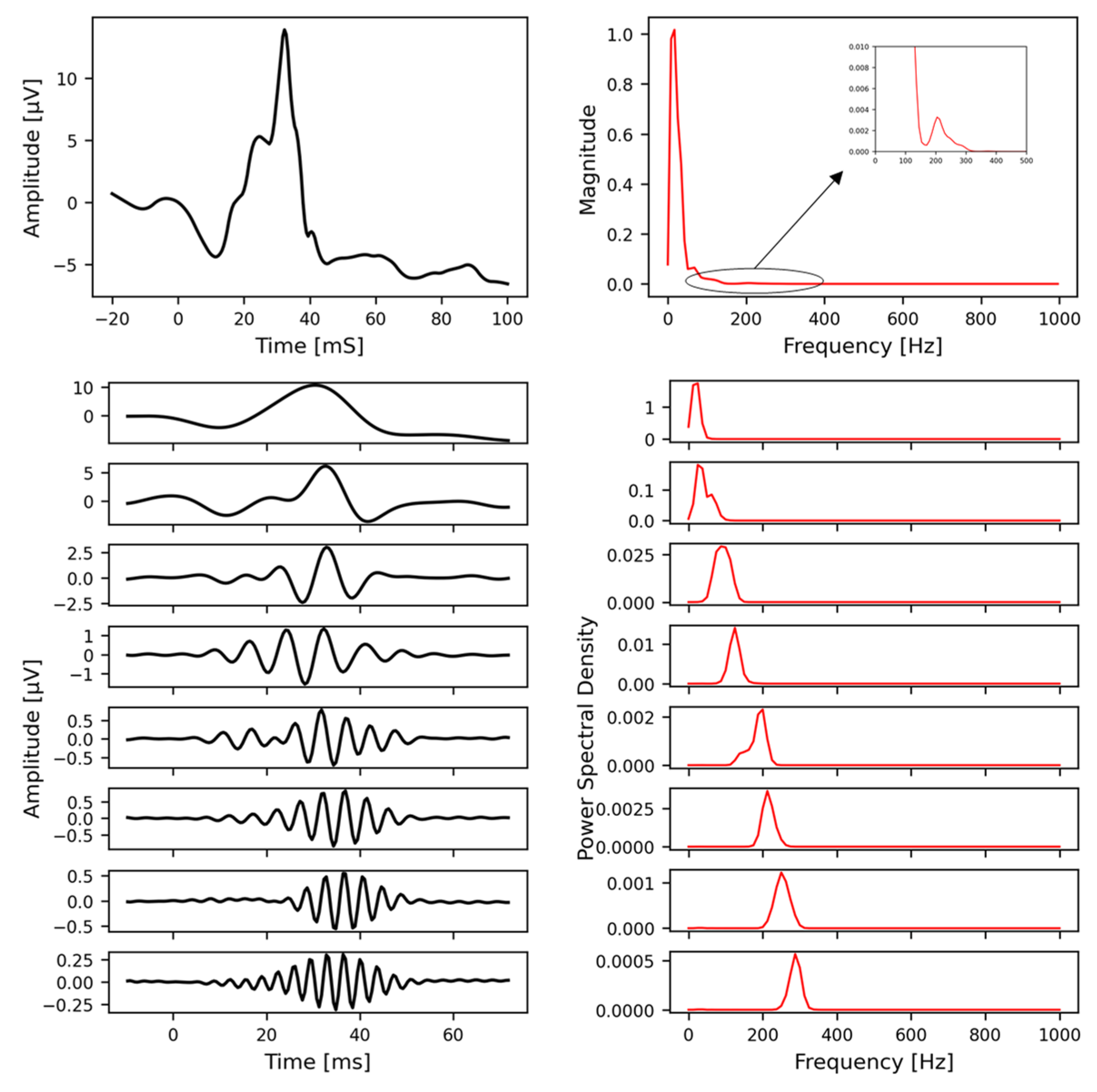

- Analysis 2: Discrete Wavelet Transform (DWT)

- Analysis 3: Variable-Frequency Complex Demodulation (VFCDM)

Appendix C

Appendix C.1. Participant and Site Information

Appendix C.2. Iris Color, Electrode Height, and Recording Parameters

| Parameter | ASD (n = 73) | ADHD (n = 43) | ASD + ADHD (n = 21) | Control (n = 137) |
|---|---|---|---|---|
| Age (years) | 12.8 ± 4.3 | 13.0 ± 3.4 | 12.9 ± 4.4 | 12.2 ± 4.5 |
| Sex (M:F) | 58:17 * | 25:18 | 16:5 | 57:80 |
| Right iris color | 1.20 ± 0.10 | 1.18 ± 0.11 | 1.16 ± 0.07 | 1.21 ± 0.12 |
| Left iris color | 1.23 ± 0.11 | 1.19 ± 0.10 | 1.17 ± 0.11 * | 1.23 ± 0.11 |
| Right electrode height | −0.52 ± 0.80 | 0.07 ± 0.75 * | −0.19 ± 0.75 | −0.34 ± 0.79 |
| Left electrode height | −0.51 ± 0.89 | 0.00 ± 0.93 * | −0.24 ± 0.70 | −0.40 ± 0.83 |

Appendix C.3. Representative Discrete Wavelet Transform

Appendix C.4. Representative Variable-Frequency Complex Demodulation

| Technique | Model | Site | Eye-Flash Strength | # Samples [Control/ASD] | BA | F1 Score | # Features |
|---|---|---|---|---|---|---|---|
| TD + VFCDM + DWT | AdaB | 2 | R-446 | [83/73] | 0.712 | 0.712 | 102 |
| TD + VFCDM | XGB | 2 | R-446/L-446 | [80/62] | 0.759 | 0.761 | 136 |
| Selected Features | KNN | 2 | L-113/L-446 | [77/60] | 0.727 | 0.726 | 36 |
| TD + VFCDM (FI ≥ 0.01) * | XGB | 2 | R-446/L-446 | [77/60] | 0.747 | 0.748 | 35 |
| TD + VFCDM (Shapley val ≥ 0.005) * | XGB | 2 | R-446/L-446 | [77/60] | 0.745 | 0.747 | 96 |
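The BA and F1 Score columns in these tables can be read with the following minimal sketch in mind. It assumes that BA denotes balanced accuracy (the mean of the per-class recalls) and that the F1 score is macro-averaged across classes; the averaging scheme actually used is not stated in the tables, so the macro average here is an assumption. The function names and the toy labels are hypothetical and are not taken from the study data.

```python
from collections import Counter


def per_class_recall(y_true, y_pred):
    """Recall for every class label that occurs in y_true."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / totals[label] for label in totals}


def balanced_accuracy(y_true, y_pred):
    """Mean of the per-class recalls (insensitive to class imbalance)."""
    recalls = per_class_recall(y_true, y_pred)
    return sum(recalls.values()) / len(recalls)


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores (assumed averaging)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical toy labels: 6 control and 4 ASD recordings.
y_true = ["control"] * 6 + ["asd"] * 4
y_pred = ["control"] * 5 + ["asd"] + ["asd"] * 3 + ["control"]

ba = balanced_accuracy(y_true, y_pred)
f1 = macro_f1(y_true, y_pred)
print(f"BA = {ba:.3f}, macro F1 = {f1:.3f}")
```

Because balanced accuracy weights each class equally, it is less affected than plain accuracy by the unequal group sizes listed in the # Samples column.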

| Technique | Model | Site | Eye-Flash Strength | # Samples [Control/ADHD] | BA | F1 Score | # Features |
|---|---|---|---|---|---|---|---|
| TD + DWT | XGB | 1 | L-113 | [122/74] | 0.750 | 0.750 | 38 |
| TD + VFCDM + DWT | SVM | 1 | R-446/L-446 | [116/69] | 0.724 | 0.726 | 204 |
| TD + VFCDM + DWT | RF | 1 | L-113/R-446 | [112/67] | 0.758 | 0.760 | 204 |
| TD + VFCDM + DWT (FI ≥ 0.01) * | RF | 1 | L-113/R-446 | [112/67] | 0.727 | 0.729 | 23 |
| TD + VFCDM + DWT (Shapley val ≥ 0.005) * | RF | 1 | L-113/R-446 | [112/67] | 0.773 | 0.773 | 34 |

| Technique | Model | Site | Eye-Flash Strength | # Samples [Control/ASD/ADHD] | BA | F1 Score | # Features |
|---|---|---|---|---|---|---|---|
| TD + VFCDM + DWT | GradB | 1 | L-446 | [128/50/55] | 0.581 | 0.578 | 102 |
| TD + VFCDM + DWT | KNN | 2 | R-446/L-446 | [80/47/24] | 0.672 | 0.622 | 204 |
| Selected Features | SVM | 1 | L-113/L-446 | [115/47/51] | 0.648 | 0.620 | 36 |
| TD + VFCDM + DWT (FI ≥ 0.01) * | KNN | 2 | R-446/L-446 | [80/47/24] | 0.610 | 0.579 | 18 |
| TD + VFCDM + DWT (Shapley val ≥ 0.005) * | KNN | 2 | R-446/L-446 | [80/47/24] | 0.704 | 0.660 | 41 |

| Technique | Model | Site | Eye-Flash Strength | # Samples [Control/ASD/ADHD/ASD + ADHD] | BA | F1 Score | # Features |
|---|---|---|---|---|---|---|---|
| TD + VFCDM + DWT | RF | 1 | L-446 | [128/50/55/21] | 0.468 | 0.474 | 102 |
| TD + VFCDM | KNN | 2 | R-446/L-446 | [80/47/24/15] | 0.477 | 0.461 | 136 |
| TD + VFCDM + DWT | RF | 1 | L-113/L-446 | [115/47/51/20] | 0.491 | 0.477 | 204 |
| TD + VFCDM + DWT (FI ≥ 0.01) * | RF | 1 | L-446 | [128/50/55/21] | 0.529 | 0.526 | 34 |
| TD + VFCDM + DWT (Shapley val ≥ 0.005) * | RF | 1 | L-446 | [128/50/55/21] | 0.521 | 0.517 | 31 |

| Technique | Model | Site | Eye-Flash Strength | # Samples | # Features | BA | F1 Score | Mean AUC | # Groups | TD | Med |
|---|---|---|---|---|---|---|---|---|---|---|---|
| TD + VFCDM | XGB | 2 | R-446/L-446 | [80/62] | 136 | 0.759 | 0.761 | 0.78 | 2 (ASD) | Y | Y |
| VFCDM + DWT (Shapley val ≥ 0.005) | SVM | 2 | L-113/R-446 | [77/60] | 123 | 0.763 | 0.763 | 0.83 | 2 (ASD) | N | Y |
| TD + DWT | AdaB | 2 | R-113/L-446 | [75/46] | 1 | 0.730 | 0.729 | 0.65 | 2 (ASD) | Y | N |
| VFCDM + DWT (Shapley val ≥ 0.005) | SVM | 2 | R-446/L-446 | [78/47] | 54 | 0.738 | 0.744 | 0.73 | 2 (ASD) | N | N |
| TD + VFCDM + DWT (Shapley val ≥ 0.005) | RF | 1 | L-113/R-446 | [112/67] | 34 | 0.773 | 0.773 | 0.81 | 2 (ADHD) | Y | Y |
| VFCDM (Shapley val ≥ 0.005) | SVM | 2 | R-113/R-446 | [79/44] | 62 | 0.809 | 0.801 | 0.86 | 2 (ADHD) | N | Y |
| TD + VFCDM (Shapley val ≥ 0.005) | AdaB | 1 | L-113/R-446 | [112/31] | 8 | 0.842 | 0.831 | 0.86 | 2 (ADHD) | Y | N |
| VFCDM (Shapley val ≥ 0.005) | AdaB | 2 | R-446/L-446 | [78/19] | 9 | 0.817 | 0.799 | 0.84 | 2 (ADHD) | N | N |
| TD + VFCDM + DWT (Shapley val ≥ 0.005) | KNN | 2 | L-446/R-446 | [80/47/24] | 41 | 0.704 | 0.660 | 0.79 | 3 | Y | Y |
| VFCDM + DWT (Shapley val ≥ 0.005) | SVM | 1 | R-113/L-446 | [110/45/52] | 47 | 0.649 | 0.641 | 0.81 | 3 | N | Y |
| TD + VFCDM (FI ≥ 0.01) | GradB | 1 | L-113/R-446 | [112/41/23] | 18 | 0.662 | 0.635 | 0.80 | 3 | Y | N |
| VFCDM + DWT (FI ≥ 0.01) | XGB | 1 | R-446/L-446 | [116/42/23] | 30 | 0.604 | 0.609 | 0.78 | 3 | N | N |
| TD + VFCDM + DWT (FI ≥ 0.01) | RF | 1 | L-446 | [128/50/55/21] | 34 | 0.529 | 0.526 | 0.73 | 4 | Y | Y |
| VFCDM + DWT (Shapley val ≥ 0.005) | RF | 1 | L-446 | [128/50/55/21] | 45 | 0.510 | 0.499 | 0.72 | 4 | N | Y |

| Technique | Model | Site | Eye Strength | # Samples | # Feats | BA | F1 Score | Mean AUC | # Groups | Sex |
|---|---|---|---|---|---|---|---|---|---|---|
| VFCDM + DWT (Shapley val ≥ 0.005) | SVM | 2 | R-113/L-446 | [77/60] | 123 | 0.763 | 0.763 | 0.82 | ASD vs. Con | Both |
| VFCDM + DWT (FI ≥ 0.01) | AdaB | 2 | R-446/L-446 | [39/50] | 26 | 0.870 | 0.873 | 0.93 | ASD vs. Con | Male |
| VFCDM (Shapley val ≥ 0.005) | SVM | 2 | R-113/L-446 | [40/11] | 10 | 0.814 | 0.804 | 0.77 | ASD vs. Con | Female |
| VFCDM (Shapley val ≥ 0.005) | SVM | 2 | R-113/L-446 | [79/44] | 62 | 0.809 | 0.801 | 0.86 | ADHD vs. Con | Both |
| VFCDM + DWT (FI ≥ 0.01) | XGB | 1 | R-113/L-113 | [37/47] | 32 | 0.793 | 0.794 | 0.86 | ADHD vs. Con | Male |
| VFCDM | RF | 2 | R-446/L-446 | [41/18] | 128 | 0.840 | 0.840 | 0.89 | ADHD vs. Con | Female |

| Technique | Model | Site | Eye Strength | # Samples | # Feats | BA | F1 Score | Mean AUC | Sex | TD | Med |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 2-Group ASD vs. Control Classification | | | | | | | | | | | |
| VFCDM + DWT (Shapley val ≥ 0.005) | SVM | 2 | L-113/R-446 | [77/60] | 123 | 0.763 | 0.763 | 0.83 | Both | N | Y |
| VFCDM + DWT (FI ≥ 0.01) | AdaB | 2 | R-446/L-446 | [39/50] | 26 | 0.870 | 0.873 | 0.93 | Male | N | Y |
| 2-Group ADHD vs. Control Classification | | | | | | | | | | | |
| TD + VFCDM (Shapley val ≥ 0.005) | AdaB | 1 | L-113/R-446 | [112/31] | 8 | 0.842 | 0.831 | 0.86 | Both | Y | N |
| VFCDM | RF | 2 | R-446/L-446 | [41/18] | 128 | 0.840 | 0.840 | 0.89 | Female | N | Y |
| 3-Group ASD vs. ADHD vs. Control Classification | | | | | | | | | | | |
| TD + VFCDM + DWT (Shapley val ≥ 0.005) | KNN | 2 | L-446/R-446 | [80/47/24] | 41 | 0.704 | 0.660 | 0.79 | Both | Y | Y |
| 4-Group ASD vs. ADHD vs. ASD + ADHD vs. Control Classification | | | | | | | | | | | |
| TD + VFCDM + DWT (FI ≥ 0.01) | RF | 1 | L-446 | [128/50/55/21] | 34 | 0.529 | 0.526 | 0.73 | Both | Y | Y |
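As an aid to reading the BA and F1 Score columns, the following minimal sketch shows how the two metrics can be computed from a confusion matrix. Balanced accuracy (BA) is the mean of the per-class recalls, so a classifier that always predicts the largest group scores 1/k for k groups (0.50, 0.33, and 0.25 for the two-, three-, and four-group problems), whatever the group sizes. The confusion matrix below is hypothetical and is not data from this study; only its row totals are taken from the three-group sample layout [80/47/24]. Macro averaging of the F1 score is also an assumption made for illustration, as are the function names.

```python
from typing import List

Matrix = List[List[int]]  # rows = true class, columns = predicted class


def balanced_accuracy(cm: Matrix) -> float:
    """Mean of per-class recall (diagonal count / row total)."""
    recalls = [row[i] / sum(row) for i, row in enumerate(cm)]
    return sum(recalls) / len(recalls)


def macro_f1(cm: Matrix) -> float:
    """Unweighted mean of per-class F1 (assumed averaging scheme)."""
    n = len(cm)
    scores = []
    for i in range(n):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                      # true class i, predicted otherwise
        fp = sum(cm[r][i] for r in range(n)) - tp  # predicted i, true class differs
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / n


# HYPOTHETICAL counts for illustration only (Control / ASD / ADHD).
# Row totals are 80, 47, and 24; the individual cells are invented.
example_cm = [
    [60, 12, 8],
    [10, 30, 7],
    [4, 5, 15],
]

# A classifier that labels every participant as Control.
majority_cm = [
    [80, 0, 0],
    [47, 0, 0],
    [24, 0, 0],
]

print(f"example BA:  {balanced_accuracy(example_cm):.3f}")
print(f"example F1:  {macro_f1(example_cm):.3f}")
print(f"majority BA: {balanced_accuracy(majority_cm):.3f}")
```

Because BA weights each group equally, it is not inflated by the larger control group, which is why the reported values are best compared against the 1/k chance level for the corresponding number of groups rather than against 0.5 throughout.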


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Constable, P.A.; Pinzon-Arenas, J.O.; Mercado Diaz, L.R.; Lee, I.O.; Marmolejo-Ramos, F.; Loh, L.; Zhdanov, A.; Kulyabin, M.; Brabec, M.; Skuse, D.H.; et al. Spectral Analysis of Light-Adapted Electroretinograms in Neurodevelopmental Disorders: Classification with Machine Learning. Bioengineering 2025, 12, 15. https://doi.org/10.3390/bioengineering12010015
