A Comparison of Myoelectric Control Modes for an Assistive Robotic Virtual Platform

Abstract

1. Introduction

2. Methods

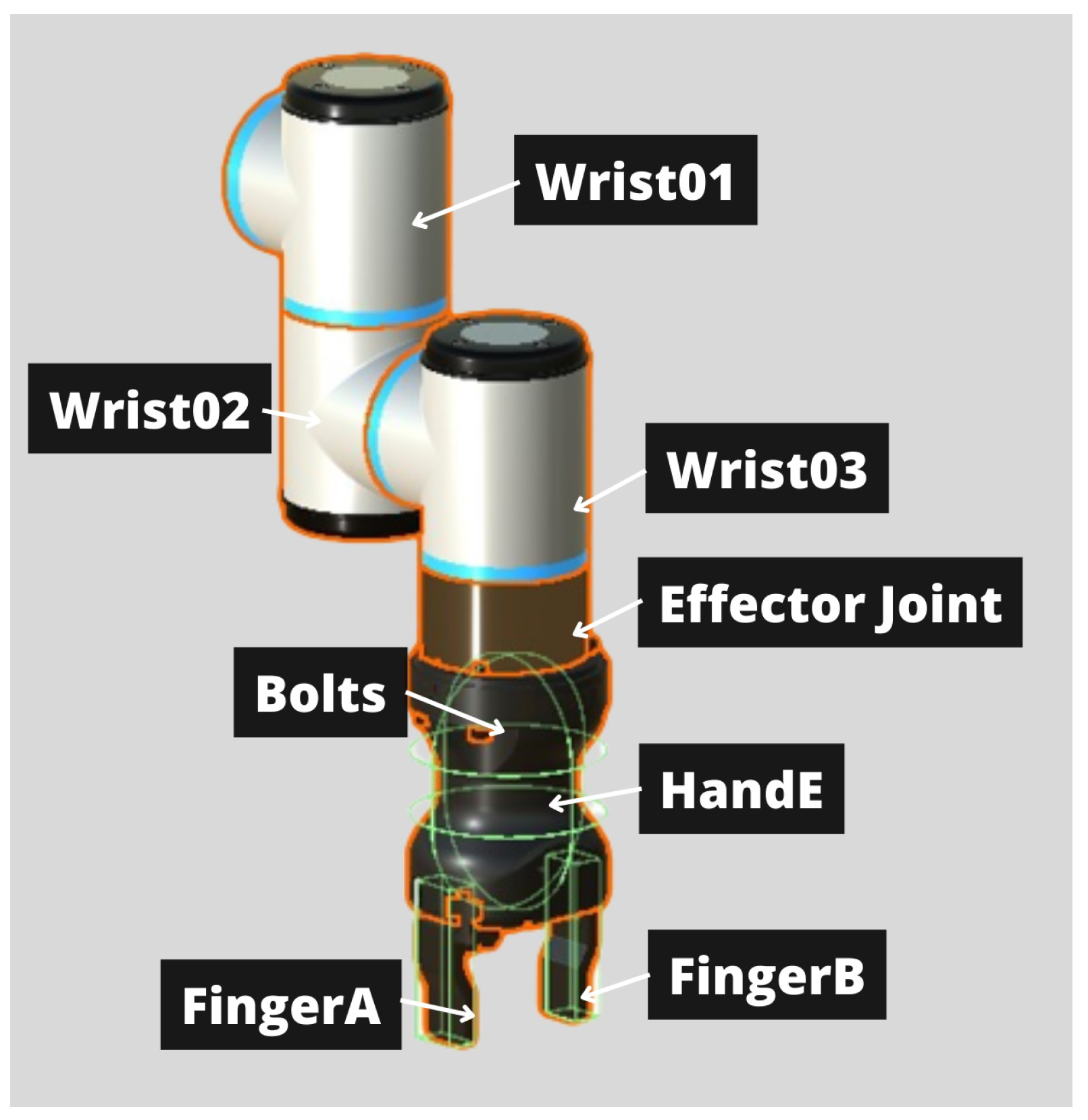

2.1. Virtual Environment

2.1.1. Virtual Reality Software

2.1.2. Appearance of the Virtual Environment

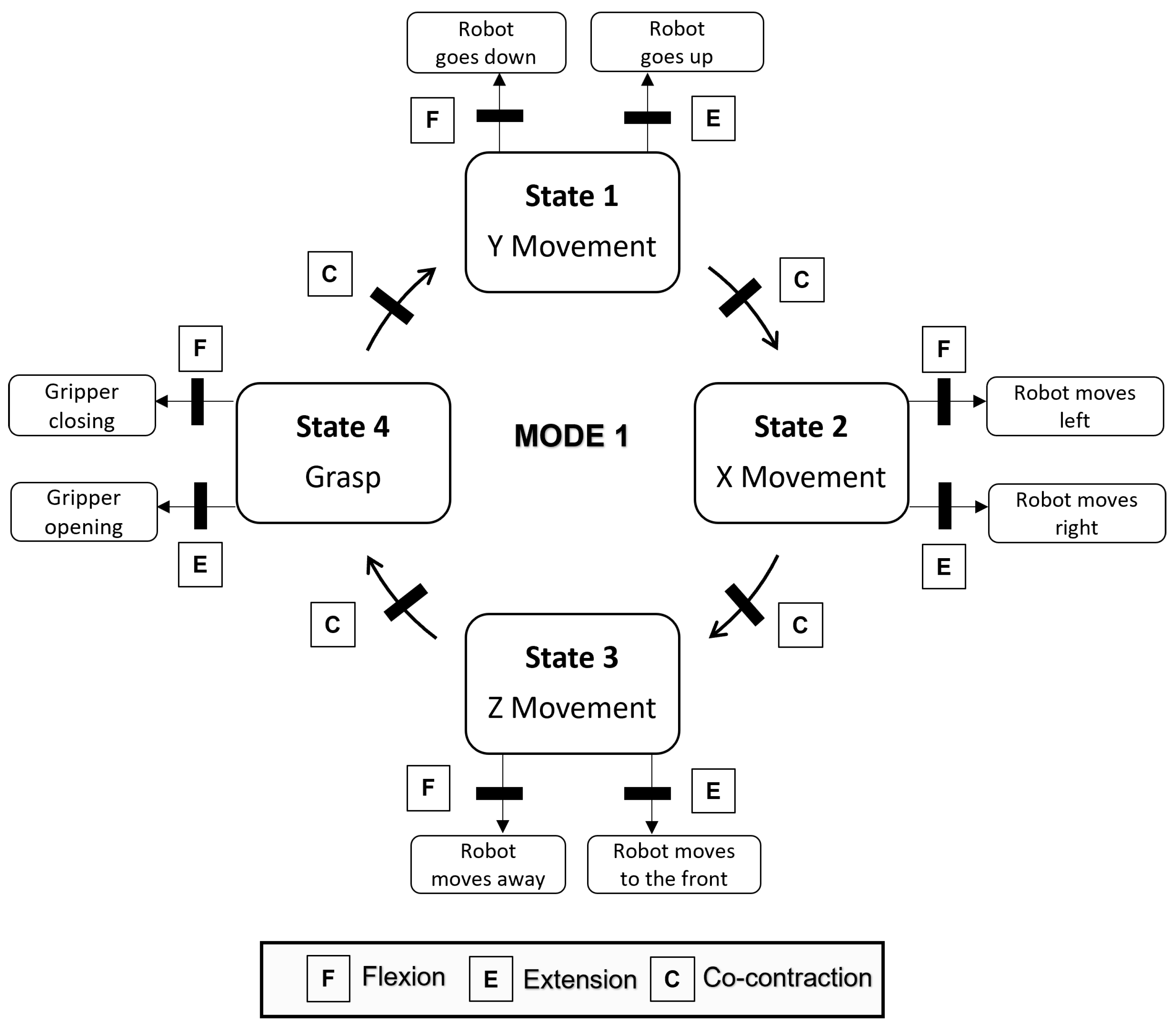

2.1.3. Control Modes

- Mode 1: Free motion on three axes and manual grasping. The user is in charge of most of the robot’s control and can perform eight different actions: moving the robot’s end effector in the positive or negative direction along each of the three Cartesian axes (six actions), and opening or closing the gripper (two actions).

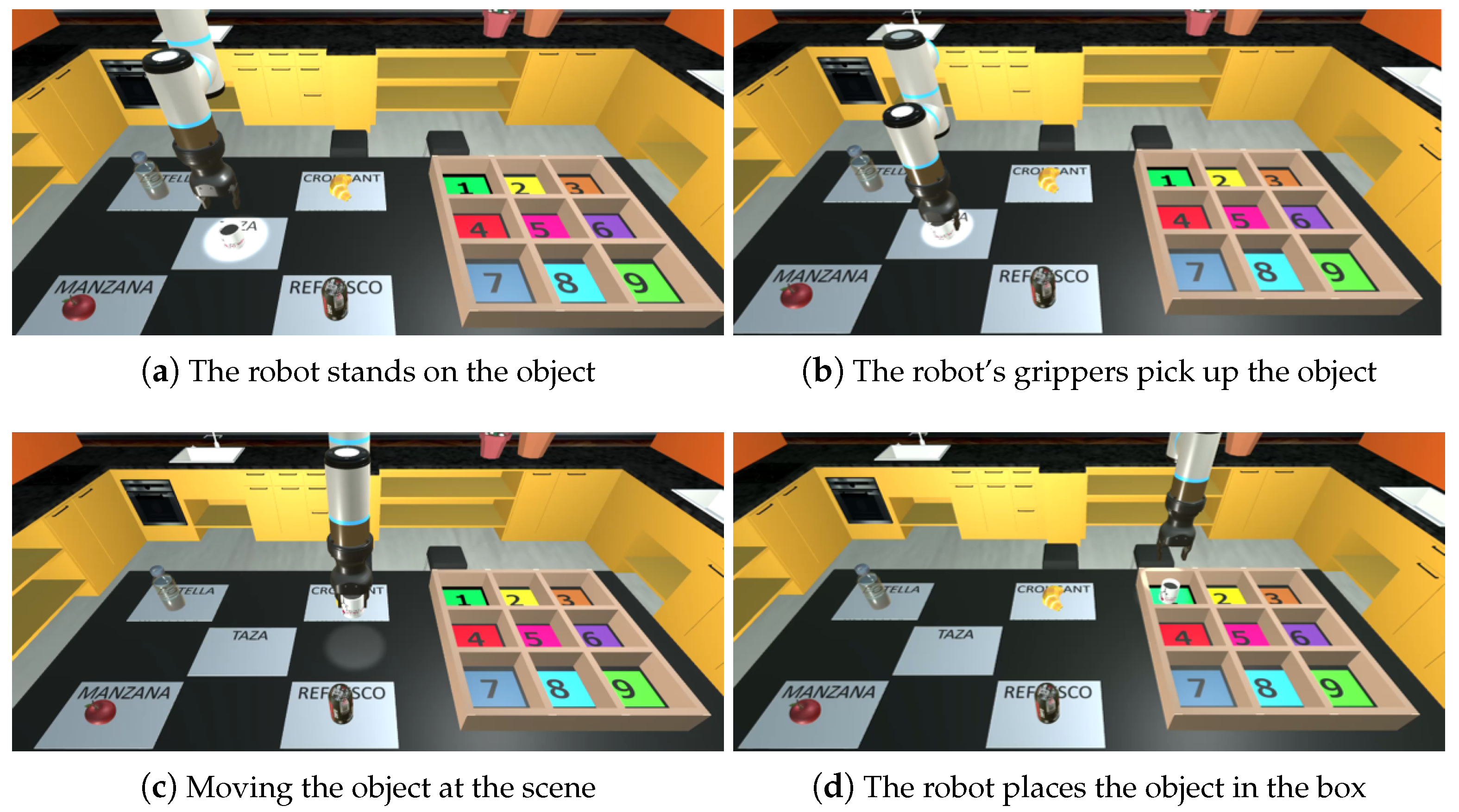

- Mode 2: Free motion on two axes and automatic grasping. The number of actions that the user can perform is reduced to six. Four of them translate the robot along the x and z axes, in the positive and negative directions. The other two command the robot to automatically grasp or place an object. Figure 4 illustrates the robot’s movement in the scene: the robot positions itself over the closest object, grasps it with its gripper, and transports it to the target position. Upon reaching the desired box position, the robot deposits the object.

- Mode 3: Automatic motion and grasping. This is the only mode in which all the robot’s movements are performed automatically. Using the options provided in the virtual interface, the user selects the object to grasp and the position where it will be placed.
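The action sets of the three modes can be summarized in a short Python sketch. Only the counts (eight actions in Mode 1, six in Mode 2) and the groupings come from the descriptions above. The action labels, the `MODE_ACTIONS` dictionary, and the `action_count` helper are hypothetical names chosen for illustration, and the two Mode 3 entries simply mirror the two choices the user makes in the interface.

```python
# Hypothetical action labels; only the counts and groupings come from the text.
AXIS_MOVES_3D = [f"{axis}{sign}" for axis in "xyz" for sign in "+-"]  # 6 translations
AXIS_MOVES_2D = [f"{axis}{sign}" for axis in "xz" for sign in "+-"]   # 4 translations

MODE_ACTIONS = {
    # Mode 1: free motion on three axes plus manual gripper control.
    1: AXIS_MOVES_3D + ["open_gripper", "close_gripper"],
    # Mode 2: free motion on x and z plus automatic grasp/place commands.
    2: AXIS_MOVES_2D + ["auto_grasp", "auto_place"],
    # Mode 3: the user only makes selections; all motion is automatic.
    3: ["select_object", "select_destination"],
}


def action_count(mode: int) -> int:
    """Return the number of user actions available in the given mode."""
    return len(MODE_ACTIONS[mode])


print(action_count(1), action_count(2))  # 8 6
```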

2.2. Myoelectric Control

2.2.1. Selected Muscles and Movements

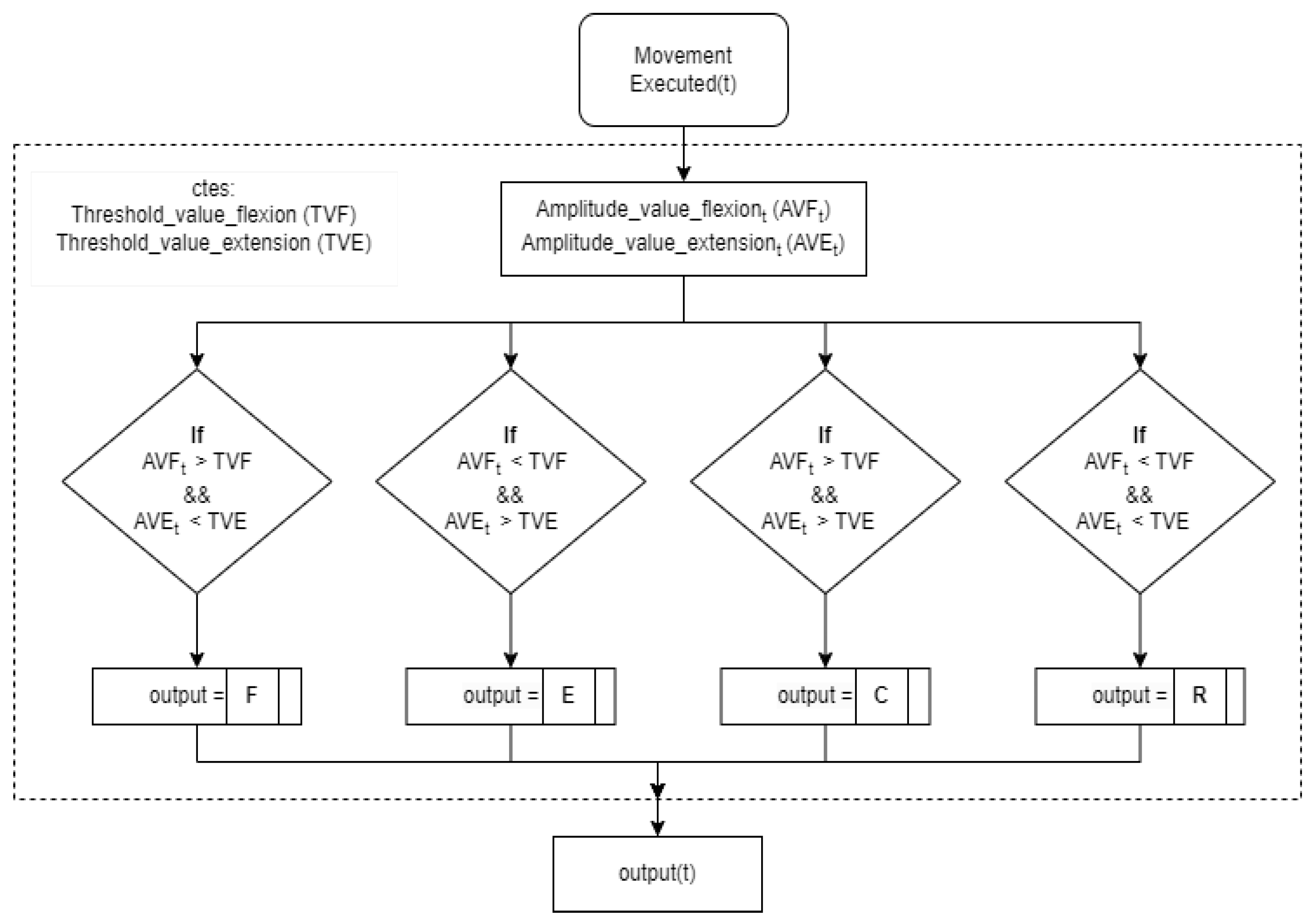

2.2.2. Signal Processing

2.2.3. Control Approach

- In Mode 1, the state machine has four states. This mode provides the user with the highest number of degrees of freedom. Figure 6 shows how these states are arranged and how each user movement maps to a corresponding robot movement in the virtual environment.

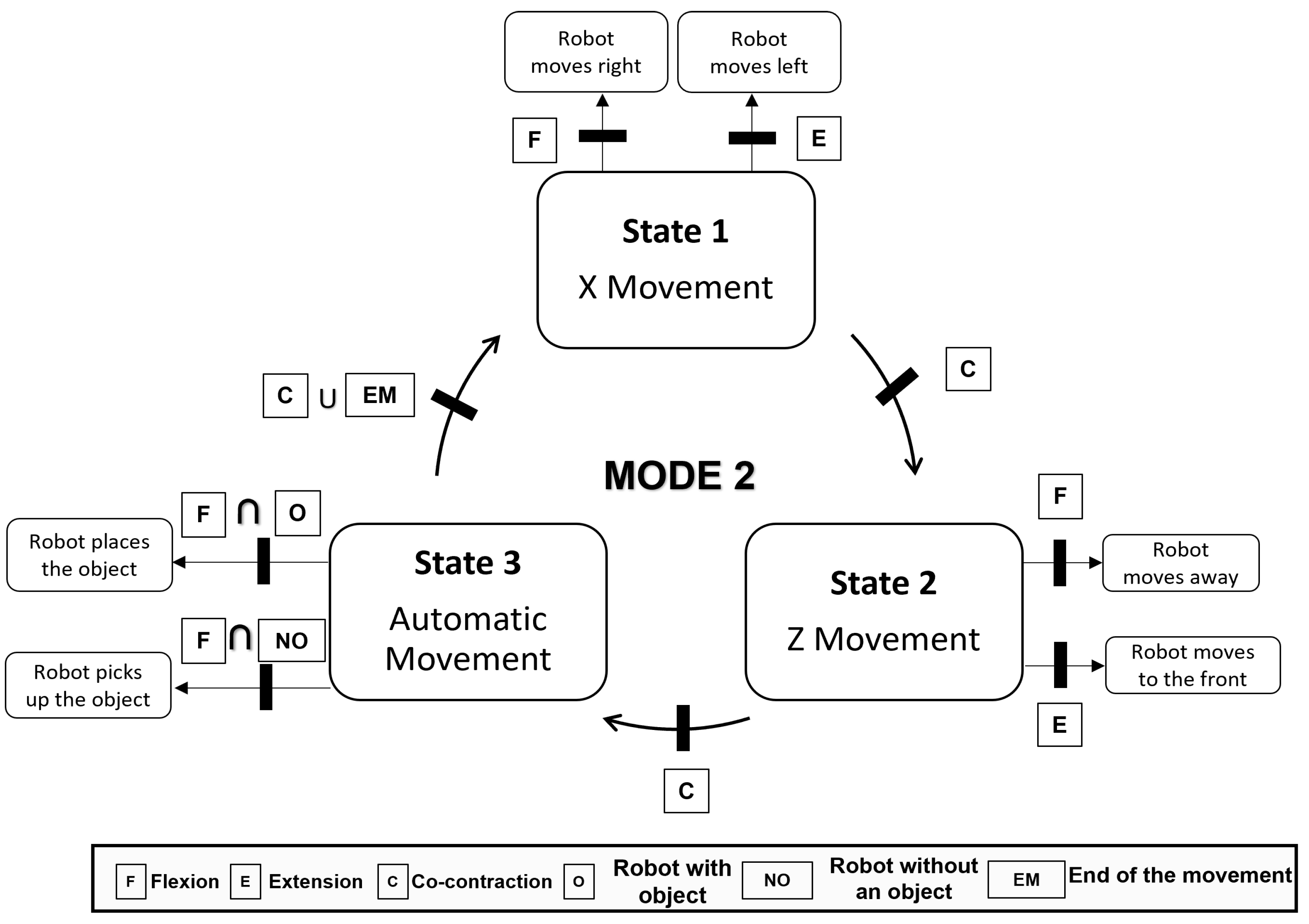

- Mode 2 has one state fewer because the user cannot move the object along the y-axis (Figure 7). The robot performs this function automatically, as described in the virtual environment section.

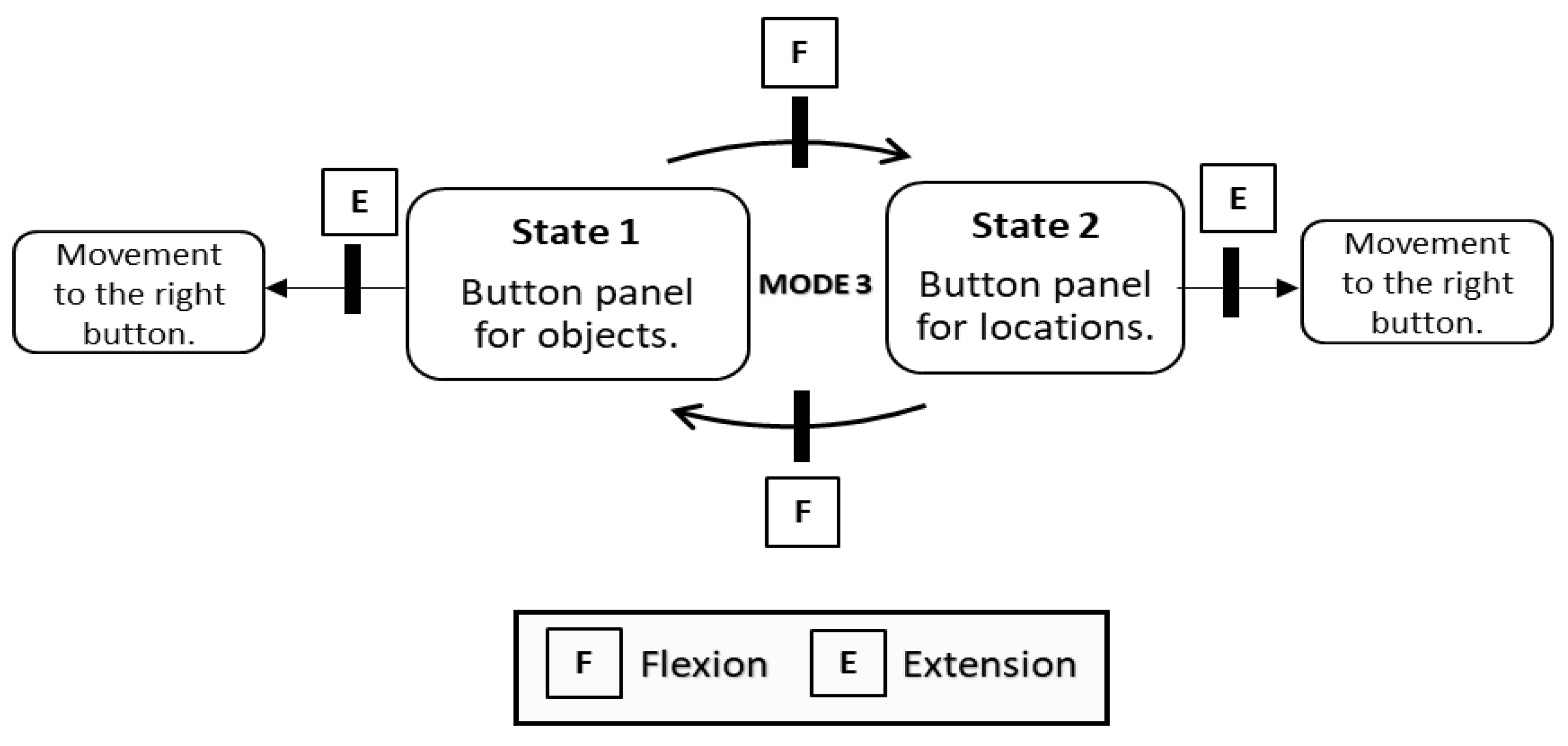

- Mode 3 differs significantly from the previous modes. The user performs extension and flexion movements to move around a button panel and select one of the options (Figure 8). This mode has two states: in the first, the user selects the object; in the second, the user selects the object’s destination. The transition between states occurs automatically when the robot finishes the pick-up or drop-off function. For this reason, the co-contraction movement has no function in this mode.

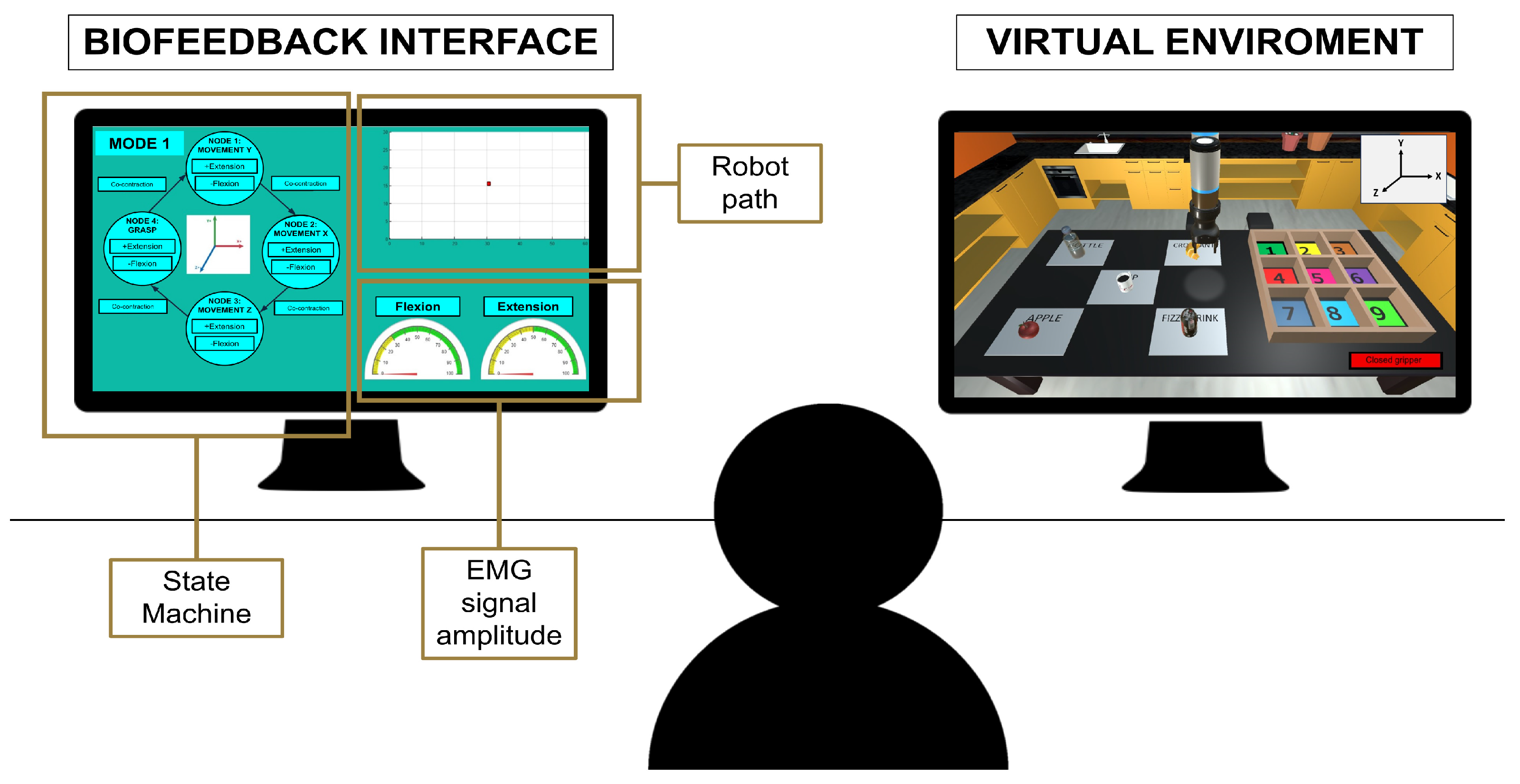
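The three state machines can be illustrated with a minimal Python sketch. It rests on several assumptions that the text above does not confirm: that the Mode 1 states correspond to the x, y and z axes plus the gripper, that the Mode 2 states correspond to the x and z axes plus the automatic grasp/place function, and that co-contraction cycles through the states in Modes 1 and 2. Only the number of states per mode, the automatic transition in Mode 3, and the fact that co-contraction does nothing in Mode 3 are taken from the description. All identifiers are hypothetical.

```python
from dataclasses import dataclass

# ASSUMED state labels; the source gives only the number of states per mode.
MODE_STATES = {
    1: ["x_axis", "y_axis", "z_axis", "gripper"],
    2: ["x_axis", "z_axis", "auto_grasp_place"],
    3: ["select_object", "select_destination"],
}


@dataclass
class ModeStateMachine:
    mode: int
    index: int = 0

    @property
    def state(self) -> str:
        return MODE_STATES[self.mode][self.index]

    def _advance(self) -> None:
        # Move to the next state, wrapping around after the last one.
        self.index = (self.index + 1) % len(MODE_STATES[self.mode])

    def on_movement(self, movement: str) -> str:
        """Handle 'flexion', 'extension' or 'co_contraction'; return a command label."""
        if movement == "co_contraction":
            if self.mode == 3:
                return "ignored"  # from the text: no function in Mode 3
            self._advance()  # ASSUMPTION: co-contraction switches state in Modes 1 and 2
            return f"switch_to:{self.state}"
        if movement in ("flexion", "extension"):
            return f"{self.state}:{movement}"
        raise ValueError(f"unknown movement: {movement!r}")

    def on_robot_task_done(self) -> None:
        """Mode 3 only: change state when the robot finishes pick-up or drop-off."""
        if self.mode == 3:
            self._advance()


sm = ModeStateMachine(mode=1)
print(sm.on_movement("flexion"))         # x_axis:flexion
print(sm.on_movement("co_contraction"))  # switch_to:y_axis
```

Keeping the transition rule separate from the per-state commands makes it straightforward to swap in a different state order or trigger if the actual design differs from these assumptions.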

2.2.4. Biofeedback Interface

2.3. Experimental Protocol

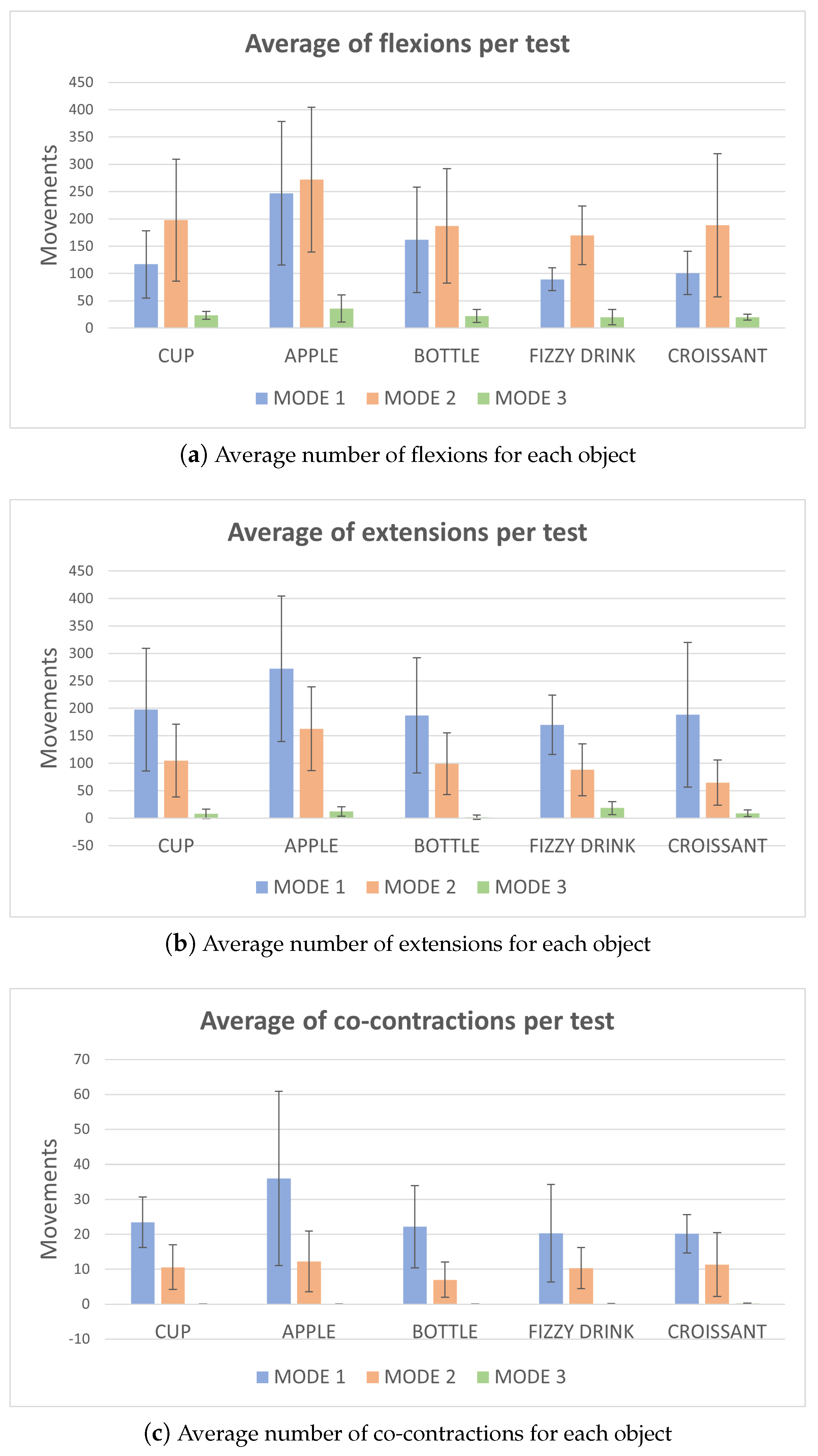

3. Results

Survey Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Mode 1

| User | Bottle Flex | Bottle Ext | Bottle Co | Croissant Flex | Croissant Ext | Croissant Co | Cup Flex | Cup Ext | Cup Co | Apple Flex | Apple Ext | Apple Co | Fizzy Drink Flex | Fizzy Drink Ext | Fizzy Drink Co |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| User 1 | 73 | 88 | 43 | 34 | 40 | 23 | 29 | 33 | 19 | 50 | 52 | 19 | 68 | 85 | 51 |
| User 2 | - | - | - | 139 | 132 | 27 | 80 | 130 | 23 | 98 | 125 | 11 | 57 | 100 | 15 |
| User 3 | - | - | - | 86 | 461 | 23 | 175 | 285 | 27 | 450 | 490 | 87 | 90 | 203 | 11 |
| User 4 | 210 | 282 | 15 | 154 | 207 | 23 | 162 | 266 | 15 | 261 | 314 | 23 | 104 | 207 | 15 |
| User 5 | 43 | 59 | 19 | 83 | 131 | 15 | 40 | 42 | 23 | 234 | 296 | 31 | 118 | 191 | 19 |
| User 6 | 252 | 246 | 19 | 97 | 168 | 11 | 148 | 248 | 15 | 276 | 313 | 31 | 98 | 190 | 11 |
| User 7 | - | - | - | - | - | - | 112 | 285 | 35 | 228 | 312 | 27 | 90 | 214 | 20 |
| User 8 | 231 | 260 | 15 | 114 | 179 | 19 | 189 | 293 | 31 | 379 | 274 | 59 | - | - | - |

Mode 2

| User | Bottle Flex | Bottle Ext | Bottle Co | Croissant Flex | Croissant Ext | Croissant Co | Cup Flex | Cup Ext | Cup Co | Apple Flex | Apple Ext | Apple Co | Fizzy Drink Flex | Fizzy Drink Ext | Fizzy Drink Co |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| User 1 | 21 | 20 | 4 | 18 | 18 | 14 | 15 | 17 | 7 | 34 | 48 | 7 | 22 | 28 | 7 |
| User 2 | 42 | 50 | 4 | 19 | 32 | 4 | 37 | 50 | 4 | 42 | 51 | 7 | 34 | 71 | 8 |
| User 3 | 106 | 129 | 7 | 30 | 77 | 4 | - | - | - | 140 | 171 | 11 | 64 | 87 | 7 |
| User 4 | 129 | 141 | 4 | 29 | 98 | 13 | - | - | - | 187 | 237 | 17 | 86 | 117 | 10 |
| User 5 | 31 | 27 | 7 | 54 | 6 | 7 | 95 | 147 | 10 | 155 | 179 | 7 | 10 | 14 | 4 |
| User 6 | 111 | 135 | 4 | 25 | 89 | 7 | 96 | 155 | 11 | 139 | 158 | 14 | 77 | 146 | 10 |
| User 7 | 92 | 137 | 7 | 15 | 122 | 32 | - | - | - | 126 | 246 | 31 | 78 | 122 | 23 |
| User 8 | 131 | 153 | 19 | 26 | 77 | 10 | 108 | 155 | 2 | 146 | 211 | 4 | 91 | 120 | 14 |

Mode 3

| User | Bottle Flex | Bottle Ext | Bottle Co | Croissant Flex | Croissant Ext | Croissant Co | Cup Flex | Cup Ext | Cup Co | Apple Flex | Apple Ext | Apple Co | Fizzy Drink Flex | Fizzy Drink Ext | Fizzy Drink Co |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| User 1 | 2 | 0 | 0 | 8 | 13 | 2 | 4 | 4 | 0 | 2 | 6 | 0 | 6 | 13 | 0 |
| User 2 | 5 | 2 | 0 | 3 | 7 | 0 | 3 | 4 | 0 | 3 | 30 | 0 | 2 | 10 | 0 |
| User 3 | 2 | 0 | 0 | 2 | 2 | 0 | 6 | 5 | 0 | 2 | 6 | 0 | 2 | 13 | 0 |
| User 4 | 11 | 0 | 0 | 2 | 4 | 0 | 3 | 8 | 0 | 2 | 19 | 0 | 4 | 12 | 0 |
| User 5 | 11 | 0 | 0 | 4 | 8 | 0 | 5 | 4 | 0 | 5 | 11 | 0 | 9 | 34 | 0 |
| User 6 | 3 | 0 | 0 | 3 | 2 | 0 | 2 | 4 | 0 | 3 | 7 | 0 | 3 | 8 | 0 |
| User 7 | 3 | 0 | 0 | 2 | 17 | 0 | 3 | 29 | 0 | 3 | 7 | 0 | 3 | 40 | 1 |
| User 8 | 4 | 11 | 0 | 3 | 17 | 0 | 2 | 4 | 0 | 3 | 11 | 0 | 2 | 18 | 0 |
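To compare the three modes at a glance, the per-object values in the tables above can be summed per movement type. The sketch below does this for User 1 only, with the values copied from the tables. The names `USER1` and `totals_by_movement` are ours, and the reading of Flex, Ext and Co as flexion, extension and co-contraction is presumed from the column labels.

```python
# Values copied from the User 1 rows of the Mode 1, Mode 2 and Mode 3 tables.
# Column order: Bottle, Croissant, Cup, Apple, Fizzy Drink; Flex, Ext, Co for each.
USER1 = {
    1: [73, 88, 43, 34, 40, 23, 29, 33, 19, 50, 52, 19, 68, 85, 51],
    2: [21, 20, 4, 18, 18, 14, 15, 17, 7, 34, 48, 7, 22, 28, 7],
    3: [2, 0, 0, 8, 13, 2, 4, 4, 0, 2, 6, 0, 6, 13, 0],
}


def totals_by_movement(row):
    """Collapse a 15-value row into totals per column type (Flex, Ext, Co)."""
    return {
        "Flex": sum(row[0::3]),
        "Ext": sum(row[1::3]),
        "Co": sum(row[2::3]),
    }


for mode, row in USER1.items():
    print(mode, totals_by_movement(row), "total:", sum(row))
# 1 {'Flex': 254, 'Ext': 298, 'Co': 155} total: 707
# 2 {'Flex': 110, 'Ext': 131, 'Co': 39} total: 280
# 3 {'Flex': 22, 'Ext': 36, 'Co': 2} total: 60
```

For this user the totals fall from 707 in Mode 1 to 280 in Mode 2 and 60 in Mode 3. Rows containing "-" entries would need those cells skipped or handled explicitly before summing.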

| User | Total Test Time (min′ s″) | Flexion Threshold (µV) | Extension Threshold (µV) | Success Rate (%) |
|---|---|---|---|---|
| User 1 | 58′01″ | 20 | 30 | 100 |
| User 2 | 45′50″ | 40 | 50 | 93.33 |
| User 3 | 58′56″ | 40 | 60 | 86.67 |
| User 4 | 50′04″ | 70 | 60 | 93.33 |
| User 5 | 50′45″ | 60 | 100 | 100 |
| User 6 | 42′07″ | 30 | 60 | 100 |
| User 7 | 51′ | 20 | 30 | 80 |
| User 8 | 57′ | 30 | 50 | 93.33 |
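The success rates in this table are consistent with a simple count over the Mode 1 to Mode 3 tables, under the assumption (ours, not stated in the surviving text) that each user attempted 5 objects in each of the 3 modes and that a "-" entry marks an attempt that was not completed. A minimal sketch of that arithmetic, with hypothetical names `MISSING` and `success_rate`:

```python
# Number of objects shown as "-" per user, counted across the three mode tables.
MISSING = {
    "User 1": 0, "User 2": 1, "User 3": 2, "User 4": 1,
    "User 5": 0, "User 6": 0, "User 7": 3, "User 8": 1,
}

TASKS_PER_USER = 5 * 3  # ASSUMPTION: 5 objects in each of 3 modes


def success_rate(missing: int, total: int = TASKS_PER_USER) -> float:
    """Percentage of completed tasks, rounded to two decimals."""
    return round(100 * (total - missing) / total, 2)


for user, missing in MISSING.items():
    print(user, success_rate(missing))
```

Under this reading, one missing object gives 14/15 = 93.33%, two give 13/15 = 86.67%, and three give 12/15 = 80%, which matches the reported values for every user.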


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Polo-Hortigüela, C.; Maximo, M.; Jara, C.A.; Ramon, J.L.; Garcia, G.J.; Ubeda, A. A Comparison of Myoelectric Control Modes for an Assistive Robotic Virtual Platform. Bioengineering 2024, 11, 473. https://doi.org/10.3390/bioengineering11050473
