1. Introduction

Lung cancer remains one of the most significant health concerns worldwide, with high morbidity and mortality rates. It is primarily associated with exposure to tobacco smoke, either through active or passive smoke. However, as mentioned in Dubin et.al. [

1], non-smokers may also develop lung cancer due to environmental pollutants, occupational hazards, genetic predisposition, and underlying lung diseases. The risk increases with prolonged exposure to carcinogens, such as asbestos, radon, and certain industrial chemicals. In recent years, there has been increasing emphasis on early detection and intervention of lung cancer. At early stages, the tumor is localized; hence the treatment options are more effective, and the chances of successful intervention and cure are significantly high. Unfortunately, as sermonized in Selman et al. [

2], lung cancer often remains asymptomatic or presents nonspecific symptoms until it reaches an advanced stage, making early detection challenging.

Various detection methods have been employed to identify lung cancer early. Infante et al. [

3], utilized chest X-rays and Computed Tomography (CT) scan imaging techniques to detect suspicious lung nodules, masses, or other abnormalities that may indicate the presence of lung cancer. The Sputum Cytology method used in Thunnissenet al. [

4] analyzes the sputum sample for the presence of cancer cells. There are also procedures such as Bronchoscopy studied in Andolfiet al. [

5] that allow the collection of lung tissue samples and visualization of the airways using a thin, flexible tube and camera. All of the above methods or procedures are conducted in the primary stages after the spread of lung cancer cells in the individual. However, Zhu et al. [

6] used the method of microarray-based gene expression analysis to detect lung cancer at its earliest stages, facilitating timely intervention and improving patient outcomes. The microarray analysis compares healthy lung tissue’s gene expression profiles with cancerous tissue. The analysis aids the researchers in identifying distinct patterns and signatures associated with different lung cancer types.

1.1. Review of Previous Works

As mentioned, microarray based gene expression analysis is widely used for the detection and classification of various health conditions. A microarray is a tool used in molecular biology to study gene expression. As described by Churchill et al. [

7], microarray is a collection of microscopic spots containing DNA molecules representing individual genes. These spots are arranged in a grid pattern on a small glass slide or a silicon chip. Microarray gene expression analysis can investigate changes in gene expression patterns in cancer cells compared to normal cells, as sermonized in Ross et al. [

8]. By simultaneously measuring the expression levels of thousands of genes, microarrays can provide a comprehensive view of the changes in cancer cells. Also, as reported by Reis et al. [

9], microarray gene expression analysis can be utilized to classify different types of cancer, which can help and guide treatment decisions at early stages.

As indicated in Lapointe et al. [

10], different types of cancer have distinct gene expression profiles, which reflect the underlying molecular and genetic changes that drive the disease. In Dwivedi et. al. [

11], researchers have used microarray analysis to identify gene expression patterns that distinguish between different types of leukemia, such as acute lymphoblastic leukemia (ALL) and acute myeloid leukemia (AML). Similarly, Rody et al. [

12] used microarray analysis to distinguish between different breast cancer subtypes, such as estrogen receptor-positive and HER2-positive breast cancer. In Sanchez et al. [

13], microarray gene expression analysis is employed to predict the chances of tumor and lung cancer. Kerkentzes et al. [

14] classified Adenocarcinoma (Adeno) and Mesothelioma (Meso) lung cancer from microarray genes. Adeno and Meso are considered serious cancer conditions for diverse reasons mentioned in Wagner et al. [

15]. Adeno can exhibit rapid tumor growth and potentially spread to other body parts, including distant organs. Meso is extremely aggressive, making the treatment difficult when diagnosed at advanced stages. Both Adeno and Meso have limited treatment options compared to other forms of lung cancer. Although surgery, chemotherapy, and radiation therapy can be used, these treatments may not always be curative, especially if the cancer has spread all over the body.

Further, these cancer conditions develop resistance to standard cancer treatments due to genetic mutations in tumor cells, limiting the effectiveness of targeted therapies. Above all, the significance of this research investigation is the poor prognosis of Adeno and Meso conditions compared to other forms of lung cancer. The outcome of this research will help in timely detection, early intervention, and personalized treatment approaches to improve the conditions of cancer-diagnosed individuals. Microarray gene expression analysis-based lung cancer detection offers several advantages over traditional histopathology diagnostic methods, as sermonized in Weigelt et al. [

16]. Unlike histopathology, gene expression analysis provides molecular insights into the underlying biology of lung cancer, which helps to understand lung cancer subtypes, disease progression, and treatment responses. The molecular information goes beyond the structural or morphological features captured by imaging or histopathology, providing a deeper understanding of the disease. Also, traditional diagnostic methods may only detect lung cancer when it has already reached a more advanced stage. Microarray gene expression analysis also brings up potential biomarkers with higher sensitivity and specificity than traditional diagnostic methods. All of these benefits motivate us to use microarray gene expression analysis for early diagnosis of lung cancer.

1.2. Review of Feature Extraction Techniques

Feature extraction techniques are crucial in acquiring relevant features from microarray gene data. As described in Hiraet al. [

17], these methods aim to reduce the dimensionality of the data while retaining important information for subsequent analysis. The high dimensional data are reduced so that it can explain most of the variance in the dataset. The reduced data also contains the essential patterns and relationships between genes, entitling a more manageable and meaningful representation of the data. Feature extraction methods are advantageous in removing noise by extracting the underlying signal or patterns in the data by focusing on the most significant features.

In this paper, we employ Short-Time Fourier Transform (STFT) for analyzing and expressing the microarray gene expression data. The STFT provides a time-frequency representation of the signal, which can capture changes in gene expression over time. The STFT provides information about the temporal localization of signal events, allowing researchers to identify specific time points or intervals where gene expression changes occur as mentioned in Qi et al. [

18]. This representation can also reveal temporal patterns and relationships between genes, allowing for the identification of dynamic gene expression changes associated with specific conditions or biological processes. The STFT can also extract frequency-domain features from gene expression data. These features provide additional information about the distribution or characteristics of gene expression patterns that help to improve classification accuracy. Also, STFT identifies specific frequency bands representing relevant genes associated with biological processes and disease conditions.

1.3. Review of Feature Selection Techniques

After feature extraction, feature selection methods further improve lung cancer classification from microarray gene expression data. As described in Abdelwahab et al. [

19], these methods help identify a subset of relevant genes that are informative for distinguishing between different lung cancer types. In this paper, we employ metaheuristic feature selection methods namely Particle Swarm Optimization (PSO) and Harmonic Search (HS) for selecting this subset of genes most discriminatory for lung cancer classification. As elaborated in Shukla et al. [

20], by focusing on the most discriminative genes, these metaheuristic methods can reduce the risk of overfitting and improve the generalization capability of the classifier, leading to better performance. Also, this is extremely useful for classifying the LH2 dataset, as the number of samples is limited. Metaheuristic feature selection methods can also handle correlations and dependencies between genes to remove redundant genes while maximizing their relevance to lung cancer classification. Overall, the metaheuristic feature selection methods identify a biologically relevant gene subset and can give insights into the underlying biological processes associated with lung cancer. But, the choice of metaheuristic algorithm for the feature selection method plays a vital role in selecting the relevant genes from the dataset. So it is important to evaluate different metaheuristic methods and consider their performance, computational complexity, and suitability for the specific problem.

1.4. Review of CNN Methodology

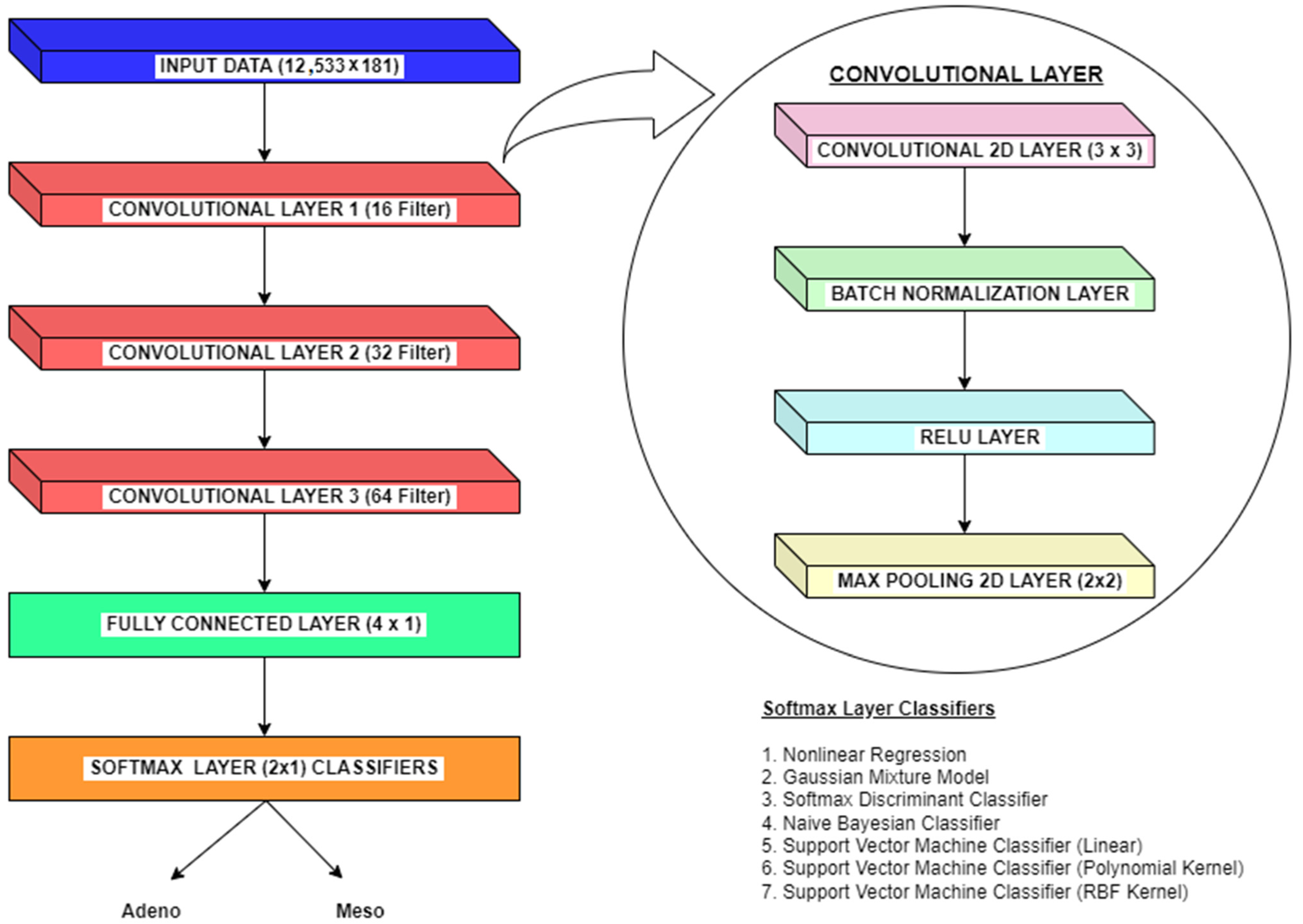

Apart from machine learning classifiers, Convolutional Neural Networks (CNNs) may make a significant difference in the classification of lung cancer from microarray gene expression data. Without manual feature extraction and selection methods, CNNs can automatically learn relevant features from raw input data. In the context of gene expression data, CNNs can extract meaningful patterns and relationships between genes. This methodology allow CNNs to capture complex interactions that may be difficult to discern manually. For gene expression data, genes can be considered spatial entities, and their expressions across samples can be treated as spatial patterns. Here, CNNs excel at learning hierarchical representations, starting from low-level gene expression features and gradually building up to higher-level features that capture more abstract characteristics. Also, CNNs can identify gene expression patterns relevant to lung cancer, even if they occur in different regions or orders within the microarray. CNNs employ parameter optimization and deduce conclusions better from limited training data like microarray gene expression datasets. Further, CNNs are robust to inherent noise, variability, and batch effects variations. CNNs can learn to identify important patterns despite these variations, making them suitable for analyzing microarray gene expression datasets. Also, CNNs models inherently form nonlinear relationships between features crucial for capturing the complex and nonlinear interactions within gene expression data. So we also use experiments on employing CNN methodology to classify lung cancer from microarray gene expression data. The overall methodologies for dimensionality reduction and classification of microarray gene expression data for lung cancer employed in this paper are shown in

Figure 1.

Despite the numerous benefits of using CNNs for classifying lung cancer from microarray gene expression data, they also have certain limitations. Even though CNNs are good at learning features, it is often challenging to interpret the physical significance behind the prediction and classification of gene expression patterns from the learned features due to their black-box nature. Further, CNNs are usually prone to overfitting due to the limited dataset availability; hence, it is important to carefully tune and select the parameters in the CNN model. The feature extraction and selection methods can solve the overfitting problem to a certain extent in CNN models. However, these stages also add computational complexity to CNNs’ inherent complexity. For the microarray gene expression dataset used in this paper (Lung Harvard 2 Dataset), the imbalanced class distributions in the lung cancer dataset can pose challenges. Due to class imbalance, CNNs may tend to bias towards the Adeno class, leading to lower accuracy for the Meso class. The performance of CNN can be improved by carefully choosing tuning parameters like learning rate, network architecture, and regularization strength. Also, suitable classifiers are integrated into the softmax layer to improve classification performance in CNN.

1.5. Description of the Dataset

In this research, we have utilized the Lung Harvard 2 (LH2) medical imaging dataset [

21]. This dataset contains translated microarray data of patient samples affected by Adenocarcinoma (Adeno) and Mesothelioma (Meso). The gene expression ratio technique is the translation technique used in the dataset for a simpler representation. The technique can accurately differentiate between genetically disparate and normal tissues based on thresholds. The dataset contains a total of 150 Adeno and 31 Meso tissue samples. The 12533x 1 genes characterize each of the subject. The final row of the dataset is used for labelling Adenocarcinoma and malignant Mesothelioma samples. This microarray gene expression dataset is widely used for early and authentic diagnosis of lung cancer classification.

1.6. Relevance of This Research

As mentioned earlier, lung cancer detection from microarray gene data helps to diagnose cancer at an earlier stage, which is crucial as the treatment options are limited in advanced stages. Thus, early detection can significantly improve patient outcomes and survival rates. In this paper, we perform the lung cancer classification of Adeno and Meso cancer from the LH2 dataset. The dataset is of high volume and contains inherent nonlinearity and class imbalance. Therefore, it is important to adopt suitable preprocessing and classification methods to enhance the classification performance. Thus, learning on microarray gene data analysis enables the identification of potential biomarkers associated with lung cancer to help in diagnosis, prognosis, and monitoring treatment response.

2. Methodology

In this research, a two-level strategy is adopted to improve the overall efficiency of the classifiers. First, feature extraction is performed using STFT to solve the curse of dimensionality problem. The dimension of the dataset is reduced to leverage the computational overhead of the classifier. Further, the second step involves selecting features from PSO and HS metaheuristic algorithms. The feature selection step further improves the classification methodology by selecting relevant features and patterns and reducing the risk of overfitting and underfitting.

2.1. Feature Extraction Using STFT

The Short-Time Fourier Transform (STFT) is a frequency domain analysis of the information over a short period. The STFT, when applied to microarray gene data, can reduce the data dimension by extracting relevant and useful features. Gupta et al. [

22] have performed QRS Complex Detection Using STFT. The authors have identified that STFT is useful in providing a time-frequency representation of the data, which helps researchers investigate how gene expression levels change over time and across different frequency components. Identifying specific time intervals where certain frequency components are prominent is possible with STFT, giving a localized representation of frequency content over time. For microarray gene expression analysis, this feature of STFT is useful in identifying genes that exhibit temporal patterns active during specific time intervals. Moreover, STFT reduces dimensionality by extracting the important genes or gene clusters associated with specific frequency components. The dimensionality of the microarray gene expression dataset is reduced to 2049 × 150 and 2049 × 31 for Adeno and Meso subjects, respectively. For performing STFT, the Blackman window that minimizes spectral leakage is used as the windowing function. Thus, STFT can provide biological insights by finding the frequency patterns and fundamental relationships in the dataset.

Here,

x[

n] represents the input data having length

N with

n = 0, 1, 2, …

N−1. The STFT window is represented by

w[

n] having length

M with

m = 0, 1, 2, … M−1, j = √ (−1) denotes the complex number. The Blackman window is given by the expression

Next section is an analysis on the various statistical parameters associated with STFT dimensionality reduction.

2.2. Statistical Analysis on STFT

After the dimensionality reduction methods on microarray genes, the resultant outputs are analyzed by the statistical parameters such as mean, variance, skewness, kurtosis, Pearson correlation coefficient (PCC), f-test,

t-test,

p-value, and Canonical correlation Analysis (CCA) to identify whether the outcomes are representing the underlying microarray genes properties in the reduced subspace.

Table 1 shows the statistical features analysis for the STFT Dimensionally Reduced (DR) Adeno Carcinoma and Meso Cancer Cases of microarray genes. As mentioned in

Table 1, the STFT features show higher mean, variance and kurtosis values among the two classes. Skewness values are observed to be similar with positive skewness. PCC values indicate the high correlation within the class of the attained outputs. The permutation and sample entropy for the Adeno and Meso cancer cases are similar. The f-test,

t-test and

p-value exhibit no significant nature of the dimensionally reduced outputs of the microarray gene. The statistical f-test and

t-test are performed for the Null Hypothesis. The

t-test value is low for the Meso cancer class but does not indicate significance as the

p-value is higher than 0.01. This statistical analysis indicates that the values are associated with non-Gaussian and nonlinear ones. The histogram, Normal probability plots, and Scatter plots of DR techniques output further examine the same. CCA visualizes the correlation of DR methods outcomes among the Adeno Carcinoma and Meso Cancer Cases. The low CCA values in

Table 1 indicate that the DR outcomes are less correlated among the two cancer classes.

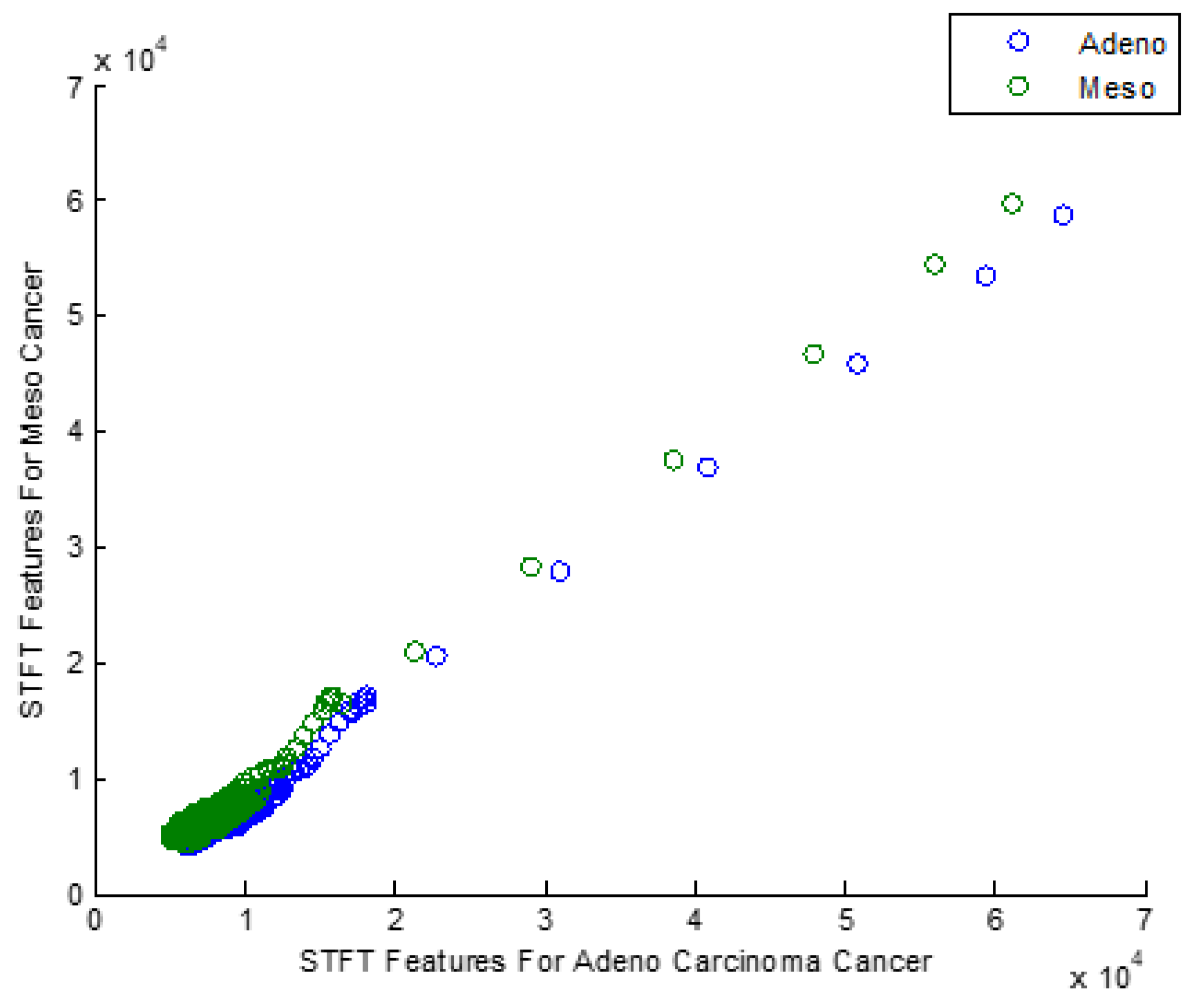

Figure 2 explores the Scatter plot for the STFT-based Dimensionality Reduction Method in Meso and Adeno Carcinoma Cancer Classes.

After applying STFT-DR, the Meso and Adeno Carcinoma Cancer dataset seems clustered and overlapped in the scatter plot as most data are amalgamated into a single group. The data after STFT-DR also does not show any signs of Gaussian distribution. Therefore, the scatter plot emphasizes the need for the feature selection process that enhances the classification accuracy of the classifiers.

2.3. Feature Selection Using Metaheuristic Algorithms

As described before, the dimensionality reduction step reduces computational complexity and removes noise in the data. The feature selection step further reduces the number of features after the dimensionality reduction step making subsequent computations faster and more efficient. Also, the feature selection step acts as one more noise filter stage that captures only the relevant features that contribute to the underlying structure of the data. The feature selection step reveals the relevant features from the dimensionally reduced data. This subset of the significant features is the most informative one that holds the gene patterns and functionality to develop a simpler model. The feature selection using metaheuristic algorithms helps to overcome the problems of computationally expensive and noise-hidden datasets by employing efficient search strategies. The algorithms, namely PSO (Particle Swarm Optimization) and Harmony Search (HS), are known for effectively handling high dimensional and complex datasets like microarray gene datasets. Metaheuristic algorithms reduce the microarray gene expression datasets considering the original number of samples and the required number of genes. The reduced dataset contains complex relationships between genes and their associations with biological processes. Metaheuristic algorithms evaluate the collective behavior of the population to arrive at optimal feature subsets. The optimal feature subset assists the subsequent stages, like disease classification.

PSO is an optimization algorithm inspired by the food search and social behavior of a flock of birds. When applied in the feature selection step, the PSO explores and refines the population by moving through the entire search space, balancing exploration and exploitation. Over the iterations, PSO gradually refines the solution space towards the global optimum. The implementation of PSO is simple and efficient as it involves fewer control parameters than other metaheuristic algorithms. Therefore, PSO converges relatively fast, making it computationally efficient for feature selection from large datasets like microarray gene expression datasets. The HS, on the other hand, is inspired by the process of improvisation in music. The generation of harmonious musical notes represents potential solutions. The HS employs a unique approach to enhance the search space exploration by dodging local optima. The HS incorporates adaptive parameters that dynamically change during the search process. This behavior is useful because HS can easily adapt to the characteristics of the microarray gene expression dataset. The HS is also robust to noise and nonlinearities in data, making it a suitable method for feature selection from microarray datasets. So, based on the peculiar characteristics possessed by PSO and HS, these algorithms are used as the feature selection step in this research to understand gene interactions, capture complex relationships, balance exploration and exploitation, and thus identify the most relevant genes. Both objective and fitness functions in the PSO problem are to minimize the Mean Square Error (MSE) of the training process. The relevant features selected after the metaheuristic method will further reduce the dimensionality of the STFT feature extracted values to 2049 × 30 and 2049 × 6 for Adeno and Meso cancer subjects, respectively.

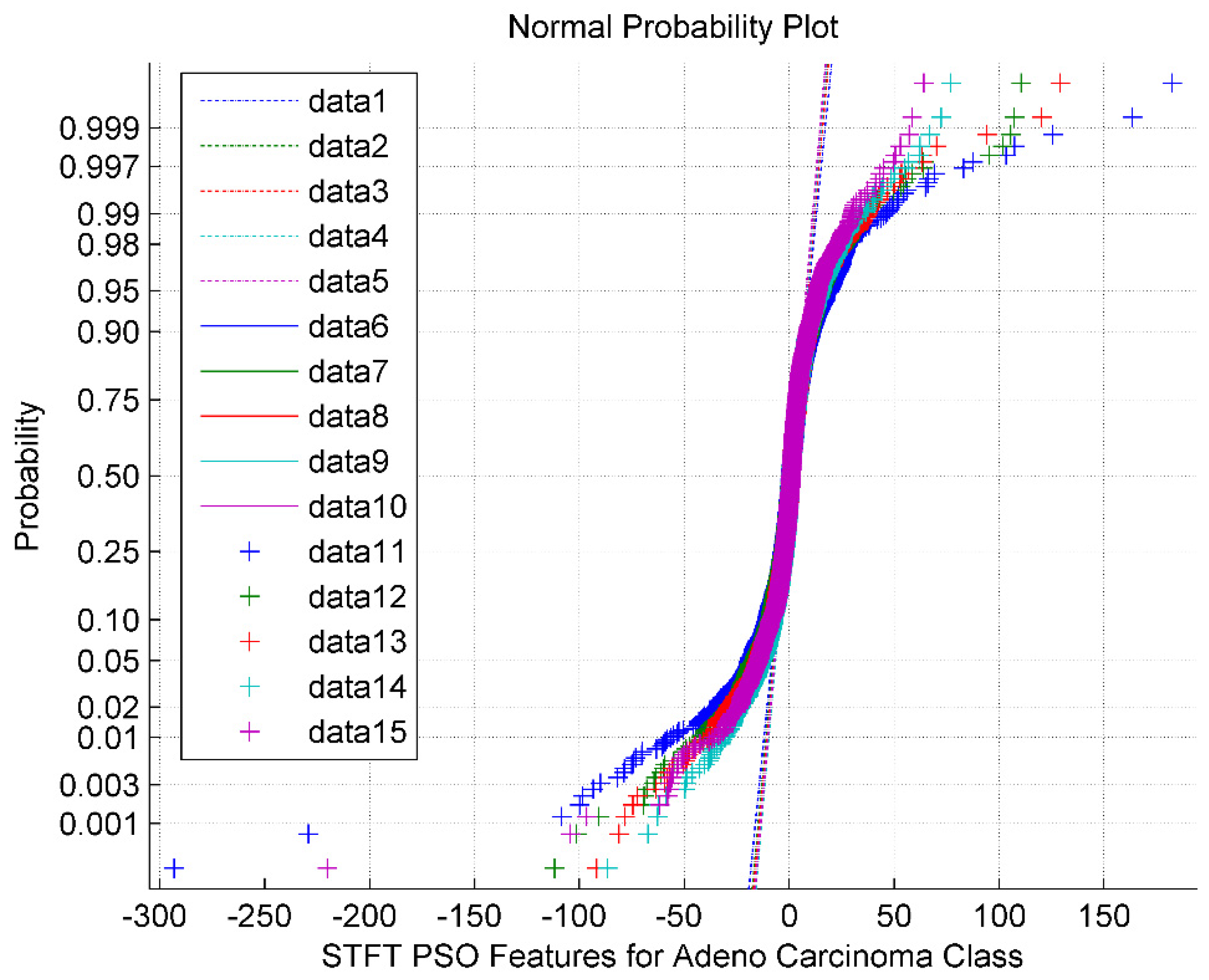

The normal probability plot for the STFT DR method followed by the PSO feature Selection technique for Adeno Carcinoma Cancer Classes is shown in

Figure 3. It is also observed from the

Figure 3 that the PSO feature selection turned around the STFT features into a clustered one with a sigmoid structure having a mean value of 2.56. The normal plot also implies that the PSO features are non-Gaussian and exhibit minimum segregation. The data 1 through data 5 represents the reference line. The data 6 through data 10 represents the upper bound. The data 11 through data 15 are the feature selected PSO values for the Adeno class.

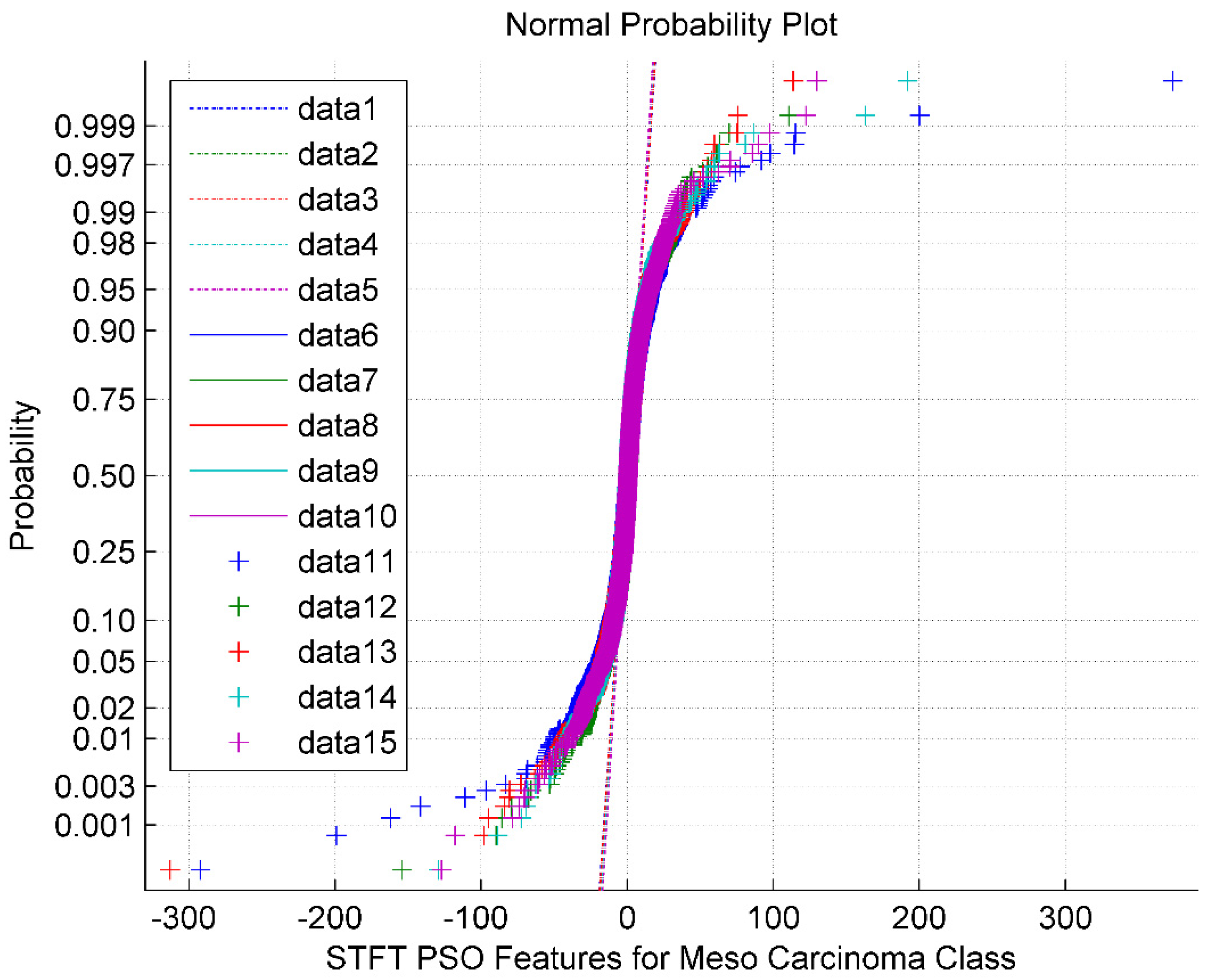

The normal probability plot for the STFT DR method followed by the PSO feature Selection technique for Meso Carcinoma Cancer Classes is shown in

Figure 4. It is also observed from

Figure 4 that the PSO feature selection clustered the STFT features around its mean value of 2.47. The normal plot also looks like a sigmoid structure. The normal plot also implies that the PSO features are non-Gaussian and exhibit minimum segregation. The data 1 through data 5 represents the reference line. The data 6 through data 10 represents the upper bound. The data 11 through data 15 are the feature selected PSO values for the Meso class.

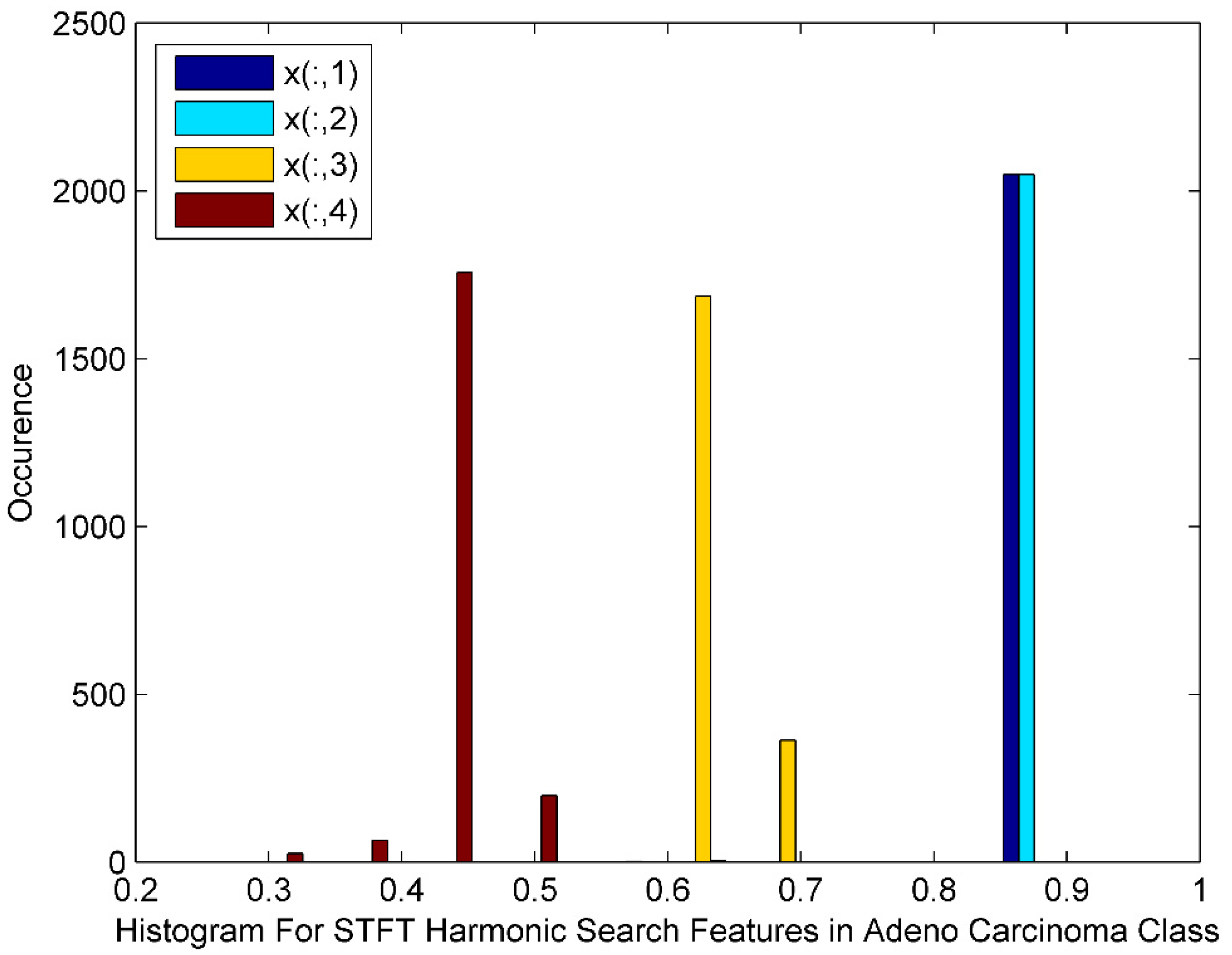

Figure 5 displays the histogram of the STFT DR method followed by Harmonic Search Feature selection techniques for Adeno Carcinoma Cancer classes. The histogram also depicts harmonic search features with outliers, wide gaps, down trends and non-Gaussian nature. The STFT dimensionality reduced Harmonic Search feature selection data for four Adeno Carcinoma class patients is denoted by x(:,1), x(:,2), x(:,3), and x(:,4).

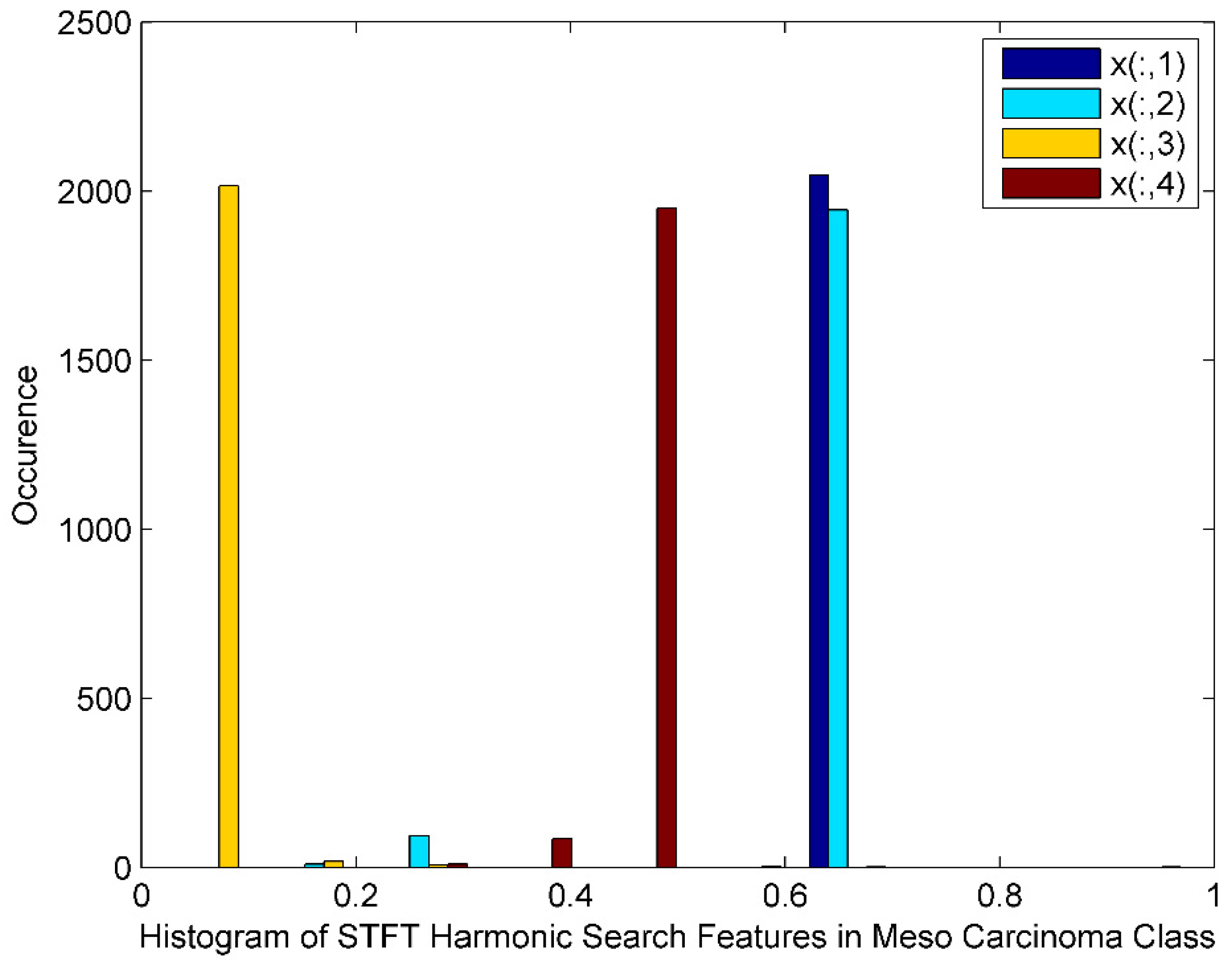

Figure 6 exhibits the histogram of the STFT DR method followed by Harmonic Search Feature selection techniques for Meso Carcinoma Cancer classes. The histogram also displays harmonic search features with outliers, wide gaps, skewed, and non-Gaussian nature. The STFT dimensionality reduced Harmonic Search feature selection data for four Meso Carcinoma class patients is denoted by x(:,1), x(:,2), x(:,3),and x(:,4).

Table 2 shows the analysis of the Friedman test in Feature selection methods on STFT Data. It is observed from

Table 2 that the PSO and Harmonic Search for the both cancer classes and the feature selection methods depicts the non-significance

p-values.

The Friedman test is one type of statistical test which tests the stationarity and two-way analysis of variance. It is an extension of the sign test for matched pairs and is used when the data arise from more than two related samples. It is applied to determine whether any dependent variable significantly affects the feature selection method. It is employed in this research as a measuring tool to compare the performance of feature selection algorithms on the same dataset and assess their relative performance. Thus, the Friedman test helps to identify the most effective method among the evaluated set. Since it is a non-parametric test, it is particularly useful when data have heterogeneity or are not following a normal distribution. The Friedman test also provides a statistical measure to determine whether the observed differences in performance are significant. Overall, the test is useful in deciding the relative efficacy of the feature selection techniques. At first, suitable parameters attained from the Friedman test are decided, and performance is evaluated multiple times to account for variability. After that, methods are ranked such that the best method receives a rank of 1, the second best receives a rank of 2, and so on. Consecutively average rank is also calculated. Finally, the Friedman statistic is computed using the following expression.

Here, k represents the number of feature selection methods, n denotes the number of repetitions, and R indicates the average rank of each method. This research measures the efficacy of feature selection methods, namely PSO and HS, on STFT data. The parameters from the Friedman test are X2r statistic, p-value, and its significance for p < 0.01, confidence level.

3. Classification Using Machine Learning Techniques

This research employs various machine learning classifiers for lung cancer classification. The choice of the classifier is very significant in the classification methodology. Martín et al. [

23] employed a Nonlinear Regression classifier for heterogeneous and highly nonlinear datasets related to the manufacturing process. For this research problem, such a classifier can capture complex nonlinear dependencies between gene expression levels and class labels is suitable. Linear classifiers cannot effectively capture these complex patterns and nonlinear decision boundaries. Also, as discussed in Dalatu et al. [

24], Nonlinear regression models can be more robust to outliers than linear classifiers. Ficklin et al. [

25] have utilized Gaussian Mixture Models (GMMs) for the classification of microarray gene data for various cancer types, namely lower-grade glioma, thyroid, glioblastoma, ovarian, and bladder cancer. Since GMMs are probabilistic models, they can work effectively on microarray gene expression datasets that are combinations of multi-modal distributions. The GMMs can capture complex data distributions by representing them as a combination of Gaussian components. Further, each Gaussian component in the mixture model can represent a different class, and the combination of these components enables the modeling of nonlinear decision boundaries that can be particularly useful when large amounts of samples overlap among the classes. Shah et al. [

26] used the Softmax Discriminant Classifier for lung classification from microarray gene expression. The classifier works on estimating probability for each class, and it produces a probability distribution over all possible classes. This property is useful and suitable for multi-class classification problems, such as microarray gene expression data. The Softmax Discriminant Classifier is also computationally efficient as it incorporates regularization techniques to prevent overfitting and improve generalization. Ahmed et al. [

27] used Naive Bayesian Classifier (NBC) to classify head and neck cancer from microarray gene expression data. NBCs are based on probabilistic principles, specifically Bayes’ theorem. The classifier computes the probabilities of diverse classes based on the feature values and allocates the class label with the highest probability. NBC framework also facilitates the integration of prior knowledge or expert beliefs through prior probabilities. Moreover, NBCs are computationally efficient, making them particularly suitable for large-volume and sparse datasets like microarray gene expression datasets. Vanitha et al. [

28] used a Support Vector Machine (SVM) for gene expression-based data classification. SVM classifiers work on Vapnik’s statistical learning theory and thus handle the curse of dimensionality problem by finding the optimal hyperplanes in high-dimensional feature space. This process maximizes the margin between classes and provides robustness to outliers. In SVM, the mapping of higher dimensional feature space is achieved through the kernel trick. SVMs often yield sparse solutions and select the most informative genes contributing to the classification task. The sparse solution reduces the computational burden and improves generalization and classification accuracy. All of the above features of SVM make it a suitable classifier for microarray gene expression datasets. The following sections discuss the classification methodology of each classifier mentioned above.

3.1. Nonlinear Regression (NLR)

The nonlinear regression classification is another variant of the linear regression classifier that associates variables in the form of y = mx + c. Unlike linear regression models, nonlinear regression models associate variables by randomly changing the variable y. NLR strives to minimize the MSE of the individual observations in the dataset. The data points are adapted to a nonlinear function through multiple iterations for building a classifier model. The Euclidean distance is initially calculated from the dataset’s target and input data through the following expression as in Dai et al. [

29].

Here,

T represents target data, and

X represents input data with

j as the sample index. The Euclidean distance is then projected to a three-dimensional space using the following cuboid equation:

Later, the minimum value of the three-dimensional space, f = min (z) is computed.

The threshold function ‘g’ for the NLR classification problem is framed with the help of the minimum value of the three-dimensional space, f = min (z) and d

0 as the sum of the squares of mean deviation values as

Since the calculation of d0 involves least squares method, the methodology employed here may be also called as a least square based NLR.

3.2. Gaussian Mixture Model

The Gaussian Mixture Model (GMM) is a probabilistic model for classification and clustering tasks. The model considers that the data points are generated from a mixture of Gaussian distributions. The probability Density Function (PDF) of the Gaussian distribution having mean μ and covariance Ԑ is given by Ge et al. [

30].

where

x is the data point,

n hold the dimensionality of the data, and

T indicates the transpose operation. The GMM assumes that the observed data are generated from a mixture of K Gaussian distributions. The posterior probability of the

kth Gaussian component with mixing coefficient π

k is given by:

µ

k and Ԑ

k are the covariance matrix and mean vector for the

kth component with

k as total number of components in the mixture. In the next step, the parameters of the GMM, including the mixing coefficients, means, and covariances, will be estimated using the Maximum Likelihood Estimation (MLE) technique. In the next step, the parameters of the GMM, including the mixing coefficients, means, and covariances, will be estimated using the Maximum Likelihood Estimation (MLE) technique. The MLE estimation for the GMM is defined as the log-likelihood of the observed data.

where

θ represents the set of all parameters of the GMM and

N is the total number of observed data points. In the next step, the expectation minimization is performed through an iterative approach by computing posterior probabilities

p(

k/

x) for each data point and updating parameters

θ by maximizing the log-likelihood, considering the computed posterior probabilities.

3.3. Softmax Discriminant Classifier

The Softmax Discriminant Classifier uses the softmax function to transform the discriminant scores into class probabilities. At first, in SDC, the discriminant scores are calculated by taking the dot product between the feature vector

x and the weight vector

wk for each class

k, along with the bias term

bk. Mathematically, the discriminant score

zk for class

k is given by Hastie et al. [

31]

For the discriminant scores

z = [

z1,

z2…

zk] for

K classes, the softmax function calculates the probability of the input

x belonging to class

k using the following expression

The loss function measures the discrepancy between the predicted class probabilities and the true class labels. The typically used loss function for Softmax Discriminant Classification is the cross-entropy loss. For the training dataset with

N samples, the cross-entropy loss ‘

L’ is defined as

where

N is the number of samples in the training dataset,

yk(i) is the true label for sample

i, which is 1 if the sample belongs to class

k and 0 otherwise, and

is the predicted probability of class

k for sample

i. Finally, the weight vector

and bias term

are updated using gradient descent optimization method with learning rate α as follows.

3.4. Naive Bayesian Classifier (NBC)

The Naive Bayesian classifier (NBC) is efficient and straightforward and works on Bayes principles. NBC is a probabilistic classification algorithm based on the Bayes theorem and feature independence assumption. First, NBC calculates the posterior probability of a class using the prior probability and likelihood. For a given class

C and features

x1,

x2…

xn, posterior probability

is expressed as Berrar [

32].

For the class C, p(C) represents the prior probability, is the likelihood, and is the evidence probability.

In Naive Bayes approach is that the features are conditionally independent for the class. This assumption that simplifies the calculation of the likelihood can be expressed as

where

is the probability of feature

of the class

C. The

is estimated from the fraction of class

C training examples with the feature value

. Then the prior probability

can be estimated as the fraction of training examples belonging to class

C. Finally, to predict the class label for the features

, the algorithm calculates the posterior probability for each class and assigns the instance to the class with the highest probability.

3.5. Support Vector Machine Classifier (Linear)

In machine learning, Support Vector Machine (SVM) is a robust learning algorithm used for analyzing and classifying data. The SVM with a linear kernel model is the type of SVM that can solve linear problems by creating a line or hyperplane which separates the data into classes. The data that reside close to the hyperplane is considered the support vector. The expression for the hyperplane used in the binary classification problem can be written as Zhang et al. [

33].

Here,

f(

x) is the objective function with

x as the feature vector,

w as the weight vector perpendicular to the hyper plane and

b as the bias term, determining the offset of the hyper plane from the origin. SVM aims to find the hyperplane that maximizes the margin. The optimization problem for SVM linear classification with margin

is given by:

Here, represents the class label (+1 or −1) for the training example with feature vector .

From the above primal optimization problem, the dual optimization problem is derived by finding the Lagrange multipliers or dual variables for each training example. The dual problem reduces computational burden using optimal Lagrange multipliers

. Thus the SVM classification with the decision function

flinear(

x) is expressed as

If flinear(x) is positive, the instance is assigned to one class, and if it is negative, it is assigned to the other class.

3.6. Support Vector Machine Classifier (Polynomial)

SVM with a polynomial kernel is a variant of SVM that allows for nonlinear classification by mapping the input features into a higher-dimensional space using a polynomial function. The polynomial kernel function calculates the dot product between the mapped feature vectors in the higher-dimensional space. The polynomial kernel function

Kpoly(

x,

z) with

x and

z are pair of feature vectors is given by Zhang et al. [

33].

where

γ is the coefficient scaling the dot product,

r is the coefficient representing the independent term, and

d is the degree of the polynomial. Further, the decision function in SVM polynomial classification is defined as the linear combination of the kernel evaluations between the support vectors and the input feature vector with the bias term. The decision function

is given by:

Based on fpoly(x) value, the class assignment is performed in a similar way as performed in SVM (Linear) classifier.

3.7. Support Vector Machine Classifier (RBF)

The Radial Basis Function (RBF) can also be used as a kernel while using SVM classifier. Here, nonlinear mapping of the input features into a higher-dimensional space is performed using an RBF. The RBF kernel

KRBF(

x,

z) that is used to compute the similarity between feature vectors in the input space is expressed as Zhang et al. [

33].

where |

x −

z| is the Euclidean distance between

x and

z with

σ as the kernel width parameter that controls the influence of each training sample. As computed before, the decision function for SVM RBF classification is also defined as a linear combination of the kernel evaluations between the support vectors and the input feature vector with the bias term. The decision function

fRBF(

x) for SVM RBF is given by:

Based on fRBF(x) value, the class assignment is performed in a similar way as performed in SVM linear and polynomial classifiers.

3.8. Selection of Target

This research involves a binary classification, and hence two targets

TAdeno and

TMeso for Adeno and Meso classification and mapping constraints must be selected. The target and mapping constraints are selected based on the distribution of classes in the dataset and the class imbalance problems involved in the microarray gene expression dataset. The target value,

is having the following constraint. For

N number of features, with

µi as the average of input feature vector, the mapping constraint is framed as:

TAdeno must have a target value greater than

µi and the average of input feature vectors

µj for the Meso case. The target value

∈ [0, 1], for the Meso case with

M number of features, the mapping constraint is framed as:

Also, the difference between the target values must follow the following constraint:

Thus, following all of the above mentioned constraints, the targets TAdeno, and TMeso for Adeno and Meso output classes are chosen as 0.85, and 0.65, respectively. The performance of classifiers is will be evaluated based on the Mean Squared Error (MSE).

3.9. Training and Testing of Classifiers

The training data for the dataset are limited. So, we perform k-fold cross-validation. K-fold cross-validation is a popular method for estimating the performance of a machine learning model. The process performed by Fushiki et al. [

34] for k-fold cross-validation is as follows. The first step is to divide the dataset into k equally sized subsets (or “folds”). For each fold, i, train the model on all of the data except the i-th fold and test the model on the i-th fold. The process is repeated for all k folds so that each is used once for testing. At the end of the process, you will have k performance estimates (one for each fold). Now, calculate the average of the k performance estimates to obtain an overall estimate of the model’s performance. Once the model has been trained and validated using k-fold cross-validation, you can retrain it on the full dataset and predict new, unseen data. The advantage of k-fold cross-validation is that it provides a more reliable estimate of a model’s performance than a simple train-test split, as it uses all of the available data. In this paper, the k-value is chosen as 10-fold. This research used a value of 2049 dimensionally reduced features per patient. This research is associated with 150 patients of Adeno Carcinoma and 31 Meso cancer patients with multi-trail training of classifiers required. The use of cross-validation removes any dependence on the choice of pattern for the test set. The training process is controlled by monitoring the Mean Square Error (MSE), which is deduced from Fushiki et al. [

34] as,

where

Oj is the observed value at time

j,

Tj is the target value at model

j;

j = 1 and 2, and

N is the total number of observations per epoch. In our case, it is 2049.

Table 3 shows the confusion matrix for lung cancer detection.

In the case of lung cancer detection, the following terms can be defined:

True Positive (TP): A patient correctly identifies as having Adeno Carcinoma lung cancer.

True Negative (TN): A patient is correctly identified as having Meso cancer.

False Positive (FP): A patient is incorrectly identified as having Adeno Carcinoma lung cancer when they have Meso Carcinoma cancer disease.

False Negative (FN): A patient is incorrectly identified as having Meso Carcinoma lung cancer when they do have the Adeno Carcinoma cancer disease.

Table 4 explores the training and testing MSE performance of the classifiers without and with feature selection (PSO and Harmonic Search) Methods for STFT Dimensionality reduction techniques. The standard training MSE always varies between 1 × 10

−6 and 1 × 10

−8, while the standard testing MSE varies from 1 × 10

−4 to 1 × 10

−7. GMM classifier without feature selection method settled at minimum training and Testing MSE of 3.6 × 10

−8 and 7.21 × 10

−7, respectively. PSO Feature Selection method SVM (RBF) Classifier scores minimum training and Testing MSE of 2.25 × 10

−9 and 3.6 × 10

−8, correspondingly. Like the Harmonic Search feature Selection method, the SVM (RBF) classifier once again attained minimum Training and Testing MSE of 2.56 × 10

−8 and 1.96 × 10

−7, respectively. The minimum testing MSE is one of the indicators towards the attainment of better classifier performance. As shown in

Table 4, the higher the value of testing MSE leads to poorer performance of the classifier, irrespective of the feature selection methods.

Table 5 explores the selection of classifier parameters during the training process. The classifiers parameters are chosen on a trial and error basis for minimum training MSE training condition. The stopping criteria for training MSE is fixed to either minimum standard MSE value or 1000 iterations. It is observed that the training MSE value reached 1.0 × 10

−10 within 1000 iterations as the training progressed.

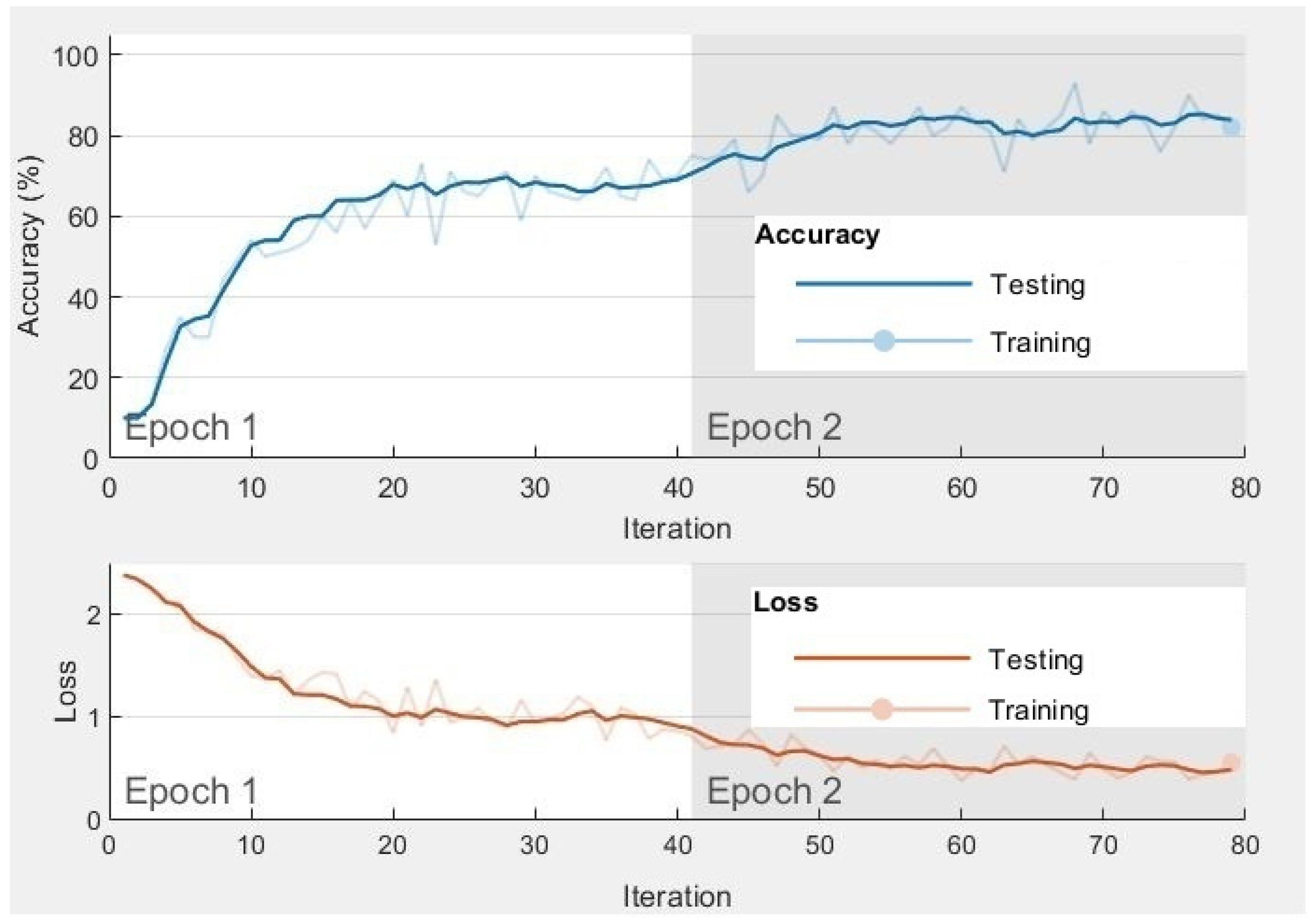

5. Results and Discussion

The research utilizes formal ten-fold testing for machine learning and CNN classification methodologies. The training uses 85% of the gene expression data, and the remaining 15% are employed for testing the model. Since this research is binary classification, the performance metrics are accordingly chosen. In binary classification problems, a confusion matrix is a valuable tool for evaluating the performance of a machine learning model. As mentioned earlier, the confusion matrix summarizes the predictions made by the model against the true labels of the data. The performance metrics such as Accuracy, Precision, Recall, F1 score, MCC, Error Rate, and Kappa are derived by analyzing the values within the confusion matrix. Next, we discuss in detail the performance matrices employed in this research.

The F1 score is a measure of a classifier’s accuracy that combines precision and recall into a single metric. It is calculated as the harmonic mean of precision and recall, with values ranging from 0 to 1, where 1 indicates perfect precision and recall. The equation for F1 score is given by Koizumi et al. [

36].

Here, precision is the proportion of true positives among all instances classified as positive, and recall is the proportion of true positives among all positive instances. The F1 score is useful when the classes in the dataset are imbalanced, meaning there are more instances of one class than the other. In such cases, accuracy may be a bad metric, as a classifier that predicts the majority class would have high accuracy but low precision and recall. The F1 score provides a more balanced measure of a classifier’s performance.

MCC stands for “Matthews Correlation Coefficient”, which measures the quality of binary (two-class) classification models. It considers true and false positives and negatives and is particularly useful in situations where the classes are imbalanced.

The MCC is defined by the following equation as given in Chicco et al. [

37]:

The MCC takes on values between −1 and 1, where a coefficient of 1 represents a perfect prediction, 0 represents a random prediction, and −1 represents a perfectly incorrect prediction.

As mentioned by Duda et al. [

38], the error rate of a classifier is the proportion of misclassified instances. It can be calculated using the following equation:

The kappa statistic, also known as Cohen’s kappa, measures agreement between two raters or between a rater and a classifier. In the context of classification, it is used to evaluate the performance of a classifier on a binary or multi-class classification task. The kappa statistic measures the agreement between the predicted and true classes, considering the possibility of the agreement by chance. Cohen et al. [

39] defined kappa as follows:

where

is the observed proportion of agreement, and

is the proportion of agreement expected by chance.

and

are calculated as follows:

The Kappa is always less than or equal to 1. The Kappa coefficient with a value of 1 implies perfect agreement and any value less than 1 can be interpreted as follows: Poor agreement (<0.2), Fair agreement (0.2–0.4), Moderate agreement (0.4–0.6), Good agreement (0.6–0.8), and Very good agreement (0.8–1). The results are tabulated in the following tables.

Table 8 indicates the performance analysis of the classifiers based on parameters such as Accuracy, Precision, Recall, F1 Score, MCC, Error Rate, and Kappa values for the STFT Dimensionality Reduction method without feature selection methods. It is explored from

Table 8 that the GMM Classifier is a high performing one with an accuracy of 80.66%, Precision of 92.59%, Recall of 83.33%, an F1 Score of 87.71%, with a low error rate of 19.34%. The GMM Classifier also demonstrates a moderate value of MCC 0.4419 and a Kappa value of 0.4285. The Softmax Discriminant Classifier is a low-performing classifier with a low accuracy of 59.11%, with high Error Rate of 40.89%, Precision of 90.42%, Recall of 56.66%, and an F1 Score of 69.67%. The MCC and Kappa values of the SD classifier are 0.2083 and 0.1609, respectively.

Table 9 depicts the performance analysis of the classifiers for the STFT Dimensionality Reduction method with PSO feature selection methods. It is identified from

Table 9 that the SVM (RBF) Classifier achieved high accuracy of 94.47%, Precision of 97.94%, Recall of 95.33%, and an F1 Score of 96.62% with a low error rate of 5.52%. The SVM (RBF) Classifier has also reached a high value of MCC 0.81709 and Kappa value of 0.81485. The nonlinear regression classifier is placed at the lower edge with a low accuracy of 59.91%, a high Error Rate of 43.09%, Precision of 88.297%, Recall of 55.34%, and an F1 Score of 68.03%. The MCC and Kappa values of the nonlinear Regression classifier are at 0.1496 and 0.1156, correspondingly.

Table 10 exhibits the performance analysis of the classifiers for the STFT Dimensionality Reduction method with Harmonic Search feature selection methods. As shown in

Table 10, the SVM (RBF) Classifier achieved high accuracy of 90.05%, Precision of 97.82%, Recall of 90%, F1 Score of 93.75% with a low error rate of 9.94%. The SVM (RBF) Classifier is also maintained at a high value of MCC 0.711 and Kappa value of 0.6963. Unfortunately, SVM (poly) classifier is placed at the low performing one with an accuracy of 59.11%, Precision of 88%, Recall of 58.67%, and a high Error Rate of 40.89% and an F1 Score of 70.4%. The MCC and Kappa value of the SVM (Poly) classifier is at 0.1512 and 0.1217, accordingly.

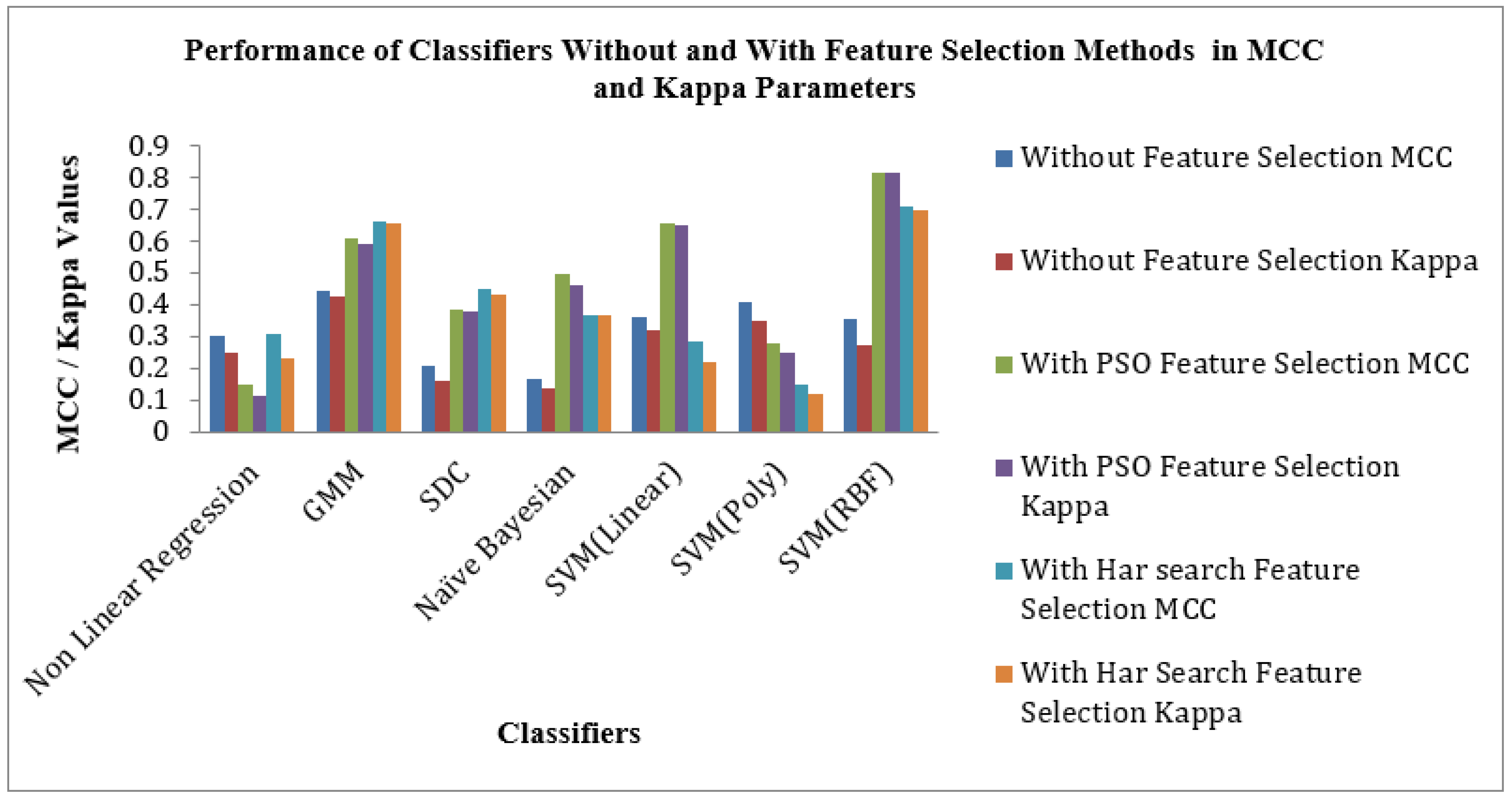

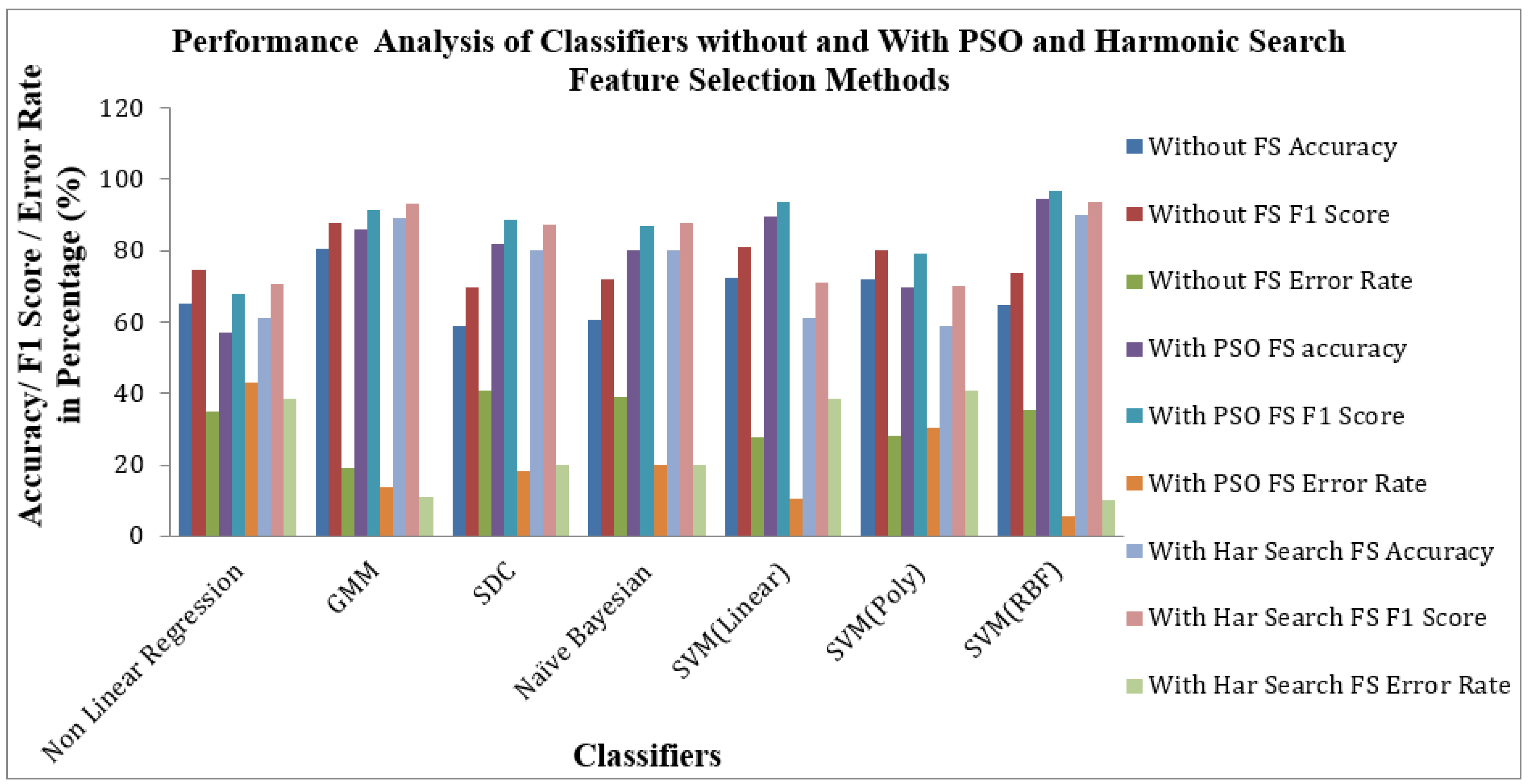

Figure 9 shows the Performance of Classifiers with and without Feature Selection regarding MCC and Kappa parameters. As indicated by

Figure 9 that the SVM (RBF) Classifier is reached a high value of MCC 0.81709 and Kappa value of 0.81485. The nonlinear Regression Classifier for the PSO feature selection method attains low MCC and Kappa values of 0.1496 and 0.1156. The average MCC and Kappa values across the classifiers for feature selection are 0.3212 and 0.27403, respectively. The average MCC and Kappa values across the classifiers for PSO and Harmonic Search Feature selection methods are settled at 0.4194, 0.3878 and 0.4841 and 0.4667. This signature effect of Feature selection shows the improvement of average MCC and Kappa values across the classifiers.

Figure 10 shows the Performance of Classifiers with and without Feature Selection regarding parameters like Accuracy, F1 Score and Error Rate. As identified by

Figure 10, the SVM (RBF) Classifier with the PSO feature selection method achieved higher values in Accuracy of 94.47%, an F1 Score of 96.62% and a lower Error Rate of 5.52% than all other categories of classifiers. In the case of the Harmonic Search feature selection method, once again SVM (RBF) classifier attained good values of Accuracy of 90.05%, an F1 Score of 93.75% and a low Error Rate of 9.944% compared with all other six classifiers. GMM Classifier attained an appreciable value of Accuracy of 80.66%, F1 Score of 87.71% and moderate Error Rate of 19.33% for the STFT inputs without any feature selection methods. The effect of feature selection improves the classifiers’ benchmark parameters and overall performance. The PSO feature selection retained its top position. It maintained its superiority over the harmonic search feature selection method, reflected in the classical improvements of the classifier’s performance.

Table 11 explores the performance analysis of the classifiers for raw microarray gene data with CNN methods. As displayed in

Table 11, the SVM (RBF) Classifier achieved the highest accuracy of 90.607%, Precision of 90.797%, Recall of 98.67%, and an F1 Score of 94.56%, with a low Error Rate of 9.39%. The SVM (RBF) Classifier is also maintained at a moderate value of MCC 0.6329 and Kappa value of 0.6031. For the CNN method, all seven classifiers are maintained at more than 83% accuracy, Precision of 88.554%, Recall of 98%, and more than 90% F1 Score. The Softmax Discriminant Classifier attained minimum MCC and Kappa values of 0.5016 and 0.4616, respectively.

Table 12 exhibits the performance analysis of the classifiers for the STFT Dimensionality Reduction method with CNN methods.

Table 12 shows that Softmax Discriminant Classifier (SDC) achieved a high accuracy of 91.66%, Precision of 93.54%, Recall of 96.67%, an F1 Score of 95.08%, with a low error rate of 8.33%. The SD Classifier is also maintained at a high MCC value of 0.6825 and Kappa value of 0.6785. All seven classifiers are maintained at more than 86% of accuracy, Precision of 87%, Recall of 96%, and more than 92% of F1 Score. The Naïve Bayesian Classifier achieved an accuracy of 91.66%, Precision of 90.909%, Recall of 100%, and also attained MCC and Kappa values of 0.6742 and 0.625, respectively. It is also observed from

Table 12 that STFT input to the CNN method enhances the performance metrics of the classifiers when compared with raw input with CNN methods, as discussed in the paper.

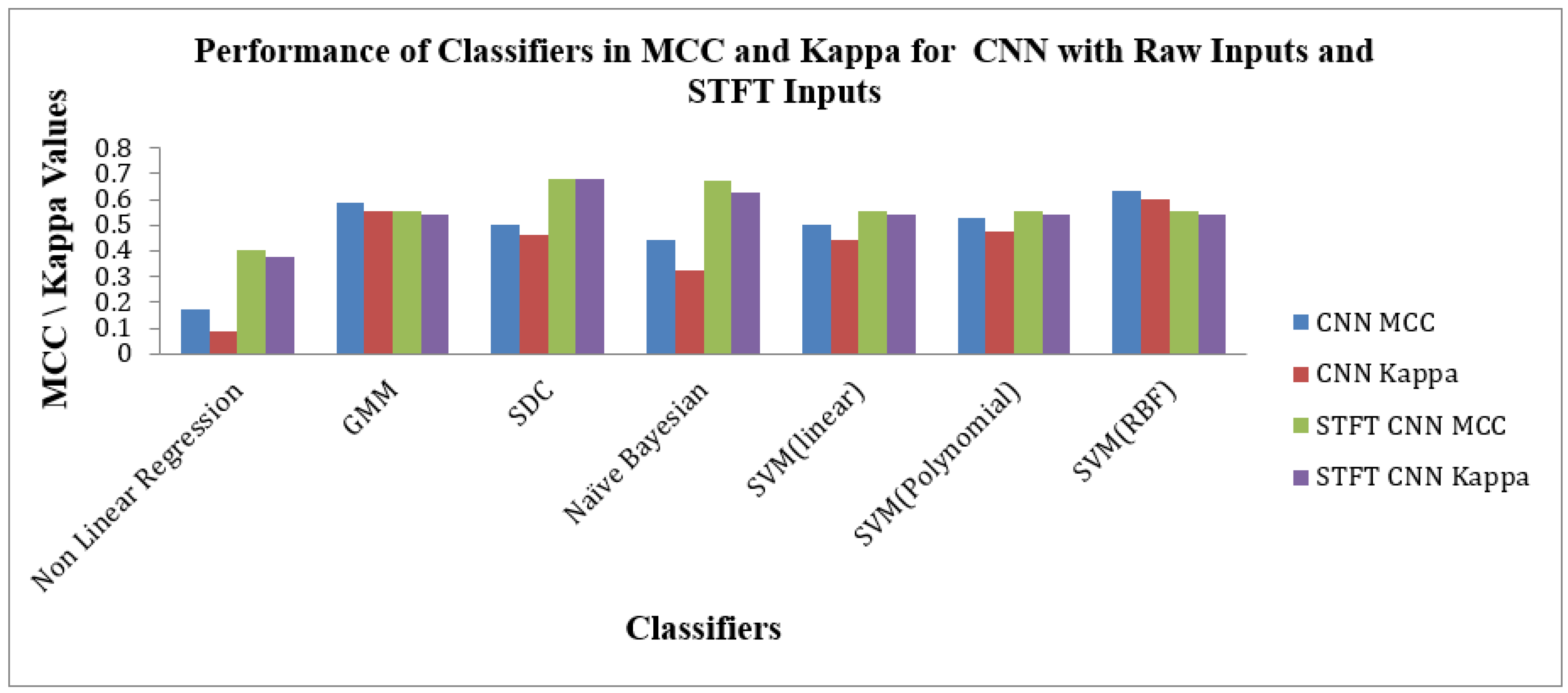

Figure 11 depicts the Performance of Classifiers regarding MCC and Kappa parameters for Raw and STFT inputs to the CNN method. As indicated by

Figure 11, the SD Classifier for the STFT feature method reached high MCC and Kappa values of 0.6825 and 0.6785, respectively. The average MCC and Kappa values across the classifiers are 0.5236 and 0.485. As shown by

Figure 11 that the SVM (RBF) Classifier is attained at good values of MCC 0.6329 and Kappa value of 0.6031 for the raw inputs to the CNN method. The average MCC and Kappa value across the classifiers for CNN is 0.4794 and 0.4226, respectively. The Scalogram effect of STFT inputs to the CNN methods exemplifies the enhancement of average MCC and Kappa values across the classifiers.

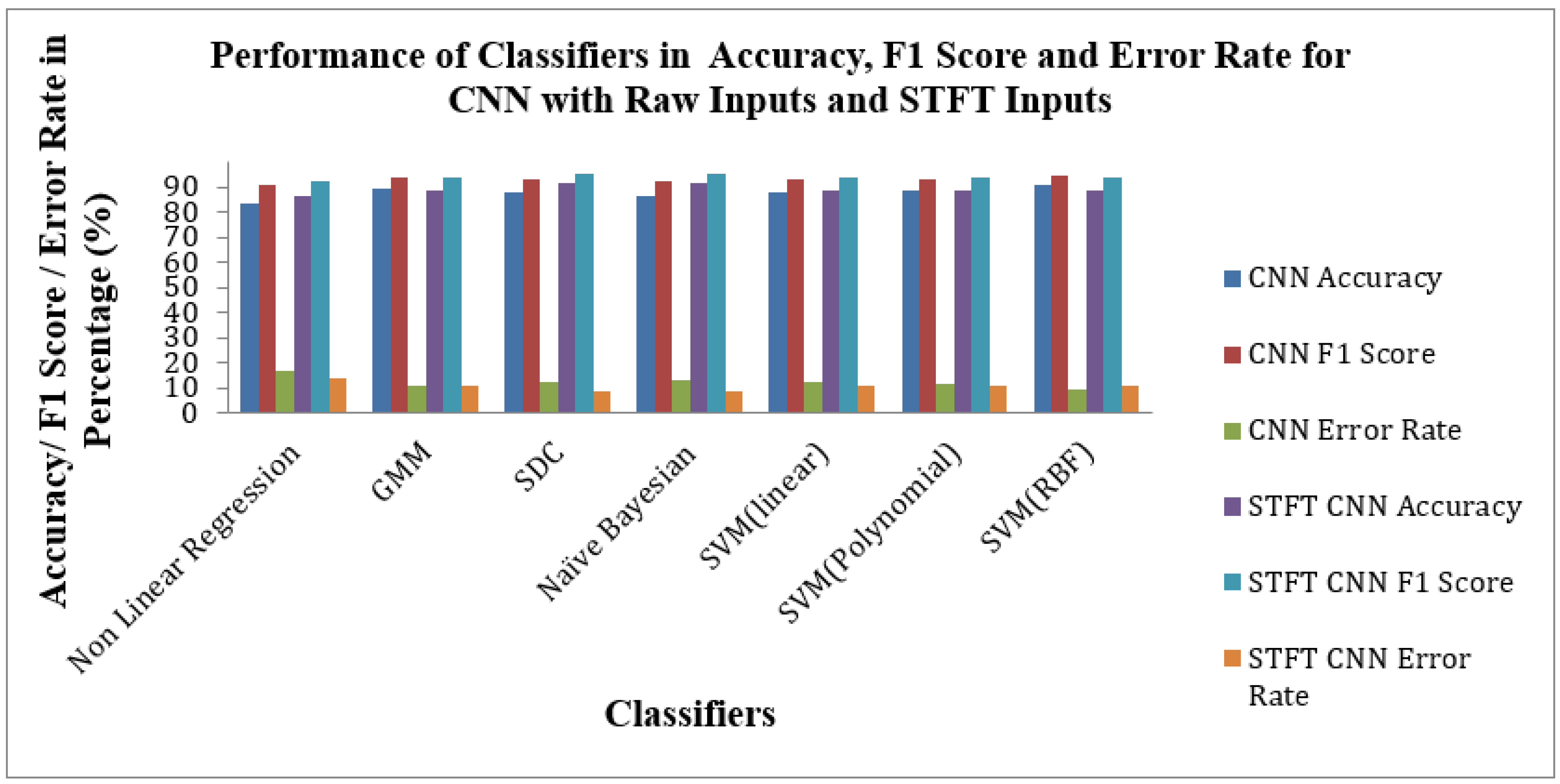

Figure 12 shows the Performance of Classifiers regarding Accuracy, F1 Score, and Error Rate parameters for raw and STFT inputs with the CNN method. As depicted by

Figure 12, the SVM(RBF) Classifier for raw input with the CNN method reached high accuracy, F1 Score and low Error Rates of 90.6%,94.56% and 9.39%, respectively. As displayed in

Figure 12 that the SD Classifier is attained at the high accuracy value, F1 Score and low Error Rate of 91.66%, 95.08% and 8.33%, respectively, for STFT inputs with the CNN method. The yardstick effect of STFT inputs in CNN methods maketh the improvement in the accuracy, F1 Score and Error Rate values across the classifiers.

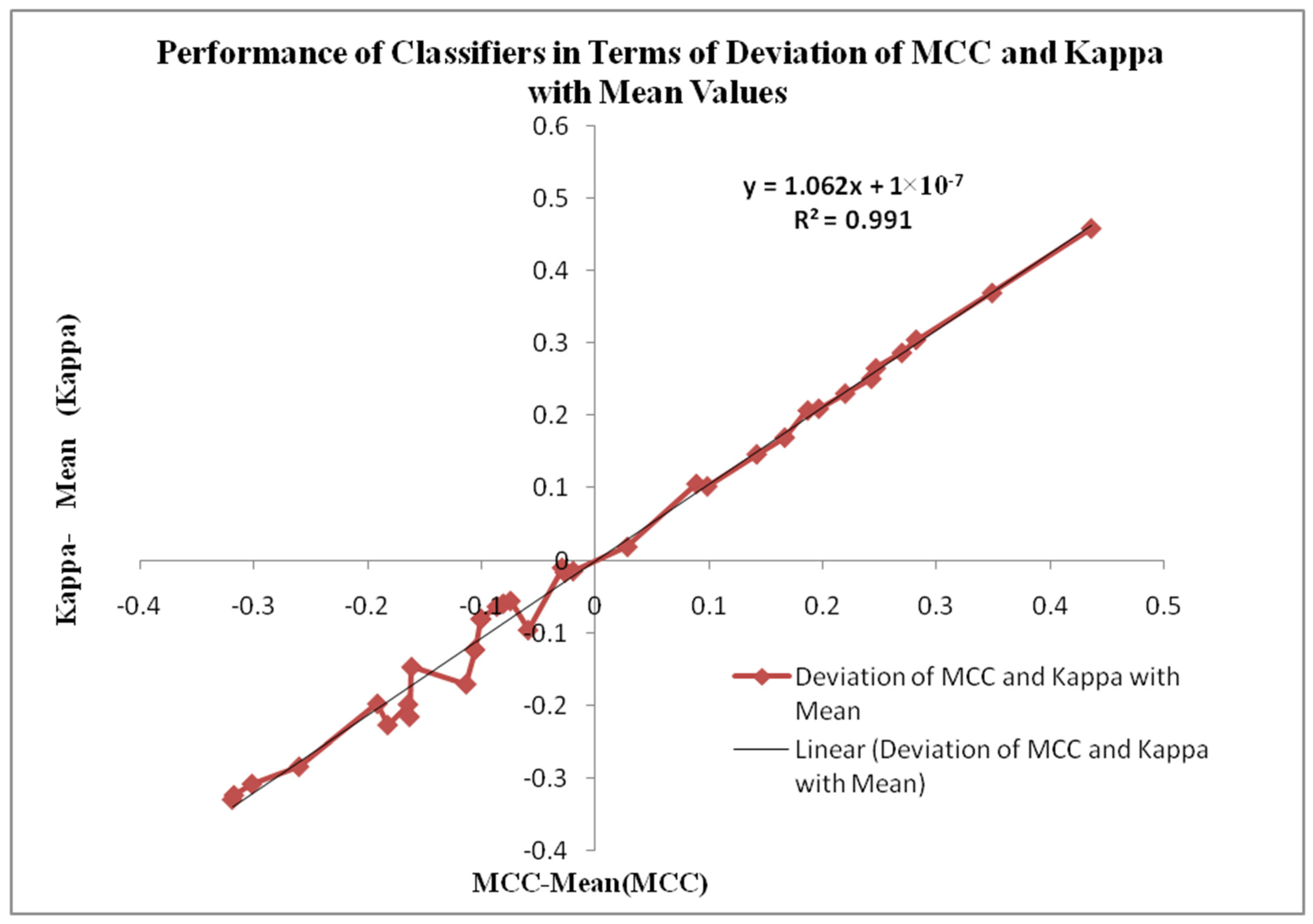

Figure 13 displays the Performance of Classifiers in terms of Deviation of MCC and Kappa Parameters with mean values. The MCC and Kappa are the benchmark parameters that indicate the classifiers’ outcomes for different inputs. As in this research, there are two categories of inputs like raw microarray genes, STFT, STFT with PSO and Harmonic Search Feature selection, STFT with Scalogram method and finally, CNN methods are provided to the classifiers. The classifier’s performance is observed through the attained MCC and Kappa values for these inputs. The average MCC and Kappa values from the classifiers are 0.4681 and 0.44518, respectively. Now a methodology is devised to identify the deviation of MCC and Kappa values from their respective mean values to point out the classifier’s performance. It is also observed from

Figure 13 that the MCC and Kappa values placed in the third quadrant of the graph depict the nonlinear outcome of the classifiers with lower performance metrics. The MCC and Kappa values placed at the first quadrant show higher outcomes for classifiers with MCC and Kappa values more than the average value. This specifies the classifier’s performance is improved for the STFT inputs along with the PSO Feature selection method.

Figure 13 is also denoted by the curve fitting of the linear line with the following equation y = 1.062x + 1 × 10

−7 and R

2 = 0.991 values.

Computational Complexity

The computational complexity analyzes the efficiency of the machine learning methods in terms of time and space complexity. In this research, the Big O notation represents the computational complexity of the dimensionality reduction, feature selection and classification methodologies. The computational complexity is represented by O (n), where n is the number of features. The O(log2n) means that the computational complexity increases ‘log2n’ times for any increase in ‘n’. The classifiers considered in this research are integrated with either dimensionality reduction techniques, feature selection techniques, or a combination of both. Therefore, the computational complexity also is a combination of these hybrid methods. Overall, the choice of methodology should consider the trade-off between computational complexity and the desired level of performance in classification methodology.

Table 13 shows the computational complexity of the classifiers for the STFT dimensionality reduction method with and without feature selection methods, along with CNN Models.

As noted from

Table 13, the SVM (Linear), NBC, Nonlinear Regression, Softmax Discriminant Classifiers, and SVM (RBF) classifiers are at the level of low computational complexity for the STFT DR method without feature selection methods. GMM Classifier attains moderate complexity of O (2n

4 log2n) without feature selection methods and achieved good accuracy of 80.66%. For PSO and Harmonic search feature selection methods, the SVM (RBF) classifier with the computational complexity of O (2n

5 log4n) placed at high accuracy of 94.47% and 90.05%, respectively. GMM and SVM (polynomial) classifiers are denoted by the high computational complexity of O (2n

7 log2n) for the PSO and Harmonic Search Feature selection methods poorly performed in terms of accuracy score. These poor performances of classifiers are due to the presence of outlier problems in the STFT features. In order to enhance the performance of the classifiers, the STFT Scalogram features and CNN methods are included in this research. The SVM (RBF) Classifier attained good performance with moderate complexity of O (2n

3 log4n) and O (2n

3 log8n) for the methods, respectively. Next, through

Table 14, we compare the accuracy of our research work with various methodologies across diverse microarray gene datasets employed for Adenocarcinoma and Mesothelioma lung cancer.

Table 15 compares previous works involving Lung and other types of cancer classification from microarray gene datasets with the reference from Fathi et al. [

43] and Karmokar et al. [

48], which contains performance metrics, namely Accuracy, Precision, Recall and F1 Score. Fathi et al. [

43] utilized the PCC–DTCV (Pearson correlation–Decision Tree Cross Validation) model for lung cancer classification and reported accuracy in ranges from 93 to 95. The model involves a tuning parameter to maximize the depth of the decision tree classifier using grid search and Pearson correlation for the feature selection method. The grid search model is employed for dimensionality reduction and selects the most informative genes which involve higher computational complexity. In our research, we have utilized the gene expression intensity values incorporated with dimensionality reduction and classification. Unlike Fathi et al. [

43], our methodology does not select prominent genes that increase computational overhead. Therefore, our method involves less computational complexity but is at an accuracy trade-off. Comparison with other types of cancer, namely prostate, breast, and leukemia, is also recorded.

Overall, this research evaluates and explores machine learning and CNN classifiers for detecting lung cancer from microarray gene expressions. The research investigation shows that although CNNs are good at learning features, due to their black-box nature, the CNNs fail to analyze the physical meaning behind forecasting and categorizing gene expression patterns from the learned features. Further, the LH2 dataset used for this research contains data imbalance, and many times during the analysis, CNNs were prone to overfitting and underfitting issues. The measures like the feature extraction step and replacement of the softmax layer with suitable classifiers were adopted to rectify these issues. However, the trade-off is that computational complexity will be increased while adding multiple stages to the CNN classification methodology.

Our research shows that microarray gene expression-based data are instrumental for disease classification. It contributes significantly to understanding gene expression patterns and their association with various biological processes and diseases. However, microarray genes have some limitations over general approaches like mRNA-Seq. The microarray gene expressions are selected from limited areas with specific genes or tissue regions. Therefore, the data generated may not capture the entire transcriptome and provide comprehensive information about gene expression. Also, microarray experiments may be prone to background noise and cross-hybridization problems due to non-specific binding, resulting in decreased sensitivity and specificity. Microarray experiments are expensive and sometimes have limited throughput compared to newer high-throughput sequencing technologies like mRNA-Seq.