StackDPP: Stacking-Based Explainable Classifier for Depression Prediction and Finding the Risk Factors among Clinicians

Abstract

1. Introduction

- Building an efficient stacking-based ensemble classifier that can diagnose the mental health stage of clinicians with higher accuracy.

- Finding the subset of features that are the most significant risk indicators for clinicians.

- Analyzing the most significant risk factors for the mental health of clinicians.

- Investigating whether a single group of attributes (PHQ-related, sociodemographic, or job-related features) or a combination of groups is sufficient to predict the mental health condition of clinicians.

2. Materials and Methods

2.1. Data Collection and Description

2.2. Data Preprocessing

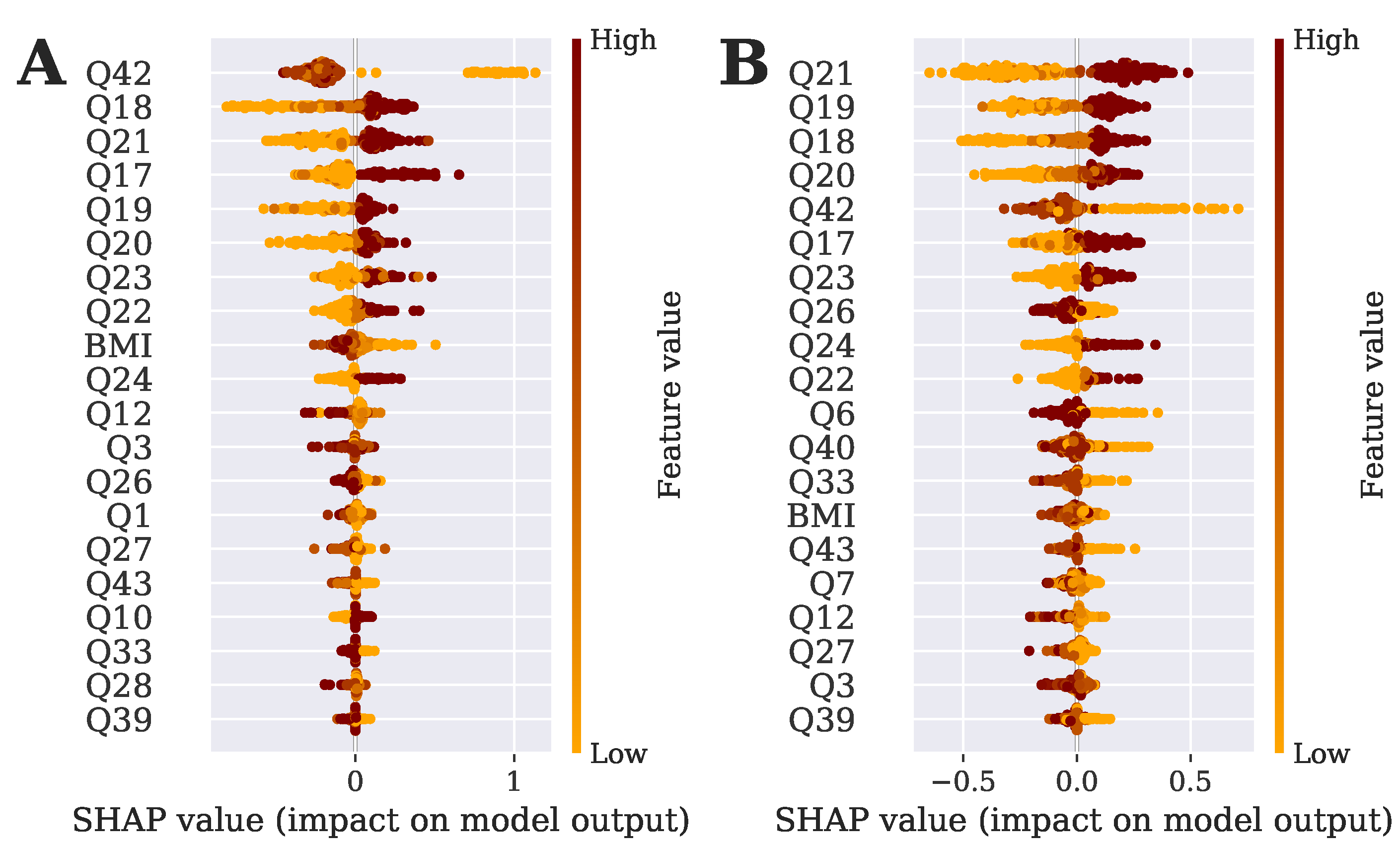

2.3. Model Interpretation for Feature Selection

2.4. Supervised Machine Learning Model

2.4.1. K Nearest Neighbor (KNN)

2.4.2. Decision Tree (DT)

2.4.3. Gradient Boosting (GB)

2.4.4. LightGBM (LGBM)

- Faster training speed and higher efficiency.

- Lower memory usage.

- Better accuracy.

- Support for parallel, distributed, and GPU learning.

- Capable of handling large-scale data.

2.4.5. Random Forest (RF)

2.4.6. Extra Tree Classifier (ETC)

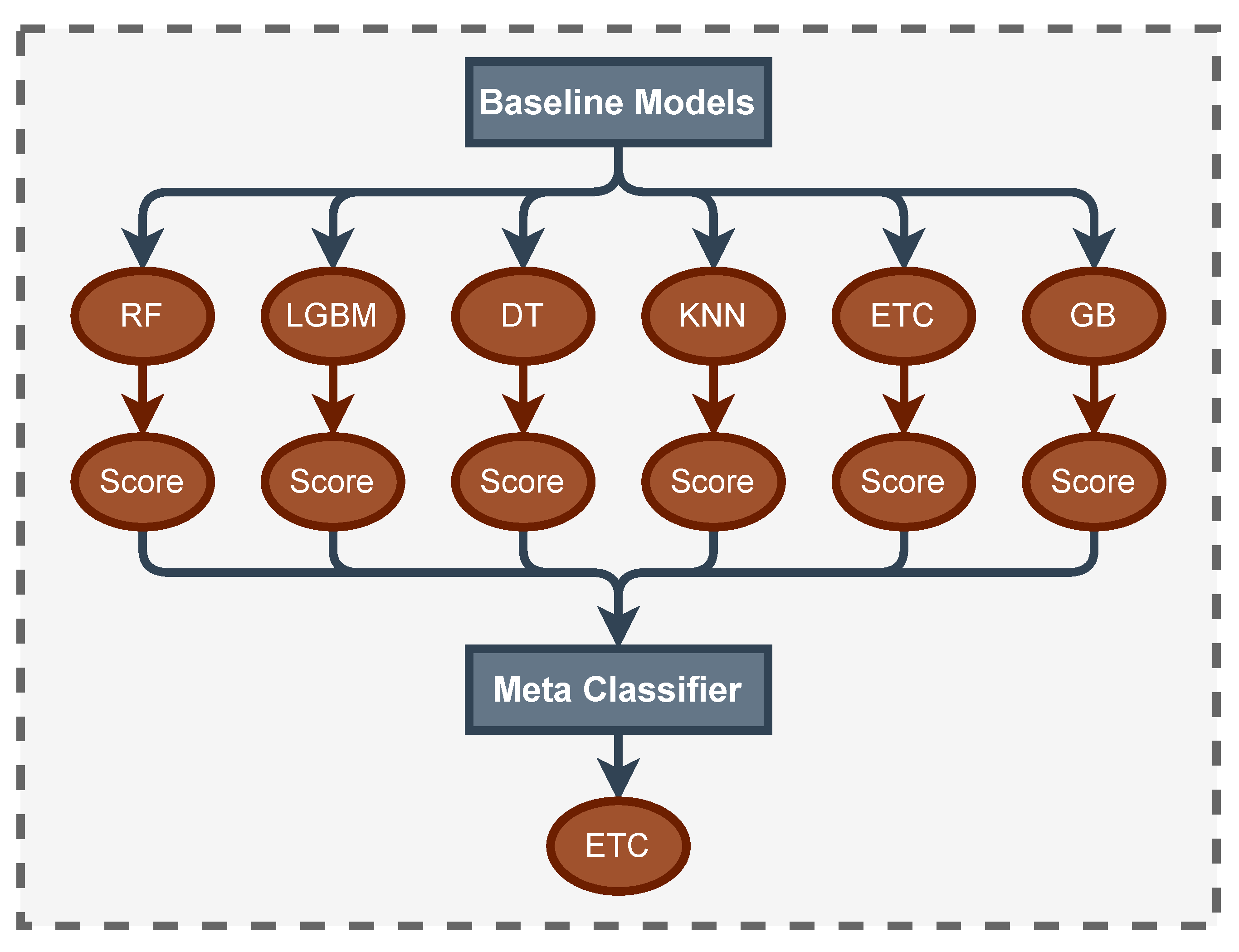

2.4.7. Stacking Classifier (StackDPP)

2.5. Performance Evaluation Metrics

3. Experimental Results Analysis

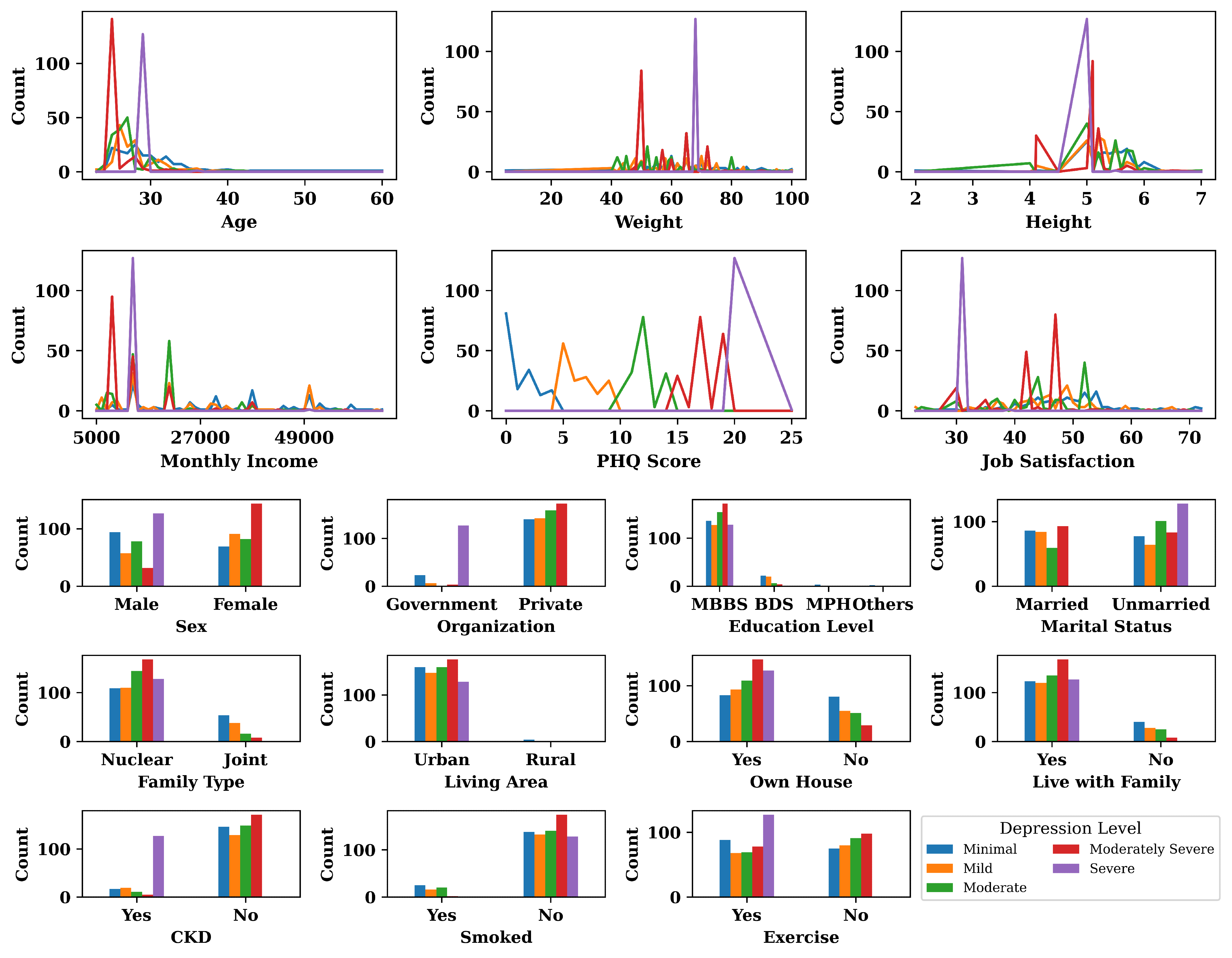

3.1. Result of Exploratory Data Analysis

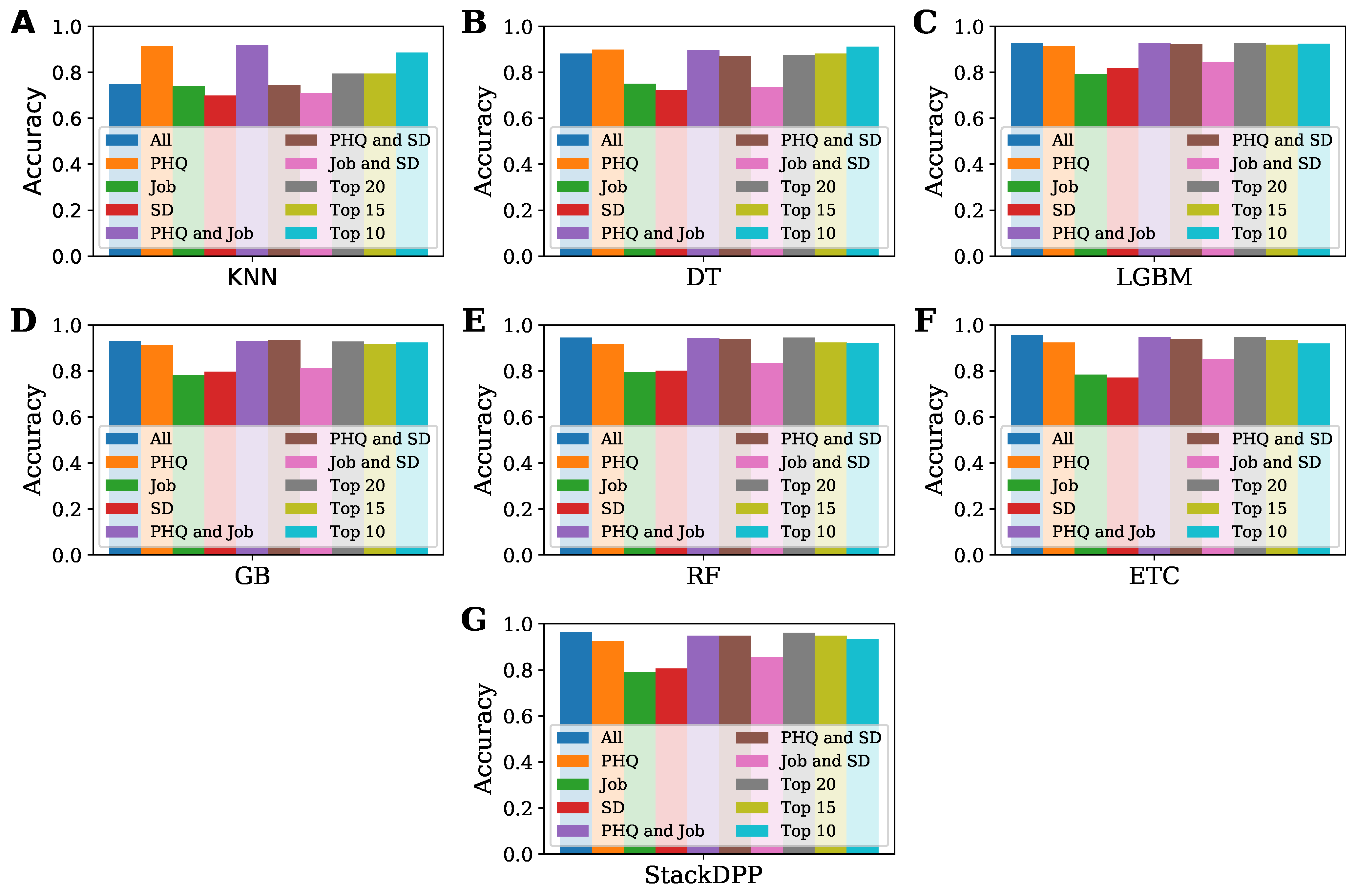

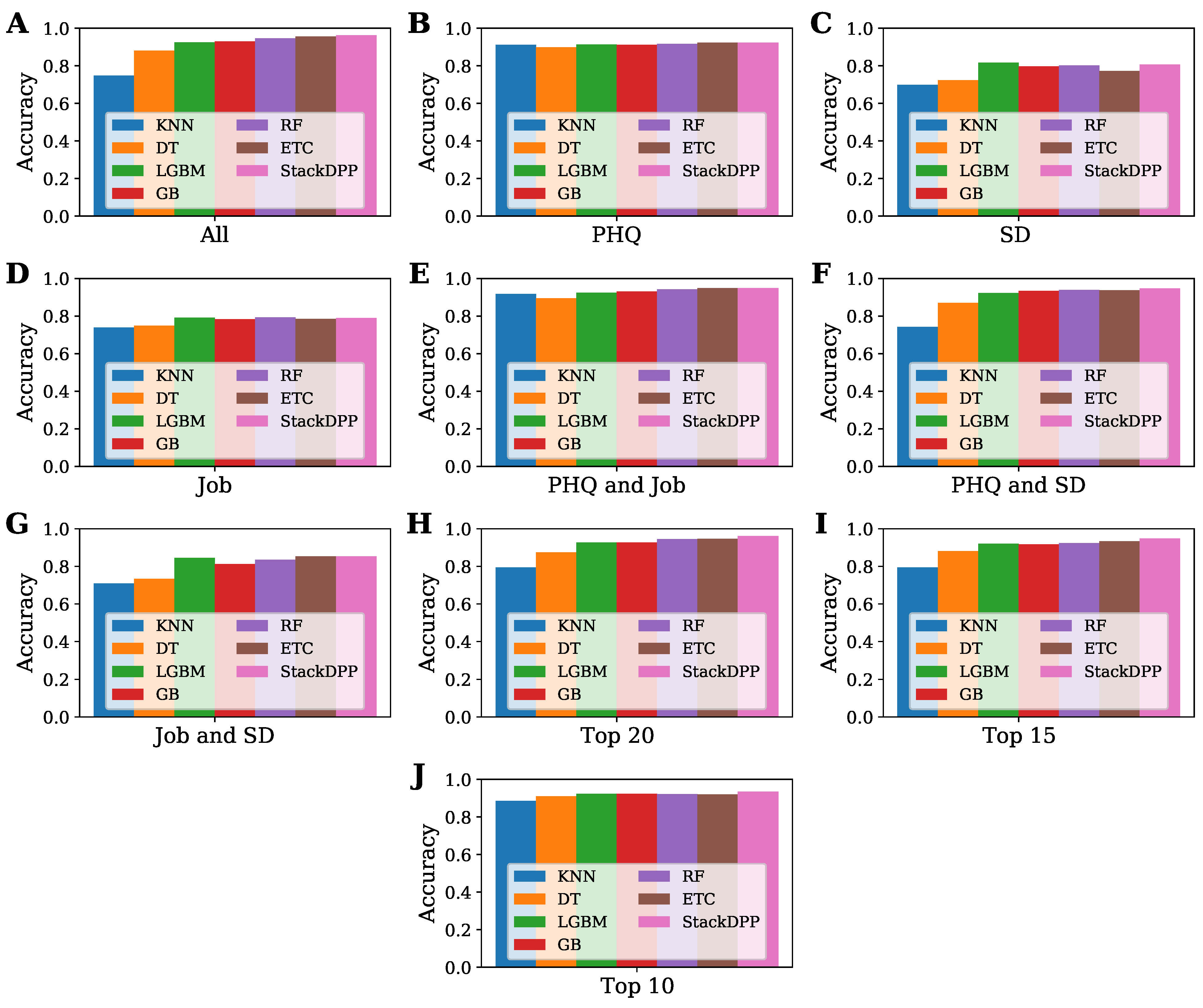

3.2. Result of Supervised Machine Learning

3.2.1. Performance Analysis for All the Features

3.2.2. Performance Analysis for PHQ-Related Features

3.2.3. Performance Analysis for Sociodemographic Features

3.2.4. Performance Analysis for Job-Related Features

3.2.5. Performance Analysis for PHQ and Job-Related Features

3.2.6. Performance Analysis for PHQ and Sociodemographic Features

3.2.7. Performance Analysis for Job and Sociodemographic Features

3.2.8. Performance Analysis for the Selected Features

3.2.9. Overall Performance Analysis of Machine Learning Models

3.2.10. Identification of Important Risk Factors for Mental Health

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Galderisi, S.; Heinz, A.; Kastrup, M.; Beezhold, J.; Sartorius, N. Toward a new definition of mental health. World Psychiatry 2015, 14, 231.

- Gray-Stanley, J.A.; Muramatsu, N. Work stress, burnout, and social and personal resources among direct care workers. Res. Dev. Disabil. 2011, 32, 1065–1074.

- Harvey, S.B.; Epstein, R.M.; Glozier, N.; Petrie, K.; Strudwick, J.; Gayed, A.; Dean, K.; Henderson, M. Mental illness and suicide among physicians. Lancet 2021, 398, 920–930.

- Available online: https://www.who.int/teams/mental-health-and-substance-use/data-research/suicide-data (accessed on 12 February 2022).

- Hawton, K.; Clements, A.; Sakarovitch, C.; Simkin, S.; Deeks, J.J. Suicide in doctors: A study of risk according to gender, seniority and specialty in medical practitioners in England and Wales, 1979–1995. J. Epidemiol. Community Health 2001, 55, 296–300.

- Sakib, N.; Akter, T.; Zohra, F.; Bhuiyan, A.I.; Mamun, M.A.; Griffiths, M.D. Fear of COVID-19 and depression: A comparative study among the general population and healthcare professionals during COVID-19 pandemic crisis in Bangladesh. Int. J. Ment. Health Addict. 2023, 21, 976–992.

- Available online: https://www.singlecare.com/blog/news/mental-health-survey/ (accessed on 12 February 2022).

- Brooks, S.K.; Chalder, T.; Gerada, C. Doctors vulnerable to psychological distress and addictions: Treatment from the Practitioner Health Programme. J. Ment. Health 2011, 20, 157–164.

- Gabbard, G.O. The role of compulsiveness in the normal physician. JAMA 1985, 254, 2926–2929.

- Carter, G.; Milner, A.; McGill, K.; Pirkis, J.; Kapur, N.; Spittal, M.J. Predicting suicidal behaviours using clinical instruments: Systematic review and meta-analysis of positive predictive values for risk scales. Br. J. Psychiatry 2017, 210, 387–395.

- Gerada, C. Doctors, suicide and mental illness. BJPsych Bull. 2018, 42, 165–168.

- Arusha, A.R.; Biswas, R.K. Prevalence of stress, anxiety and depression due to examination in Bangladeshi youths: A pilot study. Child. Youth Serv. Rev. 2020, 116, 105254.

- Tasnim, R.; Sujan, M.; Hossain, S.; Islam, M.; Ritu, A.H.; Siddique, M.; Bin, A.; Toma, T.Y.; Nowshin, R.; Hasan, A.; et al. Prevalence and correlates of anxiety and depression in frontline healthcare workers treating people with COVID-19 in Bangladesh. BMC Psychiatry 2021, 21, 14.

- Rahman, A.; Deeba, F.; Akhter, S.; Bashar, F.; Nomani, D.; Koot, J.; Koly, K.N.; Salah, F.B.; Haverlag, K.; Anwar, I. Mental health condition of physicians working frontline with COVID-19 patients in Bangladesh. BMC Psychiatry 2021, 21, 615.

- Kundu, S.; Bakchi, J.; Al Banna, M.H.; Sayeed, A.; Hasan, M.T.; Abid, M.T.; Ghosh, S.; Sarker, N.; Khan, M.S.I. Depressive symptoms associated with loneliness and physical activities among graduate university students in Bangladesh: Findings from a cross-sectional pilot study. Heliyon 2021, 7, e06401.

- Accidents, I.M.V. Congruencies in increased mortality rates, years of potential life lost, and causes of death among public mental health clients in eight states. Heart Dis. 2006, 3, A42.

- Khatun, M.F.; Parvin, M.F.; Rashid, M.M.U.; Alam, M.S.; Kamrunnahar, M.; Talukder, A.; Rahman Razu, S.; Ward, P.R.; Ali, M. Mental health of physicians during COVID-19 outbreak in Bangladesh: A web-based cross-sectional survey. Front. Public Health 2021, 9, 592058.

- Fahrenkopf, A.M.; Sectish, T.C.; Barger, L.K.; Sharek, P.J.; Lewin, D.; Chiang, V.W.; Edwards, S.; Wiedermann, B.L.; Landrigan, C.P. Rates of medication errors among depressed and burnt out residents: Prospective cohort study. BMJ 2008, 336, 488–491.

- Chang, K.H.; Chan, M.K.; Canny, J. Analyzethis: Unobtrusive mental health monitoring by voice. In CHI’11 Extended Abstracts on Human Factors in Computing Systems; ACM: New York, NY, USA, 2011; pp. 1951–1956.

- Frogner, J.I.; Noori, F.M.; Halvorsen, P.; Hicks, S.A.; Garcia-Ceja, E.; Torresen, J.; Riegler, M.A. One-dimensional convolutional neural networks on motor activity measurements in detection of depression. In Proceedings of the 4th International Workshop on Multimedia for Personal Health & Health Care, Nice, France, 21 October 2019; ACM: New York, NY, USA, 2019; pp. 9–15.

- Mitra, V.; Shriberg, E.; McLaren, M.; Kathol, A.; Richey, C.; Vergyri, D.; Graciarena, M. The SRI AVEC-2014 evaluation system. In Proceedings of the 4th International Workshop on Audio/Visual Emotion Challenge, Orlando, FL, USA, 7 November 2014; ACM: New York, NY, USA, 2014; pp. 93–101.

- Salekin, A.; Eberle, J.W.; Glenn, J.J.; Teachman, B.A.; Stankovic, J.A. A weakly supervised learning framework for detecting social anxiety and depression. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 1–26.

- Nguyen, T.; O’Dea, B.; Larsen, M.; Phung, D.; Venkatesh, S.; Christensen, H. Using linguistic and topic analysis to classify sub-groups of online depression communities. Multimed. Tools Appl. 2017, 76, 10653–10676.

- Fatima, I.; Mukhtar, H.; Ahmad, H.F.; Rajpoot, K. Analysis of user-generated content from online social communities to characterise and predict depression degree. J. Inf. Sci. 2018, 44, 683–695.

- Yazdavar, A.H.; Mahdavinejad, M.S.; Bajaj, G.; Romine, W.; Monadjemi, A.; Thirunarayan, K.; Sheth, A.; Pathak, J. Fusing visual, textual and connectivity clues for studying mental health. arXiv 2019, arXiv:1902.06843.

- Chen, X.; Sykora, M.D.; Jackson, T.W.; Elayan, S. What about mood swings: Identifying depression on twitter with temporal measures of emotions. In Proceedings of the Web Conference, Lyon, France, 23–27 April 2018; ACM: New York, NY, USA, 2018; pp. 1653–1660.

- Zhou, Z.; Luo, D.; Yang, B.X.; Liu, Z. Machine Learning-Based Prediction Models for Depression Symptoms among Chinese Healthcare Workers during the Early COVID-19 Outbreak in 2020: A Cross-Sectional Study. Front. Psychiatry 2022, 13, 876995.

- Salma, U.; Hasan, M.M. Relationship between job satisfaction and depression, anxiety and stress among the female nurses of Dhaka Medical College and Hospital, Bangladesh. Patient Care 2020, 20, 21.

- Kroenke, K.; Spitzer, R.L.; Williams, J.B. The PHQ-9: Validity of a brief depression severity measure. J. Gen. Intern. Med. 2001, 16, 606–613.

- Cameron, I.M.; Crawford, J.R.; Lawton, K.; Reid, I.C. Psychometric comparison of PHQ-9 and HADS for measuring depression severity in primary care. Br. J. Gen. Pract. 2008, 58, 32–36.

- Chang, E.; Cohen, J.; Koethe, B.; Smith, K.; Bir, A. Measuring job satisfaction among healthcare staff in the United States: A confirmatory factor analysis of the Satisfaction of Employees in Health Care (SEHC) survey. Int. J. Qual. Health Care 2017, 29, 262–268.

- Available online: https://www.euro.who.int/en/health-topics/disease-prevention/nutrition/a-healthy-lifestyle/body-mass-index-bmi (accessed on 23 February 2022).

- James, W.P.T.; Chunming, C.; Inoue, S. Appropriate Asian body mass indices? Obes. Rev. 2002, 3, 139.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; ACM: New York, NY, USA, 2016; pp. 1135–1144.

- Shapley, L.S. A Value for n-Person Games, Contributions to the Theory of Games; Princeton University Press: Princeton, NJ, USA, 1953; Volume 2, pp. 307–317.

- Lundberg, S.M.; Lee, S.I. Consistent feature attribution for tree ensembles. arXiv 2017, arXiv:1706.06060.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Taunk, K.; De, S.; Verma, S.; Swetapadma, A. A brief review of nearest neighbor algorithm for learning and classification. In Proceedings of the 2019 International Conference on Intelligent Computing and Control Systems (ICCS), Madurai, India, 15–17 May 2019; IEEE: Toulouse, France, 2019; pp. 1255–1260.

- Uddin, S.; Khan, A.; Hossain, M.E.; Moni, M.A. Comparing different supervised machine learning algorithms for disease prediction. BMC Med. Inform. Decis. Mak. 2019, 19, 281.

- Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106.

- Cruz, J.A.; Wishart, D.S. Applications of machine learning in cancer prediction and prognosis. Cancer Inform. 2006, 2, 117693510600200030.

- Rahman, S.; Irfan, M.; Raza, M.; Moyeezullah Ghori, K.; Yaqoob, S.; Awais, M. Performance analysis of boosting classifiers in recognizing activities of daily living. Int. J. Environ. Res. Public Health 2020, 17, 1082.

- Friedman, J.H. Stochastic gradient boosting. Comput. Stat. Data Anal. 2002, 38, 367–378.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Sharaff, A.; Gupta, H. Extra-tree classifier with metaheuristics approach for email classification. In Advances in Computer Communication and Computational Sciences; Springer: Singapore, 2019; pp. 189–197.

- Shafique, R.; Mehmood, A.; Choi, G.S. Cardiovascular disease prediction system using extra trees classifier. Res. Sq. 2019.

- Charoenkwan, P.; Nantasenamat, C.; Hasan, M.M.; Moni, M.A.; Manavalan, B.; Shoombuatong, W. StackDPPIV: A novel computational approach for accurate prediction of dipeptidyl peptidase IV (DPP-IV) inhibitory peptides. Methods 2022, 204, 189–198.

- Xie, R.; Li, J.; Wang, J.; Dai, W.; Leier, A.; Marquez-Lago, T.T.; Akutsu, T.; Lithgow, T.; Song, J.; Zhang, Y. DeepVF: A deep learning-based hybrid framework for identifying virulence factors using the stacking strategy. Briefings Bioinform. 2021, 22, bbaa125.

- Ali, M.M.; Paul, B.K.; Ahmed, K.; Bui, F.M.; Quinn, J.M.; Moni, M.A. Heart disease prediction using supervised machine learning algorithms: Performance analysis and comparison. Comput. Biol. Med. 2021, 136, 104672.

- Ali, M.M.; Ahmed, K.; Bui, F.M.; Paul, B.K.; Ibrahim, S.M.; Quinn, J.M.; Moni, M.A. Machine learning-based statistical analysis for early stage detection of cervical cancer. Comput. Biol. Med. 2021, 139, 104985.

- Ali, M.M.; Al-Doori, V.S.; Mirzah, N.; Hemu, A.A.; Mahmud, I.; Azam, S.; Al-tabatabaie, K.F.; Ahmed, K.; Bui, F.M.; Moni, M.A. A machine learning approach for risk factors analysis and survival prediction of Heart Failure patients. Healthc. Anal. 2023, 3, 100182.

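| Evaluation Criteria | Explanation | Formula |
|---|---|---|
| Accuracy | Accuracy is the ratio of correctly classified instances to all instances [48]. | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| Precision | Precision is a useful assessment parameter when we need to be highly confident in a positive prediction. It is defined as the ratio of True Positives to all predicted Positives [49]. | $\frac{TP}{TP + FP}$ |
| Recall | Recall measures how well the model identifies the actual Positives; it is the ratio of True Positives to all actual Positives [50]. | $\frac{TP}{TP + FN}$ |
| F-Measure | The F1 score is the harmonic mean of Precision and Recall [51]. | $\frac{2 \times Precision \times Recall}{Precision + Recall}$ |
| Kappa Statistics | It measures the agreement between predicted and actual labels, comparing the observed agreement $p_o$ with the agreement $p_e$ expected by chance [51]. | $\frac{p_o - p_e}{1 - p_e}$ |
| MCC | It is essentially a correlation coefficient ranging from $-1$ to $+1$ [50]. | $\frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$ |

Here TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.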
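The six criteria in the table above can be sketched directly from confusion-matrix counts. The following is a minimal illustration for the binary case, using the standard textbook definitions; the function name `binary_metrics` and the example counts are hypothetical and are not taken from the study.

```python
import math


def binary_metrics(tp, tn, fp, fn):
    """Compute six evaluation metrics from binary confusion-matrix counts."""
    n = tp + tn + fp + fn
    accuracy = (tp + tn) / n
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)

    # Cohen's kappa: observed agreement corrected for chance agreement.
    p_o = accuracy
    p_e = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / (n * n)
    kappa = (p_o - p_e) / (1 - p_e) if p_e != 1 else 0.0

    # Matthews correlation coefficient, bounded in [-1, +1].
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0

    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "kappa": kappa,
        "mcc": mcc,
    }


# Hypothetical counts, for illustration only.
metrics = binary_metrics(tp=40, tn=45, fp=5, fn=10)
```

Kappa and MCC both correct for chance agreement, which is why they sit below accuracy whenever some of the correct predictions could have arisen by chance.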

| Classifiers | Accuracy | MCC | Kappa | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| KNN | 0.748387 | 0.686697 | 0.684685 | 0.748387 | 0.748387 | 0.748387 |
| DT | 0.881290 | 0.851288 | 0.851226 | 0.881290 | 0.881290 | 0.881290 |
| LGBM | 0.92516 | 0.906331 | 0.906206 | 0.925161 | 0.925161 | 0.925161 |
| GB | 0.930323 | 0.912740 | 0.912674 | 0.930323 | 0.930323 | 0.930323 |
| RF | 0.945806 | 0.932326 | 0.932116 | 0.945806 | 0.945806 | 0.945806 |
| ETC | 0.956129 | 0.945075 | 0.945012 | 0.956129 | 0.956129 | 0.956129 |
| StackDPP | 0.962581 | 0.953152 | 0.953087 | 0.962581 | 0.962581 | 0.962581 |
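In the table above, the Accuracy, Precision, Recall, and F1 columns agree in every row (up to rounding). This pattern is what micro-averaging produces in a single-label multiclass setting, where every misclassified sample counts once as a false positive (for the predicted class) and once as a false negative (for the true class). The source does not state which averaging mode was used, so the following is only a sketch under that assumption; the function name `micro_scores` and the label lists are hypothetical.

```python
def micro_scores(y_true, y_pred):
    """Return (accuracy, precision, recall, f1) with micro-averaging."""
    labels = sorted(set(y_true) | set(y_pred))
    tp = fp = fn = 0
    # Pool true positives, false positives, and false negatives over classes.
    for c in labels:
        for t, p in zip(y_true, y_pred):
            if p == c and t == c:
                tp += 1
            elif p == c and t != c:
                fp += 1
            elif t == c and p != c:
                fn += 1
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return accuracy, precision, recall, f1


# Hypothetical labels, for illustration only.
y_true = [0, 1, 2, 3, 4, 0, 1, 2]
y_pred = [0, 1, 2, 3, 0, 0, 2, 2]
scores = micro_scores(y_true, y_pred)
```

Under micro-averaging the four values coincide, so MCC and Kappa are the columns that carry additional information when comparing classifiers.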

| Classifiers | Accuracy | MCC | Kappa | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| KNN | 0.912258 | 0.890218 | 0.890033 | 0.912258 | 0.912258 | 0.912258 |
| DT | 0.898065 | 0.87239 | 0.872206 | 0.898065 | 0.898065 | 0.898065 |
| LGBM | 0.913548 | 0.891663 | 0.89164 | 0.913548 | 0.913548 | 0.913548 |
| GB | 0.912258 | 0.890166 | 0.89006 | 0.912258 | 0.912258 | 0.912258 |
| RF | 0.917419 | 0.896591 | 0.896487 | 0.917419 | 0.917419 | 0.917419 |
| ETC | 0.923871 | 0.904603 | 0.904571 | 0.923871 | 0.923871 | 0.923871 |
| StackDPP | 0.923871 | 0.904592 | 0.904579 | 0.923871 | 0.923871 | 0.923871 |

| Classifiers | Accuracy | MCC | Kappa | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| KNN | 0.699355 | 0.624561 | 0.623198 | 0.699355 | 0.699355 | 0.699355 |
| DT | 0.722581 | 0.652358 | 0.652291 | 0.722581 | 0.722581 | 0.722581 |
| LGBM | 0.816774 | 0.771119 | 0.770388 | 0.816774 | 0.816774 | 0.816774 |
| GB | 0.797419 | 0.74657 | 0.746212 | 0.797419 | 0.797419 | 0.797419 |
| RF | 0.80129 | 0.751976 | 0.750981 | 0.80129 | 0.80129 | 0.80129 |
| ETC | 0.771613 | 0.714728 | 0.713827 | 0.771613 | 0.771613 | 0.771613 |
| StackDPP | 0.806452 | 0.761496 | 0.757392 | 0.806452 | 0.806452 | 0.806452 |

| Classifiers | Accuracy | MCC | Kappa | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| KNN | 0.739355 | 0.676446 | 0.673519 | 0.739355 | 0.739355 | 0.739355 |
| DT | 0.749677 | 0.686364 | 0.686093 | 0.749677 | 0.749677 | 0.749677 |
| LGBM | 0.792258 | 0.739725 | 0.739466 | 0.792258 | 0.792258 | 0.792258 |
| GB | 0.783226 | 0.728512 | 0.728228 | 0.783226 | 0.783226 | 0.783226 |
| RF | 0.793548 | 0.742098 | 0.741251 | 0.793548 | 0.793548 | 0.793548 |
| ETC | 0.784516 | 0.730979 | 0.73007 | 0.784516 | 0.784516 | 0.784516 |
| StackDPP | 0.789677 | 0.73649 | 0.736337 | 0.789677 | 0.789677 | 0.789677 |

| Classifiers | Accuracy | MCC | Kappa | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| KNN | 0.917419 | 0.896684 | 0.896503 | 0.917419 | 0.917419 | 0.917419 |
| DT | 0.895484 | 0.869003 | 0.868998 | 0.895484 | 0.895484 | 0.895484 |
| LGBM | 0.925161 | 0.906401 | 0.906248 | 0.925161 | 0.925161 | 0.925161 |
| GB | 0.931613 | 0.914344 | 0.9143 | 0.931613 | 0.931613 | 0.931613 |
| RF | 0.943226 | 0.929018 | 0.928859 | 0.943226 | 0.943226 | 0.943226 |
| ETC | 0.948387 | 0.935689 | 0.93534 | 0.948387 | 0.948387 | 0.948387 |
| StackDPP | 0.948387 | 0.935327 | 0.935315 | 0.948387 | 0.948387 | 0.948387 |

| Classifiers | Accuracy | MCC | Kappa | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| KNN | 0.743226 | 0.681195 | 0.678136 | 0.743226 | 0.743226 | 0.743226 |
| DT | 0.870968 | 0.838398 | 0.838313 | 0.870968 | 0.870968 | 0.870968 |
| LGBM | 0.922581 | 0.903034 | 0.902983 | 0.922581 | 0.922581 | 0.922581 |
| GB | 0.934194 | 0.917665 | 0.917557 | 0.934194 | 0.934194 | 0.934194 |
| RF | 0.939355 | 0.924123 | 0.923994 | 0.939355 | 0.939355 | 0.939355 |
| ETC | 0.938065 | 0.922468 | 0.922383 | 0.938065 | 0.938065 | 0.938065 |
| StackDPP | 0.947097 | 0.933839 | 0.93367 | 0.947097 | 0.947097 | 0.947097 |

| Classifiers | Accuracy | MCC | Kappa | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| KNN | 0.709677 | 0.637304 | 0.635947 | 0.709677 | 0.709677 | 0.709677 |
| DT | 0.734194 | 0.667581 | 0.667125 | 0.734194 | 0.734194 | 0.734194 |
| LGBM | 0.845161 | 0.806528 | 0.805889 | 0.845161 | 0.845161 | 0.845161 |
| GB | 0.811613 | 0.764333 | 0.763889 | 0.811613 | 0.811613 | 0.811613 |
| RF | 0.834839 | 0.793837 | 0.793101 | 0.834839 | 0.834839 | 0.834839 |
| ETC | 0.852903 | 0.816465 | 0.815654 | 0.852903 | 0.852903 | 0.852903 |
| StackDPP | 0.852903 | 0.821431 | 0.815536 | 0.852903 | 0.852903 | 0.852903 |

| Number of Selected Features | Classifiers | Accuracy | MCC | Kappa | Precision | Recall | F1 |
|---|---|---|---|---|---|---|---|
| Top 20 | KNN | 0.794839 | 0.744718 | 0.742895 | 0.794839 | 0.794839 | 0.794839 |
| | DT | 0.874839 | 0.843509 | 0.843148 | 0.874839 | 0.874839 | 0.874839 |
| | LGBM | 0.926452 | 0.907929 | 0.907851 | 0.926452 | 0.926452 | 0.926452 |
| | GB | 0.927742 | 0.90952 | 0.909452 | 0.927742 | 0.927742 | 0.927742 |
| | RF | 0.945806 | 0.93226 | 0.9321 | 0.945806 | 0.945806 | 0.945806 |
| | ETC | 0.947097 | 0.933922 | 0.933709 | 0.947097 | 0.947097 | 0.947097 |
| | StackDPP | 0.96129 | 0.951558 | 0.951472 | 0.96129 | 0.96129 | 0.96129 |
| Top 15 | KNN | 0.794839 | 0.744524 | 0.742907 | 0.794839 | 0.794839 | 0.794839 |
| | DT | 0.88129 | 0.851396 | 0.851252 | 0.88129 | 0.88129 | 0.88129 |
| | LGBM | 0.92 | 0.89979 | 0.899739 | 0.92 | 0.92 | 0.92 |
| | GB | 0.917419 | 0.89674 | 0.896538 | 0.917419 | 0.917419 | 0.917419 |
| | RF | 0.923871 | 0.904849 | 0.904619 | 0.923871 | 0.923871 | 0.923871 |
| | ETC | 0.934194 | 0.917771 | 0.917547 | 0.934194 | 0.934194 | 0.934194 |
| | StackDPP | 0.948387 | 0.9354 | 0.935281 | 0.948387 | 0.948387 | 0.948387 |
| Top 10 | KNN | 0.886452 | 0.858205 | 0.857725 | 0.886452 | 0.886452 | 0.886452 |
| | DT | 0.910968 | 0.888464 | 0.888414 | 0.910968 | 0.910968 | 0.910968 |
| | LGBM | 0.923871 | 0.904685 | 0.904613 | 0.923871 | 0.923871 | 0.923871 |
| | GB | 0.923871 | 0.904748 | 0.904591 | 0.923871 | 0.923871 | 0.923871 |
| | RF | 0.92129 | 0.901525 | 0.90138 | 0.92129 | 0.92129 | 0.92129 |
| | ETC | 0.92 | 0.899887 | 0.899754 | 0.92 | 0.92 | 0.92 |
| | StackDPP | 0.934194 | 0.917578 | 0.917509 | 0.934194 | 0.934194 | 0.934194 |
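The Top 20, Top 15, and Top 10 subsets in the table above presuppose some ranking of the features. The lines here do not describe the exact procedure, so the following is only a sketch under the assumption that features are ranked by mean absolute attribution (a common convention with SHAP-style values). The function name `top_k_features`, the feature names, and the attribution values are all hypothetical.

```python
def top_k_features(attributions, feature_names, k):
    """Rank features by mean absolute attribution and keep the top k.

    attributions: list of rows, one per sample, each holding one
    attribution value per feature (assumed precomputed elsewhere).
    """
    n_rows = len(attributions)
    mean_abs = [
        sum(abs(row[j]) for row in attributions) / n_rows
        for j in range(len(feature_names))
    ]
    ranked = sorted(zip(feature_names, mean_abs),
                    key=lambda pair: pair[1], reverse=True)
    return [name for name, _ in ranked[:k]]


# Hypothetical feature names and attribution values, for illustration only.
names = ["phq_1", "age", "shift_hours"]
values = [[0.5, -0.1, 0.2], [-0.7, 0.05, 0.3]]
selected = top_k_features(values, names, k=2)
```

Taking the absolute value before averaging keeps features whose contributions are large but of mixed sign from cancelling out in the ranking.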

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Al-Zahrani, F.A.; Abdulrazak, L.F.; Ali, M.M.; Islam, M.N.; Ahmed, K. StackDPP: Stacking-Based Explainable Classifier for Depression Prediction and Finding the Risk Factors among Clinicians. Bioengineering 2023, 10, 858. https://doi.org/10.3390/bioengineering10070858
