Abstract

Diabetic retinopathy is one of the most significant retinal diseases that can lead to blindness, so a prompt diagnosis is critical. Manual screening can result in misdiagnosis due to human error and limited human capability. In such cases, deep learning-based automated diagnosis could aid early detection and treatment. In deep learning-based analysis, either the original images or the segmented blood vessels are typically used for diagnosis; however, it is still unclear which approach is superior. In this study, two deep learning models (Inception v3 and DenseNet-121) were compared on two datasets: the original colored fundus images and the corresponding segmented blood-vessel images. The accuracy on original images was 0.8 or higher for both Inception v3 and DenseNet-121, whereas the segmented retinal blood vessels yielded an accuracy of just over 0.6 with both models, indicating that the segmented vessels add little utility to deep learning-based analysis. The findings show that the original colored images are more informative for diagnosing retinopathy than the extracted retinal blood vessels.

1. Introduction

Diabetic retinopathy (DR) is a growing burden on the modern-day population: an estimated 463 million people worldwide were affected by diabetes mellitus (DM) in 2019, and this number is projected to reach 700 million by 2045. According to the International Diabetes Federation (IDF), DR is a prevalent complication of DM and one of the major causes of blindness in the working-age population [1]. Without a precise diagnosis, DR may be left untreated into its later stages, where it can cause blindness; DR is responsible for 5 percent of global blindness, with an estimated 50–65 cases of blindness for every 100,000 [2].

Risk factors involved in the biological and pathological processes of diabetic retinopathy, such as blood pressure, diabetes duration, glucose level, and cholesterol level, are important determinants of the development of this disease, although the progression of DR cannot be determined from them alone [3]. Patients with either poor or strict glycemic control can deteriorate suddenly, and the nature of the retinal lesions allows different phenotypes of DR progression to be identified.

A comprehensive examination is required for the proper evaluation of this disease. Nearly all patients with type 1 diabetes and approximately 60% of patients with type 2 diabetes will develop some degree of DR within 20 years of diagnosis [4]. Regular screening is therefore essential for detecting the disease at an early stage.

Consequently, a precise diagnosis of DR is a prerequisite for selecting an appropriate treatment, as DR treatment depends largely on disease severity. Deep learning-based image processing can improve DR classification through precise recognition of severity, which supports accurate prediction of patient morbidity, a more reliable diagnosis, and, hence, the design of suitable treatment plans. Image processing has been widely applied to DR classification using retinal fundus images collected with fundus cameras. Techniques including image enhancement, fusion, morphology identification, and image segmentation help medical physicians extract additional information from medical image data [5]. Several approaches to automated DR classification using deep learning (DL) have been proposed, including categorizations based on lesions and on blood vessels [6], as detailed in the following sections.

1.1. State of the Art on Approaching DR Detection with Deep Learning Techniques

Diabetic retinopathy has been studied extensively, and multiple techniques have proven effective for DR detection. This section reviews deep learning and neural network approaches for the multiclass classification of diabetic retinopathy. Abbas et al. [7] developed a novel automated recognition system for the five severity levels of diabetic retinopathy that did not require pre- or post-processing of fundus images. A semi-supervised deep learning technique was used together with fine-tuning steps, achieving a sensitivity of 92.18% and a specificity of 94.50%.

In another study, diabetic retinopathy detection was carried out using fundus images from the EyePACS-1 dataset, containing approximately 9963 images collected from 4997 patients, and the Messidor-2 dataset, containing 1748 images acquired from 874 patients. For detecting referable diabetic retinopathy (RDR) at a high-specificity operating cut point, the sensitivity and specificity were 90.3% and 98.1%, respectively, on EyePACS-1, and 87.0% and 98.5%, respectively, on Messidor-2 [8]. The architecture used was Inception v3 [9], which showed impressively high sensitivity and specificity. Refs. [10,11] addressed the scarcity of annotated training data and performed DR severity grading with a quadrant-based approach, yielding 93.33% accuracy. The model used was Inception v3, trained with transfer learning on a subsample of a Kaggle diabetic retinopathy dataset and tested for accuracy on another subset of the data. In [12], detection was moved onto different platforms, such as smartphones and smartwatches. Deploying automated DR detection on such ubiquitous platforms provides cost-effective healthcare and a frictionless healthcare system. The convolutional neural network (CNN) model used there was based on Inception and also served as an ensemble of classifiers using a binary decision-tree-based method. Goncalves et al. compared the agreement between human graders and different machine learning models; regardless of the dataset, transfer learning performed well in terms of agreement across different CNNs.

A comparison has also been undertaken between traditional and CNN-based approaches, in which the Inception v3 model performed best, reaching an accuracy of 89% on the EyePACS dataset [13]. In another study, fundus images were classified into average-to-extreme conditions versus non-proliferative DR using a backpropagation neural network (BPNN) [14]. Table 1 summarizes multiple deep learning approaches for DR detection and classification on various datasets of retinal fundus images.

Table 1.

Representation of traditional deep learning approaches for DR detection and classification over various datasets.

1.2. Research Gap

Patients at higher risk, in the proliferative group, require prompt diagnosis and treatment, which demands a highly precise and appropriate diagnostic technique; in short, there is an urgent need for an efficient, self-contained, and feasible approach to retinopathy identification that provides reliable results. Pre-trained CNNs and other deep learning techniques have been used to classify multiple diseases in the past. With this in mind, a well-curated dataset and deep transfer learning are required to improve classification accuracy. Otherwise, datasets of low-resolution DR images, such as those mentioned in previous sections on which pre-trained and traditional approaches have been applied, may lead to erroneous classification and misleading accuracy. Researchers have previously used both original-colored and segmented images for deep learning-based DR diagnosis. Nonetheless, which approach is clinically more effective remains unclear. In short, a mechanism for assessing the classification performance of modern deep learning approaches on relevant datasets is needed.

1.3. Selection of the Original Dataset and Derivation of the Segmented One for Our Study

For DR classification, the size and quality of the dataset largely determine the classification accuracy, because deep learning algorithms require a large amount of training data to achieve high accuracy. For quality assurance, the dataset should also be obtained from reliable sources with accurate labels. Table 1 lists some of the datasets widely used for DR detection, including the Kaggle Diabetic Retinopathy dataset [28,29], the DiaretDB1 dataset [30], HRF (High-Resolution Fundus Image Database) [31], and the Messidor and Messidor-2 datasets [32].

The first of the two datasets used in this research is the HRF database [31], which was used to train our transfer learning model for segmentation. It comprises three sets of fundus images: 15 images of healthy subjects, 15 images of DR patients, and 15 images of patients with glaucoma. Each image has its own binary gold-standard vessel segmentation. These data were produced by a group of experts in retinal image analysis together with clinicians from the cooperating ophthalmology clinics. In addition, masks delineating the field of view (FOV) are provided. The image resolution of HRF is 3504 × 2336, which is high compared with other available datasets in this field and makes it well suited for our segmentation purpose.

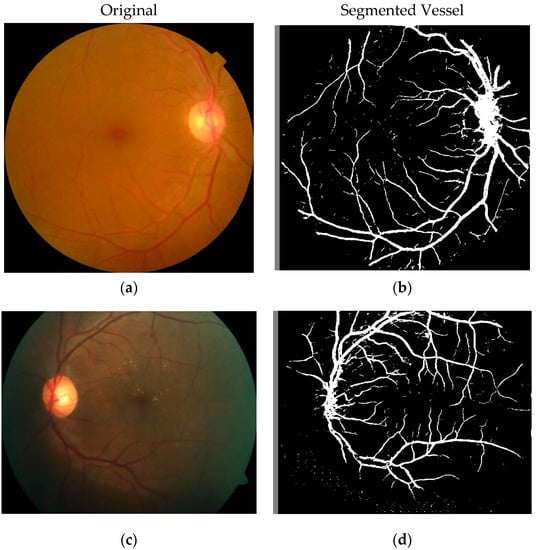

The second dataset used in this study is the APTOS Blindness Dataset, provided by Kaggle [30] and generated by Aravind Eye Hospital in India; both its original-colored images and their segmented blood vessels were used to meet our research objectives. The dataset was released through the 4th Asia Pacific Tele-Ophthalmology Society (APTOS) Symposium with the goal of obtaining solutions from other ophthalmologists. The retinal images were acquired by fundus photography, that is, photography of the back of the eye. Each image was graded on a scale from 0 to 4, where 0 indicates no DR and 1 to 4 correspond to increasingly severe stages of DR (mild, moderate, severe, and proliferative DR). One of the major reasons for choosing this dataset among the options listed in Table 1 is its size: it is the third-largest dataset, consisting of 5590 images, its DR grading followed the ICDRDSS protocol, and it contains an appropriate distribution of images across the grades according to their severity levels. Figure 1 shows two of the original-colored images alongside their segmented versions to illustrate the quality of the data used in this study.

Figure 1.

Images before and after the segmentation stage. (a,c) Images before segmentation; (b,d) the corresponding images after segmentation.

2. Proposed Methodology

2.1. Dataset Preprocessing and Enhancement

At the outset, the HRF dataset was augmented and then used as input to the U-Net network for an initial transfer learning phase. Data augmentation artificially increases the size of a dataset. Deep learning models require ample training data, and a shortage of training data is a perennial problem, especially in medical image processing. Data augmentation techniques include position augmentation, such as scaling, flipping, cropping, padding, translation, affine transformation, and rotation, and color augmentation, such as changing contrast, saturation, and brightness. Together with making the images consistent in intensity and size, these operations help the CNN achieve precise classification.

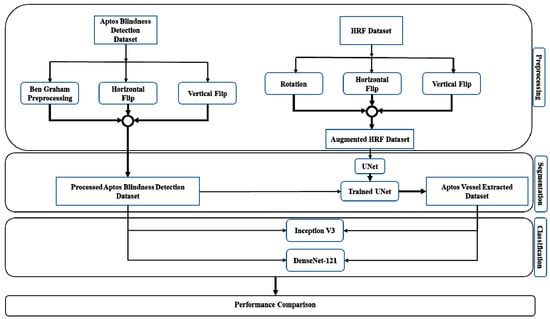

In this study, we applied horizontal flipping, vertical flipping, and rotation to augment our data. Note that only the training data were augmented. We initially had 45 images in total, which were split 80:20; the 36 images forming the training set went through the augmentation procedures, increasing the training data fourfold, which should help the model generalize better. The remaining 9 images were used to validate the model. All images were resized to 224 × 224 for input to the convolutional network. Figure 2 shows the proposed methodology.
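The augmentation and split described above can be sketched as follows. This is a minimal numpy sketch under stated assumptions: the rotation angle (90°), the random seed, and the reading of "fourfold" as the original image plus three transformed copies are not specified in the text, and the function names are hypothetical. Because HRF is used to train a segmentation network, each transform is applied identically to an image and its gold-standard vessel mask.

```python
import numpy as np

# Each transform is applied identically to an image and its vessel mask,
# so the gold-standard labels stay aligned with the pixels.
TRANSFORMS = [
    lambda a: a,                   # original
    np.fliplr,                     # horizontal flip (axis 1)
    np.flipud,                     # vertical flip (axis 0)
    lambda a: np.rot90(a, k=1),    # 90-degree rotation (angle is an assumption)
]

def augment_pair(image, mask):
    """Return the original (image, mask) pair plus three augmented copies."""
    return [(t(image), t(mask)) for t in TRANSFORMS]

def split_and_augment(images, masks, train_frac=0.8, seed=0):
    """80:20 split; only the training portion is augmented (fourfold)."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(images))
    n_train = int(round(train_frac * len(images)))
    train_idx, val_idx = order[:n_train], order[n_train:]
    train = [p for i in train_idx for p in augment_pair(images[i], masks[i])]
    val = [(images[i], masks[i]) for i in val_idx]   # validation is not augmented
    return train, val

# Usage with 45 placeholder 224 x 224 images standing in for HRF
images = [np.zeros((224, 224, 3), dtype=np.uint8) for _ in range(45)]
masks = [np.zeros((224, 224), dtype=np.uint8) for _ in range(45)]
train, val = split_and_augment(images, masks)
print(len(train), len(val))  # 144 9
```

Splitting before augmenting keeps flipped or rotated copies of a validation image out of the training set, so the validation score is not inflated by near-duplicates.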

Figure 2.

Block diagram of the proposed methodology.

For the APTOS dataset, the data were split into three portions: a training set, a validation set, and a test set. Initially, we had 3662 training images. Seventy percent of these (2563 images) were used for the actual training of the model. The remaining 30 percent (1099 images) were divided into two groups of 549 and 550 images, used for validation and testing, respectively. All images were resized to 224 × 224 before use. Ben Graham's preprocessing was combined with auto-cropping, which improved our training performance [33]: the images were scaled to a fixed radius, and the local average color was subtracted. This was necessary because parts of some images were poorly lit. Converting the images to greyscale was an alternative workaround, but the aforementioned preprocessing method was selected instead. Cropping removes the uninformative areas of the images. Data augmentation, specifically horizontal and vertical flipping, was used to artificially enrich the data and ultimately boost performance.
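A minimal numpy sketch of Ben Graham-style preprocessing with auto-cropping follows. It approximates the recipe rather than reproducing the exact pipeline of [33]: the brightness threshold `tol=7`, the blur width `sigma=10`, and the nearest-neighbour resize (used here in place of scaling each image to a fixed radius) are assumptions, and all function names are hypothetical. Implementations of this recipe commonly use OpenCV (`cv2.GaussianBlur` followed by `cv2.addWeighted(img, 4, blurred, -4, 128)`); plain numpy is used here to keep the example self-contained.

```python
import numpy as np

def auto_crop(img, tol=7):
    """Crop away the dark border: keep rows/columns with some pixel brighter than tol."""
    mask = img.mean(axis=2) > tol
    if not mask.any():              # nothing above threshold: leave the image unchanged
        return img
    rows = np.where(mask.any(axis=1))[0]
    cols = np.where(mask.any(axis=0))[0]
    return img[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

def resize_nearest(img, size=224):
    """Nearest-neighbour resize to size x size (stand-in for an interpolating resize)."""
    h, w = img.shape[:2]
    rows = (np.arange(size) * h / size).astype(int)
    cols = (np.arange(size) * w / size).astype(int)
    return img[rows][:, cols]

def gaussian_blur(img, sigma):
    """Separable Gaussian blur over height and width with edge padding."""
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    out = img.astype(np.float64)
    for axis in (0, 1):
        pad = [(0, 0)] * out.ndim
        pad[axis] = (radius, radius)
        padded = np.pad(out, pad, mode="edge")
        out = np.apply_along_axis(
            lambda v: np.convolve(v, kernel, mode="valid"), axis, padded)
    return out

def ben_graham_preprocess(img, size=224, sigma=10.0):
    """Auto-crop, resize, then subtract the local average colour."""
    img = resize_nearest(auto_crop(img), size).astype(np.float64)
    local_avg = gaussian_blur(img, sigma)
    out = 4.0 * img - 4.0 * local_avg + 128.0   # same weights as the addWeighted recipe
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

Because the blurred image tracks slow changes in illumination, subtracting it maps both well-lit and poorly lit regions toward a common mid-grey background while keeping local detail such as vessels and lesions.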

2.2. CNN Architectures and our Suggested Workflow

In recent years, deep neural networks based on CNN models have been widely used to address disease classification challenges, as CNNs have been applied with considerable success in deep learning, computer vision, and medical image processing. The following related works illustrate the range of this research. In medical image processing, CNN applications range from pneumonia detection using chest X-rays [34] to brain tumor detection using MRI scans [35]. The U-Net architecture has been used extensively for segmentation [36], for instance, for prostate zone segmentation [37].

The Inception v3 architecture has achieved near-human performance in tasks such as colorectal cancer lymph node metastasis classification [38] and skin cancer classification [39]. The DenseNet architecture has been applied to image classification [40], COVID-19 diagnosis [41], and many other tasks.

Hence, we deliberately chose CNN models to handle DR classification more precisely. Our proposed framework comprises two phases: segmentation and classification. For segmentation, CNN-based segmentation was chosen over other image segmentation techniques; specifically, a U-Net CNN architecture was used to segment the blood vessels and was then applied to the APTOS images through transfer learning, as discussed in Section 3. The classification phase employs two CNN architectures, Inception v3 and DenseNet-121, for robust classification of DR severity.

Since the original APTOS dataset had never been segmented, this workflow first requires segmenting it. To meet our research goal, the U-Net model was trained on the HRF dataset and then, through a transfer learning phase, applied to the APTOS Blindness dataset to derive a segmented dataset from the original one. The pre-trained models (Inception v3 and DenseNet-121) then performed classification on both the original-colored images of the APTOS Blindness dataset and the segmented blood-vessel image dataset, as shown in Figure 2. Finally, the classification performance of the CNN architectures on the original and segmented datasets was evaluated for the precise diagnosis of DR patients.

3. Pre-Trained CNN Architectures and Experimental Setups

3.1. Segmentation

The U-Net convolutional network is used for our segmentation phase. There are many image segmentation techniques: region-based, edge-based, clustering-based, and convolutional neural network (CNN)-based image segmentation [42]. CNN-based segmentation is at the cutting edge of this field of research. Image segmentation identifies the various regions in an image and assigns them to different classes; in simple terms, the image is broken down into multiple regions. The intention is to make images more 'palatable', that is, to represent them in a format suitable for analysis by machines. We segmented the images of the APTOS dataset using a U-Net CNN architecture, and the segmented images were later classified by other CNNs for DR severity classification.

3.1.1. U-Net

U-Net is a breakthrough architecture in medical image processing and a successor to the sliding-window approach [43]. The sliding-window approach of [44] had drawbacks: overlapping patches introduced redundancy, and the approach was computationally expensive [36]. U-Net does more with less: it can be trained with fewer images yet provides comparatively more accurate segmentation. Fully convolutional networks (FCNs) contain no dense layers; U-Net extends the FCN design and won the ISBI 2015 challenge [45].
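To see why a 224 × 224 input suits a U-Net-style encoder-decoder, the following sketch traces feature-map shapes through four pooling levels and checks that each skip connection joins maps of matching size. It assumes 'same'-padded 3 × 3 convolutions and 64 base filters doubling at each level; the original U-Net used unpadded convolutions (a 572 × 572 input yields a 388 × 388 output map), and the exact configuration used in this study is not specified, so `unet_shapes` is a hypothetical helper.

```python
def unet_shapes(input_size=224, base_filters=64, depth=4):
    """Trace (height, width, channels) through a U-Net-style encoder-decoder.

    Assumes 'same'-padded 3x3 convolutions (spatial size unchanged),
    2x2 max pooling on the way down and 2x2 up-sampling on the way up.
    """
    size, filters = input_size, base_filters
    skips = []
    for _ in range(depth):
        if size % 2:
            raise ValueError(f"size {size} cannot be halved exactly")
        skips.append((size, size, filters))  # encoder output kept for the skip connection
        size //= 2                           # max pooling halves height and width
        filters *= 2                         # channel count doubles at each level
    bottleneck = (size, size, filters)
    decoder = []
    for skip in reversed(skips):
        size *= 2                            # up-sampling doubles height and width
        filters //= 2
        # concatenation with the encoder map only works if the spatial sizes agree
        assert (size, size) == skip[:2]
        decoder.append((size, size, filters))
    return skips, bottleneck, decoder

skips, bottleneck, decoder = unet_shapes()
print(bottleneck)   # (14, 14, 1024)
print(decoder[-1])  # (224, 224, 64)
```

A final 1 × 1 convolution would map the last 224 × 224 × 64 decoder output to a single vessel-probability channel. An input such as 100 × 100 fails at the third level (25 is odd), which is one practical reason to resize inputs to a multiple of 16.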

3.1.2. Transfer Learning with U-Net

The U-Net went through a transfer learning phase. Inspired by the way humans learn, transfer learning refers to transferring knowledge across different tasks; the more closely related the new task is to the original one, the more likely the transfer is to succeed. In transfer learning, weights and features from previously trained models are reused for another task. Low-level features, such as edges, intensity, and shapes, can be transferred across tasks. The U-Net model was trained on the HRF dataset, which had been augmented beforehand. After training, the model was saved and later applied to the APTOS Blindness dataset, producing a segmented version of each original image.

3.2. Classification

CNNs are adept at reducing the number of parameters without degrading model quality, because each convolutional kernel is shared across all spatial positions of the image. In classification, a CNN extracts many image features and maps the result to separate categories. The convolutional networks used for classification in this study are Inception v3 and DenseNet-121.
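The parameter saving from weight sharing can be illustrated with a quick count. The sizes below (a 224 × 224 × 3 input and 64 units or filters) are hypothetical and chosen only for comparison: a fully connected layer needs one weight per input value per unit, whereas a 3 × 3 convolution reuses the same small kernel at every position.

```python
def dense_params(h, w, c_in, units):
    """Fully connected layer on a flattened h x w x c_in input."""
    # every input value connects to every unit, plus one bias per unit
    return h * w * c_in * units + units

def conv_params(k, c_in, filters):
    """k x k convolution with c_in input channels."""
    # one k x k x c_in kernel per filter, shared across all spatial positions
    return k * k * c_in * filters + filters

print(dense_params(224, 224, 3, 64))  # 9633856
print(conv_params(3, 3, 64))          # 1792
```

The convolution's parameter count does not depend on the image size at all, which is what lets deep CNNs stay tractable on 224 × 224 inputs.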

To address computational expense, over-fitting, and problems with gradient updates, the Inception architecture applies kernels of multiple sizes at the same level, going wider rather than deeper through a heavily engineered design. Inception v3 is well established and achieves good accuracy on the ImageNet dataset. Inception v3 is 48 layers deep. Modifications from earlier versions include factorizing larger convolutions into smaller ones, for example replacing a 5 × 5 convolution with two stacked 3 × 3 convolutions, and factorizing convolutions into asymmetric ones, for example replacing a 3 × 3 convolution with a 1 × 3 convolution followed by a 3 × 1 convolution; both reduce the number of parameters.
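The parameter reduction from these factorizations is simple arithmetic. The sketch below counts convolution weights (ignoring biases) for a hypothetical layer with 64 input and 64 output channels; the ratios do not depend on the channel count, and exact fractions are used to avoid rounding noise.

```python
from fractions import Fraction

def conv_weights(kh, kw, c_in, c_out):
    """Number of weights in a kh x kw convolution (biases ignored)."""
    return kh * kw * c_in * c_out

def receptive_field(kernel_sizes):
    """Receptive field of stacked stride-1 convolutions."""
    return 1 + sum(k - 1 for k in kernel_sizes)

c = 64  # hypothetical channel count, same for input and output

# 5x5 -> two stacked 3x3 (same 5x5 receptive field)
saving_3x3 = 1 - Fraction(2 * conv_weights(3, 3, c, c), conv_weights(5, 5, c, c))
# 3x3 -> 1x3 followed by 3x1 (asymmetric factorization)
saving_asym = 1 - Fraction(conv_weights(1, 3, c, c) + conv_weights(3, 1, c, c),
                           conv_weights(3, 3, c, c))

print(receptive_field([3, 3]), saving_3x3)   # 5 7/25
print(saving_asym)                           # 1/3
```

Two stacked 3 × 3 convolutions cover the same 5 × 5 receptive field as one 5 × 5 convolution with 28% fewer weights, and the 1 × 3 plus 3 × 1 pair replaces a 3 × 3 convolution with one third fewer weights.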

DenseNet is a type of CNN characterized by dense connections between layers. Deeper networks are preferred because they tend to generalize better [46], as depth allows the network to learn far more complex functions. However, the deeper the network, the more likely it is that information about the input and the gradient vanishes as it passes through many layers; the latter is known as the vanishing gradient problem. DenseNet addresses this by departing from the conventional CNN architecture: within each dense block, every layer receives the concatenated feature maps of all preceding layers, so features are passed on and concatenated at each stage, and the network requires fewer parameters [47]. Bottleneck layers (1 × 1 convolutions placed before each 3 × 3 convolution) are embedded in the architecture. DenseNet-121 has a total of 120 convolutions and 4 AvgPool layers. Specifically, it has one 7 × 7 convolution layer, 58 3 × 3 convolution layers, 61 1 × 1 convolution layers, the aforementioned 4 AvgPool layers, and 1 fully connected layer. In our implementation, global average pooling was used instead of AvgPool.
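The layer counts quoted above can be reproduced from the standard DenseNet-121 configuration. This sketch assumes the block sizes (6, 12, 24, 16), a growth rate of 32, 64 initial channels, and a compression factor of 0.5 from the original DenseNet design; the helper name is hypothetical.

```python
# Standard DenseNet-121 configuration (assumed from the original DenseNet design)
BLOCK_LAYERS = (6, 12, 24, 16)  # dense layers in each of the four dense blocks
GROWTH_RATE = 32                # feature maps added by each dense layer
INITIAL_CHANNELS = 64           # output channels of the initial 7x7 convolution
COMPRESSION = 0.5               # each transition layer halves the channel count

def densenet121_summary():
    n_dense = sum(BLOCK_LAYERS)             # dense layers in total
    n_transitions = len(BLOCK_LAYERS) - 1   # transition layers between blocks
    conv7 = 1
    conv3 = n_dense                          # one 3x3 conv per dense layer
    conv1 = n_dense + n_transitions          # bottleneck 1x1 per dense layer + 1x1 per transition
    channels = INITIAL_CHANNELS
    for i, n in enumerate(BLOCK_LAYERS):
        channels += n * GROWTH_RATE          # concatenation grows the feature maps
        if i < n_transitions:
            channels = int(channels * COMPRESSION)  # transition: 1x1 conv, then 2x2 avg pool
    return {
        "conv7x7": conv7,
        "conv3x3": conv3,
        "conv1x1": conv1,
        "total_conv": conv7 + conv3 + conv1,
        "avg_pools": n_transitions + 1,      # 3 transition pools + final global average pool
        "final_channels": channels,
    }

print(densenet121_summary())
```

The 1024 channels of the last dense block are what the global average pooling layer condenses into a 1024-dimensional feature vector before the classifier.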

3.3. Original and Segmented Image Classification

One part of the workflow applied the pre-trained DenseNet-121 and Inception v3 to the original-colored APTOS images in order to classify them according to the DR categorization scheme described above. Both pre-trained models were applied to the original images and to the segmented versions of these colored images. The U-Net, trained beforehand on the HRF dataset with a very high accuracy of approximately 99.02%, was then applied to the original-colored images to derive their segmented versions. This high accuracy supports the feasibility of our segmentation process. The data were also categorized according to the aforementioned stages of DR.

The following applies to both workflows described above for DenseNet-121 and Inception v3. Both models were first warmed up for 10 epochs from their initialized weights, and a total of 20 epochs were used for training. Table 2 summarizes the training parameters of Inception v3 and DenseNet-121.

Table 2.

Inception v3 and DenseNet-121 training parameter information.

Stochastic gradient descent (SGD) was used for optimization. Gradient descent iteratively updates the parameters, starting from initial values, to find the values that minimize a given cost function. There are three variants of gradient descent: batch, mini-batch, and stochastic gradient descent. Among these, SGD is best suited to large datasets. Batch gradient descent, the classic form of the algorithm, treats the entire dataset as a single batch and therefore processes the whole dataset at every iteration, which is computationally expensive; SGD instead updates the parameters using one example (or, in mini-batch form, a small subset) at a time. The drawback is that SGD updates are noisier, but training time was a priority.

Global average pooling was also used. In global average pooling, the pool size equals the spatial size of the layer input, so each feature map is reduced to its average value. This differs from max pooling, in which the input feature map is partitioned into smaller patches and the maximum of each patch is computed. Global average pooling thus reduces the dimensionality of the intermediate representation.
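The difference between global average pooling and max pooling can be shown on a small feature map. This numpy sketch assumes channels-last (H, W, C) arrays and even spatial sizes for the 2 × 2 max pool; the function names are hypothetical.

```python
import numpy as np

def global_average_pool(fmap):
    """(H, W, C) -> (C,): the pool size equals the whole spatial extent."""
    return fmap.mean(axis=(0, 1))

def max_pool_2x2(fmap):
    """(H, W, C) -> (H/2, W/2, C): maximum of each non-overlapping 2x2 patch."""
    h, w, c = fmap.shape
    return fmap.reshape(h // 2, 2, w // 2, 2, c).max(axis=(1, 3))

fmap = np.arange(16, dtype=float).reshape(4, 4, 1)
print(global_average_pool(fmap))     # [7.5]
print(max_pool_2x2(fmap)[..., 0])    # 2x2 map of patch maxima: 5, 7, 13, 15

# e.g. a 7 x 7 x 1024 final feature map becomes a single 1024-value vector
print(global_average_pool(np.zeros((7, 7, 1024))).shape)  # (1024,)
```

Max pooling keeps a (smaller) spatial map, whereas global average pooling discards the spatial layout entirely, which is why it can feed a classifier directly.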

To introduce non-linearity into the network, the rectified linear unit (ReLU) activation function, ReLU(x) = max(0, x), was used. ReLU passes positive inputs unchanged and sets negative inputs to zero, so for a given input only some units are activated (non-zero) while the others are turned off. The softmax activation function produces values in the range (0, 1) that sum to 1 and is therefore suitable for the output layer. The softmax output layer was reduced to five outputs, giving the probabilities of the five DR severity levels, as given in Equation (1):

$$P(y = j \mid \mathbf{f}) = \frac{e^{\mathbf{f}^{\top}\theta_j}}{\sum_{k=1}^{5} e^{\mathbf{f}^{\top}\theta_k}}, \qquad j = 1, \dots, 5 \qquad (1)$$

where $\mathbf{f}$ is the feature vector and $\theta_j$ is the weight vector of class $j$.

Softmax normalizes a vector of input values into a probability distribution: Equation (1) gives the probability that the set of features $\mathbf{f}$ belongs to class $j$, computed as the ratio of the exponential of the input for class $j$ to the sum of the exponentials of the inputs for all classes. The class labels are one-hot encoded, that is, categorical variables are represented as binary vectors.

A variable learning rate was used. The learning rate is a tuning parameter that determines the step size at each iteration: it dictates how much the model changes in response to the estimated error each time the weights are updated. Whenever the monitored output stagnated for a given number of training epochs (i.e., hit a plateau), the learning rate was reduced.

Categorical cross-entropy was used as the loss function. Cross-entropy is a continuous and differentiable function that provides the feedback necessary for steady incremental improvement of the model. This loss function, given in Equation (2), is used when an example can belong to only one of the possible classes, which is appropriate for our task.

$$L = -\sum_{i=1}^{5} y_i \log \hat{y}_i \qquad (2)$$

Here, $\hat{y}_i$ is the $i$-th scalar value of the model output, which denotes the probability that event $i$ (class $i$) occurs, and $y_i$ is the corresponding target value. In our work, the cross-entropy loss between labels and predictions was calculated; we opted for this loss function because there were more than two label classes.
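To make Equations (1) and (2) concrete, the following numpy sketch trains a linear softmax classifier with mini-batch SGD, categorical cross-entropy, and a plateau-based learning-rate reduction. It illustrates the techniques, not the training code of this study: the learning rate, batch size, patience, reduction factor, and synthetic data are hypothetical, and for brevity it monitors the training loss, whereas a plateau schedule would normally monitor validation loss (as Keras's `ReduceLROnPlateau` callback does by default with `monitor="val_loss"`).

```python
import numpy as np

def softmax(z):
    """Row-wise Equation (1): exp(score_j) / sum_k exp(score_k)."""
    z = z - z.max(axis=1, keepdims=True)    # shift by the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def categorical_cross_entropy(y_onehot, probs, eps=1e-12):
    """Equation (2), averaged over the examples."""
    return float(-np.mean(np.sum(y_onehot * np.log(probs + eps), axis=1)))

def train_softmax_sgd(f, labels, n_classes=5, lr=0.1, epochs=60, batch=32,
                      patience=3, factor=0.5, seed=0):
    """Mini-batch SGD on a linear softmax classifier with a plateau-based LR cut."""
    rng = np.random.default_rng(seed)
    n, d = f.shape
    theta = np.zeros((d, n_classes))        # column j is the weight vector theta_j
    y = np.eye(n_classes)[labels]           # one-hot targets
    best, wait, history = np.inf, 0, []
    for _ in range(epochs):
        order = rng.permutation(n)
        for start in range(0, n, batch):
            idx = order[start:start + batch]
            p = softmax(f[idx] @ theta)
            grad = f[idx].T @ (p - y[idx]) / len(idx)   # gradient of the mean loss w.r.t. theta
            theta -= lr * grad
        loss = categorical_cross_entropy(y, softmax(f @ theta))
        history.append(loss)
        if loss < best - 1e-4:              # improvement: reset the plateau counter
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:            # plateau: shrink the step size
                lr *= factor
                wait = 0
    return theta, history

# Usage on synthetic, well-separated 5-class features (hypothetical data)
rng = np.random.default_rng(1)
labels = rng.integers(0, 5, size=500)
f = np.eye(5)[labels] * 3.0 + rng.normal(0.0, 0.3, size=(500, 5))
f = np.hstack([f, np.ones((500, 1))])       # constant column acts as a bias term
theta, history = train_softmax_sgd(f, labels)
accuracy = (softmax(f @ theta).argmax(axis=1) == labels).mean()
```

The gradient `f.T @ (p - y) / batch` relies on the fact that, for softmax followed by cross-entropy, the derivative of the loss with respect to the class scores is simply the predicted probabilities minus the one-hot targets.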

4. Experimental Results and Performance Metrics

To reiterate, this is essentially a comparative study of original-colored images versus segmented images for detecting DR severity levels.

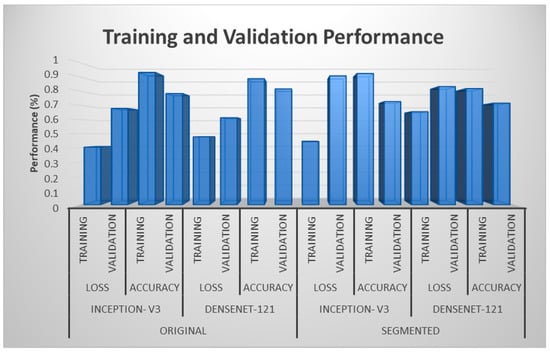

4.1. Training and Validation Performance

Figure 3 shows that training accuracies were greater than validation accuracies for both models on both the original and the segmented blood-vessel images. Conversely, validation losses exceeded training losses. For both Inception v3 and DenseNet-121, the maximum validation accuracy and the minimum validation loss were attained with the original images. Comparing the corresponding curves for the original and segmented data reveals a clear trend in favor of the original images, which gave better classification performance than the segmented blood-vessel images.

Figure 3.

Training and validation performances for two different models.

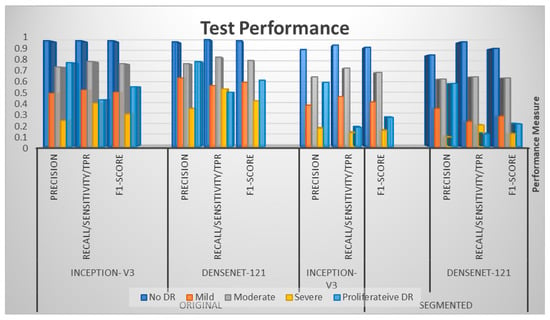

4.2. Test Performance

Table 3 presents four performance metrics for each model applied to both original-colored and segmented images to gauge test performance. Looking at each class, both models generally achieved their highest metric values on the original-colored images rather than on the segmented ones. For instance, for the No DR stage on the original-colored images, the precision, recall, and F1-score were all 0.97 for Inception v3 and 0.89, 0.93, and 0.91, respectively, for DenseNet-121. On the segmented images, the corresponding values were 0.89, 0.93, and 0.91 for Inception v3 and 0.84, 0.96, and 0.90 for DenseNet-121. This pattern of better performance on original-colored images than on segmented images carried over to each severity level.

Table 3.

Test performance for original and segmented blood vessels.

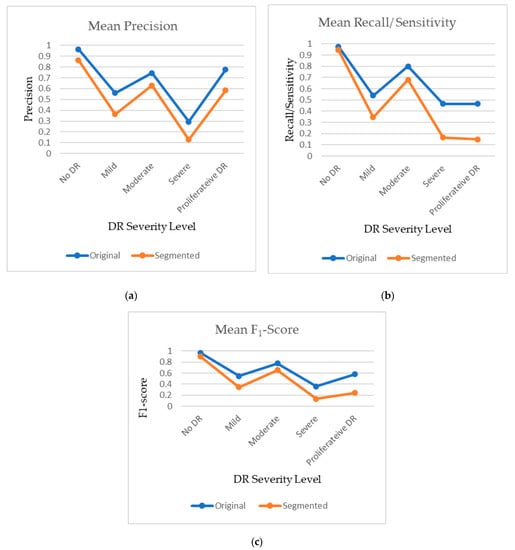

Figure 4 visualizes the data in Table 3, illustrating the same pattern: both models achieve better metrics on original images than on segmented images.

Figure 4.

Test performances for Inception v3 and DenseNet-121.

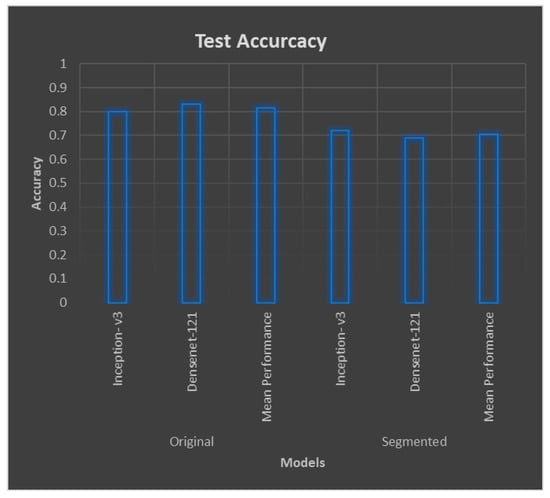

The original images also outperformed the segmented images in accuracy. As shown in Figure 5, the two models attained accuracies of 80% and 83%, respectively, on the original images, compared with 72% and 69%, respectively, on the segmented images. The mean performance on the segmented images was between 0.6 and 0.7, again lower than that on the original images, which was 0.8.

The values for Table 3 are depicted in Figure 6. In each of the three charts, one of the two dotted lines represents the values from the original images and the other represents the values from the segmented ones; the vertical and horizontal axes represent the metric values and the severity levels, respectively. In all three charts, the blue series (original images) remains above the orange series (segmented images) at every severity level, indicating better outcomes for the original images.

Figure 5.

Mean test performances for original and segmented blood vessels.

Figure 6.

Plot of mean (a) precision, (b) recall, and (c) F1-Score for original and segmented datasets.

4.3. Comparison of Original and Segmented Datasets

Three performance metrics, namely the F1-score, precision, and recall (also called sensitivity or true positive rate, TPR), are tabulated in Table 4 for the original images and segmented blood vessels across all five severity levels. The same pattern of better performance on the original images was found for all metrics. For example, the precision for detecting No DR was 0.965 for original images and 0.865 for segmented blood vessels; likewise, the precision for Proliferative DR was 0.775 and 0.585, respectively. For recall, the values for original images and segmented blood vessels were 0.975 and 0.945 for No DR and 0.465 and 0.15 for Proliferative DR, respectively.
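Per-class precision, recall (sensitivity/TPR), and F1-score, as reported in Tables 3 and 4, can all be derived from a confusion matrix. This sketch uses a hypothetical 3-class matrix rather than data from this study; for the five DR severity levels the same function applies to a 5 × 5 matrix. The function name is hypothetical; scikit-learn's `precision_recall_fscore_support` computes the same quantities.

```python
import numpy as np

def per_class_metrics(cm):
    """Per-class precision, recall (sensitivity/TPR) and F1 from a confusion matrix.

    cm[i, j] counts images whose true class is i and predicted class is j.
    Classes with no predictions (or no true examples) get 0 instead of NaN.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)      # TP + FP for each class (column sums)
    actual = cm.sum(axis=1)         # TP + FN for each class (row sums)
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return precision, recall, f1

# Hypothetical 3-class example (not data from this study)
cm = [[5, 1, 0],
      [2, 3, 1],
      [0, 1, 4]]
precision, recall, f1 = per_class_metrics(cm)
```

Reading precision from columns and recall from rows makes the asymmetry visible: a class that is rarely predicted can have high precision but low recall, as with Proliferative DR in Table 4.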

Table 4.

F1 Score, Precision, and Recall/Sensitivity/TPR for original and segmented blood vessels for DR severity levels.

5. Discussion

Firstly, we chose the deep learning models Inception v3 and DenseNet-121 because of their demonstrated aptitude for disease identification in medical images. In medical image diagnosis, Inception v3 can capture both global and local features of an image by using convolution filters of different sizes and pooling operations. In contrast, the dense connectivity pattern of DenseNet-121 ensures efficient reuse and extraction of features, leading to improved outcomes. The ResNet model is not included here because its results were slightly poorer than those of the two models included in this study. The results show that the precision, recall, and F1-score obtained by classifying original-colored images are better than those for segmented blood vessels with both state-of-the-art deep learning models. This indicates that segmentation does not add much value to the diagnosis of diabetic retinopathy.

The lower performance with the segmented blood vessels can be explained in several ways. First, when segmented blood vessels are used, the retinal blood vessels are extracted, the colored image is converted to greyscale, and the region outside the retinal blood vessels is filtered out. Because of down-sampling in the segmentation process, some information (pixel values) from the retinal blood vessels is also lost [48]. In addition, the region outside the vessels can contain important information, such as drusen and other biomarkers, which are essential biomarkers in glaucoma diagnosis. The loss of retinal lesions is therefore a significant drawback of using segmented images to diagnose DR.

Second, different diseases manifest differently in the retinal vascular structure [49]. If the vascular changes in diabetic retinopathy are less pronounced than those in optic nerve disease, glaucoma, and similar conditions, the segmented image will add little to the diagnostic performance [50]. Furthermore, vascular biomarkers may be confounded with, or overlap with, the biomarkers of other diseases, which can significantly affect the diagnostic performance.

Third, some studies have shown promise in using segmented drusen to diagnose diabetic retinopathy [51]. Because this study focuses only on retinal blood vessels and original images, segmented drusen are beyond its scope. However, future research could compare the performance of original images with that of segmented drusen images.

Lastly, the segmented blood vessels used in this study were generated by a model trained on a different dataset. Such a model may be less able to extract retinal blood vessels from the images of another dataset. This is most likely due to differences in image acquisition devices and in the quality of data acquisition.

The main finding of this study is that original-colored images outperform segmented blood vessels in the deep learning-based diagnosis of diabetic retinopathy. This finding supports previous studies that used original fundus images for deep learning-based classification. However, some studies have reported higher classification performance using segmented blood vessels. One probable reason is that their segmentation model was trained on a portion of their own dataset (after manual annotation). Another is that they segmented the original images without any down-sampling, so less information was lost. Using high-resolution images could therefore yield high-resolution segmented blood vessels, which would in turn support higher diagnostic performance, because analyzing segmented images provides lower resolution and precision than analyzing the original images. This finding can support automated screening and real-time diagnosis of DR in clinical practice.
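The mechanism by which down-sampling loses fine vessels can be shown with a toy binary vessel map. In the sketch below, the map is average-pooled by a factor of 4, upsampled back to the original grid, and re-binarised at 0.5. A 4-pixel-wide vessel aligned with the pooling grid survives, while a 1-pixel-wide vessel disappears. The map, the pooling factor, the threshold, and the helper names `downsample_mean` and `upsample_nearest` are hypothetical. Real segmentation networks down-sample differently, so this sketch only illustrates why thin structures are the first to be lost.

```python
import numpy as np

def downsample_mean(img, factor):
    """Average-pool a 2-D image by an integer factor (both dimensions must be divisible)."""
    h, w = img.shape
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

def upsample_nearest(img, factor):
    """Nearest-neighbour upsampling back to the original grid."""
    return np.repeat(np.repeat(img, factor, axis=0), factor, axis=1)

# Hypothetical 16x16 binary vessel map: a thick vessel (4 px, aligned with the
# pooling grid) and a thin capillary-like vessel (1 px).
vessels = np.zeros((16, 16))
vessels[:, 4:8] = 1.0    # thick vessel
vessels[:, 10] = 1.0     # thin vessel

factor = 4
restored = upsample_nearest(downsample_mean(vessels, factor), factor) > 0.5  # re-binarise

thick_kept = restored[:, 4:8].mean()   # fraction of thick-vessel pixels recovered
thin_kept = restored[:, 10].mean()     # fraction of thin-vessel pixels recovered
print(f"thick vessel kept: {thick_kept:.2f}, thin vessel kept: {thin_kept:.2f}")
```

Segmenting at full resolution, as suggested above, avoids this particular loss because no pooling step averages a thin vessel with its background.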

This study has some limitations worth mentioning. First, the image segmentation model was based on transfer learning: the original model was trained on a different dataset, and the images in this study were used only as a test dataset. Second, the study focuses only on disease classification or diagnosis. Tracking disease progression requires temporal analysis, and the utility of the two approaches (original and segmented) for that purpose also needs to be investigated. Future research could address this aspect.

6. Conclusions

Diabetic retinopathy is one of the major retinal diseases affecting the human eye and a cause of blindness. Early diagnosis is therefore crucial, but manual diagnosis, constrained by limited human capability, can lead to misdiagnosis. Deep learning-based automated diagnosis could thus support timely detection and treatment. In deep learning-based analysis, however, it remains unclear whether original images or segmented blood vessels should be used for diagnosis. In this study, a comparative analysis was conducted using two deep learning models (Inception v3 and DenseNet-121) under two approaches: colored images and segmented blood vessels. The findings show that the segmented blood vessels add little utility to deep learning-based analysis. Hence, for diagnostic purposes, using the original images could save the time and cognitive effort required for manual annotation and segmentation. For future research, we suggest using temporal data to examine the contribution of the two approaches to disease detection and progression, and deriving a lesion-based classification for a more precise understanding of DR severity levels.

Author Contributions

Conceptualization, M.A. and M.B.K.; methodology, M.B.K. and R.S.; software, R.S.; validation, R.S.; formal analysis, M.B.K.; writing—original draft preparation, M.A.; writing—review and editing, S.B.Y.; visualization, S.B.Y.; supervision, H.H.; project administration, M.A.R.; funding acquisition, S.B.Y. and H.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research has received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this research are available at the following links: https://buffyhridoy.github.io/ (accessed on 19 June 2022). https://www.linkedin.com/in/mohammad-badhruddouza-khan-97b023195/ (accessed on 19 June 2022). https://www.researchgate.net/profile/Mohammad-Khan-353 (accessed on 19 June 2022).

Acknowledgments

The authors acknowledge the support of the Department of Biomedical Engineering, KUET, Bangladesh, for providing the laboratory facilities needed to complete this research work.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper. The authors also declare that they have no known competing financial interests that could have appeared to influence the work reported in this paper.

References

- Teo, Z.L.; Tham, Y.-C.; Yu, M.C.Y.; Chee, M.L.; Rim, T.H.; Cheung, N.; Bikbov, M.M.; Wang, Y.X.; Tang, Y.; Lu, Y.; et al. Global Prevalence of Diabetic Retinopathy and Projection of Burden through 2045: Systematic Review and Meta-analysis. Ophthalmology 2021, 128, 1580–1591.

- Olson, J.; Strachan, F.; Hipwell, J.; Goatman, K.A.; McHardy, K.; Forrester, J.V.; Sharp, P.F. A comparative evaluation of digital imaging, retinal photography and optometrist examination in screening for diabetic retinopathy. Diabet. Med. 2003, 20, 528–534.

- Leontidis, G.; Al-Diri, B.; Hunter, A. Diabetic retinopathy: Current and future methods for early screening from a retinal hemodynamic and geometric approach. Expert Rev. Ophthalmol. 2014, 9, 431–442.

- Cheung, N.; Wong, I.Y.; Wong, T.Y. Ocular anti-VEGF therapy for diabetic retinopathy: Overview of clinical efficacy and evolving applications. Diabetes Care 2014, 37, 900–905.

- Tariq, N.H.; Rashid, M.; Javed, A.; Zafar, E.; Alotaibi, S.S.; Zia, M.Y.I. Performance Analysis of Deep-Neural-Network-Based Automatic Diagnosis of Diabetic Retinopathy. Sensors 2021, 22, 205.

- Alyoubi, W.L.; Shalash, W.M.; Abulkhair, M.F. Diabetic retinopathy detection through deep learning techniques: A review. Inform. Med. Unlocked 2020, 20, 100377.

- Abbas, Q.; Fondon, I.; Sarmiento, A.; Jiménez, S.; Alemany, P. Automatic recognition of severity level for diagnosis of diabetic retinopathy using deep visual features. Med. Biol. Eng. Comput. 2017, 55, 1959–1974.

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016, 316, 2402–2410.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Bhardwaj, C.; Jain, S.; Sood, M. Diabetic retinopathy severity grading employing quadrant-based Inception-V3 convolution neural network architecture. Int. J. Imaging Syst. Technol. 2021, 31, 592–608.

- Hagos, M.T.; Kant, S. Transfer learning based detection of diabetic retinopathy from small dataset. arXiv 2019, arXiv:1905.07203.

- Batista, G.C.; de Oliveira, D.L.; Silva, W.L.S.; Saotome, O. Hardware Architectures of Support Vector Machine Applied in Pattern Recognition Systems. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Udupi, India, 13–16 September 2017.

- Gonçalves, J.; Conceiçao, T.; Soares, F. Inter-Observer Reliability in Computer-Aided Diagnosis of Diabetic Retinopathy. In Proceedings of the 12th International Conference on Health Informatics (HEALTHINF 2019), Prague, Czech Republic, 22–24 February 2019; pp. 481–491.

- Prasad, D.K.; Vibha, L.; Venugopal, K.R. Early Detection of Diabetic Retinopathy from Digital Retinal Fundus Images. In Proceedings of the 2015 IEEE Recent Advances in Intelligent Computational Systems (RAICS), Trivandrum, India, 10–12 December 2015; pp. 240–245.

- Wan, P.S.; Liang, Y.; Zhang, Y. Deep convolutional neural networks for diabetic retinopathy detection by image classification. Comput. Electr. Eng. 2018, 72, 274–282.

- Gargeya, R.; Leng, T. Automated identification of diabetic retinopathy using deep learning. Ophthalmology 2017, 124, 962–969.

- Zhang, W.; Zhong, J.; Yang, S.; Gao, Z.; Hu, J.; Chen, Y.; Yi, Z. Automated identification and grading system of diabetic retinopathy using deep neural networks. Knowl. Based Syst. 2019, 175, 12–25.

- Santhi, T.; Sabeenian, R.S. Modified Alexnet architecture for classification of diabetic retinopathy images. Comput. Electr. Eng. 2019, 76, 56–64.

- Mobeen-Ur-Rehman; Khan, S.H.; Abbas, Z.; Danish, R.S.M. Classification of Diabetic Retinopathy Images Based on Customized CNN Architecture. In Proceedings of the 2019 Amity International Conference on Artificial Intelligence (AICAI), Dubai, United Arab Emirates, 4–6 February 2019; pp. 244–248.

- Lu, J.; Xu, Y.; Chen, M.; Luo, Y. A coarse-to-fine fully convolutional neural network for fundus vessel segmentation. Symmetry 2018, 10, 607.

- Hua, C.H.; Huynh-The, T.; Lee, S. Retinal Vessel Segmentation using Round-Wise Features Aggregation on Bracket-Shaped Convolutional Neural Networks. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 36–39.

- Zhou, Y.; He, X.; Huang, L.; Liu, L.; Zhu, F.; Cui, S.; Shao, L. Collaborative Learning of Semi-Supervised Segmentation and Classification for Medical Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2079–2088.

- Li, X.; Hu, X.; Yu, L.; Zhu, L.; Fu, C.-W.; Heng, P.-A. CANet: Cross-disease attention network for joint diabetic retinopathy and diabetic macular edema grading. IEEE Trans. Med. Imag. 2020, 39, 1483–1493.

- Vengalil, S.K.; Sinha, N.; Kruthiventi, S.S.S.; Babu, R.V. Customizing CNNs for Blood Vessel Segmentation from Fundus Images. In Proceedings of the International Conference on Signal Processing and Communications (SPCOM 2016), Bangalore, India, 12–15 June 2016; pp. 1–4.

- Yan, Y.; Gong, J.; Liu, Y. A novel Deep Learning Method for Red Lesions Detection using the Hybrid Feature. In Proceedings of the 31st Chinese Control and Decision Conference (CCDC 2019), Nanchang, China, 3–5 June 2019; pp. 2287–2292.

- Jiang, H.; Kang, Y.; Gao, M.; Zhang, D.; He, M.; Qian, W. An Interpretable Ensemble Deep Learning Model for Diabetic Retinopathy Disease Classification. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 2045–2048.

- Wu, Y.; Xia, Y.; Song, Y.; Zhang, Y.; Cai, W. NFN+: A novel network followed network for retinal vessel segmentation. Neural Netw. 2020, 126, 153–162.

- Kaggle Dataset. Available online: https://kaggle.com/c/diabetic-retinopathy-detection (accessed on 15 November 2022).

- Asia Pacific Tele-Ophthalmology Society (APTOS). Blindness Detection Dataset “Detect Diabetic Retinopathy to Stop Blindness Before It’s Too Late”. 2019. Available online: https://www.kaggle.com/competitions/aptos2019-blindness-detection/data (accessed on 15 November 2022).

- Kauppi, T.; Kalesnykiene, V.; Kamarainen, J.-K.; Lensu, L.; Sorri, I.; Raninen, A.; Voutilainen, R.; Pietila, J.; Kalviainen, H.; Uusitalo, H.; et al. The DIARETDB1 Diabetic Retinopathy Database and Evaluation Protocol. In Proceedings of the British Machine Vision Conference 2007, Coventry, UK, 10–13 September 2007; pp. 1–10.

- Budai, A.; Bock, R.; Maier, A.; Hornegger, J.; Michelson, G. Robust vessel segmentation in fundus images. Int. J. Biomed. Imag. 2013, 2013, 154860.

- Decenciere, E.; Zhang, X.; Cazuguel, G.; Lay, B.; Cochener, B.; Trone, C.; Gain, P.; Ordonez, R.; Massin, P.; Erginay, A.; et al. Feedback on a publicly distributed image database: The messidor database. Image Anal. Stereol. 2014, 33, 231–234.

- Preprocessing in Diabetic Retinopathy. Available online: https://www.kaggle.com/ratthachat/aptoseye-preprocessing-in-diabetic-retinopathy (accessed on 25 August 2020).

- Hasan, N.; Hasan, M.M.; Alim, M.A. Design of EEG Based Wheel Chair by Using Color Stimuli and Rhythm Analysis. In Proceedings of the 2019 1st International Conference on Advances in Science, Engineering and Robotics Technology (ICASERT), Dhaka, Bangladesh, 3–5 May 2019; pp. 1–4.

- Sharif, M.; Amin, J.; Haldorai, A.; Yasmin, M.; Nayak, R.S. Brain tumor detection and classification using machine learning: A comprehensive survey. Complex Intell. Syst. 2021, 8, 1–23.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Aldoj, N.; Biavati, F.; Michallek, F.; Stober, S.; Dewey, M. Automatic prostate and prostate zones segmentation of magnetic resonance images using DenseNet-like U-net. Sci. Rep. 2020, 10, 1–17.

- Li, J.; Wang, P.; Li, Y.; Zhou, Y.; Liu, X.; Luan, K. Transfer Learning of Pre-Trained Inception-V3 Model for Colorectal Cancer Lymph Node Metastasis Classification. In Proceedings of the 2018 IEEE International Conference on Mechatronics and Automation (ICMA), Changchun, China, 5–8 August 2018; pp. 1650–1654.

- Esteva, A.; Kuprel, B.; Novoa, R.; Ko, J.; Swetter, S.; Blau, H.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Zhang, K.; Guo, Y.; Wang, X.; Yuan, J.; Ding, Q. Multiple features reweight DenseNet for image classification. IEEE Access 2019, 7, 9872–9880.

- Zhang, Y.-D.; Satapathy, S.C.; Zhang, X.; Wang, S.-H. COVID-19 diagnosis via DenseNet and optimization of transfer learning setting. Cogn. Comput. 2021, 1–17.

- Kaushik, R.; Kumar, S. Image Segmentation Using Convolutional Neural Network. Int. J. Sci. Technol. Res. 2019, 8, 667–675.

- Helwan, A.; Ozsahin, D.U. Sliding window-based machine learning system for the left ventricle localization in MR cardiac images. Appl. Comput. Intell. Soft Comput. 2017, 2017, 3048181.

- Ciresan, D.C.; Giusti, A.; Gambardella, L.; Schmidhuber, J. Deep neural networks segment neuronal membranes in electron microscopy images. Adv. Neural Inf. Process. Syst. 2012, 25, 2843–2851.

- Cheng, D.; Lam, E.Y. Transfer Learning U-Net Deep Learning for Lung Ultrasound Segmentation. arXiv 2021, arXiv:2110.02196.

- O’Brien, J.A. Why Are Neural Networks Becoming Deeper, But not Wider? Available online: https://stats.stackexchange.com/q/223637 (accessed on 23 November 2022).

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Jin, C.; Tanno, R.; Mertzanidou, T.; Panagiotaki, E.; Alexander, D.C. Learning to Downsample for Segmentation of Ultra-High Resolution Images. arXiv 2021, arXiv:2109.11071.

- Chalam, K.V.; Sambhav, K. Optical coherence tomography angiography in retinal diseases. J. Ophthalmic Vis. Res. 2016, 11, 84.

- Cogan, D.G.; Toussaint, D.; Kuwabara, T. Retinal vascular patterns. IV. Diabetic retinopathy. Arch. Ophthalmol. 1961, 66, 366–378.

- Niemeijer, M.; van Ginneken, B.; Russell, S.R.; Suttorp-Schulten, M.S.; Abramoff, M.D. Automated detection and differentiation of drusen, exudates, and cotton-wool spots in digital color fundus photographs for diabetic retinopathy diagnosis. Investig. Ophthalmol. Vis. Sci. 2007, 48, 2260–2267.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).