On Machine Learning in Clinical Interpretation of Retinal Diseases Using OCT Images

Abstract

1. Introduction

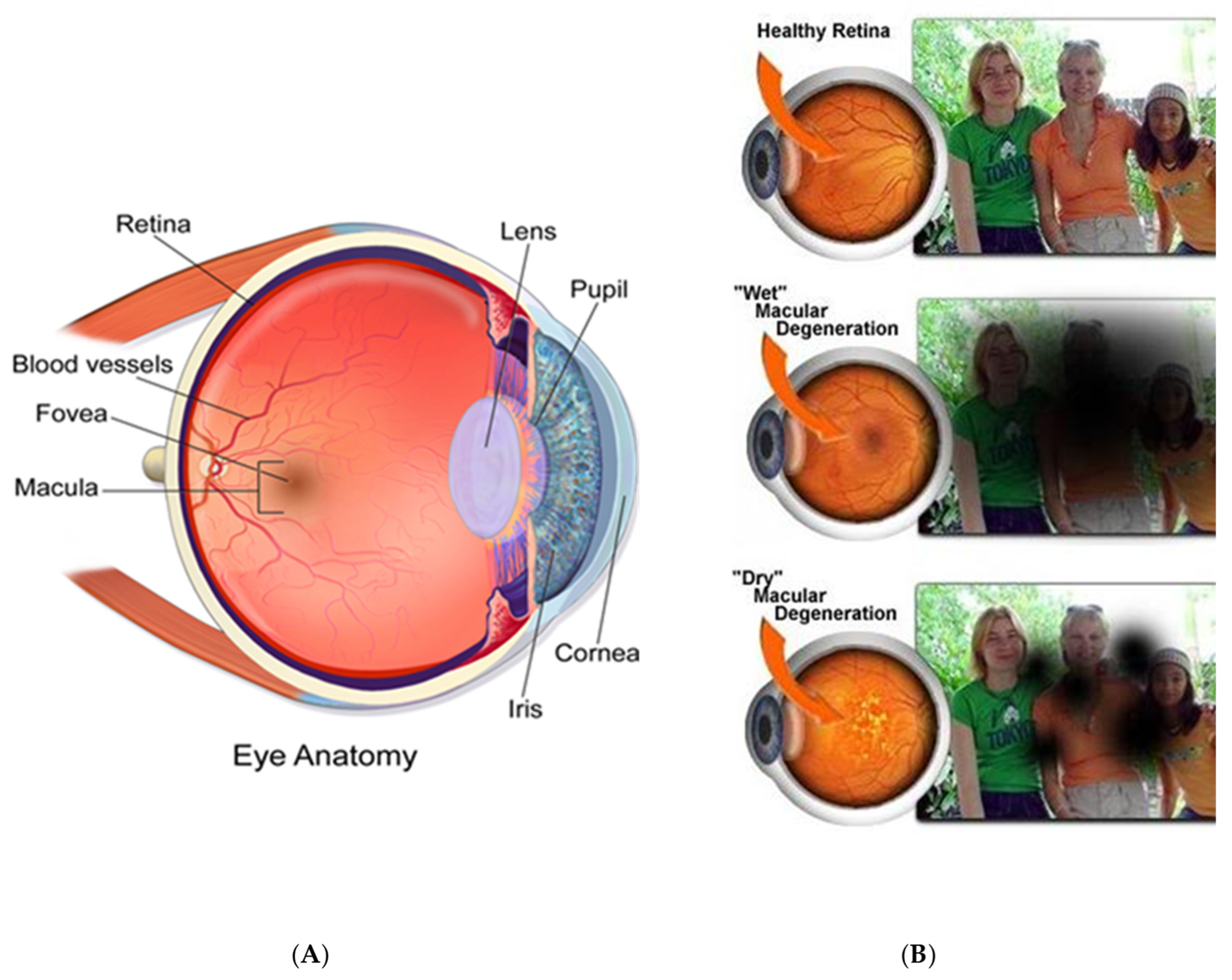

1.1. Ophthalmic Imaging

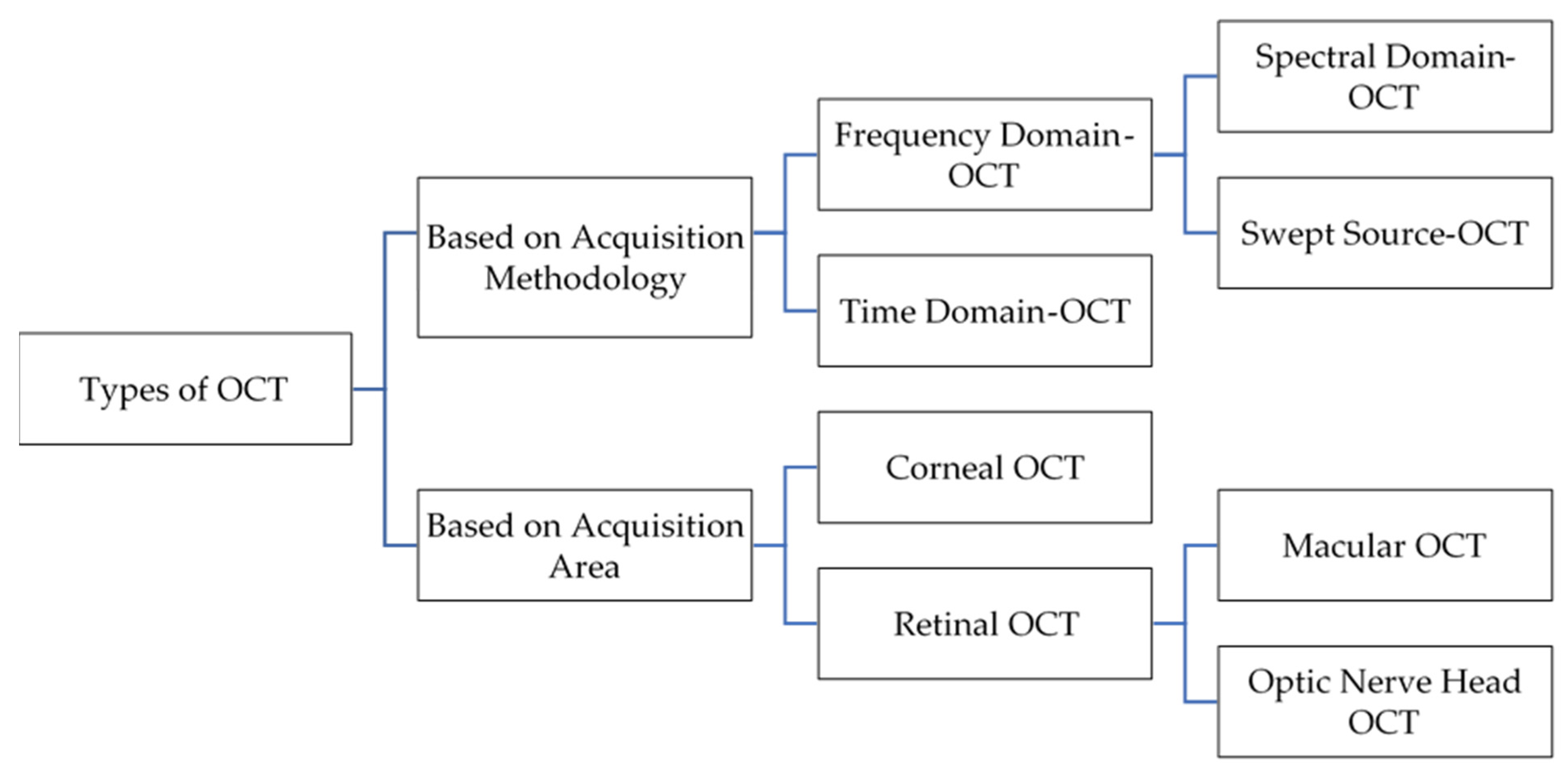

1.2. OCT Types

1.2.1. Time-Domain OCT (TD-OCT)

1.2.2. Fourier-Domain OCT (FD-OCT)

1.2.3. Swept-Source OCT

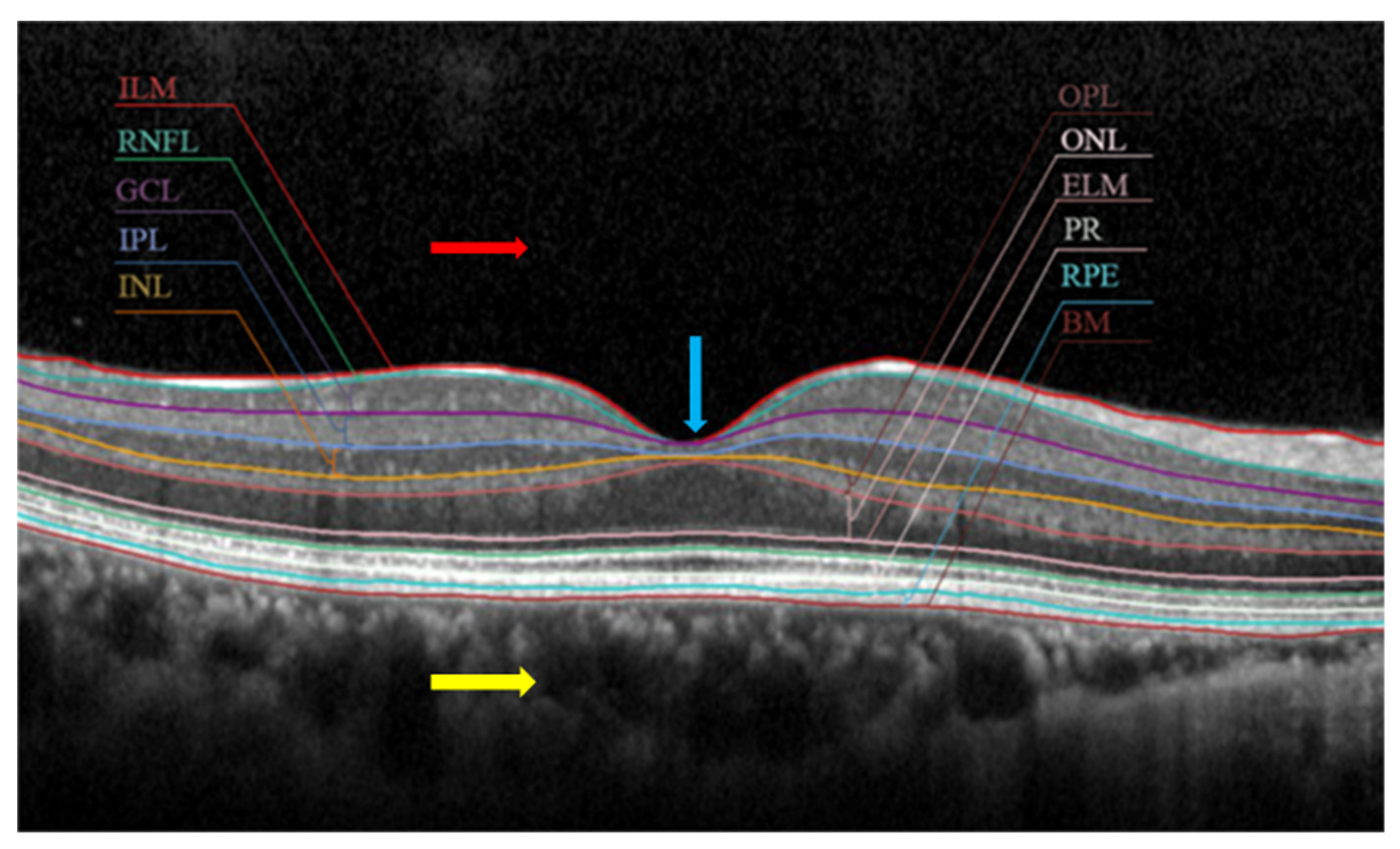

2. Clinical Interpretation of OCT Images with Different Biomarkers

3. Most Common Disease Identification Using OCT Image Analysis

3.1. Glaucoma

3.2. Age-Related Macular Degeneration (ARMD)

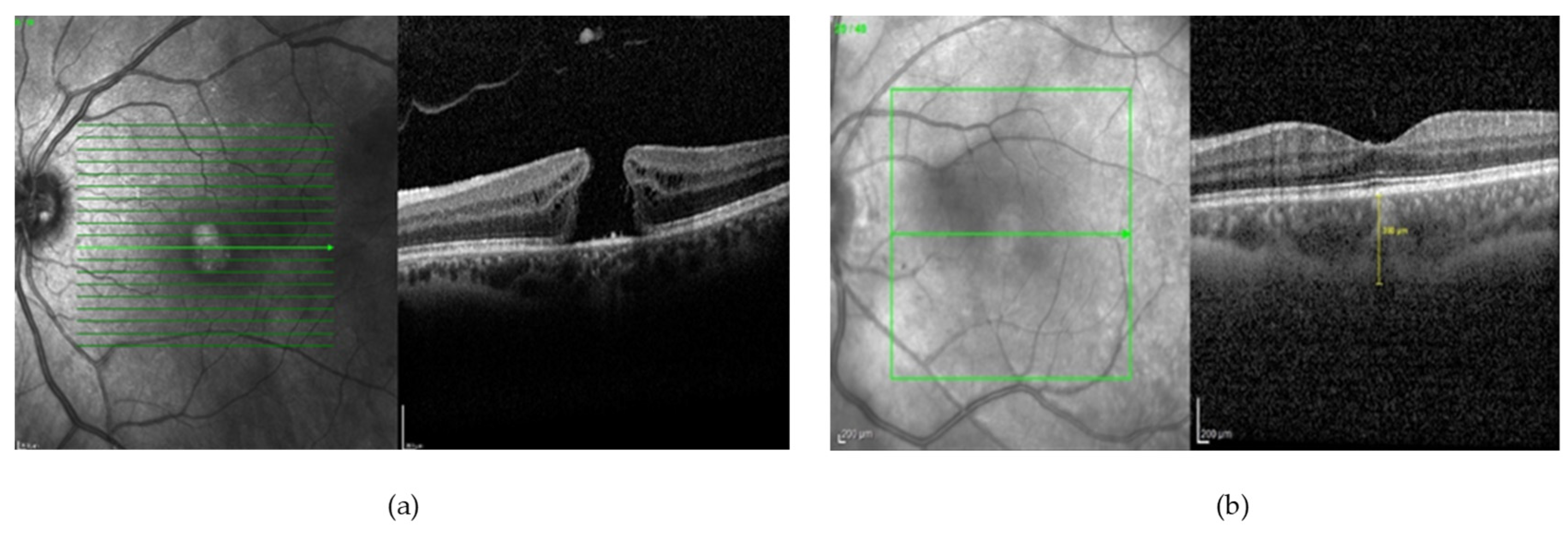

3.3. Macular Oedema

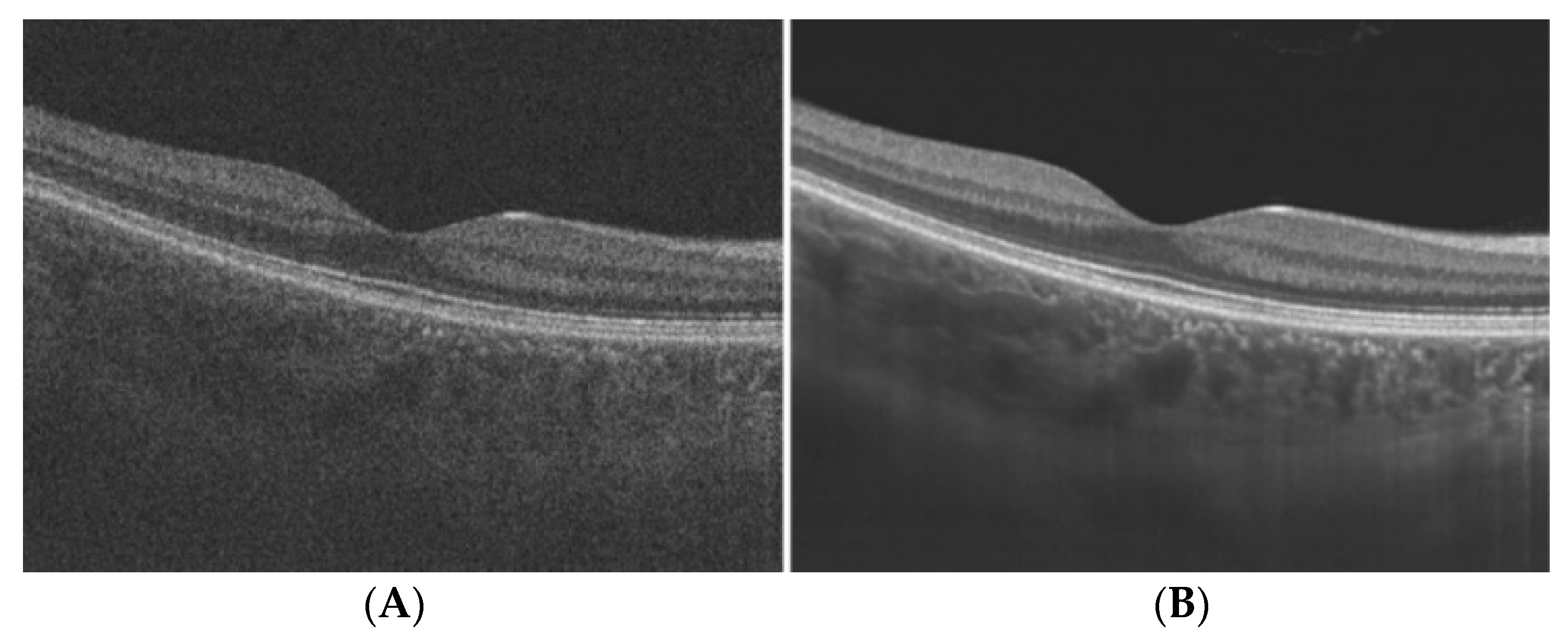

3.4. Diabetic Retinopathy

4. Analysis of Optical Coherence Tomography Images

4.1. Denoising OCT Images

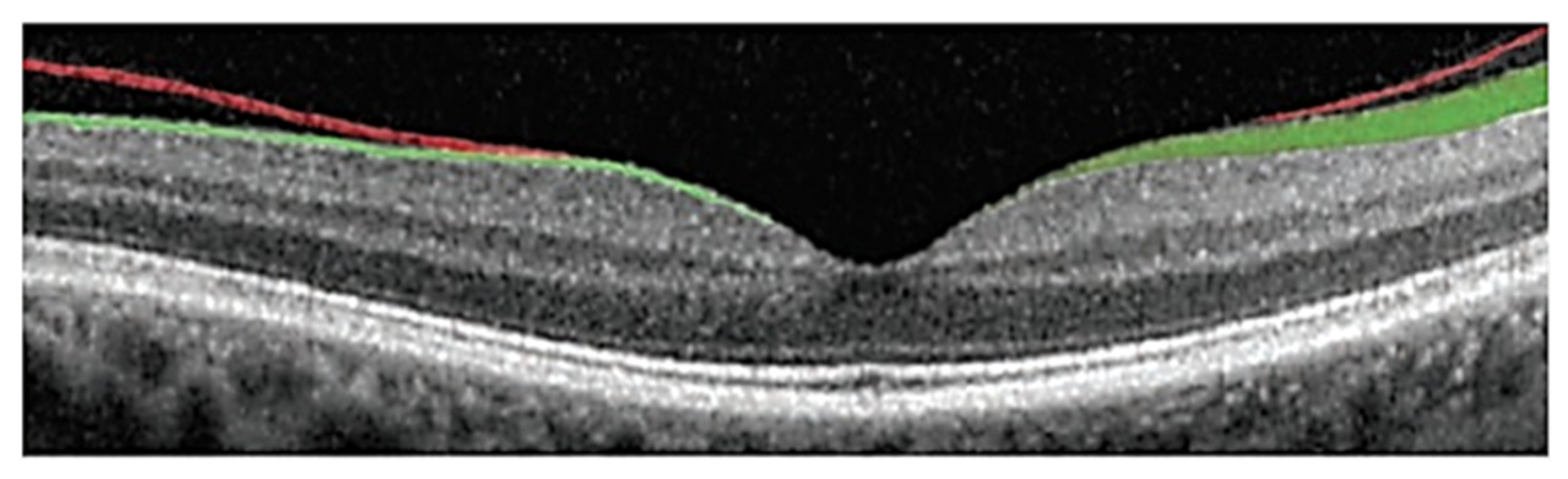

4.2. Segmentation of Subretinal Layers of OCT Images

4.3. Detection of Various Pathologies in OCT Images

4.4. Deep Learning Approach for OCT Image Analysis

5. Publicly Available OCT Dataset

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bogunovic, H.; Venhuizen, F.; Klimscha, S.; Apostolopoulos, S.; Bab-Hadiashar, A.; Bagci, U.; Beg, M.F.; Bekalo, L.; Chen, Q.; Ciller, C.; et al. RETOUCH: The Retinal OCT Fluid Detection and Segmentation Benchmark and Challenge. IEEE Trans. Med. Imaging 2019, 38, 1858–1874.

- Sousa, J.A.; Paiva, A.; Silva, A.; Almeida, J.D.; Braz, G.; Diniz, J.O.; Figueredo, W.K.; Gattass, M. Automatic Segmentation of Retinal Layers in OCT Images with Intermediate Age-Related Macular Degeneration Using U-Net and DexiNed. PLoS ONE 2021, 16, 1–16.

- Shen, S.Y.; Wong, T.Y.; Foster, P.J.; Loo, J.L.; Rosman, M.; Loon, S.C.; Wong, W.L.; Saw, S.M.; Aung, T. The Prevalence and Types of Glaucoma in Malay People: The Singapore Malay Eye Study. Investig. Ophthalmol. Vis. Sci. 2008, 49, 3846–3851.

- Abràmoff, M.D.; Garvin, M.K.; Sonka, M. Retinal Imaging and Image Analysis. IEEE Rev. Biomed. Eng. 2010, 3, 169–208.

- Hee, M.R.; Izatt, J.A.; Swanson, E.A.; Huang, D.; Schuman, J.S.; Lin, C.P.; Puliafito, C.A.; Fujimoto, J.G. Optical Coherence Tomography of the Human Retina. Arch. Ophthalmol. 1995, 113, 325–332.

- Popescu, D.P.; Choo-Smith, L.P.; Flueraru, C.; Mao, Y.; Chang, S.; Disano, J.; Sherif, S.; Sowa, M.G. Optical Coherence Tomography: Fundamental Principles, Instrumental Designs and Biomedical Applications. Biophys. Rev. 2011, 3, 155–169.

- Aumann, S.; Donner, S.; Fischer, J.; Müller, F. Optical Coherence Tomography (OCT): Principle and Technical Realization. In High Resolution Imaging in Microscopy and Ophthalmology; Bille, J.F., Ed.; Springer International Publishing: Cham, Switzerland, 2019; pp. 59–85. ISBN 9783030166373.

- Prati, F.; Mallus, M.T.; Imola, F.; Albertucci, M. Optical Coherence Tomography (OCT). Catheter. Cardiovasc. Interv. A Knowl.-Based Approach 2013, 254, 363–375.

- Rabbani, H.; Kafieh, R.; Amini, Z. Optical Coherence Tomography Image Analysis. In Wiley Encyclopedia of Electrical and Electronics Engineering; Webster, J.G., Ed.; 2016; ISBN 047134608X.

- Singh, L.K.; Pooja; Garg, H.; Khanna, M. An Artificial Intelligence-Based Smart System for Early Glaucoma Recognition Using OCT Images. Int. J. E-Health Med. Commun. 2021, 12, 32–59.

- Li, A.; Thompson, A.C.; Asrani, S. Impact of Artifacts From Optical Coherence Tomography Retinal Nerve Fiber Layer and Macula Scans on Detection of Glaucoma Progression. Am. J. Ophthalmol. 2021, 221, 235–245.

- Medeiros, F.A.; Zangwill, L.M.; Bowd, C.; Vessani, R.M.; Susanna, R.; Weinreb, R.N. Evaluation of Retinal Nerve Fiber Layer, Optic Nerve Head, and Macular Thickness Measurements for Glaucoma Detection Using Optical Coherence Tomography. Am. J. Ophthalmol. 2005, 139, 44–55.

- Chan, E.W.; Liao, J.; Wong, R.; Loon, S.C.; Aung, T.; Wong, T.Y.; Cheng, C.Y. Diagnostic Performance of the ISNT Rule for Glaucoma Based on the Heidelberg Retinal Tomograph. Transl. Vis. Sci. Technol. 2013, 2, 2.

- Xu, J.; Ishikawa, H.; Wollstein, G.; Bilonick, R.A.; Folio, L.S.; Nadler, Z.; Kagemann, L.; Schuman, J.S. Three-Dimensional Spectral-Domain Optical Coherence Tomography Data Analysis for Glaucoma Detection. PLoS ONE 2013, 8, e55476.

- Vermeer, K.A.; van der Schoot, J.; Lemij, H.G.; de Boer, J.F. Automated Segmentation by Pixel Classification of Retinal Layers in Ophthalmic OCT Images. Biomed. Opt. Express 2011, 2, 1743.

- Kral, J.; Lestak, J.; Nutterova, E. OCT Angiography, RNFL and the Visual Field at Different Values of Intraocular Pressure. Biomed. Rep. 2022, 16, 1–5.

- Khalid, S.; Akram, M.U.; Hassan, T.; Jameel, A.; Khalil, T. Automated Segmentation and Quantification of Drusen in Fundus and Optical Coherence Tomography Images for Detection of ARMD. J. Digit. Imaging 2018, 31, 464–476.

- Tvenning, A.-O.; Rikstad Hanssen, S.; Austeng, D.; Sund Morken, T. Deep Learning Identify Retinal Nerve Fibre and Choroid Layers as Markers of Age-Related Macular Degeneration in the Classification of Macular Spectral-Domain Optical Coherence Tomography Volumes. Acta Ophthalmol. 2022, 100, 937–945.

- N, G.G.; Saikumar, B.; Roychowdhury, S.; Kothari, A.R.; Rajan, J. Depthwise Separable Convolutional Neural Network Model for Intra-Retinal Cyst Segmentation. In Proceedings of the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; ISBN 9781538613115.

- Aziza, E.Z.; Mohamed El Amine, L.; Mohamed, M.; Abdelhafid, B. Decision Tree CART Algorithm for Diabetic Retinopathy Classification. In Proceedings of the 2019 6th International Conference on Image and Signal Processing and their Applications (ISPA), Mostaganem, Algeria, 24–25 November 2019; pp. 1–5.

- Bilal, A.; Sun, G.; Li, Y.; Mazhar, S.; Khan, A.Q. Diabetic Retinopathy Detection and Classification Using Mixed Models for a Disease Grading Database. IEEE Access 2021, 9, 23544–23553.

- Elgafi, M.; Sharafeldeen, A.; Elnakib, A.; Elgarayhi, A.; Alghamdi, N.S.; Sallah, M.; El-Baz, A. Detection of Diabetic Retinopathy Using Extracted 3D Features from OCT Images. Sensors 2022, 22, 7833.

- Mishra, S.S.; Mandal, B.; Puhan, N.B. MacularNet: Towards Fully Automated Attention-Based Deep CNN for Macular Disease Classification. SN Comput. Sci. 2022, 3, 1–16.

- Qiu, B.; Huang, Z.; Liu, X.; Meng, X.; You, Y.; Liu, G.; Yang, K.; Maier, A.; Ren, Q.; Lu, Y. Noise Reduction in Optical Coherence Tomography Images Using a Deep Neural Network with Perceptually-Sensitive Loss Function. Biomed. Opt. Express 2020, 11, 817.

- Iftimia, N.; Bouma, B.E.; Tearney, G.J. Speckle Reduction in Optical Coherence Tomography by “Path Length Encoded” Angular Compounding. J. Biomed. Opt. 2003, 8, 260.

- Kennedy, B.F.; Hillman, T.R.; Curatolo, A.; Sampson, D.D. Speckle Reduction in Optical Coherence Tomography by Strain Compounding. Opt. Lett. 2010, 35, 2445.

- Cheng, W.; Qian, J.; Cao, Z.; Chen, X.; Mo, J. Dual-Beam Angular Compounding for Speckle Reduction in Optical Coherence Tomography. In Proceedings of the Optical Coherence Tomography and Coherence Domain Optical Methods in Biomedicine XXI, SPIE, San Francisco, CA, USA, 28 January–2 February 2017; Volume 10053, p. 100532Z.

- Dong, W.; Zhang, L.; Shi, G.; Li, X. Nonlocally Centralized Sparse Representation for Image Restoration. IEEE Trans. Image Process. 2013, 22, 1620–1630.

- Dong, W.; Zhang, L.; Shi, G.; Wu, X. Image Deblurring and Super-Resolution by Adaptive Sparse Domain Selection and Adaptive Regularization. IEEE Trans. Image Process. 2011, 20, 1838–1857.

- Abbasi, A.; Monadjemi, A. Optical Coherence Tomography Retinal Image Reconstruction via Nonlocal Weighted Sparse Representation. J. Biomed. Opt. 2018, 23, 1.

- Qiu, B.; You, Y.; Huang, Z.; Meng, X.; Jiang, Z.; Zhou, C.; Liu, G.; Yang, K.; Ren, Q.; Lu, Y. N2NSR-OCT: Simultaneous Denoising and Super-Resolution in Optical Coherence Tomography Images Using Semisupervised Deep Learning. J. Biophotonics 2021, 14, 1–15.

- Wang, M.; Zhu, W.; Yu, K.; Chen, Z.; Shi, F.; Zhou, Y.; Ma, Y.; Peng, Y.; Bao, D.; Feng, S.; et al. Semi-Supervised Capsule CGAN for Speckle Noise Reduction in Retinal OCT Images. IEEE Trans. Med. Imaging 2021, 40, 1168–1183.

- Kande, N.A.; Dakhane, R.; Dukkipati, A.; Yalavarthy, P.K. SiameseGAN: A Generative Model for Denoising of Spectral Domain Optical Coherence Tomography Images. IEEE Trans. Med. Imaging 2021, 40, 180–192.

- Scott, A.W.; Farsiu, S.; Enyedi, L.B.; Wallace, D.K.; Toth, C.A. Imaging the Infant Retina with a Hand-Held Spectral-Domain Optical Coherence Tomography Device. Am. J. Ophthalmol. 2009, 147, 364–373.e2.

- Liu, H.; Lin, S.; Ye, C.; Yu, D.; Qin, J.; An, L. Using a Dual-Tree Complex Wavelet Transform for Denoising an Optical Coherence Tomography Angiography Blood Vessel Image. OSA Contin. 2020, 3, 2630.

- Yan, Q.; Chen, B.; Hu, Y.; Cheng, J.; Gong, Y.; Yang, J.; Liu, J.; Zhao, Y. Speckle Reduction of OCT via Super Resolution Reconstruction and Its Application on Retinal Layer Segmentation. Artif. Intell. Med. 2020, 106, 101871.

- Huang, Y.; Zhang, N.; Hao, Q. Real-Time Noise Reduction Based on Ground Truth Free Deep Learning for Optical Coherence Tomography. Biomed. Opt. Express 2021, 12, 2027.

- Yazdanpanah, A.; Hamarneh, G.; Smith, B.; Sarunic, M. Intra-Retinal Layer Segmentation in Optical Coherence Tomography Using an Active Contour Approach. In Medical Image Computing and Computer-Assisted Intervention; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5762, pp. 649–656.

- Abràmoff, M.D.; Lee, K.; Niemeijer, M.; Alward, W.L.M.; Greenlee, E.C.; Garvin, M.K.; Sonka, M.; Kwon, Y.H. Automated Segmentation of the Cup and Rim from Spectral Domain OCT of the Optic Nerve Head. Investig. Ophthalmol. Vis. Sci. 2009, 50, 5778–5784.

- Yang, Q.; Reisman, C.A.; Wang, Z.; Fukuma, Y.; Hangai, M.; Yoshimura, N.; Tomidokoro, A.; Araie, M.; Raza, A.S.; Hood, D.C.; et al. Automated Layer Segmentation of Macular OCT Images Using Dual-Scale Gradient Information. Opt. Express 2010, 18, 21293.

- Sappa, L.B.; Okuwobi, I.P.; Li, M.; Zhang, Y.; Xie, S.; Yuan, S.; Chen, Q. RetFluidNet: Retinal Fluid Segmentation for SD-OCT Images Using Convolutional Neural Network. J. Digit. Imaging 2021, 34, 691–704.

- Huang, Y.; Danis, R.P.; Pak, J.W.; Luo, S.; White, J.; Zhang, X.; Narkar, A.; Domalpally, A. Development of a Semi-Automatic Segmentation Method for Retinal OCT Images Tested in Patients with Diabetic Macular Edema. PLoS ONE 2013, 8, 8–13.

- Quellec, G.; Lee, K.; Dolejsi, M.; Garvin, M.K.; Abràmoff, M.D.; Sonka, M. Three-Dimensional Analysis of Retinal Layer Texture: Identification of Fluid-Filled Regions in SD-OCT of the Macula. IEEE Trans. Med. Imaging 2010, 29, 1321–1330.

- Mishra, A.; Wong, A.; Bizheva, K.; Clausi, D.A. Intra-Retinal Layer Segmentation in Optical Coherence Tomography Images. Opt. Express 2009, 17, 23719.

- Baroni, M.; Fortunato, P.; La Torre, A. Towards Quantitative Analysis of Retinal Features in Optical Coherence Tomography. Med. Eng. Phys. 2007, 29, 432–441.

- Fuller, A.R.; Zawadzki, R.J.; Choi, S.; Wiley, D.F.; Werner, J.S.; Hamann, B. Segmentation of Three-Dimensional Retinal Image Data. IEEE Trans. Vis. Comput. Graph. 2007, 13, 1719–1726.

- Morales, S.; Colomer, A.; Mossi, J.M.; del Amor, R.; Woldbye, D.; Klemp, K.; Larsen, M.; Naranjo, V. Retinal Layer Segmentation in Rodent OCT Images: Local Intensity Profiles & Fully Convolutional Neural Networks. Comput. Methods Programs Biomed. 2021, 198.

- Fernández, D.C. Delineating Fluid-Filled Region Boundaries in Optical Coherence Tomography Images of the Retina. IEEE Trans. Med. Imaging 2005, 24, 929–945.

- Zheng, Y.; Sahni, J.; Campa, C.; Stangos, A.N.; Raj, A.; Harding, S.P. Computerized Assessment of Intraretinal and Subretinal Fluid Regions in Spectral-Domain Optical Coherence Tomography Images of the Retina. Am. J. Ophthalmol. 2013, 155, 277–286.e1.

- Xu, X.; Lee, K.; Zhang, L.; Sonka, M.; Abramoff, M.D. Stratified Sampling Voxel Classification for Segmentation of Intraretinal and Subretinal Fluid in Longitudinal Clinical OCT Data. IEEE Trans. Med. Imaging 2015, 34, 1616–1623.

- Gopinath, K.; Sivaswamy, J. Segmentation of Retinal Cysts from Optical Coherence Tomography Volumes Via Selective Enhancement. IEEE J. Biomed. Health Inform. 2019, 23, 273–282.

- Derradji, Y.; Mosinska, A.; Apostolopoulos, S.; Ciller, C.; De Zanet, S.; Mantel, I. Fully-Automated Atrophy Segmentation in Dry Age-Related Macular Degeneration in Optical Coherence Tomography. Sci. Rep. 2021, 11, 154–166.

- Guo, Y.; Hormel, T.T.; Xiong, H.; Wang, J.; Hwang, T.S.; Jia, Y. Automated Segmentation of Retinal Fluid Volumes from Structural and Angiographic Optical Coherence Tomography Using Deep Learning. Transl. Vis. Sci. Technol. 2020, 9, 1–12.

- Wilson, M.; Chopra, R.; Wilson, M.Z.; Cooper, C.; MacWilliams, P.; Liu, Y.; Wulczyn, E.; Florea, D.; Hughes, C.O.; Karthikesalingam, A.; et al. Validation and Clinical Applicability of Whole-Volume Automated Segmentation of Optical Coherence Tomography in Retinal Disease Using Deep Learning. JAMA Ophthalmol. 2021, 139, 964–973.

- Girish, G.N.; Thakur, B.; Chowdhury, S.R.; Kothari, A.R.; Rajan, J. Segmentation of Intra-Retinal Cysts from Optical Coherence Tomography Images Using a Fully Convolutional Neural Network Model. IEEE J. Biomed. Health Inform. 2019, 23, 296–304.

- Venhuizen, F.G.; van Ginneken, B.; Liefers, B.; van Asten, F.; Schreur, V.; Fauser, S.; Hoyng, C.; Theelen, T.; Sánchez, C.I. Deep Learning Approach for the Detection and Quantification of Intraretinal Cystoid Fluid in Multivendor Optical Coherence Tomography. Biomed. Opt. Express 2018, 9, 1545–1569.

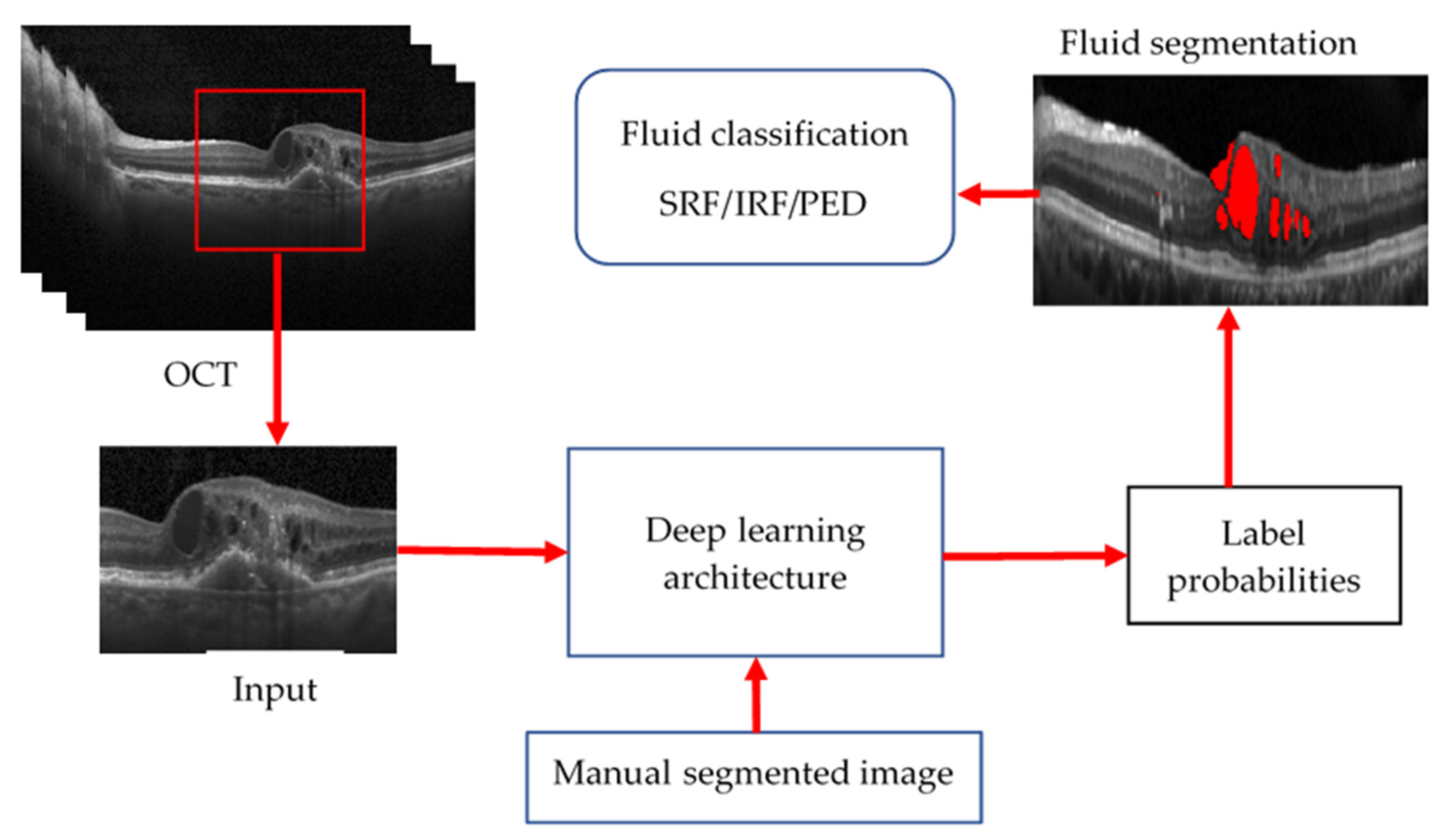

- Schlegl, T.; Waldstein, S.M.; Bogunovic, H.; Endstraßer, F.; Sadeghipour, A.; Philip, A.M.; Podkowinski, D.; Gerendas, B.S.; Langs, G.; Schmidt-Erfurth, U. Fully Automated Detection and Quantification of Macular Fluid in OCT Using Deep Learning. Ophthalmology 2018, 125, 549–558.

- Chiu, S.J.; Allingham, M.J.; Mettu, P.S.; Cousins, S.W.; Izatt, J.A.; Farsiu, S. Kernel Regression Based Segmentation of Optical Coherence Tomography Images with Diabetic Macular Edema. Biomed. Opt. Express 2015, 6, 1172.

- Altan, G. DeepOCT: An Explainable Deep Learning Architecture to Analyze Macular Edema on OCT Images. Eng. Sci. Technol. Int. J. 2022, 34, 101091.

- Bhende, M.; Shetty, S.; Parthasarathy, M.K.; Ramya, S. Optical Coherence Tomography: A Guide to Interpretation of Common Macular Diseases. Indian J. Ophthalmol. 2018, 66, 20–35.

- Farshad, A.; Yeganeh, Y.; Gehlbach, P.; Navab, N. Y-Net: A Spatiospectral Dual-Encoder Network for Medical Image Segmentation; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2022; Volume 13432, pp. 582–592.

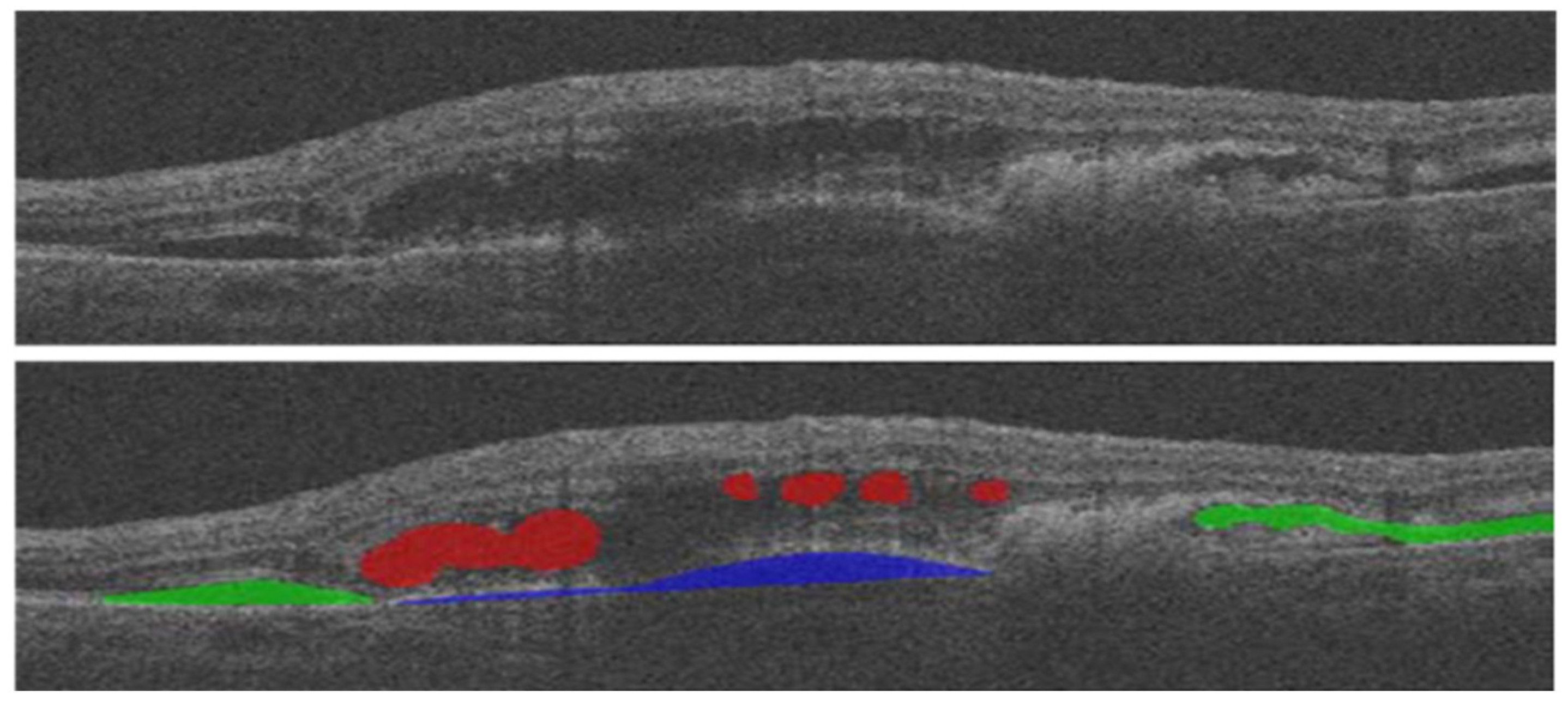

- Hassan, B.; Qin, S.; Ahmed, R.; Hassan, T.; Taguri, A.H.; Hashmi, S.; Werghi, N. Deep Learning Based Joint Segmentation and Characterization of Multi-Class Retinal Fluid Lesions on OCT Scans for Clinical Use in Anti-VEGF Therapy. Comput. Biol. Med. 2021, 136, 104727.

- Wang, K.; Zhang, X.; Zhang, X.; Lu, Y.; Huang, S.; Yang, D. EANet: Iterative Edge Attention Network for Medical Image Segmentation. Pattern Recognit. 2022, 127, 108636.

- Medhi, J.P.; Nirmala, S.R.; Choudhury, S.; Dandapat, S. Improved Detection and Analysis of Macular Edema Using Modified Guided Image Filtering with Modified Level Set Spatial Fuzzy Clustering on Optical Coherence Tomography Images. Biomed. Signal Process. Control 2023, 79, 104149.

- Wu, M.; Chen, Q.; He, X.J.; Li, P.; Fan, W.; Yuan, S.T.; Park, H. Automatic Subretinal Fluid Segmentation of Retinal SD-OCT Images with Neurosensory Retinal Detachment Guided by Enface Fundus Imaging. IEEE Trans. Biomed. Eng. 2018, 65, 87–95.

- Ai, Z.; Huang, X.; Feng, J.; Wang, H.; Tao, Y.; Zeng, F.; Lu, Y. FN-OCT: Disease Detection Algorithm for Retinal Optical Coherence Tomography Based on a Fusion Network. Front. Neuroinform. 2022, 16, 1–17.

- Wang, C.; Gan, M. Wavelet Attention Network for the Segmentation of Layer Structures on OCT Images. Biomed. Opt. Express 2022, 13, 6167.

- Ovreiu, S.; Cristescu, I.; Balta, F.; Ovreiu, E. An Exploratory Study for Glaucoma Detection Using Densely Connected Neural Networks. In Proceedings of the 2020 International Conference on e-Health and Bioengineering (EHB), Iasi, Romania, 29–30 October 2020; pp. 4–7.

- Chen, Z.; Li, D.; Shen, H.; Mo, H.; Zeng, Z.; Wei, H. Automated Segmentation of Fluid Regions in Optical Coherence Tomography B-Scan Images of Age-Related Macular Degeneration. Opt. Laser Technol. 2020, 122, 105830.

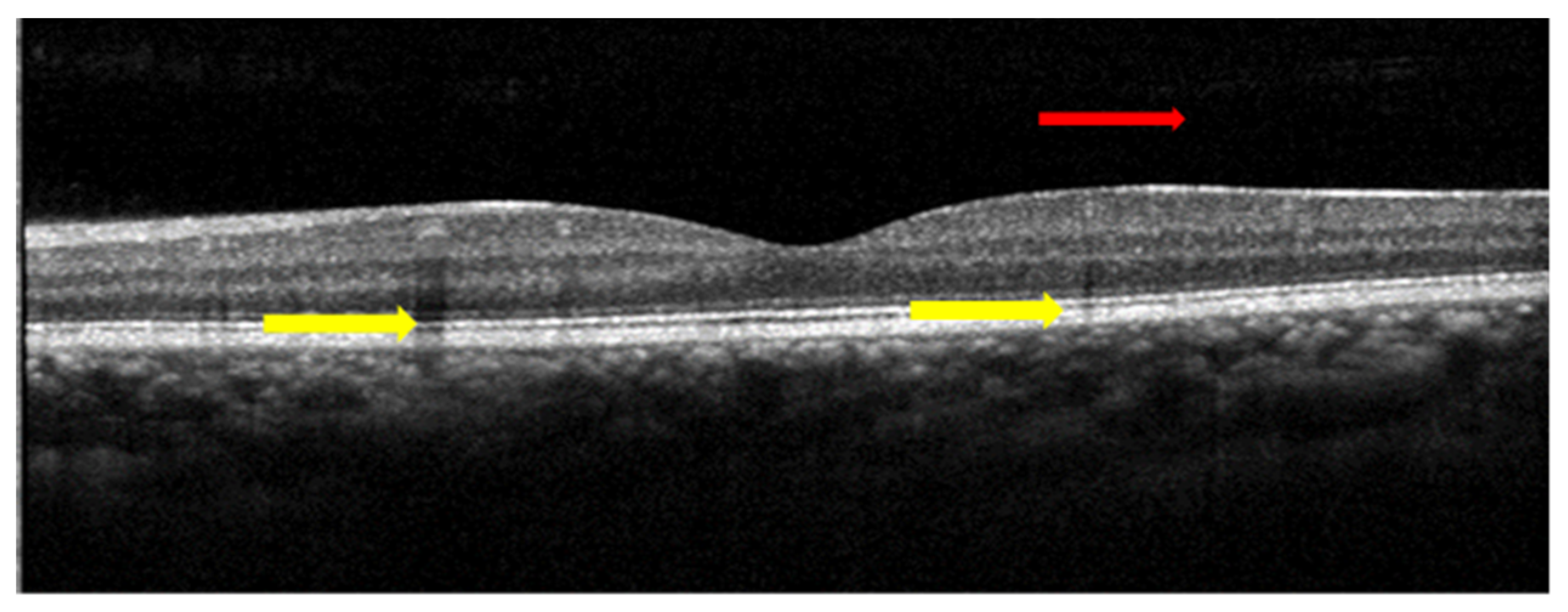

| Biomarkers | Association of the Biomarkers with the Eye Disease |

|---|---|

| Layer thickness | Changes in the thickness of the retina and its layers are characteristic of many diseases, such as glaucoma and age-related macular degeneration (AMD). For example, a glaucoma patient has 20% lower retinal nerve fibre layer (RNFL) thickness than a normal patient. |

| Inner retinal lesion | A characteristic finding in various stages of diabetic macular oedema (DME) that is a key risk factor for developing more advanced stages of DME. |

| Drusen | A characteristic finding of the early stages of AMD that is a key risk factor for the development of more advanced stages. |

| Cup–disc ratio | A cup–disc ratio of more than 0.5 is a risk sign of glaucoma. |

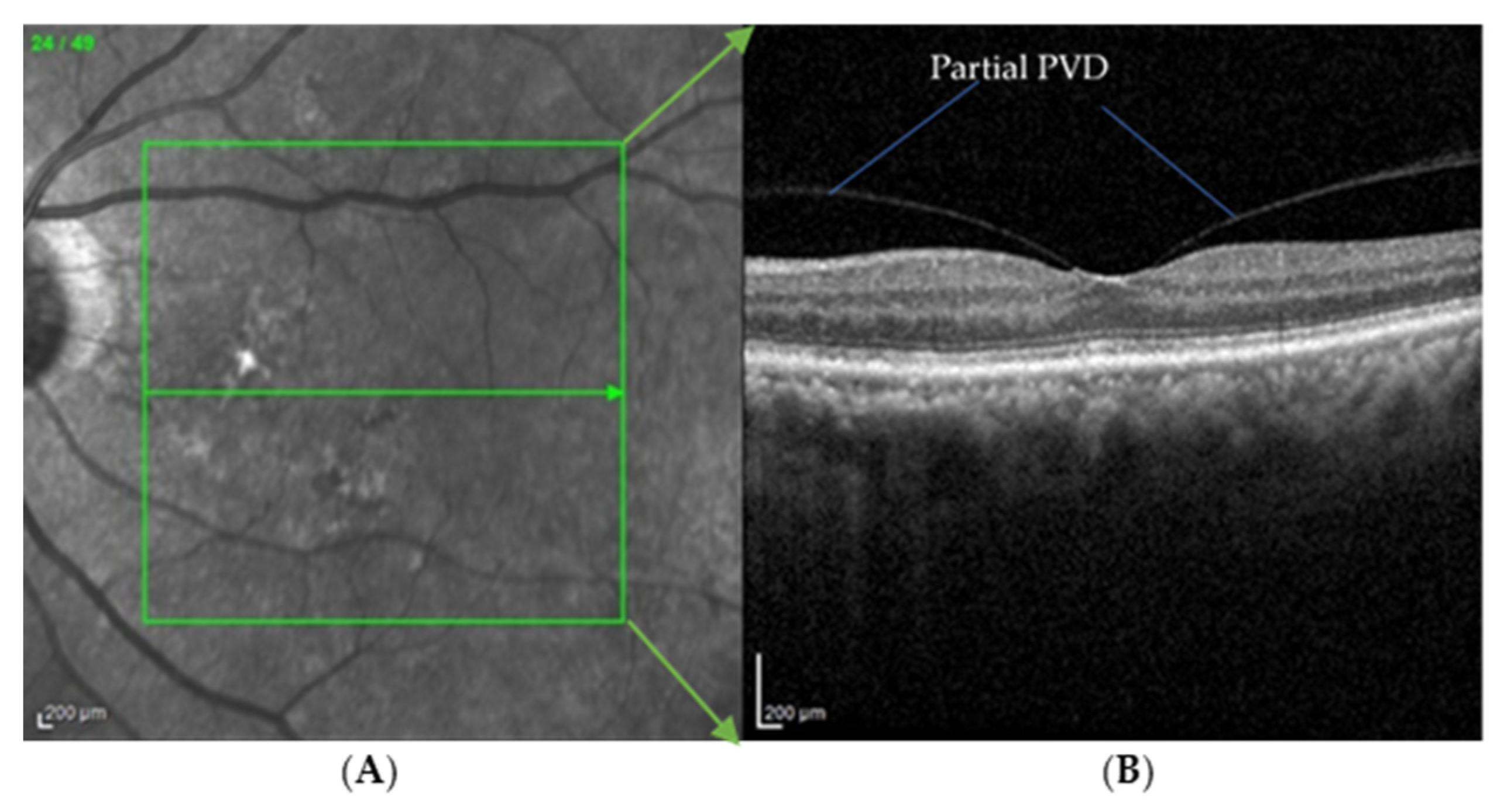

| PVD | An early sign of macular 0edema and lamellar macular hole |
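Two of the biomarkers in the table above reduce to simple arithmetic, which can be sketched as follows. This is an illustrative sketch only: the function names, the millimetre and micrometre inputs, and the example values are hypothetical and do not come from the source; only the 0.5 cup–disc threshold and the 20% RNFL figure are taken from the table.

```python
def cup_disc_ratio(cup_diameter, disc_diameter):
    """Ratio of optic cup diameter to optic disc diameter (same units)."""
    if disc_diameter <= 0:
        raise ValueError("disc_diameter must be positive")
    return cup_diameter / disc_diameter


def rnfl_thinning_percent(measured_um, reference_um):
    """Percentage by which a measured RNFL thickness is below a reference."""
    if reference_um <= 0:
        raise ValueError("reference_um must be positive")
    return 100.0 * (reference_um - measured_um) / reference_um


# Hypothetical measurements, chosen only to exercise the two formulas.
ratio = cup_disc_ratio(cup_diameter=0.9, disc_diameter=1.5)
thinning = rnfl_thinning_percent(measured_um=80.0, reference_um=100.0)

# The table lists a cup-disc ratio above 0.5 as a risk sign of glaucoma.
exceeds_table_threshold = ratio > 0.5
print(f"cup-disc ratio = {ratio:.2f}, above 0.5: {exceeds_table_threshold}")
print(f"RNFL thinning = {thinning:.1f}%")
```

In practice the cup and disc boundaries and the RNFL thickness would come from a segmentation step, and the choice of reference thickness is itself an assumption that a real pipeline would need to justify.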

| Pre-Processing Methods | Researchers | Key Point | Evaluation Parameters |

|---|---|---|---|

| Deep learning (U-Net, SRResNet, and AC-SRResNet) | Y. Huang et al. [37] | This method modifies the existing combination of U-Net and the Super-Resolution Residual Network (SRResNet) by adding asymmetric convolution. The evaluation parameters used in this paper were signal-to-noise ratio, contrast-to-noise ratio, and edge preservation index. | SNR (dB): U-Net 19.36; SRResNet 20.11; AC-SRResNet 22.15 |

| SiameseGAN | N.A. Kande et al. [33] | This model combines a Siamese network module with a generative adversarial network, which helps generate denoised images closer to the ground-truth image. | Mean PSNR: 28.25 dB |

| Semi-Supervised (N2NSR-OCT) | B. Qiu et al. [31] | This paper utilises up- and down-sampling networks, consisting of a modified U-Net and DPBN, to obtain a super-resolution image. | PSNR: 20.7491 dB; RMSE: 0.0956 dB; MS-SSIM: 0.8205 |

| Semi-Supervised Capsule cGAN | M. Wang et al. [32] | This paper addresses the issue of speckle noise with a semi-supervised learning-based algorithm. A capsule conditional generative adversarial network is used to construct the learning system, and the lost structural information is recovered by using a semi-supervised loss function. | SNR: 59.01 dB |

| None | Yazdanpanah A. [38], Abramoff M.D. [39], Yang Q. [40], L.B. Sappa et al. [41] | These researchers did not use any pre-processing algorithm for the OCT image analysis. | DSC: 0.85 Correlation C/D: 0.93 |

| 2D linear smoothing | Huang Y. et al. [42] | This paper uses a 3 × 3 pixel boxcar averaging filter to reduce speckle noise. | Avg. mean: 0.51 SD: 0.49 |

| Mean filter | J. Xu et al. [14] | In this paper, the authors investigated the possibility of variable-size superpixel analysis for early detection of glaucoma. The ncut algorithm is used to map variable-size superpixels on a 2D feature map by grouping similar neighbouring pixels. | AUC: 0.855 |

| Wavelet shrinkage | Quellec G. et al. [43] | This paper describes an automated method for detection of the footprint of symptomatic exudate-associated derangements (SEADs) in SD-OCT scans from AMD patients. An adaptive wavelet transformation is used to extract a 3D textural feature. | AUC: 0.961 |

| Adaptive vector-valued kernel function | Mishra A. et al. [44] | This paper proposes a two-step kernel-based optimisation scheme to identify the location of layers and then refine them to obtain their segmentation. | NA |

| Two 1D filters: (1) median filtering along the A-scans; (2) Gaussian kernel in the longitudinal direction | Baroni M. et al. [45] | The layers are identified after smoothing the OCT image with a median filter along the A-scans and a Gaussian kernel on the B-scan image. | Correlation: 5.13 Entropy: 25.65 |

| SVM approach | Fuller A.R. et al. [46] | This paper uses the SVM approach by considering a voxel’s mean value and variance with various resolution settings to handle the noise and decrease the classification error. | SD: 6.043 |

| Low-pass filtering | Hee M.R. et al. [5] | The peak position of the OCT image was filtered out using a low-pass filter to create similarity in spatial frequency distribution in the axial position. | NA |
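As a rough illustration of the simplest pre-processing entry in the table above (a 3 × 3 boxcar averaging filter) and of PSNR, one of the evaluation parameters it lists, the following sketch uses plain Python lists. It is not the implementation of any cited paper: the border handling, the 8-bit `max_value` default, and the toy image are assumptions made only for this example.

```python
import math


def boxcar_3x3(image):
    """Apply a 3 x 3 mean (boxcar) filter to a 2D list of numbers.

    Border pixels are averaged over the neighbours that exist; this edge
    handling is an assumption of the sketch, not taken from the source.
    """
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total, count = 0.0, 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        total += image[rr][cc]
                        count += 1
            out[r][c] = total / count
    return out


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    n = len(reference) * len(reference[0])
    mse = sum(
        (a - b) ** 2
        for ref_row, test_row in zip(reference, test)
        for a, b in zip(ref_row, test_row)
    ) / n
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example: a flat 5 x 5 image with a single bright "speckle" pixel.
clean = [[100.0] * 5 for _ in range(5)]
noisy = [row[:] for row in clean]
noisy[2][2] = 190.0
smoothed = boxcar_3x3(noisy)

print(f"PSNR before filtering: {psnr(clean, noisy):.2f} dB")
print(f"PSNR after filtering:  {psnr(clean, smoothed):.2f} dB")
```

On this toy input the filter spreads the outlier over its neighbourhood and raises PSNR; on real OCT data the same averaging also blurs layer boundaries, which is the trade-off that metrics such as the edge preservation index in the table are meant to capture.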

| Reference | Fluid Type | Disease | Year | Method | Evaluation Parameter |

|---|---|---|---|---|---|

| Gopinath et al. [51] | IRF | AMD, RVO, DME | 2019 | Selective enhancement of cyst using generalised motion pattern (GMP) and CNN | Mean DC: 0.71 |

| Y. Derradji et al. [52] | Retinal atrophy | AMD | 2021 | CNN and Residual U-shaped Network | Mean Dice score: 0.881 Sensitivity: 0.85 Precision: 0.92 |

| Y. Guo et al. [53] | IRF, SRF, PED | DME | 2020 | ReF-Net | F1 score: 0.892 |

| Marc Wilson et al. [54] | IRF, PED | AMD | 2021 | Various DL Models | DSC: 0.43–0.78 |

| L.B. Sappa et al. [41] | IRF, SRF, PED | AMD | 2021 | RetFluidNet (based on auto-encoder) | Accuracy: IRF 80.05%; PED 92.74%; SRF 95.53% |

| Girish et al. [55] | IRF | AMD, RVO, DME | 2019 | Fully convolutional neural network (FCNN) | Dice rate: 0.71 |

| Venhuizen et al. [56] | IRF | AMD, RVO, DME | 2018 | Cascade of neural networks to form DL algorithm | DC: 0.75 |

| Schlegl et al. [57] | IRF, SRF | AMD, RVO, DME | 2018 | Auto-encoder | AUC: 0.94 |

| RETOUCH [1] | IRF, SRF, PED | AMD, RVO | 2019 | Various models proposed by participants | DSC: 0.7–0.8 |

| Xu et al. [50] | Any | AMD | 2015 | Stratified sampling voxel classification for feature extraction and graph method for layer segmentation | TPR: 96% TNR: 0.16% |

| Chiu et al. [58] | Any | DME | 2015 | Kernel regression method to estimate fluid and graph theory and dynamic programming (GTDP) for boundary segmentation | DC: 0.78 |
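Most rows in the table above report overlap-based scores such as the Dice coefficient (DC, DSC), sensitivity, and precision. The sketch below shows how these are commonly computed from a predicted and a ground-truth binary mask; the function names and the tiny example masks are hypothetical, and individual papers may define or average their scores differently.

```python
import math


def confusion_counts(pred, truth):
    """Count true/false positives and negatives for two binary 2D masks."""
    tp = fp = fn = tn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def dice(pred, truth):
    """Dice coefficient: 2*TP / (2*TP + FP + FN); 1.0 if both masks are empty."""
    tp, fp, fn, _ = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom


def sensitivity(pred, truth):
    """True positive rate: TP / (TP + FN); NaN if there are no positives."""
    tp, _, fn, _ = confusion_counts(pred, truth)
    return math.nan if tp + fn == 0 else tp / (tp + fn)


def precision(pred, truth):
    """Positive predictive value: TP / (TP + FP); NaN if nothing is predicted."""
    tp, fp, _, _ = confusion_counts(pred, truth)
    return math.nan if tp + fp == 0 else tp / (tp + fp)


# Hypothetical 3 x 3 fluid masks (1 = fluid pixel, 0 = background).
truth_mask = [[0, 1, 1],
              [0, 1, 1],
              [0, 0, 0]]
pred_mask = [[0, 1, 1],
             [0, 1, 0],
             [0, 1, 0]]

print("Dice:", dice(pred_mask, truth_mask))
print("Sensitivity:", sensitivity(pred_mask, truth_mask))
print("Precision:", precision(pred_mask, truth_mask))
```

Because Dice ignores true negatives, it is less inflated by the large background area of a B-scan than plain accuracy, which is one reason scores reported as accuracy and as Dice in the table are not directly comparable.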


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Karn, P.K.; Abdulla, W.H. On Machine Learning in Clinical Interpretation of Retinal Diseases Using OCT Images. Bioengineering 2023, 10, 407. https://doi.org/10.3390/bioengineering10040407
