Automatic Segmentation and Quantification of Abdominal Aortic Calcification in Lateral Lumbar Radiographs Based on Deep-Learning-Based Algorithms

Abstract

1. Introduction

2. Materials and Methods

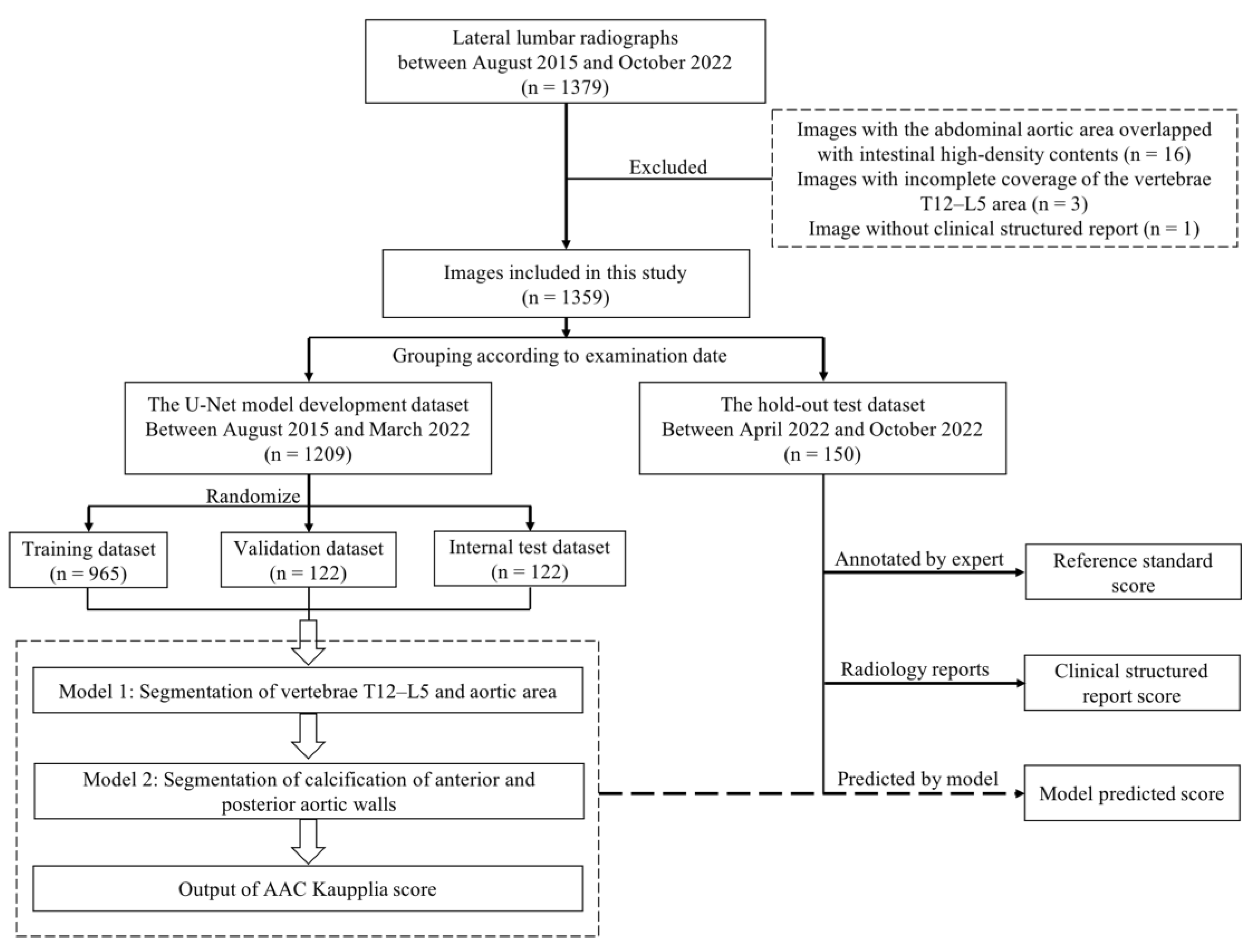

2.1. Data Enrollment

2.2. Image Annotation

2.3. AAC Scoring
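
The AAC scores reported in the tables use an eight-segment scheme. The anterior and posterior aortic walls adjacent to L1–L4 are each scored 0–3, which gives a 0–24 total; the baseline characteristics table groups these totals as mild (0–4), moderate (5–15) and severe (16–24). The sketch below assumes, as in the Kauppila AAC-24 index, that the total is the plain sum of the eight segment scores. The segment keys and function names are hypothetical and are not the authors' implementation.

```python
from typing import Mapping

# Hypothetical keys for the eight scored segments: anterior (A) and
# posterior (P) aortic wall opposite vertebrae L1-L4.
SEGMENTS = [f"L{level}{wall}" for level in range(1, 5) for wall in ("A", "P")]


def total_aac24(scores: Mapping[str, int]) -> int:
    """Sum the eight per-segment scores (each 0-3) into a 0-24 total."""
    missing = [seg for seg in SEGMENTS if seg not in scores]
    if missing:
        raise KeyError(f"missing segment scores: {missing}")
    for seg in SEGMENTS:
        if scores[seg] not in (0, 1, 2, 3):
            raise ValueError(f"{seg} must be scored 0-3, got {scores[seg]!r}")
    return sum(scores[seg] for seg in SEGMENTS)


def aac_severity(total: int) -> str:
    """Map a 0-24 total onto the severity bands used in the baseline table."""
    if not 0 <= total <= 24:
        raise ValueError(f"total must lie in 0-24, got {total}")
    if total <= 4:
        return "mild"      # 0-4
    if total <= 15:
        return "moderate"  # 5-15
    return "severe"        # 16-24


if __name__ == "__main__":
    example = {"L1A": 0, "L1P": 1, "L2A": 2, "L2P": 1,
               "L3A": 3, "L3P": 2, "L4A": 3, "L4P": 3}
    total = total_aac24(example)
    print(total, aac_severity(total))  # 15 moderate
```

Validating each segment before summing ensures that a missing or out-of-range wall score raises an error. Without that check, such a score would silently shift a patient across a severity boundary.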

2.4. Model Development

2.5. Evaluation of the U-Net Model Performance

2.6. Statistical Analysis

3. Results

3.1. Clinical Characteristics

3.2. Performance of U-Net Models in Model Development

3.3. Performance of the U-Net Model in AAC Score Quantification

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Characteristic | Training (n = 965) | Validation (n = 122) | Internal Test (n = 122) | Hold-Out Test (n = 150) | Overall (n = 1359) |
|---|---|---|---|---|---|
| Vendor | | | | | |
| Carestream Health, n (%) | 322 (33.4%) | 41 (33.6%) | 38 (31.1%) | 126 (84.0%) | 527 (38.8%) |
| GE Healthcare, n (%) | 383 (39.7%) | 53 (43.4%) | 47 (38.5%) | 23 (15.3%) | 506 (37.2%) |
| Kodak, n (%) | 190 (19.7%) | 19 (15.6%) | 25 (20.5%) | 0 (0.0%) | 234 (17.2%) |
| Siemens, n (%) | 70 (7.3%) | 9 (7.4%) | 12 (9.8%) | 1 (0.7%) | 92 (6.8%) |
| FOV, mm² | 424 [424, 424] | 424 [404, 424] | 424 [404, 424] | 410 [404, 424] | 424 [404, 424] |
| Tube current, mA | 250 [250, 250] | 500 [250, 789] | 499 [250, 766] | 499 [250, 510] | 498 [250, 630] |
| Tube voltage, kV | 40.0 [26.0, 58.0] | 32.0 [19.0, 45.5] | 35.0 [22.0, 52.0] | 35.5 [22.0, 55.3] | 35.0 [22.0, 54.0] |
| Pixel spacing, mm | 0.14 [0.14, 0.14] | 0.14 [0.14, 0.19] | 0.14 [0.14, 0.19] | 0.15 [0.14, 0.19] | 0.14 [0.14, 0.19] |
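
In the Overall column, each vendor's counts are pooled across the four splits, and the percentage is taken against the pooled total of 1359 radiographs. It is not an average of the per-split percentages. The following small consistency check reproduces that arithmetic from the vendor rows above. The helper names are hypothetical.

```python
# Vendor counts per split, copied from the acquisition table
# (order: training, validation, internal test, hold-out test).
SPLIT_SIZES = [965, 122, 122, 150]
VENDOR_COUNTS = {
    "Carestream Health": [322, 41, 38, 126],
    "GE Healthcare": [383, 53, 47, 23],
    "Kodak": [190, 19, 25, 0],
    "Siemens": [70, 9, 12, 1],
}


def n_pct(n: int, total: int) -> str:
    """Format a count as 'n (x.x%)' with one decimal place."""
    return f"{n} ({100 * n / total:.1f}%)"


def overall_cell(counts: list) -> str:
    """Pool one vendor across splits; the percentage uses the pooled denominator."""
    return n_pct(sum(counts), sum(SPLIT_SIZES))


def columns_add_up() -> bool:
    """Check that the vendor counts in each split sum to that split's size."""
    per_split = [sum(col) for col in zip(*VENDOR_COUNTS.values())]
    return per_split == SPLIT_SIZES


if __name__ == "__main__":
    for vendor, counts in VENDOR_COUNTS.items():
        print(vendor, overall_cell(counts))
    print("columns add up:", columns_add_up())
```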

| Characteristic | Overall (n = 1209) | Training (n = 965) | Validation (n = 122) | Internal Test (n = 122) | p Value |
|---|---|---|---|---|---|
| Sex | | | | | 0.94 |
| Men, n (%) | 646 (53.4%) | 514 (53.3%) | 67 (54.9%) | 65 (53.3%) | |
| Women, n (%) | 563 (46.6%) | 451 (46.7%) | 55 (45.1%) | 57 (46.7%) | |
| Age, years | 55.7 (13.5) | 55.7 (13.3) | 54.8 (13.0) | 56.1 (15.3) | 0.86 |
| Peritoneal dialysis catheter | | | | | 0.41 |
| Yes, n (%) | 355 (29.4%) | 286 (29.6%) | 30 (24.6%) | 39 (32.0%) | |
| No, n (%) | 854 (70.6%) | 679 (70.4%) | 92 (75.4%) | 83 (68.0%) | |
| AAC score | | | | | |
| L1 anterior score | 0 [0, 1] | 0 [0, 1] | 0 [0, 1] | 0 [0, 0] | 0.47 |
| L1 posterior score | 0 [0, 1] | 0 [0, 1] | 0 [0, 1] | 0 [0, 1] | 0.56 |
| L2 anterior score | 0 [0, 2] | 0 [0, 2] | 0 [0, 2] | 0 [0, 2] | 0.75 |
| L2 posterior score | 0 [0, 2] | 0 [0, 2] | 0 [0, 2] | 0 [0, 2] | 0.86 |
| L3 anterior score | 1 [0, 3] | 1 [0, 3] | 1 [0, 3] | 0 [0, 3] | 0.32 |
| L3 posterior score | 0 [0, 2] | 0 [0, 2] | 0 [0, 2] | 0 [0, 2] | 0.25 |
| L4 anterior score | 0 [0, 3] | 1 [0, 3] | 1 [0, 3] | 0 [0, 3] | 0.22 |
| L4 posterior score | 1 [0, 3] | 1 [0, 3] | 1 [0, 3] | 0 [0, 2] | 0.06 |
| Total AAC score | 6 [0, 15] | 6 [0, 15] | 8 [0, 15] | 3 [0, 14] | 0.20 |
| AAC severity | | | | | 0.33 |
| Mild: 0–4, n (%) | 559 (46.2%) | 439 (45.5%) | 53 (43.4%) | 67 (54.9%) | |
| Moderate: 5–15, n (%) | 376 (31.1%) | 302 (31.3%) | 42 (34.4%) | 32 (26.2%) | |
| Severe: 16–24, n (%) | 274 (22.7%) | 224 (23.2%) | 27 (22.1%) | 23 (18.9%) | |
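
The table does not state which test produced the p values for its categorical rows (sex, peritoneal dialysis catheter, AAC severity). A Pearson chi-square test of independence across the three splits reproduces them, but that is our reconstruction and not a method stated in this excerpt. The sketch below is plain Python. It uses the closed-form chi-square upper tail for an even number of degrees of freedom (a 2 × 3 table has 2, a 3 × 3 table has 4), so SciPy is not needed. The function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def pearson_chi2(table: Sequence[Sequence[int]]) -> Tuple[float, int]:
    """Pearson chi-square statistic (no continuity correction) and degrees of freedom."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, dof


def chi2_sf_even_dof(x: float, dof: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with even dof.

    For dof = 2m the survival function has the closed form
    exp(-x/2) * sum_{k=0}^{m-1} (x/2)**k / k!, so no SciPy is needed.
    """
    if dof <= 0 or dof % 2 != 0:
        raise ValueError("closed form only holds for even, positive dof")
    half = x / 2
    term = total = 1.0
    for k in range(1, dof // 2):
        term *= half / k  # builds (x/2)**k / k! incrementally
        total += term
    return math.exp(-half) * total


def chi2_test(table: Sequence[Sequence[int]]) -> float:
    """p value of the chi-square test of independence (even dof only)."""
    stat, dof = pearson_chi2(table)
    return chi2_sf_even_dof(stat, dof)


if __name__ == "__main__":
    # Rows are categories; columns are training, validation, internal test.
    tables = {
        "sex": [[514, 67, 65], [451, 55, 57]],
        "PD catheter": [[286, 30, 39], [679, 92, 83]],
        "AAC severity": [[439, 53, 67], [302, 42, 32], [224, 27, 23]],
    }
    for name, counts in tables.items():
        print(f"{name}: p = {chi2_test(counts):.2f}")
```

For the sex, catheter and severity counts, this returns p ≈ 0.94, 0.41 and 0.33, which matches the table. That agreement suggests, but does not prove, that these p values came from a chi-square test. For odd degrees of freedom, or for routine analysis, `scipy.stats.chi2_contingency` computes the same statistic; it applies Yates' continuity correction only when there is a single degree of freedom.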

| Metric / structure | Overall | Training | Validation | Internal Test |
|---|---|---|---|---|
| DSC | | | | |
| Vertebrae T12–L5 | 0.98 [0.97, 0.98] | 0.98 [0.98, 0.99] | 0.96 [0.92, 0.97] | 0.95 [0.91, 0.97] |
| Aorta | 0.98 [0.96, 0.98] | 0.98 [0.97, 0.98] | 0.94 [0.90, 0.95] | 0.93 [0.89, 0.95] |
| Posterior wall calcification | 0.74 [0.58, 0.82] | 0.77 [0.66, 0.84] | 0.44 [0.14, 0.60] | 0.56 [0.03, 0.65] |
| Anterior wall calcification | 0.72 [0.59, 0.80] | 0.75 [0.66, 0.81] | 0.52 [0.29, 0.64] | 0.53 [0.18, 0.65] |
| VS | | | | |
| Vertebrae T12–L5 | 0.99 [0.99, 1.00] | 0.99 [0.99, 1.00] | 0.98 [0.95, 0.99] | 0.98 [0.94, 0.99] |
| Aorta | 0.99 [0.98, 1.00] | 0.99 [0.99, 1.00] | 0.97 [0.95, 0.99] | 0.97 [0.94, 0.99] |
| Posterior wall calcification | 0.89 [0.79, 0.95] | 0.91 [0.82, 0.95] | 0.81 [0.52, 0.92] | 0.74 [0.52, 0.92] |
| Anterior wall calcification | 0.89 [0.81, 0.95] | 0.90 [0.82, 0.95] | 0.84 [0.66, 0.94] | 0.82 [0.65, 0.91] |
| HD (mm) | | | | |
| Vertebrae T12–L5 | 2.76 [2.04, 6.97] | 2.48 [2.00, 3.35] | 15.7 [5.40, 36.2] | 22.2 [6.23, 34.4] |
| Aorta | 3.64 [2.79, 8.21] | 3.31 [2.68, 4.08] | 16.1 [8.95, 35.4] | 17.4 [8.96, 36.7] |
| Posterior wall calcification | 23.5 [9.20, 49.0] | 19.6 [6.76, 38.6] | 48.2 [26.2, 72.8] | 35.7 [16.3, 74.2] |
| Anterior wall calcification | 20.9 [8.96, 45.8] | 17.5 [7.49, 42.9] | 35.7 [22.5, 51.9] | 33.3 [17.1, 54.8] |
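
The segmentation table reports three per-structure measures: DSC, a dimensionless overlap score; VS, dimensionless; and HD, a boundary distance in millimetres. The NumPy sketch below shows how such values could be computed from a pair of binary masks. It assumes that VS denotes volumetric similarity and that HD is the symmetric (maximum) Hausdorff distance scaled by the pixel spacing. This excerpt does not confirm whether the authors used exactly these definitions, for example rather than a 95th-percentile HD. The default spacing of 0.14 mm is simply the median pixel spacing from the acquisition table.

```python
import numpy as np


def dice(pred: np.ndarray, ref: np.ndarray) -> float:
    """Dice similarity coefficient 2|A & B| / (|A| + |B|) for binary masks."""
    pred, ref = pred.astype(bool), ref.astype(bool)
    denom = int(pred.sum()) + int(ref.sum())
    if denom == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return 2.0 * int(np.logical_and(pred, ref).sum()) / denom


def volumetric_similarity(pred: np.ndarray, ref: np.ndarray) -> float:
    """Assumed VS definition: 1 - abs(|A| - |B|) / (|A| + |B|); ignores overlap."""
    a, b = int(pred.astype(bool).sum()), int(ref.astype(bool).sum())
    if a + b == 0:
        return 1.0
    return 1.0 - abs(a - b) / (a + b)


def hausdorff_mm(pred: np.ndarray, ref: np.ndarray, spacing_mm: float = 0.14) -> float:
    """Symmetric Hausdorff distance between foreground pixels, converted to mm.

    Brute force over all pixel pairs, so only suitable for small masks.
    Assumes square pixels with the given spacing.
    """
    p = np.argwhere(pred.astype(bool)).astype(float)
    r = np.argwhere(ref.astype(bool)).astype(float)
    if len(p) == 0 or len(r) == 0:
        return float("inf")  # undefined when either mask is empty
    # Pairwise Euclidean distances in pixels, shape (len(p), len(r)).
    dists = np.sqrt(((p[:, None, :] - r[None, :, :]) ** 2).sum(axis=-1))
    worst = max(dists.min(axis=1).max(), dists.min(axis=0).max())
    return float(worst) * spacing_mm
```

VS compares only mask sizes, so it can approach 1 even when the masks barely overlap. This is one reason it is usually reported alongside DSC. For full-resolution radiographs, computing HD from a distance transform (for example `scipy.ndimage.distance_transform_edt`) scales far better than the brute-force distance matrix used here.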

| Segment / score | Reference Score | Structured Clinical Report: Score | Structured Clinical Report: Correlation Coefficient | Model Prediction: Score | Model Prediction: Correlation Coefficient |
|---|---|---|---|---|---|
| L1 anterior wall | | | 0.98 | | 0.81 |
| 0 | 80 (53.3%) | 82 (54.7%) | | 88 (58.7%) | |
| 1 | 28 (18.7%) | 42 (28.0%) | | 20 (13.3%) | |
| 2 | 32 (21.3%) | 20 (13.3%) | | 21 (14.0%) | |
| 3 | 10 (6.7%) | 6 (4.0%) | | 21 (14.0%) | |
| L1 posterior wall | | | 0.97 | | 0.83 |
| 0 | 74 (49.3%) | 75 (50.0%) | | 81 (54.0%) | |
| 1 | 29 (19.3%) | 41 (27.3%) | | 17 (11.3%) | |
| 2 | 26 (17.3%) | 20 (13.3%) | | 27 (18.0%) | |
| 3 | 21 (14.0%) | 14 (9.3%) | | 25 (16.7%) | |
| L2 anterior wall | | | 0.96 | | 0.82 |
| 0 | 58 (38.7%) | 61 (40.7%) | | 40 (26.7%) | |
| 1 | 32 (21.3%) | 50 (33.3%) | | 28 (18.7%) | |
| 2 | 36 (24.0%) | 24 (16.0%) | | 37 (24.7%) | |
| 3 | 24 (16.0%) | 15 (10.0%) | | 45 (30.0%) | |
| L2 posterior wall | | | 0.97 | | 0.84 |
| 0 | 65 (43.3%) | 70 (46.7%) | | 60 (40.0%) | |
| 1 | 26 (17.3%) | 40 (26.7%) | | 24 (16.0%) | |
| 2 | 37 (24.7%) | 25 (16.7%) | | 27 (18.0%) | |
| 3 | 22 (14.7%) | 15 (10.0%) | | 39 (26.0%) | |
| L3 anterior wall | | | 0.96 | | 0.85 |
| 0 | 46 (30.7%) | 54 (36.0%) | | 43 (28.7%) | |
| 1 | 35 (23.3%) | 49 (32.7%) | | 20 (13.3%) | |
| 2 | 27 (18.0%) | 17 (11.3%) | | 25 (16.7%) | |
| 3 | 42 (28.0%) | 30 (20.0%) | | 62 (41.3%) | |
| L3 posterior wall | | | 0.96 | | 0.84 |
| 0 | 50 (33.3%) | 56 (37.3%) | | 51 (34.0%) | |
| 1 | 30 (20.0%) | 52 (34.7%) | | 20 (13.3%) | |
| 2 | 40 (26.7%) | 25 (16.7%) | | 37 (24.7%) | |
| 3 | 30 (20.0%) | 17 (11.3%) | | 42 (28.0%) | |
| L4 anterior wall | | | 0.94 | | 0.87 |
| 0 | 49 (32.7%) | 60 (40.0%) | | 34 (22.7%) | |
| 1 | 25 (16.7%) | 50 (33.3%) | | 21 (14.0%) | |
| 2 | 33 (22.0%) | 15 (10.0%) | | 29 (19.3%) | |
| 3 | 43 (28.7%) | 25 (16.7%) | | 66 (44.0%) | |
| L4 posterior wall | | | 0.95 | | 0.90 |
| 0 | 37 (24.7%) | 43 (28.7%) | | 50 (33.3%) | |
| 1 | 21 (14.0%) | 49 (32.7%) | | 19 (12.7%) | |
| 2 | 40 (26.7%) | 26 (17.3%) | | 23 (15.3%) | |
| 3 | 52 (34.7%) | 32 (21.3%) | | 58 (38.7%) | |
| AAC severity | | | 0.89 | | 0.88 |
| 1 | 36 (24.0%) | 58 (38.7%) | | 20 (13.3%) | |
| 2 | 79 (52.7%) | 74 (49.3%) | | 90 (60.0%) | |
| 3 | 35 (23.3%) | 18 (12.0%) | | 40 (26.7%) | |
| Total AAC score | 9 [5, 15] | 6 [3, 12] | 0.97 | 10 [6, 16] | 0.91 |
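
The table pairs each reference distribution with a single correlation coefficient for the structured clinical report and another for the model prediction. This excerpt does not name the coefficient. The per-wall scores are ordinal (0–3) and heavily tied, so the minimal sketch below assumes Spearman's rank correlation, computed as the Pearson correlation of tie-averaged ranks. The function names are hypothetical.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant input")
    return sxy / (sxx * syy) ** 0.5


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    # Toy per-wall scores (0-3) for eight radiographs; not study data.
    reference = [0, 1, 2, 3, 3, 0, 2, 1]
    predicted = [0, 1, 3, 3, 2, 0, 2, 0]
    print(round(spearman(reference, predicted), 2))
```

`scipy.stats.spearmanr` computes the same tie-averaged statistic and also returns a p value. If the authors instead used Pearson correlation or an intraclass correlation for the total score, that coefficient would differ from this one.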

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, K.; Wang, X.; Xi, Z.; Li, J.; Zhang, X.; Wang, R. Automatic Segmentation and Quantification of Abdominal Aortic Calcification in Lateral Lumbar Radiographs Based on Deep-Learning-Based Algorithms. Bioengineering 2023, 10, 1164. https://doi.org/10.3390/bioengineering10101164

Wang K, Wang X, Xi Z, Li J, Zhang X, Wang R. Automatic Segmentation and Quantification of Abdominal Aortic Calcification in Lateral Lumbar Radiographs Based on Deep-Learning-Based Algorithms. Bioengineering. 2023; 10(10):1164. https://doi.org/10.3390/bioengineering10101164

Chicago/Turabian Style: Wang, Kexin, Xiaoying Wang, Zuqiang Xi, Jialun Li, Xiaodong Zhang, and Rui Wang. 2023. "Automatic Segmentation and Quantification of Abdominal Aortic Calcification in Lateral Lumbar Radiographs Based on Deep-Learning-Based Algorithms" Bioengineering 10, no. 10: 1164. https://doi.org/10.3390/bioengineering10101164

APA Style: Wang, K., Wang, X., Xi, Z., Li, J., Zhang, X., & Wang, R. (2023). Automatic Segmentation and Quantification of Abdominal Aortic Calcification in Lateral Lumbar Radiographs Based on Deep-Learning-Based Algorithms. Bioengineering, 10(10), 1164. https://doi.org/10.3390/bioengineering10101164