1. Introduction

Urban flooding triggered by extreme rainfall is a central concern in urban resilience. It poses significant hazards, including life-threatening conditions, infrastructure damage, transportation paralysis, and substantial socioeconomic losses. Urban floodwater depth estimation is a foundational element for enhancing disaster response capabilities and strengthening urban resilience, providing critical data to support disaster assessment and informed decision-making. Empirical analyses of catastrophic flood incidents reveal that the absence of robust, large-scale floodwater depth monitoring technologies remains a critical factor exacerbating disaster impacts [1,2,3]. Effective monitoring of urban floodwater depth is indispensable for flood risk mitigation: when integrated with monitoring point location data and GIS technology, the sensed flood depth information supports downstream applications such as predicting future flood depths, issuing flood depth risk level warnings, planning emergency rescue and evacuation routes under dynamic flood depth conditions, guiding drainage and emergency response planning, and informing infrastructure planning [4,5]. This study focuses on rapid and accurate flood depth perception technology, as it is the prerequisite for the aforementioned applications [6]. Such technologies empower both disaster management authorities and residents to implement proactive measures, thereby reducing casualties and safeguarding critical assets.

Current urban water depth sensing methods can be broadly categorized into four types [2,3]. The first category directly employs physical sensors, which transmit water depth data through dedicated monitoring devices [7]. Contact sensors, such as pressure sensors, are submerged in water and measure depth by converting pressure signals into depth data based on the principle that pressure varies with water depth [8]. Non-contact sensors, including sonar and ultrasonic devices, measure the distance between the sensor and the water surface by sending and receiving signals, then calculating the corresponding water depth. While effective, these sensor-based methods face challenges. Wireless sensors are often limited by battery life, and measurement accuracy can be affected by water waves and other disturbances. Moreover, prolonged exposure to humid environments increases the risk of sensor failure, leading to high maintenance costs. Another method involves using graduated water level gauges, where water depth is estimated by recognizing scale marks on the gauge from flood site images [9,10]. However, the small size of these gauges demands high image quality for accurate readings.

The second category is the multi-indicator empirical model method, which leverages limited data such as topography, rainfall patterns, and drainage capacity to develop empirical models for predicting water depth [11,12]. For example, Renata et al. [13,14] applied the Data-Based Mechanistic (DBM) approach and the State-Dependent Parameter (SDP) modeling method to construct models that predict water levels using data related to rainfall and water depth. Galasso and Senarath [15] introduced a hybrid statistical–physical regression method that utilizes data from catchments with similar characteristics but richer flood records to establish statistical relationships among relevant variables. This method helps estimate flood depths in data-scarce regions prone to flooding. Baida et al. [16] proposed a customized model that directly extracts key features from input topography data and rainfall time series for flood depth prediction. However, this method is hindered by significant delays, and both modeling errors and input data inaccuracies can substantially affect prediction accuracy.

The third category relies on topographical information and employs hydrodynamic models to simulate urban flooding dynamics within the study area [17]. Liu et al. [18] applied a hydrodynamic approach combined with an XGBoost model optimized by a genetic algorithm for flood depth prediction. Nhu et al. [19] used satellite imagery alone to determine flood inundation extents and proposed a hydrodynamic model to estimate water levels. Similarly, Hung et al. [20] developed an improved hydrodynamic model to estimate flood depth using satellite images. While effective, this method requires extensive flood data and detailed topographical information as inputs. It is also highly sensitive to initial and boundary conditions, limiting its applicability in regions with insufficient data management capabilities.

For the fourth category, with advancements in artificial intelligence (AI) and image recognition technologies [21], leveraging on-site flood images combined with AI techniques has emerged as a promising direction for urban flood depth estimation. To address data scarcity, methods using deep neural networks for data augmentation have been proposed. Since pre-trained large models do not require extensive labeled data, Temitope et al. [22] introduced an approach that employs a pre-trained large multimodal model (GPT-4 Vision) to automatically estimate flood depth from on-site flood photos. However, this method is inefficient, taking more than 10 s to process a single image below full HD resolution. Researchers have also explored Generative Adversarial Networks (GANs) [23] to generate various types of synthetic data [24], but the reliability of the generated data is limited due to the scarcity of original data. Attempts have also been made to use remote sensing images for flood depth estimation [25,26], but this method tends to produce relatively large errors. Sirsant et al. [27] combined flood images with geographical tags and an AdaBoost optimizer for flood depth estimation. However, the availability of such geographically tagged images is limited, posing additional challenges.

Rapid and accurate estimation of urban flood depth without monitoring and hydrodynamic prediction data remains a challenge. With the growing use of social media and surveillance cameras, researchers have increasingly explored leveraging these fast, large-scale data sources. Approaches combining these data with methods such as ordinary gradient optimization [28] and neural networks [29,30,31,32,33,34] have been reported to automatically extract relevant urban features and estimate flood depth. Urban features such as streetlights, flower beds, vehicles, and buildings typically have standard heights, making them reliable reference objects for estimating flood depth from images. For example, Liu et al. [35] developed the BEW-YOLOv8 model for fast flood depth monitoring of submerged vehicles by integrating bidirectional feature pyramid networks, effective squeeze-and-excitation, and intelligent intersection-over-union into the YOLOv8 model. Park et al. [36] employed Mask-RCNN [37] to extract vehicle targets from images and used the VGG [38] network for flood feature extraction and depth estimation. Other methods for extracting reference targets include R-CNN [39], YOLO [40], and similar techniques.

Although these applications of AI-based methods to extract reference objects and estimate flood depth from social media images have mitigated data scarcity challenges with improved efficiency and accuracy, they still present several limitations. First, although these methods use web crawlers to automatically gather relevant data, they require significant human intervention to design algorithms that extract text and image information related to specific flood events, remove redundancy, extract flooding features, etc. Second, these AI methods demand extensive labeling work, such as annotation for reference object detection and relevance checks on texts and images, which is time-consuming. Third, the neural networks employed for water depth estimation typically require computationally and memory-intensive training on images from their own datasets, resulting in poor transferability. Consequently, their accuracy tends to degrade when sampling or training data is insufficient.

To overcome the aforementioned limitations, this paper proposes a novel method for urban flood depth estimation that combines lightweight edge training with state-of-the-art pre-trained large models: the large language model DeepSeek-R1 [41] and the multimodal models OpenCLIP [42] and YOLO-World [43]. The proposed method achieves improved accuracy, efficiency, and adaptability for fast flood monitoring in urban environments. It introduces three key innovations:

- (1) This paper proposes a universal and reliable urban floodwater depth-sensing technique. Based on the designed framework that consists of pre-trained large models, our method achieves fully automated and intelligent event-specific flood data mining and relevance filtering, image-based flood/arbitrary target reference relevance filtering and detection, and automatic water-depth feature extraction from reference image regions. Therefore, this method inherits the high-level intelligence and generality of large models, eliminating time-consuming data collection, manual data labeling, and computationally expensive training for text/image relevance filtering, object detection, and universal image compressive representation.

- (2) To accommodate rapid switching between different water level reference objects, this paper designs the final stage of water depth perception as a lightweight neural network that can be rapidly trained without relying on a GPU. This neural network takes a 512-dimensional vector (generated from the compressed representation of image regions obtained in previous stages) as input, and outputs the water depth. This approach eliminates image-based neural network training, drastically reducing computational and storage demands and requiring only fast reference-level water depth labeling. When suitable reference objects change due to factors such as differences between cities, it is only necessary to collect corresponding flood submergence images of the reference objects for water depth labeling, followed by lightweight edge retraining to complete water depth perception. This two-stage design significantly reduces deployment costs.

- (3) Our method improves the efficiency of current large model-based water depth perception from around 10 s to less than 0.5 s by using simple image classification, detection, and feature extraction instead of time-consuming semantic understanding.

The rest of this paper is organized as follows: Section 2 provides a detailed introduction to the proposed methodology. Section 3 presents the results to validate the proposed approach, followed by brief conclusions in Section 4.

2. Methodology

2.1. The Proposed Methodology Framework

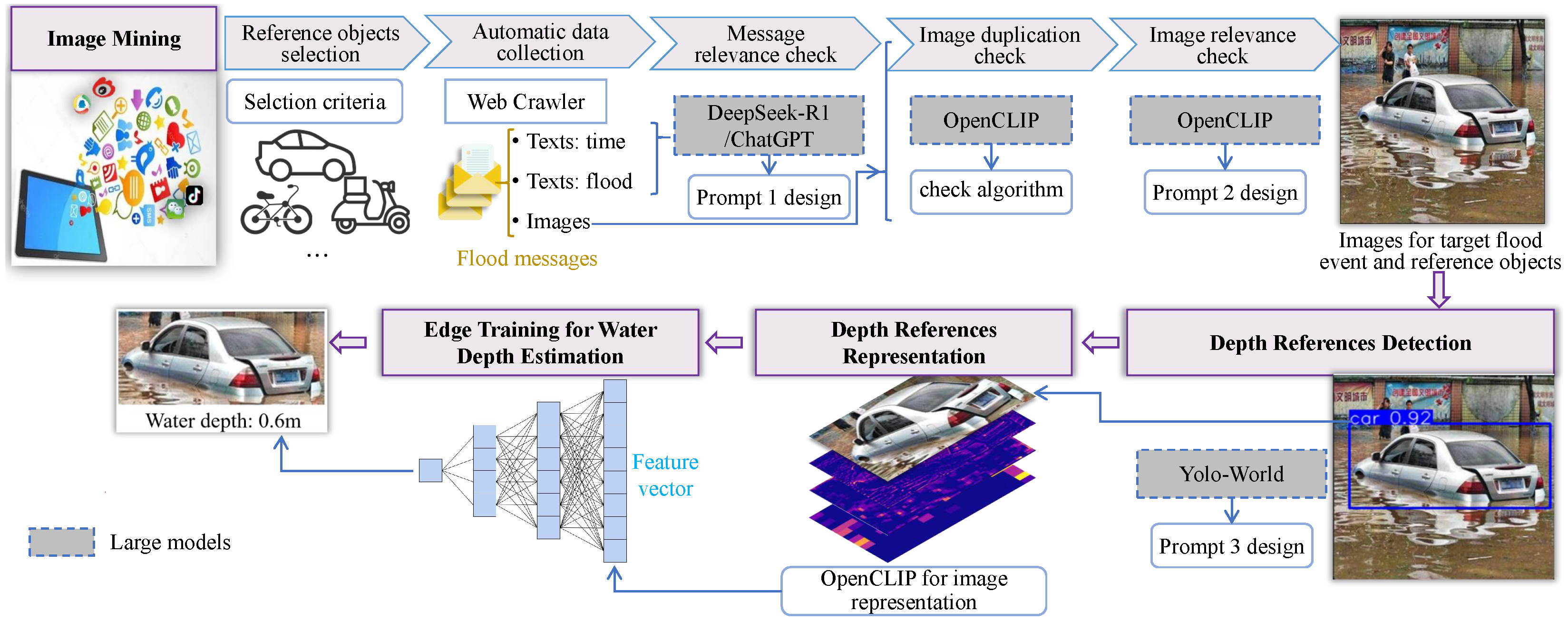

The proposed methodology framework is illustrated in Figure 1 and comprises the following steps.

(1) Image mining. First, we establish the criteria for selecting reference objects suitable for urban flood depth measurement. Then, a web crawler automatically collects reference and flood-related messages. Each message should include a text timestamp, a text flood description, and images, gathered from multiple sources such as web pages, social media feeds, and Douyin. These messages are forwarded to the subsequent message relevance check, image duplication check, and image relevance check stages. These stages are powered by pre-trained large models, the designed prompt templates, and the check algorithm, and ultimately output images that show both the target flood event and the reference objects.

(2) Depth references detection. This step aims to extract the smallest rectangular regions containing exclusively a single reference object in images. The first rationale is to achieve water depth estimation at the reference-object level rather than full-image coverage, thereby enhancing spatial resolution. The second rationale stems from the observation that proximal regions to the reference object contain sufficient hydrological information signatures, whereas distal areas exhibit decaying spatial correlations that would compromise the accuracy of depth estimation at the reference location.

(3) Depth references representation. This step employs a pre-trained multimodal large model to directly generate compressed representations of images, mapping high-storage-demand image data into a unique cross-modal feature vector that bridges images and text. This significantly reduces the data throughput required for the subsequent network, thereby enhancing training efficiency.

(4) Edge training for water depth estimation. This is the only step in this method that requires model training. A simple lightweight neural network, such as a multi-layer fully connected network or convolutional neural network, can be employed to learn the mapping from the reference region feature vectors obtained in Step 3 to water depth, aided by reference object image-level depth annotations. Once trained, the model can be directly applied to high-speed depth measurement.

This automated framework streamlines the entire process of flood depth analysis, from multi-source data collection to content filtering and depth estimation. Leveraging large language and multimodal models pre-trained on large-scale datasets such as COCO [44] and ImageNet [45], the framework exhibits strong generalizability and intelligence. These models adapt effectively to common reference objects without additional training, providing reliable feature representations. Notably, only a lightweight prediction neural network requires training at the edge or end devices, significantly reducing manual intervention, improving model adaptability, and effectively addressing the data scarcity challenge faced by traditional methods.

2.2. Flood Image Mining

Effective reference objects should meet three key criteria: (1) They should be common in flood scenes to ensure easy identification and widespread availability. Pedestrians, vehicles, and road signs are frequently observed in urban flood scenes, making them practical choices that enhance the model’s adaptability to diverse environments. (2) Reference objects should have stable and well-defined heights to serve as reliable benchmarks. For instance, adult heights typically range from 1.5 to 1.9 m, while vehicles of the same type follow standardized dimensions. Municipal fixtures like road/traffic signs also maintain fixed heights. (3) The visual characteristics of reference objects should vary noticeably at different water depths to support accurate waterline detection. Vehicles and pedestrians, for example, exhibit clear morphological changes as water levels rise, providing strong visual references for flood depth estimation. In contrast, utility poles, which appear similar over a wide range of heights, are less suitable for this purpose. By following these reference object selection criteria, the subsequent automated data collection can gather a large volume of data that is both widely distributed and rich in water depth information.

The web crawler collects information from a variety of online sources, including web pages (e.g., news websites, government announcements, and social media feeds), social media posts (e.g., personal experiences and on-site photos shared by WeChat users), Facebook (e.g., user posts, group discussions, and public page content), and Douyin (e.g., relevant posts on the short-video platform). Each collected entry, referred to as a “message”, consists of three key components: a text timestamp (indicating when the message was published, which helps define the temporal dimension of the flood event), a text flood description (providing details about the flood situation, location, or other contextual information), and images (showing the specific scene for flood depth analysis). There are numerous engineering methods for implementing data acquisition via web crawlers. The Python script used in this paper (https://gitee.com/team-resource-billtang_0/water-depth-sensing-with-big-models-and-edge-nn.git, accessed on 6 October 2025) supports configuring multiple keywords for a specific flood event, data retrieval latency for different platforms, etc., through a configuration file (config.py). Combined with the user’s own webpage URL library file, it can automatically acquire data. To obtain richer data, the keywords should cover as many ways of describing the specific flood event as possible. This paper does not mandate a specific data storage format. The storage format used in the subsequent experimental section is as follows: disaster description text files (.txt) are placed in a dedicated folder, and images are placed in another separate folder. The text timestamp, the corresponding disaster description text file name, and the corresponding image filenames are stored in a CSV file, indexed by message sequence number. This allows subsequent programs to quickly filter messages using this table. Depending on the quality of the crawled data, applicable preprocessing steps include discarding messages with missing image information, image size normalization, etc.
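As an illustration of the storage format described above, the following minimal sketch loads such a CSV message table and applies the "discard messages with missing image information" preprocessing step. The column names, the `;` separator for multiple image filenames, and the sample rows are assumptions made for this example only; the paper does not prescribe a schema.

```python
import csv
import io

def load_messages(csv_text):
    """Parse the message table and drop messages that carry no image.

    Assumed (hypothetical) columns: msg_id, timestamp, text_file, image_files.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    messages = []
    for row in reader:
        # Several image filenames in one cell are assumed to be ';'-separated.
        images = [name.strip() for name in row["image_files"].split(";") if name.strip()]
        if not images:
            continue  # preprocessing: discard messages with missing image information
        messages.append({
            "msg_id": int(row["msg_id"]),
            "timestamp": row["timestamp"],
            "text_file": row["text_file"],
            "images": images,
        })
    return messages

# Made-up sample rows, for illustration only.
SAMPLE = """msg_id,timestamp,text_file,image_files
1,2021-07-20 16:05,msg_0001.txt,img_0001.jpg;img_0002.jpg
2,2021-07-20 17:40,msg_0002.txt,
3,2021-07-21 09:12,msg_0003.txt,img_0003.jpg
"""

messages = load_messages(SAMPLE)
print([m["msg_id"] for m in messages])
```

In practice the table would be read from disk with `open(path, newline="")` rather than from an in-memory string.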

The collected messages often include information that is not associated with the target flood event. Therefore, the large language model DeepSeek-R1 is employed to assess the text content and determine whether it pertains to a specific flood event. If the model concludes that the text is unrelated to the target flood event, the message is discarded. This filtering process effectively isolates high-quality data relevant to the focus event. To achieve precise and efficient filtering, this paper designs a DeepSeek-R1 prompt template. This template specifies core considerations for the large model and constrains its output to concise binary responses (Yes/No), thereby enhancing decision-making efficiency. For instance, in the case of the Zhengzhou 7·20 Flood Event, the prompt template used for this filtering process is shown in Table 1. Users can employ other large models such as ChatGPT, Qwen, etc., and write scripts that automatically load the flood description text of each message listed in the CSV file, combine it with the prompt template designed in this paper, and submit it to the large model interface to complete the relevance screening for specific flood events. The relevance of each filtered message can be recorded in a column of the CSV file using a binary flag (e.g., 1 and 0 representing irrelevant and relevant, respectively).
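The screening loop described above can be sketched as follows. This is a minimal illustration, not the paper's script: the prompt wording is a hypothetical stand-in for the template in Table 1, and `ask_llm` is an assumed placeholder for whatever function sends a prompt to DeepSeek-R1 (or another model) and returns its text reply.

```python
# Hypothetical prompt; the actual template used in the paper is the one in Table 1.
PROMPT_TEMPLATE = (
    "You are screening messages about the flood event: {event}.\n"
    "Message: {text}\n"
    "Does this message describe that specific flood event? Answer Yes or No."
)

def parse_yes_no(reply):
    """Map a model reply to True/False; return None if it is not a clear Yes/No."""
    words = reply.strip().lower().split()
    if not words:
        return None
    first = words[0].strip(".,!:;\"'")
    if first == "yes":
        return True
    if first == "no":
        return False
    return None

def filter_relevant(messages, event, ask_llm):
    """Keep messages judged relevant; ask_llm is any callable str -> str."""
    kept = []
    for msg in messages:
        prompt = PROMPT_TEMPLATE.format(event=event, text=msg["text"])
        if parse_yes_no(ask_llm(prompt)) is True:
            kept.append(msg)
    return kept

def fake_llm(prompt):
    """Stand-in for a real model call, used only to make the sketch runnable."""
    message_line = prompt.split("\n")[1]
    return "Yes." if "Zhengzhou" in message_line else "No."

msgs = [
    {"text": "Streets in Zhengzhou are under water after the rainstorm"},
    {"text": "Sunny afternoon at the beach"},
]
relevant = filter_relevant(msgs, "Zhengzhou 7·20 Flood Event", fake_llm)
print(len(relevant))
```

Treating an unparseable reply as "not relevant" is a conservative design choice in this sketch; a deployment might instead queue such messages for a retry.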

To remove redundant images from the remaining messages, OpenCLIP is employed. After the images of the relevant messages are read from the CSV file, the process shown in Figure 2 begins by encoding each image into a single feature vector. For OpenCLIP, a commonly used encoding is a 512-dimensional feature vector. Key code for this encoding is available at https://gitee.com/team-resource-billtang_0/water-depth-sensing-with-big-models-and-edge-nn.git (accessed on 6 October 2025). A database, initialized as empty, is utilized to store the encodings along with their corresponding image IDs. This image ID can be a unique file name obtained during the data crawling phase, or all images can be uniformly renamed in the preprocessing phase. The database can be implemented using arrays in the corresponding programming language, CSV files, etc. Each new encoding is then compared against those already in the database using a metric such as cosine similarity or Euclidean distance. If the new encoding is closer to any stored encoding than a predefined threshold allows (i.e., the Euclidean distance falls below the threshold, or equivalently the cosine similarity exceeds it), the image is identified as a duplicate and discarded; otherwise, the encoding is added to the database. To discard an image, the program can set the image ID corresponding to that image to an invalid value, such as −1. We use a threshold rather than testing whether the compared vectors are identical, so our method can handle duplicate images with slightly different fields of view, color styles, and lighting conditions. For

N images, this check algorithm only has a low complexity of

.
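The duplication check can be sketched in a few lines. The version below is a minimal illustration using cosine similarity, for which a higher value means more alike, so an image counts as a duplicate when its similarity to any stored encoding is at or above the threshold. The threshold of 0.95 and the function names are assumptions for this example, and short toy vectors stand in for the 512-dimensional OpenCLIP encodings.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def deduplicate(encodings, threshold=0.95):
    """Return image IDs in input order, with duplicates replaced by -1.

    encodings: list of (image_id, vector) pairs.
    threshold: assumed value; tune it on real data.
    """
    database = []    # starts empty; holds (image_id, vector) of kept images
    result_ids = []
    for image_id, vec in encodings:
        is_dup = any(cosine_similarity(vec, kept) >= threshold for _, kept in database)
        if is_dup:
            result_ids.append(-1)            # invalid ID marks a discarded image
        else:
            database.append((image_id, vec))
            result_ids.append(image_id)
    return result_ids

toy = [
    (0, [1.0, 0.0, 0.0]),
    (1, [0.99, 0.05, 0.0]),   # near-copy of image 0
    (2, [0.0, 1.0, 0.0]),
    (3, [1.0, 0.0, 0.0]),     # exact copy of image 0
]
print(deduplicate(toy))
```

Because each image is compared only against encodings already kept, the number of comparisons shrinks as more duplicates are found.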

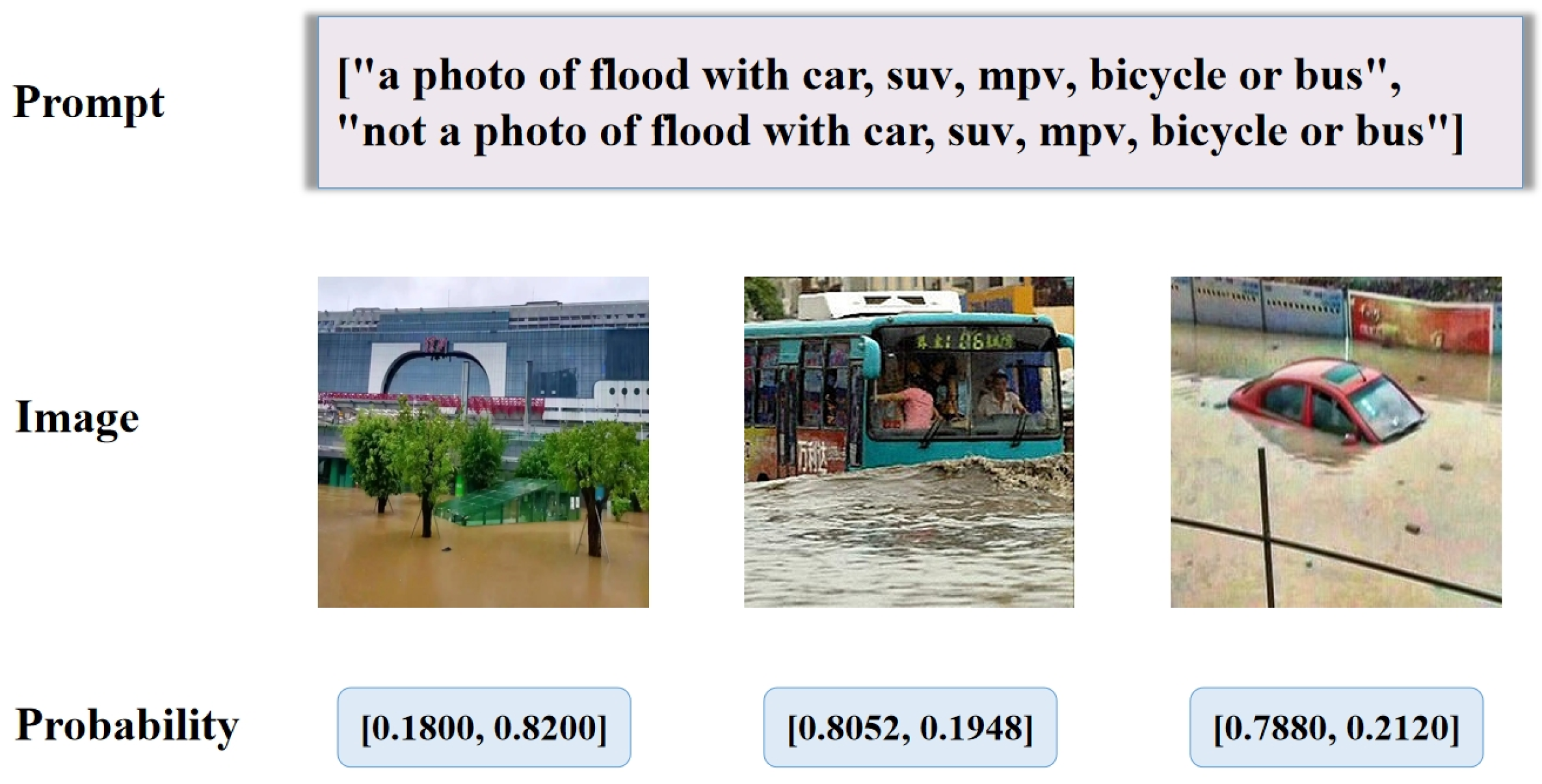

After image deduplication, OpenCLIP is further utilized to retain only those remaining images that contain the target reference objects, through semantic evaluation. As shown in Table 2, by supplying specific prompt words, OpenCLIP calculates corresponding probabilities that indicate the likelihood of an image matching the provided prompt. If the probability that the image contains the target reference object exceeds that of it not containing the object, the image is retained; otherwise, it is discarded by setting the image ID corresponding to that image to an invalid value. This step effectively removes irrelevant images, ensuring the collected image dataset retains only high-quality images featuring the target reference objects with available image IDs.
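The retain/discard rule above reduces to a two-way softmax over image-text similarities. The sketch below assumes the image embedding and the two prompt embeddings (one "contains the object" prompt, one "does not contain" prompt) have already been produced by OpenCLIP; toy two-dimensional vectors are used here. The scale factor of 100 follows the common CLIP zero-shot convention, and the function names are hypothetical.

```python
import math

def normalize(vec):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def contains_reference(image_emb, positive_emb, negative_emb, logit_scale=100.0):
    """Return (keep, p_positive) for one image and a positive/negative prompt pair."""
    img = normalize(image_emb)
    logits = [
        logit_scale * sum(a * b for a, b in zip(img, normalize(text_emb)))
        for text_emb in (positive_emb, negative_emb)
    ]
    p_pos, p_neg = softmax(logits)
    return p_pos > p_neg, p_pos

# Toy embeddings: the first image points toward the positive prompt.
keep_a, p_a = contains_reference([1.0, 0.1], [0.9, 0.2], [0.1, 0.9])
keep_b, p_b = contains_reference([0.1, 1.0], [0.9, 0.2], [0.1, 0.9])
print(keep_a, keep_b)
```

With only two prompts, comparing the probabilities is equivalent to comparing the two cosine similarities directly; the softmax is kept so the retained probability can be logged or thresholded more strictly if desired.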

2.3. Depth References Detection and Representation

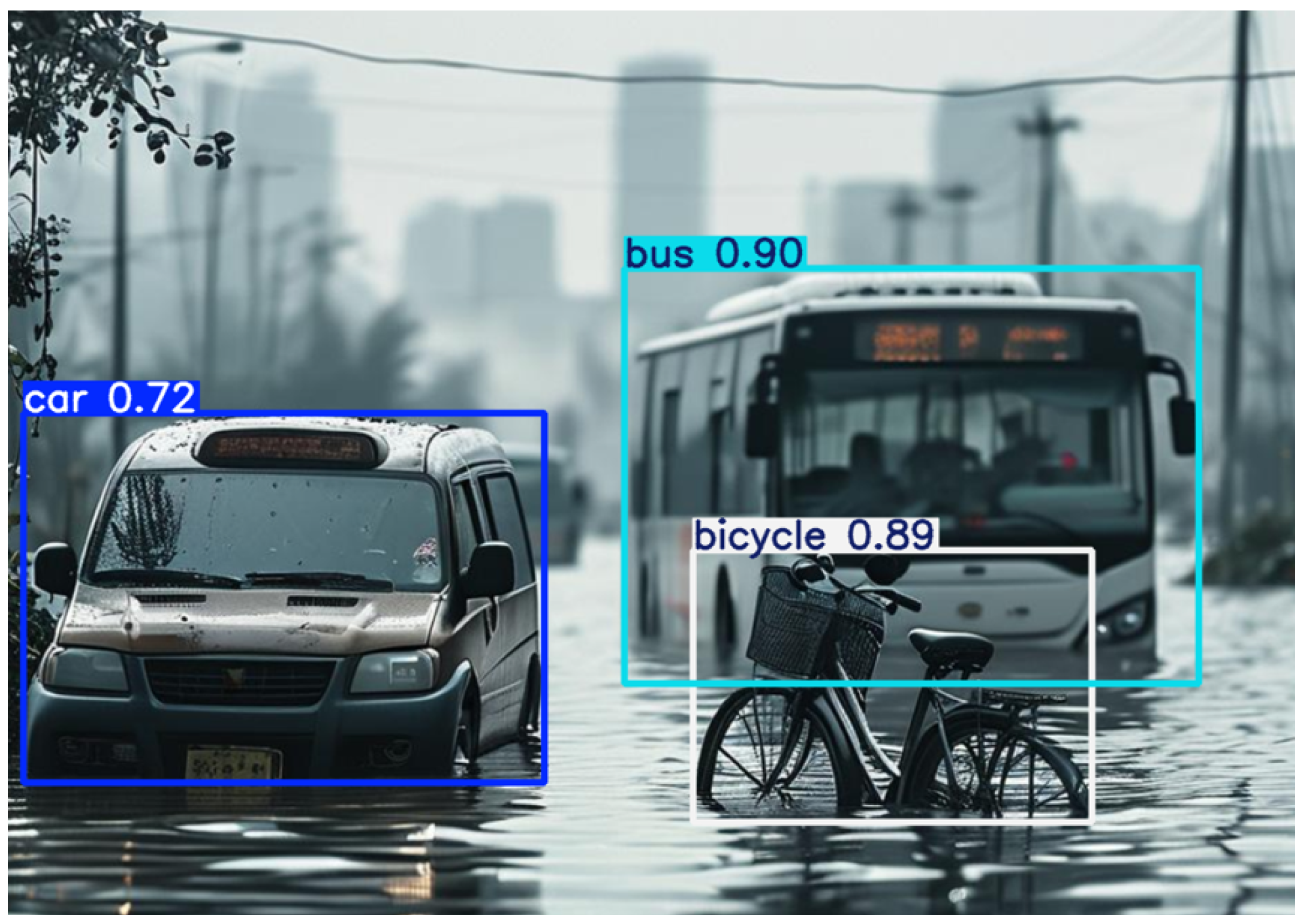

The depth references detection step uses YOLO-World to detect and locate these reference objects within the images. YOLO-World takes an image and textual prompts specifying arbitrary detection targets as input. Its outputs consist of minimum bounding boxes enclosing the targets and corresponding confidence scores. For flood reference object detection in this application, we designed the prompt templates shown in Table 3. These templates are engineered to precisely characterize unobstructed reference objects within flood-inundated areas, thereby enabling the identification of regions containing both reference markers and water depth-related features. To ensure accuracy, a confidence threshold is applied, filtering out low-quality detections. The output bounding boxes on input images define the target reference regions.
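The post-processing of detector output can be sketched independently of the detector itself. The example below assumes each detection has already been converted into a dictionary with a pixel-space box `(x1, y1, x2, y2)` and a confidence score; that layout, the function name, and the threshold of 0.5 are assumptions for illustration, not the output format of YOLO-World or a value taken from the paper.

```python
def select_reference_regions(detections, image_size, conf_threshold=0.5):
    """Filter detections by confidence and clamp their boxes to the image.

    detections: list of dicts with 'box' = (x1, y1, x2, y2) in pixels and 'conf'.
    image_size: (width, height) of the source image.
    conf_threshold: assumed value; choose it on validation data.
    """
    width, height = image_size
    regions = []
    for det in detections:
        if det["conf"] < conf_threshold:
            continue  # low-quality detection
        x1, y1, x2, y2 = det["box"]
        # Truncate to whole pixels, then clamp to the image bounds.
        x1, x2 = max(0, int(x1)), min(width, int(x2))
        y1, y2 = max(0, int(y1)), min(height, int(y2))
        if x2 > x1 and y2 > y1:  # skip boxes that collapse after clamping
            regions.append((x1, y1, x2, y2))
    return regions

detections = [
    {"box": (-5.2, 10.0, 120.7, 200.3), "conf": 0.82},   # partly outside the image
    {"box": (300, 50, 400, 150), "conf": 0.20},          # below the threshold
    {"box": (600, 400, 700, 500), "conf": 0.90},         # overruns the lower right
]
regions = select_reference_regions(detections, image_size=(640, 480))
print(regions)
```

Each returned tuple can be passed to an image library's crop call to obtain the reference region that is encoded in the next step.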

After extracting the regions containing reference objects from the images, OpenCLIP is employed again to obtain robust and universal feature representations. This process loads a pretrained OpenCLIP model, preprocesses images into formats compatible with OpenCLIP’s input requirements, and extracts image feature embeddings. Readers can find the key code at https://github.com/mlfoundations/open_clip (accessed on 6 October 2025). OpenCLIP produces feature vectors that maintain a one-to-one correspondence with images. Visually similar images exhibit similar feature representations, and image features remain consistently aligned with semantic characteristics. After this process, each reference object region image is converted into a 512-dimensional feature vector. Due to their strong generalization ability and versatility, these high-quality features represent flood depth information well.

2.4. The Simple Edge Training Neural Network for Water Depth Estimation

With the feature vectors of reference object regions extracted by OpenCLIP, this step constructs a lightweight neural network to achieve end-to-end flood depth estimation; we denote the neural network as Edge Neural Network (EdgeNN) because it only needs to be quickly updated at the network edge according to different needs. EdgeNN is architecture-agnostic and compatible with various neural network types, including fully connected networks and convolutional neural networks. The only mandatory requirements are that (1) the input layer dimension must precisely match the dimensionality of OpenCLIP’s output feature vectors, and (2) the output layer must be configured to predict a single water depth value. The previous preprocessing pipeline significantly simplifies the training data complexity, enabling even elementary architectures like Multilayer Perceptron (MLP) to achieve competitive prediction accuracy. When designing the network, we recommend prioritizing architectural simplicity while meeting accuracy thresholds, as this approach optimally balances performance with computational efficiency. Therefore, this paper recommends starting with a simple Multilayer Perceptron for testing, and then attempting more complex networks. For enhanced training stability and generalization capability, standard regularization techniques such as Dropout, Batch Normalization, and Layer Normalization can be incorporated during implementation.
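To make the two mandatory requirements concrete, the following dependency-free sketch implements a one-hidden-layer MLP whose input size defaults to 512 and whose output is a single depth value, trained by plain stochastic gradient descent on squared error. It is an illustrative stand-in, not the EdgeNN architecture used in the paper: the class name, hidden width, initialization, and learning rate are all assumptions, and a practical implementation would normally use a deep learning library.

```python
import random

class TinyMLP:
    """Input vector -> ReLU hidden layer -> scalar water depth."""

    def __init__(self, in_dim=512, hidden=16, seed=0):
        rng = random.Random(seed)
        scale = 1.0 / in_dim ** 0.5
        self.w1 = [[rng.uniform(-scale, scale) for _ in range(in_dim)]
                   for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.uniform(-0.1, 0.1) for _ in range(hidden)]
        self.b2 = 0.0

    def forward(self, x):
        pre = [sum(w * xi for w, xi in zip(row, x)) + b
               for row, b in zip(self.w1, self.b1)]
        hid = [max(0.0, p) for p in pre]                      # ReLU
        out = sum(w * h for w, h in zip(self.w2, hid)) + self.b2
        return out, hid

    def predict(self, x):
        return self.forward(x)[0]

    def train_step(self, x, depth, lr=0.01):
        """One SGD step on the loss 0.5 * (prediction - depth)^2; returns the loss."""
        out, hid = self.forward(x)
        err = out - depth
        for j, h in enumerate(hid):
            # Gradient reaching hidden unit j, using w2[j] before it is updated.
            grad_hidden = err * self.w2[j] if h > 0.0 else 0.0
            self.w2[j] -= lr * err * h
            if grad_hidden:
                self.b1[j] -= lr * grad_hidden
                row = self.w1[j]
                for i, xi in enumerate(x):
                    row[i] -= lr * grad_hidden * xi
        self.b2 -= lr * err
        return 0.5 * err * err

# Toy data: 4-dimensional "features" with made-up depth labels in metres.
features = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
depths = [0.1, 0.4, 0.7, 1.0]
model = TinyMLP(in_dim=4, hidden=8, seed=1)
for _ in range(200):
    for x, d in zip(features, depths):
        model.train_step(x, d, lr=0.05)
print([round(model.predict(x), 2) for x in features])
```

Only `in_dim` ties the network to the upstream encoder, which is what allows the prediction head to be swapped or retrained without touching the large models.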

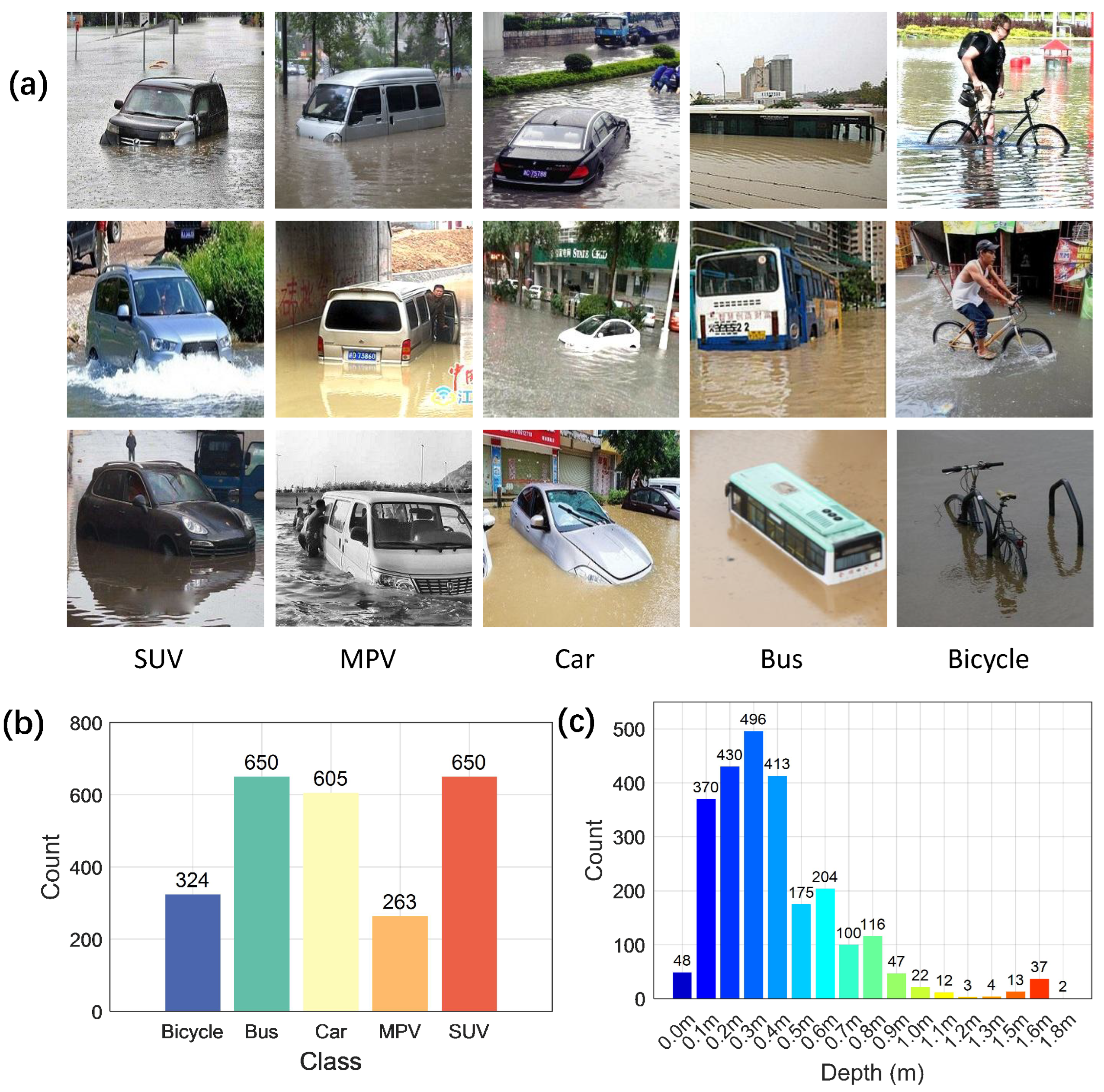

To train EdgeNN, it is necessary to prepare a dataset of submerged reference objects with water depth annotations. Typically, within a country or region, similar reference objects exhibit comparable submersion patterns across different cities and flood events; we can therefore collect images of submerged reference object regions with water depth annotations to construct the training dataset. This process requires only simple image-level water depth labeling, eliminating the need for reference position or category annotations. After representing the image regions as feature vectors using OpenCLIP, they can be paired with water depth labels as input–output pairs to complete the dataset preparation. As data accumulates through practical applications, this water depth prediction network can demonstrate progressively improved forecasting capabilities. However, national or regional characteristics may lead to differences in common vehicles, such as Japan’s K-car, India’s Tata, the UK’s double-decker buses, and America’s pickup trucks. To enable EdgeNN to achieve plug-and-play universality, its training data should ideally include typical flood reference objects from various countries and cities worldwide. However, collecting such a comprehensive dataset is challenging and labor-intensive. The original design intent of this paper is to allow users from different cities to quickly adapt based on the common flood reference objects in their own city or region, so users only need to collect submerged images of flood reference objects from their local area. This is also the reason for designing EdgeNN as a lightweight model. Optionally, after obtaining sufficient multi-type reference object data, EdgeNN can also be trained as a more complex neural network model to achieve plug-and-play universality.

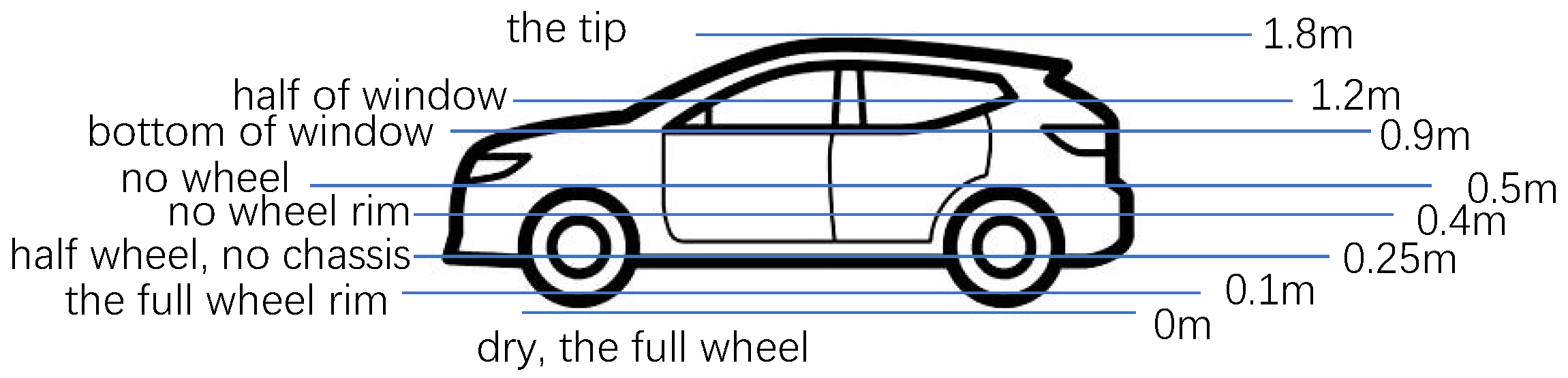

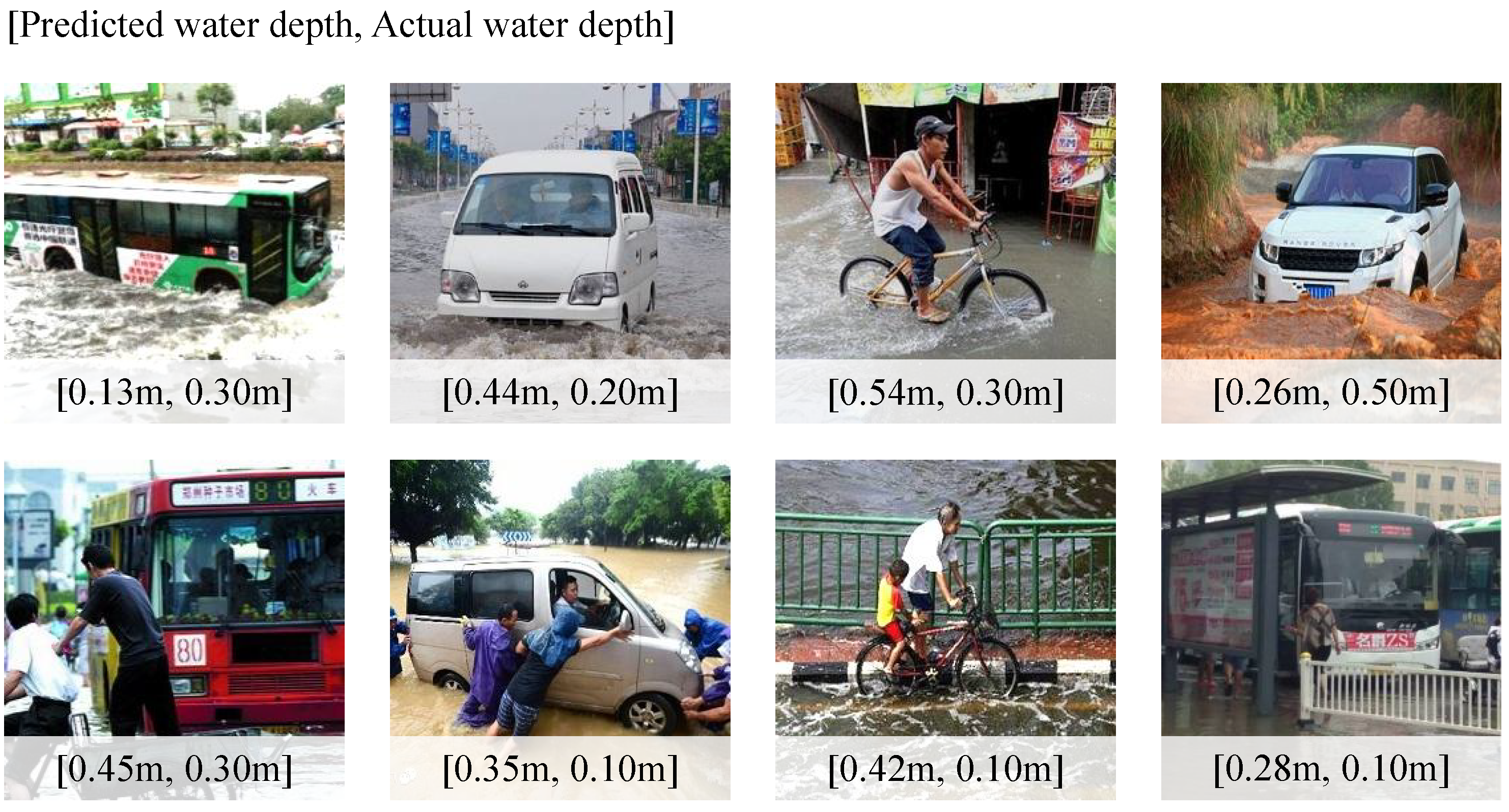

The water depth labeling of reference objects in the dataset directly affects the water depth perception accuracy of EdgeNN. Users can improve data reliability by combining objective techniques with expert subjective labeling based on actual conditions. The former can use IoT devices to collect actual water depth and image data on-site during flood events, such as drones equipped with water depth detection radar, water level gauges installed in culverts and other areas prone to flooding, etc. If conditions permit, objective labeling methods should be preferred. To reduce subjective errors in the latter, two measures can be adopted. First, establish clear criteria for judging submerged parts. For example, for pedestrians, judgments can be assisted based on their posture and joint confidence. Typically, body joints located below the water surface have significantly lower recognition confidence than those above the water. Utilizing this characteristic, the depth can be estimated from the boundaries of exposed body parts. For SUVs, the criterion can be represented as shown in Figure 3: provide a standard side view of the object, draw lines at different key points to indicate water depth, and add multiple equivalent descriptive texts for each water depth. This allows labeling experts to adapt to different perspectives, partial occlusions, etc. Second, independent labeling combined with cross-validation can be used. Due to variations in SUV sizes, Figure 3 is only for reference; experts can make comprehensive judgments, with 2 to 3 experts independently labeling each data point, and samples with poor labeling consistency are discussed and decided in meetings.
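The independent-labeling-plus-cross-validation procedure can be supported by a small consistency check. In the sketch below, labels from two or three experts are averaged when they agree, and samples whose labels disagree are set aside for joint discussion. The 0.10 m tolerance, the use of the mean as the merged label, and the function name are assumptions for this example; the paper does not specify how consistency is measured.

```python
from statistics import mean

def merge_expert_labels(labels_by_sample, max_spread=0.10):
    """Average independent expert labels; flag samples whose labels disagree.

    labels_by_sample: dict mapping sample ID -> list of depth labels in metres.
    max_spread: assumed tolerance (metres) on max - min before a sample is
    sent back for discussion.
    """
    accepted, needs_review = {}, []
    for sample_id, labels in labels_by_sample.items():
        if len(labels) < 2:
            needs_review.append(sample_id)          # not independently cross-checked
        elif max(labels) - min(labels) <= max_spread:
            accepted[sample_id] = mean(labels)
        else:
            needs_review.append(sample_id)          # poor labeling consistency
    return accepted, needs_review

# Made-up labels for three samples.
raw_labels = {
    "suv_001": [0.30, 0.35, 0.32],
    "suv_002": [0.20, 0.60],
    "suv_003": [0.50],
}
accepted, needs_review = merge_expert_labels(raw_labels)
print(accepted, needs_review)
```

Samples returned in `needs_review` correspond to those the text describes as being decided in meetings.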

Users should be aware of the usage boundaries imposed by the sample distribution of the training dataset. The first boundary concerns geographical distribution. Coarse reference object categorization can lead to significant size variations within the same category; for example, cars that belong to the same class but differ in size. When the training data region is the same as the application region, this issue does not affect model performance because the model has been calibrated during the water depth labeling process: EdgeNN determines water depth not from the reference object category but from the water depth labels. When the training and application regions differ, or when the model is applied to new reference objects without retraining (e.g., applying a model trained on regular cars to a region including K-cars, or applying a model trained on vehicles to pedestrians), EdgeNN loses reliability and is likely to make errors, as the model has not learned the characteristics of K-cars or pedestrians. The second boundary concerns water depth distribution. The sample water depths in the training dataset should cover the range of expected water depths as fully as possible, and the data volume for each water depth should not be too small. If all sample water depths range from 0 to 1 m, the model will likely fail when used to perceive flood scenarios with water depths exceeding 1 m.
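A simple audit of the label distribution can reveal the second boundary before training. The sketch below counts training labels per depth bin, lists bins with too few samples, and tests whether a queried depth lies inside the labeled range. Depths are given as whole centimetres to avoid floating-point binning artefacts; the 10 cm bin width, the minimum count of 5, and the function names are assumptions for illustration.

```python
def depth_coverage(depths_cm, bin_cm=10, min_count=5):
    """Count labels per depth bin and list sparsely covered bins.

    depths_cm: non-negative integer water depths in centimetres.
    Returns (counts, sparse), where sparse holds (low, high) bin edges in cm
    for every bin with fewer than min_count samples.
    """
    if not depths_cm:
        raise ValueError("no depth labels given")
    counts = [0] * (max(depths_cm) // bin_cm + 1)
    for d in depths_cm:
        counts[d // bin_cm] += 1
    sparse = [(i * bin_cm, (i + 1) * bin_cm)
              for i, c in enumerate(counts) if c < min_count]
    return counts, sparse

def within_training_range(depth_cm, depths_cm):
    """True if a depth lies between the smallest and largest training label."""
    return min(depths_cm) <= depth_cm <= max(depths_cm)

# Made-up training labels, in centimetres.
train_depths = [5, 8, 12, 15, 18, 35]
counts, sparse = depth_coverage(train_depths, bin_cm=10, min_count=2)
print(counts, sparse)
print(within_training_range(120, train_depths))
```

Bins reported as sparse indicate where additional labeled images should be collected, and a prediction far outside the labeled range is a signal to treat the output with caution.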

4. Conclusions and Future Works

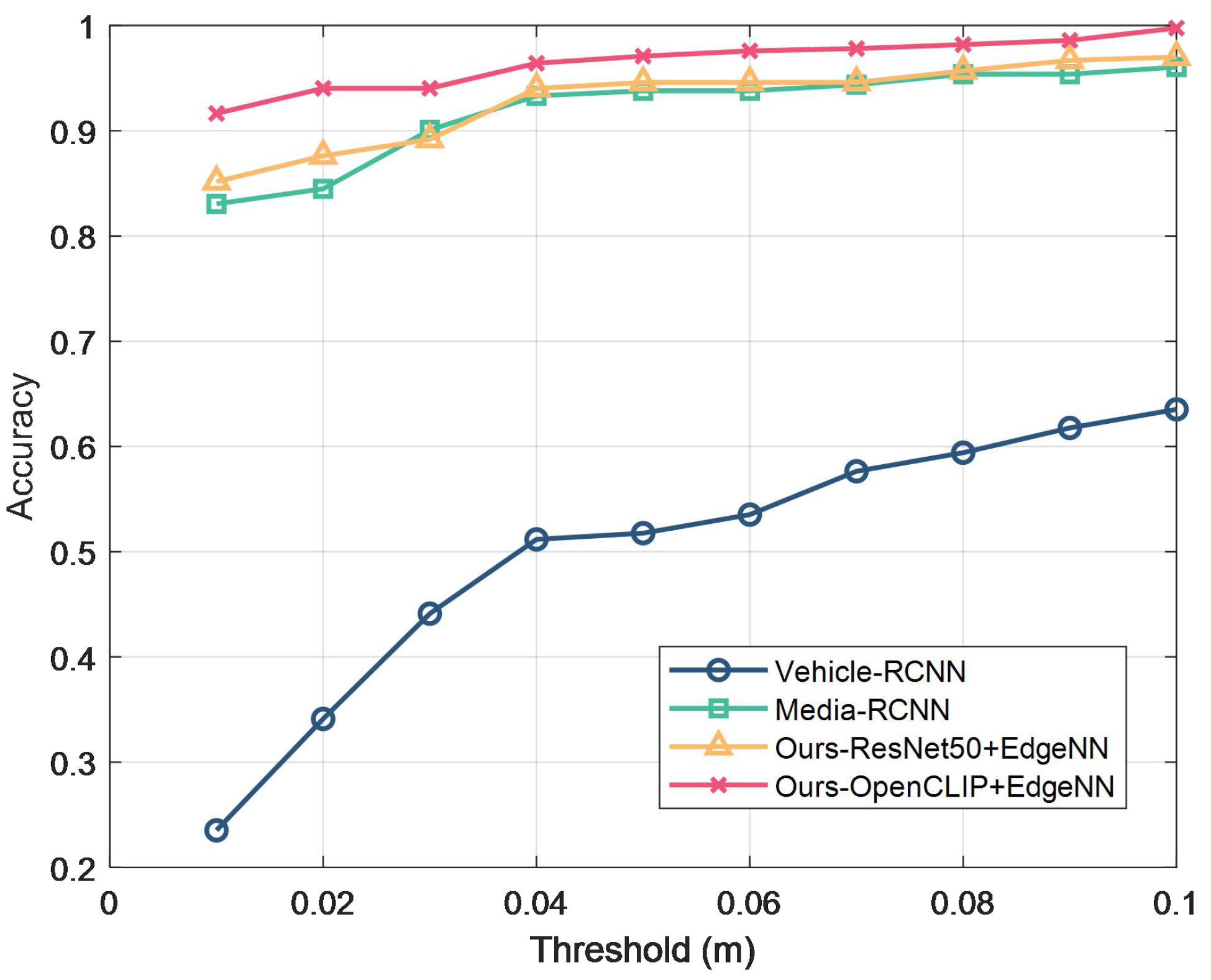

This study presents a lightweight framework that integrates pre-trained large models with edge training to estimate urban flood depth in data-scarce scenarios. By designing prompt templates for urban flooding and using multimodal large models to automatically filter social media images and extract reference object features, this method eliminates the traditional time-consuming data collection, manual data labeling, and computationally expensive training for text/image relevance filtering, object detection, and universal image compressive representation. With a lightweight flood depth sensing neural network as the final step, the method is easy to deploy. Ultimately, the proposed method significantly improves the accuracy and efficiency of urban flood depth estimation. Test results show that the proposed method reduces mean squared error by over 80% compared to existing approaches, with a maximum error of just 0.006. The method completes single-image processing in under 0.5 s. Additionally, the model demonstrates strong robustness to changes in viewpoint, lighting, and image quality. Since this method requires neither large model training nor hardware infrastructure construction, and draws on the ubiquity and abundance of social media images, it achieves ubiquitous, green, and fast sensing of urban flood depth. The design concept of large model preprocessing combined with a separable, lightweight edge perception network, as presented in this paper, can be referenced not only for flood depth perception applications but also for other urban management areas such as road health monitoring and pollution monitoring.

The method presented in this paper still has limitations. Future work will focus on improving data reliability and refining multimodal feature fusion strategies to enhance generalization in more complex environments, for example by filtering out fake data collected by web crawlers and exploring more diverse objective labeling methods. Furthermore, an important data point in urban flood management is geographic tagging. After the relevance detection step in this paper, geographic tags can be obtained by designing prompt templates similar to those in Table 1 and utilizing large language models to acquire the corresponding GPS coordinates for each image scene. With geographic coordinates available, generating spatiotemporal dynamic water depth maps under challenges like uneven spatiotemporal sampling and data conflicts represents a crucial next step to support smart city initiatives and enhance resilience against extreme rainstorm and flood disasters.