1. Introduction

The effective management of inventory systems is a foundational challenge in today’s globalised and volatile economic landscape, with profound implications for the financial performance and resilience of modern enterprises. Supply chains are perpetually exposed to a wide array of stochastic phenomena that disrupt the flow of goods and information. Events such as equipment breakdowns and pandemics can lead to supplier outages whose durations are unpredictable and rarely follow the simple, memoryless patterns assumed in classical models [1]. Compounding this, unpredictable customer demand and random production yields create a confluence of uncertainties that can culminate in substantial financial repercussions, either through costly stock-outs or the burden of excessive inventory holding costs. The central motivation for this research is therefore the pressing need for control policies that are not just mathematically optimal under idealised conditions, but are provably robust and effective in the face of these realistic, non-exponential disruptions. Managers require simple, implementable policies, such as the well-known base-stock policy, yet the theoretical guarantee of their optimality has not been established for systems facing this complex combination of uncertainties. This paper is motivated by the need to bridge this critical gap between classical inventory theory and the practical realities of modern supply chain risk management.

The theoretical bedrock of this field was established through the seminal contributions of Scarf [2] and Iglehart [3], who demonstrated the optimality of simple, threshold-based policies under specific, idealised conditions. For systems characterised by stationary stochastic demand, convex cost structures, and perfectly reliable supply, they proved that the optimal replenishment strategy is of the $(s, S)$ or base-stock type. These policies, defined by a reorder point ($s$) and an order-up-to level ($S$), are not only analytically elegant but also highly practical, forming the conceptual basis for countless inventory management systems in industry. Throughout this paper, we refer to this class of policies as threshold-based policies, which includes the well-known $(s, S)$ and base-stock structures.
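As a minimal sketch of the two threshold rules named above, the following Python functions return the order quantity each rule prescribes at a given inventory position. The function names, and the convention that an $(s, S)$ policy orders when the position is at or below $s$, are illustrative assumptions rather than definitions taken from this paper.

```python
def s_S_order_quantity(inventory_position: float, s: float, S: float) -> float:
    """Order quantity under an (s, S) rule (assumed convention: order if position <= s)."""
    if s > S:
        raise ValueError("reorder point s must not exceed order-up-to level S")
    if inventory_position <= s:
        # Raise the inventory position back to the order-up-to level S.
        return S - inventory_position
    return 0.0


def base_stock_order_quantity(inventory_position: float, S: float) -> float:
    """Order quantity under a base-stock rule: top up to S whenever below S."""
    return max(S - inventory_position, 0.0)
```

With a positive fixed ordering cost the gap between $s$ and $S$ batches orders together; the base-stock rule is the special case in which every shortfall below $S$ is replenished immediately.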

However, the classical framework’s reliance on simplifying assumptions (such as memoryless demand processes, perfectly reliable suppliers, and deterministic lead times) limits its direct applicability in many real-world scenarios. This discrepancy has motivated a rich and extensive body of research aimed at extending inventory models to encompass more realistic and complex operational characteristics. A particularly critical area of modern research concerns the explicit modelling of supply-side uncertainties. A significant stream of literature has investigated the impact of supply disruptions, often modelling the supplier as a system that stochastically alternates between an available (‘ON’) state and an unavailable (‘OFF’) state, or a production system subject to random breakdowns [4]. Other models consider disruptions in the form of entire supply batches being rejected upon arrival due to imperfect quality [5]. While these models provide fundamental insights, they frequently assume that the durations of the ON/OFF states follow exponential distributions. This memoryless property fails to capture more complex, non-exponential patterns of failure and repair often observed in practice, a limitation that has been addressed in some models by employing more general distributions like Phase-Type (PH) distributions [6]. Another crucial source of uncertainty is random production yield, where the quantity received from a supplier is a random variable that may differ from the quantity ordered. This phenomenon is prevalent in industries such as agriculture, semiconductor manufacturing, and pharmaceuticals. The risk posed by random yield often compels firms to adopt mitigation strategies, such as securing emergency backup sourcing options, which introduces further complexity into the decision-making process [7]. While some recent work has started to jointly consider random yields and disruptions, the analysis is often confined to periodic-review settings and specific yield models [8], leaving a gap in the understanding of their combined impact in a continuous-review framework.

To rigorously model systems that evolve deterministically between random events, the framework of Piecewise Deterministic Markov Processes (PDMPs) has proven to be an exceptionally powerful and versatile tool. Introduced by Davis [9, 10], a PDMP is characterised by three local components: a deterministic flow, a state-dependent jump rate, and a transition measure. This structure is naturally suited to modelling continuous-review inventory systems. The PDMP framework has been successfully applied to a broad class of problems in operations research, including production, maintenance, and inventory control [11], with notable applications in production-storage models featuring interruptions [12]. The optimal control of PDMPs, particularly through discrete interventions like placing replenishment orders, is known as an impulse control problem [13]. This formulation captures the essence of inventory replenishment decisions, which are discrete actions (impulses) that instantaneously change the state of the system. When the objective is to minimise the long-run average cost (a criterion often preferred in operations for its focus on steady-state performance), the analysis centres on the associated Average Cost Optimality Equation (ACOE). For impulse control problems, this equation typically takes the form of a quasi-variational inequality (QVI). The QVI elegantly represents the fundamental decision at every state: either continue without intervention, in which case the system dynamics are governed by the PDMP generator, or intervene at a cost, which instantaneously transitions the system to a new state. The optimal policy is defined by the boundary between the ‘continuation’ and ‘intervention’ regions [14]. The analytical machinery for average cost control of PDMPs, including the development of policy iteration algorithms, has been substantially advanced in recent years [15]. Furthermore, various numerical methods for the simulation and optimisation of PDMPs have been developed, using techniques ranging from quantization [16] to broader simulation frameworks [17], highlighting the practical relevance of this modelling approach.
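For orientation, a generic average-cost QVI can be written as below. This is a sketch of the standard form under assumed notation, not the equation analysed later in this paper: $\rho$ denotes the optimal average cost, $w$ a relative value function, $\mathcal{A}$ the generator of the uncontrolled PDMP, $g$ the running cost rate, $C(x, Q)$ the cost of an intervention $Q$ in state $x$, and $\Gamma(x, Q)$ the post-intervention state. All of these symbols are introduced here for illustration only.

```latex
\min\Bigl\{ \mathcal{A} w(x) + g(x) - \rho,\;\; \mathcal{M} w(x) - w(x) \Bigr\} = 0,
\qquad
\mathcal{M} w(x) = \inf_{Q} \bigl\{ C(x, Q) + w\bigl(\Gamma(x, Q)\bigr) \bigr\}.
```

In this form the first term vanishes on the continuation region and the second on the intervention region, so the boundary between the two regions is where both terms are zero.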

While the PDMP framework provides the necessary dynamic structure, realistically modelling the non-exponential timing of disruptions requires a correspondingly flexible class of probability distributions. Phase-Type (PH) distributions, introduced by Neuts [18], offer this versatility. A PH distribution is the distribution of the time to absorption in a finite-state continuous-time Markov chain, and the PH class is dense (in the sense of weak convergence) in the set of all probability distributions on the non-negative half-line. The primary advantage of PH distributions lies in their inherent Markovian structure. By expanding the state space to include the current ‘phase’ of the PH distribution, a system with general, non-exponential event timings can be analysed within a larger, but still tractable, Markovian framework. This state-space expansion technique is a powerful method for overcoming the memoryless assumption and is seeing increased use in modern inventory models. Its utility has been demonstrated in models that capture non-exponential supplier ON/OFF durations [6], non-exponential service and repair times for servers subject to breakdowns [19], and even in related reliability contexts for modelling repairable deteriorating systems and procurement lead times [20]. The analytical tractability of PH distributions is further enhanced by the powerful computational framework of Matrix-Analytic Methods [21]. The same underlying mathematical structure has also given rise to related tools like Markovian Arrival Processes (MAPs), which can model not only non-exponential inter-arrival times but also their autocorrelation. Recent studies have shown that in systems with such correlated processes, state-dependent threshold policies remain optimal [22], reinforcing the idea that threshold-based control is robust to more complex stochastic dynamics. The search for elegant structural properties and efficient algorithms is a recurring theme in the broader field; for instance, exploiting structural properties of Markov Decision Processes, such as skip-free transitions, has been shown to yield highly efficient policy iteration algorithms [23], motivating the search for analogous structural properties in our more general PDMP setting.
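The ‘time to absorption’ description of a PH distribution can be sketched directly in code. The example below is a minimal illustration using only the Python standard library; the function name `sample_phase_type` and the Erlang-2 parameters are assumptions chosen for the example, not quantities from this paper. It simulates the underlying Markov chain phase by phase, which is exactly the state-space expansion idea: the only memory carried forward is the current phase.

```python
import random


def sample_phase_type(alpha, T, rng):
    """Sample the time to absorption of a CTMC with transient phases 0..n-1.

    alpha: initial probabilities over the transient phases (assumed to sum to 1).
    T:     sub-generator as a list of rows; T[i][i] < 0, off-diagonal entries >= 0.
    rng:   a random.Random instance.
    """
    n = len(alpha)
    phase = rng.choices(range(n), weights=alpha)[0]
    elapsed = 0.0
    while True:
        exit_rate = -T[phase][phase]
        # Exponential holding time in the current phase.
        elapsed += rng.expovariate(exit_rate)
        # Rates to the other transient phases; the remainder leads to absorption.
        to_phase = [T[phase][j] if j != phase else 0.0 for j in range(n)]
        to_absorb = max(exit_rate - sum(to_phase), 0.0)
        nxt = rng.choices(range(n + 1), weights=to_phase + [to_absorb])[0]
        if nxt == n:
            return elapsed
        phase = nxt


# Hypothetical example: Erlang-2 with rate 2 per stage (mean 1, not memoryless).
ERLANG2_ALPHA = [1.0, 0.0]
ERLANG2_T = [[-2.0, 2.0], [0.0, -2.0]]
```

Averaging many draws from the Erlang-2 example should give a value close to its mean of 1, while the sample variance (about 0.5) is lower than that of an exponential distribution with the same mean.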

Despite these powerful tools, a complete and rigorous analytical framework for the optimal control of a continuous-review inventory system that integrates PH-distributed supply and demand disruptions, random production yield, and a general long-run average cost objective has remained elusive. The inherent complexity of such integrated systems has led researchers to pursue several distinct analytical avenues. One major stream of research focuses on developing approximation algorithms for discrete-time versions of these problems, often for specific settings like capacitated perishable systems, and establishing their worst-case performance guarantees [24, 25]. A second powerful approach is asymptotic analysis, which examines system behaviour in a specific regime, such as when penalty costs for lost sales become extremely large. This line of work has often demonstrated that simple base-stock-type policies are asymptotically optimal, providing strong evidence for the robustness of threshold policies but not proving their exact optimality under general cost parameters [26]. For instance, these studies show that as a system parameter (like backorder cost) tends to infinity, the cost difference between a simple base-stock policy and the true optimal policy vanishes. While these are powerful and important results that provide strong motivation for our work, they leave a critical question unanswered: is the policy optimal for a finite, fixed set of system parameters, or is it merely a very good approximation in a specific limiting regime? A third stream, to which this paper belongs, employs the theory of stochastic optimal control to establish the exact structure of the optimal policy for a general class of continuous-time processes. However, the existing literature within this stream tends to be fragmented, with models addressing only subsets of the complex features. For instance, models considering supply flexibility through dual-sourcing or emergency orders [27] often simplify the underlying stochastic processes. Models that tackle demand uncertainty by incorporating advance order information often do so under idealized supply conditions [28]. Even when multiple uncertainties like random yield and disruptions are considered jointly, the analysis is typically limited to infinite-horizon models with simplified assumptions or specific cost structures [29, 30].

This leaves a critical gap in the literature, which frames the central research problem of this paper: there is no unified theory that rigorously proves the structure of the optimal policy for a system subject to a confluence of realistic, multifaceted uncertainties. This leads to our primary research question: Does the intuitive and simple structure of a state-dependent base-stock policy remain optimal in such a complex and realistic environment? To provide a complete and rigorous answer, we systematically address the following interconnected theoretical questions:

Can the existence of a solution to the corresponding Average Cost Optimality Equation (ACOE) be formally established, thereby guaranteeing that an optimal policy exists?

Can the convexity of the value function be proven, providing the cornerstone for establishing the optimality of the base-stock policy structure?

Is the resulting optimal policy computationally attainable, and can the theoretical convergence of a suitable algorithm, such as the Policy Iteration Algorithm (PIA), be proven?

A comprehensive affirmative answer to these questions would provide strong justification for the use of simple, implementable control rules in highly stochastic settings, offering managers guidance that is both robust and practical.

This paper addresses this critical gap by developing a complete theoretical framework for the optimal control of a continuous-review inventory system subject to multifaceted disruptions. We model the system as a PDMP with impulse control, where the sojourn times in different environmental states are governed by general PH distributions. The model also explicitly incorporates random production yield. While each of these features has been studied in isolation, our primary contribution stems from their novel synthesis within a single, unified framework that proves the structural properties of the optimal policy under their combined influence. Our objective is to rigorously characterise the replenishment policy that minimises the long-run average cost of the system.

This work makes four primary contributions. First, we construct a rigorous and general mathematical model that synthesises the PDMP framework with impulse control, PH-distributed disruptions, and random yield, capturing a wide range of realistic system dynamics in a single, unified structure. Second, we formally establish the existence of a solution to the corresponding ACOE. We achieve this by employing the vanishing discount approach, a powerful technique in the theory of Markov decision processes that connects the more tractable discounted cost problem to the long-run average cost problem [31, 32]. Third, and most significantly, we prove that the optimal replenishment policy for this complex system possesses a state-dependent base-stock structure. This is our central structural result. It demonstrates that despite the non-exponential timings and multiple interacting sources of uncertainty, the optimal control logic retains an elegant and intuitive threshold-based form. This represents a significant generalisation of the classical optimality results of Scarf [2]. Finally, to provide a complete analytical treatment, we formulate a Policy Iteration Algorithm (PIA) for computing the optimal base-stock levels and rigorously prove its theoretical convergence. The PIA is a cornerstone of dynamic programming, and its efficiency and convergence properties are subjects of ongoing research [33, 34]. Its convergence in our generalised PDMP setting confirms that the optimal policy is not only structurally simple but also computationally attainable, thus completing the pathway from model formulation to the determination and computation of the optimal control strategy.

The remainder of this paper is organised as follows. Section 2 provides the detailed mathematical formulation of the problem, constructing the state space, defining the system dynamics within the PDMP framework, and justifying the key modelling assumptions. Section 3 introduces the ACOE and the verification theorem that establishes its connection to the optimal policy. In Section 4, we prove the existence of a solution to the ACOE via the vanishing discount approach, establish our main result on the optimality of state-dependent base-stock policies, and prove the convergence of a Policy Iteration Algorithm. Section 5 presents a numerical experiment to illustrate the theoretical results and provide managerial insights, quantifying the value of our modelling approach over simpler, memoryless approximations. Finally, Section 6 concludes the paper with a summary of our contributions, a discussion of further remarks on the model, and directions for future research.

2. Mathematical Formulation

We model the inventory system as a Piecewise Deterministic Markov Process (PDMP), a powerful class of stochastic processes well-suited for systems that evolve deterministically between random events [9, 10]. This section provides a rigorous formulation of the model. We begin by constructing the state space, meticulously defining each component to capture the system’s complex dynamics. We then introduce the control actions and the resulting process evolution, governed by an extended generator. Finally, we formulate the long-run average cost minimization problem.

2.1. System States, Model Parameters, and Key Assumptions

We model the inventory system as a Piecewise Deterministic Markov Process (PDMP) with impulse control, under a long-run average cost criterion. The fundamental definitions of the process generator, admissible control policies, and cost structure are standard in this field and are adapted from the literature (e.g., [10, 15]). Our contribution lies in synthesising these concepts into a unified model for an inventory system with non-exponential disruptions and random yield, and in rigorously proving the structural properties of its optimal policy.

To ensure the process is Markovian, the state vector must contain all information necessary to describe its future probabilistic evolution. This requires tracking not only the current inventory level but also the status of the stochastic environments and the full pipeline of outstanding orders. We build the state vector component by component to motivate its structure. We begin by formally defining the components of the state vector.

Definition 1

(State Space). The state of the system at time $t$ is given by the vector $X(t) = (I(t), \theta_D(t), \theta_S(t), j_D(t), j_S(t), m(t), O_1(t), \dots, O_{m(t)}(t))$. The components of $X(t)$ are:

$I(t) \in \mathbb{R}$: The inventory level. A positive value, $I(t) > 0$, denotes on-hand stock, while a negative value, $I(t) < 0$, represents backlogged demand. No lost sales are allowed.

$\theta_D(t)$ and $\theta_S(t)$: The discrete states (‘UP’ or ‘DOWN’) of the demand and supply environments, respectively. These environments modulate the system’s dynamics.

$j_D(t)$ and $j_S(t)$: The internal phases of the Phase-Type (PH) distributions that govern the sojourn times in the current demand and supply states. This structure allows for modelling non-exponential disruptions.

$m(t) \in \{0, 1, \dots, M\}$: The number of replenishment orders currently in transit (in the pipeline). $M$ is the maximum number of outstanding orders.

$O_i(t)$ for $i = 1, \dots, m(t)$: The state of the $i$-th outstanding order. A key feature of our model is that the structure of an order depends on the supply environment at its time of placement:

- If placed when $\theta_S = \text{UP}$, the lead time is deterministic. The state is $O_i = (Q_i, Y_i, a_i)$, where $Q_i$ is the order quantity, $Y_i$ is the i.i.d. random yield, and $a_i$ is the ‘age’ of the order.
- If placed when $\theta_S = \text{DOWN}$, the lead time is stochastic. The state is $O_i = (Q_i, Y_i, a_i, k_i)$, where $a_i$ tracks the deterministic part of the lead time ($L$), and $k_i$ is the phase of the stochastic component ($W$).
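The state vector of Definition 1 can be pictured as a small data structure. The sketch below is illustrative only: the class and field names (`Order`, `State`, `place_order`, `w_phase`) are hypothetical, and recording the yield fraction at placement time is a simplifying assumption made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Order:
    quantity: float
    yield_fraction: float          # random yield attached to this order
    age: float = 0.0               # time elapsed since placement
    w_phase: Optional[int] = None  # phase of W; None if placed while supply was UP


@dataclass
class State:
    inventory: float               # negative values represent backlog
    demand_env: str                # "UP" or "DOWN"
    supply_env: str                # "UP" or "DOWN"
    demand_phase: int
    supply_phase: int
    pipeline: List[Order] = field(default_factory=list)


def place_order(state, quantity, yield_fraction, max_orders, w_initial_phase=0):
    """Append a new order to the pipeline; the inventory level is left unchanged."""
    if len(state.pipeline) >= max_orders:
        raise ValueError("pipeline is full")
    # Orders placed while supply is DOWN carry an extra PH phase for W.
    w_phase = None if state.supply_env == "UP" else w_initial_phase
    state.pipeline.append(Order(quantity, yield_fraction, 0.0, w_phase))
    return state
```

Capping the pipeline at `max_orders` keeps the number of tracked components finite, which mirrors the role of the pipeline limit $M$ in the model.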

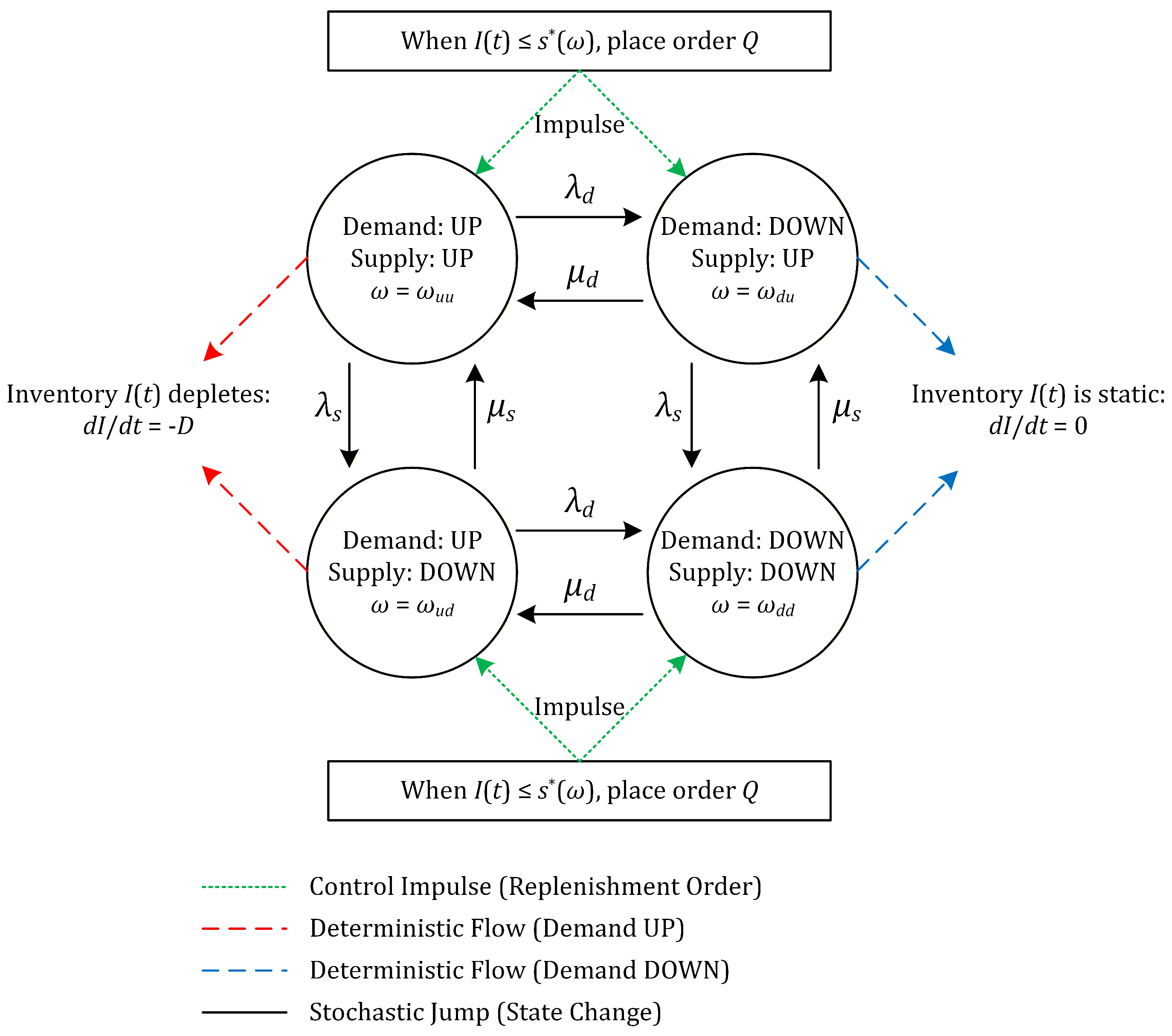

For notational convenience, we group the discrete and supplementary continuous variables as $z = (\theta_D, \theta_S, j_D, j_S, m, O_1, \dots, O_m)$. The full state vector is then $x = (I, z)$, and the state space $E$ is a Borel subset of a finite-dimensional Euclidean space. To aid comprehension of the system’s dynamics, Figure 1 provides a schematic representation of the PDMP framework. The inventory level, $I(t)$, evolves deterministically between random events, while the discrete environmental state, $(\theta_D(t), \theta_S(t))$, transitions stochastically. A control action (an impulse) is triggered when the inventory level hits a state-dependent threshold, causing an instantaneous jump in the pipeline state.

The model is parametrized by the following elements, which define the system’s physical and economic characteristics:

Stochastic environments: The sojourn times in the demand and supply environments are governed by independent Phase-Type (PH) distributions, characterized by their respective sub-infinitesimal generator matrices, $\mathbf{T}_D$ and $\mathbf{T}_S$, and initial phase probability vectors.

Demand rate: The demand rate is a constant $d > 0$ when the demand environment is in the ‘UP’ state and zero otherwise.

Lead times: The lead time includes a deterministic component $L > 0$. When the supply environment is ‘DOWN’, there is an additional stochastic component, $W$, which follows a PH-distribution.

Random yield: The received quantity is a random fraction $Y$ of the ordered quantity, where $Y$ follows a known distribution on $[0, 1]$.

Cost parameters: The cost structure includes positive constants for:

- Holding cost rate ($h$),
- Backorder cost rate ($p$),
- Fixed ordering cost ($K$),
- Variable (per-unit) ordering cost ($c$).

The control actions available to the decision maker are instantaneous decisions to place a replenishment order.

Definition 2

(Action Space). The action space for replenishment is a set $\mathcal{Q} \subseteq [0, \infty)$ of admissible order quantities. An action $Q \in \mathcal{Q}$ corresponds to placing an order of quantity $Q$.

2.2. Construction of the Controlled Process

Having defined the state space and the system’s parameters, we now formalise how a decision-maker can influence its evolution. An admissible control policy specifies the timing and sizing of replenishment orders, thereby shaping the stochastic process that describes the inventory system’s trajectory.

Definition 3

(Admissible Control Policy). An admissible control policy $\pi$ is a sequence of replenishment decisions $\pi = \{(\tau_n, Q_n)\}_{n \ge 1}$, where:

1. $\tau_n$ is a stopping time with respect to the natural filtration $\{\mathcal{F}_t\}_{t \ge 0}$ of the process, representing the time of the $n$-th order. We set $\tau_0 = 0$.

2. $Q_n$ is an $\mathcal{F}_{\tau_n}$-measurable random variable taking values in $\mathcal{Q}$, representing the quantity ordered at time $\tau_n$.

The set of all admissible policies is denoted by $\Pi$.

Remark 1

(On the Class of Admissible Policies). We make the following standard technical remarks regarding the class of admissible policies $\Pi$:

1. Scope of Policies: The definition of an admissible policy is general and encompasses both history-dependent and randomised policies. However, a central result of this paper is to demonstrate that the optimal policy, which we seek within this broad class, is in fact a simple, deterministic, stationary Markov (state-feedback) policy.

2. Non-Accumulation of Impulses: The impulse control framework implicitly requires that the stopping times are strictly increasing, i.e., $\tau_n < \tau_{n+1}$, and that $\tau_n \to \infty$ almost surely. This condition prevents the accumulation of an infinite number of interventions in a finite time interval, often termed ‘chattering’ controls. Imposing it entails no loss of optimality in our setting because each intervention incurs a strictly positive fixed cost $K > 0$, making any infinite sequence of orders over a finite horizon infinitely costly and thus manifestly suboptimal.

3. Measurability of Controls: For a state-feedback policy, defined by a function $q: E \to \mathcal{Q}$ that maps a state $x$ to an order quantity, we require that this function is Borel-measurable. This ensures that the resulting stochastic process remains well-defined. The existence of such a measurable selector is guaranteed in our context by standard results in dynamic programming and optimal control theory, particularly as the optimal policy is derived from the value function, which we prove to be continuous.

For any given policy $\pi \in \Pi$ and initial state $x \in E$, there exists a probability measure $\mathbb{P}_x^{\pi}$ on the space of all possible system trajectories. The process evolves as follows:

Between replenishments, for $t \in [\tau_n, \tau_{n+1})$, the process evolves as a PDMP governed by a generator $\mathcal{A}$, which we call the ‘no-order’ generator.

At replenishment time $\tau_n$, the state undergoes an instantaneous transition. If the state just before the order is $x = (I, z)$, the state immediately after is $x^{+} = (I, z^{+})$, where the pipeline component of $z^{+}$ is updated to reflect the new order $Q_n$. The inventory level $I$ itself does not change at the moment of order placement.

The system’s evolution between replenishment orders is determined by three local characteristics: the flow $\phi$, the jump rate $\lambda$, and the transition measure $Q(x, \cdot)$ (a kernel, not to be confused with an order quantity $Q$). These elements define the extended generator of the no-order process.

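Definition 4

(Generator of the No-Order Process). The extended generator $\mathcal{A}$ of the PDMP between replenishments acts on a suitable function $f$ from its domain $\mathcal{D}(\mathcal{A})$. It is composed of a drift operator and a jump operator:

$$\mathcal{A} f(x) = \mathcal{X} f(x) + \lambda(x) \int_E \bigl[ f(y) - f(x) \bigr]\, Q(x, dy),$$

where $\mathcal{X} f$ is the derivative along the flow:

$$\mathcal{X} f(x) = \frac{d}{dt} f\bigl(\phi(t, x)\bigr) \Big|_{t = 0}.$$

For our model, this corresponds to:

$$\mathcal{X} f(x) = -d\, \mathbf{1}_{\{\theta_D = \mathrm{UP}\}}\, \frac{\partial f}{\partial I}(x) + \sum_{i=1}^{m} \mathbf{1}_{\{a_i < L\}}\, \frac{\partial f}{\partial a_i}(x).$$

The domain $\mathcal{D}(\mathcal{A})$ consists of functions for which this expression is well-defined and which satisfy appropriate boundary conditions that account for deterministic jumps, such as the arrival of orders with deterministic lead times.

For the subsequent analysis, particularly the convergence arguments in the vanishing discount proof, it is necessary to formally define the functional space in which the generator operates and to state its key properties.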

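Lemma 1

(Functional Space for the Generator). Let $V$ be the Lyapunov function from Assumption 2. We consider the generator $\mathcal{A}$ as an operator on the Banach space $C_V(E)$ of all continuous functions $f: E \to \mathbb{R}$ with finite weighted supremum norm:

$$\|f\|_V = \sup_{x \in E} \frac{|f(x)|}{V(x)} < \infty.$$

The domain of the generator, $\mathcal{D}(\mathcal{A})$, is the subspace of functions $f \in C_V(E)$ for which the expression for $\mathcal{A} f$ in Definition 4 is well-defined and for which $\mathcal{A} f \in C_V(E)$. The operator $\mathcal{A}$ has the following crucial properties:

1. The domain $\mathcal{D}(\mathcal{A})$ is dense in the space $C_V(E)$.

2. The operator $\mathcal{A}$ is a closed linear operator on $C_V(E)$.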

Remark 2.

The properties of denseness and closure, stated above, are fundamental technical results in the theory of Piecewise Deterministic Markov Processes. The proofs, which typically rely on semigroup theory and resolvent operator analysis, are beyond the scope of this paper but can be found in standard texts on the subject, such as Davis [10]. The closure property is particularly critical, as it provides the rigorous mathematical justification for passing limits through the generator (i.e., showing that if $f_n \to f$ and $\mathcal{A} f_n \to g$ in $C_V(E)$, then $f \in \mathcal{D}(\mathcal{A})$ and $\mathcal{A} f = g$). This is implicitly used in the convergence arguments of the vanishing discount proof in Section 4.

2.3. Problem Formulation and Cost Structure

The objective is to find an admissible policy that minimizes the long-run average cost, which is composed of running costs for holding and backorders, and impulse costs for placing replenishment orders.

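Definition 5

(Long-Run Average Cost). The instantaneous running cost rate at state $x = (I, z)$ is given by

$$g(x) = h I^{+} + p I^{-},$$

where $I^{+} = \max(I, 0)$ and $I^{-} = \max(-I, 0)$. The cost associated with placing an order of size $Q$ is $K + cQ$. For a given admissible policy $\pi$ and initial state $x$, the long-run average cost is defined as:

$$J(x, \pi) = \limsup_{T \to \infty} \frac{1}{T}\, \mathbb{E}_x^{\pi} \left[ \int_0^T g(X(t))\, dt + \sum_{n=1}^{N_T} (K + c Q_n) \right],$$

where $N_T$ is the number of orders placed up to time $T$.

We make the following standard assumption about the structure of the running cost, which is crucial for determining the structure of the optimal policy.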

Assumption 1

(Convex Costs). The instantaneous cost rate is a convex function of the inventory level I.
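To make the cost structure concrete, the following sketch evaluates a piecewise-linear holding and backorder cost rate and integrates it exactly over one deterministic flow segment, during which inventory falls linearly at the demand rate. The function names and the parameter names `h`, `p`, `K`, `c` are assumptions chosen for this example, and the finite-horizon average is only a sample-path analogue of the long-run criterion, not the expectation itself.

```python
def running_cost(inventory, h, p):
    """Cost rate h * I^+ + p * I^-, which is convex in the inventory level."""
    return h * max(inventory, 0.0) + p * max(-inventory, 0.0)


def segment_cost(inventory, demand_rate, duration, h, p):
    """Exact integral of the running cost while inventory falls at demand_rate."""
    end = inventory - demand_rate * duration
    if demand_rate == 0 or inventory <= 0 or end >= 0:
        # No sign change on the segment: the cost rate is linear in time,
        # so the trapezoid rule is exact.
        return 0.5 * (running_cost(inventory, h, p) + running_cost(end, h, p)) * duration
    # Inventory crosses zero: holding-cost triangle, then backorder-cost triangle.
    t_zero = inventory / demand_rate
    return 0.5 * h * inventory * t_zero + 0.5 * p * (-end) * (duration - t_zero)


def sample_average_cost(total_running_cost, order_quantities, K, c, horizon):
    """Finite-horizon average of running costs plus K + c * Q per order."""
    ordering = sum(K + c * q for q in order_quantities)
    return (total_running_cost + ordering) / horizon
```

For example, starting at inventory 4 with demand rate 2 over a segment of length 3 and rates `h = 1`, `p = 3`, the inventory reaches zero at time 2 and ends at -2, so the segment cost is 4 (holding) plus 3 (backorders).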

The central problem of this paper is to find a policy that minimizes this cost criterion over the set of all admissible policies.

Definition 6

(Optimal Control Problem). The optimal long-run average cost, $\rho^{*}$, is the infimum of the cost functional over all admissible policies:

$$\rho^{*} = \inf_{\pi \in \Pi} J(x, \pi).$$

An admissible policy $\pi^{*}$ is optimal if it achieves this infimum, i.e., $J(x, \pi^{*}) = \rho^{*}$.

2.4. Summary of Key Model Features

For clarity, we summarize the main features of our model, which will be assumed throughout the paper. This framework synthesizes several realistic aspects of modern inventory systems.

System structure: The model considers a single-item, continuous-review inventory system with full backlogging. A maximum of M replenishment orders can be in the pipeline simultaneously.

Stochastic environments: Both the demand and supply processes are modulated by independent two-state (‘UP’/‘DOWN’) environment processes. The sojourn times in all environmental states are governed by Phase-Type (PH) distributions, allowing for non-exponential behaviour; each environment becomes a continuous-time Markov chain only once its current PH phase is included in the state.

Demand rate: The demand rate is a constant $d$ when the demand environment is in the ‘UP’ state and is zero otherwise.

Lead time: The lead time is a deterministic constant $L$ if an order is placed when the supply environment is ‘UP’. It is a stochastic quantity $L + W$, where $W$ is a PH-distributed random variable, if the order is placed when the supply is ‘DOWN’.

Random yield: The received quantity is a random fraction of the ordered quantity $Q_n$, given by $Y_n Q_n$, where $\{Y_n\}$ is an i.i.d. sequence of random variables with a known distribution on $[0, 1]$.

Costs: The cost structure includes positive constants for holding ($h$), backorders ($p$), fixed ordering ($K$), and per-unit ordering ($c$).

Control: The control is of impulse type, where the decision-maker chooses the timing ($\tau_n$) and sizing ($Q_n$) of replenishment orders.

Initial conditions: The initial state of the system, $X(0) = x_0$, is known and non-random.

Finally, for the long-run average cost criterion to be well-defined, we impose a standard stability condition on the system. Specifically, we assume a Foster–Lyapunov drift condition, which is a powerful form of Lyapunov condition common in the analysis of stochastic processes:

Assumption 2

(Lyapunov Condition). There exists a function $V: E \to [1, \infty)$, constants $\gamma > 0$, $b < \infty$, and for every admissible impulse control policy $\pi$, its corresponding generator $\mathcal{A}^{\pi}$, such that

$$\mathcal{A}^{\pi} V(x) \le -\gamma V(x) + b \quad \text{for all } x \in E.$$

Furthermore, the running cost is bounded by this function, i.e., $g(x) \le C_g V(x)$ for some constant $C_g > 0$, and the intervention cost is bounded such that the expected post-replenishment value of $V$ is controlled for any order quantity $Q$.

Remark 3

(On the Lyapunov Condition). Assumption 2 is a strong stability condition that is fundamental to long-run average cost analysis. While providing a formal proof for our specific model is complex, the assumption is economically intuitive. The function $V$ can be interpreted as a measure of the system’s ‘energy’ or distance from an ideal state (e.g., zero inventory). The condition implies that, on average, the system experiences a drift back towards a central region of the state space, driven by the economic incentives of the cost structure.

The economic intuition that the cost structure induces stability can be made mathematically rigorous. A constructive proof showing that our specific inventory model satisfies this assumption by building an explicit Lyapunov function is provided in Appendix B.
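To see why a drift condition of this kind keeps the average cost finite, consider the following sketch. It assumes the drift inequality takes the geometric form $\mathcal{A} V \le -\gamma V + b$ with constants $\gamma > 0$ and $b < \infty$, and that the running cost satisfies $g \le C_g V$; these symbols are illustrative assumptions. The sketch ignores impulses and the localisation arguments needed to apply Dynkin's formula rigorously, so it is a heuristic outline rather than a proof.

```latex
\frac{d}{dt}\, \mathbb{E}_x\bigl[V(X(t))\bigr]
  = \mathbb{E}_x\bigl[\mathcal{A} V(X(t))\bigr]
  \le -\gamma\, \mathbb{E}_x\bigl[V(X(t))\bigr] + b
\;\;\Longrightarrow\;\;
\mathbb{E}_x\bigl[V(X(t))\bigr] \le e^{-\gamma t}\, V(x) + \frac{b}{\gamma}\bigl(1 - e^{-\gamma t}\bigr),
\qquad
\limsup_{T \to \infty} \frac{1}{T}\, \mathbb{E}_x\!\left[\int_0^T g(X(t))\, dt\right] \le \frac{C_g\, b}{\gamma}.
```

The influence of the initial state decays exponentially, which is the mechanism behind the claim that the long-run average cost does not depend on where the system starts.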

2.5. On the Necessity of Key Model Assumptions

To achieve our theoretical outcomes, several key assumptions are made. Here, we justify their necessity within our analytical framework.

Cost Convexity: The assumption of a convex running cost function (Assumption 1) is the foundational pillar for our main structural result. Without it, the value function would not be guaranteed to be convex. The convexity of the value function is the critical property that ensures the optimal policy has a simple and elegant base-stock structure. Non-convex costs could lead to complex, multi-threshold, or even non-threshold optimal policies.

Full Backlogging: Allowing for full backlogging (i.e., an inventory level that can become arbitrarily negative) simplifies the state space by removing a boundary at zero. If we were to assume lost sales, the inventory level would be bounded below by zero, introducing a reflection at this boundary which would significantly complicate the proof of the value function’s convexity.

Lyapunov Condition (Bounded Moments): The Lyapunov-type stability condition (Assumption 2) is a technical requirement that is essential for long-run average cost analysis. It guarantees the ergodicity of the process under any stationary policy, ensuring that the average cost is well-defined and independent of the initial state. The vanishing discount approach, which connects the discounted problem to the average cost problem, relies fundamentally on this stability condition for convergence.

Phase-Type (PH) Structure: The use of PH distributions is a crucial modelling choice that balances generality and tractability. It allows us to model non-exponential event timings, which is a significant step towards realism. At the same time, the underlying Markovian structure of PH distributions allows us to embed the process into a finite-dimensional state space. Without this structure (e.g., using arbitrary general distributions), the state would need to track the elapsed time in a given state, leading to an infinite-dimensional state space and a far more complex, often intractable, analysis.

Pipeline Limit M: Limiting the maximum number of outstanding orders, M, is necessary to ensure the state space remains finite-dimensional. If an infinite number of orders were allowed in the pipeline, the state vector would require an infinite number of components to track the age and status of each order, making the problem analytically and computationally intractable. This assumption is also practically reasonable in most real-world logistics systems.

4. Solution of the Optimality Equation

The Verification Theorem establishes that if a solution to the Average Cost Optimality Equation (ACOE) exists, the optimal control problem is solved. The more substantial theoretical challenge, which we address in this section, is to prove that such a solution is guaranteed to exist. Our approach is the vanishing discount method. We first introduce a family of related discounted-cost problems, which are more tractable. We then establish key properties of their value functions, such as boundedness and equi-continuity. Finally, using an Arzelà–Ascoli argument, we show that as the discount factor vanishes, a subsequence of these solutions converges to a pair that solves the original ACOE.

4.1. Existence of an Optimal Policy via Vanishing Discount

We prove the existence of a solution to the ACOE by the vanishing discount method: we analyse a family of more tractable discounted-cost problems and show that their solutions converge to a solution of the average-cost problem as the discount rate vanishes. This convergence relies critically on the stability of the system, which is guaranteed by the Lyapunov condition stated in Assumption 2 [15].

The following lemmas establish key properties of the value functions for the associated discounted problems, which are essential for the vanishing discount argument.

Lemma 2

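(Properties of the Discounted Value Function). Under Assumption 2, for any sufficiently small $\alpha > 0$, there exists a constant $M$ such that the discounted value function $V_\alpha$ satisfies $|V_\alpha(x)| \le M\,W(x)$ for all $x \in E$, where $W$ denotes the Lyapunov function of Assumption 2.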

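Proof. The discounted value function for a given initial state $x \in E$ is defined as the infimum of the total expected discounted cost over all admissible policies $\pi$:

$$V_\alpha(x) = \inf_{\pi} J_\alpha(x, \pi),$$

where

$$J_\alpha(x, \pi) = \mathbb{E}_x^{\pi}\left[\int_0^\infty e^{-\alpha t} f(X_t)\,dt + \sum_{i \ge 1} e^{-\alpha \tau_i}\,(K + c\,Q_i)\right],$$

and $\tau_i$ and $Q_i$ denote the $i$-th ordering time and order quantity.

Part 1: Lower Bound. The cost parameters are assumed to be non-negative: the running cost rate $f(x) \ge 0$ for all $x \in E$, the fixed cost $K \ge 0$, and the variable cost $c \ge 0$. The order quantities $Q_i$ are also non-negative. Consequently, the total cost functional $J_\alpha(x, \pi)$ is non-negative for any policy $\pi$. The infimum of a set of non-negative numbers is also non-negative. Therefore, we immediately have the lower bound:

$$V_\alpha(x) \ge 0.$$

Part 2: Upper Bound. To establish an upper bound, it suffices to find a single admissible policy whose cost is bounded by a multiple of $W(x)$. Since $V_\alpha(x)$ is the infimum over all policies, its value must be less than or equal to the cost of this particular policy. We consider the simplest admissible policy: the ‘never order’ policy, denoted by $\pi_0$, where no replenishment orders are ever placed.

Under $\pi_0$, the summation term in the cost functional is zero. The cost is purely the expected discounted running cost, which is given by:

$$J_\alpha(x, \pi_0) = \mathbb{E}_x^{\pi_0}\left[\int_0^\infty e^{-\alpha t} f(X_t)\,dt\right].$$

Let $\mathcal{A}_0$ denote the extended generator of the PDMP under the no-ordering policy (as defined in Definition 4). The integral expression above is the definition of the resolvent operator $R_\alpha = (\alpha - \mathcal{A}_0)^{-1}$ applied to the function f. Thus, we have:

$$V_\alpha(x) \le J_\alpha(x, \pi_0) = R_\alpha f(x). \tag{11}$$

Our goal is now to bound $R_\alpha f(x)$. From Assumption 2, we have a bound on the running cost in terms of the Lyapunov function: $f(x) \le c_f\,W(x)$ for some constant $c_f > 0$. The resolvent operator $R_\alpha$ is a positive operator because its integral representation involves an expectation; that is, if $g_1(x) \le g_2(x)$ for all x, then $R_\alpha g_1(x) \le R_\alpha g_2(x)$. Applying this property and the linearity of the operator, we get:

$$R_\alpha f(x) \le c_f\,R_\alpha W(x). \tag{12}$$

The problem is now reduced to finding a bound for $R_\alpha W(x)$. We use the Lyapunov drift condition from Assumption 2 for the no-order generator $\mathcal{A}_0$, with constants $\gamma > 0$ and $b \ge 0$:

$$\mathcal{A}_0 W(x) \le -\gamma\,W(x) + b.$$

Rearranging this inequality, we have:

$$-\mathcal{A}_0 W(x) \ge \gamma\,W(x) - b.$$

Adding $\alpha W(x)$ to both sides gives:

$$\alpha W(x) - \mathcal{A}_0 W(x) \ge (\alpha + \gamma)\,W(x) - b.$$

The left-hand side is precisely $(\alpha - \mathcal{A}_0) W(x)$. Applying the resolvent operator $R_\alpha$ to both sides of the inequality preserves the inequality due to positivity:

$$R_\alpha (\alpha - \mathcal{A}_0) W(x) \ge R_\alpha\big[(\alpha + \gamma) W - b\big](x).$$

By definition, $R_\alpha$ is the inverse of $(\alpha - \mathcal{A}_0)$, so the left-hand side simplifies to $W(x)$. By linearity of $R_\alpha$, the right-hand side becomes $(\alpha + \gamma) R_\alpha W(x) - R_\alpha b$. Thus,

$$W(x) \ge (\alpha + \gamma)\,R_\alpha W(x) - R_\alpha b.$$

The resolvent of a constant $b$ is simply $b/\alpha$. Substituting this in, we obtain:

$$W(x) \ge (\alpha + \gamma)\,R_\alpha W(x) - \frac{b}{\alpha}.$$

Since $\alpha > 0$ and $\gamma > 0$, the term $(\alpha + \gamma)$ is strictly positive, allowing us to rearrange and solve for $R_\alpha W(x)$:

$$R_\alpha W(x) \le \frac{1}{\alpha + \gamma}\left(W(x) + \frac{b}{\alpha}\right). \tag{13}$$

Now, we combine the bounds. Substituting (13) into (12), and then into (11), we get:

$$V_\alpha(x) \le \frac{c_f}{\alpha + \gamma}\left(W(x) + \frac{b}{\alpha}\right).$$

From Assumption 2, $W(x) \ge 1$ for all $x \in E$. We can therefore bound the constant term by a multiple of $W(x)$:

$$\frac{b}{\alpha} \le \frac{b}{\alpha}\,W(x).$$

This allows us to consolidate the bound into a single term proportional to $W(x)$:

$$V_\alpha(x) \le \frac{c_f}{\alpha + \gamma}\left(1 + \frac{b}{\alpha}\right) W(x).$$

Let us define the term in parentheses, together with its prefactor, as $M(\alpha)$:

$$M(\alpha) = \frac{c_f}{\alpha + \gamma}\left(1 + \frac{b}{\alpha}\right).$$

For any fixed $\alpha > 0$, $M(\alpha)$ is a finite positive constant. Let us choose an interval $(0, \alpha_0]$ for sufficiently small $\alpha_0 > 0$. The function $M(\alpha)$ is continuous on this interval and approaches a finite limit as $\alpha \to 0$ if $b = 0$. If $b > 0$, the term is unbounded near $\alpha = 0$. However, the lemma only requires existence of a constant for any given sufficiently small $\alpha$. For any fixed $\alpha \in (0, \alpha_0]$, we can set $M = M(\alpha)$, which is finite. Written more simply for a given $\alpha$:

$$M = \frac{c_f\,(\alpha + b)}{\alpha\,(\alpha + \gamma)}.$$

We have thus established that for any sufficiently small $\alpha > 0$, there exists a constant $M$ such that $V_\alpha(x) \le M\,W(x)$.

Conclusion. Combining the lower and upper bounds, we have $0 \le V_\alpha(x) \le M\,W(x)$. Since $V_\alpha(x) \ge 0$, this implies $|V_\alpha(x)| \le M\,W(x)$, which completes the proof. □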
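The resolvent bound used in Part 2 can be checked numerically on a small finite-state Markov chain, for which the resolvent is simply the matrix inverse $(\alpha I - Q)^{-1}$. The sketch below is only an illustration: the three-state generator `Q`, the vector `W`, and the constants `GAMMA`, `B`, and `ALPHA` are hypothetical values chosen so that the drift inequality $QW \le -\gamma W + b$ holds. They are not taken from the model of this paper.

```python
def solve_linear(a, rhs):
    """Solve a @ u = rhs by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def resolvent(q, alpha, g):
    """Return R_alpha g = (alpha * I - Q)^{-1} g for a generator matrix Q."""
    n = len(q)
    a = [[(alpha if i == j else 0.0) - q[i][j] for j in range(n)]
         for i in range(n)]
    return solve_linear(a, g)


# Hypothetical 3-state generator (rows sum to zero) and Lyapunov vector W >= 1.
Q = [[-1.0, 1.0, 0.0],
     [0.5, -1.5, 1.0],
     [0.0, 2.0, -2.0]]
W = [1.0, 2.0, 4.0]
GAMMA, B = 1.0, 3.5   # assumed drift constants: (Q W)(x) <= -GAMMA * W(x) + B
ALPHA = 0.1           # assumed discount rate

# Check the drift condition state by state.
drift = [sum(Q[i][j] * W[j] for j in range(3)) for i in range(3)]
drift_ok = all(drift[i] <= -GAMMA * W[i] + B + 1e-12 for i in range(3))

# Resolvent of a constant: should equal B / ALPHA in every state.
r_const = resolvent(Q, ALPHA, [B] * 3)

# Resolvent of W and the upper bound from inequality (13).
r_w = resolvent(Q, ALPHA, W)
bound = [(W[i] + B / ALPHA) / (ALPHA + GAMMA) for i in range(3)]

if __name__ == "__main__":
    print("drift condition holds:", drift_ok)
    print("R_alpha b:", r_const)
    print("R_alpha W:", r_w)
    print("bound    :", bound)
```

In this toy setting the entries of `r_w` stay below the corresponding entries of `bound`, which is the finite-state analogue of the inequality used to control the cost of the never-order policy.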

Lemma 3

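(Equi-continuity of Centred Value Functions). Under the assumptions of Lemma 2, the family of centred value functions $\{h_\alpha\}$, where $h_\alpha(x) = V_\alpha(x) - V_\alpha(x_0)$ for a fixed reference state $x_0 \in E$, is equi-continuous on any compact subset of the state space E.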

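Proof. The proof proceeds by establishing a stronger property: the family of value functions $\{V_\alpha\}$ is equi-Lipschitz continuous on any compact subset $K \subset E$. Equi-continuity of the centred functions is then a direct consequence.

Let $K \subset E$ be an arbitrary compact set. Our objective is to show that there exists a Lipschitz constant $L_K$, independent of $\alpha$, such that for any $x_1, x_2 \in K$:

$$|V_\alpha(x_1) - V_\alpha(x_2)| \le L_K\,\|x_1 - x_2\|.$$

The proof is structured in four steps.

Step 1: Sub-optimality and Coupling Framework. Let $x_1, x_2 \in K$. By the definition of the value function, for any $\varepsilon > 0$, there exists a policy $\pi^\varepsilon$ that is $\varepsilon$-optimal for the initial state $x_1$. That is,

$$J_\alpha(x_1, \pi^\varepsilon) \le V_\alpha(x_1) + \varepsilon.$$

Since $\pi^\varepsilon$ is an admissible policy for any starting state, its cost when starting from $x_2$ provides an upper bound on the optimal cost $V_\alpha(x_2)$:

$$V_\alpha(x_2) \le J_\alpha(x_2, \pi^\varepsilon).$$

Combining these two inequalities, we can bound the difference $V_\alpha(x_2) - V_\alpha(x_1)$:

$$V_\alpha(x_2) - V_\alpha(x_1) \le J_\alpha(x_2, \pi^\varepsilon) - J_\alpha(x_1, \pi^\varepsilon) + \varepsilon. \tag{14}$$

The core of the proof is to bound the difference in costs, $|J_\alpha(x_2, \pi^\varepsilon) - J_\alpha(x_1, \pi^\varepsilon)|$. To do this, we employ a coupling argument. We construct two sample paths of the PDMP, denoted by $X_t^{(1)}$ and $X_t^{(2)}$, on the same probability space. The two processes start from different initial states, $x_1$ and $x_2$, but are driven by the same policy $\pi^\varepsilon$ and are subjected to the same realization of all underlying random events (i.e., the same sequence of PH-sojourn times, random yields, etc.).

Step 2: Bounding the Expected Coupling Time. We define the coupling time, $\tau_c$, as the first time the two processes meet in the state space:

$$\tau_c = \inf\{t \ge 0 : X_t^{(1)} = X_t^{(2)}\}.$$

For all $t \ge \tau_c$, the trajectories are identical, $X_t^{(1)} = X_t^{(2)}$, because from time $\tau_c$ onward they evolve from the same state and are driven by the same policy and random inputs. The Lyapunov condition (Assumption 2) ensures geometric ergodicity of the process, which implies that the expected time to couple is finite and can be bounded. For any compact set K, it is a standard result in the theory of stochastic processes under such drift conditions that there exists a constant $C_K$ such that for any $x_1, x_2 \in K$:

$$\mathbb{E}[\tau_c] \le C_K\,\|x_1 - x_2\|. \tag{15}$$

Step 3: Bounding the Cost Difference. The difference in the total discounted cost between the two paths is non-zero only on the stochastic interval $[0, \tau_c)$. We have:

$$J_\alpha(x_1, \pi^\varepsilon) - J_\alpha(x_2, \pi^\varepsilon) = \mathbb{E}\left[\int_0^{\tau_c} e^{-\alpha t}\big(f(X_t^{(1)}) - f(X_t^{(2)})\big)\,dt + \sum_{i=1}^{N(\tau_c)} e^{-\alpha \tau_i}\,c\,\big(Q_i^{(1)} - Q_i^{(2)}\big)\right],$$

where $N(\tau_c)$ is the number of orders placed up to time $\tau_c$. The fixed costs K cancel out perfectly because under the coupling, an order is placed on both paths at the same times $\tau_i$ until time $\tau_c$.

Since K is compact, there exists a larger compact set $K'$ such that $X_t^{(1)}, X_t^{(2)} \in K'$ for all $t \le \tau_c$. The running cost function $f$ and the policy decision functions (which determine $Q_i$) are continuous on $K'$, and therefore Lipschitz continuous. Let $L_f$ and $L_Q$ be the respective Lipschitz constants.

Instantaneous Cost Difference: Since $X_t^{(1)}, X_t^{(2)} \in K'$, their distance is bounded by the diameter of $K'$, $\operatorname{diam}(K')$. Thus, the integral is bounded by $L_f \operatorname{diam}(K')\,\mathbb{E}[\tau_c]$.

Variable Ordering Cost Difference: The difference in ordered quantities can be bounded as $|Q_i^{(1)} - Q_i^{(2)}| \le L_Q\,\|X_{\tau_i}^{(1)} - X_{\tau_i}^{(2)}\|$. The expected number of orders up to time $\tau_c$, $\mathbb{E}[N(\tau_c)]$, is also bounded by the Lyapunov condition. Specifically, there exists a constant $C_N$ such that $\mathbb{E}[N(\tau_c)] \le C_N\,\mathbb{E}[\tau_c]$. Combining these, we can bound the expected sum of variable cost differences by a term proportional to $\mathbb{E}[\tau_c]$.

Aggregating these bounds, we can find a constant $C'$, which depends on the Lipschitz constants $L_f, L_Q$, the cost parameter c, and properties of the compact set $K'$, but is crucially independent of $\alpha$. This leads to:

$$|J_\alpha(x_1, \pi^\varepsilon) - J_\alpha(x_2, \pi^\varepsilon)| \le C'\,\mathbb{E}[\tau_c]. \tag{16}$$

Substituting the bound on the expected coupling time from (15) into (16) gives:

$$|J_\alpha(x_1, \pi^\varepsilon) - J_\alpha(x_2, \pi^\varepsilon)| \le C'\,C_K\,\|x_1 - x_2\|.$$

Let $L_K = C'\,C_K$. Now we return to our initial inequality (14):

$$V_\alpha(x_2) - V_\alpha(x_1) \le L_K\,\|x_1 - x_2\| + \varepsilon.$$

By symmetry, we can reverse the roles of $x_1$ and $x_2$ to obtain the same bound for $V_\alpha(x_1) - V_\alpha(x_2)$. Therefore,

$$|V_\alpha(x_1) - V_\alpha(x_2)| \le L_K\,\|x_1 - x_2\| + \varepsilon.$$

Since this holds for any $\varepsilon > 0$, we can let $\varepsilon \to 0$ to obtain the desired Lipschitz condition for the value functions:

$$|V_\alpha(x_1) - V_\alpha(x_2)| \le L_K\,\|x_1 - x_2\|.$$

Step 4: Conclusion for Centred Value Functions. The Lipschitz continuity of the value functions $V_\alpha$ on the compact set K with a uniform constant $L_K$ (independent of $\alpha$) implies that the family is equi-Lipschitz continuous. For the centred value functions $h_\alpha(x) = V_\alpha(x) - V_\alpha(x_0)$, we have for any $x_1, x_2 \in K$:

$$|h_\alpha(x_1) - h_\alpha(x_2)| = |V_\alpha(x_1) - V_\alpha(x_2)| \le L_K\,\|x_1 - x_2\|.$$

Thus, the family $\{h_\alpha\}$ is also equi-Lipschitz continuous, and therefore equi-continuous, on any compact subset of E. This completes the proof. □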

With these foundational properties, the existence of a solution to the ACOE can be established through a standard Arzelà–Ascoli argument on the centred value functions, which is a cornerstone of the vanishing discount method. The key outcome is the following theorem.

Theorem 2

(Existence of ACOE Solution). Under Assumption 2, there exists a pair $(\rho, h)$ that solves the ACOE (6). The function $h$ is continuous, and $\rho$ is a constant.

Proof. The proof follows the standard vanishing discount approach and is structured in three main steps. We start with the family of discounted-cost problems and their associated value functions $V_\alpha$, which solve the discounted Hamilton–Jacobi–Bellman (HJB) equation, a quasi-variational inequality (QVI). We then show that as the discount rate $\alpha \to 0$, a subsequence of these solutions converges to a pair $(\rho, h)$ that solves the ACOE.

Step 1: Boundedness and Compactness of Centred Value Functions. Let $x_0 \in E$ be a fixed reference state. The family of centred value functions is defined as $h_\alpha(x) = V_\alpha(x) - V_\alpha(x_0)$ for $x \in E$. We first establish the properties of this family that will allow us to use the Arzelà–Ascoli theorem.

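Local Uniform Boundedness of $h_\alpha$: As established in Lemma 2, for any sufficiently small $\alpha$, $|V_\alpha(x)| \le M\,W(x)$ for some constant M. The Lyapunov function $W$ is continuous and therefore bounded on any compact set $K \subset E$. Thus, the family $\{V_\alpha\}$ is uniformly bounded on any compact set. Consequently, the family of centred value functions $\{h_\alpha\}$ is also uniformly bounded on any compact set K:

$$\sup_{x \in K} |h_\alpha(x)| \le \sup_{x \in K} |V_\alpha(x)| + |V_\alpha(x_0)| \le M\Big(\sup_{x \in K} W(x) + W(x_0)\Big).$$

Equi-continuity of $h_\alpha$: Lemma 3 establishes that the family $\{V_\alpha\}$ is equi-Lipschitz on any compact subset of E. Since $h_\alpha = V_\alpha - V_\alpha(x_0)$ differs from $V_\alpha$ only by a constant, the family $\{h_\alpha\}$ is also equi-Lipschitz, and therefore equi-continuous, on any compact subset of E.

Boundedness of $\alpha V_\alpha(x_0)$: The scaled value at the reference state, $\alpha V_\alpha(x_0)$, is bounded. This is a direct consequence of Lemma 2, which states $|V_\alpha(x_0)| \le M\,W(x_0)$, implying $|\alpha V_\alpha(x_0)| \le \alpha M\,W(x_0)$. For $\alpha$ in a bounded interval, e.g., $(0, \alpha_0]$, this value is bounded.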

Step 2: Existence of a Convergent Subsequence via Arzelà–Ascoli. Given that the sequence $\{\alpha_k V_{\alpha_k}(x_0)\}$ is bounded for any sequence $\alpha_k \to 0$, by the Bolzano–Weierstrass theorem, there exists a convergent subsequence. Let us, by a slight abuse of notation, denote this subsequence again by $\{\alpha_k\}$ and its limit by $\rho$.

Let $\{K_n\}_{n \ge 1}$ be an increasing sequence of compact sets such that $\bigcup_{n} K_n = E$. From Step 1, the sequence of functions $\{h_{\alpha_k}\}$ is uniformly bounded and equi-continuous on the compact set $K_1$. By the Arzelà–Ascoli theorem, there exists a subsequence, which we denote $\{h_{\alpha_k^{(1)}}\}$, that converges uniformly on $K_1$ to a continuous function $h^{(1)}$.

Next, considering the sequence $\{h_{\alpha_k^{(1)}}\}$ on the compact set $K_2$, we can similarly extract a further subsequence $\{h_{\alpha_k^{(2)}}\}$ that converges uniformly on $K_2$ to a continuous function $h^{(2)}$. Since $K_1 \subset K_2$, this subsequence also converges uniformly on $K_1$, and by uniqueness of limits, $h^{(2)} = h^{(1)}$ on $K_1$.

We continue this process for all $n \ge 1$. Now, consider the diagonal sequence $\{h_{\alpha_k^{(k)}}\}$. This sequence converges uniformly on every compact set to a continuous function h. For simplicity, we relabel this convergent subsequence as $\{h_{\alpha_k}\}$, so we have:

$$h_{\alpha_k} \to h \ \text{uniformly on compact sets}, \qquad \alpha_k V_{\alpha_k}(x_0) \to \rho, \qquad \text{as } k \to \infty.$$

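Step 3: Convergence in the HJB Equation to the ACOE. Each value function $V_\alpha$ solves the discounted HJB equation, which is a QVI. Writing $\mathcal{A}$ for the generator of the uncontrolled process and $\mathcal{M}$ for the intervention operator, $\mathcal{M}v(x) = \inf_{Q}\{K + cQ + \mathbb{E}[v(x^{Q})]\}$ with $x^{Q}$ the post-order state, and substituting $V_\alpha = h_\alpha + V_\alpha(x_0)$, this QVI is:

$$\min\Big\{\mathcal{A} h_\alpha(x) - \alpha h_\alpha(x) - \alpha V_\alpha(x_0) + f(x),\ \ \mathcal{M} h_\alpha(x) - h_\alpha(x)\Big\} = 0.$$

Let us analyse the limit as $k \to \infty$ of the two terms in the ‘min’ operator.

1. Limit of the Continuation Term: Let $C_k(x) = \mathcal{A} V_{\alpha_k}(x) - \alpha_k V_{\alpha_k}(x) + f(x)$. Substituting for $V_{\alpha_k}$, we get:

$$C_k(x) = \mathcal{A} h_{\alpha_k}(x) - \alpha_k h_{\alpha_k}(x) - \alpha_k V_{\alpha_k}(x_0) + f(x).$$

We analyse the limit of each component as $k \to \infty$:

$\alpha_k h_{\alpha_k}(x) \to 0$ because $\alpha_k \to 0$ and $h_{\alpha_k}$ is locally uniformly bounded.

$\alpha_k V_{\alpha_k}(x_0) \to \rho$ by construction of the subsequence.

The generator term $\mathcal{A} h_{\alpha_k}(x)$ converges to $\mathcal{A} h(x)$. This is the most technical part. The space of continuous functions with at most linear growth, endowed with a weighted supremum norm $\|g\|_W = \sup_{x \in E} |g(x)| / W(x)$, is a Banach space. The generator $\mathcal{A}$ is a closed operator on this space. The uniform bound from the Lyapunov condition implies that $h_{\alpha_k}$ and h are in this space. The uniform convergence of $h_{\alpha_k}$ to h on compact sets, combined with the weighted bound, ensures strong convergence in this Banach space. Since $\mathcal{A} h_{\alpha_k} = C_k + \alpha_k h_{\alpha_k} + \alpha_k V_{\alpha_k}(x_0) - f$ and we know that $C_k$ must converge (as shown below), it implies that $\mathcal{A} h_{\alpha_k}$ must also converge. By the property of closed operators, its limit must be $\mathcal{A} h$.

Thus, we conclude that $\lim_{k \to \infty} C_k(x) = \mathcal{A} h(x) + f(x) - \rho$.

2. Limit of the Impulse Term: Let $D_k(x) = \mathcal{M} V_{\alpha_k}(x) - V_{\alpha_k}(x)$. The term $V_{\alpha_k}(x_0)$ is a constant with respect to the infimum over Q, so it can be separated:

$$D_k(x) = \inf_{Q}\big\{K + cQ + \mathbb{E}[h_{\alpha_k}(x^{Q})]\big\} - h_{\alpha_k}(x).$$

Let $g_k(x, Q) = K + cQ + \mathbb{E}[h_{\alpha_k}(x^{Q})]$. The objective function inside the infimum is continuous in Q and converges uniformly in $x$ on compact sets. By Berge’s Maximum Theorem, the convergence of the functions implies the convergence of the infimum:

$$\lim_{k \to \infty} \inf_{Q} g_k(x, Q) = \inf_{Q}\big\{K + cQ + \mathbb{E}[h(x^{Q})]\big\} = \mathcal{M} h(x).$$

Therefore, $\lim_{k \to \infty} D_k(x) = \mathcal{M} h(x) - h(x)$.

Since for each k, we have $\min\{C_k(x), D_k(x)\} = 0$, and since the ‘min’ function is continuous, taking the limit as $k \to \infty$ yields:

$$\min\Big\{\mathcal{A} h(x) + f(x) - \rho,\ \ \mathcal{M} h(x) - h(x)\Big\} = 0.$$

This is precisely the ACOE (6). The continuity of the limit function h has been established. The at-most-linear growth of h follows from the linear growth of the bounding function $W$ in the Lyapunov condition. The fact that $\rho \ge 0$ follows from the non-negativity of costs. This completes the proof of the existence of a solution to the ACOE. □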

Numerical Illustration: A Vanishing-Discount Experiment

To complement the existence proof given by the vanishing-discount method, we present a small numerical experiment that (i) implements a simple finite-state approximation of the controlled PDMP, (ii) solves the associated discounted problems for discount rates tending to zero, and (iii) illustrates the vanishing-discount limits

$$\alpha V_\alpha(x_0) \to \rho, \qquad V_\alpha(x) - V_\alpha(x_0) \to h(x), \qquad \text{as } \alpha \to 0,$$

where $V_\alpha$ is the $\alpha$-discounted value function for the finite approximation and $x_0$ is a fixed reference state.

We use a deliberately simple, transparent finite-state approximation that retains the essential features required to illustrate the vanishing-discount approach. The approximation is purely illustrative and does not aim to represent a full industrial instance; instead it demonstrates numerically the convergence properties used in the proof.

State space: inventory levels and a two-state supply/demand environment (e.g., ‘UP’ / ‘DOWN’). Time is discretised to unit steps solely for computational convenience; the theoretical results remain in continuous time. Demand in a step is 1 with probability and 0 otherwise, where in the ‘UP’ demand state and in the ‘DOWN’ state. The environment persists in its current state between steps with probability (switch probability ). Orders are modelled as instantaneous and the yield is set to one for simplicity. Cost parameters are: holding cost , backorder cost , fixed ordering cost , and per-unit ordering cost . Order sizes are integers and constrained so the post-order inventory remains within the truncated range.

For each discount rate , we solve the -discounted dynamic programming equations by value-iteration on the finite state space. At each state the action set comprises ‘do not order’ and ‘place order of size Q’ for integer up to the truncation bound. Value iteration is run until the sup-norm change is below . We then compute (with reference state ) and the centred functions .

Table 1 summarises the computed discounted values at the reference state and the corresponding scaled values $\alpha V_\alpha(x_0)$ for a sequence of discount rates $\alpha$. Iteration counts are reported to indicate numerical effort.

Two points should be noted:

The sequence is observed to stabilise as ; in this illustrative experiment the values approach approximately . This is precisely the behaviour guaranteed by the vanishing-discount arguments: the rescaled discounted value at a fixed reference state converges to the average cost .

The centred value functions converge numerically (uniformly on the finite state set used in the test). In particular, the sup-norm differences decrease in our computations; representative sup-norm differences between consecutive values above are , , , , (in the same order).

Although the example is small and simplified, the optimal impulse decisions recovered from the discounted problems stabilise as $\alpha \to 0$. For the sample states inspected, the policy converged to the same state-dependent base-stock behaviour across the smaller values of $\alpha$. For instance, when the supply environment is ‘DOWN’ and inventory is sufficiently negative, the policy prescribes ordering up to a higher level than when the environment is ‘UP’. Hence, the numerical experiment is consistent with the existence of the ACOE solution via the vanishing-discount method and illustrates the emergence of a state-dependent base-stock structure in the finite approximation; the same structural argument is made rigorously in this paper for the continuous PDMP model.

To conclude, this numerical experiment illustrates the core steps of the vanishing-discount argument used in Theorem 2: (i) $\alpha V_\alpha(x_0)$ converges to a finite limit (the average cost $\rho$), (ii) the centred functions $h_\alpha$ stabilise, and (iii) the optimal policies derived from the discounted problems stabilise and exhibit the state-dependent base-stock pattern. These observations corroborate the theoretical existence result and show that the abstract vanishing-discount machinery is effective on a concrete finite approximation.
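A minimal sketch of an experiment of this kind is given below. It is not the code used to produce the results reported above: every numerical value in it (the truncation range, demand probabilities, persistence probability, cost parameters, tolerance, and the list of discount rates) is a hypothetical choice made purely for illustration. Because time is discretised, the sketch uses the per-step discount factor $\beta = 1/(1+\alpha)$ and reports $(1-\beta)V(x_0)$ as the discrete-time counterpart of $\alpha V_\alpha(x_0)$.

```python
I_MIN, I_MAX = -5, 10                  # truncated inventory range (assumed)
LEVELS = range(I_MIN, I_MAX + 1)
ENVS = (0, 1)                          # 0 = 'UP', 1 = 'DOWN'
P_DEMAND = {0: 0.3, 1: 0.7}            # per-step demand probability (assumed)
P_STAY = 0.9                           # environment persistence (assumed)
HOLD, BACK, K_FIX, C_VAR = 1.0, 5.0, 4.0, 1.0   # cost parameters (assumed)
X0 = (0, 0)                            # reference state


def running_cost(i):
    """Holding cost for positive stock, backorder cost for negative stock."""
    return HOLD * i if i >= 0 else -BACK * i


def expected_next(v, i, e):
    """Expected next-step value from post-order inventory i in environment e."""
    total = 0.0
    for d, p_d in ((1, P_DEMAND[e]), (0, 1.0 - P_DEMAND[e])):
        j = max(I_MIN, i - d)          # clamp at the lower truncation bound
        for e2 in ENVS:
            p_e = P_STAY if e2 == e else 1.0 - P_STAY
            total += p_d * p_e * v[(j, e2)]
    return total


def solve_discounted(alpha, tol=1e-6):
    """Value iteration for the discounted problem with rate alpha."""
    beta = 1.0 / (1.0 + alpha)
    v = {(i, e): 0.0 for i in LEVELS for e in ENVS}
    while True:
        new_v, policy = {}, {}
        for (i, e) in v:
            best, best_q = float("inf"), 0
            for q in range(I_MAX - i + 1):      # q = 0 means 'do not order'
                order_cost = K_FIX + C_VAR * q if q > 0 else 0.0
                val = (order_cost + running_cost(i + q)
                       + beta * expected_next(v, i + q, e))
                if val < best:
                    best, best_q = val, q
            new_v[(i, e)], policy[(i, e)] = best, best_q
        gap = max(abs(new_v[s] - v[s]) for s in v)
        v = new_v
        if gap < tol:
            return v, policy, beta


def run_experiment(alphas=(0.5, 0.2, 0.1, 0.05)):
    """Solve for each rate; record the scaled value and change in centred values."""
    rows, prev_h = [], None
    for alpha in alphas:
        v, policy, beta = solve_discounted(alpha)
        h = {s: v[s] - v[X0] for s in v}        # centred value function
        change = None if prev_h is None else max(abs(h[s] - prev_h[s]) for s in h)
        rows.append({"alpha": alpha, "scaled": (1.0 - beta) * v[X0],
                     "h_change": change, "policy": policy})
        prev_h = h
    return rows


if __name__ == "__main__":
    for row in run_experiment():
        print(row["alpha"], round(row["scaled"], 4), row["h_change"])
```

Printing the `scaled` column for decreasing `alpha` gives a quick view of whether the rescaled values settle, and inspecting `policy` for states with negative inventory shows which order-up-to level each environment state selects under these assumed parameters.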

4.2. Structural Properties of the Optimal Policy

Having established the existence of the relative value function h, we now analyse its structural properties. We show that under Assumption 1 on the cost structure, the value function is convex. This property is the cornerstone for proving that the optimal policy has a simple and intuitive state-dependent base-stock, or $(s, S)$, structure.

Proposition 1

(Convexity of the Value Function). Let $(\rho, h)$ be a solution to the ACOE as established in Theorem 2. Under Assumption 1, the function $h(I, \omega)$ is convex in the inventory level I for each discrete state $\omega$.

Proof. The proof proceeds by demonstrating the convexity of the discounted value function $V_\alpha$ for any $\alpha > 0$. The relative value function $h$ is obtained as the pointwise limit of a sequence of centred value functions $h_{\alpha_k} = V_{\alpha_k} - V_{\alpha_k}(x_0)$. Since subtracting a constant preserves convexity and the pointwise limit of a sequence of convex functions is convex, establishing the convexity of $V_\alpha$ is sufficient.

We establish the convexity of

with respect to the inventory level

I by induction on the value iteration sequence for the discounted problem. The discounted value function

is the unique fixed point of the Bellman operator

, i.e.,

. The value iteration algorithm is defined by

, with

. The Bellman operator is given by:

where the continuation cost operator

and the impulse cost operator

are defined as follows. For a given function

v, the continuation cost

is the expected total discounted cost starting from state

x assuming no replenishment orders are ever placed, and with

v serving as a terminal cost function. It is the solution to the resolvent equation

, which has the explicit probabilistic representation:

where

is the “never order” policy. The impulse cost is:

Let

.

Base Case: We initialize the value iteration with . This function is linear (and thus convex) in the inventory level I.

Inductive Hypothesis: Assume that for some $n \ge 0$, the $n$-th value iteration iterate is convex in I for every fixed discrete state $\omega$.

Inductive Step: We must show that the next iterate is also convex in I. This involves analysing the convexity of the two components of the minimum operator, the continuation term and the impulse term, and then the properties of the minimum itself.

1. Convexity of the Impulse Term : The impulse cost is the minimal expected cost immediately following a replenishment decision. It can be formulated as an infimal convolution. Let the order-up-to level be

S. The order quantity is

. The impulse cost is:

where

denotes the post-replenishment discrete state. This expression can be written as

, the infimal convolution of two functions:

and

.

The function is linear in I and is therefore convex.

For : The term is linear in S. By the inductive hypothesis, is convex in S. The expectation operator preserves convexity. To see this, for any convex function and , Jensen’s inequality for conditional expectations yields . Thus, is convex in S. The sum of convex functions is convex, so is convex.

It is a fundamental result of convex analysis that the infimal convolution of two convex functions is convex (see, e.g., [35]). Therefore, the impulse term is a convex function of I.
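The convexity-preservation step for the impulse term can be illustrated numerically on an integer grid. The sketch below makes several simplifying assumptions that are not part of the model: the post-order state is deterministic (so the expectation is dropped), the grid `LEVELS`, the quadratic test function `v`, and the parameters `K` and `c` are hypothetical, and a zero-size order is allowed at cost `K` so that the minimum is always taken over a non-empty set.

```python
def is_convex(values, tol=1e-12):
    """Discrete convexity test: all second differences are non-negative."""
    return all(values[k - 1] - 2 * values[k] + values[k + 1] >= -tol
               for k in range(1, len(values) - 1))


def impulse_cost(v, levels, K, c):
    """Grid version of K + min over S >= I of [c * (S - I) + v(S)]."""
    return [K + min(c * (S - I) + v[j] for j, S in enumerate(levels) if S >= I)
            for I in levels]


# Hypothetical convex input on a hypothetical grid.
LEVELS = list(range(-5, 11))
v = [0.5 * (I - 3) ** 2 for I in LEVELS]

Mv = impulse_cost(v, LEVELS, K=3.0, c=1.0)

if __name__ == "__main__":
    print("input convex :", is_convex(v))
    print("output convex:", is_convex(Mv))
    print("slope at the lowest level:", Mv[1] - Mv[0])
```

For inventory levels below the minimiser of $cS + v(S)$, the output decreases with slope $-c$, which matches the observation that the optimal order-up-to level does not depend on the current inventory level.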

2. Convexity of the Continuation Term: The continuation term is the solution u to the equation $(\alpha - \mathcal{A}_0)\,u = f$, where $\mathcal{A}_0$ is the generator under the never-order policy $\pi_0$. By its definition as an expected discounted cost, we have

$$u(x) = \mathbb{E}_x^{\pi_0}\left[\int_0^\infty e^{-\alpha t} f(X_t)\,dt\right].$$

The convexity of $u$ in I is established by showing that the operator mapping the cost function f to the value function $u$ preserves convexity. Let $x_1 = (I_1, \omega)$ and $x_2 = (I_2, \omega)$ be two states with the same discrete component, and let $x_\lambda = (\lambda I_1 + (1 - \lambda) I_2, \omega)$ for $\lambda \in [0, 1]$. Because the drift of the inventory level is linear (or state-independent), the process path starting from $x_\lambda$ is the convex combination of the paths starting from $x_1$ and $x_2$. By Assumption 1, f is convex in I. Since expectation is a linear operator and preserves convexity, the expected value of a convex function of the process state is also convex with respect to the initial state. Thus, $u$ is convex in I.

3. Convexity of : The minimum of two convex functions is not generally convex. However, in the context of QVIs for optimal impulse control, convexity is preserved under specific structural conditions. The key is the structure of the intervention set . We show this is a convex set of the form by proving that the difference function is non-decreasing in I.

The derivative of the impulse cost with respect to I is , as the optimal order-up-to level is independent of the current inventory level I. To show is non-decreasing, we need to demonstrate that . This property, that the slope of the continuation value function is bounded below by the negative marginal ordering cost, is a fundamental economic principle in these models and can be shown to hold inductively. Since , the property holds for . Assuming it holds for , it can be shown to hold for .

With

established as non-decreasing, the intervention set is indeed of the form

. The function

is then constructed by ‘pasting’ the two convex functions

and

at the reorder point

. Convexity of

is then guaranteed by the smooth-pasting condition at this boundary:

This optimality condition ensures that

is not only convex but also continuously differentiable at the boundary between the continuation and intervention regions.

Since the Bellman operator preserves convexity and the initial iterate is convex, it follows by induction that every value iteration iterate is convex in I. As the discounted value function is the pointwise limit of this sequence of convex functions, it is also convex in I. Consequently, the relative value function $h$ is also convex in I for every fixed discrete state $\omega$. This completes the proof. □

The convexity of the value function directly implies that the optimal policy can be characterized by state-dependent thresholds, a structure well known in inventory theory as an $(s, S)$ policy. The following theorem formalizes this structural result for our PDMP model.

Theorem 3

(Structure of the Optimal Policy). Under Assumptions 1 and 2, the optimal replenishment policy is a state-dependent $(s, S)$ policy. That is, for each discrete environmental state $\omega$, there exists an optimal order-up-to level $S^*(\omega)$ and a reorder point $s^*(\omega)$ such that it is optimal to place an order to raise the inventory level to $S^*(\omega)$ whenever the current inventory level satisfies $I \le s^*(\omega)$.

Before proceeding with the formal proof, we offer an economic justification for this conclusion. The optimality of the state-dependent $(s, S)$ structure stems directly from the convexity of the relative value function, which is itself a consequence of both the convex cost structure and, critically, the fact that the system’s dynamics preserve this convexity over time.

The justification begins with the standard and economically sound assumption of convex running costs (Assumption 1). This signifies that the marginal cost of deviating from an ideal inventory level is non-decreasing. For the value function $h$, which represents the total expected future costs, to also be convex, the system’s transition operator must maintain this property. This preservation of convexity holds due to the fundamental nature of our PDMP model:

Deterministic Flow: Between random events, the inventory depletes deterministically at a constant rate. This linear evolution ensures that the expected cost accumulated during this period remains a convex function of the initial inventory level.

Stochastic Jumps: At random event times (e.g., a change in demand state or the arrival of a replenishment), the system transitions to a new state. The resulting expected future cost is an average over the possible outcomes. The process of taking an expectation is a linear operation that preserves the convexity of the value function.

Because both core components of the system’s dynamics preserve convexity, the value function inherits the convexity of the underlying cost functions. This property implies that the marginal value of an additional unit of stock is non-decreasing.

The convexity of h is the critical property that gives rise to the $(s, S)$ structure. The decision to order involves comparing the cost of continuing, represented by $h(I, \omega)$, against the cost of intervening. The convexity of h ensures that the net benefit of ordering is also a convex function of the current inventory level I. A convex function of this nature partitions the state space into a single continuous ‘do-not-order’ region and ‘order’ regions at the extremes. For a replenishment problem, the relevant region is at low inventory levels. Consequently, there must exist a single threshold, the reorder point $s^*(\omega)$, below which ordering is optimal. The optimal target level, $S^*(\omega)$, is determined by finding the minimum of a related convex function and is therefore independent of the inventory level at which the order is placed. This intuitive economic reasoning holds even in our complex model, demonstrating the robustness of threshold-based control.

Proof. The proof follows from the convexity of the relative value function $h$, established in Proposition 1. We will demonstrate that this convexity, combined with the structure of the cost functions, naturally induces a state-dependent $(s, S)$ policy. The proof is structured in several steps.

Step 1: The Optimal Action Rule and the Intervention Set. The decision to intervene (place an order) or to continue (not place an order) is governed by the complementarity condition (6c) of the ACOE. An impulse action is optimal at a state $x = (I, \omega)$ if and only if the impulse cost is less than or equal to the continuation cost. This defines the intervention region as the set of states where:

$$h(I, \omega) \ge \inf_{Q \ge 0}\big\{K + cQ + \mathbb{E}[h(I + Q, \omega')]\big\}. \tag{18}$$

Let the right-hand side of this inequality be denoted by $\mathcal{M}h(I, \omega)$, which represents the minimal achievable expected post-intervention cost.

Step 2: Structure of the Minimal Post-Intervention Cost $\mathcal{M}h$. We first analyse the structure of $\mathcal{M}h$. For a given pre-replenishment state $(I, \omega)$, the decision maker chooses an order quantity Q to minimize the post-intervention cost. It is more convenient to formulate this in terms of the target inventory level S. Let $S = I + Q$. The post-intervention cost, as a function of the target level S, is:

$$K + c\,(S - I) + \mathbb{E}[h(S, \omega')].$$

The minimal post-intervention cost is then $\mathcal{M}h(I, \omega) = \inf_{S \ge I}\{K + c\,(S - I) + \mathbb{E}[h(S, \omega')]\}$. We can separate the terms that depend on I from those that depend on S:

$$\mathcal{M}h(I, \omega) = K - cI + \inf_{S \ge I}\big\{cS + \mathbb{E}[h(S, \omega')]\big\}.$$

Let us define the function $G_\omega(S) = cS + \mathbb{E}[h(S, \omega')]$. From Proposition 1, $h(S, \omega')$ is convex in S. Since $cS$ is linear and the expectation operator preserves convexity, $G_\omega$ is a convex function of S. Provided $G_\omega$ attains its minimum on $\mathbb{R}$ (which holds, for instance, when it grows without bound in both directions), it has a non-empty set of minimizers, which we denote by $\arg\min_S G_\omega(S)$. Crucially, this set of optimal order-up-to levels depends only on the post-replenishment discrete state $\omega'$, not on the pre-order inventory level I. Let $S^*(\omega)$ be any selection from this set.

The infimum in the expression for $\mathcal{M}h(I, \omega)$ is taken over $S \ge I$. Since $G_\omega$ is convex, its minimum over the half-line $[I, \infty)$ is achieved either at $S^*(\omega)$ (if $I \le S^*(\omega)$) or at I (if $I > S^*(\omega)$). However, the physical meaning of an (s,S) policy is to order up to a level, implying that the target level S is typically greater than the current level I. The structure of the problem naturally leads to this behaviour. Assuming an unconstrained minimizer $S^*(\omega)$ exists and $I \le S^*(\omega)$, the optimal target level is simply $S^*(\omega)$. The expression for the minimal post-intervention cost then simplifies to:

$$\mathcal{M}h(I, \omega) = K - cI + G_\omega(S^*(\omega)).$$

The term $K + G_\omega(S^*(\omega))$ is a constant for a given environmental state $\omega$. Therefore, the minimal post-intervention cost $\mathcal{M}h(I, \omega)$ is a linear function of the inventory level I with a slope of $-c$.

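Step 3: Characterization of the Intervention Set and the Reorder Point $s^*(\omega)$. The intervention rule from (18) is to order when $h(I, \omega) \ge \mathcal{M}h(I, \omega)$. Let us analyse the difference function $\Delta_\omega(I) = h(I, \omega) - \mathcal{M}h(I, \omega)$.

From Proposition 1, $h(I, \omega)$ is a convex function in I.

From Step 2, $\mathcal{M}h(I, \omega)$ is a linear function in I.

Therefore, $\Delta_\omega(I)$ is also a convex function in I.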

We now examine the asymptotic behaviour of . The cost rate grows linearly as . The value function inherits this asymptotic linear growth from the running costs. Since is also linear in I with slope , the difference will also exhibit asymptotic linear growth. Specifically, the convexity of implies that its slope is non-decreasing. For large positive I, the holding cost dominates, and the slope of h approaches . For large negative I, the backorder cost dominates, and the slope of h approaches . Since costs are positive, we can ensure that these slopes are greater than . This implies that .

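A convex function on $\mathbb{R}$ that tends to $+\infty$ as its argument tends to $\pm\infty$ must have a global minimum. The set where this function is non-positive, $\{I : \Delta_\omega(I) \le 0\}$, is a closed interval, and consequently, the intervention set where $\Delta_\omega(I) \ge 0$ must be of the form $(-\infty, \underline{s}] \cup [\overline{s}, \infty)$.

Our problem context is replenishment due to low inventory levels (stock-outs or backorders). Therefore, the economically relevant part of the intervention region is the lower tail, $(-\infty, \underline{s}]$. This set defines the reorder point $s^*(\omega)$ as the largest inventory level in the lower tail at which it is optimal to place an order:

$$s^*(\omega) = \sup\{I \le S^*(\omega) : \Delta_\omega(I) \ge 0\}.$$

The convexity of $\Delta_\omega$ guarantees that if it is optimal to order at an inventory level $I \le s^*(\omega)$, it is also optimal to order at any level $I' < I$.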

Conclusion and Synthesis. For each discrete state $\omega$, we have established the existence of:

An optimal order-up-to level $S^*(\omega)$ that depends only on the post-replenishment state $\omega'$.

A reorder point $s^*(\omega)$ such that an order is placed if and only if the current inventory level satisfies $I \le s^*(\omega)$. When an order is placed, the quantity is $Q^* = S^*(\omega) - I$.

This pair, $(s^*(\omega), S^*(\omega))$, defines a state-dependent $(s, S)$ policy. This completes the proof. □

Remark 4

(Computational Procedure and Qualitative Properties of the Policy). The constructive nature of the preceding proof provides a clear procedure for computing the optimal policy parameters from a given value function h. First, the optimal order-up-to level $S^*(\omega)$ is found by solving the one-dimensional convex optimisation problem $\min_S \{cS + \mathbb{E}[h(S, \omega')]\}$. Second, with $S^*(\omega)$ known, the reorder point $s^*(\omega)$ is found by computing the largest root on $(-\infty, S^*(\omega)]$ of the convex function $I \mapsto h(I, \omega) - K - c\,(S^*(\omega) - I) - \mathbb{E}[h(S^*(\omega), \omega')]$. Both of these computational steps can be performed efficiently using standard numerical search algorithms.

Furthermore, the optimal policy parameters exhibit intuitive monotonic properties. A formal proof of this monotonicity, while beyond the scope of this paper, can be obtained by showing that the Bellman operator preserves properties of submodularity in the state and action variables. The key insight is that if one discrete state is unambiguously ‘riskier’ than another ω (e.g., due to a higher backorder cost rate or a faster demand rate), the value function will reflect this, i.e., . More specifically, the marginal value of inventory, , will be different. This property is preserved through the value iteration process. Consequently, a riskier state leads to higher optimal inventory levels to buffer against that risk. This implies that if is riskier than ω, we would expect and . This confirms that the derived optimal policy structure not only exists but also aligns with fundamental principles of risk management in inventory theory.

4.3. The Abstract Policy Iteration Algorithm and Its Convergence

The structural properties of the optimal policy, particularly its state-dependent threshold nature, motivate the use of the Policy Iteration Algorithm (PIA) to find the optimal pair . Here, we define the algorithm in its abstract form, operating in the function space over the continuous state space E.

The algorithm iteratively improves a policy until it converges to the optimal solution of the ACOE. It begins with an initial policy $\pi_0$ and generates a sequence of policies $\{\pi_k\}$ and corresponding value function-cost pairs $\{(h_k, \rho_k)\}$. Each iteration consists of two main steps:

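1. Policy Evaluation: For a given state-dependent $(s, S)$ policy $\pi_k$, find the pair $(h_k, \rho_k)$ that solves the linear Bellman equation for that policy:

$$\mathcal{A}^{\pi_k} h_k(x) + c^{\pi_k}(x) = \rho_k, \qquad x \in E,$$

subject to a normalization condition on $h_k$ to ensure the uniqueness of the relative value function. Here, $\mathcal{A}^{\pi_k}$ is the generator of the process under the fixed policy $\pi_k$.

2. Policy Improvement: Find a new policy $\pi_{k+1}$ that is greedy with respect to the computed value function $h_k$. That is, for each state $x$, the action $\pi_{k+1}(x)$ solves the one-step optimization problem:

$$\pi_{k+1}(x) \in \arg\min_{a} \left\{ \mathcal{A}^{a} h_k(x) + c^{a}(x) \right\},$$

where the superscript $a$ indicates that action $a$ is applied in state $x$.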

This process is repeated until the policy no longer changes, i.e., $\pi_{k+1} = \pi_k$. The following proposition formally states the convergence of this abstract procedure.

Proposition 2

(Convergence of the Policy Iteration Algorithm). Under the Lyapunov condition (Assumption 2), which ensures ergodicity for any fixed policy, the abstract Policy Iteration Algorithm generates a sequence of policies $\{\pi_k\}$ and average costs $\{\rho_k\}$ such that:

1. The sequence of average costs is monotonically non-increasing, i.e., $\rho_{k+1} \le \rho_k$ for all $k \ge 0$, and converges to the optimal average cost $\rho^*$.

2. The sequence of policies converges to the optimal policy $\pi^*$, which satisfies the ACOE.

Proof. The proof is structured in two main parts. First, we demonstrate the monotonic improvement of the average cost. Second, we establish that the limit of the generated sequence is the optimal solution.

Part 1: Monotonic Improvement of the Average Cost. Let $(h_k, \rho_k)$ be the pair generated at iteration $k$ for a given policy $\pi_k$. By definition of the Policy Evaluation step, this pair is the unique solution (with $h_k$ unique up to an additive constant) to the Bellman equation for policy $\pi_k$:

$$\mathcal{A}^{\pi_k} h_k(x) + c^{\pi_k}(x) = \rho_k, \tag{19}$$

where $c^{\pi_k}(x)$ is the state-dependent instantaneous cost rate under policy $\pi_k$.

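The Policy Improvement step defines a new policy $\pi_{k+1}$ that is greedy with respect to $h_k$. By its definition as the minimizer, $\pi_{k+1}$ must satisfy, for every stationary policy $\pi$:

$$\mathcal{A}^{\pi_{k+1}} h_k(x) + c^{\pi_{k+1}}(x) \le \mathcal{A}^{\pi} h_k(x) + c^{\pi}(x). \tag{20}$$

Choosing $\pi = \pi_k$ in the inequality above and substituting from (19), we obtain:

$$\mathcal{A}^{\pi_{k+1}} h_k(x) + c^{\pi_{k+1}}(x) \le \rho_k. \tag{21}$$

Now, let $(h_{k+1}, \rho_{k+1})$ be the pair generated by the evaluation of policy $\pi_{k+1}$. It satisfies:

$$\mathcal{A}^{\pi_{k+1}} h_{k+1}(x) + c^{\pi_{k+1}}(x) = \rho_{k+1}. \tag{22}$$

Subtracting (22) from the inequality (21) yields:

$$\mathcal{A}^{\pi_{k+1}} \left( h_k - h_{k+1} \right)(x) \le \rho_k - \rho_{k+1}.$$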

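Let $\Delta h_k = h_k - h_{k+1}$. The Lyapunov condition (Assumption 2) ensures that the process is ergodic under any stationary policy, in particular under $\pi_{k+1}$. Therefore, there exists a unique stationary probability measure $\mu_{k+1}$ for the process governed by generator $\mathcal{A}^{\pi_{k+1}}$. A fundamental property of a stationary measure is that for any function $g$ in the domain of the generator, $\int_E \mathcal{A}^{\pi_{k+1}} g \, d\mu_{k+1} = 0$. We take the expectation of the above inequality with respect to $\mu_{k+1}$:

$$\int_E \mathcal{A}^{\pi_{k+1}} \Delta h_k(x) \, \mu_{k+1}(dx) \le \int_E \left( \rho_k - \rho_{k+1} \right) \mu_{k+1}(dx).$$

The left-hand side is zero. Since $\rho_k$ and $\rho_{k+1}$ are constants and $\mu_{k+1}$ is a probability measure, the right-hand side is simply $\rho_k - \rho_{k+1}$. This leads to:

$$\rho_{k+1} \le \rho_k.$$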

The sequence of average costs $\{\rho_k\}$ is therefore monotonically non-increasing. Since costs are non-negative, the sequence is bounded below by 0. By the monotone convergence theorem for real sequences, it must converge to a limit, which we denote by $\bar{\rho}$.
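The step in Part 1 that makes the left-hand side vanish relies on the stationary measure annihilating the generator. A minimal finite-state analogue can make this concrete; the two-state chain, its jump rates `a` and `b`, and the test function `g` below are hypothetical and serve only as an illustration, not as part of the model.

```python
def apply_generator(Q, g):
    """Return the vector (Qg)(x) = sum_y Q[x][y] * g[y] for a rate matrix Q."""
    n = len(g)
    return [sum(Q[x][y] * g[y] for y in range(n)) for x in range(n)]


# Hypothetical two-state continuous-time chain: rate a for 0 -> 1, rate b for 1 -> 0.
a, b = 2.0, 3.0
Q = [[-a, a],
     [b, -b]]

# Its stationary distribution solves mu Q = 0 with the entries of mu summing to 1.
mu = [b / (a + b), a / (a + b)]

# Arbitrary test function g on the two states.
g = [7.0, -1.5]

Qg = apply_generator(Q, g)
integral = sum(mu[x] * Qg[x] for x in range(2))  # finite analogue of the integral of A g against mu
balance = [sum(mu[x] * Q[x][y] for x in range(2)) for y in range(2)]  # entries of mu Q
print(integral, balance)
```

Because the integral of the generator applied to any admissible function is zero, only the constants on the right-hand side of the inequality survive the integration.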

Part 2: Convergence to the Optimal Solution. We now establish that the limit of the sequence is the optimal solution.

Uniform Boundedness and Equi-continuity. A key consequence of the Lyapunov condition (Assumption 2) in the theory of average-cost MDPs is that the sequence of relative value functions $\{h_k\}$ is uniformly bounded in a weighted norm. That is, there exists a constant $C > 0$ such that $|h_k(x)| \le C\,V(x)$ for all $k$ and $x \in E$, where $V$ denotes the Lyapunov (weight) function from Assumption 2. This implies that on any compact subset $K \subset E$, the sequence $\{h_k\}$ is uniformly bounded and equi-continuous.

Existence of a Convergent Subsequence. Since the state space $E$ is a Polish space that is also locally compact (it is built from Euclidean and finite components), it is $\sigma$-compact, and we can choose an increasing sequence of compact sets $\{K_n\}$ such that $\bigcup_n K_n = E$. By the Arzelà–Ascoli theorem and a diagonalization argument, we can extract a subsequence, which we re-index by $j$ for clarity, such that as $j \to \infty$:

$\rho_j \to \bar{\rho}$.

$h_j \to \bar{h}$ uniformly on every compact subset of $E$, where $\bar{h}$ is a continuous function.

The corresponding greedy policies converge to a stationary policy $\bar{\pi}$ that is greedy with respect to $\bar{h}$.

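Optimality of the Limit. We now show that the limit pair $(\bar{h}, \bar{\rho})$ satisfies the ACOE. First, consider the policy evaluation equation for the subsequence:

$$\mathcal{A}^{\pi_j} h_j(x) + c^{\pi_j}(x) = \rho_j.$$

The policies converge, and the generators and cost functions depend continuously on the policy. Given the uniform convergence of $h_j$ on compact sets and the closure properties of the generator $\mathcal{A}^{\pi}$, we can take the limit as $j \to \infty$ to obtain:

$$\mathcal{A}^{\bar{\pi}} \bar{h}(x) + c^{\bar{\pi}}(x) = \bar{\rho}. \tag{23}$$

Next, consider the policy improvement inequality underlying (21), stated for an arbitrary stationary policy $\pi$:

$$\mathcal{A}^{\pi_{j+1}} h_j(x) + c^{\pi_{j+1}}(x) \le \mathcal{A}^{\pi} h_j(x) + c^{\pi}(x).$$

Taking the limit as $j \to \infty$ along the subsequence, we get:

$$\mathcal{A}^{\bar{\pi}} \bar{h}(x) + c^{\bar{\pi}}(x) \le \mathcal{A}^{\pi} \bar{h}(x) + c^{\pi}(x). \tag{24}$$

Equations (23) and (24), together, are precisely the ACOE for the policy $\bar{\pi}$. This demonstrates that the limit point $(\bar{h}, \bar{\rho})$ is a solution to the ACOE.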

Uniqueness and Convergence of the Entire Sequence. Under the ergodicity assumption, the optimal average cost is unique, and the relative value function is unique up to an additive constant. The sequence $\{(h_k, \rho_k)\}$ is relatively compact by the Arzelà–Ascoli argument above, and we have shown that any convergent subsequence must converge to this unique solution. A standard subsequence argument then implies that the entire sequence converges to $(h^*, \rho^*)$.

This completes the proof. □

Practical Implementation and Computational Considerations

While the PIA is presented in an abstract function space, its practical implementation requires a suitable discretisation of the continuous components of the state space. A common and effective approach involves the following steps:

State-Space Discretisation: The continuous inventory level, I, is discretised into a fine grid. The discrete components of the state (the environmental states and the internal phases of the PH distributions) are already finite. The pipeline state, which includes the age of orders, would also be discretised. This results in a large but finite state-space Markov Decision Process (MDP) that approximates the original PDMP.

Policy Evaluation: For a given discretised state space and a fixed (s, S) policy, the Policy Evaluation step involves solving a system of linear equations. The size of this system is proportional to the number of states in the discretised grid. For large state spaces, this can be computationally intensive, and iterative schemes (for example, value iteration restricted to the fixed policy) are often employed as an alternative to direct matrix inversion.

Policy Improvement: The Policy Improvement step requires, for each state, finding the action that minimises the one-step cost. Due to the convexity of the value function established in Proposition 1, the search for the optimal order-up-to level S for each discrete state is a convex optimisation problem, which can be solved efficiently using numerical search methods (e.g., a bisection or ternary search).

The overall computational cost per iteration of the PIA is therefore dominated by the Policy Evaluation step. With a direct dense solver, this cost grows cubically in the number of discretised states, so it is polynomial in the size of the discretised state space. The primary challenge in implementation is the ‘curse of dimensionality’: the number of states grows exponentially with the number of continuous variables (e.g., ages of multiple outstanding orders) and polynomially with the resolution of the grid in each dimension. Nevertheless, for systems of moderate complexity, this approach provides a viable pathway to computing a near-optimal policy.
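The evaluation and improvement steps described above can be sketched for a problem that has already been discretised. The sketch assumes a discrete-time, finite-state, unichain surrogate of the PDMP; the three inventory levels, the two actions (0 = do not order, 1 = order up to level 2), and all transition probabilities and costs are hypothetical numbers chosen for illustration. The relative value function is normalised by setting `h[0] = 0`.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def evaluate_policy(P, c, policy):
    """Solve h(x) + rho = c[x][a] + sum_y P[a][x][y] h(y) with h(0) = 0."""
    n = len(policy)
    A = [[0.0] * n for _ in range(n)]  # unknowns: (rho, h(1), ..., h(n-1))
    b = [0.0] * n
    for x in range(n):
        a = policy[x]
        A[x][0] = 1.0  # coefficient of rho
        for y in range(1, n):
            A[x][y] = (1.0 if x == y else 0.0) - P[a][x][y]
        b[x] = c[x][a]
    sol = solve_linear(A, b)
    return [0.0] + sol[1:], sol[0]


def improve_policy(P, c, h, policy, tol=1e-12):
    """Greedy one-step improvement; ties keep the current action."""
    n, n_actions = len(h), len(P)
    new_policy = []
    for x in range(n):
        q = [c[x][a] + sum(P[a][x][y] * h[y] for y in range(n))
             for a in range(n_actions)]
        best = min(range(n_actions), key=lambda a: q[a])
        new_policy.append(policy[x] if q[policy[x]] <= q[best] + tol else best)
    return new_policy


def policy_iteration(P, c, policy, max_iter=100):
    costs = []
    for _ in range(max_iter):
        h, rho = evaluate_policy(P, c, policy)
        costs.append(rho)
        new_policy = improve_policy(P, c, h, policy)
        if new_policy == policy:
            break
        policy = new_policy  # if max_iter is hit, (h, rho) refer to the previous policy
    return policy, h, rho, costs


# Hypothetical data: P[a][x][y] is the transition probability, c[x][a] the one-step cost.
P = [
    [[1.0, 0.0, 0.0], [0.5, 0.5, 0.0], [0.0, 0.5, 0.5]],  # a = 0: no order
    [[0.0, 0.5, 0.5], [0.0, 0.5, 0.5], [0.0, 0.5, 0.5]],  # a = 1: order up to 2
]
c = [[5.0, 5.0], [1.0, 5.0], [2.0, 2.0]]

policy, h, rho, costs = policy_iteration(P, c, [0, 0, 0])
print(policy, rho, costs)
```

For this toy instance the run ends with a threshold policy that orders only at inventory level 0, and the recorded average costs do not increase from one iteration to the next, in line with Proposition 2. The direct solver is used only because the example is tiny; for a fine grid an iterative evaluation scheme would be the more natural choice.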

6. Conclusions and Further Remarks

This paper has developed a comprehensive theoretical framework for the optimal control of a continuous-review inventory system operating under a confluence of realistic stochastic disruptions. By modelling the system as a Piecewise Deterministic Markov Process (PDMP) with impulse control, we have rigorously captured the dynamics of supply and demand environments governed by general Phase-Type (PH) distributions, whilst also incorporating the pervasive issue of random production yield. Our objective was to characterise the replenishment policy that minimises the long-run average cost of the system.

The primary contributions of this work are fourfold. First, we constructed a unified and tractable mathematical model that synthesises several complex, non-memoryless sources of uncertainty. Second, we formally established the existence of a solution to the associated Average Cost Optimality Equation (ACOE) by employing the vanishing discount approach, thereby guaranteeing that an optimal policy exists. The central theoretical contribution of this paper is our third result: a formal proof that the optimal control policy possesses a state-dependent (s, S) structure. This demonstrates that even in a highly complex environment with interacting uncertainties and non-exponential event timings, the intuitive and elegant logic of threshold-based control remains optimal. This finding represents a significant generalisation of the classical results in stochastic inventory theory. Finally, to complete the analytical treatment, we established the theoretical convergence of a Policy Iteration Algorithm (PIA), confirming that the optimal policy parameters are not only structurally simple but also computationally attainable.

Beyond its theoretical contributions, this study offers several key managerial implications for inventory control under uncertainty. First and foremost, our central result provides a powerful justification for practitioners to continue using simple, intuitive state-dependent (s, S) policies, even when their operational environment is complex and characterised by non-exponential disruptions and random yields. The manager’s task is therefore not to invent a new, complex policy structure, but to focus on accurately parametrising this robust threshold-based approach. Second, the state-dependent nature of the policy underscores the need for information systems that can track the current operational environment (e.g., supplier status, demand phase) to enable dynamic adjustment of inventory targets. Finally, our numerical experiment provides a clear directive: managers must look beyond average disruption times and actively measure and model the variability of these events. As demonstrated, systems with highly variable (less predictable) disruptions require significantly higher safety stocks to maintain service levels, and using a simple memoryless model in such cases can lead to costly strategic errors.

Notwithstanding these contributions, the proposed framework possesses certain limitations that naturally delineate promising avenues for future research. Our analysis is situated within a single-item, single-echelon context. A natural and important extension would be to investigate multi-echelon inventory systems, such as a central depot supplying multiple retail outlets, each subject to local disruptions. Such a setting would introduce profound challenges related to policy coordination and the characterisation of system-wide optimality.

Furthermore, our model assumes a standard linear cost structure. Future work could explore more complex cost functions, such as all-units or incremental quantity discounts, which would violate the convexity assumptions central to our proof and necessitate new analytical techniques. Another compelling direction lies in relaxing the assumption of exogenous environmental processes by allowing for strategic investments to alter the parameters of the PH distributions governing disruptions. A further avenue of investigation involves relaxing the assumption of full observability of the system state, particularly the internal phase of the PH distributions, which would reformulate the problem into a much more challenging partially observable setting.