Overcoming Barriers in Supply Chain Analytics—Investigating Measures in LSCM Organizations

Abstract

1. Introduction

- RO1: Identify and systematize the organizational barriers that arise when companies initiate, perform, or deploy supply chain analytics (SCA) initiatives.

- RO2: Identify and allocate organizational measures that help cope with the identified barriers.

2. Theoretical Background

2.1. Supply Chain Analytics

2.2. Barriers of Supply Chain Analytics

2.3. Measures to Fully Utilize the Benefits of Analytics

3. Methodology

3.1. Research Design

3.2. Data Collection

3.3. Data Analysis

3.4. Reliability

4. Results and Discussion

- (1) “Orientation about Analytics” describes actions, circumstances, and events before specific analytics initiatives are planned. During the orientation, the necessary conditions for applying analytics are created and employees are motivated to invent analytics initiatives to be executed.

- (2) “Planning of analytics initiative” describes actions, circumstances, and events during the set-up of a specific analytics initiative, in which the addressed business problem/business case is specified, the approach designed, resources and budget committed, and relevant people are invited to participate.

- (3) “Execution of analytics initiative” describes actions, circumstances, and events during the development and creation of analytics solutions in specific analytics initiatives. For example, this includes interactions with data, applications of analytical methods, the use of technology, and interaction of analysts with business experts.

- (4) “Use of analytics solution” refers to actions, circumstances, and events after the solution development, which include the deployment of the analytics solution to users and their interaction with the solution in the short and long term.

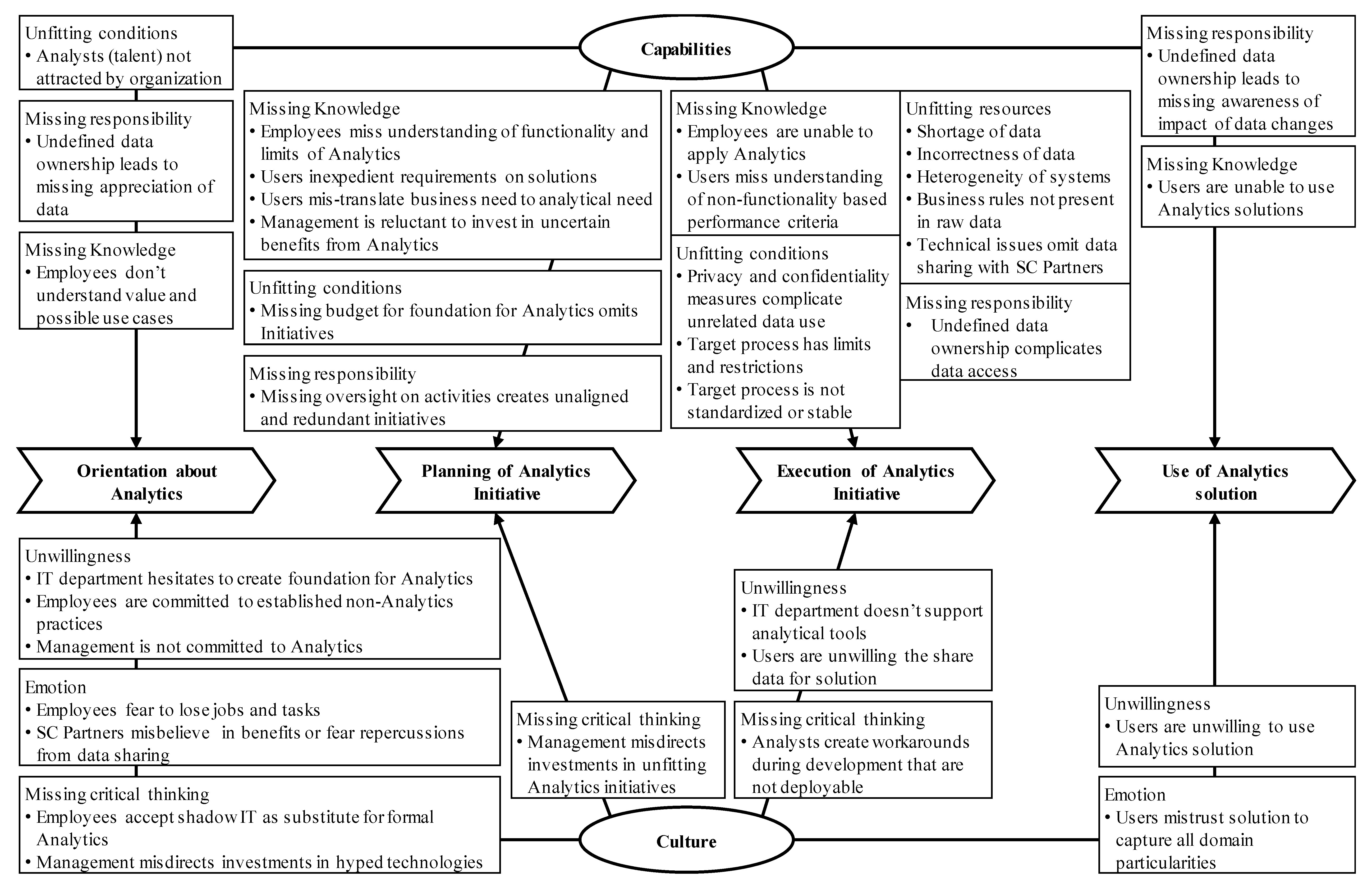

4.1. Barriers

4.1.1. Capability Barriers due to Unfitting Conditions

4.1.2. Capability Barriers due to Missing Responsibility

4.1.3. Capability Barriers due to Missing Knowledge

4.1.4. Capability Barriers due to Unfitting Resources

4.1.5. Culture Barriers due to Unwillingness

4.1.6. Culture Barriers due to Emotion

4.1.7. Culture Barriers due to Missing Critical Thinking

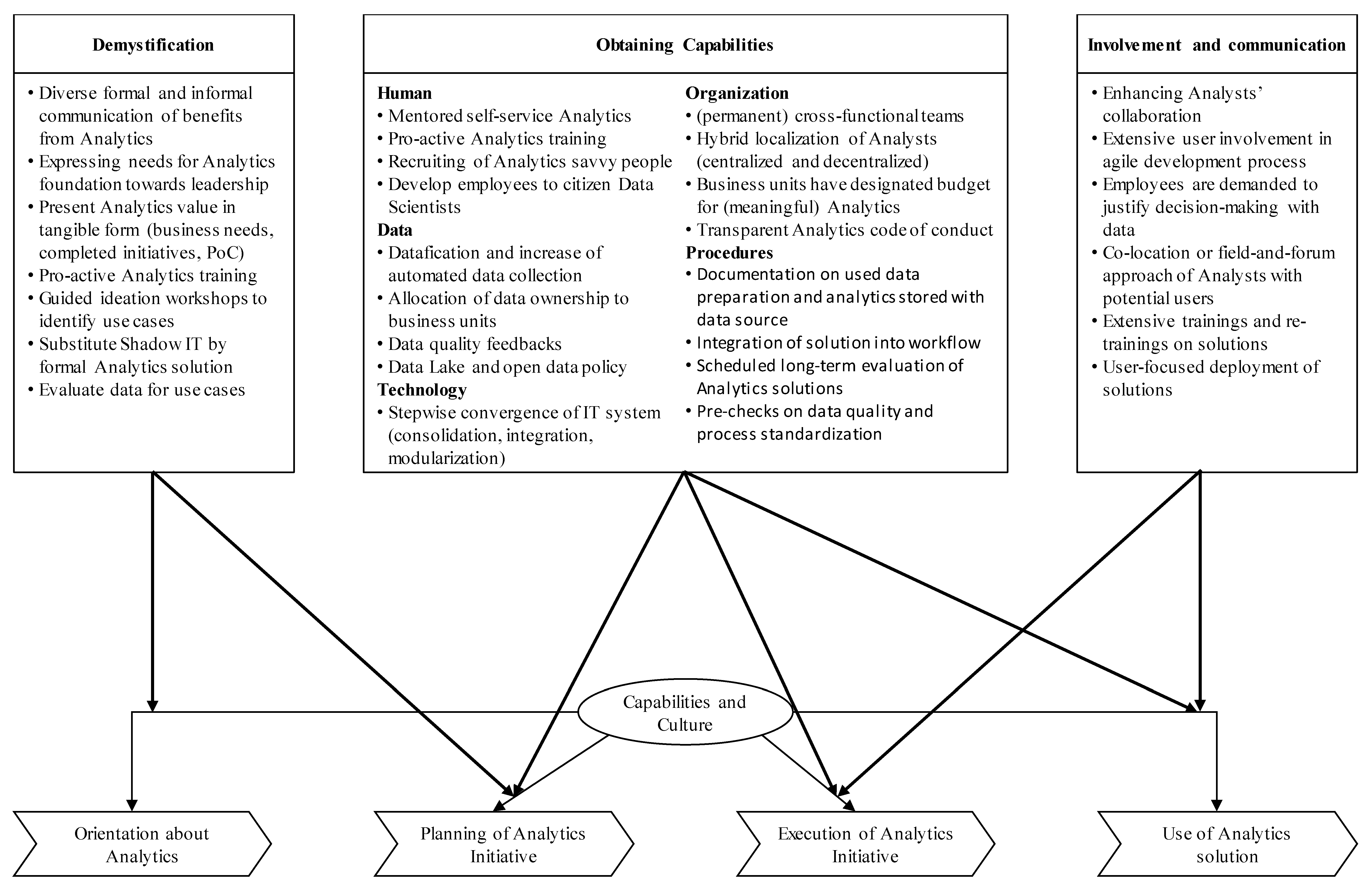

4.2. Measures

4.2.1. Measures Contributing to Demystification

4.2.2. Measures Contributing to Obtaining Capabilities—Human

4.2.3. Measures Contributing to Obtaining Capabilities—Data

4.2.4. Measures Contributing to Obtaining Capabilities—Technology

4.2.5. Measures Contributing to Obtaining Capabilities—Organization

4.2.6. Measures Contributing to Obtaining Capabilities—Procedures

4.2.7. Measures Contributing to Involvement and Communication

4.3. Discussion on Applying Measures and Handling Barriers

5. Conclusions

5.1. Managerial Implications

5.2. Limitations and Further Research

Author Contributions

Funding

Conflicts of Interest


| Participant | Position (Anonymized) | Actor | Analytics Experience [years] |
|---|---|---|---|
| A | Manager (functional) Analytics | OEM | 8 |
| B | Data Scientist | LSP | 12 |
| C | Data Scientist | Supplier | 3 |
| D | Head of Analytics | OEM | 19 |
| E | Manager Analytics | Retail | 3 |
| F | Director Analytics | OEM | 8 |
| G | Manager Analytics | Supplier | 2 |
| H | Head of (functional) Analytics | LSP | 6 |
| I | Manager Analytics | Retail | 14 |
| J | Senior Data Scientist | LSP | 4 |
| K | Data Scientist | Analytics Provider | 3 |
| L | Data Scientist | Analytics Provider | 3 |
| M | Head of Analytics | LSP | 3 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Herden, T.T.; Nitsche, B.; Gerlach, B. Overcoming Barriers in Supply Chain Analytics—Investigating Measures in LSCM Organizations. Logistics 2020, 4, 5. https://doi.org/10.3390/logistics4010005
