EL V.2 Model for Predicting Food Safety Risks at Taiwan Border Using the Voting-Based Ensemble Method

Abstract

1. Introduction

2. Literature Review

2.1. Model Characteristic Risk Factors

2.2. Selection of Algorithms

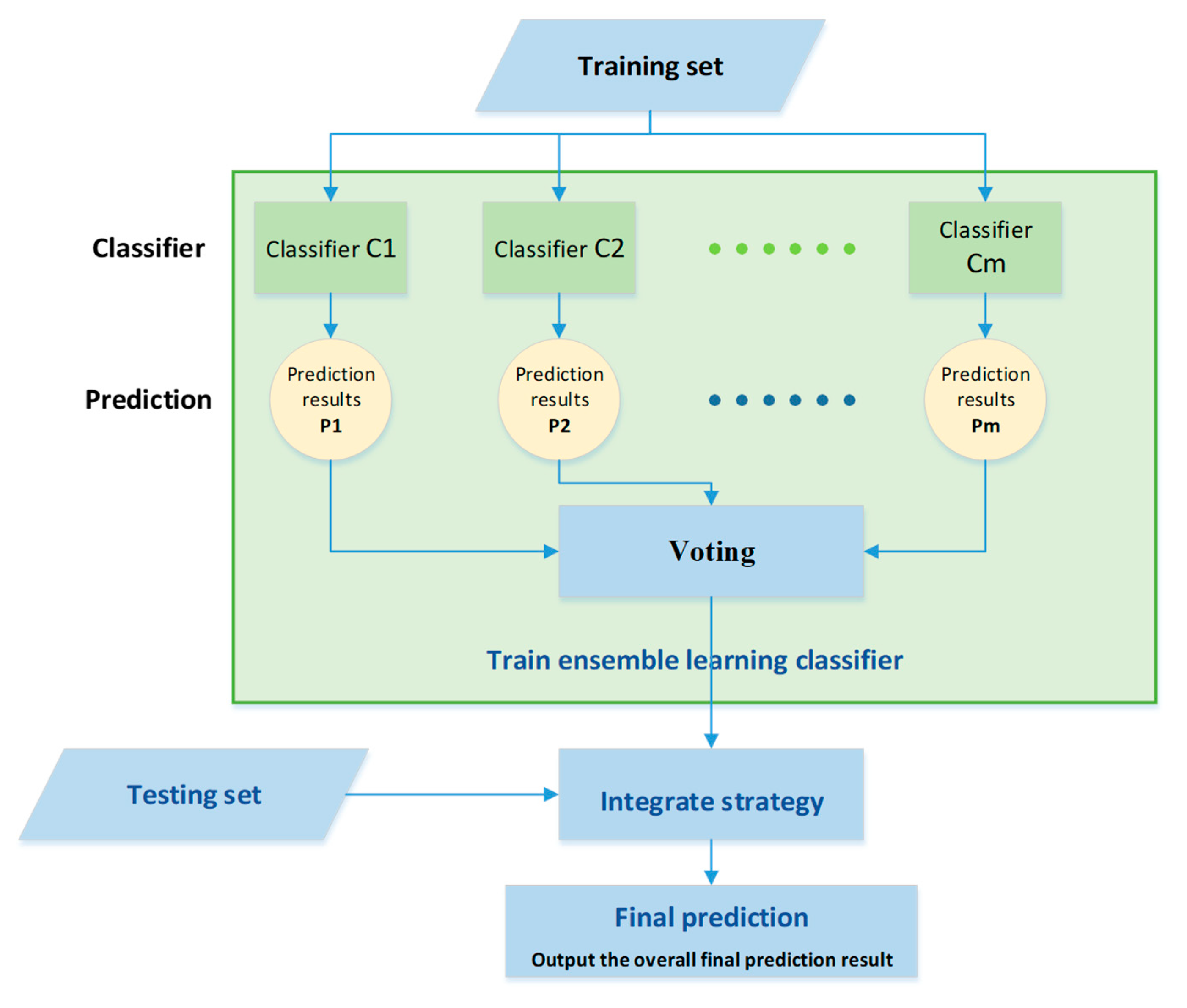

2.3. Improvement of Ensemble Learning Model

3. Materials and Methods

3.1. Data Sources and Analytical Tools

3.2. Research Methodology

3.2.1. Data Collection

3.2.2. Integration and Data Pre-Processing

3.2.3. Establishment of Risk Prediction Model

- Data processing:

- Selection of characteristic risk factors:

- Data exploration and modeling

- Establish the optimum prediction model

  - Training set resampling: Historical border inspection data show that unqualified batches make up only a small proportion of all inspection applications, so models trained directly on these data are prone to prediction bias. Therefore, in this study, we adopted two resampling methods, the synthetic minority oversampling technique (SMOTE) and proportional amplification, to address the data imbalance. We evaluated qualified-to-unqualified batch ratios of 7:3, 6:4, 5:5, 4:6, and 3:7 to identify the best ratio and the best imbalance-handling method.

  - Repeated modeling: After the training set was resampled to balance the numbers of qualified and unqualified cases, models were built for each combination of “time interval (AD)/vendor blacklist included or not/data imbalance processing method” to reduce misjudgment caused by a single sampling error. Two time intervals were used: 2016–2017 and 2011–2017. Blacklisted vendors were those whose unqualified rate exceeded the overall average unqualified rate. The imbalance-handling methods were the two most commonly used ones, proportional amplification and SMOTE. These combinations formed six types, A to F: A: 2016–2017/Yes/Proportional Amplification, B: 2016–2017/Yes/SMOTE, C: 2011–2017/Yes/Proportional Amplification, D: 2011–2017/Yes/SMOTE, E: 2011–2017/No/Proportional Amplification, and F: 2011–2017/No/SMOTE. Modeling was repeated ten times, and the averaged results were used to establish the model.

  - Selection of the optimal model: The validation set was fed into the seven classifiers built with the seven algorithms, and the seven classifiers were then combined through ensemble learning to extract the optimum prediction model from their predictions.
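The two imbalance-handling options and the target ratios described above can be sketched with the standard library alone. This is a minimal illustration, not the study's implementation: "proportional amplification" is assumed here to mean random duplication of unqualified batches, the SMOTE part follows the usual nearest-neighbour interpolation idea, and the function names, toy data, and `k = 2` are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Row = List[float]

def minority_target(n_qualified: int, ratio: Tuple[int, int]) -> int:
    """Unqualified rows needed so that qualified:unqualified == ratio,
    keeping every qualified row (e.g. ratio=(7, 3))."""
    qualified, unqualified = ratio
    return math.ceil(n_qualified * unqualified / qualified)

def proportional_amplification(minority: Sequence[Row], target: int,
                               rng: random.Random) -> List[Row]:
    """ASSUMED interpretation: duplicate randomly chosen unqualified rows
    (random oversampling with replacement) until `target` rows exist."""
    rows = [list(r) for r in minority]
    rows += [list(rng.choice(minority)) for _ in range(max(0, target - len(rows)))]
    return rows

def smote(minority: Sequence[Row], target: int, k: int,
          rng: random.Random) -> List[Row]:
    """SMOTE-style oversampling: each synthetic row is a random point on the
    segment between an original unqualified row and one of its k nearest
    unqualified neighbours (Euclidean distance)."""
    base = [list(r) for r in minority]
    synthetic: List[Row] = []
    for _ in range(max(0, target - len(base))):
        i = rng.randrange(len(base))
        others = [r for j, r in enumerate(base) if j != i]
        neighbours = sorted(others, key=lambda r: math.dist(base[i], r))[:k]
        nn = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([a + gap * (b - a) for a, b in zip(base[i], nn)])
    return base + synthetic

# Toy data (hypothetical): 70 qualified rows, 4 unqualified rows, 2 features.
rng = random.Random(0)
qualified = [[float(i), 0.0] for i in range(70)]
unqualified = [[1.0, 1.0], [2.0, 1.5], [3.0, 2.0], [4.0, 2.5]]
target = minority_target(len(qualified), (7, 3))  # unqualified rows for a 7:3 ratio
dup_rows = proportional_amplification(unqualified, target, rng)
smote_rows = smote(unqualified, target, k=2, rng=rng)
```

Duplication keeps every unqualified row exactly as recorded, whereas SMOTE creates new, interpolated feature vectors. With categorical factors such as country of production or inspection method, plain SMOTE interpolation would need adapting (for example, SMOTE-NC), which this sketch does not attempt.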

3.2.4. Evaluation of the Prediction Effectiveness

3.2.5. Evaluation of the Prediction Effectiveness

4. Results

4.1. Resampling Method and Optimal Ratio

4.2. Generation of the Optimum Prediction Model

4.3. Model Prediction Effectiveness

5. Discussion

5.1. Fβ Was Employed to Regulate the Sampling Inspection Rate

5.2. Comparison between Single Algorithm and Ensemble Algorithm

5.3. Comparison of Prediction Effectiveness between EL V.2 and EL V.1 Models

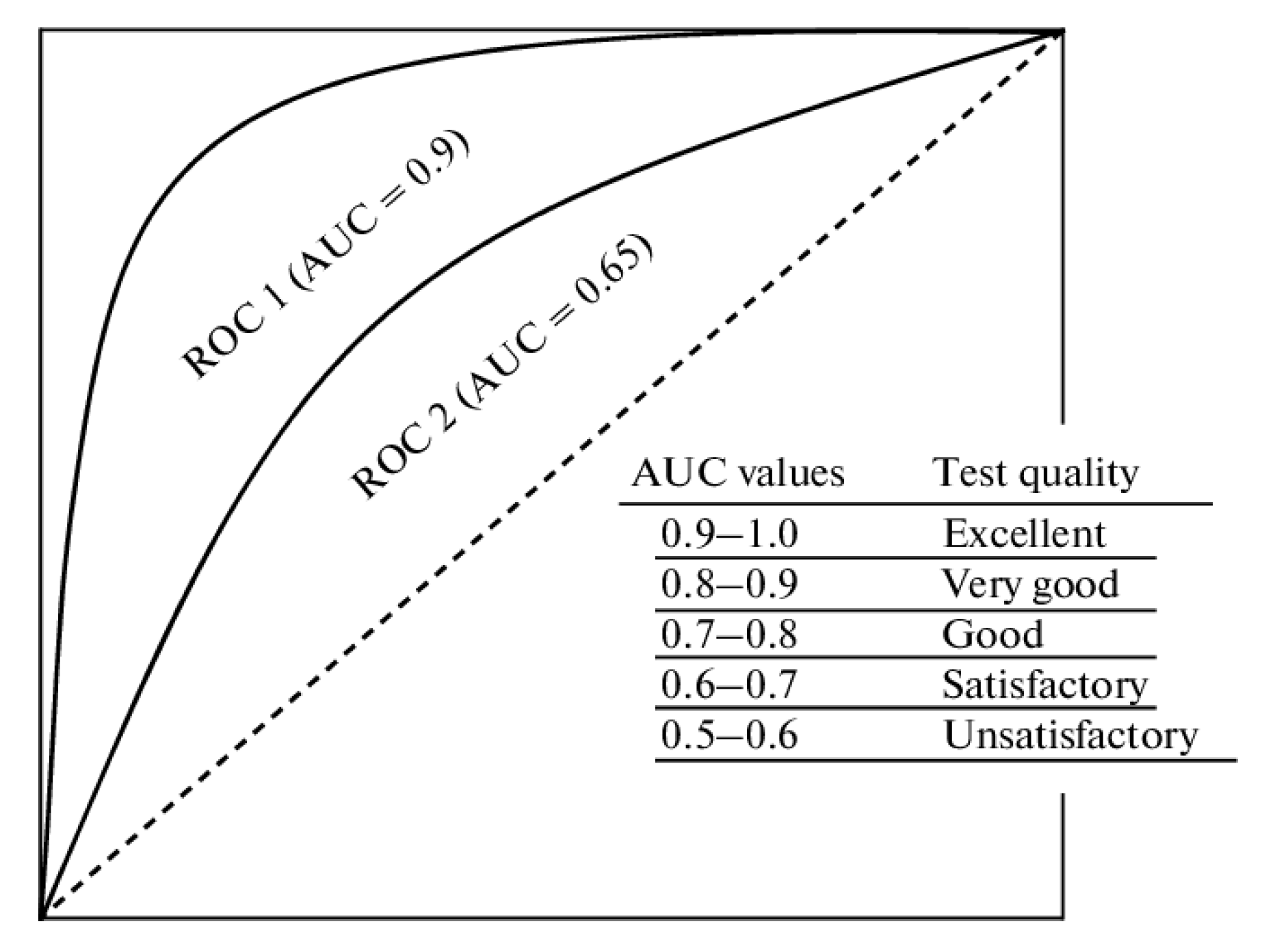

- The AUC values of the models in EL V.1 ranged from 53.43% to 69.03%, while those in EL V.2 ranged from 49.40% to 63.39%. The Bagging-CART model of EL V.2, whose AUC was below 50%, was considered unsuitable; however, because the ensemble adopted a majority decision strategy, its influence was diluted by the other six models. Thus, EL V.2 exhibited better robustness than EL V.1. An advantage of ensemble learning is that when a small number of algorithms are unsuitable (worse than random sampling), the voting mechanism eliminates or weakens their influence. The AUC results showed that both EL V.1 and EL V.2 identified unqualified cases with a higher probability than random selection (Table 11).

- The prediction evaluation indices of EL V.2, F1 (8.14%) and PPV (4.38%), were better than those of EL V.1, F1 (4.49%) and PPV (2.47%), indicating that EL V.2 predicted more effectively than EL V.1 (Table 12).
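The dilution effect of a majority decision can be seen in a toy hard-voting example. The seven prediction vectors below are hypothetical (one deliberately inverts the truth to mimic a worse-than-random model), and plain unweighted majority voting is assumed; the exact voting rule of EL V.2, such as tie handling or weights, is not detailed here.

```python
from collections import Counter
from typing import Dict, List

def majority_vote(predictions: Dict[str, List[int]]) -> List[int]:
    """Hard voting over per-batch labels (1 = unqualified, 0 = qualified).
    A batch is flagged when flags outnumber non-flags; ties (possible only
    with an even number of voters) fall back to 0."""
    lengths = {len(p) for p in predictions.values()}
    if len(lengths) != 1:
        raise ValueError("every classifier must score the same batches")
    n_batches = lengths.pop()
    decisions = []
    for i in range(n_batches):
        votes = Counter(p[i] for p in predictions.values())
        decisions.append(1 if votes[1] > votes[0] else 0)
    return decisions

# Hypothetical ground truth and predictions from seven classifiers.
truth = [1, 0, 0, 1, 0, 0]
predictions = {
    "model_1": [1, 0, 0, 1, 0, 0],
    "model_2": [0, 1, 1, 0, 1, 1],  # hypothetical weak model: always wrong
    "model_3": [1, 1, 0, 1, 0, 0],
    "model_4": [1, 0, 0, 1, 0, 0],
    "model_5": [1, 0, 0, 0, 0, 0],
    "model_6": [1, 0, 1, 1, 0, 0],
    "model_7": [1, 0, 0, 1, 0, 1],
}
print(majority_vote(predictions) == truth)  # True
```

Every batch still receives the correct label because the inverted model is always outvoted. scikit-learn's `VotingClassifier` with `voting="hard"` applies the same idea to fitted estimators.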

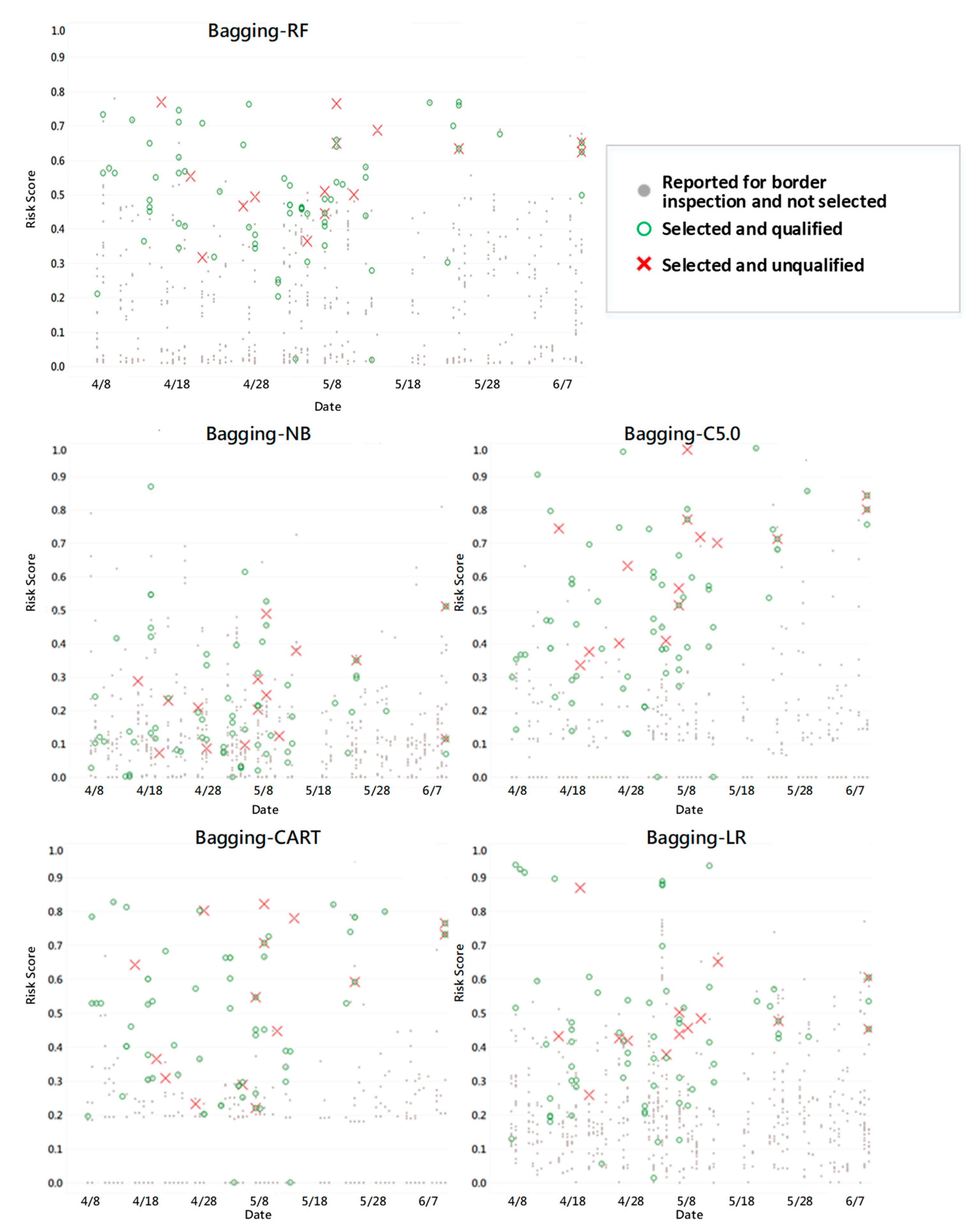

5.4. Evaluation of the Effectiveness of the Prediction Model after Its Launch

- EL V.2 outperforms random sampling. After the ensemble learning model EL V.2 developed in this study was launched online, its predictions from 2020 to 2022 were reviewed. Across all general sampling cases in each year, the unqualified rate was 3.74% in 2020, 4.16% in 2021, and 3.01% in 2022, all significantly higher than the 2.09% in 2019. Moreover, the unqualified rates of the cases recommended for sampling inspection by the ensemble model in 2020, 2021, and 2022 were 5.10%, 6.36%, and 4.39%, respectively, also significantly higher than the 2.09% obtained under random sampling inspection in 2019.

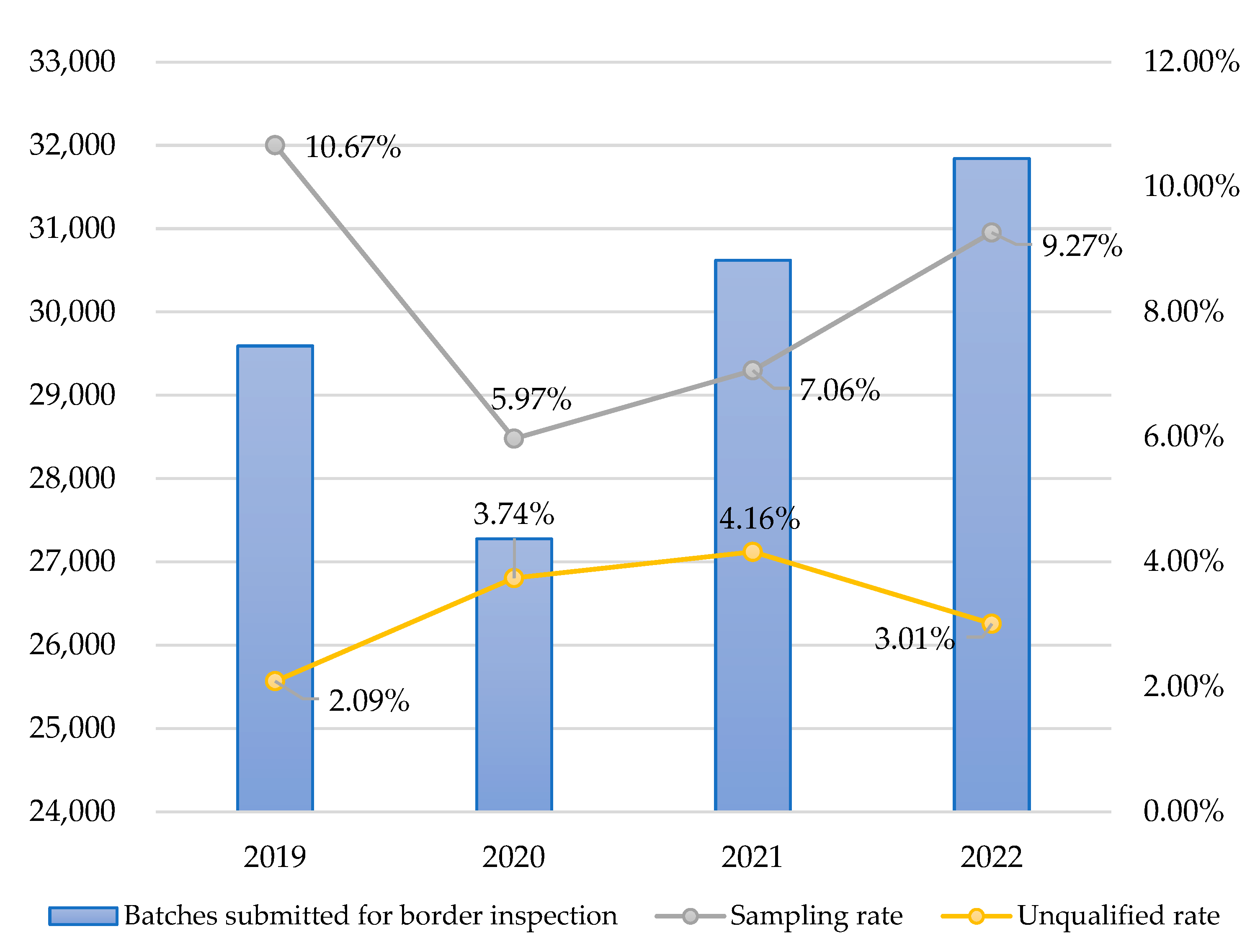

- The ensemble learning model should be re-modeled periodically. Table 12 shows that the unqualified rate increased from 2019 to 2021 but decreased slightly in 2022 (Figure 5). A further chi-square test showed that the unqualified rate in 2022 was still significantly higher than that in 2019 (p < 0.001) (Table 14). However, for ensemble prediction models built from multiple machine learning algorithms, the factors and data required for modeling often change with the external environment and with policy. Re-modeling is necessary to adjust to “data drift” or “concept drift” in the real world and to prevent model failure. Drift refers to changes over time, driven by hidden external factors, that degrade predictive performance; because the data change over time, the model’s ability to make accurate predictions may decline. Therefore, data drift must be monitored and the modeling factors reviewed in a timely manner. When new data are collected for re-modeling, data produced by the model’s own predictions should be avoided to prevent the new model from overfitting. Enabling the model to keep adapting to changes in the external environment will be a sustained effort in future work.

- A trade-off must be struck between the unqualified-batch hit rate and computational efficiency. Although the model constructed with seven algorithms (EL V.2) improved the rejection rate, it took more than one minute to compute for approximately 0.1% of batches. Because the model is designed to help border inspectors make fast sampling decisions, and considering computational efficiency and real-time prediction, batches whose computation exceeds one minute are automatically assigned to random sampling so that a model failure does not delay border inspection.
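The one-minute fallback rule could be implemented along these lines. This is a hypothetical sketch: `decide_sampling`, the 60-second default, and the `random_rate` used for the random-sampling draw are assumptions, since the source does not state how the random sample is drawn; `slow_model` is a made-up stand-in for a model call that exceeds its time budget.

```python
import concurrent.futures
import random
import time
from typing import Callable, Optional, Tuple

def decide_sampling(predict: Callable[[], bool],
                    timeout_s: float = 60.0,
                    random_rate: float = 0.1,
                    rng: Optional[random.Random] = None) -> Tuple[bool, str]:
    """Return (inspect?, source). Uses the model's answer if it arrives within
    `timeout_s`; otherwise falls back to a random-sampling draw."""
    rng = rng or random.Random()
    executor = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = executor.submit(predict)
    try:
        return bool(future.result(timeout=timeout_s)), "model"
    except concurrent.futures.TimeoutError:
        # Hypothetical fallback: inspect with probability `random_rate`.
        return rng.random() < random_rate, "random_fallback"
    finally:
        # Do not wait for a slow model. Python threads cannot be killed, so the
        # worker finishes in the background and its result is discarded.
        executor.shutdown(wait=False)

def slow_model() -> bool:
    """Hypothetical stand-in for a model call that exceeds the time budget."""
    time.sleep(0.5)
    return True
```

For example, `decide_sampling(slow_model, timeout_s=0.1)` returns a random-sampling decision tagged `"random_fallback"` instead of blocking the inspection workflow. A production system would more likely enforce the limit in the serving layer, but the decision logic is the same.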

5.5. Research Limitations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Abbreviated Name | Full Name |
|---|---|
| ACR | Accuracy rate |
| AUC | Area Under the ROC Curve |
| C5.0 | Decision Tree C5.0 |
| CART | Decision Tree CART |
| CPI | Corruption Perceptions Index |
| EL V.1 | First-generation ensemble learning prediction model |
| EL V.2 | Second-generation ensemble learning prediction model |
| EN | Elastic Net |
| FTMS | Food Traceability Management System |
| GBM | Gradient Boosting Machine |
| GDP | Gross Domestic Product |
| HDI | Human Development Index |
| IFIS | Import Food Information System |
| IMS | Inspection Management System |
| LR | Logistic Regression |
| LRI | Legal Rights Index |
| NB | Naïve Bayes |
| PMDS | Product Management Decision System |
| PPV | Positive Predictive Value |
| PREDICT | Predictive Risk-based Evaluation for Dynamic Import Compliance Targeting System |
| RASFF | Rapid Alert System for Food and Feed |
| RF | Random Forest |
| ROC | Receiver Operating Characteristic |
| RPFBS | Registration Platform of Food Businesses System |
| SMOTE | Synthetic Minority Oversampling Technique |


| Type | Factors | Data Sources |
|---|---|---|
| Product | Value, net weight, inspection methods, blacklisted products, packaging methods, validity period, products for which international recall alerts have been issued, manufacturing date, expiry date, etc. | Taiwan Food Cloud: data on border inspections; product inspection and testing data; product alerts. Information on international public opinion and product recall alerts: United States Food and Drug Administration (US FDA), https://www.fda.gov; Food Safety and Inspection Service (FSIS) of the U.S. Department of Agriculture (USDA), https://www.fsis.usda.gov; Rapid Alert System for Food and Feed (RASFF) of the European Union, https://ec.europa.eu/food/safety/rasff_en; Canadian Food Inspection Agency (CFIA), http://inspection.gc.ca; Food Standards Agency (FSA) of the United Kingdom, https://www.food.gov.uk; Food Safety Authority of Ireland (FSAI), https://www.fsai.ie; Food Standards Australia New Zealand (FSANZ), http://www.foodstandards.gov.au; Consumer Affairs Agency (CAA) of Japan, https://www.recall.caa.go.jp; Singapore Food Agency (SFA), https://www.sfa.gov.sg; China Food and Drug Administration (CFDA), http://gkml.samr.gov.cn; Foodmate Network of China, http://news.foodmate.net; Centre for Food Safety (CFS) of Hong Kong, http://www.cfs.gov.hk |
| Border inspection | Transportation time, month of inspection, quarter of inspection, year of inspection, method of transportation, agent importation, re-exportation, customs district, etc. | Taiwan Food Cloud: management data of border inspections |
| Customs broker | Number of declarations filed, number of border inspection cancellations, number of days from the previous importation, rate of change of number of days taken for importation, number of cases of non-conforming labels and external appearances, number of batches forfeited or returned, number of inspections, number of failed inspections, number of failed document reviews, number of product classes, etc. | Taiwan Food Cloud: food company registration data; data on border inspections; business registration data |
| Importer | Capital, years of establishment, number of branches, number of downstream vendors, number of company registration changes, number of late deliveries, sole focus on importation (yes/no), number of lines of businesses, new company (yes/no), district of registration, branch company (yes/no), blacklisted importer (yes/no), county/city, number of preliminary inspections, GHP inspections, HACCP inspections, label inspections, product inspections, number of lines of food businesses, factory registration (yes/no), delayed declaration of goods receipt/delivery (yes/no), interval between importations, variations in the interval between importations, variations in the number of days taken for importation, variations in total net weight, number of declarations filed, number of cases of non-conforming Chinese labels and external appearances, value, net weight, number of non-releases, number of batches detained, forfeited or returned, number of failed inspections, number of inspections, number of failed document reviews, number of border inspection cancellations, number of manufacturers, number of product classes for which declarations have been filed, total number of classes, etc. | Taiwan Food Cloud: food company registration data; data on border inspections; product inspection and testing data; product flow data; business registration data |
| Manufacturer | Trademarks, interval between importations, rate of change of interval between importations, internationally alerted manufacturer (yes/no), internationally alerted brand (yes/no), number of cases of non-conforming Chinese labels and external appearances, number of batches detained, forfeited or returned, number of failed inspections, number of inspections, number of failed document reviews, number of declarations filed, number of border inspection cancellations, number of importers, number of product classes, etc. | Taiwan Food Cloud: food company registration data; data on border inspections; product inspection and testing data; product alerts. Information on international public opinion and product recall alerts: US FDA, https://www.fda.gov; FSIS, https://www.fsis.usda.gov; CFIA, http://inspection.gc.ca; FSA, https://www.food.gov.uk; RASFF, https://ec.europa.eu/food/safety/rasff_en; FSAI, https://www.fsai.ie; FSANZ, http://www.foodstandards.gov.au; CAA, https://www.recall.caa.go.jp; SFA, https://www.sfa.gov.sg; CFDA, http://gkml.samr.gov.cn; Foodmate Network of China, http://news.foodmate.net; CFS, http://www.cfs.gov.hk |
| Country of manufacture | Country of manufacture of products subjected to inspection | Data on border inspections |
| | GDP, economic growth rate, GFSI, CPI, HDI, LRI, regional PRI | Information on international public opinion and product recall alerts: https://data.oecd.org/gdp/gross-domestic-product-gdp.htm; https://www.imf.org/en/Publications; https://foodsecurityindex.eiu.com/; https://www.transparency.org/en/cpi/2020/index/nzl; http://hdr.undp.org/en/2020-report; https://data.worldbank.org/indicator/IC.LGL.CRED.XQ; https://www.prsgroup.com/regional-political-risk-index/ |

| Characteristic Factor | Times | Characteristic Factor | Times | Characteristic Factor | Times | Characteristic Factor | Times |
|---|---|---|---|---|---|---|---|
| Country of production | 70 | Declaration acceptance unit | 17 | Whether there is a trademark | 5 | Frequency of business registration changes | 1 |
| Inspection method | 64 | Advance release | 17 | Product registration location | 5 | Is it an import broker? | 1 |
| Product classification code | 64 | Cumulative sampling number of imports | 17 | Regional political risk index | 5 | Average price per kilogram | 1 |
| Blacklisted vendor | 47 | Human development index | 17 | Tax registration data available? | 5 | Acceptance month | 1 |
| Cumulative number of unqualified imports in sampling inspection | 45 | Packaging method | 15 | Non-punctual declaration rate of delivery | 5 | Acceptance season | 1 |
| Dutiable price in Taiwan dollars | 44 | Capital | 14 | Input/output mode | 4 | Acceptance year | 1 |
| Total net weight | 35 | Import cumulative number of new classifications | 13 | Percentage of remaining validity period for acceptance | 4 | Overdue delivery | 1 |
| Global food security indicator | 29 | Years of importer establishment | 10 | Whether there is factory registration | 4 | Any business registration change? | 1 |
| Type of obligatory inspection applicant | 26 | Number of companies in the same group | 10 | New company established within the past three months | 3 | Product classification code | 1 |
| Legal rights index | 26 | Is it a pure input industry? | 10 | Number of GHP inspections | 3 | Number of GHP inspection failures | 1 |
| Blacklisted product | 25 | Customs classification | 10 | Accumulated number of unqualified imports | 3 | Number of HACCP inspections | 1 |
| Storage and transportation conditions | 23 | County and city level | 10 | Transportation time | 2 | Number of HACCP inspections | 1 |
| Packaging materials | 21 | Total number of imported product lines | 8 | GDP growth rate | 2 | Manufacturing date later than effective date | 1 |
| Cumulative number of reports | 20 | Number of downstream manufacturers | 7 | Number of branch companies | 2 | Valid for more than 5 years | 1 |
| Total classification number of imports | 20 | Is it a branch company? | 7 | Number of overdue deliveries | 2 | Acceptance date later than manufacturing date | 1 |
| Corruption perceptions index | 18 | Number of non-review inspections | 7 | Any intermediary trade? | 2 | Acceptance date later than the effective date | 1 |
| GDP | 17 | Rate of non-timely declaration of goods received | 7 | Cumulative number of imports not released | 2 | Number of business projects in the food industry | 1 |

| Type | Definition |
|---|---|
| True Positive, TP | An inspection application batch that the model predicted as unqualified and that was actually unqualified. |
| False Positive, FP | An inspection application batch that the model predicted as unqualified but that was actually qualified. |
| True Negative, TN | An inspection application batch that the model predicted as qualified and that was actually qualified. |
| False Negative, FN | An inspection application batch that the model predicted as qualified but that was actually unqualified. |
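The evaluation indices in the results tables can be computed directly from these four counts. The sketch below is an illustration under stated assumptions: judging from the reported numbers, the sampling rate appears to be (TP + FP)/total and the rejection rate appears to equal PPV; both are inferences, not definitions given in this section. Fβ uses its standard formula, and the names `Confusion` and `evaluate` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass(frozen=True)
class Confusion:
    tp: int  # predicted unqualified, actually unqualified
    fp: int  # predicted unqualified, actually qualified
    tn: int  # predicted qualified, actually qualified
    fn: int  # predicted qualified, actually unqualified

def evaluate(c: Confusion, beta: float = 1.0) -> Dict[str, float]:
    """Return the indices as fractions (multiply by 100 for percentages)."""
    total = c.tp + c.fp + c.tn + c.fn
    flagged = c.tp + c.fp  # batches the model recommends for inspection
    ppv = c.tp / flagged if flagged else 0.0
    recall = c.tp / (c.tp + c.fn) if c.tp + c.fn else 0.0
    b2 = beta ** 2
    # F_beta = (1 + b^2) TP / ((1 + b^2) TP + b^2 FN + FP); beta = 1 gives F1.
    denom = (1 + b2) * c.tp + b2 * c.fn + c.fp
    return {
        "ACR": (c.tp + c.tn) / total,
        "Recall": recall,
        "PPV": ppv,
        "F_beta": (1 + b2) * c.tp / denom if denom else 0.0,
        "SamplingRate": flagged / total,  # inferred definition
        "RejectionRate": ppv,             # inferred: matches PPV in the tables
    }

# Row A1 (Bagging-C5.0) of the validation-set results.
a1 = evaluate(Confusion(tp=3, fp=70, tn=2451, fn=135))
print({k: round(100 * v, 2) for k, v in a1.items()})
```

Rounded, this reproduces row A1 (ACR 92.3%, recall 2.2%, PPV 4.1%, F1 2.8%, sampling rate 2.75, rejection rate 4.11). As a general property of Fβ, β > 1 weights recall more heavily than PPV and so tends to favour models that flag more batches (a higher sampling rate), while β < 1 does the opposite.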

| Imbalanced Data Processing Method and Sampling Ratio (Qualified:Unqualified) | Precision (PPV) | Recall | F1 |
|---|---|---|---|
| SMOTE 7:3 | 6.03% | 64.91% | 11.03% |
| SMOTE 6:4 | 5.68% | 66.15% | 10.46% |
| SMOTE 5:5 | 5.48% | 75.16% | 10.22% |
| SMOTE 4:6 | 4.94% | 77.33% | 9.28% |
| SMOTE 3:7 | 4.80% | 81.68% | 9.06% |
| Proportional amplification 7:3 | 4.62% | 87.89% | 8.77% |

| Data Set | Combination Number | Algorithm | ACR | Recall | PPV | F1 | AUC | TN | FP | TP | FN | Sampling Rate (%) | Rejection Rate (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Validation set | A1 | Bagging-C5.0 | 92.3% | 2.2% | 4.1% | 2.8% | 60.6% | 2451 | 70 | 3 | 135 | 2.75 | 4.11 |
| | A2 | Bagging-CART | 86.6% | 25.4% | 12.2% | 16.5% | 69.5% | 2269 | 252 | 35 | 103 | 10.79 | 12.20 |
| | A3 | Bagging-EN | 27.9% | 90.6% | 6.2% | 11.5% | 68.0% | 617 | 1904 | 125 | 13 | 76.31 | 6.16 |
| | A4 | Bagging-GBM | 84.7% | 37.7% | 13.9% | 20.4% | 72.3% | 2200 | 321 | 52 | 86 | 14.03 | 13.94 |
| | A5 | Bagging-LR | 83.0% | 31.2% | 10.8% | 16.0% | 68.0% | 2164 | 357 | 43 | 95 | 15.04 | 10.75 |
| | A6 | Bagging-NB | 69.7% | 60.1% | 9.9% | 17.1% | 73.2% | 1769 | 752 | 83 | 55 | 31.40 | 9.94 |
| | A7 | Bagging-RF | 93.4% | 0.7% | 2.6% | 1.1% | 71.0% | 2483 | 38 | 1 | 137 | 1.47 | 2.56 |
| | A8 | EL | 85.5% | 28.3% | 12.0% | 16.8% | 72.5% | 2235 | 286 | 39 | 99 | 12.22 | 12.00 |
| | B1 | Bagging-C5.0 | 89.7% | 22.5% | 15.6% | 18.4% | 72.7% | 2353 | 168 | 31 | 107 | 7.48 | 15.58 |
| | B2 | Bagging-CART | 88.4% | 19.6% | 12.0% | 14.9% | 68.7% | 2323 | 198 | 27 | 111 | 8.46 | 12.00 |
| | B3 | Bagging-EN | 7.7% | 97.8% | 5.2% | 9.9% | 69.7% | 71 | 2450 | 135 | 3 | 97.22 | 5.22 |
| | B4 | Bagging-GBM | 90.1% | 26.8% | 18.6% | 22.0% | 73.1% | 2359 | 162 | 37 | 101 | 7.48 | 18.59 |
| | B5 | Bagging-LR | 87.9% | 28.3% | 14.9% | 19.5% | 71.2% | 2299 | 222 | 39 | 99 | 9.82 | 14.94 |
| | B6 | Bagging-NB | 79.6% | 50.0% | 12.7% | 20.3% | 73.3% | 2048 | 473 | 69 | 69 | 20.38 | 12.73 |
| | B7 | Bagging-RF | 90.6% | 24.6% | 18.9% | 21.4% | 75.2% | 2375 | 146 | 34 | 104 | 6.77 | 18.89 |
| | B8 | EL | 88.2% | 31.9% | 16.6% | 21.8% | 74.0% | 2300 | 221 | 44 | 94 | 9.97 | 16.60 |
| | C1 | Bagging-C5.0 | 93.4% | 11.6% | 23.2% | 15.5% | 67.2% | 2468 | 53 | 16 | 122 | 2.59 | 23.19 |
| | C2 | Bagging-CART | 86.6% | 39.9% | 16.8% | 23.6% | 69.7% | 2248 | 273 | 55 | 83 | 12.34 | 16.77 |
| | C3 | Bagging-EN | 81.3% | 11.6% | 4.1% | 6.0% | 50.1% | 2145 | 376 | 16 | 122 | 14.74 | 4.08 |
| | C4 | Bagging-GBM | 88.9% | 33.3% | 18.5% | 23.8% | 73.0% | 2318 | 203 | 46 | 92 | 9.36 | 18.47 |
| | C5 | Bagging-LR | 86.1% | 33.3% | 14.2% | 20.0% | 69.0% | 2244 | 277 | 46 | 92 | 12.15 | 14.24 |
| | C6 | Bagging-NB | 72.5% | 58.7% | 10.7% | 18.1% | 73.7% | 1847 | 674 | 81 | 57 | 28.39 | 10.73 |
| | C7 | Bagging-RF | 94.6% | 7.2% | 38.5% | 12.2% | 75.4% | 2505 | 16 | 10 | 128 | 0.98 | 38.46 |
| | C8 | EL | 92.0% | 23.2% | 22.9% | 23.0% | 73.6% | 2413 | 108 | 32 | 106 | 5.27 | 22.86 |
| | D1 | Bagging-C5.0 | 92.2% | 21.7% | 23.1% | 22.4% | 73.2% | 2421 | 100 | 30 | 108 | 4.89 | 23.08 |
| | D2 | Bagging-CART | 87.3% | 28.3% | 14.0% | 18.8% | 72.9% | 2282 | 239 | 39 | 99 | 10.46 | 14.03 |
| | D3 | Bagging-EN | 54.2% | 50.7% | 5.7% | 10.3% | 52.6% | 1370 | 1151 | 70 | 68 | 45.92 | 5.73 |
| | D4 | Bagging-GBM | 91.1% | 20.3% | 18.2% | 19.2% | 74.2% | 2395 | 126 | 28 | 110 | 5.79 | 18.18 |
| | D5 | Bagging-LR | 90.1% | 22.5% | 16.7% | 19.1% | 70.1% | 2366 | 155 | 31 | 107 | 7.00 | 16.67 |
| | D6 | Bagging-NB | 77.4% | 52.2% | 11.9% | 19.3% | 73.7% | 1986 | 535 | 72 | 66 | 22.83 | 11.86 |
| | D7 | Bagging-RF | 91.0% | 29.0% | 22.0% | 25.0% | 76.4% | 2379 | 142 | 40 | 98 | 6.84 | 21.98 |
| | D8 | EL | 90.2% | 28.3% | 19.4% | 23.0% | 75.1% | 2359 | 162 | 39 | 99 | 7.56 | 19.40 |
| | E1 | Bagging-C5.0 | 92.3% | 9.4% | 14.1% | 11.3% | 66.3% | 2442 | 79 | 13 | 125 | 3.46 | 14.13 |
| | E2 | Bagging-CART | 84.8% | 33.3% | 12.9% | 18.6% | 68.4% | 2210 | 311 | 46 | 92 | 13.43 | 12.89 |
| | E3 | Bagging-EN | 88.2% | 3.6% | 2.7% | 3.1% | 58.1% | 2341 | 180 | 5 | 133 | 6.96 | 2.70 |
| | E4 | Bagging-GBM | 88.1% | 27.5% | 14.9% | 19.3% | 71.5% | 2304 | 217 | 38 | 100 | 9.59 | 14.90 |
| | E5 | Bagging-LR | 85.9% | 32.6% | 13.8% | 19.4% | 69.1% | 2239 | 282 | 45 | 93 | 12.30 | 13.76 |
| | E6 | Bagging-NB | 73.2% | 58.0% | 10.9% | 18.3% | 73.7% | 1867 | 654 | 80 | 58 | 27.60 | 10.90 |
| | E7 | Bagging-RF | 94.5% | 2.9% | 26.7% | 5.2% | 73.1% | 2510 | 11 | 4 | 134 | 0.56 | 26.67 |
| | E8 | EL | 91.1% | 16.7% | 15.9% | 16.3% | 70.9% | 2399 | 122 | 23 | 115 | 5.45 | 15.86 |
| | F1 | Bagging-C5.0 | 92.4% | 7.2% | 11.9% | 9.0% | 71.1% | 2447 | 74 | 10 | 128 | 3.16 | 11.90 |
| | F2 | Bagging-CART | 89.9% | 9.4% | 8.3% | 8.8% | 67.2% | 2377 | 144 | 13 | 125 | 5.90 | 8.28 |
| | F3 | Bagging-EN | 62.3% | 19.6% | 2.9% | 5.1% | 55.6% | 1629 | 892 | 27 | 111 | 34.56 | 2.94 |
| | F4 | Bagging-GBM | 91.1% | 16.7% | 16.0% | 16.3% | 72.9% | 2400 | 121 | 23 | 115 | 5.42 | 15.97 |
| | F5 | Bagging-LR | 90.4% | 18.8% | 15.3% | 16.9% | 68.4% | 2377 | 144 | 26 | 112 | 6.39 | 15.29 |
| | F6 | Bagging-NB | 79.7% | 47.8% | 12.4% | 19.6% | 73.6% | 2053 | 468 | 66 | 72 | 20.08 | 12.36 |
| | F7 | Bagging-RF | 90.9% | 17.4% | 15.9% | 16.6% | 74.3% | 2394 | 127 | 24 | 114 | 5.68 | 15.89 |
| | F8 | EL | 91.7% | 10.1% | 12.5% | 11.2% | 72.1% | 2423 | 98 | 14 | 124 | 4.21 | 12.50 |

| Data Set | Combination Number¹ | Algorithm | ACR | Recall | PPV | F1 | AUC | TN | FP | TP | FN | Sampling Rate (%) | Rejection Rate (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Test set | C1 | Bagging-C5.0 | 94.7% | 8.0% | 15.7% | 10.6% | 68.0% | 3921 | 70 | 13 | 150 | 2.00 | 15.66 |
| | C2 | Bagging-CART | 89.4% | 38.7% | 15.6% | 22.2% | 71.6% | 3649 | 342 | 63 | 100 | 9.75 | 15.56 |
| | C3 | Bagging-EN | 88.9% | 9.2% | 4.6% | 6.1% | 53.2% | 3678 | 313 | 15 | 148 | 7.90 | 4.57 |
| | C4 | Bagging-GBM | 87.8% | 39.9% | 13.8% | 20.5% | 72.0% | 3584 | 407 | 65 | 98 | 11.36 | 13.77 |
| | C5 | Bagging-LR | 49.7% | 64.4% | 4.9% | 9.1% | 51.6% | 1960 | 2031 | 105 | 58 | 51.42 | 4.92 |
| | C6 | Bagging-NB | 74.3% | 52.8% | 8.0% | 13.9% | 66.8% | 2999 | 992 | 86 | 77 | 25.95 | 7.98 |
| | C7 | Bagging-RF | 95.8% | 1.8% | 18.8% | 3.4% | 72.5% | 3978 | 13 | 3 | 160 | 0.39 | 18.75 |
| | C8 | EL | 90.9% | 31.9% | 16.4% | 21.6% | 69.9% | 3725 | 266 | 52 | 111 | 7.66 | 16.35 |
| | D1 | Bagging-C5.0 | 91.2% | 12.3% | 8.2% | 9.8% | 69.3% | 3767 | 224 | 20 | 143 | 5.87 | 8.20 |
| | D2 | Bagging-CART | 89.5% | 14.7% | 7.5% | 9.9% | 67.6% | 3693 | 298 | 24 | 139 | 7.75 | 7.45 |
| | D3 | Bagging-EN | 56.7% | 52.1% | 4.7% | 8.6% | 57.1% | 2272 | 1719 | 85 | 78 | 43.43 | 4.71 |
| | D4 | Bagging-GBM | 93.1% | 13.5% | 13.0% | 13.3% | 71.0% | 3844 | 147 | 22 | 141 | 4.07 | 13.02 |
| | D5 | Bagging-LR | 92.4% | 16.6% | 13.0% | 14.6% | 65.3% | 3811 | 180 | 27 | 136 | 4.98 | 13.04 |
| | D6 | Bagging-NB | 81.3% | 39.9% | 8.7% | 14.3% | 66.8% | 3313 | 678 | 65 | 98 | 17.89 | 8.75 |
| | D7 | Bagging-RF | 86.2% | 33.1% | 10.4% | 15.8% | 68.5% | 3525 | 466 | 54 | 109 | 12.52 | 10.38 |
| | D8 | EL | 91.4% | 19.6% | 12.3% | 15.1% | 69.0% | 3763 | 228 | 32 | 131 | 6.26 | 12.31 |
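
Every rate in these tables can be derived from the four confusion-matrix counts. The Python sketch below assumes the usual definitions (ACR as accuracy, PPV as TP/(TP + FP), sampling rate as the share of batches flagged for inspection, and rejection rate equal to PPV); `ConfusionCounts` and `border_metrics` are hypothetical names. Under these definitions the C8 (EL) counts reproduce the reported ACR of 90.9%, recall of 31.9%, PPV of 16.35%, F1 of 21.6%, and sampling rate of 7.66%.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tn: int  # qualified batches the model would release
    fp: int  # qualified batches flagged for inspection
    tp: int  # unqualified batches flagged for inspection (hits)
    fn: int  # unqualified batches the model would release (misses)


def border_metrics(c: ConfusionCounts) -> dict:
    """Return the table's metrics as percentages (assumed standard definitions)."""
    total = c.tn + c.fp + c.tp + c.fn
    flagged = c.tp + c.fp        # batches the model suggests sampling
    unqualified = c.tp + c.fn    # batches that actually failed inspection
    recall = c.tp / unqualified if unqualified else 0.0
    ppv = c.tp / flagged if flagged else 0.0
    f1 = 2 * ppv * recall / (ppv + recall) if ppv + recall else 0.0
    return {
        "ACR": 100 * (c.tp + c.tn) / total,
        "Recall": 100 * recall,
        "PPV": 100 * ppv,
        "F1": 100 * f1,
        "SamplingRate": 100 * flagged / total,
        "RejectionRate": 100 * ppv,  # rejection rate of sampled batches equals PPV
    }


# C8 (EL) row of the test-set table
m = border_metrics(ConfusionCounts(tn=3725, fp=266, tp=52, fn=111))
print({k: round(v, 2) for k, v in m.items()})
```

The C8 counts (318 flagged batches, 52 hits, out of 4154) are the same figures reported for EL V.2 in 2019.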

| Data Year | Inspection Application Batches (Overall) | Sampling Rate (Overall) | Rejection Rate (Overall) | Prediction Batches¹ (EL V.2) | Suggested Inspection Batches (EL V.2) | Sampling Rate (EL V.2) | Hit Batches (EL V.2) | Hit Rate (EL V.2) |
|---|---|---|---|---|---|---|---|---|
| 2019 | 29,573 | 10.68% | 2.09% | 4154 | 318 | 7.66% | 52 | 16.35% |

| Model Differences | EL V.1 | EL V.2 | Description |
|---|---|---|---|
| Screening of characteristic risk factors | | Characteristic factors were screened with simple statistical methods (single-factor analysis and stepwise regression). | Prevents collinearity among factors, so that the remaining factors are more independent and important. |
| Add algorithms | 5 algorithms | 7 algorithms | When the predictive performance of several models declines, models with AUC > 50% can still be retained for integration, improving the robustness of the ensemble. |
| Adjust model parameters | Fβ regulated the sampling inspection rate; a single setting was shared by all five models. | Fβ regulated the sampling inspection rate; each of the seven models was adjusted independently. | The sampling rate was regulated, with its elasticity set at 2–8%. |
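
The voting-based ensemble and the AUC > 50% retention rule can be illustrated with a small sketch. The text here does not specify the exact voting rule, so hard majority voting among the retained members is an assumption; each member applies its own, independently tuned threshold, as the EL V.2 column describes. `Member` and `flag_batch` are hypothetical names, the thresholds and scores are invented for illustration, and the AUC values are the EL V.2 figures reported in the AUC table of this section.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Member:
    name: str         # bagged base model, e.g. "Bagging-RF"
    auc: float        # validation AUC of this member
    threshold: float  # member-specific cut-off, tuned independently


def flag_batch(members: List[Member], scores: Dict[str, float],
               min_auc: float = 0.5) -> bool:
    """Hard majority vote (assumed rule): True means 'send batch for inspection'."""
    retained = [m for m in members if m.auc > min_auc]  # drop chance-level members
    if not retained:
        raise ValueError("no member passes the AUC filter")
    votes = sum(scores[m.name] >= m.threshold for m in retained)
    return 2 * votes > len(retained)  # strict majority of retained members


# AUCs from the EL V.2 row; thresholds and scores are hypothetical.
members = [
    Member("Bagging-EN", 0.6339, 0.5),
    Member("Bagging-LR", 0.6313, 0.5),
    Member("Bagging-GBM", 0.6267, 0.5),
    Member("Bagging-NB", 0.6213, 0.5),
    Member("Bagging-RF", 0.6141, 0.5),
    Member("Bagging-C5.0", 0.5772, 0.5),
    Member("Bagging-CART", 0.4940, 0.5),
]
scores = {"Bagging-EN": 0.7, "Bagging-LR": 0.6, "Bagging-GBM": 0.55,
          "Bagging-NB": 0.2, "Bagging-RF": 0.3, "Bagging-C5.0": 0.1,
          "Bagging-CART": 0.9}
print(flag_batch(members, scores))               # False: CART filtered out, 3 of 6 votes
print(flag_batch(members, scores, min_auc=0.0))  # True: all seven vote, 4 of 7
```

Soft voting, which averages member probabilities before applying a single threshold, would be an equally plausible reading of "voting-based"; the sketch only shows the hard-vote variant.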

| Beta (β) | PPV (Sampling Inspection Rejection Rate) | Recall (Hit Rate of Unqualified Products) | Border Inspection Application Batches | Sampling Inspection Batches | Sampling Rate | Fβ Threshold: Bagging-NB | Fβ Threshold: Bagging-C5.0 | Fβ Threshold: Bagging-CART | Fβ Threshold: Bagging-LR | Fβ Threshold: Bagging-RF |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 20.00% | 33.33% | 249 | 5 | 2.01% | 0.94 | 0.76 | 0.48 | 0.46 | 0.68 |
| 1.2 | 20.00% | 33.33% | 249 | 5 | 2.01% | 0.94 | 0.76 | 0.48 | 0.46 | 0.68 |
| 1.4 | 15.38% | 66.67% | 249 | 13 | 5.22% | 0.94 | 0.46 | 0.48 | 0.46 | 0.64 |
| 1.6 | 21.43% | 100.00% | 249 | 14 | 5.62% | 0.94 | 0.46 | 0.48 | 0.46 | 0.6 |
| 1.8 | 21.43% | 100.00% | 249 | 14 | 5.62% | 0.94 | 0.46 | 0.48 | 0.46 | 0.6 |
| 2 | 21.43% | 100.00% | 249 | 14 | 5.62% | 0.31 | 0.46 | 0.48 | 0.46 | 0.6 |
| 2.2 | 21.43% | 100.00% | 249 | 14 | 5.62% | 0.31 | 0.46 | 0.48 | 0.46 | 0.6 |
| 2.4 | 17.65% | 100.00% | 249 | 17 | 6.83% | 0.31 | 0.46 | 0.43 | 0.46 | 0.6 |
| 2.6 | 16.67% | 100.00% | 249 | 18 | 7.23% | 0.31 | 0.33 | 0.43 | 0.46 | 0.6 |
| 2.8 | 16.67% | 100.00% | 249 | 18 | 7.23% | 0.31 | 0.33 | 0.43 | 0.46 | 0.6 |
| 3 | 8.82% | 100.00% | 249 | 34 | 13.65% | 0.31 | 0.33 | 0.28 | 0.12 | 0.6 |
| 3.2 | 5.45% | 100.00% | 249 | 55 | 22.09% | 0.31 | 0.18 | 0.28 | 0.12 | 0.25 |
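
Fβ combines PPV and recall as Fβ = (1 + β²)·PPV·Recall / (β²·PPV + Recall), so β > 1 weights recall more heavily than PPV; this is consistent with the table, where larger β values push the thresholds down and the sampling rate up. How each model's threshold was searched is not stated here; the sketch assumes a simple sweep over candidate cut-offs on labeled validation data, keeping the one with the highest Fβ. `fbeta` and `best_threshold` are hypothetical helpers, and the toy scores are invented.

```python
from typing import Sequence


def fbeta(ppv: float, recall: float, beta: float) -> float:
    """F-beta score; beta > 1 favors recall, beta < 1 favors precision (PPV)."""
    b2 = beta * beta
    denom = b2 * ppv + recall
    return 0.0 if denom == 0 else (1 + b2) * ppv * recall / denom


def best_threshold(scores: Sequence[float], labels: Sequence[int],
                   beta: float, candidates: Sequence[float]) -> float:
    """Return the candidate cut-off with the highest F-beta (label 1 = unqualified)."""
    positives = sum(labels)
    best_t, best_f = candidates[0], -1.0
    for t in candidates:
        flagged = [y for s, y in zip(scores, labels) if s >= t]
        tp = sum(flagged)
        ppv = tp / len(flagged) if flagged else 0.0
        recall = tp / positives if positives else 0.0
        f = fbeta(ppv, recall, beta)
        if f > best_f:
            best_t, best_f = t, f
    return best_t


# Toy validation data (hypothetical): two unqualified batches out of six.
scores = [0.9, 0.7, 0.6, 0.5, 0.4, 0.2]
labels = [1, 0, 0, 0, 1, 0]
print(best_threshold(scores, labels, beta=1.0, candidates=[0.8, 0.35]))  # 0.8
print(best_threshold(scores, labels, beta=3.0, candidates=[0.8, 0.35]))  # 0.35
print(round(fbeta(0.2143, 1.0, beta=2.0), 3))  # beta = 2 row of the table: 0.577
```

Raising β from 1 to 3 moves the chosen cut-off from 0.8 to 0.35, trading PPV for recall in the same direction as the table.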

| Recommended Threshold | PPV (Unqualified Rate of Sampling Inspection) | Recall (Identification Rate of Unqualified Sampling) | Sampling Rate |
|---|---|---|---|
| 0.39 | 13.29% | 100.00% | 9.29% |
| 0.40 | 13.29% | 100.00% | 9.29% |
| 0.41 | 14.79% | 100.00% | 8.41% |
| 0.42 | 15.16% | 100.00% | 7.96% |
| 0.43 | 15.45% | 100.00% | 7.56% |
| 0.44 | 15.45% | 100.00% | 7.56% |
| 0.45 | 20.43% | 100.00% | 6.19% |
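
The 2–8% sampling-rate elasticity given for EL V.2 can be applied to a threshold table like this one. The sketch encodes the rows of the table and applies a hypothetical selection rule (stay inside the sampling band, keep recall at 100%, then take the highest PPV); the source does not state that the recommended threshold was chosen this way, and `ThresholdRow` and `pick_threshold` are illustrative names.

```python
from typing import List, NamedTuple, Optional


class ThresholdRow(NamedTuple):
    threshold: float
    ppv: float            # % of sampled batches that were unqualified
    recall: float         # % of unqualified batches that were sampled
    sampling_rate: float  # % of all batches sent for inspection


ROWS = [
    ThresholdRow(0.39, 13.29, 100.0, 9.29),
    ThresholdRow(0.40, 13.29, 100.0, 9.29),
    ThresholdRow(0.41, 14.79, 100.0, 8.41),
    ThresholdRow(0.42, 15.16, 100.0, 7.96),
    ThresholdRow(0.43, 15.45, 100.0, 7.56),
    ThresholdRow(0.44, 15.45, 100.0, 7.56),
    ThresholdRow(0.45, 20.43, 100.0, 6.19),
]


def pick_threshold(rows: List[ThresholdRow], low: float = 2.0, high: float = 8.0,
                   min_recall: float = 100.0) -> Optional[ThresholdRow]:
    """Hypothetical rule: inside the sampling band and at or above min_recall,
    return the row with the highest PPV (ties broken by the lower sampling rate)."""
    ok = [r for r in rows
          if low <= r.sampling_rate <= high and r.recall >= min_recall]
    if not ok:
        return None
    return max(ok, key=lambda r: (r.ppv, -r.sampling_rate))


print(pick_threshold(ROWS))  # threshold 0.45: PPV 20.43%, sampling rate 6.19%
```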

| Model Revision (AUC of Algorithm) | Bagging-EN | Bagging-LR | Bagging-GBM | Bagging-NB | Bagging-RF | Bagging-C5.0 | Bagging-CART |
|---|---|---|---|---|---|---|---|
| EL V.1 | - | 69.03% | - | 53.43% | 57.40% | 63.20% | 63.17% |
| EL V.2 | 63.39% | 63.13% | 62.67% | 62.13% | 61.41% | 57.72% | 49.40% |
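
AUC summarizes how well a model ranks unqualified batches above qualified ones, independently of any threshold; a value of 50% corresponds to random ranking, which is why AUC > 50% serves as the retention criterion for ensemble members. A minimal sketch of the pairwise (Mann–Whitney) estimate follows; the function name and toy data are illustrative only.

```python
from typing import Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Probability that a random unqualified batch (label 1) scores higher than
    a random qualified batch (label 0); ties count as half a win."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one batch of each class")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
```

The pairwise loop costs O(n·m); for test sets of several thousand batches, a sort-based rank computation or `sklearn.metrics.roc_auc_score` is the practical choice.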

| Year of Analysis | Model Revision | Number of Algorithms | Recall | PPV | F1 |
|---|---|---|---|---|---|
| 2020 | EL V.1 | 5 | 25.00% | 2.47% | 4.49% |
| 2020 | EL V.2 | 7 | 58.33% | 4.38% | 8.14% |

| Year (General Sampling Inspection Items) | Number of Inspection Application Batches | Overall Sampling Rate | EL Sampling Rate | Overall Rejection Rate | EL Rejection Rate |
|---|---|---|---|---|---|
| 2022 | 27,074 | 10.90% (2952/27,074) | 6.48% (1754/27,074) | 3.01% (89/2952) | 4.39% (77/1754) |
| 2021 | 23,670 | 9.14% (2163/23,670) | 6.24% (1478/23,670) | 4.16% (90/2163) | 6.36% (84/1478) |
| 2020 | 26,823 | 6.07% (1629/26,823) | 2.78% (745/26,823) | 3.74% (61/1629) | 5.10% (38/745) |
| 2019 | 29,573 | 10.68% (3157/29,573) | - | 2.09% (66/3157) | - |

| Year (General Sampling Inspection and Evaluation Items) | Annual Overall Rejection Rate (Unqualified Batches/Total Sampled Batches) | p Value (Overall) | EL Rejection Rate (EL Unqualified Batches/EL Sampled Batches) | p Value (EL) |
|---|---|---|---|---|
| 2022 | 3.01% (89/2952) | 0.022 * | 4.39% (77/1754) | 0.000 *** |
| 2021 | 4.16% (90/2163) | 0.000 *** | 6.36% (84/1478) | 0.000 *** |
| 2020 | 3.74% (61/1629) | 0.001 ** | 5.10% (38/745) | 0.000 *** |
| 2019 | 2.09% (66/3157) | - | - | - |
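
The table does not name the significance test. One common choice, assumed here, is a pooled two-proportion z-test (equivalent to a Pearson chi-square test without continuity correction) comparing each year's rejection rate with the 2019 baseline of 66/3157. Under that assumption the 2022 overall comparison gives p ≈ 0.022, in line with the reported value. The helper name `two_proportion_z_test` is hypothetical.

```python
import math
from typing import Tuple


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> Tuple[float, float]:
    """Pooled two-proportion z-test of x1/n1 versus x2/n2; returns (z, two-sided p)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


BASELINE_2019 = (66, 3157)  # unqualified / sampled batches in 2019

# (unqualified, sampled) batches taken from the table above
comparisons = {
    "2022 overall": (89, 2952),
    "2022 EL": (77, 1754),
    "2021 overall": (90, 2163),
    "2020 overall": (61, 1629),
    "2020 EL": (38, 745),
}
for label, (x, n) in comparisons.items():
    z, p = two_proportion_z_test(x, n, *BASELINE_2019)
    print(f"{label}: z = {z:.2f}, p = {p:.4f}")
```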

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, L.-Y.; Liu, F.-M.; Weng, S.-S.; Lin, W.-C. EL V.2 Model for Predicting Food Safety Risks at Taiwan Border Using the Voting-Based Ensemble Method. Foods 2023, 12, 2118. https://doi.org/10.3390/foods12112118


Chicago/Turabian Style: Wu, Li-Ya, Fang-Ming Liu, Sung-Shun Weng, and Wen-Chou Lin. 2023. "EL V.2 Model for Predicting Food Safety Risks at Taiwan Border Using the Voting-Based Ensemble Method" Foods 12, no. 11: 2118. https://doi.org/10.3390/foods12112118
