Abstract

There is a large number of Information Technology and Communication (ITC) tools that support scholarly activity. The central role of the peer-review process in publication has fostered a crowded market of technological tools in several formats. Despite this abundance, many tools are unexploited or underused because the academic community is unaware of them. In this study, we explored the availability and characteristics of the tools that assist the peer-review process. The aim was to provide a more comprehensive understanding of the tools currently available and to point to new trends for further development. An examination of the literature informed the creation of a novel taxonomy of the types of software available on the market. This new classification is divided into nine categories as follows: (I) Identification and social media, (II) Academic search engines, (III) Journal-abstract matchmakers, (IV) Collaborative text editors, (V) Data visualization and analysis tools, (VI) Reference management, (VII) Proofreading and plagiarism detection, (VIII) Data archiving, and (IX) Scientometrics and Altmetrics. Considering these categories and their defining traits, a curated list of 220 software tools was compiled using a crowdsourced database (AlternativeTo) to identify relevant programs and to outline ongoing trends and perspectives in the tools developed and used by scholars.

1. Introduction

Information and communications technologies have changed the nature and the tasks of the peer-review process for scholarly publications. Academics and publishers have adapted to the changes introduced by the digital format. The transition away from paper manuscripts has led to a revolution in the scholarly publishing industry. The digital format shortened publication times by systematizing submission and review. It also enabled new distribution schemes for articles, such as Open Access (OA) and subscription-based access, among other innovative platforms. While most of these changes have occurred on the publisher’s side, many new opportunities have also emerged in the way scholars produce and handle information. It is necessary to examine the nature and extent of the editorial process behind the production of scientific information, both to assess its depth and to understand how software developers have pursued opportunities to fulfill its functions in the past. This study is valuable not only for researchers but also for software developers. It can offer them critical insights for future development and for the exploration of new markets, customer segments, channels, and value propositions. Peer review is an activity that academics practice, but it is not necessarily the subject of their study. The development of a manuscript is inherently a multidisciplinary activity that requires the thorough examination and preparation of a specialized document. The process also operates like a complex system in which people perform diverse roles, such as authors, validators (by citing), project managers, and collaborators (co-authoring) [1]. Many researchers might be unaware of the roles they have to play and of the corresponding Information Technology and Communication (ITC) tools at their disposal. Moreover, compilations of software for the peer-review process are extremely rare, and the ones available deal only with reference managers and academic social networks.

Adoption of assistive technology for peer review is usually deficient, owing both to a lack of knowledge of the process itself and to the broad range of tools available to potential users. While undergraduate and graduate students might have a natural disposition to adopt new technologies, they lack the in-depth knowledge of the technicalities of peer review that more experienced scholars possess. Experienced users, on the other hand, are more prone to stick with tools that are familiar to them, but not necessarily the most efficient or current.

1.1. Research Question and Objectives

To provide a baseline of the activities involved in producing scholarly documents, we identified the most common tasks among scholars regardless of discipline, sector, or country. A new taxonomy was proposed based on a heterogeneous set of sources, such as guides for the development of scientific and academic writing skills [2,3,4], journal and editorial guidelines [5,6,7,8], comprehensive studies on editing and reviewing [9,10,11,12,13,14], guides for librarians [15,16,17,18,19,20,21,22], and policies published directly by organizations relevant to the process.

Applying this taxonomy to a sample of ITC tools can help us understand the extent and availability of these instruments. The analysis led us to question how well these tools can assist in completing the tasks required during peer review.

The peer-review process as a whole engages different actors. For this review, we focused on ITC tools from the author’s perspective, leaving other relevant viewpoints, such as those of reviewers or editors, for future work.

1.2. Peer-Review and the Digital Document

Peer review is the critical assessment of manuscripts submitted to journals by experts who are not part of the editorial staff [14]. This interaction within the scholarly community enables qualified experts to evaluate the competence, originality, and significance of research findings. These factors help publishers allocate resources appropriately, maintain specific standards of quality, provide consent or correct copyright, and, more subjectively, grant prestige and fame among the reviewers [23,24]. Conference proceedings and journal articles also serve practical functions in daily scholarly activity, such as recording the precedence and ownership of an idea for authors, and the dissemination, certification, and archiving of knowledge [25]. A significant use of the peer-review process is to link ongoing investigations with previously published and validated documents [26]. The ease and low cost of online data storage have led to the spread of policies that encourage or require public archiving of the data underlying publications [4]. Recently, research evaluation and publicly funded projects have opened a debate on the emerging relationships among the stakeholders of the peer-review process [27].

The peer-review process has changed considerably in the last few decades. These changes arose from the following problems: slow throughput in the analysis of documents, vulnerability to bias, and an overall lack of transparency in the interactions among authors, editors, and reviewers [28].

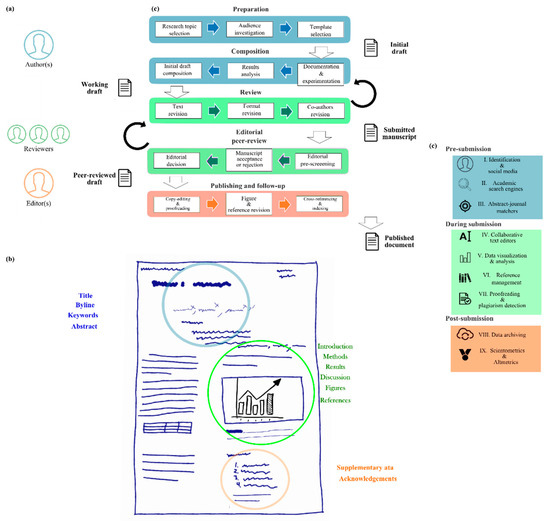

Figure 1 depicts a classical peer-review process. Figure 1a,b show the participants and the interactions involved in document handling before (blue), during (green), and after (orange) submission. Peer review begins when an author prepares a draft and submits it to a journal; an editor then screens the relevance and quality of the manuscript and, if both meet the standards of the journal, contacts independent experts and forwards the manuscript for further evaluation. Typically, the editor contacts two or three reviewers, who judge whether the manuscript should be accepted, accepted with minor or major changes, or rejected, based on scientific quality, clarity of presentation, and ethical compliance [23,24]. This process may involve two or three rounds aimed at improving the quality of the manuscript. Reviewing is most frequently carried out as a single-blind process (the authors do not know the identity of the reviewers). Blinding aims to reduce reviewer bias towards a document; when the names and affiliations of the authors are also removed from a manuscript, any bias for or against an author or institution might be eliminated or reduced [23]. The editor makes the final decision on publication and is responsible for overseeing the copy-editing of the article to make the text as clear and consistent as possible, to ensure the quality of graphs and illustrations, and to reference the cited articles appropriately. Like the process itself, scholarly journals have changed dramatically as a result of the convergence of information and communications technologies [9].

Figure 1.

Peer-review process. (a) Stakeholders; (b) editorial process; (c) Information Technology and Communication (ITC) assisting tools software taxonomy.
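The editorial loop described above (screening, review by two or three experts, and up to two or three rounds) can be sketched as a small decision procedure. This is a minimal illustration under stated assumptions, not a model of any journal's policy: the names `Decision`, `editorial_decision`, and `peer_review` are hypothetical, and the rule that the editor follows the most severe reviewer recommendation is an assumption made only to keep the example concrete (in practice, the editor weighs the reports and makes the final call).

```python
from enum import Enum


class Decision(Enum):
    """Editorial outcomes named in the text."""
    ACCEPT = "accept"
    MINOR_REVISION = "accept with minor changes"
    MAJOR_REVISION = "accept with major changes"
    REJECT = "reject"


# Hypothetical ordering, from least to most severe recommendation.
SEVERITY = [Decision.ACCEPT, Decision.MINOR_REVISION,
            Decision.MAJOR_REVISION, Decision.REJECT]


def editorial_decision(recommendations):
    """Assumed rule: the editor follows the most severe recommendation."""
    return max(recommendations, key=SEVERITY.index)


def peer_review(passes_screening, review_rounds, max_rounds=3):
    """Return the sequence of decisions taken for one manuscript.

    passes_screening: outcome of the editor's initial relevance/quality check.
    review_rounds: one list of reviewer recommendations per round
        (typically two or three reviewers each).
    max_rounds: number of rounds considered; the text mentions two or three.
    """
    if not passes_screening:
        return [Decision.REJECT]  # rejected before external review
    history = []
    for recommendations in review_rounds[:max_rounds]:
        decision = editorial_decision(recommendations)
        history.append(decision)
        if decision in (Decision.ACCEPT, Decision.REJECT):
            break  # final outcome reached; no further rounds
    return history
```

For example, a manuscript that receives a major and a minor recommendation, then a minor and an accept, then two accepts passes through three rounds before acceptance. A history that ends in a revision decision simply means the manuscript is still under revision.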

Critics of the most traditional forms of peer review have pointed out reviewers' biased attitudes towards submitted manuscripts [23,29]. Other important issues that peer review must address are confirmation bias, or the tendency of reviewers to accept outcomes that agree with commonly accepted theories [30]; the tendency to reject studies whose findings are negative (the file drawer problem) [30,31]; and ad hominem prejudices (based on race, gender, or socio-political affiliation). Innovative strategies such as double-blind review (the authors and the reviewers do not know each other's identities), open peer review, and supplementary post-publication review have also been explored as variants of the standard editorial policies that scholarly journals employ. These approaches differ, but they share the goal of improving the objectivity and accountability of the contributors. Besides the typical coordination and trust-building challenges of working across long distances, group scientific collaboration faces problems in sustaining long-term relationships between organizations. Bos et al. [32] identified three underlying barriers: first, cutting-edge and complex scientific knowledge is inherently difficult to transfer; second, individual researchers may have unmatched interests and goals; and third, institutions face legal deterrents arising from concerns about protecting intellectual property.

The principles of “Neutral Point of View” and good faith exhibited by online scientific communities share common ground with the collective social dynamic of scholarly knowledge. Recently developed tools for online communities, such as wikis or git [33], offer superior capabilities for handling non-linear project workflows and have permeated the writing process before and during the submission of a manuscript, adding the ability to contribute asynchronously and incrementally.

The importance of digital documents is reflected in the registration of 133 million digital objects to date. The Digital Object Identifier [34], or DOI, is a unique alphanumeric string, issued within the system governed by the International DOI Foundation, that provides persistent identification of content on digital networks. A DOI name is permanently linked to an abstract or physical object to provide a persistent link to current information about that object, including information about where to find it [35]. At the end of the peer-review stage, once a manuscript is approved by the editors, the publisher registers its DOI through a registration agency (e.g., CrossRef [36]). Once a document is available for distribution and redistribution, the impact of the information can be tracked in social media, scholarly media, and elsewhere online through the persistent identifier provided by these registration systems.
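A DOI name consists of a prefix, which begins with `10.` followed by a registrant code, and a suffix chosen by the registrant, separated by the first `/`; the suffix may itself contain further slashes. The following minimal sketch splits a DOI and builds a link through the public `https://doi.org/` resolver. The helper names are hypothetical, the validation is deliberately loose (it does not accept `doi:` or URL-prefixed forms), and `10.1000/182` is used only because it is the DOI of the DOI Handbook.

```python
from urllib.parse import quote

DOI_RESOLVER = "https://doi.org/"


def split_doi(doi):
    """Split a DOI name into (prefix, suffix) at the first '/'.

    The prefix must start with the directory indicator '10.'; the suffix
    is everything after the first slash and may contain more slashes.
    """
    prefix, sep, suffix = doi.strip().partition("/")
    if not sep or not prefix.startswith("10.") or not suffix:
        raise ValueError(f"not a DOI name: {doi!r}")
    return prefix, suffix


def doi_url(doi):
    """Build a resolvable link through the public doi.org proxy."""
    prefix, suffix = split_doi(doi)
    # Percent-encode characters that are unsafe in a URL path,
    # keeping '/' so suffixes with internal slashes survive.
    return DOI_RESOLVER + prefix + "/" + quote(suffix, safe="/")
```

Because the identifier, not the hosting URL, is what gets cited, the same DOI keeps resolving even if the publisher moves the article.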

2. Materials and Methods

Prior to examining the ITC tools, the authors established the following nine categories and 70 features by consensus: (I) Identification and social media, (II) Academic search engines, (III) Journal-abstract matchmakers, (IV) Collaborative text editors, (V) Data visualization and analysis, (VI) Reference management, (VII) Proofreading and plagiarism detection, (VIII) Data archiving, and (IX) Scientometrics and Altmetrics.

The systematic software search was conducted following the PRISMA guidelines for systematic literature reviews [37]. Although these guidelines were not designed for software tools, the methodology was adapted to the purpose of this study.

The software listed in this review was identified by searching both the scientific literature and the commercial applications marketplace. English-language articles from January 2006 through August 2016 were retrieved from ProQuest, EBSCOhost, IEEE Xplore, Web of Science, and ScienceDirect.

The search was extended to find alternatives to the leading applications in each category using AlternativeTo [38], to provide a more extensive and current study. This platform is a free crowdsourced service that provides a curated list of substitute software based on task and focus. The service supports listings for Windows, Macintosh, Web and Cloud applications, and Android and iOS applications.

The following guidelines were considered to determine the taxonomy and features for this study:

- Tools that are designed to aid the composition, submission, or review of peer-reviewed documents.

- Tools endorsed or recommended by scholars (e.g., software found in other compilation reviews) or by research-related institutions.

- Tools that offer direct solutions to improve the author’s experience during the peer review of their submissions.

2.1. Inclusion and Exclusion Criteria

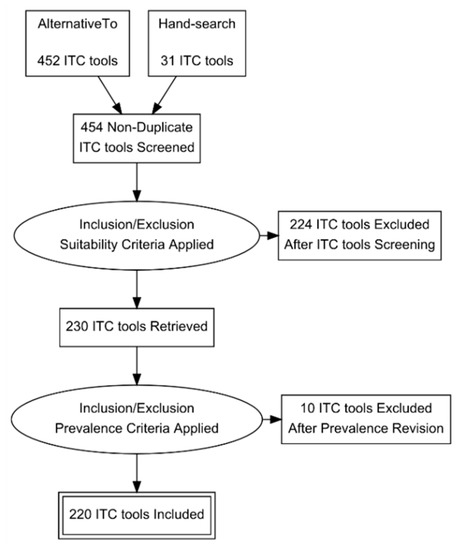

Some software applications were excluded because of a low compatibility score with respect to the functions of the taxonomy categories described in Table 1. Duplication and availability were other exclusion criteria considered in this study (see Figure 2).

Table 1.

Assisting software for the peer-review process taxonomy and function.

Figure 2.

Assisting software search strategy.

2.2. Search Strategy

Due to the lack of studies of ITC tools in online databases, a hand-search methodology was applied to a selection of reference materials for authors, reviewers, and editors. Examination of these sources supported the development of a general software taxonomy and led to the identification of representative software solutions, which were subsequently used to find additional category members, with AlternativeTo serving as the search engine for retrieving ITC tools for evaluation.

The platform allows developers or users to submit new applications, and users can provide feedback about their experiences. After identifying the leading software in each category, we performed an extended query for alternative software to create a more comprehensive list. Finally, discontinued software and software that did not offer any core function as a standalone application were excluded from the final list; for example, tools that only provide a link to another service do not meet the requirements. A more precise description of the software exclusion criteria is depicted in Figure 2. The combined search of the AlternativeTo database and the hand-search yielded a total of 483 ITC tools. Following the taxonomy from Table 1, 234 tools were excluded. The final list is provided in the Supplementary Materials.

2.3. Data Extraction

Every tool was surveyed to determine the presence or absence of each of the 70 features designated in this study (see Supplementary Material S1). Each feature was scored as one when the described function is present and zero when it is not. Secondary characteristics, such as usability, cost, or the degree of sophistication of the analysis, are outside the scope of this article. The overall score of each application, the sum of its feature scores, can range from 1 to 70; ITC tools with a score of zero were not included in the sample. The final list of 220 ITC tools was analyzed using GraphPad Prism 7 to generate frequency histograms.
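The binary scoring described above amounts to summing a 0/1 feature vector per tool, dropping tools that score zero, and counting how often each total occurs. The study used GraphPad Prism 7 for the histograms; the following Python sketch only illustrates the same arithmetic, with hypothetical tool names and five features instead of 70.

```python
from collections import Counter


def overall_scores(feature_matrix):
    """Sum binary feature indicators per tool.

    feature_matrix maps a tool name to a list of 0/1 values, one per
    surveyed feature (70 in the study). Tools scoring zero are dropped,
    mirroring the exclusion rule described in the text.
    """
    scores = {}
    for tool, features in feature_matrix.items():
        if any(value not in (0, 1) for value in features):
            raise ValueError(f"{tool}: features must be scored 0 or 1")
        total = sum(features)
        if total > 0:
            scores[tool] = total
    return scores


def score_histogram(scores):
    """Frequency of each overall score, i.e., the data behind a histogram."""
    return dict(sorted(Counter(scores.values()).items()))


# Hypothetical example with five features per tool.
matrix = {
    "ToolA": [1, 0, 1, 1, 0],
    "ToolB": [0, 0, 0, 0, 0],  # scores zero, so it is excluded
    "ToolC": [1, 1, 0, 1, 0],
    "ToolD": [1, 0, 0, 0, 0],
}
scores = overall_scores(matrix)
histogram = score_histogram(scores)
```

Here `scores` is `{"ToolA": 3, "ToolC": 3, "ToolD": 1}` and `histogram` is `{1: 1, 3: 2}`: one tool has a single feature and two tools have three.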

3. Results

Table 1 describes the main objective of each ITC tool category in the proposed taxonomy. Details of the individual functions are given in Supplementary Material S1, and the relationship between these categories and the peer-review process is described below.

3.1. Pre-Submission Tools

3.1.1. Identification and Social Media

Millions of researchers and scholars are publishing their work, and many of them have similar names. Identity ambiguity arises when authors share a common name with peers in their field [39]. This situation can lead authors with an existing publication record to lose track of some of their work or to have it attributed to a namesake. Identification of scholarly authors used to be a very competitive field among publishers. A few identification services are still available for researchers: Scopus Author Identifier [40], ResearcherID [41], arXiv Author ID [42], Open Researcher & Contributor ID (ORCID) [43], and services from many other publishers that have worked on developing an international standard name identifier. However, most of these developers have already joined, or are working closely with, the ORCID Group to make ORCID freely available and interoperable with existing ID systems [44].

ORCID is a free service that assigns a unique identification number to every author of a scientific document. Authors can create a profile with personal and professional information and receive a 16-character identifier as a unique identification token [45]. Registration in ORCID provides a means of matching authors and reviewers during the peer-review process. Moreover, the same record can be used to access other scholarly services. This identification system is free for end users, but research funding agencies, research organizations, and publishing entities must pay a membership fee.
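The last of the 16 characters of an ORCID iD is a check digit computed with the ISO/IEC 7064 MOD 11-2 algorithm, which ORCID documents publicly; a result of 10 is written as the letter X. The sketch below shows how a submission form might catch mistyped identifiers. The function names are illustrative, and the format check assumes the usual hyphenated display form of four blocks of four characters; `0000-0002-1825-0097` is the sample iD that ORCID uses in its own documentation.

```python
import re

# Four hyphen-separated blocks; only the final character may be 'X'.
ORCID_PATTERN = re.compile(r"[0-9]{4}-[0-9]{4}-[0-9]{4}-[0-9]{3}[0-9X]")


def orcid_check_digit(base_digits):
    """ISO/IEC 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for char in base_digits:
        total = (total + int(char)) * 2
    remainder = total % 11
    result = (12 - remainder) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid):
    """True if the iD is well formed and its check digit matches."""
    if not ORCID_PATTERN.fullmatch(orcid):
        return False
    digits = orcid.replace("-", "")
    return orcid_check_digit(digits[:15]) == digits[15]
```

A single mistyped digit changes the expected check character, so `is_valid_orcid("0000-0002-1825-0098")` returns `False`, while the sample iD above validates.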

A social networking service is a web-based service that allows individuals to create a public or semi-public profile with personal and professional information, maintain a list of contacts, and share information with other users. Some members of academia still stigmatize the use of social media as a waste of time. This perception is misguided and based on incorrect interpretations of the activities that academics carry out online [46]. The efficient use of social media, combining informal communication, networking, and publicity, is an advantage for younger scholars [47].

Social networks offer a framework for users to display their knowledge, experience, and expertise in pursuit of acquiring and maintaining a professional reputation [48,49,50]. Scholarly oriented social media services, such as Mendeley [51], ResearchGate [52], or Academia.edu [53], have replicated the information-sharing dynamics of general-audience services such as Facebook, Twitter, or Instagram. While some older academics still prefer to be discreet, a growing base of users is starting to promote their research, extend their relations with peers, and follow the activities of projects or research groups on scholarly oriented social media services.

ResearchGate is a social platform with more than 10 million members. The site requires users to sign up with an institutional e-mail address under an academic, corporate, medical, or general-audience profile. Users can list their publications, post questions, or answer other users' questions. It also keeps some basic scientometric information on contributions (citation counts) and on a collection of more than 100 million publications that are free to access. Other social features include interaction through questions and answers in forums and the possibility to post or apply for academic job positions.

The benefits of a scholar's online presence are professional visibility and recognition. Scholars are increasingly using social media to share journal articles, thoughts, opinions, updates from conferences and meetings, and upcoming events [46].

The adoption of digital distribution for academic publications changed the way researchers are evaluated. Beyond traditional indicators, Altmetrics measure the influence of scholarly output using social media. These tools can measure the impact of scholarly work using information from general social networks (e.g., Facebook data) or from more specialized services such as CiteULike [54] and other social platforms [55,56,57].

Social media help researchers gain recognition for their publications as authors. In contrast, the confidentiality required of reviewers makes it difficult for them to be credited for these contributions, even though reviewing tends to be time-consuming [58]. Publons [59] is a free online social media service that records, verifies, and highlights the contributions of scholars who act as reviewers without compromising anonymity. In this system, the reviewer profile displays the reviewer's level of activity, making it easier to cite reviewing work in a curriculum vitae or in funding applications [60]. This level is a performance score used to compare reviewers with their peers [61]. Wiley-Blackwell recently started a pilot partnership program in which reviewers of some journals could have their contributions accredited through this service. The publisher reported an improved reviewer acceptance rate and faster reviewing times [60]. This innovative approach helps scholars gain the recognition and visibility needed to improve and motivate peer-review activity [58].

3.1.2. Academic Search Engines

A literature review is a necessary step in any research work. Scholarly research begins when authors survey existing knowledge to determine the state of the art on a given topic. Nowadays, literature research that once took months or even years to complete can be finished significantly faster through the use of digital content. On the other hand, the number of articles and journals is continuously increasing, making it difficult for scholars to keep up to date in their fields [3]. Despite the digital revolution, conducting a research project using data that other people have stored or gathered is more or less the same activity as before. The difference between digital and traditional media collections is that physical libraries are smaller but well organized, whereas the digital world is more extensive but unorganized [62].

Connecting ongoing research with previously published work on a topic is also an objective of the peer-review process. Scholars must read widely on their topic before and during their studies, in a nonlinear manner [12]. Consulting sources to choose a topic is a mandatory step for authors in the pre-submission phase. The next step is reading and scanning resources, from preliminary background to in-depth focused reading, in preparation for the writing phase [62]. After a document is submitted, reviewers may ask authors to re-read and incorporate additional sources to increase the value of the material.

Databases and other search engines have become indispensable tools for the construction of academic knowledge. We recognize them as tools intended to support the search for scientific information. Academic databases, in particular, cover more in-depth and specialized subjects [63,64]. Search engines are the primary source of online information, and their academic versions offer additional features such as benchmarking tools, rankings, and Scientometrics. For researchers, search services must not only locate precise and relevant information but also help evaluate the quality of the information available [65].

Academic databases are organized online collections of scientific and scholarly publications, divided into two categories: bibliographic databases and full-text databases. Bibliographic databases provide information about a document, such as the title of the article, authors, abstract, references, links, and keywords, among others. In some databases, the full document is available for download, either as Open Access or behind a paywall. Other advanced functions, such as search history, table and image search, subscription alerts, bulk download of content, and individual content purchase, are only present in academic search engines. Other notable characteristics are the implementation of Web 2.0 and social networking tools, which facilitate interaction among researchers for finding potential partners, sharing information, and discussing research issues [65,66].

3.1.3. Journal-Abstract Matchmakers

Once a significant part of a manuscript is complete, choosing an adequate publication venue can expedite its distribution and increase the overall impact of the research. For authors, the number of reads and citations in the most influential journals is the ultimate goal. Corresponding authors, or the person in charge of submitting a manuscript, can choose journals with different scopes and influence. The reasons for selecting a specific journal are diverse and vary among researchers. Some authors focus on the highest impact factor available, while others are inclined to select journals with other specific characteristics. Other attractive journal traits include a broader audience, shorter reviewing times, more frequent publication, flexible Open Access options, and lower publication fees. Researchers can weigh all these traits to select the publication that best matches their interests. A few ITC tools are designed to help authors with this task. Using a few parameters (abstract, keywords, OA accessibility, research topic), they generate a list of probable matching journals. Seeking to attract more authors, publishers provide services that suggest the most suitable options among their titles. Most major publishers, such as Elsevier [67], Springer [68], or Wiley-Blackwell [69], offer some form of abstract-journal matchmaking within their portfolios. Compatibility across a larger number of journals can be evaluated with a service called Jane (Journal/Author Name Estimator). This service is available at no cost and includes all the journals in Medline [70]. A query in this application returns an ordered list of results with a confidence score for each item.
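Because the exact ranking methods of these matchmaking services are not described here, the sketch below only illustrates the general idea of scoring journals against an abstract: it ranks journals by cosine similarity between word-count vectors of the abstract and of a text profile for each journal. This bag-of-words approach is an assumption chosen for simplicity, not the algorithm used by Jane or by any publisher, and the journal names and profiles are hypothetical.

```python
import math
import re
from collections import Counter


def term_vector(text):
    """Lower-cased word counts: a crude bag-of-words representation."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 if empty)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def rank_journals(abstract, journal_profiles):
    """Order journals by similarity between the abstract and each profile.

    journal_profiles maps a journal name to representative text, e.g.,
    its aims and scope or previously published abstracts (assumption).
    Returns (journal, score) pairs, best match first.
    """
    query = term_vector(abstract)
    scored = [(name, cosine(query, term_vector(text)))
              for name, text in journal_profiles.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


profiles = {
    "Journal A": "peer review editorial process reviewers manuscripts",
    "Journal B": "plant genome sequencing crop genetics",
}
ranking = rank_journals(
    "We survey software tools that assist authors and reviewers "
    "during the peer review of manuscripts.", profiles)
```

Here "Journal A" ranks first with a positive score, while "Journal B" shares no words with the abstract and scores 0.0. Real services would at least remove stop words and weight rare terms more heavily.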

Another valuable tool for authors is SciRev [71], a platform for reviewing the peer-review process itself, where authors can share their personal experiences of the submission process. The information provided by researchers becomes a score for each journal and can be used in benchmarking. These benchmarks include the duration of the first review round, the total manuscript handling time, the immediate rejection time, and overall satisfaction.
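Turning individual author reports into journal benchmarks is essentially a grouped aggregation. The sketch below averages the four indicators mentioned above per journal. The `ReviewReport` fields, the use of days as the unit, and the use of the arithmetic mean are assumptions for illustration; they do not reproduce SciRev's own scoring.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class ReviewReport:
    """One author's experience with a journal (hypothetical fields)."""
    journal: str
    first_round_days: Optional[float]          # None if rejected immediately
    total_handling_days: float
    immediate_rejection_days: Optional[float]  # only set for desk rejections
    satisfaction: int                          # e.g., 1 (poor) to 5 (excellent)


def journal_benchmarks(reports):
    """Average each indicator per journal, skipping missing values."""
    by_journal = {}
    for report in reports:
        by_journal.setdefault(report.journal, []).append(report)
    summary = {}
    for journal, items in by_journal.items():
        first = [r.first_round_days for r in items
                 if r.first_round_days is not None]
        desk = [r.immediate_rejection_days for r in items
                if r.immediate_rejection_days is not None]
        summary[journal] = {
            "reports": len(items),
            "mean_first_round_days": mean(first) if first else None,
            "mean_total_handling_days": mean(r.total_handling_days for r in items),
            "mean_immediate_rejection_days": mean(desk) if desk else None,
            "mean_satisfaction": mean(r.satisfaction for r in items),
        }
    return summary


reports = [
    ReviewReport("Journal A", 60, 120, None, 4),
    ReviewReport("Journal A", 90, 180, None, 5),
    ReviewReport("Journal A", None, 3, 3, 3),
    ReviewReport("Journal B", 30, 90, None, 5),
]
summary = journal_benchmarks(reports)
```

With these made-up reports, Journal A averages 75 days for the first round and 101 days of total handling; a real benchmark would also need a minimum number of reports per journal before its averages mean much.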

The Open Access Spectrum Evaluation Tool quantitatively scores journals' degree of openness [72]. This tool offers a quantifiable mechanism for analyzing publication policies. The information can be used by authors, librarians, research funders, and government agencies to decide on policy direction. The registry comprises around one thousand entries. The evaluation of openness takes into consideration the rights of readers and authors after publication, access to the full-text article, and indexing in repositories.

Unfortunately, alongside valuable journals, many low-quality publications take advantage of authors' eagerness to get published, disregarding the quality of the manuscript for mere profit. Such journals and publishers are described as “predatory” when their sole purpose is to make money without ethical considerations. A site maintained by Jeffrey Beall [73], a librarian and professor at the University of Colorado Denver, offers curated lists of potential, possible, or probable predatory scholarly open-access publishers and journals, following criteria established by the Committee on Publication Ethics [74]. Duke University offers a valuable checklist for evaluating publishers that considers prior publications, fees, operating governing body, acceptance or rejection rates, memberships, associations, and the quality of the editorial board [75].

3.2. Submission Tools

3.2.1. Collaborative Text Processors

Text processing software is a universal tool in offices of any kind. In academia, the adoption of digitally formatted documents expedited the composition, distribution, and review of manuscripts. While a few journals still allow submission by mail, the possibility of contacting and interacting with co-authors and editors more directly offers considerable savings in time and resources. Online submission systems provided by editorial houses provide a means of distributing and tracking different versions of a manuscript among authors, reviewers, and editors. To prepare manuscripts, authors traditionally had two alternatives: a standalone word processor such as Microsoft Word, or code-based composition with LaTeX [76]. Microsoft Word employs a “What you see is what you get” (WYSIWYG) approach, in which the user immediately sees the document as it will appear on the printed page. In contrast, LaTeX is a markup-based typesetting language: the author writes source code in a separate editor and compiles it into the final document, embodying the principle “What you get is what you mean.” A user-friendly interface and full control over textual input and output made Microsoft Word (and other WYSIWYG alternatives such as OpenOffice [77] or LibreOffice [78]) the default tool for most disciplines. LaTeX is the preferred option among mathematicians, physicists, and computer science researchers (45 to 97%), owing to its native typesetting of mathematical equations and the availability of structured templates [79].

Collaborative writing is an essential skill in academia and industry. It combines the cognitive and communication requirements of writing with the social aspect of working together [80]. Until recently, collaborative writing consisted of individual academics writing on the same document at different times and sending it back and forth between collaborators.

Due to the interactive nature of the peer-review process and the thoroughness required to disclose technical and sensitive information publicly, neither composition platform is ideal. For Word documents, collaboration among peers becomes complicated because of the creation and management of different versions of the same material, and the file frequently requires an extensive amount of time and effort to reach its final form. Similarly, code-based composition has proven challenging and time-consuming because of its steep learning curve. In a recent study, Knauff and Nejasmic [81] suggested that scientific journal submission using LaTeX should be limited to articles with a considerable amount of mathematics.

Traditional text processing software has many limitations, but three current approaches can help overcome some of them. These options are as follows:

- Enhance the capabilities of Word using complementary software and services (plugins).

- Migrate to a cloud-based online word processing software that expedites the interaction among co-authors and editors.

- Use newer hybrid online platforms developed purposefully for research.

The first option improves the experience of researchers by adding complementary functionality to the standalone program through add-ins. Reference management software and proofreading capabilities are good examples of customized tools embedded in standalone text processors [3].

Regarding the second option, real-time collaborative editors are an emerging alternative to offline word processors. Learning in a collaborative setting is a social interaction involving a community of learners and researchers in which members acquire and share experience or knowledge [82]. Cloud-based services such as Google Drive, Microsoft OneDrive, Apple iCloud, Box, or Dropbox are already popular for keeping personal files synced and available across laptops, phones, and tablets. Migrating to web-based solutions allows the incorporation of collaborative functions that enable several authors to edit and format a document online. For teams, these tools provide affordable means to share raw data (images, sounds, and any other digital information) without repeatedly sending and receiving e-mails with embedded data. Academics, however, may be deterred from cloud-based storage by the perception of not having full control over their files. Privacy and sharing settings can enforce policies for controlling sensitive files; however, these are premium features that incur extra costs. Furthermore, editing a synced manuscript from different instances at the same time may lock the file or create additional copies of the document, likely resulting in conflicting versions. One significant benefit of collaborative tools is that they store all the revisions of a document, which can be used to gain insights into the progressive development of a manuscript. However, these tools come with some caveats: for instance, every contributor needs an account, and support for third-party services is limited.
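Stored revisions can be compared to see how a manuscript evolved. Cloud editors expose revision history through their own interfaces; the sketch below instead assumes that two revisions have been exported as plain text and uses Python's standard `difflib` module to produce a unified diff and a count of added and removed lines. The function names and the sample text are illustrative.

```python
import difflib


def revision_diff(old, new, old_label="revision 1", new_label="revision 2"):
    """Unified diff between two plain-text revisions, one entry per line."""
    return list(difflib.unified_diff(
        old.splitlines(), new.splitlines(),
        fromfile=old_label, tofile=new_label, lineterm=""))


def change_summary(old, new):
    """Count (added, removed) lines using SequenceMatcher opcodes."""
    matcher = difflib.SequenceMatcher(a=old.splitlines(), b=new.splitlines())
    added = removed = 0
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            removed += i2 - i1
        if tag in ("replace", "insert"):
            added += j2 - j1
    return added, removed


old = "Title\nDraft introduction.\nMethods TBD."
new = "Title\nRevised introduction.\nMethods TBD.\nResults added."
diff = revision_diff(old, new)
```

For these two revisions, `change_summary(old, new)` reports two added lines and one removed line (the rewritten introduction counts as one removal plus one addition). Counting from opcodes avoids misreading diff header lines as content.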

Text processors are evolving to become online collaborative platforms. The most widely used general collaborative word processor is Google Docs [83], although there are more technically oriented tools designed specifically for researchers [84]. The use of cloud-based application tools such as Google Docs or Office 365 [85] is continuously spreading in workplaces. These online versions perform in a very similar manner to traditional offline word processors.

Other collaborative text editing services, such as Typewrite [86], PenFlip [87], Etherpad [88], and Gobby [89], implement the concepts behind the joint development of wikis and blogs. These services use different markup languages (HTML, Markdown, or even LaTeX) to specify the layout and style of the text. Notable features of these programs include simple and straightforward interfaces, the possibility to add other contributors, and a chat window for interacting with them during drafting. However, citations, equations, and figures must be managed manually by the authors; therefore, for composing manuscripts, these solutions are mostly confined to early drafts.

Web-based LaTeX editors and WYSIWYG platforms are addressing these limitations of collaborative writing. These tools were developed expressly to provide a collaborative experience in the composition and publishing of academic documents. Online editors such as ShareLaTeX and Overleaf are built to ease the writing of LaTeX code. These services use one window to write the source and another to compile and preview the resulting PDF file.

ShareLaTeX [90] is an online LaTeX editor that allows real-time coding and compiling of projects to PDF format. Around 229 journal article templates included in the platform provide the means to create and share manuscripts with peers. Private and public projects, spell checking in several languages, and one-click compilation to PDF are features of free hosted accounts. Premium services include change tracking and Dropbox and GitHub [91] synchronization. ShareLaTeX is released under an open-source license, so individuals or organizations can host it privately.

Overleaf [92] (formerly WriteLaTeX) is based on LaTeX code and enhanced by a user-friendly interface that incorporates a synchronous WYSIWYG view and an extensive library of templates. Its large user base (around 400,000 users) is pushing the integration of third-party solutions: reference managers (CiteULike [54], Mendeley [51], and Zotero [93]), data archiving services (figshare [94], arXiv [95], and bioRxiv [96]), and data visualization tools (plot.ly [97] and Mind The Graph [98]).

Overleaf allows imports from the reference managers Zotero and CiteULike. Partnerships with third-party organizations make it possible to send manuscripts directly to editorial services, organizations, and journals (IEEE [99], OSA [100], MDPI [101], PeerJ [102], Scientific Reports [103], and F1000Research [104]).

Authorea [105] is a service founded in 2014 that already has around 60,000 registered users. It offers a WYSIWYG interface and an extensive library of journal templates (more than 8000). Several users can collaboratively edit different paragraphs at the same time. It also integrates PubMed [106] and Crossref [36] for citation discovery and contains a built-in reference manager (references can be imported from BibTeX files). Document version tracking and commenting are available to authors. Drafts can be exported to Word, PDF, or LaTeX. Dashboards for account management, bibliometric data, and private, locally hosted networks are available for institutional administrators. These online collaborative editing tools are offered free for public documents or as a subscription service for individuals or organizations, with fees ranging from 5 to 25 USD depending on the number of users and documents.

Fidus Writer [107,108], an open-source software project founded by the German Research Foundation, offers some of the core features of other collaborative text editors (collaborative writing, in-house reference management, and export to LaTeX or PDF) without the support of third-party organizations or a template library.

3.2.2. Data Visualization and Analysis

Data processing during research is a quintessential activity of authors. Communicating observations and insights drawn from theoretical or experimental analysis with text alone can be challenging. The visual nature of illustrations and charts gives them the power to reveal patterns such as changes, differences, similarities, or exceptions that could otherwise be difficult to interpret [109]. During the composition of a document, reviewers and editors (or thesis directors, for students) frequently guide authors to pay attention to the planning and execution of these visual aids. These aids can substantially increase the readability of a manuscript and even help disseminate an article. Attractive and appealing figures are sometimes rewarded with inclusion as journal covers or have a good chance of being featured in social and mainstream media. In some cases, where the eye appeal borders on art, these figures can be formally awarded [110]. The quality of these visual aids can play an essential role in the acceptance or rejection of a manuscript.

These images, also known as figures, can be qualitative or quantitative. Typically, qualitative information corresponds to diagrams, sketches, maps, or photos in the Introduction and Materials and Methods sections. Formats frequently used by scholars include mind maps, diagrams, schematics, experimental setups, and photos of prototypes. Tables and graphs (also known as plots), by contrast, present quantitative values; graphs display them within an area delineated by one or more axes. Almost invariably, these visual elements support the Results and Discussion sections of a document (with the possible exception of some letter formats or reviews) [109]. Matthews and Matthews [2] described two functions that figures serve in providing evidence when a visually notable event occurs. The first is to improve the efficiency with which information is presented to the reader. The second is to emphasize a particular feature of the research. Fortunately, the data visualization literature is rich in helpful resources on this matter. There is a consensus among authors that images should be self-explanatory and disclose information that would be hard to communicate without visual elements. The principle of excellence for traditional media is to provide the greatest number of ideas in the shortest time with the least ink in the smallest space [111]. In the past, publishers restricted the number of images included in an article (or charged additional fees). For the digital version, however, these restrictions can easily be circumvented by submitting supplementary materials (images, videos, audio, or other formats) to digital repositories linked to the article (see Section 3.3.2).

The minimum requirements for acceptance of a figure are technical (image size and resolution) and are described in the instructions to authors (ITA) of each publication. Basic editing of vector or bitmap images, such as resizing, cropping, and labeling, does not require expensive specialized software. Scholars can download free or open-source editing software (GIMP [112], Pixlr [113], Sumopaint [114], Paint.NET [115], Inkscape [116], or Vectr [117], among others) or access online web services for cross-platform editing on smartphones and tablets. In most cases, these tools are sufficient for authors to create the artwork for submission themselves; occasionally, some researchers may need to outsource these duties. In that case, authors are encouraged to approach recognized organizations such as the Guild of Natural Science Illustrators [118] or the Association of Medical Illustrators [119] and to consider the additional costs and copyright implications in advance [120]. Additionally, some editorial organizations offer illustration services directly. For this systematic review, we included the digital tools expressly designed to aid in producing visual aids for scholarly purposes.
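Checking the resolution requirement is simple arithmetic: the number of pixels needed along each side is the printed size in inches multiplied by the resolution in dots per inch (dpi). The sketch below uses a single-column width of 85 mm and 300 dpi purely as illustrative assumptions; the actual values must be taken from each publication's ITA.

```python
MM_PER_INCH = 25.4


def required_pixels(width_mm: float, height_mm: float, dpi: int = 300) -> tuple:
    """Pixel dimensions needed to print a figure at a given size and resolution."""
    width_px = round(width_mm / MM_PER_INCH * dpi)
    height_px = round(height_mm / MM_PER_INCH * dpi)
    return width_px, height_px


def meets_requirement(image_px: tuple, width_mm: float, height_mm: float,
                      dpi: int = 300) -> bool:
    """True if an image of image_px = (width, height) pixels is large enough."""
    need_w, need_h = required_pixels(width_mm, height_mm, dpi)
    return image_px[0] >= need_w and image_px[1] >= need_h


if __name__ == "__main__":
    # Hypothetical single-column figure: 85 mm wide, 60 mm tall, at 300 dpi.
    print(required_pixels(85, 60))
    print(meets_requirement((1200, 800), 85, 60))
```

For the assumed 85 mm by 60 mm figure, `required_pixels(85, 60)` returns `(1004, 709)`, so a 1200 by 800 pixel image is sufficient.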

The term data refers to any structured information in formats such as multidimensional arrays (matrices), spreadsheet-like tables, or time series. Basic data visualization can be performed with spreadsheet-based productivity tools. Microsoft Excel [121] is by far the most popular tool for generating and customizing plots from existing templates. This software, along with some open-source alternatives, uses a grid of cells to input, manipulate, and analyze data. While basic tools are sufficient for many researchers, others will face technical difficulties processing large amounts of data or complex algorithms. Another limitation is that the plot formats are largely limited to bar, line, scatter, and pie charts. To turn these plots into figures ready for submission, researchers typically need additional software to compose multiple plots in a single file or to employ more elaborate forms of data visualization (for example, color maps or three-dimensional charts). To overcome these limitations, there is a range of options that vary widely according to the specific requirements and technical background of the researcher. For projects where data processing is a critical component of the research, high-level programming languages can be used to produce figures. Python [122] has become one of the most popular programming languages. For interactive data analysis, exploratory computing, and data visualization, Python performs as well as other domain-specific open-source and commercial programming languages.

It is common to research, prototype, and test new ideas in a more domain-specific computing language such as Matlab [123] or R [124] and then port them to a larger production system written in Java, C#, or C++. NumPy [125], short for Numerical Python, is the foundational package for scientific computing in Python. Matplotlib [126] is the most popular Python library for producing plots and other two-dimensional (2D) data visualizations [126,127]. It was created by John D. Hunter and is now maintained by a large team of developers. It is well suited to creating publication-quality plots [128].
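As a sketch of how NumPy and Matplotlib can replace a multi-step spreadsheet workflow, the following script composes a two-panel figure with labeled axes and saves it both as a vector PDF and as a 300 dpi PNG. The figure size (7 by 3 inches, roughly a double-column width), the synthetic data, and the file names are assumptions for illustration; journals specify their own dimensions and file formats.

```python
import matplotlib

matplotlib.use("Agg")  # render to files; no display needed
import matplotlib.pyplot as plt
import numpy as np


def make_figure(stem: str = "figure1") -> list:
    """Build a two-panel figure and save it as vector PDF and 300 dpi PNG."""
    x = np.linspace(0, 2 * np.pi, 200)
    rng = np.random.default_rng(seed=0)  # fixed seed for reproducible data

    # Figure size in inches is an assumption; check the journal's ITA.
    fig, (ax_a, ax_b) = plt.subplots(1, 2, figsize=(7, 3), constrained_layout=True)

    # Panel (a): two curves with labeled axes and a legend.
    ax_a.plot(x, np.sin(x), label="sin(x)")
    ax_a.plot(x, np.cos(x), linestyle="--", label="cos(x)")
    ax_a.set_xlabel("x (rad)")
    ax_a.set_ylabel("Amplitude")
    ax_a.set_title("(a)", loc="left")
    ax_a.legend(frameon=False)

    # Panel (b): histogram of synthetic measurements in neutral gray.
    ax_b.hist(rng.normal(size=1000), bins=30, color="0.5")
    ax_b.set_xlabel("Value")
    ax_b.set_ylabel("Count")
    ax_b.set_title("(b)", loc="left")

    paths = [f"{stem}.pdf", f"{stem}.png"]
    for path in paths:
        fig.savefig(path, dpi=300)  # dpi matters for the raster (PNG) output
    plt.close(fig)
    return paths


if __name__ == "__main__":
    print(make_figure())
```

Keeping both panels in one `Figure` avoids composing separate plots in another program, and the same script regenerates the figure whenever the data change.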

Many researchers need to produce figures beyond the capabilities of spreadsheet programs. In these cases, there is specialized data analysis and graphing software such as Origin [129] from OriginLab, SigmaPlot [130] from Systat Software, or Prism [131] from GraphPad. These applications can process raw data and produce high-quality figures ready to be included in a presentation or submitted to a journal for review.

Data analysis is a crucial step in research for accepting or rejecting hypotheses based on evidence. Depending on the field of study and particular requirements, researchers may choose among a large number of data analysis applications. Joseph M. Hilbe reviewed some classical tools, such as SPSS, SigmaPlot, and SigmaStat, discussing their characteristics, capabilities, and scope [132,133].

SPSS, originally an acronym for “Statistical Package for the Social Sciences,” is a widely used statistical software package for business, health research, education, and the hard sciences that originated in 1968. The current version is commercialized by IBM [134] and offers the following functions:

- Editing and analyzing all sorts of data.

- Basic table and chart creation.

- Basic statistical tests and multivariate analyses.

- Nonparametric testing.

- Cluster analysis.

- Add-on modules for advanced statistics (GLMM, GLM, loglinear analysis, Bayesian statistics, etc.), complex sampling and testing (decision trees, principal components analysis, neural networks, and time series analysis), and forecasting and decision trees (ARIMA modeling, C&RT, and seasonal decomposition).
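Basic and nonparametric tests like those listed above are also available in free libraries. As an illustration, the sketch below uses SciPy (a Python library not covered in this review) to run Welch's t-test and the Mann-Whitney U test on two small hypothetical samples. The data and the 0.05 significance level are assumptions, and the example is not meant to reproduce SPSS output.

```python
from scipy import stats

# Hypothetical measurements from two groups (illustrative values only).
control = [5.1, 4.9, 5.6, 5.8, 6.0, 5.3, 5.5, 5.2]
treated = [6.2, 6.8, 5.9, 7.1, 6.5, 6.9, 6.4, 7.0]
ALPHA = 0.05  # conventional significance level (assumption)


def compare_groups(a, b, alpha=ALPHA):
    """Run a parametric and a nonparametric two-sample test on a and b."""
    results = {
        # Welch's t-test: compares means without assuming equal variances.
        "Welch t-test": stats.ttest_ind(a, b, equal_var=False),
        # Mann-Whitney U: rank-based alternative without a normality assumption.
        "Mann-Whitney U": stats.mannwhitneyu(a, b, alternative="two-sided"),
    }
    # Map each test to (statistic, p-value, whether H0 is rejected at alpha).
    return {
        name: (res.statistic, res.pvalue, res.pvalue < alpha)
        for name, res in results.items()
    }


if __name__ == "__main__":
    for name, (stat, p, reject) in compare_groups(control, treated).items():
        verdict = "reject H0" if reject else "fail to reject H0"
        print(f"{name}: statistic={stat:.3f}, p={p:.4f} -> {verdict}")
```

Reporting both tests side by side is a common safeguard when the normality assumption behind the t-test is doubtful for small samples.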

These features are offered with a subscription starting at 99 USD per month and 75 USD per month for the add-on modules. The success of SPSS has fostered competition and increased the number of options available in the marketplace. One example is SigmaStat [135], a wizard-based statistical software package designed to guide users through statistical analysis, priced at around $900 USD (single payment).

Another option is Statistica [136], an advanced analytics software package originally developed by StatSoft and now commercialized by TIBCO Software. This tool provides data analysis, data management, statistics, data mining, machine learning, and data visualization procedures. Pricing is quote-based, but an academic bundle for the core program is available for around $150 USD.

Nonetheless, data analysis programs can still be prohibitively expensive for many researchers. The open-source software R [124] offers a capable and free alternative for data visualization and analytics. R has a command-line interface and is described as a language and environment for statistical computing and graphics. Features such as support for parallel computing, availability on multiple platforms, and an open-source license have boosted the importance of R. However, some authors have argued that free software requires additional and extensive learning and training for students [137]. To reduce the learning curve of R, scholars typically rely on libraries of functions (packages) and an integrated development environment (IDE) such as RStudio [138].

3.2.3. Reference Management

Dissertations, journal articles, support materials for conferences, and fundraising proposals are formats that researchers are likely to compose throughout their careers. Writing is a process of making linguistic choices of syntactic structures and repertoires from the author's knowledge [139]. Virtually every scholar uses text processing software. Beyond the basic features, some writing tools offer advanced proofreading (spelling, grammar, and syntax checking), readability tracking [140], pre-formatted templates, and online collaborative editing capabilities.

The reference section is an organized list of the works referred to in a text; for the reader, it is the simplest way to access the works cited in an article. This section also serves as input for specialized citation databases that measure the influence of papers, authors, journals, and academic organizations on scientific progress [4]. One of the most demanding tasks in the research process is to manage and cite documents properly. Reference managers are essential tools for keeping a library of references and PDF files organized, among other functions [11,141].

The ideal referencing software should include the following features [142]:

- Support for all popular operating systems.

- Organization of references in groups or folders.

- File attachment and preview capabilities.

- Export and import in several file formats.

- Integration with traditional text processors.

- Database connectivity to facilitate literature search.

- Customization of reference styles.
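Style customization and format export, two of the features listed above, amount to rendering the same structured record in different ways. The following Python sketch is a simplified, hypothetical illustration: the `Reference` fields, the two simplified styles, and the sample record are assumptions and do not fully implement any official style guide. Real reference managers typically rely on style definitions such as the Citation Style Language (CSL), which is not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Reference:
    authors: list  # each entry as "Surname, Initials"
    year: int
    title: str
    journal: str
    volume: str
    pages: str


def author_year(ref: Reference) -> str:
    """Simplified author-year style (not a complete APA implementation)."""
    if len(ref.authors) == 1:
        names = ref.authors[0]
    else:
        names = ", ".join(ref.authors[:-1]) + " & " + ref.authors[-1]
    return f"{names} ({ref.year}). {ref.title}. {ref.journal}, {ref.volume}, {ref.pages}."


def numbered(ref: Reference, number: int) -> str:
    """Simplified numbered style, similar to those of many engineering journals."""
    names = ", ".join(ref.authors)
    return (f'[{number}] {names}, "{ref.title}," {ref.journal}, '
            f"vol. {ref.volume}, pp. {ref.pages}, {ref.year}.")


def to_bibtex(ref: Reference, key: str) -> str:
    """Export the record as a BibTeX @article entry."""
    fields = {
        "author": " and ".join(ref.authors),
        "title": ref.title,
        "journal": ref.journal,
        "volume": ref.volume,
        "pages": ref.pages,
        "year": str(ref.year),
    }
    body = ",\n".join(f"  {name} = {{{value}}}" for name, value in fields.items())
    return f"@article{{{key},\n{body}\n}}"


# A hypothetical record used only for illustration.
example = Reference(["Doe, J.", "Roe, A."], 2019, "A hypothetical study",
                    "Journal of Examples", "12", "34-56")

if __name__ == "__main__":
    print(author_year(example))
    print(numbered(example, 1))
    print(to_bibtex(example, "doe2019"))
```

Because every output is derived from one record, switching the style of an entire manuscript only requires switching the rendering function, which is the behavior reference managers expose through their style menus.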

Current reference management software offers a dynamic set of extra features besides citing while writing [143,144]. These additional tools aim to fulfill authors' requirements during the peer-reviewing process. There is a clear trend toward offering supplementary access to cloud versions of the RM tools via web-based platforms. The latest applications are web-only services (BibMe [145], Citethisforme [146], CiteULike [54], and EasyBib [147]) that allow referencing and citing without integration with a traditional word processor. Integration of these web services enables plagiarism detection during the citing process by highlighting uncited fragments of the text found online in the databases. This function is no substitute for the thorough, subscription-based professional services provided to editorial offices, such as iThenticate or Turnitin. Furthermore, the availability of cloud services has shifted the paradigm from single-user collections of PDF files stored and cited using a traditional word processor to group-based, cloud-stored services that allow a larger group of people to access and share resources.

The collaborative features present in reference management software include group creation and folder sharing (Citavi [148], CiteULike [54], Colwiz [149], EndNote [150], F1000Workspace [151], Mendeley [51], RefWorks [152], and Zotero [93]), joint editing of references (Citavi, Citethisforme, CiteULike, Colwiz, EasyBib, EndNote, F1000Workspace, Mendeley, and Zotero), and social network integration (CiteULike, Colwiz, Mendeley, and Zotero).

3.3. Post-Submission Tools

3.3.1. Proofreading and Plagiarism Detection

Plagiarism detection software can be used to identify parts of a manuscript that violate the ethical policies of journals and universities. Unfortunately, plagiarism cases have been detected at all levels and hierarchies of the peer-reviewing process. Authors commit plagiarism when they copy portions of other authors' text, equations, or illustrations verbatim or near-verbatim without appropriate acknowledgment. In some cases, this is not a deliberate practice but a byproduct of unawareness of policies, poor English proficiency, or a forgiving attitude toward plagiarism derived from cultural values [153,154,155].

Unintentional plagiarism is the most commonly identified type of plagiarism. Some authors ignore or disregard the importance of proper citing and referencing. The consequences of this attitude can be very severe: in some cases, they include the invalidation of previous work and a forced public apology, a ban from publishers, or expulsion from the author's home institution [156,157,158].

Automated text-matching detection grew out of universities' vigilance over student assignments and has been adopted more widely in the publishing industry only in recent years. It was made particularly popular by the CrossCheck service, which uses iParadigms' iThenticate software, also employed by Turnitin [159]. Crossref Similarity Check [160] is an initiative established to help publishers prevent professional plagiarism and other forms of scholarly misconduct. A manuscript can be compared against the content of 80,000 scientific, technical, and medical journals from 500 publishers to generate a similarity score. Ensuring research and publication integrity requires institutions to cooperate on all aspects of the process [10]. The Committee on Publication Ethics (COPE) does not recommend using percentages as cut-offs for detecting plagiarism; instead, it suggests that editors evaluate each paper individually. Nevertheless, the score itself provides a reasonable quality control check during all phases of the peer-reviewed publishing process [159].

Turnitin and iThenticate [161,162] are the leading anti-plagiarism services employed by universities and publishers to screen documents. Submitted manuscripts are compared with billions of documents: an archived copy of the internet, a local database of submitted student papers, and a database of periodicals, journals, and online publications. Services such as Turnitin or Urkund produce an originality report that estimates the percentage of matches between the document and previously published material [163]. Nowadays, most major publishers check papers at some phase of the peer-review process before publication. Upon screening, a high similarity score can prompt a request to the authors to revise the citation of sources in the submitted manuscript, the rejection of the paper, or a report to the corresponding authorities, decided on a case-by-case basis according to the ethical policies of the publication.
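The core idea behind an originality percentage can be sketched as overlap between word sequences. The Python example below splits texts into overlapping five-word sequences ("shingles") and reports the percentage of the submission's shingles that also appear in a set of sources. This is a deliberately simplified illustration under stated assumptions (five-word shingles, exact matching, a tiny in-memory corpus); it is not the algorithm used by Turnitin, iThenticate, or Urkund, whose methods and databases are proprietary.

```python
import re


def shingles(text: str, n: int = 5) -> set:
    """Set of word n-grams ("shingles") in a lowercased text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def similarity_percentage(submission: str, sources: list, n: int = 5) -> float:
    """Percentage of the submission's n-grams that also occur in any source."""
    submitted = shingles(submission, n)
    if not submitted:
        return 0.0  # text shorter than n words: nothing to compare
    seen = set()
    for source in sources:
        seen |= shingles(source, n)
    return 100.0 * len(submitted & seen) / len(submitted)


# Hypothetical texts used only for illustration.
submission = "The quick brown fox jumps over the lazy dog today."
sources = ["A quick brown fox jumps over the lazy dog yesterday."]

if __name__ == "__main__":
    print(f"{similarity_percentage(submission, sources):.1f}% overlap")
```

Here four of the submission's six shingles occur in the source, giving about 66.7%. As COPE notes, such a number only flags passages for an editor to inspect; it does not decide whether plagiarism occurred.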

For individuals, services such as Plagium [164], The Plagiarism Checker [165], PaperRater [166], DupliChecker [167], and Viper [168] are available, as well as other affordable alternatives such as Plagiarism detect [169] and Grammarly [170]. These tools can easily be used by individuals such as researchers, writers, or teachers.

3.3.2. Data Archiving

Providing the scientific community with the tools necessary to recreate experiments was one of the historical triggers that led to the modernization of the peer-review process. Clarity and openness in methodologies are increasingly portrayed as an attendant trait of quality research. While repeating experiments might be impractical, if not impossible, for reviewers, it is conceivable that making data available alongside the article will deter the submission of fraudulent work [171]. The ability to store and share complete datasets is a powerful tool for strengthening the integrity of the process while reaffirming the cooperative nature of knowledge. The growth in data repositories is motivated by opportunities for sharing datasets across communities, domains, and time [172]. Journals and funders increasingly require public data archiving as a condition for publication or funding, so that data are preserved and made accessible to all online, and also for ecological reasons.

A growing concern in peer review is how to address the importance of digital datasets and computational research methods within scientific research [173]. The ease and low cost of storing and sharing data online have led to the spread of policies that encourage or require public archiving of all data supporting a publication [4]. Submitting datasets and raw data to repositories can provide a framework for addressing the ethical and legal concerns of authors and their institutions and can reduce the fear of data being misused [174].

Because repositories vary widely in type, readability and traceability require appropriate documentation of the data format. Metadata standards provide standardized, structured, machine-readable descriptions that make operations on the dataset possible. There are plenty of metadata standards, both general-purpose (Dublin Core) and field-specific (FITS for astronomy, SDMX for statistical data, AgMES for agriculture). At the time of this study, around 40 entries were listed at the Digital Curation Centre [175].
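To make the idea of machine-readable metadata concrete, the sketch below serializes a dataset description using the 15 elements of the Dublin Core element set with Python's standard `xml.etree.ElementTree` module. The wrapping `metadata` element and the sample record are hypothetical; repositories define their own schemas and container formats around these elements.

```python
import xml.etree.ElementTree as ET

DC_NS = "http://purl.org/dc/elements/1.1/"
# The 15 elements of the Dublin Core Metadata Element Set.
DC_ELEMENTS = {
    "contributor", "coverage", "creator", "date", "description", "format",
    "identifier", "language", "publisher", "relation", "rights", "source",
    "subject", "title", "type",
}
ET.register_namespace("dc", DC_NS)  # serialize with the conventional dc: prefix


def dublin_core_xml(fields: dict) -> str:
    """Serialize a flat dict of Dublin Core elements to an XML string."""
    root = ET.Element("metadata")  # hypothetical wrapper element
    for element, value in fields.items():
        if element not in DC_ELEMENTS:
            raise ValueError(f"not a Dublin Core element: {element}")
        ET.SubElement(root, f"{{{DC_NS}}}{element}").text = value
    return ET.tostring(root, encoding="unicode")


# Hypothetical dataset description used only for illustration.
record = {
    "title": "Hypothetical sensor readings",
    "creator": "Doe, J.",
    "date": "2019-07-01",
    "type": "Dataset",
    "format": "text/csv",
    "identifier": "https://example.org/datasets/42",
}

if __name__ == "__main__":
    print(dublin_core_xml(record))
```

Rejecting unknown element names is what keeps the record machine-readable: any software that understands Dublin Core can interpret every field without field-specific knowledge.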

Journals, in partnership with libraries, have established processes for ensuring the persistence and longevity of their publications, and the same is expected for data presented in data papers. Using persistent web identifiers, such as digital object identifiers (DOIs), to identify and locate both data and articles increases the likelihood that those links will remain actionable in the future. Data repository portals increasingly provide users with in-line tools, such as quick-look viewers that allow particular datasets to be plotted. Journals might partner with data repositories so that researchers can submit data to a repository alongside their manuscript submission. This joint submission simplifies linking articles with the underlying data [173].
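Persistent identifiers stay actionable because a DOI can be resolved through the doi.org proxy regardless of where the content currently lives. The following sketch normalizes DOIs written in common forms (a bare DOI, a `doi:` prefix, or a resolver URL) and builds a resolver link. The regular expression is a common heuristic rather than the full DOI syntax, and the sample DOI uses a hypothetical prefix.

```python
import re
from urllib.parse import quote

# Heuristic: "10." + 4-9 digit registrant code + "/" + non-space suffix.
# Valid DOIs exist outside this pattern, so treat it as a sanity check only.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")
RESOLVER_PREFIXES = ("https://doi.org/", "http://doi.org/",
                     "https://dx.doi.org/", "http://dx.doi.org/", "doi:")


def normalize_doi(raw: str) -> str:
    """Strip a resolver URL or 'doi:' prefix and check the remaining DOI."""
    doi = raw.strip()
    for prefix in RESOLVER_PREFIXES:
        if doi.lower().startswith(prefix):
            doi = doi[len(prefix):]
            break
    if not DOI_PATTERN.match(doi):
        raise ValueError(f"does not look like a DOI: {raw!r}")
    return doi


def doi_url(raw: str) -> str:
    """Build an actionable link through the doi.org proxy."""
    return "https://doi.org/" + quote(normalize_doi(raw), safe="/:;()")


if __name__ == "__main__":
    # Hypothetical DOI (prefix 10.1234) used only for illustration.
    print(doi_url("doi:10.1234/example.5678"))
```

Storing the bare DOI and generating the link on demand keeps references valid even if the preferred resolver URL changes.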

The Registry of Research Data Repositories [176], or re3data.org, is a global registry of research data repositories from all academic disciplines that, as of July 2019, included more than 2000 entries. These repositories provide researchers, funding bodies, publishers, and scholarly institutions with permanent storage of and access to datasets. The registry offers a search engine and browsing by subject, content type, and country. Each research data repository is characterized by general information, access policy (open, restricted, or closed), legal aspects (type of license), technical aspects (data persistence and unique, citable identifiers), and quality standards (certification or support for repository standards). Many datasets are highly dynamic: they grow regularly or are duplicated, split, combined, corrected, reprocessed, or otherwise altered during use. DataCite [177] is a non-profit organization that supports the creation and allocation of DOIs and accompanying metadata. Nowadays, not only documents can be cited; data repositories can create means for data citation, discovery, and access. Data archives include services such as Dryad [178], Figshare [94], and Zenodo [179], where researchers can self-deposit research data with light documentation and minimal validation [174].

Dryad is a nonprofit organization that provides access to datasets at no cost. The service started in 2008 using the open-source DSpace repository software. The key features of the repository are flexibility regarding data format, limited-time embargoes that journals can set, and links between the manuscript and the dataset. While browsing and retrieving data is completely free, data submission incurs a Data Publishing Charge (DPC) of $120 USD, and data packages over 20 GB incur an additional cost of up to $50 for each additional 10 GB. However, institutional sponsors (associations, libraries, or companies) may cover these charges. Dryad welcomes data submissions associated with any published article. Moreover, it offers direct integration during the peer-reviewing process for 107 journals, as found in a recent survey. Editors are encouraged to integrate submission capabilities into their sites.
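The Dryad charges described above can be expressed as a small calculator. The sketch assumes that the full $50 applies to every started 10 GB block beyond the first 20 GB (the text only says "up to $50") and that a sponsor covers the whole charge; prices may have changed since this review, so the numbers are illustrative only.

```python
import math

BASE_DPC_USD = 120   # Data Publishing Charge per submission
INCLUDED_GB = 20     # size covered by the base charge
BLOCK_GB = 10        # size of each additional block
BLOCK_FEE_USD = 50   # assumed fee per started block (text says "up to $50")


def dryad_charge(size_gb: float, sponsored: bool = False) -> int:
    """Estimate the submission charge in USD under the assumptions above."""
    if sponsored:
        return 0  # assumes an institutional sponsor covers all charges
    extra_gb = max(0.0, size_gb - INCLUDED_GB)
    extra_blocks = math.ceil(extra_gb / BLOCK_GB)  # partial blocks count fully
    return BASE_DPC_USD + extra_blocks * BLOCK_FEE_USD


if __name__ == "__main__":
    for size in (5, 25, 45):
        print(f"{size} GB -> ${dryad_charge(size)}")
```

Under these assumptions, a 45 GB package exceeds the included 20 GB by 25 GB, which rounds up to three blocks, so `dryad_charge(45)` returns 270.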

Figshare [94] is a service that allows the deposit of any electronic information for ownership and sharing. Since 2011, it has supported researchers from all disciplines and assigned a digital object identifier to every document, which allows the authors of these documents to receive credit [180]. Academics can open an account at no cost and upload files of up to 5 GB. Unlimited storage space for public data is also available. For private archives, the service offers several sharing alternatives (private links, collaborative spaces, and collections) accessible from a profile page. A set of services to manage data and other content is also available to publishers and research organizations.

Zenodo [179] is a service developed by CERN (European Organization for Nuclear Research) as part of the OpenAIRE initiative to promote open scholarship and the discoverability and reusability of research publications and data. All research outputs, regardless of format or source, are accepted, with up to 50 GB per dataset at no cost. The service offers features similar to those of other providers, such as DOI assignment, collection-based management, and flexible licensing. It has the added value of automatically generating reports for funding agencies within the European Commission. Extended grant support for the National Science Foundation (US), Wellcome Trust (UK), Foundation for Science and Technology (Portugal), National Health and Medical Research Council (Australia), Netherlands Organization for Scientific Research (The Netherlands), Australian Research Council (Australia), Ministry of Science and Education (Croatia), and the Ministry of Education, Science and Technological Development (Serbia) is also reported as a feature of the service.

3.3.3. Scientometrics and Altmetrics

Scholarly and peer-reviewed documents can remain relevant long after being published. However, the success of a particular article or author cannot be reduced to a recipe. The prestige, influence, and relevance of any scholarly work are arguably hard to measure, but some quantitative characteristics can easily be traced after publication. Scientometrics, a term attributed to Vasily Vasilyevich Nalimov, describes the measurement of scientific activities [181]. This discipline quantifies the amount of money invested in research and development, the scientific personnel, and the production of intellectual property. A particularly relevant subfield focuses on the analysis of publications and their properties. A classical definition of bibliometrics is the application of mathematics and statistical methods to books and other media of communication [182].

The number of citations and the impact factor are the most common means of quantifying the success of authors and journals. At the simplest level, the journal impact factor indicates the average number of times that an article in a journal is cited by other manuscripts in a given year. The impact factor is calculated over a two-year window: for example, a journal's 2011 impact factor is the number of citations received in 2011 by items it published in 2009 and 2010, divided by the number of articles it published in those two years [9].
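Written out, the two-year impact factor for year Y is the number of citations received in Y by items published in Y-2 and Y-1, divided by the number of citable items published in those two years. The Python sketch below implements this ratio for a hypothetical journal; the citation and item counts are invented for illustration, and deciding which items count as "citable" is left to the caller.

```python
def impact_factor(citations: dict, citable_items: dict, year: int) -> float:
    """Two-year impact factor for `year`.

    citations[y]     -> citations received during `year` by items published in y
    citable_items[y] -> number of citable items the journal published in y
    """
    window = (year - 2, year - 1)
    cited = sum(citations.get(y, 0) for y in window)
    items = sum(citable_items.get(y, 0) for y in window)
    if items == 0:
        raise ValueError("no citable items in the two-year window")
    return cited / items


# Hypothetical journal: citations received in 2011, split by publication year.
citations_2011 = {2009: 340, 2010: 260}
items_published = {2009: 120, 2010: 130}

if __name__ == "__main__":
    print(impact_factor(citations_2011, items_published, 2011))
```

With these hypothetical counts, the 2011 impact factor is 600 / 250 = 2.4.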

Services such as Web of Science [183], Scopus [40], or Google Scholar [184] offer records of the total number of citations, citations by year, and other metrics for theses, conference proceedings, articles, and journals. Eugene Garfield, the founder of the Institute for Scientific Information, devised the impact factor in 1955 [185]. Impact factors are listed in the Journal Citation Reports, developed in the 1970s by Eugene Garfield and Irving Sher. Over the years, the Institute for Scientific Information has gone through several sales and acquisitions; today, the Journal Citation Reports is an annual publication by Clarivate Analytics. These rankings are particularly meaningful when authors seek the journal with the widest coverage, the greatest impact, and the most prestige for their paper. Scientometrics studies several aspects of the dynamics of science, with citations as a core element that establishes formal links between citing and cited publications. Scientometrics is the study of the quantitative aspects of the process of science as a communication system. Other widely used indicators include the Journal Impact Factor (JIF), the Immediacy Index, the cited half-life, the SJR (SCImago Journal Rank), the SNIP (Source Normalized Impact per Paper), and the h-index [186].
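The h-index listed above has a simple operational definition: an author has index h if h of their publications have each been cited at least h times. The following sketch computes it from a list of per-paper citation counts; the counts in the usage example are hypothetical.

```python
def h_index(citation_counts) -> int:
    """Largest h such that h publications have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break  # counts never increase while ranks grow: no larger h possible
    return h


if __name__ == "__main__":
    # Hypothetical citation counts for five papers.
    print(h_index([10, 8, 5, 4, 3]))
```

For these counts, four papers have at least four citations each but not five with at least five, so `h_index([10, 8, 5, 4, 3])` returns 4.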

Knowing scientometric indicators can help scholars evaluate the quality of scientific contributions. These metrics are also used to appraise the success of projects funded by governments, public entities, and private organizations. Furthermore, scientometrics can be a decisive factor in assigning jobs, endorsing promotions, and granting or denying scholarships and other forms of financial support. Publications in journals with a high impact factor benefit both the researcher and the institution that hosts the author: researchers can advance in their scientific careers and gain recognition in their field, while the institution, university, or research center benefits from having researchers who publish assiduously in high-impact journals [187]. Recently, social media has acquired a leading role in how information spreads. For science, the extended use of the social web makes these tools and services an important source of information to consider. Ultimately, social media may have the potential to inform about the societal impact of science.

Altmetrics is a broad class of statistics that attempt to capture research impact based on the social web. The term is short for alternative metrics and comprises indicators able to capture the diffusion of articles online. The influence of an author, article, or journal is measured by its spread across general social media services such as blogs, Facebook, Twitter, or YouTube. Compared with Scientometrics, Altmetrics provide insights into the relevance of a particular topic to a broader general audience. They are also quicker than citation tracking because they are not constrained by the completion of a full peer-reviewed publication process. These indicators can be considered an alternative or a complement to Scientometric indicators, which are available mainly through expensive license agreements from a small number of providers. Altmetrics data can be retrieved from a variety of sources [188,189,190,191].

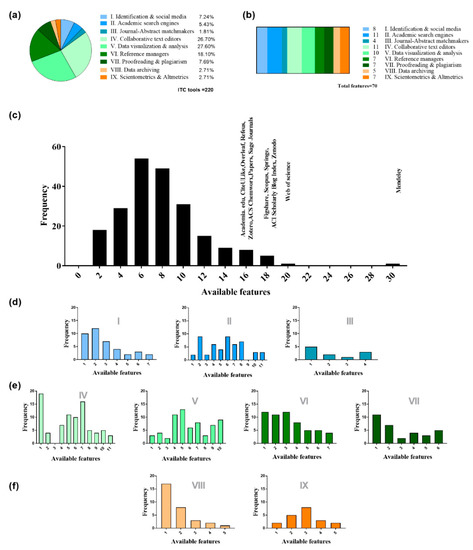

3.4. Review Result

Table 2 describes the individual and overall scores resulting from the systematic review. The individual values correspond to the nine-category taxonomy described above (see Figure 1c). A more comprehensive analysis of the systematic review results is depicted in Figure 3. Once the collection and exclusion methodology was applied, a total of 220 software tools were selected and evaluated. The number of tools per category varied considerably, reflecting the software available at the time of the review. For example, the smallest categories (VIII and IX) each accounted for 2.71% of the tools, whereas the largest, category V, accounted for 27.60% (see Figure 3a). All the tools were evaluated to determine whether they complied with the attributes described in Table 1 and the supplementary files. The number of attributes evaluated per category ranged from 4 to 11 (see Figure 3b). The overall score (see Figure 3c) shows that the tool with the highest number of features was Mendeley, with 29 of 70 functions (approximately 40%). Other noteworthy tools are Web of Science, Figshare, Scopus, Springer, ACI Scholarly Blog Index, and Zenodo. Figure 3d–f show the attribute frequency for the pre-submission, submission, and post-submission categories, respectively. A detailed breakdown of these data is provided in Supplementary File S2.

Table 2.

Top-ranked assisting ITC tools.

Figure 3.

ITC tools analysis: (a) distribution of characterized tools by nominal category; (b) number of features analyzed by category; (c) frequency versus overall number of available features of ITC tools; (d) frequency versus number of available features of pre-submission ITC tools (categories I, II, and III); (e) frequency versus number of available features of ITC tools used during submission (categories IV, V, VI, and VII); and (f) frequency versus number of available features of post-submission ITC tools (categories VIII and IX). More information about the scoring, with identification numbers (in brackets), is available in Supplementary File S2.

The overall score column in Table 2 shows the sum of the traits detected, regardless of the software category. Mendeley, Web of Science, and Scopus obtained the highest scores among all the reviewed software. Because the categories comprise different numbers of traits, the score should not be read as an indication of quality, but rather as a sign of how flexibly an ITC tool can assist the peer-reviewing process.
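The overall score described above amounts to counting the detected traits of each tool. The following is a minimal sketch, assuming each reviewed tool is recorded as its nominal category plus the set of taxonomy functions it was found to support. The `Tool` structure, the trait identifiers, and the helper names are hypothetical and are not the authors' actual scoring sheet; only the total of 70 functions and Mendeley's 29 detected functions come from the text. Because every trait counts equally, the resulting number reflects breadth of coverage rather than quality, in line with the caveat above.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

# Total number of functions in the taxonomy, as reported in the text.
TOTAL_FUNCTIONS = 70


@dataclass
class Tool:
    name: str
    category: str  # nominal taxonomy category, e.g. "VI"
    traits: Set[str] = field(default_factory=set)  # detected functions (hypothetical IDs)


def overall_score(tool: Tool) -> int:
    """Number of detected traits, regardless of the tool's nominal category."""
    return len(tool.traits)


def coverage_percent(tool: Tool, total: int = TOTAL_FUNCTIONS) -> float:
    """Share of all taxonomy functions covered by the tool, in percent."""
    return 100.0 * overall_score(tool) / total


def rank_tools(tools: List[Tool]) -> List[Tuple[str, int]]:
    """Tools ordered by overall score, highest first; ties keep input order."""
    scored = [(t.name, overall_score(t)) for t in tools]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical records: only Mendeley's count (29) is taken from the text.
    mendeley = Tool("Mendeley", "VI", {f"F{i}" for i in range(29)})
    example = Tool("Example tool", "V", {"F0", "F1", "F2", "F3"})
    print(rank_tools([example, mendeley]))  # [('Mendeley', 29), ('Example tool', 4)]
    print(round(coverage_percent(mendeley), 1))  # 41.4
```

Storing traits as a set means a function detected twice for the same tool is counted once, and the category field is kept only for grouping, since the overall score deliberately ignores it.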

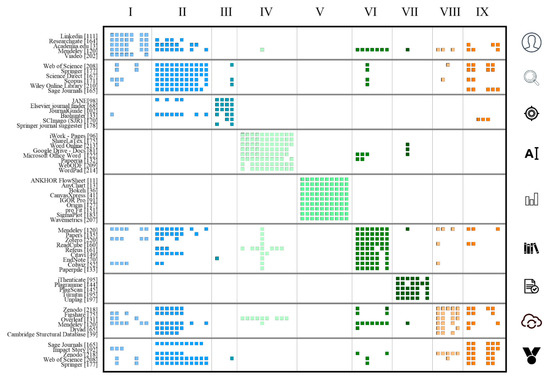

A closer look at the distribution of the top-ranked ITC tools (see Figure 4) shows that excelling in several categories coincides with a high overall score (e.g., Mendeley, SAGE Journals, and Springer services appear multiple times in the curated selection). Contrary to expectations, a few tools did not rank prominently in any particular category but still obtained a high overall score. For instance, ACI Scholarly Blog Index, CiteULike, Papers [192], ACS ChemWorx, Pubchase, and Authorea offer a wide variety of features without being ranked among the top five in any category. A likely explanation for this peculiarity is that the web-based platforms on which these services are built support more versatile solutions. ACS ChemWorx, Pubchase, and CiteULike combine features of reference managers with social media and Scientometrics, while Papers is a reference manager that incorporates powerful academic search engine capabilities.

Figure 4.

Top-ranked ITC tools by category trait map.

One difficulty in assessing the systematic review is the uneven number of tools across categories. However, the number of available tools can plausibly be taken as evidence of the audience's unequal interest in different types of software. More scholarship-specific categories, such as Journal-abstract matchmakers, Scientometrics and Altmetrics, and Data archiving solutions, serve smaller audiences and are consequently represented by fewer applications. Other categories, such as Identification and social media and Proofreading and plagiarism detection, fall in the middle range in terms of the number of applications available. We consider the most thriving categories to be those related to tasks performed while composing drafts for submission. For most scholars, writing a fully featured manuscript that complies with the requirements established by an academic authority is fundamental to their roles.

A glance at the origin of the ITC tools reveals three sources of development, each with different motivations and goals. The first group consists of publishers (e.g., Elsevier, Wiley, and Springer) that use these applications as distribution channels for their editorial products (journals, databases, and search engines). The second group is composed of small software companies or individuals with specific expertise, typically a particular technology that is packaged for commercialization. Hence, there is a highly competitive ecosystem of data visualization tools, reference managers, and collaborative text editors, bolstered by software developed as spin-off products of school projects or thesis dissertations. The third group is composed of enterprises conceived to fill the existing gap in support for scholarly activities. Although most of their products are grounded in a single category, they tend to offer a broader range of features, because their existence is not necessarily tied to existing products.
In our view, app ecosystems and software as a service (SaaS) licensing are monetization mechanisms that encourage new developers to engage in these markets. Developers will likely continue to improve usability either by integrating the functions performed by software before, during, and after submission into current tools or by creating new platforms from scratch.

The interplay between taxonomy groups drives the growth of ITC tools. For example, a study by Yu showed that the ResearchGate score can be used effectively to measure individual research performance [55], illustrating how a social media platform (category I) can also provide Scientometric indicators (category IX). Compared with subscription-based research information services (such as Elsevier's SciVal or Thomson Reuters' Research Analytics), the ResearchGate service is accessible to more researchers.

3.5. Top-Ranked ITC Tools

The five top-ranked applications from the review belong to three different categories (see Table 3): Mendeley (VI, Reference management), Web of Science (II, Academic search engines), Scopus (II, Academic search engines), Springer (II, Academic search engines), and Figshare (VIII, Data archiving). This shows that versatility in assisting the peer-reviewing process comes from very different origins. Moreover, the distribution of business models shows that connectivity is a relevant added value in business-to-business scenarios.

Table 3.

Software assisting the peer-review process: taxonomy and function.

Mendeley is a desktop application with cloud support that relies on connectivity with third-party services and on the backing of the Elsevier editorial group. In contrast, Web of Science and Scopus are commercialized under a business-to-business model, providing services to universities and other organizations, including service providers. Springer and Figshare assist authors throughout the submission process as a means of increasing the amount of information (drafts and data) submitted to their databases.

4. Conclusions

The lack of a framework of available tools limits the potential benefits of ITC tools. A theoretical framework covering the requirements of peer reviewing and the options available to meet them would encourage a more appropriate and efficient selection of ITC tools, as well as their promotion by organizational representatives. The present study revealed a spectrum of options that depends on the type of activity performed during the peer-reviewing process, and the available options differ substantially across categories. The systematic review shows that no single tool is capable of meeting all the needs of scholars during the peer-reviewing process; the best-evaluated solutions covered between 30% and 40% of the functions. The analysis within each category shows that several options can offer the core functions of a particular type of software. Moreover, this study suggests that scholars may find added value in features that fall outside a tool's nominal core category. Reference managers, academic search engines, and collaborative text editors showed the greatest convergence of features.

Although integration was assessed as a secondary trait, further studies are needed to determine the quality and usability of the software more thoroughly. We foresee that suites specifically designed to assist the peer-review process will become available. These tools will likely be integrated into web platforms to increase the productivity and visibility of research.

5. Study Limitations

When interpreting the results of this study, some limitations should be taken into consideration. First, the criteria for the taxonomy are partially biased by our interpretation of the constituent duties and tasks involved in the peer-reviewing process. Hence, the search strategy may have overlooked some tools that are relevant to a specific field of study. Second, in some cases where full-text access was unavailable, data extraction for the literature review was based solely on the abstract. Finally, the availability of certain features may vary between versions of particular ITC tools.

Supplementary Materials

The following are available online at https://www.mdpi.com/2304-6775/7/3/59/s1, File S1: Taxonomy and features of software, File S2: Complete list of reviewed software.

Author Contributions

Conceptualization, J.I.M.-L. and S.B.-G.; methodology, A.M.-L.; investigation, S.B.-G. and J.I.M.-L.; A.M.-L.; writing–review and editing, J.I.M.-L.; visualization, J.I.M.-L.; supervision, A.M.-L.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Borgman, C.L. Scholarship in the Digital Age: Information, Infrastructure, and the Internet, 1st ed.; The MIT Press: Cambridge, MA, USA, 2010; ISBN 978-0-262-51490-3.

- Matthews, J.R.; Matthews, R.W. Successful Scientific Writing: A Step-by-Step Guide for the Biological and Medical Sciences, 4th ed.; Cambridge University Press: Cambridge, UK, 2014; ISBN 978-1-107-69193-3.

- McMillan, V.E. Writing Papers in the Biological Sciences, 5th ed.; Bedford/St. Martin’s: Boston, MA, USA, 2011; ISBN 978-0-312-64971-5.

- Heard, S.B. The Scientist’s Guide to Writing: How to Write More Easily and Effectively throughout Your Scientific Career; Princeton University Press: Princeton, NJ, USA, 2016; ISBN 978-0-691-17022-0.

- Hanauer, S. How to Get Published. Available online: http://www.uta.fi/kirjasto/koulutukset/tutkijakoulutus/elsevier_seminaari_211114/Get%20Published%20Quick%20Guide_updatedurl.pdf (accessed on 12 August 2019).

- Author and Reviewer Tutorials-How to Peer Review | Springer. Available online: https://www.springer.com/gp/authors-editors/authorandreviewertutorials/howtopeerreview (accessed on 12 August 2019).

- Journal Authors. Available online: https://www.elsevier.com/authors/journal-authors (accessed on 12 August 2019).

- PLoS ONE: Accelerating the Publication of Peer-Reviewed Science. Available online: http://journals.plos.org/plosone/s/submission-guidelines (accessed on 12 August 2019).

- Morris, S.; Barnas, E.; LaFrenier, D.; Reich, M. The Handbook of Journal Publishing, 1st ed.; Cambridge University Press: Cambridge, UK; New York, NY, USA, 2013; ISBN 978-1-107-02085-6.

- Wager, E.; Kleinert, S. Cooperation Between Research Institutions and Journals on Research Integrity Cases: Guidance from the Committee on Publication Ethics COPE. Acta Inform. Med. 2012, 20, 136.

- Paltridge, B. Learning to review submissions to peer reviewed journals: How do they do it? Int. J. Res. Dev. 2013, 4, 6–18.

- PLoS ONE: Accelerating the Publication of Peer-Reviewed Science. Available online: http://journals.plos.org/plosone/s/reviewer-guidelines (accessed on 12 August 2019).

- Wiley: Wiley-Publons Pilot Program Enhances Peer-Reviewer Recognition. Available online: http://www.wiley.com/WileyCDA/PressRelease/pressReleaseId-116922.html (accessed on 12 August 2019).