Peer Review of Reviewers: The Author’s Perspective

Abstract

1. Introduction

2. Methods

3. Results and Discussion

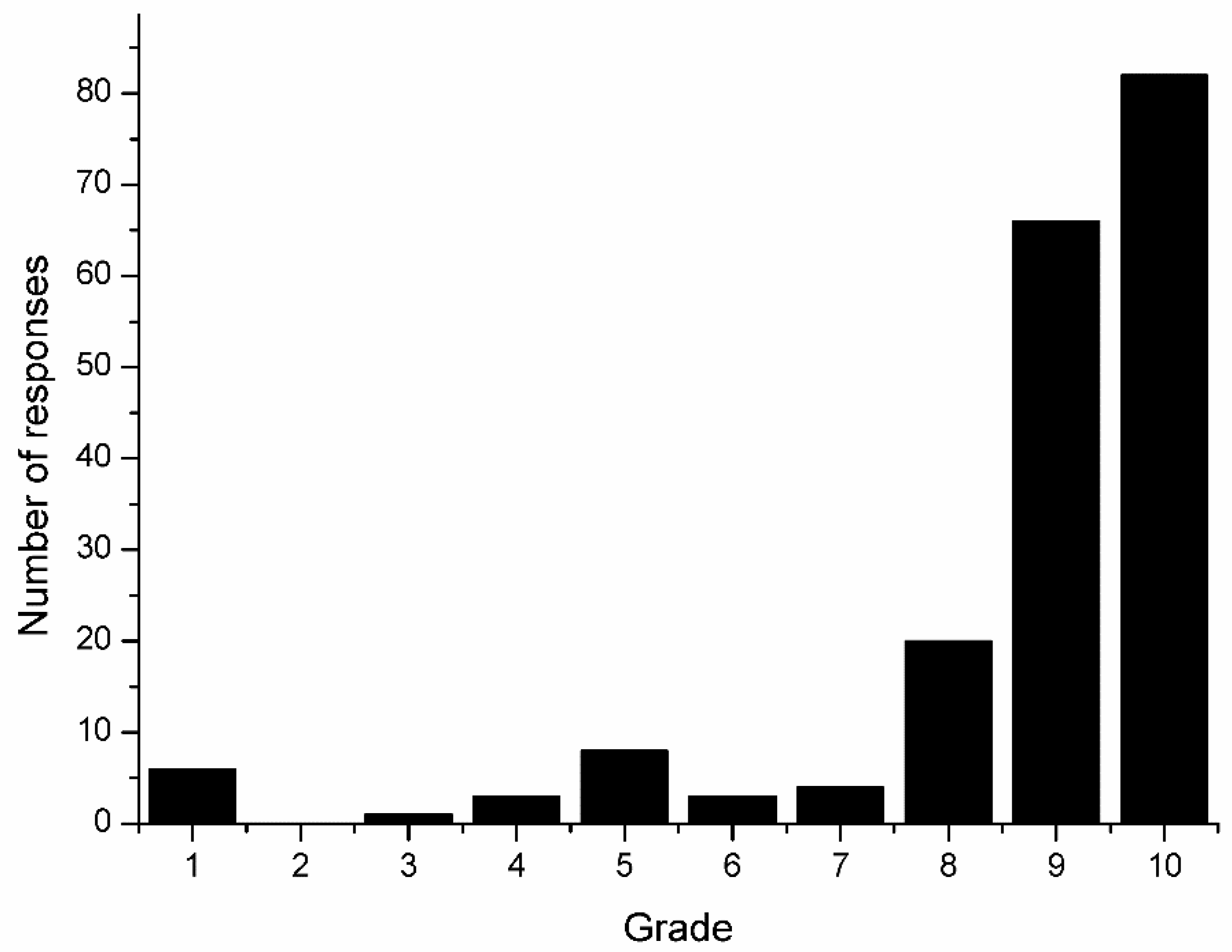

3.1. General Data

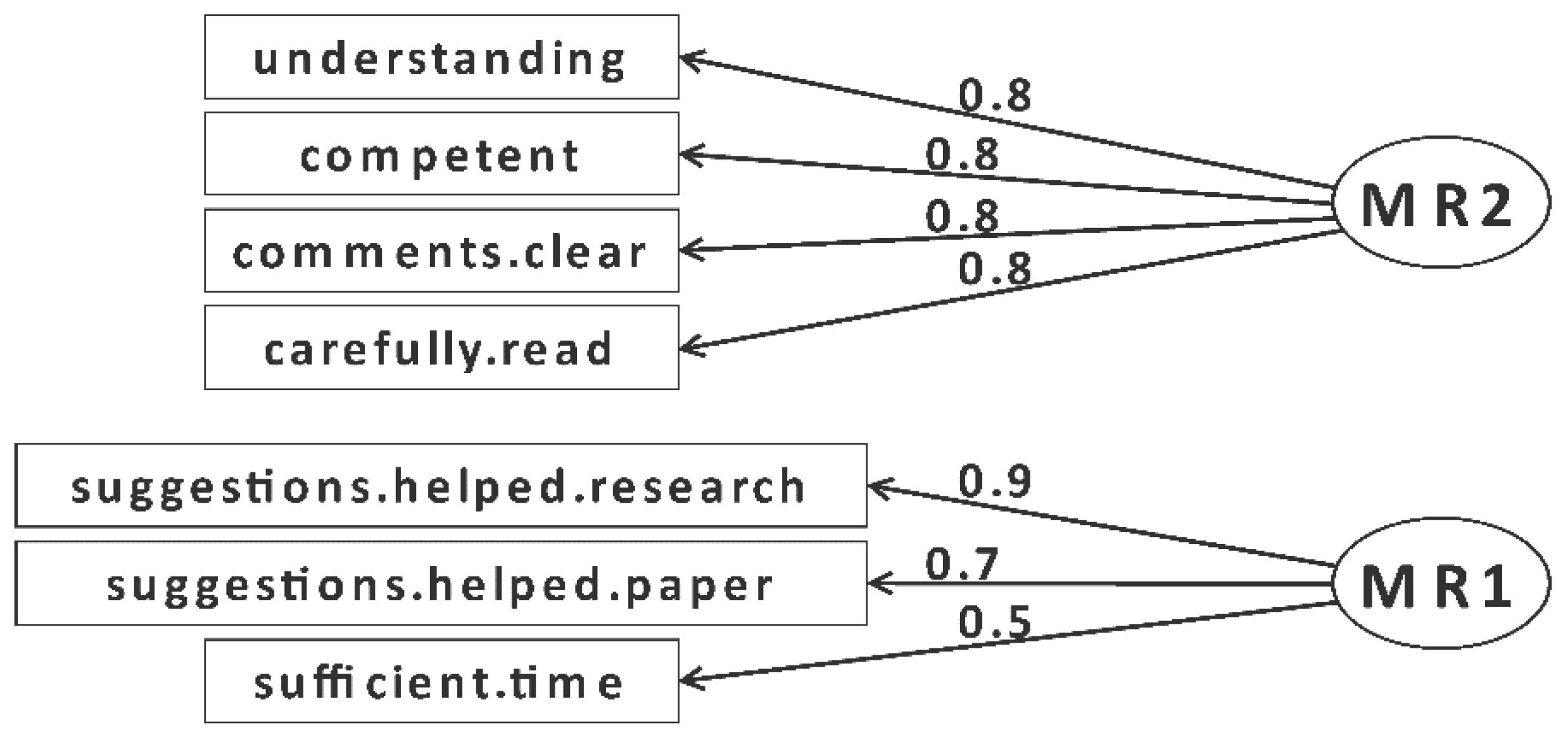

3.2. Data Analysis—Mixed-Effects Model

3.3. The Content Analysis of Textual Answers

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Statement

Conflicts of Interest


| Journal Name (ISSN) | No. of Responses | Portion, % |
|---|---|---|
| The Archives of Biological Sciences (1821-4339) | 11 | 5.7 |
| Chemical Industry & Chemical Engineering Quarterly (2217-7434) | 20 | 10.4 |
| Društvena istraživanja (1848-6096) | 2 | 1.0 |
| Hemijska industrija/Chemical Industry (2217-7426) | 7 | 3.6 |
| International Comparative Jurisprudence (2351-6674) | 2 | 1.0 |
| International Journal of the Commons (1875-0281) | 1 | 0.5 |
| Journal of Electrochemical Science and Engineering (1847-9286) | 16 | 8.3 |
| Journal of the Serbian Chemical Society (1820-7421) | 106 | 54.9 |
| Ljetopis socijalnog rada/Annual of Social Work (1848-7971) | 10 | 5.2 |
| Mljekarstvo (1846-4025) | 1 | 0.5 |
| Muzikologija (2406-0976) | 14 | 7.3 |
| Pravni zapisi (2406-1387) | 1 | 0.5 |
| Preventivna pedijatrija (2466-3247) | 2 | 1.0 |

| Question | Average Grade, Acc. (179 responses) | Average Grade, Rej. (14 responses) | Average Grade, Total (193 responses) |
|---|---|---|---|
| After how many weeks, after submitting the manuscript, did you get reports? | 8.2 | 16.1 | 8.8 |
| How many reports have you received? | 1.9 | 1.2 | 1.8 |
| Did the reviewer show reasonable understanding of your work? (1 = not at all … 5 = fully) | 4.5 | 2.6 | 4.4 |
| Do you think that the reviewer was competent to review your paper? (1 = not at all … 5 = fully competent) | 4.6 | 2.8 | 4.5 |
| According to your estimation, did the reviewer carefully and thoroughly read the paper? (1 = not at all … 5 = yes, very carefully and thoroughly) | 4.7 | 3.0 | 4.6 |
| Were the reviewer’s comments clear? (1 = not at all … 5 = yes, completely clear) | 4.7 | 2.6 | 4.6 |
| Did the reviewer’s comments, suggestions… help you to improve the quality of the paper? (1 = not at all … 5 = yes, very much) | 4.5 | 2.5 | 4.4 |
| Do you think that the reviewer’s comments, suggestions… will be useful for your upcoming research? (1 = not at all … 5 = very useful) | 4.3 | 2.5 | 4.2 |
| According to your impression, did the reviewer dedicate sufficient time to review? (1 = not at all … 5 = adequate time) | 4.5 | 3.2 | 4.4 |
| Please give an overall assessment grade of the reviewer (1 = Bad … 10 = Excellent) | 8.9 | 4.7 | 8.7 |
| Duration of the survey completion (sec) | 1164 | 1381 | 1246 |

| Random Effects: Group Name | SD 1 |
|---|---|
| Author (Intercept) | 0.968 |
| Journal (Intercept) | 0.335 |
| Residual | 1.418 |

| Fixed Effects | Estimate | SE 2 | df | t | p |
|---|---|---|---|---|---|
| (Intercept) | 8.482 | 0.471 | 156.18 | 18.023 | 0.000 *** |
| No. of weeks | −0.027 | 0.013 | 220.33 | 2.037 | 0.043 * |
| No. of reports | −0.259 | 0.131 | 155.35 | 1.974 | 0.050 * |
| Final decision: Rejected | −4.106 | 0.484 | 248.66 | 8.491 | 0.000 *** |
| Speed: On time | 1.122 | 0.352 | 189.19 | 3.188 | 0.002 ** |
| Speed: Fast | 1.317 | 0.402 | 185.89 | 3.277 | 0.001 ** |
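
As an illustration of how the fixed-effect estimates in the table above combine, the following minimal sketch computes a population-level predicted grade, with the author and journal random intercepts set to zero. It rests on several assumptions that the table itself does not state: that the response is the overall assessment grade on the 1 to 10 scale, that an accepted manuscript and a slower-than-expected review are the reference levels, and that the predictors enter in their raw units (weeks, number of reports). The function name `predicted_grade` and the level label `"slow"` are hypothetical, chosen only for this example.

```python
# Fixed-effect estimates copied from the table above.
# Assumption: "accepted" and a slower-than-expected review are the reference
# levels, since only "Rejected", "On time" and "Fast" appear as coefficients.
FIXED_EFFECTS = {
    "intercept": 8.482,
    "weeks": -0.027,     # per week until the reports arrived
    "reports": -0.259,   # per report received
    "rejected": -4.106,  # final decision: rejected
    "on_time": 1.122,    # speed: on time
    "fast": 1.317,       # speed: fast
}


def predicted_grade(weeks, reports, rejected=False, speed="slow"):
    """Population-level prediction (random intercepts set to zero)."""
    if speed not in ("slow", "on_time", "fast"):
        raise ValueError(f"unknown speed level: {speed!r}")
    grade = FIXED_EFFECTS["intercept"]
    grade += FIXED_EFFECTS["weeks"] * weeks
    grade += FIXED_EFFECTS["reports"] * reports
    if rejected:
        grade += FIXED_EFFECTS["rejected"]
    if speed != "slow":  # the hypothetical reference level adds nothing
        grade += FIXED_EFFECTS[speed]
    return grade


# Same review history, differing only in the final decision.
accepted = predicted_grade(weeks=8, reports=2, speed="on_time")
rejected = predicted_grade(weeks=8, reports=2, rejected=True, speed="on_time")
print(f"accepted: {accepted:.3f}, rejected: {rejected:.3f}")
```

Under these assumptions, two otherwise identical submissions differ by exactly the "Final decision: Rejected" coefficient, which is the largest fixed effect in the table.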

| Coefficients | Estimate | SE | t | p |
|---|---|---|---|---|
| (Intercept) | 8.052 | 0.215 | 37.479 | 0.000 *** |
| No. of weeks | −0.005 | 0.006 | −0.890 | 0.375 |
| No. of reports | −0.026 | 0.066 | −0.392 | 0.695 |
| Final decision: Rejected | −0.165 | 0.259 | −0.637 | 0.525 |
| Speed: On time | 0.738 | 0.169 | 4.369 | 0.000 *** |
| Speed: Fast | 0.838 | 0.194 | 4.321 | 0.000 *** |
| Competence factor | 1.423 | 0.067 | 21.101 | 0.000 *** |
| Helpfulness factor | 1.024 | 0.055 | 18.678 | 0.000 *** |

| Coefficients | Estimate | SE | t | p |
|---|---|---|---|---|
| (Intercept) | 0.336 | 0.237 | 1.422 | 0.157 |
| No. of weeks | −0.010 | 0.007 | −1.420 | 0.157 |
| No. of reports | −0.162 | 0.072 | −2.240 | 0.026 * |
| Final decision: Rejected | −2.173 | 0.245 | −8.867 | 0.000 *** |
| Speed: On time | 0.234 | 0.186 | 1.255 | 0.211 |
| Speed: Fast | 0.228 | 0.213 | 1.073 | 0.285 |
| Decision coherent: No | 0.298 | 0.372 | 0.801 | 0.424 |

| Coefficients | Estimate | SE | t | p |
|---|---|---|---|---|
| (Intercept) | −0.216 | 0.290 | −0.743 | 0.459 |
| No. of weeks | −0.014 | 0.008 | −1.704 | 0.090 |
| No. of reports | 0.075 | 0.089 | 0.844 | 0.400 |
| Final decision: Rejected | −0.771 | 0.301 | −2.566 | 0.011 * |
| Speed: On time | 0.233 | 0.228 | 1.020 | 0.310 |
| Speed: Fast | 0.335 | 0.261 | 1.283 | 0.201 |
| Decision coherent: No | 0.576 | 0.456 | 1.264 | 0.208 |

| Question | Average Grade (No. of Responses), Acc. | Average Grade (No. of Responses), Rej. | Average Grade (No. of Responses), Total |
|---|---|---|---|
| Did the reviewer’s comments, suggestions… help you to improve the quality of the paper? (1 = not at all … 5 = yes, very much). If you wish, please state how? | 4.6 (36) | 2.0 (4) | 4.3 (40) |
| Did you have an impression that some non-scientific factor influenced the review and the final reviewer’s suggestion? * Please explain. | Yes (5) | Yes (3) | Yes (8) |

| Category | Subcategory | No. of Responses |
|---|---|---|
| Authors’ satisfaction with reviewers’ comments and suggestions for improvement of paper quality | Improvement of the specific part of the paper | 13 |
| | General satisfaction | 7 |
| | Additional explanation | 5 |
| | Increase of actuality | 2 |
| | Benefit for future work | 2 |
| | Elimination of unnecessary data | 2 |
| | Different formulation | 2 |
| | Conceptual changes | 1 |
| Total | | 34 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Drvenica, I.; Bravo, G.; Vejmelka, L.; Dekanski, A.; Nedić, O. Peer Review of Reviewers: The Author’s Perspective. Publications 2019, 7, 1. https://doi.org/10.3390/publications7010001