Library Assessment Research: A Content Comparison from Three American Library Journals

Abstract

1. Introduction

Either service quality or satisfaction can be an end in itself; each is worthy of examination as a framework for evaluating library services from a customer’s or user’s perspective. By paying proper attention to assessment, service quality, and satisfaction, libraries are, in effect, promoting continuous quality improvement. Improvements lead to change, and library leaders must manage that change and ensure that the library’s assessment plan is realistic and realized. Both service quality and satisfaction should be part of any culture of assessment and evaluation.[1] (p. 120)

2. Literature Review

2.1. Value

2.2. Developing a Service Orientation

2.3. Measuring Quality

2.4. Assessment Trends

3. Methodology

- To what extent have site-specific, front-line library service and general satisfaction assessment studies been published by three internationally recognized, high-impact academic library journals within a recent 5-year period?

- How does the coverage of criteria-qualifying assessment content compare among the three journals?

3.1. Materials

3.2. Procedures

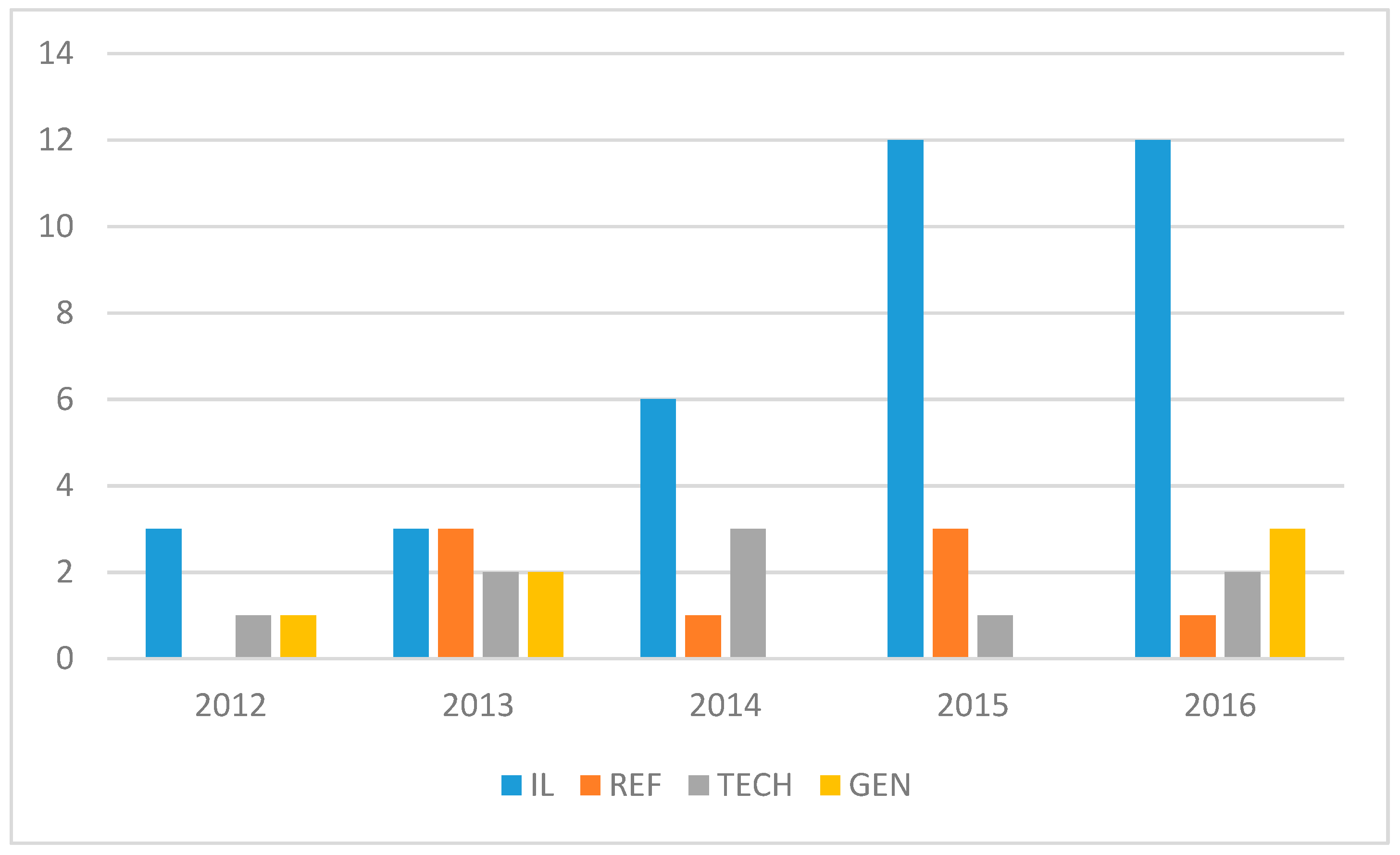

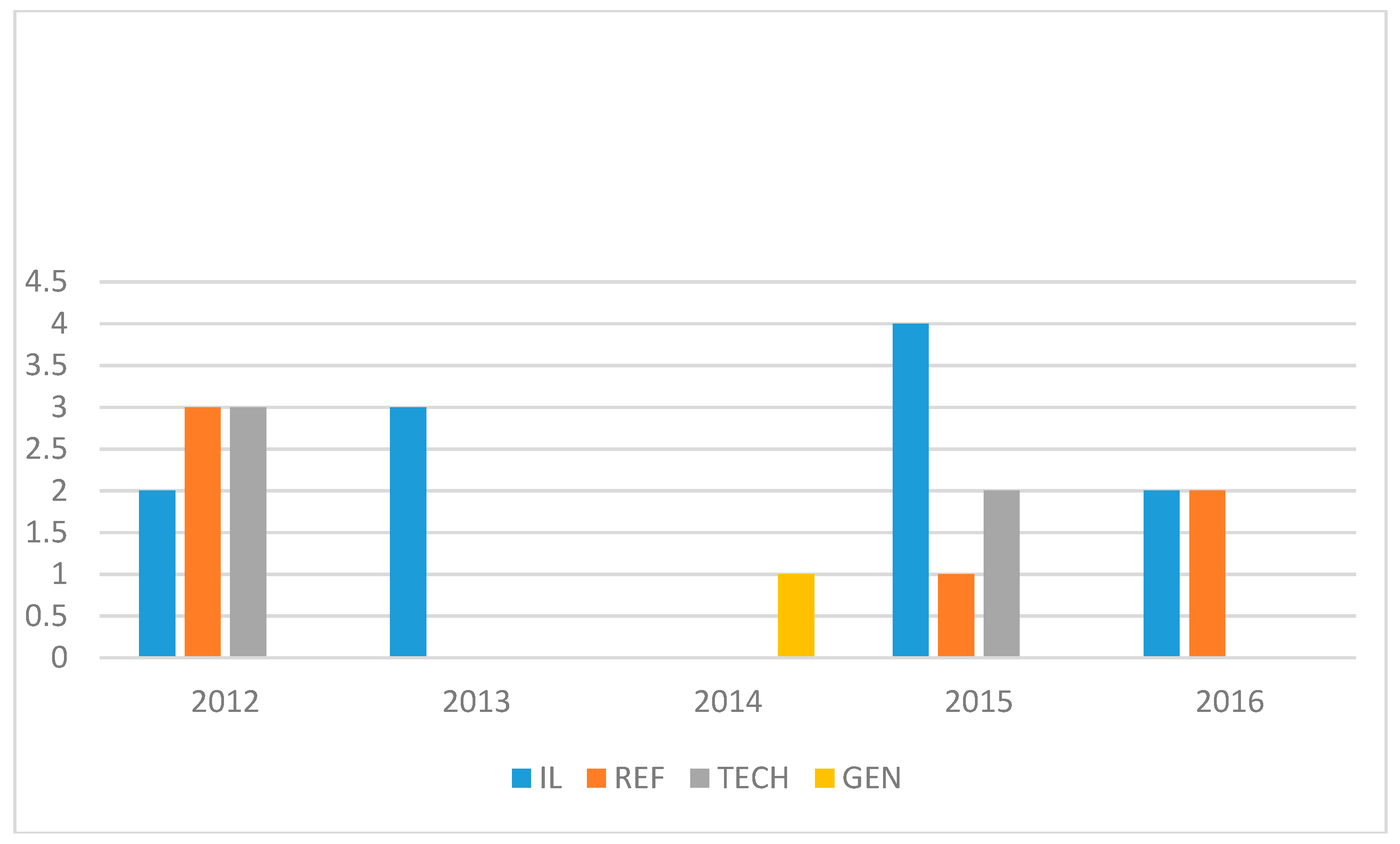

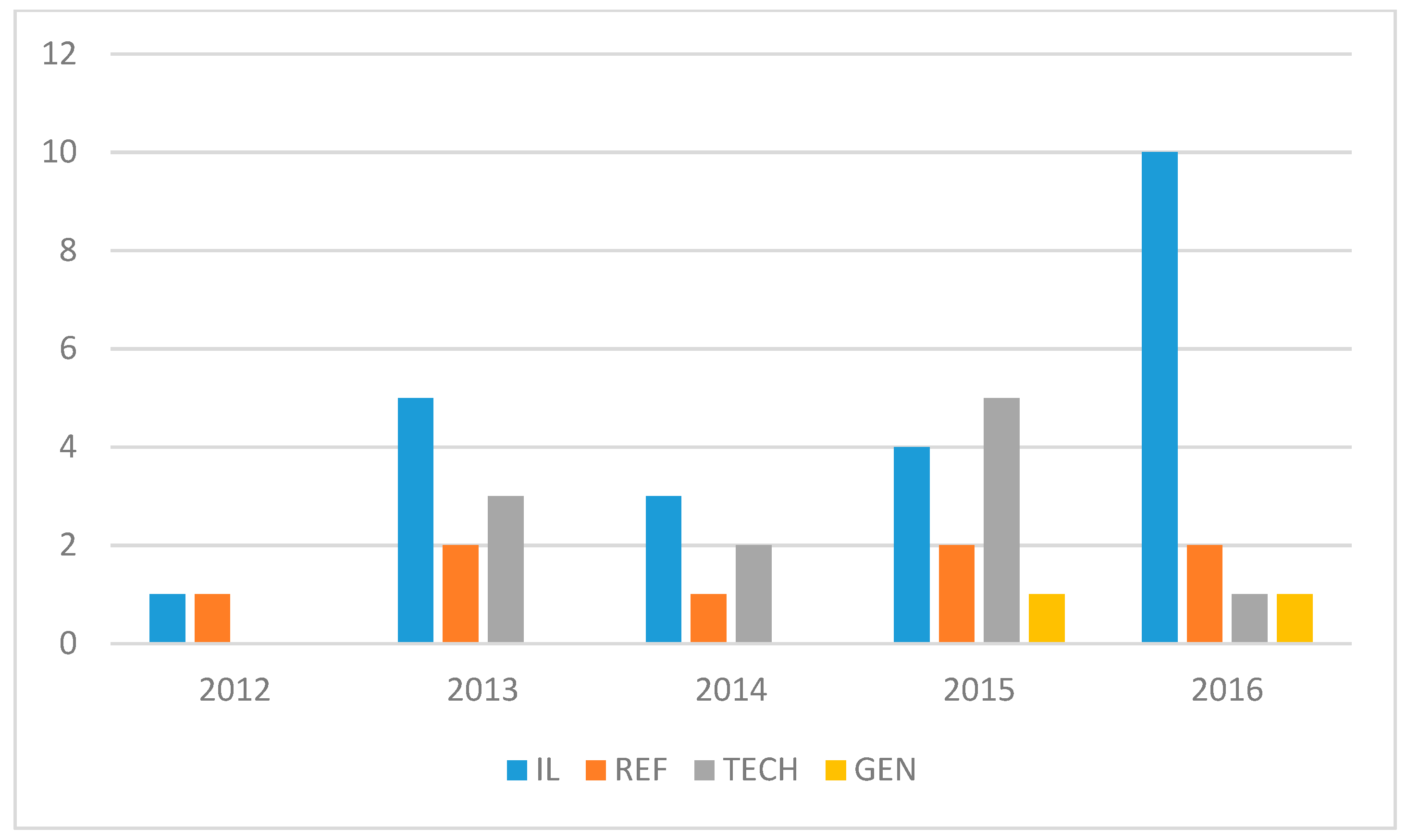

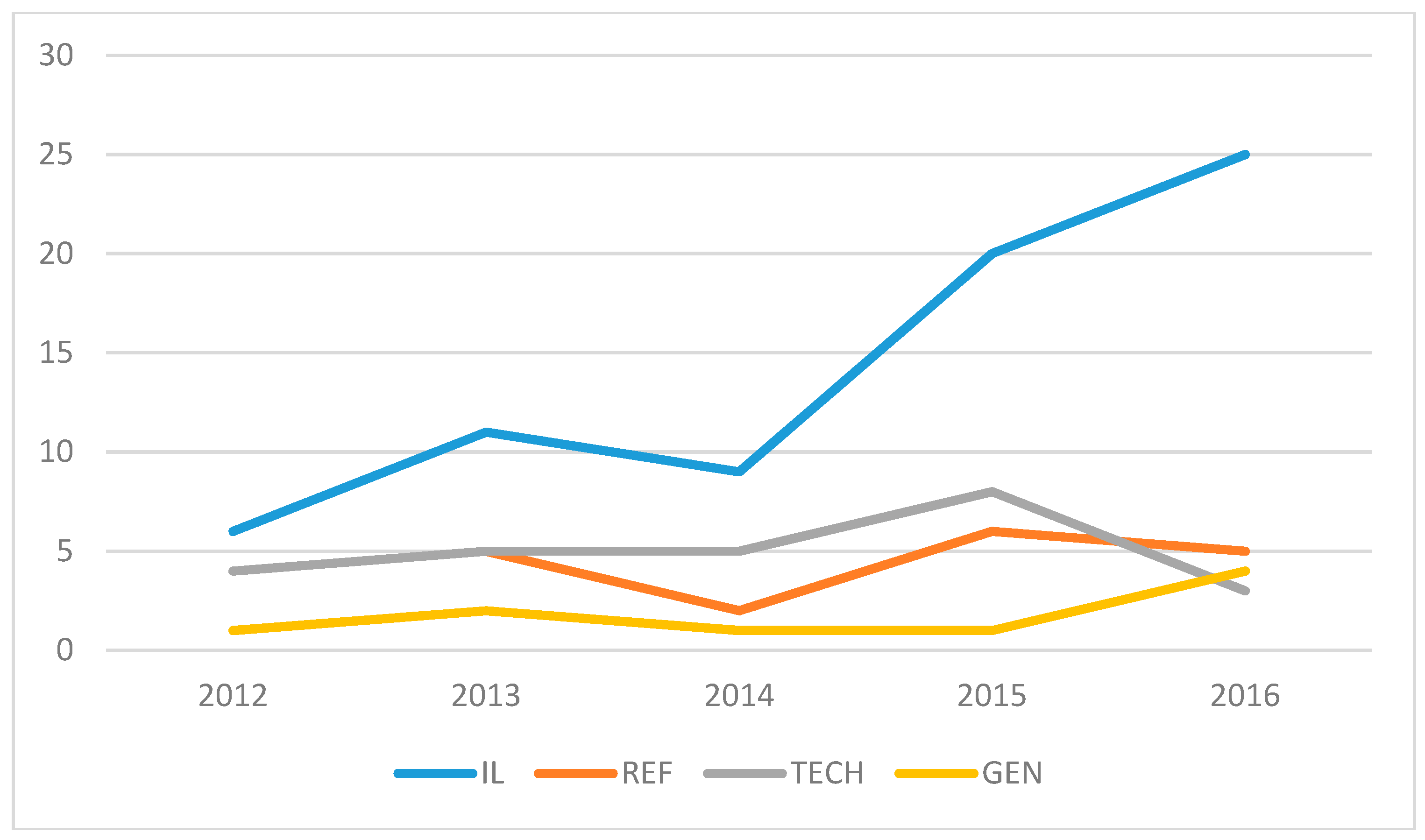

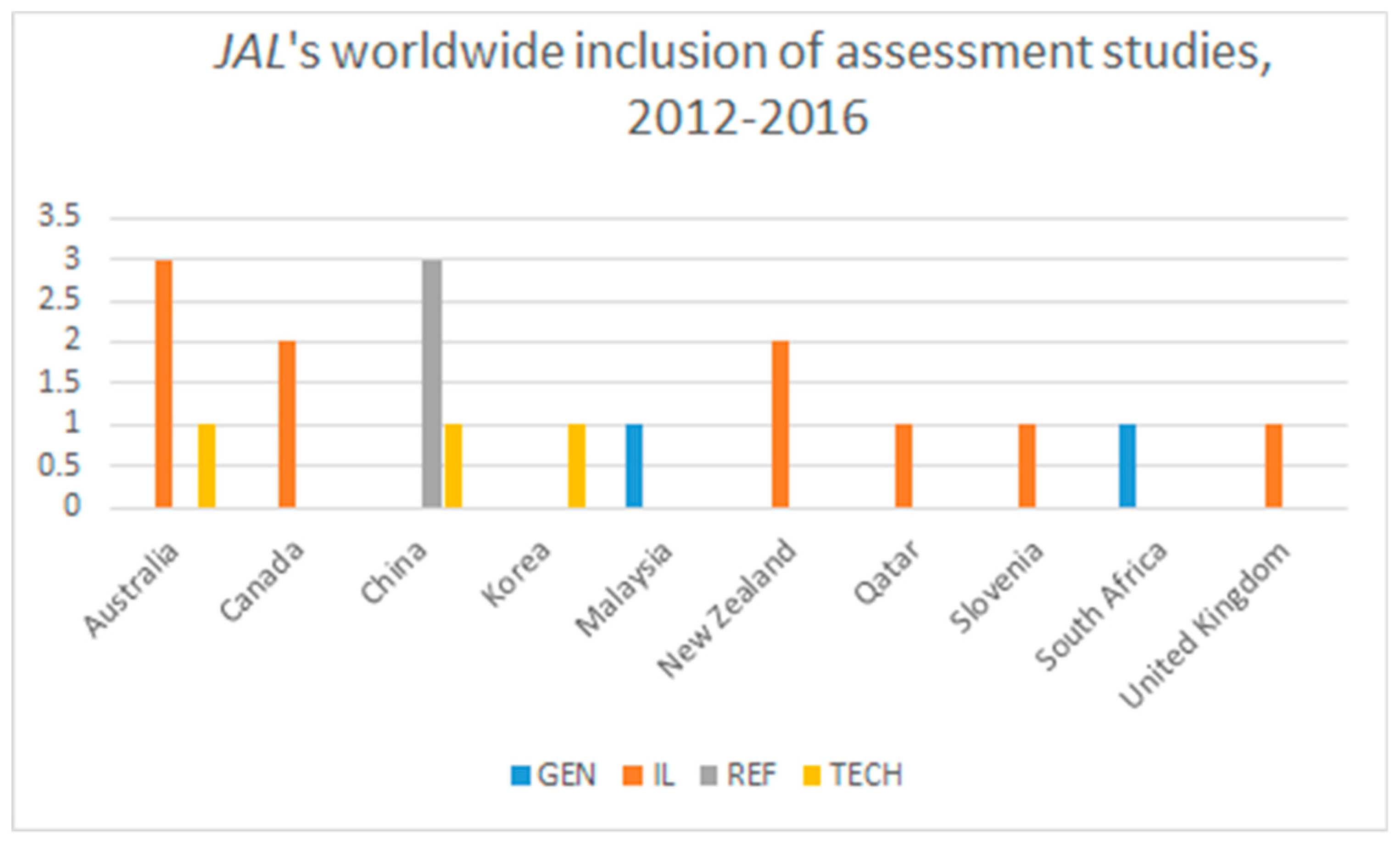

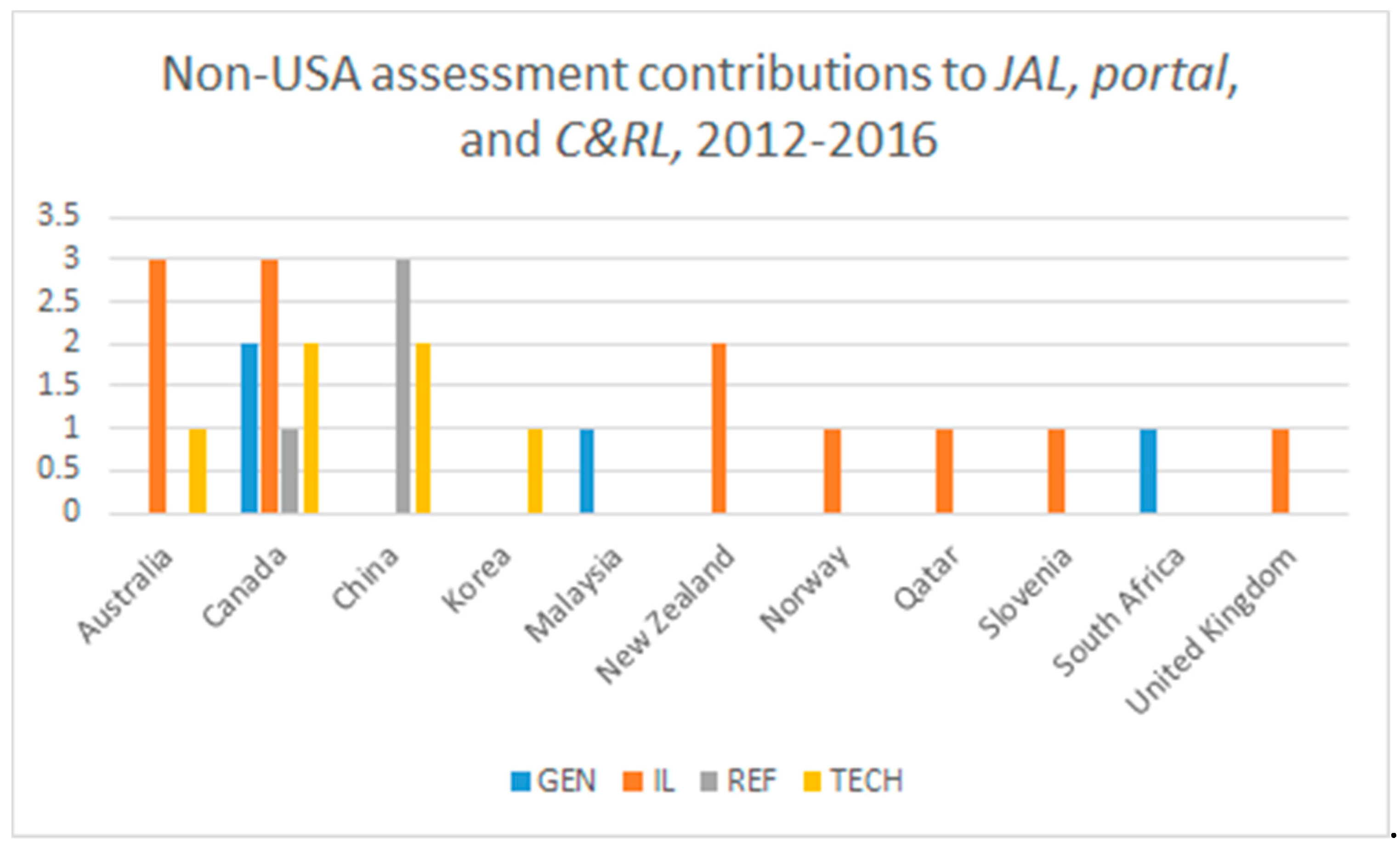

4. Findings

5. Discussion

Further Considerations

6. Conclusions

Author Contributions

Conflicts of Interest

Appendix A

| Journal Issue | Article Title (Journal of Academic Librarianship) | Service | Site |
|---|---|---|---|
| v.38n.1 (2012) | Still Digitally Divided? An Assessment of Historically Black College and University Library Web Sites | TECH | USA |
| v.38n.2 (2012) | NA | | |
| v.38n.3 (2012) | An Investigation of Affect of Service Using a LibQUAL+™ Survey and an Experimental Study | IL | USA |
| v.38n.4 (2012) | Approaches to Learning Information Literacy: A Phenomenographic Study | IL | Australia |
| v.38n.5 (2012) | Beyond the Web Tutorial: Development and Implementation of an Online, Self-Directed Academic Integrity Course at Oakland University | IL | USA |
| | Assessing the Research Needs of Graduate Students at Georgetown University | GEN | USA |
| v.39n.1 (2013) | NA | | |
| v.39n.2 (2013) | International Students’ Perception of Library Services and Information Resources in Chinese Academic Libraries | REF | China |
| | Reference Reviewed and Re-Envisioned: Revamping Librarian and Desk-Centric Services with LibStARs and LibAnswers | REF | USA |
| | Now it’s Necessary: Virtual Reference Services at Washington State University, Pullman | REF | USA |
| | Measuring the Disparities between Biology Undergraduates’ Perceptions and Their Actual Knowledge of Scientific Literature with Clickers | IL | USA |
| | Designing Authentic Learning Tasks for Online Library Instruction | IL | USA |
| v.39n.3 (2013) | Web 2.0 and Information Literacy Instruction: Aligning Technology with ACRL Standards | TECH | USA |
| | Guided and Team-Based Learning for Chemical Information Literacy | IL | USA |
| v.39n.4 (2013) | NA | | |
| v.39n.5 (2013) | Wide Awake at 4 AM: A Study of Late Night User Behavior, Perceptions and Performance at an Academic Library | GEN | USA |
| v.39n.6 (2013) | LibQUAL Revisited: Further Analysis of Qualitative and Quantitative Survey Results at the University of Mississippi | GEN | USA |
| | Developing Data Management Services at the Johns Hopkins University | TECH | USA |
| v.40n.1 (2014) | NA | | |
| v.40n.2 (2014) | Integrating Information Literacy into Academic Curricula: A Professional Development Programme for Librarians at the University of Auckland | IL | New Zealand |
| | Tying Television Comedies to Information Literacy: A Mixed-Methods Investigation | IL | USA |
| | Higher Education and Emerging Technologies: Shifting Trends in Student Usage | TECH | USA |
| v.40n.3-4 (2014) | Four Pedagogical Approaches in Helping Students Learn Information Literacy Skills | IL | USA |
| | Student Engagement in One-Shot Library Instruction | IL | USA |
| | Library Value in the Classroom: Assessing Student Learning Outcomes from Instruction and Collections | IL | USA |
| | Distance Students’ Attitude Toward Library Help Seeking | REF | USA |
| | A Library and the Disciplines: A Collaborative Project Assessing the Impact of eBooks and Mobile Devices on Student Learning | TECH | USA |
| v.40n.5 (2014) | Copyright and You: Copyright Instruction for College Students in the Digital Age | IL | USA |
| v.40n.6 (2014) | Web-based Citation Management Tools: Comparing the Accuracy of Their Electronic Journal Citations | TECH | USA |
| v.41n.1 (2015) | Library Instruction and Themed Composition Courses: An Investigation of Factors that Impact Student Learning | IL | USA |
| v.41n.2 (2015) | Language in Context: A Model of Language Oriented Library Instruction | IL | USA |
| | The Effectiveness of Online Versus In-person Library Instruction on Finding Empirical Communication Research | IL | USA |
| | NICE Evidence Search: Student Peers’ Views on their Involvement as Trainers in Peer-based Information Literacy Training | IL | UK |
| | Library Instruction for Romanized Hebrew | IL | Canada |
| | Integrating Information Literacy, the POGIL Method, and iPads into a Foundational Studies Program | IL | USA |
| v.41n.3 (2015) | Exploring Chinese Students’ Perspective on Reference Services at Chinese Academic Libraries: A Case Study Approach | REF | China |
| | A New Role of Chinese Academic Librarians—The Development of Embedded Patent Information Services at Nanjing Technology University Library, China | REF | China |
| v.41n.4 (2015) | “It’s in the Syllabus”: Identifying Information Literacy and Data Information Literacy Opportunities Using a Grounded Theory Approach | IL | USA |
| | Student, Librarian, and Instructor Perceptions of Information Literacy Instruction and Skills in a First Year Experience Program: A Case Study | IL | USA |
| | Mapping the Roadmap: Using Action Research to Develop an Online Referencing Tool | TECH | Australia |
| | Beyond Embedded: Creating an Online-Learning Community Integrating Information Literacy and Composition Courses | IL | USA |
| v.41n.5 (2015) | The Effect of a Situated Learning Environment in a Distance Education Information Literacy Course | IL | USA |
| v.41n.6 (2015) | Comparison of Native Chinese-speaking and Native English-speaking Engineering Students’ Information Literacy Challenges | IL | Canada |
| | Standing By to Help: Transforming Online Reference with a Proactive Chat System | REF | USA |
| | Faculty and Librarians’ Partnership: Designing a New Framework to Develop Information Fluent Future Doctors | IL | Qatar |
| v.42n.1 (2016) | Measuring the Effect of Virtual Librarian Intervention on Student Online Search | IL | USA |
| | Surveying Users’ Perception of Academic Library Services Quality: A Case Study in Universiti Malaysia Pahang (UMP) Library | GEN | Malaysia |
| | Research Consultation Assessment: Perceptions of Students and Librarians | REF | USA |
| | Information Behavior and Expectations of Veterinary Researchers and their Requirements for Academic Library Services | GEN | South Africa |
| v.42n.2 (2016) | Impact of Assignment Prompt on Information Literacy Performance in First-year Student Writing | IL | USA |
| v.42n.3 (2016) | Student Use of Keywords and Limiters in Web-scale Discovery Searching | IL | USA |
| | Flipped Instruction for Information Literacy: Five Instructional Cases of Academic Libraries | IL | USA |
| | Finding Sound and Score: A Music Library Skills Module for Undergraduate Students | IL | Australia |
| | A Collaborative Approach to Integrating Information and Academic Literacy into the Curricula of Research Methods Courses | IL | New Zealand |
| v.42n.4 (2016) | Information Literacy Training Evaluation: The Case of First Year Psychology Students | IL | Slovenia |
| | Use It or Lose It? A Longitudinal Performance Assessment of Undergraduate Business Students’ Information Literacy | IL | USA |
| v.42n.5 (2016) | Assessing and Serving the Workshop Needs of Graduate Students | GEN | USA |
| | Heuristic Usability Evaluation of University of Hong Kong Libraries’ Mobile Website | TECH | China |
| | A Pragmatic and Flexible Approach to Information Literacy: Findings from a Three-Year Study of Faculty-Librarian Collaboration | IL | USA |
| v.42n.6 (2016) | Assessing Graduate Level Information Literacy Instruction with Critical Incident Questionnaires | IL | USA |
| | Effects of Information Literacy Skills on Student Writing and Course Performance | IL | USA |
| | Providing Enhanced Information Skills Support to Students from Disadvantaged Backgrounds: Western Sydney University Library Outreach Program | IL | Australia |
| | User Acceptance of Mobile Library Applications in Academic Libraries: An Application of the Technology Acceptance Model | TECH | Korea |

Appendix B

| Journal Issue | Article Title (Portal: Libraries and the Academy) | Service | Site |
|---|---|---|---|
| v.12n.1 (2012) | Moving Beyond Assumptions: The Use of Virtual Reference Data in an Academic Library | REF | USA |
| | Evaluating Open Source Software for Use in Library Initiatives: A Case Study Involving Electronic Publishing | TECH | USA |
| | Guiding Design: Exposing Librarian and Student Mental Models of Research Guides | REF | USA |
| v.12n.2 (2012) | McGill Library Makes E-books Portable: E-reader Loan Service in a Canadian Academic Library | TECH | Canada |
| v.12n.3 (2012) | Performance-based Assessment in an Online Course: Comparing Different Types of Information Literacy Instruction | IL | USA |
| | Implementing the Customer Contact Center: An Opportunity to Create a Valid Measurement System for Assessing and Improving a Library’s Telephone Services | REF | USA |
| | Incoming Graduate Students in the Social Sciences: How Much Do They Really Know About Library Research? | IL | USA |
| v.12n.4 (2012) | Rising Tides: Faculty Expectations of Library Websites | TECH | USA |
| v.13n.1 (2013) | The Apprentice Researcher: Using Undergraduate Researchers’ Personal Essays to Shape Instruction and Services | IL | USA |
| v.13n.2 (2013) | NA | | |
| v.13n.3 (2013) | Talking About Information Literacy: The Mediating Role of Discourse in a College Writing Classroom | IL | USA |
| v.13n.4 (2013) | Assessing Affective Learning Using a Student Response System | IL | USA |
| v.14n.1 (2014) | NA | | |
| v.14n.2 (2014) | NA | | |
| v.14n.3 (2014) | NA | | |
| v.14n.4 (2014) | The Measureable Effects of Closing a Branch Library: Circulation, Instruction, and Service Perception | GEN | Canada |
| v.15n.1 (2015) | NA | | |
| v.15n.2 (2015) | The Student/Library Computer Science Collaborative | TECH | USA |
| v.15n.3 (2015) | Serving the Needs of Performing Arts Students: A Case Study | REF | USA |
| | “I Never Had to Use the Library in High School”: A Library Instruction Program for At-Risk Students | IL | USA |
| | Impacting Information Literacy Learning in First-Year Seminars: A Rubric-Based Evaluation | IL | USA |
| | Examining Mendeley: Designing Learning Opportunities for Digital Scholarship | TECH | USA |
| v.15n.4 (2015) | Standing Alone No More: Linking Research to a Writing Course in a Learning Community | IL | USA |
| | Learning by Doing: Developing a Baseline Information Literacy Assessment | IL | USA |
| v.16n.1 (2016) | “I Felt Like Such a Freshman”: First-Year Students Crossing the Library Threshold | IL | USA |
| | The Value of Chat Reference Services: A Pilot Study | REF | USA |
| v.16n.2 (2016) | NA | | |
| v.16n.3 (2016) | The Impact of Physically Embedded Librarianship on Academic Departments | REF | USA |
| | Assessment for One-Shot Library Instruction: A Conceptual Approach | IL | USA |
| v.16n.4 (2016) | NA | | |

Appendix C

| Journal Issue | Article Title (College & Research Libraries) | Service | Site |
|---|---|---|---|
| v.73n.1 (2012) | NA | | |
| v.73n.2 (2012) | NA | | |
| v.73n.3 (2012) | Citation Analysis as a Tool to Measure the Impact of Individual Research Consultations | REF | USA |
| v.73n.4 (2012) | Why One-shot Information Literacy Sessions Are Not the Future of Instruction: A Case for Online Credit Courses | IL | USA |
| v.73n.5 (2012) | NA | | |
| v.73n.6 (2012) | NA | | |
| v.74n.1 (2013) | NA | | |
| v.74n.2 (2013) | NA | | |
| v.74n.3 (2013) | How Users Search the Library from a Single Search Box | TECH | USA |
| | The Daily Image Information Needs and Seeking Behavior of Chinese Undergraduate Students | TECH | China |
| | Trends in Image Use by Historians and the Implications for Librarians and Archivists | TECH | USA |
| v.74n.4 (2013) | Revising the “One-Shot” through Lesson Study: Collaborating with Writing Faculty to Rebuild a Library Instruction Session | IL | USA |
| | The Research Process and the Library: First-Generation College Seniors vs. Freshmen | IL | USA |
| v.74n.5 (2013) | Instructional Preferences of First-Year College Students with Below-Proficient Information Literacy Skills: A Focus Group Study | IL | USA |
| | Paths of Discovery: Comparing the Search Effectiveness of EBSCO Discovery Service, Summon, Google Scholar, and Conventional Library Resources | IL | USA |
| | Where Do We Go from Here? Informing Academic Library Staffing through Reference Transaction Analysis | REF | USA |
| v.74n.6 (2013) | Assessment in the One-Shot Session: Using Pre- and Post-tests to Measure Innovative Instructional Strategies among First-Year Students | IL | USA |
| | Why Some Students Continue to Value Individual, Face-to-Face Research Consultations in a Technology-Rich World | REF | USA |
| v.75n.1 (2014) | Making a Case for Technology in Academia | TECH | USA |
| v.75n.2 (2014) | They CAN and They SHOULD: Undergraduates Providing Peer Reference and Instruction | REF | USA |
| v.75n.3 (2014) | | | |
| v.75n.4 (2014) | Undergraduates’ Use of Social Media as Information Sources | IL | USA |
| v.75n.5 (2014) | Faculty Usage of Library Tools in a Learning Management System | TECH | USA |
| | Plagiarism Awareness among Students: Assessing Integration of Ethics Theory into Library Instruction | IL | USA |
| v.75n.6 (2014) | The Whole Student: Cognition, Emotion, and Information Literacy | IL | USA |
| v.76n.1 (2015) | “Pretty Rad”: Explorations in User Satisfaction with a Discovery Layer at Ryerson University | TECH | Canada |
| | Maximizing Academic Library Collections: Measuring Changes in Use Patterns Owing to EBSCO Discovery Service | TECH | USA |
| v.76n.2 (2015) | An Information Literacy Snapshot: Authentic Assessment across the Curriculum | IL | USA |
| v.76n.3 (2015) | Question-Negotiation and Information Seeking in Libraries | REF | USA |
| | The Role of the Academic Library in Promoting Student Engagement in Learning | IL | USA |
| v.76n.4 (2015) | Library Catalog Log Analysis in E-book Patron-Driven Acquisitions (PDA): A Case Study | TECH | USA |
| | The Perceived Impact of E-books on Student Reading Practices: A Local Study | TECH | USA |
| | Universal Design for Learning (UDL) in the Academic Library: A Methodology for Mapping Multiple Means of Representation in Library Tutorials | TECH | USA |
| v.76n.5 (2015) | Degrees of Impact: Analyzing the Effects of Progressive Librarian Course Collaborations on Student Performance | IL | USA |
| v.76n.6 (2015) | Getting More Value from the LibQUAL+® Survey: The Merits of Qualitative Analysis and Importance-Satisfaction Matrices in Assessing Library Patron Comments | GEN | Canada |
| | Integrating Library Instruction into the Course Management System for a First-Year Engineering Class: An Evidence-Based Study Measuring the Effectiveness of Blended Learning on Students’ Information Literacy Levels | IL | Canada |
| | Changes in Reference Question Complexity Following the Implementation of a Proactive Chat System: Implications for Practice | REF | USA |
| v.77n.1 (2016) | Metadata Effectiveness in Internet Discovery: An Analysis of Digital Collection Metadata Elements and Internet Search Engine Keywords | TECH | USA |
| | Exploring Peer-to-Peer Library Content and Engagement on a Student-Run Facebook Group | REF | USA |
| v.77n.2 (2016) | Examining the Relationship between Faculty-Librarian Collaboration and First-Year Students’ Information Literacy Abilities | IL | USA |
| | Assessing the Value of Course-Embedded Information Literacy on Student Learning and Achievement | IL | USA |
| | Personal Librarian for Aboriginal Students: A Programmatic Assessment | REF | Canada |
| | Mixed or Complementary Messages: Making the Most of Unexpected Assessment Results | IL | USA |
| | Making Strategic Decisions: Conducting and Using Research on the Impact of Sequenced Library Instruction | IL | USA |
| | Identifying and Articulating Library Connections to Student Success | GEN | USA |
| | Beyond the Library: Using Multiple, Mixed Measures Simultaneously in a College-Wide Assessment of Information Literacy | IL | USA |
| v.77n.3 (2016) | The Librarian Leading the Machine: A Reassessment of Library Instruction Methods | IL | USA |
| v.77n.4 (2016) | Academic Librarians in Data Information Literacy Instruction: A Case Study in Meteorology | IL | Norway |
| v.77n.5 (2016) | Undergraduates’ Use of Google vs. Library Resources: A Four-Year Cohort Study | IL | USA |
| v.77n.6 (2016) | A Novel Assessment Tool for Quantitative Evaluation of Science Literature Search Performance: Application to First-Year and Senior Undergraduate Biology Majors | IL | USA |
| | Assessing the Scope and Feasibility of First-Year Students’ Research Paper Topics | IL | USA |

References

- Hernon, P.; Dugan, R.E. An Action Plan for Outcomes Assessment in Your Library; American Library Association: Chicago, IL, USA; London, UK, 2002; ISBN 0-8389-0813-6.

- Oakleaf, M. The Value of Academic Libraries: A Comprehensive Research Review and Report. Available online: http://www.ala.org/acrl/sites/ala.org.acrl/files/content/issues/value/val_report.pdf (accessed on 6 December 2017).

- Lakos, A.; Phipps, S.E. Creating a culture of assessment: A catalyst for organizational change. Portal Libr. Acad. 2004, 4, 345–361.

- Albert, A.B. Communicating library value—The missing piece of the assessment puzzle. J. Acad. Librariansh. 2014, 40, 634–637.

- Oakleaf, M. Building the assessment librarian guildhall: Criteria and skills for quality assessment. J. Acad. Librariansh. 2013, 39, 126–128.

- The Association of College and Research Libraries (ACRL). Proficiencies for Assessment Librarians and Coordinators. Available online: http://www.ala.org/acrl/standards/assessment_proficiencies (accessed on 11 December 2017).

- Nitecki, D.A.; Wiggins, J.; Turner, N.B. Assessment is not enough for libraries to be valued. Perform. Meas. Metr. 2015, 16, 197–210.

- Town, J.S. Value, impact, and the transcendent library: Progress and pressures in performance measurement and evaluation. Libr. Q. 2011, 81, 111–125.

- Thompson, J. Redirection in Academic Library Management; Library Association: London, UK, 1991; ISBN 0851574688.

- Heath, F. Library assessment: The way we have grown. Libr. Q. 2011, 81, 7–25.

- Kyrillidou, M.; Cook, C. The evolution of measurement and evaluation of libraries: A perspective from the Association of Research Libraries. Libr. Trends 2008, 56, 888–909.

- Wang, H. From “user” to “customer”: TQM in academic libraries? Libr. Manag. 2006, 27, 606–620.

- McElderry, S. Readers and resources: Public services in academic and research libraries, 1876–1976. Coll. Res. Libr. 1976, 37, 408–420.

- Coleman, V.; Xiao, Y.; Blair, L.; Chollett, B. Toward a TQM paradigm: Using SERVQUAL to measure library service quality. Coll. Res. Libr. 1997, 58, 237–249.

- Hiller, S. Another tool in the assessment toolbox: Integrating LIBQUAL+™ into the University of Washington Libraries assessment program. J. Libr. Adm. 2004, 40, 121–137.

- Hiller, S.; Kyrillidou, M.; Self, J. When the evidence is not enough: Organizational factors that influence effective and successful library assessment. Perform. Meas. Metr. 2008, 9, 223–230.

- Farkas, M.; Hinchcliffe, L.; Houk, A. Bridges and barriers: Factors influencing a culture of assessment in academic libraries. Coll. Res. Libr. 2015, 76, 150–169.

- McClure, C.R.; Samuels, A.R. Factors affecting the use of information for academic library decision making. Coll. Res. Libr. 1985, 46, 483–498.

- Koufogiannakis, D.; Kloda, L.; Pretty, H. A big step forward: It’s time for a database of evidence summaries in library and information practice. Evid. Based Libr. Inf. Pract. 2016, 11, 92–95.

- Hufford, J.R. A review of the literature on assessment in academic and research libraries, 2005 to August 2011. Portal Libr. Acad. 2013, 13, 5–35.

- American Library Association. Value of Academic Libraries Report. Available online: http://www.acrl.ala.org/value/?page_id=21 (accessed on 7 December 2017).

- Oakleaf, M.; Whyte, A.; Lynema, E.; Brown, M. Academic libraries & institutional learning analytics: One path to integration. J. Acad. Librariansh. 2017, 43, 454–461.

- Crawford, G.A.; Feldt, J. An analysis of the literature on instruction in academic libraries. Ref. User Serv. Assoc. 2007, 46, 77–88.

- Luo, L.; McKinney, M. JAL in the past decade: A comprehensive analysis of academic library research. J. Acad. Librariansh. 2015, 41, 123–129.

- Mahraj, K. Reference Services Review: Content analysis, 2006–2011. Ref. Serv. Rev. 2012, 40, 182–198.

- Clark, K.W. Reference Services Review: Content analysis, 2012–2014. Ref. Serv. Rev. 2016, 44, 61–75.

- The Association of College and Research Libraries (ACRL). RoadShows. Available online: http://www.ala.org/acrl/conferences/roadshows (accessed on 4 January 2018).

- Documented Library Contributions to Student Learning and Success: Building Evidence with Team-Based Assessment in Action Campus Projects. Available online: http://www.ala.org/acrl/sites/ala.org.acrl/files/content/issues/value/contributions_y2.pdf (accessed on 11 December 2017).

- Guide for Authors—The Journal of Academic Librarianship. Available online: https://www.elsevier.com/journals/the-journal-of-academic-librarianship/0099-1333/guide-for-authors (accessed on 5 August 2017).

- Portal: Libraries and the Academy. Available online: https://www.press.jhu.edu/journals/portal-libraries-and-academy (accessed on 5 August 2017).

- Submissions. Available online: http://crl.acrl.org/index.php/crl/about/submissions#authorGuidelines (accessed on 3 January 2018).

- Krippendorff, K. Content Analysis: An Introduction to Its Methodology, 2nd ed.; Sage Publications: Thousand Oaks, CA, USA, 1980; ISBN 0-7619-1544-3.

- Stemler, S.E. A comparison of consensus, consistency, and measurement approaches to estimating interrater reliability. Pract. Assess. Res. Eval. 2004, 9, 1–11.

- Oakleaf, M. Using rubrics to assess information literacy: An examination of methodology and interrater reliability. J. Assoc. Inf. Sci. Technol. 2009, 60, 969–983.

- Davies, K.; Thiele, J. Library research: A domain comparison of two library journals. Community Jr. Coll. Libr. 2013, 19, 1–9.

- VanScoy, A.; Fontana, C. How reference and information science is studied: Research approaches and methods. Libr. Inf. Sci. Res. 2016, 38, 94–100.

- Koufogiannakis, D.; Slater, L.; Crumley, E. A content analysis of librarianship research. J. Inf. Sci. 2004, 30, 227–239.

- Noh, Y. Imagining library 4.0: Creating a model for future libraries. J. Acad. Librariansh. 2015, 41, 786–797.

- Booth, A. Is there a future for evidence based library and information practice? Evid. Based Libr. Inf. Pract. 2011, 6, 22–27.

- Erlinger, A. Outcomes assessment in undergraduate information literacy instruction: A systematic review. Coll. Res. Libr. 2017. accepted.

| Study & Publication | Information Literacy | Reference | Technology |
|---|---|---|---|
| Mahraj (2006–2011) | | | |
| RSR | 49% | 28% | 18% |
| Qualifying articles | 119 | 68 | 43 |
| Clark (2012–2014) | | | |
| RSR | 47% | 12% | 30% |
| Qualifying articles | 54 | 14 | 35 |
| Current study (2012–2016) | | | |
| JAL | 61% | 14% | 15% |
| Qualifying studies | 36 | 8 | 9 |
| Current study (2012–2016) | | | |
| portal | 48% | 25% | 27% |
| Qualifying studies | 11 | 6 | 5 |
| Current study (2012–2016) | | | |
| CRL | 52% | 18% | 25% |
| Qualifying studies | 23 | 8 | 11 |
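The percentages in the table above appear to be each category's share of a journal's qualifying studies, rounded to whole numbers. The following is a minimal sketch of that arithmetic for the JAL row, not the authors' procedure. The function name `category_shares` is hypothetical, and the GEN count of 6 is an assumption taken from a hand tally of the GEN rows in Appendix A, since the table reports only three of the service categories.

```python
def category_shares(counts):
    """Return each category's share of the total as a whole-number percentage."""
    total = sum(counts.values())
    return {category: round(100 * n / total) for category, n in counts.items()}


# IL, REF, and TECH counts come from the JAL row of the table above.
# The GEN count (6) is an assumption: a hand tally of GEN rows in Appendix A.
jal_counts = {"IL": 36, "REF": 8, "TECH": 9, "GEN": 6}

shares = category_shares(jal_counts)
for category, pct in shares.items():
    print(f"{category}: {pct}%")
```

Under these assumptions the three reported categories come out at 61%, 14%, and 15%, which agrees with the JAL row. The remaining share belongs to the general satisfaction (GEN) studies that the table does not list.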

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Allen, E.J.; Weber, R.K.; Howerton, W. Library Assessment Research: A Content Comparison from Three American Library Journals. Publications 2018, 6, 12. https://doi.org/10.3390/publications6010012
