Open Access and the Changing Landscape of Research Impact Indicators: New Roles for Repositories

Abstract

1. Scholarly Communication Online and the Crisis of Traditional Measures

2. New Tools and Venues in an Open Access Scenario

- (1) Open access citation advantage ranges between 25% and 250%, depending on the discipline. This is a quality advantage driven by the intrinsic value of works and not by the mere fact of being freely available [20];

- (2) Open access, whether through voluntary self-archiving or mandatory deposit, has a positive effect on the scientific impact of new articles [14];

- (3) There may be a correlation between research dissemination through the social web and downloads from repositories [21];

- (4) HTML/PDF paper downloads, coupled with social media shares, are a better indicator of public interest than absolute usage statistics [22];

- (5) As supplementary indicators, scholarly altmetrics can show the added value of open access content, and this could give authors a new incentive to increase their repository self-archiving rates [23];

- (6)

- (7) Academic citations are often closely related to bookmarks in social reference management tools [26];

- (8) Bookmarking services (Mendeley, ResearchGate, CiteULike) are increasingly used by scholars and are a better measure of scholarly interest than social media (Twitter, Facebook), where public interest may be stronger [22];

- (9) Author blog posts are especially meaningful for the scientific community insofar as they provide commentary on and critique of newly published articles, thus enriching the original works.

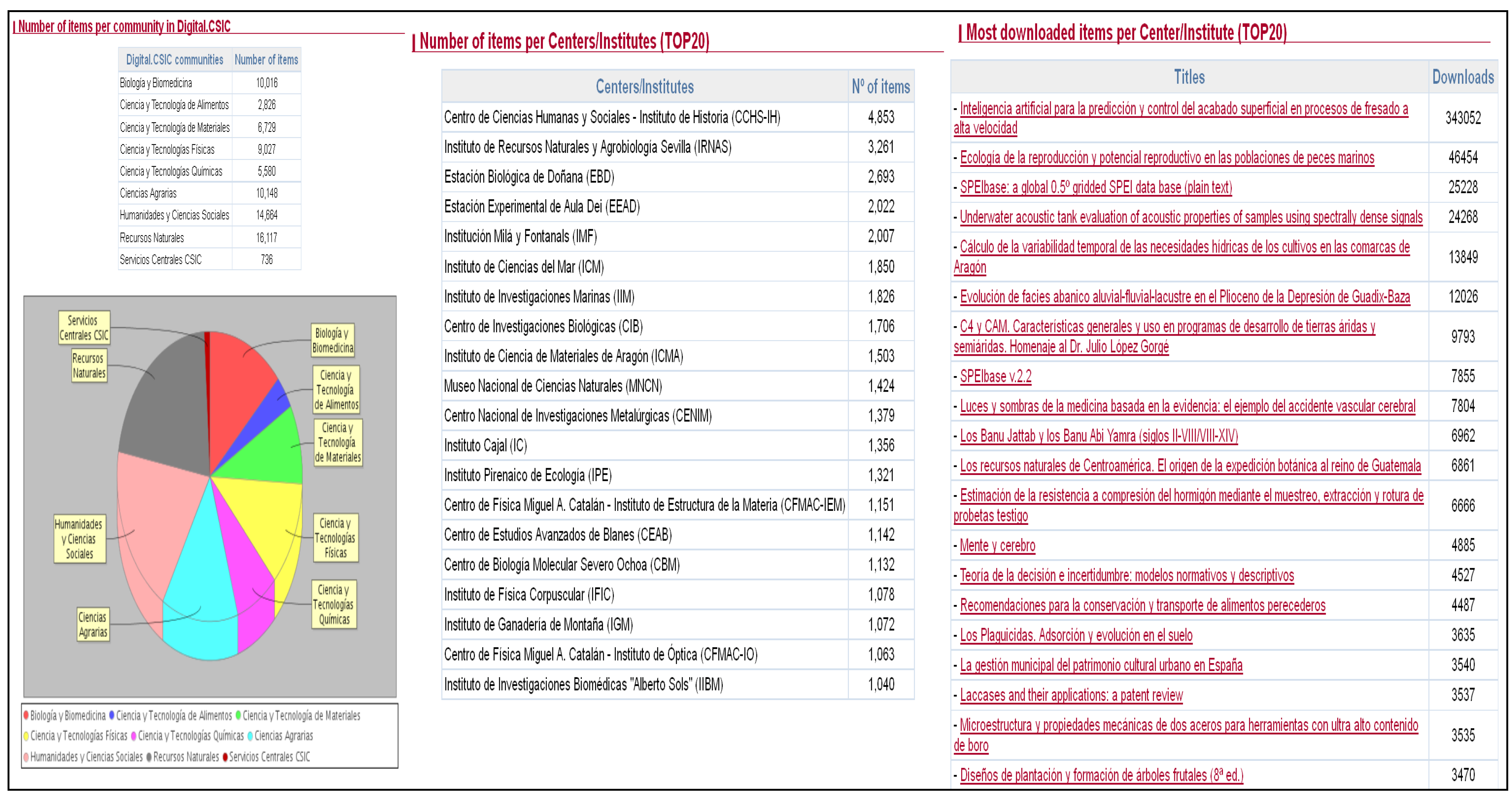

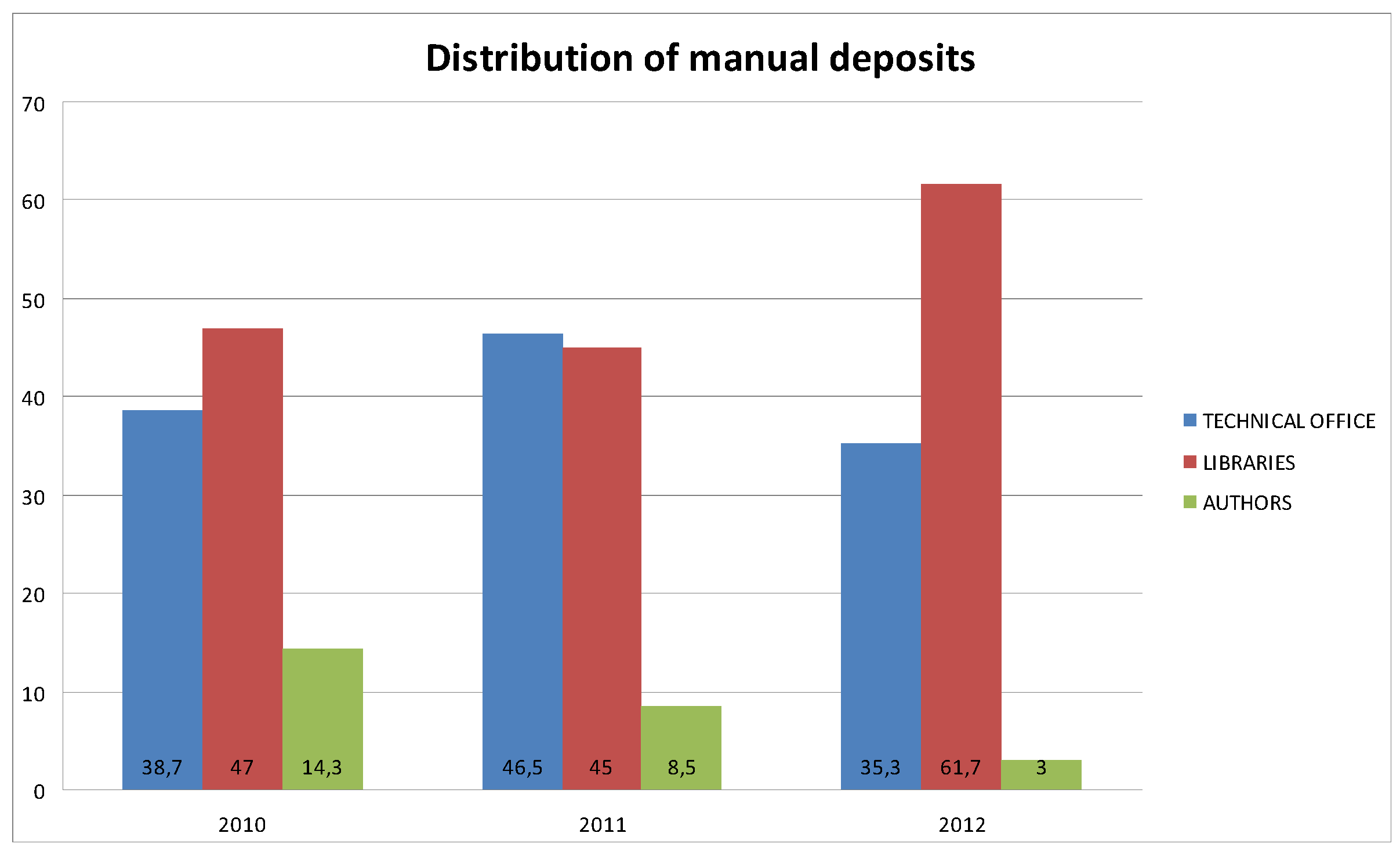

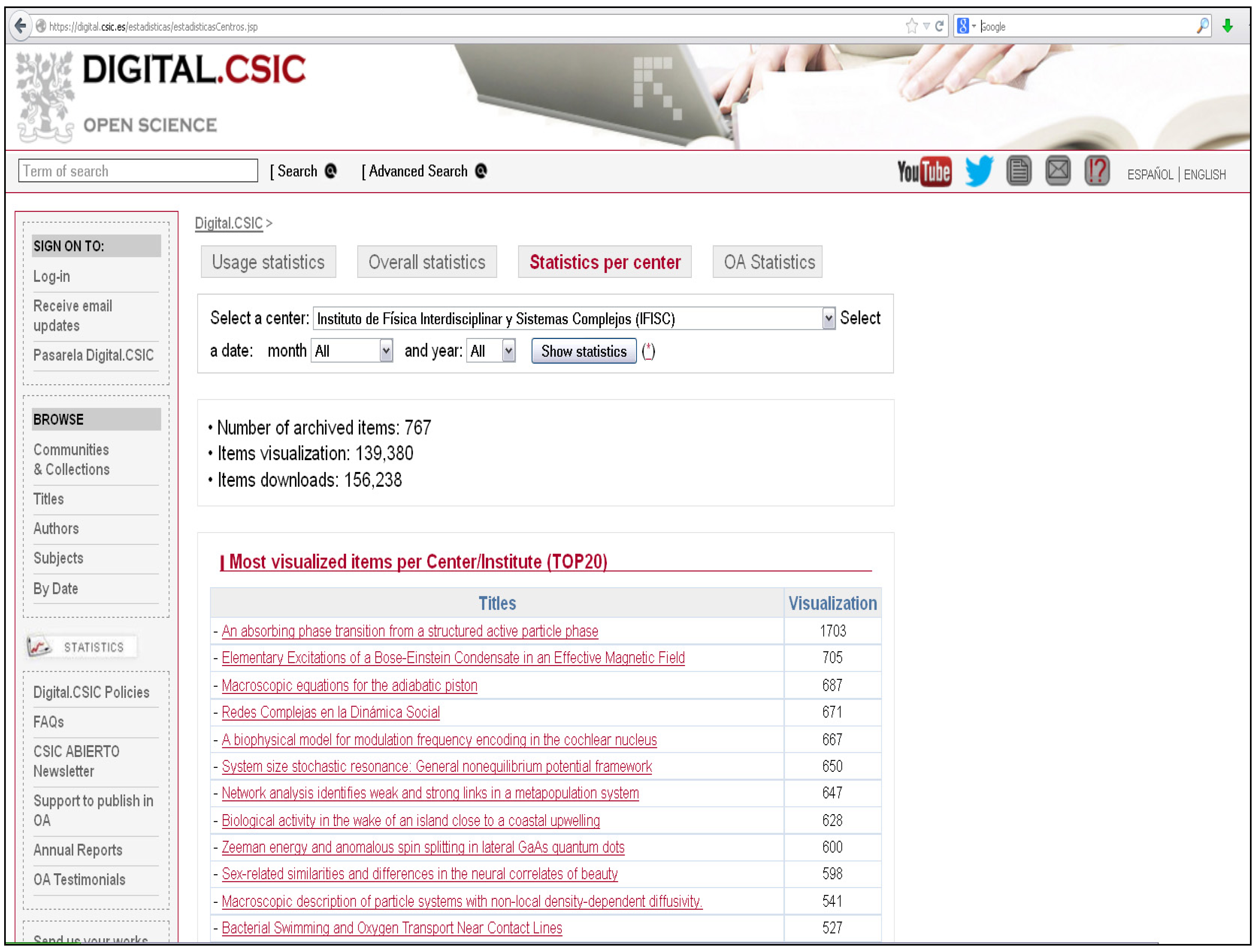

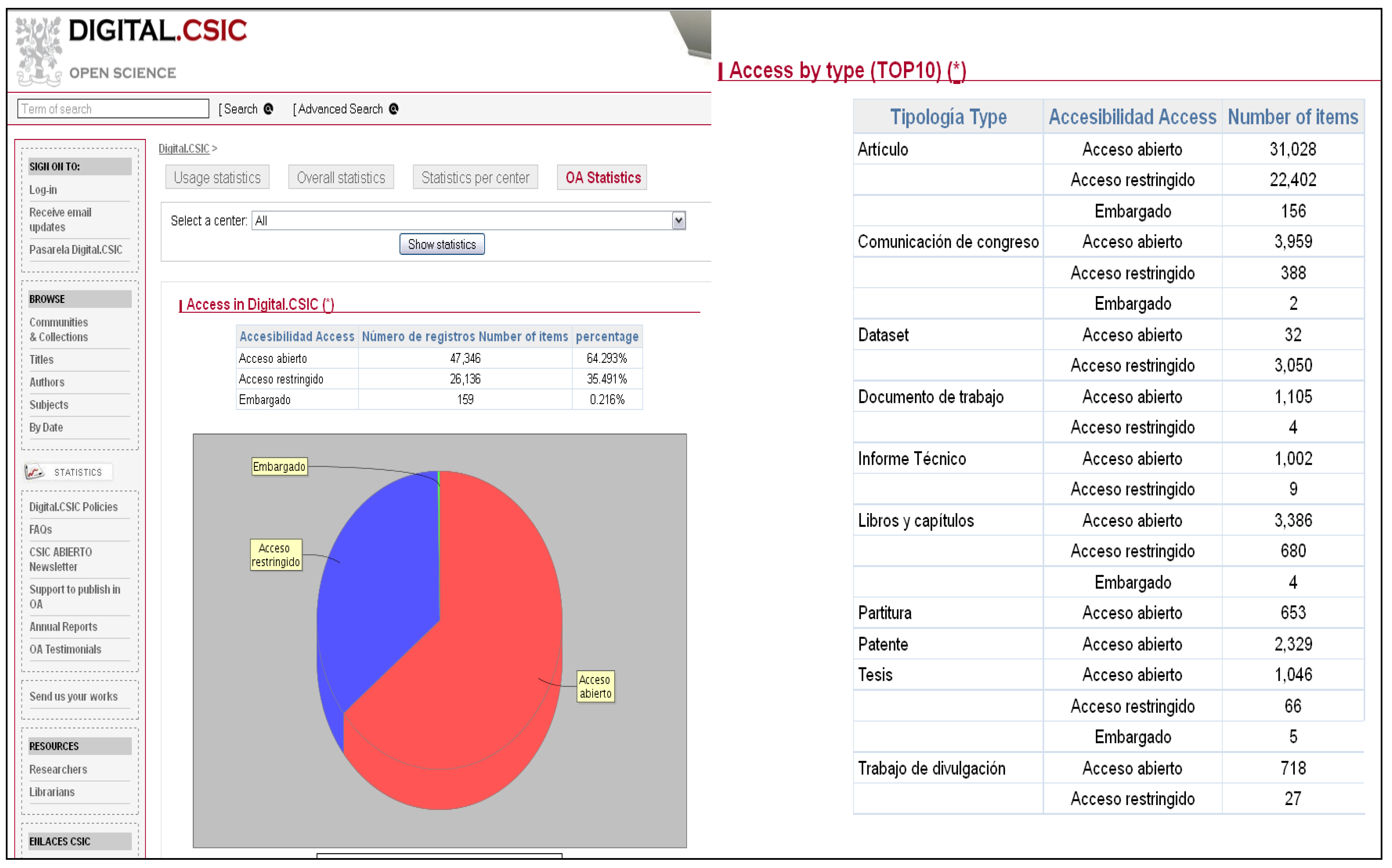

3. Analyzing Impact Indicators at DIGITAL.CSIC: An Institutional Repository Case

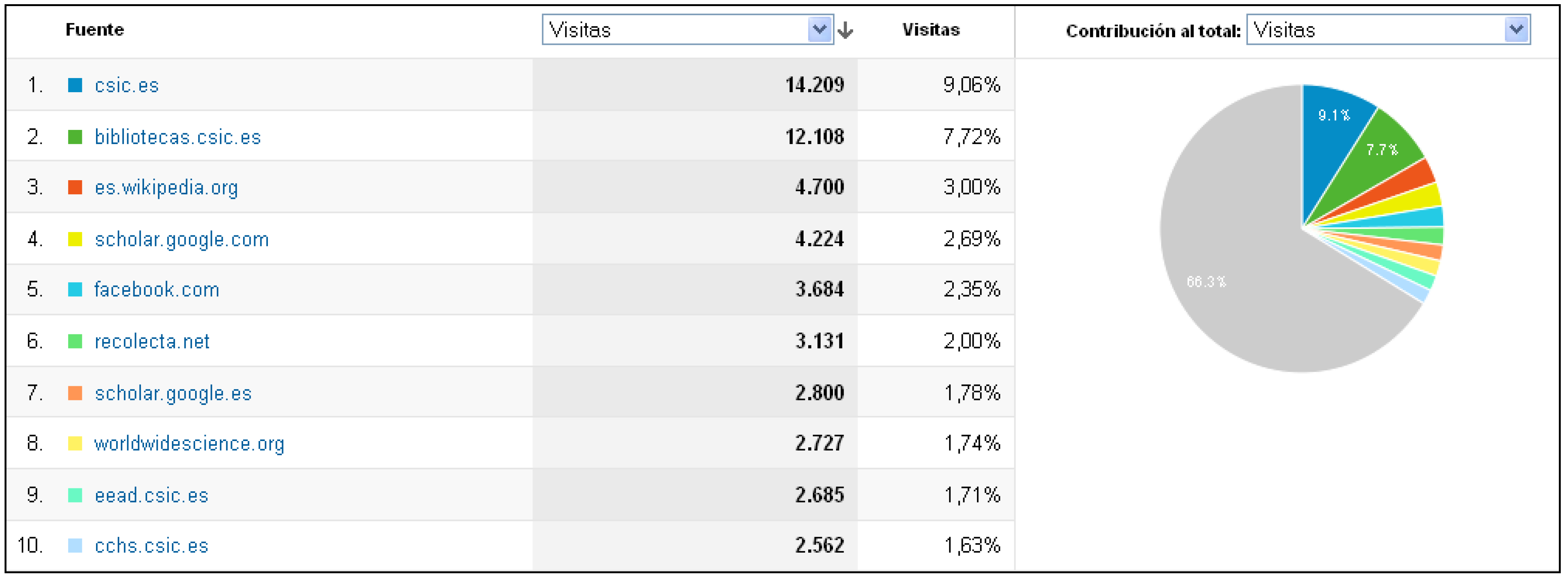

3.1. Statistics

| CSIC institute | Open access items at DIGITAL.CSIC, % (n) | Restricted access items at DIGITAL.CSIC, % (n) | Full-text downloads from DIGITAL.CSIC |
|---|---|---|---|
| Estación Experimental de Aula Dei (EEAD) | 95% (1,846) | 5% (87) | 744,277 |
| Centro de Ciencias Humanas y Sociales–Instituto de Historia (CCHS-IH) | 36% (3,067) | 64% (1,715) | 648,021 |
| Instituto de Ciencias del Patrimonio (INCIPIT) | 99.5% (626) | 0.4% (3) | 388,877 |
| Institución Milá y Fontanals (IMF) | 95% (1,877) | 4.7% (93) | 388,325 |
| Instituto de Ciencias de la Construcción Eduardo Torroja (IETCC) | 58% (354) | 41.5% (254) | 410,977 |

| CSIC institute | Number of items in DIGITAL.CSIC | Downloads from DIGITAL.CSIC | Open access items in DIGITAL.CSIC, % (n) |
|---|---|---|---|
| Centro de Ciencias Humanas y Sociales–Instituto de Historia (CCHS-IH) | 4,784 | 648,021 | 36% (3,067) |
| Instituto de Recursos Naturales y Agrobiología Sevilla (IRNAS) | 3,082 | 196,327 | 87% (2,084) |
| Estación Biológica de Doñana (EBD) | 2,633 | 178,604 | 84.4% (2,223) |
| Institución Milá y Fontanals (IMF) | 1,971 | 388,325 | 95% (1,877) |
| Estación Experimental de Aula Dei (EEAD) | 1,937 | 744,277 | 95% (1,846) |
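The table above orders institutes by collection size, yet the largest collection is not the most downloaded. One simple way to put size and usage side by side is to divide each institute's downloads by its number of items. This is offered only as an illustration and is not an indicator reported by DIGITAL.CSIC. The sketch below uses the figures in the table; the names `INSTITUTES`, `downloads_per_item` and `rank_by_downloads_per_item` are hypothetical.

```python
from __future__ import annotations

# Items and downloads per CSIC institute, copied from the table above.
INSTITUTES = {
    "CCHS-IH": (4784, 648_021),
    "IRNAS": (3082, 196_327),
    "EBD": (2633, 178_604),
    "IMF": (1971, 388_325),
    "EEAD": (1937, 744_277),
}


def downloads_per_item(items: int, downloads: int) -> float:
    """Mean number of downloads per deposited item (hypothetical helper)."""
    if items <= 0:
        raise ValueError("items must be positive")
    return downloads / items


def rank_by_downloads_per_item(data: dict[str, tuple[int, int]]) -> list[tuple[str, float]]:
    """Return (institute, downloads per item) pairs, highest first, rounded to two decimals."""
    rates = [(name, round(downloads_per_item(items, downloads), 2))
             for name, (items, downloads) in data.items()]
    return sorted(rates, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for name, rate in rank_by_downloads_per_item(INSTITUTES):
        print(f"{name:8s} {rate:7.2f}")
```

With these figures, EEAD (about 384 downloads per item) and IMF (about 197) come first, while CCHS-IH, the largest collection, falls to third (about 135). A mean per item can be pulled up by a few heavily downloaded items, so a per-item median would be more robust if item-level download data were available.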

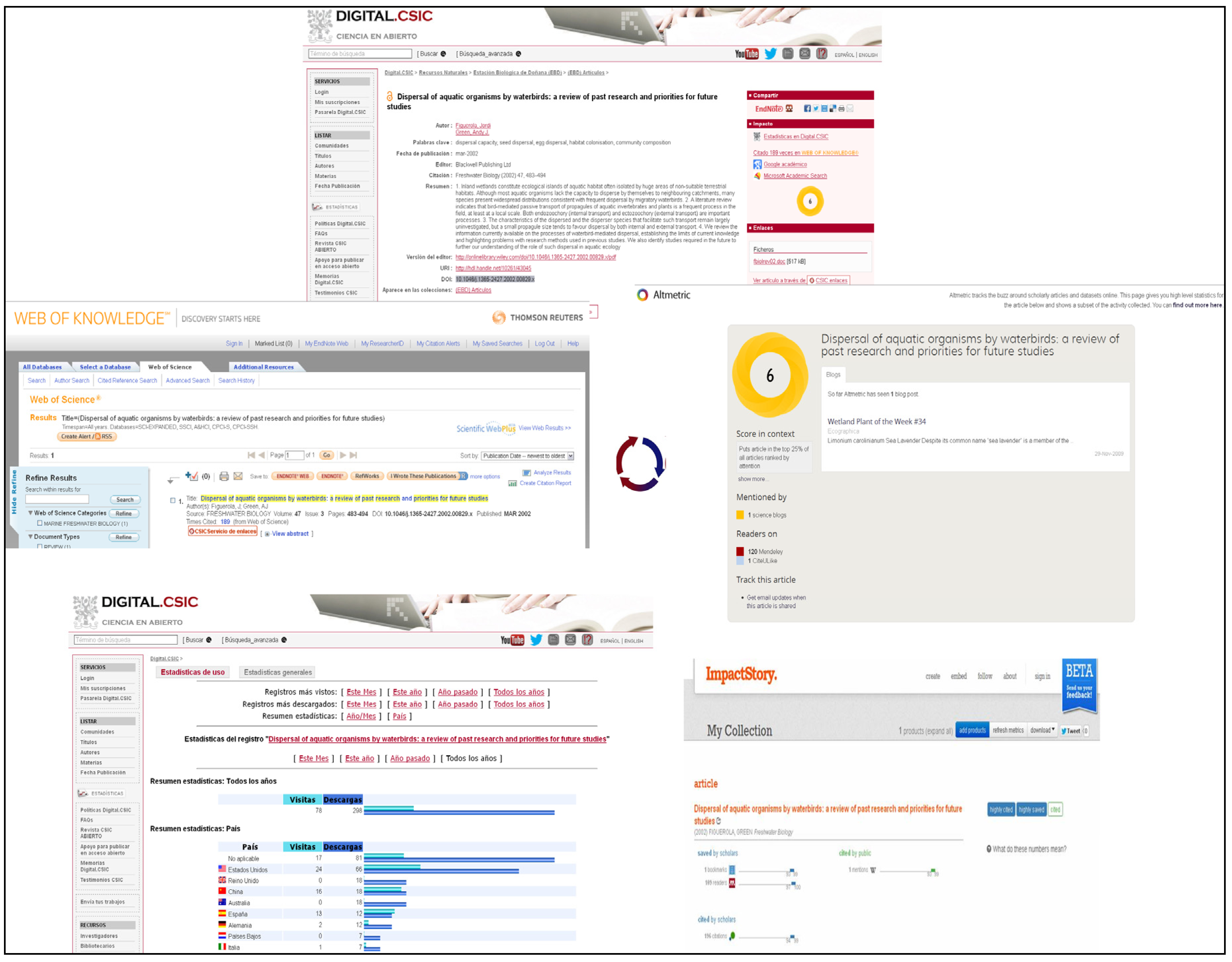

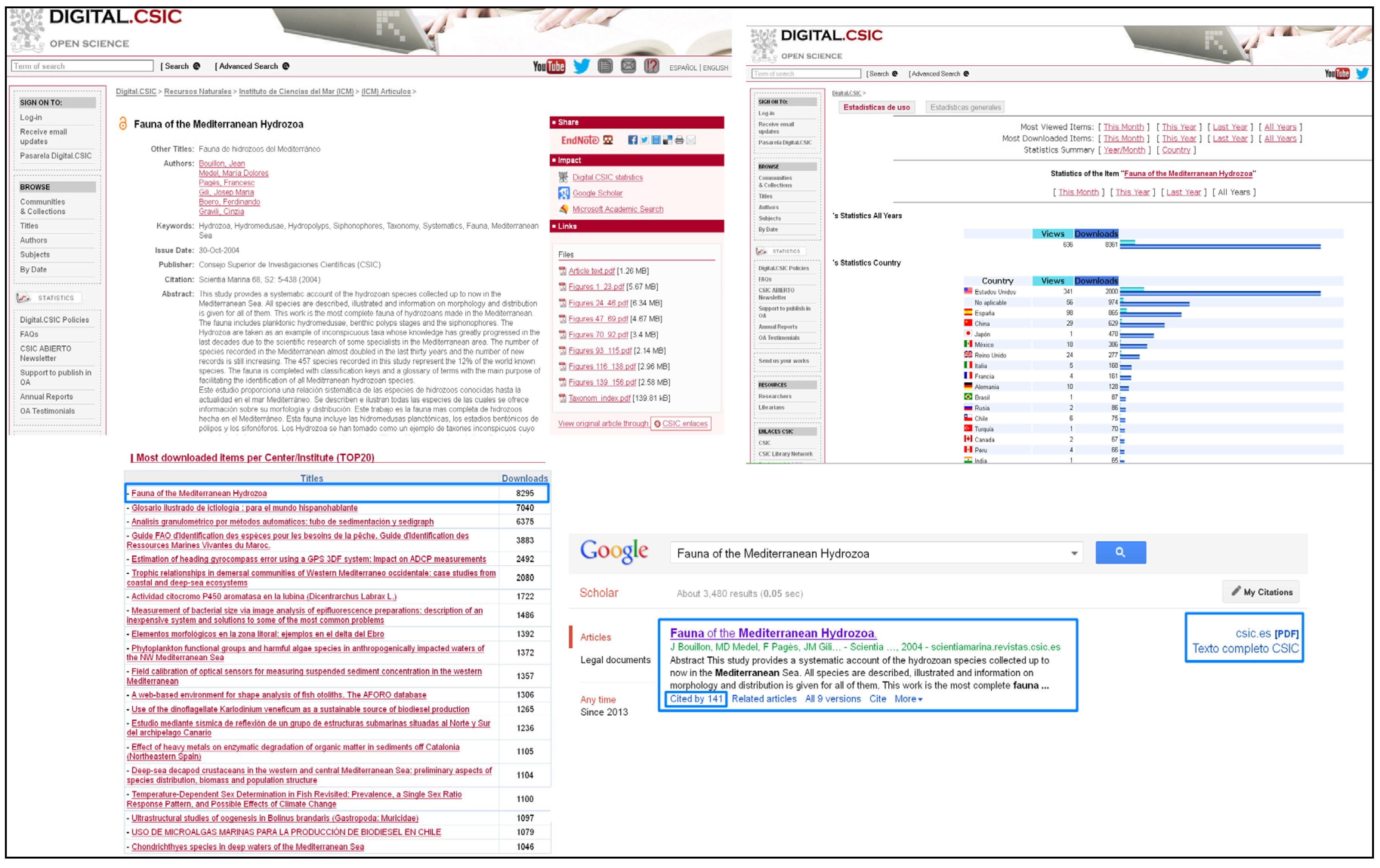

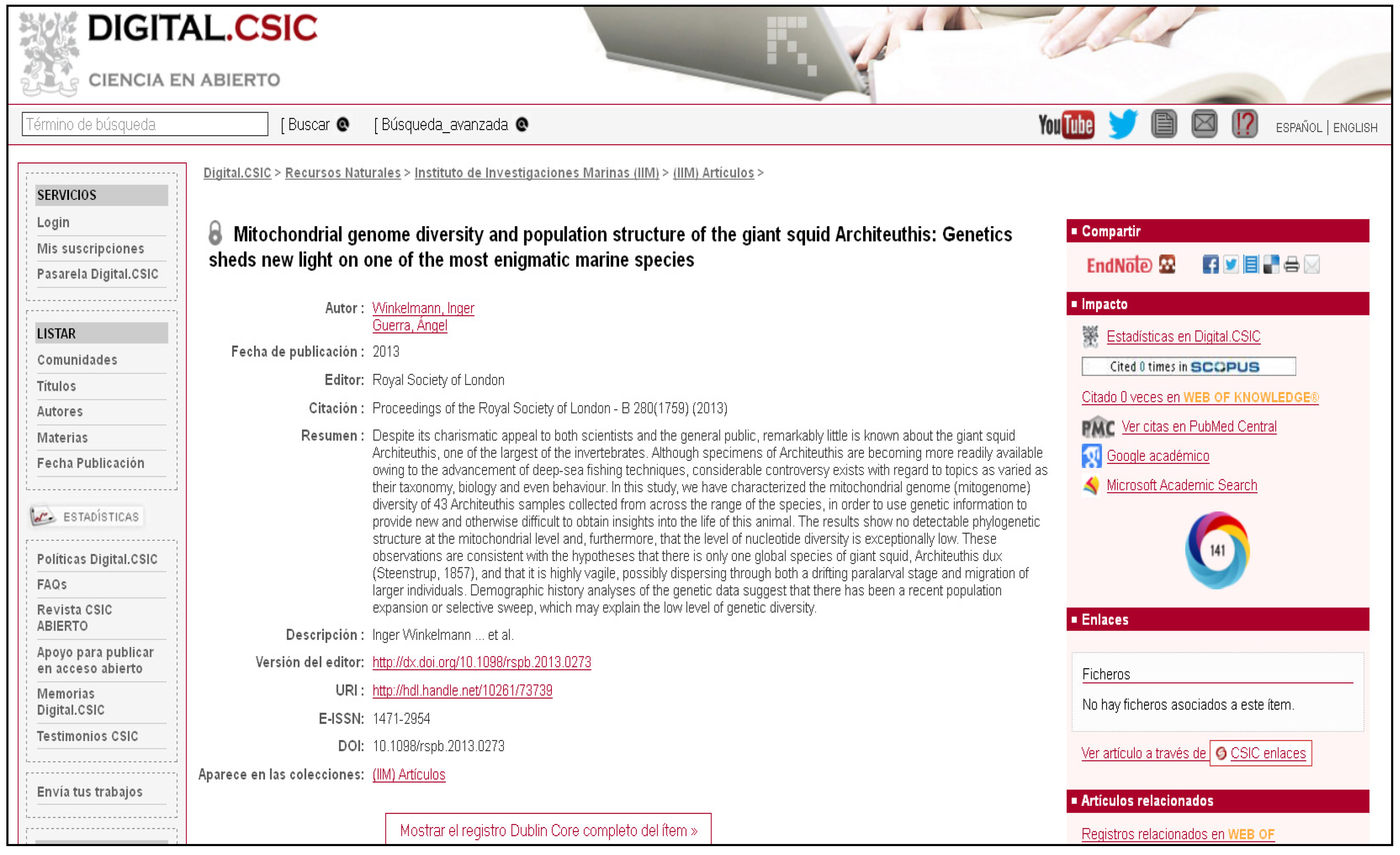

3.2. Impact Data at Item Level

| CSIC author | CSIC scientific area and institute affiliation | Works indexed by Scopus | Works at DIGITAL.CSIC |
|---|---|---|---|
| Jordi Figuerola | Natural Resources, EBD | 126 | 129 |
| José Luis Chiara | Chemistry, CENQUIOR | 56 | 66 |
| Angel Mantecón | Agricultural Sciences, IGM | 77 | 472 |
| Antonio Pich | Physics, IFIC | 147 | 188 |
| Antonio Almagro | Humanities, EEA | 6 | 229 |
| Francisca Puertas | Materials, IETCC | 111 | 112 |
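For every author in the table above, DIGITAL.CSIC holds at least as many works as Scopus indexes, and for some authors many more. A hypothetical coverage ratio (works in the repository divided by works indexed by Scopus) makes this gap explicit. It is not a measure proposed in this article. The sketch assumes the counts in the table. It compares totals only, so it cannot tell how many Scopus-indexed works have actually been deposited. `AUTHORS`, `coverage_ratio` and `coverage_ratios` are illustrative names.

```python
from __future__ import annotations

# Works per CSIC author, copied from the table above:
# (works indexed by Scopus, works deposited in DIGITAL.CSIC).
AUTHORS = {
    "Jordi Figuerola": (126, 129),
    "José Luis Chiara": (56, 66),
    "Angel Mantecón": (77, 472),
    "Antonio Pich": (147, 188),
    "Antonio Almagro": (6, 229),
    "Francisca Puertas": (111, 112),
}


def coverage_ratio(scopus_works: int, repository_works: int) -> float:
    """Hypothetical indicator: repository works per Scopus-indexed work.

    Compares totals only; it says nothing about overlap between the two sets.
    """
    if scopus_works <= 0:
        raise ValueError("scopus_works must be positive")
    return repository_works / scopus_works


def coverage_ratios(data: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each author to their coverage ratio, rounded to two decimals."""
    return {author: round(coverage_ratio(scopus, repo), 2)
            for author, (scopus, repo) in data.items()}


if __name__ == "__main__":
    ratios = coverage_ratios(AUTHORS)
    # Print authors from the highest to the lowest ratio.
    for author in sorted(ratios, key=ratios.get, reverse=True):
        print(f"{author:20s} {ratios[author]:6.2f}")
```

The ratio stays between 1.0 and 1.3 for Figuerola, Chiara, Pich and Puertas. It rises to about 6 for Mantecón and about 38 for Almagro, which shows how much of these two authors' deposited output lies outside Scopus coverage.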

3.3. Other Tools to Track Web Performance

4. Open Access and Evaluation Frameworks

5. Conclusions

Conflict of Interest

References

- Finardi, U. Correlation between journal impact factor and citation performance: An experimental study. J. Informetr. 2013, 7, 357–370.

- The PLoS Medicine Editors. The impact factor game. PLoS Med. 2006, 3, e291.

- The Matthew Effect in Science—Citing the Most Cited. Available online: http://blogs.bbsrc.ac.uk/index.php/2009/03/the-matthew-effect-in-science/ (accessed on 23 April 2013).

- MacRoberts, M.H.; MacRoberts, B.R. Problems of citation analysis: A study of uncited and seldom-cited influences. J. Am. Soc. Inf. Sci. Technol. 2010, 61, 1–12.

- Davis, P.M. Access, Readership, Citations: A Randomized Controlled Trial of Scientific Journal Publishing. Dissertation Presented in 2010 to the Faculty of the Graduate School of Cornell University. Available online: http://ecommons.cornell.edu/bitstream/1813/17788/1/Davis,%20Philip.pdf (accessed on 27 April 2013).

- Buschman, M.; Michalek, A. Are Alternative Metrics Still Alternative? ASIS&T Bulletin, April/May 2013. Available online: http://www.asis.org/Bulletin/Apr-13/AprMay13_Buschman_Michalek.html (accessed on 25 April 2013).

- Van Noorden, R. Researchers feel pressure to cite superfluous papers. Nature News. 2012. Available online: http://www.nature.com/news/researchers-feel-pressure-to-cite-superfluous-papers-1.9968 (accessed on 25 April 2013).

- Lozano, G.; Larivière, V.; Gingras, Y. The weakening relationship between the impact factor and papers’ citations in the digital age. J. Am. Soc. Inf. Sci. Technol. 2012, 63, 2140–2145.

- Joseph, H. The impact of open access on research and scholarship. Reflections on the Berlin 9 Open Access Conference. Coll. Res. Libr. News 2012, 73, 83–87.

- Davis, P.M.; Lewenstein, B.V.; Simon, D.H.; Booth, J.G.; Connolly, M.J.L. Open access publishing, article downloads, and citations: Randomised controlled trial. BMJ 2008, 337, a568. Available online: http://www.bmj.com/content/337/bmj.a568 (accessed on 22 April 2013).

- Swan, A. The Open Access citation advantage: Studies and results to date. 2010. Available online: http://eprints.soton.ac.uk/268516/ (accessed on 25 April 2013).

- OPCIT. The effect of open access and downloads (“hits”) on citation impact: A bibliography of studies. Available online: http://opcit.eprints.org/oacitation-biblio.html (accessed on 24 April 2013).

- Antelman, K. Do open access articles have a greater research impact? Coll. Res. Libr. 2004, 65, 372–382.

- Gargouri, Y.; Hajjem, C.H.; Larivière, V.; Gingras, Y.; Carr, L.; Brody, T.; Harnad, S. Self-selected or mandated, open access increases citation impact for higher quality research. PLoS ONE 2010, 5, e13636.

- Willmott, M.A.; Dunn, K.H.; Duranceau, E.F. The accessibility quotient: A new measure of open access. J. Libr. Sch. Commun. 2012, 1, eP1025. Available online: http://jlsc-pub.org/jlsc/vol1/iss1/7/ (accessed on 25 April 2013).

- Google Scholar Metrics. Available online: http://scholar.google.com/citations?view_op=top_venues (accessed on 28 April 2013).

- IRUS-UK. Available online: http://www.irus.mimas.ac.uk/ (accessed on 28 April 2013).

- JISC Open Citations. Available online: http://opencitations.net/ (accessed on 27 April 2013).

- Plum Analytics Current List of Metrics. Available online: http://www.plumanalytics.com/metrics.html (accessed on 27 April 2013).

- Wagner, A.B. Open access citation advantage: An annotated bibliography. Issues Sci. Technol. Libr. 2010. Available online: http://www.istl.org/10-winter/article2.html (accessed on 27 April 2013).

- Terras, M. The impact of social media on the dissemination of research: Results of an experiment. J. Digit. Hum. 2012, 1. Available online: http://journalofdigitalhumanities.org/1-3/the-impact-of-social-media-on-the-dissemination-of-research-by-melissa-terras/ (accessed on 28 April 2013).

- Lin, J.; Fenner, M. The many faces of article-level metrics. Available online: http://www.asis.org/Bulletin/Apr-13/AprMay13_Lin_Fenner.html (accessed on 23 April 2013).

- Konkiel, S.; Scherer, D. New opportunities for repositories in the age of altmetrics. Available online: http://www.asis.org/Bulletin/Apr-13/AprMay13_Konkiel_Scherer.html (accessed on 23 April 2013).

- Priem, J.; Piwowar, H.A.; Hemminger, B.M. Altmetrics in the wild: Using social media to explore scholarly impact. Available online: http://arxiv.org/abs/1203.4745 (accessed on 13 July 2013).

- Brody, T.; Harnad, S.; Carr, L. Earlier web usage statistics as predictors of later citation impact. J. Am. Soc. Inf. Sci. Technol. 2006, 57, 1060–1072.

- Haustein, S.; Siebenlist, T. Applying social bookmarking data to evaluate journal usage. J. Informetr. 2011, 5, 446–457.

- Institutional repository of Spanish National Research Council, Digital.CSIC. Available online: http://digital.csic.es (accessed on 29 April 2013).

- Bernal, I. Percepciones y participación en el acceso abierto en el CSIC: Informe sobre la Encuesta de Digital.CSIC para investigadores. Report, CSIC. 2010. Available online: https://digital.csic.es/handle/10261/28543 (Spanish), https://digital.csic.es/handle/10261/28547 (English) (accessed on 28 April 2013).

- Bernal, I.; Pemau-Alonso, J. Estadísticas para repositorios. Sistema métrico de datos de Digital.CSIC. El Profesional de la Información 2010, 19, 534–543. Available online: http://digital.csic.es/10261/27913 (accessed on 24 April 2013).

- Martínez-Giménez, C.; Albiñana, C. Colaboración de la Biblioteca en el control y la difusión de la producción científica del Instituto: integrando Digital.CSIC, www, Conciencia y Memoria desde el diseño de un Servicio bibliotecario complementario con gran valor potencial y visibilidad. Report, CSIC. 2012. Available online: https://digital.csic.es/handle/10261/44840 (accessed on 30 April 2013).

- Swan, A.; Sheridan, B. Open access self-archiving: An author study. Available online: http://www.jisc.ac.uk/uploaded_documents/Open%20Access%20Self%20Archiving-an%20author%20study.pdf (accessed on 3 May 2013).

- Figuerola, J.; Green, A.J. Dispersal of aquatic organisms by waterbirds: A review of past research and priorities for future studies. Freshw. Biol. 2002, 47, 483–494. Available online: http://digital.csic.es/handle/10261/43045 (accessed on 12 July 2013).

- Williams, P.N.; Villada, A.; Deacon, C.; Raab, A.; Figuerola, J.; Green, A.J.; Feldmann, J.; Meharg, A. Greatly Enhanced Arsenic Shoot Assimilation in Rice Leads to Elevated Grain Levels Compared to Wheat and Barley. Environ. Sci. Technol. 2007, 41, 6854–6859.

- Green, A.J.; Figuerola, J.; Sánchez, M.I. Implications of waterbird ecology for the dispersal of aquatic organisms. Acta Oecol. 2002, 23, 177–189. Available online: http://digital.csic.es/handle/10261/43043 (accessed on 12 July 2013).

- Correa Valencia, M. Inteligencia artificial para la predicción y control del acabado superficial en procesos de fresado a alta velocidad. PhD dissertation presented in 2010 to the Faculty of Informatics of the Polytechnic University of Madrid. Available online: http://digital.csic.es/handle/10261/33107 (accessed on 12 July 2013).

- Ranz Guerra, C.; Cobo, P. Underwater acoustic tank evaluation of acoustic properties of samples using spectrally dense signals. J. Acoust. Soc. Am. 1999, 105, 1054. Available online: http://digital.csic.es/handle/10261/7145 (accessed on 12 July 2013).

- Aranda, J.; Armada, M.; Cruz, J.M. Automation for the Maritime Industries. Instituto de Automática Industrial: Madrid, Spain, 2004. Available online: http://digital.csic.es/handle/10261/2906 (accessed on 12 July 2013).

- Galán Allué, J.M. Cuatro viajes en la literatura del antiguo Egipto. Consejo Superior de Investigaciones Científicas: Madrid, Spain, 2000. Available online: http://digital.csic.es/handle/10261/36807 (accessed on 12 July 2013).

- Puertas, F.; Santos, H.; Palacios, M.; Martínez-Ramírez, S. Polycarboxylate superplasticiser admixtures: Effect on hydration, microstructure and rheological behaviour in cement pastes. Adv. Cem. Res. 2005, 17, 77–89.

- CSIC Open Access Journals. Available online: http://revistas.csic.es/index_en.html (accessed on 2 May 2013).

- Bouillon, J.; Medel, M.D.; Pagès, F.; Gili, J.M.; Boero, F.; Gravili, C. Fauna of the Mediterranean Hydrozoa. Sci. Mar. 2004, 68, 5–282. Available online: http://digital.csic.es/handle/10261/2366 (accessed on 12 July 2013).

- Winkelmann, I.; Campos, P.F.; Strugnell, J.; Cherel, Y.; Smith, P.J.; Kubodera, T.; Allcock, L.; Kampmann, M.L.; Schroeder, H.; Guerra, A.; Norman, M.; Finn, J.; Ingrao, D.; Clarke, M.; Gilbert, M.T.P. Mitochondrial genome diversity and population structure of the giant squid Architeuthis: Genetics sheds new light on one of the most enigmatic marine species. Proc. R. Soc. B. 2013, 280, p. 1759. Available online: http://digital.csic.es/handle/10261/73739 (accessed on 12 July 2013).

- Thomas, F. A short account of Leonardo Torres' endless spindle. Mech. Mach. Theory 2008, 43, 1055–1063. Available online: http://digital.csic.es/handle/10261/30460 (accessed on 12 July 2013).

- Fernández-Huertas Moraga, J. New Evidence on Emigrant Selection. Rev. Econ. Stat. 2011, 93, 72–96. Available online: http://digital.csic.es/handle/10261/4353 (accessed on 12 July 2013).

- Ranking Webometrics. Available online: http://research.webometrics.info/ (accessed on 2 May 2013).

- REF. Assessment Framework and Guidance on Submissions. Available online: http://www.ref.ac.uk/media/ref/content/pub/assessmentframeworkandguidanceonsubmissions/02_11.pdf (accessed on 24 April 2013).

- Wellcome Trust. Position statement in support of open and unrestricted access to published research. Available online: http://www.wellcome.ac.uk/About-us/Policy/Spotlight-issues/Open-access/Policy/index.htm (accessed on 23 April 2013).

- HEFCE/REF Open Access and submission to the REF Post-2014, 2013 Letter. Available online: http://www.hefce.ac.uk/media/hefce/content/news/news/2013/open_access_letter.pdf (accessed on 24 April 2013).

- Rentier, B.; Thirion, P. The Liège ORBi model: Mandatory policy without rights retention but linked to assessment processes. Presentation at Berlin 9 Pre-Conference Workshop. 2011. Available online: http://www.berlin9.org/bm~doc/berlin9-rentier.pdf (accessed on 26 April 2013).

- Guidelines for tenure and promotion reviews at Indiana University Bloomington. 2012. Available online: http://www.indiana.edu/~vpfaa/WRAP_SDDU/BL-PROV-COMM/VPFAA%20-%20New/docs/promotion_tenure_reappointment/pt-revised-review-guidelines.pdf (accessed on 26 April 2013).

- Spain’s Law of Science, Technology and Innovation 2011, Article 37 “Dissemination in open access”. Available online: http://www.boe.es/boe/dias/2011/06/02/pdfs/BOE-A-2011-9617.pdf (accessed on 12 July 2013).

- Open Access policy by Community of Madrid with regard to funded CSIC research (2008) and to general funding calls (2009). Available online: https://www.madrimasd.org/quadrivium/convocatorias/Portals/13/Documentacion/ordencsic2008.pdf and http://www.madrimasd.org/informacionidi/convocatorias/2009/documentos/Orden_679-2009_19-02-09_Convocatoria_Ayuda_Programas_Actividades_Tecnonologia.pdf (accessed on 12 July 2013).

- Open access policy by Principality of Asturias. 2009. Available online: https://sede.asturias.es/portal/site/Asturias/menuitem.1003733838db7342ebc4e191100000f7/?vgnextoid=d7d79d16b61ee010VgnVCM1000000100007fRCRD&fecha=03/02/2009&refArticulo=2009-03201 (accessed on 12 July 2013).

- Bernal, I.; Román-Molina, J.; Pemau-Alonso, J.; Chacón, A. CSIC Bridge: Linking Digital.CSIC to Institutional CRIS. Poster OR2012, Edinburgh. Available online: https://digital.csic.es/handle/10261/54304 (accessed on 3 May 2013).

- CSIC Institutional Action Plan 2010–2013. Available online: http://www.csic.es/web/guest/plan-de-actuacion-2010-2013 (accessed on 29 April 2013).

- Eigenfactor Journal Cost Effectiveness for Open Access Journals. Available online: http://www.eigenfactor.org/openaccess/ (accessed on 25 April 2013).

© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Bernal, I. Open Access and the Changing Landscape of Research Impact Indicators: New Roles for Repositories. Publications 2013, 1, 56-77. https://doi.org/10.3390/publications1020056
