Artificial Intelligence in Endodontic Education: A Systematic Review with Frequentist and Bayesian Meta-Analysis of Student-Based Evidence

Abstract

1. Introduction

2. Materials and Methods

2.1. Protocol and Registration

2.2. Eligibility Criteria

2.3. Operational Definition of AI-Assisted Tools

2.4. PICO Framework

- Population (P): Dental students undergoing formal training in endodontics at any stage, including preclinical undergraduate courses, clinical undergraduate courses, postgraduate students, and residents in endodontic specialty programs. Studies evaluating AI performance on validated student assessment instruments used in endodontic education were also eligible.

- Intervention (I): Use of AI-based tools (convolutional neural networks [CNNs], large language models [LLMs], and chatbots) integrated into learning activities, diagnostic assistance, or student assessment, or applied to outputs generated by students in an educational setting.

- Comparator (C): Student performance obtained within validated educational or assessment frameworks.

- Outcomes (O): Primary outcomes were diagnostic performance metrics (accuracy, sensitivity, specificity, F1-score, and area under the receiver operating characteristic curve [AUC]) in studies that directly compared AI results with student performance. Secondary outcomes were educational indicators such as assessment consistency, feedback quality, academic integrity verification, and student learning-related measures.
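Except for AUC, the primary diagnostic metrics listed under Outcomes are standard functions of a 2 × 2 confusion matrix. The minimal Python sketch below writes out those definitions. The `ConfusionMatrix` type, the `diagnostic_metrics` helper and the counts are hypothetical illustrations, not data or code from the included studies. AUC is left out because it needs continuous model scores, not a single 2 × 2 table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Hypothetical counts for a binary diagnostic task (condition present vs. absent)."""
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives
    tn: int  # true negatives


def diagnostic_metrics(cm: ConfusionMatrix) -> dict[str, float]:
    """Return accuracy, sensitivity, specificity, precision and F1 as proportions (0-1)."""
    total = cm.tp + cm.fp + cm.fn + cm.tn
    sensitivity = cm.tp / (cm.tp + cm.fn)  # recall, true-positive rate
    specificity = cm.tn / (cm.tn + cm.fp)  # true-negative rate
    precision = cm.tp / (cm.tp + cm.fp)    # positive predictive value
    f1 = 2 * precision * sensitivity / (precision + sensitivity)  # harmonic mean
    return {
        "accuracy": (cm.tp + cm.tn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


# Hypothetical counts, NOT taken from any study in this review.
example = ConfusionMatrix(tp=40, fp=10, fn=20, tn=130)
for name, value in diagnostic_metrics(example).items():
    print(f"{name}: {value:.3f}")
```

Multiplying these proportions by 100 gives the percentage scale used in the tables. Accuracy depends on the prevalence of the condition in the test set, but sensitivity and specificity do not. This is one reason accuracy figures from different studies are not directly comparable.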

2.5. Information Sources and Search Strategy

2.6. Study Selection

2.7. Data Extraction

2.8. Outcome Measures

2.9. Data Synthesis and Meta-Analysis

2.10. Risk of Bias and Evidence Certainty

3. Results

3.1. Study Selection

3.2. Study Characteristics

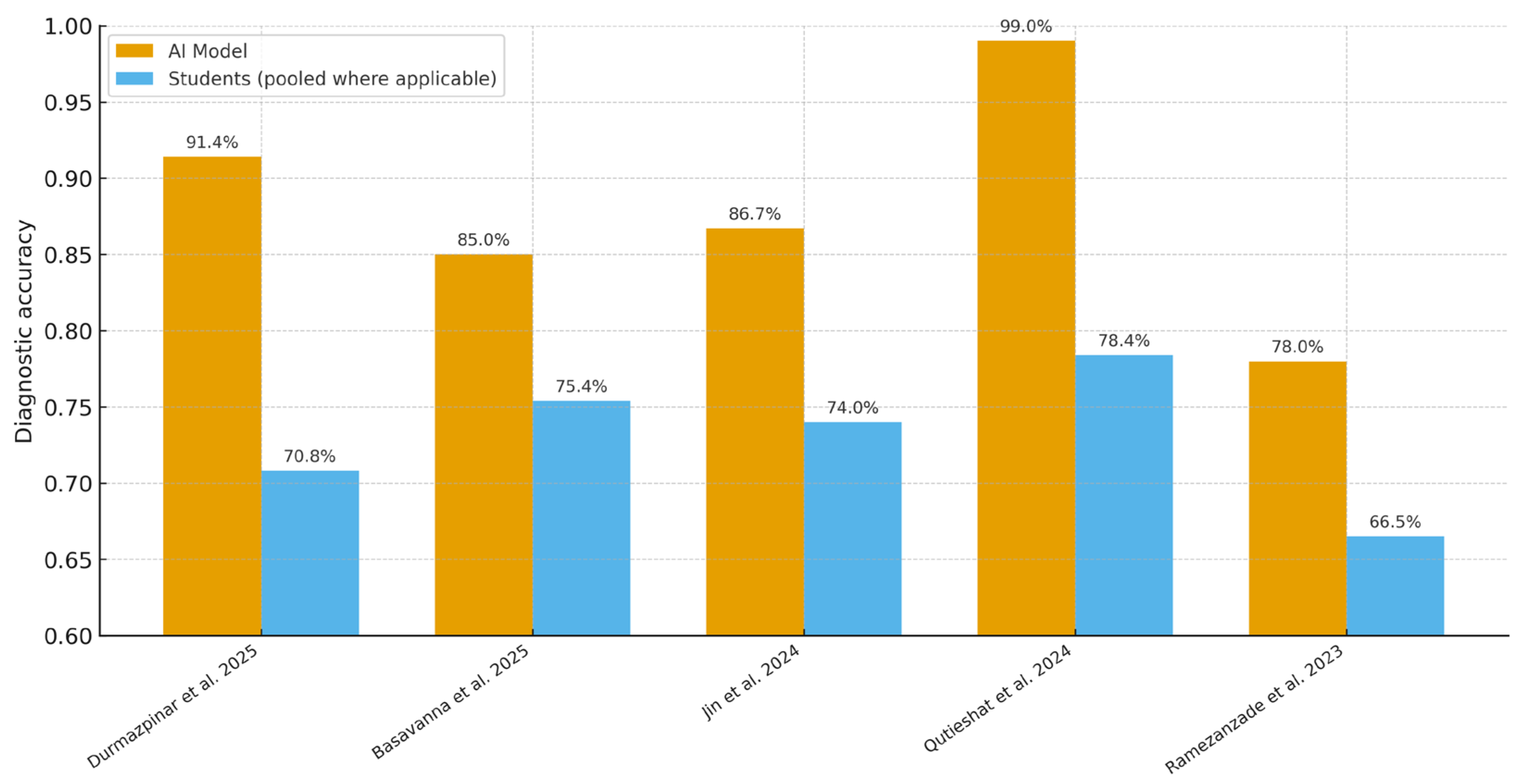

3.3. Diagnostic Accuracy of AI vs. Students

3.4. Educational Utility and Student Perceptions

3.5. Student Engagement, Accessibility, Motivation, and Trust

3.6. AI-Augmented Learning Scenarios

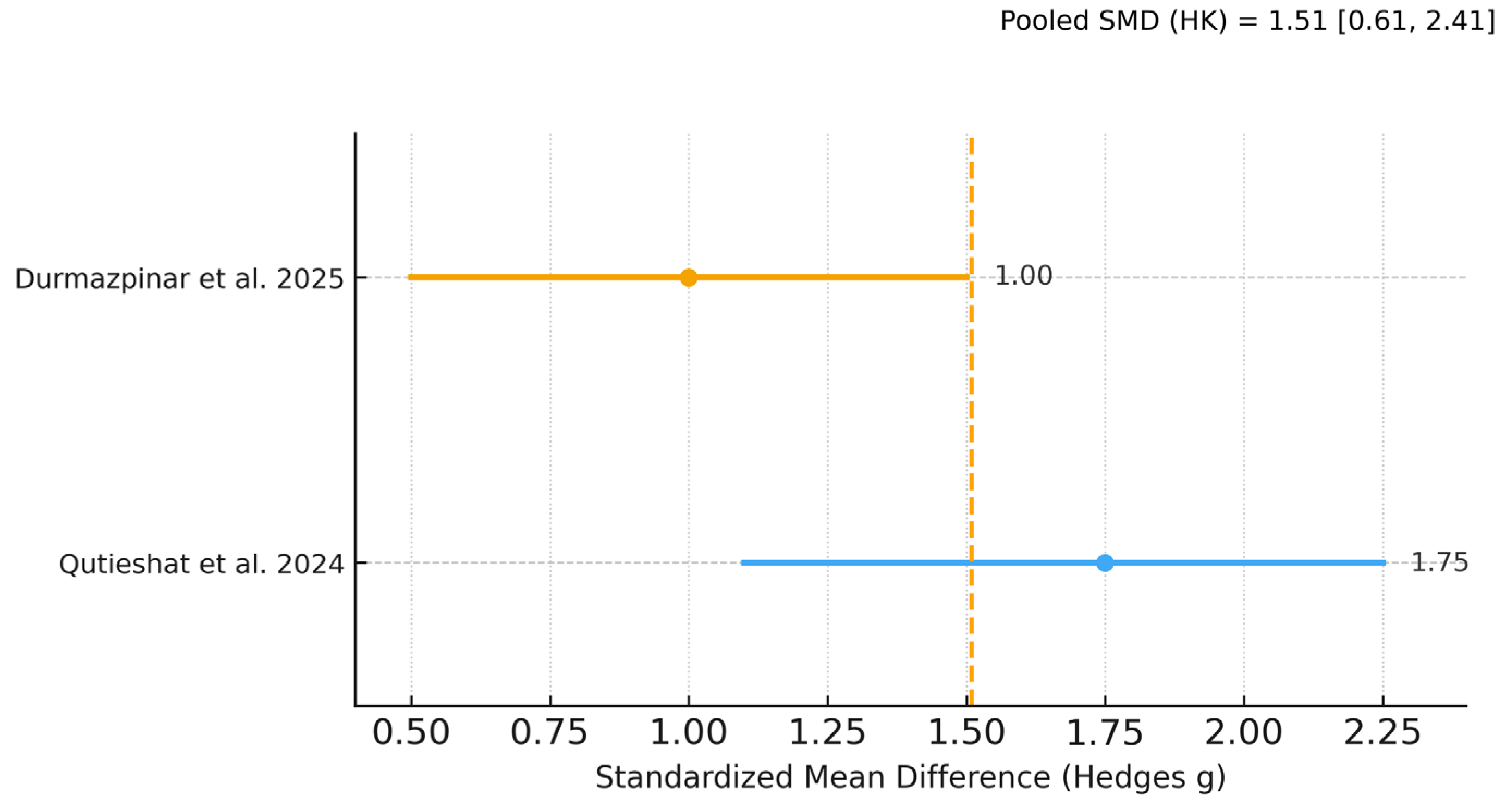

3.7. Meta-Analysis of Diagnostic Performance

3.8. Exploratory Analyses

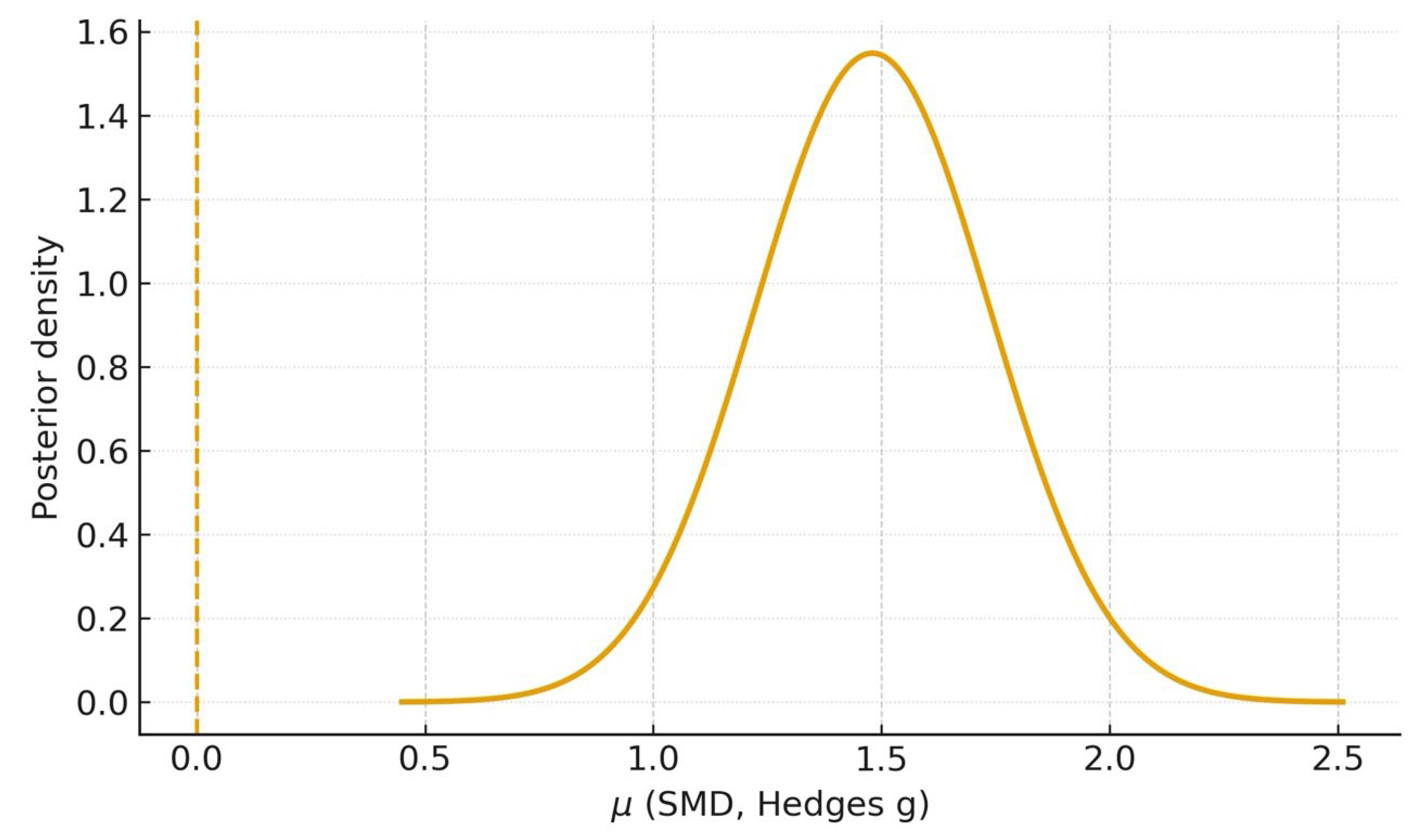

3.9. Bayesian Meta-Analysis

3.10. Risk of Bias Assessment

3.11. Certainty of Evidence (GRADE Assessment)

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Yadalam, P.K.; Anegundi, R.V.; Ardila, C.M. Integrating Artificial Intelligence Into Orthodontic Education and Practice. Int. Dent. J. 2024, 74, 1463.

2. Ardila, C.M.; Vivares-Builes, A.M. Artificial Intelligence through Wireless Sensors Applied in Restorative Dentistry: A Systematic Review. Dent. J. 2024, 12, 120.

3. Ardila, C.M.; Yadalam, P.K. AI and dental education. Br. Dent. J. 2025, 238, 294.

4. Schwendicke, F.; Samek, W.; Krois, J. Artificial intelligence in dentistry: Chances and challenges. J. Dent. Res. 2020, 99, 769–774.

5. Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

6. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

7. Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56.

8. Chen, J.H.; Asch, S.M. Machine learning and prediction in medicine—Beyond the peak of inflated expectations. N. Engl. J. Med. 2017, 376, 2507–2509.

9. Ammar, N.; Kühnisch, J. Diagnostic performance of artificial intelligence-aided caries detection on bitewing radiographs: A systematic review and meta-analysis. Jpn. Dent. Sci. Rev. 2024, 60, 128–136.

10. Schlenz, M.A.; Michel, K.; Wegner, K.; Schmidt, A.; Rehmann, P.; Wöstmann, B. Undergraduate dental students’ perspective on the implementation of digital dentistry in the preclinical curriculum: A questionnaire survey. BMC Oral Health 2020, 20, 78.

11. Senders, J.T.; Arnaout, O.; Karhade, A.V.; Dasenbrock, H.H.; Gormley, W.B.; Broekman, M.L.; Smith, T.R. Natural and artificial intelligence in neurosurgery: A systematic review. Neurosurgery 2018, 83, 181–192.

12. Liu, X.; Faes, L.; Kale, A.U.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. A comparison of deep learning performance against healthcare professionals in detecting diseases from medical imaging: A systematic review and meta-analysis. Lancet Digit. Health 2019, 1, e271–e297.

13. Wartman, S.A.; Combs, C.D. Medical Education Must Move from the Information Age to the Age of Artificial Intelligence. Acad. Med. 2018, 93, 1107–1109.

14. Yadalam, P.K.; Anegundi, R.V.; Natarajan, P.M.; Ardila, C.M. Neural Networks for Predicting and Classifying Antimicrobial Resistance Sequences in Porphyromonas gingivalis. Int. Dent. J. 2025, 75, 100890.

15. Yadalam, P.K.; Arumuganainar, D.; Natarajan, P.M.; Ardila, C.M. Artificial intelligence-powered prediction of AIM-2 inflammasome sequences using transformers and graph attention networks in periodontal inflammation. Sci. Rep. 2025, 15, 8733.

16. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

17. Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.G.; Sterne, J.A.C.; Bossuyt, P.M.M. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536.

18. Munn, Z.; Barker, T.H.; Moola, S.; Tufanaru, C.; Stern, C.; McArthur, A.; Stephenson, M.; Aromataris, E. Methodological quality of case series studies: An introduction to the JBI critical appraisal tool. JBI Evid. Synth. 2020, 18, 2127–2133.

19. Guyatt, G.H.; Oxman, A.D.; Vist, G.E.; Kunz, R.; Falck-Ytter, Y.; Alonso-Coello, P.; Schünemann, H.J. GRADE: An emerging consensus on rating quality of evidence and strength of recommendations. BMJ 2008, 336, 924–926.

20. Durmazpinar, P.M.; Ekmekci, E. Comparing diagnostic skills in endodontic cases: Dental students versus ChatGPT-4o. BMC Oral Health 2025, 25, 457.

21. Basavanna, R.S.; Adhaulia, I.; Dhanyakumar, N.M.; Joshi, J. Evaluating the accuracy of deep learning models and dental postgraduate students in measuring working length on intraoral periapical X-rays: An in vitro study. Contemp. Clin. Dent. 2025, 16, 15–18.

22. Jin, L.; Zhou, W.; Tang, Y.; Yu, Z.; Fan, J.; Wang, L.; Liu, C.; Gu, Y.; Zhang, P. Detection of C-shaped mandibular second molars on panoramic radiographs using deep convolutional neural networks. Clin. Oral Investig. 2024, 28, 646.

23. Qutieshat, A.; Al Rusheidi, A.; Al Ghammari, S.; Alarabi, A.; Salem, A.; Zelihic, M. Comparative analysis of diagnostic accuracy in endodontic assessments: Dental students vs. artificial intelligence. Diagnosis 2024, 11, 259–265.

24. Ramezanzade, S.; Dascalu, T.L.; Ibragimov, B.; Bakhshandeh, A.; Bjørndal, L. Prediction of pulp exposure before caries excavation using artificial intelligence: Deep learning-based image data versus standard dental radiographs. J. Dent. 2023, 138, 104732.

25. Hiraiwa, T.; Ariji, Y.; Fukuda, M.; Kise, Y.; Nakata, K.; Katsumata, A.; Fujita, H.; Ariji, E. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofac. Radiol. 2019, 48, 20180218.

26. Sismanoglu, S.; Capan, B.S. Performance of artificial intelligence on Turkish dental specialization exam: Can ChatGPT-4.0 and gemini advanced achieve comparable results to humans? BMC Med. Educ. 2025, 25, 214.

27. Giannakopoulos, K.; Kavadella, A.; Aaqel Salim, A.; Stamatopoulos, V.; Kaklamanos, E.G. Evaluation of the Performance of Generative AI Large Language Models ChatGPT, Google Bard, and Microsoft Bing Chat in Supporting Evidence-Based Dentistry: Comparative Mixed Methods Study. J. Med. Internet Res. 2023, 25, e51580.

28. Riedel, M.; Kaefinger, K.; Stuehrenberg, A.; Ritter, V.; Amann, N.; Graf, A.; Recker, F.; Klein, E.; Kiechle, M.; Riedel, F.; et al. ChatGPT’s performance in German OB/GYN exams—Paving the way for AI-enhanced medical education and clinical practice. Front. Med. 2023, 10, 1296615.

29. Ibrahim, M.; Omidi, M.; Guentsch, A.; Gaffney, J.; Talley, J. Ensuring integrity in dental education: Developing a novel AI model for consistent and traceable image analysis in preclinical endodontic procedures. Int. Endod. J. 2025; online version of record.

30. Díaz-Flores-García, V.; Labajo-González, E.; Santiago-Sáez, A.; Perea-Pérez, B. Detecting the manipulation of digital clinical records in dental practice. Radiography 2017, 23, e103–e107.

31. Ayhan, M.; Kayadibi, I.; Aykanat, B. RCFLA-YOLO: A deep learning-driven framework for the automated assessment of root canal filling quality in periapical radiographs. BMC Med. Educ. 2025, 25, 894.

32. Saghiri, M.A.; Garcia-Godoy, F.; Gutmann, J.L.; Lotfi, M.; Asgar, K. The reliability of artificial neural network in locating minor apical foramen: A cadaver study. J. Endod. 2012, 38, 1130–1134.

33. Al-Anesi, M.S.; AlKhawlani, M.M.; Alkheraif, A.A.; Al-Basmi, A.A.; Alhajj, M.N. An audit of root canal filling quality performed by undergraduate pre-clinical dental students, Yemen. BMC Med. Educ. 2019, 19, 350.

34. Ridao-Sacie, C.; Segura-Egea, J.J.; Fernández-Palacín, A.; Bullón-Fernández, P.; Ríos-Santos, J.V. Radiological assessment of periapical status using the periapical index: Comparison of periapical radiography and digital panoramic radiography. Int. Endod. J. 2007, 40, 433–440.

35. Owolabi, L.F.; Adamu, B.; Taura, M.G.; Isa, A.I.; Jibo, A.M.; Abdul-Razek, R.; Alharthi, M.M.; Alghamdi, M. Impact of a longitudinal faculty development program on the quality of multiple-choice question item writing in medical education. Ann. Afr. Med. 2021, 20, 46–51.

36. Ten Cate, O.; Carraccio, C.; Damodaran, A.; Gofton, W.; Hamstra, S.J.; Hart, D.E.; Richardson, D.; Ross, S.; Schultz, K.; Warm, E.J.; et al. Entrustment Decision Making: Extending Miller’s Pyramid. Acad. Med. 2021, 96, 199–204.

37. Nissen, L.; Rother, J.F.; Heinemann, M.; Reimer, L.M.; Jonas, S.; Raupach, T. A randomised cross-over trial assessing the impact of AI-generated individual feedback on written online assignments for medical students. Med. Teach. 2025, 47, 1544–1550.

38. Brügge, E.; Ricchizzi, S.; Arenbeck, M.; Keller, M.N.; Schur, L.; Stummer, W.; Holling, M.; Lu, M.H.; Darici, D. Large language models improve clinical decision making of medical students through patient simulation and structured feedback: A randomized controlled trial. BMC Med. Educ. 2024, 24, 1391.

39. Chang, O.; Holbrook, A.M.; Lohit, S.; Deng, J.; Xu, J.; Lee, M.; Cheng, A. Comparability of Objective Structured Clinical Examinations (OSCEs) and Written Tests for Assessing Medical School Students’ Competencies: A Scoping Review. Eval. Health Prof. 2023, 46, 213–224.

40. Schwartzstein, R.M. Clinical reasoning and artificial intelligence: Can AI really think? Trans. Am. Clin. Climatol. Assoc. 2024, 134, 133–145.

41. Sivarajkumar, S.; Kelley, M.; Samolyk-Mazzanti, A.; Visweswaran, S.; Wang, Y. An Empirical Evaluation of Prompting Strategies for Large Language Models in Zero-Shot Clinical Natural Language Processing: Algorithm Development and Validation Study. JMIR Med. Inform. 2024, 12, e55318.

42. Hicks, S.A.; Strümke, I.; Thambawita, V.; Hammou, M.; Riegler, M.A.; Halvorsen, P.; Parasa, S. On evaluation metrics for medical applications of artificial intelligence. Sci. Rep. 2022, 12, 5979.

43. Collins, G.S.; Moons, K.G.M.; Dhiman, P.; Riley, R.D.; Beam, A.L.; Van Calster, B.; Ghassemi, M.; Liu, X.; Reitsma, J.B.; van Smeden, M.; et al. TRIPOD+AI statement: Updated guidance for reporting clinical prediction models that use regression or machine learning methods. BMJ 2024, 385, e078378.

44. Künzle, P.; Paris, S. Performance of large language artificial intelligence models on solving restorative dentistry and endodontics student assessments. Clin. Oral Investig. 2024, 28, 575.

45. Mertens, S.; Krois, J.; Cantu, A.G.; Arsiwala, L.T.; Schwendicke, F. Artificial intelligence for caries detection: Randomized trial. J. Dent. 2021, 115, 103849.

46. Liu, J.; Liu, X.; Shao, Y.; Gao, Y.; Pan, K.; Jin, C.; Ji, H.; Du, Y.; Yu, X. Periapical lesion detection in periapical radiographs using the latest convolutional neural network ConvNeXt and its integrated models. Sci. Rep. 2024, 14, 25429.

47. Sonoda, Y.; Kurokawa, R.; Hagiwara, A.; Asari, Y.; Fukushima, T.; Kanzawa, J.; Gonoi, W.; Abe, O. Structured clinical reasoning prompt enhances LLM’s diagnostic capabilities in diagnosis please quiz cases. Jpn. J. Radiol. 2025, 43, 586–592.

48. AlSaad, R.; Abd-Alrazaq, A.; Boughorbel, S.; Ahmed, A.; Renault, M.A.; Damseh, R.; Sheikh, J. Multimodal Large Language Models in Health Care: Applications, Challenges, and Future Outlook. J. Med. Internet Res. 2024, 26, e59505.

49. Baaij, A.; Kruse, C.; Whitworth, J.; Jarad, F. European Society of Endodontology Undergraduate Curriculum Guidelines for Endodontology. Int. Endod. J. 2024, 57, 982–995.

50. AlMaslamani, M.J.; AlNajar, I.J.; Alqedra, R.A.; ElHaddad, M. Dental undergraduate students’ perception of the endodontic specialty. Saudi Endod. J. 2024, 14, 113–120.

51. Kaur, G.; Thomas, A.R.; Samson, R.S.; Varghese, E.; Ponraj, R.R.; Nagraj, S.K.; Shrivastava, D.; Algarni, H.A.; Siddiqui, A.Y.; Alothmani, O.S.; et al. Efficacy of electronic apex locators in comparison with intraoral radiographs in working length determination—A systematic review and meta-analysis. BMC Oral Health 2024, 24, 532.

52. Alkahtany, S.M.; Alabdulkareem, S.E.; Alharbi, W.H.; Alrebdi, N.F.; Askar, T.S.; Bukhary, S.M.; Almohaimede, A.A.; Al-Manei, K.K. Assessment of dental students’ knowledge and performance of master gutta-percha cone selection and fitting during root canal treatment: A pilot study. BMC Med. Educ. 2024, 24, 371.

53. Choudhari, S.; Ramesh, S.; Shah, T.D.; Teja, K.V.L. Diagnostic accuracy of artificial intelligence versus dental experts in predicting endodontic outcomes: A systematic review. Saudi Endod. J. 2024, 14, 153–163.

54. Setzer, F.C.; Li, J.; Khan, A.A. The Use of Artificial Intelligence in Endodontics. J. Dent. Res. 2024, 103, 853–862.

55. Alfadley, A.; Shujaat, S.; Jamleh, A.; Riaz, M.; Aboalela, A.A.; Ma, H.; Orhan, K. Progress of Artificial Intelligence-Driven Solutions for Automated Segmentation of Dental Pulp Space on Cone-Beam Computed Tomography Images. A Systematic Review. J. Endod. 2024, 50, 1221–1232.

| Study | AI Task | AI Model | Comparator (Student Level) | Outcome Metric | AI Result(s) (%; AUC on 0–1 scale) | Student Result(s) (%) |
|---|---|---|---|---|---|---|
| Durmazpinar et al. (2025) [20] | Endodontic case diagnosis | ChatGPT-4o (LLM) | 3rd- and 5th-year students | Accuracy | 91.4 | 60.8 (3rd year); 79.5 (5th year) |
| Basavanna et al. (2025) [21] | Working-length determination (in vitro; periapical radiographs) | Deep CNN | Postgraduate students | Accuracy | 85.0 | 75.4 |
| Jin et al. (2024) [22] | C-shaped mandibular second molar detection (panoramic radiographs) | CNN (ResNet, DenseNet) | Graduate students (novice dentists and specialists also reported) | Accuracy, AUC | Accuracy 86.7 (ResNet-101, Group A); AUC 0.910 (95% CI 0.883–0.940) | Graduate students 74.0; novice dentists 77.2; specialists 81.2 |
| Qutieshat et al. (2024) [23] | Endodontic condition diagnosis | ChatGPT-4 (LLM) | Junior and senior undergraduates | Accuracy | 99.0 | 77.0 (junior); 79.7 (senior) |
| Ramezanzade et al. (2023) [24] | Pulp exposure prediction | CNN + radiographic metrics | Dental students | F1-score, accuracy, sensitivity, specificity, AUC | F1 71.0; accuracy 78.0; sensitivity 62.0; specificity 83.0; AUC 0.73 | F1 58.0–61.0; accuracy 65.0–68.0 |

| Study | AI Accuracy (%) | Student Accuracy (%) | Difference (pp) |
|---|---|---|---|
| Durmazpinar et al. [20] | 91.38 | 70.77 a | +20.61 |
| Basavanna et al. [21] | 85.0 | 75.4 | +9.6 |
| Jin et al. [22] | 86.70 | 74.00 b | +12.7 |
| Qutieshat et al. [23] | 99.0 | 78.36 c | +20.64 |
| Ramezanzade et al. [24] | 78.00 | 66.50 d | +11.50 |
| Unweighted mean (all five studies) | not applicable | not applicable | +15.01 |
| LLM subgroup mean ([20,23]) | not applicable | not applicable | +20.63 |
| CNN subgroup mean ([21,22,24]) | not applicable | not applicable | +11.27 |
| Sensitivity analysis mean (excluding Qutieshat [23]) | not applicable | not applicable | +13.60 |
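The summary rows of the table above are unweighted arithmetic means of the per-study percentage-point differences. The Python sketch below recomputes them from the tabulated accuracies. It assumes the subgroup split LLM = [20,23] and CNN = [21,22,24], and it assumes half-up rounding to two decimals. The rounding rule is an assumption: the exact LLM mean is 20.625, and half-up rounding reproduces the reported +20.63. Exact `Decimal` arithmetic is used so that binary floating-point error cannot shift a value that sits on a rounding boundary.

```python
from decimal import Decimal, ROUND_HALF_UP

# (AI accuracy %, student accuracy %, model family), copied from the table above.
studies = {
    "Durmazpinar [20]": (Decimal("91.38"), Decimal("70.77"), "LLM"),
    "Basavanna [21]": (Decimal("85.0"), Decimal("75.4"), "CNN"),
    "Jin [22]": (Decimal("86.70"), Decimal("74.00"), "CNN"),
    "Qutieshat [23]": (Decimal("99.0"), Decimal("78.36"), "LLM"),
    "Ramezanzade [24]": (Decimal("78.00"), Decimal("66.50"), "CNN"),
}

# Per-study difference in percentage points (AI minus students), exact in Decimal.
diffs = {name: ai - student for name, (ai, student, _) in studies.items()}


def mean_pp(values) -> Decimal:
    """Unweighted mean, rounded half-up to two decimals (assumed rounding rule)."""
    values = list(values)
    avg = sum(values, Decimal(0)) / len(values)
    return avg.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


summary = {
    "all five": mean_pp(diffs.values()),
    "LLM": mean_pp(d for n, d in diffs.items() if studies[n][2] == "LLM"),
    "CNN": mean_pp(d for n, d in diffs.items() if studies[n][2] == "CNN"),
    "excluding Qutieshat": mean_pp(
        d for n, d in diffs.items() if not n.startswith("Qutieshat")
    ),
}

for label, value in summary.items():
    print(f"{label}: +{value} pp")
```

These simple means give every study equal weight, whatever its sample size. They are descriptive and do not replace the inverse-variance pooled estimates.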

| Study | Participant Selection | Index Test (Conduct/Blinding; Prespecified Threshold) | Reference Standard | Flow & Timing | Overall |
|---|---|---|---|---|---|
| Durmazpinar 2025 [20] | Unclear | Low | Low | Low | Low |
| Basavanna 2025 [21] | Unclear | Unclear | Low | Low | Moderate |
| Jin 2024 [22] | Low | Low | Unclear | Low | Low |
| Qutieshat 2024 [23] | Unclear | Low | Low | Low | Low |
| Ramezanzade 2023 [24] | Unclear | Unclear | Unclear | Low | Moderate |

| Outcome | Studies Contributing | Effect Estimate (SMD, 95% CI) | Certainty (GRADE) | Footnotes |
|---|---|---|---|---|
| Diagnostic accuracy (AI vs. students), primary meta-analysis | k = 2 (studies [20,23]) | Hedges g = 1.48 (95% CI 0.60–2.36) | Moderate (⨁⨁⨁◯) | Downgraded for inconsistency (I² ≈ 84%) a; not downgraded for imprecision b; no serious risk-of-bias or indirectness concerns c; publication bias not assessed (k < 10). |
| Diagnostic accuracy (AI vs. students), sensitivity analysis (incl. SD imputation) | k = 3 (adds [21]) | Hedges g = 1.45 (95% CI 0.77–2.14) | Moderate (⨁⨁⨁◯) | Same judgment; I² ≈ 77%. Large and consistent direction of effect across all five student-based studies supports upgrading one level for magnitude d. |
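The effect estimates in the GRADE table are pooled Hedges g values with I² heterogeneity. This section does not describe the pooling model. The Python sketch below therefore assumes independent-group summary statistics and the common DerSimonian-Laird random-effects estimator, with I² derived from Cochran's Q. The authors' software or estimator may differ. The function names and all input numbers are hypothetical.

```python
import math
from typing import NamedTuple


class Effect(NamedTuple):
    g: float    # bias-corrected standardized mean difference (Hedges g)
    var: float  # approximate sampling variance of g


class Pooled(NamedTuple):
    estimate: float
    ci_low: float
    ci_high: float
    tau2: float  # between-study variance
    i2: float    # heterogeneity, percent


def hedges_g(m1: float, sd1: float, n1: int,
             m2: float, sd2: float, n2: int) -> Effect:
    """Hedges g for group 1 (e.g., AI) minus group 2 (e.g., students)."""
    df = n1 + n2 - 2
    sd_pooled = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    d = (m1 - m2) / sd_pooled                 # Cohen's d
    j = 1 - 3 / (4 * df - 1)                  # small-sample correction factor J
    var_d = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    return Effect(g=j * d, var=j**2 * var_d)


def dersimonian_laird(effects: list[Effect], z: float = 1.96) -> Pooled:
    """Inverse-variance random-effects pooling with the DerSimonian-Laird tau^2."""
    w = [1 / e.var for e in effects]          # fixed-effect weights
    sum_w = sum(w)
    fixed = sum(wi * e.g for wi, e in zip(w, effects)) / sum_w
    q = sum(wi * (e.g - fixed) ** 2 for wi, e in zip(w, effects))  # Cochran's Q
    df = len(effects) - 1
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    w_star = [1 / (e.var + tau2) for e in effects]  # random-effects weights
    est = sum(wi * e.g for wi, e in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return Pooled(est, est - z * se, est + z * se, tau2, i2)


# Hypothetical summary statistics (mean accuracy %, SD, n); NOT data from this review.
study_a = hedges_g(90.0, 8.0, 30, 72.0, 12.0, 60)
study_b = hedges_g(95.0, 5.0, 30, 80.0, 15.0, 50)
res = dersimonian_laird([study_a, study_b])
print(f"g = {res.estimate:.2f} (95% CI {res.ci_low:.2f} to {res.ci_high:.2f}), "
      f"tau2 = {res.tau2:.3f}, I2 = {res.i2:.0f}%")
```

With only k = 2 or 3 studies, as in the analyses above, τ² and I² are estimated very imprecisely. Intervals from this kind of model should therefore be read cautiously.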

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ardila, C.M.; Pineda-Vélez, E.; Vivares-Builes, A.M. Artificial Intelligence in Endodontic Education: A Systematic Review with Frequentist and Bayesian Meta-Analysis of Student-Based Evidence. Dent. J. 2025, 13, 489. https://doi.org/10.3390/dj13110489
