Identification of Model Particle Mixtures Using Machine-Learning-Assisted Laser Diffraction

Abstract

1. Introduction

2. Experimental Methods

2.1. Setup and Data Acquisition

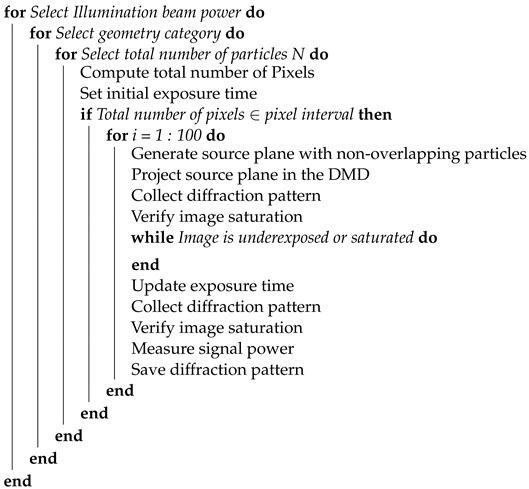

Algorithm 1: Pseudo-code of experimental data collection.

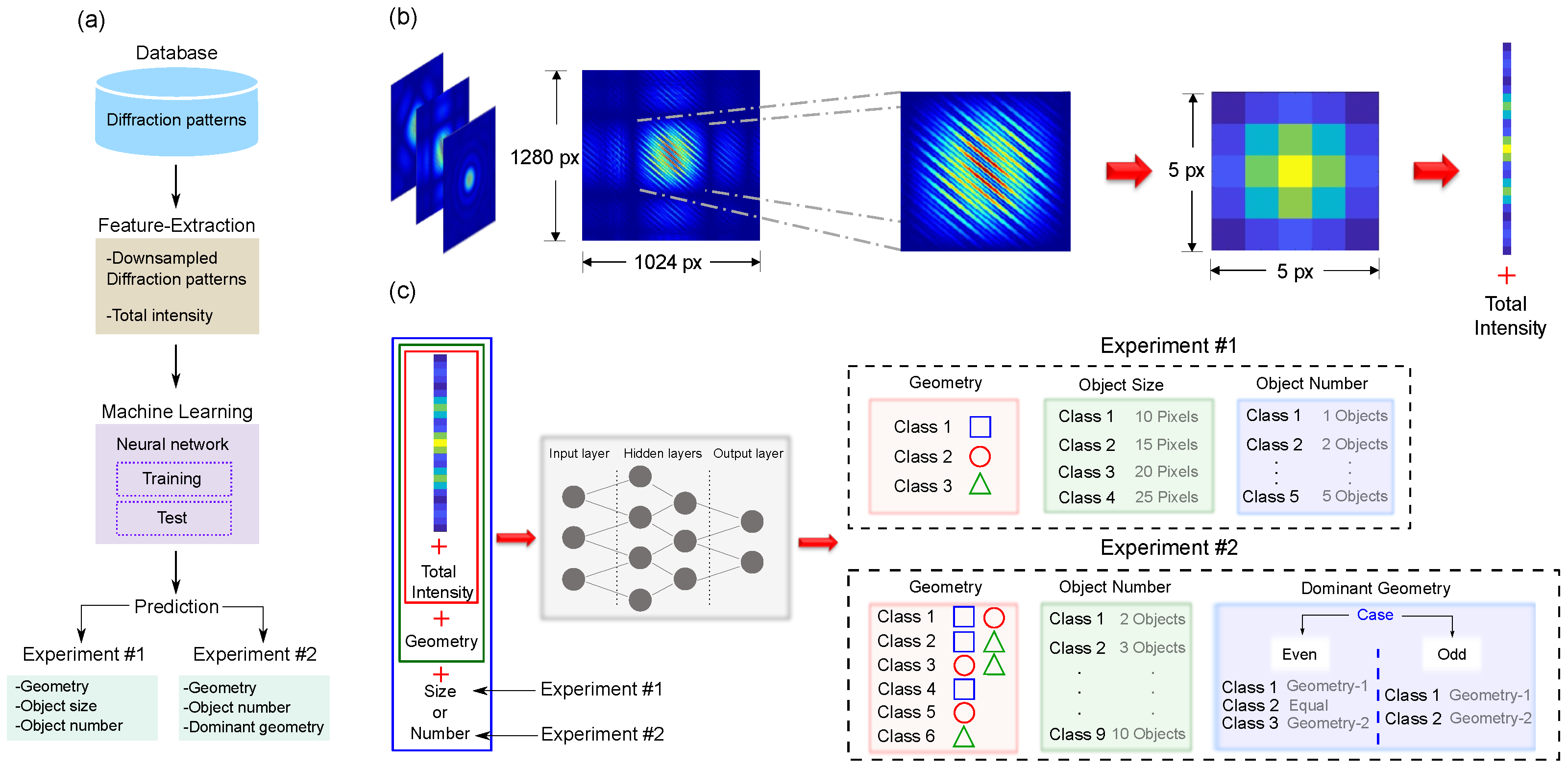

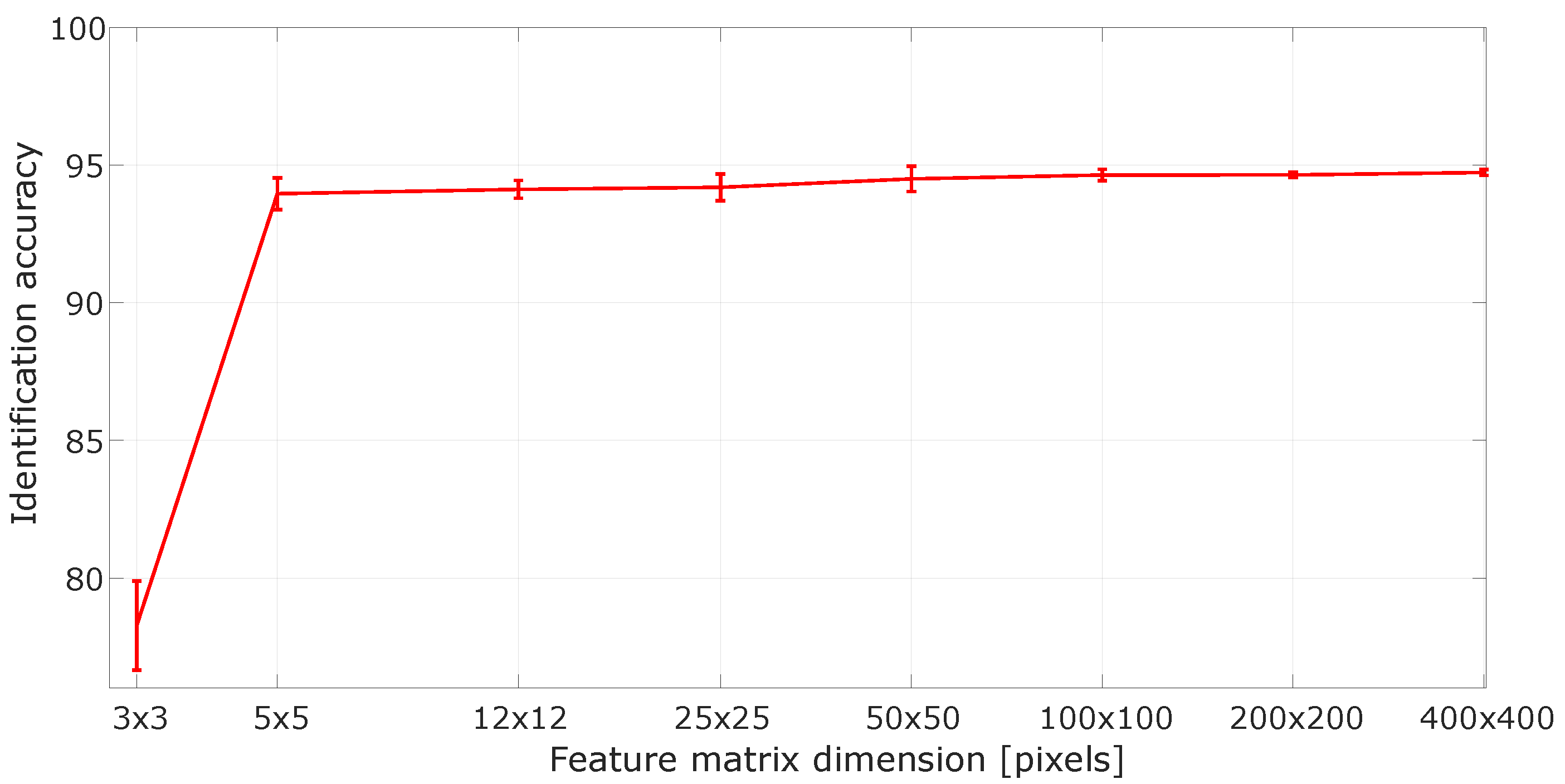
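The link between a displayed model particle and its recorded diffraction pattern can be illustrated with the Fraunhofer (far-field) approximation. In that approximation the diffracted intensity is proportional to the squared modulus of the two-dimensional Fourier transform of the aperture. The sketch below assumes an idealized binary amplitude mask (for example, a pattern of "on" pixels such as a DMD could display), uniform plane-wave illumination and detection in the far field. The helpers `disk_mask`, `square_mask` and `fraunhofer_intensity` are hypothetical and are not taken from the authors' setup or code.

```python
import numpy as np


def disk_mask(n, radius):
    """Hypothetical model particle: a filled disk of 'on' pixels centered in an n x n grid."""
    y, x = np.mgrid[:n, :n]
    c = n // 2
    return ((x - c) ** 2 + (y - c) ** 2 <= radius ** 2).astype(np.uint8)


def square_mask(n, side):
    """Hypothetical model particle: a filled square of 'on' pixels centered in an n x n grid."""
    m = np.zeros((n, n), dtype=np.uint8)
    start = (n - side) // 2
    m[start:start + side, start:start + side] = 1
    return m


def fraunhofer_intensity(mask):
    """Far-field intensity of a binary amplitude mask (Fraunhofer approximation).

    The far-field amplitude is taken as the 2D discrete Fourier transform of the
    mask; fftshift moves the zero-order term to the array center. Physical scaling
    (wavelength, focal length, pixel pitch) and normalization are omitted.
    """
    field = np.fft.fftshift(np.fft.fft2(mask.astype(float)))
    return np.abs(field) ** 2
```

Because the mask is non-negative, the zero-order peak at the center of the shifted pattern is always the brightest point and equals the square of the number of "on" pixels. The shape information lies in the surrounding lobes, which differ between a disk and a square.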

2.2. Neural Network Architecture and Processing

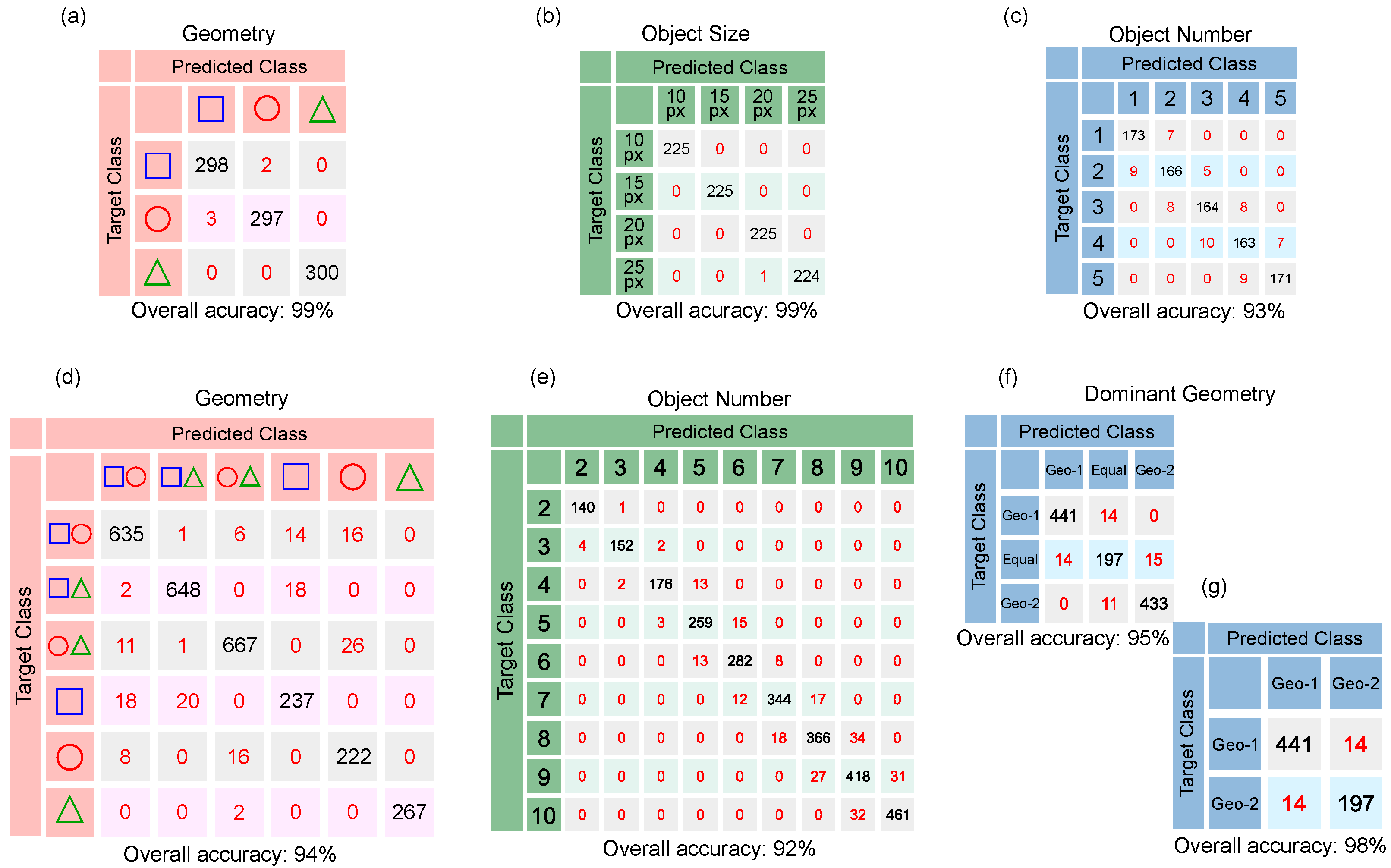

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DMD | Digital Micromirror Device |
| SLS | Static Light Scattering |
| DLS | Dynamic Light Scattering |
| STA | Scattering Tracking Analysis |
| LD | Laser Diffraction |
| NN | Neural Network |
| ML | Machine Learning |
| CCD | Charge-Coupled Device |


| Number of Pixels | Power [W] |
|---|---|
| ≤500 | 200 |
| 501–1000 | 100 |
| 1001–1500 | 50 |
| 1501–3000 | 25 |
| ≥3001 | 15 |
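The table above assigns a power setting to each range of pixel counts. The following minimal sketch encodes it as a lookup. It assumes that "Number of Pixels" is a non-negative integer count (for instance, of active pixels in a displayed pattern, which this section does not state) and that the ranges are inclusive exactly as written. The function name `power_for_pixels` is hypothetical.

```python
from bisect import bisect_left

# Inclusive upper bound of each pixel-count range in the table; the final
# range (>= 3001) is open-ended, so it has no upper bound here.
_UPPER_BOUNDS = [500, 1000, 1500, 3000]
# Power [W] listed for each range, in the same order (one extra entry for >= 3001).
_POWERS_W = [200, 100, 50, 25, 15]


def power_for_pixels(n_pixels: int) -> int:
    """Return the power [W] the table lists for a given number of pixels."""
    if n_pixels < 0:
        raise ValueError("number of pixels must be non-negative")
    # bisect_left returns the first range whose inclusive upper bound is >= n_pixels.
    return _POWERS_W[bisect_left(_UPPER_BOUNDS, n_pixels)]
```

Using `bisect_left` on the inclusive upper bounds keeps the boundary values (500, 1000, 1500, 3000) in the lower range, which matches the table.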

| Experiment | Neural Network | Accuracy | Number of Hidden Layers | Number of Neurons per Layer |
|---|---|---|---|---|
| 1 | Geometry | 99% | 1 | 5 |
| | Object Size | 99% | 1 | 5 |
| | Object Number | 93% | 2 | Layer 1 = 20; Layer 2 = 5 |
| 2 | Geometry | 94% | 2 | Layer 1 = 30; Layer 2 = 20 |
| | Object Number | 92% | 2 | Layer 1 = 80; Layer 2 = 50 |
| | Dominant geometry (even) | 95% | 2 | Layer 1 = 30; Layer 2 = 20 |
| | Dominant geometry (odd) | 98% | 2 | Layer 1 = 30; Layer 2 = 20 |
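The accuracy table lists one or two hidden layers per network. The sketch below builds fully connected networks with those hidden-layer sizes and computes class probabilities and accuracy. The input dimension, number of output classes, tanh hidden activations, softmax output and weight initialization are assumptions for illustration, since they are not specified here. The code shows only an untrained forward pass and is not the authors' training procedure.

```python
import numpy as np

# Hidden-layer sizes transcribed from the table: (experiment, network) -> sizes.
HIDDEN_LAYERS = {
    (1, "Geometry"): [5],
    (1, "Object Size"): [5],
    (1, "Object Number"): [20, 5],
    (2, "Geometry"): [30, 20],
    (2, "Object Number"): [80, 50],
    (2, "Dominant geometry (even)"): [30, 20],
    (2, "Dominant geometry (odd)"): [30, 20],
}


def init_mlp(n_inputs, hidden, n_classes, rng):
    """Random (untrained) weights and zero biases for n_inputs -> hidden -> n_classes."""
    sizes = [n_inputs, *hidden, n_classes]
    return [
        (rng.normal(0.0, 1.0 / np.sqrt(a), size=(a, b)), np.zeros(b))
        for a, b in zip(sizes[:-1], sizes[1:])
    ]


def n_parameters(params):
    """Total number of weights and biases."""
    return sum(W.size + b.size for W, b in params)


def forward(params, x):
    """Class probabilities: tanh hidden layers (assumed) and a softmax output."""
    h = x
    for W, b in params[:-1]:
        h = np.tanh(h @ W + b)
    W, b = params[-1]
    z = h @ W + b
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def accuracy(probs, labels):
    """Fraction of samples whose most probable class equals the true label."""
    return float(np.mean(np.argmax(probs, axis=1) == labels))
```

With 100 inputs and 3 classes (both hypothetical), the experiment 2 geometry network (30 and 20 hidden neurons) has 100×30 + 30 + 30×20 + 20 + 20×3 + 3 = 3713 trainable parameters.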

| Experiment | Neural Network | Training [s] | Test [s] |
|---|---|---|---|
| 1 | Geometry | ± | ± |
| | Object Size | ± | ± |
| | Object Number | ± | ± |
| 2 | Geometry | 4.7212 ± | ± |
| | Object Number | ± 4.6636 | ± |
| | Dominant geometry (even) | 1.045 ± | ± |
| | Dominant geometry (odd) | ± | ± |
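Most of the timing values in the table above were lost in this copy of the article, but the entries follow a "value ± spread" format. Assuming the spread is a standard deviation over repeated runs (this is not stated here), timings of that form could be collected as in the following sketch. `time_repeated` and `format_mean_std` are hypothetical helpers.

```python
import statistics
import time


def time_repeated(fn, repeats=10):
    """Call fn() `repeats` times; return (mean, sample std) of wall-clock seconds."""
    if repeats < 2:
        raise ValueError("need at least two runs for a standard deviation")
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations), statistics.stdev(durations)


def format_mean_std(mean, std, digits=4):
    """Format a timing as 'mean ± std' with a fixed number of decimals."""
    return f"{mean:.{digits}f} ± {std:.{digits}f}"
```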

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Villegas, A.; Quiroz-Juárez, M.A.; U’Ren, A.B.; Torres, J.P.; León-Montiel, R.d.J. Identification of Model Particle Mixtures Using Machine-Learning-Assisted Laser Diffraction. Photonics 2022, 9, 74. https://doi.org/10.3390/photonics9020074
