1. Introduction

The proliferation of social media (SM) has fundamentally reshaped human interaction, creating unprecedented opportunities for connection and communication [1,2]. However, this digital landscape has also cultivated a pervasive dark side: cyberbullying. Defined as intentional and repeated harm inflicted through electronic text and media, cyberbullying has emerged as a significant societal problem with severe psychological consequences for its victims, including depression, anxiety, and social isolation [3,4]. The sheer volume and velocity of online content make manual moderation untenable, creating a critical need for automated systems that can accurately and reliably detect harmful language in real time [5,6].

Early attempts at automated detection relied on keyword matching and traditional machine learning models, but these methods were often brittle and failed to grasp the contextual nuances of human language [7,8,9]. The advent of deep learning, particularly the development of large pre-trained language models (PLMs) based on the Transformer architecture, marked a paradigm shift [10,11]. Models like BERT (Bidirectional Encoder Representations from Transformers) [12] and its successor, RoBERTa [13], established new standards in natural language understanding by learning deep contextual relationships between words, becoming the de facto foundation for a wide array of NLP tasks, including cyberbullying detection [14,15,16,17].

Despite their success, the standard fine-tuning approach for these powerful models, which typically adds a simple linear classification layer, has its own limitations [18]. This approach may not fully leverage the rich sequential information encoded in the transformer’s outputs or effectively focus on the most toxic parts of a sentence, especially in cases of sarcasm [19], indirect aggression [20], or long-form harassment [21]. A significant research gap therefore exists in developing more sophisticated architectures that can better interpret the contextual and sequential patterns specific to harmful online language [1].

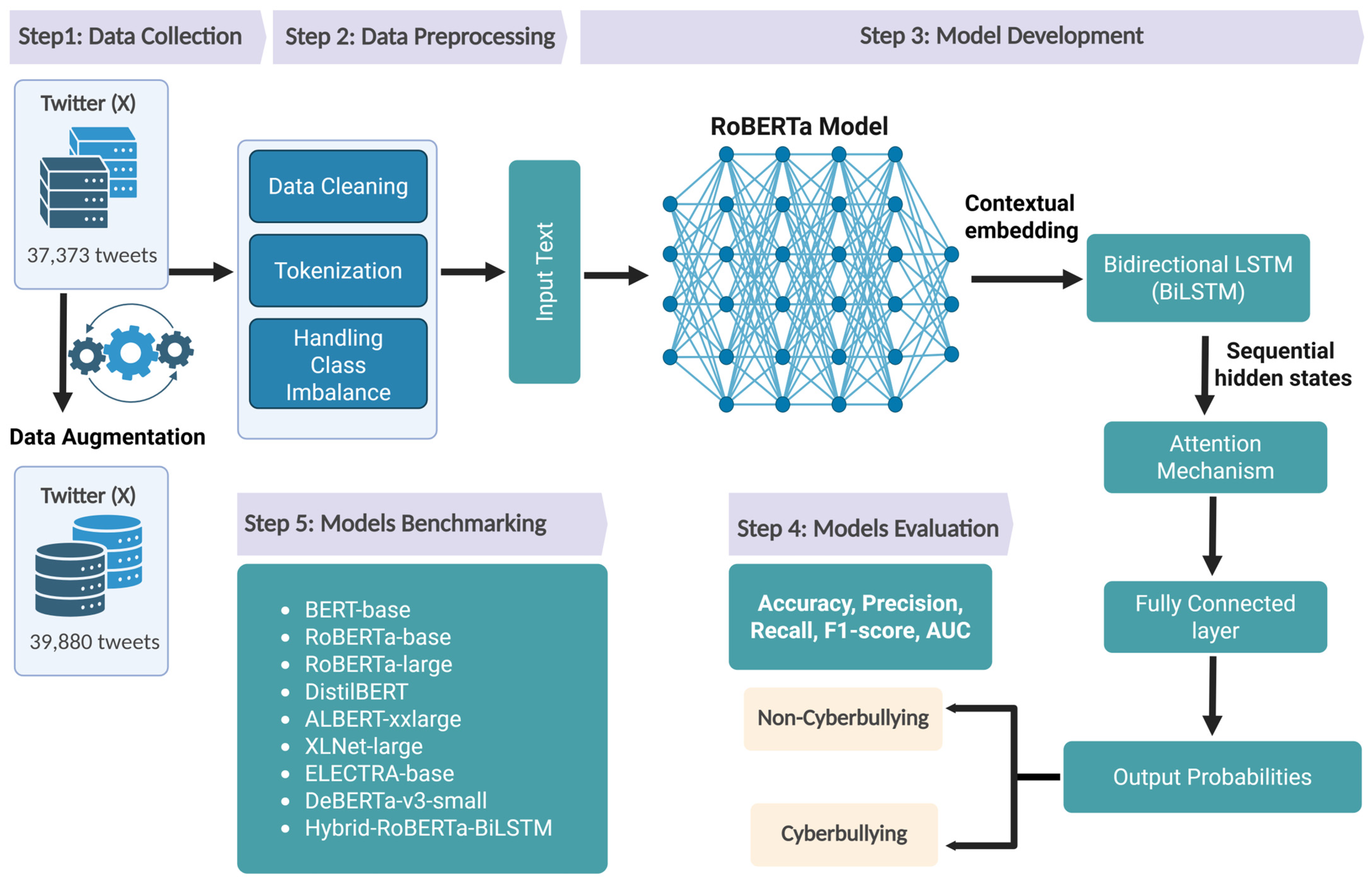

To address these shortcomings, this study introduces a novel hybrid deep learning architecture, the Hybrid-RoBERTa-BiLSTM-Attention model. Our aim is to develop and validate a more powerful and nuanced detection system by combining the strengths of multiple neural components. The primary contributions of this work are threefold:

We propose a novel hybrid architecture, the Hybrid-RoBERTa-BiLSTM-Attention model, that synergizes a pre-trained RoBERTa encoder with a BiLSTM to capture long-range sequential dependencies and an attention mechanism to focus on the most discriminative features for classification.

We introduce a methodologically rigorous optimization process using the Optuna framework to systematically tune the model’s hyperparameters, ensuring the robustness and reproducibility of our findings.

We conduct a comprehensive benchmark against eight state-of-the-art models, demonstrating that our proposed architecture achieves new state-of-the-art performance and offers an optimal balance between predictive accuracy and computational efficiency.

The remainder of this paper is organized as follows. Section 2 provides a review of related work in automated cyberbullying detection and relevant deep learning architectures. Section 3 details our proposed methodology, including the data preprocessing techniques, the architecture of the Hybrid-RoBERTa-BiLSTM-Attention model, and the systematic hyperparameter optimization process. Section 4 presents the experimental results, offering a comprehensive performance analysis of our model and a comparative benchmark against state-of-the-art baselines. Finally, Section 5 concludes the paper by summarizing our findings, discussing their implications, and suggesting directions for future research.

2. Related Works

The rise of SM and online communication platforms has led to an alarming increase in cyberbullying incidents, causing significant psychological and emotional harm to individuals [1,5]. This pervasive issue has spurred researchers to explore automated methods for detecting cyberbullying to enhance online safety. However, detecting cyberbullying remains challenging due to the nuanced and context-dependent nature of online language, which often involves sarcasm, slang, and cultural references that complicate automated detection [14].

Early efforts in cyberbullying detection focused on keyword-based filtering and rule-based approaches. These traditional methods, while straightforward, struggled to capture the complexities of online communication. For instance, a basic keyword-based system might detect offensive words but fail to consider context, leading to both false positives and false negatives. Studies such as those by Dinakar et al. [22] and Nahar et al. [23] explored these initial approaches but found them insufficient for handling the nuanced language of SM, especially for cyberbullying, where context is critical. The limitations of keyword-based detection led to the adoption of machine learning (ML) models, which provided more flexibility by learning from data rather than relying solely on predefined rules. Traditional ML algorithms such as Support Vector Machines (SVMs) [24] and logistic regression were employed to improve cyberbullying detection by considering various textual features. Although these models improved on keyword filtering, they required hand-crafted features, which limited their adaptability and performance in dynamic online environments. Additionally, these models were prone to generalization issues, as they struggled to capture the contextual and sequential nature of language [25].
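To make the context problem concrete, the snippet below implements a deliberately naive, substring-based keyword filter in Python. The blocklist and example messages are hypothetical illustrations, not material from the studies cited above; they show how such a filter can flag praise that happens to contain a blocked substring (a false positive) while missing a hostile message that avoids blocked words (a false negative).

```python
# Hypothetical blocklist for illustration only; real lexicons are far larger.
BLOCKLIST = {"idiot", "loser", "kill"}


def keyword_flag(text: str) -> bool:
    """Naive filter: flag a message if any blocked term appears as a substring.

    No context is used, so word sense, sarcasm, and intent are all ignored.
    """
    lowered = text.lower()
    return any(term in lowered for term in BLOCKLIST)


examples = [
    "You absolutely killed it on stage tonight!",    # praise -> false positive
    "You're such a loser, nobody wants you here.",   # bullying -> true positive
    "Nobody would even notice if you disappeared.",  # bullying -> false negative
]

for message in examples:
    print(keyword_flag(message), "|", message)
```

Both failure modes stem from the same cause: the decision depends only on surface strings, not on how the words are used.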

With the advent of deep learning, researchers shifted towards neural networks, particularly Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), to enhance cyberbullying detection. CNNs, known for their ability to detect local patterns, were initially applied to sentiment analysis [26] and later extended to cyberbullying detection tasks [27]. RNNs, especially Long Short-Term Memory (LSTM) networks, proved effective in capturing sequential dependencies, as seen in the work of Agrawal and Awekar [28]. These models provided a significant leap forward by enabling automatic feature extraction, but they still struggled to capture long-range dependencies, a critical factor in understanding context-dependent bullying language.

Suliman et al. [29] introduced a stacking ensemble learning approach that combines multiple deep neural network methods, along with an enhanced BERT model (BERT-M), to detect cyberbullying on SM platforms such as X (formerly known as Twitter) and Facebook. The dataset was preprocessed to remove irrelevant information, and word2vec with Continuous Bag of Words (CBOW) was used for feature extraction. The stacked model achieved an F1-score of 0.964, a precision of 0.950, and a recall of 0.92, with a detection time of 3 min. The authors in [30] combined word embeddings with a CNN for cyberbullying detection in SM text, achieving an accuracy of 94.2% on Twitter data. Al-Ajlan and Ykhlef [31] employed a CNN enhanced by a metaheuristic optimization algorithm for cyberbullying classification, using a dataset of 20,000 randomly selected tweets. Zhang et al. [32] introduced a pronunciation-based CNN to detect cyberbullying, drawing on datasets from Twitter and Formspring. Zhao and Mao [33] developed a text-based detection method that extracted cyberbullying features using marginalized stacked denoising autoencoders, a variant of stacked denoising autoencoders, with experiments on Twitter and MySpace data. Lu et al. [34] implemented a character-level CNN with shortcut connections to detect cyberbullying in both Chinese and English, performing their experiments on the Chinese Weibo and English Tweet datasets. Lastly, Kumari and Singh [35] analyzed multimodal data, integrating both text and images to detect cyberbullying content in a dataset of 2100 posts, each consisting of an image accompanied by a comment.

Despite these advancements, both CNNs and RNNs faced challenges in handling long-term dependencies [36] and complex language constructs [26]. Sarcasm, cultural references, and implicit offensive language often went undetected, as these models were limited by their inability to fully understand context over long sequences. This gap in detecting nuanced language led to the adoption of Transformer-based architectures, which could overcome these limitations by utilizing self-attention mechanisms to model relationships across entire sentences or even larger text bodies.

Transformer models, introduced by Vaswani et al. [10], revolutionized NLP by implementing a self-attention mechanism that allowed for efficient handling of dependencies between words. The BERT model, introduced by Devlin et al. [12], further advanced this approach by incorporating bidirectional context, allowing it to understand each word based on both preceding and succeeding words in a sentence. BERT’s success in various NLP tasks has made it a popular choice for cyberbullying detection. Paul and Saha [37] employed a pre-trained BERT model for cyberbullying identification using three corpora: Formspring (12k posts), Twitter (16k posts), and Wikipedia (100k posts). Their findings show that BERT outperforms slot-gated or attention-based deep learning models. Similarly, studies by Mishra et al. [38] and Muneer et al. [1] demonstrated that BERT outperformed traditional and deep learning models in detecting harmful language due to its robust contextual comprehension. However, the standard fine-tuning paradigm, which typically adds a simple linear layer on top of the transformer’s output, may not be fully optimized for the intricate task of cyberbullying detection. This approach can underutilize the rich sequential information encoded by the transformer and may fail to dynamically focus on the most salient, toxic cues within a text.
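For reference, the scaled dot-product self-attention of Vaswani et al. [10] can be written as follows, where $X \in \mathbb{R}^{n \times d}$ stacks the $n$ token representations, $W^{Q}$, $W^{K}$, and $W^{V}$ are learned projection matrices, and $d_k$ is the key dimension. Each row of the softmax output gives the weights with which one token attends to every token in the sequence; multi-head attention runs several such projections in parallel and concatenates their outputs.

```latex
\begin{aligned}
Q &= XW^{Q}, \qquad K = XW^{K}, \qquad V = XW^{V} \\
\operatorname{Attention}(Q, K, V) &= \operatorname{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right) V
\end{aligned}
```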

As the summary in Table 1 indicates, while the field has progressed to powerful transformer models, a significant research gap persists in the architectural design of the classification head: the simple linear layer commonly used when fine-tuning BERT and RoBERTa underutilizes the encoder’s sequential information and lacks a dynamic mechanism for focusing on salient toxic cues. To address these shortcomings and advance the state of the art, this study makes the following primary contributions. First, we propose a hybrid architecture that combines the contextual embeddings of RoBERTa with a BiLSTM network to capture sequential dependencies and an attention mechanism to focus on the most salient features. Second, we employ a systematic hyperparameter optimization strategy using the Optuna framework to obtain methodologically rigorous and reproducible results.

4. Experimental Results and Discussion

In this section, we present the empirical results of our study. We first detail the experimental setup, followed by a comprehensive evaluation of our proposed model’s performance. We then conduct a comparative analysis against established baseline models and conclude with a discussion of the key findings.

4.1. Experimental Setup

All experiments were conducted on a high-performance computing (HPC) node equipped with two NVIDIA H100 GPUs, each providing 80 GB of HBM3 memory. This hardware configuration allowed for the implementation of complex architectures and the use of large batch sizes. The software stack was built on a Linux environment with CUDA 12.2, PyTorch 2.3, and the Hugging Face Transformers library (version 4.42.0). Our proposed Hybrid-RoBERTa-BiLSTM-Attention model and all baseline models were implemented using this stack. Fine-tuning was accelerated using mixed-precision (FP16) computation to improve throughput. To prevent overfitting, we employed early stopping with a patience of one epoch, monitoring the F1-score on the validation set. Key hyperparameters for the proposed model, such as the learning rate, LSTM dimensions, and dropout rate, were systematically determined using the Optuna framework, as detailed in Section 3.3.1. The final parameters used for fine-tuning are summarized in Table 3 for full reproducibility.
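The early-stopping rule described above can be sketched framework-free as follows. This is a minimal illustration that assumes "patience of one epoch" means training halts after the first epoch whose validation F1 fails to exceed the best value seen so far, with the best checkpoint retained; the F1 history is hypothetical and not taken from the training logs of this study.

```python
from typing import Sequence, Tuple


def early_stopping(val_f1: Sequence[float], patience: int = 1) -> Tuple[int, int]:
    """Return (best_epoch, stop_epoch), both 1-indexed.

    Training stops once `patience` consecutive epochs fail to improve on the
    best validation F1 seen so far; the checkpoint from best_epoch is kept.
    """
    best_f1, best_epoch, epochs_without_gain = float("-inf"), 0, 0
    for epoch, f1 in enumerate(val_f1, start=1):
        if f1 > best_f1:
            best_f1, best_epoch, epochs_without_gain = f1, epoch, 0
        else:
            epochs_without_gain += 1
            if epochs_without_gain >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_f1)  # ran out of epochs without triggering a stop


# Hypothetical per-epoch validation F1 values, for illustration only.
history = [0.921, 0.944, 0.952, 0.950, 0.955]
print(early_stopping(history, patience=1))  # (3, 4)
print(early_stopping(history, patience=2))  # (5, 5)
```

With a patience of one, the single dip at epoch 4 ends training, even though epoch 5 would have improved; a larger patience trades extra training time for tolerance to such noise. In the Hugging Face Trainer, comparable behavior is commonly configured with `EarlyStoppingCallback(early_stopping_patience=1)` together with `metric_for_best_model` and `load_best_model_at_end=True` in `TrainingArguments`; this section does not state whether that callback or a custom loop was used.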

4.2. Performance of the Proposed Model

To validate the training stability and generalization capability of our proposed model, we present the learning curves in Figure 4. The training and validation loss curves decrease steadily before converging, while the accuracy curves increase correspondingly and plateau as the model reaches its best performance. The small, stable gap between the training and validation curves indicates that dropout and early stopping were effective in preventing overfitting, suggesting that the model generalizes well to unseen data.

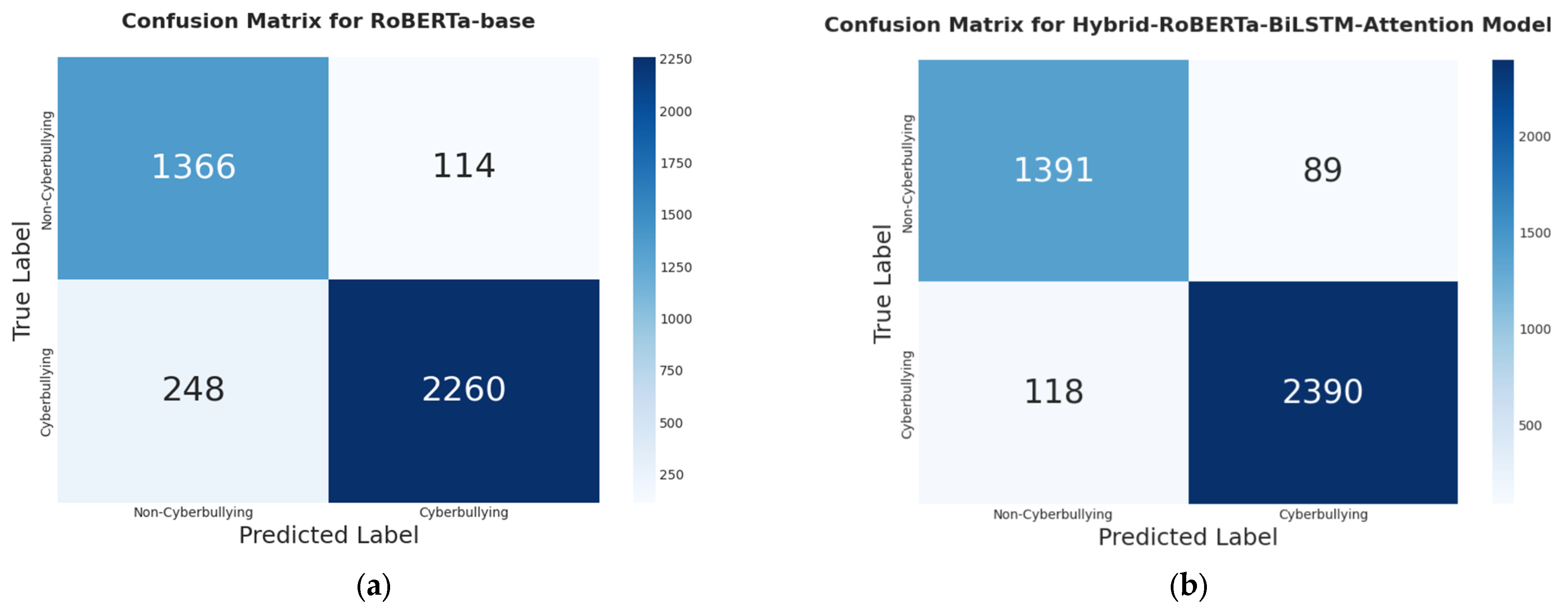

Our proposed Hybrid-RoBERTa-BiLSTM-Attention model achieved exceptional performance on the held-out test set, demonstrating its effectiveness for the complex task of cyberbullying classification. The integration of the BiLSTM and attention mechanism on top of RoBERTa’s contextual embeddings proved highly successful in identifying the nuanced patterns characteristic of cyberbullying. As detailed in Table 4, the model achieved an overall accuracy of 94.8%, an F1-score of 95.8%, and an AUC of 98.5%, indicating strong discriminative ability between classes. The model’s effectiveness is further illustrated by its confusion matrix, shown in Figure 5b. The high counts of true positives (2390) and true negatives (1391) relative to the low numbers of false negatives (118) and false positives (89) confirm the model’s high degree of reliability. The well-balanced precision (96.4%) and recall (95.3%) indicate that the architecture correctly identifies the large majority of cyberbullying instances while misclassifying only a small share of benign content (89 of 1480 non-cyberbullying samples, about 6%).
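As a consistency check, the headline metrics can be recomputed from the four confusion-matrix counts reported above, treating cyberbullying as the positive class ($TP = 2390$, $TN = 1391$, $FP = 89$, $FN = 118$, for 3988 test samples in total):

```latex
\begin{aligned}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} = \frac{3781}{3988} \approx 0.948 \\
\text{Precision} &= \frac{TP}{TP + FP} = \frac{2390}{2479} \approx 0.964 \\
\text{Recall}    &= \frac{TP}{TP + FN} = \frac{2390}{2508} \approx 0.953 \\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
     = \frac{2\,TP}{2\,TP + FP + FN} = \frac{4780}{4987} \approx 0.9585 \\
\text{FPR} &= \frac{FP}{FP + TN} = \frac{89}{1480} \approx 0.060
\end{aligned}
```

The recomputed F1-score (0.9585) matches the value used in the benchmark comparison of Section 4.3.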

Furthermore, the hybrid architecture’s integration of bidirectional LSTM layers with multi-head attention mechanisms enabled robust capture of both lexical patterns (via RoBERTa embeddings) and contextual escalation cues (via sequential modeling), reflected in its class-leading AUC of 98.5%, as shown in Figure 6.

To provide a more detailed breakdown of the model’s performance on a per-class basis, Table 5 presents the full classification report. The report shows that the model achieves a high F1-score for both the minority “non-cyberbullying” class (0.931) and the majority “cyberbullying” class (0.958). This demonstrates that our model is not biased towards one class and performs robustly across the entire dataset, effectively distinguishing between the two categories. The support values also confirm that the evaluation was conducted on a sufficient number of samples for each class.
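The per-class figures can be derived from the same confusion matrix by swapping the roles of the two classes. The sketch below is a framework-free illustration (the function names are our own, not from the code used in this study) that reproduces the two F1-scores quoted above; scikit-learn's `classification_report` produces the same kind of table directly from label vectors.

```python
def prf(tp: int, fp: int, fn: int) -> tuple:
    """Precision, recall, and F1 for one class treated as 'positive'."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def per_class_report(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Rows of (precision, recall, f1, support) in classification-report style."""
    rows = {
        # Positive class: cyberbullying.
        "cyberbullying": (*prf(tp, fp, fn), tp + fn),
        # Negative class: TN plays the role of TP, FN of FP, and FP of FN.
        "non-cyberbullying": (*prf(tn, fn, fp), tn + fp),
    }
    classes = list(rows.values())
    n = sum(row[3] for row in classes)
    rows["macro avg"] = tuple(sum(r[i] for r in classes) / len(classes) for i in range(3)) + (n,)
    rows["weighted avg"] = tuple(sum(r[i] * r[3] for r in classes) / n for i in range(3)) + (n,)
    return rows


# Counts from the confusion matrix in Figure 5b (positive class = cyberbullying).
report = per_class_report(tp=2390, tn=1391, fp=89, fn=118)
for label, (p, r, f1, support) in report.items():
    print(f"{label:>18}  P={p:.3f}  R={r:.3f}  F1={f1:.3f}  support={support}")
```

The support column (2508 cyberbullying vs. 1480 non-cyberbullying samples) makes the class imbalance explicit, which is why the macro and weighted averages differ slightly.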

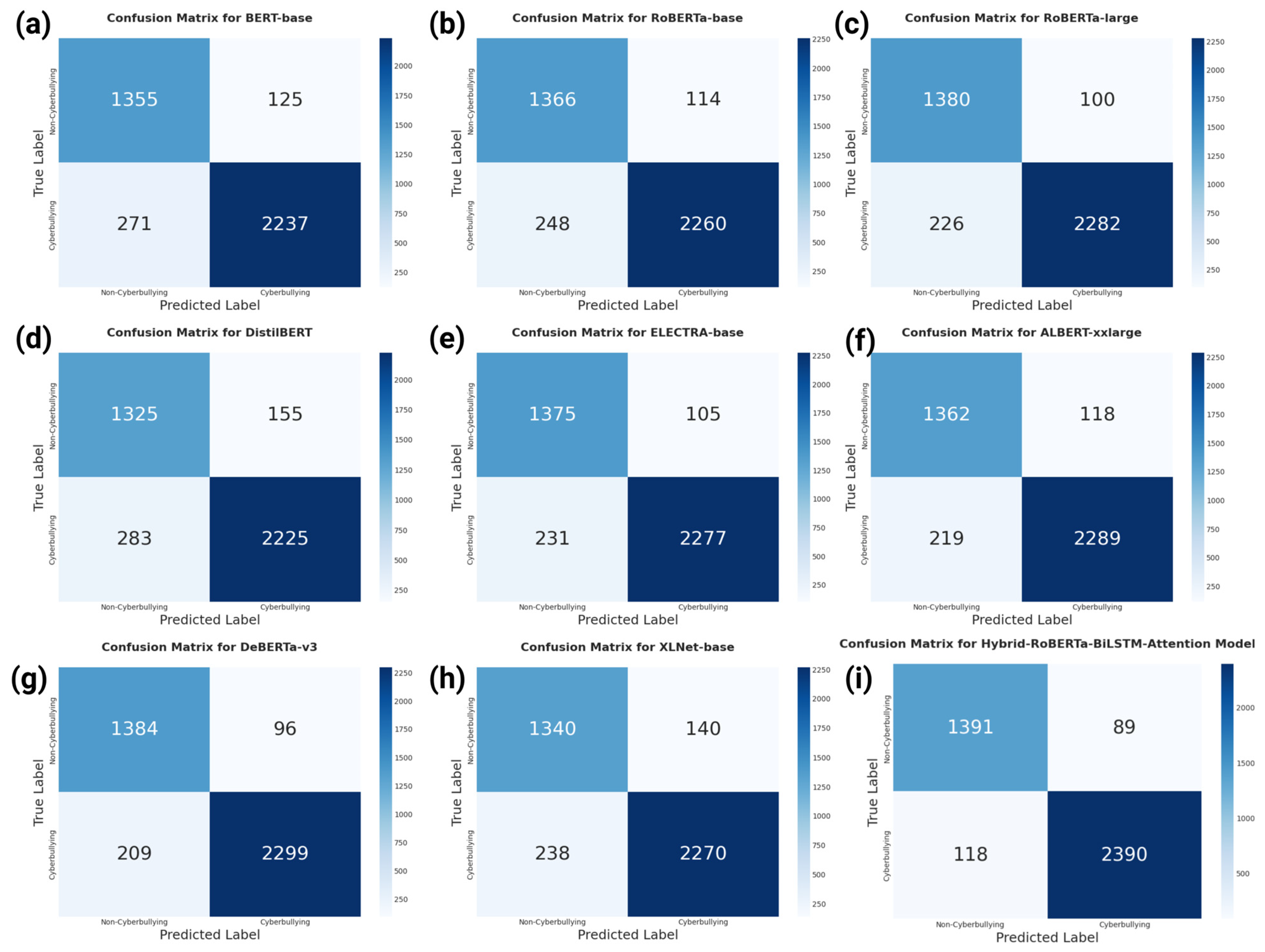

4.3. Comparative Analysis and Benchmarking with State-of-the-Art Models

To establish the performance of our proposed model relative to the state of the art (SOTA), we conducted a rigorous benchmark against eight leading transformer architectures: BERT-base, RoBERTa-base, RoBERTa-large, DistilBERT, ALBERT-xxlarge, XLNet-large, ELECTRA-base, and DeBERTa-v3-small. We re-implemented and evaluated these models on the same dataset to ensure a fair, direct comparison. The results of this comprehensive benchmark, illustrated in the performance heatmap (Figure 6), show that our proposed Hybrid-RoBERTa-BiLSTM model achieves the highest scores across all five evaluated metrics. This outcome supports our hypothesis that a specialized hybrid architecture can outperform standard fine-tuning approaches for complex classification tasks.

Our proposed model achieved an F1-score of 95.8% and an AUC of 98.5%. Notably, it surpassed DeBERTa-v3-small by 2.0 percentage points on the F1-score (95.8% vs. 93.8%). The improvement is even more pronounced in recall, where our model achieved 95.3%, a 3.6-point gain over DeBERTa-v3-small, indicating a substantially better ability to identify true cyberbullying cases. Furthermore, compared with its own backbone, RoBERTa-base, our hybrid model improves the F1-score by 1.6 points and the AUC by 0.9 points, indicating a meaningful contribution from the BiLSTM and attention layers.

The superior performance can be attributed to the architectural synergy of the model’s components. While all models leverage powerful contextual embeddings from a transformer base, our architecture enhances the classification process. The BiLSTM layer captures long-range sequential dependencies and word-order nuances critical for understanding context, sarcasm, and indirect aggression. Subsequently, the attention mechanism allows the model to dynamically weigh the importance of different words, focusing on the most discriminative tokens (e.g., insults, threats) before making a final prediction. This sophisticated classification head provides a more nuanced understanding of the text than the standard linear layer used in the baseline models.
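The attention step described above can be illustrated with a minimal, framework-free sketch of additive (Bahdanau-style) attention pooling over BiLSTM outputs, where each state $h_t$ is the concatenation of the forward and backward hidden states at token $t$. This section does not give the exact attention formulation (Section 4.2 mentions multi-head attention), so the single-head additive form, the weights, and the toy token states below are assumptions chosen only to show how per-token scores become weights and a pooled vector.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def additive_attention_pool(
    states: List[Vector], W: List[Vector], b: Vector, v: Vector
) -> Tuple[Vector, Vector]:
    """Pool a sequence of BiLSTM states into one context vector.

    score_t = v . tanh(W h_t + b);  alpha = softmax(scores);  c = sum_t alpha_t * h_t
    """
    scores = []
    for h in states:
        u = [math.tanh(sum(w * x for w, x in zip(row, h)) + bias) for row, bias in zip(W, b)]
        scores.append(sum(vi * ui for vi, ui in zip(v, u)))
    alpha = softmax(scores)
    dim = len(states[0])
    context = [sum(a * h[d] for a, h in zip(alpha, states)) for d in range(dim)]
    return context, alpha


# Toy example: three tokens with 2-dimensional (forward ++ backward) states.
tokens = ["you", "loser", "!"]                  # hypothetical input
states = [[0.1, 0.0], [2.0, 1.5], [0.0, -0.2]]  # hypothetical BiLSTM outputs
W = [[1.0, 0.0], [0.0, 1.0]]                    # hypothetical learned parameters
b = [0.0, 0.0]
v = [1.0, 1.0]

context, alpha = additive_attention_pool(states, W, b, v)
for tok, a in zip(tokens, alpha):
    print(f"{tok:>6}: {a:.3f}")
```

In this toy setting the token with the strongest state ("loser") receives most of the weight, so it dominates the pooled vector that would feed the final classification layer. In PyTorch, the same computation is usually written with `nn.Linear` layers and a softmax over the sequence dimension.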

To provide a more granular view of model behavior, Figure 7 presents the confusion matrices for the proposed model and all eight baselines. A visual inspection of these matrices reveals not just that our model performs better, but how. The matrix for our Hybrid-RoBERTa-BiLSTM model (Figure 7i) shows the most desirable error profile among all contenders. Compared directly with DeBERTa-v3 (Figure 7g), a strong baseline, our model reduces False Negatives by 43.5% (118 vs. 209). This is a critical improvement, as it signifies an enhanced capability to correctly identify instances of actual cyberbullying that other models miss, a key requirement for any effective content moderation system. Simultaneously, our model maintains a marginally lower False Positive count (89 vs. 96), indicating that it achieves this higher sensitivity without sacrificing precision. This favorable trade-off between minimizing missed cyberbullying cases and avoiding the incorrect flagging of benign content underscores the practical advantages of our proposed architecture.
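The error-profile comparison can be checked with a little arithmetic. The sketch below assumes, as the text implies, that both models were evaluated on the same test set with 2508 cyberbullying samples (TP + FN for the proposed model), so each model's true positives equal 2508 minus its false negatives; DeBERTa-v3's precision, recall, and F1 are derived here, not read from the paper's tables.

```python
POSITIVES = 2390 + 118  # cyberbullying samples in the test set (TP + FN, proposed model)


def metrics_from_errors(fn: int, fp: int, positives: int = POSITIVES) -> dict:
    """Derive precision, recall, and F1 from FN/FP counts, assuming a shared test set."""
    tp = positives - fn
    return {
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "f1": 2 * tp / (2 * tp + fp + fn),
    }


hybrid = metrics_from_errors(fn=118, fp=89)
deberta = metrics_from_errors(fn=209, fp=96)

fn_reduction = (209 - 118) / 209  # relative drop in missed cyberbullying cases
recall_gain_pts = 100 * (hybrid["recall"] - deberta["recall"])

print(f"FN reduction: {fn_reduction:.1%}")
print(f"Recall gain: {recall_gain_pts:.1f} points")
print(f"F1: {hybrid['f1']:.4f} vs. {deberta['f1']:.4f}")
```

Under this assumption, the derived DeBERTa-v3 recall (about 0.917) and F1 (about 0.938) agree with the 3.6-point recall gap and the 93.8% F1 quoted in the benchmark discussion.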

The F1-score, which represents the harmonic mean of precision and recall, is arguably the most critical metric for evaluating performance on imbalanced classification tasks such as cyberbullying detection. The comparative ranking of models by F1-score, presented in Figure 8, offers the clearest evidence of our proposed model’s architectural advantages. Our Hybrid-RoBERTa-BiLSTM model achieved a top F1-score of 0.9585, establishing a new state-of-the-art performance on this dataset. Crucially, it outperformed the next-best model, RoBERTa-large, by a substantial margin of 1.44 percentage points (0.9585 vs. 0.9441). This gap visually separates our model from the cluster of other high-performing baselines, whose scores are tightly grouped in the 0.93–0.94 range. This advantage reflects the architecture’s enhanced ability to identify true cyberbullying cases (recall) while maintaining high precision, a capability that sets it apart from even the most powerful standard transformer models for this task.

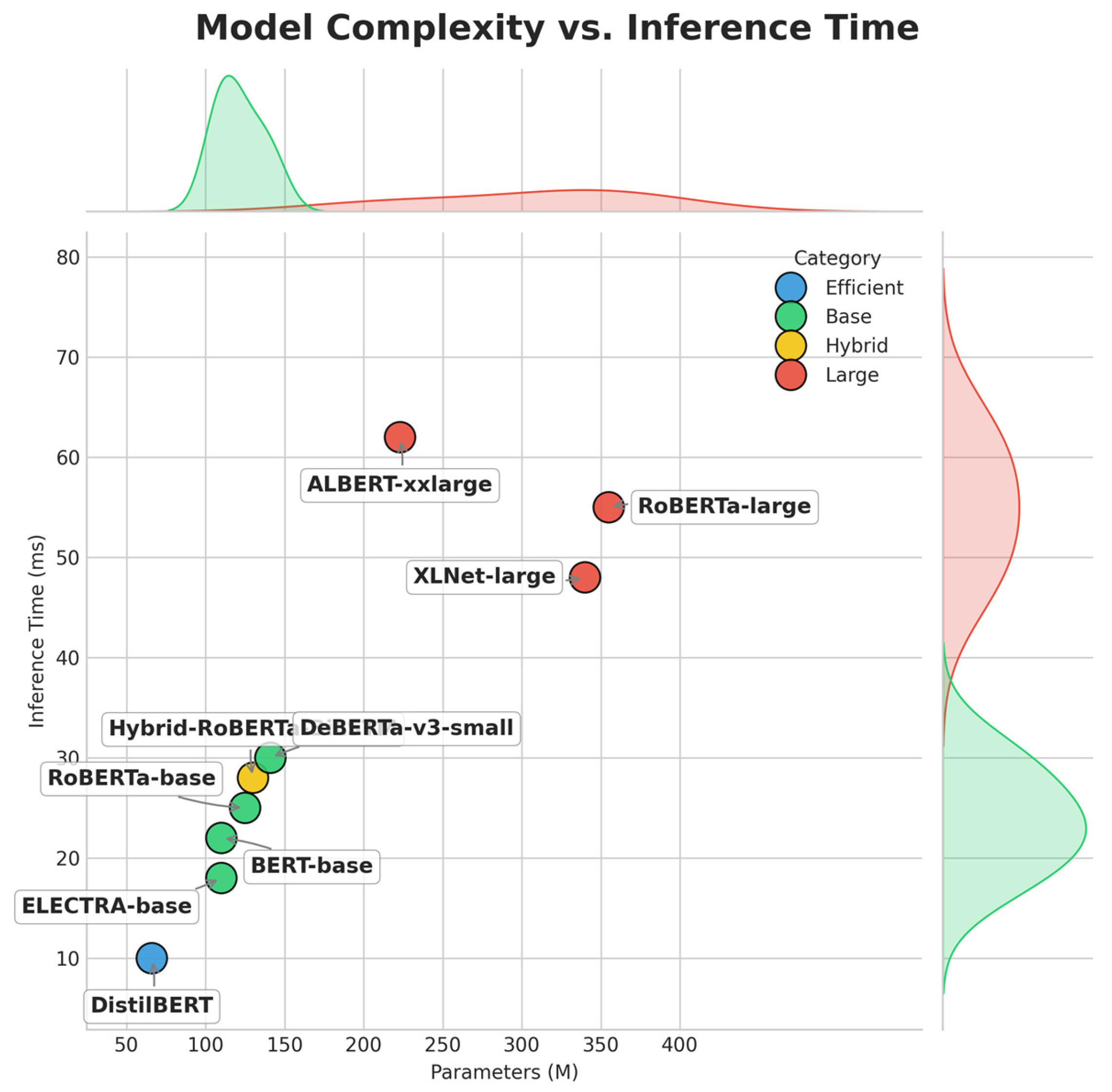

4.4. Complexity and Efficiency Analysis

Beyond predictive accuracy, the practical utility of a model is determined by its computational efficiency. We evaluated each model based on its size (number of parameters) and speed (inference time per sample) to analyze the performance-to-cost trade-off. This analysis, summarized in Table 6, reveals the distinct advantage of our proposed architecture.

Our Hybrid-RoBERTa-BiLSTM model achieves its state-of-the-art F1-score of 0.9585 with a moderate size of 130 M parameters, roughly 37% of the parameter count of RoBERTa-large (355 M). This makes it considerably more compact than the largest models, such as RoBERTa-large and XLNet-large (340 M), while still outperforming them.
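To see why the BiLSTM adds comparatively little to the RoBERTa-base backbone (commonly cited as roughly 125 M parameters), one can count LSTM parameters directly. The sketch below follows the parameterization of PyTorch's `nn.LSTM`, which keeps two bias vectors per gate block; the 768-dimensional input matches RoBERTa-base's hidden size, but the BiLSTM hidden sizes tried here are hypothetical, since the tuned values are reported in Table 3 rather than in this section.

```python
def lstm_param_count(input_size: int, hidden_size: int,
                     num_layers: int = 1, bidirectional: bool = True) -> int:
    """Parameters of a PyTorch-style nn.LSTM.

    Per layer and direction: weight_ih (4h x d_in), weight_hh (4h x h),
    and two bias vectors (4h each), i.e. 4h * (d_in + h + 2).
    """
    directions = 2 if bidirectional else 1
    total = 0
    for layer in range(num_layers):
        # Layers after the first read the concatenated outputs of both directions.
        d_in = input_size if layer == 0 else hidden_size * directions
        total += directions * 4 * hidden_size * (d_in + hidden_size + 2)
    return total


ROBERTA_HIDDEN = 768  # hidden size of RoBERTa-base
for hidden in (256, 512):  # hypothetical BiLSTM hidden sizes
    extra = lstm_param_count(ROBERTA_HIDDEN, hidden)
    print(f"hidden={hidden}: {extra / 1e6:.2f} M extra parameters")
```

Under these assumptions, a single-layer BiLSTM adds on the order of 2 to 5 M parameters, which is consistent in magnitude with the reported ~130 M total; a single attention layer and the output classifier add far fewer.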

Figure 9 positions our hybrid model in a highly desirable quadrant of high performance and low latency. While models like DistilBERT offer the fastest inference (10 ms), they do so at a considerable cost in accuracy. Conversely, the largest models show diminishing returns, as their substantial computational overhead does not translate into superior performance. This analysis indicates that our proposed architecture strikes a favorable balance, providing state-of-the-art accuracy without demanding prohibitive computational resources, which makes it a practical and effective solution for real-world deployment.
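The exact timing protocol behind Table 6 is not described in this section. One common way to measure per-sample inference latency is sketched below, under stated assumptions: batch size 1, a few warm-up calls to exclude one-off initialization costs, and the median over repeated runs to damp outliers. The `predict` argument and the `dummy_predict` function are hypothetical placeholders for a real model's single-sample inference call.

```python
import statistics
import time
from typing import Callable, Sequence


def per_sample_latency_ms(predict: Callable[[str], object], samples: Sequence[str],
                          warmup: int = 5, repeats: int = 3) -> float:
    """Median wall-clock latency of single-sample inference, in milliseconds."""
    for text in samples[:warmup]:  # warm-up: exclude one-off initialization costs
        predict(text)
    timings = []
    for _ in range(repeats):
        for text in samples:
            start = time.perf_counter()
            predict(text)
            timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


def dummy_predict(text: str) -> bool:
    """Placeholder standing in for a real model's predict function."""
    return len(text.split()) > 3


samples = [f"example message number {i}" for i in range(20)]
latency = per_sample_latency_ms(dummy_predict, samples)
print(f"median latency: {latency:.4f} ms")
```

When timing a GPU model, CUDA kernels run asynchronously, so calling `torch.cuda.synchronize()` before each timer read is needed for the wall-clock numbers to reflect the actual computation.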

4.5. Theoretical and Practical Implications

The implications of this work are twofold. From a scientific perspective, it supports the hypothesis that investing in specialized, hybrid architectures for the classification head can yield substantial performance gains over even larger, more complex baseline transformers. From a practical standpoint, our model offers a more effective and reliable solution for real-world content moderation systems. Its notable reduction in false negatives means fewer instances of cyberbullying are missed, while its high precision keeps the incorrect flagging of benign content low. Furthermore, the efficiency analysis showed that this state-of-the-art performance is achieved with moderate computational resources, making it a viable solution for deployment at scale.

5. Conclusions

This study introduced a novel hybrid deep learning architecture, the Hybrid-RoBERTa-BiLSTM-Attention model, for the critical task of cyberbullying detection. Through systematic hyperparameter optimization and a comprehensive benchmark against eight state-of-the-art transformer models, we demonstrated the consistent advantage of our proposed approach. The results show that our model achieves new state-of-the-art performance on this dataset, leading across all key metrics, including the highest F1-score (0.9585) and AUC (0.9850). We attribute this success to the architectural synergy in which the BiLSTM captures long-range sequential context and the attention mechanism dynamically focuses on the most discriminative linguistic features, capabilities that go beyond standard fine-tuning.

Despite the promising results, we acknowledge several limitations that provide clear directions for future research. First, our model’s performance was validated on a specific English-language dataset. Its generalizability to other languages, dialects, or online platforms with distinct communication norms remains an open question. Second, our work is exclusively focused on textual data. Modern online harassment is increasingly multi-modal, involving images, memes, and videos, which our current architecture is not designed to analyze. Third, the model classifies text in isolation and does not consider broader conversational or social graph context, such as the history of interaction between users, which can be crucial for interpreting ambiguous messages. Finally, like many complex deep learning models, the “black-box” nature of our architecture presents challenges for interpretability, a key consideration for real-world moderation systems where transparent reasoning is often required. Future work should aim to address these challenges to build more comprehensive, context-aware, and transparent online safety systems.