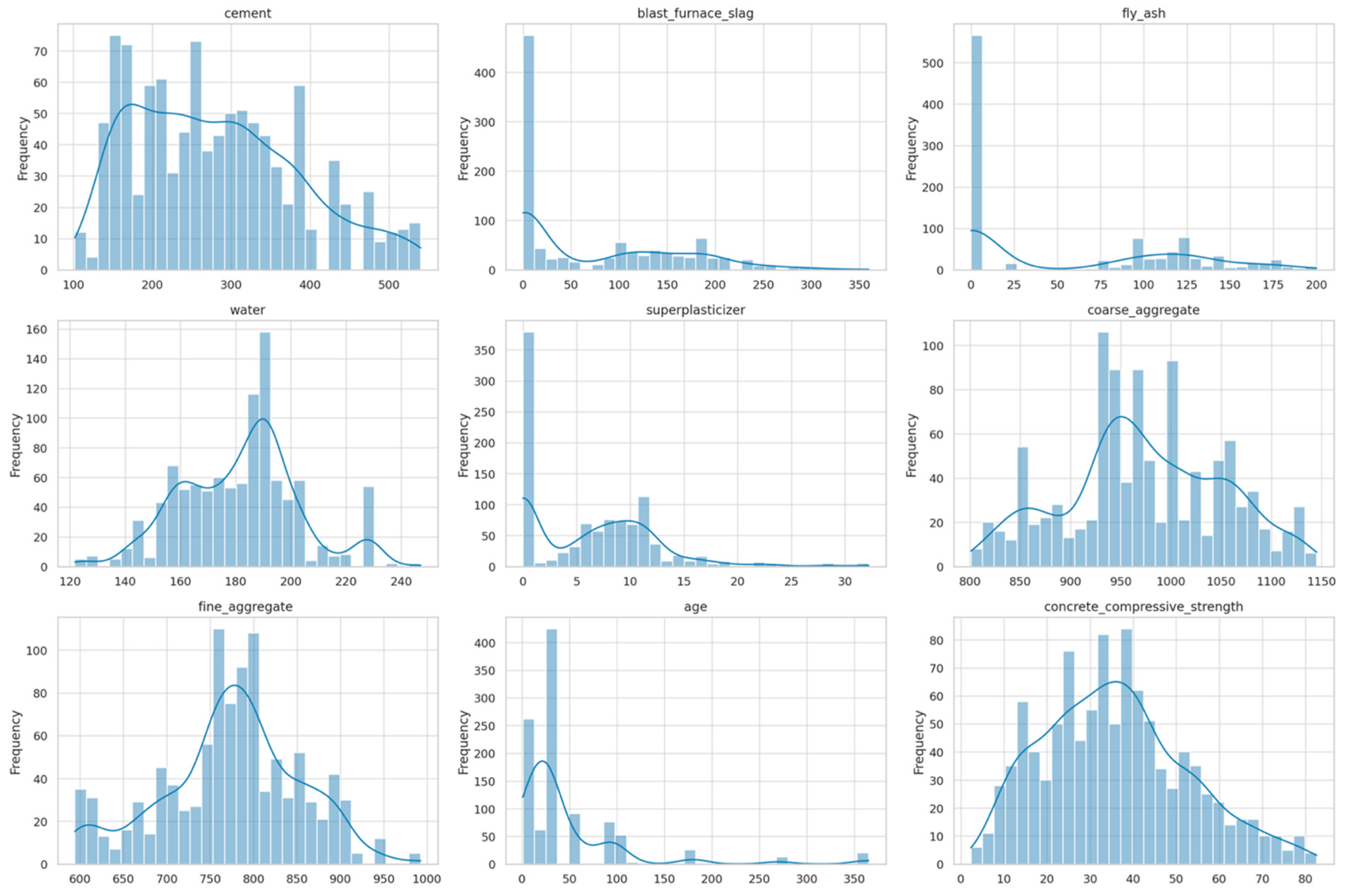

In this section, we present a comprehensive analysis of the experimental results through three integrated stages. First, we explore the dataset characteristics to gain an understanding of the distribution, range, and relationships among the input features and the target variable. This step is essential to provide context for subsequent modeling efforts and to ensure data quality. Next, we assess the predictive performance of the hybrid CNN-LSTM + XGBoost model and analyze feature importance using model-agnostic interpretability techniques such as SHAP, EFI, and PFI. This allows us to validate the effectiveness of our feature engineering approach and to identify the most influential variables in predicting concrete compressive strength. Finally, we focus on the RL component, where the agent is trained to select optimal concrete types based on predicted strength outcomes. The learned policy is evaluated through visual analyses including reward progression curves, action distributions, and policy trajectory maps, aiming to highlight the robustness and adaptability of the decision-making framework.

4.2. Prediction Modeling and Results

This section of the study focuses on two primary objectives: first, modeling and presenting the prediction results using the proposed hybrid framework; and second, analyzing feature importance through interpretability techniques such as SHAP, EFI, and PFI to investigate the role of input variables in model performance. The goal of these analyses is twofold: to evaluate the model’s accuracy in predicting concrete compressive strength and to understand how independent variables influence the output.

To comprehensively assess model performance, ten machine learning and deep learning algorithms were implemented: XGBoost, Random Forest, LightGBM, Decision Tree, KNN, SVM, ANN, CNN, LSTM, and the proposed hybrid model, CNN-LSTM + XGBoost. A summary of the evaluation results based on the MAE, RMSE, and R² metrics is presented in Table 3.
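For reference, the three evaluation metrics can be computed as in the following minimal sketch. The function name and the sample values are illustrative assumptions and are not taken from the study's code or data.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return (MAE, RMSE, R^2) for two equal-length sequences of floats."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    ss_res = sum(e * e for e in errors)            # residual sum of squares
    rmse = math.sqrt(ss_res / n)
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    return mae, rmse, r2

# Hypothetical compressive strengths in MPa, for illustration only.
y_true = [30.0, 40.0, 50.0, 60.0]
y_pred = [32.0, 38.0, 51.0, 59.0]
mae, rmse, r2 = regression_metrics(y_true, y_pred)
```

Lower MAE and RMSE and a higher R² indicate better agreement between predicted and measured strength.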

The results reported in Table 4 demonstrate that the proposed hybrid model significantly outperformed the other algorithms. By leveraging the temporal feature extraction capability of the CNN-LSTM architecture and combining it with the predictive strength of XGBoost, the model achieved the lowest prediction errors (MAE and RMSE) and the highest R². In contrast, baseline models such as KNN and Decision Tree showed lower accuracy, and even advanced models like LSTM and CNN individually did not reach the predictive performance of the hybrid approach. This comparison highlights that incorporating a hybrid architecture can substantially enhance model performance in predicting complex data structures such as concrete material properties.

To specifically assess the benefit of integrating spatial and temporal features, we compared the performance of the proposed fused model with simpler configurations where only CNN or only LSTM features were used as input to the XGBoost meta-learner. The results show that while the LSTM + XGBoost model achieved an RMSE of 4.41 and an R² of 0.93, and the CNN + XGBoost model obtained an RMSE of 4.63 and an R² of 0.92, the proposed CNN-LSTM + XGBoost configuration improved the RMSE to 3.96 and R² to 0.95. These results correspond to a relative RMSE reduction of 10.2% compared to the best-performing single-stream baseline (LSTM + XGBoost), indicating that the joint modeling of spatial and temporal dynamics provides richer and more discriminative representations for predicting concrete compressive strength.
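The relative reduction quoted above follows directly from the two RMSE values. A short check, with variable names chosen here for illustration:

```python
def relative_reduction(baseline, improved):
    """Fractional reduction of an error metric relative to a baseline value."""
    return (baseline - improved) / baseline

# RMSE values quoted in the text.
rmse_lstm_xgb = 4.41  # best single-stream configuration (LSTM + XGBoost)
rmse_fused = 3.96     # CNN-LSTM + XGBoost
reduction_pct = 100 * relative_reduction(rmse_lstm_xgb, rmse_fused)
print(f"{reduction_pct:.1f}%")  # prints 10.2%
```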

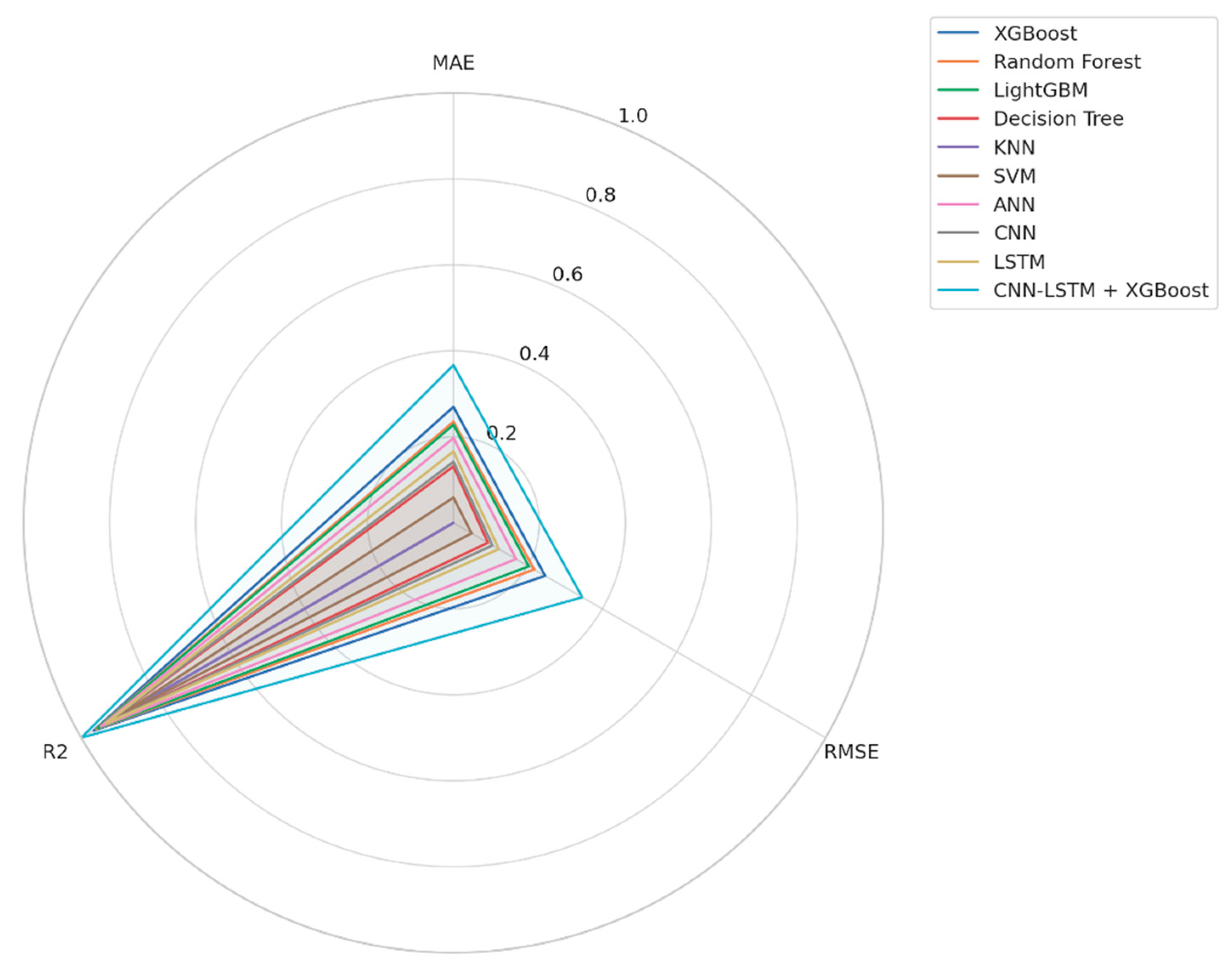

Figure 3 presents a normalized comparison of the performance of ten machine learning and deep learning algorithms across three key metrics: MAE, RMSE, and R². As illustrated, the proposed hybrid model, CNN-LSTM + XGBoost, consistently outperforms all other models across all evaluation criteria. Its enclosed area in the radar plot is noticeably larger than the rest, reflecting superior performance. This distinction is particularly evident in the R² metric, which represents the overall explanatory power of the model. The hybrid model also achieves the lowest MAE and RMSE values, indicating both high predictive accuracy and reduced error rates.

While other models such as XGBoost and Random Forest exhibit relatively strong performance, the integration of temporal feature extraction capabilities via CNN-LSTM with the regression power of XGBoost has led to a significant improvement in predictive outcomes.

To comprehensively assess the predictive performance of the proposed model, supplementary statistical analyses were conducted based on three key metrics: MAE, RMSE, and R². Table 5 presents the average differences in these metrics between the proposed hybrid model (CNN-LSTM + XGBoost) and the baseline algorithms. The results indicate that our model significantly outperforms all competitors across all three criteria.

For instance, the MAE and RMSE values achieved by the proposed model are 2.97 and 4.08, respectively, both substantially lower than those of the closest competitor (XGBoost). Moreover, the R² value of 0.94 reflects the model’s superior capacity to explain the variability in concrete compressive strength. These findings not only confirm the numerical superiority of the hybrid approach but also statistically demonstrate its advantage over conventional methods.

To further validate the effectiveness of the proposed hybrid model, a diverse range of baseline models, spanning from traditional empirical regressors (e.g., Linear and Ridge Regression) to advanced machine learning and neural architectures (e.g., Random Forest, XGBoost, LSTM, and CNN), were included in the comparative analysis. As detailed in Table 5, the hybrid CNN-LSTM + XGBoost framework consistently outperformed all benchmarks across the MAE, RMSE, and R² metrics. This comprehensive inclusion of both classical and state-of-the-art methods ensures a robust evaluation of predictive boundaries. The results empirically confirm that the hybrid model not only captures complex nonlinear patterns but also offers superior generalization, outperforming even strong learners such as standalone CNN or XGBoost. Hence, the hybrid configuration sets a new upper bound in terms of predictive accuracy within the domain of concrete strength estimation.

To reinforce the numerical insights presented in Table 5, Figure 4 provides a graphical comparison of model performance across three key metrics: MAE, RMSE, and R². As shown in the left subplot, the MAE value for the hybrid model (CNN-LSTM + XGBoost) is significantly lower than that of all baseline models. This advantage is particularly pronounced when compared to classical models such as linear regression, Ridge regression, and Support Vector Regression (SVR).

The middle subplot illustrates that the hybrid model also achieves superior performance in terms of RMSE, recording a value of 4.08—the lowest deviation from actual values among all models. While advanced deep learning models such as LSTM and CNN perform better than classical algorithms, they still yield approximately one unit higher RMSE compared to the proposed model.

The right subplot highlights that the hybrid model attains the highest R² (0.94), indicating the strongest explanatory power in capturing the variability of concrete compressive strength. Although XGBoost and CNN also reach R² values around 0.90, the observed margin, albeit numerically small, is both statistically and practically meaningful.

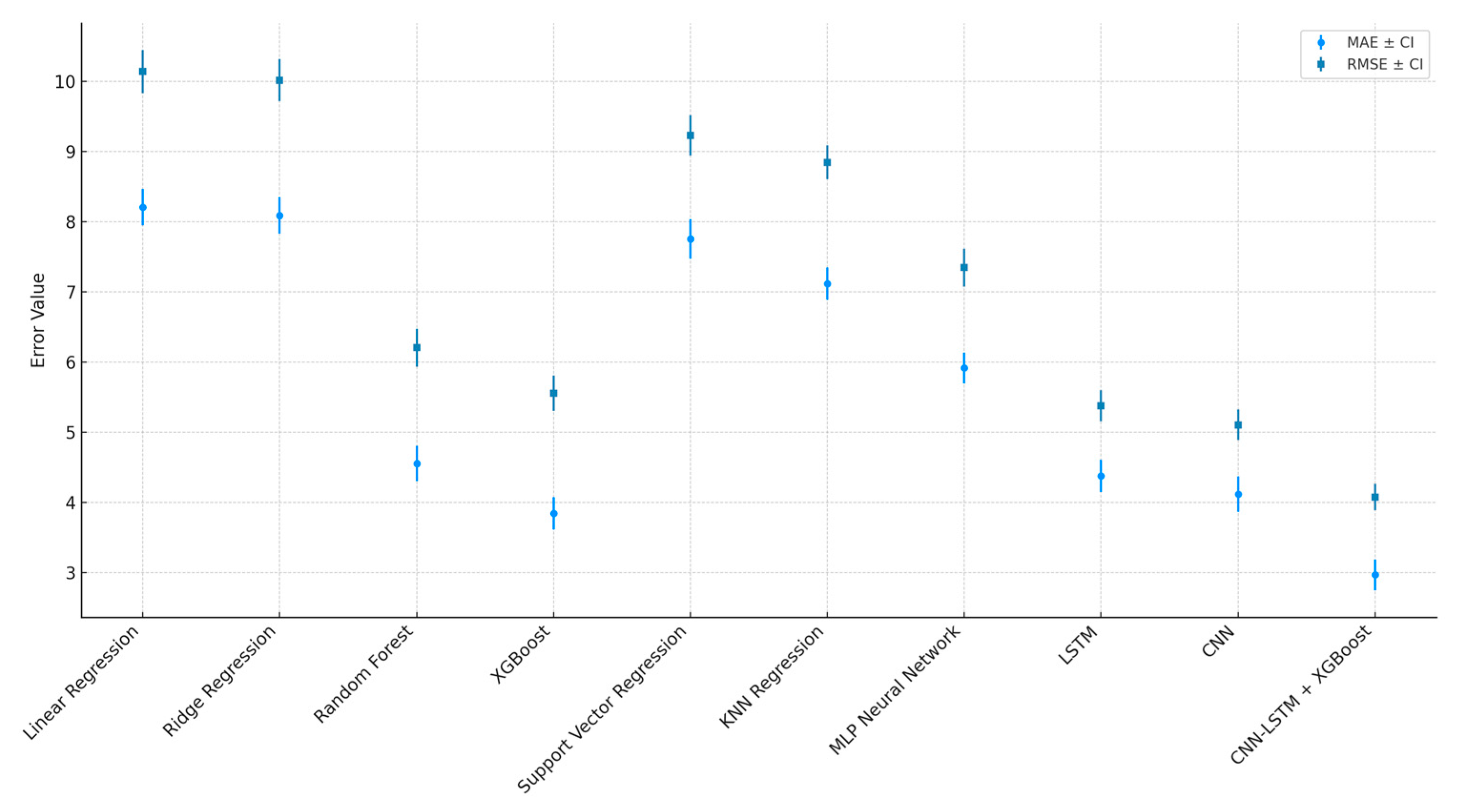

To assess the reliability of model performance and avoid over-reliance on point estimates, a bootstrap procedure with 1000 resamples was employed to compute 95% confidence intervals for the MAE and RMSE metrics. The results of this analysis are presented in Table 6. As shown, the proposed hybrid model not only achieves the lowest mean error but also exhibits the narrowest confidence intervals. For instance, its MAE falls within the interval [2.86, 4.84], and its RMSE lies within [4.35, 6.79]. These intervals are significantly narrower than those of other models, indicating that the performance of the proposed approach is not only accurate but also stable and robust to data variability.

In contrast, models such as Linear Regression and SVR demonstrate substantially wider intervals—e.g., the MAE for linear regression ranges from 7.28 to 9.16, and RMSE from 9.01 to 11.37—suggesting that these models are more sensitive to fluctuations in data samples and exhibit lower generalizability.
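The bootstrap procedure can be sketched as follows. This is a percentile bootstrap over per-sample absolute errors; the study does not state which bootstrap variant was used, so the percentile method, the function name, and the sample errors below are assumptions made for illustration.

```python
import random

def bootstrap_ci(abs_errors, n_resamples=1000, level=0.95, seed=0):
    """Percentile bootstrap interval for the mean absolute error (MAE)."""
    rng = random.Random(seed)
    n = len(abs_errors)
    means = []
    for _ in range(n_resamples):
        # Resample the test-set errors with replacement and record the MAE.
        sample = [abs_errors[rng.randrange(n)] for _ in range(n)]
        means.append(sum(sample) / n)
    means.sort()
    lo_idx = round((1 - level) / 2 * n_resamples)
    hi_idx = round((1 + level) / 2 * n_resamples) - 1
    return means[lo_idx], means[hi_idx]

# Hypothetical per-sample absolute errors in MPa, for illustration only.
abs_errors = [1.2, 3.4, 0.5, 2.8, 4.1, 1.9, 2.2, 3.0, 0.9, 2.6]
lower, upper = bootstrap_ci(abs_errors)
```

An interval for RMSE can be obtained in the same way by resampling squared errors and taking the square root of each resampled mean.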

Figure 5 illustrates the performance of ten predictive algorithms for estimating concrete compressive strength, based on two key metrics: MAE and RMSE, along with their respective 95% confidence intervals. The light blue bars represent the average MAE, while the dark blue bars correspond to the average RMSE for each model. Vertical lines above each bar indicate the confidence intervals, calculated via bootstrapping with 1000 resamples from the test set. These intervals reflect the variability and uncertainty associated with each model’s performance, enabling a more rigorous and scientific comparison.

As shown, the hybrid CNN-LSTM + XGBoost model not only achieves the lowest values for both MAE and RMSE but also exhibits the narrowest confidence intervals. This highlights its superior stability and reliability compared to the other models evaluated.

Figure 6 presents the evolution of the MAE throughout the training process of the proposed hybrid model. As illustrated, the error for both the training and validation sets decreases sharply during the initial epochs, from an initial value of approximately 30 to around 7 within the first 10 epochs. Subsequently, the error continues to decline gradually, stabilizing at approximately 4 in the later stages of training. The consistent downward trend observed in both the blue curve (Train MAE) and the orange curve (Validation MAE) indicates an absence of overfitting and demonstrates the model’s strong capacity to learn the underlying patterns within the dataset. Overall, Figure 6 confirms that the proposed model performs reliably and accurately during the learning process.

To investigate the impact of temporal context length on model performance, a sensitivity analysis was conducted by varying the sliding window size used in the CNN-LSTM architecture. This parameter controls the number of past observations included in each training sequence, thereby affecting the model’s ability to capture temporal dependencies in the input data.

As shown in Table 7, the window size was varied from 3 to 7, and the hybrid model was evaluated using the same data splits and hyperparameters across all settings. The results indicate that a window size of 5 provides the most balanced performance, achieving the lowest MAE (2.97), the lowest RMSE (4.08), and the highest R² (0.94). Smaller window sizes, such as 3 or 4, reduced the temporal depth and led to underfitting, while larger window sizes increased training complexity without significant improvement in accuracy.
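The role of the window size can be illustrated with a minimal sketch of sequence construction. How the study orders observations and which target is paired with each window are not specified here, so pairing each window with the target of its last row is an assumption, as are the function and variable names.

```python
def make_windows(rows, targets, window=5):
    """Group consecutive feature rows into overlapping sequences of length `window`.

    Each sequence is paired with the target of its last row (an assumption).
    """
    sequences, labels = [], []
    for end in range(window, len(rows) + 1):
        sequences.append(rows[end - window:end])
        labels.append(targets[end - 1])
    return sequences, labels

# Hypothetical data: 8 observations with a single feature each.
rows = [[float(i)] for i in range(8)]
targets = [float(i) for i in range(8)]
seqs_5, labels_5 = make_windows(rows, targets, window=5)
seqs_3, labels_3 = make_windows(rows, targets, window=3)
```

A larger window yields fewer but longer sequences, which is one reason training cost grows with the window size.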

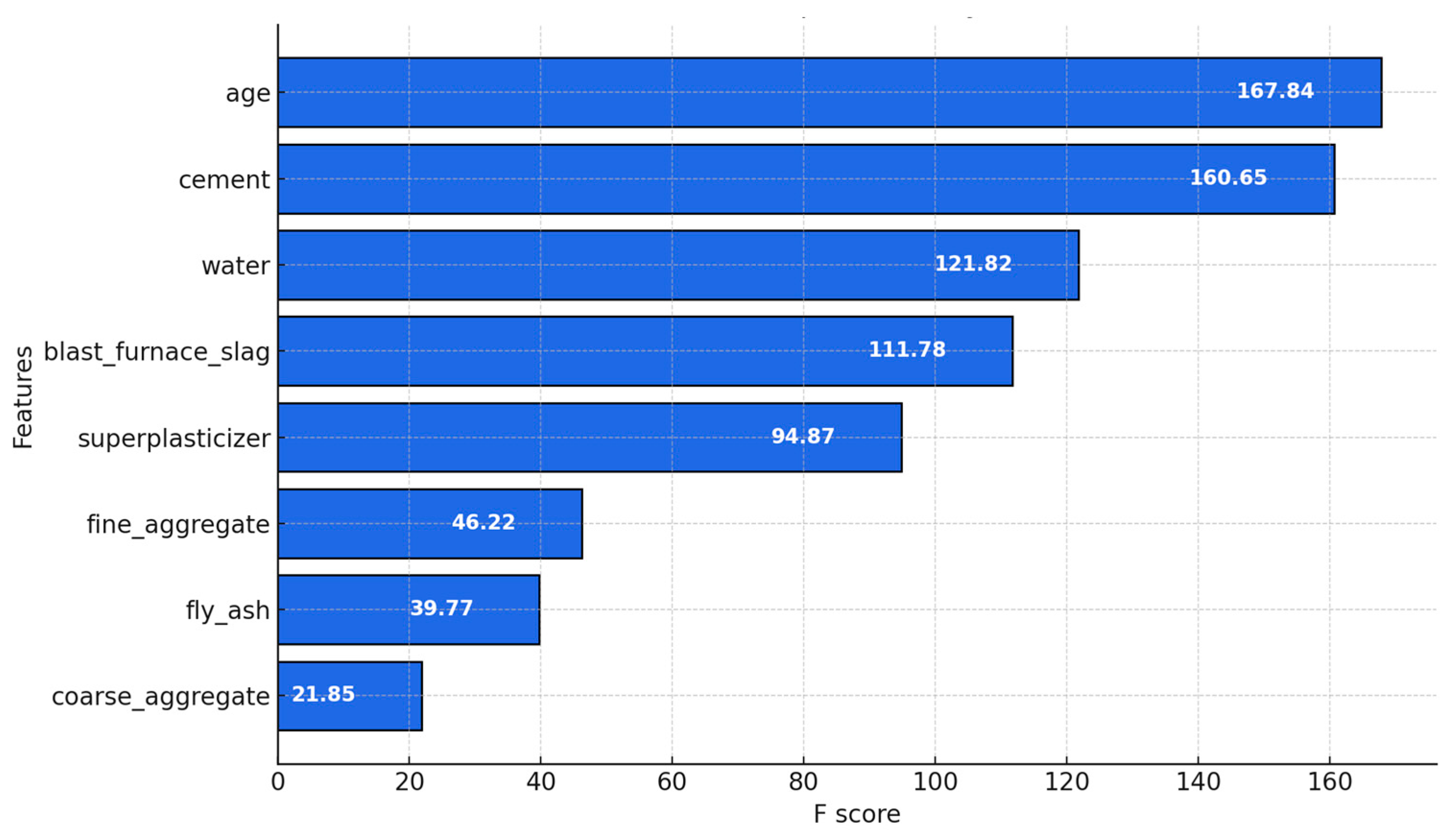

Figure 7 illustrates the relative importance of each input feature in the prediction process of the proposed model. The chart is constructed using the Gain metric, which measures the improvement in information gained through decision tree splits. The results clearly indicate that the variables age, cement, and water contribute most significantly to enhancing the model’s predictive performance. In particular, the age of the sample emerges as the most influential factor, with an F-Score of 167.84. In contrast, features such as coarse aggregate and fly ash exhibit the least impact on the model’s predictions.

Figure 8 presents the relationship between the actual and predicted values generated by the proposed model. In this scatter plot, each point represents a single observation, where the horizontal axis corresponds to the actual compressive strength, and the vertical axis indicates the predicted value. As shown, the data points are relatively concentrated around the diagonal line with a slope of one (y = x), indicating a high level of accuracy in the model’s predictions. The high density and close proximity of points to this ideal line confirm the model’s ability to capture meaningful relationships between the input features and the target variable. This pattern clearly demonstrates the model’s robustness in replicating the patterns observed during training.

SHAP analysis is an advanced interpretability method for machine learning models, grounded in the cooperative game theory concept of Shapley values. This technique fairly attributes a contribution score to each feature for a given prediction, allowing for a nuanced understanding of how individual inputs influence the output. Unlike classical approaches such as global feature importance, which aggregate effects across all samples, SHAP enables both local (per-instance) and global (dataset-wide) interpretability. This dual capacity not only identifies the most influential features but also reveals the directionality of their impact—whether a feature increases or decreases the predicted outcome.
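To make the attribution idea concrete, the sketch below computes exact Shapley values for a single instance by averaging marginal contributions over all feature orderings. It is a brute-force illustration rather than the SHAP library's estimators: the toy predictor, the baseline mix, and all names are hypothetical, and "absent" features are simply replaced by baseline values.

```python
from itertools import permutations

def shapley_values(predict, x, baseline):
    """Exact Shapley values for one instance (feasible only for few features)."""
    names = list(x)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)
        previous = predict(current)
        for name in order:
            current[name] = x[name]             # add the feature to the coalition
            updated = predict(current)
            totals[name] += updated - previous  # marginal contribution
            previous = updated
    return {name: total / len(orderings) for name, total in totals.items()}

# Hypothetical toy predictor, not the trained hybrid model.
def toy_predict(mix):
    return 0.1 * mix["cement"] - 0.15 * mix["water"] + 0.2 * mix["age"]

baseline = {"cement": 300.0, "water": 180.0, "age": 28.0}
instance = {"cement": 400.0, "water": 160.0, "age": 90.0}
phi = shapley_values(toy_predict, instance, baseline)
```

The contributions sum to the difference between the prediction for the instance and the prediction for the baseline, and their signs give the direction of each feature's effect.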

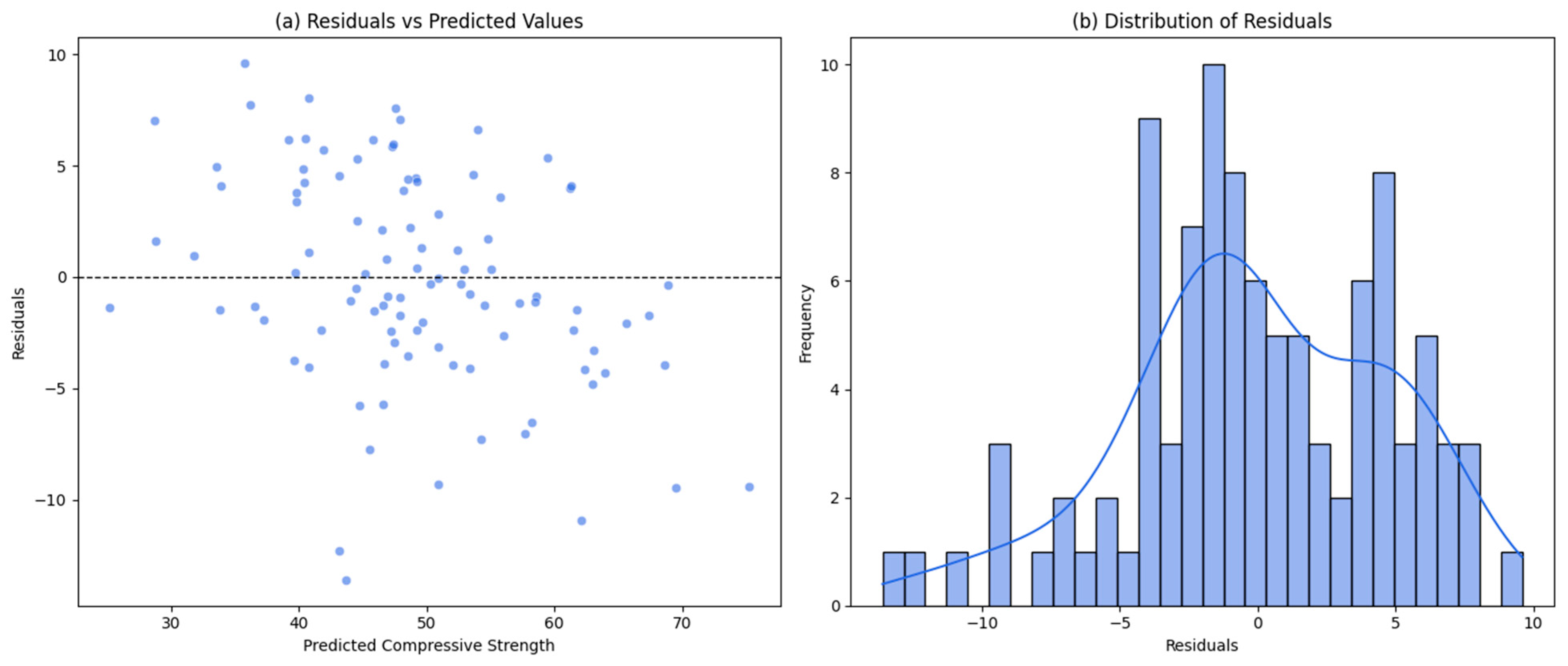

Figure 9 comprises two subplots: Panel (a) illustrates the residuals plotted against the predicted compressive strength values. The random dispersion of points around the horizontal axis without any discernible pattern suggests the absence of systematic bias in the model. This indicates that the model successfully generalizes across diverse input combinations and does not suffer from heteroscedasticity.

Panel (b) presents a histogram of residuals overlaid with a kernel density estimate (KDE). The results show that the residuals follow an approximately normal distribution centered near zero, with no significant skewness or heavy tails. This symmetric distribution around zero supports the statistical validity of the model, indicating that the errors are largely random and non-structured, in line with classical modeling assumptions.

Table 8 presents a comparative analysis of the computational complexity of five different models, evaluating them in terms of the number of trainable parameters, total training time, and per-sample inference time. As shown, classical models such as linear regression exhibit minimal complexity, with only 9 trainable parameters and negligible time requirements for both training and inference. The XGBoost algorithm demonstrates moderate complexity while maintaining relatively fast performance.

On the other end of the spectrum, deep learning models such as CNN and LSTM involve tens of thousands of trainable parameters and consequently require significantly more training time. The proposed hybrid model (CNN-LSTM + XGBoost), with over 58,000 parameters, exhibits the highest computational complexity; however, this added complexity is compensated by a notable improvement in prediction accuracy. Furthermore, despite its advanced architecture, the model maintains an inference time of only 2.10 milliseconds per instance, rendering it highly suitable for engineering applications such as optimal concrete mix design. These results suggest that although hybrid models entail higher computational demands, their accuracy-to-complexity ratio makes them entirely justifiable for critical decision-making scenarios.

Figure 10 illustrates the effect of varying input noise levels on the MAE of the proposed model. As the level of noise increases from 0% to 15%, a gradual upward trend in MAE is observed, indicating a reduction in predictive accuracy when the model is exposed to noisy data. Nevertheless, the slope of the error increase remains relatively mild, demonstrating the hybrid model’s (CNN-LSTM + XGBoost) relative robustness against input data perturbations. This characteristic is particularly valuable for real-world applications, where input data are often subject to uncertainty and measurement inaccuracies.
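A robustness test of this kind can be sketched as below. The noise model used in the study is not described in this section, so multiplicative Gaussian noise applied to every input is an assumption, and the linear stand-in predictor and data are hypothetical.

```python
import random

def mae_under_noise(predict, X, y, noise_level, seed=0):
    """MAE after scaling every input by (1 + eps), with eps ~ N(0, noise_level)."""
    rng = random.Random(seed)
    noisy = [[v * (1.0 + rng.gauss(0.0, noise_level)) for v in row] for row in X]
    return sum(abs(predict(row) - t) for row, t in zip(noisy, y)) / len(y)

# Hypothetical linear stand-in for the trained model.
def toy_predict(row):
    return 0.1 * row[0] - 0.05 * row[1]

X = [[300.0 + 10 * i, 150.0 + 2 * i] for i in range(20)]
y = [toy_predict(row) for row in X]
curve = {level: mae_under_noise(toy_predict, X, y, level)
         for level in (0.0, 0.05, 0.10, 0.15)}
```

Plotting `curve` against the noise level gives the kind of sensitivity profile described above.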

Figure 11 presents the MAE of the concrete compressive strength prediction model across four distinct age intervals: 1–7, 8–28, 29–90, and 91–365 days. As observed, the model exhibits relatively better performance within mid-range intervals (e.g., 8–28 days), where the MAE is lower, whereas the error tends to increase in the very early (1–7 days) and later stages (91–365 days). This pattern may stem from significant physical changes in concrete during early hydration stages or environmental influences affecting long-term behavior. Such an analysis enhances the understanding of model performance over the material’s lifespan and underscores the importance of ensuring prediction stability throughout the full life cycle of concrete.
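The per-interval error can be computed by grouping test samples by specimen age, as in this minimal sketch. The bin edges follow the four intervals named above; the sample ages and strengths are hypothetical.

```python
def mae_by_age_bin(ages, y_true, y_pred,
                   bins=((1, 7), (8, 28), (29, 90), (91, 365))):
    """Mean absolute error within each inclusive age interval (in days)."""
    result = {}
    for low, high in bins:
        errs = [abs(t - p) for a, t, p in zip(ages, y_true, y_pred)
                if low <= a <= high]
        result[(low, high)] = sum(errs) / len(errs) if errs else None
    return result

# Hypothetical specimens, for illustration only.
ages = [3, 7, 14, 28, 56, 90, 180, 365]
y_true = [10.0, 20.0, 30.0, 40.0, 45.0, 50.0, 55.0, 60.0]
y_pred = [13.0, 18.0, 31.0, 40.0, 44.0, 52.0, 50.0, 64.0]
per_bin = mae_by_age_bin(ages, y_true, y_pred)
```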

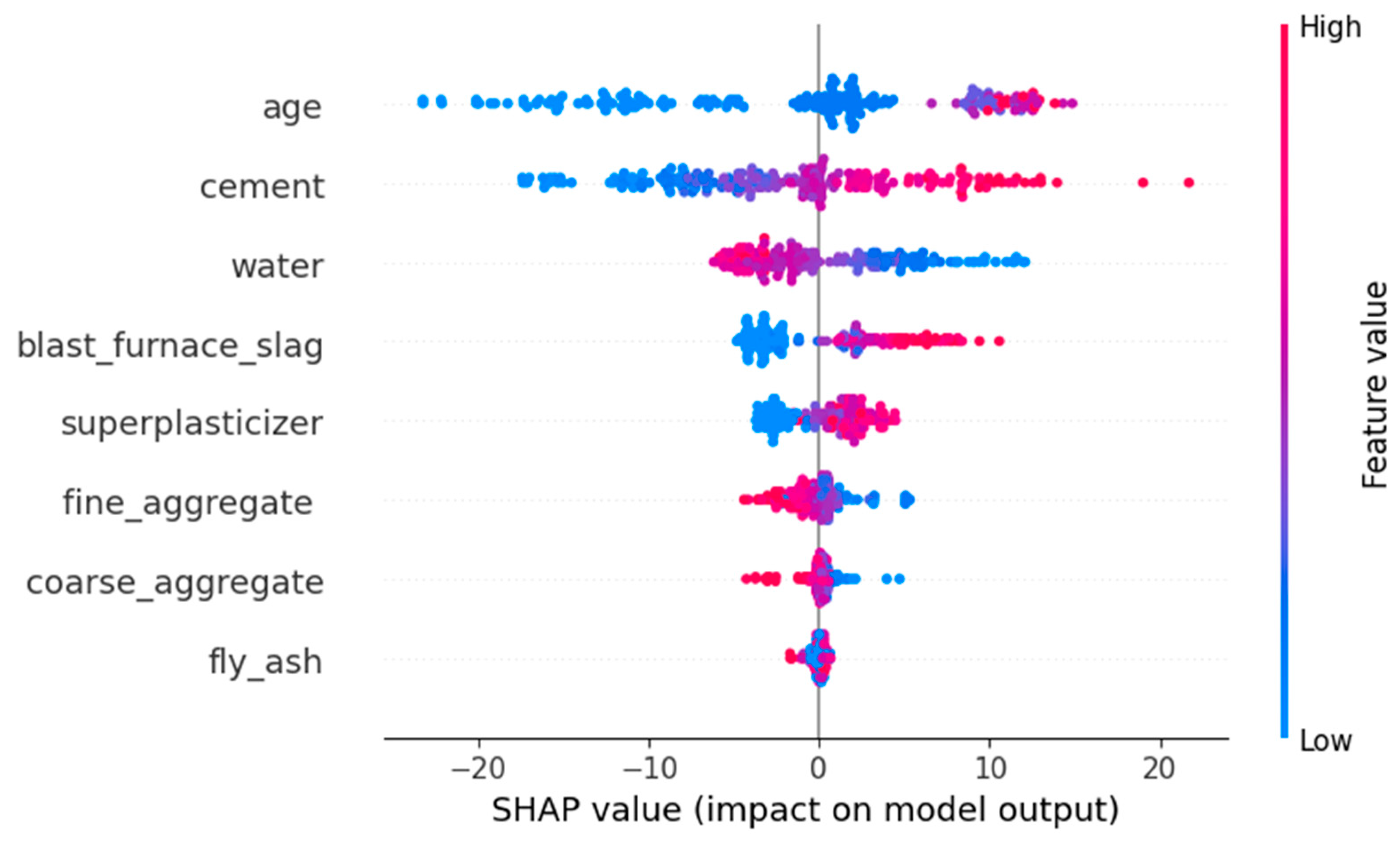

Figure 12 visualizes the interpretability of the proposed model using SHAP values. In this summary plot, each dot represents an individual data instance, where the horizontal position indicates the SHAP value—i.e., the degree to which that specific feature contributes to the model’s output. The color gradient of the points reflects the feature’s magnitude for each instance: red for high values and blue for low values.

The SHAP analysis reveals that features such as age and cement content exert the most significant positive influence on the predicted compressive strength of concrete. As their values increase, the model output also rises accordingly. Conversely, variables such as water content and fly ash typically exhibit a negative contribution, indicating that higher values of these features tend to decrease the predicted compressive strength. This pattern confirms that the machine learning model not only captures statistical dependencies but also aligns with established scientific principles in concrete engineering, reinforcing the model’s validity and interpretability.

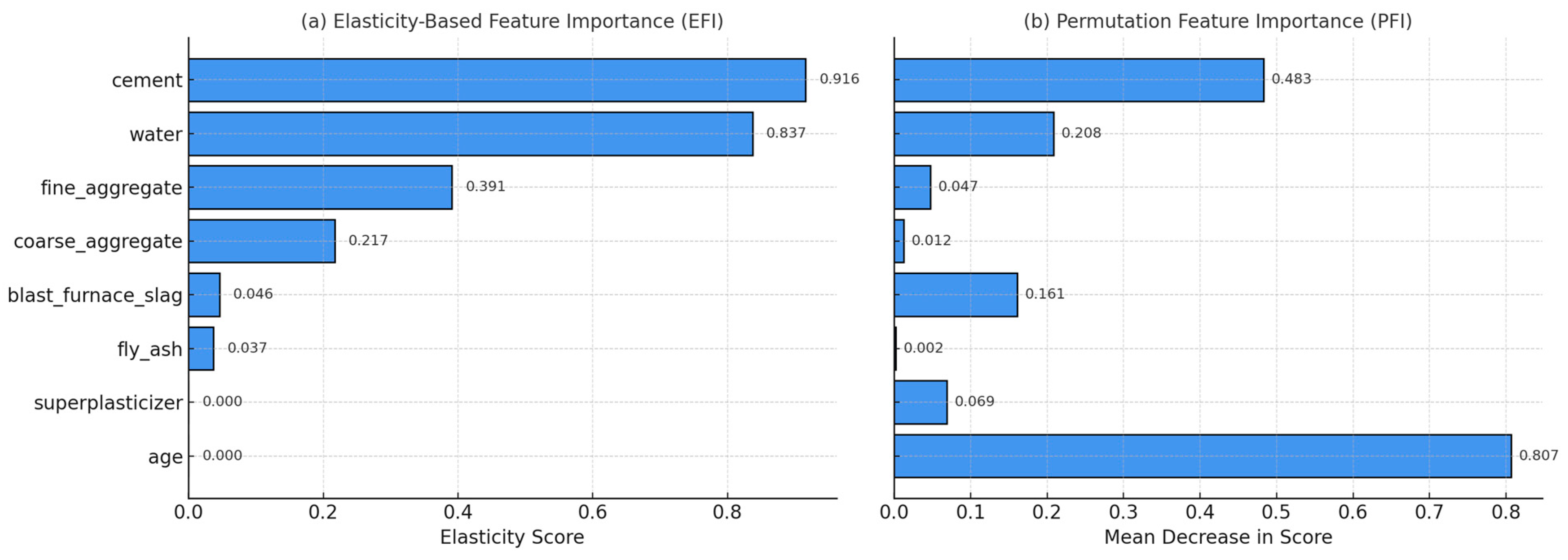

Figure 13 presents the results of two complementary feature importance analyses: (a) EFI and (b) PFI. The EFI method quantifies the output sensitivity of the model to relative percentage changes in each input variable, drawing from the economic concept of elasticity. Specifically, it evaluates how much the predicted compressive strength changes when a given feature increases by a small percentage, holding all else constant. Features with higher elasticity values are deemed more influential.

As shown in Figure 13a, cement and water exhibit the highest elasticity, indicating that small relative changes in their values produce substantial variations in the model’s output. In contrast, superplasticizer and fly ash demonstrate minimal influence, with negligible changes in prediction accuracy under similar perturbations.
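The elasticity idea can be sketched with a finite-difference approximation: the relative change in the prediction divided by the relative change in one input. The exact EFI formula and any averaging over samples are not given in this section, so the 1% step, the single-instance evaluation, and the toy predictor are assumptions.

```python
def elasticity(predict, x, feature, rel_step=0.01):
    """Approximate % change in predict(x) per % change in x[feature]."""
    base = predict(x)
    bumped = dict(x)
    bumped[feature] = x[feature] * (1.0 + rel_step)  # all else held constant
    return ((predict(bumped) - base) / base) / rel_step

# Hypothetical stand-in model: strength grows with the cement-to-water ratio.
def toy_predict(x):
    return 20.0 * (x["cement"] / x["water"]) ** 1.5

mix = {"cement": 350.0, "water": 175.0}
e_cement = elasticity(toy_predict, mix, "cement")
e_water = elasticity(toy_predict, mix, "water")
```

For this toy model the elasticities are close to +1.5 for cement and −1.5 for water, so the sign indicates direction and the magnitude indicates sensitivity.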

In parallel, the PFI approach evaluates the drop in model performance when the values of each feature are randomly shuffled, thereby disrupting their relationships with the output. Figure 13b shows that age and cement are the most critical variables in terms of maintaining prediction accuracy, as their permutation leads to the most significant declines in model performance.
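The permutation procedure can be sketched as follows. The performance measure (MAE), the number of repeats, and the toy predictor are assumptions chosen for illustration and are not taken from the study.

```python
import random

def permutation_importance(predict, X, y, feature_idx, n_repeats=10, seed=0):
    """Mean increase in MAE after shuffling one feature column."""
    rng = random.Random(seed)

    def mae(rows):
        return sum(abs(predict(r) - t) for r, t in zip(rows, y)) / len(y)

    baseline = mae(X)
    increases = []
    for _ in range(n_repeats):
        column = [row[feature_idx] for row in X]
        rng.shuffle(column)  # break the link between this feature and the target
        shuffled = [row[:feature_idx] + [v] + row[feature_idx + 1:]
                    for row, v in zip(X, column)]
        increases.append(mae(shuffled) - baseline)
    return sum(increases) / n_repeats

# Hypothetical predictor that uses only the first feature.
def toy_predict(row):
    return 2.0 * row[0]

X = [[float(i), float(10 - i)] for i in range(10)]
y = [2.0 * i for i in range(10)]
imp_used = permutation_importance(toy_predict, X, y, feature_idx=0)
imp_unused = permutation_importance(toy_predict, X, y, feature_idx=1)
```

A feature the model ignores receives an importance of zero, whereas shuffling a feature the model relies on increases the error.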

Although the rankings differ slightly between the two methods due to their distinct conceptual foundations—EFI focuses on sensitivity, while PFI centers on disruption—the overall findings consistently underscore the pivotal role of core concrete ingredients and specimen age in predicting compressive strength.

Figure 14 illustrates a correlation matrix visualized using a heatmap, representing the pairwise linear relationships among the input features and target variable. The correlation coefficients range from −1 to +1, where values close to +1 indicate strong positive correlation, values near −1 denote strong negative correlation, and values around 0 suggest no linear relationship.

The analysis reveals several notable patterns. A moderate to strong positive correlation (0.50) is observed between cement content and concrete compressive strength, implying that higher cement dosage generally leads to stronger concrete. Similarly, superplasticizer exhibits a moderate positive correlation (0.37) with compressive strength, reflecting its known role in enhancing concrete performance.

Conversely, water content shows a significant negative correlation (−0.29) with compressive strength, aligning with established engineering principles whereby excess water weakens the concrete matrix. Furthermore, a strong negative correlation (−0.66) exists between water and superplasticizer, suggesting a substitutive relationship in mixture designs where increasing superplasticizer may reduce water demand. Overall, this correlation matrix offers valuable insights into the internal structure of the dataset, informing feature selection and guiding interpretation prior to model development.
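Each cell of such a matrix is a Pearson correlation coefficient, which can be computed as in this short sketch. The water and strength values are hypothetical and serve only to show the calculation.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (norm_x * norm_y)

# Hypothetical mixes: more water, lower strength.
water = [150.0, 160.0, 170.0, 180.0, 190.0]
strength = [52.0, 47.0, 45.0, 38.0, 35.0]
r_water_strength = pearson_r(water, strength)
```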

To evaluate the accuracy, robustness, and validity of the proposed model’s results, a comprehensive comparison was conducted among three interpretability methods in machine learning—SHAP, EFI, and PFI—and the classical statistical method of correlation analysis.

Table 9 presents this comparison, listing the importance values assigned by each interpretability technique alongside the Pearson correlation coefficient of each key feature with the target variable, i.e., concrete compressive strength.

As shown in the table, features such as cement, water, and age, which are consistently ranked highly by SHAP, EFI, and PFI, also exhibit statistically significant correlations with the target. For instance, cement shows a positive correlation coefficient of 0.498 and high scores across all three interpretability methods, reaffirming its central role in concrete strength prediction. While age does not register significance in EFI, it ranks prominently in both SHAP (7.938) and especially PFI (0.807), underscoring its importance in model behavior under real-world data variation.

On the other hand, features like fly ash and coarse aggregate, which consistently receive low importance scores, also lack meaningful correlation with the output variable, further validating their limited predictive relevance from both a statistical and machine learning standpoint.

Collectively, Table 9 plays a critical role in substantiating the construct validity of the proposed model. The alignment between classic statistical correlations and modern interpretability scores provides a strong basis for drawing reliable conclusions and enhances confidence in model-informed decision-making.

4.3. Reinforcement Learning Analysis

In this section of the study, following the development of a machine learning-based predictive model for concrete compressive strength, the next step focused on optimizing the concrete mixture design using RL. The primary objective was to design an intelligent agent capable of selecting the optimal concrete composition—yielding maximum compressive strength—under varying conditions. To ensure that the agent was trained within a valid and reliable decision environment, the accuracy of the predictive model was first assessed using a Predicted vs. Actual plot. Additionally, Loss vs. Epoch curves were examined to analyze the convergence of MAE and RMSE during model training.

Key input features with the highest influence on strength predictions were identified using XGBoost output along with SHAP and PFI analyses. These features were passed to the RL agent to guide its decisions based on the most critical factors. The agent’s learning performance was evaluated using a Reward vs. Episode plot, which illustrates the progression of policy effectiveness over time. Moreover, the distribution of the agent’s final actions was analyzed through the Action Frequency histogram and the Concrete Type Selection heatmap. For further comparison, training curves of classical and hybrid models (e.g., CNN-LSTM) were visualized to assess the relative advantage of the proposed approach. Finally, the RL agent’s stability and performance in reaching the optimal mixture configuration were examined through the Final Reward Histogram and the Policy Evolution Map.

To further assess the effectiveness of the adopted dueling architecture, we conducted a comparative analysis with two alternative reinforcement learning structures: standard DQN and Double DQN. All three models were trained under identical conditions using the same state representations and reward functions. The goal was to quantify improvements in learning dynamics and prediction accuracy attributable to the dueling design. As shown in Table 10, the Dueling DDQN model achieved the lowest MAE (3.12) and RMSE (4.21), along with the highest R² score (0.92), outperforming both baseline models. Additionally, it required fewer episodes to reach stable convergence, as reflected by the reduced episode count at the convergence threshold (62 episodes vs. 87 for DQN). These results empirically validate the architectural choice of using a dueling network structure, as it not only accelerates learning but also leads to more accurate and robust policy formation in concrete mixture optimization.
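The distinguishing step of a dueling network is how it combines a state-value estimate with per-action advantages. The sketch below uses the common mean-subtracted aggregation; whether the study uses this exact form is an assumption, and the numeric values are hypothetical.

```python
def dueling_q_values(state_value, advantages):
    """Q(s, a) = V(s) + A(s, a) - mean over actions of A(s, a)."""
    mean_adv = sum(advantages) / len(advantages)
    return [state_value + a - mean_adv for a in advantages]

# Hypothetical outputs of the value and advantage streams for four actions.
q_values = dueling_q_values(1.0, [0.0, 1.0, 2.0, 1.0])
best_action = max(range(len(q_values)), key=q_values.__getitem__)
```

Subtracting the mean advantage keeps the decomposition identifiable, so the average of the Q-values equals the state value.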

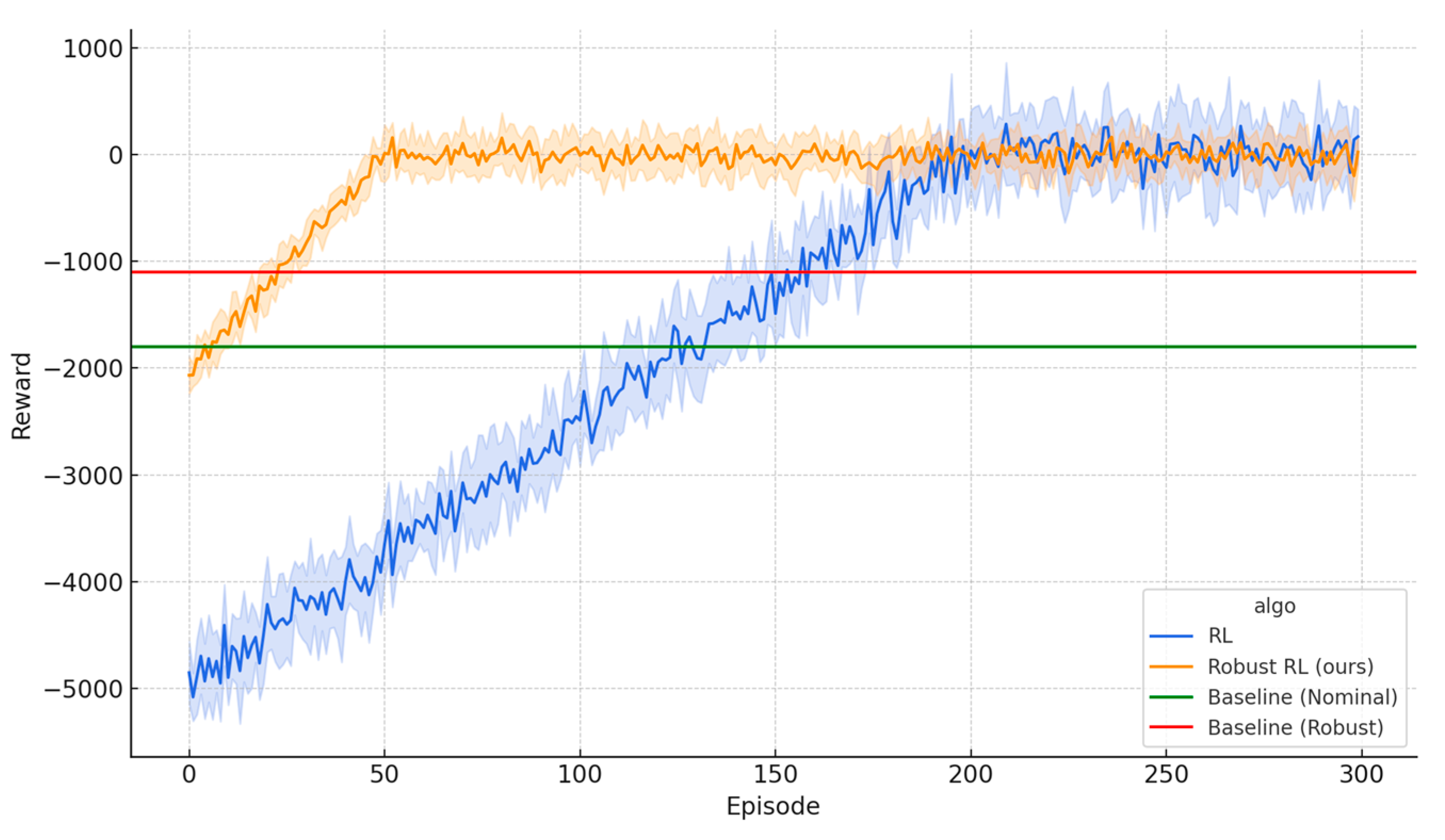

Figure 15 illustrates the reward trajectories over training episodes for two RL algorithms, classical RL (blue) and the proposed robust RL (orange), together with two baseline strategies: Nominal Baseline (green) and Robust Baseline (red). As shown, the robust RL algorithm rapidly converges toward higher reward values early in training and consistently outperforms both the classical RL and baseline approaches. While classical RL also improves steadily, it requires a greater number of episodes to achieve convergence.

The red and green horizontal lines represent benchmark performance levels under adverse and nominal conditions, respectively. The fact that both RL approaches surpass these benchmarks confirms the superiority of adaptive learning strategies over static methods. In particular, the proposed robust RL demonstrates both enhanced resilience to noise and volatility in the environment and the ability to achieve the highest ultimate reward, indicating the strength of its final policy in selecting optimal concrete compositions.

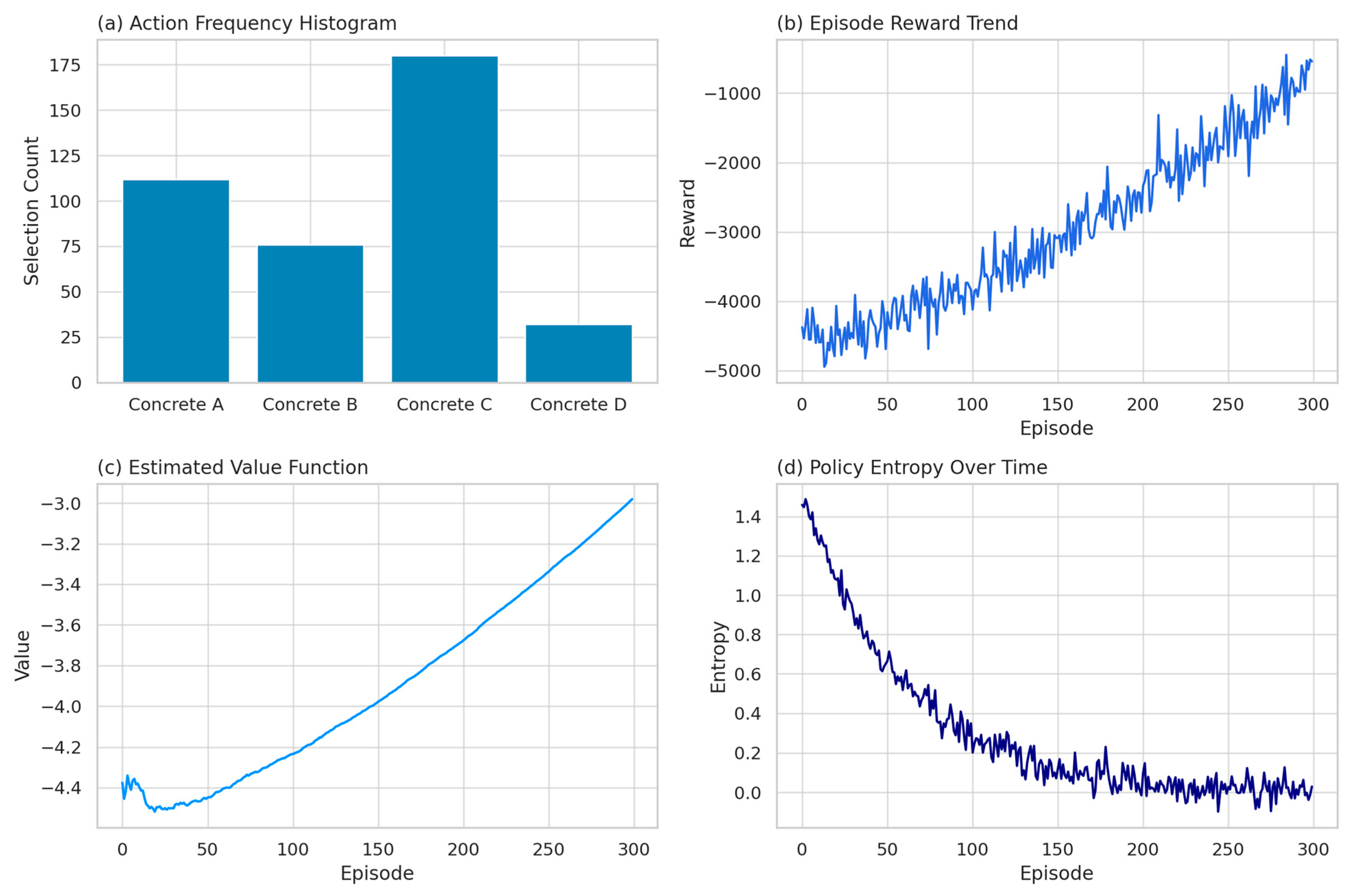

Figure 16 visualizes four essential aspects of the RL agent’s learning and decision-making processes, collectively evaluating its performance in terms of stability, policy improvement, and decision quality.

Subplot (a), the Action Frequency Histogram, illustrates the distribution of the agent’s decisions across four predefined concrete types (Concrete A to D). Following the completion of the training process, the agent demonstrates a pronounced preference for Concrete C, suggesting that this mixture likely achieves higher compressive strength or exhibits superior stability under varying conditions. Conversely, the relatively infrequent selection of Concrete D may indicate its lower predicted performance or higher associated risk. While the labels A to D are abstract identifiers within the learning environment, scientific interpretability necessitates a precise definition of each concrete composition.

Specifically, Concrete A comprises 340 kg/m³ of Portland cement and 180 kg/m³ of water, yielding a water-to-cement ratio of approximately 0.53, with no mineral admixtures. Concrete B is formulated with 300 kg/m³ of blended cement, incorporating 20% fly ash as a partial substitute, 165 kg/m³ of water, and 5 kg/m³ of superplasticizer. Concrete C, engineered for optimal strength, contains 400 kg/m³ of high-performance cement (including 10% silica fume) and 160 kg/m³ of water, giving a reduced w/c ratio of approximately 0.40, and is enhanced with 8 kg/m³ of superplasticizer. Finally, Concrete D is composed of 280 kg/m³ of standard cement with 30% slag replacement, 190 kg/m³ of water, and minimal additives, representing a cost-efficient mixture with comparatively lower performance. Such specification allows for rigorous interpretation of the agent’s preferences and links the decision behavior directly to engineering parameters.
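The quoted ratios can be checked directly from the stated quantities. In this sketch, the cement figures for Concrete B and D are treated as total binder including the replacement material, which is an assumption; the dictionary layout and names are illustrative.

```python
# Binder and water contents in kg/m^3, as quoted in the text.
mixes = {
    "A": {"binder": 340.0, "water": 180.0},
    "B": {"binder": 300.0, "water": 165.0},  # assumed to include the fly ash
    "C": {"binder": 400.0, "water": 160.0},
    "D": {"binder": 280.0, "water": 190.0},  # assumed to include the slag
}

def water_binder_ratio(mix):
    """Water content divided by binder content (dimensionless)."""
    return mix["water"] / mix["binder"]

ratios = {name: round(water_binder_ratio(mix), 2) for name, mix in mixes.items()}
print(ratios)  # {'A': 0.53, 'B': 0.55, 'C': 0.4, 'D': 0.68}
```

Concrete C has the lowest ratio and Concrete D the highest, in line with the agent's preference for C and its infrequent selection of D.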

Subplot (b), Episode Reward Trend, depicts the cumulative reward across training episodes. Initially, the agent incurs substantial negative rewards, indicative of poor or inefficient decisions. However, as training progresses and the agent gains more experience, rewards steadily increase and converge toward neutral or positive values. This upward trend signals continuous improvement in policy learning and increasingly effective selection of concrete mixes. The observed fluctuations are natural and result from the exploratory nature of the RL algorithm.

Subplot (c), Estimated Value Function, shows the evolution of the agent’s estimated value function over time. A consistent upward trajectory—from approximately −4.4 to near-zero—demonstrates improved accuracy in state-value estimation and better long-term reward prediction. This confirms the agent’s growing competence and confidence in its policy decisions.

Finally, subplot (d), Policy Entropy Over Time, reflects the level of uncertainty in the agent’s decision-making. High entropy in early episodes (around 1.4) corresponds to active exploration among available actions. A gradual decrease in entropy, approaching zero toward later episodes, suggests the stabilization of the learned policy and convergence toward deterministic, optimized decisions under various environmental states.
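The reported starting entropy is consistent with a near-uniform policy over four actions, since the Shannon entropy of a uniform distribution over four choices is ln 4 ≈ 1.39 when natural logarithms are used. The use of natural logarithms is an assumption here; the sketch below shows the calculation.

```python
import math

def policy_entropy(probs):
    """Shannon entropy (in nats) of an action-probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

uniform_policy = [0.25, 0.25, 0.25, 0.25]    # pure exploration over four mixes
deterministic_policy = [0.0, 0.0, 1.0, 0.0]  # always selects one mix
print(round(policy_entropy(uniform_policy), 3))  # prints 1.386
```

A deterministic policy has zero entropy, which corresponds to the late-training behavior described above.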

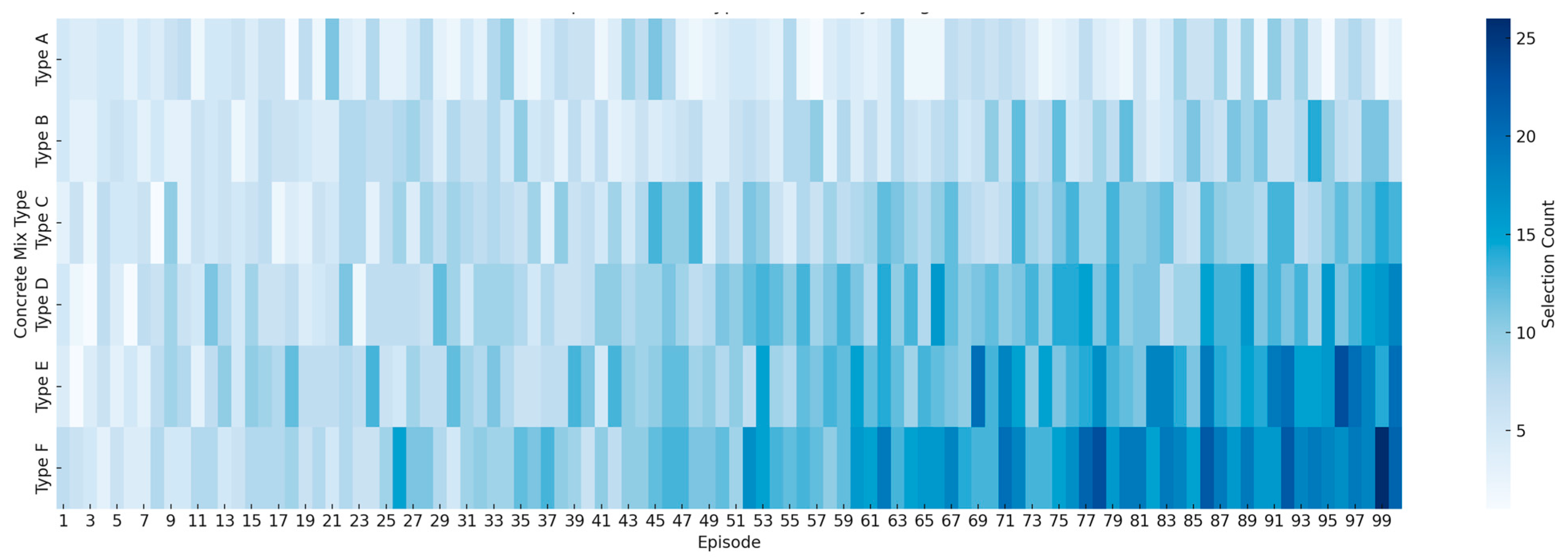

Figure 17 illustrates a heatmap representing the decisions made by the RL agent across 100 training episodes. In this visualization, the horizontal axis denotes the episode number, while the vertical axis corresponds to six different concrete mix types (Type A to Type F). The color intensity in each cell indicates how frequently a particular concrete type was selected by the agent in a specific episode, with darker shades signifying more frequent selections.

Analysis of this figure reveals that the RL agent initially exhibits a relatively uniform and scattered distribution of choices, indicative of the exploration phase during early training. As training progresses, a gradual convergence toward specific combinations, particularly Type E and especially Type F, is observed. This pattern implies that the agent has identified mix configurations yielding higher rewards and has accordingly adjusted its policy to favor repeated selection of those types. Concrete E is characterized by a low cement content (e.g., 130–170 kg/m³) and a high fly ash substitution (up to 200 kg/m³), combined with moderate water content (around 180 kg/m³) and the presence of superplasticizer (e.g., 4–6 kg/m³). This mix design reflects a cost-effective, eco-friendly alternative often used in large-volume or hot-weather concreting applications. Concrete F, in contrast, features a high cement dosage (e.g., above 500 kg/m³) and reduced water content (below 150 kg/m³), with enhanced flowability achieved through superplasticizer use (typically 7–9 kg/m³). This formulation targets high-performance structural applications where early strength and durability are critical.

A key insight from this figure is the agent’s adaptive and nonlinear decision-making behavior. For instance, in certain episodes, there is a notable increase in the selection of Types C and D, which later shifts toward a consistent preference for Type F. This shift suggests ongoing policy updates driven by the agent’s accumulated reward feedback. Such dynamics demonstrate the agent’s capacity to adapt its decisions in potentially variable or uncertain environments, ultimately prioritizing more robust concrete compositions.

Nevertheless, the manuscript must clarify the exact nature of each concrete type (A to F). It should be specified whether these labels represent actual formulations with defined component ratios or are abstract identifiers in a discretized decision space. Such clarification is essential for ensuring rigorous scientific interpretation and avoiding ambiguity in evaluating the agent’s policy performance.

Figure 18 presents the trend of validation mean squared error (MSE) over the course of training for three predictive models: the CNN-LSTM model (light blue dashed line), the XGBoost model (blue dotted line), and the proposed hybrid model that integrates CNN-LSTM and XGBoost (dark blue solid line). The horizontal axis represents the number of training episodes, while the vertical axis shows the MSE value.

According to the figure, the hybrid model consistently outperforms the standalone models throughout the training process. Particularly in the early episodes (0 to 30), the hybrid model shows a steeper decline in error, indicating faster convergence and greater stability. Furthermore, the final MSE of the hybrid model approaches zero and remains lower than that of the other two models at the end of training, suggesting better generalization and less overfitting.
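For reference, the validation MSE on the vertical axis is assumed to follow the standard definition below. The symbols are notation introduced here rather than taken from the manuscript: $n$ is the number of validation samples, $y_i$ the measured compressive strength, and $\hat{y}_i$ the model prediction.

$$
\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2
$$

Under this definition, an MSE approaching zero means that the predictions nearly coincide with the measured strengths on the validation set.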

This performance indicates that the integration of the CNN-LSTM architecture—capable of capturing temporal and spatial patterns in concrete data—with the nonlinear modeling strength of XGBoost successfully compensates for the individual limitations of each model. As a result, the hybrid architecture yields a highly accurate and stable prediction framework. Such an approach is particularly well-suited for complex systems like concrete compressive strength prediction, which is influenced by correlated and nonlinear variables.

It is important to emphasize that the proposed hybrid model (CNN-LSTM + XGBoost), whose predictive performance is assessed in Figure 18, serves as the reward function within the RL framework. In other words, the RL agent relies on the output of this hybrid model to evaluate and select optimal concrete mix compositions. Therefore, the high accuracy and stability demonstrated by the hybrid model, particularly its consistent reduction in validation MSE, directly influence the effectiveness of the policy learning process in RL. This structural integration creates a cohesive link between the predictive modeling and decision-making components of the study, ensuring that the RL agent is trained within a reliable and well-informed decision environment.

To rigorously evaluate the performance of the RL agent in selecting concrete mix designs, two distinct strategies were designed and implemented. Strategy 1 employs a hybrid architecture that combines deep learning (CNN-LSTM) for feature extraction and machine learning (XGBoost) for prediction. In this strategy, complex patterns and temporal dependencies in the concrete data are first extracted using a CNN-LSTM network. These extracted features are then passed to an XGBoost model for compressive strength prediction and reward computation. The final output of the hybrid model serves as the reward function for the RL agent. This approach not only facilitates the modeling of nonlinear and intricate relationships but also significantly enhances the accuracy and stability of the agent’s decisions by leveraging the hybrid structure.

In contrast, Strategy 2 represents a baseline approach that relies solely on the XGBoost model for prediction and reward determination. In this setting, the agent lacks mechanisms for deep feature extraction and instead makes decisions based solely on raw input variables and the tree-based model structure. This strategy was specifically designed to serve as a benchmark for evaluating the impact of combining deep learning architectures with traditional algorithms within RL frameworks.
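The structural difference between the two strategies can be sketched as follows. This is a minimal illustration, not the study's implementation: `make_reward_fn`, `toy_extractor`, and `toy_regressor` are hypothetical names, and the toy functions merely stand in for the trained CNN-LSTM feature extractor and the XGBoost regressor. The sketch also assumes the reward equals the predicted strength itself.

```python
from typing import Callable, List, Optional, Sequence

Mix = Sequence[float]  # one candidate mix design as raw input features


def make_reward_fn(
    predict_strength: Callable[[List[float]], float],
    extract_features: Optional[Callable[[Mix], List[float]]] = None,
) -> Callable[[Mix], float]:
    """Build a reward function from a predictor and an optional feature extractor."""

    def reward(mix: Mix) -> float:
        # Strategy 1 transforms the raw inputs first; Strategy 2 uses them directly.
        features = extract_features(mix) if extract_features else list(mix)
        return float(predict_strength(features))

    return reward


def toy_extractor(mix: Mix) -> List[float]:
    """Hypothetical stand-in for CNN-LSTM features: appends a cement/water ratio."""
    cement, water = mix[0], mix[1]
    return [cement, water, cement / water]


def toy_regressor(features: List[float]) -> float:
    """Hypothetical stand-in for the XGBoost predictor."""
    return 0.1 * sum(features)


strategy_1_reward = make_reward_fn(toy_regressor, toy_extractor)  # hybrid pipeline
strategy_2_reward = make_reward_fn(toy_regressor)                 # raw inputs only
```

Keeping the feature extractor optional lets both strategies share the same agent and environment code, so any difference in learned policy can be attributed to the reward model alone.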

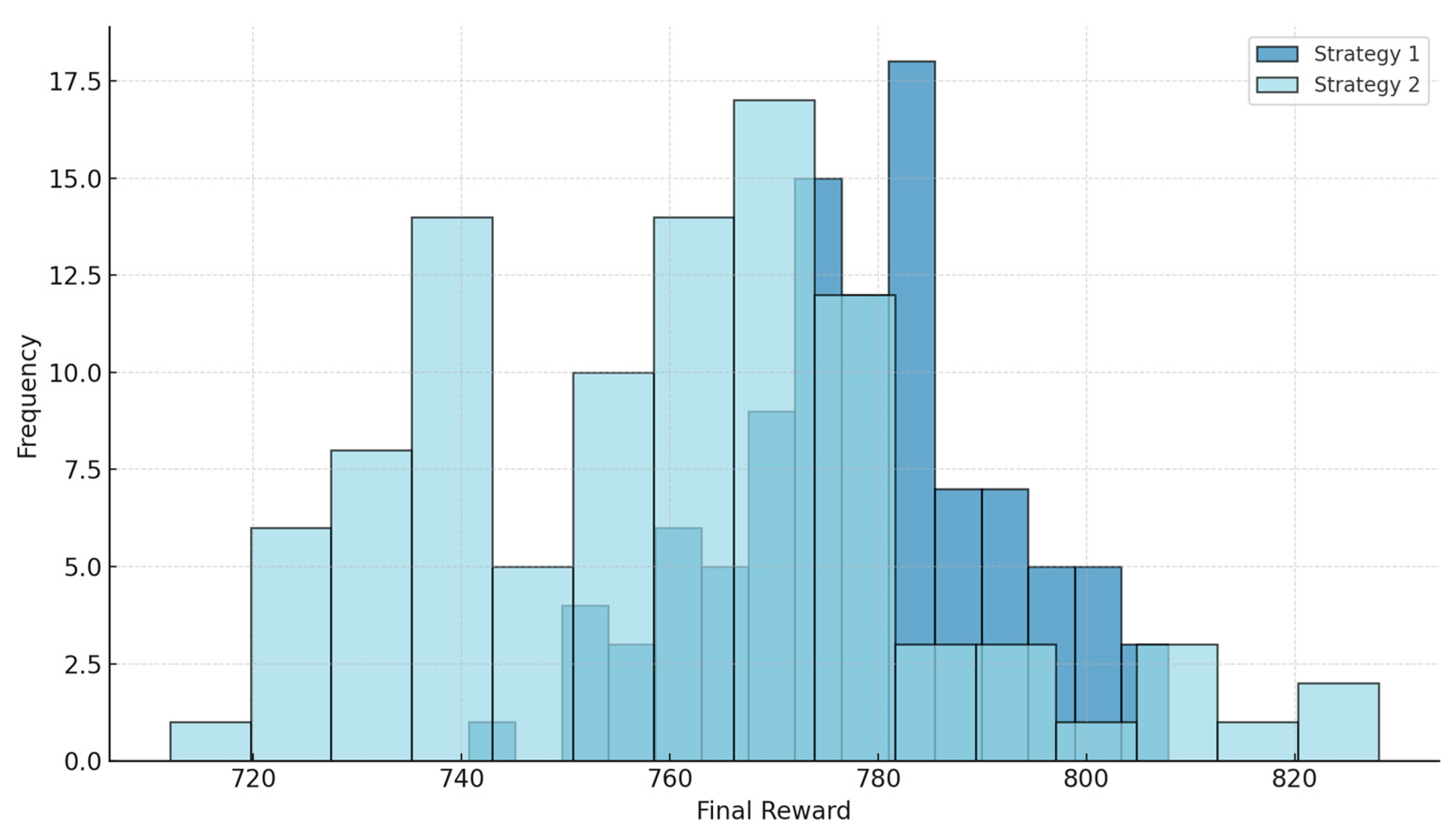

A comparative analysis of these two strategies is illustrated in Figure 19, which displays a dual-segment histogram of final reward distributions obtained across learning episodes. As shown, Strategy 1 exhibits a more homogeneous distribution, with higher concentration in the upper reward intervals and lower dispersion, whereas Strategy 2 demonstrates greater variance and a broader spread of final reward values. These contrasts indicate that the proposed hybrid approach (Strategy 1) not only achieves higher rewards but also develops a more stable, robust, and generalizable policy. These findings affirm the effectiveness of integrating deep learning and RL architectures in optimizing engineering decisions such as concrete mix selection.

Figure 19 illustrates the distribution of final rewards obtained from RL episodes under two different strategies. The horizontal axis represents the final reward value achieved in each learning episode, while the vertical axis indicates the frequency of occurrence within each reward range. The two histograms—distinguished by dark blue for Strategy 1 and light blue for Strategy 2—collectively demonstrate the extent to which each strategy succeeded in developing effective policies for concrete mix selection.

According to the chart, Strategy 1 not only achieves a higher average reward but also exhibits lower variance. The peak of this distribution is observed around 780, with most samples concentrated within the [770–800] range. This behavior indicates that Strategy 1 established a more stable, reliable, and optimally performing policy compared to Strategy 2. In contrast, the distribution of Strategy 2 is more dispersed, with a notable frequency in the [730–770] interval and instances of reward values even below 720. This suggests that although Strategy 2 may occasionally produce strong outcomes, its decision-making policy is less stable and more susceptible to variability.

From a statistical perspective, the distribution of Strategy 1 skews toward higher values and approximates a normal distribution, whereas Strategy 2 presents a more asymmetric and widely spread profile. These differences may stem from the disparity in modeling approaches—namely, the use of a hybrid deep learning structure in Strategy 1 versus a standalone classical algorithm in Strategy 2—as well as variations in training parameters and environmental conditions.

In summary, this histogram provides a clear and detailed comparison of the qualitative differences between the two decision-making strategies, showing that Strategy 1 outperforms in terms of final reward levels, consistency in decision-making, and reliability. Accordingly, Strategy 1 can be recommended as the preferred implementation for concrete mix selection under uncertainty.

Table 10 presents a numerical complement to the analysis of the two RL strategies by providing key statistical indicators, including the mean final reward, standard deviation, minimum, and maximum values for each strategy. According to Table 11, Strategy 1, which is based on the hybrid CNN-LSTM and XGBoost model, not only achieves a higher mean reward (783.75) but also exhibits a lower standard deviation (10.84), indicating greater consistency and stability in learning an optimal policy. In contrast, Strategy 2, despite demonstrating success in some episodes, suffers from greater dispersion and a lower mean reward (760.45) compared to Strategy 1, reflecting lower efficiency and coherence in its policy learning process.
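The indicators reported in the table can be computed from a list of per-episode final rewards as sketched below. The function name `summarize_rewards` and the example values are illustrative placeholders, not data from the study, and the sketch assumes the sample standard deviation; the manuscript does not state whether the sample or population form was used.

```python
import statistics
from typing import Dict, Sequence


def summarize_rewards(rewards: Sequence[float]) -> Dict[str, float]:
    """Return mean, sample standard deviation, minimum, and maximum of final rewards."""
    return {
        "mean": statistics.mean(rewards),
        "std": statistics.stdev(rewards),  # sample form (n - 1 in the denominator)
        "min": min(rewards),
        "max": max(rewards),
    }


# Placeholder rewards for illustration only (not the study's results).
example_summary = summarize_rewards([770.0, 780.0, 790.0])
```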

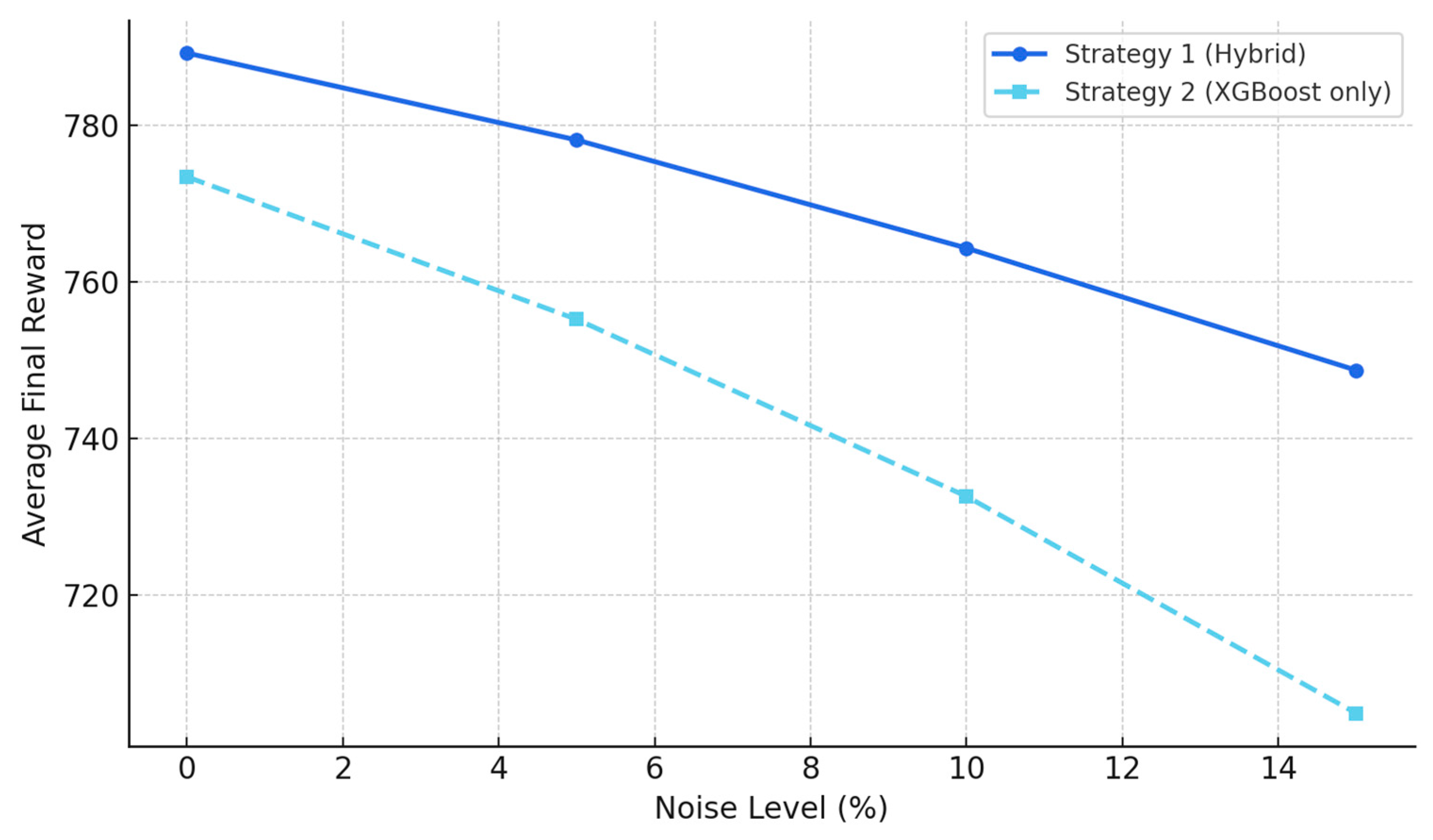

To complete the evaluation of the RL agent’s performance under realistic conditions, a robustness analysis was conducted to assess the stability of the two proposed strategies against input noise. This analysis aimed to examine the sensitivity of the learned decision policies to minor perturbations in environmental data. To this end, varying levels of noise (0%, 5%, 10%, and 15%) were added to the RL agent’s input values, and the average final reward was calculated for each strategy. The results are illustrated in Figure 20.

According to the figure, although both strategies experience a decline in performance as noise levels increase, the degradation is significantly more pronounced in Strategy 2 (based solely on the XGBoost model). For instance, when the noise level reaches 15%, the mean reward in Strategy 2 falls below 710, whereas Strategy 1 (the hybrid CNN-LSTM + XGBoost model) maintains a mean reward close to 750. This difference highlights that Strategy 1, owing to its hybrid architecture and capacity for extracting high-level features from input data, exhibits superior resilience to environmental noise.

Moreover, the near-linear and gradual decrease in reward observed in Strategy 1 indicates a stable performance trajectory in fluctuating conditions. This trait is particularly valuable in engineering applications—such as construction—where measurement inaccuracies are common. In contrast, the steeper and less predictable reward drop in Strategy 2 underscores the vulnerability of classical models that lack deep learning components when faced with uncertainty. Overall, the sensitivity analysis strongly supports the effectiveness and robustness of the proposed hybrid strategy in handling imperfect input data.
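A noise-sensitivity sweep of this kind can be sketched as below. The manuscript does not state how the noise was generated, so multiplicative Gaussian noise is an assumption here, as is evaluating the reward on the unperturbed state. The names `perturb`, `mean_reward_under_noise`, `toy_policy`, and `toy_reward` are hypothetical, and the toy policy and reward are placeholders for the trained agent and the reward model.

```python
import random
from typing import Callable, Dict, List, Sequence

NOISE_LEVELS = [0.0, 0.05, 0.10, 0.15]  # 0%, 5%, 10%, and 15%


def perturb(state: Sequence[float], level: float, rng: random.Random) -> List[float]:
    """Scale each input by (1 + e) with e ~ N(0, level). Assumed noise model."""
    return [x * (1.0 + rng.gauss(0.0, level)) for x in state]


def mean_reward_under_noise(
    policy: Callable[[Sequence[float]], int],
    reward_fn: Callable[[Sequence[float], int], float],
    states: Sequence[Sequence[float]],
    level: float,
    trials: int = 200,
    seed: int = 0,
) -> float:
    """Average reward when the policy only sees noisy versions of the states."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        for state in states:
            action = policy(perturb(state, level, rng))  # agent observes noisy input
            total += reward_fn(state, action)            # reward uses the true state
    return total / (trials * len(states))


def toy_policy(state: Sequence[float]) -> int:
    """Placeholder policy: choose type 1 for cement contents of 300 or more."""
    return 1 if state[0] >= 300.0 else 0


def toy_reward(state: Sequence[float], action: int) -> float:
    """Placeholder reward: 1 when the action matches the noise-free choice."""
    return 1.0 if action == toy_policy(state) else 0.0


toy_states = [[200.0], [290.0], [310.0], [400.0]]
results: Dict[float, float] = {
    level: mean_reward_under_noise(toy_policy, toy_reward, toy_states, level)
    for level in NOISE_LEVELS
}
```

Running the same sweep for each strategy, with its own trained policy and reward model, would produce one curve per strategy of the kind compared in the figure.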

Figure 21 presents the policy evolution map of the RL agent across 100 training episodes. The horizontal axis denotes the episode number (1 to 100), while the vertical axis represents a series of discrete environmental states (State 0 to State 9), each potentially corresponding to specific contextual settings such as initial material compositions or performance requirements of the concrete. The color intensity of each cell reflects the selected action in a given episode-state pair, where actions—ranging from 0 to 3—correspond to different concrete types.

During the early episodes (0 to 30), the map exhibits a scattered and heterogeneous pattern, indicative of the agent’s exploration phase. In this phase, the RL agent actively samples a broad range of actions across different states to evaluate their impact on outcomes. Although this behavior may appear unstable, it is a critical component of RL, ensuring the agent acquires sufficient experience across the decision space.

As training progresses—particularly between episodes 40 to 80—discernible action patterns begin to emerge. For instance, in certain states such as State 3 or State 7, the agent consistently selects a particular concrete type (e.g., Action 2 or 3), signaling the emergence of a quasi-optimal policy for those states. At this stage, the agent has learned that specific combinations yield higher rewards in certain conditions and shifts from random behavior toward goal-oriented decision-making.

In the final episodes (80 to 100), the map demonstrates increased regularity, indicating a stabilization of the agent’s policy. Most states are now associated with one or two dominant actions, reflecting the agent’s convergence to a confident and robust policy. Nevertheless, a few states still show variability in decisions, which may be attributed to environmental dynamics or the comparable performance of multiple concrete types.
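The exploration-to-exploitation pattern described above can be reproduced in miniature with the sketch below, which records the action taken in every episode-state pair. It assumes a tabular agent with a linearly decaying epsilon-greedy rule and a one-step value update, which may differ from the agent used in the study. `train_policy_map` and `toy_reward` are hypothetical names, and the reward is a placeholder.

```python
import random
from typing import Callable, List, Tuple

N_STATES, N_ACTIONS, N_EPISODES = 10, 4, 100


def train_policy_map(
    reward_fn: Callable[[int, int], float],
    alpha: float = 0.5,
    eps_start: float = 1.0,
    eps_end: float = 0.05,
    seed: int = 0,
) -> Tuple[List[List[int]], List[List[float]]]:
    """Return (policy_map, q), where policy_map[episode][state] is the action taken."""
    rng = random.Random(seed)
    q = [[0.0] * N_ACTIONS for _ in range(N_STATES)]
    policy_map: List[List[int]] = []
    for episode in range(N_EPISODES):
        # Exploration rate decays linearly from eps_start to eps_end.
        eps = eps_start + (eps_end - eps_start) * episode / (N_EPISODES - 1)
        row = []
        for state in range(N_STATES):
            if rng.random() < eps:
                action = rng.randrange(N_ACTIONS)  # explore
            else:
                action = max(range(N_ACTIONS), key=lambda a: q[state][a])  # exploit
            reward = reward_fn(state, action)
            # One-step update toward the observed reward.
            q[state][action] += alpha * (reward - q[state][action])
            row.append(action)
        policy_map.append(row)
    return policy_map, q


def toy_reward(state: int, action: int) -> float:
    """Placeholder reward: exactly one concrete type is best in each state."""
    return 1.0 if action == state % N_ACTIONS else 0.0


policy_map, q_table = train_policy_map(toy_reward)
```

Plotting `policy_map` as a heatmap, with episodes on the horizontal axis and states on the vertical axis, gives a map of the same layout as the figure: scattered actions while the exploration rate is high, and more regular rows as it decays.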

Overall, the policy evolution map in Figure 21 offers clear evidence of the RL agent’s learning trajectory, from exploration to exploitation, and of the gradual optimization of decisions across various states. When interpreted alongside prior diagrams, this map provides a comprehensive view of the internal decision-making mechanics of the proposed model and highlights the agent’s ability to develop policies with high generalizability and operational stability.