Abstract

This paper proposes a hybrid harmony search algorithm for continuous optimization problems. The algorithm reinitializes the harmony memory using a particle swarm optimization algorithm combined with an improved opposition-based learning method (IOBL). This mechanism allows the algorithm to obtain better results by improving the exploration of the solution space. The approach was validated by comparing the proposed algorithm with a state-of-the-art harmony search algorithm on fifteen standard mathematical functions, using the Wilcoxon non-parametric test at a 5% significance level. The state-of-the-art algorithm also uses the improved opposition-based learning method (IOBL). Computational experiments show that the proposed algorithm outperforms the state-of-the-art algorithm: in quality, it is better in fourteen of the fifteen instances, and in efficiency, it is better in seven of the fifteen instances.

1. Introduction

Today, society demands maximum benefits at minimum cost, and optimization techniques are generally used to achieve this. However, many real-world optimization problems have high computational complexity and are classified as NP-hard. For such problems, exact methods cannot obtain optimal solutions in reasonable time. Metaheuristics are a good alternative because they offer the user satisfactory solutions in reasonable time. Harmony search, proposed by Zong Woo Geem and colleagues [1], is a metaheuristic for solving continuous optimization problems.

Harmony search is inspired by the process of musical improvisation. In this process, a predefined number of musicians tune the pitch of their instruments until they achieve a pleasing harmony. In nature, harmony is a relationship between several sound waves of different frequencies. The quality of an improvised harmony is judged by aesthetic estimation. To improve this aesthetic evaluation and find the best harmony, musicians perform multiple rehearsals [2].

Harmony search algorithms are currently considered a competitive alternative for solving many optimization problems. They also have several advantages over other state-of-the-art metaheuristics; for example, they require tuning only a relatively small number of parameters [3,4,5].

In [6], the authors propose a harmony search algorithm that uses an improved opposition-based learning (OBL) mechanism, called IOBL. This improved version uses randomness to create new candidate solutions and to improve the convergence of the algorithm. In addition, the IOBL mechanism is used in the update process.

The literature contains improved versions of harmony search that borrow features from the particle swarm optimization (PSO) algorithm [7,8]. One of them is global-best harmony search, proposed by Omran and Mahdavi [9]. It modifies the pitch adjustment in the improvisation step so that each new harmony is generated close to the best harmony in the harmony memory (HM). This mechanism is inspired by the particle update in PSO, which is guided by the best position the particle itself has visited and by the position of the best particle in the swarm. For his part, Geem proposed the particle-swarm harmony search algorithm [10]. It takes the contribution of Omran and Mahdavi [9] and adds it to the improvisation step as a fourth way of generating a new harmony, called particle-swarm consideration, while keeping the original pitch adjustment. Both algorithms apply the PSO-inspired mechanism in the improvisation step. In contrast, the hybrid harmony search algorithm proposed here uses a version of PSO that incorporates the IOBL technique (PSO-IOBL). PSO-IOBL regenerates part of the harmony memory (HM) each time the harmony search process exceeds the allowed stagnation percentage.

The main contributions of this work are:

- A new harmony search optimization algorithm to solve continuous functions.

- A new harmony memory reset mechanism applying a particle swarm optimization algorithm to improve the quality of the solutions.

- The incorporation of the improved opposition-based learning technique (IOBL) in the process of reinitializing the memory of harmonies.

2. Basic Harmony Search Algorithm

In a harmony search algorithm (Algorithm 1), a harmony is a feasible solution, and each decision variable of a harmony is called a note [1]. A set of HMS harmonies is stored in the harmony memory (HM). Assuming that the goal is to minimize or maximize an objective (fitness) function $f$ of the decision variables $x$, the problem is defined in Equation (1):

$$\min \text{ or } \max \; f(x_1, x_2, \ldots, x_D) \tag{1}$$

where $f$ is the objective function, $x_i$ ($i = 1, 2, \ldots, D$) is the $i$-th decision variable, and $D$ denotes the dimension of the problem.

In general terms, the harmony search algorithm consists of the following steps:

1. Initialize the harmony memory (HM).
2. Improvise a new harmony.
3. Decide whether the new harmony is included in or excluded from HM.
4. Repeat steps 2 and 3 until the stopping criterion is met, then go to step 5.
5. Return the best harmony stored in HM as the best solution found.

| Algorithm 1. Harmony search |
|---|
| Inputs: MaxIt: maximum number of iterations, HMS: harmony memory size, nNew: size of the memory of new harmonies, HMCR: harmony memory considering rate, PAR: pitch adjustment rate, bw: bandwidth, bw_damp: bandwidth damping ratio, D: number of dimensions, Cost: calculation of the objective function, Xnew: new harmony, u: upper bound, l: lower bound, NEW: new set of harmonies, HM: harmony memory. |
| Outputs: bestSol: best solution found. |
| Used functions: random_number [0,1]: generates a random value between 0 and 1. |
| 1. Initialize new random harmony memory HM of size HMS |
| 2. Sort HM by Cost |
| 3. bestSol = HM(1) |
| 4. for it = 1 to MaxIt do |
| 5. for k = 1 to nNew do |
| 6. Generate new random harmony Xnew |
| 7. for j = 1 to D do |
| 8. r = random_number [0,1] |
| 9. if r ≤ HMCR then |
| 10. Randomly select harmony X[i] stored in HM |
| 11. X[new,j] = X[i,j] |
| 12. end if |
| 13. r = random_number [0,1] |
| 14. if r < PAR then |
| 15. X[new,j] = X[new,j] + bw × random_number [0,1] × \|u[j] − l[j]\| |
| 16. end if |
| 17. end for |
| 18. Evaluate(Xnew) |
| 19. Add Xnew to memory of new harmonies NEW |
| 20. end for |
| 21. HM = HM ∪ NEW |
| 22. Sort HM by Cost |
| 23. Truncate HM to HMS harmonies |
| 24. bestSol = HM(1) |
| 25. bw = bw × bw_damp |
| 26. end for |
| 27. return bestSol |
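The following is a minimal Python sketch of Algorithm 1 for a minimization problem. It illustrates the listing under stated assumptions and is not the authors' implementation. The parameter defaults are arbitrary placeholders, not the values in Table 1. The bounds u and l are passed as `upper` and `lower`. After pitch adjustment, each note is clipped to its bounds, which is an added assumption because the listing does not include that step.

```python
import random
from typing import Callable, List, Sequence, Tuple


def harmony_search(
    cost: Callable[[Sequence[float]], float],
    lower: Sequence[float],
    upper: Sequence[float],
    max_it: int = 200,
    hms: int = 20,
    n_new: int = 20,
    hmcr: float = 0.9,
    par: float = 0.1,
    bw: float = 0.02,
    bw_damp: float = 0.995,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimal sketch of Algorithm 1 for a minimization problem."""
    rng = random.Random(seed)
    d = len(lower)

    def random_harmony() -> List[float]:
        return [rng.uniform(lower[j], upper[j]) for j in range(d)]

    # Steps 1-3: random harmony memory as (cost, harmony) pairs, best first.
    hm = sorted(((cost(x), x) for x in (random_harmony() for _ in range(hms))),
                key=lambda item: item[0])

    for _ in range(max_it):  # step 4
        new = []  # memory of new harmonies NEW
        for _ in range(n_new):  # step 5
            x_new = random_harmony()  # step 6
            for j in range(d):  # step 7
                if rng.random() <= hmcr:  # steps 8-12: memory consideration
                    x_new[j] = rng.choice(hm)[1][j]
                if rng.random() < par:  # steps 13-16: pitch adjustment
                    x_new[j] += bw * rng.random() * abs(upper[j] - lower[j])
                # Assumption: keep the note inside its bounds (not in the listing).
                x_new[j] = min(max(x_new[j], lower[j]), upper[j])
            new.append((cost(x_new), x_new))  # steps 18-19
        # Steps 21-23: merge HM and NEW, sort by cost, truncate to HMS.
        hm = sorted(hm + new, key=lambda item: item[0])[:hms]
        bw *= bw_damp  # step 25
    best_cost, best_x = hm[0]
    return best_x, best_cost  # step 27
```

For example, `harmony_search(lambda x: sum(v * v for v in x), [-5.0] * 5, [5.0] * 5)` minimizes a five-dimensional sphere function. Keeping HM as (cost, harmony) pairs lets steps 21 to 23 collapse into one sort-and-slice.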

3. Improved Opposition-Based Learning (IOBL)

In [6], a new, improved version of the original OBL (IOBL) is presented. It introduces randomness to increase solution diversity, and this improvement yields better performance on continuous optimization problems. Within the harmony search algorithm, this version is used in the update process to allow better exploitation of the solution space (Algorithm 2).

The technique starts by generating a random value r ∈ [0,1], which multiplies each value stored in the current solution vector X[D]. The objective value of each modified element is then calculated and compared with the objective value before the modification. If it is lower, the value at the current position of the array is updated; otherwise, the technique advances to the next position. The process ends when all the values of the solution vector have been visited.

| Algorithm 2. Improved opposition-based learning (IOBL) |
|---|
| Inputs: X[D] = (x1, x2, …, xD): initial solution, D: number of dimensions. |
| Outputs: X[D] = (x1, x2, …, xD): improved solution. |
| Used functions: random_number [0,1]: generates a random value between 0 and 1, objectivefunction(X): calculates the objective value of X. |
| 1. r = random_number [0,1] |
| 2. for i = 1 to D do |
| 3. X′[i] = X[i] × r |
| 4. end for |
| 5. if objectivefunction(X′) < objectivefunction(X) then |
| 6. X = X′ |
| 7. end if |
| 8. return X |
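The sketch below shows one possible Python reading of IOBL for a minimization problem. The listing in Algorithm 2 accepts or rejects the whole scaled vector. The prose above, by contrast, evaluates each modified element separately. The function therefore exposes both behaviours through a hypothetical `elementwise` flag, which is an illustrative choice and not part of the original method. Note that `random.Random.random()` draws r from [0, 1).

```python
import random
from typing import Callable, List, Sequence


def iobl(
    x: Sequence[float],
    cost: Callable[[Sequence[float]], float],
    rng: random.Random,
    elementwise: bool = False,
) -> List[float]:
    """Sketch of the IOBL step for a minimization problem.

    elementwise=False mirrors the Algorithm 2 listing: all coordinates are
    scaled by one random r and the scaled vector is kept only if its cost is
    lower. elementwise=True mirrors the prose: coordinates are scaled one at
    a time and each change is kept only if it lowers the cost.
    """
    r = rng.random()  # step 1: one random value r in [0, 1)
    best = list(x)
    best_cost = cost(best)
    if not elementwise:
        candidate = [v * r for v in best]  # steps 2-4
        return candidate if cost(candidate) < best_cost else best  # steps 5-8
    for i in range(len(best)):
        trial = list(best)
        trial[i] = best[i] * r
        trial_cost = cost(trial)
        if trial_cost < best_cost:  # keep the scaled coordinate only if it helps
            best, best_cost = trial, trial_cost
    return best
```

Because r lies in [0, 1), both variants can only move coordinates toward zero. The acceptance test ensures that an IOBL step never worsens the solution.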

4. General Structure of the Hybrid Harmony Search Algorithm (HHS-IOBL)

This section describes the elements that make up the hybrid algorithm proposed in this research, called hybrid harmony search with improved opposition-based learning (HHS-IOBL).

4.1. Algorithm Parameters

Table 1 describes the parameters and variables used in the HHS-IOBL and HS-IOBL algorithms.

Table 1.

Parameters and variables used.

4.2. Particle Swarm Optimization Algorithm with IOBL (PSO-IOBL)

This paper proposes using a particle swarm optimization algorithm [7,8] (Algorithm 3) to reinitialize the harmony memory. Its primary function is to aid the convergence of the harmony search process, and it also uses the IOBL technique to enable better exploration of the solution space. The algorithm proceeds as follows:

- Steps 1 and 2: an initial population of particles is generated from HM, and the best global particle is identified by its objective value.
- Step 3: the minimum (velMin) and maximum (velMax) velocities with which the particles move through the solution space are calculated.
- Steps 4 to 13: the velocity and position of each particle are updated and kept within their limits.
- Step 14: the objective function of each particle is evaluated.
- Steps 15 to 22: each particle's best position is updated and compared with the global solution, and the global solution is updated if the particle is better.
- Steps 23 to 35: the IOBL technique is applied to each particle and the objective value of the improved particle is calculated. If it is better than the particle's best value, the particle is replaced; if it is also better than the global solution, the global solution is replaced. The inertia weight (w) is then updated.

This process continues until the maximum number of iterations (MaxIt) is reached. Finally, in step 36, the algorithm returns the best overall solution found during the process.

| Algorithm 3. Particle swarm optimization with IOBL (PSO-IOBL) |
|---|
| Inputs: MaxIt: maximum number of iterations, nPop: population size, w: inertia weight, wdamp: inertia weight damping ratio, c1: personal learning coefficient, c2: global learning coefficient, D: number of dimensions, varMin: minimum value a decision variable can take, varMax: maximum value a decision variable can take, HM: harmony memory. |
| Outputs: GlobalBest: best solution found. |
| Used functions: InitializePopulation(HM): generates an initial population from HM, random_number [0,1]: generates a random value between 0 and 1, getBestGlobal(particles): obtains the best global particle, Max(a, b): returns the larger of two values, Min(a, b): returns the smaller of two values, IOBL(particle): applies the improved opposition-based learning technique (Algorithm 2), Evaluate(particle): calculates the objective function of a particle. |
| 1: particles = InitializePopulation(HM) |
| 2: GlobalBest = getBestGlobal(particles) |
| 3: calculate velMin and velMax |
| 4: for it = 1 to MaxIt do |
| 5: for i = 1 to nPop do |
| 6: for j = 1 to D do |
| 7: particles[i].velocity[j] = w × particles[i].velocity[j] + c1 × random_number [0,1] × (particles[i].bestPosition[j] − particles[i].position[j]) + c2 × random_number [0,1] × (GlobalBest.position[j] − particles[i].position[j]) |
| 8: particles[i].velocity[j] = Max(particles[i].velocity[j], velMin) |
| 9: particles[i].velocity[j] = Min(particles[i].velocity[j], velMax) |
| 10: particles[i].position[j] = particles[i].position[j] + particles[i].velocity[j] |
| 11: particles[i].position[j] = Max(particles[i].position[j], varMin) |
| 12: particles[i].position[j] = Min(particles[i].position[j], varMax) |
| 13: end for |
| 14: Evaluate(particles[i]) |
| 15: if particles[i].cost < particles[i].bestCost then |
| 16: particles[i].bestPosition = particles[i].position |
| 17: particles[i].bestCost = particles[i].cost |
| 18: if particles[i].bestCost < GlobalBest.cost then |
| 19: GlobalBest = particles[i] |
| 20: end if |
| 21: end if |
| 22: end for |
| 23: for i = 1 to nPop do |
| 24: primeParticle = IOBL(particles[i]) |
| 25: Evaluate(primeParticle) |
| 26: if primeParticle.cost < particles[i].bestCost then |
| 27: particles[i] = primeParticle |
| 28: particles[i].bestPosition = particles[i].position; particles[i].bestCost = particles[i].cost |
| 29: if particles[i].cost < GlobalBest.cost then |
| 30: GlobalBest = particles[i] |
| 31: end if |
| 32: end if |
| 33: end for |
| 34: w = w × wdamp |
| 35: end for |
| 36: return GlobalBest |
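A compact Python sketch of Algorithm 3 follows, assuming minimization. The listing leaves several details open, so the following are assumptions rather than the authors' settings:

- Each harmony in HM becomes one particle with zero initial velocity.
- velMax is 10% of the variable range, and velMin = −velMax.
- The defaults for w, wdamp, c1, and c2 are placeholders, not the values in Table 1.

The IOBL helper is the whole-vector variant of Algorithm 2.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Cost = Callable[[Sequence[float]], float]


@dataclass
class Particle:
    position: List[float]
    velocity: List[float]
    cost: float
    best_position: List[float]
    best_cost: float


def iobl(x: Sequence[float], cost: Cost, rng: random.Random) -> List[float]:
    """Algorithm 2 listing: scale x by one random r, keep it only if better."""
    r = rng.random()
    candidate = [v * r for v in x]
    return candidate if cost(candidate) < cost(x) else list(x)


def pso_iobl(hm: Sequence[Sequence[float]], cost: Cost, var_min: float,
             var_max: float, max_it: int = 50, w: float = 1.0,
             wdamp: float = 0.99, c1: float = 1.5, c2: float = 2.0,
             seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    # Steps 1-2 (assumption: one particle per harmony, zero initial velocity).
    particles = [Particle(list(x), [0.0] * len(x), cost(x), list(x), cost(x))
                 for x in hm]
    best = min(particles, key=lambda p: p.best_cost)
    g_pos, g_cost = list(best.best_position), best.best_cost
    # Step 3 (assumption): velocity limits of +/-10% of the variable range.
    vel_max = 0.1 * (var_max - var_min)
    vel_min = -vel_max
    for _ in range(max_it):  # step 4
        for p in particles:  # steps 5-22
            for j in range(len(p.position)):
                p.velocity[j] = (w * p.velocity[j]
                                 + c1 * rng.random() * (p.best_position[j] - p.position[j])
                                 + c2 * rng.random() * (g_pos[j] - p.position[j]))
                p.velocity[j] = min(max(p.velocity[j], vel_min), vel_max)
                p.position[j] = min(max(p.position[j] + p.velocity[j], var_min), var_max)
            p.cost = cost(p.position)
            if p.cost < p.best_cost:
                p.best_position, p.best_cost = list(p.position), p.cost
                if p.best_cost < g_cost:
                    g_pos, g_cost = list(p.best_position), p.best_cost
        for p in particles:  # steps 23-33: IOBL applied to every particle
            prime = iobl(p.position, cost, rng)
            prime_cost = cost(prime)
            if prime_cost < p.best_cost:
                p.position, p.cost = prime, prime_cost
                p.best_position, p.best_cost = list(prime), prime_cost
                if prime_cost < g_cost:
                    g_pos, g_cost = list(prime), prime_cost
        w *= wdamp  # step 34
    return g_pos, g_cost  # step 36
```

Because the global best is initialized from HM and is only ever replaced by a strictly better solution, the returned cost is never worse than the best harmony in HM.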

4.3. Hybrid Harmony Search Algorithm (HHS-IOBL)

This paper proposes hybridizing a harmony search algorithm with a particle swarm algorithm that incorporates the IOBL technique. The particle swarm algorithm reinitializes the harmony memory when the search stagnates locally, that is, when the best objective value does not improve for a certain number of iterations.

The harmony memory reinitialization mechanism (Algorithm 4) replaces up to a percentage ζ of the harmonies stored in HM. A new solution is generated by the particle swarm algorithm, which receives the harmony memory as its initial population. This solution is compared with a harmony HM[i] selected at random from HM, and it replaces HM[i] if it has a better objective value. Adding this mechanism to the basic harmony search algorithm transforms it into HHS-IOBL (Algorithm 5).

A simple stagnation-detection mechanism is also added to the main iteration loop:

- After HM is merged with NEW, sorted, and truncated (steps 22 to 24), the algorithm checks whether HM(1) is equal to bestSol. If so, the stagnation counter is incremented; otherwise, it is reset to zero (steps 26 to 30).
- When stagnation ≥ MaxIt × ς, the harmony memory reinitialization mechanism is executed, the stagnation counter is reset to zero, and HM is sorted by objective value (steps 31 to 36).

This process continues until MaxIt iterations have been performed, after which the best solution found, bestSol, is returned.

| Algorithm 4. Reinitializing harmony memory |
|---|
| Inputs: ζ: percentage of population replacement, HM: harmony memory, HMS: harmony memory size. |
| Outputs: HM: new harmony memory. |
| Used functions: random_integer [1, HMS]: generates a random integer between 1 and HMS, PSO_IOBL(HM): calculates a new solution using the PSO-IOBL algorithm (Algorithm 3). |
| 1. numRegen = ζ × HMS |
| 2. for i = 1 to numRegen do |
| 3. index = random_integer [1, HMS] |
| 4. newSolution = PSO_IOBL(HM) |
| 5. if newSolution.cost < HM[index].cost then |
| 6. HM[index] = newSolution |
| 7. end if |
| 8. end for |
| 9. return HM |
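The replacement logic of Algorithm 4 can be sketched in Python as follows, assuming minimization. To keep the sketch self-contained, the PSO-IOBL call is abstracted as a hypothetical `new_solution` callback. Representing each harmony as a (cost, position) pair and truncating ζ × HMS to an integer are also assumptions.

```python
import random
from typing import Callable, List, Tuple

Harmony = Tuple[float, List[float]]  # (cost, decision variables)


def reinitialize_harmony_memory(
    hm: List[Harmony],
    zeta: float,
    new_solution: Callable[[List[Harmony]], Harmony],
    rng: random.Random,
) -> List[Harmony]:
    """Sketch of Algorithm 4 (minimization); `hm` is modified in place.

    `new_solution` is a hypothetical stand-in for PSO_IOBL(HM): it receives
    the current memory and returns a (cost, position) pair.
    """
    num_regen = int(zeta * len(hm))  # step 1 (truncation is an assumption)
    for _ in range(num_regen):  # step 2
        index = rng.randrange(len(hm))  # step 3, using a 0-based index
        cost, position = new_solution(hm)  # step 4
        if cost < hm[index][0]:  # steps 5-7: replace only if better
            hm[index] = (cost, position)
    return hm
```

In a full implementation, `new_solution` would run PSO-IOBL with the current harmonies as the initial population and return its global best. Each of the numRegen iterations runs a complete PSO-IOBL search, so ζ directly scales the cost of a reinitialization.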

| Algorithm 5. Hybrid harmony search with IOBL (HHS-IOBL) |
|---|
| Inputs: MaxIt: maximum number of iterations, HMS: harmony memory size, nNew: size of the memory of new harmonies, HMCR: harmony memory considering rate, PAR: pitch adjustment rate, bw: bandwidth, bw_damp: bandwidth damping ratio, D: number of dimensions, u: upper bound, l: lower bound, ζ: percentage of HM replacement, ς: percentage of stagnation allowed. |
| Outputs: bestSol: best solution found. |
| Used functions: Cost: calculation of the objective function, random_number [0,1]: generates a random value between 0 and 1, reinitializingHarmonyMemory(HM): reinitializes part of HM (Algorithm 4). |
| 1. Initialize new random harmony memory HM of size HMS |
| 2. stagnation = 0 |
| 3. Sort HM by Cost |
| 4. bestSol = HM(1) |
| 5. for it = 1 to MaxIt do |
| 6. for k = 1 to nNew do |
| 7. Generate new random harmony Xnew |
| 8. for j = 1 to D do |
| 9. r = random_number [0,1] |
| 10. if r ≤ HMCR then |
| 11. Randomly select harmony X[i] stored in HM |
| 12. X[new,j] = X[i,j] |
| 13. end if |
| 14. r = random_number [0,1] |
| 15. if r < PAR then |
| 16. X[new,j] = X[new,j] + bw × random_number [0,1] × \|u[j] − l[j]\| |
| 17. end if |
| 18. end for |
| 19. Evaluate(Xnew) |
| 20. Add Xnew to memory of new harmonies NEW |
| 21. end for |
| 22. HM = HM ∪ NEW |
| 23. Sort HM by Cost |
| 24. Truncate HM to HMS harmonies |
| 25. if it > 1 then |
| 26. if HM(1) = bestSol then |
| 27. stagnation = stagnation + 1 |
| 28. else |
| 29. stagnation = 0 |
| 30. end if |
| 31. if stagnation ≥ MaxIt × ς then |
| 32. reinitializingHarmonyMemory(HM) |
| 33. stagnation = 0 |
| 34. Sort HM by Cost |
| 35. end if |
| 36. end if |
| 37. bestSol = HM(1) |
| 38. bw = bw × bw_damp |
| 39. end for |
| 40. return bestSol |
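The stagnation test that distinguishes Algorithm 5 from Algorithm 1 is small enough to isolate, and the Python class below sketches it. It is a hypothetical helper, not part of the original code, and it makes three choices:

- It checks whether HM(1) equals bestSol by comparing best objective values rather than whole harmonies.
- It uses ≥ against MaxIt × ς, following the description in Section 4.3.
- It resets the counter after each reinitialization.

The `reinitialize` callback stands in for the call to Algorithm 4 followed by re-sorting HM.

```python
from typing import Callable


class StagnationMonitor:
    """Sketch of the stagnation bookkeeping in Algorithm 5 (minimization)."""

    def __init__(self, max_it: int, varsigma: float,
                 reinitialize: Callable[[], None]) -> None:
        self.limit = max_it * varsigma  # MaxIt x varsigma
        self.reinitialize = reinitialize  # e.g. Algorithm 4, then sort HM
        self.stagnation = 0  # step 2

    def update(self, it: int, current_best: float, previous_best: float) -> bool:
        """Call once per iteration after HM is merged, sorted, and truncated.

        Returns True when the harmony memory reinitialization was triggered.
        """
        if it <= 1:  # step 25: the test starts at the second iteration
            return False
        if current_best == previous_best:  # steps 26-27: no improvement
            self.stagnation += 1
        else:  # steps 28-29: an improvement resets the counter
            self.stagnation = 0
        if self.stagnation >= self.limit:  # step 31
            self.reinitialize()  # step 32
            self.stagnation = 0  # counter reset described in Section 4.3
            return True
        return False
```

Inside the main loop, `monitor.update(it, best_cost_in_hm, best_sol_cost)` would be called after HM is truncated and before bestSol is updated.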

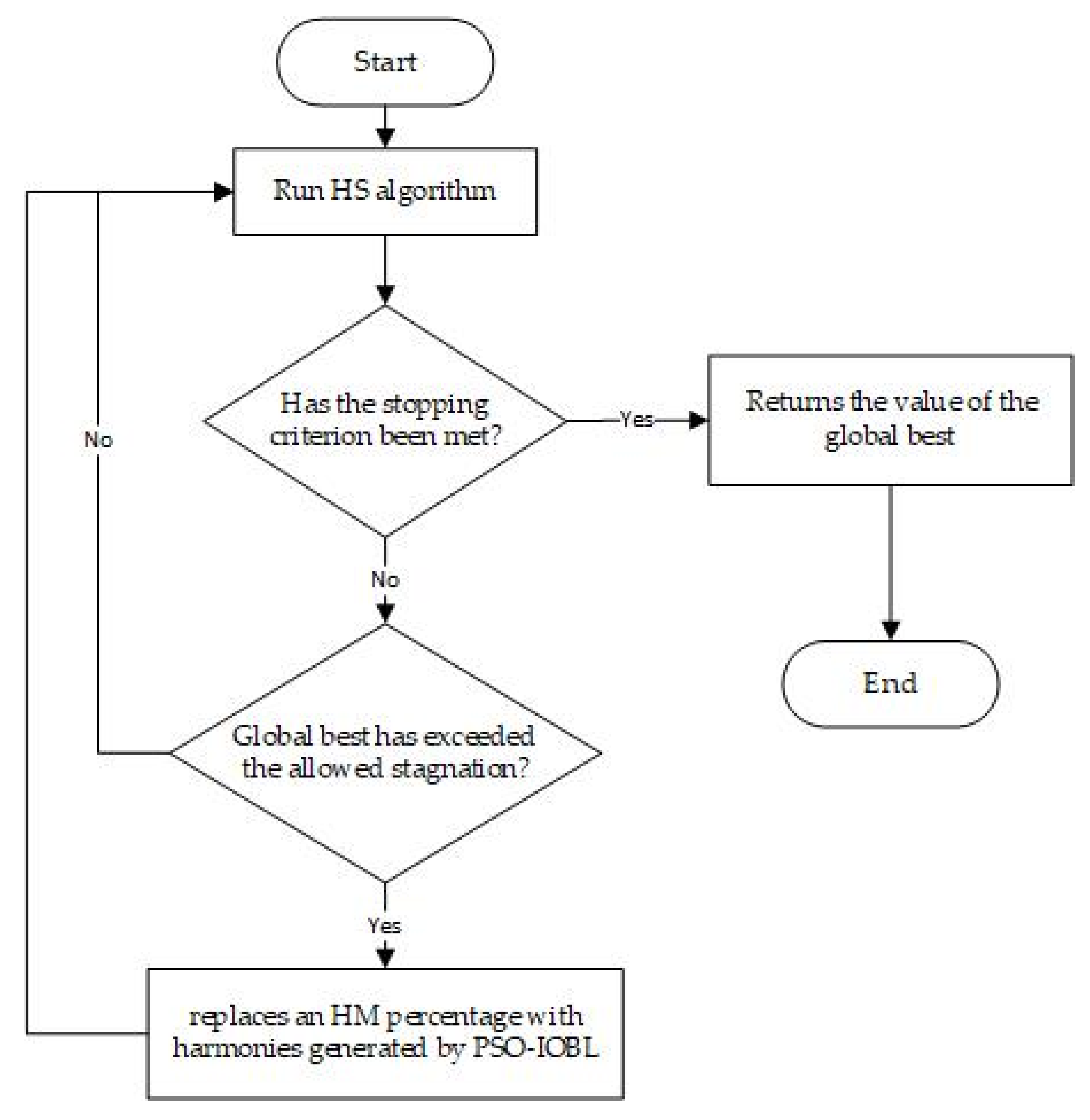

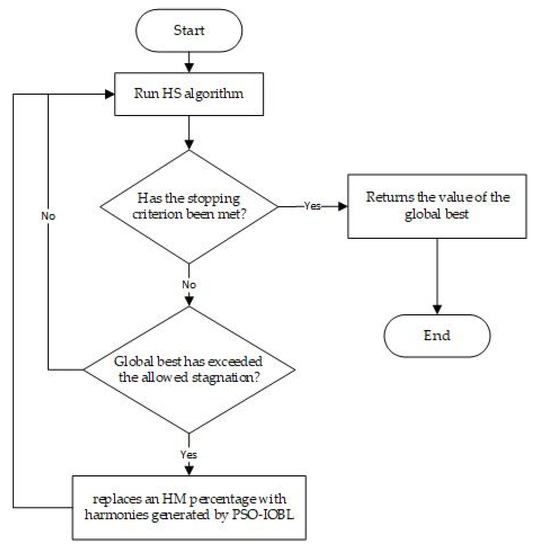

Figure 1 summarizes this process and shows, at a general level, how HS and PSO-IOBL interact.

Figure 1.

General scheme of the HHS algorithm.

5. Computational Experiments

Table 2 shows the fifteen mathematical functions used in this work, their optimal values, and the variable ranges used in the experiments. The first nine functions were taken from [6] and the last six from [11]. For each algorithm and function, 30 independent runs were performed. The experiments were run on a computer with an AMD Ryzen 7 3700U processor (4 cores, 8 threads) and 8 GB of RAM.

Table 2.

Mathematical functions used.

6. Results

Table 3 shows the results obtained with the proposed hybrid algorithm (HHS-IOBL) and with the harmony search algorithm that incorporates the IOBL technique (HS-IOBL). The columns are as follows:

- Column 1: the fifteen functions used in the experiments.
- Column 2: the worst, best, and average measurements.
- Columns 3 and 4: the objective values (OV) and the times at which the best solution is found (t Best) for HHS-IOBL.
- Columns 5 and 6: the same measures for HS-IOBL, the algorithm against which HHS-IOBL is compared.
- Columns 7 and 8: the p-values of the Wilcoxon non-parametric test at a 5% significance level for OV (p-value 1) and for t Best (p-value 2).

Gray-shaded cells mark the lowest objective value (OV) or the shortest time to the best solution (t Best), as the case may be. The symbol ↑ denotes a significant difference in favor of the reference algorithm (HHS-IOBL), ↓ denotes a significant difference in favor of the compared algorithm (HS-IOBL), and -- denotes no significant difference between the two.

Table 3.

Results obtained with the HHS-IOBL and HS-IOBL algorithms.
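The significance markers in Table 3 can be reproduced along the lines of the following sketch. The text does not state which Wilcoxon variant was used, so the sketch makes several assumptions:

- It uses the signed-rank test on the 30 runs, paired by run index.
- It computes a two-sided p-value from the normal approximation, with no continuity correction.
- It treats lower values as better for both OV and t Best.

`scipy.stats.wilcoxon` (paired samples) or `scipy.stats.mannwhitneyu` (independent samples) are the usual library alternatives. The helper `significance_symbol` is hypothetical.

```python
import math
from typing import Sequence, Tuple


def wilcoxon_signed_rank(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Returns (z, p). Zero differences are discarded, tied absolute differences
    get average ranks, and the variance includes the usual tie correction.
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j + 2) / 2.0  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4.0
    var = n * (n + 1) * (2 * n + 1) / 24.0 - tie_term / 48.0
    if var <= 0.0:
        return 0.0, 1.0
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return z, p


def significance_symbol(hhs: Sequence[float], hs: Sequence[float],
                        alpha: float = 0.05) -> str:
    """Hypothetical helper: '↑' favours HHS-IOBL, '↓' favours HS-IOBL, '--' neither.

    Lower values are assumed to be better (objective value or time to best).
    """
    z, p = wilcoxon_signed_rank(hhs, hs)
    if p >= alpha:
        return "--"
    return "↑" if z < 0 else "↓"  # z < 0: HHS-IOBL values tend to be lower
```

With 30 paired runs the normal approximation is usually adequate. For smaller samples without ties, an exact null distribution would be the more careful choice.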

In terms of quality, HHS-IOBL outperforms HS-IOBL in fourteen of the fifteen instances, and in the remaining instance both algorithms perform the same. In terms of efficiency, HHS-IOBL is better than HS-IOBL in four instances, both perform the same in another four, and HS-IOBL is better in seven. These results indicate that HHS-IOBL is a competitive alternative to HS-IOBL. They also suggest that reinitializing the harmony memory with a particle swarm algorithm that incorporates IOBL improves the performance of the algorithm. Although HS-IOBL is more efficient in more instances, the proposed algorithm is clearly superior in the quality of the results, as measured by the objective function.

7. Conclusions

In this work, we address the global optimization of continuous real-valued functions. We propose a hybrid harmony search algorithm that reinitializes the harmony memory using a particle swarm optimization algorithm with an improved opposition-based learning method (IOBL). The approach was validated by comparing the proposed algorithm with a state-of-the-art harmony search algorithm on fifteen standard mathematical functions, using the Wilcoxon non-parametric test at a 5% significance level. The computational experiments show that the proposed algorithm outperforms the state-of-the-art algorithm in solution quality while remaining competitive in efficiency.

We attribute the performance of the proposed algorithm mainly to the harmony memory reset mechanism, which helps prevent premature convergence of the hybrid algorithm.

We are currently working on applying this approach to problems from other domains and on adding a dynamic parameter tuning process to the algorithm.

Author Contributions

Conceptualization: H.J.F.-H.; methodology: E.V.-H.; research: E.V.-H.; software: J.A.B.-H. and M.Á.G.-M.; formal analysis: H.J.F.-H.; writing, proofreading, and editing: H.J.F.-H., J.A.B.-H., M.Á.G.-M. and A.B.-d.-Á. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The source code can be downloaded from https://github.com/JAlfredoBrambila/HybridHarmonySearch (accessed on 13 January 2023).

Acknowledgments

The authors thank CONACYT for supporting the projects with number A1-S-11012 of the Call for Basic Scientific Research 2017–2018 and project number 12397 of the Support Program for Scientific, Technological and Innovation Activities (PAACTI) in order to participate in the Call 2020-1 Support for Scientific Research Projects, Technological Development and Innovation in Health in the face of the Contingency by COVID-19. Alfredo Brambila, Miguel Angel García Morales, and Eduardo Villegas Huerta thank CONACYT for the support 760308, 731279, and 001818, respectively. Héctor Fraire thanks the Tecnológico Nacional de México for supporting the research project 10362.21-P.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Geem, Z.W.; Kim, J.H.; Loganathan, G.V. A new heuristic optimization algorithm: Harmony search. Simulation 2001, 76, 60–68.
2. Askarzadeh, A.; Esmat, R. Harmony Search Algorithm: Basic Concepts and Engineering Applications. In Recent Developments in Intelligent Nature-Inspired Computing; Srikanta, P., Ed.; IGI Global: Hershey, PA, USA, 2017; pp. 1–36.
3. Fesanghary, M.; Mahdavi, M.; Alizadeh, Y. Hybridizing harmony search algorithm with sequential quadratic programming for engineering optimization problems. Comput. Methods Appl. Mech. Eng. 2008, 197, 3080–3091.
4. Geem, Z.W.; Sim, K.-B. Parameter-setting-free harmony search algorithm. Appl. Math. Comput. 2010, 217, 3881–3889.
5. Vasebi, A.; Fesanghary, M.; Bathaee, S.M.T. Combined heat and power economic dispatch by harmony search algorithm. Int. J. Electr. Power Energy Syst. 2007, 29, 713–719.
6. Alomoush, A.A.; Alsewari, A.R.A.; Zamli, K.Z.; Alrosan, A.; Alomoush, W.; Alissa, K. Enhancing three variants of harmony search algorithm for continuous optimization problems. Int. J. Electr. Comput. Eng. 2021, 11, 2088–8708.
7. Eberhart, R.; Kennedy, J. A new optimizer using particle swarm theory. In Proceedings of the Sixth International Symposium on Micromachine and Human Science, Nagoya, Japan, 4–6 October 1995; pp. 39–43.
8. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the IEEE International Joint Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; IEEE Press: New York, NY, USA, 1995; pp. 1942–1948.
9. Omran, M.G.H.; Mahdavi, M. Global-best harmony search. Appl. Math. Comput. 2008, 198, 643–656.
10. Geem, Z.W. Particle-swarm harmony search for water network design. Eng. Optim. 2009, 41, 297–311.
11. Abualigah, L.; Elaziz, M.A.; Sumari, P.; Geem, Z.W.; Gandomi, A.H. Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 2022, 191, 116158.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).