Abstract

In this study, a new smoothing nonlinear penalty function for constrained optimization problems is presented. It is proved that the optimal solution of the smoothed penalty problem is an approximate optimal solution of the original problem. Based on the smoothed penalty function, we develop an algorithm for finding an optimal solution of the optimization problems with inequality constraints. We further discuss the convergence of this algorithm and test this algorithm with three numerical examples. The numerical examples show that the proposed algorithm is feasible and effective for solving some nonlinear constrained optimization problems.

1. Introduction

Consider the following constrained optimization problem:

where the functions , are continuously differentiable functions.

Let be the feasible solution set and we assume that is not empty.

For a general constrained optimization problem, the penalty function method has attracted many researchers in both theoretical and practical aspects. However, to obtain an optimal solution for the original problem, the conventional quadratic penalty function method usually requires that the penalty parameter tends to infinity, which is undesirable in practical computation. In order to overcome the drawbacks of the quadratic penalty function method, exact penalty functions were proposed to solve problem . Zangwill [1] first proposed the exact penalty function

where is a penalty parameter, and . It was proved that there exists a fixed constant such that, for any , any global solution of the exact penalty problem is also a global solution of the original problem. Therefore, the exact penalty function methods have been widely used for solving constrained optimization problems (see, e.g., [2,3,4,5,6,7,8,9]).

Recently, the nonlinear penalty function of the following form has been investigated in [10,11,12,13]:

where is assumed to be positive and . It is called the k-th power penalty function in [14,15]. Obviously, if , the nonlinear penalty function is reduced to the exact penalty function. In [12], it was shown that the exact penalty parameter corresponding to is substantially smaller than that of the exact penalty function. Rubinov and Yang [13] also studied a penalty function as follows:

where such that for any , and . The corresponding penalty problem of is defined as

In fact, the original problem is equivalent to the problem as follows:

Obviously, the penalty problem is the exact penalty problem of problem defined as (1).

It is noted that these penalty functions and are not differentiable at x such that for some , which prevents the use of gradient-based methods and causes some numerical instability problems in its implementation, when the value of the penalty parameter becomes large [3,5,6,8]. In order to use existing gradient-based algorithms, such as a Newton method, it is necessary to smooth the exact penalty function. Thus, the smoothing of the exact penalty function attracts much attention [16,17,18,19,20,21,22,23,24]. Pinar and Zenios [21] and Wu et al. [22] discussed a quadratic smoothing approximation to nondifferentiable exact penalty functions for constrained optimization. Binh [17] and Xu et al. [23] proposed a second-order differentiability technique to the exact penalty function. It is shown that the optimal solution of the smoothed penalty problem is an approximate optimal solution of the original optimization problem. Zenios et al. [24] discussed an algorithm for the solution of large-scale optimization problems.
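To illustrate why smoothing helps, the following minimal sketch contrasts the nonsmooth term max{t, 0} with a quadratic smoothing of the kind discussed for exact penalty functions in the literature cited above. This is a stand-in chosen for illustration only and is not the smoothing function proposed in this paper; the names `plus`, `smooth_plus`, and `smooth_plus_grad` are hypothetical.

```python
def plus(t):
    """Nonsmooth exact-penalty term max(t, 0); it has a kink at t = 0."""
    return max(t, 0.0)


def smooth_plus(t, eps):
    """Quadratic smoothing of max(t, 0) with smoothing parameter eps > 0.

    Illustrative stand-in, NOT the smoothing function of this paper.
    """
    if t <= 0.0:
        return 0.0
    if t <= eps:
        return t * t / (2.0 * eps)
    return t - eps / 2.0


def smooth_plus_grad(t, eps):
    """Derivative of smooth_plus; the pieces agree at t = 0 and t = eps."""
    if t <= 0.0:
        return 0.0
    if t <= eps:
        return t / eps
    return 1.0


eps = 0.1
for t in (-0.05, 0.0, 0.05, 0.1, 0.5):
    # Columns: t, nonsmooth value, smoothed value, smoothed derivative.
    print(t, plus(t), smooth_plus(t, eps), smooth_plus_grad(t, eps))
```

For this stand-in, the smoothed value never exceeds the nonsmooth one and the difference is at most eps / 2, so the approximation error is controlled by the smoothing parameter alone.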

In this study, we aim to develop the smoothing technique for the nonlinear penalty function (3). First, we define the following smoothing function by

where and . By considering this smoothing function, a new smoothing nonlinear penalty function is obtained. This penalty function converts a constrained optimization problem into the minimization of a sequence of continuously differentiable functions, and we propose a corresponding algorithm for solving constrained optimization problems.

The rest of this paper is organized as follows. In Section 2, we propose a new smoothing penalty function for inequality constrained optimization problems and prove some of its fundamental properties. In Section 3, an algorithm based on the smoothed penalty function is presented and its global convergence is proved. In Section 4, we report results on the application of this algorithm to three test problems and compare them with the results of other similar algorithms. Finally, conclusions are discussed in Section 5.

2. Smoothing Nonlinear Penalty Functions

In this section, we first construct a new smoothing function. Then, we introduce our smoothing nonlinear penalty function and discuss its properties.

Let be as follows:

where . Obviously, the function is on for , but it is not for . It is useful in defining exact penalty functions for constrained optimization problems (see, e.g., [14,15,21]). Consider the nonlinear penalty function

and the corresponding penalty problem

As previously mentioned, for any and , the function is defined as:

where .

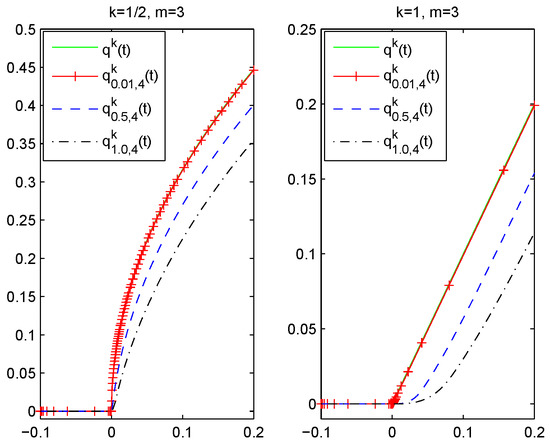

Figure 1 shows the behavior of and .

Figure 1.

The behavior of and
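The differentiability claim made earlier in this section can be checked numerically. The sketch below assumes that the function in question is the power function max{t, 0}^k, the usual building block of k-th power penalties; the names `power_plus` and `right_quotient` are hypothetical. It evaluates the one-sided difference quotient at t = 0 from the right, to be compared with the left-hand derivative, which is always 0.

```python
def power_plus(t, k):
    """Power penalty term max(t, 0)**k for k > 0 (assumed form)."""
    return max(t, 0.0) ** k


def right_quotient(k, h):
    """One-sided difference quotient (p(h) - p(0)) / h at t = 0, h > 0."""
    return (power_plus(h, k) - power_plus(0.0, k)) / h


for k in (0.5, 1.0, 2.0):
    # The quotient equals h**(k - 1): it shrinks with h for k > 1,
    # stays at 1 for k = 1, and grows without bound for k < 1.
    print(k, [right_quotient(k, h) for h in (1e-2, 1e-4, 1e-6)])
```

Only for k > 1 does the right-hand quotient tend to the left-hand derivative 0, which is consistent with the function being continuously differentiable in that case and not otherwise.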

In the following, we discuss the properties of .

Lemma 1.

For and any , we have

- (i)

- is continuously differentiable for on , where

- (ii)

- .

- (iii)

- .

Proof.

(i) First, we prove that is continuous. Obviously, the function is continuous at any . We only need to prove continuity at the separating points: 0 and .

(1) For , we have

which implies

Thus, is continuous at .

(2) For , we have

which implies

Thus, is continuous at .

Next, we will show that is continuously differentiable, i.e., is continuous. Actually, we only need to prove that is continuous at the separating points: 0 and .

(1) For , we have

which implies

Thus, is continuous at .

(2) For , we have

which implies

Thus, is continuous at .

(ii) For , by the definition of and we have

When , let . Then, we have . Consider the function:

and we have

Obviously, for . Moreover, and . Hence, we have

When , we have

Thus, we have

That is,

(iii) For , from (ii), we have

which is .

This completes the proof. ☐

In this study, we always assume that and is large enough, such that for all . Let

Then, is continuously differentiable at any and is a smooth approximation of . We have the following smoothed penalty problem:

Lemma 2.

We have that

for any and .

Proof.

For any , we have

Note that

for any .

By Lemma 1, we have

which implies

Hence,

This completes the proof. ☐
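A bound of this type can be checked numerically on a small example. The precise constant in Lemma 2 depends on the smoothing function of this paper; the sketch below instead uses the quadratic smoothing of max{t, 0} as a stand-in with k = 1, for which the gap between the penalty function and its smoothed version lies in [0, m * rho * eps / 2] for m constraints. The toy problem and all names are hypothetical.

```python
def plus(t):
    return max(t, 0.0)


def smooth_plus(t, eps):
    """Quadratic smoothing of max(t, 0); stand-in, not this paper's function."""
    if t <= 0.0:
        return 0.0
    if t <= eps:
        return t * t / (2.0 * eps)
    return t - eps / 2.0


def penalty(f, gs, x, rho):
    """Nonsmooth penalty function f(x) + rho * sum_i max(g_i(x), 0)."""
    return f(x) + rho * sum(plus(g(x)) for g in gs)


def smooth_penalty(f, gs, x, rho, eps):
    """Smoothed penalty function built from smooth_plus."""
    return f(x) + rho * sum(smooth_plus(g(x), eps) for g in gs)


# Hypothetical toy problem with two inequality constraints g_i(x) <= 0.
f = lambda x: (x[0] - 2.0) ** 2 + (x[1] - 1.0) ** 2
gs = [lambda x: x[0] + x[1] - 2.0, lambda x: x[0] ** 2 - x[1]]


def gap_range(rho, eps, n=41):
    """Smallest and largest gap F - F_eps on a grid over [-2, 2] x [-2, 2]."""
    pts = [-2.0 + 4.0 * i / (n - 1) for i in range(n)]
    gaps = [penalty(f, gs, (a, b), rho) - smooth_penalty(f, gs, (a, b), rho, eps)
            for a in pts for b in pts]
    return min(gaps), max(gaps)


rho, eps = 10.0, 0.1
bound = len(gs) * rho * eps / 2.0
print(gap_range(rho, eps), bound)
```

Since the bound is proportional to the product of the penalty and smoothing parameters, the gap can be kept small only if the smoothing parameter decreases fast enough as the penalty parameter grows.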

Lemma 3.

Let and be optimal solutions of problem and problem respectively. If is a feasible solution to problem , then is an optimal solution for problem .

Proof.

Under the given conditions, we have that

Therefore, , which is .

Since is an optimal solution and is feasible to problem , which is

Therefore, is an optimal solution for problem .

This completes the proof. ☐

Theorem 1.

Let and be the optimal solutions of problem and problem respectively, for some and . Then, we have that

Furthermore, if satisfies the conditions of Lemma 3 and is feasible to problem , then is an optimal solution for problem .

Proof.

By Lemma 2, for and , we obtain

That is,

and

From the definition of and the fact that are feasible for problem , we have

Note that , and from (8), we have

Therefore, , which is .

As is feasible to and by Lemma 3, is an optimal solution to , we have

Thus, is an optimal solution for problem .

This completes the proof. ☐

Definition 1.

A feasible solution of problem is called a KKT point, if there exists a such that the solution pair satisfies the following conditions:
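As an illustration, the following sketch checks the KKT conditions in their standard textbook form for a problem min f(x) subject to g_i(x) <= 0: feasibility, nonnegative multipliers, stationarity of the Lagrangian, and complementary slackness. It is assumed, not confirmed by the text above, that Definition 1 uses this standard form; the function `is_kkt_point` and the toy problem are hypothetical.

```python
def is_kkt_point(grad_f, gs, grad_gs, x, lam, tol=1e-8):
    """Check the standard KKT conditions for min f(x) s.t. g_i(x) <= 0."""
    g_vals = [g(x) for g in gs]
    feasible = all(v <= tol for v in g_vals)          # g_i(x) <= 0
    dual_ok = all(l >= -tol for l in lam)             # lambda_i >= 0
    comp_ok = all(abs(l * v) <= tol                   # lambda_i * g_i(x) = 0
                  for l, v in zip(lam, g_vals))
    gf = grad_f(x)
    ggs = [gg(x) for gg in grad_gs]
    # Stationarity: grad f(x) + sum_i lambda_i * grad g_i(x) = 0.
    station = [gf[j] + sum(l * gg[j] for l, gg in zip(lam, ggs))
               for j in range(len(x))]
    station_ok = all(abs(s) <= tol for s in station)
    return feasible and dual_ok and comp_ok and station_ok


# Hypothetical toy problem: min (x0 - 2)^2 + (x1 - 1)^2  s.t.  x0 + x1 - 2 <= 0.
grad_f = lambda x: (2.0 * (x[0] - 2.0), 2.0 * (x[1] - 1.0))
gs = [lambda x: x[0] + x[1] - 2.0]
grad_gs = [lambda x: (1.0, 1.0)]

print(is_kkt_point(grad_f, gs, grad_gs, (1.5, 0.5), [1.0]))  # projection of (2, 1)
print(is_kkt_point(grad_f, gs, grad_gs, (1.0, 1.0), [1.0]))  # feasible, not stationary
```

For a convex problem such as this one, a point passing the check is a global solution, which is the setting of the theorem that follows.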

Theorem 2.

Suppose the functions in problem (P) are convex. Let and be the optimal solutions of problem and problem respectively. If is feasible to problem , and there exists a such that the pair satisfies the conditions in Equations (9) and (10), then we have that

Proof.

Since the functions are continuously differentiable and convex, we see that

Therefore, . Thus,

By Lemma 2, we have

It follows that

Since is feasible to , which is

then

and, by , we have

3. Algorithm

In this section, by considering the above smoothed penalty function, we propose an algorithm to find an optimal solution of problem , defined as Algorithm 1.

Definition 2.

For , a point is called an ϵ-feasible solution to , if it satisfies .

| Algorithm 1: Algorithm for solving problem |

| Step 1: Let the initial point . Let and choose a constant such that , let and go to Step 2. |
| Step 2: Use as the starting point to solve the following problem: |
| Step 3: If is -feasible for problem , then the algorithm stops and is an approximate optimal solution of problem . Otherwise, let and . Then, go to Step 2. |
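The overall structure of such a method can be sketched as follows. This is a minimal illustration under several assumptions and not the implementation used in this paper: it uses the quadratic smoothing of max{t, 0} as a stand-in for the paper's smoothing function, a one-dimensional convex toy problem, bisection on the derivative in place of a general gradient-based solver (so no warm start is needed), and hypothetical update factors `N` and `eta` that increase the penalty parameter and decrease the smoothing parameter after each infeasible iterate.

```python
def smooth_plus_grad(t, eps):
    """Derivative of the quadratic smoothing of max(t, 0) (stand-in)."""
    if t <= 0.0:
        return 0.0
    if t <= eps:
        return t / eps
    return 1.0


def solve_subproblem(df, g, dg, rho, eps, lo, hi, iters=200):
    """Minimize f + rho * p_eps(g) on [lo, hi] by bisecting its derivative.

    Valid here because the toy problem is convex and one-dimensional,
    so the derivative of the smoothed penalty function is nondecreasing.
    """
    def dF(x):
        return df(x) + rho * smooth_plus_grad(g(x), eps) * dg(x)

    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if dF(mid) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


def penalty_loop(df, g, dg, rho=1.0, eps=0.1, tol=1e-4,
                 N=10.0, eta=0.1, lo=-10.0, hi=10.0, max_outer=20):
    """Outer loop: solve, test tol-feasibility, then update rho and eps."""
    history = []
    x = None
    for j in range(max_outer):
        x = solve_subproblem(df, g, dg, rho, eps, lo, hi)
        history.append((j, rho, eps, x))
        if g(x) <= tol:                   # stop once x is tol-feasible
            break
        rho, eps = N * rho, eta * eps     # assumed update rule
    return x, history


# Hypothetical toy problem: min (x - 2)^2  s.t.  x - 1 <= 0, solution x = 1.
df = lambda x: 2.0 * (x - 2.0)
g = lambda x: x - 1.0
dg = lambda x: 1.0

x_star, history = penalty_loop(df, g, dg)
for row in history:
    print(row)
```

On this toy problem the iterates approach the solution from the infeasible side, and the constraint violation shrinks as the penalty parameter grows and the smoothing parameter decreases.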

Remark 1.

From we can easily see that as , the sequence and the sequence .

Theorem 3.

For , suppose that for and the set

Let be the sequence generated by Algorithm 1. If and the sequence is bounded, then is bounded and every limit point of is a solution of .

Proof.

First, we prove that is bounded. Note that

From the definition of , we have

Suppose, on the contrary, that the sequence is unbounded and without loss of generality as , and . Then, , and from Equations (17) and (18), we have

which contradicts the sequence being bounded. Thus, is bounded.

Next, we prove that every limit point of is a solution of problem . Let be a limit point of . Then, there exists a subset such that for , where is the set of natural numbers. We have to show that is an optimal solution of problem . Thus, it is sufficient to show and .

(i) Suppose . Then, there exist and a subset , such that for any and some .

If , from the definition of and the fact that is the optimal solution corresponding to the j-th values of the parameters for any , we have

which contradicts and .

If or , from the definition of and the fact that is the optimal solution corresponding to the j-th values of the parameters for any , we have

which contradicts and .

Thus, .

(ii) For any , we have

We know that , so . Therefore, holds.

This completes the proof. ☐

4. Numerical Examples

In this section, we apply Algorithm 1 to three test problems. The proposed algorithm is implemented in MATLAB (R2011A, The MathWorks Inc., Natick, MA, USA).

In each example, we take . Then, it is expected to get an -solution to problem with Algorithm 1, and the numerical results are presented in the following tables.

Example 1.

Consider the following problem ([20], Example 4.1)

For , let and choose . The results are shown in Table 1.

Table 1.

Results of Algorithm 1 with for Example 1.

For , let and choose . The results are shown in Table 2.

Table 2.

Results of Algorithm 1 with for Example 1.

The results in Table 1 and Table 2 show that Algorithm 1 converges in both settings and that the objective function values obtained are almost the same. From Table 1, we obtain an approximate optimal solution after two iterations with function value . In [20], the obtained approximate optimal solution is with function value . The numerical results obtained by our algorithm are slightly better than those in [20].

Example 2.

Consider the following problem ([22], Example 3.2)

For , let , and choose . The results are shown in Table 3.

Table 3.

Results of Algorithm 1 with for Example 2.

For , let and choose . The results are shown in Table 4.

Table 4.

Results of Algorithm 1 with for Example 2.

The results in Table 3 and Table 4 show that Algorithm 1 converges in both settings and that the objective function values obtained are almost the same. From Table 3, we obtain an approximate optimal solution after two iterations with function value . In [22], the obtained global solution is with function value . The numerical results obtained by our algorithm are much better than those in [22].

Example 3.

Consider the following problem ([26], Example 4.1)

For , let and choose . The results are shown in Table 5.

Table 5.

Results of Algorithm 1 with for Example 3.

For , let , and choose . The results are shown in Table 6.

Table 6.

Results of Algorithm 1 with for Example 3.

The results in Table 5 and Table 6 show that Algorithm 1 converges in both settings and that the objective function values obtained are almost the same. From Table 5, we obtain an approximate optimal solution after two iterations with function value . In [26], the obtained approximate optimal solution is with function value . The numerical results obtained by our algorithm are slightly better than those in [26].

5. Conclusions

In this study, we have proposed a new smoothing approach to the nonsmooth penalty function and developed a corresponding algorithm to solve optimization problems with inequality constraints. It is shown that any optimal solution of the smoothed penalty problem is an approximate optimal solution or a global solution of the original optimization problem. Furthermore, the numerical results given in Section 4 show that Algorithm 1 converges well to an approximate optimal solution.

Acknowledgments

The authors would like to thank the anonymous referees for their detailed comments and remarks, which helped us improve the presentation of this paper considerably. This work is supported by grants from the National Natural Science Foundation of China (No. 11371242 and 61572099).

Author Contributions

All authors contributed equally to this work.

Conflicts of Interest

The authors declare no competing interests.

References

- Zangwill, W.I. Nonlinear programming via penalty function. Manag. Sci. 1967, 13, 334–358.

- Bazaraa, M.S.; Goode, J.J. Sufficient conditions for a globally exact penalty function without convexity. Math. Program. Study 1982, 19, 1–15.

- Di Pillo, G.; Grippo, L. An exact penalty function method with global convergence properties for nonlinear programming problems. Math. Program. 1986, 36, 1–18.

- Di Pillo, G.; Grippo, L. Exact penalty functions in constrained optimization. SIAM J. Control. Optim. 1989, 27, 1333–1360.

- Han, S.P.; Mangasarian, O.L. Exact penalty function in nonlinear programming. Math. Program. 1979, 17, 251–269.

- Lasserre, J.B. A globally convergent algorithm for exact penalty functions. Eur. J. Oper. Res. 1981, 7, 389–395.

- Mangasarian, O.L. Sufficiency of exact penalty minimization. SIAM J. Control. Optim. 1985, 23, 30–37.

- Rosenberg, E. Exact penalty functions and stability in locally Lipschitz programming. Math. Program. 1984, 30, 340–356.

- Yu, C.J.; Teo, K.L.; Zhang, L.S.; Bai, Y.Q. A new exact penalty function method for continuous inequality constrained optimization problems. J. Indus. Mgmt. Optimiz. 2010, 6, 895–910.

- Huang, X.X.; Yang, X.Q. Convergence analysis of a class of nonlinear penalization methods for constrained optimization via first-order necessary optimality conditions. J. Optim. Theory Appl. 2003, 116, 311–332.

- Rubinov, A.M.; Glover, B.M.; Yang, X.Q. Extended Lagrange and penalty functions in continuous optimization. Optimization 1999, 46, 327–351.

- Rubinov, A.M.; Yang, X.Q.; Bagirov, A.M. Penalty functions with a small penalty parameter. Optim. Methods Softw. 2002, 17, 931–964.

- Rubinov, A.M.; Yang, X.Q. Lagrange-Type Functions in Constrained Non-Convex Optimization; Kluwer Academic Publishers: Dordrecht, Netherlands, 2003.

- Binh, N.T.; Yan, W.L. Smoothing approximation to the k-th power nonlinear penalty function for constrained optimization problems. J. Appl. Math. Bioinform. 2015, 5, 1–19.

- Yang, X.Q.; Meng, Z.Q.; Huang, X.X.; Pong, G.T.Y. Smoothing nonlinear penalty functions for constrained optimization. Numer. Funct. Anal. Optimiz. 2003, 24, 351–364.

- Binh, N.T. Smoothing approximation to l1 exact penalty function for constrained optimization problems. J. Appl. Math. Inform. 2015, 33, 387–399.

- Binh, N.T. Second-order smoothing approximation to l1 exact penalty function for nonlinear constrained optimization problems. Theor. Math. Appl. 2015, 5, 1–17.

- Binh, N.T. Smoothed lower order penalty function for constrained optimization problems. IAENG Int. J. Appl. Math. 2016, 46, 76–81.

- Chen, C.H.; Mangasarian, O.L. Smoothing methods for convex inequalities and linear complementarity problems. Math. Program. 1995, 71, 51–69.

- Meng, Z.Q.; Dang, C.Y.; Jiang, M.; Shen, R. A smoothing objective penalty function algorithm for inequality constrained optimization problems. Numer. Funct. Anal. Optimiz. 2011, 32, 806–820.

- Pinar, M.C.; Zenios, S.A. On smoothing exact penalty function for convex constrained optimization. SIAM J. Optim. 1994, 4, 486–511.

- Wu, Z.Y.; Lee, H.W.J.; Bai, F.S.; Zhang, L.S. Quadratic smoothing approximation to l1 exact penalty function in global optimization. J. Ind. Manag. Optim. 2005, 1, 533–547.

- Xu, X.S.; Meng, Z.Q.; Sun, J.W.; Huang, L.G.; Shen, R. A second-order smooth penalty function algorithm for constrained optimization problems. Comput. Optim. Appl. 2013, 55, 155–172.

- Zenios, S.A.; Pinar, M.C.; Dembo, R.S. A smooth penalty function algorithm for network-structured problems. Eur. J. Oper. Res. 1995, 83, 220–236.

- Nocedal, J.; Wright, S.J. Numerical Optimization; Springer: New York, NY, USA, 1999.

- Sun, X.L.; Li, D. Value-estimation function method for constrained global optimization. J. Optim. Theory Appl. 1999, 102, 385–409.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).