2.1. Persuasive Design and EdTech Nudges

Persuasive design has become mainstream in the design of digital learning environments, as educational technologies continue to adopt strategies and tactics from marketing and behavior change systems (Akgün & Topal, 2018; Alslaity et al., 2023; Huang et al., 2024; Knight, 2025). Drawing on Persuasive Systems Design (PSD) principles, gamification, and behavioral psychology, EdTech platforms now incorporate features such as badges, streak counters, pop-up reminders, and social comparison functions to shape learner engagement and motivation. Yet the ubiquity of these features raises questions about their psychological impact, particularly in learning environments where long-term autonomy and cognitive well-being are essential.

A growing number of studies have traced the impact of persuasion strategies on student motivation, frequently through the lens of Self-Determination Theory (SDT). F. A. Orji et al. (2024), for example, show that strategies such as self-monitoring and commitment-consistency align more closely with SDT’s competence and autonomy needs than more controlling, external strategies such as competition or social comparison. These results suggest that persuasive design methods are not all equally effective or psychologically healthy; instead, the extent to which a design promotes intrinsic motivation depends on its congruence with users’ motivational needs.

This is borne out by research on user types, which reveals notable differences in people’s susceptibility to persuasive tactics. For example, R. Orji et al. (2018) found that ‘players’ and ‘socialisers’, as defined by the HEXAD gamification model of user types, are highly responsive to reward-based and social approaches, while ‘disruptors’ dislike externally imposed structures like goal-setting and punishment. Such distinctions make personalization vital in persuasive learning systems. Alslaity et al. (2023), in a systematic review of personalization-based persuasive technologies, observe that, while gamification and PSD principles are used extensively in education, their impact is context-dependent and usually moderated by user characteristics such as personality, initial motivation, and susceptibility to nudging.

Individualized EdTech systems are increasingly popular, but research on the cognitive and ethical effects of their persuasive strategies is still in its infancy. Murillo-Muñoz et al. (2021) observe that persuasive learning systems are deployed without reflection on how persuasion aligns with learning goals, cognitive load, or user empowerment. In parallel, Huang et al. (2024) present empirical evidence that perceived persuasiveness mediates the influence of gamified rewards on the behavior of sustainable mobile app users, indicating that persuasion is not merely a superficial design aspect but an internalized psychological process capable of enabling as well as limiting action.

From a critical design perspective, persuasive techniques in educational settings need to be used responsibly. Martin & Kwaku (2019) state that reciprocity, consistency, and liking are generally persuasive, but that their acceptability and efficacy vary considerably across HEXAD profiles. Additionally, Sheetal & Singh (2023) raise serious ethical concerns, observing that in gamified retail environments persuasive strategies may tip into manipulation and reduce trust and control; this comparison may be pertinent to EdTech scenarios, where students’ consent and awareness are typically superficial.

The case for ethically grounded adaptive persuasive systems is supported by emerging trends in machine learning-driven personalization. Knutas et al. (2019) promote rule-based systems that map persuasive content to user profiles without inducing psychological misalignment. This supports the report by McCarthy et al. (2024), which foregrounds socio-cognitive representations and user journeys over static demographic classifications. These methods redirect the discussion from general persuasion to context-aware nudging: not only what captures users, but also what maintains cognitive autonomy and learning efficiency.

Even with these encouraging advances, education lags behind fields such as health and sustainability in systematically establishing the impact of persuasive interventions. Most education research, Murillo-Muñoz et al. (2021) observe, uses a variety of persuasion strategies without assessing their individual or combined impact. In addition, while studies of immersive learning spaces (Wiafe et al., 2024) and mobile learning spaces (Za et al., 2022) have reported more results on persuasive features, they seldom isolate the mechanisms of psychological pressure or the effect of Perceived Personalization, which is highly important in explaining unintended effects such as digital fatigue or the crowding-out of motivation.

Recent studies also identify large language model (LLM)-powered chatbots as credible and responsive agents in learning contexts. LLM-powered assistants for flipped classrooms and peer questioning, for instance, can act as just-in-time nudgers, surfacing cues, prompts, and follow-up questions that encourage participation at scale (Wiafe et al., 2024). While such agents can foster engagement, they intensify ongoing debates about transparency, manipulation, and learner control in digital nudging (Ng et al., 2024). From an SDT perspective, the same support can feel autonomy-supportive (choice, rationale, reversibility) or controlling (hidden intent, overprompting). In line with Cognitive Load Theory (CLT), poorly calibrated chatbot prompts risk adding extraneous cognitive load. These tensions require evaluating persuasive chatbots not only on engagement metrics but also on their effects on autonomy and cognition (Wiafe et al., 2024).

In our model, LLM chatbots shape Perceived Persuasiveness of Platform Design (PPS) through anthropomorphic authority cues and framing appeals, Nudge Exposure (NE) through prompt rhythm, rate, and initiative, and Perceived Personalization (PP) through context-sensitive explanation and task adaptation. Chatbot design decisions are therefore expected to operate through cognitive overload (COG) and perceived autonomy (PAUTO) to influence intrinsic motivation (INTR), which explains why dialogic agents can foster as well as undermine motivation. Situating this technology within these debates also implies transparency by design: “why this/why now?” rationales for prompts, openly visible nudge settings, user-managed snooze and opt-out controls, and graduated proactivity, so that persuasion remains autonomy-supportive and cognitively unobtrusive. Methodologically, capturing both the costs and the value of such nudging requires mixed measures in addition to engagement metrics: (i) quantitative measures (valenced perceived persuasiveness scales that separate beneficial from controlling influence; prompt cadence and interruption logs; dismissal/override rates; mean cognitive-load and autonomy measures) and (ii) qualitative measures (think-alouds, trace-based interviews) to capture learners’ accounts of control and manipulation. Lastly, given that the effects of persuasion vary across subgroups, LLM nudging should be compared using multi-group contrasts (e.g., digital literacy, experience with persuasive features, gender/age), as in our multi-group analysis (MGA) approach, so that proactiveness and personalization intensity can be tuned to learner profiles and dose–response “rate limits” can be determined that prevent overload without undermining autonomy.

Supporting this argument, a 3-week comparison study with secondary school students (N = 74) found that a generative AI chatbot for self-regulated learning (SRLbot) outperformed a rule-based one on science knowledge, behavioral engagement, and motivation; the number of chatbot interactions significantly predicted gains in SRL; and students attributed the benefits to personalized support and flexibility (Ng et al., 2024). Students also reported less learning anxiety and more consistent study habits with the generative AI design, which suggests that transparent, user-controllable LLM prompts can act as autonomy-supportive nudges when they are timely and context-sensitive, with the caveat that cadence and cognitive load should always be monitored so that prompts neither control nor overwhelm.
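The transparency-by-design controls described above (a stated rationale for each prompt, user-managed snooze and opt-out, and a cap on prompt cadence) can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the class names `Nudge` and `NudgePolicy`, the default limit of three prompts per hour, and the use of plain seconds as timestamps are assumptions made for illustration, not features of any system cited here.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Nudge:
    message: str
    rationale: str  # "why this / why now?" explanation shown to the learner


@dataclass
class NudgePolicy:
    """Hypothetical learner-controlled gate placed in front of chatbot prompts."""
    max_per_window: int = 3          # assumed rate limit, not taken from the source
    window_seconds: float = 3600.0   # assumed sliding-window length
    opted_out: bool = False          # learner has switched nudges off
    snoozed_until: float = 0.0       # timestamp until which nudges are paused
    sent_at: List[float] = field(default_factory=list)
    suppressed: int = 0              # count of withheld nudges, kept for cadence logs

    def snooze(self, now: float, seconds: float) -> None:
        """Pause all nudges for the given number of seconds."""
        self.snoozed_until = now + seconds

    def offer(self, now: float, message: str, rationale: str) -> Optional[Nudge]:
        """Return a Nudge if the learner's settings allow it, otherwise None."""
        # Keep only the send times that still fall inside the sliding window.
        self.sent_at = [t for t in self.sent_at if now - t < self.window_seconds]
        blocked = (
            self.opted_out
            or now < self.snoozed_until
            or len(self.sent_at) >= self.max_per_window
        )
        if blocked:
            self.suppressed += 1
            return None
        self.sent_at.append(now)
        return Nudge(message, rationale)


if __name__ == "__main__":
    policy = NudgePolicy(max_per_window=2, window_seconds=60)
    print(policy.offer(0, "Try question 3", "You have been idle for a while"))
    print(policy.offer(10, "Review your notes", "A quiz is scheduled"))
    print(policy.offer(20, "One more hint?", "Rate limit should block this"))
```

In this sketch the `suppressed` counter and the `sent_at` timestamps stand in for the prompt cadence and interruption logs mentioned above; a real deployment would also need to record dismissals and overrides by the learner.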

This research attempts to fill these gaps by empirically investigating two psychological mechanisms elicited by persuasive design in intelligent learning spaces, namely perceived psychological pressure and autonomy, and how they influence intrinsic motivation. In doing so, it expands knowledge about how persuasive strategies not only increase engagement but also affect learners’ emotional and mental states, for better or worse. It also underscores the importance of ethical-by-design principles in learning UX, reaffirming the value of aligning persuasive features with users’ personality and with motivational theory rather than relying on boilerplate gamification templates. Building on the preceding review of persuasive design in EdTech, we next consider how psychological pressure and cognitive load shape users’ responses to such features.

2.2. Psychological Pressure and Cognitive Load

The sudden shift to online education during the COVID-19 pandemic heightened psychological tension and cognitive load for all stakeholders in education and revealed inherent limits of virtual learning environments (Antoniadou et al., 2022; Appel & Fernández, 2022; Bozkurt et al., 2020; Cheng et al., 2023). A rapidly growing body of scholarship describes how the emergency remote teaching (ERT) prompted by the pandemic placed psychological burdens on both students and staff, not only disrupting pedagogical continuity but also increasing emotional load and cognitive fatigue. Srivastava et al. (2024) report that Indian instructors experienced less meaningfulness, psychological safety, and emotional availability after the abrupt transition to online teaching. Coping mechanisms centered on institutional support and knowledge sharing; although these buffered against catastrophizing, they did not necessarily prevent the cognitive and affective dissonance that accumulated during the accelerated digital migration.

Cognitive overload is among the most important problems for learners in both collaborative and solitary e-learning settings. Cheng et al. (2023), drawing on expectation confirmation theory, established that high cognitive load decreased students’ satisfaction with web-based systems and their perceived usefulness, creating a feedback loop that reduced learner engagement. This is corroborated by Lee et al. (2024), who demonstrated experimentally that high-fidelity instruments such as real-time dashboards monitoring student activity can in fact induce cognitive overload and stress in instructors unless they are carefully calibrated. This is evidence that computer-based interventions intended to enhance teaching effectiveness can inadvertently shift cognitive burdens when they are not aligned with users’ information processing capacity.

Technostress, a related psychological phenomenon encompassing digital overload, insecurity, and fatigue, has also drawn increasing empirical interest. Asad et al. (2023), drawing on a large-scale study in Pakistan, confirmed strong relationships between technostressors, notably techno-insecurity, and the psychological well-being of postgraduate students. Their results also point to differential sensitivity to technology-induced stress by gender and area of knowledge, which raises concerns about equity in digital uptake across learner subgroups. Information overload and uncertainty also exacerbate cognitive exhaustion in virtual environments. Wei et al. (2025) illustrated how micro-video websites, which initially appear interactive, can lead to user fatigue and abandonment when cognitive load exceeds perceived content value. This is more common in learning environments where message complexity, screen time, and interruptions to learning sessions lead to prolonged mental exhaustion and diminishing attention spans.

In addition, numerous studies have reported difficulties in maintaining cognitive and psychological balance under flipped and blended learning paradigms. Antoniadou et al. (2022) found that dental students embraced e-learning for theoretical education but lost confidence in their mastery of practical skills, indicating a mismatch between learning method and content type. Similarly, Kovtaniuk et al. (2025) noted the potential of tools like Canva to reduce certain cognitive loads by providing aesthetically pleasing content; yet these tools cannot fully remedy deeper issues of motivation and attentional fragmentation.

It is noteworthy that scholarship also recognizes teachers’ psychological workload. Gould et al. (2022) reframed Cognitive Load Theory (CLT) from a teacher-centered perspective, calling for extraneous load to be minimized through systematic digital pedagogy and teacher professional development. Their reframing of CLT emphasizes the often-overlooked emotional labor that teachers perform to maintain an online “classroom presence” amid technological demands and psychological burnout. Broader global syntheses, such as the multi-national study by Bozkurt et al. (2020), echo this result, reporting the extent to which ERT exacerbated existing digital divides and amplified emotional vulnerability among students, teachers, and families. The authors call for a pedagogy of care, founded on empathy and emotional attunement, as a necessary counterweight to digital acceleration and its psychosocial consequences.

Taken together, these studies paint a nuanced picture of psychological and cognitive dynamics in virtual learning environments. Virtual technology introduces novel affordances for scalable learning, but it also introduces subtle stressors that affect well-being, learning efficiency, and teaching effectiveness. A common shortcoming across studies is inadequate theorizing of how individual differences, such as personality, resilience, and digital self-efficacy, shape reactions to cognitive load (Bozkurt et al., 2020; Cheng et al., 2023; Gould et al., 2022).

Moreover, few researchers connect psychological pressure with theoretical constructs such as motivational autonomy or perceived control, which leaves an incomplete understanding of how students and teachers cope with mental demands in coercive or gamified online learning environments. By integrating psychological pressure and cognitive load as critical components of its framework, this research offers a more holistic approach to understanding EdTech persuasion, one that places cognitive and affective costs at the center of user experience and system ethics.

2.3. Autonomy and Motivation in Smart Learning

Across a growing body of interdisciplinary literature, the interplay between autonomy, motivation, and smart learning environments has taken center stage in digital learning research (Coelho et al., 2025; Daniel et al., 2024; Dicheva et al., 2023; Fan, 2023). Central to this research is Self-Determination Theory (SDT), which posits autonomy, competence, and relatedness as innate psychological needs that facilitate intrinsic motivation (Ismailov & Ono, 2021; Kian et al., 2022; Schmid & Schoop, 2022). Across various settings, from gamified learning systems to phone-based language learning apps, empirical research repeatedly confirms that pedagogical relevance and perceived learner autonomy are central to sustaining motivation and engagement in smart learning environments.

For example, Terkaj et al. (2024) discuss the motivational effect of virtual learning factories in higher education. Their study suggests that interactive immersive environments can encourage students to explore authentic industrial situations and thereby promote perceived autonomy and interest. This is further supported by Yu et al. (2023), who confirm that mobile English learners are considerably more motivated and achieve better learning outcomes than learners taught by traditional methods, and who state that autonomy-supportive mobile tools promote strategic learning behavior and improved outcomes.

Gamification research reports mixed effects on learning. Dicheva et al. (2023) show that virtual currency in gamified settings increases motivation but does not increase intrinsic motivation or learning achievement. In contrast, Kian et al. (2022) show how game features that appeal to students’ psychological types, i.e., those involving group pursuit, challenge, and time pressure, can help internalize extrinsic rewards as intrinsic motivation, especially when grounded in SDT and tailored to learner types. However, they also caution that poorly designed features, such as too much choice or questioning, can overwhelm learners and undermine engagement, which calls for scaffolding and customization of e-pedagogy.

The tension between autonomy and instructional design is also evident in research comparing synchronous and asynchronous learning. Zsifkovits et al. (2025) conclude that asynchronous modes are more socially accepted, but that synchronous designs elicit higher intrinsic motivation, an effect moderated by perceived autonomy. This tension between flexibility and interactive immediacy suggests that autonomy is less a matter of learner control than of feeling psychologically free and capable within designed settings.

Further complexity emerges when considering the emotional and motivational effects of distance learning during a crisis. Ismailov & Ono (2021) report that, during the COVID-19 pandemic, activities that maximized autonomy, personal meaning, and social interaction were best at maintaining learners’ motivation, whereas those perceived as too difficult or irrelevant harmed it. Along the same lines, Suriagiri et al. (2022) conclude that, although digital technology generates greater enjoyment and interest, the traditional classroom setting still supports greater autonomy, belongingness, and satisfaction. They argue that hybrid models can best serve learners’ multiple needs.

Extending these findings, Coelho et al. (2025) adopt an experimental approach to systematically test the effects of badges, points, and challenges on motivation. While all gamified conditions enhanced learning compared to a control group, the use of badges alone caused cognitive overload without necessarily enhancing motivation. Their research substantiates the contention that autonomy-supporting design must balance feedback mechanisms against learners’ cognitive capacity and motivational needs. Finally, Daniel et al. (2024), in a comprehensive systematic review, identify emergent pedagogies (flipped classrooms, virtual reality, and AI-supported feedback) as key enablers of blended learning performance and motivation. They emphasize the facilitating role of support in creating advanced self-regulated learning environments that are rich both technologically and pedagogically.

Collectively, these papers indicate that learner autonomy is both an outcome and a mediator of effective smart learning environments. Whatever the form, whether adaptive gamification, mobile front ends, or asynchronous collaboration, motivation is best served where digital affordances meet learners’ contextual and psychological needs. However, these affordances also generate paradoxes, such as interactivity bringing benefits and tensions at the same time, and therefore need to be designed carefully and on the basis of evidence. By integrating autonomy, orientation, and motivational theory with the affective and persuasive elements of EdTech, the present study contributes to this emerging field with an integrated model that seeks to capture the cognitive, motivational, and emotional dynamics of technology-enhanced learning. To this end, the following hypotheses were formulated:

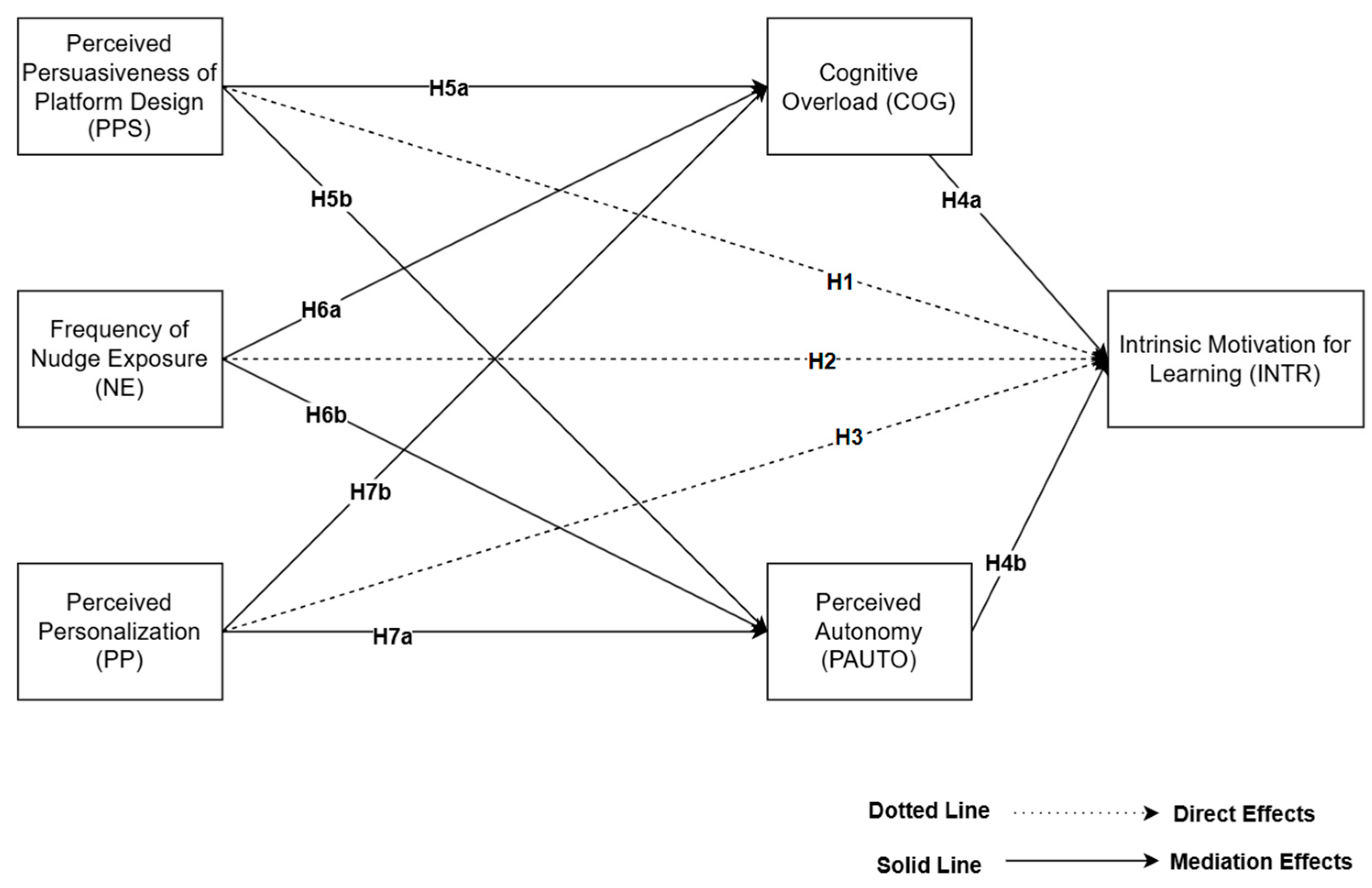

H1. Perceived Persuasiveness of Platform Design (PPS) has a direct effect on Intrinsic Motivation for Learning (INTR).

H2. Frequency of Nudge Exposure (NE) has a direct effect on Intrinsic Motivation for Learning (INTR).

H3. Perceived Personalization (PP) has a direct effect on Intrinsic Motivation for Learning (INTR).

H4a. Cognitive overload (COG) has a direct effect on Intrinsic Motivation for Learning (INTR).

H4b. Perceived autonomy (PAUTO) has a direct effect on Intrinsic Motivation for Learning (INTR).

H5a. Cognitive overload mediates the relationship between Perceived Persuasiveness of Platform Design and Intrinsic Motivation for Learning.

H5b. Perceived autonomy mediates the relationship between Perceived Persuasiveness of Platform Design and Intrinsic Motivation for Learning.

H6a. Cognitive overload mediates the relationship between Frequency of Nudge Exposure and Intrinsic Motivation for Learning.

H6b. Perceived autonomy mediates the relationship between Frequency of Nudge Exposure and Intrinsic Motivation for Learning.

H7a. Cognitive overload mediates the relationship between Perceived Personalization and Intrinsic Motivation for Learning.

H7b. Perceived autonomy mediates the relationship between Perceived Personalization and Intrinsic Motivation for Learning.
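Read together, H1 to H7b describe a parallel two-mediator structure. As a reading aid only, and assuming linear relationships among standardized constructs (an assumption of this sketch; the coefficient symbols and error terms are illustrative and do not come from the study), the hypothesized paths can be written as:

```latex
\begin{align}
\mathrm{COG}   &= a_{1}\,\mathrm{PPS} + a_{2}\,\mathrm{NE} + a_{3}\,\mathrm{PP} + \varepsilon_{1} \\
\mathrm{PAUTO} &= b_{1}\,\mathrm{PPS} + b_{2}\,\mathrm{NE} + b_{3}\,\mathrm{PP} + \varepsilon_{2} \\
\mathrm{INTR}  &= c_{1}\,\mathrm{PPS} + c_{2}\,\mathrm{NE} + c_{3}\,\mathrm{PP}
                + d_{1}\,\mathrm{COG} + d_{2}\,\mathrm{PAUTO} + \varepsilon_{3}
\end{align}
```

In this notation, H1 to H3 concern the direct paths $c_{1}$, $c_{2}$, and $c_{3}$, while H4a and H4b concern $d_{1}$ and $d_{2}$. Under the usual product-of-coefficients view of mediation, the indirect effects would correspond to $a_{1}d_{1}$ (H5a), $b_{1}d_{2}$ (H5b), $a_{2}d_{1}$ (H6a), $b_{2}d_{2}$ (H6b), $a_{3}d_{1}$ (H7a), and $b_{3}d_{2}$ (H7b).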