Unlocking Patient Resistance to AI in Healthcare: A Psychological Exploration

Abstract

1. Introduction

2. Literature Review

2.1. AI Applications in Healthcare

2.2. A Comprehensive Analysis of Innovation Resistance Models

2.3. Prior Studies on AI Resistance

3. Research Design

3.1. The Conduct of the Qualitative Study

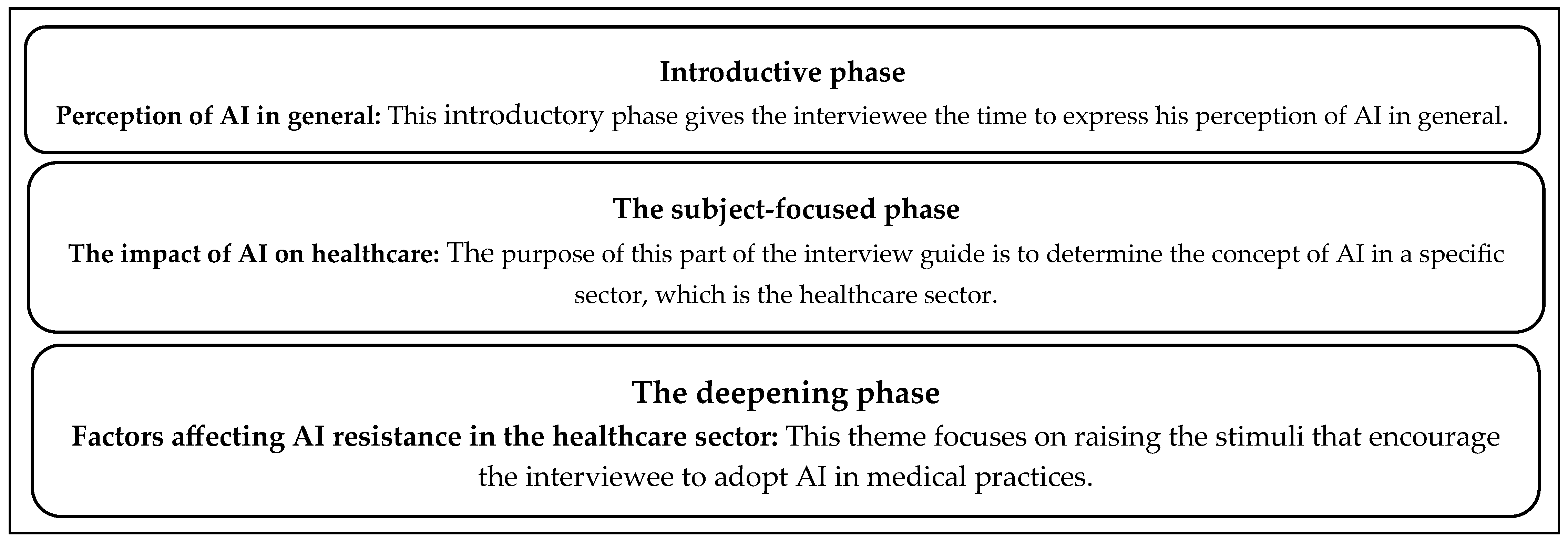

3.1.1. Interview Guideline

3.1.2. Data Collection of the Qualitative Study

3.1.3. Participants

3.1.4. Data Analysis for the Qualitative Study

- Homogeneity: units of analysis belong to the same register.

- Mutual exclusion: a unit can only be assigned to one category.

- Relevance: categories align with the content and theoretical framework.

- Productivity: results must be information rich.

- Objectivity: different coders should achieve the same results.

- Simplification of answers without losing detail.

- Identification of plausible themes, aspects, and typologies.

- Identification of variables and their interrelations.

- Development of tables highlighting results using simple statistical operations (e.g., percentage calculations).
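The percentage calculations mentioned in the last step can be illustrated with a minimal sketch. It assumes each analysed interview segment has already been assigned exactly one theme label, in line with the mutual exclusion criterion. The function name `theme_percentages` and the sample labels are hypothetical and serve only as illustration; they are not the study's data or procedure.

```python
from collections import Counter


def theme_percentages(coded_units):
    """Return (theme, count, percent) rows, most frequent theme first."""
    counts = Counter(coded_units)
    total = sum(counts.values())
    rows = []
    for theme, n in counts.most_common():
        # Share of all coded units assigned to this theme, to one decimal place.
        rows.append((theme, n, round(100 * n / total, 1)))
    return rows


# Hypothetical coded units: one theme label per interview segment (illustrative only).
coded = [
    "need for personal contact",
    "general skepticism",
    "need for personal contact",
    "technological dependence",
    "need for personal contact",
]

for theme, n, pct in theme_percentages(coded):
    print(f"{theme:<30} {n:>3} {pct:>6.1f}%")
```

A frequency table of this kind only summarises how often each category was coded; it does not replace the interpretive steps listed above.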

3.1.5. Reliability and Validity of Qualitative Research

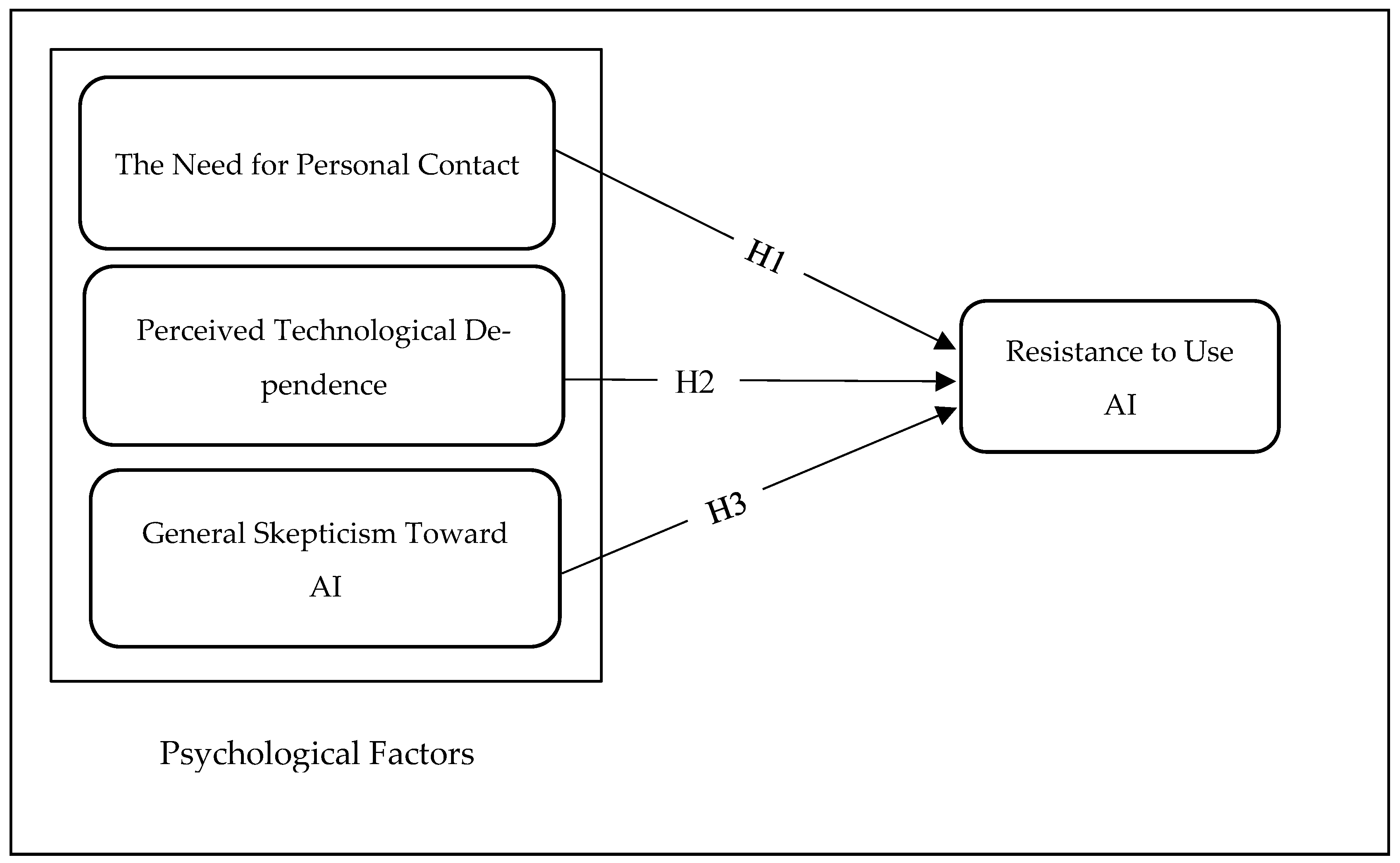

3.2. Hypothesis Development

3.2.1. The Need for Personal Contact

3.2.2. Perceived Technological Dependence

3.2.3. General Skepticism

3.3. The Conduct of the Quantitative Study

3.3.1. Data Collection of the Quantitative Study

3.3.2. Research Methods and Instrument

3.3.3. Data Analysis of the Quantitative Study

3.3.4. Demographics of Respondents Participating in the Quantitative Study

3.4. Ethical Considerations

4. Results

4.1. The Results of EFA

4.2. The Results of CFA

4.2.1. Goodness-of-Fit Indices for the Measurement Model

4.2.2. Convergent and Discriminant Validity

4.3. The Results of SEM

The Links Between Psychological Factors and Resistance to Use AI

5. Discussion

6. Implications

7. Limitations and Further Research Opportunities

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

List of Acronyms

| Acronym | Full Form |
|---|---|
| AI | Artificial Intelligence |
| IoMT | Internet of Medical Things |
| SEM | Structural Equation Modeling |
| AMOS | Analysis of Moment Structures |
| EFA | Exploratory Factor Analysis |
| CFA | Confirmatory Factor Analysis |
| SPSS | Statistical Package for the Social Sciences |
| NVIVO | Qualitative Data Analysis Software |
| NPC | Need for Personal Contact |
| PTD | Perceived Technological Dependence |
| GSAI | General Skepticism toward AI |
| AIH | AI in Healthcare |
| SaMD | Software as a Medical Device |
| TAM | Technology Acceptance Model |
| UTAUT | Unified Theory of Acceptance and Use of Technology |
| IRT | Innovation Resistance Theory |
| ML | Machine Learning |
| DL | Deep Learning |
| NLP | Natural Language Processing |

References

- Agarwal, R., Dugas, M., Gao, G., & Kannan, P. K. (2020). Emerging technologies and analytics for a new era of value-centered marketing in healthcare. Journal of the Academy of Marketing Science, 48, 9–23. [Google Scholar] [CrossRef]

- Agatstein, K. (2023). Chart review is dead; Long live chart review: How artificial intelligence will make human review of medical records obsolete, one day. Population Health Management, 26(6), 438–440. [Google Scholar] [CrossRef]

- Agrawal, N. (2024). Neuralink: Linking AI with the human mind. Lambert Post. Available online: https://thelambertpost.com/news/neuralink-linking-ai-with-the-human-mind/ (accessed on 17 July 2024).

- Ahmed, M. I., Spooner, B., Isherwood, J., Lane, M., Orrock, E., & Dennison, A. (2023). A systematic review of the barriers to the implementation of artificial intelligence in healthcare. Cureus, 15(10), e46454. [Google Scholar] [CrossRef] [PubMed]

- Ahuja, A. S. (2019). The impact of artificial intelligence in medicine on the future role of the physician. PeerJ, 7, e7702. [Google Scholar] [CrossRef]

- Al-Dhaen, F., Hou, J., Rana, N. P., & Weerakkody, V. (2023). Advancing the understanding of the role of responsible AI in the continued use of IoMT in healthcare. Information Systems Frontiers, 25(6), 2159–2178. [Google Scholar] [CrossRef]

- Alhashmi, S. F., Salloum, S. A., & Mhamdi, C. (2019). Implementing artificial intelligence in the United Arab Emirates healthcare sector: An extended technology acceptance model. International Journal of Information Technology and Language Studies, 3(3), 27–42. [Google Scholar]

- Alowais, S. A., Alghamdi, S. S., Alsuhebany, N., Alqahtani, T., Alshaya, A. I., Almohareb, S. N., Aldairem, A., Alrashed, M., Saleh, K. B., Badreldin, H. A., Al Yami, M. S., & Al Harbi, S. (2023). Revolutionizing healthcare: The role of artificial intelligence in clinical practice. BMC Medical Education, 23(1), 689. [Google Scholar] [CrossRef] [PubMed]

- Alsheibani, S. A., Cheung, D. Y., & Messom, D. C. (2019). Factors inhibiting the adoption of artificial intelligence at organizational-level: A preliminary investigation. In Americas conference on information systems 2019 (p. 2). Association for Information Systems. [Google Scholar]

- Ameyaw, E. E., Edwards, D. J., Kumar, B., Thurairajah, N., Owusu-Manu, D. G., & Oppong, G. D. (2023). Critical factors influencing adoption of blockchain-enabled smart contracts in construction projects. Journal of Construction Engineering and Management, 149(3), 04023003. [Google Scholar] [CrossRef]

- Asan, O., Bayrak, A. E., & Choudhury, A. (2020). Artificial intelligence and human trust in healthcare: Focus on clinicians. Journal of Medical Internet Research, 22(6), e15154. [Google Scholar] [CrossRef]

- Ayanwale, M. A., & Ndlovu, M. (2024). Investigating factors of students’ behavioral intentions to adopt chatbot technologies in higher education: Perspective from expanded diffusion theory of innovation. Computers in Human Behavior Reports, 14, 100396. [Google Scholar] [CrossRef]

- Bajwa, J., Munir, U., Nori, A., & Williams, B. (2021). Artificial intelligence in healthcare: Transforming the practice of medicine. Future Healthcare Journal, 8(2), e188–e194. [Google Scholar] [CrossRef] [PubMed]

- Bardin, L. (2007). L’analyse de contenu (13th ed.). Presses Universitaires de France. [Google Scholar]

- Beam, A. L., & Kohane, I. S. (2018). Big data and machine learning in health care. JAMA: Journal of the American Medical Association, 319(13), 1317–1318. [Google Scholar] [CrossRef] [PubMed]

- Behera, R. K., Bala, P. K., & Rana, N. P. (2023). Assessing factors influencing consumers’ non-adoption intention: Exploring the dark sides of mobile payment. Information Technology & People, 36(7), 2941–2976. [Google Scholar]

- Berelson, B. (1952). Content analysis in communication research. Free Press. [Google Scholar]

- Bhattacherjee, A., & Hikmet, N. (2007). Physicians’ resistance toward healthcare information technology: A theoretical model and empirical test. European Journal of Information Systems, 16(6), 725–737. [Google Scholar] [CrossRef]

- Bouarar, A. C., Mouloudj, S., Umar, T. P., & Mouloudj, K. (2023). Antecedents of physicians’ intentions to engage in digital volunteering work: An extended technology acceptance model (TAM) approach. Journal of Integrated Care, 31(4), 285–299. [Google Scholar] [CrossRef]

- Byrne, B. M. (2001). Structural equation modeling: Perspectives on the present and the future. International Journal of Testing, 1(3–4), 327–334. [Google Scholar] [PubMed]

- Cadario, R., Longoni, C., & Morewedge, C. K. (2021). Understanding, explaining, and utilizing medical artificial intelligence. Nature Human Behaviour, 5(12), 1636–1642. [Google Scholar] [CrossRef]

- Carracedo-Reboredo, P., Linares-Blanco, J., Rodríguez-Fernandez, N., Cedron, F., Novoa, F. J., Carballal, A., Maojo, V., Pazos, A., & Fernandez-Lozano, C. (2021). A review on machine learning approaches and trends in drug discovery. Computational and Structural Biotechnology Journal, 19, 4538–4558. [Google Scholar] [CrossRef] [PubMed]

- Cavana, R., Delahaye, B., & Sekeran, U. (2001). Applied business research: Qualitative and quantitative methods. John Wiley & Sons. [Google Scholar]

- Chaibi, A., & Zaiem, I. (2022). Doctor’s resistance to artificial intelligence in healthcare. International Journal of Healthcare Information Systems and Informatics, 17(1), 1–13. [Google Scholar] [CrossRef]

- Charfi, A. A. (2012). L’expérience d’immersion en ligne dans les environnements marchands de réalité virtuelle [Ph.D. thesis, University Paris Dauphine]. Available online: https://ideas.repec.org/b/dau/thesis/123456789-9785.html (accessed on 23 December 2024).

- Charlton, J. P. (2002). A factor-analytic investigation of computer ‘addiction’ and engagement. British Journal of Psychology, 93(3), 329–344. [Google Scholar] [CrossRef]

- Chen, C. C., Chang, C. H., & Hsiao, K. L. (2022). Exploring the factors of using mobile ticketing applications: Perspectives from innovation resistance theory. Journal of Retailing and Consumer Services, 67, 102974. [Google Scholar] [CrossRef]

- Chew, H. S. J., & Achananuparp, P. (2022). Perceptions and needs of artificial intelligence in health care to increase adoption: A scoping review. Journal of Medical Internet Research, 24(1), e32939. [Google Scholar] [CrossRef] [PubMed]

- Choudhary, R., Kaushik, A., & Igulu, K. T. (2022). Artificial Intelligence in Healthcare. In M. Gupta, D. Sharma, & H. Gupta (Eds.), Revolutionizing business practices through artificial intelligence and data-rich environments (pp. 1–20). IGI Global. [Google Scholar] [CrossRef]

- Choudrie, J., Junior, C. O., McKenna, B., & Richter, S. (2018). Understanding and conceptualising the adoption, use and diffusion of mobile banking in older adults: A research agenda and conceptual framework. Journal of Business Research, 88, 449–465. [Google Scholar] [CrossRef]

- Claudy, M. C., Garcia, R., & O’Driscoll, A. (2015). Consumer resistance to innovation—A behavioral reasoning perspective. Journal of the Academy of Marketing Science, 43, 528–544. [Google Scholar] [CrossRef]

- Coronato, A., Naeem, M., De Pietro, G., & Paragliola, G. (2020). Reinforcement learning for intelligent healthcare applications: A survey. Artificial Intelligence in Medicine, 109, 101964. [Google Scholar] [CrossRef]

- Creswell, J. W. (2013). Research design: Qualitative, quantitative, and mixed methods approaches (4th ed.). Sage Publications. [Google Scholar]

- Curran, J. M., Meuter, M. L., & Surprenant, C. F. (2003). Intentions to use self-service technologies: A confluence of multiple attitudes. Journal of Service Research, 5(3), 209–224. [Google Scholar] [CrossRef]

- Dabholkar, P. A., & Bagozzi, R. P. (2002). An attitudinal model of technology-based self-service: Moderating effects of consumer traits and situational factors. Journal of the Academy of Marketing Science, 30(3), 184–201. [Google Scholar] [CrossRef]

- Davenport, T., & Kalakota, R. (2019). The potential for artificial intelligence in healthcare. Future Healthcare Journal, 6(2), 94–98. [Google Scholar] [CrossRef]

- Davis, F. D., Bagozzi, R. P., & Warshaw, P. R. (1989). User acceptance of computer technology: A comparison of two theoretical models. Management Science, 35, 982–1003. [Google Scholar] [CrossRef]

- Davis, F. D., Bagozzi, R. P., & Warshaw, P. R. (1992). Extrinsic and intrinsic motivation to use computers in the workplace 1. Journal of Applied Social Psychology, 22(14), 1111–1132. [Google Scholar] [CrossRef]

- Davis, R. A. (2001). A cognitive-behavioral model of pathological Internet use. Computers in Human Behavior, 17(2), 187–195. [Google Scholar] [CrossRef]

- Denecke, K., & Baudoin, C. R. (2022). A review of artificial intelligence and robotics in transformed health ecosystems. Frontiers in Medicine, 9, 795957. [Google Scholar] [CrossRef] [PubMed]

- Deo, N., & Anjankar, A. (2023). Artificial intelligence with robotics in healthcare: A narrative review of its viability in India. Cureus, 15(5), e39416. [Google Scholar] [CrossRef] [PubMed]

- Dhagarra, D., Goswami, M., & Kumar, G. (2020). Impact of trust and privacy concerns on technology acceptance in healthcare: An Indian perspective. International Journal of Medical Informatics, 141, 104164. [Google Scholar] [CrossRef] [PubMed]

- Dora, M., Kumar, A., Mangla, S. K., Pant, A., & Kamal, M. M. (2022). Critical success factors influencing artificial intelligence adoption in food supply chains. International Journal of Production Research, 60(14), 4621–4640. [Google Scholar] [CrossRef]

- Dwivedi, Y. K., Balakrishnan, J., Das, R., & Dutot, V. (2023). Resistance to innovation: A dynamic capability model based enquiry into retailers’ resistance to blockchain adaptation. Journal of Business Research, 157, 113632. [Google Scholar] [CrossRef]

- Dwivedi, Y. K., Hughes, L., Ismagilova, E., Aarts, G., Coombs, C., Crick, T., Duan, Y., Dwivedi, R., Edwards, J., Eirug, A., Galanos, V., Ilavarasan, P. V., Janssen, M., Jones, P., Kar, A. K., Kizgin, H., Kronemann, B., Lal, B., Lucini, B., … Williams, M. D. (2019). Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. International Journal of Information Management, 57, 101994. [Google Scholar] [CrossRef]

- Eloranta, S., & Boman, M. (2022). Predictive models for clinical decision making: Deep dives in practical machine learning. Journal of Internal Medicine, 292, 278–295. [Google Scholar] [CrossRef]

- Esmaeilzadeh, P. (2020). Use of AI-based tools for healthcare purposes: A survey study from consumers’ perspectives. BMC medical Informatics and Decision Making, 20, 1–19. [Google Scholar] [CrossRef]

- Evrard, Y., Pras, B., & Roux, E. (2009). Market: Études et recherches en marketing (8th ed.). Dunod. [Google Scholar]

- Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50. [Google Scholar] [CrossRef]

- Fletcher, R. R., Nakeshimana, A., & Olubeko, O. (2021). Addressing fairness, bias, and appropriate use of artificial intelligence and machine learning in global health. Frontiers in Media SA, 3, 561802. [Google Scholar] [CrossRef] [PubMed]

- Fujimori, R., Liu, K., Soeno, S., Naraba, H., Ogura, K., Hara, K., Sonoo, T., Ogura, T., Nakamura, K., & Goto, T. (2022). Acceptance, barriers, and facilitators to implementing artificial intelligence-based decision support systems in emergency departments: A quantitative and qualitative evaluation. JMIR Formative Research, 6(6), e36501. [Google Scholar] [CrossRef]

- Gaczek, P., Pozharliev, R., Leszczyński, G., & Zieliński, M. (2023). Overcoming consumer resistance to AI in general health care. Journal of Interactive Marketing, 58(2–3), 321–338. [Google Scholar] [CrossRef]

- Gao, S., He, L., Chen, Y., Li, D., & Lai, K. (2020). Public perception of artificial intelligence in medical care: Content analysis of social media. Journal of Medical Internet Research, 22(7), e16649. [Google Scholar] [CrossRef]

- Ghaffar Nia, N., Kaplanoglu, E., & Nasab, A. (2023). Evaluation of artificial intelligence techniques in disease diagnosis and prediction. Discovery Artificial Intelligence, 3(1), 5. [Google Scholar] [CrossRef]

- Greenhalgh, T., Swinglehurst, D., & Stones, R. (2014). Rethinking resistance to ‘big IT’: A sociological study of why and when healthcare staff do not use nationally mandated information and communication technologies. Available online: https://pubmed.ncbi.nlm.nih.gov/27466649/ (accessed on 23 December 2024).

- Guarte, J. M., & Barrios, E. B. (2006). Estimation under purposive sampling. Communications in Statistics-Simulation and Computation, 35(2), 277–284. [Google Scholar] [CrossRef]

- Hafeez-Baig, A., & Gururajan, R. (2010). Adoption phenomena for wireless handheld devices in the healthcare environment. Journal of Communication in Healthcare, 3(3–4), 228–239. [Google Scholar] [CrossRef]

- Hajiheydari, N., Delgosha, M. S., & Olya, H. (2021). Skepticism and resistance to IoMT in healthcare: Application of behavioral reasoning theory with configurational perspective. Technological Forecasting and Social Change, 169, 120807. [Google Scholar] [CrossRef]

- Hameed, B. Z., Naik, N., Ibrahim, S., Tatkar, N. S., Shah, M. J., Prasad, D., Hegde, P., Chlosta, P., Rai, B. P., & Somani, B. K. (2023). Breaking barriers: Unveiling factors influencing the adoption of artificial intelligence by healthcare providers. Big Data and Cognitive Computing, 7(2), 105. [Google Scholar] [CrossRef]

- Hasanein, A. M., & Sobaih, A. E. E. (2023). Drivers and consequences of ChatGPT use in higher education: Key stakeholder perspectives. European Journal of Investigation in Health, Psychology and Education, 13(11), 2599–2614. [Google Scholar] [CrossRef] [PubMed]

- He, J., Baxter, S. L., Xu, J., Xu, J., Zhou, X., & Zhang, K. (2019). The practical implementation of artificial intelligence technologies in medicine. Nature Medicine, 25, 30–36. [Google Scholar] [CrossRef] [PubMed]

- He, W., Zhang, Z. J., & Li, W. (2021). Information technology solutions, challenges, and suggestions for tackling the COVID-19 pandemic. International Journal of Information Management, 57, 102287. [Google Scholar] [CrossRef] [PubMed]

- Heidenreich, S., & Kraemer, T. (2016). Innovations—doomed to fail? Investigating strategies to overcome passive innovation resistance. Journal of Product Innovation Management, 33(3), 277–297. [Google Scholar] [CrossRef]

- Heinze, J., Thomann, M., & Fischer, P. (2017). Ladders to m-commerce resistance: A qualitative means-end approach. Computers in Human Behavior, 73, 362–374. [Google Scholar] [CrossRef]

- Holden, R. J., & Karsh, B. T. (2010). The technology acceptance model: Its past and its future in health care. Journal of Biomedical Informatics, 43(1), 159–172. [Google Scholar] [CrossRef] [PubMed]

- Hseih, J. J. P. A., & Lin, C. H. (2017, July 16–20). A study of factors affecting acceptance of AI technology in healthcare. Proceedings of the 21st Pacific Asia Conference on Information Systems, Langkawi, Malaysia. [Google Scholar]

- Irimia-Diéguez, A., Velicia-Martín, F., & Aguayo-Camacho, M. (2023). Predicting FinTech innovation adoption: The mediator role of social norms and attitudes. Financial Innovation, 9(1), 36. [Google Scholar] [CrossRef]

- Iserson, K. V. (2024). Informed consent for artificial intelligence in emergency medicine: A practical guide. American Journal of Emergency Medicine, 76, 225–230. [Google Scholar] [CrossRef] [PubMed]

- Jiang, F., Jiang, Y., Zhi, H., Dong, Y., Li, H., Ma, S., Wang, Y., Dong, Q., Shen, H., & Wang, Y. (2017). Artificial intelligence in healthcare: Past, present and future. Stroke and Vascular Neurology, 2(4), 230–243. [Google Scholar] [CrossRef]

- Joachim, V., Spieth, P., & Heidenreich, S. (2018). Active innovation resistance: An empirical study on functional and psychological barriers to innovation adoption in different contexts. Industrial Marketing Management, 71, 95–107. [Google Scholar] [CrossRef]

- Johnson, K. B., Wei, W. Q., Weeraratne, D., Frisse, M. E., Misulis, K., Rhee, K., Zhao, J., & Snowdon, J. L. (2020). Precision medicine, AI, and the future of personalized health care. Clinical and Translational Science, 14(1), 86–93. [Google Scholar] [CrossRef]

- Ju, N., & Lee, K. H. (2021). Perceptions and resistance to accept smart clothing: Moderating effect of consumer innovativeness. Applied Sciences, 11(7), 3211. [Google Scholar] [CrossRef]

- Jussupow, E., Spohrer, K., & Heinzl, A. (2022). Identity threats as a reason for resistance to artificial intelligence: Survey study with medical students and professionals. JMIR Formative Research, 6(3), e28750. [Google Scholar] [CrossRef] [PubMed]

- Kaplan, A., & Haenlein, M. (2019). Siri, Siri, in my hand: Who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Business Horizons, 62(1), 15–25. [Google Scholar] [CrossRef]

- Kaur, P., Dhir, A., Singh, N., Sahu, G., & Almotairi, M. (2020). An innovation resistance theory perspective on mobile payment solutions. Journal of Retailing and Consumer Services, 55, 102059. [Google Scholar] [CrossRef]

- Khanna, N. N., Maindarkar, M. A., Viswanathan, V., Fernandes, J. F. E., Paul, S., Bhagawati, M., Ahluwalia, P., Ruzsa, Z., Sharma, A., Kolluri, R., & Singh, I. M. (2022). Economics of artificial intelligence in healthcare: Diagnosis vs. treatment. Healthcare, 10(12), 2493. [Google Scholar] [CrossRef] [PubMed]

- Khanra, S., Dhir, A., Islam, A. N., & Mäntymäki, M. (2020). Big data analytics in healthcare: A systematic literature review. Enterprise Information Systems, 14(7), 878–912. [Google Scholar] [CrossRef]

- Kim, D. H., & Lee, Y. J. (2020). The effect of innovation resistance of users on intention to use mobile health applications. Journal of the Korean BIBLIA Society for Library and Information Science, 31(1), 5–20. [Google Scholar]

- Kim, H. W., & Kankanhalli, A. (2009). Investigating user resistance to information systems Implementation: A status quo bias perspective. MIS Quarterly, 33(3), 567–582. [Google Scholar] [CrossRef]

- Kim, J.-Y., & Heo, W. (2022). Artificial intelligence video interviewing for employment: Perspectives from applicants, companies, developers and academicians. Information Technology & People, 35(3), 861–878. [Google Scholar]

- Kishor, A., & Chakraborty, C. (2022). Artificial intelligence and internet of things based healthcare 4.0 monitoring system. Wireless Personal Communications, 127(2), 1615–1631. [Google Scholar] [CrossRef]

- Köse, A. (2023). Artificial intelligence in health and applications. In A. Bouarar, K. Mouloudj, & D. Martínez Asanza (Eds.), Integrating digital health strategies for effective administration (pp. 20–31). IGI Global. [Google Scholar] [CrossRef]

- Laï, M.-C., Brian, M., & Mamzer, M.-F. (2020). Perceptions of artificial intelligence in healthcare: Findings from a qualitative survey study among actors in France. Journal of Translational Medicine, 18, 14. [Google Scholar] [CrossRef]

- Lapointe, L., & Rivard, S. (2006). Getting physicians to accept new information technology: Insights from case studies. CMAJ, 174, 1573–1578. [Google Scholar] [CrossRef]

- Laukkanen, T. (2016). Consumer adoption versus rejection decisions in seemingly similar service innovations: The case of the Internet and mobile banking. Journal of Business Research, 69, 2432–2439. [Google Scholar] [CrossRef]

- Laukkanen, T., & Kiviniemi, V. (2010). The role of information in mobile banking resistance. International Journal of Bank Marketing, 28(5), 372–388. [Google Scholar] [CrossRef]

- Lebcir, R., Hill, T., Atun, R., & Cubric, M. (2021). Stakeholders’ views on the organizational factors affecting the application of artificial intelligence in healthcare: A scoping review protocol. BMJ Open, 11(3), e044074. [Google Scholar] [CrossRef]

- Lee, C., & Yu, S. (1994). A study on the innovation resistance of consumers in the adoption process of new products: Focused on the innovation resistance model. Korean Management Review, 23(3), 217–249. [Google Scholar]

- Lee, K., & Joshi, K. (2017). Examining the use of status quo bias perspective in IS research: Need for re-conceptualizing and incorporating biases. Information Systems Journal, 27(6), 733–752. [Google Scholar] [CrossRef]

- Lee, M. K., & Rich, K. (2021, May 8–13). Who is included in human perceptions of AI?: Trust and perceived fairness around healthcare AI and cultural mistrust. 2021 CHI Conference on Human Factors in Computing Systems (pp. 1–14), Online Virtual. [Google Scholar]

- Leong, L. Y., Hew, T. S., Ooi, K. B., & Wei, J. (2020). Predicting mobile wallet resistance: A two-staged structural equation modeling-artificial neural network approach. International Journal of Information Management, 51, 102047. [Google Scholar] [CrossRef]

- Licoppe, C., & Heurtin, J. P. (2001). Managing one’s availability to telephone communication through mobile phones: A French Case Study of the development dynamics of mobile phone use. Personal and Ubiquitous Computing, 5(2), 99–108. [Google Scholar] [CrossRef]

- Lu, H. Y. (2008). Sensation-seeking, Internet dependency, and online interpersonal deception. CyberPsychology & Behavior, 11, 227–231. [Google Scholar]

- Madan, R., & Ashok, M. (2023). AI adoption and diffusion in public administration: A systematic literature review and future research agenda. Government Information Quarterly, 40(1), 101774. [Google Scholar] [CrossRef]

- Maleki Varnosfaderani, S., & Forouzanfar, M. (2024). The role of AI in hospitals and clinics: Transforming healthcare in the 21st century. Bioengineering, 11(4), 337. [Google Scholar] [CrossRef]

- Manco, L., Maffei, N., Strolin, S., Vichi, S., Bottazzi, L., & Strigari, L. (2021). Basic of machine learning and deep learning in imaging for medical physicists. Physica Medica, 83, 194–205. [Google Scholar] [CrossRef] [PubMed]

- Mani, Z., & Chouk, I. (2017). Drivers of consumers’ resistance to smart products. Journal of Marketing Management, 33(1–2), 76–97. [Google Scholar] [CrossRef]

- Mani, Z., & Chouk, I. (2018). Consumer resistance to innovation in services: Challenges and barriers in the internet of things era. Journal of Product Innovation Management, 35(5), 780–807. [Google Scholar] [CrossRef]

- Markus, M. L. (1983). Power, politics, and MIS implementation. Communications of the ACM, 26(6), 430–444. [Google Scholar]

- Merhi, M. I. (2023). An evaluation of the critical success factors impacting artificial intelligence implementation. International Journal of Information Management, 69, 102545. [Google Scholar] [CrossRef]

- Migliore, G., Wagner, R., Cechella, F. S., & Liébana-Cabanillas, F. (2022). Antecedents to the adoption of mobile payment in China and Italy: An integration of UTAUT2 and innovation resistance theory. Information Systems Frontiers, 24(6), 2099–2122. [Google Scholar] [CrossRef] [PubMed]

- Morel, K. P., & Pruyn, A. T. H. (2003). Consumer skepticism toward new products. In ACR European Advances (pp. 351–358). Association for Consumer Research. [Google Scholar]

- Mugabe, K. V. (2021). Barriers and facilitators to the adoption of artificial intelligence in radiation oncology: A New Zealand study. Technical Innovations & Patient Support in Radiation Oncology, 18, 16–21. [Google Scholar] [CrossRef]

- Murdoch, B. (2021). Privacy and artificial intelligence: Challenges for protecting health information in a new era. BMC Medical Ethics, 22, 122. [Google Scholar] [CrossRef]

- Nel, J., & Boshoff, C. (2019). Online customers’ habit-inertia nexus as a conditional effect of mobile-service experience: A moderated-mediation and moderated serial-mediation investigation of mobile-service use resistance. Journal of Retailing and Consumer Services, 47, 282–292. [Google Scholar] [CrossRef]

- Neumann, O., Guirguis, K., & Steiner, R. (2024). Exploring artificial intelligence adoption in public organizations: A comparative case study. Public Management Review, 26(1), 114–141. [Google Scholar] [CrossRef]

- Nilsen, E. R., Dugstad, J., Eide, H., Gullslett, M. K., & Eide, T. (2016). Exploring resistance to implementation of welfare technology in municipal healthcare services—A longitudinal case study. BMC Health Services Research, 16(1), 1–14. [Google Scholar] [CrossRef] [PubMed]

- Olawade, D. B., Wada, O. J., David-Olawade, A. C., Kunonga, E., & Abaire, O. J. (2023). Using artificial intelligence to improve public health: A narrative review. Frontiers in Public Health, 11, 1196397. [Google Scholar] [CrossRef] [PubMed]

- Oren, O., Gersh, B. J., & Bhatt, D. L. (2020). Artificial intelligence in medical imaging: Switching from radiographic pathological data to clinically meaningful endpoints. The Lancet Digital Health, 2(9), e486–e488. [Google Scholar] [CrossRef] [PubMed]

- Pai, F. Y., & Huang, K. I. (2011). Applying the technology acceptance model to the introduction of healthcare information systems. Technological Forecasting and Social Change, 78(4), 650–660. [Google Scholar] [CrossRef]

- Park, N., Kim, Y.-C., Shon, H. Y., & Shim, H. (2013). Factors influencing smartphone use and dependency in South Korea. Computers in Human Behavior, 29(4), 1763–1770. [Google Scholar] [CrossRef]

- Pinto-Coelho, L. (2023). How artificial intelligence is shaping medical imaging technology: A survey of innovations and applications. Bioengineering, 10(12), 1435. [Google Scholar] [CrossRef] [PubMed]

- Rackham, N. (1988). From experience: Why bad things happen to good new products. Journal of Product Innovation Management, 15(3), 201–207. [Google Scholar] [CrossRef]

- Ram, S. (1987). A model of innovation resistance. In M. Wallendorf, & P. Anderson (Eds.), Advances in consumer research (pp. 208–215). Association for Consumer Research. [Google Scholar]

- Ram, S., & Sheth, J. N. (1989). Consumer resistance to innovations: The marketing problem and its solutions. Journal of Consumer Marketing, 6(2), 5–14. [Google Scholar] [CrossRef]

- Rasheed, H. M. W., He, Y., Khizar, H. M. U., & Abbas, H. S. M. (2023). Exploring consumer-robot interaction in the hospitality sector: Unpacking the reasons for adoption (or resistance) to artificial intelligence. Technological Forecasting and Social Change, 192, 122555. [Google Scholar] [CrossRef]

- Ratchford, M., & Barnhart, M. (2012). Development and validation of the technology adoption propensity (TAP) index. Journal of Business Research, 65(8), 1209–1215. [Google Scholar] [CrossRef]

- Rawashdeh, A., Bakhit, M., & Abaalkhail, L. (2023). Determinants of artificial intelligence adoption in SMEs: The mediating role of accounting automation. International Journal of Data and Network Science, 7(1), 25–34. [Google Scholar] [CrossRef]

- Reddy, S., Fox, J., & Purohit, M. P. (2019). Artificial intelligence-enabled healthcare delivery. Journal of the Royal Society of Medicine, 112(1), 22–28. [Google Scholar] [CrossRef] [PubMed]

- Rowlands, J. (2021). Interviewee transcript review as a tool to improve data quality and participant confidence in sensitive research. International Journal of Qualitative Methods, 20, 16094069211066170.

- Russell, S. J., & Norvig, P. (2016). Artificial intelligence: A modern approach. Pearson.

- Sadiq, M., Adil, M., & Paul, J. (2021). An innovation resistance theory perspective on purchase of eco-friendly cosmetics. Journal of Retailing and Consumer Services, 59, 102369.

- Samuelson, W., & Zeckhauser, R. (1988). Status quo bias in decision making. Journal of Risk and Uncertainty, 1(1), 7–59.

- Saunders, M., Lewis, P., & Thornhill, A. (2012). Research methods for business students (6th ed.). Pearson Education Limited.

- Seth, H., Talwar, S., Bhatia, A., Saxena, A., & Dhir, A. (2020). Consumer resistance and inertia of retail investors: Development of the resistance adoption inertia continuance (RAIC) framework. Journal of Retailing and Consumer Services, 55, 102071.

- Shankar, V. (2018). How artificial intelligence (AI) is reshaping retailing. Journal of Retailing, 94(4), vi–xi.

- Shu, Q., Tu, Q., & Wang, K. (2011). The impact of computer self-efficacy and technology dependence on computer-related technostress: A social cognitive theory perspective. International Journal of Human-Computer Interaction, 27(10), 923–939.

- Singh, N., Jain, M., Kamal, M. M., Bodhi, R., & Gupta, B. (2024). Technological paradoxes and artificial intelligence implementation in healthcare. An application of paradox theory. Technological Forecasting and Social Change, 198, 122967.

- Sobaih, A. E. (2024). Ethical concerns for using artificial intelligence chatbots in research and publication: Evidences from Saudi Arabia. Journal of Applied Learning and Teaching, 7(1).

- Sobaih, A. E. E., Elshaer, I. A., & Hasanein, A. M. (2024). Examining students’ acceptance and use of ChatGPT in Saudi Arabian higher education. European Journal of Investigation in Health, Psychology and Education, 14(3), 709–721.

- Strohm, L., Hehakaya, C., Ranschaert, E. R., Boon, W. P., & Moors, E. H. (2020). Implementation of artificial intelligence (AI) applications in radiology: Hindering and facilitating factors. European Radiology, 30(10), 5525–5532.

- Talwar, S., Dhir, A., Kaur, P., & Mäntymäki, M. (2020). Barriers toward purchasing from online travel agencies. International Journal of Hospitality Management, 89, 102593.

- Teddlie, C., & Yu, F. (2007). Mixed methods sampling: A typology with examples. Journal of Mixed Methods Research, 1(1), 77–100.

- Temessek, Z. (2008). The role of qualitative research in broadening the understanding of knowledge management. The Learning Organization, 15(2), 159–172.

- Tsai, T. H., Lin, W. Y., Chang, Y. S., Chang, P. C., & Lee, M. Y. (2020). Technology anxiety and resistance to change behavioral study of a wearable cardiac warming system using an extended TAM for older adults. PLoS ONE, 15(1), e0227270.

- Turja, T., Aaltonen, I., Taipale, S., & Oksanen, A. (2020). Robot acceptance model for care (RAM-care): A principled approach to the intention to use care robots. Information & Management, 57(5), 103220.

- UKRI. (2022). Artificial intelligence technologies. UKRI. Available online: https://www.ukri.org/what-we-do/browse-our-areas-of-investment-and-support/artificial-intelligence-technologies/ (accessed on 17 September 2024).

- Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425–478.

- Venkatesh, V., Thong, J. Y., & Xu, X. (2012). Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Quarterly, 36(1), 157–178.

- Walker, R. H., & Johnson, L. W. (2006). Why consumers use and do not use technology-enabled services. Journal of Services Marketing, 20(2), 125–135.

- Walker, R. H., Craig-Lees, M., Hecker, R., & Francis, H. (2002). Technology-enabled service delivery: An investigation of reasons affecting customer adoption and rejection. International Journal of Service Industry Management, 13(1), 91–106.

- Weber, R. P. (1990). Basic content analysis (Vol. 49). Sage.

- Xue, Y., Zhang, X., Zhang, Y., & Luo, E. (2024). Understanding the barriers to consumer purchasing of electric vehicles: The innovation resistance theory. Sustainability, 16(6), 2420.

- Yang, Y., Ngai, E. W., & Wang, L. (2024). Resistance to artificial intelligence in health care: Literature review, conceptual framework, and research agenda. Information & Management, 61(4), 103961.

- Young, K. S. (2004). Internet addiction: A new clinical phenomenon and its consequences. American Behavioral Scientist, 48(4), 402–415.

- Yu, C. S., & Chantatub, W. (2016). Consumers’ resistance to using mobile banking: Evidence from Thailand and Taiwan. International Journal of Electronic Commerce Studies, 7(1), 21–38.

- Yu, K.-H., Beam, A. L., & Kohane, I. S. (2018). Artificial intelligence in healthcare. Nature Biomedical Engineering, 2(10), 719–731.

- Zhang, W., Cai, M., Lee, H. J., Evans, R., Zhu, C., & Ming, C. (2024). AI in medical education: Global situation, effects and challenges. Education and Information Technologies, 29(4), 4611–4633.

- Zhou, B., Yang, G., Shi, Z., & Ma, S. (2022). Natural language processing for smart healthcare. IEEE Reviews in Biomedical Engineering, 17, 4–18.

- Zikmund, W. G. (2010). Business research methods (8th ed.). South-Western Cengage Learning.

| AI Techniques | Application in Healthcare |
|---|---|
| Medical Imaging Analysis (Oren et al., 2020; Manco et al., 2021; Pinto-Coelho, 2023) | |
| Predictive Analytics (Ghaffar Nia et al., 2023) | |
| Charting, Chatbots, and Virtual Assistants (Agatstein, 2023; Alowais et al., 2023) | |
| AI-driven Robots (Deo & Anjankar, 2023; Denecke & Baudoin, 2022) | |
| Virtual Screening (Carracedo-Reboredo et al., 2021) | |

| Theory | Description | Authors |
|---|---|---|
| Status Quo Bias | The Status Quo Bias theory provides valuable insights into individuals’ inclination to maintain the status quo rather than embracing new systems, rooted in established psychological principles. It delineates psychological commitment, cognitive misperception, and rational decision-making as pivotal factors influencing decision inertia. While acknowledging the facilitating role of perceived value, the theory may oversimplify intricate decision processes and neglect external factors impacting decision-making dynamics. Despite offering a framework for comprehending resistance to change, it may not comprehensively encapsulate the nuanced dynamics of individual decision-making or accommodate situational influences. | Samuelson and Zeckhauser (1988), H. W. Kim and Kankanhalli (2009), K. Lee and Joshi (2017), Hajiheydari et al. (2021) |
| Ram (1987) Theory | Proposes two elements influencing resistance to innovation: innovation characteristics and consumer characteristics. Innovation characteristics include the features and effects of new goods on consumers, while consumer characteristics are psychological traits influencing resistance. Ram and Sheth (1989) considered two categories of hurdles to innovation adoption: functional and psychological. The functional hurdles include subcategories such as usage, value, and risk hurdles, and are active forms of resistance stemming from the innovation’s characteristics and features (Heidenreich & Kraemer, 2016). These hurdles arise when adopting an innovation necessitates significant changes, leading to concerns about risk, usage, and value. In contrast, psychological hurdles include tradition and image barriers, rooted in consumers’ existing worldviews, perceptions, and traditions (Yu & Chantatub, 2016). | Ram (1987), Ram and Sheth (1989) |
| Expanded Ram Model | Researchers expanded the Ram and Sheth model. Laukkanen and Kiviniemi (2010) explored the impact of company information on resistance barriers. Joachim et al. (2018) proposed a broader framework, including a more inclusive classification of product- and service-specific hurdles. Mani and Chouk (2018) introduced additional obstacles: technological vulnerability, and ideological and personal barriers. | Laukkanen and Kiviniemi (2010), Joachim et al. (2018), Mani and Chouk (2018) |
| Yu and Lee Model | A refinement of Ram’s model of innovation resistance that distinguishes between innovation resistance and hurdles. Yu and Lee proposed that only the customer and innovation aspects in Ram’s model give rise to innovation resistance, while the process of propagation acts as a societal barrier to innovation diffusion (C. Lee & Yu, 1994). | C. Lee and Yu (1994) |

| Study | Focus | Key Findings |
|---|---|---|
| Alhashmi et al. (2019) | AI adoption barriers in Australian organizations | Identified barriers using the TOE framework; provided insights and a research agenda for executives and managers. |
| Zhang et al. (2024) | AI in medical education | Review identified challenges including performance improvement, effectiveness, AI training data, and algorithms. |
| Strohm et al. (2020) | Implementation barriers in clinical radiology | Inconsistent technical performance, unstructured processes, uncertain added value, and varying acceptance/trust. |
| Cadario et al. (2021) | Resistance to medical AI | Challenges in understanding algorithms and illusory understanding of human decision-making; proposed interventions. |
| Gao et al. (2020) | Social media analysis of attitudes toward AI doctors | Revealed positive attitudes tempered by concerns about technology maturity and company trustworthiness. |
| Fujimori et al. (2022) | AI-based decision support systems in emergency departments | Highlighted system performance and compatibility as significant challenges. |
| Ahmed et al. (2023) | Barriers to AI adoption in healthcare | A systematic review identified hurdles in six key areas: ethics, liability, regulatory, workforce, social, and patient safety; emphasized the need for understanding and overcoming these barriers for effective AI implementation in healthcare. |
| Bhattacherjee and Hikmet (2007) | Theoretical model of physician resistance to HIT usage | Identified perceived threat and compatibility as key factors in resistance intentions. |
| Gaczek et al. (2023) | Consumer resistance to AI healthcare recommendations | Impact of diagnosis trustworthiness and health anxiety; social proof as a mitigating factor. |
| Mugabe (2021) | AI adoption in radiation oncology in New Zealand | Noted low levels of expertise as a hindrance to AI use. |
| Jussupow et al. (2022) | Professional identity threats in medical AI resistance | Examined perceived self-threat, temporal distance of AI, and differences between students and professionals. |
| Chaibi and Zaiem (2022) | Barriers to AI adoption among physicians in Tunisia | Poor infrastructure, including financial resources, specialized training, performance risks, perceived costs, technology dependency, and fears of AI replacing human jobs. |

| Theme | Subtheme | Citation Times | % of the Theme | Verbatim | General Explanation |
|---|---|---|---|---|---|
| Psychological Factors Affecting Patient Resistance to AI | Need for Personal Contact | 38 | 88.37% | | The sub-theme “Need for Personal Contact” highlights participants’ strong preference for human interaction, empathy, and trust in healthcare settings. Patients express concerns about AI replacing essential human qualities, such as emotional understanding, personalized care, and the human touch. The quotes emphasize that trust, emotional security, and face-to-face communication are vital for their well-being, which they believe AI cannot replicate. Participants also worry about AI’s inability to fully capture nuanced health details that doctors might identify in person. |
| | Perceived Technological Dependence | 13 | 30.23% | | The sub-theme “Perceived Technological Dependence” reflects participants’ concerns about over-reliance on AI in healthcare. The quotes highlight fears about losing human involvement in decision-making, the risk of technology failures, and the erosion of patients’ personal control over their care. Participants emphasize the importance of maintaining human intuition, judgment, and autonomy, which they feel are diminished when healthcare depends too heavily on AI. |
| | General Skepticism | 17 | 39.53% | | The sub-theme “General Skepticism” captures participants’ doubts and lack of confidence in AI technology for healthcare. The quotes reflect concerns about the complexity and personal nature of healthcare, where participants prefer human judgment over machines. Fears of errors, misdiagnoses, and the unknown risks associated with AI highlight a general discomfort and distrust in relying on technology for critical health decisions. Participants also expressed reluctance to adopt AI due to feeling like test subjects for unproven technologies. |
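The percentages in the table above are simple ratios of citation counts to a common base. As a minimal sketch, the snippet below back-calculates the base implied by each reported count and percentage. The function name `implied_base` is hypothetical, and the resulting base is an inference from the reported figures, not a number stated in the table itself.

```python
# Reported (citation count, percentage) pairs, copied from the table above.
REPORTED = {
    "Need for Personal Contact": (38, 88.37),
    "Perceived Technological Dependence": (13, 30.23),
    "General Skepticism": (17, 39.53),
}


def implied_base(count, percent):
    """Return the denominator implied by a count and its reported percentage."""
    return count / (percent / 100.0)


def percentage(count, base):
    """Return count as a percentage of base, rounded to two decimals."""
    return round(100.0 * count / base, 2)


# Round each implied denominator to the nearest whole number.
bases = {name: round(implied_base(c, p)) for name, (c, p) in REPORTED.items()}

for name, (count, percent) in REPORTED.items():
    base = bases[name]
    print(f"{name}: {count}/{base} = {percentage(count, base)}% (reported {percent}%)")
```

All three rows imply the same base, and the counts add up to more than that base, which is consistent with the subthemes not being mutually exclusive in this tally.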

| Constructs | Measurement Items |
|---|---|
| The Need for Personal Contact (NPC) | NPC1. “I prefer to deal face-to-face with my doctor”. NPC2. “I am more reassured by dealing face-to-face with my doctor”. NPC3. “My particular service requirements are better served by doctors”. NPC4. “I prefer face-to-face contact to explain what I want to my doctor and to answer my questions”. NPC5. “I feel like I’m more in control when dealing with my doctor than with automated systems”. NPC6. “I like interacting with my doctor and medical staff in general”. |
| Perceived Technological Dependence (PTD) | PTD1. “I am afraid of becoming dependent on AI technology”. PTD2. “I am afraid that my doctor become dependent on AI technology”. PTD3. “AI technology will reduce my autonomy and my doctor’s autonomy”. PTD4. “I think my social life will suffer from my use of AI technology”. |
| General Skepticism Toward AI (GSAI) | GSAI1. “I am skeptical about AI technology”. GSAI2. “I do not think AI technology will be successful”. GSAI3. “I doubt that AI technology can actually do what its manufacturers promise”. |
| Resistance to Use AI (RU) | RU1. “In sum, the possible use of AI technology to manage my health would cause problems that I don’t need”. RU2. “AI technology to manage my health would be connected with too many uncertainties”. RU3. “Using AI technology for managing my health is not for me”. RU4. “I am likely to be opposed to the use of AI technology for managing my health”. RU5. “I do not need AI technology to manage my health”. |

| Construct / Item | Factor Loading | CR | AVE | p-Value |
|---|---|---|---|---|
| The Need for Personal Contact | | 0.989 | 0.935 | |
| NPC1 | 0.980 | | | *** |
| NPC2 | 0.946 | | | *** |
| NPC3 | 0.966 | | | *** |
| NPC4 | 0.970 | | | *** |
| NPC5 | 0.974 | | | *** |
| NPC6 | 0.966 | | | |
| Perceived Technological Dependence | | 0.974 | 0.902 | |
| PTD1 | 0.965 | | | *** |
| PTD2 | 0.944 | | | *** |
| PTD3 | 0.968 | | | *** |
| PTD4 | 0.923 | | | |
| General Skepticism Toward AI | | 0.976 | 0.931 | |
| GSAI1 | 0.959 | | | *** |
| GSAI2 | 0.968 | | | *** |
| GSAI3 | 0.967 | | | *** |
| Resistance to Use AI | | 0.981 | 0.911 | |
| RU1 | 0.945 | | | *** |
| RU2 | 0.933 | | | *** |
| RU3 | 0.966 | | | *** |
| RU4 | 0.952 | | | *** |
| RU5 | 0.975 | | | *** |
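The CR and AVE columns above can be related to the item loadings with the usual Fornell–Larcker formulas. The sketch below assumes standardized loadings and uncorrelated measurement errors, which is a common convention but is not confirmed by the table as the authors' exact procedure. The function names are hypothetical, and the loadings are copied from the table.

```python
# Standardized factor loadings per construct, copied from the table above.
LOADINGS = {
    "NPC": [0.980, 0.946, 0.966, 0.970, 0.974, 0.966],
    "PTD": [0.965, 0.944, 0.968, 0.923],
    "GSAI": [0.959, 0.968, 0.967],
    "RU": [0.945, 0.933, 0.966, 0.952, 0.975],
}


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumption: each item's error variance is 1 - loading^2, which holds
    for standardized loadings with uncorrelated errors.
    """
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1.0 - l * l for l in loadings)
    return squared_sum / (squared_sum + error_variance)


for construct, loadings in LOADINGS.items():
    cr = composite_reliability(loadings)
    ave = average_variance_extracted(loadings)
    print(f"{construct}: CR = {cr:.3f}, AVE = {ave:.3f}")
```

Under these assumptions the computed values agree with the reported CR and AVE to within about 0.001, with the small gaps attributable to the loadings being rounded to three decimals.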

| Hypotheses | Path Coefficient | Standard Error | C.R. | p Values | Results |
|---|---|---|---|---|---|
| NPC → RU | 0.515 | 0.021 | 17.058 | *** | Accepted |
| PTD → RU | 0.620 | 0.023 | 18.973 | *** | Accepted |
| GSAI → RU | 0.222 | 0.019 | 7.900 | *** | Accepted |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the University Association of Education and Psychology. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sobaih, A.E.E.; Chaibi, A.; Brini, R.; Abdelghani Ibrahim, T.M. Unlocking Patient Resistance to AI in Healthcare: A Psychological Exploration. Eur. J. Investig. Health Psychol. Educ. 2025, 15, 6. https://doi.org/10.3390/ejihpe15010006
