Text Mining of Hazard and Operability Analysis Reports Based on Active Learning

Abstract

1. Introduction

- An improved text mining method is proposed to extract key information from HAZOP reports, and active learning is applied to chemical safety entity recognition for the first time;

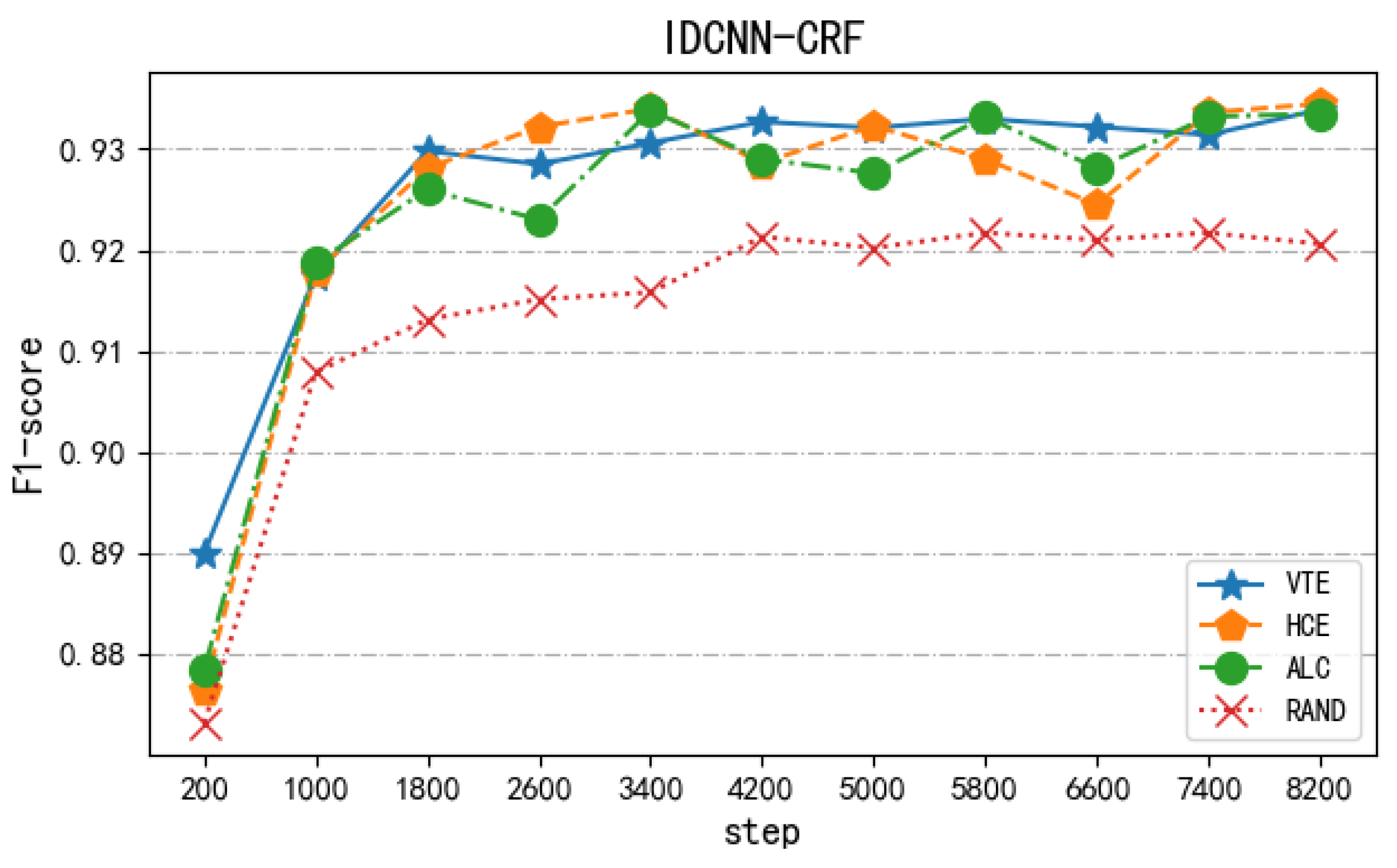

- Three novel sampling algorithms, VTE, HCE, and ALC, are proposed. They effectively select high-quality samples, which improves the model's ability to understand HAZOP text;

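- Experiments show that the method and the three sampling algorithms are effective: with each algorithm, the CRF, IDCNN-CRF, and BiLSTM-CRF models achieve higher precision, recall, and F1 than the same models trained without active learning.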

2. Related Research

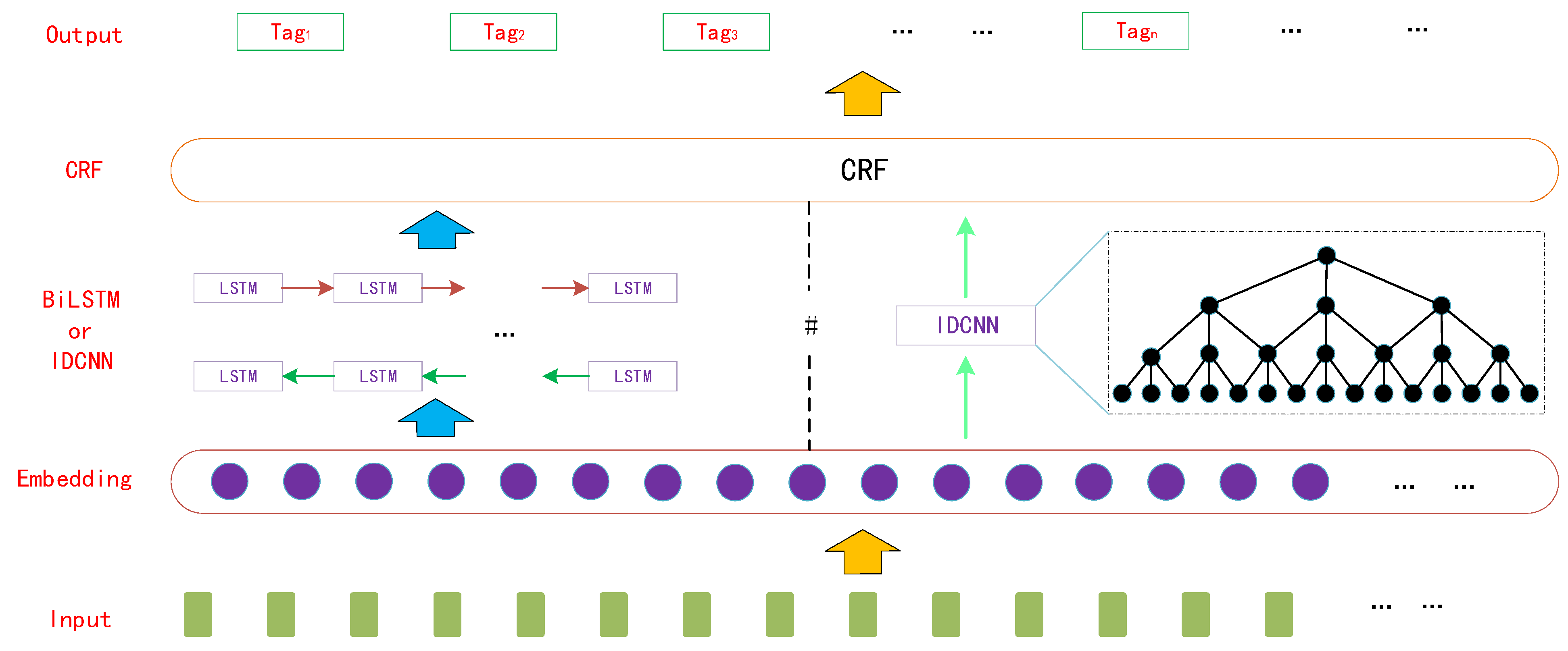

2.1. NER Model

2.2. The Characteristics of HAZOP Report

- The reports contain many domain-specific expressions for processes, equipment, and materials, such as “Fischer-Tropsch reactor” and “rich liquid flash tank”;

- The technical vocabulary is large, and its terms are formed differently from general-domain words. The terms have low causality and fuzzy semantics, and the same entity may be expressed in different ways;

- Entities of different types are nested within each other. For example, “recycle gas compressor” can correspond to different entity types depending on context: the material “recycle gas” or the equipment “compressor”. This ambiguity makes the text harder for the model to interpret;

- Polysemy and synonymy are common. For example, “interrupt” has a different part of speech in “interrupt device” than in “device interrupt”. In some contexts, the guide words “low”, “small”, and “little” have the same meaning;

- Chemical safety entities are longer than general-domain entities, for example “antifreeze dehydration tower overhead air cooler”, “release gas compressor reflux cooler”, and “ethylene glycol recovery tower overhead knockout tank”, which makes model fitting harder.

3. Method

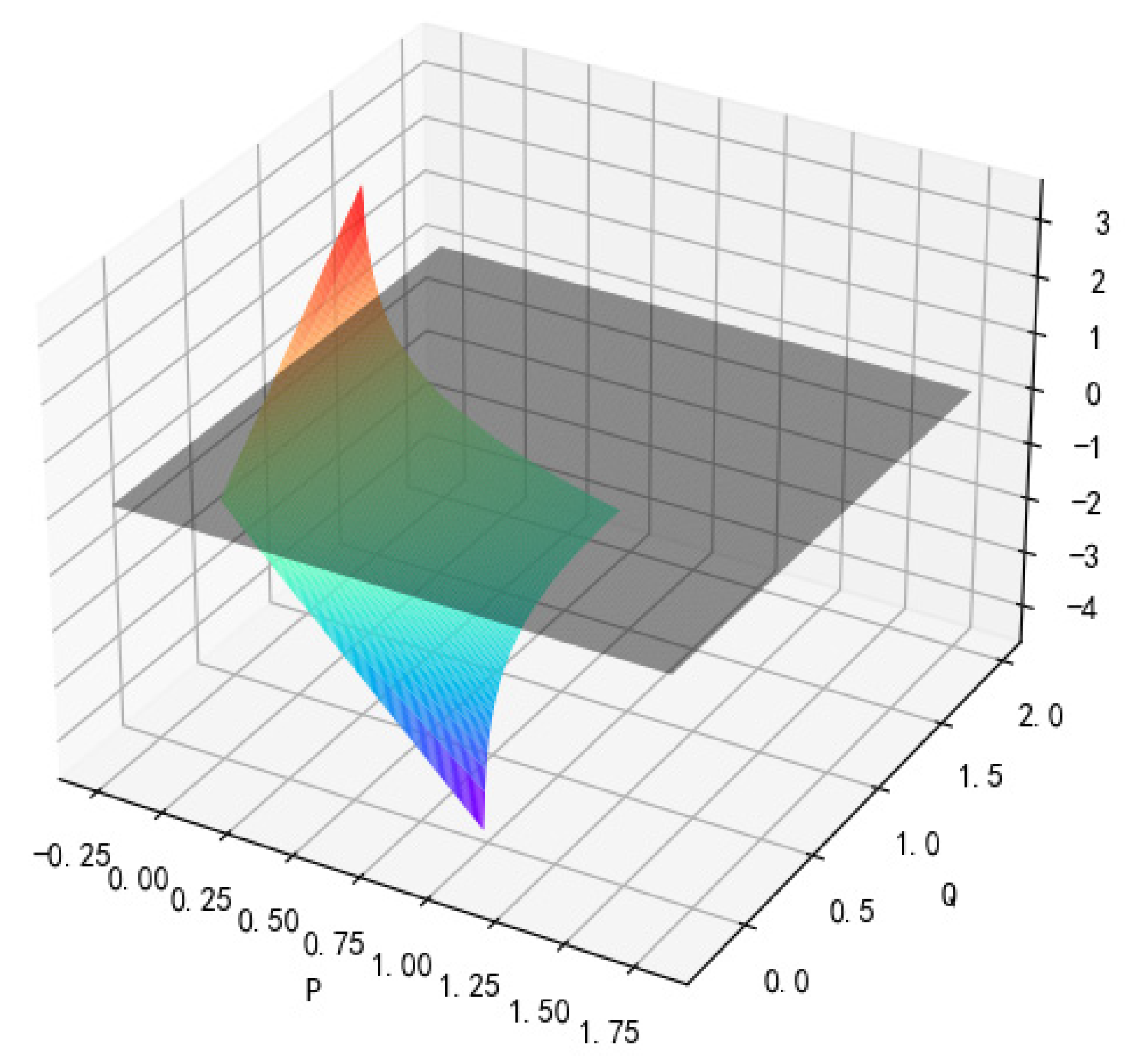

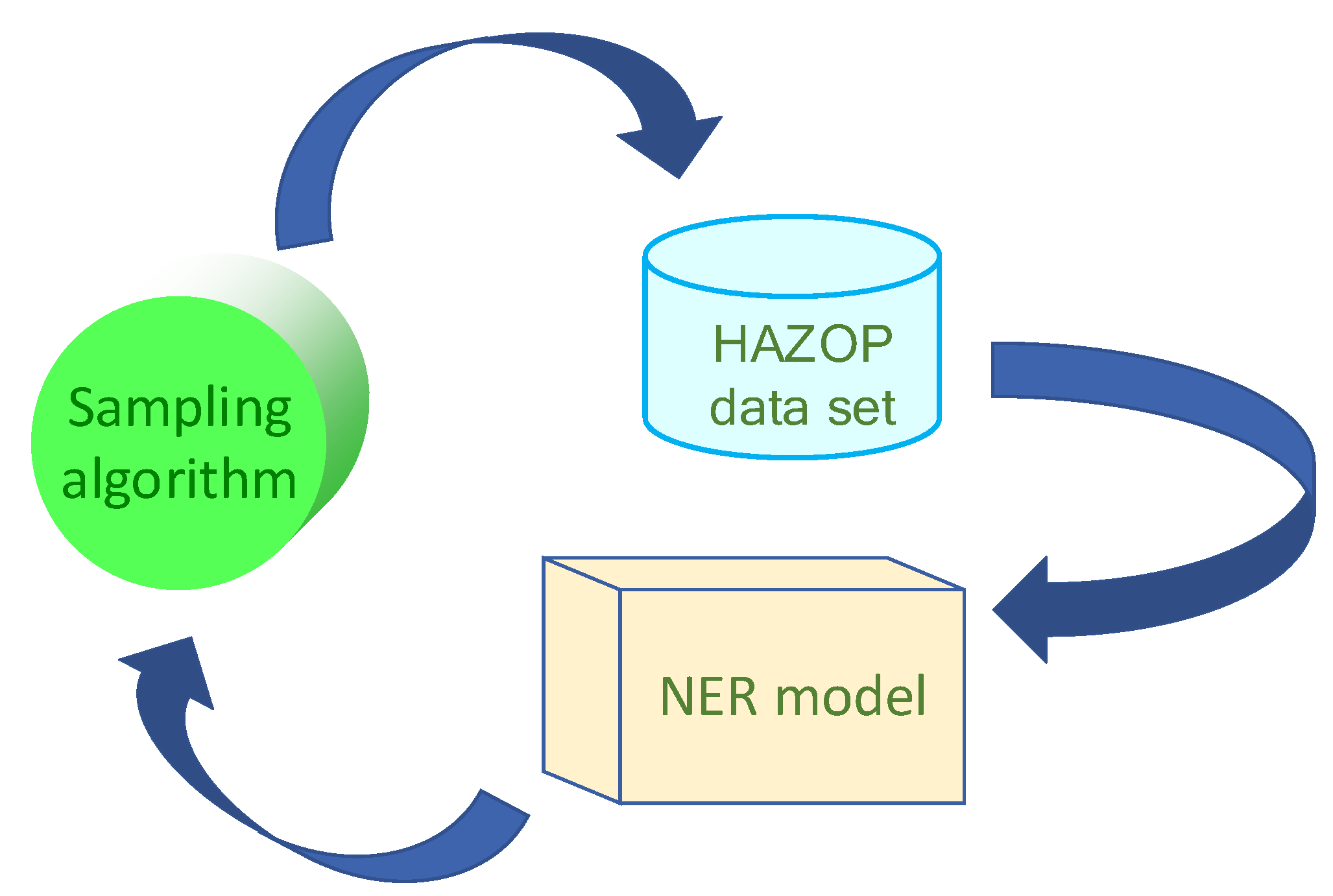

3.1. Sampling Algorithm

3.2. Training Process

1. A subset of the samples is used to train the initial model;

2. The model computes label probabilities for the remaining samples, and the sampling algorithm uses these probabilities to select high-quality samples from the data set;

3. The selected samples are added to the training set;

4. Steps 1–3 are repeated until the training set reaches the specified size;

5. The final training set is used to retrain the model.
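The training process above can be sketched as a generic pool-based active learning loop. This is a minimal illustration under stated assumptions, not the authors' implementation: the VTE, HCE, and ALC scoring rules are not reproduced here, so a plain mean token entropy is swapped in as a stand-in sampling criterion. The names `active_learning_loop`, `mean_token_entropy`, `train`, and `predict_proba` are hypothetical, and samples are assumed to receive their labels when they are moved into the training set.

```python
import math
from typing import Any, Callable, List, Sequence, Tuple

# One label-probability distribution per token of a sample (assumed format).
TokenProbs = Sequence[Sequence[float]]


def mean_token_entropy(token_probs: TokenProbs) -> float:
    """Stand-in sampling score: average Shannon entropy per token.

    Higher values mean the model is less certain about the sample.
    This is NOT one of the paper's VTE/HCE/ALC algorithms.
    """
    if not token_probs:
        return 0.0
    total = 0.0
    for dist in token_probs:
        total -= sum(p * math.log(p) for p in dist if p > 0.0)
    return total / len(token_probs)


def active_learning_loop(
    pool: Sequence[Any],
    seed_size: int,
    batch_size: int,
    target_size: int,
    train: Callable[[List[Any]], Any],
    predict_proba: Callable[[Any, Any], TokenProbs],
    score: Callable[[TokenProbs], float] = mean_token_entropy,
) -> Tuple[Any, List[Any]]:
    """Grow a training set from `pool` until it holds `target_size` samples."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")

    labeled = list(pool[:seed_size])    # samples used for the initial model
    unlabeled = list(pool[seed_size:])  # remaining candidate samples

    while len(labeled) < target_size and unlabeled:
        # Step 1: train (or refresh) the model on the current training set.
        model = train(labeled)
        # Step 2: score the remaining samples from their label probabilities
        # and rank them, most informative first.
        ranked = sorted(
            unlabeled,
            key=lambda s: score(predict_proba(model, s)),
            reverse=True,
        )
        # Step 3: move the top-ranked samples into the training set.
        take = min(batch_size, target_size - len(labeled))
        labeled.extend(ranked[:take])
        unlabeled = ranked[take:]
        # Step 4: the loop condition repeats steps 1-3 until the target size.

    # Step 5: retrain on the final training set.
    return train(labeled), labeled
```

Passing `train`, `predict_proba`, and `score` as callables keeps the loop independent of the tagger, so the same sketch could wrap a CRF, IDCNN-CRF, or BiLSTM-CRF model, and a different scoring rule can be substituted through `score`.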

4. Experiment

4.1. Experimental Data

4.2. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Text | The Level Controller Has Failed | | | | | | |
|---|---|---|---|---|---|---|---|
| Tagging | B-EQU | I-EQU | I-EQU | I-EQU | I-EQU | O | O |
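The tag sequence in the table above can be decoded into entity spans as follows. This is a minimal sketch that assumes the usual BIO convention (`B-` opens an entity, `I-` continues it, `O` is outside any entity); the function name `bio_to_spans` is hypothetical, and the lenient handling of a stray `I-` tag is an illustrative choice rather than something the source specifies.

```python
from typing import List, Optional, Tuple


def bio_to_spans(tags: List[str]) -> List[Tuple[str, int, int]]:
    """Convert BIO tags into (entity_type, start, end) spans, end exclusive."""
    spans: List[Tuple[str, int, int]] = []
    start: Optional[int] = None
    label: Optional[str] = None

    for i, tag in enumerate(tags):
        continues = tag.startswith("I-") and tag[2:] == label
        if continues:
            continue  # still inside the current entity
        # Any other tag closes the entity that is currently open.
        if label is not None:
            spans.append((label, start, i))
            start, label = None, None
        # "B-X" opens a new entity; a stray "I-X" is treated the same way.
        if tag.startswith("B-") or tag.startswith("I-"):
            start, label = i, tag[2:]

    if label is not None:  # entity running to the end of the sequence
        spans.append((label, start, len(tags)))
    return spans


# The tagging row from the table: five equipment (EQU) positions, then two O.
example_tags = ["B-EQU", "I-EQU", "I-EQU", "I-EQU", "I-EQU", "O", "O"]
example_spans = bio_to_spans(example_tags)  # [("EQU", 0, 5)]
```

A flat BIO sequence like this assigns exactly one tag per position, so it can encode only one reading of a nested expression such as “recycle gas compressor”.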

| True Value \ Predicted Value | Positive | Negative |
|---|---|---|
| Positive | True Positive (TP) | False Negative (FN) |
| Negative | False Positive (FP) | True Negative (TN) |

| Model | P (%) | R (%) | F1 (%) |
|---|---|---|---|
| VTE-BiLSTM-CRF | 94.03 | 95.23 | 94.62 |
| HCE-BiLSTM-CRF | 94.30 | 94.57 | 94.43 |
| ALC-BiLSTM-CRF | 93.66 | 93.62 | 93.64 |
| BiLSTM-CRF | 87.58 | 90.10 | 88.82 |

| Model | P (%) | R (%) | F1 (%) |
|---|---|---|---|
| VTE-IDCNN-CRF | 93.26 | 93.52 | 93.39 |
| HCE-IDCNN-CRF | 93.52 | 93.38 | 93.45 |
| ALC-IDCNN-CRF | 93.72 | 93.05 | 93.38 |
| IDCNN-CRF | 89.04 | 90.88 | 89.95 |

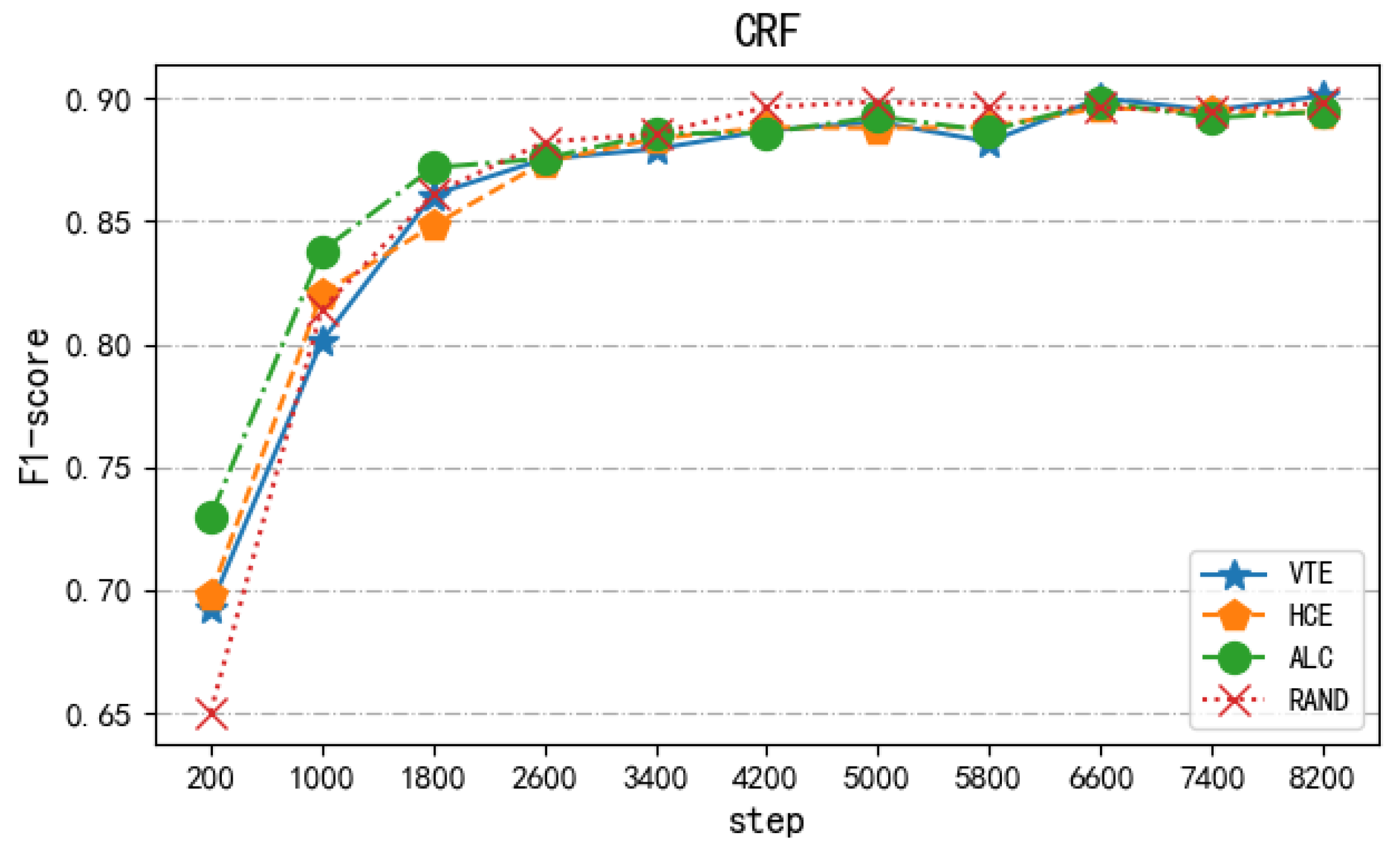

| Model | P (%) | R (%) | F1 (%) |
|---|---|---|---|
| VTE-CRF | 89.43 | 90.60 | 90.01 |
| HCE-CRF | 89.45 | 89.79 | 89.62 |
| ALC-CRF | 88.31 | 90.64 | 89.46 |
| CRF | 69.69 | 73.39 | 71.49 |

| Model | P (%) | R (%) | F1 (%) |
|---|---|---|---|
| BiLSTM-CRF (base) | 87.58 | 90.10 | 88.82 |
| CNN-BiLSTM-CRF | 90.79 | 91.59 | 91.19 |
| IDCNN-BiLSTM-CRF | 92.84 | 90.67 | 91.74 |
| BiLSTM-Attention-CRF | 88.64 | 86.55 | 87.58 |
| BERT-BiLSTM-CRF | 93.21 | 93.41 | 93.31 |
| VTE-BiLSTM-CRF | 94.03 | 95.23 | 94.62 |
| HCE-BiLSTM-CRF | 94.30 | 94.57 | 94.43 |
| ALC-BiLSTM-CRF | 93.66 | 93.62 | 93.64 |
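To make the comparison across the result tables concrete, the short sketch below computes the absolute F1 gain, in percentage points, of each sampling algorithm over the same model trained without active learning. The F1 values are copied from the tables; the names `f1_scores` and `f1_gains` are hypothetical.

```python
from typing import Dict

# F1 (%) values taken from the result tables; "base" is the model
# trained without a sampling algorithm.
f1_scores: Dict[str, Dict[str, float]] = {
    "CRF":        {"base": 71.49, "VTE": 90.01, "HCE": 89.62, "ALC": 89.46},
    "IDCNN-CRF":  {"base": 89.95, "VTE": 93.39, "HCE": 93.45, "ALC": 93.38},
    "BiLSTM-CRF": {"base": 88.82, "VTE": 94.62, "HCE": 94.43, "ALC": 93.64},
}


def f1_gains(scores: Dict[str, Dict[str, float]]) -> Dict[str, Dict[str, float]]:
    """Absolute F1 gain (percentage points) of each algorithm over its base."""
    return {
        model: {
            algorithm: round(value - row["base"], 2)
            for algorithm, value in row.items()
            if algorithm != "base"
        }
        for model, row in scores.items()
    }


gains = f1_gains(f1_scores)
for model, row in gains.items():
    print(model, row)
```

On these figures the gain is largest for the plain CRF model (about 18 points) and smallest for IDCNN-CRF (about 3.4 to 3.5 points).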

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Zhang, B.; Gao, D. Text Mining of Hazard and Operability Analysis Reports Based on Active Learning. Processes 2021, 9, 1178. https://doi.org/10.3390/pr9071178
