1. Introduction

In chemical engineering, the use of models is indispensable to describe, design and optimize processes—both on a lab and on production scales, both with academic and with industrial backgrounds. However, each model prediction is only as good as the model, which means that the reliability of models to describe and predict the outcome of real-world processes is crucial.

A precise model gives a good understanding of the underlying phenomenon and is the basis for reliable simulation and optimization results. These models often depend on a variety of parameters that need to be estimated from measured data. Therefore, experiments were performed and measurements were taken in order to obtain a good estimate. Ideally, these experiments should be as informative as possible, such that the estimate of the model parameters is most accurate within the measurement errors.

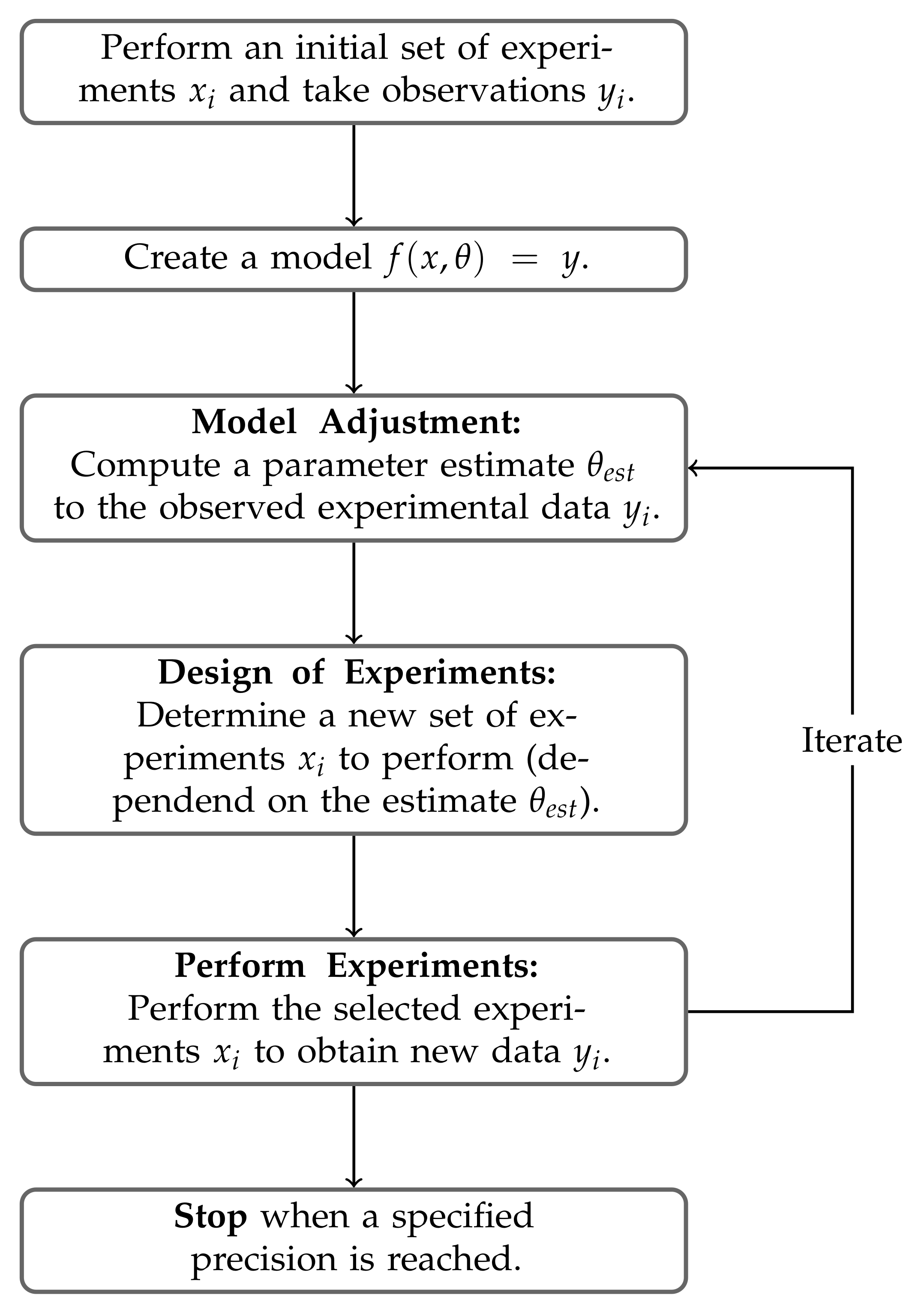

A common approach is an iterative workflow, which is presented in

Figure 1. One begins by performing an initial set of experiments and measures corresponding results. With this data, a model

that describes the experiments was constructed. Additionally, an estimate

of the unknown parameters

was computed (

model adjustment). The next step was to find a new set of experiments to perform. Finding appropriate experiments is the subject of the

model-based design of experiments (MbDoE), also called

optimal experimental design (OED). The new experiments were performed and new data were collected. The process of adjusting the model parameters, determining experiments and performing these experiments is iterated until one is satisfied with the parameter estimate

. A detailed overview on this iterative model identification workflow and each individual step can be found in [

1] or [

2,

3,

4].

In the model-based design of experiments, the current information of the model is used in order to find optimal experiments. Here, optimal means finding experiments that give the most information on the unknown parameters

. Equivalently, the error (variance) of the estimate

is minimized [

1,

5,

6,

7]. MbDoE has been considered for a variety of minimization criteria of the variance [

1] as well as for a variety of model types. These include non-linear models, multi-response models and models with distributional assumptions on the observations—see [

1]. More recently dynamical models

—possibly given via differential equations

—have also gained more traction in MbDoE—see [

2,

8,

9,

10]. Duarte et al. proposed a formulation for implicit models

in [

11].

The computed optimal designs will depend on the information currently available from the model. In particular, they depend on the current estimate

of the unknown parameters

. Such designs are called

locally optimal. In contrast, one can also compute

robust optimal designs that take the uncertainty of the current estimate

into account. Such robust designs are often used in the initial iteration, when no estimate of the parameters is given or the estimate is assumed to contain large errors. In later iterations of the workflow, the estimate will be more precise and the locally optimal design is more reliable. In [

8,

9,

12,

13] different approaches to robust experimental design are presented. Designs that are robust in the parameters, however, are not the subject of this paper; we only consider locally optimal designs.

The computation of locally optimal designs proves to be very challenging, as one has to consider integer variables, how many experiments to perform as well as continuous variables and which experiments to perform. One can therefore solve the optimal design problem for continuous designs, which do not depend on the number of experiments [

1,

5,

14,

15,

16] and instead assign each experiment

a weight

. The design points with strictly positive weights

then indicates which experiments to perform, whereas the size of the weights indicates how often an experiment should be performed.

Classical algorithms to compute optimal continuous designs include the vector direction method by Wynn and Federov [

1,

17], a vector exchange method by Boehning [

18] and a multiplicative algorithm by Tritterington [

19]. These methods are based on the heralded Kiefer-Wolfowitz Equivalence Theorem [

1,

20] and compute locally optimal continuous designs.

Recently, a variety of new algorithms were developed in order to compute optimal continuous designs. Prominent examples include the cocktail algorithm [

21], an algorithm by Yang, Biedermann and Tang [

22], an adaptive grid algorithm by Duarte [

23] or the random exchange algorithm [

24]. These algorithms also rely on the Kiefer-Wolfowitz Equivalence Theorem. Each algorithm requires the repeated solution of the optimization problem

where

denotes the directional derivative [

22] of the design criterion and is in general a non-linear function.

Thus, finding global solutions to this problem is very challenging. One approach is to replace the design space

X with a finite grid

and solve the optimization problem with brute-force [

21,

22,

24]. This works very well for ‘small’ input spaces and models. However, in applications one often finds high-dimensional models, which are additionally time expensive to evaluate. In particular, dynamical models fall under this category. Here, control or input functions need to be parameterized in order to be representable in the OED setting. Depending on their detail, these parameterizations can result in a large amount of input variables.

For such high-dimensional models, a grid-based approach is not viable. To compute the values one has to evaluate the Fisher information matrix at every design point x of the grid . The Fisher information matrix depends on the Jacobian of the model functions f and is thus time-expensive to compute. The time for evaluating these matrices on a fine, high-dimensional grid scales exponentially with the dimension and, therefore, will eventually be computationally too expensive. Thus, a different approach is needed for these models.

One should note that solving the non-linear program (NLP) (

1) with a global solver in every iteration is not an alternative. This also requires many evaluations, and finding global solutions of arbitrary NLPs is quite difficult or time-consuming in general.

Other state-of-the-art approaches to find optimal experimental designs include reformulations of the optimization problem as a semidefinite program (SDP) [

23,

25,

26] or a second-order cone program (SOCP) [

27]. These programs can be solved very efficiently and are not based on an iterative algorithm. Additionally, these problems are convex and, thus, every local solution is already globally optimal. However, the formulation as SDP (or SOCP) is again based on a grid

or special structure of the model functions and the Fisher information matrix

has to be evaluated at all grid points. Also, for such a grid-like discretization, an algorithm for maximum matrix determinant computations—the

MaxVol [

28]—has been used to compute D-optimal designs efficiently.

In contrast to continuous designs, some advances have also been made in the computation of exact optimal designs. These designs do not associate weights to the design points but instead assign each design point

with an integer number

of repetitions. In recent years, methods based on mixed-integer programming have been presented in [

29,

30,

31] to compute such designs.

In the following we motivate, introduce and discuss a novel, computationally efficient algorithm to compute locally optimal continuous designs, which can be applied to high-dimensional models. This algorithm adaptively selects points to evaluate via an approximation of the directional derivative . Thus, the algorithm does not require evaluations of the model f on a fine grid. Using two examples from chemical engineering, we illustrate that the novel algorithm can drastically reduce the runtime of the computations and relies on significantly less evaluations of the Jacobian . Hence, the new algorithm can be applied to models that were previously considered too complex to perform model-based design of experiment strategies.

2. Theoretical Background

In this section, we give a brief overview of the existing theory regarding model-based design of experiments. In particular, we introduce the Kiefer-Wolfowitz Equivalence Theorem, which is the foundation of most state-of-the-art design of experiment algorithms.

Afterwards, we introduce Gaussian process regression (GPR), which is a machine learning method used to approximate functions. We give an overview on the theory and comment on two implementation details.

2.1. Design of Experiments

In the design of experiments, one considers a model

f mapping inputs from a design space

X to outputs in

Y. The model depends on parameters

and is given by

Let the spaces and Y be sub-spaces of and , respectively.

A

continuous design is a set of tuples

for

, where the

are design points and the weights

are positive and sum to

. A continuous design is often denoted as

The design points with are called the support points of the design. The notion of a continuous design can be generalized by considering design measures , which correspond to a probability measure on the design space X.

For a design point

x the

Fisher information matrix is defined as

where

denotes the Jacobian matrix of

f with respect to

. For a continuous design

the

(normalized) Fisher information matrix is given as weighted sum of the Fisher information matrices

,

Note, that the Jacobian matrix

depends on the unknown parameters

and therefore so does the Fisher information matrix. For locally optimal designs the best current estimate

of the parameters

is inserted—as stated in

Section 1. Such an estimate can be obtained via a least squares estimator. For observations

at the design points

this estimator is given as

such that the estimator minimizes the squared error of the model [

1]. In general this is an unconstrained optimization problem over the model dependent parameter space

.

In the design of experiments, one aims at finding a design , which minimizes a measurement function of the Fisher information matrix, the design criterion. Commonly used design criteria include the

The optimal experimental design (OED) problem is then given as

This problem is also denoted by

where

corresponds to the space of all design measures on

X.

Under mild assumptions on the design criterion

and the design space

X optimality conditions for the Optimization Problem (

11) are known. We refer to [

1] for these assumptions. In particular, the directional derivative

of the design criterion

is required. These derivatives are known for the A-, E- and (log-)D-Criterion and given by

, where is the number of unknown model parameters.

.

, where is the algebraic multiplicity of , the are positive factors summing to unity and the are normalized, linear independent eigenvectors of .

The following theorems give global optimality conditions and can be found in [

1,

5].

Theorem 1. The following holds:

An optimal design exists, with at most support points.

The set of optimal designs is convex.

is necessary and sufficient for the design to be (globally) optimal.

For almost every support point of we have .

Typically, the optimality conditions presented in Theorem 1 are known as the

Equivalence Theorems. These present a reformulation of the results from Theorem 1 and are contributed to [

20].

Theorem 2. (Kiefer-Wolfowitz Equivalence Theorem). The following optimization problems are equivalent:

.

Remark 1. Performing the experiments of a design ξ one obtains an estimate of the model parameters θ. With this estimate a prediction on the model outputs can be given. The variance of this prediction is computed asFor details on the computations we refer to [1], Chapter 2.3. We observe that the directional derivative of the D-Criterion is given by . The Equivalence Theorem for the D-Criterion thus states that an optimal design minimizes the maximum variance of the prediction . As the variance is an indication on the error in the prediction, we also (heuristically) say that the design minimizes the maximum prediction error.

Based on the Kiefer–Wolfowitz Equivalence Theorem 2, a variety of algorithms have been derived to compute optimal continuous designs. Here two such algorithms are introduced beginning with the vertex direction method (VDM), which is sometimes also called Federov-Wynn algorithm.

From Theorem 1 it follows that the support of an optimal design coincides with the minima of the function . Thus the minimum of is of particular interest. These considerations result in an iterative scheme where

the minimum for a given design is computed and then

the point is added to the support of .

Recall from Remark 1, that the directional derivative of the D-Criterion is correlated to the prediction error for a design . For the D-Criterion, one thus selects the design point with the largest variance in the prediction in every iteration. This point is then added to the support in order to improve the design.

Adding a support point to the design requires a redistribution of the weights. In the VDM, weights are uniformly shifted from all previous support points

to the new support point

. The point

is assigned the weight

and the remaining weights are set to

for an

. In [

1] (Chapter 3) and [

17] a detailed description of the algorithm with suitable choices of

and proof of convergence is given.

Next, a state-of-the-art algorithm by Yang, Biedermann and Tang introduced in [

22]—which we call the

Yang-Biedermann-Tang (YBT) algorithm—is given. This algorithm improves the distribution of the weights in each iteration and thus converges in less iterations to a (near) optimal design.

In the VDM, the weights are shifted uniformly to the new support point

, in the YBT algorithm, the weights are distributed optimally among a set of candidate points instead. For a given set

one thus considers the optimization problem

With an optimal solution

of Problem (

14) a design

is obtained by assigning each design point

the weight

.

For the YBT algorithm the optimization Problem (

14) is solved in each iteration, which results in the following iterative scheme:

For the candidate point set

solve Problem (

14) to obtain optimal weights

.

Obtain the design by combining the candidate points with the optimal weights .

Solve .

If , the design is (near) optimal.

Else, add to the candidate points to obtain and iterate.

In the YBT algorithm, two optimization problems are solved in each iteration

n. The weight optimization (see Problem (

14)) is a convex optimization problem [

14,

15]. In [

22] it is proposed to use an optimization based on Newton’s method. However, Problem (

14) can also be reformulated as a semidefinite program (SDP) or as second-order cone program (SOCP), see [

14,

23,

25,

26,

27]. Both SDPs and SOCPs can be solved very efficiently and we recommend reformulating the Weight Optimization Problem (

14).

The optimization of the directional derivative

on the other hand is not convex in general. A global optimization is therefore difficult. A typical approach to resolve this issue is to consider a finite design space

. The function

is then evaluated for every

to obtain the global minimum. Continuous design spaces

X are substituted by a fine equidistant grid

. This approach is also utilized by other state-of-the-art algorithms like Yu’s cocktail algorithm [

21] or the random exchange method [

24].

2.2. Gaussian Process Regression

Gaussian process regression (GPR) is a machine learning method used to approximate functions. In our proposed design of experiments algorithm we use this method to approximate the directional derivative

. Here we give a brief introduction into the theory, then we comment on some considerations for the implementation. For details we refer to [

32].

Consider a function

that one wants to approximate by a function

. As no information on the value

is available, the values are assumed to follow a prior distribution

. In Gaussian process regression the prior distribution is assumed to be given via a Gaussian process

G. Thus the values

are normally distributed. The process

G is defined by its mean

and its covariance kernel

. The covariance kernel hereby always is a symmetric non-negative definite function. In the following, the mean

m is set as zero—

for all

—as is usual in GPR [

32].

Next, the function

g is evaluated on a set of inputs

. The distribution

is conditioned on the tuples

with

in order to obtain a posterior distribution

. This posterior distribution then allows for more reliable predictions on the values

. The conditioned random variable is denoted by

, where we set

. The distribution of

is again a normal distribution with mean

and variance

We use the notations

and

, as well as

in the Equations (

15) and (

16). One can derive the formulas using Bayes rule, a detailed description is given in [

32]. The data pair

is called the

training data of the GPR.

The posterior expectation given in Equation (

15) is now used to approximate the unknown function

g. Therefore, the approximation is set as

. The posterior variance given in Equation (

16) on the other hand is an indicator of the quality of the approximation. Note in particular that the approximation

interpolates the given training data

, such that for every

it holds that

For the training points it holds that , also indicating that the approximation is exact.

The approximating function

also is a linear combination of the kernel functions

. Via the choice of the kernel

k one can thus assign properties to the function

. One widely used kernel is the

squared exponential kernel given by

For this kernel the approximation always is a function. Other popular kernels are given by the Matern class of kernels , which are functions in . The covariance kernel k—in contrast to the mean m—thus has a large influence on the approximation.

We now comment on two details of the implementation of Gaussian process regression. First we discuss the addition of White noise to the covariance kernel

k. Here an additional White noise term is added to the kernel to obtain

where

denotes the delta function. This function takes the values

and

if

. For the new kernel

with

the matrix

always is invertible. However, the approximation

arising from the adapted kernel

need not interpolate the data and instead allows for deviations

. The variance of these deviations is given by

.

Second, we discuss the hyperparameter selection of the kernel

k. Often the kernel

k depends on additional hyperparameters

. For the squared exponential kernel

these parameters are given as

.

In order to set appropriate values of

a loss function

is considered. The hyperparameters

are then set to minimize the loss

. Examples and discussions of loss functions can be found in [

32]. For our implementation, we refer to

Section 6.

3. The Adaptive Discretization Algorithm

In this section, we introduce our novel design of experiments algorithm. We call this adaptive discretization algorithm with Gaussian process regression the

ADA-GPR. This algorithm combines model-based design of experiments with approximation techniques via Gaussian process regression. The algorithm is an iterative method based on the Kiefer-Wolfowitz Equivalence Theorem 2. In each iteration

n we verify if the optimality condition is fulfilled. If this is the case, Theorem 2 states that the design

is optimal. Otherwise we expand the support of the design

by adding a new design point

. For the selection of

we employ an approximation of

via a GPR—introduced in

Section 2.2. In order to obtain the design

in the next iteration, we use an optimal weight distribution—as seen in the YBT algorithm.

As previously noted, the YBT algorithm is typically applied to a fine grid in the continuous design space X. This adjustment is made, as finding a global minimum of the directional derivative is challenging in general. For each point of the design space we have to evaluate the Jacobian matrix in order to compute the Fisher information matrix . We thus have to pre-compute the Fisher information matrices at every point for grid–based design of experiment algorithms.

In order to obtain reliable designs the grid has to be a fine grid in the continuous design space X. The number of points increases exponentially in the dimension and so then does the pre-computational time required to evaluate the Jacobian matrices. Grid-based methods—like the YBT algorithm—are therefore problematic when we have a high-dimensional design space and can lead to very long runtimes. For particular challenging models, they may not be viable at all.

The aim of our novel algorithm is to reduce the evaluations of the Fisher information matrices and thereby reduce the computational time for high-dimensional models.

We observe, that in the YBT algorithm solely the Fisher information matrices of the candidate points are used to compute optimal weights . The matrices at the remaining points are only required to find the minimum via a brute-force approach.

In order to reduce the number of evaluations, we thus propose to only evaluate the Jacobian matrices of the candidate points . With the Jacobians at these points we can compute exact weights for the candidate points. The directional derivative however is approximated in each iteration of the algorithm. For the approximation we use Gaussian process regression. As training data for the approximation, we also use the candidate points and the directional derivative at these points. As we have evaluated the Jacobians at these points, we can compute the directional derivative via matrix multiplication.

We briefly discuss why GPR is a viable choice for the approximation of

. In the YBT algorithm, we iteratively increase the number of candidate points

. Thus, we want an approximation that can be computed for an arbitrary amount of evaluations and that has a consistent feature set. As stated in

Section 2.2, we can compute a GPR with arbitrary training points and we can control the features of the approximation via the choice of the kernel

k. Additionally, we not only obtain an approximation via GPR, but also the variance

. The variance gives information on the quality of the approximation, which can also be useful in our considerations.

In

Section 2.2 we have discussed, that

is the appropriate choice to approximate the directional derivative

. This suggests to select the upcoming candidate points via

However, we also want to incorporate the uncertainty of the approximation into our selection. Inspired by Bayesian optimization [

33,

34,

35] we consider an approach similar to the Upper-Confidence Bounds (UCB). Here we additionally subtract the variance from the expectation for our point selection. This results in the following point

We call the function

used to select the next candidate point

the

acquisition function of the algorithm. This denotation is inspired by Bayesian optimization, too (see [

35]).

The two terms and hereby each represent a single objective. Optimization of the expectation results in points that we predict to be minimizers of . These points then help improve our design and thereby the objective value. Optimization of the variance on the other hand leads to points where the current approximation may have large errors. Evaluating at those points improves the approximation in the following iterations. By considering the sum of both terms we want to balance these two goals.

We make one last adjustment to the point acquisition. We want to avoid having a bad approximation that does not correctly represent minima of the directional derivative. Thus—if the directional derivative at the new candidate point

is not negative and

—we select the upcoming point only to improve the approximation. This is achieved by selecting the point

according to

The uncertainty in the approximation is represented by the variance and evaluating at a point of high variance therefore increases the accuracy of the approximation. We do not use the variance maximization in successive iterations. This means that the point

is again selected via the acquisition function presented in Equation (

23).

The proposed algorithm is given in Algorithm 1. We call this adaptive algorithm the ADA-GPR.

| Algorithm 1 Adaptive Discretization Algorithm with Gaussian Process Regression (ADA-GPR) |

Select an arbitrary initial candidate point set . Evaluate the Jacobian matrices at the candidate points and assemble these in the set . Set . Iterate over : Compute optimal weights for the candidate points (Optimization Problem ( 14)) and combine the candidate points and the weights to obtain the design . Compute the directional derivative at the candidate points via the values in . Gather these in the set . Compute a Gaussian process regression for the training data . Solve . Compute the Jacobian matrix and the directional derivative at the point . Update the sets and . If : Set . Else if :

|

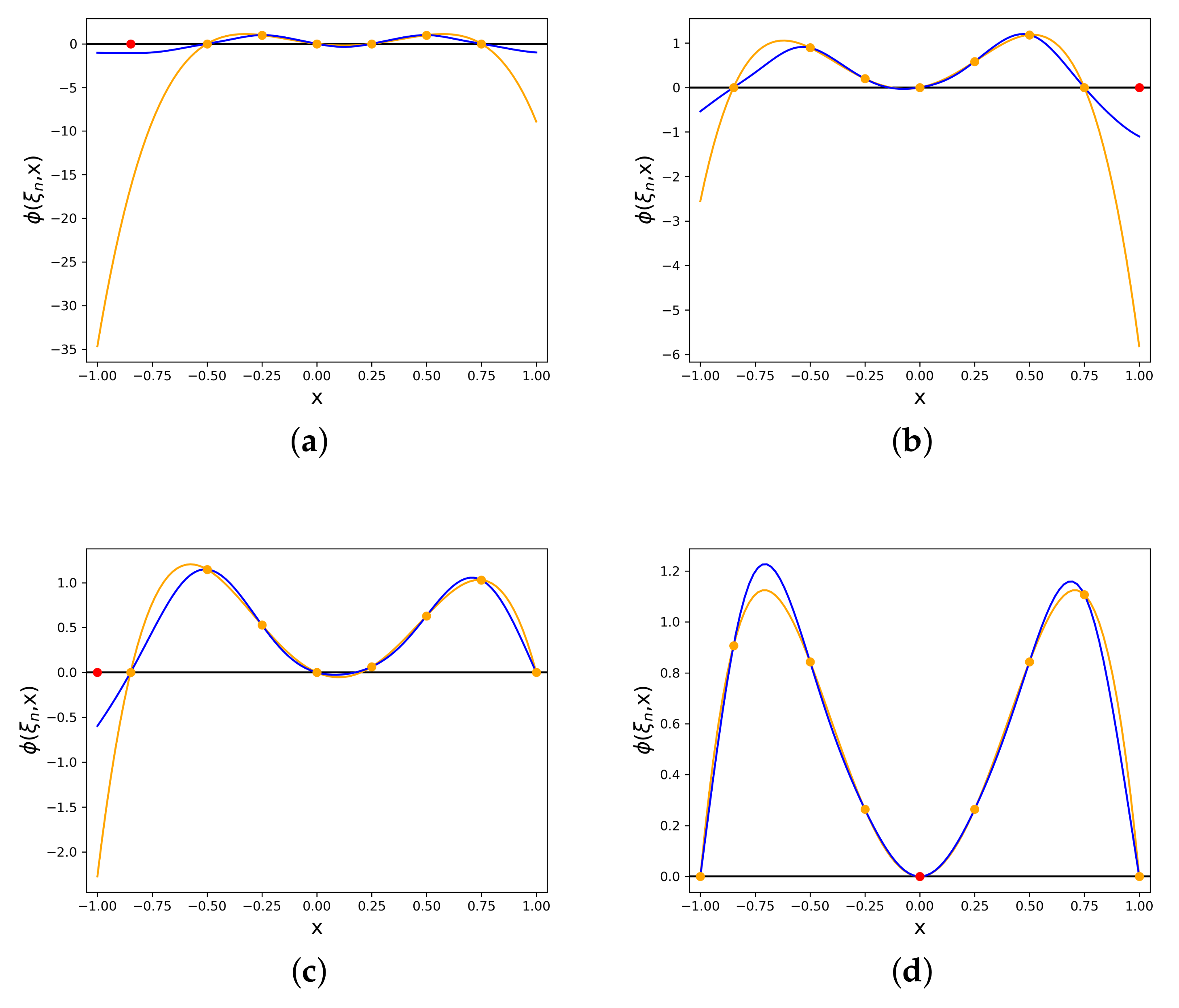

In

Figure 2, the adaptive point acquisition in the first 4 iterations of the ADA-GPR for a mathematical toy example

is illustrated. We can observe how the algorithm selects the next candidate point

in these illustrations. We note that the point selected via the acquisition function can differ from the point we select via the exact values of

.

To end this section, we discuss the quality of the design we obtain with the novel algorithm. For the YBT algorithm as well as the VDM, it is shown in [

1,

22] that the objective values converge to the optimal objective value with increasing number of iterations. We also have a stopping criterion that gives an error bound on the computed design. We refer to [

1] for details. As we are using an approximation in the ADA-GPR, we cannot derive such results. The maximum error in our approximation will always present a bound for the error in the objective values of the design. However, we highlight once more that the aim of the ADA-GPR is to efficiently compute an approximation of an optimal design—where efficiency refers to less evaluations of the Jacobian matrix

. For models that we cannot evaluate on a fine grid in the design space the ADA-GPR then presents a viable option to obtain designs in the continuous design space

X.

4. Results

We now provide two examples from chemical engineering to illustrate the performance of the new ADA-GPR (

Section 3) compared to known the VDM and YBT algorithm (both

Section 2.1). In this section we compute optimal designs with respect to the log-D-Criterion. The corresponding optimization problem (Problem (

11)) we solve with each algorithm is given by

In our examples, the design space X is given as a range in . The resulting bounds on x are given in each example specifically.

We then compare the results and the runtimes of the different methods. The models we present in this section are evaluated in CHEMASIM and CHEMADIS, the BASF inhouse simulators (Version 6.6 [

36] BASF SE, Ludwigshafen, Germany), using the standard settings.

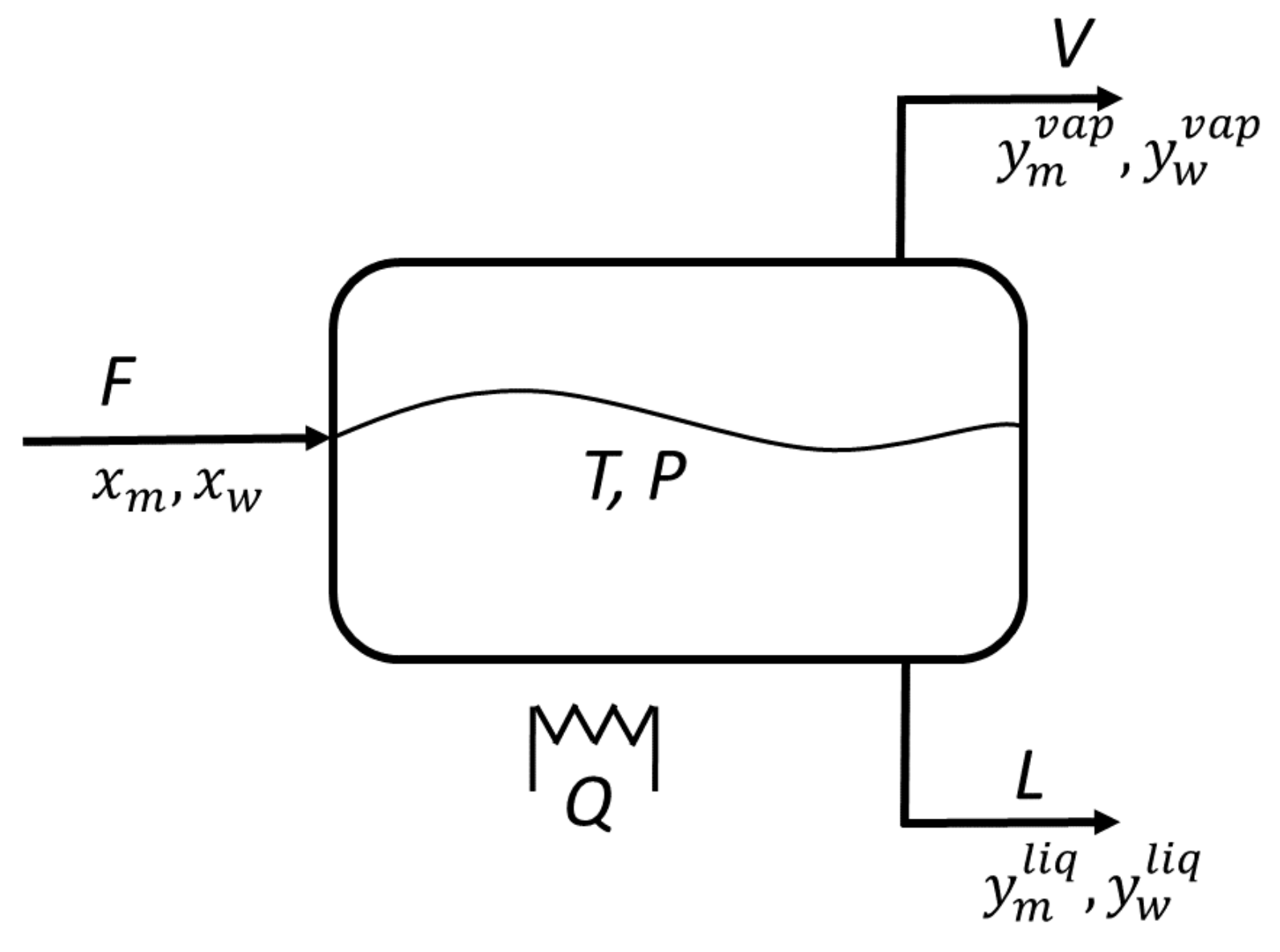

The first example we consider is a flash. A liquid mixture consisting of two components enters the flash, where the mixture is heated and partially evaporates. One obtains an vapor liquid equilibrium at temperature

T and pressure

P. In

Figure 3, the flash unit with input and output streams is sketched. The relations in the flash unit are governed by the

MESH equations (see

Appendix A and [

37]).

Initially, we consider a mixture composed of methanol and water and compute optimal designs for this input feed. In the second step, we replace the water component with acetone and repeat the computations. The model, and in particular, the

MESH equations remain the same, however, parameters of the replaced component can vary. Details on the parameters for vapor pressure are given in the

Appendix A.

In the following, we denote the molar concentrations of the input stream by and , those of the liquid output stream by and and those of the vapor output stream by and . Additionally, we introduce the molar flow rates F of the input stream, V of the vapor output stream and L of the liquid output stream.

We consider the following design of experiments setup:

two inputs, P and . Here P denotes the pressure in the flash unit that ranges from 0.5–5 bar. The variable gives the molar concentration of methanol in the liquid input stream. This concentration is between 0 and 1 /.

two outputs, T and . The temperature in degree Celsius at equilibrium T is measured as well as the molar concentration / of the evaporated methanol at equilibrium.

four model parameters

and

. These are parameters of the activity coefficients

and

in the

MESH equations (

Appendix A)—the so-called non-random two-liquid (NRTL) parameters.

We fix the flow rates

/h and

/h. Given the inputs

P and

we can then solve the system of equations to obtain the values of

T and

. We represent this via the model function

f as

with

.

For the VDM and the YBT algorithm we need to replace the continuous design space with a grid. Therefore, we set

This set corresponds to a grid with 9191 design points.

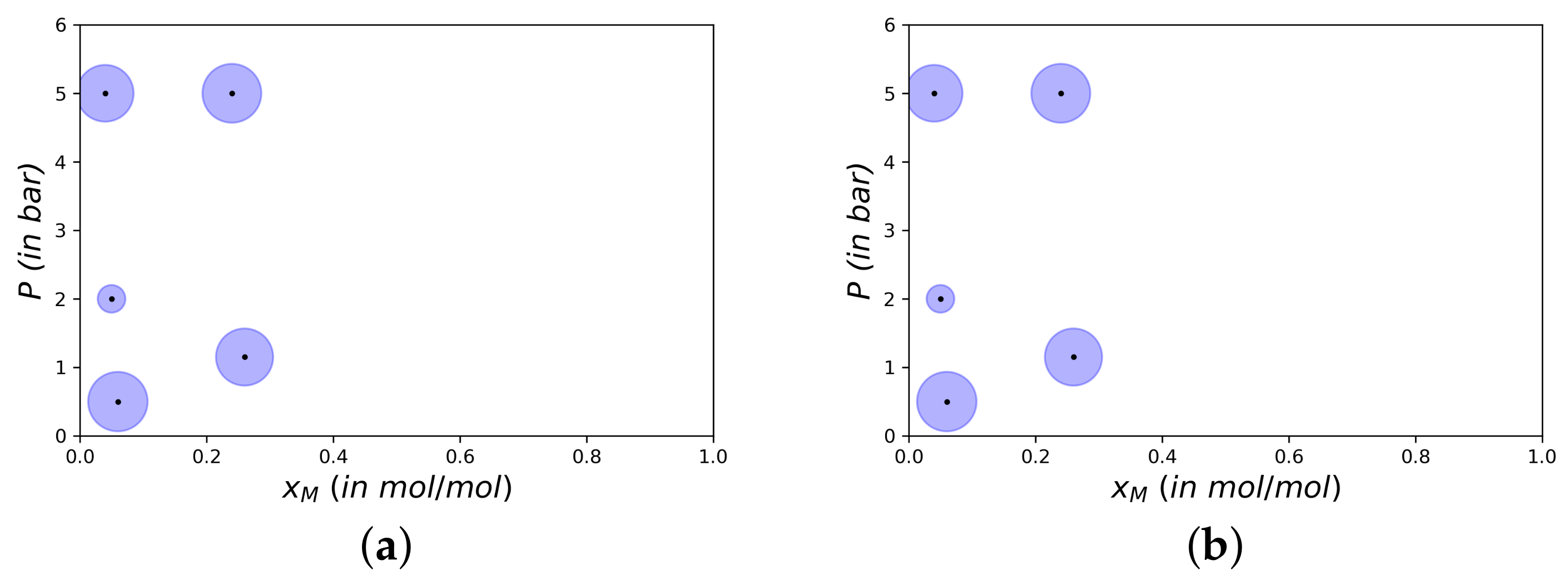

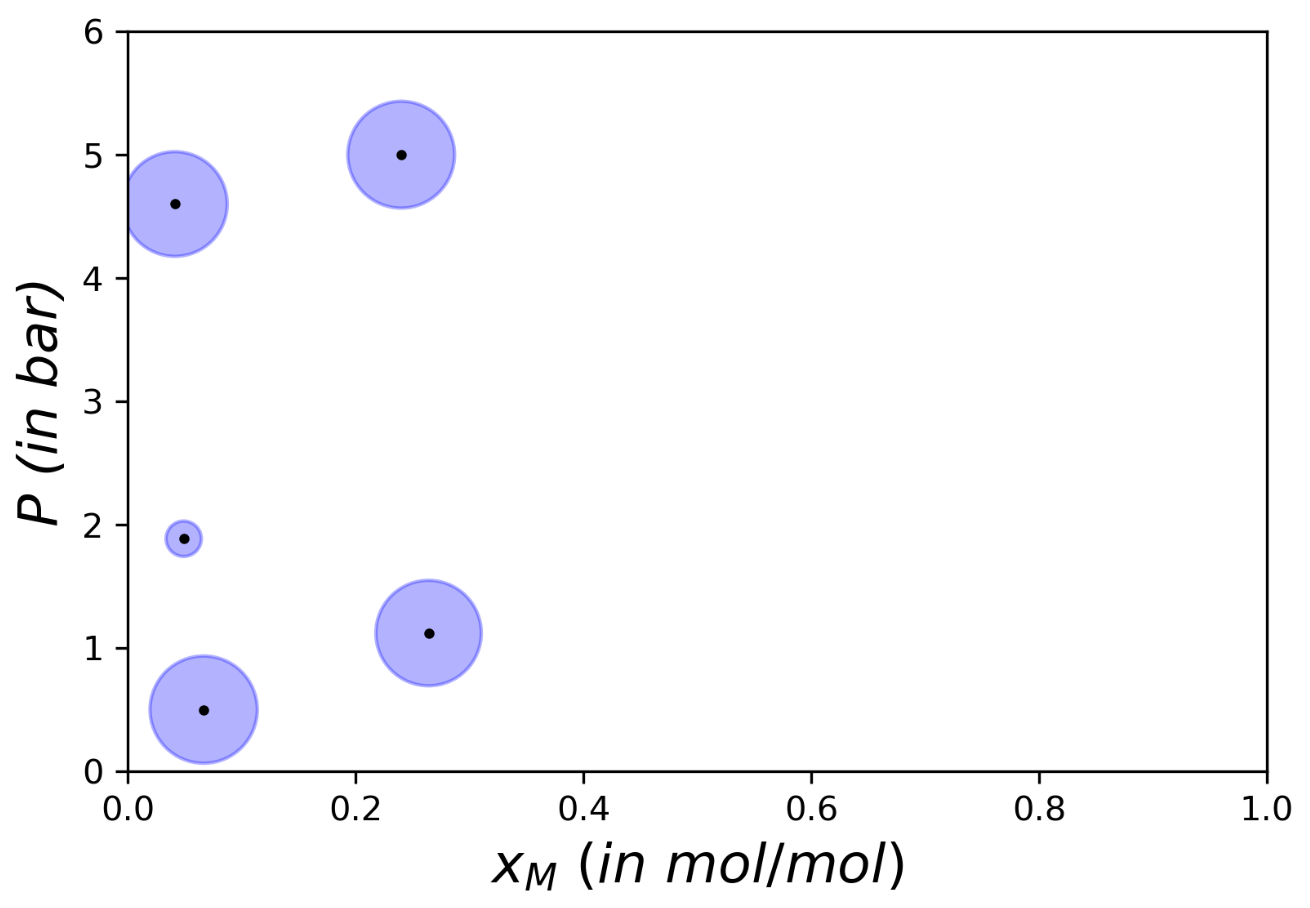

The designs

and

which the VDM and YBT algorithm compute for a flash with methanol–water input feed are given in

Table 1 and

Table 2 and in

Figure 4. We note that only design points with a weight larger than 0.001 are given—in particular for the VDM, where the weights of the initial points only slowly converge to 0.

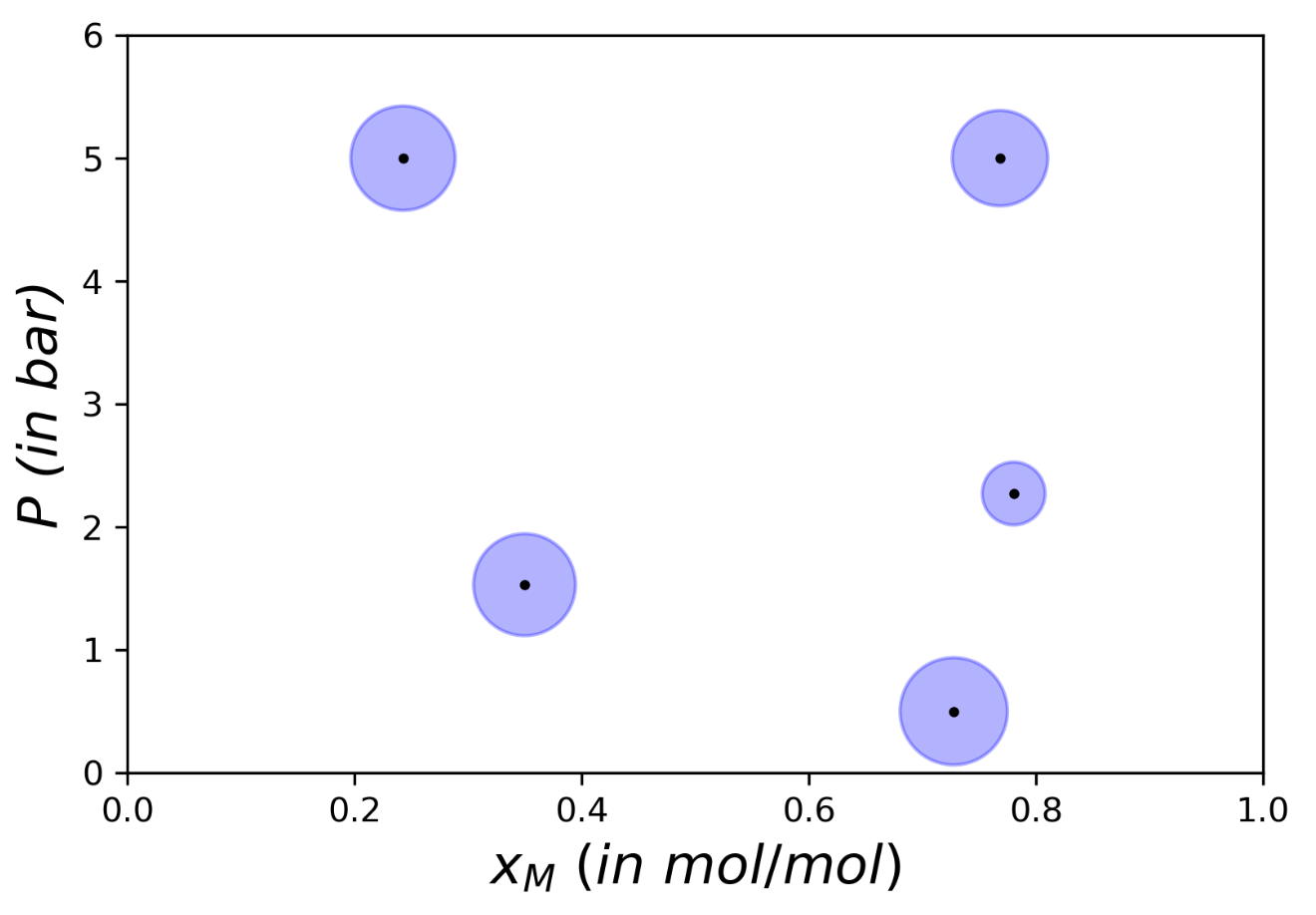

The ADA-GPR, on the other hand, is initialized with 50 starting candidate points. We obtain the design

given in

Table 3 and in

Figure 5. Here, we also only give points with a weight larger than 0.001. Additionally, we have clustered some of the design points. We refer to

Section 6 for details on the clustering.

In

Table 4 the objective value, the total runtime, the number of iterations and the number of evaluated Jacobian matrices

is listed. We recall, that we aim at maximizing the objective value in Optimization Problem (

25) and a larger objective value is therefore more favorable. A detailed breakdown of the runtime is given in

Table 5. Here, we differentiate between the time contributed to evaluations of the Jacobians

, the optimization of the weights

, the optimization of the hyperparameters

of the GPR and the optimization of the point acquisition function.

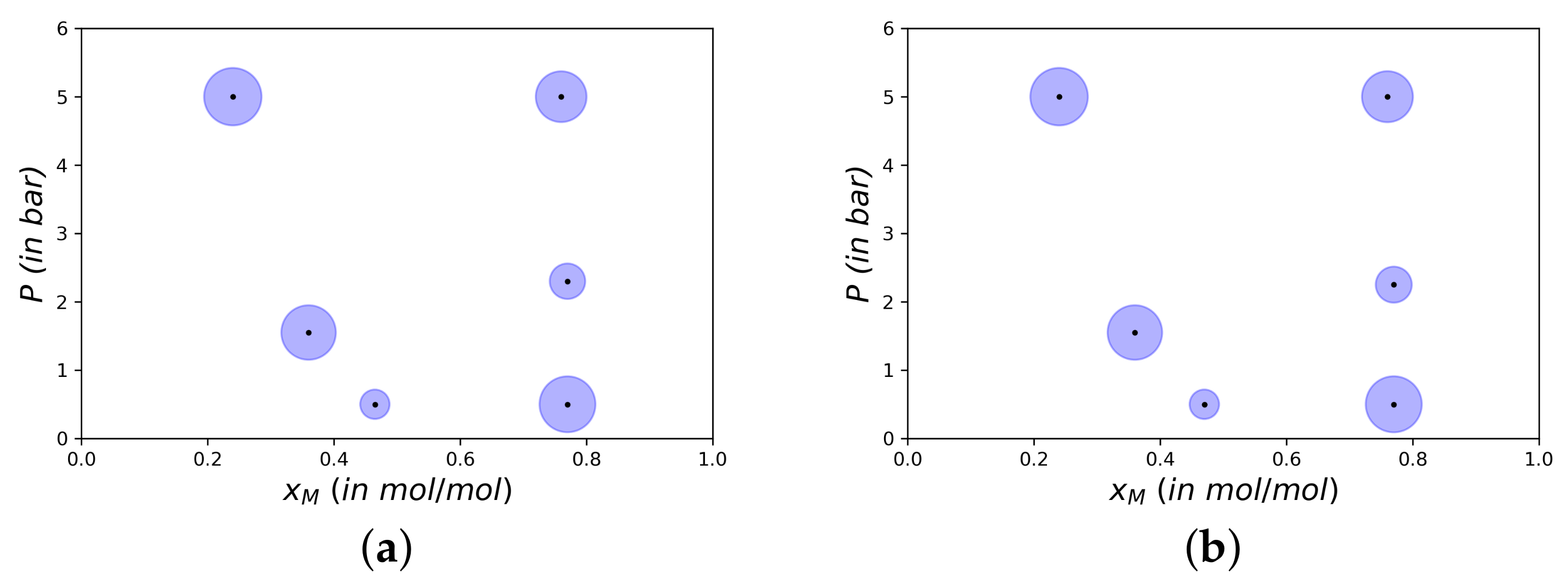

Next, we replace the mixture of water and methanol by a new mixture consisting of methanol and acetone. The designs

and

—computed by the VDM, YBT algorithm and the ADA-GPR respectively—are given in

Table 6,

Table 7 and

Table 8 as well as in

Figure 6 and

Figure 7. We initialize the algorithms with the same number of points as for the water-methanol mixture. A detailed breakdown of the objective values, number of iterations and the runtime is given in

Table 9 and

Table 10.

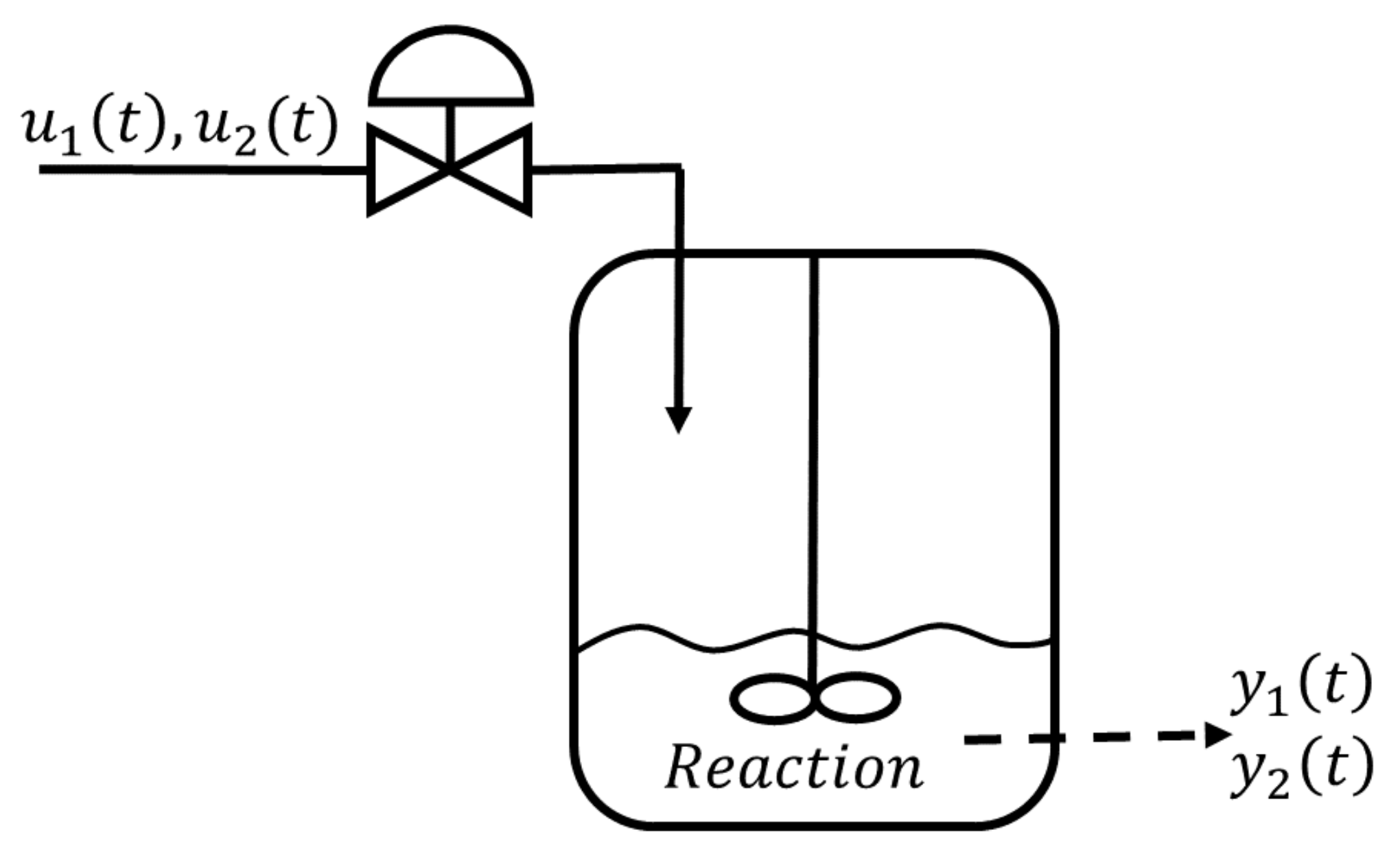

The second example we consider is the fermentation of baker’s yeast. This model is taken from [

8,

13], where a description of the model is given and OED results for uncertain model parameters

are presented.

Yeast and a substrate are put into a reactor and the yeast ferments. Thus the substrate concentration

decreases over time, while the biomass concentration

increases. During this process we add additional substrate into the reactor via an input feed. This feed is governed by two (time-dependent) controls

and

. Here,

denotes the dilution factor while

denotes the substrate concentration of the input feed. A depiction of the setup is given in

Figure 8.

Mathematically, the reaction is governed by the system of equations

The time t is given in hours h. We solve the differential equations inside the CHEMADIS Software by a 4th order Runge-Kutta method with end time .

In order to obtain a design of experiments setup we parameterize the dynamical system and replace the time-dependent functions by time-independent parameters. As inputs, we consider the functions

and the initial biomass concentration

. The functions

with

are modeled as step functions with values

for

, where

. This results in a 11-dimensional design space with design points

We bound the initial biomass concentration

by 1 g/L and 10 g/L, the dilution factor

by the range 0.05–0.2 h

−1 and the substrate concentration of the feed

by 5–35 g/L. In

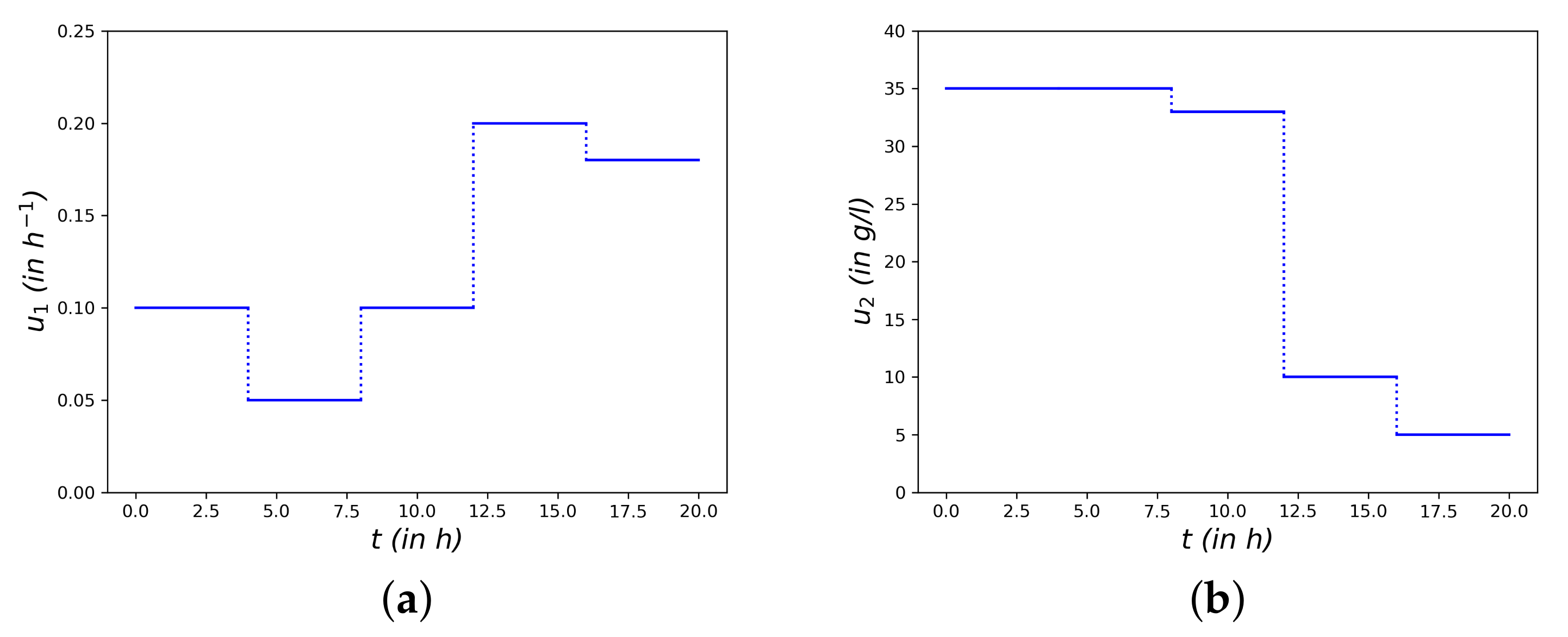

Figure 9, an example of the parameterized functions

and

is plotted.

As outputs, we take measurements of the biomass concentration

in g/L and the substrate concentration

in g/L. These measurements are taken at the 10 time points

for

, each. Thus we obtain a 20-dimensional output vector

The model parameters are , for which we insert our current best estimate for . This leaves one degree of freedom in the model, the initial substrate concentration, which we set as g/L.

For the VDM and the YBT algorithm we introduce the grid consisting of 15,552 design points. As the design space

X has 11 dimensions this grid is very coarse, despite the large amount of points. The designs

and

computed with the VDM and YBT algorithm are given in

Table 11 and

Table 12. The ADA-GPR is initiated with 200 design points. The computed design

is given in

Table 13. Again we do not list points with a weight smaller than 0.001 and perform the clustering described in

Section 6 for the ADA-GPR. As for the flash, we also give a detailed breakdown of the objective value, number of iterations and the runtime in

Table 14 and

Table 15.

5. Discussion

In this section, we discuss the numerical results presented in

Section 4. Both examples presented differ greatly in complexity and input dimension and are discussed separately.

For the flash we observe that the ADA-GPR can compute near optimal designs in significantly less time than the state-of-the-art YBT algorithm. In particular, we need less evaluations of the Jacobian and can drastically reduce the time required for these evaluations. This is due to the fact, that the ADA-GPR operates on the continuous design space and uses an adaptive sampling instead of a pre-computed fine grid. The time reduction is also noticeable in the total runtime. Despite requiring additional steps—like the optimization of the hyperparameters of the GPR—as well as requiring more iterations before the algorithm terminates, the ADA-GPR is faster than the YBT algorithm. The runtime needed is reduced by a factor greater than 10.

We observe that the VDM and the YBT algorithm compute designs with a larger objective value. This occurs as we use an approximation in the ADA-GPR instead of the exact function values . Therefore we expect to have small errors in our computations. In particular for low dimensional design spaces—where the sampling of a fine grid is possible—we expect the grid based methods to result in better objective values. However, from a practical point of view this difference is expected to be negligible.

For the yeast fermentation we make a similar observation. The ADA-GPR can significantly reduce the number of evaluations of the Jacobian as well as the runtime. In contrast to the flash distillation, ADA-GPR also computes a design with a larger—and thereby considerably better—objective value than the VDM and the YBT algorithm.

As the design space is 11-dimensional, the grid consisting of 15,552 design points is still very coarse. The computation of the Jacobians for these points takes a long time—more than 30 h. As we have a coarse grid, we do not expect the designs to be optimal on the continuous design space. In comparison, the ADA-GPR operates on the continuous design space and selects the next candidate points based on the existing information. We see that the adaptive sampling in the continuous space leads to a better candidate point set than the (coarse) grid approach. Using a finer grid for the VDM and the YBT algorithm is however not possible, as the computations simply take too long.

We conclude that the ADA-GPR outperforms the state-of-the-art YBT algorithm for models with high-dimensional design spaces—where sampling on a fine grid is computationally not tractable. Additionally, the ADA-GPR computes near optimal designs for models with low-dimensional design spaces in less time. The algorithm is particular useful for dynamical models, where a parameterization of the dynamic components can lead to many new design variables. We hereby make use of an adaptive point selection based on information from the current candidate points instead of selecting an arbitrary fixed grid.

The new algorithm also leaves room for improvement. In

Table 5,

Table 10 and

Table 15 we see that we can reduce the runtime contributed to the evaluations of the Jacobian. The runtimes for the optimization of the acquisition function as well as the optimization of the hyperparameters may increase compared to the YBT algorithm. Here, more advanced schemes for estimating the hyperparameters can be helpful.

Further room for improvement of the ADA-GPR is the stopping criterion and the resulting precision of the solution. Both VDM and YBT algorithm have a stopping criterion which gives an error bound on the objective value, see [

1]. For the ADA-GPR we have no such criterion and instead use a heuristic termination criterion—see

Section 6 for a detailed description. A more rigorous stopping criterion is left for future research.

6. Materials and Methods

In this section, we present details on our implementations of the VDM, the YBT algorithm and the ADA-GPR, which are introduced in

Section 2.1 and

Section 3. We implemented all methods using

python [

38]. The models

f were evaluated using CHEMASIM and CHEMADIS, the BASF inhouse simulators (Version 6.6 [

36] BASF SE, Ludwigshafen, Germany).

We begin by describing the grid-based VDM and YBT algorithm. For both methods, we take a grid

as input consisting of at least

design points, where

denotes the number of unknown parameters

. We select a random initial set of candidate points

consisting of

grid points

. This amount of points is suggested in [

22], a larger amount is possible as well and can increase the numeric stability.

In the VDM, we assign each candidate points

the weight

in order to obtain the initial design

. In the YBT algorithm, we instead solve the optimal weights problem

We solve this problem by reformulating it as a SDP and use the

MOSEK Software [

39] (MOSEK ApS, Copenhagen, Denmark) to solve the SDP. We assign the optimal weights

to the candidate points

to obtain the initial design

.

Should the Fisher information matrix of the initial design be singular, we discard the design and the candidate points and select a new set of random candidate points.

Next, we describe how we have implemented the iterative step of each algorithm. In order to obtain

we evaluate the function

for every grid point

. We then add

to the set of candidate points

and adjust the weights. For the VDM, we assign the new candidate point

the weight

. The weights

of all the previous candidate points are adjusted by multiplying with the factor

, resulting in the update

This factor is chosen according to [

1], Chapter 3.1.1. In the YBT algorithm we instead adjust the SDP to also account for the new candidate point

and re-solve the weight optimization problem. With the updated weights we obtain the design

and can iterate.

Last we discuss our stopping criterion. We set a value and stop the algorithm as soon as . The computed design then fulfills . Setting a smaller value of increases the precision of the design, but also increases the number of iterations needed. Additionally we terminate the algorithm if we reach 10,000 iterations.

Now we discuss our implementation of the novel ADA-GPR. As we want to use a Gaussian process regression, it is helpful to scale the inputs. We thus map the design space to the unit cube .

In the ADA-GPR we select a number

of initial candidate points. For the examples from

Section 4 we have selected 50 and 200 initial points respectively. The initial points are set as the first

points of the

-dimensional Sobol-sequence [

40,

41]. This is a pseudo-random sequence which uniformly fills the unit cube

. In our experience, one has to set

significantly larger than

, on the one hand to ensure the initial Fisher information matrix is not singular, on the other hand to obtain a good initial approximation via the GPR. We obtain the weights for the candidate points

analogously to the YBT Algorithm by solving the SDP formulation of the optimal weights problem with

MOSEK.

Next, we compute a Gaussian process regression for the directional derivative

based on the evaluations

. For this GPR, we use the machine learning library

scikit–learn [

42] with the squared exponential kernel—the radial basis function

RBF. The kernel is dependent on 3 hyperparameters, a pre-factor

, the length scale

l and a regularity factor

. The parameters

and

l are chosen via the

scikit-learn built-in loss function. They are chosen every time we fit the Gaussian process to the data, i.e., in every iteration. For the factor

, we use cross-validation combined with a grid search, where we consider the 21 values

. As the cross-validation of the hyperparameters can be time-expensive, we do not perform this step in every iteration. Instead, we adjust

in the initial 10 iterations and then only every 10th iteration afterwards.

Now we discuss the optimization of the acquisition function

In order to obtain a global optimum we perform a multistart, where we perform several optimization runs from different initial values. In our implementation we perform 10 optimization runs. The initial points are selected via the

-dimensional Sobol-sequence in the design space

. We recall, that the Sobol-sequence was also used to select the initial candidate points. In order to avoid re-using the same points, we store the index of the last Sobol-point we use. When selecting the next batch of Sobol-points, we take the points succeeding the stored index. Then, we increment the index. For the optimization we use the

L-BFGS-B method from the

scipy.optimize library [

43,

44].

Last, we present the stopping heuristic we use for the ADA-GPR. Throughout the iterations we track the development of the objective value and use the progress made as stopping criterion for the algorithm. For the initial 50 iterations, we do not stop the algorithm. After the initial iterations we consider the progress made over the last 40 percent of the total iterations. However we set a maximum of 50 iterations that we consider for the progress. For the current iteration

, we thus compute

and consider the progress

If , we stop the computation. Else, we continue with the next iteration.

For the tables and figures from

Section 4 we have clustered the results from the ADA-GPR. Here we have proceeded as described in the following. We iterate through the support points of the computed design

. Here we denote these support points by

. For each point

we check if a second distinct point

exists, such that

. If we find such a pair, we add these points to one joint cluster

. If we find a third point

with either

or

, the point

is added to the cluster

as well.

If for a point no point exists such that , the point initiates its own cluster .

After all points are divided into clusters, we represent each cluster

by a single point

with weight

. The point

is selected as average over all points

in the cluster

via the formula

The weight

is set as sum of the weights assigned to the points in the cluster

and is computed via

7. Conclusions

In this paper we have introduced a novel algorithm—the ADA-GPR—to efficiently compute locally optimal continuous designs. The algorithm uses an adaptive grid sampling method based on the Kiefer-Wolfowitz Equivalence Theorem. To avoid extensive evaluations of the Fisher information matrix, we approximate the directional derivative via a Gaussian process regression.

The ADA-GPR is particularly useful for models with high-dimensional design spaces, where traditional grid-based methods cannot be applied. Such models include dynamical systems, which need to be parameterized for the design of experiments setup. Additionally, optimal designs in low-dimensional spaces can also be computed efficiently by the ADA-GPR. In this paper, we have considered two examples from chemical engineering to verify these results.

In future work we want to further improve the ADA-GPR. For this purpose, we want to investigate the quality of the computed designs in order to have an indication on the optimal value. Another focus is the optimization of the acquisition function and the hyperparameters of the GPR, where we hope to improve the runtime even further. For this purpose we want to consider more advanced optimization and machine learning methods. Last, we want to extend the ADA-GPR to other design of experiment settings. These include incorporating existing experiments and considering robust designs instead of locally optimal ones.