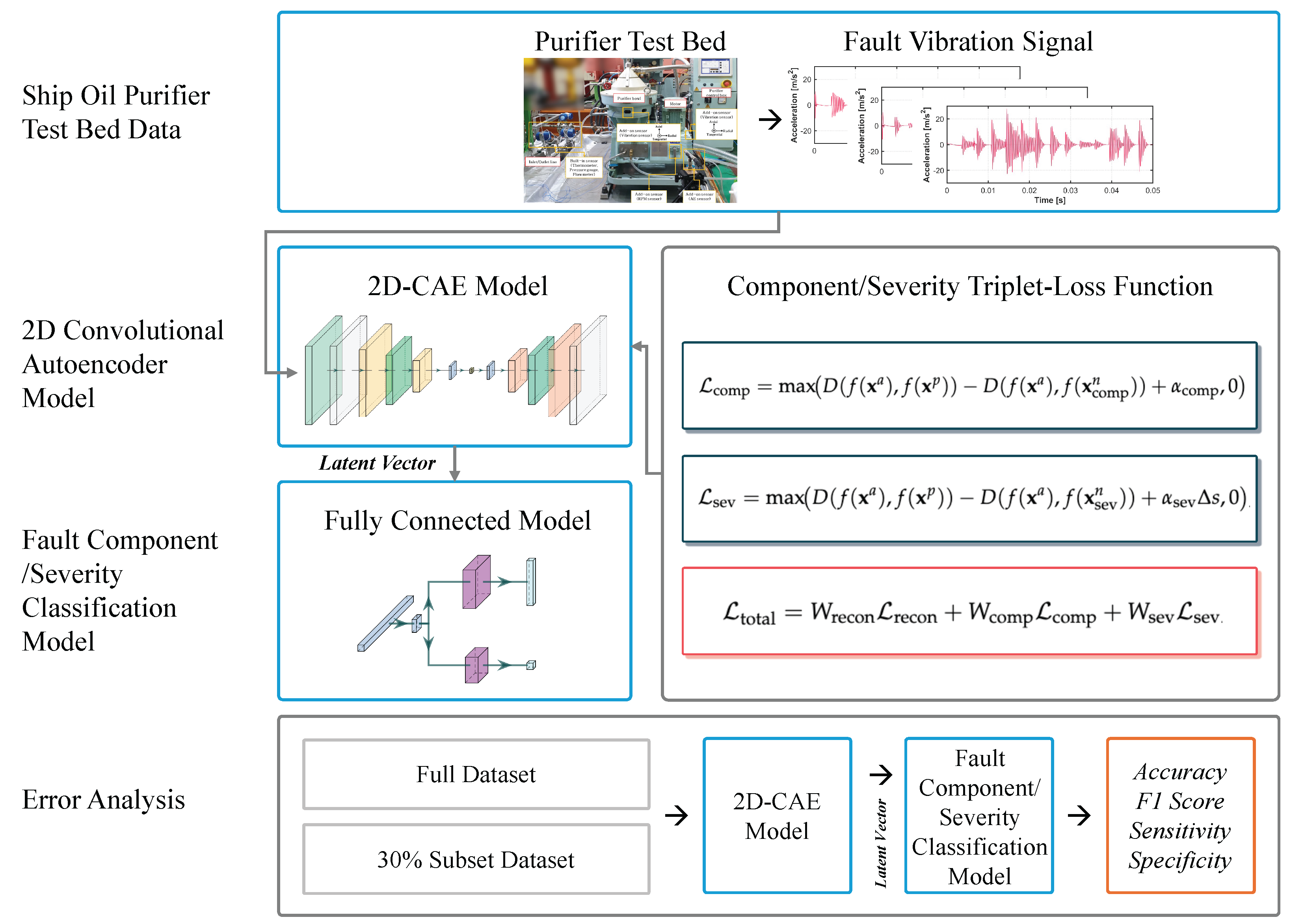

3.1. Experimental Results

To validate the efficacy of the proposed structured latent-space learning approach, an ablation study was conducted. The study compared the full framework (AS-LSLF) with a baseline two-dimensional convolutional autoencoder (2D-CAE) trained only with the standard reconstruction loss and combined with a classification network for both component and severity classification. The experiments were performed on a PC with an Intel Core Ultra 7 265K processor (Santa Clara, CA, USA), 128 GB of RAM, and an NVIDIA RTX 5090 GPU (Santa Clara, CA, USA), using Python 3.11 (Wilmington, DE, USA) and PyTorch 2.8.0 (New York City, NY, USA) with GPU acceleration. The hyper-parameters of each model were optimized by grid search over the learning rate, the number of layers, the filter sizes, and the regularization terms, as shown in Table 2. Following this search, the optimal parameters for the final model were a latent dimension size of 256, a learning rate of

, a weight decay of

, a reconstruction weight of

, a component triplet weight of

, a severity triplet weight of

, a triplet margin of

, and a classification weight of

. The models were trained for a maximum of 50 epochs with a batch size of 16. To prevent overfitting and determine the training duration, early stopping was applied to the validation loss with a patience of 7 epochs. The dataset was shuffled and divided into a stratified 70:30 train–test split that maintains class proportions in both sets.
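The early stopping rule described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the class name `EarlyStopping`, the `min_delta` tolerance, and the mock `train` loop over precomputed validation losses are assumptions introduced here; only the patience of 7 and the 50-epoch cap come from the text.

```python
class EarlyStopping:
    """Stop training when the monitored validation loss stops improving."""

    def __init__(self, patience=7, min_delta=0.0):
        self.patience = patience      # epochs to wait without improvement (7 in the text)
        self.min_delta = min_delta    # hypothetical tolerance, not taken from the paper
        self.best_loss = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def train(val_losses, max_epochs=50, patience=7):
    """Mock training loop over precomputed validation losses.

    Returns (number of epochs run, best validation loss seen).
    """
    stopper = EarlyStopping(patience=patience)
    epochs_run = 0
    for epoch, val_loss in enumerate(val_losses[:max_epochs], start=1):
        epochs_run = epoch
        if stopper.step(val_loss):
            break
    return epochs_run, stopper.best_loss
```

In a real training loop, `step` would be called once per epoch with the measured validation loss, and the weights from the best epoch would typically be restored after stopping.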

Figure 5 illustrates the training loss and accuracy of the proposed AS-LSLF model. The loss curve demonstrates stable convergence of the combined objective function, with the multi-task learning approach effectively balancing the reconstruction quality and latent space structuring.

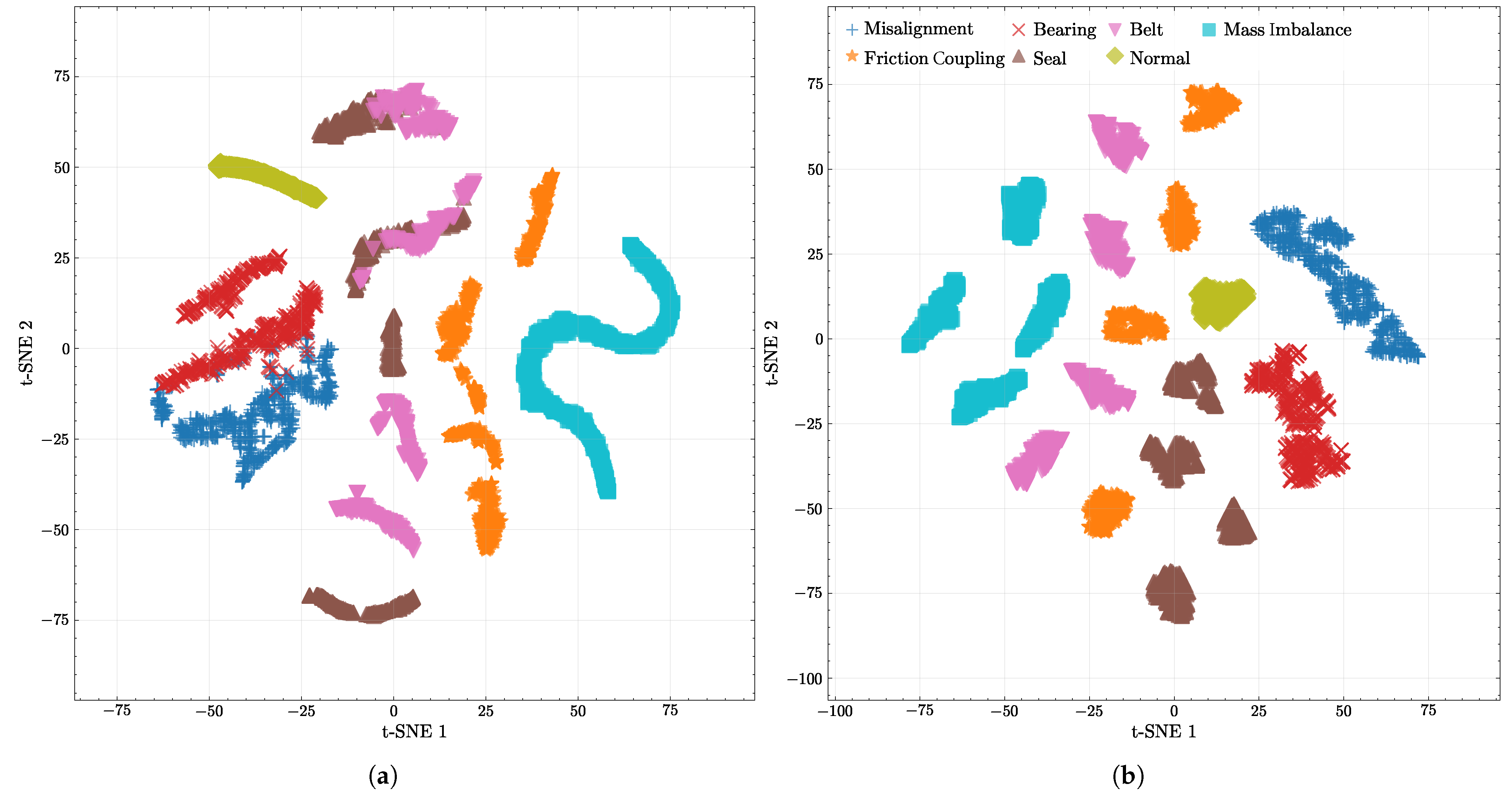

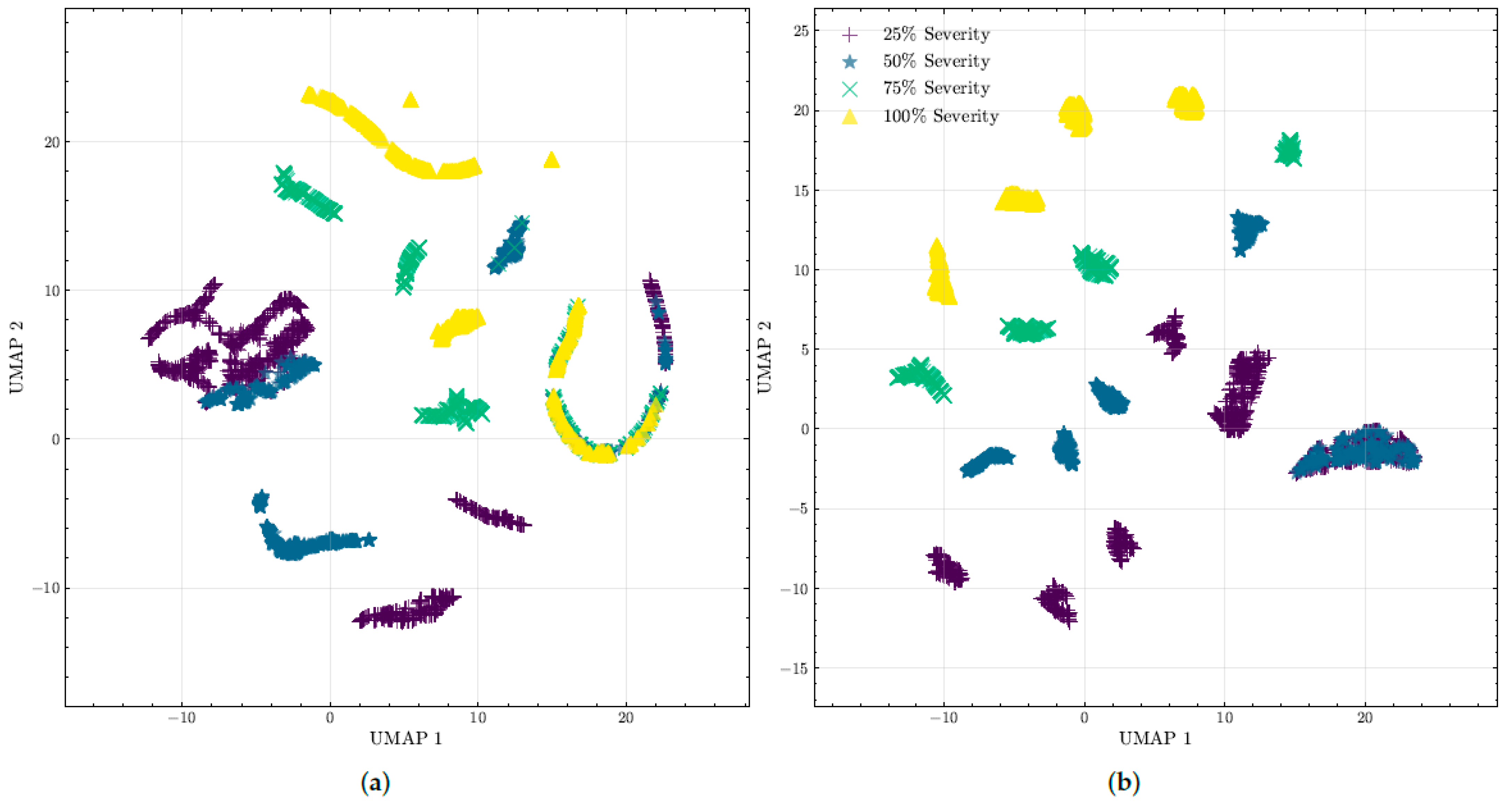
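To make the combined objective concrete, the sketch below assumes that it is a weighted sum of the reconstruction, component triplet, severity triplet, and classification terms, with the standard triplet margin loss. The weighted-sum form, the function names, and all numeric weights and loss values are illustrative assumptions; they are not the values selected by the grid search.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin):
    """Standard triplet margin loss: max(0, d(a, p) - d(a, n) + margin)."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def composite_loss(terms, weights):
    """Weighted sum of the loss terms (assumed form of the combined objective)."""
    return sum(weights[name] * value for name, value in terms.items())


# Placeholder weights and margin for illustration only; not the paper's values.
weights = {"reconstruction": 1.0, "component_triplet": 0.5,
           "severity_triplet": 0.5, "classification": 1.0}
margin = 1.0

anchor, positive = (0.0, 0.0), (3.0, 4.0)  # d(anchor, positive) = 5
terms = {
    "reconstruction": 0.2,  # stands in for a reconstruction error
    # Negative is closer than the positive: 5 - 1 + 1 = 5, so the triplet is penalized.
    "component_triplet": triplet_loss(anchor, positive, (0.0, 1.0), margin),
    # Negative is far away: 5 - 10 + 1 < 0, so the loss is clipped to 0.
    "severity_triplet": triplet_loss(anchor, positive, (6.0, 8.0), margin),
    "classification": 0.3,  # stands in for a cross-entropy value
}
total = composite_loss(terms, weights)
```

The two triplet terms show why the margin matters: a triplet contributes to the gradient only while the negative sample is not at least `margin` farther from the anchor than the positive sample.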

To show the capability of the proposed framework to capture the latent organization of diagnostic measurements, we visualized the latent vectors from the baseline 2D-CAE and the proposed AS-LSLF encoder as two-dimensional maps. Specifically, we adopted Uniform Manifold Approximation and Projection (UMAP) [22] and t-Distributed Stochastic Neighbor Embedding (t-SNE) [23] to project the high-dimensional embeddings onto a plane for qualitative assessment. UMAP provides a topology-aware low-dimensional representation that preserves both the neighborhood structure and the coarse global geometry of the original space. By contrast, t-SNE emphasizes local neighborhood fidelity by minimizing the Kullback–Leibler divergence between the pairwise similarity distributions in the original space and the embedded space, offering a complementary view of the learned latent features.
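The Kullback–Leibler objective mentioned above can be illustrated with a small pure-Python sketch. It is deliberately simplified: a single fixed Gaussian bandwidth `sigma` replaces the per-point, perplexity-calibrated bandwidths of real t-SNE, and no optimization is performed. The function names and the toy points are assumptions made for illustration.

```python
import math


def _sq_dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def _normalize(weights):
    """Scale a matrix of pairwise weights so that all entries sum to 1."""
    total = sum(sum(row) for row in weights)
    return [[w / total for w in row] for row in weights]


def gaussian_similarities(points, sigma=1.0):
    """Pairwise similarities in the original space (fixed bandwidth, a simplification)."""
    n = len(points)
    return _normalize([[0.0 if i == j
                        else math.exp(-_sq_dist(points[i], points[j]) / (2 * sigma ** 2))
                        for j in range(n)] for i in range(n)])


def student_t_similarities(points):
    """Pairwise similarities in the embedding, using a heavy-tailed Student-t kernel."""
    n = len(points)
    return _normalize([[0.0 if i == j else 1.0 / (1.0 + _sq_dist(points[i], points[j]))
                        for j in range(n)] for i in range(n)])


def kl_divergence(p, q):
    """KL(P || Q) = sum_ij p_ij * log(p_ij / q_ij), skipping zero entries of P."""
    return sum(pij * math.log(pij / qij)
               for p_row, q_row in zip(p, q)
               for pij, qij in zip(p_row, q_row) if pij > 0.0)


# Toy data: two tight clusters in 3-D and two candidate 2-D embeddings.
high = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (5.0, 5.0, 5.0), (5.1, 5.0, 5.0)]
good = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0)]   # neighbors stay together
bad = [(0.0, 0.0), (5.0, 5.0), (0.1, 0.0), (5.1, 5.0)]    # neighbors are split apart

p = gaussian_similarities(high)
kl_good = kl_divergence(p, student_t_similarities(good))
kl_bad = kl_divergence(p, student_t_similarities(bad))
```

The embedding that keeps neighboring points together yields the smaller divergence, which is the quantity t-SNE reduces by gradient descent over the embedded coordinates.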

Figure 6 and Figure 7 depict separability across fault component groups, while Figure 8 and Figure 9 summarize the stratification by fault severity. In the baseline 2D-CAE embeddings, several clusters intermix, indicating weaker discrimination between classes. In contrast, the proposed AS-LSLF framework yields more compact clusters with markedly clearer boundaries, most prominently under UMAP, demonstrating an enhanced ability to disentangle operating conditions. The reduced overlap and wider margins achieved by the proposed model indicate more discriminative latent representations than the baseline, supporting improved downstream inference and health state characterization.

This structural superiority translates into improved quantitative performance, as demonstrated in

Table 3 and

Table 4, which summarize the downstream classification results from both models. Additionally,

Table 5,

Table 6,

Table 7,

Table 8,

Table 9 and

Table 10 provide detailed quantitative results using four key metrics: Accuracy, F1 Score, Sensitivity, and Specificity. Each metric is reported separately for the component classification and severity classification using test data for performance comparison. Finally,

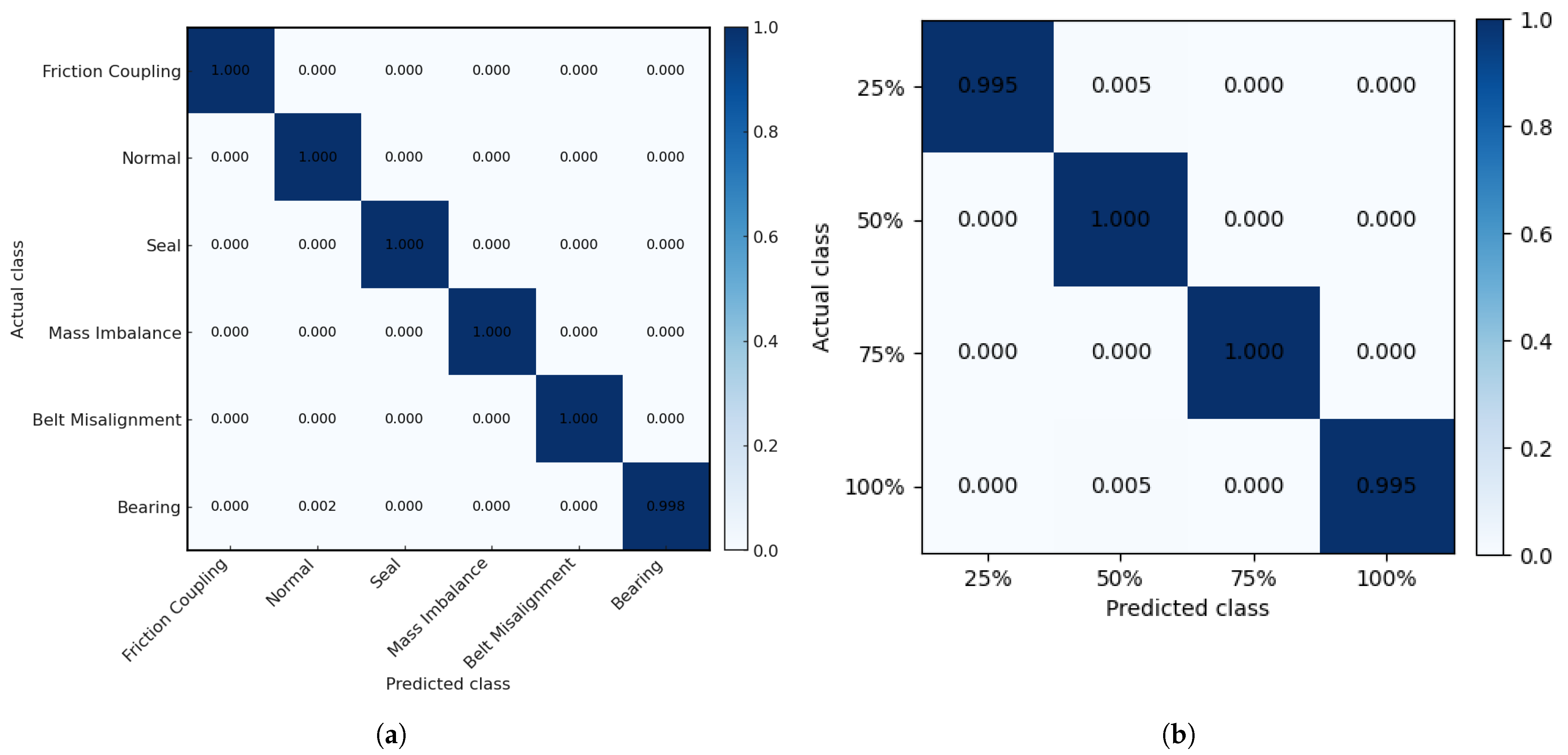

Figure 10 shows the confusion matrix for component classification and fault severity classification of proposed AS-LSLF model. The performance metrics for a generic multiclass classification model are formally defined as follows:

where TP (True Positive) denotes the correctly predicted instances of a given class, TN (True Negative) the instances correctly predicted as not belonging to that class, FN (False Negative) the instances of that class incorrectly assigned to other classes, FP (False Positive) the instances of other classes incorrectly predicted as the given class, and N the total number of classes.
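As a worked illustration of these quantities, the sketch below derives per-class TP, FP, FN, and TN from a confusion matrix and averages the per-class scores over the N classes. Macro averaging and the use of overall accuracy (correct predictions divided by all predictions) are assumptions made here; the function names and the example matrix are hypothetical.

```python
def per_class_counts(cm):
    """Return (TP, FP, FN, TN) for each class.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    counts = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                         # class k predicted as something else
        fp = sum(cm[i][k] for i in range(n)) - tp    # other classes predicted as k
        tn = total - tp - fn - fp
        counts.append((tp, fp, fn, tn))
    return counts


def macro_metrics(cm):
    """Overall accuracy plus macro-averaged F1, sensitivity, and specificity."""
    counts = per_class_counts(cm)
    n = len(counts)
    total = sum(sum(row) for row in cm)

    def safe_div(num, den):
        return num / den if den else 0.0

    return {
        "accuracy": safe_div(sum(tp for tp, _, _, _ in counts), total),
        "f1": sum(safe_div(2 * tp, 2 * tp + fp + fn) for tp, fp, fn, _ in counts) / n,
        "sensitivity": sum(safe_div(tp, tp + fn) for tp, _, fn, _ in counts) / n,
        "specificity": sum(safe_div(tn, tn + fp) for _, fp, _, tn in counts) / n,
    }


# Hypothetical 3-class confusion matrix, for illustration only.
cm = [[8, 2, 0],
      [1, 9, 0],
      [0, 0, 10]]
metrics = macro_metrics(cm)
```

For this matrix, class 0 has TP = 8, FP = 1, FN = 2, and TN = 19, which gives a per-class sensitivity of 0.80 and specificity of 0.95 before averaging.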

Table 3. Component classification performance comparison for the ablation study.

| Model | Accuracy (%) | F1 (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| AS-LSLF (Proposed) | 99.98 | 99.97 | 99.96 | 99.99 |
| 2D-CAE | 99.89 | 99.82 | 99.81 | 99.97 |

Figure 6. UMAP-based projections of the learned latent manifolds from the baseline and proposed models. (a) Baseline 2D-CAE. (b) Proposed AS-LSLF.

Figure 7. t-SNE projections of the learned latent manifolds from the baseline and proposed models. (a) Baseline 2D-CAE. (b) Proposed AS-LSLF.

Figure 8. UMAP visualization of the learned latent space for fault severity. (a) Baseline 2D-CAE. (b) Proposed AS-LSLF.

Figure 9. t-SNE visualization of the learned latent space for fault severity. (a) Baseline 2D-CAE. (b) Proposed AS-LSLF.

Figure 10. Confusion matrices of the proposed AS-LSLF model. (a) Component classification. (b) Fault severity classification.

Table 4. Fault severity classification performance comparison for the ablation study.

| Model | Accuracy (%) | F1 (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| AS-LSLF (Proposed) | 99.88 | 99.87 | 99.75 | 99.89 |
| 2D-CAE | 99.14 | 98.95 | 98.90 | 99.35 |

To further assess the robustness and generalizability of our proposed model and the ablation model, we performed a five-fold cross-validation. The dataset was partitioned into five subsets of equal size. In each fold, one subset was used for testing, while the remaining four were used for training. This process was repeated five times, ensuring that each subset was used as the test set exactly once. The results, presented as the mean and standard deviation of the performance metrics across the five folds, are shown in Table 5 and Table 6. The consistently high performance across all folds with low standard deviations demonstrates the stability and reliability of our proposed approach.
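The cross-validation procedure described above can be sketched in a few lines. This is an illustration under stated assumptions: the helper names `k_fold_indices` and `summarize` are hypothetical, the shuffle seed is arbitrary, the fold scores are made-up numbers, and the sample standard deviation is used because the text does not say whether the sample or population form was reported.

```python
import random
import statistics


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, test_indices) so each sample is tested exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # equal sizes when k divides n_samples
    for i in range(k):
        test_idx = folds[i]
        train_idx = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train_idx, test_idx


def summarize(scores):
    """Return (mean, sample standard deviation) of the per-fold scores."""
    return statistics.mean(scores), statistics.stdev(scores)


folds = list(k_fold_indices(100, k=5))

# Hypothetical per-fold accuracies (%), for illustration only.
fold_scores = [99.0, 99.2, 99.1, 99.3, 99.4]
mean_score, std_score = summarize(fold_scores)
```

In practice, each `(train_idx, test_idx)` pair would drive one full training and evaluation run, and the per-fold metric values would replace `fold_scores`.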

Table 5. Five-fold cross-validation results for component classification.

| Model | Accuracy (%) | F1 (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| AS-LSLF (Proposed) | 99.18 ± 0.013 | 99.17 ± 0.015 | 99.16 ± 0.014 | 99.19 ± 0.014 |
| 2D-CAE | 98.99 ± 0.014 | 98.92 ± 0.015 | 98.91 ± 0.013 | 99.07 ± 0.014 |

Table 6. Five-fold cross-validation results for fault severity classification.

| Model | Accuracy (%) | F1 (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| AS-LSLF (Proposed) | 99.08 ± 0.023 | 98.97 ± 0.014 | 98.85 ± 0.018 | 98.99 ± 0.013 |
| 2D-CAE | 98.24 ± 0.025 | 98.05 ± 0.033 | 98.00 ± 0.019 | 98.45 ± 0.024 |

3.2. Performance Comparison with Other Methods

To further establish the merits of the proposed framework, its diagnostic performance was benchmarked against several state-of-the-art deep learning models commonly employed for time-series classification. The selected baselines include a two-dimensional CNN (2D-CNN) [19], a standard one-dimensional CNN (1D-CNN) [24], and a bidirectional long short-term memory network (BiLSTM) [25]. From a computational standpoint, the proposed AS-LSLF framework introduces additional complexity during training compared with a standard 2D-CNN, owing to the pairwise distance calculations required by the triplet loss. Its inference time, however, remains comparable with that of the 2D-CNN. While more computationally intensive than the 1D-CNN because of its two-dimensional architecture, AS-LSLF is substantially more efficient than the BiLSTM model, whose sequential processing is inherently slower for long time series. To evaluate robustness and data efficiency, which are critical for real-world maritime applications where acquiring extensive labeled fault data is often infeasible, the analysis was conducted under two data-utilization regimes: the full training set and 30% of the available training data.
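One way to construct the reduced-data regime is to draw the same fraction of samples from every class, as sketched below. The text does not state how the 30% subset was selected, so per-class random sampling, the function name `stratified_subsample`, the seed, and the toy labels are all assumptions.

```python
import random
from collections import defaultdict


def stratified_subsample(labels, fraction=0.3, seed=0):
    """Return sorted indices that keep roughly `fraction` of the samples of each class."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    keep = []
    for idxs in by_class.values():
        n_keep = max(1, round(fraction * len(idxs)))  # keep at least one sample per class
        keep.extend(rng.sample(idxs, n_keep))
    return sorted(keep)


# Toy label list with an imbalanced class distribution, for illustration only.
labels = ["bearing"] * 100 + ["gear"] * 50
subset = stratified_subsample(labels, fraction=0.3)
```

Sampling per class keeps the class proportions of the reduced training set close to those of the full set, so differences in accuracy reflect the amount of data and not a shift in class balance.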

With full training data (Table 7 and Table 8), the proposed AS-LSLF framework achieves the best component classification results on every metric and is on par with the 2D-CNN for severity classification, where the two models differ by at most 0.05 percentage points. The performance gap widens under the reduced 30% setting (Table 9 and Table 10): AS-LSLF loses at most 0.10 percentage points of accuracy, whereas the baselines lose between 1.98 and 24.80 percentage points. This resilience is attributed to the metric-learning composite loss, which regularizes the latent space. The BiLSTM model degrades the most, reflecting its sensitivity to the lack of training data.

Table 7. Component classification performance comparison with full training data.

| Model | Accuracy (%) | F1 (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| AS-LSLF (Proposed) | 99.98 | 99.97 | 99.96 | 99.99 |
| 2D-CAE | 99.89 | 99.82 | 99.81 | 99.97 |
| 2D-CNN | 99.88 | 99.88 | 99.87 | 99.96 |
| 1D-CNN | 97.1 | 96.9 | 96.8 | 97.5 |
| BiLSTM | 96.68 | 96.40 | 96.20 | 97.10 |

Table 8. Fault severity classification performance comparison with full training data.

| Model | Accuracy (%) | F1 (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| AS-LSLF (Proposed) | 99.88 | 99.87 | 99.75 | 99.89 |
| 2D-CAE | 99.14 | 98.95 | 98.90 | 99.35 |
| 2D-CNN | 99.88 | 99.88 | 99.80 | 99.90 |
| 1D-CNN | 92.43 | 91.98 | 91.50 | 93.80 |
| BiLSTM | 78.13 | 78.09 | 78.09 | 82.50 |

Table 9. Component classification performance comparison with 30% training data utilization.

| Model | Accuracy (%) | F1 (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| AS-LSLF (Proposed) | 99.88 | 99.87 | 99.75 | 99.89 |
| 2D-CAE | 97.71 | 97.30 | 96.80 | 97.90 |
| 2D-CNN | 97.90 | 97.6 | 97.5 | 98.1 |
| 1D-CNN | 94.34 | 93.76 | 92.50 | 94.80 |
| BiLSTM | 71.88 | 63.06 | 58.40 | 75.20 |

Table 10. Fault severity classification performance comparison with 30% training data utilization.

| Model | Accuracy (%) | F1 (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| AS-LSLF (Proposed) | 99.87 | 99.80 | 99.80 | 99.90 |
| 2D-CAE | 95.77 | 95.63 | 95.63 | 99.56 |
| 2D-CNN | 94.21 | 93.95 | 93.95 | 99.26 |
| 1D-CNN | 90.38 | 89.46 | 89.46 | 98.11 |
| BiLSTM | 61.92 | 58.62 | 58.62 | 90.44 |
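The effect of reducing the training data can be read directly from the accuracy columns of Tables 7 to 10. The short sketch below computes the drop in accuracy, in percentage points, for each model and task; the dictionary layout and the function name `accuracy_drop` are illustrative choices, while the numbers are copied from the tables.

```python
# Accuracy (%) with full training data (Tables 7 and 8).
full = {
    "component": {"AS-LSLF": 99.98, "2D-CAE": 99.89, "2D-CNN": 99.88,
                  "1D-CNN": 97.1, "BiLSTM": 96.68},
    "severity": {"AS-LSLF": 99.88, "2D-CAE": 99.14, "2D-CNN": 99.88,
                 "1D-CNN": 92.43, "BiLSTM": 78.13},
}

# Accuracy (%) with 30% of the training data (Tables 9 and 10).
reduced = {
    "component": {"AS-LSLF": 99.88, "2D-CAE": 97.71, "2D-CNN": 97.90,
                  "1D-CNN": 94.34, "BiLSTM": 71.88},
    "severity": {"AS-LSLF": 99.87, "2D-CAE": 95.77, "2D-CNN": 94.21,
                 "1D-CNN": 90.38, "BiLSTM": 61.92},
}


def accuracy_drop(full_scores, reduced_scores):
    """Percentage-point loss in accuracy per model when training data is reduced."""
    return {model: round(full_scores[model] - reduced_scores[model], 2)
            for model in full_scores}


drops = {task: accuracy_drop(full[task], reduced[task]) for task in full}

for task, per_model in drops.items():
    for model, drop in per_model.items():
        print(f"{task:<10} {model:<8} -{drop:.2f} pp")
```

AS-LSLF loses 0.10 and 0.01 percentage points on the component and severity tasks, respectively, while the BiLSTM loses 24.80 and 16.21 percentage points.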