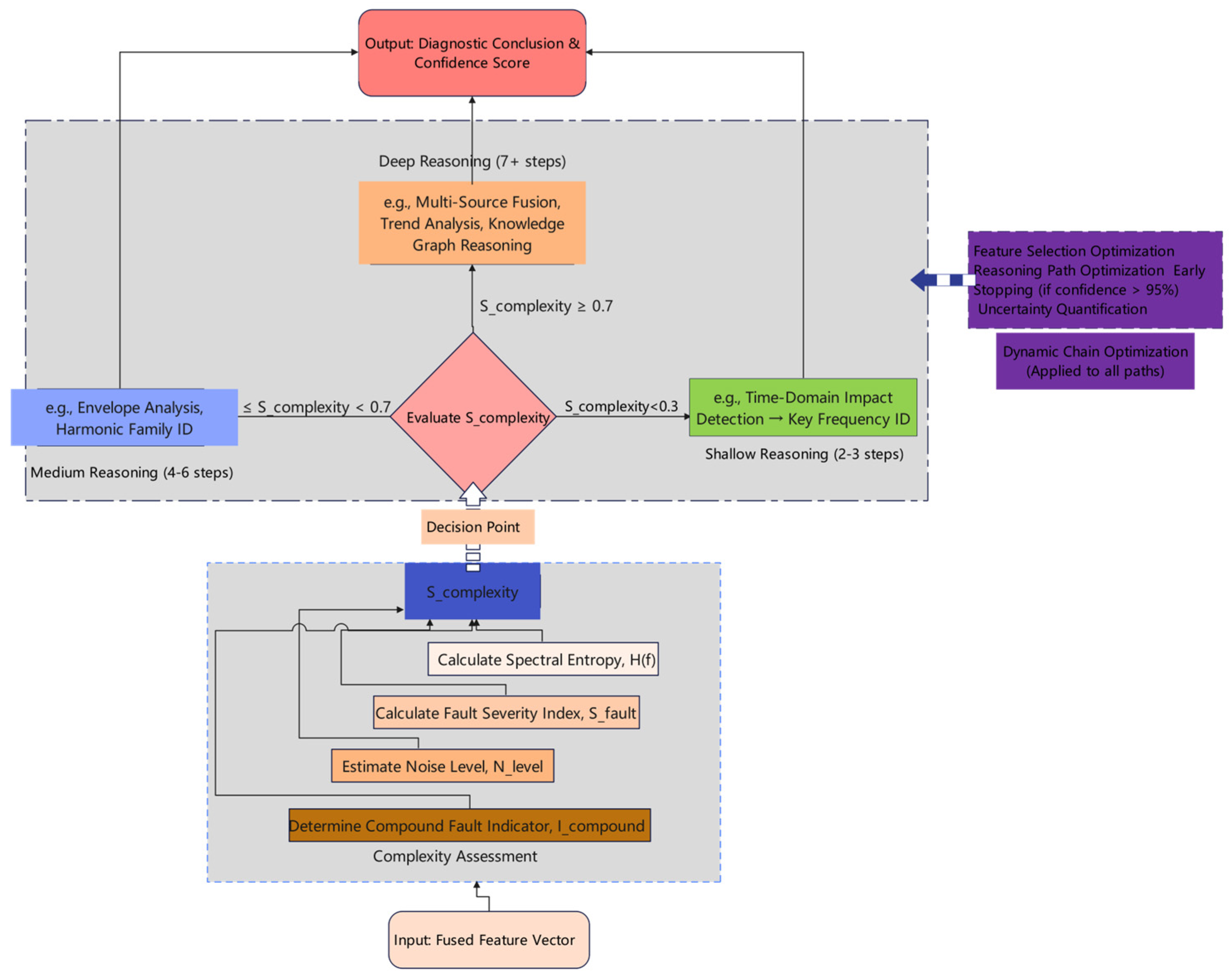

3.4.1. Adaptive Reasoning Depth Control

A key innovation of the VA-COT framework is its ability to dynamically balance diagnostic precision and computational efficiency [36]. To achieve this, we developed an Adaptive Reasoning Depth Control mechanism. This mechanism can assess online the complexity of the current vibration signal and dynamically allocate appropriate computational resources to the VA-COT engine, thereby maximizing reasoning efficiency while ensuring diagnostic accuracy.

The logical flow of this mechanism is illustrated in detail in Figure 2.

The mechanism first assesses the complexity of the input signal using a comprehensive scoring function, Scomplexity. This score integrates four key indicators [37]:

$$S_{complexity} = w_1 H(f) + w_2 S_{fault} + w_3 N_{level} + w_4 I_{compound} \qquad (5)$$

where H(f) is the spectral entropy measuring spectral disorder, Sfault is a fault severity index, Nlevel is the estimated noise level, Icompound is an indicator for the presence of multiple concurrent faults, and w1 to w4 are the corresponding weights.

Based on the calculated complexity score, the system selects one of three reasoning depths:

Shallow Reasoning (e.g., score < 0.3): For clear, simple faults, a 2–3 step process is used for rapid diagnosis.

Medium Reasoning (e.g., 0.3 ≤ score < 0.7): For cases with moderate noise or minor compound faults, a 4–6 step analysis involving more advanced tools is activated.

Deep Reasoning (e.g., score ≥ 0.7): For highly complex or severe compound faults, a comprehensive analysis of 7 or more steps is invoked, integrating multi-source information and knowledge graph-based reasoning.
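The depth selection can be illustrated with a minimal Python sketch. It assumes the complexity score is a weighted sum of the four indicators with weights w1 to w4 and that each indicator is normalized to [0, 1]; the thresholds 0.3 and 0.7 are the example values quoted above. The names `DepthPlan`, `complexity_score`, and `select_depth`, and the equal weights in the usage lines, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class DepthPlan:
    name: str
    min_steps: int
    max_steps: Optional[int]  # None means no fixed upper bound ("7 or more")


def complexity_score(indicators: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted sum of (H(f), S_fault, N_level, I_compound); assumed form."""
    if len(indicators) != 4 or len(weights) != 4:
        raise ValueError("expected four indicators and four weights")
    return sum(w * x for w, x in zip(weights, indicators))


def select_depth(score: float) -> DepthPlan:
    """Map a score to a reasoning depth using the example thresholds 0.3 and 0.7."""
    if score < 0.3:
        return DepthPlan("shallow", 2, 3)
    if score < 0.7:
        return DepthPlan("medium", 4, 6)
    return DepthPlan("deep", 7, None)


# Illustrative values only: equal weights and made-up indicator readings.
score = complexity_score([0.2, 0.4, 0.6, 0.8], [0.25, 0.25, 0.25, 0.25])
plan = select_depth(score)  # falls in the medium band for these inputs
```

Keeping the score computation separate from the threshold lookup means the weights and the band boundaries can be tuned independently.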

To further enhance efficiency, the reasoning chain itself is dynamically optimized. This includes on-the-fly feature selection based on preliminary judgments, path optimization using reinforcement learning from historical cases, and an early stopping mechanism that terminates the reasoning process once a diagnostic conclusion reaches a high confidence threshold (e.g., 95%), preventing unnecessary computation.

To ensure the effectiveness and logical integrity of the Adaptive Reasoning Depth Control mechanism, this section elaborates on its triggering mechanism, specifically the calculation of the complexity score, Scomplexity [38]. This scoring process is a critical pre-processing step designed to triage the diagnostic difficulty of a signal before committing to the formal, multi-step VA-COT reasoning chain.

Crucially, it must be clarified that the indicators used in Equation (5) are all fast, preliminary estimations derived using computationally inexpensive heuristics. Their sole purpose is to select an appropriate reasoning path, not to serve as the final diagnostic output, thereby resolving any suspicion of circular reasoning. The rapid estimation methods for each indicator are as follows:

Fault Severity Index (Sfault): This is a heuristic preliminary index, not the final precise score. It is rapidly estimated by comparing the overall energy (RMS value) of the current vibration signal to a historical baseline established during the equipment’s healthy operation. For instance, a signal with energy 50% above its baseline will yield a significantly higher preliminary severity index than one that is 10% above.

Spectral Entropy (H(f)): This metric is directly calculated from the Fast Fourier Transform (FFT) spectrum, which is already generated during the preprocessing stage, to measure spectral disorder. It is a standard and computationally cheap calculation.

Noise Level (Nlevel): The noise level is estimated by analyzing the signal’s spectral floor or the average energy in non-dominant frequency bands. This serves as a quick proxy for the signal-to-noise ratio.

Compound Fault Indicator (Icompound): This indicator is estimated via a fast spectral analysis algorithm that identifies and counts the number of independent, prominent harmonic families present in the spectrum (e.g., a rotational frequency harmonic family, a specific bearing fault frequency harmonic family, etc.). The presence of multiple independent harmonic families is a strong indicator of a more complex compound fault.
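The four rapid estimates above could be computed along the following lines. This is a sketch under stated assumptions, not the implementation used in this work: the clipping of the severity index to [0, 1], the median-to-mean ratio as a noise-floor proxy, and the harmonic-counting parameters (`n_harmonics`, `min_hits`, `factor`) are all hypothetical choices, and the candidate fundamental frequencies are assumed to be known from the machine's kinematics.

```python
import numpy as np


def power_spectrum(x, fs):
    """One-sided FFT power spectrum; returns (frequencies, power)."""
    x = np.asarray(x, dtype=float)
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    return freqs, power


def spectral_entropy(power):
    """Shannon entropy of the normalized spectrum, scaled to [0, 1]."""
    total = power.sum()
    if total <= 0.0 or power.size < 2:
        return 0.0
    p = power / total
    p = p[p > 0]  # skip empty bins so log() is defined
    return float(-(p * np.log(p)).sum() / np.log(power.size))


def severity_index(x, baseline_rms):
    """Relative RMS excess over the healthy baseline, clipped to [0, 1]."""
    rms = np.sqrt(np.mean(np.square(x)))
    return float(np.clip(rms / baseline_rms - 1.0, 0.0, 1.0))


def noise_level(power):
    """Spectral-floor proxy: median bin power relative to mean bin power."""
    mean = power.mean()
    return float(min(1.0, np.median(power) / mean)) if mean > 0 else 0.0


def count_harmonic_families(freqs, power, fundamentals, n_harmonics=3,
                            min_hits=2, factor=10.0):
    """Count fundamentals with at least `min_hits` harmonics above the floor."""
    floor = np.median(power)
    count = 0
    for f0 in fundamentals:
        hits = 0
        for k in range(1, n_harmonics + 1):
            idx = int(np.argmin(np.abs(freqs - k * f0)))  # nearest FFT bin
            if power[idx] > factor * floor:
                hits += 1
        if hits >= min_hits:
            count += 1
    return count
```

All four functions reuse a single FFT, which is consistent with the point that the spectrum is already available from preprocessing and the triage step adds little cost.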

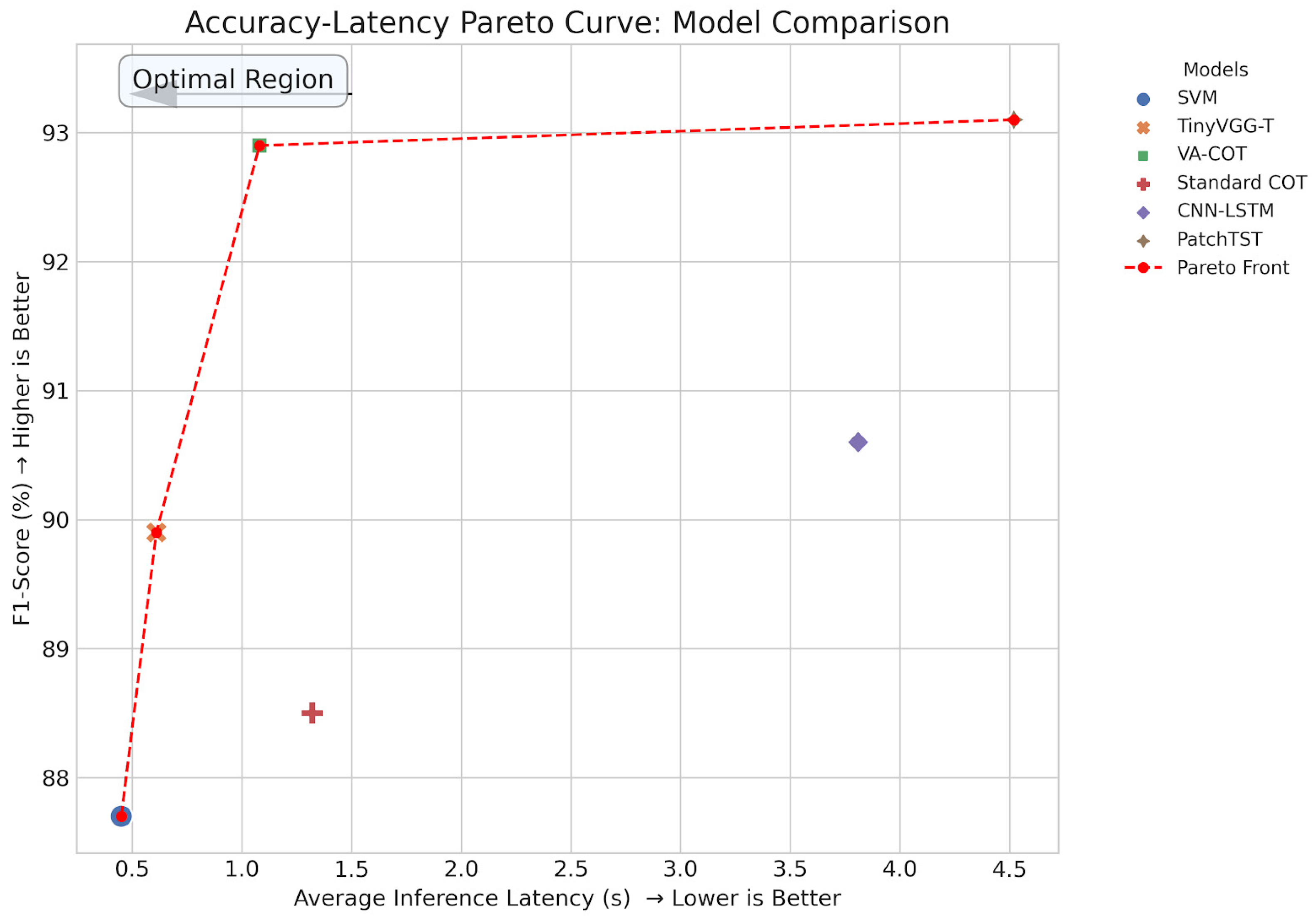

Regarding the weights (w1, w2, w3, w4) in Equation (5), they are not set ad hoc. Instead, they are treated as critical hyperparameters and determined through a systematic optimization process. Using a validation subset of our benchmark dataset, a grid search was employed to systematically test weight combinations [39]. The objective function for evaluating each combination was a weighted balance between diagnostic accuracy (F1-score) and average inference latency. The set of weights that performed best on this objective was selected, so that the mechanism allocates computational resources effectively while maintaining high diagnostic precision [40].
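A grid search of this kind might look like the sketch below. Several details are assumptions made for illustration rather than reported settings: the weights are constrained to sum to 1, the grid step is 1/`steps`, and the objective combines F1-score and latency as `alpha * f1 - (1 - alpha) * latency / budget`. The `evaluate` callable is a hypothetical stand-in for running the full pipeline on the validation subset and returning `(f1, latency_ms)`.

```python
from itertools import product


def weight_grid(steps=4):
    """Yield every (w1, w2, w3, w4) on a grid of step 1/steps that sums to 1."""
    for combo in product(range(steps + 1), repeat=4):
        if sum(combo) == steps:
            yield tuple(c / steps for c in combo)


def objective(f1, latency_ms, alpha=0.7, latency_budget_ms=100.0):
    """Reward accuracy and penalize latency; alpha sets the trade-off (assumed form)."""
    return alpha * f1 - (1.0 - alpha) * (latency_ms / latency_budget_ms)


def grid_search(evaluate, steps=4, alpha=0.7, latency_budget_ms=100.0):
    """Return the weight tuple with the highest objective value."""
    return max(
        weight_grid(steps),
        key=lambda w: objective(*evaluate(w), alpha=alpha,
                                latency_budget_ms=latency_budget_ms),
    )
```

With `steps=4` the grid contains 35 weight combinations, so an exhaustive search stays cheap even when each evaluation requires a pass over the validation data.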

Through this two-stage strategy—a rapid preliminary assessment followed by a selected-depth reasoning—the VA-COT framework can effectively adapt its behavior to the task’s difficulty, resolving the potential for circular logic and achieving a balance between diagnostic accuracy and computational efficiency.

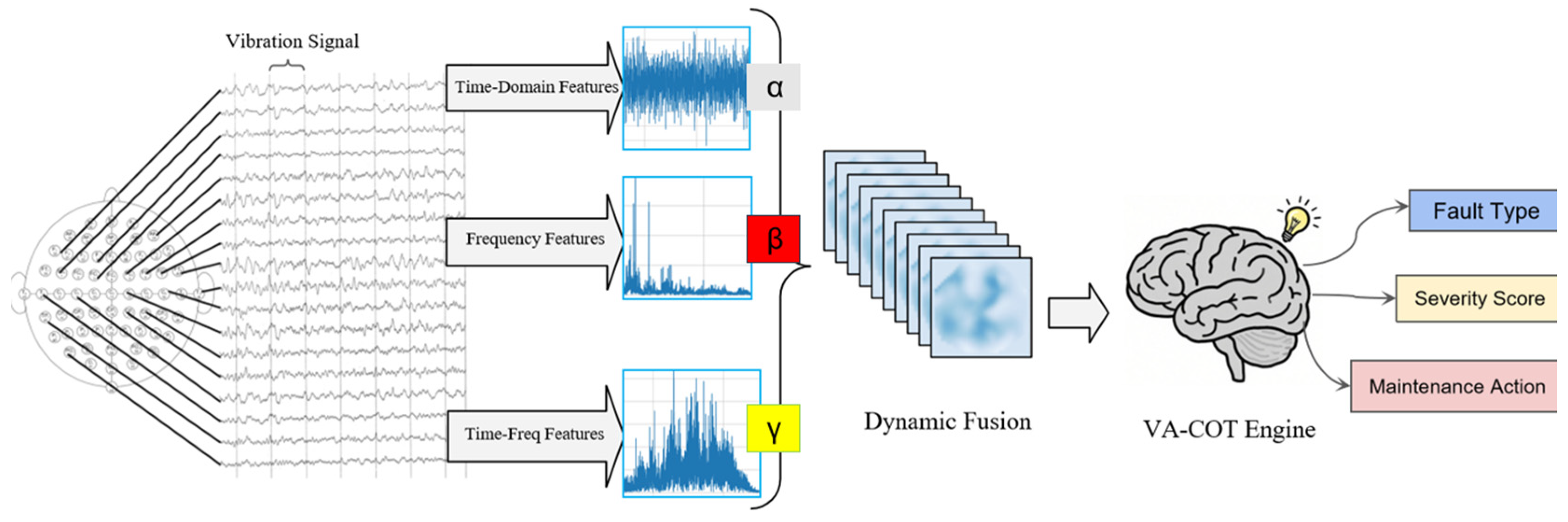

3.4.2. The VA-COT Hybrid Reasoning Engine: Concepts and Mechanisms

To address the challenge of applying Chain-of-Thought (CoT) reasoning to numerical vibration data, the VA-COT engine is designed not as a natural language generator, but as a knowledge-guided hybrid reasoning engine. It operationalizes the diagnostic process of a human expert by creating a sequence of structured, verifiable steps [41]. The “chain of thought” is an internal, structured data representation, not a textual output from the core engine [42]. This hybrid approach synergizes a rule-based knowledge base for interpretability with a lightweight neural network for data-driven accuracy [43].

- A. The Structured Thought Node

The fundamental unit of the reasoning chain is the “Thought Node,” a structured object that captures the state of a single diagnostic step. The entire chain is a chronologically ordered list of these nodes. Each node is defined as follows:

```
ThoughtNode = {
    step_id: Integer,            // The sequence number of the step (e.g., 1, 2, 3).
    current_hypothesis: String,  // The primary hypothesis being tested in this step (e.g., "Investigating potential bearing fault").
    supporting_evidence: Dict,   // Quantitative evidence from the feature vector supporting the hypothesis (e.g., {"Kurtosis": 4.5, "BPFO_Amplitude": 0.8}).
    confidence_score: Float,     // The calculated confidence (0.0 to 1.0) in the current hypothesis.
    status: Enum                 // The outcome of the investigation for this node: ["Confirmed", "Rejected", "Requires_Further_Analysis"].
}
```

This structure transforms the abstract CoT concept into a concrete computational entity, allowing the diagnostic path to be explicitly tracked and audited.

- B. The Iterative Reasoning Process

The VA-COT engine constructs the chain of Thought Nodes through a Hypothesis–Verification–Iteration loop, which begins after the complexity score $S_{complexity}$ has determined the required reasoning depth (Shallow, Medium, or Deep).

Initiation (Step 0: Anomaly Detection): The process starts with the fused multi-domain feature vector. The engine first checks macro-level health indicators (e.g., overall RMS, Peak value). If these indicators breach the baseline established by the intelligent alarm mechanism (Section 3.3.1), the first node, `ThoughtNode_0`, is generated with the hypothesis “Anomaly Detected.”

Hypothesis Generation and Selection (The Hybrid Core): For each subsequent step, the engine generates and selects a new hypothesis using its hybrid core. This process is detailed further in the following section.

Evidence Focusing and Confidence Update: Once a hypothesis is selected, the engine focuses its analysis on the most relevant features from the input vector. This targeted evidence is used to calculate a new confidence_score for the hypothesis.

Iteration and Termination: The engine evaluates the confidence_score. If it exceeds the predefined threshold (e.g., 95%), the hypothesis is marked as “Confirmed,” and the reasoning chain may terminate early to prevent unnecessary computation. If the confidence is low, the loop continues to the next step until the maximum depth is reached or a conclusion is found.
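The loop described above can be sketched in Python as follows. This is a simplified illustration, not the engine's actual code: it assumes the anomaly check of Step 0 has already passed, it stops at the first confirmed hypothesis, and the `reject_below` cutoff for marking a node “Rejected” is a hypothetical value. The `propose` and `score` callables stand in for the hybrid core and the evidence-focusing step, respectively.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Optional, Tuple


class Status(Enum):
    CONFIRMED = "Confirmed"
    REJECTED = "Rejected"
    REQUIRES_FURTHER_ANALYSIS = "Requires_Further_Analysis"


@dataclass
class ThoughtNode:
    step_id: int
    current_hypothesis: str
    supporting_evidence: Dict[str, float]
    confidence_score: float
    status: Status


Features = Dict[str, float]
Propose = Callable[[List[ThoughtNode], Features], Optional[str]]
Score = Callable[[str, Features], Tuple[Dict[str, float], float]]


def run_chain(features: Features, propose: Propose, score: Score, max_depth: int,
              confirm_at: float = 0.95, reject_below: float = 0.2) -> List[ThoughtNode]:
    """Build the chain step by step, stopping early once a hypothesis is confirmed."""
    # Step 0 is assumed to have already flagged an anomaly upstream.
    chain = [ThoughtNode(0, "Anomaly Detected", {}, 1.0, Status.CONFIRMED)]
    for step_id in range(1, max_depth + 1):
        hypothesis = propose(chain, features)
        if hypothesis is None:  # no candidate left to test
            break
        evidence, confidence = score(hypothesis, features)
        if confidence >= confirm_at:
            status = Status.CONFIRMED
        elif confidence < reject_below:
            status = Status.REJECTED
        else:
            status = Status.REQUIRES_FURTHER_ANALYSIS
        chain.append(ThoughtNode(step_id, hypothesis, evidence, confidence, status))
        if status is Status.CONFIRMED:  # early stopping
            break
    return chain
```

Here `max_depth` would be set from the reasoning depth chosen by the complexity score, so a shallow case can never run longer than its step budget.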

To ensure the auditability and reproducibility of the reasoning process, the components of the hybrid engine are precisely defined. Our knowledge base consists of 92 rules derived from domain expertise and maintenance manuals, covering the primary failure modes of the target equipment. In cases where multiple rules are triggered simultaneously by the evidence from a ThoughtNode, all resulting candidate hypotheses are passed to the Hypothesis Scorer. This neural network thus acts as the conflict resolution mechanism, using data-driven evidence to arbitrate between competing, rule-based suggestions. The Hypothesis Scorer itself is a lightweight Multi-Layer Perceptron (MLP) with two hidden layers (64 and 32 neurons, respectively) using ReLU activation functions, optimized for high-speed inference on edge devices. Further details on the reasoning engine’s specifications are provided in Appendix C.
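To make the scorer's shape concrete, the following NumPy sketch builds an MLP with hidden layers of 64 and 32 units and ReLU activations, and uses it to arbitrate among rule-suggested candidates. Only the hidden-layer sizes and activation come from the description above. The input dimension, the one-logit-per-known-hypothesis output layout, the softmax over the candidate subset, and the random (untrained) weights are assumptions for illustration.

```python
import numpy as np


class HypothesisScorer:
    """Untrained MLP (n_features -> 64 -> 32 -> n_hypotheses) with ReLU."""

    def __init__(self, n_features, hypotheses, seed=0):
        rng = np.random.default_rng(seed)
        self.hypotheses = list(hypotheses)
        sizes = [n_features, 64, 32, len(self.hypotheses)]
        # One (weight matrix, bias vector) pair per layer.
        self.layers = [(rng.normal(0.0, 0.1, (m, n)), np.zeros(n))
                       for m, n in zip(sizes[:-1], sizes[1:])]

    def logits(self, x):
        h = np.asarray(x, dtype=float)
        last = len(self.layers) - 1
        for i, (W, b) in enumerate(self.layers):
            h = h @ W + b
            if i < last:  # ReLU on hidden layers only
                h = np.maximum(h, 0.0)
        return h

    def select(self, x, candidates):
        """Return (best candidate, softmax score) among the rule-suggested ones."""
        idx = [self.hypotheses.index(c) for c in candidates]
        sub = self.logits(x)[idx]
        probs = np.exp(sub - sub.max())  # subtract max for numerical stability
        probs /= probs.sum()
        best = int(np.argmax(probs))
        return candidates[best], float(probs[best])
```

Restricting the softmax to the candidate subset means the network only ranks hypotheses that at least one rule has already proposed, which is one way to realize the conflict-resolution role described above.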

- C. Connecting the Reasoning Steps: The Decision and Transition Mechanism

The transition from one reasoning step (N) to the next (N + 1) is a structured process that ensures the diagnostic path is logical and efficient. This mechanism directly answers how the output of one step informs the input for the next.

Let us use an example: after the engine confirms an “impact in the time domain” (ThoughtNode_N), it “decides” to check for “specific frequency harmonics” in ThoughtNode_N+1 as follows:

Knowledge-Guided Interrogation Targeting: The confirmed hypothesis from ThoughtNode_N (e.g., “Impulsive Fault Signature Detected”) is used to query the expert knowledge base. The knowledge base contains explicit mappings between fault signatures and subsequent analytical procedures. A rule such as IF signature IS “Impulsive” THEN next_target IS “Analyze_Frequency_Spectrum_for_Harmonics” dictates the next logical action. This provides a transparent, rule-based justification for the transition.

Scoping the Hypothesis Space: The determined next_target (“Analyze_Frequency_Spectrum_for_Harmonics”) is then used to constrain the search space for the next set of hypotheses. The engine queries the knowledge base again with this new context (e.g., GIVEN signature IS “Impulsive” AND target IS “Analyze_Frequency_Spectrum_for_Harmonics”, retrieve plausible root_causes). This results in a refined, focused list of candidates, such as [“Outer Race Defect (BPFO)”, “Inner Race Defect (BPFI)”, “Rolling Element Defect (BSF)”], preventing the consideration of irrelevant faults.

Data-Driven Selection: Finally, the lightweight neural “Hypothesis Scorer” takes this refined list of candidates and the full feature vector as input. Guided by the interrogation target, it prioritizes the frequency-domain features and assigns a relevance score to each candidate. The hypothesis with the highest score (e.g., “Outer Race Bearing Defect,” if a strong BPFO signal is present) is selected as the current_hypothesis for ThoughtNode_N+1.

This structured transition ensures the entire Chain-of-Thought proceeds in a manner that is both mechanistically sound and interpretable to a human expert.
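The three transition stages can be sketched as two lookups followed by a scoring call. The table contents below reuse the example rule and candidate list from the text, but the dictionary layout, the function name `next_hypothesis`, and the `scorer(candidate, features)` signature are hypothetical. Any scoring function can be passed in, so the sketch does not depend on how the neural scorer is implemented.

```python
# Rule table: confirmed signature -> next analytical target (example rule from the text).
NEXT_TARGET = {
    "Impulsive": "Analyze_Frequency_Spectrum_for_Harmonics",
}

# Scoping table: (signature, target) -> plausible root causes.
CANDIDATES = {
    ("Impulsive", "Analyze_Frequency_Spectrum_for_Harmonics"): [
        "Outer Race Defect (BPFO)",
        "Inner Race Defect (BPFI)",
        "Rolling Element Defect (BSF)",
    ],
}


def next_hypothesis(signature, features, scorer):
    """Pick the hypothesis for step N+1, or None if no rule applies."""
    target = NEXT_TARGET.get(signature)                    # 1. interrogation target
    if target is None:
        return None
    candidates = CANDIDATES.get((signature, target), [])   # 2. scoped hypothesis space
    if not candidates:
        return None
    scores = {c: scorer(c, features) for c in candidates}  # 3. data-driven selection
    return max(scores, key=scores.get)


def amplitude_scorer(candidate, features):
    """Toy stand-in for the neural scorer: reads e.g. 'BPFO_Amplitude'."""
    tag = candidate[candidate.index("(") + 1:-1]
    return features.get(tag + "_Amplitude", 0.0)
```

For a feature vector with a dominant `BPFO_Amplitude`, the toy scorer selects the outer race candidate, mirroring the example in the text.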

- D. Final Report Generation

The output of the core VA-COT engine is the final, structured list of Thought Nodes. This list is then passed to a separate, post-processing “Report Generation Module.” This module uses a template-based system to translate the structured findings into the human-readable diagnostic text, such as that presented in the industrial case study. For example, a confirmed ThoughtNode with hypothesis = “rubbing characteristics” and evidence pointing to the “free-end bearing” is mapped to the corresponding sentence in a predefined report template. This decouples the core numerical reasoning from complex natural language generation, ensuring efficiency and reliability.
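A template-based renderer of this kind can be very small, as the sketch below suggests. The template wording, the `location` key, and the fallback sentence are hypothetical; nodes are represented as plain dictionaries with the Thought Node field names so that the example stays self-contained.

```python
# Hypothetical template table: hypothesis -> report sentence.
TEMPLATES = {
    "rubbing characteristics":
        "Rubbing characteristics were identified at the {location} "
        "(confidence {confidence:.0%}).",
}
FALLBACK = "Finding: {hypothesis} (confidence {confidence:.0%})."


def render_report(nodes):
    """Turn confirmed nodes into report sentences, one per line."""
    lines = []
    for node in nodes:
        if node["status"] != "Confirmed":
            continue  # only confirmed findings reach the report
        template = TEMPLATES.get(node["current_hypothesis"], FALLBACK)
        lines.append(template.format(
            hypothesis=node["current_hypothesis"],
            location=node.get("location", "unspecified location"),
            confidence=node["confidence_score"],
        ))
    return "\n".join(lines)
```

Because the renderer only reads finished nodes, the report wording can be changed without touching the reasoning engine.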