1. Introduction

During the part manufacturing process, unstable operational procedures lead to a decline in surface quality, resulting in surface defects such as material shortages, scratches, and dents [1]. These defects not only affect the appearance and market value of the parts but may also cause safety incidents during subsequent use. Therefore, part surface defect detection has become a crucial aspect of the product manufacturing process [2]. Currently, part surface defect detection methods mainly include manual inspection and sensor-based detection. Manual inspection is a traditional method where operators visually observe the surface condition of the part to assess the appearance quality of the product [3]. However, this method is easily influenced by subjective factors, has low efficiency, and suffers from poor detection accuracy [4]. To address this issue, researchers have developed intelligent detection methods for identifying surface defects in parts, including techniques such as sensor-based detection and visual inspection. Sensor-based detection involves using smart sensors to collect data on the surface condition of parts, followed by computer analysis to determine the relationship between the acquired signals and surface defects, thereby assessing surface quality [5,6]. Although different types of signals can provide rich characterization information and offer a more comprehensive reflection of defect conditions, signal acquisition requires complex sensors, which not only increases detection costs but also results in lower efficiency. Moreover, the signals collected by sensors represent only an indirect characterization of defects, making it difficult to establish a precise relationship between the signals and specific defect types. Therefore, identifying an intelligent, intuitive, and universally applicable method for surface defect detection has become a key focus for researchers.

In recent years, with the development of computer technology, machine vision detection based on image processing has shown significant advantages in industrial part surface defect detection. This method combines the direct visual observation of manual inspection with the computer-based analysis of sensor detection. Compared to manual inspection, it is more intelligent and accurate; compared to sensor detection, it is more intuitive and has higher detection efficiency, making it widely used in the detection of industrial product surface defects [7,8]. The image processing-based surface defect detection process for parts mainly includes the following steps: image acquisition [9], image preprocessing [10,11], and image recognition [12,13]. However, due to the hardware limitations of image acquisition devices and the complexity of the detection environment, the acquired images often have low resolution, resulting in significant differences between the acquired images and the actual part surfaces. This, in turn, affects the accuracy of defect detection and may even cause false detections [14]. Therefore, it is necessary to optimize the image quality before performing part surface defect detection.

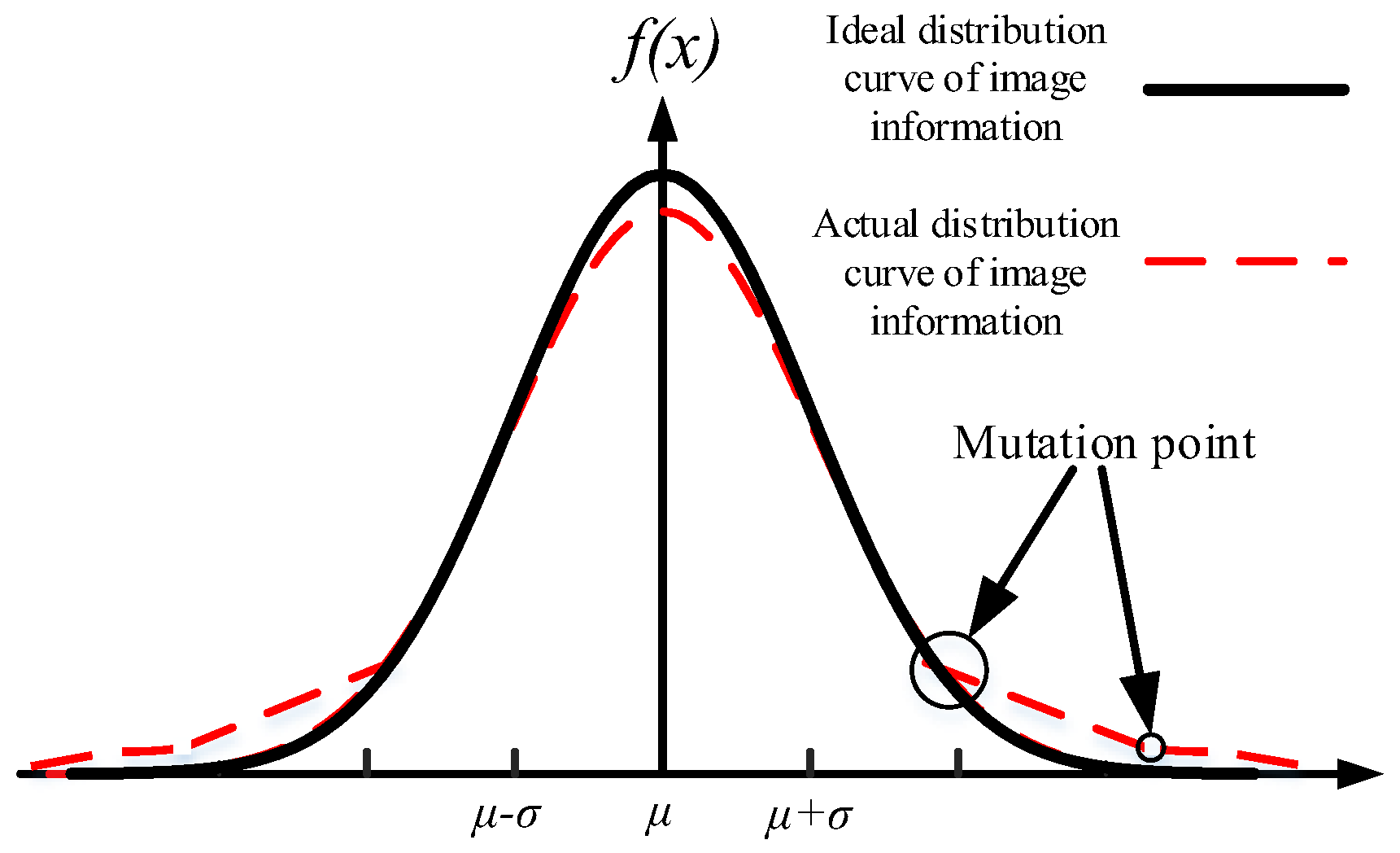

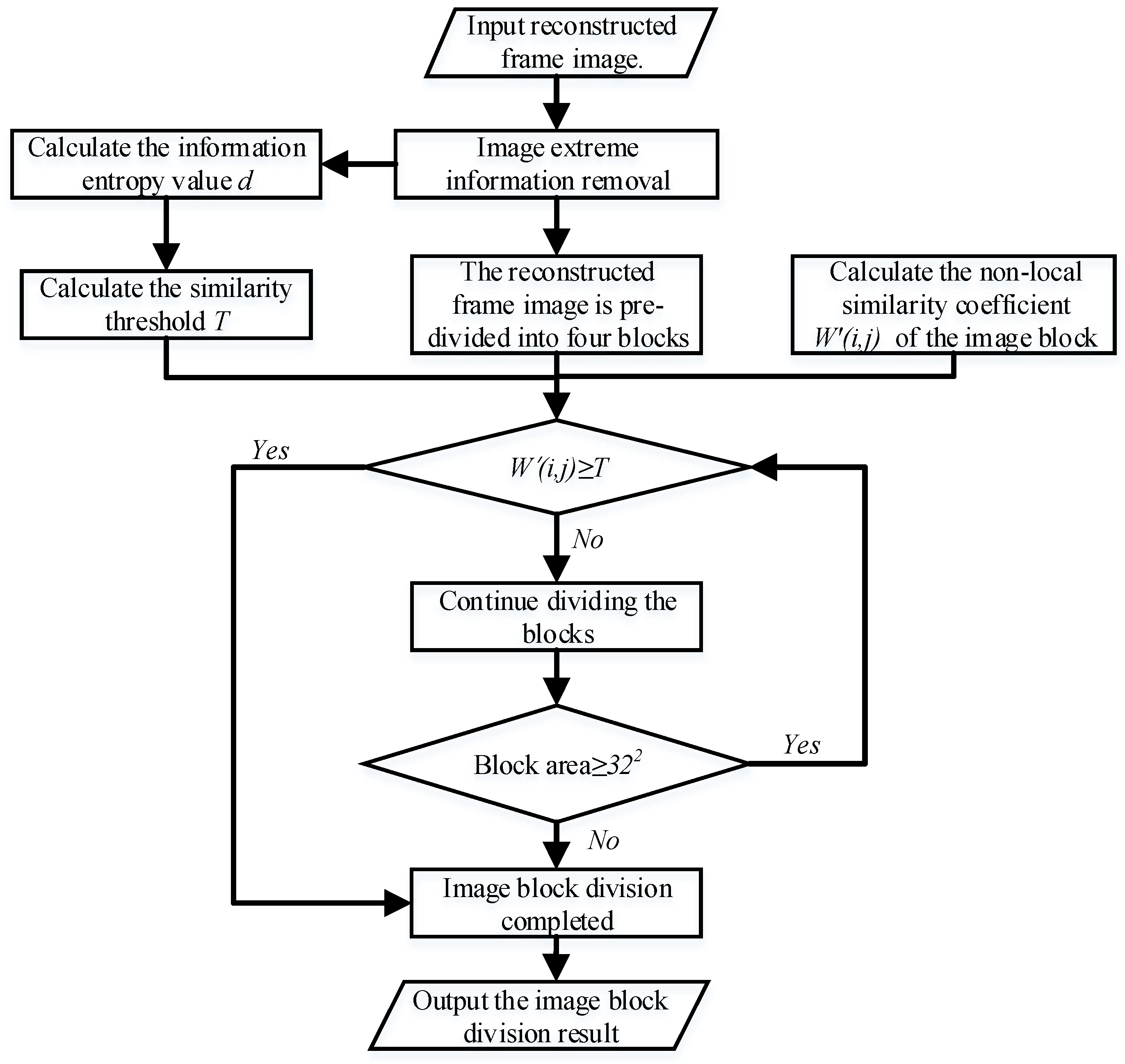

To address the above problems, this paper proposes a part image super-resolution reconstruction method based on adaptive multi-scale object tracking. The method first selects the middle frame from the input sequence of part images as the reconstruction frame. By calculating the similarity, statistical properties, and local features of the image information, the method adaptively divides the reconstruction frame image into blocks based on a similarity threshold and the non-local similarity coefficients of image blocks. Then, the Lucas–Kanade (LK) optical flow method is used to estimate the motion parameters of the sequence images, obtaining displacement information between the images. Furthermore, an object tracking algorithm is introduced to construct a multi-frame image feature tracking sampling model. The sub-blocks of the reconstruction frame are sampled, and by combining the motion displacement information estimated by the optical flow method, the other sequence images are registered with the reconstruction frame image, tracking and sampling similar features from all images. Finally, the K-means algorithm is used to cluster the sampled features. A quadtree non-linear reconstruction algorithm is applied to overlay and reconstruct the features of the reconstruction frame image, thereby generating a high-quality target image. The flowchart of the proposed method is shown in Figure 1.
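The optical flow step in this overview can be illustrated with a minimal sketch. It assumes the simplest form of the Lucas–Kanade idea: one global translation for the whole frame, estimated in a single least-squares solve. The function name `lk_translation` and the list-of-rows image representation are hypothetical choices for illustration; this is not the improved optical flow method used in the paper, and a single-level estimate like this one is only reliable for small displacements (larger shifts would normally call for a coarse-to-fine pyramid).

```python
def lk_translation(frame_a, frame_b):
    """Estimate one global shift (u, v) in pixels so that
    frame_b(x, y) is approximately frame_a(x - u, y - v).

    Frames are equally sized grayscale images stored as lists of rows.
    u is the horizontal (column) shift, v the vertical (row) shift.
    """
    rows, cols = len(frame_a), len(frame_a[0])
    sxx = sxy = syy = sxt = syt = 0.0
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            # Central-difference spatial gradients of the reference frame.
            ix = (frame_a[r][c + 1] - frame_a[r][c - 1]) / 2.0
            iy = (frame_a[r + 1][c] - frame_a[r - 1][c]) / 2.0
            # Temporal difference between the two frames.
            it = frame_b[r][c] - frame_a[r][c]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("gradient matrix is singular; the frame lacks texture")
    # Solve the 2x2 normal equations [sxx sxy; sxy syy][u v]^T = [-sxt -syt]^T.
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return u, v
```

Calling `lk_translation(reference_frame, other_frame)` returns the estimated shift that would then be used to register `other_frame` to the reference before features are sampled.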

The main contributions of this study are as follows:

- (1) An adaptive image block strategy is proposed, which dynamically adjusts the block scale based on prior information analysis of image blocks. This addresses the limitations of traditional fixed block methods, improving the ability to capture features in detailed areas while optimizing the allocation of computational resources in smooth areas, thereby enhancing image reconstruction accuracy while ensuring efficient computation;

- (2) An improved optical flow method combined with a kernel correlation filter is adopted to achieve feature tracking sampling between sequential images while constructing an efficient feature tracking model that enhances feature similarity matching accuracy. This resolves the issue of image information distortion caused by pixel-level displacement between sequential part images;

- (3) A super-resolution reconstruction algorithm based on multi-frame feature aggregation is proposed. By introducing multi-scale feature fusion technology, it optimizes image edge clarity and texture details while reducing algorithmic complexity, providing a low-cost and efficient image optimization solution for industrial inspection.
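To make the adaptive blocking idea in contribution (1) concrete, the following is a minimal sketch of a quadtree-style split. It assumes a square image whose side is a power of two and uses plain gray-level variance as the splitting criterion; the paper's own criterion combines a similarity threshold with non-local similarity coefficients, so `block_variance`, `adaptive_blocks`, `var_threshold`, and `min_size` are hypothetical stand-ins for illustration only.

```python
def block_variance(image, top, left, size):
    """Gray-level variance of the size x size block whose corner is (top, left)."""
    values = [image[r][c]
              for r in range(top, top + size)
              for c in range(left, left + size)]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def adaptive_blocks(image, top=0, left=0, size=None,
                    var_threshold=100.0, min_size=2):
    """Recursively split detailed blocks into four quadrants.

    Smooth blocks (variance at or below var_threshold) stay large,
    detailed blocks are subdivided until min_size is reached.
    Returns a list of (top, left, size) tuples that tile the image.
    """
    if size is None:
        size = len(image)
    if size <= min_size or block_variance(image, top, left, size) <= var_threshold:
        return [(top, left, size)]
    half = size // 2
    blocks = []
    for dt in (0, half):
        for dl in (0, half):
            blocks.extend(adaptive_blocks(image, top + dt, left + dl, half,
                                          var_threshold, min_size))
    return blocks
```

With this scheme, smooth regions cost one large block each, while textured regions receive many small blocks, which mirrors the trade-off between detail capture and computation described in the list above.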

The remainder of this manuscript is organized as follows: In Section 2, we present the Literature Review, discussing the most relevant works and methods in the field of part surface defect detection and image super-resolution reconstruction. Section 3 introduces the proposed method, including the details of the adaptive multi-scale object tracking approach for part image super-resolution reconstruction. Section 4 presents the experimental setup and results, including a comparison with existing methods and a detailed analysis of the performance. In Section 5, we discuss the findings, including the limitations of the proposed method and potential areas for future improvement. Finally, Section 6 concludes the study and highlights the contributions made by this research.

2. Literature Review

Part image quality optimization can be achieved through enhancing the hardware performance of image acquisition devices or using software algorithm processing. In terms of improving image quality through hardware performance, researchers [15,16] have proposed using high-resolution camera equipment to capture clear images to ensure the accuracy and reliability of the detection process, thereby optimizing the performance of the detection system. Additionally, Lins et al. [17,18] used the structural similarity index algorithm to calculate image similarity and select images that are similar to high-quality reference images, ensuring image quality during the detection process and achieving higher tool wear detection accuracy. Although improving hardware performance can directly enhance the quality of acquired images, in application scenarios with unstable lighting or complex production environments, the improvement of image quality through hardware performance alone is limited. Furthermore, high-resolution industrial cameras are expensive. For mechanical manufacturing scenarios that require large-scale deployment of visual inspection equipment, the economic cost of improving hardware performance is relatively high [19]. Therefore, to save costs and increase the flexibility of image optimization, using software algorithms for processing has become a more effective approach. Super-resolution reconstruction algorithms can effectively enhance image quality, obtain clear edge information, and improve the accuracy of subsequent image analysis.

Existing image super-resolution reconstruction methods are mainly divided into interpolation-based, reconstruction-based, and learning-based types. Interpolation-based super-resolution methods include bilinear interpolation, nearest-neighbor interpolation, and bicubic interpolation, among others. These methods use known pixels from low-resolution images to compute and infer unknown pixels, achieving high-resolution image reconstruction [20]. However, these methods struggle to recover detailed information in images, often leading to blurred edges and jagged artifacts. To address the limitations of interpolation methods, Zhang et al. [21] proposed a single-frame super-resolution method based on rational fractal interpolation, combining rational interpolation and fractal properties. By calculating the local fractal dimension and adaptively adjusting the scaling factor, they improved the retention of edge and texture details. Building on this, Zhang et al. [22] further improved the method by proposing a super-resolution method based on progressive iterative approximation. By using a non-subsampled contourlet transform, the image was divided into different regions, and different interpolation methods were applied to each region, effectively enhancing edge clarity. However, both of these methods still have shortcomings in adaptive processing. To address this, Song et al. [23] proposed an adaptive interpolation enlargement method based on local fractal analysis. This method divides the image regions using fractal dimension calculation and selects the appropriate interpolation form for different regions. At the same time, it combines parameter optimization and sub-block adaptive selection strategies to further improve the quality of the enlarged image, making the interpolation process more adaptive and flexible, especially excelling in detail retention. In addition, to address the issue of poor reconstruction performance of interpolation methods on complex textured images, Xue et al. [24] proposed a structured sparse low-rank representation model, utilizing the structured sparse characteristics of image multi-channel data to maintain consistency in both spatial and frequency domains, thereby improving the fidelity of image details.

However, interpolation-based super-resolution reconstruction methods can only process single-frame images based on prior information. Although they can in theory enlarge a single low-resolution image, they often yield suboptimal reconstruction accuracy in engineering applications, because a single frame contains limited image information and no new information is available to supplement it [25]. In contrast, multi-frame super-resolution methods, by utilizing complementary information and prior knowledge from sequential images, can more effectively retain the image’s detailed features, becoming a key focus of image quality optimization research [26].

Among the reconstruction-based super-resolution methods, common approaches include projection onto convex sets (POCS) [27], iterative back-projection (IBP) [28], and maximum a posteriori (MAP) estimation [29]. The POCS method projects the image onto a convex set that satisfies specific constraints, gradually obtaining higher-resolution images; the IBP method simulates the low-resolution images from the current high-resolution estimate and compares them with the observed images, gradually correcting the reconstructed image; the MAP estimation method combines prior information and observed data to obtain the optimal reconstructed image by maximizing the posterior probability. Although these methods improve the reconstruction effect to some extent, they suffer from performance degradation when the number of images is insufficient or the noise is high. Additionally, they have high computational complexity, which makes them unsuitable for real-time applications. To address these challenges, Zeng et al. [30] proposed a multi-frame super-resolution algorithm based on semi-quadratic estimation and improved bilateral total variation regularization. This method effectively reduces the computational complexity of the POCS method through robust estimation of adaptive norms, showing excellent performance in edge preservation. Lu et al. [31] introduced non-local similarity regularization and multi-edge filtering within the MAP framework, significantly improving tolerance to motion estimation errors and overcoming the artifact problem that occurs with iterative back-projection when motion estimation is inaccurate. Li et al. [32] further proposed an adaptive frame selection and multi-frame fusion method, which filters high-quality frames and fuses multiple frames. This method not only optimizes the MAP estimation in long-distance and distorted images but also reduces the impact of atmospheric distortion and noise on reconstruction quality. It enhances the robustness and applicability of the algorithm, making super-resolution reconstruction more efficient and accurate in different scenarios.

Most existing multi-frame image super-resolution reconstruction methods are typically applicable to sequential images with precise sub-pixel-level micro-displacements [33,34]. However, in part manufacturing environments, due to factors such as slight vibrations of the image acquisition devices, the displacement between the actual acquired sequential part images often exceeds one pixel and can even reach several dozen pixels, which contradicts the small displacement (sub-pixel-level) conditions assumed by most multi-frame super-resolution reconstruction methods [35]. Therefore, insufficient displacement accuracy between sequential images can lead to significant errors between the reconstructed images and the actual data, limiting the practical application of sub-pixel-level displacement methods in part image quality optimization and subsequently affecting the accuracy of surface quality detection of parts. To address these issues, many researchers have focused on improving the image acquisition process, using techniques such as active displacement imaging or high-precision image measurement to obtain sub-pixel-level displacement sequential images. For example, Chen et al. [36] used high-precision motion estimation technology to generate a series of video frames with precise micro-displacement; Cui et al. [37] generated panoramic images with sub-pixel-level displacements through rotational and scale-invariant adjustments; Gui et al. [38] used the large-angle deflection characteristics of a Risley prism to acquire sequential images; Liang et al. [39] achieved precise displacement between sequential images by controlling the variable aperture size. These studies effectively solved the displacement accuracy limitation in practical applications through rotation, motion estimation, optical deflection, and aperture adjustments, significantly improving the accuracy and applicability of super-resolution reconstruction. However, these methods typically face high equipment costs and operational complexity, which limits their feasibility for part surface detection applications.
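The iterative back-projection loop summarized earlier in this section (simulate the low-resolution observation, compare it with the measured one, and feed the error back into the estimate) can be sketched as follows. This is a deliberately simplified, single-frame illustration under stated assumptions: the imaging model is plain block averaging, back-projection is pixel replication, and the names `downsample`, `upsample`, `iterative_back_projection`, and the `step` parameter are hypothetical. A practical multi-frame variant would also register each frame and model blur, which is omitted here.

```python
def downsample(image, scale):
    """Simulate a low-resolution observation by averaging scale x scale blocks."""
    rows, cols = len(image) // scale, len(image[0]) // scale
    return [[sum(image[r * scale + dr][c * scale + dc]
                 for dr in range(scale) for dc in range(scale)) / scale ** 2
             for c in range(cols)]
            for r in range(rows)]


def upsample(image, scale):
    """Back-project by replicating each pixel into a scale x scale block."""
    return [[image[r // scale][c // scale]
             for c in range(len(image[0]) * scale)]
            for r in range(len(image) * scale)]


def iterative_back_projection(low_res, scale=2, iterations=20, step=0.5):
    """Refine a high-resolution estimate until its simulated
    low-resolution version matches the observed low_res image."""
    rows, cols = len(low_res) * scale, len(low_res[0]) * scale
    estimate = [[0.0] * cols for _ in range(rows)]
    for _ in range(iterations):
        simulated = downsample(estimate, scale)
        # Residual between the observation and the simulated observation.
        error = [[low_res[r][c] - simulated[r][c]
                  for c in range(len(low_res[0]))]
                 for r in range(len(low_res))]
        correction = upsample(error, scale)
        estimate = [[estimate[r][c] + step * correction[r][c]
                     for c in range(cols)]
                    for r in range(rows)]
    return estimate
```

With `step=0.5` the residual halves on every pass, so the simulated observation converges geometrically to the measured one. The sketch also shows why the approach is sensitive to the quality of the imaging and motion model: any mismatch there is back-projected directly into the estimate.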

In recent years, learning-based image super-resolution reconstruction algorithms have become the main focus of research in the super-resolution domain, such as Generative Adversarial Networks (GANs) [40], Multi-class GANs [41], and Multi-scale Convolutional Networks [42]. These methods effectively exploit the intrinsic structural relationships between images to complete the reconstruction process, learning richer high-frequency prior information, which helps solve the problems of insufficient reconstruction accuracy in single-frame image super-resolution methods and information distortion in multi-frame image super-resolution methods. However, these learning-based methods typically require large datasets for training and have enormous computational demands, making it challenging to meet the efficiency requirements for real-time processing [43]. To address this, Fang et al. [44] and Lu et al. [45] proposed different lightweight super-resolution networks that reduce the model’s computational load while improving image reconstruction quality. However, these methods face challenges such as a single-task focus and an imbalance between lightweight design and multi-task capabilities. To overcome these challenges, related researchers have improved these lightweight super-resolution networks using Transformer structures, addressing the shortcomings in task specificity, adaptability, and flexibility, enabling stable model operation in multi-task environments while significantly enhancing image reconstruction performance [46,47,48]. Additionally, Liu et al. [49] proposed a multi-scale residual aggregation network that combines features from different scales to enhance the edge information and detection accuracy of single-frame images while reducing network complexity. Li et al. [50] used dual-dictionary learning techniques to improve single-frame image reconstruction accuracy and reduce computational resource demands, thereby increasing the accuracy of tool wear monitoring. He et al. [51] designed a progressive video super-resolution network that alleviates multi-frame information distortion issues by hierarchical feature extraction, achieving high-precision displacement detection for rotating equipment. Although these methods mitigate the accuracy and information distortion issues of single-frame and multi-frame reconstruction and reduce the computational load to some extent, learning-based super-resolution reconstruction methods still do not meet the application requirements for high-efficiency processing of part images.

In summary, how to enhance edge clarity while maintaining the detail features of the reconstructed image and reduce the algorithm’s structural complexity remains a challenging issue in the research on part image quality optimization.

4. Experiments and Analysis of the Proposed Method

Remote-sensing images have characteristics such as high resolution, remote detection capability, and wide coverage, which are closely related to industrial application scenarios. These features make remote-sensing images an appropriate experimental subject to verify the effectiveness of the proposed method. Specifically, remote-sensing images share similarities with industrial part images, particularly in terms of lighting, texture, and geometric properties. Remote-sensing images, acquired at various times, can simulate the lighting conditions and regional monitoring of industrial production, as well as the detection of defects on industrial parts. Therefore, using remote-sensing images not only effectively demonstrates the validity of the proposed method but also provides theoretical support for its application in industrial part defect detection.

This paper first conducts super-resolution reconstruction experiments on remote-sensing images with a spatial resolution of 5.5 m. The comparison between this method and classical methods is analyzed in terms of image reconstruction performance. Secondly, to further explore the quantitative evaluation of image quality, this paper combines multiple objective evaluation metrics, including Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), Information Entropy (IE), and Mean Gradient (MG), to analyze the improvement effect of the algorithm on image reconstruction quality.

(1) PSNR is a commonly used image quality evaluation metric widely applied in image processing and computer vision fields. PSNR is calculated based on the Mean Squared Error (MSE) to assess the difference between the original image and the processed or distorted image. Its calculation formula is as follows:

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{MAX_I^{2}}{\mathrm{MSE}}\right), \qquad \mathrm{MSE} = \frac{1}{mn}\sum_{i=1}^{m}\sum_{j=1}^{n}\left[I(i,j) - K(i,j)\right]^{2}$$

In the equation, $MAX_I$ represents the maximum pixel value in the image used to compute the PSNR; MSE represents the Mean Squared Error; $m \times n$ is the image size; and $I(i,j)$ and $K(i,j)$ represent the original image and the denoised image, respectively.
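As a hedged illustration of the PSNR definition, the sketch below computes the MSE between two equally sized grayscale images and converts it to decibels using the standard form 10·log10(MAX²/MSE). The function names and the default peak value of 255 (8-bit images) are assumptions made for this example, not details taken from the experiments.

```python
import math


def mse(original, processed):
    """Mean squared error between two equally sized grayscale images
    stored as lists of rows."""
    rows, cols = len(original), len(original[0])
    total = 0.0
    for i in range(rows):
        for j in range(cols):
            diff = original[i][j] - processed[i][j]
            total += diff * diff
    return total / (rows * cols)


def psnr(original, processed, max_value=255.0):
    """Peak signal-to-noise ratio in dB.

    max_value is the assumed peak pixel value (255 for 8-bit images).
    """
    err = mse(original, processed)
    if err == 0:
        return math.inf  # identical images have no noise
    return 10.0 * math.log10(max_value ** 2 / err)
```

Because the MSE sits in the denominator, a smaller pixel-wise error gives a larger PSNR, which is why higher values indicate a reconstruction closer to the reference.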

(2) The Structural Similarity Index (SSIM) is primarily used to measure the similarity between two images. Unlike PSNR, SSIM not only considers the global error of the image but also takes into account three aspects: luminance, contrast, and structure. Its value ranges from 0 to 1, with values closer to 1 indicating better preservation of the image structure. The calculation formula is as follows:

$$\mathrm{SSIM}(x,y) = \frac{(2\mu_x\mu_y + c_1)(2\sigma_{xy} + c_2)}{(\mu_x^{2} + \mu_y^{2} + c_1)(\sigma_x^{2} + \sigma_y^{2} + c_2)}$$

In the equation, $\mu_x$, $\mu_y$ represent the pixel mean values of the $x$, $y$ images, respectively; $\sigma_x^{2}$, $\sigma_y^{2}$ represent the variances of the $x$, $y$ images; $\sigma_{xy}$ represents the covariance between the $x$, $y$ images; and $c_1$, $c_2$ are small stabilizing constants set through the parameter $B$. Typically, $B$ is taken to be 1.
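A minimal sketch of the SSIM computation follows, assuming the widely used single-formula definition built from means, variances, and covariance. To stay short it evaluates the statistics once over the whole image rather than over sliding local windows, which is what practical implementations usually do. The constants `k1 = 0.01` and `k2 = 0.03` and the `dynamic_range` default are the conventional values from the general SSIM literature and are assumptions here, as is the function name `ssim_global`.

```python
def ssim_global(x, y, dynamic_range=255.0, k1=0.01, k2=0.03):
    """SSIM computed from whole-image statistics of two grayscale images
    (lists of rows of equal size)."""
    xs = [v for row in x for v in row]
    ys = [v for row in y for v in row]
    n = len(xs)
    mu_x = sum(xs) / n
    mu_y = sum(ys) / n
    var_x = sum((v - mu_x) ** 2 for v in xs) / n
    var_y = sum((v - mu_y) ** 2 for v in ys) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(xs, ys)) / n
    # Small constants that keep the ratios stable when means or variances are near zero.
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Comparing an image with itself yields exactly 1, and any loss of structural agreement (lower covariance relative to the variances) pulls the value down.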

(3) Information Entropy (IE) is an image complexity evaluation metric widely used to measure the amount of detail information contained in an image and the randomness of its grayscale distribution. A higher entropy value indicates a more complex grayscale distribution and more information in the image, whereas images with lower entropy tend to be smoother or contain fewer details. The calculation formula for IE is as follows:

$$H(x) = -\sum_{i} p(x_i)\log_{2} p(x_i)$$

In the equation, $p(x_i)$ represents the probability of a certain grayscale value occurring, and $H(x)$ represents the entropy value.
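The entropy computation can be sketched in a few lines, assuming the usual Shannon form with a base-2 logarithm and gray-level probabilities estimated from the image histogram. The function name `information_entropy` is a hypothetical choice for this example.

```python
import math
from collections import Counter


def information_entropy(image):
    """Shannon entropy (in bits) of the gray-level distribution of an image
    stored as a list of rows."""
    pixels = [v for row in image for v in row]
    n = len(pixels)
    counts = Counter(pixels)  # histogram of gray levels that actually occur
    return -sum((c / n) * math.log2(c / n) for c in counts.values())
```

A constant image has zero entropy, while an image whose pixels are spread evenly over four gray levels has an entropy of 2 bits, matching the intuition that a richer gray-level distribution carries more information.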

(4) MG is a metric used to measure the degree of image detail sharpening and the ability to preserve edge features. It is mainly calculated using the mean of pixel gradients. A higher MG value indicates that more edge and detail features are preserved, and the image clarity is higher. A low MG value indicates that the image is blurred or excessively smooth. The calculation formula for MG is as follows:

$$\mathrm{MG} = \frac{1}{(L-1)(W-1)}\sum_{x=1}^{L-1}\sum_{y=1}^{W-1}\sqrt{\frac{\left[f(x+1,y) - f(x,y)\right]^{2} + \left[f(x,y+1) - f(x,y)\right]^{2}}{2}}$$

In the equation, $L$, $W$ represent the image dimensions, and $f(x,y)$ represents the grayscale value at $(x,y)$.
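The sketch below shows one common way to compute the mean gradient, assuming forward differences between neighboring pixels and normalization by the number of pixels that have both a right and a lower neighbor, (L−1)(W−1). Other normalizations appear in the literature, so the exact scaling and the name `mean_gradient` should be read as assumptions.

```python
import math


def mean_gradient(image):
    """Mean gradient of a grayscale image stored as a list of rows.

    Uses forward differences along both axes and averages the root mean
    square of the two differences over all pixels that have both neighbors.
    """
    rows, cols = len(image), len(image[0])
    total = 0.0
    for x in range(rows - 1):
        for y in range(cols - 1):
            dx = image[x + 1][y] - image[x][y]
            dy = image[x][y + 1] - image[x][y]
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((rows - 1) * (cols - 1))
```

A flat image scores 0, and sharper edges raise the score, which is why edge-enhancing reconstruction methods tend to stand out on this metric.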

In the experiment, all algorithms were implemented based on MATLAB 2019a. The computer operating system was Windows 11, with an Intel Core i7-1170 processor and 16.00 GB RAM.

4.1. Natural Image Super-Resolution Reconstruction Experiment

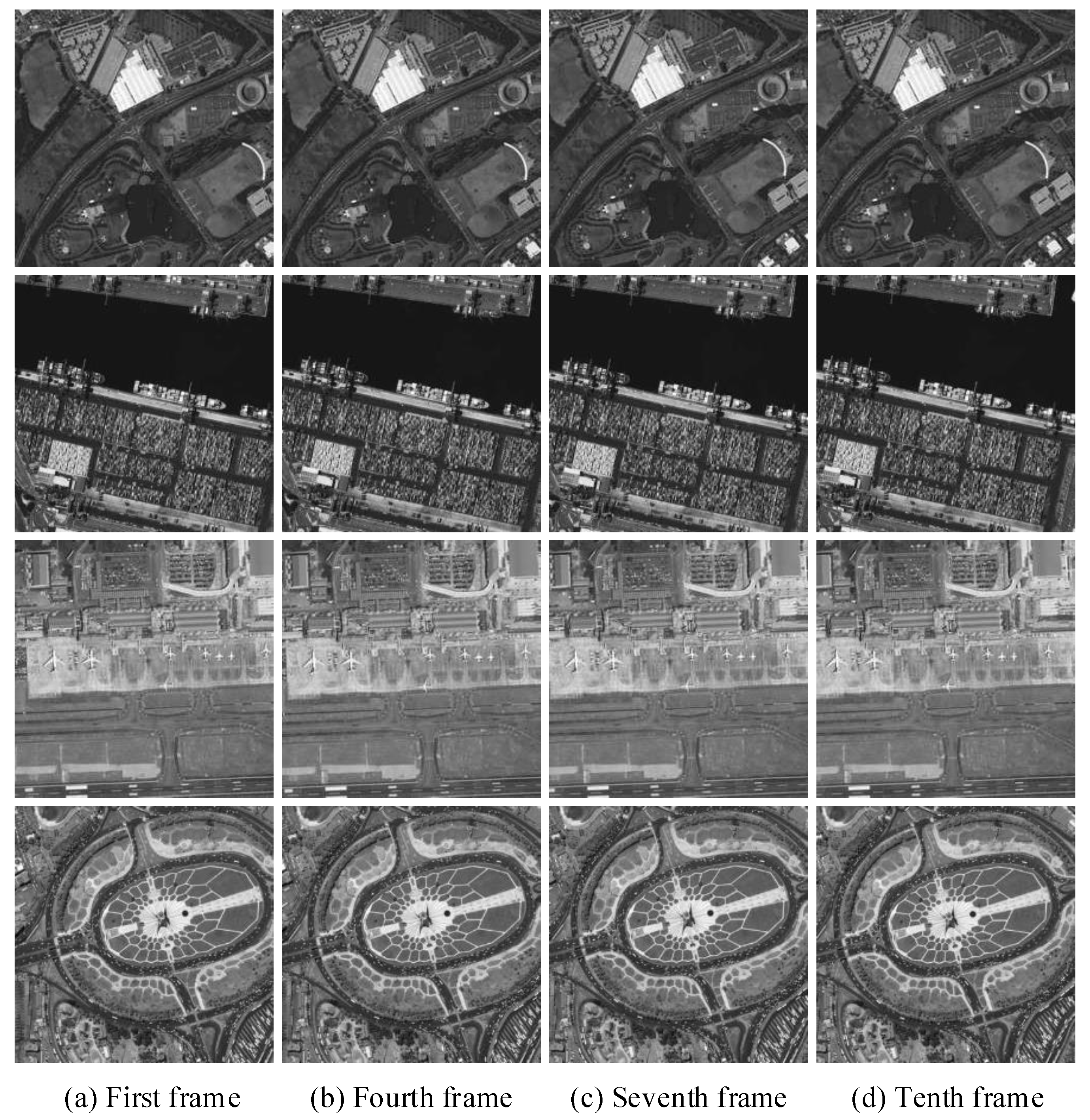

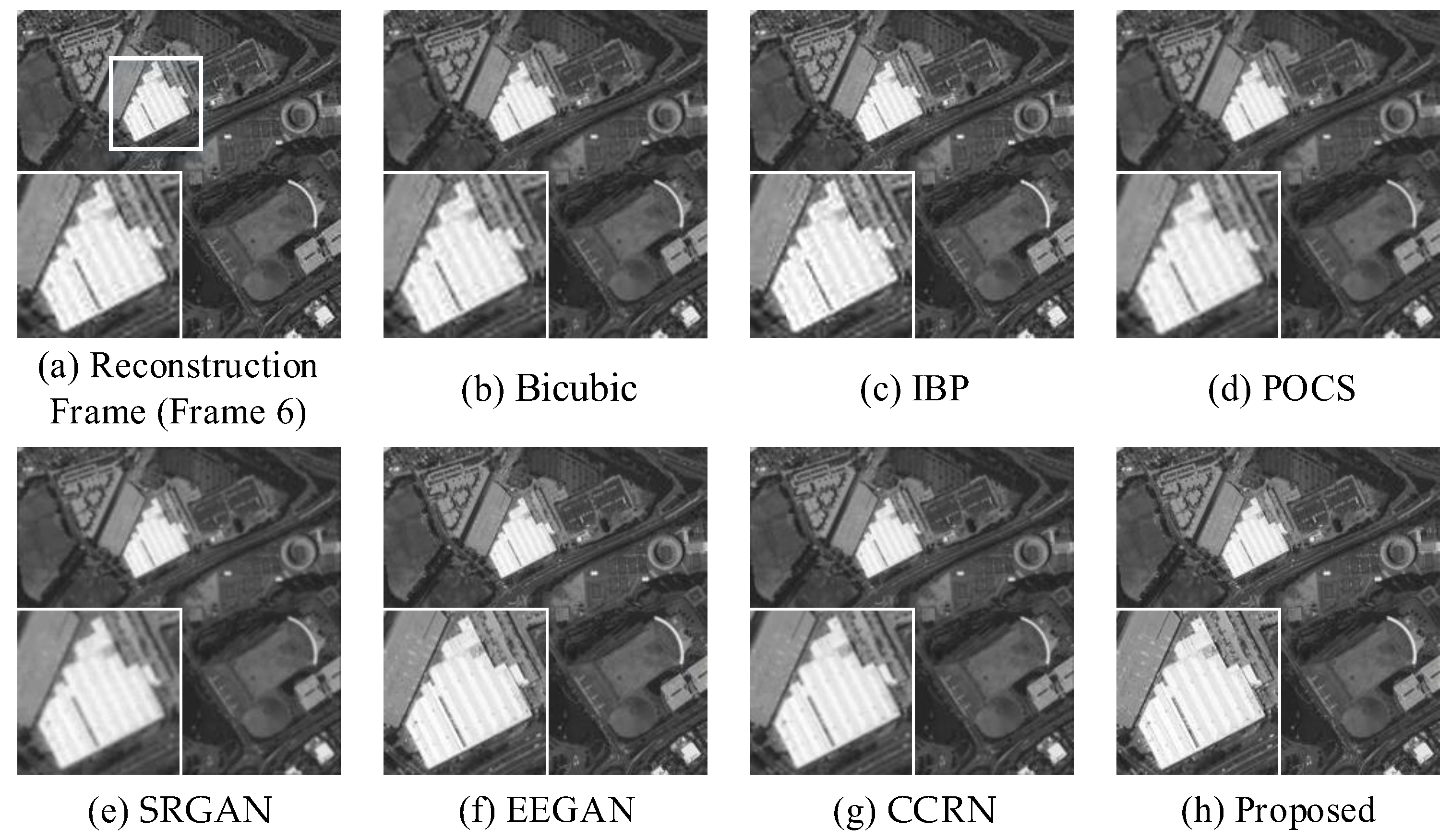

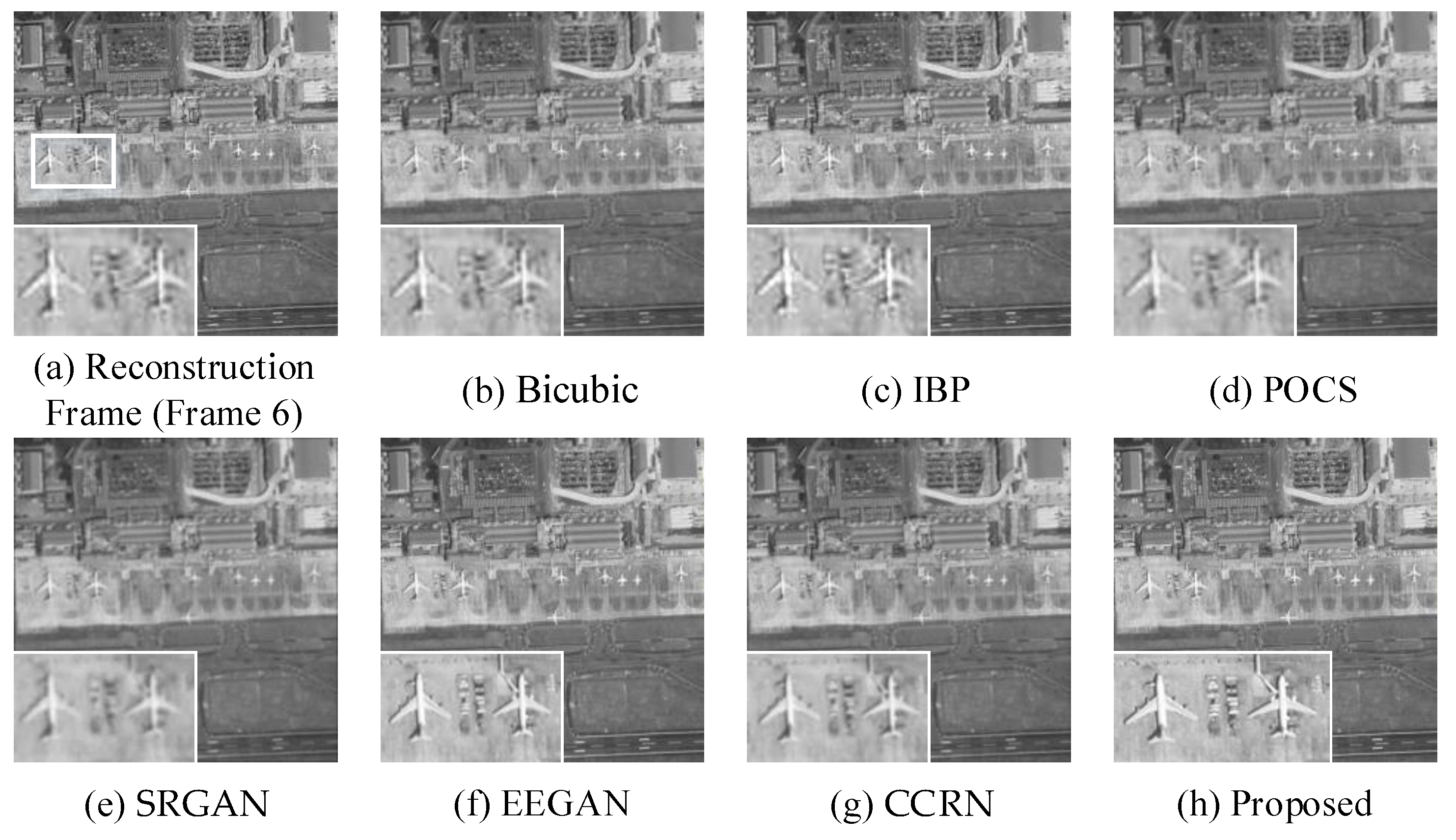

This paper takes 5.5 m resolution images obtained by a remote-sensing satellite as the experimental objects. The image content involves satellite observations of urban building distribution, port transportation, airport transportation, and urban traffic. As shown in Figure 5, ten frames of each image type are selected for four sets of reconstruction experiments. It can be observed from the figure that these sequence images have low quality, and there are significant pixel displacements between the images.

In addition, the proposed method is compared with Bicubic interpolation, Iterative Back-Projection (IBP), traditional projection onto convex sets (POCS), SRGAN [14], Edge-Enhanced GAN (EEGAN) [13], and CCRN [49] in terms of subjective visual effects and objective experimental data. Furthermore, the image information calculation matrix size used in this algorithm is adaptively set based on the statistical characteristics of different image information. During the experiment, the sixth frame, near the middle of each ten-frame sequence, is chosen as the reconstruction frame. This selection is based on specific considerations of image quality, inter-frame similarity, and motion displacement error: the sixth frame exhibited higher similarity with both the preceding and succeeding frames while minimizing motion displacement errors. The comparison algorithm parameters are set according to the recommended values in the original literature.

Table 1 and Table 2 show the objective experimental data comparison of reconstructed images using different methods, while Figure 6, Figure 7, Figure 8, and Figure 9 compare the overall and local subjective visual effects of the reconstructed images from each method. From the objective experimental data of each group, the Bicubic method produces the worst results, with the IBP and POCS methods yielding similar, suboptimal outcomes. The method proposed in this paper demonstrates overall superior performance in terms of Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), and the richness of reconstructed image detail features when compared to reference images. While EEGAN and CCRN show competitive results, the proposed method outperforms them in terms of comprehensive image quality. While SRGAN performs well in terms of Mean Gradient (MG) and Information Entropy (IE), it exhibits poor performance in terms of signal-to-noise ratio. Furthermore, since both the proposed method and EEGAN apply image edge enhancement during the super-resolution reconstruction process, they show a significant difference in terms of average MG when compared to other methods.

In terms of subjective visual effect comparison, Figure 6 and Figure 8 show the reconstructed images of satellite observations of urban building distribution and airport transportation, mainly comparing the edge reconstruction effects of different algorithms. It is evident that the images reconstructed using the Bicubic algorithm have blurry edges and severe loss of high-frequency information. Particularly in the reconstructed images of airport transportation, the overall contours of airplanes are almost unrecognizable. The IBP algorithm produces clearer edge contours but introduces significant noise and jagged artifacts. The traditional POCS algorithm reduces jaggedness and noise compared to the previous two methods, but it still lacks sufficient edge information, resulting in unclear contours and poor visual quality. Overall, the first three algorithms fail to capture enough surface details. SRGAN and CCRN can reconstruct sufficient surface details, but from the perspective of local visual effects, the edges of buildings and airplanes exhibit varying degrees of blurriness, resulting in weak edge perception and inferior visual quality. The images reconstructed by the EEGAN method and the proposed method are visually similar in both overall and local views, successfully restoring clear building and airplane edge contours, as well as rooftop textures. The airport transportation image reconstructed using the proposed method even reveals details such as the commuter vehicles beside the airplanes, providing abundant surface detail information. However, upon closer inspection, the EEGAN method introduces slight artifacts along the edges, making the proposed method the best in terms of visual effect.

Figure 7 and Figure 9 depict the reconstructed images of satellite observations of port transportation and urban traffic, focusing on texture visual effects.

As shown in Figure 7, images reconstructed using Bicubic and IBP exhibit distorted textures with jagged artifacts, making it impossible to observe the distribution of transported goods. The IBP algorithm even introduces significant noise. The POCS algorithm produces less clear texture details, and in the local view, noticeable white edges appear, leading to suboptimal reconstruction quality. While SRGAN and CCRN reconstruct images with varying degrees of blurriness, they do allow for the observation of goods on ports and ships. The EEGAN method and the proposed method, however, ensure that texture details remain intact without blurring or breakage, achieving clear and reasonable texture details with enhanced edge sharpness.

Figure 9 shows the satellite observation of urban traffic in a certain area where image quality directly affects traffic flow monitoring. The images reconstructed using Bicubic, IBP, and traditional POCS algorithms suffer from severe texture distortion, deformation, and blurriness. The SRGAN method also results in blurry textures, making it impossible to observe vehicle movements with these four methods. CCRN reconstructs images with clearer textures compared to the previous methods, revealing distinguishable vehicle contours but failing to capture specific vehicle movement details. Both the EEGAN method and the proposed method accurately display vehicle movement, but from the local view, the proposed method provides a sharper and clearer reconstruction.

Therefore, based on the above subjective and objective experimental data, the effectiveness of the proposed algorithm has been validated.

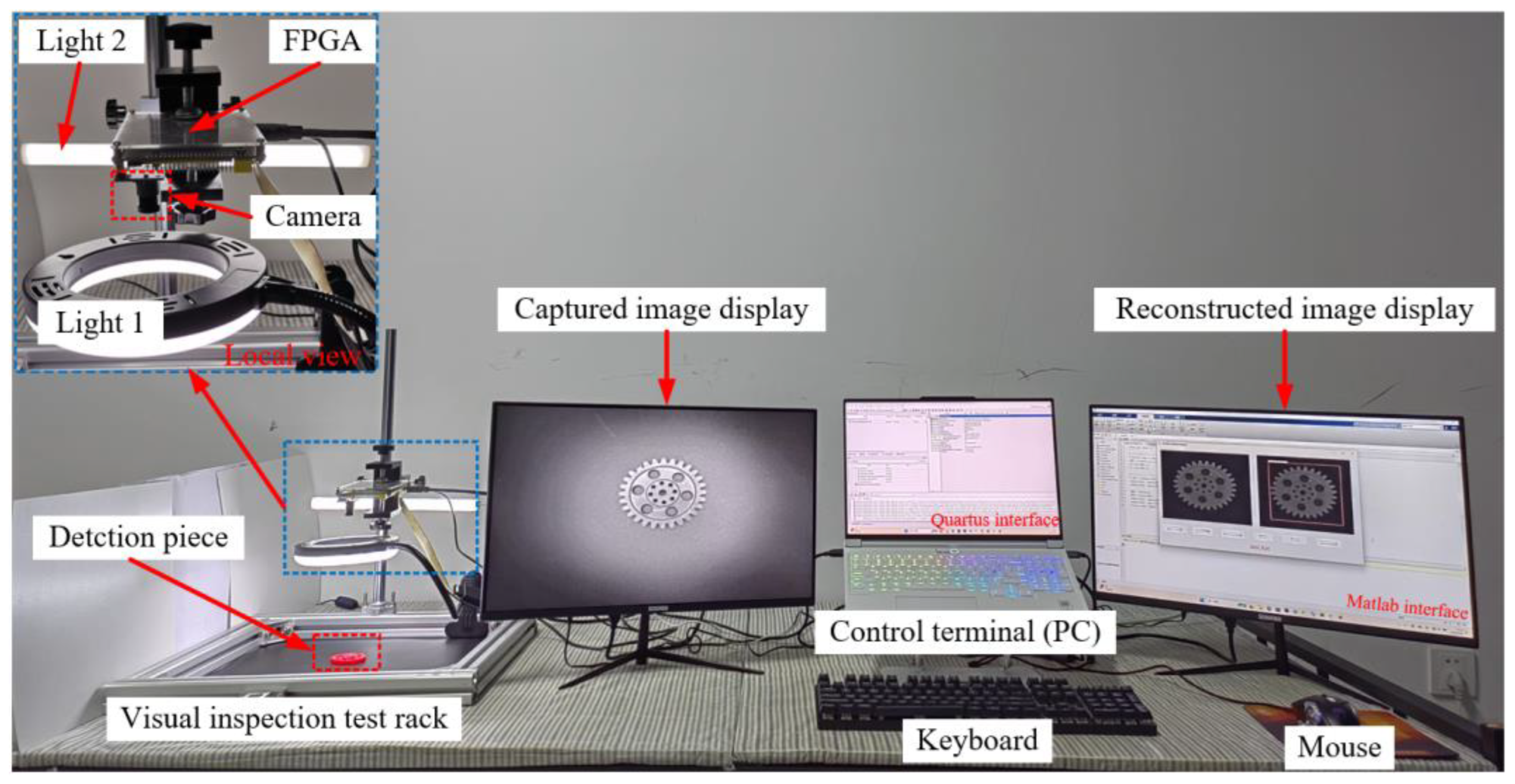

4.2. Part Image Super-Resolution Reconstruction Experiment

To further validate the practical application and stability of the proposed algorithm, the method is applied to the image preprocessing stage of industrial production workpiece detection. The experimental platform and related equipment are shown in Figure 10, consisting mainly of a control terminal (PC), monitor, Field-Programmable Gate Array (FPGA) development board, light source, and visual inspection frame. The FPGA development board is equipped with an OV5640 camera, which is responsible for capturing experimental images, while the visual inspection frame is used to secure the camera and place the test pieces. The experimental process is as follows: First, the FPGA captures test images and adds Poisson–Gaussian mixed noise, with the results displayed in real-time on the monitor on the left side of Figure 10. The experiment personnel can control the acquisition process through the Quartus software interface. After image acquisition, the data are transferred to MATLAB 2019a for image quality optimization, and the final optimized image is displayed on the monitor on the right side of Figure 10. The control terminal is a PC that manages the entire experiment’s operation and control, running the Windows 11 operating system and equipped with an Intel Core i9-14900HX processor and 32 GB of memory. The software platforms used in the experiment include Quartus and MATLAB.

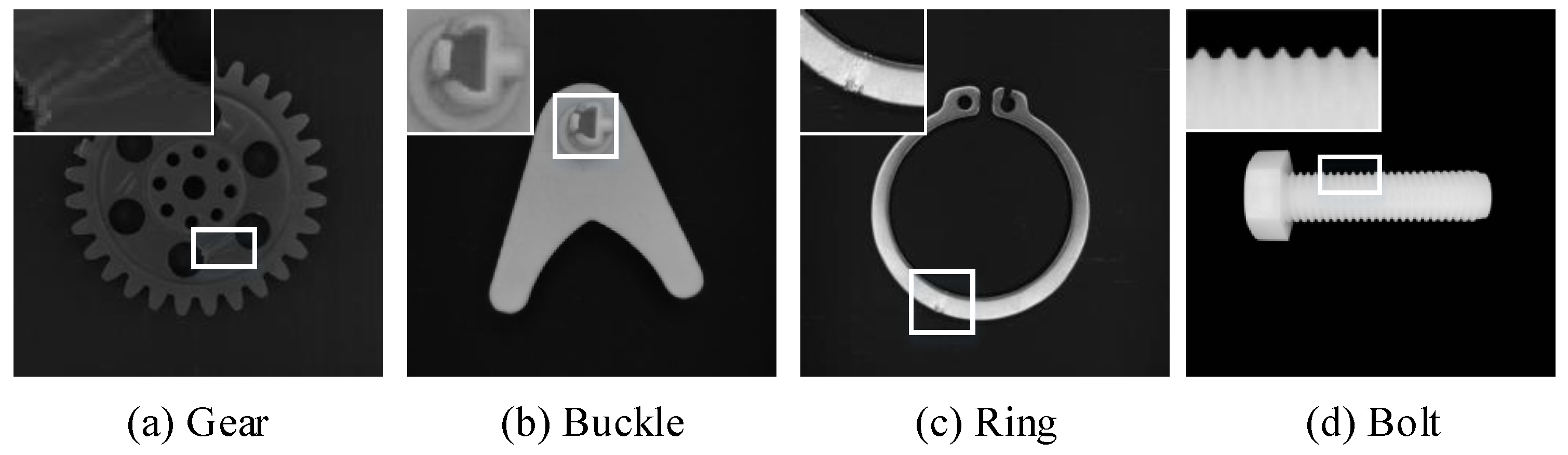

In actual production, due to harsh production environments, uneven surface lighting, and limited imaging device resolution, the images captured by the imaging equipment have low resolution and blurry detail features, resulting in low accuracy in defect detection before the product is shipped, with occurrences of false positives and false negatives. Therefore, before defect detection, the issue of low image quality must be addressed by improving the image quality.

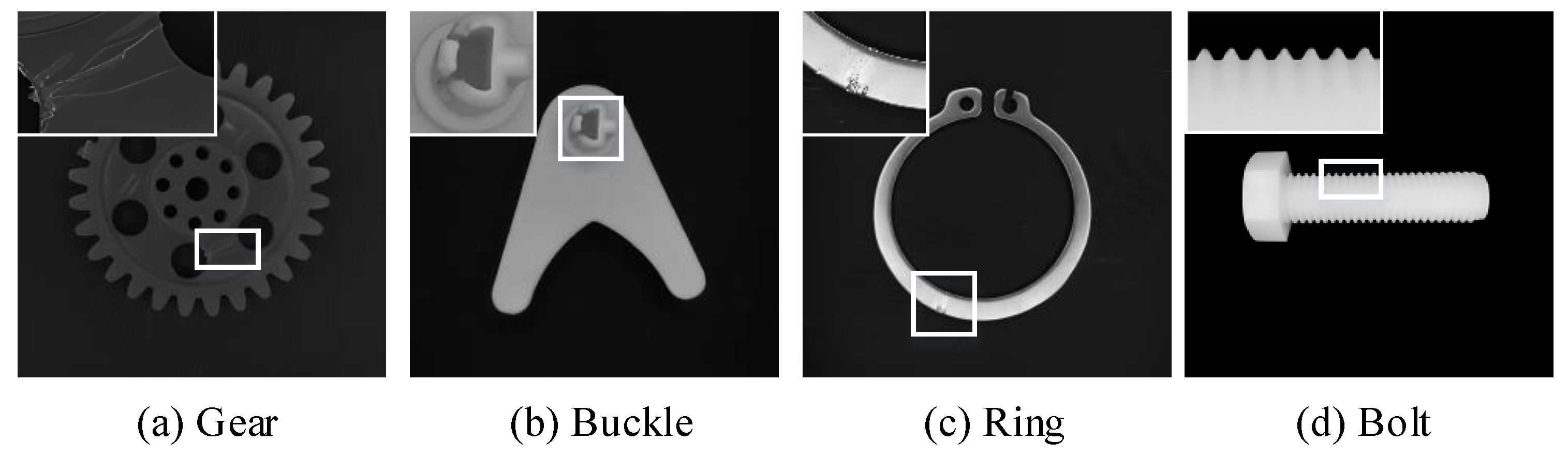

Figure 11 shows the images of workpieces with three different materials and shapes collected during the experiment. It is evident that these images fail to clearly present the detailed features of the workpieces.

Figure 12 shows the images obtained after processing the original images using the proposed method for product defect detection. Comparing the processed images with the original images in terms of overall and local visual effects, the buckle detection image after reconstruction exhibits clearer detail features and more prominent edges, effectively highlighting minor product surface defects. The gear detection image processed by the algorithm maintains good edge continuity, especially for small line cracks or burrs on the gear surface, where the algorithm sharpens the edges, aiding in feature localization for defect detection. The circular workpiece detection image shows significantly improved surface texture detail clarity after algorithmic preprocessing, with small pitting defects on the workpiece surface that are well defined, improving the accuracy of surface defect detection. Finally, the reconstructed bolt detection image shows clearer threads, which is beneficial for subsequent thread processing quality detection. Additionally, to verify the stability of the proposed method in processing workpiece detection images with different relative displacements, CCD cameras were used to capture detection images at different frame rates. The relative displacement between images in different frame rate sequences varied significantly, with smaller relative displacements for higher frame rates and larger relative displacements for lower frame rates.

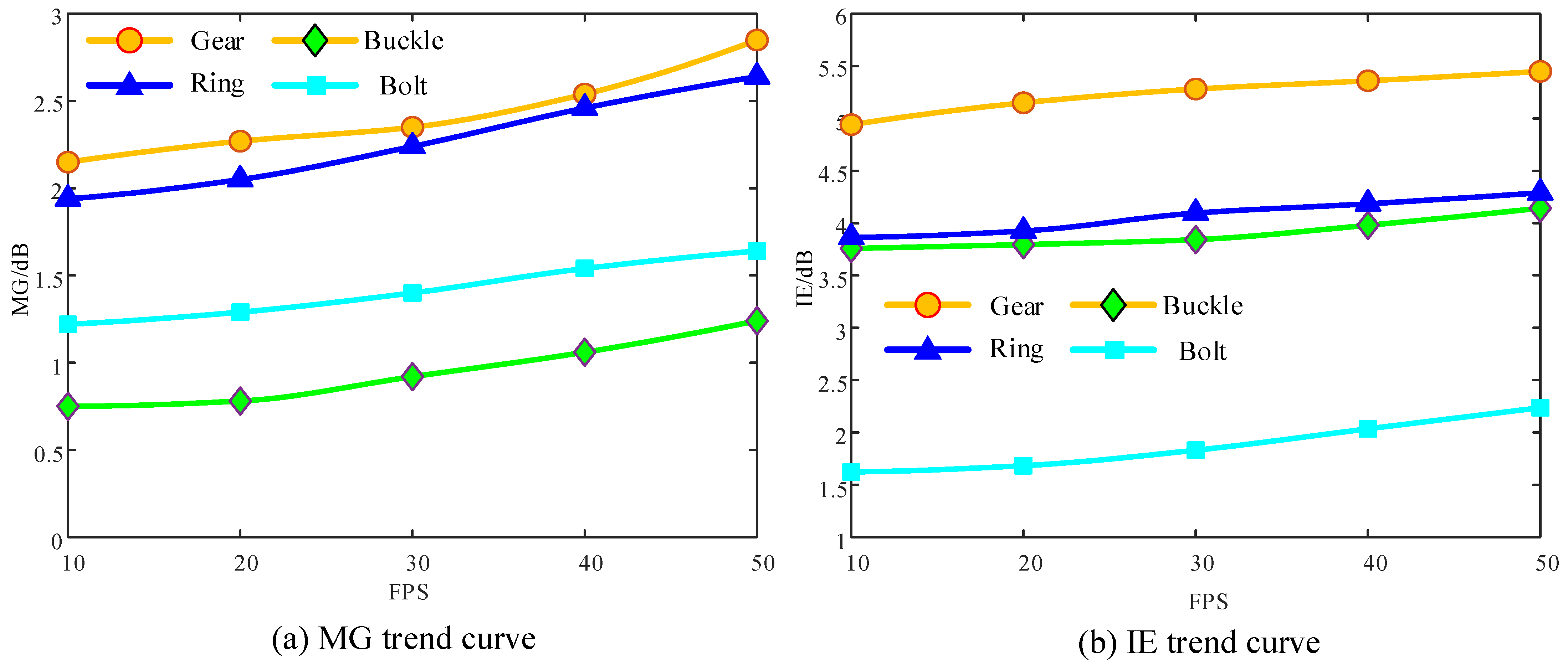

Figure 13 shows the trend curves of IE and MG for the different workpiece detection images at frame rates between 10 and 50 FPS.

In terms of IE, the values for different frame rate sequences differ by no more than 0.5 dB. In terms of MG, the difference is no more than 1 dB. Overall, both IE and MG remain relatively stable for images processed with the proposed method, regardless of the relative displacement. In summary, the application experiments demonstrate that the proposed method, when applied in actual production, improves the quality of workpiece detection images with good processing stability, which benefits subsequent image processing and application.
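The stability check described above can be sketched in a few lines. The exact IE and MG formulas used in the experiments are not restated in this section, so the sketch assumes two common definitions: IE as the Shannon entropy of the gray-level histogram, and MG as the mean of sqrt((dx^2 + dy^2) / 2) over forward differences. The function names `information_entropy`, `mean_gradient`, and `spread` are hypothetical, and images are represented as plain nested lists of gray levels so that the example needs only the standard library.

```python
import math
from collections import Counter


def information_entropy(img):
    """Shannon entropy (in bits) of the gray-level histogram of a 2-D image.

    Assumed definition: IE = -sum(p_i * log2(p_i)) over occurring gray levels.
    """
    pixels = [p for row in img for p in row]
    total = len(pixels)
    counts = Counter(pixels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def mean_gradient(img):
    """Mean gradient of a 2-D image using forward differences.

    Assumed definition: the average of sqrt((dx^2 + dy^2) / 2) over all
    pixels that have both a right-hand and a lower neighbour.
    """
    rows, cols = len(img), len(img[0])
    acc = 0.0
    for i in range(rows - 1):
        for j in range(cols - 1):
            dx = img[i][j + 1] - img[i][j]  # horizontal difference
            dy = img[i + 1][j] - img[i][j]  # vertical difference
            acc += math.sqrt((dx * dx + dy * dy) / 2.0)
    return acc / ((rows - 1) * (cols - 1))


def spread(values):
    """Largest difference between any two values, e.g. one metric per frame rate."""
    return max(values) - min(values)
```

Under these assumptions, computing one IE value and one MG value per frame-rate sequence and passing each list to `spread` gives the quantity that the text compares against the 0.5 and 1 bounds.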

5. Discussion of the Proposed Method

The adaptive multi-scale object tracking-based part image super-resolution reconstruction method proposed in this paper demonstrates significant advantages in edge detail preservation, feature restoration, and computational efficiency, showing superior performance in multiple experimental scenarios. The experimental results show that this method significantly outperforms existing methods in quantitative metrics such as PSNR and SSIM, with an average PSNR value of 32.03 dB and an average SSIM value of 0.9412. Additionally, in the comparison of IE and MG metrics, the proposed method also exhibits superior detail information extraction capability and gradient retention performance, achieving values of 8.0527 and 7.9444, respectively. These results confirm that the proposed method can achieve high-quality image reconstruction under different motion and displacement conditions, with high stability in processing edge and texture features.
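For reference, the PSNR figures quoted above follow the usual definition PSNR = 10 log10(peak^2 / MSE). The sketch below is a minimal standard-library illustration of that formula, not the evaluation code used in the experiments. The function name `psnr`, the nested-list image representation, and the default peak value of 255 (8-bit gray levels) are assumptions.

```python
import math


def psnr(reference, reconstructed, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized 2-D images.

    `peak` is the maximum possible pixel value (assumed 255 for 8-bit images).
    Returns math.inf when the two images are identical.
    """
    squared_error = 0.0
    count = 0
    for ref_row, rec_row in zip(reference, reconstructed):
        for ref_px, rec_px in zip(ref_row, rec_row):
            squared_error += (ref_px - rec_px) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(peak * peak / mse)
```

A higher value means the reconstruction is closer to the reference. SSIM, the second metric reported here, additionally compares local means, variances, and covariances and is not reproduced in this sketch.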

In the natural image experiments, the proposed method performs well across various remote-sensing image reconstruction scenarios. For instance, it clearly restores the edge contours of buildings, the structure of airport runways, and the distribution details of port cargo. In contrast, traditional methods such as IBP and POCS produce artifacts and blurred edges when processing these complex images. Specifically, in the “airport transportation remote sensing image” experiment, the proposed method achieved better edge accuracy, making the reconstructed image visually clearer and more intuitive and providing important support for further analysis of remote-sensing images. Additionally, in the “urban building distribution” experiment, the method successfully restored the texture features of buildings, which is important for high-resolution image applications in complex environments. Moreover, in the industrial inspection scenario, the proposed method was applied and verified on a visual surface quality detection experimental platform. The experiment showed that under different frame rates (10–50 FPS), the fluctuations in the IE and MG indicators of the workpiece detection images processed by the proposed method remained within 0.5 dB and 1 dB, respectively, which supports the stability and robustness of the method in real-time detection. Furthermore, the results of the workpiece detection experiment indicate that the proposed method effectively restores the fine structure of gear teeth, the details of screw threads, and the integrity of buckle edges, further improving the accuracy of industrial inspection and quality control.

At the same time, compared to existing methods, the proposed method demonstrates unique advantages and innovations. Like the edge-enhanced network EEGAN, the proposed method maintains high accuracy in edge feature extraction, while further improving the restoration of edge detail. Compared to the traditional edge interpolation method proposed by Liu et al. [49], the proposed method overcomes the problem of excessive smoothing in complex edge regions through an adaptive block strategy, achieving superior performance in detail preservation. Moreover, compared to the deep learning-based feature extraction model proposed by Shao et al. [56], the proposed method achieves more efficient reconstruction without requiring a learning framework, particularly excelling in resource-limited hardware environments.

Despite its advantages, the proposed method also has certain limitations. First, the experimental data are primarily focused on remote-sensing images and industrial inspection, which limits the method’s general applicability in other complex scenarios. Second, the adaptive block strategy may lead to the loss of local details when handling regions with high texture complexity due to the limitations of block precision. As shown in Table 1, the results for Experiment 3, involving “airport shipping” images, highlight the impact of texture complexity on performance. This type of image contains a significant amount of texture detail, with many regions exhibiting complex textures. In this case, the SSIM value of the proposed method is 0.9084, which is lower than CCRN’s 0.9254 and EEGAN’s 0.9194. This performance drop can be attributed to the adaptive block strategy used in the proposed method. When handling areas with high texture complexity, the block precision is limited, which leads to the loss of local details and a decrease in structural similarity, as measured by SSIM, particularly in complex texture regions. Additionally, compared to deep learning models, the proposed method still requires improvement in terms of speed optimization for large-scale data processing and adaptive capability for specific tasks. To address these limitations, future research could focus on the following optimizations:

- (1) Expanding the diversity of the experimental dataset by incorporating scenarios such as medical imaging and video surveillance to test the generalizability of the method;

- (2) Optimizing the adaptive block algorithm and integrating more feature dimensions to improve the handling capability for complex regions;

- (3) Exploring the combination of the proposed method with deep learning frameworks, such as using Convolutional Neural Networks (CNN) or Transformer models, to further exploit image prior information for achieving higher reconstruction performance and greater applicability.

In summary, the adaptive multi-scale object tracking-based part image super-resolution reconstruction method proposed in this paper has been experimentally validated, demonstrating dual advantages in detail restoration and computational efficiency. It provides an effective technical solution for practical industrial inspection and complex remote-sensing image scenarios. Future research will focus on further optimizing the algorithm structure and exploring its potential in a broader range of application fields.

6. Conclusions

This paper addresses the image quality issues in part surface defect detection by proposing a part image super-resolution reconstruction method based on adaptive multi-scale object tracking. The method uses an image adaptive block strategy that dynamically segments the reconstruction frame based on the similarity, statistical characteristics, and local features of the image blocks. Meanwhile, the optical flow method is employed to accurately estimate the motion parameters between sequence images, effectively improving feature matching accuracy and computational efficiency. Furthermore, the method introduces the concept of correlation filtering target tracking and constructs a feature tracking sampling function algorithm model, which resolves the information distortion caused by integer pixel-level displacement in sequence part images and enhances the accuracy of capturing similar feature information across multiple frames. This significantly improves the quality of part images, providing reliable data support for subsequent surface defect detection. The research results demonstrate that the proposed method exhibits significant advantages in edge detail preservation, texture feature restoration, and computational efficiency. In multiple experimental setups, the proposed method outperforms traditional methods in quantitative metrics such as PSNR and SSIM and achieves better results in detail evaluations such as information entropy (IE) and mean gradient (MG), further validating its robustness and applicability in complex scenarios. Additionally, in the preprocessing stage of actual industrial workpiece detection images, the method improves image quality and highlights edge features, which improves the accuracy and efficiency of subsequent defect detection. Experimental analysis of detection image sequences with different relative displacements, captured at different frame rates, confirms the stability and robustness of the method when processing multi-frame images with large displacements.

Despite its advantages, the proposed method still experiences some loss of local details when handling regions with complex textures, and its real-time processing capability for large-scale data needs further optimization. Future research directions include the following: (1) expanding the diversity of the experimental dataset by applying the method to more fields, such as medical imaging, video surveillance, etc., to validate its generalizability; (2) optimizing the adaptive block algorithm to enhance the processing capability for complex texture regions; and (3) combining with deep learning frameworks to further explore image prior information, improving the method’s reconstruction performance and computational efficiency.

In summary, the method proposed in this paper demonstrates significant advantages in edge feature extraction and detail restoration, providing an effective technical solution for part image quality optimization and high-quality reconstruction. It has been validated for its broad application potential in visual inspection and super-resolution reconstruction in complex scenarios.