Abstract

The global trend toward Industry 4.0 has intensified the demand for intelligent, adaptive, and energy-efficient manufacturing systems. Machine learning (ML) has emerged as a crucial enabler of this transformation, particularly in high-mix, high-precision environments. This review examines the integration of machine learning techniques, such as convolutional neural networks (CNNs), reinforcement learning (RL), and federated learning (FL), within Taiwan’s advanced manufacturing sectors, including semiconductor fabrication, smart assembly, and industrial energy optimization. The present study draws on patent data and industrial case studies from leading firms, such as TSMC, Foxconn, and Delta Electronics, to trace the evolution from classical optimization to hybrid, data-driven frameworks. A critical analysis of key challenges is provided, including data heterogeneity, limited model interpretability, and integration with legacy systems. A comprehensive framework is proposed to address these issues, incorporating data-centric learning, explainable artificial intelligence (XAI), and cyber–physical architectures. These components align with industrial standards, including the Reference Architecture Model Industrie 4.0 (RAMI 4.0) and the Industrial Internet Reference Architecture (IIRA). The paper concludes by outlining prospective research directions, with a focus on cross-factory learning, causal inference, and scalable industrial AI deployment. This work provides an in-depth examination of the potential of machine learning to transform manufacturing into a more transparent, resilient, and responsive ecosystem. Additionally, this review highlights Taiwan’s distinctive position in the global high-tech manufacturing landscape and provides an in-depth analysis of patent trends from 2015 to 2025. 
Notably, this study adopts a patent-centered perspective to capture practical innovation trends and technological maturity specific to Taiwan’s globally competitive high-tech sector.

1. Introduction

The rapid evolution of industrial systems, spurred by globalization, digitalization, and the emergence of Industry 4.0, has significantly reshaped the manufacturing, energy, chemical, and logistics sectors worldwide [1,2]. In this context, industrial optimization and predictive control have become essential for ensuring production efficiency, cost-effectiveness, sustainability, and product quality. These systems facilitate precise resource allocation, waste reduction, enhanced throughput, and operation near optimal setpoints—even in dynamic and uncertain conditions [3,4].

However, conventional optimization techniques, including linear programming, model predictive control (MPC), and heuristic algorithms, increasingly struggle to manage industrial data characterized by high volume, velocity, variety, and veracity, collectively known as the “4Vs” [5,6]. These traditional models often depend on simplified assumptions, require significant domain-specific tuning, and are generally inflexible when faced with nonlinear dynamics or unforeseen perturbations [7,8].

Machine learning (ML) has emerged as a transformative solution, offering adaptive, data-driven alternatives for system modeling, optimization, and control [9,10]. By learning patterns from historical and real-time sensor data, ML has facilitated significant advancements in predictive maintenance, process tuning, quality assurance, and anomaly detection [11,12]. Supervised learning techniques (e.g., support vector machines (SVMs), random forests (RFs), and neural networks), unsupervised learning techniques (e.g., clustering and principal component analysis (PCA)), and reinforcement learning techniques (e.g., Q-learning and deep reinforcement learning) have all demonstrated considerable industrial impact [13]. These techniques were selected due to their high relevance and frequent practical adoption in Taiwan’s high-tech manufacturing sector, as confirmed by the analyzed patent data and industrial case studies.

Recent applications illustrate this impact across various sectors. For instance, deep learning has enhanced energy optimization in mining operations [8], while natural language processing (NLP) combined with data mining has improved industrial communication protocols [6]. Although global industries have increasingly adopted ML, Taiwan’s high-tech manufacturing sector provides a uniquely rich and under-examined context for ML deployment. Leading firms such as TSMC, Foxconn, China Steel, Delta Electronics, and MediaTek have used ML for wafer yield enhancement, robotic scheduling, steel production optimization, and supply chain forecasting [7,14]. However, these implementations encounter distinct local challenges, including siloed data systems, the coexistence of legacy control equipment, and concerns over data privacy [3,12].

This paper offers a thorough analysis of both global and Taiwan-specific patents, an aspect often overlooked in prior ML reviews, to highlight technological progress and industry maturity. Patents provide valuable insights into innovative trends and serve as indicators of market readiness. For example, TSMC holds patents related to adaptive process control using ML, Foxconn focuses on smart manufacturing logistics, and MediaTek specializes in ML-enhanced chip testing [5,10]. This review suggests that Taiwan’s unique industrial landscape requires tailored ML frameworks that differ from general global methodologies. It thoroughly explains how ML is transforming industrial optimization and control in Taiwan and beyond by combining academic literature, real-world case studies, and patent analytics. Additionally, it emphasizes underexplored opportunities where Taiwan’s industries can strategically invest to boost their global competitiveness. Compared to other major manufacturing hubs, such as China, South Korea, and the United States, Taiwan’s high-mix, high-precision production environment presents distinct challenges and opportunities for deploying scalable ML solutions.

The remainder of this paper is organized as follows. Section 2 defines the scope and historical development of industrial optimization and predictive control. Section 3 categorizes cutting-edge ML techniques and their applications in process, energy, and maintenance optimization. Section 4 discusses advancements in predictive control, with a focus on model-free and learning-based frameworks. Section 5 examines the significant challenges in implementation, including data quality, explainability, and integration with legacy systems. Section 6 outlines future research directions, such as hybrid architectures, causal inference, and federated learning. Section 7 summarizes key insights and emphasizes pathways toward scalable and reliable ML deployment in industry.

2. Industrial Context and Patent Trends in Optimization and Predictive Control

Industrial optimization and predictive control are crucial for enhancing the efficiency, resilience, and competitiveness of modern manufacturing systems. As global industries face increasing pressure from digital transformation, environmental regulations, and rapidly changing market demands, the ability to dynamically optimize production processes and ensure consistent product quality has become a strategic necessity. Recent advances in machine learning (ML), alongside the evolution of Industry 4.0, have significantly broadened the capabilities of traditional optimization and control frameworks. ML allows industrial systems to adapt to process drift, learn from complex operational data, and make proactive, data-driven decisions, exceeding the limitations of static, rule-based, or model-dependent approaches.

Patent data from 2015 to 2025 reflects this paradigm shift. There has been a global surge in filings related to industrial optimization and predictive control, particularly in the semiconductor fabrication, energy management, and smart logistics sectors. Taiwan, propelled by its globally competitive electronics and high-tech manufacturing industries, has emerged as a key player in this trend.

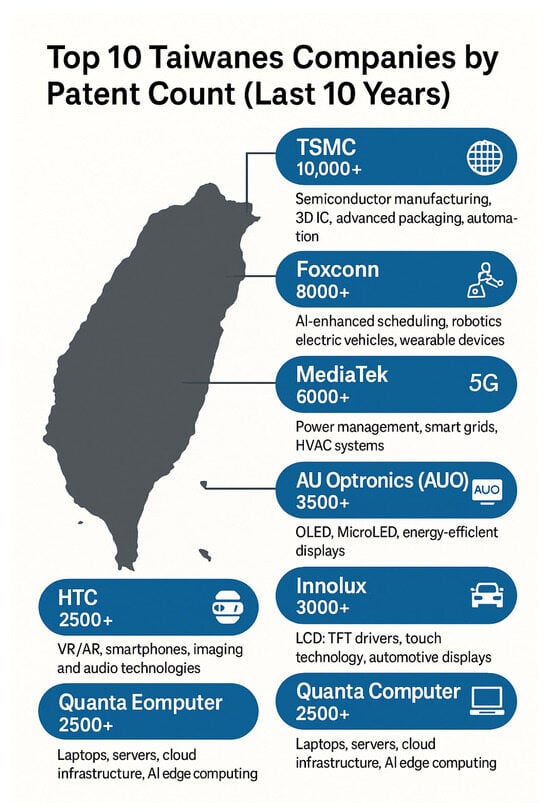

As illustrated in Figure 1, Taiwan’s leading patent filing companies over the past decade include TSMC, Foxconn, MediaTek, Delta Electronics, AU Optronics (AUO), Innolux, United Microelectronics Corporation (UMC), HTC, Quanta Computer, and Compal Electronics.

Figure 1.

Top 10 Taiwanese companies by patent count (2013–2023), spanning sectors such as semiconductors, robotics, 5G, energy systems, cloud AI, and AR/VR technologies.

These firms collectively represent the technological backbone of Taiwan’s innovation ecosystem, spanning semiconductor design and production, display technologies, 5G connectivity, cloud infrastructure, mobile devices, edge AI, and smart manufacturing platforms. Taiwan’s patent filings in industrial optimization and predictive control have consistently increased over the past decade, securing a prominent global position. Industry leaders such as TSMC, Foxconn, and Delta Electronics have spearheaded key technological innovations in semiconductor manufacturing, smart automation, and energy management. Their concerted efforts enhance Taiwan’s domestic industrial competitiveness and reinforce its critical role in the global Industry 4.0 landscape. Additionally, a comparative assessment of patent quality, novelty, and industrial impact was conducted to highlight the technological significance beyond mere patent counts.

Among these, TSMC leads with patents in semiconductor process control, 3D integration, and advanced packaging. Foxconn has concentrated on AI-driven robotic scheduling and automation technologies. Delta Electronics has focused on smart grid solutions, HVAC optimization, and energy-efficient control systems. Companies like MediaTek, AUO, and Innolux have also demonstrated robust activity in edge AI, display innovations, and 5G-related architectures, highlighting Taiwan’s strategic depth in physical production and cyber–physical systems design. To provide technical depth and relevance, this review emphasizes the following three representative sectors that exemplify the convergence of ML, optimization, and predictive control in Taiwan’s industrial landscape:

- TSMC: advanced semiconductor manufacturing and yield control.

- Foxconn: smart assembly and AI-enhanced production scheduling.

- Delta Electronics: energy-efficient systems and cyber–physical optimization.

This focused lens enables a more in-depth examination of how machine learning-driven control strategies are transforming mission-critical operations within Taiwan’s high-tech sector, while also providing transferable insights for global manufacturing contexts. Other sectors, such as biotechnology and small to medium-sized enterprises, were deliberately excluded due to insufficient patent data and the lack of comparable industrial scale.

2.1. Definitions and Scope

Industrial optimization involves applying mathematical and algorithmic techniques to enhance operational efficiency, lower production costs, improve yield, and manage resource allocation under constraints [15,16]. Predictive control, particularly Model Predictive Control (MPC), builds on these foundations by utilizing dynamic system models to predict future states over a finite horizon and calculate optimal control actions in real-time [17,18]. These methods have long functioned as vital tools in high value manufacturing sectors, facilitating systematic process control under complex operational boundaries.
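As a point of reference, the finite-horizon problem that MPC solves at each sampling instant can be written in a generic textbook form. The notation is assumed here for illustration and is not taken from the cited works: $x_k$ is the predicted state, $u_k$ the control input, $f$ the dynamic model, $\ell$ the stage cost, $V_f$ an optional terminal cost, $N$ the prediction horizon, and $\mathcal{X}$, $\mathcal{U}$ the admissible state and input sets.

$$
\begin{aligned}
\min_{u_0,\dots,u_{N-1}} \quad & \sum_{k=0}^{N-1} \ell(x_k, u_k) + V_f(x_N) \\
\text{s.t.} \quad & x_{k+1} = f(x_k, u_k), \quad k = 0,\dots,N-1, \\
& x_k \in \mathcal{X}, \quad u_k \in \mathcal{U}, \quad k = 0,\dots,N-1, \\
& x_0 = x(t).
\end{aligned}
$$

Only the first optimal input $u_0^{*}$ is applied to the plant. The problem is then re-solved at the next sampling instant from the newly measured state $x(t)$, which is what makes the horizon "receding".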

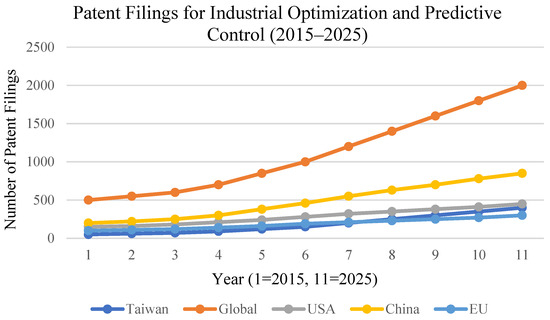

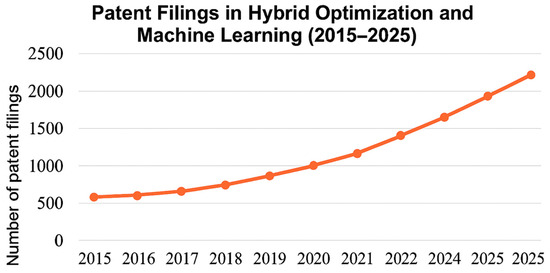

The patent data cited in this review were retrieved from the USPTO (United States Patent and Trademark Office) and TIPO (Taiwan Intellectual Property Office) databases. Searches were conducted using targeted keywords such as “machine learning”, “industrial optimization”, and “predictive control”, focusing on patent filings from 2015 to 2025, as illustrated in Figure 2. All analyzed patent data are publicly accessible and anonymized; therefore, no additional ethical approval is required.

Figure 2.

Annual patent filings in industrial optimization and predictive control by sector (2015–2025), comparing Taiwan’s trajectory with leading global regions such as China, the USA, and South Korea. Patent counts are aggregated by the sector to illustrate relative growth and technological focus.

This approach ensures that the analysis captures relevant technological developments within a contemporary timeframe while maintaining consistency across global and local patent datasets. Taiwan’s manufacturing landscape presents unique challenges and opportunities for optimization. The prevalence of high-mix, low-volume production modes, particularly in sectors such as semiconductors and precision machinery, demands high adaptability, advanced process traceability, and robust control strategies. However, many factories continue to rely on legacy equipment and heterogeneous infrastructures that often lack seamless interoperability with modern intelligent systems. Additionally, linguistic and technological diversity across production lines can result in fragmented operator interfaces, inconsistent sensor protocols, and variable data standards.

To overcome these constraints, Taiwanese manufacturers are adopting several forward-looking strategies. These strategies include lightweight machine learning models suitable for deployment on embedded systems, hybrid architectures that enable seamless integration of cloud-to-edge control, and privacy-preserving techniques such as federated learning. These innovations facilitate the implementation of predictive and adaptive control within existing infrastructure, thereby reducing operational disruptions and cybersecurity risks, which are key factors in mission-critical environments.
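The federated learning idea mentioned above can be illustrated with a minimal sketch of federated averaging. It assumes a deliberately simple setting that does not come from the reviewed patents: each site fits a one-parameter model `y ≈ w * x` on its own data, and only the fitted weight (never the raw data) is sent to a coordinator, which averages the weights in proportion to each site's sample count. The function names, learning rate, and the three synthetic "factory" datasets are all hypothetical, and practical deployments would add elements such as client sampling and secure aggregation that are omitted here.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """Run a few gradient-descent steps on one site's private data.

    The local model is y ≈ w * x with mean squared error loss.
    """
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(w_global, sites, rounds=50):
    """Simplified federated averaging: sites train locally, the server averages."""
    total_samples = sum(len(data) for data in sites)
    for _ in range(rounds):
        # Each site starts from the current global weight and trains locally.
        local_weights = [local_update(w_global, data) for data in sites]
        # The server only sees weights, combined in proportion to sample count.
        w_global = sum(
            w * len(data) for w, data in zip(local_weights, sites)
        ) / total_samples
    return w_global


# Synthetic, noise-free data for three hypothetical sites sharing y = 2x
# but observing different operating ranges.
site_a = [(x, 2.0 * x) for x in (1.0, 2.0, 3.0)]
site_b = [(x, 2.0 * x) for x in (0.5, 1.5)]
site_c = [(x, 2.0 * x) for x in (2.5, 3.5, 4.0, 4.5)]

w_fed = federated_average(0.0, [site_a, site_b, site_c])
print(f"federated estimate of w: {w_fed:.6f}")
```

Because every site's data follow the same underlying relationship in this toy setup, the averaged weight approaches the shared slope. With heterogeneous sites the averaged model is a compromise, which is one reason data heterogeneity is treated as a key challenge.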

In this context, Taiwan presents a compelling testbed for developing scalable, interpretable, and resource-efficient ML-based control systems. The convergence of real-time production demands, legacy constraints, and rapid innovation creates an ideal environment for stress-testing next-generation industrial optimization technologies. The following section examines how these modern data-driven methods build upon, extend, and sometimes surpass classical optimization frameworks.

2.2. Classical Approaches and Their Limitations

Classical optimization techniques have long provided the theoretical foundation for industrial decision-making and process control, offering mathematically rigorous methods to enhance system performance under constraints. These techniques have been utilized across various sectors, including manufacturing, logistics, energy, and finance, where structured decision frameworks are essential. Major categories include the following:

- Linear and Integer Programming (LP/IP)

Linear programming offers globally optimal solutions to problems characterized by linear objective functions and constraints, typically solved using simplex or interior-point methods [19]. Integer programming extends this framework to discrete variables, making it essential for resource allocation, job scheduling, and capacity planning [20]. While LP/IP remains fundamental in supply chain optimization, its dependence on linearity restricts its applicability to the complex, nonlinear, and stochastic systems that are standard in modern industry.

- Nonlinear Programming (NLP)

NLP addresses optimization problems that involve nonlinear relationships among variables and constraints. It has been widely used in chemical process control, mechanical system design, energy modeling, and semiconductor optimization [21]. However, NLP solvers are often computationally expensive, highly sensitive to initial conditions, and require assumptions of continuity and differentiability, which limit their robustness in volatile industrial environments.

- Metaheuristics

Metaheuristic algorithms, including genetic algorithms, particle swarm optimization, simulated annealing, and ant colony optimization, provide flexible strategies for addressing NP-hard and non-convex problems [22,23]. Drawing inspiration from biological or physical systems, these techniques have been effectively employed in process layout design, complex scheduling, and manufacturing network optimization. Despite their versatility, metaheuristics often lack guaranteed convergence, require parameter tuning, and may yield suboptimal or inconsistent outcomes.

- Summary of Limitations

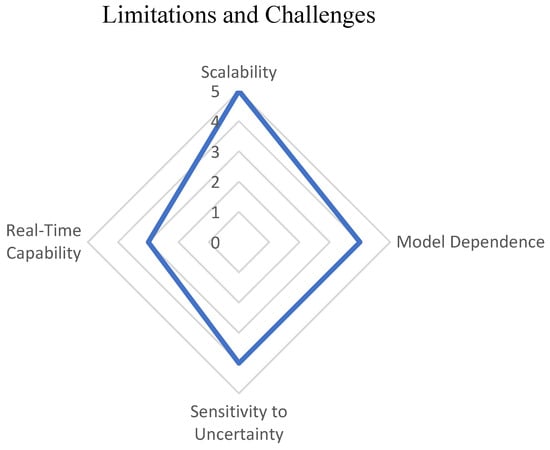

While classical methods provide essential analytical tools, they encounter growing limitations in real-world applications due to system complexity, variability, and real-time constraints. As shown in Figure 3, four critical challenges are often mentioned:

Figure 3.

Limitations of classical optimization techniques across key performance dimensions: scalability, model dependence, uncertainty sensitivity, and real-time capability.

- (1) Scalability: The computational cost increases rapidly with the number of variables and constraints, restricting use in large-scale systems.

- (2) Model Dependence: Accurate and complete mathematical models are frequently unavailable or impractical in dynamic, heterogeneous environments.

- (3) Sensitivity to Uncertainty: Most classical techniques assume deterministic inputs, diminishing resilience to disturbances, equipment drift, or demand volatility.

- (4) Limited Real-Time Responsiveness: Traditional solvers are typically utilized offline and cannot adapt swiftly enough for real-time control.

These limitations are especially pronounced in semiconductor and electronics manufacturing, where production involves nonlinear interactions, time-varying disturbances, and sub-nanometer process control requirements. Consequently, industries are increasingly adopting hybrid and ML-based optimization architectures that better manage complexity, uncertainty, and speed.
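The trade-off noted above for metaheuristics (flexibility without guaranteed optimality) can be made concrete with a small sketch of simulated annealing on a toy scheduling problem. The setup is an assumption chosen for illustration: a single machine, hypothetical job durations, and the objective of minimizing the sum of job completion times. This objective is convenient because the shortest-processing-time-first (SPT) rule is known to be optimal for it, so the annealing result can be checked against a true optimum. The cooling schedule and step count are arbitrary choices, not tuned values.

```python
import math
import random


def total_completion_time(order, durations):
    """Sum of completion times when jobs run back to back in the given order."""
    elapsed, total = 0, 0
    for job in order:
        elapsed += durations[job]
        total += elapsed
    return total


def simulated_annealing(durations, steps=5000, t_start=10.0, t_end=0.01, seed=0):
    """Search over job orderings by swapping two jobs at a time."""
    rng = random.Random(seed)
    order = list(range(len(durations)))
    cost = total_completion_time(order, durations)
    best_order, best_cost = order[:], cost
    for step in range(steps):
        # Geometric cooling from t_start down to t_end.
        temp = t_start * (t_end / t_start) ** (step / (steps - 1))
        i, j = rng.sample(range(len(order)), 2)
        order[i], order[j] = order[j], order[i]
        new_cost = total_completion_time(order, durations)
        delta = new_cost - cost
        # Always accept improvements; accept worse moves with a probability
        # that shrinks as the temperature falls.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            cost = new_cost
            if cost < best_cost:
                best_order, best_cost = order[:], cost
        else:
            order[i], order[j] = order[j], order[i]  # undo the swap
    return best_order, best_cost


durations = [7, 3, 9, 2, 5, 8, 1, 6]  # hypothetical processing times
initial_cost = total_completion_time(list(range(len(durations))), durations)
sa_order, sa_cost = simulated_annealing(durations)

# Reference optimum for this objective: shortest processing time first.
spt_order = sorted(range(len(durations)), key=lambda job: durations[job])
spt_cost = total_completion_time(spt_order, durations)

print("initial:", initial_cost, "annealed:", sa_cost, "SPT optimum:", spt_cost)
```

The annealed cost can never beat the SPT value and is not guaranteed to reach it; how close it gets depends on the seed, cooling schedule, and step budget, which is the parameter-tuning burden described above.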

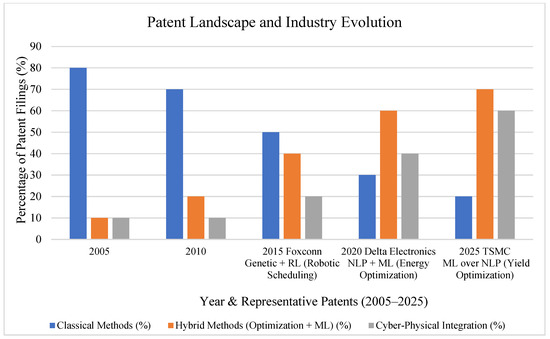

2.2.1. Patent Landscape and Methodological Shift

Patent activity serves as a forward-looking indicator of innovation trajectories. A review of global patent filings from 2015 to 2025 reveals a significant shift from classical mathematical programming to hybrid approaches that integrate optimization with machine learning (ML), statistical modeling, and cyber–physical system (CPS) frameworks. As illustrated in Figure 4, patents filed before 2010 primarily focused on classical techniques, such as deterministic process control and batch scheduling. In contrast, filings since 2015 increasingly represent blended architectures, where ML is employed for adaptive learning, model updating, and real-time inference. Notably, Taiwanese firms have emerged as leaders in this methodological transition:

Figure 4.

Evolution of global patent filings in industrial optimization (2015–2025). The figure shows a clear transition from classical methods to hybrid and cyber–physical architectures, with key contributions from Taiwanese firms such as Foxconn, Delta Electronics, and TSMC.

- Foxconn has filed patents that combine genetic algorithms with reinforcement learning for scheduling robots in smart factories.

- Delta Electronics has developed hybrid systems that combine nonlinear programming (NLP) with ML to optimize HVAC energy efficiency.

- TSMC has applied ML layers to yield optimization processes based on nonlinear programming (NLP) in semiconductor fabrication.

This trend reflects broader shifts driven by the rise of CPS and IIoT systems. These environments demand computational intelligence, distributed adaptability, online learning, and cloud–edge orchestration. Recent patents emphasize hybrid MPC frameworks, adaptive optimization policies, and resilient edge computing systems, underscoring the diminishing dominance of purely equation-based optimization.

2.2.2. The Evolving Role of Classical Methods

Despite the rise of advanced machine learning techniques, classical optimization methods remain crucial in both theoretical and practical fields. From a theoretical perspective, these methods offer distinct advantages. They provide formal guarantees of convergence and optimality, provided that certain assumptions are satisfied. Their structured and deterministic nature enhances interpretability, making them well-suited for applications where model transparency is crucial. Furthermore, for small to medium-sized optimization problems, classical approaches often remain computationally efficient and robust.

In practical contexts, classical methods are increasingly utilized not as standalone tools but as integral components within broader, hybrid systems. For example, they frequently serve as performance baselines when evaluating machine learning-based optimization solutions. Additionally, classical techniques, such as linear programming, are embedded as modules within larger control frameworks, including model predictive control (MPC). Within data-driven pipelines, they contribute to enhancing interpretability and enabling the explicit modeling of constraints, which is often challenging for purely black-box learning models.

Overall, the role of classical optimization has evolved from a dominant solution paradigm to a complementary and supportive mechanism. These methods are now commonly integrated into flexible, intelligent, and context-aware optimization architectures—a shift that will be further examined in the following section.

2.3. Machine Learning in Industrial Applications: Key Challenges

Machine learning (ML) has become a pivotal technology in industrial optimization and predictive control. However, its successful application in manufacturing and related fields is limited by several inherent challenges, which have been extensively documented in the literature.

- Data Quality and Heterogeneity

Due to diverse sensor types, sampling rates, and vendor-specific protocols, industrial data are frequently characterized by noise, missing values, and heterogeneous formats. Additionally, the scarcity of labeled data is a significant bottleneck, especially in predictive maintenance, where fault occurrences are infrequent yet critical [24,25]. These challenges impede model accuracy and generalization. Transfer learning and data augmentation have been suggested to alleviate these effects [26,27].

- Model Interpretability and Trust

The adoption of complex ML models, including deep learning architectures and ensemble methods, encounters resistance in industrial settings due to their black-box nature [28,29]. Interpretability is crucial for regulatory compliance and operator acceptance, especially in high-stakes domains like semiconductor fabrication and chemical processing [30]. Explainable AI (XAI) methods, such as SHAP and LIME, have been developed to provide post hoc explanations; however, challenges persist regarding their real-time applicability and domain-specific interpretation [31,32].

- Integration with Legacy Systems

Many manufacturing plants use legacy control systems like PLCs and SCADA, which lack standard interfaces for ML integration [33]. The limited computational capacity and real-time constraints further complicate the deployment of data-driven models [34]. Solutions involving edge computing and digital twins have been proposed to bridge this gap, enabling near-real-time inference while maintaining existing infrastructure [35,36].

- Scalability and Real-Time Adaptation

Industrial processes require scalable ML models that adjust to changing system dynamics and coordinate multiple assets. Traditional offline batch learning falls short in these situations. Emerging paradigms such as online, federated, and reinforcement learning provide promising solutions for real-time adaptability and privacy-preserving cross-site collaboration [37,38].

- Cybersecurity and Data Privacy

The increasing connectivity of industrial systems exposes new attack surfaces. Integrating ML introduces additional cybersecurity challenges, necessitating robust defense mechanisms and privacy-preserving model training, especially in sensitive or regulated industries [39,40]. Federated learning is recognized as an effective approach to strike a balance between privacy and collaborative model improvement [41].

This synthesis of the current literature highlights the multifaceted challenges faced in applying ML within industrial settings. Addressing these issues is crucial for transitioning ML from research prototypes to scalable, reliable, and operationally viable industrial systems.
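To illustrate the kind of post hoc attribution discussed under Model Interpretability and Trust, the sketch below computes exact Shapley values, the game-theoretic quantity that SHAP approximates, for a tiny model. It is not the SHAP library and makes several assumptions for illustration: `yield_model` is a hypothetical three-input function, "absent" features are represented by substituting a fixed baseline value (one common convention among several), and all feature orderings are enumerated, which is only feasible for a handful of features.

```python
from itertools import permutations


def shapley_values(model, instance, baseline):
    """Exact Shapley attributions by averaging marginal contributions
    over every ordering in which features can be switched on."""
    n = len(instance)
    contributions = [0.0] * n
    orderings = list(permutations(range(n)))
    for ordering in orderings:
        current = list(baseline)          # start with all features "absent"
        previous_output = model(current)
        for feature in ordering:
            current[feature] = instance[feature]   # switch this feature on
            output = model(current)
            contributions[feature] += output - previous_output
            previous_output = output
    return [c / len(orderings) for c in contributions]


def yield_model(features):
    """Hypothetical process model with one additive and one interaction term."""
    temperature, pressure, flow = features
    return 0.5 * temperature + 0.2 * pressure * flow


instance = [4.0, 3.0, 2.0]   # operating point to explain (made-up values)
baseline = [0.0, 0.0, 0.0]   # reference point representing "feature absent"

attributions = shapley_values(yield_model, instance, baseline)
for name, value in zip(("temperature", "pressure", "flow"), attributions):
    print(f"{name}: {value:.3f}")
```

The attributions sum to the difference between the model output at the instance and at the baseline, and the interaction term is split evenly between pressure and flow. The factorial cost of enumerating orderings is one reason practical tools rely on approximations, and it relates to the real-time applicability concern raised above.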

2.4. Predictive Control Techniques in Practice

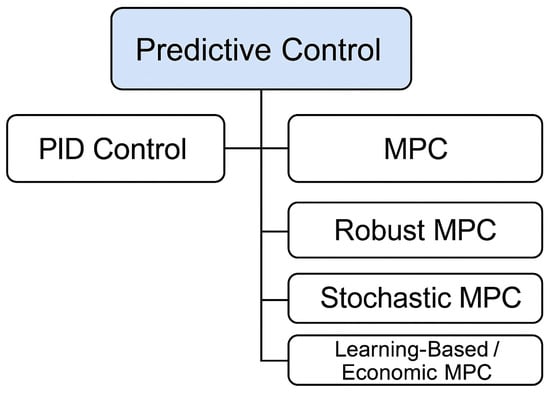

Predictive control has become a cornerstone of modern industrial automation, enabling proactive adjustments of system inputs based on real-time data and predictive modeling of future trajectories. Predictive control frameworks enhance operational stability, reduce process variability, and improve efficiency under constraints and uncertainty by continuously optimizing control actions over a moving time horizon. The evolution of predictive control methods reflects a progression from traditional, rule-based controllers to increasingly sophisticated model- and data-driven techniques, as illustrated in Figure 5.

Figure 5.

Hierarchical structure of predictive control techniques illustrating the evolution from PID to advanced MPC variants, including learning-based and economic control.

- PID control, despite its simplicity and widespread use, cannot manage multivariable interactions, nonlinear dynamics, and explicit constraints [42]. It remains common in low-complexity or legacy systems due to its ease of tuning and robustness.

- Model Predictive Control (MPC) has emerged as the leading advanced control strategy in the industry. MPC optimizes a cost function while adhering to model-based forecasts and operational constraints, showcasing superior performance in complex, multivariable systems such as chemical reactors, semiconductor fabrication, and smart grids [43].

- Robust MPC enhances traditional MPC by explicitly addressing bounded uncertainties, thus ensuring controller performance in worst-case scenarios. In contrast, Stochastic MPC employs probabilistic uncertainty models, facilitating risk-aware decisions in highly variable environments [44,45].

- The frontier of predictive control encompasses Learning-Based MPC and Economic MPC. These approaches utilize machine learning models to enhance prediction accuracy and adjust control policies in response to changing system dynamics. Economic MPC additionally shifts the optimization objective from traditional setpoint tracking to business-aligned goals such as cost reduction or energy efficiency.
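The receding-horizon principle shared by the MPC variants listed above can be sketched in a few lines. The example is a toy under stated assumptions, not an industrial controller: the plant is a hypothetical scalar linear system, the actuator can only take five discrete values (so input limits are enforced by construction), the plant is assumed to match the model exactly, and the optimizer is a brute-force search over all input sequences rather than the quadratic or nonlinear programming solvers used in practice. All constants and function names are illustrative.

```python
from itertools import product

A, B = 0.9, 0.5                              # hypothetical model: x_next = A*x + B*u
SETPOINT = 2.0                               # target value for the state
INPUT_GRID = [-1.0, -0.5, 0.0, 0.5, 1.0]     # admissible actuator values
HORIZON = 4                                  # number of steps predicted ahead
INPUT_WEIGHT = 0.01                          # penalty on control effort


def predict(x, u):
    """One-step prediction with the (assumed perfect) linear model."""
    return A * x + B * u


def plan(x0):
    """Return the first input of the cheapest input sequence over the horizon."""
    best_cost, best_first_input = float("inf"), 0.0
    for sequence in product(INPUT_GRID, repeat=HORIZON):
        x, cost = x0, 0.0
        for u in sequence:
            x = predict(x, u)
            cost += (x - SETPOINT) ** 2 + INPUT_WEIGHT * u ** 2
        if cost < best_cost:
            best_cost, best_first_input = cost, sequence[0]
    return best_first_input


def run_closed_loop(x0, steps=20):
    """Apply only the first planned input, then re-plan from the new state."""
    x, trajectory = x0, [x0]
    for _ in range(steps):
        u = plan(x)
        x = predict(x, u)
        trajectory.append(x)
    return trajectory


trajectory = run_closed_loop(0.0)
print([round(x, 3) for x in trajectory])
```

Because the input grid is coarse, the state hovers near the setpoint rather than settling on it exactly. Replacing the squared tracking error in `plan` with an energy or cost term would turn the same loop into a simple instance of the economic objective described in the last bullet.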

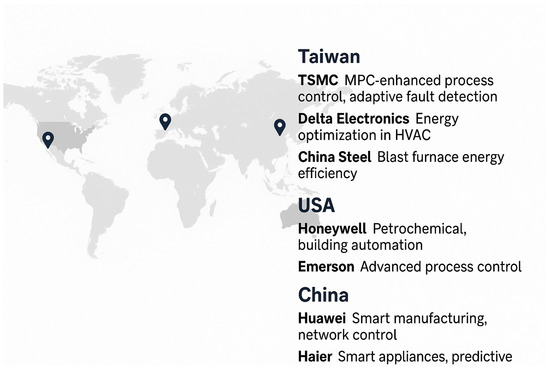

In Taiwan, industry leaders are at the forefront of predictive control innovation.

- TSMC has developed and patented MPC-based control systems for nanometer-scale precision and real-time fault detection in semiconductor fabrication, which improves yield and equipment uptime.

- Delta Electronics uses predictive control for energy-efficient HVAC operation, reducing carbon emissions while maintaining occupant comfort.

- China Steel employs data-driven predictive models to optimize blast furnace operations, minimizing fuel consumption and CO2 emissions, while enhancing product quality.

These examples highlight the broad impact of predictive control, from precision manufacturing process quality to large-scale industrial energy sustainability.

Globally, major companies such as Honeywell and Emerson in the United States and Huawei and Haier in China have incorporated predictive control into building automation, petrochemical refining, and smart appliance platforms. This worldwide activity signifies a competitive race toward intelligent, adaptive control technologies, as illustrated in Figure 6.

Figure 6.

Industrial applications of predictive control techniques across diverse sectors, highlighting global innovation leaders and deployment scenarios.

2.5. Challenges Driving Data-Driven Approaches

Technical methods such as SHAP values, LIME explanations, and saliency maps are commonly adopted to improve the interpretability of complex ML models in industrial settings. These methods help operators understand feature contributions and model decisions, thus bridging the gap between black-box predictions and actionable process insights.

Despite the foundational importance of classical optimization and predictive control in industrial automation, contemporary manufacturing environments demand increased flexibility, scalability, and responsiveness. These changing requirements expose the significant limitations of traditional methods and have spurred the adoption of data-driven, machine learning (ML) enhanced solutions.

One of the primary challenges is scalability and system complexity. Modern industrial operations produce high-dimensional data streams from interconnected production lines, sensors, and supply chains. Traditional deterministic optimization techniques often struggle to scale effectively under these conditions. For example, multi-stage supply chains involving thousands of decision variables, or manufacturing systems with frequent product changeovers, necessitate near-real-time decision-making, a task that exceeds the computational capacity of most classical solvers [18].

Another significant limitation lies in the reliance on accurate physical models. Constructing robust, physics-based models for nonlinear, time-varying, or stochastic industrial systems is often impractical, especially when processes are subject to external disturbances. As a result, there is an increasing demand for ML approaches capable of learning system behaviors directly from operational data without depending on explicit a priori models [46].

Robustness and adaptability pose further challenges for classical control architectures. Traditional controllers are sensitive to model inaccuracies, sensor noise, and environmental variability. Real-world phenomena such as equipment aging, process drift, and fluctuating raw material quality introduce disturbances that classical methods are poorly equipped to manage effectively [47].

Moreover, integrating with legacy systems remains a significant barrier, particularly for small and medium-sized enterprises (SMEs) that use outdated equipment and fragmented software infrastructures. These environments often lack computational resources and connectivity to support centralized optimization frameworks. Consequently, there is an increasing interest in lightweight, cloud-enabled, or edge-deployable data-driven control solutions that work in conjunction with existing hardware [48].

These industrial pain points are reflected in patent activity trends from 2015 to 2025. Figure 7 shows that filings related to hybrid optimization and machine learning (ML) frameworks have increased substantially, indicating an industry shift toward adaptive, intelligent control platforms.

A prominent application of convolutional neural networks (CNNs) is found in the semiconductor sector, particularly at Taiwan Semiconductor Manufacturing Company (TSMC). TSMC has implemented CNNs for the automated classification of defects in high-resolution wafer inspection images. By training CNN models on extensive annotated image datasets and incorporating them into in-line inspection processes, TSMC has achieved a 27% decrease in false positives, thereby improving inspection efficiency and reducing unnecessary rework [49]. Importantly, these patents describe CNN architectures with multi-scale extraction capabilities that identify micro-defects on wafer surfaces frequently overlooked by conventional vision systems. This approach not only reduces false positives but also enhances the reliability of in-line inspection within the constraints of high-volume manufacturing.

Additionally, TSMC utilizes feed-forward control methodologies, including multiple regression and gradient boosting machines (GBMs), to forecast optimal photolithography parameters (such as dose, focus, and etch time) based on upstream metrology data. This strategy facilitates nanometer-scale fabrication precision, thereby alleviating the effects of sub-angstrom drift that could compromise device performance [50].

Figure 7.

The increasing number of patent filings in hybrid optimization and machine learning for industrial control underscores the growing emphasis on adaptive, data-driven approaches. Taiwanese industry leaders active in this area include Foxconn (AI-enhanced scheduling), Delta Electronics (self-optimizing HVAC systems), and TSMC (ML-based yield prediction).

These challenges collectively support a paradigm shift from model-centric to data-driven methodologies, paving the way for integrating supervised learning, reinforcement learning, federated learning, and explainable AI into industrial optimization and predictive control, as further detailed in the following sections.

3. Applications for Machine Learning in Industrial Optimization

Machine learning (ML) has become a crucial pillar of industrial optimization, allowing complex systems to operate with increased intelligence, adaptability, and efficiency. Unlike traditional approaches that depend on static rules or model-based techniques, ML methods utilize real-time and historical data to enhance predictive analytics, informed decision-making, and operational optimization.

The growing complexity and variability of modern industrial environments, along with the inherent limitations of classical model-based control, have driven a paradigm shift toward data-driven, ML-enabled frameworks. Machine learning enables systems to infer dynamic behaviors, identify patterns, and make adaptive decisions directly from operational data without relying on explicit physical models. As a result, machine learning plays a critical role in predictive maintenance, real-time scheduling, quality assurance, energy management, and autonomous process optimization.

Unlike traditional optimization techniques that rely on fixed mathematical models, ML models continuously improve their performance by learning from both historical and streaming data. This capability to generalize, adapt, and self-correct is especially valuable in environments marked by nonlinear dynamics, uncertain inputs, and distributed sensor-actuator architectures.

This section offers a structured overview of key ML methodologies and their applications in industry, emphasizing the following:

- Core methodological categories encompass supervised learning, reinforcement learning, federated learning, and explainable AI.

- Deployment scenarios cover semiconductor manufacturing, energy-efficient process control, and predictive maintenance.

- Representative case studies from leading Taiwanese companies, such as TSMC, Foxconn, and Delta Electronics, emphasize contextual challenges and innovation trajectories.

This section aims to provide a comprehensive perspective on how data-driven intelligence is transforming the landscape of modern manufacturing systems by linking ML techniques to their functional roles and industrial impact.

3.1. Process Optimization

Process optimization plays a foundational role in modern manufacturing, where precision, adaptability, and efficiency are paramount, especially in high-mix, low-volume environments. Traditional optimization approaches, such as statistical process control (SPC) and run-to-run (R2R) feedback control, often struggle to manage the nonlinear, multivariate, and time-varying characteristics of contemporary industrial systems. In contrast, machine learning (ML) offers data-driven solutions that can model complex dynamics, enabling adaptive control through continuous learning from both historical and streaming sensor data.

Supervised learning algorithms such as support vector machines (SVMs), random forests (RFs), and gradient boosting machines (GBMs) are widely used to predict key quality indicators like film thickness, uniformity, and material composition. For instance, ligand engineering combined with ML modeling has been applied to optimize ruthenium atomic layer deposition (ALD), improving uniformity in ultrathin films critical for next-generation semiconductor devices [51]. Likewise, ML-accelerated atomistic simulations have reduced the computational burden of reaction pathway analysis, accelerating material selection and experimental design [52].

Reinforcement learning (RL) techniques are also gaining prominence in dynamic process environments. One notable example is the application of distributional soft actor–critic RL to multizone planarization systems, yielding greater accuracy and robustness than traditional R2R controllers in chemical mechanical planarization (CMP) [53]. These examples illustrate how ML can augment or even replace classical methods by offering real-time, adaptive optimization under uncertainty.
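For reference, the classical R2R baseline mentioned above is often implemented as an exponentially weighted moving average (EWMA) controller. The following is a minimal sketch under stated assumptions: the process is approximated as `y = offset + gain * u`, the gain is known, and the `drifting_process` plant with its numbers is purely hypothetical. It is not taken from Ref. [53] or from any cited controller.

```python
def ewma_r2r(target, gain, measure, n_runs, lam=0.3, offset_est=0.0):
    """EWMA run-to-run control: re-estimate the process offset after every run."""
    recipes, outputs = [], []
    for run in range(n_runs):
        u = (target - offset_est) / gain   # recipe expected to hit the target
        y = measure(run, u)                # post-process metrology for this run
        # Blend the newly observed offset with the previous estimate
        offset_est = lam * (y - gain * u) + (1 - lam) * offset_est
        recipes.append(u)
        outputs.append(y)
    return recipes, outputs


def drifting_process(run, u):
    # Hypothetical plant: gain 2.0, offset starts at 5.0 and drifts by 0.05 per run
    return 5.0 + 0.05 * run + 2.0 * u


recipes, outputs = ewma_r2r(target=100.0, gain=2.0, measure=drifting_process, n_runs=50)
```

The controller removes the initial offset within a few runs, but a steady ramp drift leaves a small residual error (roughly the drift per run divided by `lam`), which is one reason adaptive or learning-based controllers are explored for such processes.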

A representative case in Taiwan’s semiconductor industry is Taiwan Semiconductor Manufacturing Company (TSMC), which employs convolutional neural networks (CNNs) for automated defect classification during wafer inspection. By training CNNs on large, annotated image datasets and integrating them into inline inspection workflows, TSMC achieved a 27% reduction in false positives, substantially improving inspection reliability and reducing unnecessary rework [49]. Complementing these efforts, TSMC also applies feed-forward control models, utilizing multiple regression and GBMs, to predict lithographic parameters such as dose, focus, and etch time based on upstream metrology data [50]. Together, these technologies enhance process stability at nanometer precision, demonstrating how ML can serve both diagnostic and prescriptive roles in high-precision manufacturing.
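The feed-forward idea can be illustrated with a deliberately simplified sketch: a single-variable least-squares fit that maps one upstream measurement to a recommended setting for the next step. The variable names and all numbers below are hypothetical; the systems described in Ref. [50] use multiple regression and GBMs over many inputs, which this example does not reproduce.

```python
def fit_line(x, y):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical history: upstream film thickness (nm) and the exposure dose
# (mJ/cm^2) that produced an on-target result for that wafer
thickness = [98.0, 99.0, 100.0, 101.0, 102.0]
best_dose = [29.0, 29.5, 30.0, 30.5, 31.0]
slope, intercept = fit_line(thickness, best_dose)


def feed_forward_dose(measured_thickness):
    """Recommend a dose for the incoming wafer before it is processed."""
    return slope * measured_thickness + intercept


recommended = feed_forward_dose(100.5)
```

The key design point is that the correction is computed from upstream data before the step runs, in contrast to feedback control, which reacts only after the result has been measured.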

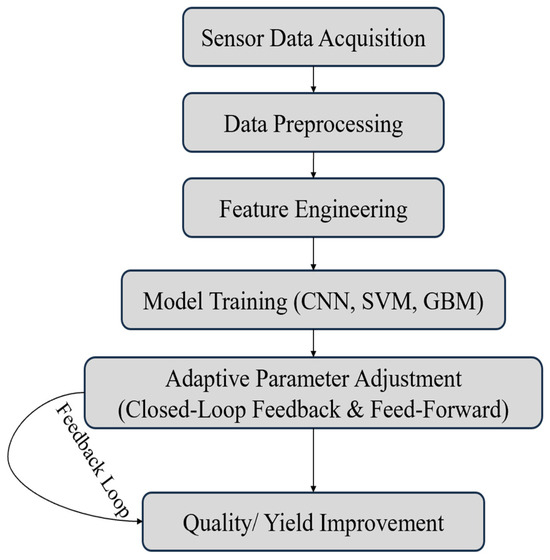

Figure 8 illustrates a typical ML-enabled process optimization pipeline. The workflow begins with data acquisition from industrial sensors, followed by preprocessing and feature engineering. ML models are then trained to detect patterns or predict outcomes, feeding real-time monitoring and adaptive control mechanisms through either feedback or feed-forward loops. These systems can adjust process parameters proactively, improving yield, efficiency, and overall product quality. The role of ML is not limited to production line inspection but also extends to energy optimization and maintenance, which are discussed in the subsequent sections.

Figure 8.

Machine learning-enabled process optimization pipeline.

3.2. Energy Efficiency via ML

While process optimization targets product quality and yield, energy efficiency has become an equally critical objective, particularly in high-intensity manufacturing settings such as semiconductor cleanrooms, precision electronics plants, and large-scale smart factories. These environments rely heavily on energy-intensive subsystems, such as HVAC, chillers, and lighting, which collectively account for 40–60% of total electricity consumption. As energy prices rise and environmental regulations tighten, manufacturers are increasingly adopting machine learning (ML) to enhance operational energy efficiency without compromising system stability or occupant comfort.

One of the most promising approaches in this domain is deep reinforcement learning (DRL), which enables intelligent agents to autonomously learn optimal energy control strategies through interaction with their environment. For example, a Deep Q-Network (DQN) applied to a campus-scale HVAC system achieved up to 25% energy savings while maintaining thermal comfort and air quality [54]. DRL agents dynamically adjust control variables such as air flow rate, setpoints, and damper positions in response to time-varying occupancy and thermal conditions.
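The learning loop behind such agents can be sketched with tabular Q-learning on a toy thermal zone. This is a simplified stand-in, not a DQN and not the system in Ref. [54]: a DQN replaces the table with a neural network, and the five temperature bins, dynamics, and reward weights below are assumptions chosen only for illustration.

```python
import random

N_STATES = 5        # temperature bins; bin 2 is the comfort setpoint (hypothetical)
ACTIONS = (0, 1)    # 0 = cooling off, 1 = cooling on
ENERGY_COST = 0.3   # penalty per step with cooling on (hypothetical)


def step(state, action):
    """Toy dynamics: cooling lowers the bin by one, otherwise heat load raises it."""
    nxt = max(state - 1, 0) if action == 1 else min(state + 1, N_STATES - 1)
    reward = -abs(nxt - 2) - ENERGY_COST * action   # discomfort plus energy use
    return nxt, reward


def train(episodes=2000, horizon=20, alpha=0.2, gamma=0.9, eps=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = rng.randrange(N_STATES)                 # random initial temperature
        for _ in range(horizon):
            if rng.random() < eps:                  # explore
                a = rng.choice(ACTIONS)
            else:                                   # exploit current estimate
                a = max(ACTIONS, key=lambda act: q[s][act])
            s2, r = step(s, a)
            # Q-learning update toward the bootstrapped one-step target
            q[s][a] += alpha * (r + gamma * max(q[s2]) - q[s][a])
            s = s2
    # Greedy policy: best action for each temperature bin
    return [max(ACTIONS, key=lambda act: q[s][act]) for s in range(N_STATES)]


policy = train()
```

With these settings the greedy policy is expected to switch cooling on in the warm bins (3 and 4) and off in the cool bins (0 and 1); the choice at the setpoint bin is nearly indifferent because the two actions have almost equal value.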

In Taiwan, Delta Electronics has spearheaded the application of DRL-based frameworks in industrial energy management. Their AI-driven HVAC optimization systems—designed for semiconductor fabs and large commercial buildings—achieve energy savings of 10–18% by balancing temperature constraints, electricity costs, and process emissions [55]. These systems integrate real-time IoT sensor data (e.g., CO2 levels, occupancy, ambient temperature) with edge computing platforms, enabling decentralized inference and fast actuation. Multi-agent DRL frameworks further improve system scalability and responsiveness across multiple HVAC zones [56]. Beyond DRL, federated learning (FL) is gaining momentum as a privacy-preserving solution for collaborative energy optimization across factories. FL enables distributed model training without requiring raw data to be shared between facilities, addressing confidentiality concerns while still supporting global learning objectives. In Taiwan, FL-based AI-HVAC systems have been deployed to support tasks such as lighting control, chiller scheduling, and energy load forecasting using LSTM-based time series models [57].
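The core of federated learning described above is that sites exchange model parameters rather than raw data. A minimal sketch of federated averaging (FedAvg) on a one-feature linear model follows; the two "factory" datasets, the learning rate, and the round count are hypothetical, and production FL systems add secure aggregation, communication handling, and far larger models such as the LSTM forecasters mentioned in the text.

```python
def local_update(weights, data, lr=0.1, epochs=20):
    """Gradient descent on y = w * x + b using only this site's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b)


def fed_avg(client_weights, client_sizes):
    """Average client parameters, weighted by local sample count."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return tuple(
        sum(cw[i] * n for cw, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    )


def federated_fit(sites, rounds=200):
    global_w = (0.0, 0.0)
    for _ in range(rounds):
        # Each site trains locally; only the parameters leave the site
        updates = [local_update(global_w, data) for data in sites]
        global_w = fed_avg(updates, [len(data) for data in sites])
    return global_w


# Hypothetical normalized (load, energy) pairs held privately by two factories
site_a = [(0.0, 1.0), (0.2, 1.4), (0.4, 1.8)]
site_b = [(0.6, 2.2), (0.8, 2.6), (1.0, 3.0)]
w, b = federated_fit([site_a, site_b])
```

Neither site sees the other's operating range, yet the shared model recovers the relationship that holds across both, which is the benefit the cross-factory deployments aim for.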

Digital twins are also increasingly used to simulate factory energy dynamics and pre-train RL agents before real-world deployment. In a recent case from a Hsinchu-based semiconductor fab, a virtual HVAC environment was used to train DRL agents, resulting in a 24.6% improvement in chiller efficiency upon transfer to the actual system [58]. These simulations reduce the cost and risk of live training while enhancing the robustness of the model. Table 1 summarizes representative ML techniques applied to industrial energy optimization, highlighting their application scenarios and demonstrated benefits.

Table 1.

Machine learning models applied to industrial energy efficiency.

Collectively, these innovations signal a shift from static, rule-based energy control toward adaptive, intelligent frameworks powered by machine learning. In Taiwan’s climate-sensitive and energy-intensive manufacturing sector, such systems are becoming essential for achieving ESG goals, reducing carbon footprints, and enhancing long-term operational resilience. The following section expands on how similar ML techniques are now being leveraged for predictive maintenance and intelligent asset management across high-tech industries.

3.3. Predictive Maintenance

As manufacturing systems grow in scale and complexity, the ability to anticipate equipment failures and optimize maintenance scheduling has become crucial for ensuring operational continuity, cost efficiency, and safety. Predictive maintenance (PdM) leverages machine learning (ML) to transform maintenance from a reactive activity into a proactive, intelligence-driven function that aligns with Industry 4.0 principles.

A wide range of ML techniques are now employed in PdM frameworks. Supervised learning models, such as random forests (RFs), gradient boosting machines (GBMs), and support vector machines (SVMs), are frequently used for fault classification and remaining useful life (RUL) estimation in equipment like HVAC compressors, industrial motors, and robotic actuators. For example, CNNs trained on vibration data have achieved over 90% accuracy in multi-class fault diagnosis of robotic components in semiconductor cleanroom environments [9]. In data-scarce settings, unsupervised learning techniques—such as autoencoders and clustering—have proven useful for anomaly detection and early fault identification without labeled failure data [61].

Taiwanese manufacturers have increasingly adopted edge AI platforms, such as NVIDIA Jetson Nano and Raspberry Pi, to support real-time inference at the point of operation. These devices enable latency-sensitive predictive tasks—such as spindle motor monitoring or tool wear prediction—without relying on continuous cloud connectivity. Recent studies have shown that integrating edge AI with federated learning (FL) allows distributed PdM models to be trained across multiple factory sites while preserving sensitive machine data [62]. This approach is particularly relevant in Taiwan’s highly networked yet privacy-conscious smart factory environments.

Advanced PdM systems are also beginning to incorporate reinforcement learning (RL) and digital twins to optimize maintenance timing, resource allocation, and production coordination. In one notable example, a hybrid RL framework was applied to a bioethanol–ethylene production system to adaptively balance maintenance interventions with changing demand ratios [62]. These approaches demonstrate the feasibility of integrating predictive asset management with production planning in multi-product and dynamic settings. Table 2 summarizes representative ML techniques applied in predictive maintenance, their application scenarios, and demonstrated benefits.

Table 2.

Representative machine learning techniques for predictive maintenance.

In summary, ML-powered PdM enables real-time monitoring, fault prediction, and intelligent scheduling that reduces downtime, extends equipment life, and aligns maintenance operations with ESG goals. By combining predictive analytics with decentralized computing and privacy-aware model architectures, Taiwan’s high-tech industry is laying the groundwork for fully autonomous, resilient manufacturing systems. The subsequent section discusses how these trends are evolving further through hybrid control systems and explainable AI.

Building upon predictive maintenance, the next stage of intelligent manufacturing involves integrating machine learning with traditional control systems to enhance production adaptability and precision. The following section examines how hybrid control architectures integrate classical PID and MPC methods with data-driven intelligence, enabling real-time, context-aware decision-making on the shop floor.

4. ML-Enhanced Predictive Control

Predictive control involves the proactive regulation of dynamic systems by forecasting future states, interactions, and environmental disturbances. Traditionally, Model Predictive Control (MPC) has dominated this field, providing a principled approach based on accurate system models. However, MPC’s reliance on precise models restricts its applicability in complex, nonlinear, or poorly characterized systems. Integrating machine learning (ML) into predictive control frameworks addresses these challenges by facilitating nonlinear system approximation, adaptive policy learning, and real-time control in high-dimensional, multivariable environments. This section examines two main application domains where ML enhances or replaces traditional model-based predictive control techniques.

4.1. Real-Time Control via ML

In real-time process control, ML models serve as forward predictors of system behavior, enabling controllers to preemptively adjust inputs based on projected state trajectories. For instance, in chemical processing and pharmaceutical manufacturing, Long Short-Term Memory (LSTM) networks and Gaussian Process Regression (GPR) have been trained on sensor time series data to anticipate critical state transitions in batch and continuous processes. Ref. [63] implemented an LSTM-based model predictive control (MPC) framework in a distillation process, achieving a 31% reduction in control error compared to conventional PID controllers. Their approach leveraged recurrent neural networks to capture system dynamics more effectively, enabling improved real-time optimization under nonlinear process conditions. According to Ref. [64], the Industrial Technology Research Institute (ITRI) in Taiwan partnered with fine chemical manufacturers to deploy lightweight neural network controllers on edge computing-enabled programmable logic controllers (PLCs), allowing for real-time predictive regulation of temperature-sensitive synthesis reactions. Additionally, ML facilitates the development of soft sensors that infer process variables that are difficult to measure directly, such as intermediate species concentrations, using autoencoder architectures and support vector regression (SVR), thus improving system observability and control accuracy.
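The pattern of "learned forward predictor plus receding-horizon optimization" can be sketched compactly. In this hedged example a two-parameter least-squares model stands in for the LSTM predictor, the `plant` function is a hypothetical first-order process, and the optimizer simply enumerates a small grid of candidate input sequences; none of this reproduces the setup of Ref. [63].

```python
from itertools import product


def plant(x, u):
    # Hypothetical "true" process, unknown to the controller
    return 0.9 * x + 0.5 * u


def fit_predictor(xs, us, x_next):
    """Least-squares fit of x_next = a * x + b * u (stand-in for a trained LSTM)."""
    sxx = sum(x * x for x in xs)
    suu = sum(u * u for u in us)
    sxu = sum(x * u for x, u in zip(xs, us))
    sxy = sum(x * y for x, y in zip(xs, x_next))
    suy = sum(u * y for u, y in zip(us, x_next))
    det = sxx * suu - sxu * sxu
    return (sxy * suu - suy * sxu) / det, (suy * sxx - sxy * sxu) / det


def mpc_step(x, setpoint, model, candidates, horizon=3):
    """Pick the first input of the candidate sequence with the lowest predicted cost."""
    a, b = model
    best_u, best_cost = None, float("inf")
    for seq in product(candidates, repeat=horizon):
        x_pred, cost = x, 0.0
        for u in seq:
            x_pred = a * x_pred + b * u          # roll the learned model forward
            cost += (x_pred - setpoint) ** 2
        if cost < best_cost:
            best_u, best_cost = seq[0], cost
    return best_u


def run_demo(steps=40, setpoint=2.0):
    # 1) Log open-loop data and fit the predictor
    us = [1.0, -1.0, 0.5, 0.0, -0.5, 1.0, 0.2, -0.8]
    xs, x_next, x = [], [], 1.0
    for u in us:
        xs.append(x)
        x = plant(x, u)
        x_next.append(x)
    model = fit_predictor(xs, us, x_next)
    # 2) Closed loop: re-plan at every step, apply only the first input
    candidates = [i / 5 for i in range(-5, 6)]   # inputs from -1.0 to 1.0
    x = 0.0
    for _ in range(steps):
        x = plant(x, mpc_step(x, setpoint, model, candidates))
    return model, x


model, final_state = run_demo()
```

Exhaustive enumeration is only viable for tiny horizons and input grids; practical implementations use gradient-based or sampling-based optimizers, but the structure of predicting, scoring, applying the first move, and repeating is the same.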

4.2. Multivariable Control Systems

Multivariable control systems consist of multiple interacting feedback loops with nonlinear dependencies, common in semiconductor equipment, aerospace actuators, and automotive powertrains. Data-driven ML models, including deep neural networks (DNNs), attention-based transformers, and hybrid neural-MPC frameworks, offer robust alternatives for capturing these complex interactions. For example, Ref. [65] applied DNNs to aerospace fuel injection systems, demonstrating an 18% reduction in fuel consumption variability under dynamic loading. In the semiconductor domain, according to Ref. [66], TSMC and ASE Group in Taiwan have investigated multi-output regression models for coordinated etching and deposition control, optimizing parameters such as layer thickness, sidewall angle, and uniformity. These models are integrated within closed-loop control architectures to adjust gas flow rates, RF power, and chamber temperature in real time.

At the same time, reinforcement learning (RL) algorithms like Soft Actor–Critic (SAC) and Proximal Policy Optimization (PPO) are gaining traction for learning optimal policies in dynamic and uncertain industrial environments. Promising applications include robotic path planning and multi-zone HVAC control, where competing objectives—energy efficiency versus occupant comfort—require adaptive trade-offs. Table 3 summarizes various ML approaches utilized in predictive control systems, along with their respective industrial application domains and noted performance improvements as follows:

Table 3.

Machine learning approaches in predictive control systems.

These developments highlight that ML is more than just a supplementary enhancement; it represents a paradigm shift toward adaptive, scalable, and intelligent control system design. However, moving from pilot implementations to robust, industrial-scale deployments involves significant challenges. The following section examines the practical obstacles encountered in Taiwan’s industrial sectors, including complexities in system integration, data quality issues, model generalization difficulties, and barriers to cyber–physical implementation, drawing on case studies from leaders such as TSMC, Foxconn, and Delta Electronics.

While hybrid predictive control systems demonstrate significant performance gains, their widespread deployment faces notable barriers. These include technical complexity, model transparency, and challenges related to industrial standardization. The following discussion outlines these practical limitations and identifies future research directions that could support scalable, explainable, and resilient ML adoption in manufacturing.

5. Challenges and Future Directions

While machine learning (ML) has demonstrated significant potential to revolutionize industrial optimization and predictive control, its widespread adoption in real-world production environments remains limited. Many documented successes are still confined to pilot-scale or experimental settings, with only a minority achieving seamless and sustained integration into operational workflows. This implementation gap results from a complex interplay of technical, infrastructural, and organizational barriers that collectively hinder the scalability, robustness, and trustworthiness of ML solutions in mission-critical industrial systems.

Overcoming these barriers requires not only robust model development but also seamless integration with diverse hardware infrastructures. Legacy production lines often rely on equipment with limited digital interfaces, complicating real-time data capture and process monitoring. To address this, sensor networks must be standardized to ensure consistent, high-quality data streams, and edge computing nodes are increasingly deployed to enable low-latency inference near the data source. These hardware constraints and infrastructural mismatches remain significant bottlenecks for scaling ML adoption in industrial settings.

Additionally, ensuring interpretability and transparency is crucial for building trust in ML-driven decision-making. Technical methods such as SHAP values, LIME explanations, and saliency maps are commonly used to enhance the interpretability of complex ML models by quantifying feature contributions and clarifying model logic. These approaches help operators understand how model predictions translate into actionable process adjustments, bridging the gap between black-box outputs and practical industrial insights.

5.1. Data Quality and Availability

The effectiveness of machine learning (ML) in industrial applications depends critically on the quality, completeness, and consistency of input data. However, real-world industrial data sets often face challenges that undermine model reliability, generalizability, and reproducibility. Sensor data often suffers from environmental noise, hardware degradation, signal intermittency, and calibration drift—conditions that accumulate over time due to wear, contamination, or sensor aging. Distributed logging infrastructures may experience data loss, packet delays, or incomplete records due to communication failures and asynchronous system architectures. Even when data collection is continuous, labels are often sparse or missing, particularly in predictive maintenance scenarios where failures are infrequent yet consequential. These factors present significant hurdles to training effective supervised learning models.

Moreover, data heterogeneity remains a significant challenge within industrial ecosystems. Data is collected from a variety of devices provided by multiple vendors, stored in incompatible formats, and sampled at different temporal resolutions. The lack of standardized data schemas or ontologies complicates the integration and unification of datasets across production lines, which restricts model scalability and generalizability across various factory sites. Additionally, many industrial sites lack formalized data governance frameworks, resulting in inconsistent metadata, poor traceability, and limited opportunities for reusing historical data in model development and benchmarking.
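One concrete consequence of mixed temporal resolutions is that streams must be aligned before they can be joined into a training table. The sketch below shows an "as-of" alignment: for each timestamp of a fast sensor, it takes the most recent reading of a slower sensor, and marks the value as missing when that reading is too old. The function name, the staleness limit, and the sample values are hypothetical.

```python
from bisect import bisect_right


def align_asof(base_times, other_times, other_values, max_age):
    """For each base timestamp, return the latest other-sensor value recorded
    at or before it, or None if that value is older than max_age seconds.
    Assumes other_times is sorted in ascending order."""
    aligned = []
    for t in base_times:
        i = bisect_right(other_times, t) - 1     # index of last reading <= t
        if i >= 0 and t - other_times[i] <= max_age:
            aligned.append(other_values[i])
        else:
            aligned.append(None)                 # no usable reading: leave a gap
    return aligned


# Hypothetical example: a 1 Hz vibration stream joined with sparse temperature logs
vibration_times = [0, 1, 2, 3, 4, 5]
temperature_times = [0, 2.5]
temperature_values = [10.0, 12.0]
joined = align_asof(vibration_times, temperature_times, temperature_values, max_age=2)
```

Making staleness explicit, rather than silently forward-filling, keeps gaps visible to downstream models and to anyone auditing the dataset.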

To address these challenges, several advanced ML-driven strategies have been adopted in practice. Transfer learning facilitates knowledge transfer by fine-tuning models that have been pretrained on related domains, thereby enhancing performance when dealing with small or noisy datasets. Generative models, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), are increasingly utilized to generate rare failure scenarios, thereby augmenting training datasets synthetically. For instance, Ref. [67] demonstrated that GAN-based data augmentation improves fault classification robustness in rotating machinery, particularly under sparse or noisy failure conditions, such as pump cavitation. Federated learning enables distributed model training without the need to share raw data, thus preserving privacy in sensitive manufacturing environments [68]. Self-supervised learning enables the extraction of meaningful representations from unlabeled sensor streams, which can then be utilized for pretraining before fine-tuning with limited labeled examples. Finally, digital twins serve as virtual replicas of physical systems, simulating diverse operating conditions and producing synthetic labeled data to support model development without disrupting ongoing production processes [69].

Empirical evidence supports these approaches. Recent reviews emphasize the value of combining transfer learning with generative data augmentation techniques for fault diagnosis in rotating machinery. Ref. [70] systematically evaluated state-of-the-art methods, including GAN-based synthetic data generation, and reported that such approaches significantly improve classification accuracy (often exceeding 90%), especially in limited-data conditions. These hybrid frameworks enhance feature generalization and enable robust model adaptation across varying operating conditions and fault domains. Similarly, Ref. [71] demonstrated that federated transformer models trained via self-supervised learning (SSL) outperformed centralized supervised models by over 18% in cross-factory generalization tasks. Collectively, these advances signify a shift in industrial AI from the conventional emphasis on “more data equals better models” to a data-centric paradigm that prioritizes data quality, simulation, labeling, and adaptability within the model development pipeline.

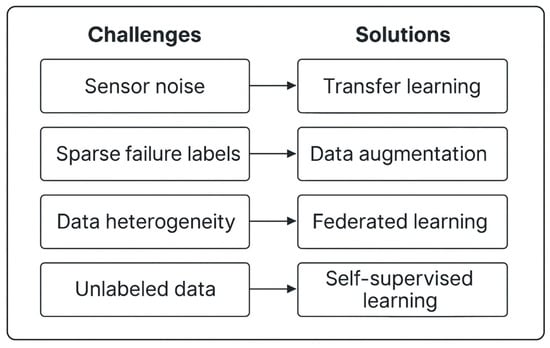

Figure 9 visually contrasts typical industrial data challenges with ML-driven solutions, such as GANs, federated learning, SSL, and digital twins. It illustrates the shift towards intelligent data engineering, which facilitates more robust and transferable AI models in constrained and noisy data conditions.

Figure 9.

Common data challenges and machine learning–driven solutions in industrial environments.

Despite these promising developments in data quality enhancement, a critical subsequent challenge arises in understanding how ML models make decisions. As model complexity and autonomy increase, interpretability and trust become essential for broader industrial adoption. This theme is discussed in the following section.

5.2. Model Interpretability and Trust

Despite the significant success of machine learning (ML) in industrial classification, prediction, and control tasks, the lack of interpretability remains a major barrier to widespread adoption. Many high-performing models, such as deep neural networks (DNNs), ensemble learners (e.g., gradient boosting, random forests), and reinforcement learning (RL) agents, are often viewed as “black boxes” because their internal decision-making processes are largely opaque. In high-stakes domains like semiconductor fabrication, pharmaceutical manufacturing, and energy grid operations, transparency is essential not only for operational accountability but also for regulatory compliance and fostering operator trust.

Interpretability methods fall into two broad categories. First, inherently interpretable models, such as decision trees, rule-based systems, and generalized additive models (GAMs), provide transparency by design. However, these models may struggle to capture complex, nonlinear, or high-dimensional relationships, often limiting their predictive power. Second, post hoc explanation techniques aim to offer insights into already trained complex models, revealing which features drive model predictions. Common approaches include the following:

- SHAP (SHapley Additive Explanations): Based on cooperative game theory, it provides consistent and model-agnostic feature attributions.

- LIME (Local Interpretable Model-agnostic Explanations): This method approximates model behavior locally using simple surrogate models, allowing for intuitive explanations.

- Integrated Gradients: This technique attributes importance by accumulating gradients along a path from a baseline input to the actual input in differentiable deep learning models, proving especially effective in vision applications.
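To make the game-theoretic idea behind SHAP concrete, the following sketch computes exact Shapley values by enumerating every feature coalition. It is an illustration under stated assumptions, not the SHAP library: practical tools approximate this sum because its cost grows exponentially with the number of features, and the `fault_score` model, its features, and the all-zero baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley attribution of model(x) - model(baseline) to each feature."""
    n = len(x)

    def value(coalition):
        # Features in the coalition take their real value, the rest the baseline
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for k in range(n):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))  # marginal contribution
        phi.append(total)
    return phi


def fault_score(z):
    # Hypothetical HVAC fault score: one additive term and one interaction term
    supply_temp_dev, valve_pos, fan_speed = z
    return 2.0 * supply_temp_dev + valve_pos * fan_speed


phi = shapley_values(fault_score, x=[1.0, 2.0, 3.0], baseline=[0.0, 0.0, 0.0])
```

For this model the additive feature receives its full effect (2.0), while the interaction between the other two features (6.0) is split evenly between them, and the attributions sum to the difference between the prediction and the baseline output.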

These techniques have shown practical value. For instance, Ref. [72] applied SHAP in HVAC fault diagnosis, allowing maintenance engineers to prioritize interventions based on key contributing features. A growing body of research emphasizes the need for explainable artificial intelligence (XAI) in industrial fault diagnostics, particularly in high-stakes environments where human operators rely heavily on AI-driven decisions. Ref. [73] provides a comprehensive review of XAI techniques—such as LIME, SHAP, and rule-based methods—emphasizing their capacity to enhance decision transparency in fault detection systems. These methods enhance trust and operational responsiveness by making AI predictions interpretable and actionable, even in safety-critical applications.

Despite their promise, deploying explainable AI (XAI) methods in industrial contexts poses several challenges. Many current techniques are not optimized for real-time, streaming data environments typical of manufacturing. Semantic gaps between data scientists and domain engineers can diminish the usefulness of explanations unless they are tailored to align with operational knowledge. The modality of the explanation, whether graphical, textual, local, or global, significantly affects how users comprehend model outputs and make subsequent decisions. Ref. [74] emphasized that mismatched explanation types can reduce user trust or even lead to misinformed actions, particularly in high-stakes domains. Moreover, most XAI methods focus on pattern correlation rather than causal inference, which can lead to misinterpretation in dynamic control environments.

To address these limitations, causal explanation frameworks are emerging. These approaches aim to uncover cause-and-effect relationships rather than mere correlations, facilitating actionable interventions in complex systems, such as predictive maintenance or process control. Simultaneously, hybrid symbolic–neural models and physics-informed neural networks (PINNs) incorporate domain knowledge into neural architectures, enhancing interpretability while preserving modeling power and supporting safety in critical control applications. Importantly, regulatory and standards frameworks increasingly call for interpretability. The EU AI Act imposes binding transparency, record-keeping, and human-oversight obligations on high-risk AI systems, while ISO/IEC 22989 (AI concepts and terminology) and the voluntary NIST AI Risk Management Framework provide supporting vocabulary and guidance on traceability, auditability, and human-understandable explanations for AI systems deployed in regulated industrial settings. Table 4 summarizes six widely used XAI techniques, highlighting their applications, strengths, and limitations in industrial ML contexts to aid in selecting the most suitable methods.

Table 4.

Comparison of XAI methods in industrial ML contexts.

5.3. Integration with Legacy Systems

Integrating modern machine learning (ML) techniques into legacy industrial systems presents challenges that extend beyond simple technological incompatibility, encompassing organizational, infrastructural, and regulatory factors. While ML shows significant promise in areas such as process optimization, predictive maintenance, and fault detection, many production environments still depend heavily on outdated infrastructures. Common platforms, such as programmable logic controllers (PLCs), supervisory control and data acquisition (SCADA) systems, and distributed control systems (DCS), often lack the accessibility, computational power, and interface flexibility necessary for advanced ML applications. These systems prioritize deterministic operation, real-time responsiveness, and system uptime, making it technically and operationally complex to retrofit them with probabilistic, latency-sensitive ML agents.

A significant technical barrier in industrial AI deployment is protocol incompatibility. Legacy control systems often rely on communication standards such as Modbus or CAN bus, which are not directly compatible with modern IP-based interfaces such as MQTT (a publish–subscribe protocol) or REST APIs. To address this, edge computing platforms such as NVIDIA Jetson Nano and Raspberry Pi have been used as protocol bridges. Ref. [78] demonstrated that such edge nodes can preprocess data, perform protocol translation, and execute local inference tasks, thus enabling integration with AI frameworks without altering legacy PLC infrastructure.
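The translation step performed by such a bridge can be sketched without any network code. The example below decodes two 16-bit Modbus holding registers into a 32-bit float and wraps the result as a topic and JSON payload suitable for an MQTT publish call. The big-endian word order, the topic scheme, and the device and tag names are assumptions (register layout varies by vendor), and the actual Modbus read and MQTT publish would be handled by client libraries not shown here.

```python
import json
import struct


def registers_to_float(high_word, low_word):
    """Combine two 16-bit registers into an IEEE 754 float32.
    Assumes big-endian word order; some devices swap the two words."""
    return struct.unpack(">f", struct.pack(">HH", high_word, low_word))[0]


def to_mqtt_message(device_id, tag, registers, timestamp):
    """Build the (topic, payload) pair an edge node would hand to an MQTT client."""
    value = registers_to_float(*registers)
    topic = f"factory/{device_id}/{tag}"          # hypothetical topic scheme
    payload = json.dumps({"ts": timestamp, "value": round(value, 3)})
    return topic, payload


# Hypothetical reading: registers 0x41CC, 0x0000 encode 25.5
topic, payload = to_mqtt_message("plc7", "chamber_temp", (0x41CC, 0x0000), 1700000000)
```

Keeping decoding and message construction as pure functions makes the bridge easy to test offline, which matters when the PLC itself cannot be taken out of production for experiments.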

Digital twins offer a promising solution. These virtual replicas of physical systems enable the development, training, and validation of ML models in non-disruptive simulated environments. By combining physical modeling with data-driven learning, digital twins replicate complex, nonlinear dynamics. Ref. [79] reported that implementing digital twins in legacy CNC machining systems reduced unplanned downtime by 21% during retrofitting phases. Concurrently, hybrid optimization frameworks are gaining traction. These frameworks combine traditional control logic (e.g., PID controllers or fuzzy rules) with ML-based predictive modules, forming semi-autonomous agents capable of real-time adaptation. This approach is particularly practical in sectors like semiconductor manufacturing, where mechanistic domain knowledge is embedded in legacy systems but can be enhanced with live inferential intelligence [2].

The human factor remains crucial in integrating legacy systems. Many facilities lack personnel skilled in both ML techniques and legacy system configurations. This gap has driven the development of automated machine learning (AutoML) pipelines tailored for SCADA and OPC-UA environments. AutoML systems streamline feature extraction, model selection, hyperparameter tuning, and training, and they deploy the resulting models into operator-friendly dashboards, lowering barriers for control engineers. However, applying AutoML across heterogeneous industrial sites remains challenging due to variability in data characteristics and resource constraints. Ref. [80] reviewed foundational AutoML frameworks and emphasized the importance of modularity and adaptability to support flexible deployment scenarios. Their findings suggest that a modular AutoML architecture can reduce the need for retraining across production sites, thereby accelerating cross-factory adoption.

Despite these advances, systemic challenges persist. The introduction of edge devices and ML layers expands the cyber-attack surface and raises operational technology (OT) security concerns. Most industrial sites also lack standardized AI-OT integration blueprints, resulting in fragmented, ad hoc implementations with limited scalability. Furthermore, model drift poses risks when ML models are deployed in slowly evolving equipment environments with sparse labeling feedback.
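When labels are sparse, drift can still be monitored on the model inputs alone. The sketch below uses the population stability index (PSI), a technique chosen here for illustration and not drawn from the reviewed literature, to compare a reference window of a sensor feature with a recent window. The function name, the bin count, and the smoothing constant `eps` are assumptions, and the commonly quoted alert thresholds (roughly 0.1 and 0.25) are rules of thumb that would need tuning per process.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """Label-free drift score between two samples of one feature.

    PSI = sum over bins of (cur_share - ref_share) * ln(cur_share / ref_share).
    Bin edges are taken from the reference sample; values outside that
    range are counted in the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1
        # eps avoids log(0) for empty bins
        return [max(c / len(values), eps) for c in counts]

    ref_s, cur_s = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_s, cur_s))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]       # commissioning data
    recent = [0.5 + i / 200 for i in range(100)]   # shifted distribution
    print(population_stability_index(baseline, baseline))
    print(population_stability_index(baseline, recent))
```

A score near zero indicates that the recent data resemble the reference window, while a large score suggests that the model is operating outside the conditions it was trained on and that relabeling or retraining should be considered.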

To address these challenges, international standards bodies have developed frameworks that guide the secure and interoperable deployment of ML in industrial environments. The Industrial Internet Reference Architecture (IIRA), the Reference Architectural Model Industrie 4.0 (RAMI 4.0) [80], and standards from IEC TC65 and ISO/IEC JTC 1/SC 42 emphasize modularity, traceability, and role-based functional layering [80]. These architectures promote a clear separation among sensing, preprocessing, inferencing, and actuation layers to enhance validation, maintainability, and regulatory compliance.
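The layered separation described above can be illustrated with a small pipeline in which each layer is an independent function with a narrow interface and every decision is logged for traceability. This is a hedged sketch only: the names `Reading`, `preprocess`, `infer`, `actuate`, and `run_pipeline`, the `FULL_SCALE` constant, the threshold, and the command strings are hypothetical, and the mean-based score merely stands in for a trained model. None of these details are prescribed by RAMI 4.0 or IIRA.

```python
from dataclasses import dataclass

FULL_SCALE = 100.0  # assumed sensor full-scale value for normalization


@dataclass(frozen=True)
class Reading:
    """Output of the sensing layer: raw values from one sensor."""
    sensor_id: str
    values: tuple


def preprocess(reading: Reading) -> list:
    """Preprocessing layer: scale raw values into [0, 1]."""
    return [min(max(v / FULL_SCALE, 0.0), 1.0) for v in reading.values]


def infer(features: list) -> float:
    """Inference layer: placeholder score (mean) standing in for a model."""
    return sum(features) / len(features)


def actuate(score: float, threshold: float = 0.8) -> str:
    """Actuation layer: map the score to a discrete, auditable command."""
    return "REDUCE_SPEED" if score > threshold else "HOLD"


def run_pipeline(reading: Reading, audit_log: list) -> str:
    """Chain the layers and record each decision for later review."""
    features = preprocess(reading)
    score = infer(features)
    command = actuate(score)
    audit_log.append(
        {"sensor": reading.sensor_id, "score": score, "command": command}
    )
    return command


if __name__ == "__main__":
    log = []
    print(run_pipeline(Reading("spindle-1", (90.0, 95.0, 100.0)), log))
    print(run_pipeline(Reading("spindle-1", (10.0, 20.0)), log))
    print(log)
```

Because each layer can be tested and replaced in isolation, the inference function can be swapped for a validated model without touching the sensing or actuation code, which is the maintainability benefit that layered reference architectures aim for.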

6. Discussion and Implications for Industrial ML Deployment

This review presents the transformative impact of machine learning (ML) on Taiwan’s high-precision, high-mix manufacturing industries. Industry leaders such as Foxconn, Delta Electronics, and Taiwan Semiconductor Manufacturing Company (TSMC) have achieved measurable improvements in process performance by employing CNN-based defect inspection, reinforcement learning-based adaptive scheduling, and federated learning-based cross-factory energy optimization. These improvements take the form of higher defect identification accuracy, improved energy efficiency, and more robust scheduling. Taiwan’s manufacturing sector, with its dense industrial clusters and highly customized production lines, presents a strong case for the deployment of ML-based algorithms. Embedding ML at the edge through platforms such as NVIDIA Jetson Nano has enabled real-time inference and mitigated data privacy and latency issues. Edge deployment has proven particularly valuable for mission-critical and energy-intensive tasks.

The patent analysis reveals a distinct trend among leading techniques: a shift from conventional optimization frameworks to hybrid, data-centric control systems. A comparative patent analysis of industrial ML strategies in Taiwan and in Western countries reveals significant divergence. Taiwanese developments concentrate heavily on lightweight deployment, cross-factory model generalization, and privacy-preserving learning frameworks. This trend is facilitated by combining explainable artificial intelligence (XAI) techniques with cyber–physical control loops. In high-risk environments, SHAP, LIME, and surrogate modeling have been found to enhance model transparency and operator trust. Hybrid architectures that fuse digital twins with classical model predictive control (MPC) and real-time learning agents also enable factories to link legacy equipment with next-generation control logic.

It is important to acknowledge the limitations of this work. First, it relies heavily on publicly available patent information and, therefore, may understate proprietary innovation or activity by SMEs. Second, the case studies, although informative, rarely incorporate longitudinal measures of performance or operational scalability. Third, socio-organizational factors, such as workforce readiness or cultural resistance to AI, are crucial to successful implementation but fall outside the scope of this analysis.

Future research should focus on domain-adaptive and federated ML systems that generalize across different production lines while ensuring data security. Causal ML frameworks should be adopted to foster greater model explainability and support robust decision-making. Greater emphasis should also be placed on incorporating ML into standardized cyber–physical infrastructures and on designing modular artificial intelligence (AI) toolboxes to facilitate uptake by small and medium-sized enterprises (SMEs). Finally, establishing cross-sector benchmarking datasets and simulation platforms (e.g., digital twins) is necessary to accelerate the development, deployment, and regulatory harmonization of industrial AI solutions.

7. Conclusions

This review identifies three key insights into the deployment of machine learning (ML) within Taiwan’s high-tech manufacturing sector. First, case studies and patent records confirm that ML technologies, namely CNNs for defect detection, reinforcement learning for scheduling, and federated learning for cross-factory optimization, are increasingly reshaping industrial processes in high-mix, high-precision environments. Second, Taiwan’s unique industrial constraints have facilitated a shift from conventional control mechanisms to lightweight, dynamic, and privacy-preserving ML implementations. Third, industrial applications by TSMC, Foxconn, and Delta Electronics demonstrate quantifiable benefits, including improved precision, energy savings, and enhanced process robustness.

To bridge the gap between prototype development and industrial deployment, this paper outlines a comprehensive ML implementation framework. It covers data-centric techniques (e.g., generative augmentation, self-supervised learning), explainable AI techniques (e.g., SHAP, surrogate models), and hybrid control systems integrating digital twins, edge computing, and industrial protocols (e.g., OPC-UA). These components align with internationally recognized reference architectures, such as RAMI 4.0 and IIRA, promoting modularity, traceability, and scalable AI–OT integration.

Nonetheless, several limitations should be noted. This review is based on publicly available patent information and may have overlooked proprietary applications or SME innovations. Case selection and sector concentration may also have introduced methodological bias. Future research should pursue causal ML, federated control systems, and explainable cyber–physical optimization to achieve lifecycle-adaptive, trustworthy industrial AI. Ultimately, ML should be viewed not merely as a computational tool but as a foundational enabler of next-generation cyber–physical manufacturing ecosystems.

Author Contributions

Conceptualization, C.-C.W. and C.-H.C.; methodology, C.-C.W. and C.-H.C.; validation, C.-C.W. and C.-H.C.; formal analysis, C.-C.W. and C.-H.C.; data curation, C.-H.C.; writing – original draft preparation, C.-H.C.; writing – review and editing, C.-C.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Ming Chi University of Technology.

Data Availability Statement

The data supporting the findings of this study are from publicly available patent databases: WIPO PATENTSCOPE, Derwent Innovation, and the Taiwan Intellectual Property Office (TIPO). Some of these databases require registration or institutional access. No new data were generated or analyzed in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kaur, R.; Kumar, R.; Aggarwal, H. Systematic Review of Artificial Intelligence, Machine Learning, and Deep Learning in Machining Operations: Advancements, Challenges, and Future Directions. Arch. Comput. Methods Eng. 2025, 1–54.

- El-Zathry, N.E.; Akinlabi, S.; Woo, W.L. Enhancing friction stir-based techniques with machine learning: A comprehensive review. IOP Sci. 2025, 6, 021001.

- Gasser, M.; Abdelhafiz, M.M.; Yehia, T.; Ebaid, H. Can artificial intelligence and machine learning predict the performance of nano-based drilling fluids? A review. Trends Sci. 2025, 22, 9686.

- Rana, M.K. Deep learning process control for petrochemical plants. SSRN 2025.

- Velesaca, H.O.; Holgado-Terriza, J.A. OPC-UA in artificial intelligence: A systematic review of the integration of data mining and NLP in industrial processes. Manuf. Rev. 2025, 12, 9.

- Wilson, H. A review of AI techniques for improving quality control in electric vehicle production lines. SSRN 2025.

- Yahya, A.; Parvatharedy, S.; Amuhaya, L. Transforming mining energy optimization: A review of machine learning techniques and challenges. Front. Energy Res. 2025, 13, 1569716.

- Islam, M.A.; Islam, N.M. The integration of AI and machine learning in supply chain optimization: Enhancing efficiency and reducing costs. ResearchGate 2025.

- Davoodi, S.; Al-Shargabi, M.; Wood, D.A. Advancement of artificial intelligence applications in hydrocarbon well drilling technology: A review. Appl. Soft Comput. 2025, 176, 113129.

- Saha, D.; Padhiary, M.; Chandrakar, N. AI vision and machine learning for enhanced automation in food industry: A systematic review. Food Humanit. 2025, 4, 100587.

- Graham, O.; Nelson, J. Revolutionizing manufacturing: Artificial intelligence’s role in enhancing operational performance. Preprints 2025.

- Jatavallabhula, J.K.; Masubelele, F. Artificial intelligence for quality assurance in friction stir welding-a review on opportunities and challenges. Eng. Res. Express 2025, 7, 022402.

- Bhuiyan, S.M.Y.; Chowdhury, A.; Hossain, M.S. AI-driven optimization in renewable hydrogen production: A review. Am. J. Inf. Sci. 2025, 6, 76–94.

- Boyd, S.P.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

- Bertsimas, D.; Tsitsiklis, J.N. Introduction to Linear Optimization; Athena Scientific: Belmont, MA, USA, 1997; Volume 6, pp. 479–530.

- Qin, S.J.; Badgwell, T.A. A survey of industrial model predictive control technology. Control Eng. Pract. 2003, 11, 733–764.

- Rawlings, J.B.; Risbeck, M.J. Model predictive control with discrete actuators: Theory and application. Automatica 2017, 78, 258–265.

- Dantzig, G.B. Linear Programming and Extensions; Princeton University Press: Princeton, NJ, USA, 1963; Available online: https://www.rand.org/pubs/reports/R366.html (accessed on 12 March 2025).

- Nemhauser, G.; Wolsey, L. Computational complexity. In Integer and Combinatorial Optimization; Wiley: Hoboken, NJ, USA, 1988; pp. 114–145.

- Bazaraa, M.S.; Sherali, H.D.; Shetty, C.M. Nonlinear Programming: Theory and Algorithms; John Wiley & Sons: Hoboken, NJ, USA, 2006.

- Glover, F.W.; Kochenberger, G.A. (Eds.) Handbook of Metaheuristics; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2003; Volume 57.

- Talbi, E.G. Metaheuristics: From Design to Implementation; John Wiley & Sons: Hoboken, NJ, USA, 2009.

- Zhao, B.; Bilen, H. Dataset Condensation with Differentiable Siamese Augmentation. In Proceedings of the 38th International Conference on Machine Learning (ICML 2021), Virtual Event, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR: New York, NY, USA, 2021; Volume 139, pp. 12674–12685.

- Weng, J.; Lin, J.; Li, H. Explainable predictive maintenance using decision trees: A case study on CNC machines. Procedia CIRP 2020, 93, 659–664.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 1–48.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?”: Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

- Doshi-Velez, F.; Kim, B. Towards a rigorous science of interpretable machine learning. arXiv 2017, arXiv:1702.08608.

- Gunning, D. Explainable artificial intelligence (XAI). Def. Adv. Res. Proj. Agency 2017, 2, 1.

- Molnar, C. Interpretable Machine Learning: A Guide for Making Black Box Models Explainable. 2020. Available online: https://christophm.github.io/interpretable-ml-book/ (accessed on 12 March 2025).

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2017; pp. 4765–4774.

- Wang, W.; Harrou, F.; Bouyeddou, B.; Senouci, S.M.; Sun, Y. A stacked deep learning approach to cyber-attacks detection in industrial systems: Application to power system and gas pipeline systems. Clust. Comput. 2022, 25, 561–578.

- Lee, J.; Lapira, E.; Bagheri, B.; Kao, H.A. Recent advances and trends in predictive manufacturing systems in big data environment. Manuf. Lett. 2013, 1, 38–41.