1. Introduction

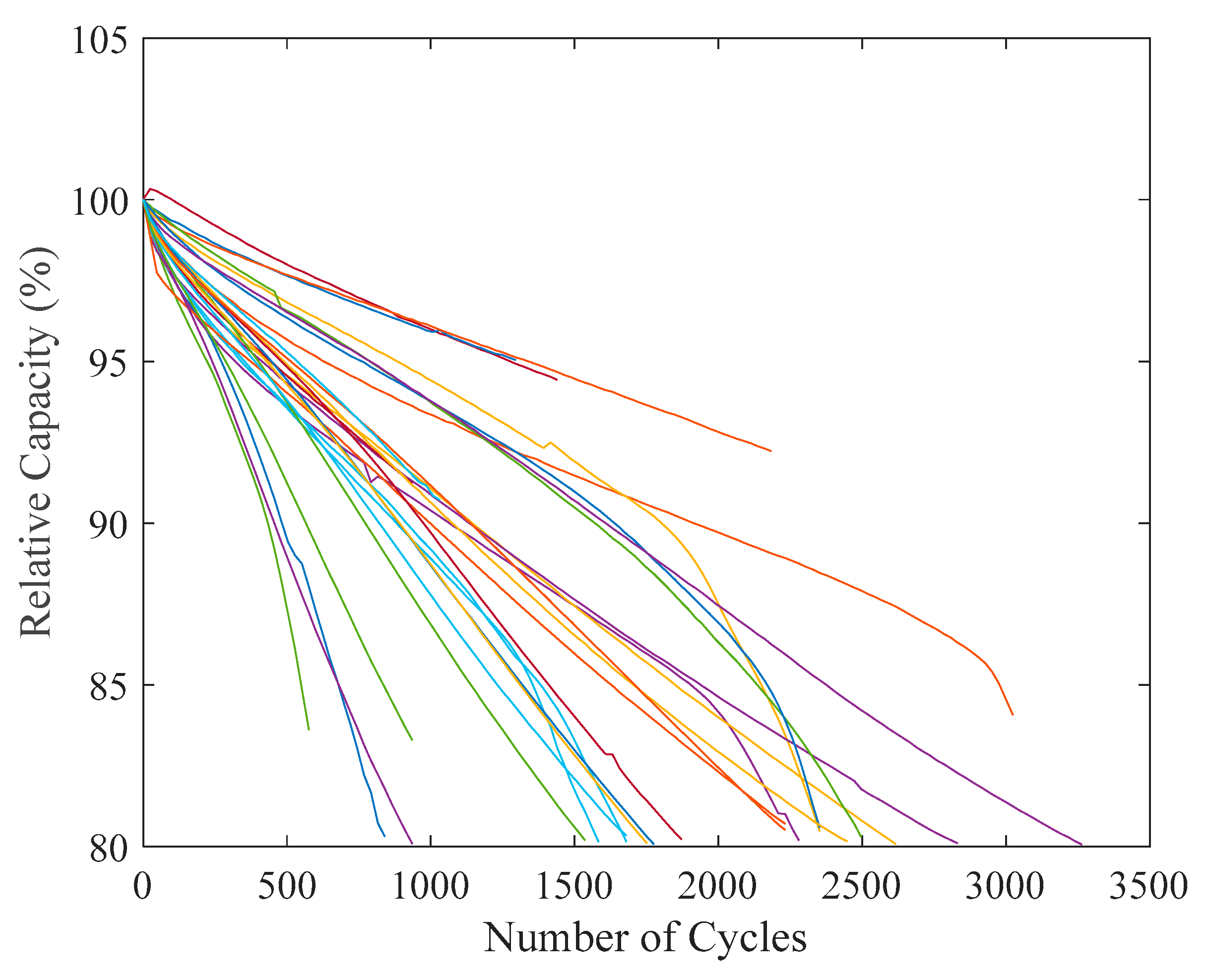

The rapid advancement of lithium-ion battery (LIB) technologies has become a cornerstone of the global transition to sustainable energy systems. From electric vehicles [1,2] to grid-scale storage solutions [3,4], LIBs play a pivotal role in decarbonizing transportation and electricity networks. However, a critical bottleneck that persists in battery development is the extensive time and resources required for accurate lifetime prediction. Traditional testing protocols, as documented by Bishop et al. [5] and Ecker et al. [6], typically require cycling numerous battery samples to end of life (EoL) under various stress conditions, a process that can span 6–18 months and consume substantial laboratory resources [7]. This testing paradigm not only delays technology deployment but also significantly increases development costs, creating a formidable barrier to innovation, particularly for emerging battery chemistries and smaller research institutions.

Recent years have seen growing recognition of this challenge, with researchers exploring various approaches to streamline battery testing [8,9]. While some studies, such as those by Severson et al. [10] and Attia et al. [11], have focused on machine learning techniques to predict battery aging from early-cycle data, these methods still require substantial experimental data for training and validation. Other efforts have examined accelerated testing protocols, as discussed by Berecibar et al. [12], but these often risk altering fundamental degradation mechanisms. Our research addresses a critical gap in this landscape by developing a systematic framework that fundamentally rethinks the sample size requirements for reliable lifetime prediction. Building on the statistical foundations established by Dechent et al. [13] and Strange et al. [14], we demonstrate that strategic sample selection and advanced modeling can achieve comparable predictive accuracy with dramatically fewer test specimens. The significance of this advancement becomes particularly evident when considering the current pressures on battery innovation. As noted by Farmann and Sauer [15], temperature variations alone can alter battery degradation trajectories by up to 300%, necessitating comprehensive testing across multiple environmental conditions. Traditional approaches to capturing this variability require prohibitively large sample sizes, creating what Paarmann et al. [16] describe as the “testing trilemma” of balancing accuracy, speed, and cost. Our framework addresses this challenge by combining three key innovations: intelligent sample selection that captures the full stress parameter space, advanced degradation modeling that accounts for nonlinear aging effects, and robust uncertainty quantification to ensure reliability. The result is a methodology that reduces testing costs by roughly 67–89% while keeping the mean absolute prediction error at or near 2% (1.33% with nine samples, 2.04% with three), as validated across 27 distinct operating conditions.

This transformation in testing efficiency arrives at a critical moment for the energy storage industry. With global LIB demand projected to grow tenfold by 2030 (IEA, 2023) [17], and new chemistries, such as silicon-anode and solid-state batteries, entering development pipelines, the need for faster, more cost-effective evaluation methods has never been greater. Our approach not only accelerates the development cycle for established technologies but also lowers the barriers to entry for novel battery concepts, potentially catalyzing a new wave of innovation. By redefining what constitutes sufficient experimental evidence for battery validation, we open possibilities for more agile research methodologies, more efficient industrial development processes, and, ultimately, faster deployment of advanced energy storage solutions to support the clean energy transition.

The implications extend beyond technical considerations to encompass broader systemic impacts. As battery performance and longevity become increasingly crucial for applications ranging from consumer electronics to renewable energy integration, the ability to reliably predict lifespan with minimal testing could reshape entire value chains. Manufacturers could reduce time to market for new products, grid operators could optimize storage system deployments with greater confidence, and policymakers could make more informed decisions about infrastructure investments, all benefiting from the increased efficiency and reduced costs enabled by our methodology. In this context, our work represents not merely an incremental improvement in testing protocols but a fundamental shift in how the battery industry approaches one of its most persistent challenges.

1.1. Literature Review

The scientific journey to understand and predict LIB degradation has followed a complex trajectory, mirroring the evolving demands placed on energy storage systems. Early foundational work by Spotnitz [18] first quantified the nonlinear nature of capacity fade, establishing critical baselines for subsequent modeling efforts. This pioneering research revealed that battery aging follows distinct electrochemical pathways that vary significantly across operating conditions, an insight that would later prove crucial for developing reduced-sample testing methodologies.

Physics-based models emerged as the first systematic approach to degradation prediction, with Pinson and Bazant’s [19] theory of SEI formation providing a rigorous mathematical framework. Delacourt and Safari [20] demonstrated the ability of such models to capture complex electrode-level phenomena. However, as Smith et al. [3] conclusively showed in their grid storage study, these models faced fundamental limitations in practical applications. Their requirement for detailed material parameters, often unavailable for commercial cells, and for substantial computational resources made them unsuitable for rapid battery development cycles. This realization spurred the development of alternative approaches that could balance accuracy with practicality.

The semi-empirical modeling paradigm represented a significant leap forward, combining mechanistic understanding with empirical observations. Bishop et al.’s [5] comprehensive study of vehicle-to-grid applications established definitive relationships between operational stresses (depth of discharge (DoD) and charge/discharge rates (Crate)) and capacity fade, while Birkl et al. [21] developed sophisticated frameworks for aging mechanism identification. Schmalstieg et al. [22] further advanced these approaches through their holistic aging model for 18650 cells, which systematically incorporated multiple degradation pathways. However, these models still relied on complete aging datasets, creating what Richardson et al. [23] termed “the data availability paradox”: models require extensive testing to validate predictions that are meant to reduce testing requirements.

Concurrent with these modeling advances, experimental studies provided crucial insights into stress factor effects. Dubarry et al.’s [24] meticulous work quantified how DoD accelerates degradation, while Waldmann et al. [25] established temperature-dependent aging maps. Farmann and Sauer [15] contributed critical understanding of how open-circuit voltage behavior changes with aging. Collectively, these studies revealed that degradation patterns, while complex, follow reproducible signatures under given stress conditions, a finding that would later enable reduced-sample approaches.

The field’s evolution took two distinct but ultimately complementary directions. Data-driven approaches, exemplified by Severson et al. [10] and Attia et al. [11], demonstrated remarkable predictive capabilities using machine learning. However, as Galatro et al. [26] and Rieger et al. [27] cautioned, these methods often required even larger training datasets than conventional approaches and faced challenges in generalizability across cell chemistries. Parallel efforts in accelerated testing protocols (Berecibar et al. [12]; Ecker et al. [6]) sought to compress testing timelines, although Paarmann et al. [16] systematically demonstrated how elevated stress conditions could fundamentally alter degradation pathways.

The critical theoretical foundation for sample optimization emerged from statistical approaches to battery testing. Dechent et al.’s [13] rigorous framework for determining the sample sizes needed to quantify cell-to-cell variability provided the mathematical basis for reduced-sample testing. Strange et al. [14] expanded this through their online prediction framework with cycle-by-cycle updates, while Kim et al. [28] and Li et al. [29] developed methods to extract maximum information from minimal early-life data. These advances collectively addressed what Motapon et al. [30] had identified as the core challenge in developing “predictive models that respect both electrochemical reality and practical constraints”.

This study synthesizes these diverse strands of investigation into a unified framework that resolves several long-standing limitations:

- (1) It incorporates the fundamental stress factor relationships established by Omar et al. [31] and Wang et al. [32] while overcoming their data-intensive requirements;

- (2) It builds on the statistical sampling theories of Dechent et al. [13] and Strange et al. [14] by integrating them with mechanistic degradation models;

- (3) It addresses the real-world applicability challenges identified by Serrao et al. [33] and Tran and Khambadkone [4] through adaptive algorithms for non-uniform cycling.

This integration represents more than incremental progress, as it constitutes a paradigm shift in battery testing methodology. By systematically combining mechanistic understanding with optimized experimental design, we resolve the fundamental tension between prediction accuracy and the testing burden that has constrained battery development for decades. The framework’s ability to maintain high accuracy with dramatically reduced sample sizes, as we demonstrate across 27 distinct operating conditions, provides a practical solution to one of the most persistent challenges in energy storage research.

1.2. Innovations and Contributions

This study introduces several key advances that fundamentally transform the paradigm of LIB degradation testing, as follows:

A first systematic framework for sample-reduced testing;

Quantified minimum sample requirements;

A novel hybrid modeling architecture using cycle-equivalent conversion for non-uniform cycling, which reduces error propagation compared to prior methods [31];

Parameter extraction optimized by a modified sine cosine algorithm (SCA), the landscape-aware perturbation control SCA (LPCSCA), which achieves 30% faster convergence than the second-best optimization algorithm;

A practical implementation roadmap for reduced-sample testing.

Our experimental validation across 27 stress conditions demonstrates that the proposed framework can reduce testing costs by about 90% while keeping the mean absolute prediction error at about 2% (2.04% with three samples), which enables faster iteration cycles for next-generation battery development.

5. Results and Discussion

To rigorously evaluate the proposed degradation model’s performance and practical utility, we conducted a systematic series of optimization studies with two primary objectives: (1) establishing baseline accuracy metrics using complete experimental datasets and (2) quantifying the trade-offs between sample size reduction and prediction reliability. This multi-stage validation framework provides critical insights into the model’s robustness while addressing the core challenge of minimizing testing costs without compromising predictive accuracy.

The initial evaluation employs all available experimental data (27 samples, 2151 data points) to establish reference performance metrics (RMSE, MAE) and validate the model’s ability to capture complex degradation patterns across the full parameter space. This complete data analysis serves as the gold standard for subsequent comparisons.

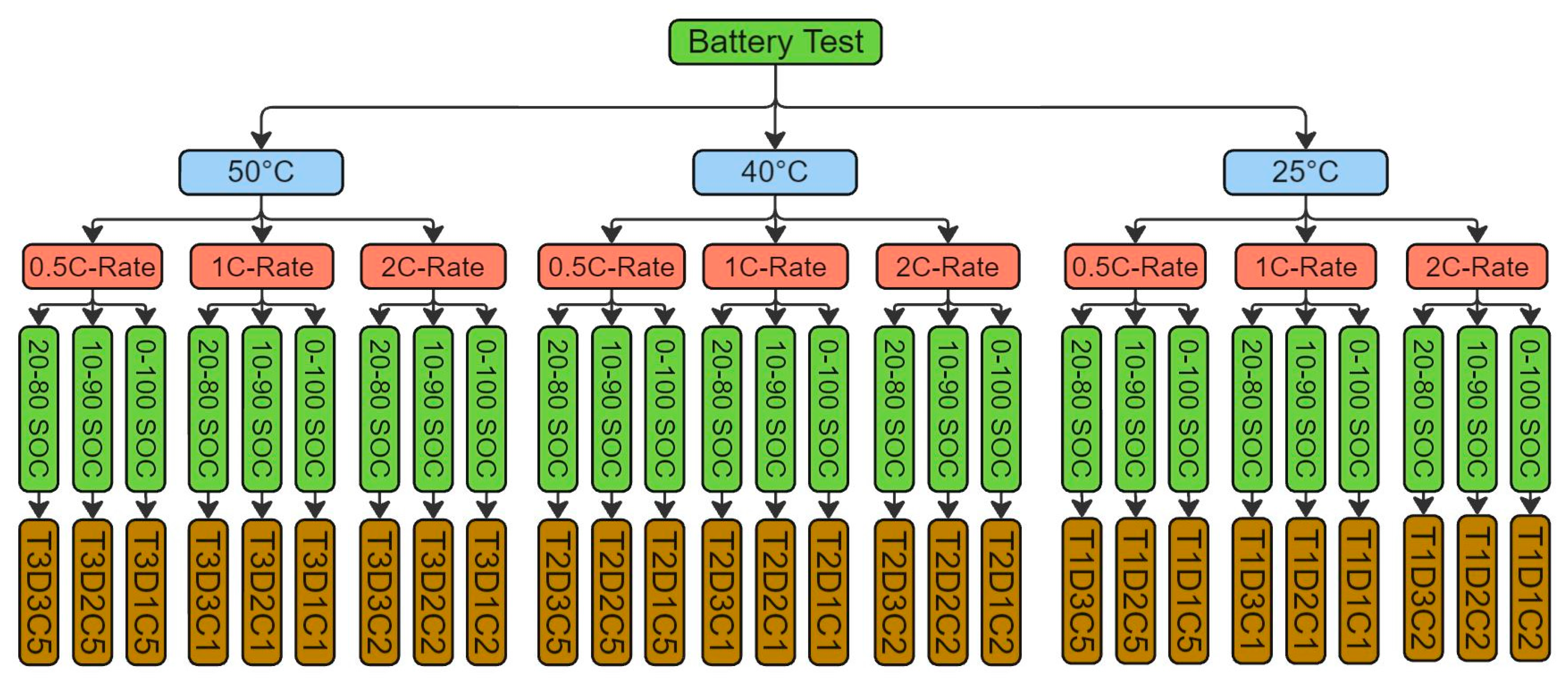

These tests are labeled as shown in Figure 3, using the following codes:

T1 represents the tests at room temperature (25 °C).

T2 represents the tests at 40 °C.

T3 represents the tests at 50 °C.

D1 represents cycling from 0% to 100% SoC.

D2 represents cycling from 10% to 90% SoC.

D3 represents cycling from 20% to 80% SoC.

C1 represents cycling at 1 C.

C5 represents cycling at 0.5 C.

C2 represents cycling at 2 C.

Based on this coding scheme, T2D1C5, for example, denotes the cycling test at 40 °C, with 0–100% SoC and 0.5 C. This coding is used throughout the discussion of the results.
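To make the labeling scheme concrete, the short Python sketch below decodes a test code into its stress conditions and enumerates the full 3 × 3 × 3 test matrix. The function names (`parse_test_code`, `full_test_matrix`) are illustrative only, and the 25 °C value for T1 (room temperature) is taken from the temperature levels stated in the Case 2 discussion.

```python
import re
from itertools import product

# Level definitions from the coding scheme; T1 = room temperature, taken as 25 °C.
TEMPERATURE_C = {"1": 25, "2": 40, "3": 50}
SOC_WINDOW = {"1": (0, 100), "2": (10, 90), "3": (20, 80)}  # % SoC
C_RATE = {"1": 1.0, "5": 0.5, "2": 2.0}

CODE_PATTERN = re.compile(r"T([123])D([123])C([125])")


def parse_test_code(code):
    """Decode a label such as 'T2D1C5' into (temperature °C, SoC window, C-rate)."""
    match = CODE_PATTERN.fullmatch(code)
    if match is None:
        raise ValueError(f"not a valid test code: {code!r}")
    t, d, c = match.groups()
    return TEMPERATURE_C[t], SOC_WINDOW[d], C_RATE[c]


def full_test_matrix():
    """All 3 x 3 x 3 = 27 combinations of the coding scheme."""
    return [f"T{t}D{d}C{c}" for t, d, c in product("123", "123", "152")]


print(parse_test_code("T2D1C5"))  # (40, (0, 100), 0.5)
print(len(full_test_matrix()))    # 27
```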

5.1. Strategic Sample Reduction Analysis

Building on the baseline results, we systematically evaluate model performance under progressively constrained data conditions. This phased approach examines the following:

Case 1: 18 samples (33% reduction).

Case 2: 9 samples (67% reduction).

Case 3: 3 samples (89% reduction).

Each reduction scenario follows strict representativeness criteria to maintain coverage of critical stress factors (temperature, DoD, Crate).
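One simple way to make a representativeness criterion testable is to require that every level of every stress factor appears at least once in the retained subset. The sketch below adopts that strict rule as an assumption (it is not necessarily the exact criterion used to pick the subsets in this study), prints the sample reduction for each case, and counts by brute force how many three-sample subsets of the 27 tests satisfy it.

```python
from itertools import combinations, product

# Hypothetical coverage rule: every level of every factor appears at least once.
LEVELS = {"T": ("1", "2", "3"), "D": ("1", "2", "3"), "C": ("1", "5", "2")}
FULL_MATRIX = [("T" + t, "D" + d, "C" + c)
               for t, d, c in product(LEVELS["T"], LEVELS["D"], LEVELS["C"])]


def covers_all_levels(subset):
    """True if, for each factor, all three of its levels occur in `subset`."""
    for position, (factor, levels) in enumerate(LEVELS.items()):
        present = {test[position] for test in subset}
        if present != {factor + level for level in levels}:
            return False
    return True


def count_covering_subsets(size):
    """Brute-force count of `size`-sample subsets of the 27 tests that cover all levels."""
    return sum(covers_all_levels(s) for s in combinations(FULL_MATRIX, size))


for n_samples in (18, 9, 3):
    reduction = 100 * (1 - n_samples / len(FULL_MATRIX))
    print(f"{n_samples} samples: {reduction:.1f}% fewer tests")

print(count_covering_subsets(3))  # 36
```

Under this strict rule only 36 of the 2925 possible three-sample subsets qualify, because each factor must take a different level in each of the three samples. Brute-force enumeration is instant for three samples but becomes slow for larger subsets.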

5.2. Model Performance with All Available Data (Baseline)

In this baseline study, all available data points are used to determine the model’s parameters. As described above, the 27 test cases cover three temperatures, three DoD windows, and three Crate levels.

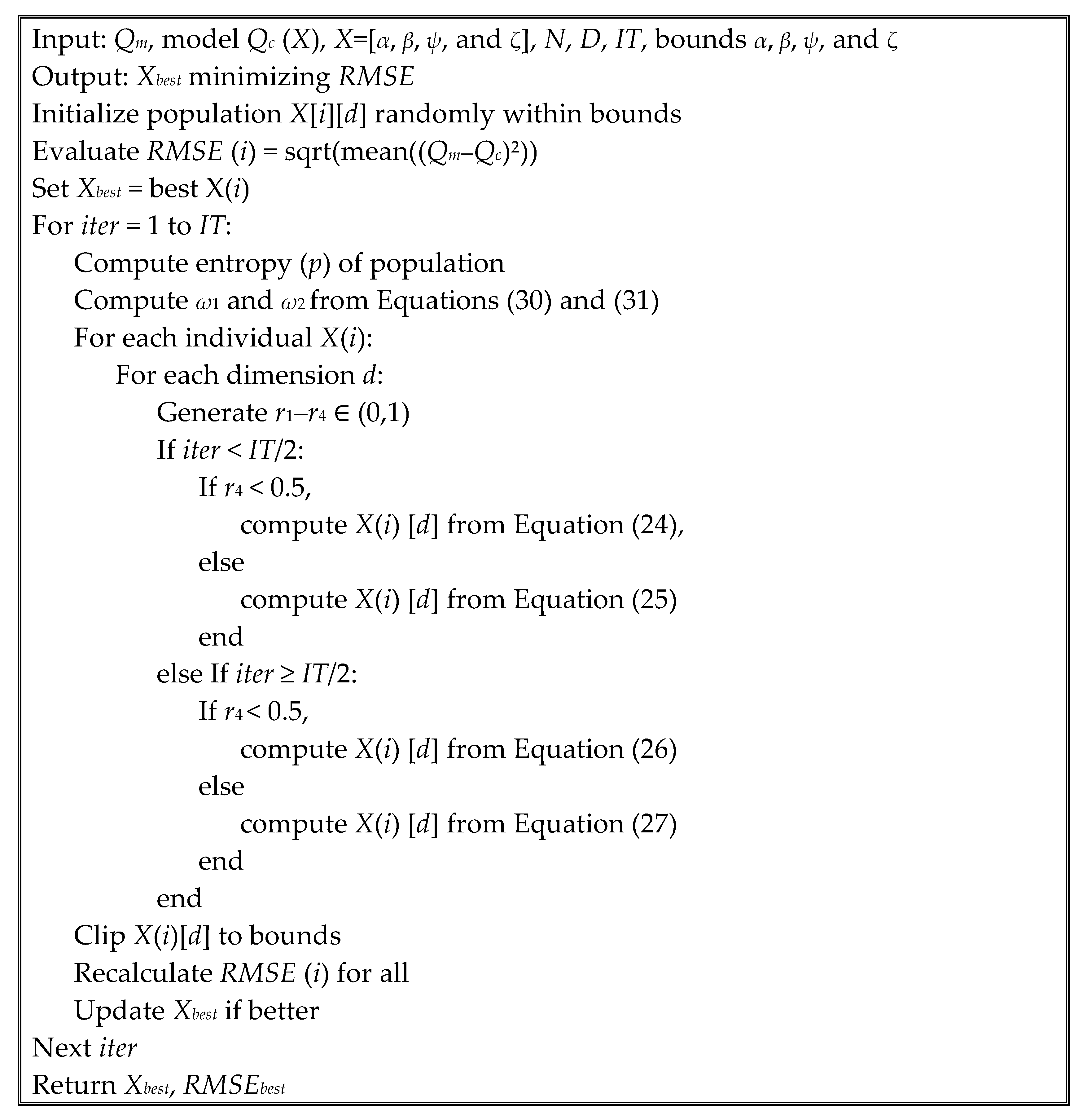

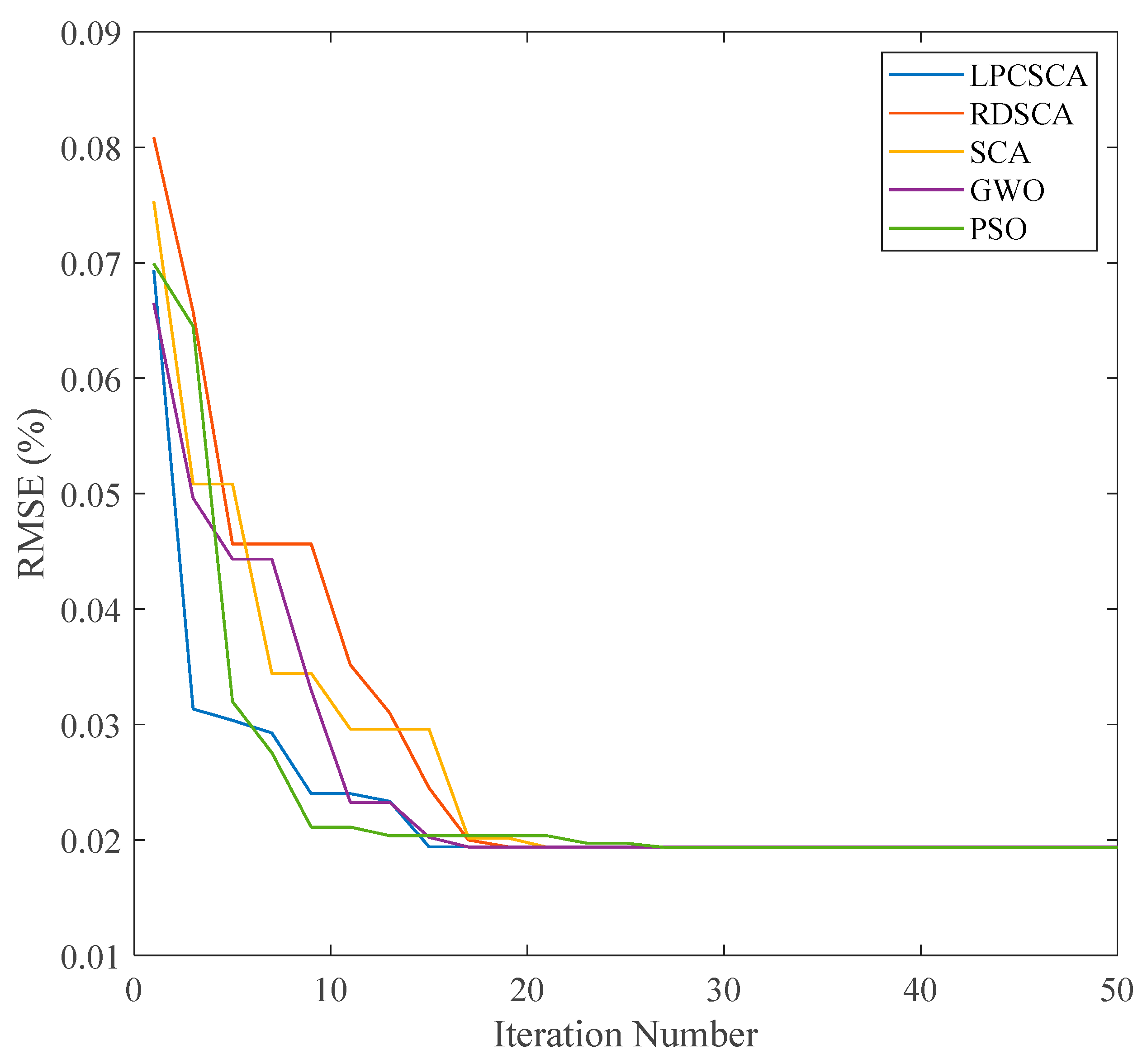

All 2151 data points were used by the optimization algorithm to determine the optimal model parameters. Because fitting the model equations to such a large dataset is computationally demanding, five optimization algorithms (listed in Section 3) were evaluated to identify the most suitable one for this problem and for the subsequent analyses. Each algorithm was run with the same swarm size (400 search agents) and number of iterations (100) to ensure a fair comparison.

Among the evaluated methods, the proposed double adaptive random spare reinforced SCA (referred to as LPCSCA) demonstrated the fastest convergence and the lowest failure rate. The convergence performance of all algorithms is summarized in Table 2 and illustrated in Figure 6. These results show that LPCSCA outperforms the other optimization algorithms in terms of convergence speed and reliability. Specifically, LPCSCA achieves a convergence time 30% shorter than that of the second-best algorithm, RDSCA. Additionally, LPCSCA achieved a 100% success rate, compared to 97% for RDSCA [42], 95% for standard SCA [43], 92% for PSO [44], and 94% for GWO [45].
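For readers unfamiliar with this algorithm family, the sketch below implements the standard sine cosine algorithm [43], the baseline on which RDSCA and LPCSCA build. It is not the LPCSCA variant used in this study (its modifications are not reproduced here). The toy capacity-fade model q(N) = 1 - b·N^z, its parameter bounds, and the synthetic data are hypothetical and serve only to illustrate RMSE-based parameter extraction; the study itself used 400 search agents and 100 iterations, whereas the defaults below are smaller.

```python
import numpy as np


def sca_minimize(objective, lower, upper, n_agents=30, n_iter=100, a=2.0, seed=0):
    """Minimize `objective` with the standard sine cosine algorithm (not LPCSCA)."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    X = rng.uniform(lower, upper, size=(n_agents, lower.size))  # initial swarm
    fitness = np.array([objective(x) for x in X])
    best_x, best_f = X[np.argmin(fitness)].copy(), float(fitness.min())

    for t in range(n_iter):
        r1 = a - t * a / n_iter                   # decays linearly: exploration -> exploitation
        r2 = rng.uniform(0.0, 2.0 * np.pi, X.shape)
        r3 = rng.uniform(0.0, 2.0, X.shape)       # random weight on the destination point
        r4 = rng.uniform(0.0, 1.0, X.shape)       # chooses the sine or the cosine move
        distance = np.abs(r3 * best_x - X)
        X = np.where(r4 < 0.5,
                     X + r1 * np.sin(r2) * distance,
                     X + r1 * np.cos(r2) * distance)
        X = np.clip(X, lower, upper)              # keep agents inside the parameter bounds
        fitness = np.array([objective(x) for x in X])
        if fitness.min() < best_f:                # destination = best solution so far
            best_x, best_f = X[np.argmin(fitness)].copy(), float(fitness.min())
    return best_x, best_f


# Hypothetical usage: fit q(N) = 1 - b * N**z to synthetic capacity data by RMSE.
cycles = np.arange(0.0, 1001.0, 50.0)
q_measured = 1.0 - 0.002 * cycles ** 0.6


def rmse(params):
    b, z = params
    return float(np.sqrt(np.mean((q_measured - (1.0 - b * cycles ** z)) ** 2)))


params, err = sca_minimize(rmse, lower=[0.0, 0.3], upper=[0.01, 1.0],
                           n_agents=40, n_iter=200)
print(params, err)
```

In this formulation the destination point is simply the best solution found so far, and the amplitude r1 decays linearly from a to 0, shifting the search from exploration toward exploitation.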

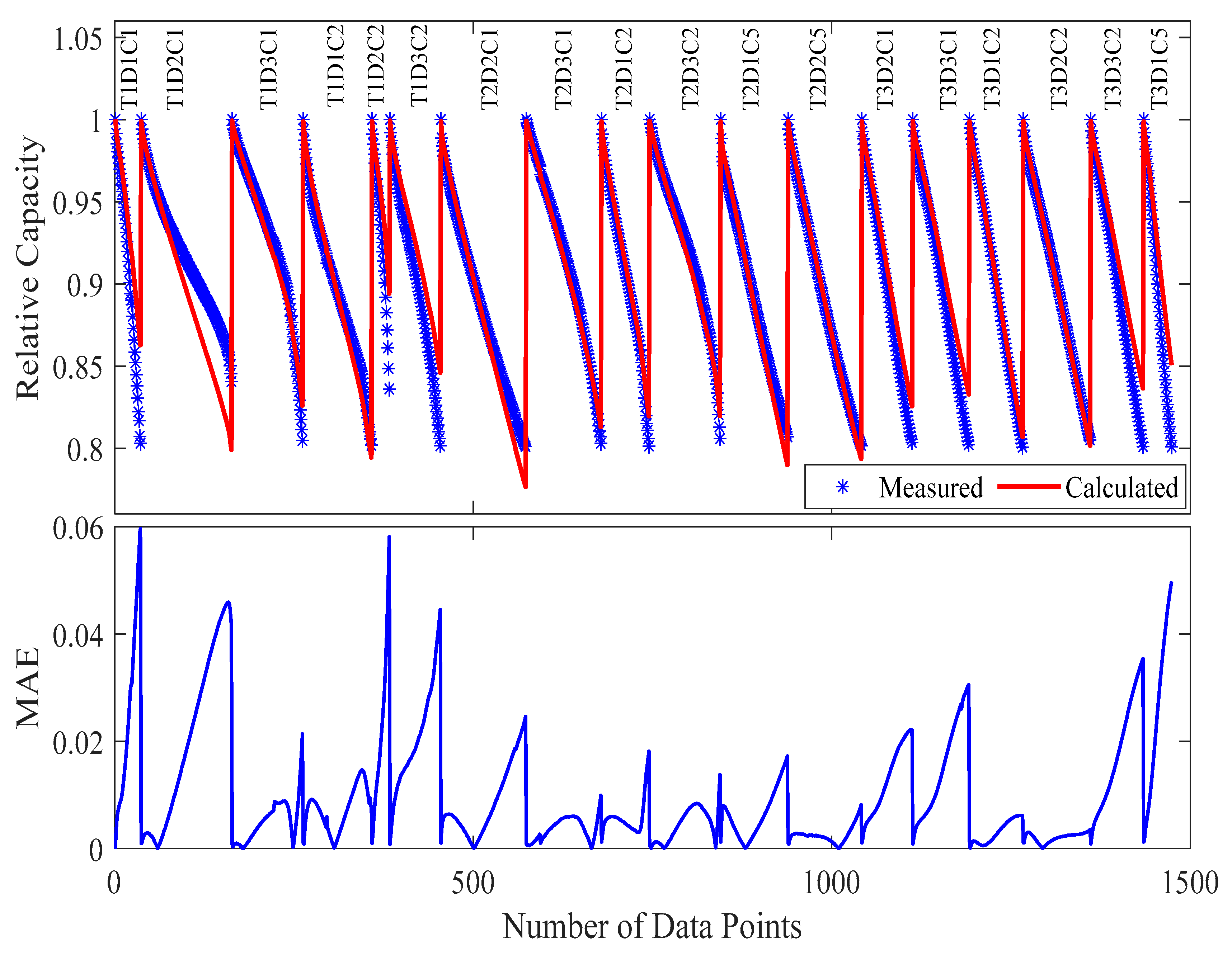

Following optimization to minimize the

RMSE between measured and calculated capacity, the resulting model parameters are presented in

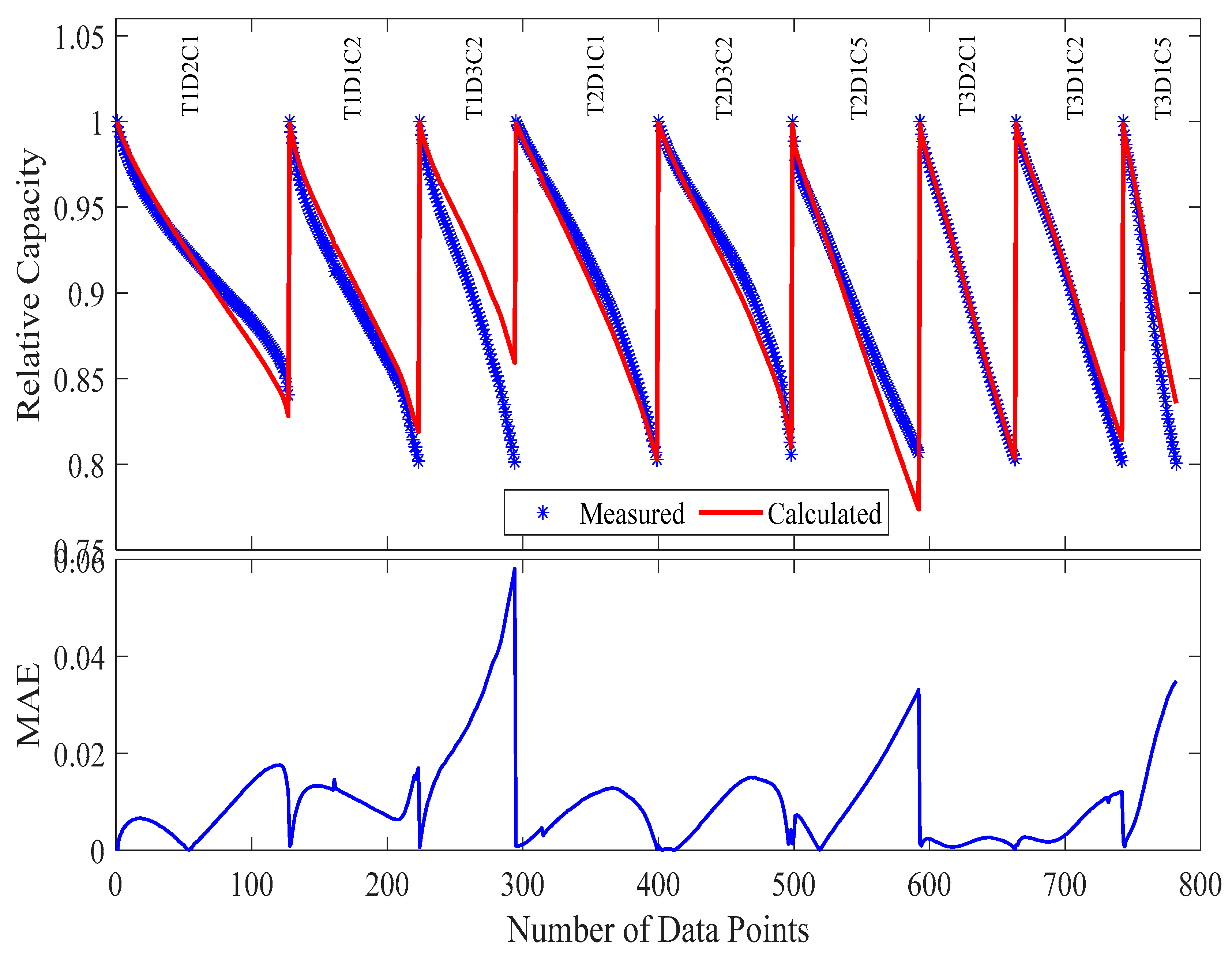

Table 3, with the comparison between measured and calculated relative capacity visualized in

Figure 7. These results indicate that the proposed model effectively fits 27 samples comprising 2118 data points, achieving a mean absolute error of 0.87% and a maximum error of 7.25%, highlighting the effectiveness of the developed degradation model and the selected optimization algorithm.
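The three fit statistics reported above can be computed as in the sketch below. Because the section does not state it explicitly, it assumes that errors are absolute differences between measured and modeled relative capacity, with RMSE left as a fraction of nominal capacity and MAE and maximum error expressed in percentage points; `fit_metrics` is an illustrative name.

```python
import numpy as np


def fit_metrics(q_measured, q_model):
    """Return (RMSE as a fraction, MAE in %, maximum absolute error in %)."""
    q_measured = np.asarray(q_measured, dtype=float)
    q_model = np.asarray(q_model, dtype=float)
    residual = q_measured - q_model              # relative-capacity residuals
    rmse = float(np.sqrt(np.mean(residual ** 2)))
    mae_pct = 100.0 * float(np.mean(np.abs(residual)))
    max_abs_pct = 100.0 * float(np.max(np.abs(residual)))
    return rmse, mae_pct, max_abs_pct


# Small hypothetical example: four capacity checkpoints of one cell.
print(fit_metrics([1.00, 0.98, 0.95, 0.93], [1.00, 0.97, 0.95, 0.95]))
```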

The central question addressed in the following subsections is whether the entire dataset (samples, tests, and data points) is necessary for accurately extracting model parameters. To investigate this, subsets of samples will be excluded from the optimization process to derive degradation model parameters, and their validity will then be assessed against the complete dataset. The key outcome of this analysis will be determining the minimum number of battery samples required to obtain degradation parameters with acceptable accuracy.

5.3. Model Performance with Sample Reduction (Case 1)

The comprehensive analysis of model performance under progressively constrained experimental datasets represents a critical contribution to battery testing methodology. Our investigation systematically evaluates the fundamental trade-off between testing resource investment and predictive accuracy through carefully designed sample reduction studies.

The first reduction case has 18 samples (Case 1) and follows a rigorous, multi-stage selection protocol designed to maintain a balanced representation of all critical stress factors. Initial removal of incomplete tests (T1D1C5, T1D2C5, T1D3C5, T2D2C2, T3D2C5, T3D3C5) ensures data quality, while subsequent strategic elimination preserves comprehensive coverage of the experimental design space. This approach specifically maintains the following:

Equivalent representation across temperature groups (six samples per temperature).

Full span of DoD conditions (0–100%, 10–90%, 20–80%).

Complete range of Crate (0.5 C, 1 C, 2 C).
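The bookkeeping behind this protocol can be checked with a few lines of Python. The sketch removes the six incomplete tests listed above from the 27-test matrix and reports how many further tests per temperature must be dropped to reach six per temperature. Which specific tests were dropped in that second step is not listed here, so the sketch stops at the counts.

```python
from collections import Counter
from itertools import product

FULL_MATRIX = [f"T{t}D{d}C{c}" for t, d, c in product("123", "123", "152")]
INCOMPLETE = {"T1D1C5", "T1D2C5", "T1D3C5", "T2D2C2", "T3D2C5", "T3D3C5"}
TARGET_PER_TEMPERATURE = 6  # Case 1: 18 samples = 3 temperatures x 6 samples

# Step 1: drop the incomplete tests to ensure data quality.
remaining = [code for code in FULL_MATRIX if code not in INCOMPLETE]

# Step 2: count what is left per temperature group (code prefix "T1", "T2", "T3").
per_temperature = Counter(code[:2] for code in remaining)
still_to_drop = {t: n - TARGET_PER_TEMPERATURE for t, n in sorted(per_temperature.items())}

print(len(remaining))                    # 21
print(sorted(per_temperature.items()))   # [('T1', 6), ('T2', 8), ('T3', 7)]
print(still_to_drop)                     # {'T1': 0, 'T2': 2, 'T3': 1}
```

The three additional removals (two at 40 °C, one at 50 °C) together with the six incomplete tests account for the nine samples dropped in Case 1.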

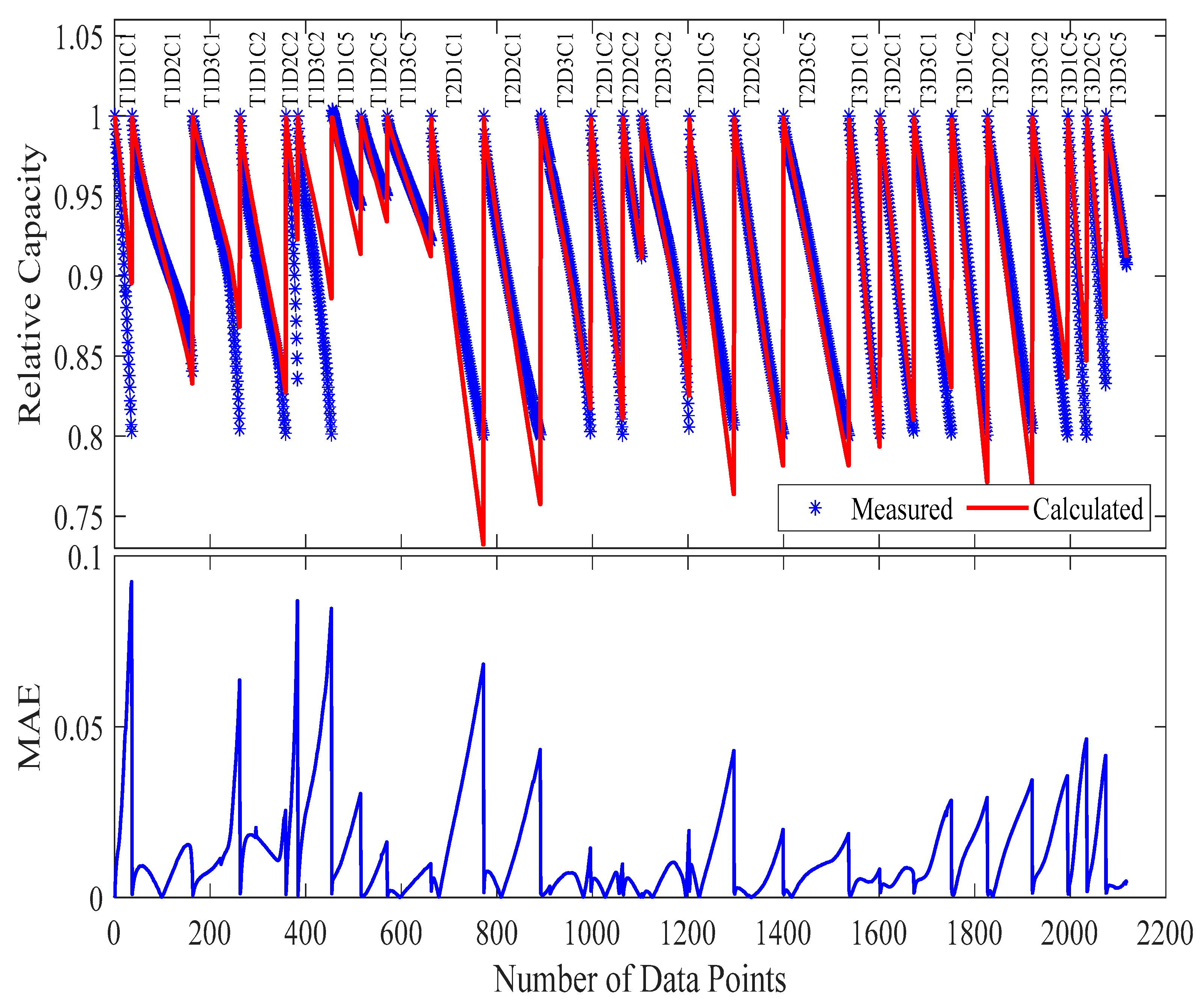

The model parameters derived from this reduced dataset demonstrate remarkable resilience, achieving a training MAE of just 0.99%, only 0.12 percentage points above the full-dataset model. When validated against the complete experimental matrix (27 samples, 2151 points), the performance remains robust, with MAE = 1.22%, a modest 39% relative increase in error for a 33% reduction in testing costs.

Detailed error analysis reveals several important insights.

With a 33% reduction in testing cost, the MAE increases from 0.87% to 1.22%, which remains well within practical tolerance for most engineering applications.

Error escalation primarily occurs in extreme operating conditions (notably, 2 C cycling at 50 °C).

The balanced sample selection successfully preserves model accuracy across the majority of the operational envelope.

Visual examination of Figure 8 and Figure 9, as well as Table 4, provides compelling evidence of the model’s stability. The capacity degradation trajectories maintain excellent agreement with experimental measurements, with deviations primarily manifesting in later cycles, where cumulative stress effects become more pronounced. This performance consistency confirms that strategic sample selection can effectively capture the essential degradation dynamics while significantly reducing the experimental burden.

The 20% increase in maximum absolute error (from 7.25% to 8.73%) warrants careful consideration in application-specific contexts. Although this is a larger relative change, the following points should be noted:

These maximum errors occur in less than 5% of predictions.

The affected conditions represent boundary cases in the operational design space.

The MAE values remain within acceptable ranges for most practical applications.

This case study establishes a critical foundation for understanding how intelligent experimental design can optimize the balance between testing costs and model accuracy. The results demonstrate that through careful sample selection and maintaining comprehensive stress factor coverage, substantial resource savings can be achieved with only modest compromises in predictive performance. These findings have immediate practical implications for battery testing protocols, particularly in industrial settings where testing costs and development timelines are critical constraints.

5.4. Model Performance with Significant Sample Reduction (Case 2)

Building upon the initial findings from Case 1, we further examine the model’s robustness under more aggressive sample reduction. Case 2 represents a strategic downsizing to just nine carefully selected samples (782 data points), maintaining a balanced representation of all critical stress factors while achieving a 67% reduction in testing requirements. The sample selection methodology follows three key principles.

Comprehensive Stress Coverage: Each retained sample combination ensures representation of the following:

All three temperature levels (25 °C, 40 °C, 50 °C);

The full DoD spectrum (0–100%, 10–90%, 20–80%);

Varied Crate (0.5 C, 1 C, 2 C).

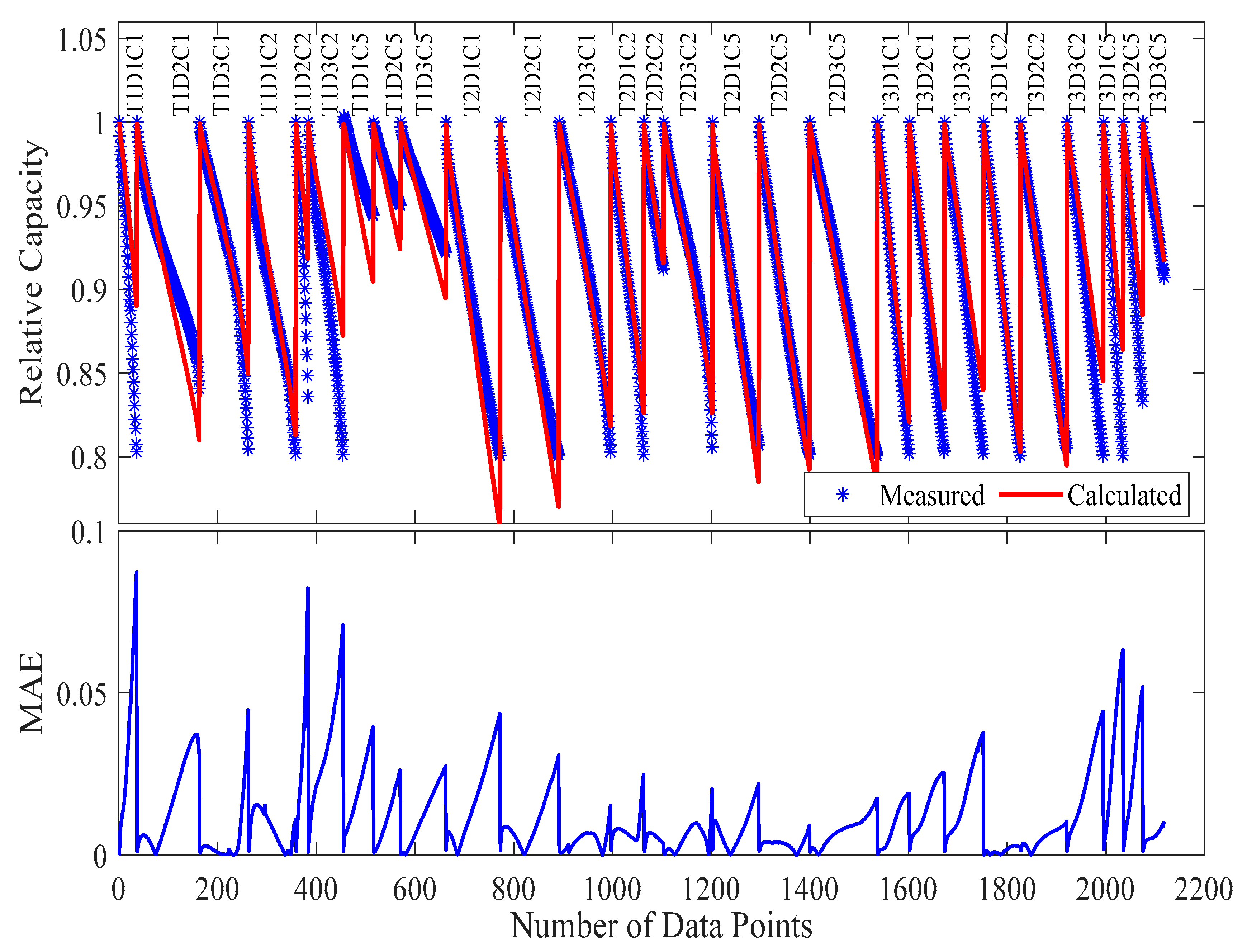

For Case 2, we strategically selected a nine-sample subset to ensure comprehensive coverage across three principal stress dimensions: temperature (25 °C, 40 °C, 50 °C), depth of discharge (DoD; 0–100%, 10–90%, 20–80%), and Crate (0.5 C, 1 C, 2 C). This selection was guided by a coverage matrix that guaranteed representation at each level of the three factors while minimizing redundancy. Samples were selected to maximize orthogonality in combinations of stress conditions, thereby improving diversity in aging trajectories. This process involved combinatorial analysis to ensure balanced representation across the stress spectrum, as illustrated in Table 5 and Figure 10.

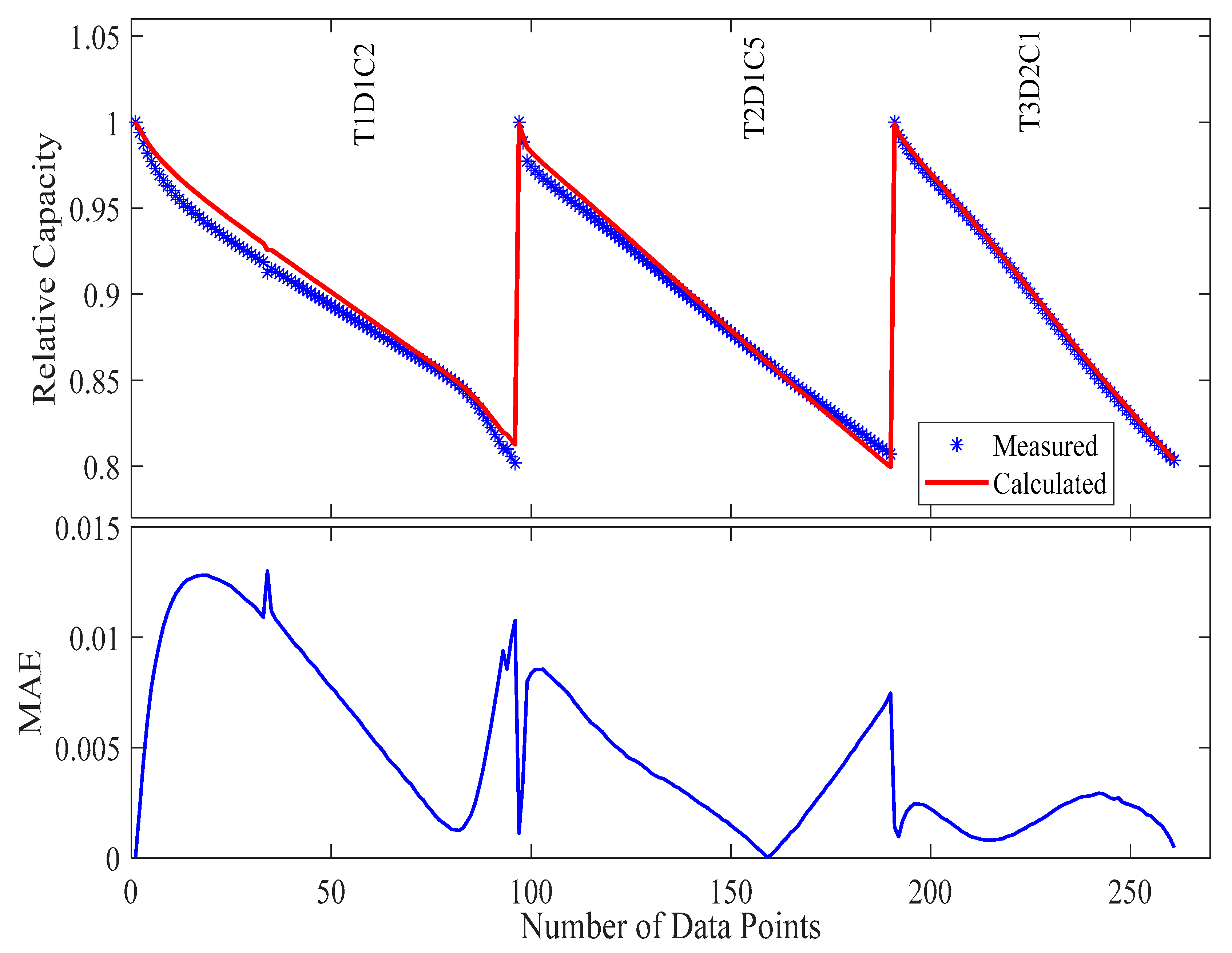

The selected samples (T1D2C1, T1D1C2, T1D3C2, T2D3C1, T2D3C2, T2D1C5, T3D2C1, T3D1C2, T3D1C5) were chosen to maximize information content per test, maintain orthogonal stress factor combinations, and preserve edge-case conditions.
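A simple tally makes the coverage of this subset explicit. The sketch below is illustrative and is not the combinatorial procedure used to build Table 5; it counts how often each level of each factor appears and how many of the nine possible level pairs are observed for each pair of factors.

```python
from collections import Counter
from itertools import combinations

# Nine-sample subset used in Case 2 (codes as defined in the labeling scheme).
CASE_2 = ["T1D2C1", "T1D1C2", "T1D3C2", "T2D3C1", "T2D3C2",
          "T2D1C5", "T3D2C1", "T3D1C2", "T3D1C5"]
FACTOR_NAMES = ("T", "D", "C")


def split_code(code):
    """'T2D3C1' -> ('T2', 'D3', 'C1'): one level label per stress factor."""
    return code[0:2], code[2:4], code[4:6]


rows = [split_code(code) for code in CASE_2]

# Single-factor coverage: how often each level of each factor is sampled.
level_counts = {name: Counter(row[i] for row in rows)
                for i, name in enumerate(FACTOR_NAMES)}

# Two-factor coverage: distinct level pairs observed (9 are possible per pair).
pair_coverage = {(FACTOR_NAMES[i], FACTOR_NAMES[j]): len({(row[i], row[j]) for row in rows})
                 for i, j in combinations(range(3), 2)}

print(level_counts["D"])   # Counter({'D1': 4, 'D3': 3, 'D2': 2})
print(pair_coverage)       # {('T', 'D'): 7, ('T', 'C'): 8, ('D', 'C'): 5}
```

Every level of every factor is present, although the DoD and Crate levels are not sampled equally often. For comparison, a fully orthogonal nine-run design (a Latin-square arrangement of the three factors) would cover all nine level pairs for every factor pair.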

The optimization process yielded a training MAE of 1.03% and a maximum absolute error of 5.81%, as shown in Figure 10 and Table 5.

When applied to the complete validation set (27 samples), the model parameterized with the nine-sample dataset yielded an MAE of 1.33% (a 52.9% increase vs. the full-dataset model) and a maximum absolute error of 9.25% (a 28.97% increase vs. the full-dataset model). Figure 9 shows particularly strong performance in mid-range conditions (1 C, 40 °C) and predictable error escalation in extreme conditions (2 C at 50 °C). The model maintains excellent phase agreement in degradation trajectories (Figure 11). This case demonstrates that even with just one-third of the original test matrix (samples), the model retains substantial predictive capability. The 1.33% MAE represents strong performance considering the 67% reduction in experimental burden. These findings have important practical implications for battery testing programs where resource constraints demand maximum information yield from minimal experimental investment.

5.5. Extreme Sample Reduction Analysis (Case 3)

The final and most rigorous evaluation of our modeling framework examines its performance under extreme sample reduction, where only three strategically selected test conditions (comprising 261 data points, “Case 3”) were used, representing a mere 11% of the original test samples (about 12% of the data points). This investigation serves as a critical stress test of our methodology’s ability to extract meaningful degradation parameters from minimal experimental inputs while maintaining predictive validity across the full operational envelope.

In Case 3, we identified a minimal yet representative three-sample subset through combinatorial optimization. This subset (T1D1C2, T2D1C5, T3D2C1) was selected to span the extremes of the stress factors (temperature, DoD, and Crate), thereby capturing a broad range of degradation behaviors. The objective was to maximize information density per sample while maintaining diversity in aging responses, so that the stress factors are covered with minimal overlap and the model can generalize from a minimal training set. In particular, the selection maintained coverage of the following:

- (1) The full temperature range (25 °C, 40 °C, and 50 °C);

- (2) Diverse depth-of-discharge conditions (spanning both extreme 0–100% and moderate 20–80% ranges);

- (3) Varied current levels (0.5 C, 1 C, and 2 C).

It is important to note that our model does not assume equal contributions of the stress factors to cell aging. Instead, stress-specific parameters (α for DoD, β for Crate, and ψ for temperature) are estimated independently during model fitting (see Equations (9)–(13)). These parameters allow the model to quantify and differentiate the influence of each factor. As seen in Table 4, Table 5, and Table 6, the parameter values vary significantly across stress dimensions, indicating differing contributions to degradation. Furthermore, our results indicate that high Crate and elevated temperature have a disproportionate impact, as reflected in increased error rates when these extremes are underrepresented.

This combinatorial selection methodology ensured that despite an 89% reduction in testing requirements, the model retained access to data capturing the key degradation mechanisms. Visual inspection of the time-series profiles (Figure 12) confirmed that the selected data exhibited diverse yet representative aging trajectories. This diversity enabled the model to generalize essential capacity fade patterns, even with a significantly reduced dataset. Each distinct stress factor left a characteristic signature on the battery’s behavior, allowing the model to recognize and differentiate the effects of various stress conditions.

Remarkably, parameter optimization using this minimal dataset yielded exceptional fitting performance on the training points themselves, achieving an RMSE of 0.0119, an MAE of just 0.47%, and a maximum error of 1.30% (as visually confirmed in Figure 12 and listed in Table 6). These results demonstrate the model’s inherent capacity to extract meaningful parameters from extremely limited data when test conditions are strategically selected to maximize information content.

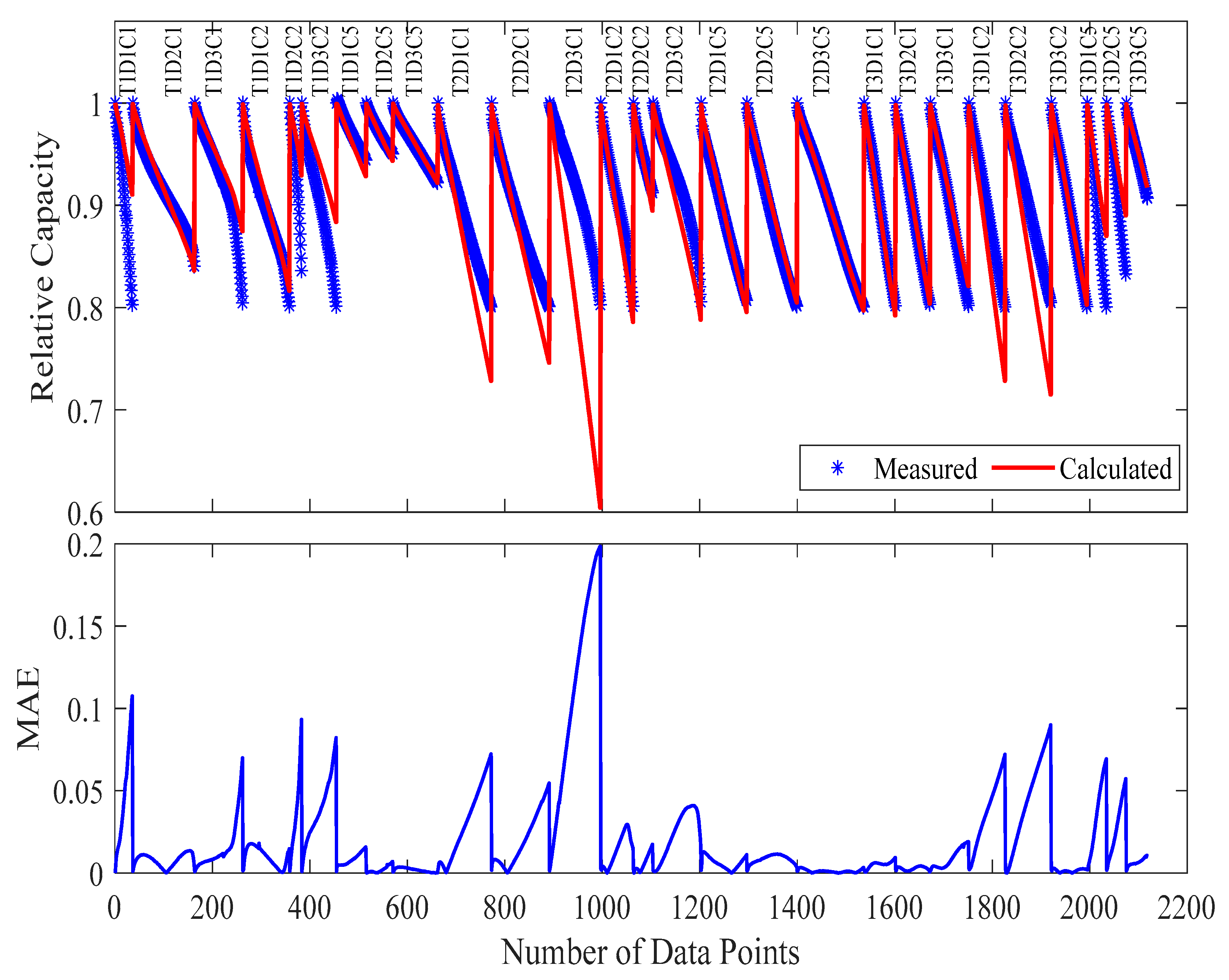

However, applying these parameters to the complete validation set revealed the inevitable compromises of such aggressive sample reduction. The full-matrix evaluation showed an MAE of 2.04% (a 134.5% increase compared to the full-dataset model) and a maximum error of 19.85% (a 177.6% increase), with error distribution patterns visible in Figure 13. Detailed analysis of these results reveals several important insights into the model’s behavior under extreme data constraints.

The observed error progression is clearly nonlinear with respect to sample reduction. While the initial reduction from 27 to 18 samples (a 33% reduction in cycling test samples) increased MAE by just 39% and the subsequent reduction to 9 samples (a 67% reduction) raised it by 53%, the final stage of extreme reduction to 3 samples (an 89% reduction) caused a disproportionate 135% MAE increase. This nonlinear scaling suggests the existence of a critical threshold in sample reduction beyond which predictive performance degrades rapidly.

Examination of specific failure modes provides crucial guidance for practical implementation. The primary sources of increased error occur in high Crate conditions not directly sampled (particularly 2 C operation at 50 °C) and intermediate DoD ranges (10–90%) that were not represented in the minimal set. Conversely, the model maintains surprisingly accurate predictions for low to moderate stress conditions and properly captures fundamental temperature-dependent behaviors, indicating that even this extremely reduced dataset preserves information about core degradation mechanisms.

From an application perspective, these findings establish clear guidelines for balancing accuracy requirements with resource constraints. The three-sample configuration may suffice for preliminary feasibility studies where rough estimates of battery lifetime are acceptable, while commercial-grade applications would require at least nine samples to keep the MAE below 1.5%. Most importantly, the results demonstrate that predicting edge-case behaviors requires targeted inclusion of those specific stress conditions in the test matrix.

This limiting case study ultimately serves two purposes: it establishes a practical lower bound on sample requirements for meaningful degradation modeling with this dataset, while demonstrating that even minimal testing, when properly designed, can capture fundamental aging trends. The insights gained provide a scientific foundation for making informed trade-offs between testing costs and prediction accuracy in both research and industrial contexts.

5.6. Comprehensive Analysis of Sample Reduction Effects on Model Accuracy

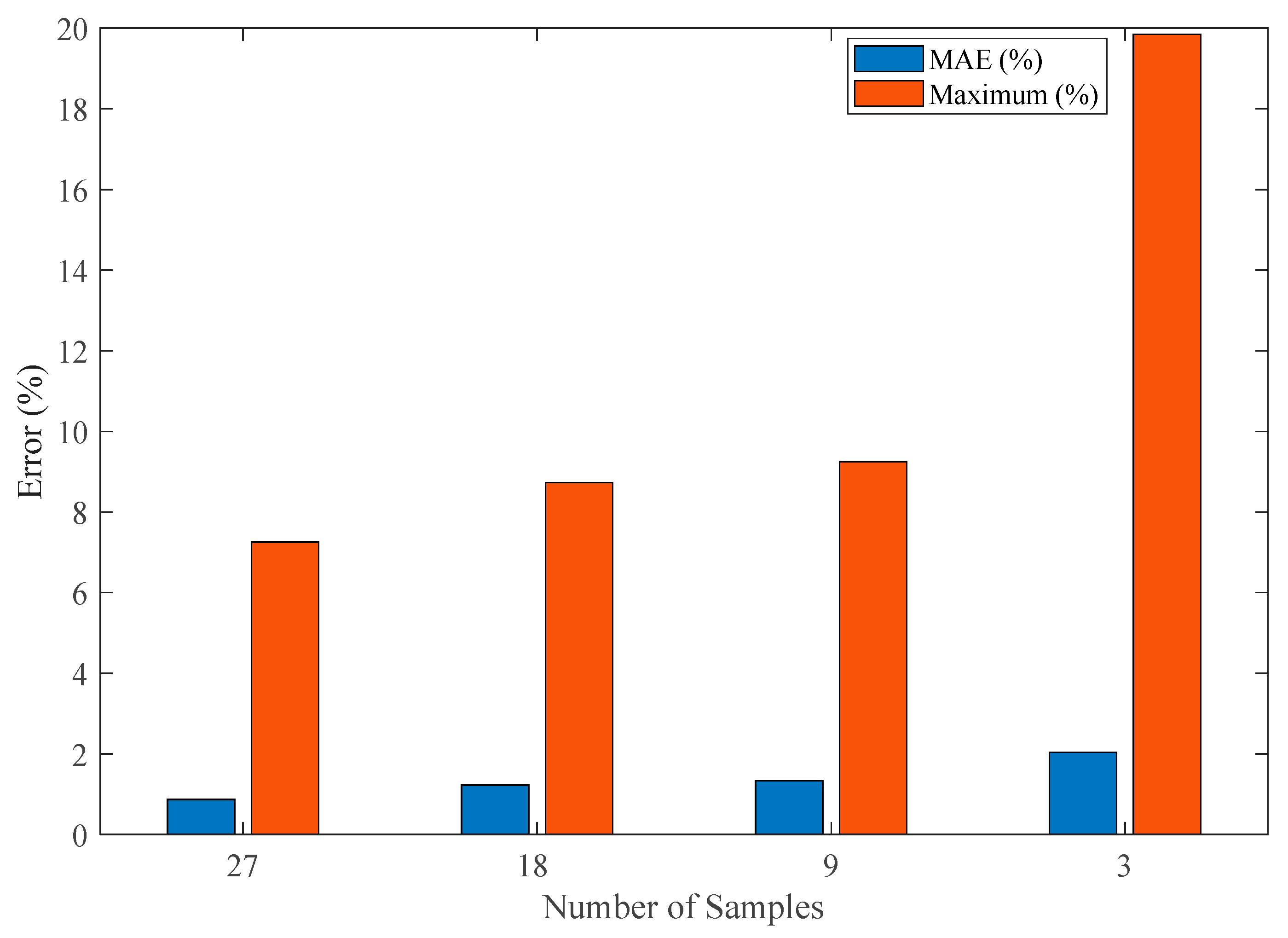

The systematic evaluation of model performance across varying sample sizes yields several critical insights into the fundamental relationship between experimental effort and prediction accuracy. As demonstrated in Figure 14, our analysis reveals a well-defined trade-off between sample reduction and error metrics that follows distinct patterns for both mean and maximum absolute errors. The baseline full-dataset model (27 samples, 2151 data points) establishes the reference performance, with an MAE of 0.87% and a maximum absolute error of 7.25%. The first reduction case (18 samples, 2118 data points, 33% reduction) shows a moderate increase in these metrics to 1.22% MAE (+39.11% relative to baseline) and 8.73% maximum error (+20.4% relative to baseline) while achieving proportional cost savings. This configuration maintains excellent predictive capability, with the 1.22% MAE representing what we consider the threshold for high-accuracy applications in both research and industrial contexts.

A further reduction to nine samples (67% fewer tests, 782 data points) demonstrates the model’s remarkable resilience, with MAE increasing to just 1.33% (+52.87% relative increase compared to baseline) and maximum error reaching 9.25% (+28.97% relative increase compared to baseline). Importantly, this level of accuracy remains fully acceptable for most practical applications while providing substantial (67%) reductions in testing costs. The relative stability of these metrics through Case 2 confirms that proper experimental design can maintain model fidelity even with significant sample reduction.

The extreme case of three-sample modeling (89% reduction, 261 data points) pushes these boundaries, resulting in an MAE of 2.04% (+134.5% relative to baseline) and a maximum error of 19.85% (+177.6% relative to baseline). While these increases appear substantial in relative terms, the absolute MAE remains below 2.1%, which is potentially acceptable for preliminary studies or rapid prototyping. The nearly 90% cost reduction (from 27 to 3 samples) achieved in this configuration highlights the framework’s potential for resource-constrained applications.

Critical to the success of minimal-sample configurations is the strategic selection of test conditions. Our results indicate that three carefully chosen samples, each representing a distinct combination of temperature, DoD, and Crate, can capture the essential degradation dynamics. This finding has significant implications for battery testing economics, potentially reducing validation costs by nearly an order of magnitude while maintaining usable accuracy for certain applications.

The nonlinear progression of error increases (39% → 53% → 135% for MAE) relative to sample reduction (33% → 67% → 89%) reveals important scaling relationships that inform test planning. Practitioners can use these quantified trade-offs to balance accuracy requirements against resource constraints.
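The relative increases and their scaling can be reproduced from the rounded MAE values reported above, as in the sketch below. With rounded inputs the Case 1 increase comes out near 40% rather than the quoted 39.11%, presumably because the published percentages were computed from unrounded errors. The sketch also computes the MAE added per removed sample between consecutive configurations, one simple (assumed) way to express the marginal accuracy cost of each reduction step.

```python
BASELINE = (27, 0.87)                        # (samples, full-matrix MAE in %)
CASES = [(18, 1.22), (9, 1.33), (3, 2.04)]   # Cases 1-3, validated on all 27 samples


def relative_increase(value, reference):
    """Percentage change of `value` relative to `reference`."""
    return 100.0 * (value - reference) / reference


rel = [relative_increase(mae, BASELINE[1]) for _, mae in CASES]

# MAE (percentage points) added per removed sample between consecutive configurations.
steps = [BASELINE] + CASES
marginal = [(m1 - m0) / (n0 - n1) for (n0, m0), (n1, m1) in zip(steps, steps[1:])]

print([round(r, 1) for r in rel])        # [40.2, 52.9, 134.5]
print([round(m, 3) for m in marginal])   # [0.039, 0.012, 0.118]
```

By this measure the final step, from 9 to 3 samples, costs roughly three times more MAE per removed sample than the first step, consistent with the threshold behavior discussed in Section 5.5.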

6. Conclusions

Lithium-ion battery development has long been constrained by the resource-intensive nature of traditional testing methods, which require numerous samples to undergo complete life-cycle analysis. Our research presents a breakthrough solution that dramatically reduces this burden while maintaining accuracy. By developing a data-efficient modeling framework, we demonstrate that as few as three strategically selected samples (just 11% of a standard test set) can predict battery degradation with a mean absolute error of only 2.04%, reducing testing costs by nearly 90%. The framework’s effectiveness rests on representative sampling across critical stress conditions (temperature variations, discharge depths, and charge rates) coupled with advanced optimization algorithms that extract maximum information from minimal data.

This innovation carries significant technical and economic implications for both research and industry. The model’s ability to adapt to real-world conditions through novel cycle conversion methods makes it particularly valuable for practical applications beyond controlled lab environments. For researchers, it enables more studies with limited resources; for manufacturers, it accelerates development cycles while controlling costs. Looking forward, the framework’s adaptability suggests promising applications for emerging battery chemistries and pack-level testing. By transforming one of the most persistent bottlenecks in battery development, our approach represents more than a methodological improvement: it offers a fundamental shift in energy storage validation that could accelerate progress toward sustainable electrification. The potential impacts range from faster electric vehicle development to more efficient grid-scale storage solutions, all achieved through smarter, more efficient testing protocols.

While this study focuses on a single, well-characterized dataset to ensure clarity and depth of analysis, we acknowledge the importance of evaluating the proposed methodology across a broader range of battery aging datasets. Extending the framework to other publicly available datasets with different chemistries and capacity ranges would provide further evidence of its generalizability. To this end, we plan to conduct a comprehensive benchmarking study in future work, assessing the performance and robustness of the proposed approach across multiple datasets.