Abstract

The implementation of image recognition technology can significantly enhance the levels of automation and intelligence in smart agriculture. However, most researchers have focused on its applications in medical imaging, industry, and transportation, while fewer have focused on smart agriculture. This study therefore aims to contribute to a comprehensive understanding of the application of image recognition technology in smart agriculture by reviewing the scientific literature on this technology published in recent years. We discuss and analyze its applications in plant disease and pest detection, crop species identification, crop yield prediction, and quality assessment. We then briefly introduce its applications in soil testing and nutrient management, agricultural machinery operation quality assessment, and agricultural product grading. Finally, we summarize the challenges and emerging trends of image recognition technology. The results indicate that the models used in image recognition face challenges such as limited generalization, insufficient real-time processing capability, and insufficient dataset diversity. Transfer learning and green Artificial Intelligence (AI) offer promising solutions to these issues by reducing the reliance on large datasets and minimizing computational resource consumption. Advanced architectures such as transformers further enhance the adaptability and accuracy of image recognition in smart agriculture. This comprehensive review provides valuable information on the current state of image recognition technology in smart agriculture and on prospective future opportunities.

1. Introduction

Image recognition technology (IRT) is an emerging technology in which computers process, analyze, and interpret images to identify various objects, including crops, faces, vehicles, landmarks, and other patterns across diverse environments. It is the foundation of stereoscopic vision, motion analysis, and data fusion, and it is of great significance in a variety of fields, such as navigation, map and terrain alignment, natural resource analysis, weather forecasting, environmental monitoring, physiological anomaly research, and smart agriculture [1]. “Smart agriculture” refers to the comprehensive application of modern information technology to realize visualized remote diagnosis, remote control, and disaster warning in agriculture [2]. The technologies involved include integrated computer (IC) technology [3], IRT [4], the internet of things (IoT) [5], audio-visual technology [6], remote sensing, the global positioning system (GPS), and geographic information system (GIS) technology [7], wireless sensor networks (WSN) [8], and expert knowledge. However, traditional IRT relies on simple model structures and requires manual image preprocessing, which limits its effectiveness in smart agriculture [9]. To address these limitations, researchers began to explore deeper network models, such as Convolutional Neural Networks (CNN) [10], Recurrent Neural Networks (RNN) [11], and Generative Adversarial Networks (GAN) [12], which allow machines to extract image features automatically and reduce human intervention [13]. In recent years, various image recognition systems have been successfully developed and applied in actual agricultural production [14]. These systems are mainly used for plant disease and pest detection, crop identification, crop yield prediction, and quality evaluation, providing effective solutions for improving agricultural efficiency.

In crop disease detection, high-precision image capture and real-time processing technologies allow various plant diseases to be quickly identified and classified, significantly improving the timeliness and effectiveness of disease management [15,16]. Advanced technologies such as hyperspectral imaging and multispectral cameras are widely applied in crop identification to enhance recognition accuracy and efficiency. Hyperspectral imaging captures spectral data beyond the human visual range, enabling precise classification of crops such as hard and soft wheat [17]. Multispectral cameras have performed well in practical applications, for example in analyzing leaf reflectance characteristics for grape variety identification and supporting vineyard management [18]. Combining these technologies with machine learning and deep learning models improves crop classification accuracy, thereby promoting agricultural production and management. In addition, unmanned aerial vehicles (UAVs) combined with IRT show tremendous potential in crop yield prediction and quality assessment, supporting large-scale monitoring and resource optimization [19,20,21].

However, most research is still at the prototype stage, and several challenges remain, including insufficient datasets, weak real-time detection capabilities, and difficulty in detecting multiple diseases simultaneously. Agricultural products are often hard to distinguish during identification because of similar spectral characteristics, salt-and-pepper noise, and low-resolution crop images [22]. In practical field conditions, particularly in high-density plantings, crops can occlude each other, making it difficult to distinguish individual plants in images and increasing the complexity of identification.

In soil testing and nutrient management, combining IRT with machine learning algorithms such as support vector machines (SVM) and CNN has enabled efficient soil type classification and nutrient detection and has improved the precision of crop growth monitoring and fertilizer application in agricultural production. However, when the soil is covered with dense vegetation, current machine learning and image recognition algorithms struggle to segment and identify soil features accurately. Even advanced models, such as improved versions of Mask R-CNN [23], face challenges when processing images of high-density environments. In the evaluation of agricultural machinery operation quality, the integration of deep learning (DL) models has enabled real-time monitoring and quality evaluation of the machinery operation process, ensuring accuracy and efficiency [24]. In crop grading, because fruits and vegetables have a short shelf life and are easily damaged, slow and time-consuming manual sorting reduces distribution efficiency [25]. Integrating emerging technologies into crop sorting equipment has therefore become an inevitable trend in modern agriculture. Technologies such as IRT and computer vision (CV) can rapidly, accurately, and non-destructively detect the appearance characteristics of agricultural products, enabling quality assessment, classification, and grading [26,27]. Three-dimensional imaging combined with CV shows significant advantages in the quality assessment and automatic grading of apples [28]. These technologies help group products into batches of uniform quality, making them easier to sell and transport while reducing the risk of defective products being mixed in.

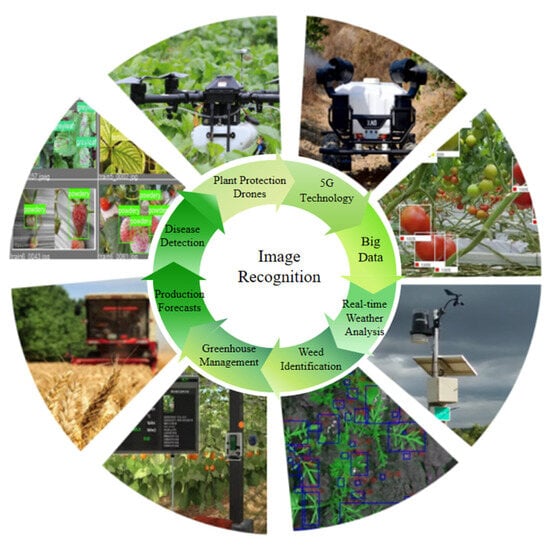

As shown in Figure 1, IRT has a wide range of applications in modern agriculture. In plant protection, IRT enables plant protection drones to accurately identify pest-affected areas and apply precise pesticide spraying, reducing chemical usage and minimizing environmental impact. In weed identification, IRT combined with CV, multispectral, and hyperspectral imaging technologies effectively distinguishes weeds from crops, achieving precision weed management and optimizing herbicide use. In greenhouse management, IRT integrated with IoT devices and deep learning algorithms provides real-time monitoring of plant health and environmental conditions, enabling timely disease identification and resource optimization. Additionally, IRT combined with meteorological sensors offers real-time weather analysis, helping farmers optimize irrigation, planting, and harvesting schedules based on weather conditions. By integrating with big data platforms and machine learning technologies, IRT also uncovers patterns and trends in agricultural data, optimizing agricultural practices and promoting more efficient and sustainable agricultural development.

Figure 1.

Applications of image recognition technology in smart agriculture. Source: Original figure created by the authors.

Moreover, integrating IRT with other technologies can further improve its application in smart agriculture [29]. For instance, combining it with emerging technologies such as remote sensing (RS), IoT, and blockchain can improve the efficiency of data collection and processing and raise the level of intelligence and automation of agricultural systems, making the agricultural industry more precise and efficient.

In summary, IRT has been widely applied in various aspects of smart agriculture. However, it still faces numerous challenges in complex environments and under fluctuating climatic conditions, such as limited model generalization, the limited real-time processing ability of algorithms, and poor adaptability across different crops and growth stages. This study therefore aims to present a holistic overview of the development of IRT and its applications in smart agriculture.

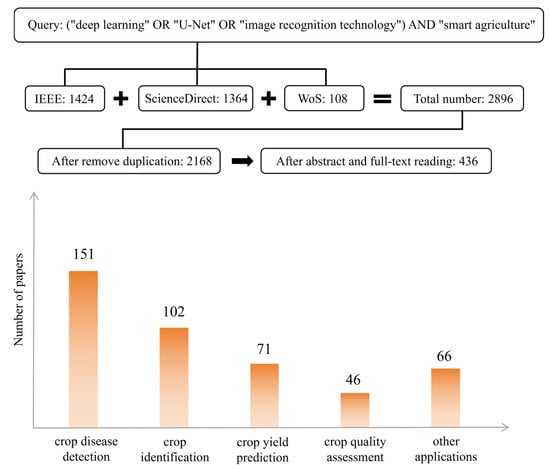

Related Work: To investigate research on agricultural image recognition techniques, we searched three digital databases for relevant existing studies: (1) IEEE Xplore, (2) Web of Science, and (3) ScienceDirect. The search queries and results are shown in Figure 2. The search timeframe was 2019 to 2024. The research objects were crop-related tasks, and the research questions focused on deep learning techniques and image recognition techniques.

Figure 2.

Literature search and selection process.

In the first stage, we searched the three databases and retrieved a total of 2896 documents. In the second stage, 728 duplicates were removed. In the third stage, we screened the remaining 2168 papers against the following criterion: only papers that discussed image recognition techniques and their specific applications in agricultural scenarios in detail were retained. After abstract and full-text screening, 436 highly relevant papers (including 56 review papers) were selected. Based on the search results, we grouped the research applications into the following areas: crop disease detection, crop identification, and crop yield prediction and quality assessment.

As shown in Table 1, we selected 6 representative papers from 56 survey papers for comparative analysis. Several authors [30,31,32,33,34,35] provided reviews on the application of agricultural IRT in various fields, including plant disease detection, crop identification, and yield prediction. In these studies, deep learning, CV methods, and data analysis techniques are widely used. Tian et al. [32] introduced a deep learning-based quality assessment method, which outperforms traditional methods in crop quality monitoring. Dhanya et al. [33] emphasized the impact of environmental variables on crop yield prediction, pointing out that most current methods fail to adequately consider factors such as climate and soil variations. The contributions of this survey compared to recent literature in the field can be summarized as follows:

- Compared to other studies in the field, this review provides a comprehensive summary of agricultural IRT applications, enabling researchers to quickly and thoroughly understand the various technologies and their research foundations.

- We provide an overview of application areas such as plant disease detection, crop identification, and quality assessment. Additionally, we discuss how to optimize these applications by adopting deep learning and CV techniques.

- We present future research opportunities aimed at further improving crop recognition accuracy, achieving precision agriculture, and exploring the potential of new technologies in agricultural automation.

Table 1.

Comparison of related studies on agricultural image recognition technology applications.


| Year | Reference | Plant Disease Detection | Crop Identification | Crop Yield Prediction | Quality Assessment | Soil Nutrient Management | Fruit Grading |
|---|---|---|---|---|---|---|---|
| 2018 | Diego et al. [30] | √ | √ |  |  |  |  |
| 2019 | Kirtan et al. [31] | √ | √ |  |  |  |  |
| 2020 | Tian et al. [32] | √ | √ | √ |  |  |  |
| 2022 | Dhanya et al. [33] | √ | √ | √ |  |  |  |
| 2022 | Mamat et al. [34] | √ | √ | √ |  |  |  |
| 2024 | Lian et al. [35] | √ | √ |  |  |  |  |
| 2024 | This review | √ | √ | √ | √ | √ | √ |

The rest of this paper is organized as follows. Section 2 introduces the development and fundamental processes of IRT. Section 3 covers its applications in plant disease and pest detection and classification, crop identification, and crop yield prediction and quality assessment, and briefly reviews other applications of IRT in smart agriculture. Section 4 analyzes the significant scientific achievements and challenges in this field in recent years and explores future directions for agricultural IRT. This comprehensive review provides valuable information on the current state of IRT in smart agriculture and prospective future opportunities.

2. Overview of the Development of Image Recognition Technology

2.1. Image Recognition Technology

IRT is the use of computers to process, analyze, and interpret images in order to identify various patterns and objects. A series of enhancement and reconstruction techniques is used to improve the quality of suboptimal images [36,37,38,39]. IRT comprises image acquisition, preprocessing, data augmentation, feature extraction, feature matching, classifier design, classification decision-making, and recognition and matching [40]. Image acquisition is the first step, in which images are captured with cameras, drones, satellite imaging systems, and handheld imaging devices such as portable hyperspectral or thermal cameras. During acquisition, sensors convert light or sound into electronic signals, capturing basic information about the object of study and transforming it into a form that machines can recognize. Image preprocessing is the second step; it makes necessary adjustments to the raw image (such as grayscale conversion, noise reduction, enhancement, and size normalization) so that the images are clearer and better suited to subsequent processing stages [41]. Data augmentation follows preprocessing as an optional step that is nevertheless essential in many cases: synthetic transformations such as rotation, flipping, cropping, scaling, and color adjustment are applied to the original images. This increases the diversity of the dataset and improves the robustness of image recognition models by simulating various real-world conditions. Feature extraction is the third step and a crucial stage in image recognition; it extracts useful features from the image to facilitate subsequent classification or identification tasks [42]. Edge-, shape-, and texture-based feature extraction methods are all practical; they analyze the edges, shapes, and textures of the image to derive characteristics useful for further processing. Feature matching is the fourth step, in which feature points in two or more images are compared and matched [43]. Classifier design and classification decision-making form the fifth step. Recognition and matching is the last step, in which the extracted features are matched against known patterns to identify the target object.

IRT now permeates various fields and has become an indispensable component across industries. Although these fields give direction to the development of IRT, its practical application may be influenced by numerous factors, including the pace of technological advancement, shifts in market demand, and changes in policies and regulations.
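A minimal sketch of the data augmentation step described above follows. It assumes images are stored as H × W × 3 `uint8` NumPy arrays; the helper name `augment` and its parameters are hypothetical, rotation is limited to multiples of 90° so that the sketch needs nothing beyond NumPy, and nearest-neighbour resampling stands in for the interpolation a dedicated augmentation library would use.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator,
            crop_frac: float = 0.8, max_brightness: float = 0.2) -> np.ndarray:
    """Return one randomly augmented copy of an H x W x 3 uint8 image.

    Hypothetical helper for illustration only; real pipelines typically use
    an augmentation library that supports arbitrary-angle rotation.
    """
    h, w = image.shape[:2]
    out = image.astype(np.float32)

    # Rotation by a random multiple of 90 degrees (keeps pixels on the grid).
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))

    # Random horizontal flip.
    if rng.random() < 0.5:
        out = out[:, ::-1]

    # Random crop covering crop_frac of each side ...
    ch, cw = int(out.shape[0] * crop_frac), int(out.shape[1] * crop_frac)
    top = int(rng.integers(0, out.shape[0] - ch + 1))
    left = int(rng.integers(0, out.shape[1] - cw + 1))
    out = out[top:top + ch, left:left + cw]
    # ... then nearest-neighbour rescaling back to the original H x W (scaling).
    rows = (np.arange(h) * ch / h).astype(int)
    cols = (np.arange(w) * cw / w).astype(int)
    out = out[rows][:, cols]

    # Brightness (colour) adjustment by a random factor in [1 - m, 1 + m].
    out = out * rng.uniform(1 - max_brightness, 1 + max_brightness)
    return np.clip(out, 0, 255).astype(np.uint8)


# Example: eight augmented variants of a random stand-in "leaf" image.
rng = np.random.default_rng(0)
leaf = rng.integers(0, 256, size=(64, 48, 3), dtype=np.uint8)
batch = [augment(leaf, rng) for _ in range(8)]
```

Each call draws fresh random parameters, so repeated calls on the same image yield different training samples; augmentation is therefore often applied on the fly during training rather than stored on disk.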

2.2. Development of Image Recognition Technology

As a core part of the CV field, IRT has undergone a long development process, which can be divided into four key stages: the inception stage, the breakthrough stage, the deep development stage, and the application expansion stage. The four stages are as follows:

2.2.1. Inception Stage

The origins of IRT date back to the 1950s. During this stage, image recognition relied primarily on simple feature extraction and template-matching techniques [44]. Because computer technology was still in its infancy and its capacity for image processing was limited, image recognition at that time was mainly applied to recognizing simple digits, letters, and symbols. Although the technology at this stage was relatively rudimentary, it laid the foundation for the subsequent development of IRT.

2.2.2. Breakthrough Stage

In the early 1990s, more advanced algorithms, such as neural network-based image recognition methods, were introduced into IRT for processing and analyzing images, bringing a significant breakthrough. These advances enhanced image processing capabilities and provided more efficient solutions for agricultural practitioners [45]. In addition, digital image processing technology made the storage, transmission, and processing of images more convenient. Together, these breakthroughs significantly improved the accuracy and efficiency of IRT.

2.2.3. Deep Development Stage

In the early 21st century, IRT developed further with the rise of DL [46]. DL enables computers to automatically extract useful features from vast amounts of data without manual feature engineering, which has substantially improved image recognition accuracy. At the same time, the widespread availability of GPUs and other computational devices greatly accelerated the training of DL algorithms, further improving IRT in practical applications.

2.2.4. Application Expansion Stage

Today, the application scenarios for IRT have expanded further with the rapid development of the IoT, big data, and other technologies. For example, IRT is applied to (1) detecting and identifying faces, objects, and other targets in surveillance videos in intelligent security; (2) assisting doctors in rapidly diagnosing diseases in the medical field; (3) vehicle detection and traffic and congestion analysis [47,48,49] in intelligent transportation; and (4) object tracking in fields such as agriculture and logistics. Object tracking further enhances the ability to monitor and manage dynamic targets in real time, supporting applications such as tracking livestock movements or agricultural machinery in smart farming.

In short, from the initial stage of simple feature extraction and template matching, through the breakthrough stage of neural network-based image recognition, to DL, and finally to the application expansion stage driven by IoT and big data, IRT has undergone a long and continuous development. With continuing innovation in emerging technologies, IRT is expected to bring further innovation to various fields.

3. Application of Image Recognition Technology in Smart Agriculture

In this section, we focus on three key categories of image recognition applications that are important and widely used in smart agriculture: plant disease detection, crop species identification and classification, and crop yield prediction and quality assessment. These categories play a vital role in addressing critical agricultural challenges such as crop health management, resource optimization, and supply chain planning. By focusing on them, this section provides a comprehensive overview of the applications of image recognition technologies in smart agriculture.

3.1. Application of Image Recognition Technology in Plant Disease Detection and Classification

Plant diseases severely threaten crop yields and food safety and directly reduce farmers’ income [50]. According to published statistics, disease-related loss rates for rice, wheat, barley, corn, potatoes, soybeans, cotton, and coffee beans range from 9% to 16%, and the annual budget for bactericides used in disease control in the United States alone exceeded 5 billion dollars. In Australia, the direct annual financial loss caused by plant diseases across six major agricultural products reached 8.8 billion dollars, against a total product value of 74 billion dollars [51]. Historically, the Irish Potato Famine of the 1840s, caused by the late blight pathogen, and the Bengal Famine of 1943, associated with rice brown spot, resulted in millions of deaths [52]. To mitigate the impact of plant diseases, it is imperative to monitor crop health continuously and implement effective control measures swiftly, which can significantly reduce losses. However, traditional manual detection is inefficient for large-scale disease monitoring. Even when substantial human and financial resources were invested, it remained difficult to provide farmers with accurate and timely information on disease progression, which delayed action and ultimately exacerbated economic losses. Rapid and accurate identification of plant diseases, followed by prompt countermeasures, is therefore urgently needed.

3.1.1. The Process of Plant Disease Detection

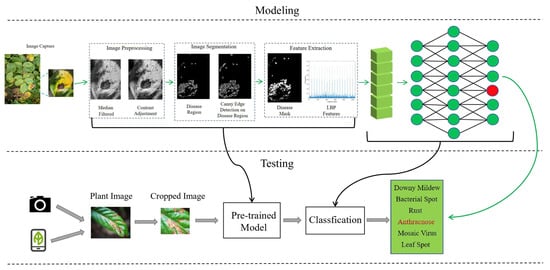

In the agricultural industry, IRT for crop disease detection is key to improving crop health management and production efficiency [53]. The technique involves acquiring crop images, preprocessing them to enhance quality, extracting key features, and identifying and classifying diseases with a machine learning model [54]. The overall process depends not only on high-quality image acquisition but also on image processing and analysis algorithms that can recognize the characteristics of crop diseases. In the first step, clear images of the crops must be captured under good lighting conditions with high-definition cameras or other image acquisition devices to facilitate subsequent analysis [55]. In the preprocessing step, grayscale conversion, binarization, and noise filtering (Figure 3) enhance image quality, simplify the content, and reduce the complexity of subsequent processing [56], ensuring that the images are clear and suitable for feature extraction. As Figure 3 illustrates, the quality of image acquisition and preprocessing directly affects the accuracy of feature extraction. Feature extraction then identifies critical visual features, such as color, texture, and shape, that indicate disease symptoms; for example, changes in leaf color or texture caused by disease are captured in this step. The extracted features are fed into machine learning algorithms for disease identification and classification, as shown in the final stages of Figure 3. The process highlights the importance of high-quality input images and advanced preprocessing techniques: robust preprocessing and precise feature extraction are critical for improving the accuracy of disease detection models, particularly in complex environments.
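The preprocessing step (grayscale conversion, noise filtering, and binarization) can be sketched in a few lines of NumPy. This is an illustrative sketch, not the pipeline of any cited study: it assumes an RGB `uint8` input, uses the ITU-R BT.601 luma weights for grayscale conversion, a 3 × 3 median filter (a standard remedy for the salt-and-pepper noise mentioned in the Introduction), and Otsu's method to choose the binarization threshold. All function names are hypothetical.

```python
import numpy as np


def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Weighted RGB-to-gray conversion with ITU-R BT.601 luma coefficients."""
    weights = np.array([0.299, 0.587, 0.114])
    return (rgb[..., :3].astype(np.float64) @ weights).round().astype(np.uint8)


def median_filter3(gray: np.ndarray) -> np.ndarray:
    """3x3 median filter with edge replication; suppresses salt-and-pepper noise."""
    padded = np.pad(gray, 1, mode="edge")
    h, w = gray.shape
    # Stack the nine shifted views of the image and take the per-pixel median.
    windows = [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    return np.median(np.stack(windows), axis=0).astype(np.uint8)


def otsu_threshold(gray: np.ndarray) -> int:
    """Return the threshold t that maximises between-class variance (Otsu)."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                  # probability of class "<= t"
    mu = np.cumsum(prob * np.arange(256))    # cumulative mean of class "<= t"
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    if np.all(np.isnan(sigma_b)):            # constant image: no valid split
        return int(gray.min())
    return int(np.nanargmax(sigma_b))


def binarize(gray: np.ndarray) -> np.ndarray:
    """Pixels above the Otsu threshold become 1 (foreground), others 0."""
    return (gray > otsu_threshold(gray)).astype(np.uint8)


# Synthetic example: dark background, bright 10x10 "lesion", two noisy pixels.
img = np.full((20, 20, 3), 30, dtype=np.uint8)
img[5:15, 5:15] = 200
img[2, 2] = 255      # isolated "salt" pixel on the background
img[10, 10] = 0      # isolated "pepper" pixel inside the lesion
gray = median_filter3(to_grayscale(img))
mask = binarize(gray)
```

Note that the median filter removes both isolated noise pixels but also rounds off the four corners of the square region, a typical trade-off of this filter.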

Figure 3.

Process of plant disease identification based on image recognition technology. The images were captured under uniform lighting conditions using high-definition cameras. Preprocessing involved median filtering to reduce noise and contrast adjustment to improve clarity. The disease regions were segmented using Canny edge detection, and local binary pattern (LBP) features were extracted for further classification. Source: Original figure created by the authors.
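The LBP features named in the caption can be illustrated with the basic 8-neighbour, radius-1 operator, which encodes each pixel by thresholding its neighbours against the centre value and summarizes a region as a 256-bin histogram. This is a minimal sketch of that basic operator only (not the rotation-invariant or uniform variants, and not the authors' exact configuration); the function names and neighbour ordering are assumptions.

```python
import numpy as np

# Offsets of the 8 neighbours, clockwise from the top-left; neighbour i sets bit i.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(gray: np.ndarray) -> np.ndarray:
    """Basic 3x3 LBP code for every interior pixel of a 2-D grayscale image."""
    g = gray.astype(np.int32)
    centre = g[1:-1, 1:-1]
    h, w = centre.shape
    codes = np.zeros((h, w), dtype=np.int32)
    for bit, (dy, dx) in enumerate(NEIGHBOURS):
        neighbour = g[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
        # A neighbour at least as bright as the centre contributes a 1 bit.
        codes |= (neighbour >= centre).astype(np.int32) << bit
    return codes


def lbp_histogram(gray: np.ndarray) -> np.ndarray:
    """Normalised 256-bin histogram of LBP codes, usable as a texture feature vector."""
    hist = np.bincount(lbp_codes(gray).ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()


# A 3x3 patch with a dark left column and a bright right column.
patch = np.array([[0, 5, 9], [0, 5, 9], [0, 5, 9]], dtype=np.uint8)
print(lbp_codes(patch))  # prints [[62]]
```

In a disease classifier, the histogram computed over a leaf region (or over a grid of sub-regions, concatenated) would form the feature vector passed to the classifier.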

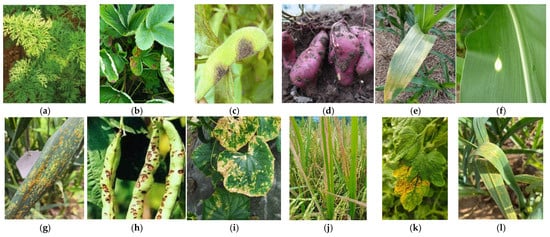

In agricultural research and crop health management, the key visual features in crop images are the primary basis on which image processing and DL techniques determine whether a crop is diseased. These features include color changes, abnormal shapes and sizes, distinctive textures and edge characteristics, and spots and lesions [57]. They reflect the impact of diseases on crop appearance, including leaf chlorosis, morphological variations, and changes in surface texture [58]; common types of crop diseases are shown in Figure 4. Precise image acquisition and analysis combined with advanced machine learning algorithms, particularly DL models, can learn to identify diseases from large amounts of data, thereby significantly improving the efficiency and accuracy of crop disease management [59].

Figure 4.

Common types of crop diseases (a): Carrot leaf curl disease; (b): Strawberry snake eye disease; (c): Soybean anthracnose; (d): Sweet potato soft rot; (e): Corn brown spot disease; (f): Corn round spot disease; (g): Wheat leaf rust; (h): Bean gray mold disease; (i): Cucumber leaf spot; (j): Bacterial leaf blight of rice; (k): Bacterial speck of tomato; (l): Garlic rust.

3.1.2. Deep Learning Technologies for Plant Disease Detection

In recent years, rapid advances in AI have enabled its application across various fields and have effectively addressed critical challenges within these domains. As a key component of AI, CV provides practical tools and methods for solving cross-domain problems and has driven the development of multiple industries, especially in object identification [60]. One of the most widely used CV models is the CNN, owing to its excellent performance in object detection, which stems from its ability to learn features directly from input images.

To address the complex problem of tomato disease identification, Fuentes et al. [61] employed DL technologies such as the R-CNN, Faster R-CNN, and SSD detectors together with VGG and residual network (ResNet) backbones. They successfully identified nine types of tomato diseases in images captured under complex field conditions around the plants. Similarly, Patil et al. [62] developed a severity estimation system based on image processing for three major rice diseases (brown spot, rice blast, and bacterial blight); the system identified disease types and severity with an overall accuracy of 96.43%. In addition, YOLOv8 (You Only Look Once v8), a deep learning-based object detection model, has been applied to crop leaf disease detection through efficient spatial feature learning. Abid et al. [63] applied YOLOv8 to detect crop leaf diseases in Bangladesh; the training results showed that the model achieved a mean average precision (mAP) of 98% and an F1 score of 97% in recognizing 19 types of crop diseases, demonstrating its potential for real-time, high-precision crop disease detection in complex environments.
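The mAP and F1 figures quoted above are standard detection metrics. As a reminder of how the F1 score combines precision and recall, the sketch below computes all three from true-positive, false-positive, and false-negative counts. The counts in the example are invented for illustration and are not taken from [63]; mAP, which additionally averages the area under the precision-recall curve over classes, is not reproduced here.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Precision, recall and F1 from detection counts.

    precision = TP / (TP + FP)
    recall    = TP / (TP + FN)
    F1        = harmonic mean of precision and recall
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts for one disease class: 90 correct detections,
# 10 false alarms, and 30 missed lesions.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(f"precision={p:.2f} recall={r:.2f} F1={f1:.3f}")
# prints: precision=0.90 recall=0.75 F1=0.818
```

Because F1 is a harmonic mean, it is pulled toward the weaker of the two values, so a detector that misses many lesions cannot compensate with high precision alone.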

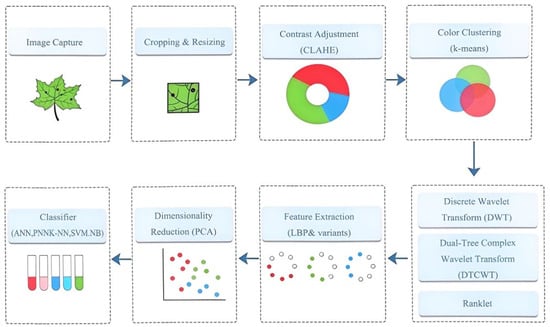

Mathew et al. [64] developed an automated image processing system for the early detection of major fungal diseases in bananas, achieving a classification accuracy of 95.4% with methods such as K-Nearest Neighbors (KNN), SVM, and Naive Bayes (NB) [65]. The effectiveness of these classification methods was evaluated using cross-validation and performance metrics, including accuracy and F1-score. As shown in Figure 5, the system combines preprocessing steps (cropping, resizing, and contrast enhancement using Contrast Limited Adaptive Histogram Equalization (CLAHE)) with feature extraction techniques (such as LBP and wavelet transforms) and classifiers to ensure accurate disease classification. This method demonstrates how multi-step image processing combined with machine learning classifiers can effectively extract and classify disease features, supporting the accuracy and practicality of such techniques for disease detection in complex agricultural environments and providing farmers with tools for timely disease control.
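The KNN classifier and cross-validation mentioned above can be sketched together in plain NumPy. The sketch assumes texture features (for example, LBP histograms) have already been extracted into a matrix `X` with integer labels `y`; `knn_predict` and `cross_val_accuracy` are hypothetical helper names, and the toy data are synthetic rather than taken from [64]. The all-pairs distance computation also makes concrete why KNN queries become costly as the training set grows, a limitation noted for [74] in Table 2.

```python
import numpy as np


def knn_predict(X_train, y_train, X_query, k=3):
    """Majority vote among the k nearest training samples (Euclidean distance)."""
    # Pairwise distances: every query is compared with every training sample.
    d = np.linalg.norm(X_query[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    votes = y_train[nearest]
    return np.array([np.bincount(row).argmax() for row in votes])


def cross_val_accuracy(X, y, k=3, folds=5, seed=0):
    """Mean accuracy over `folds` shuffled, disjoint train/test splits."""
    idx = np.random.default_rng(seed).permutation(len(y))
    scores = []
    for test_idx in np.array_split(idx, folds):
        train_idx = np.setdiff1d(idx, test_idx)
        pred = knn_predict(X[train_idx], y[train_idx], X[test_idx], k)
        scores.append(np.mean(pred == y[test_idx]))
    return float(np.mean(scores))


# Synthetic two-class feature vectors (e.g., healthy vs. diseased texture).
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(0.0, 0.5, (40, 4)), rng.normal(3.0, 0.5, (40, 4))])
y = np.array([0] * 40 + [1] * 40)
print(f"5-fold accuracy: {cross_val_accuracy(X, y):.2f}")
```

Cross-validation reports the mean over folds so that every sample is used for testing exactly once, which gives a less optimistic estimate than scoring on the training data.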

Figure 5.

Classification methods for fungal diseases. Images of fungal-infected leaves were captured under uniform lighting conditions using high-resolution cameras. The preprocessing steps included cropping, resizing, and contrast adjustment using CLAHE. Features were extracted using LBP variants, Discrete Wavelet Transform (DWT), Dual-Tree Complex Wavelet Transform (DTCWT), and Ranklet methods. Classification was performed using machine learning algorithms such as ANN, PNN, K-NN, SVM, and NB. Dimensionality reduction was performed using PCA to enhance model efficiency and accuracy. Source: Original figure created by the authors.
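The PCA step in the caption projects high-dimensional texture features onto the few directions of largest variance before classification. A minimal SVD-based sketch follows; `pca_fit_transform` is a hypothetical name, the input is assumed to be an n_samples × n_features matrix, and the number of retained components is a free parameter rather than a value from the cited study.

```python
import numpy as np


def pca_fit_transform(X: np.ndarray, n_components: int):
    """Project X onto its top principal components.

    Returns the projected data and the fraction of total variance that
    each retained component explains.
    """
    Xc = X - X.mean(axis=0)                      # centre every feature
    # Rows of Vt are the principal directions, ordered by decreasing singular value.
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    Z = Xc @ Vt[:n_components].T
    return Z, explained[:n_components]


# Example: 100 synthetic 10-D feature vectors that vary mostly along one direction.
rng = np.random.default_rng(0)
direction = rng.normal(size=10)
X = np.outer(rng.normal(size=100), direction) + 0.01 * rng.normal(size=(100, 10))
Z, ratio = pca_fit_transform(X, n_components=2)
print(Z.shape, ratio.round(3))
```

The explained-variance ratios are a common guide for choosing how many components to keep: once they become negligible, further components mostly carry noise.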

The aforementioned studies illustrate the great potential and advantages of DL and image processing technologies in agricultural disease detection and classification. These technologies can reduce the need for manual sample collection, provide instant detection, and are expected to achieve large-scale application through drones. However, certain limitations and challenges remain, primarily in the following aspects:

- The disease identification performance of models and algorithms in complex environments is still insufficient and needs further improvement.

- Sample collection and annotation, model training and validation, and model interpretation and decision-making still rely on manual intervention, which directly affects recognition accuracy.

- The applicability and stability of these systems under different environmental and cultivation conditions remain unclear, and the scalability of new technologies across crop species and their practical application are limited.

- CNNs require large amounts of image data for training, but the data currently available for plant disease identification research are limited [66].

To address the data limitation noted above, Transfer Learning (TL) was proposed [67]. In TL, a CNN is pre-trained on publicly available image datasets and then fine-tuned on a smaller, targeted dataset, so that the new recognition model reuses the prior knowledge contained in the pre-trained network. This effectively alleviates the overfitting caused by insufficient data and reduces the training time required for the target scenario. Based on this approach, Mehri et al. [68] proposed a DL model called AppleNet, which was applied to apple disease detection with an accuracy of 96.00%. Similarly, because rice leaf disease images are difficult to obtain in practice, Ghosal et al. [69] built a small dataset and developed a TL-based CNN model that reached an accuracy of 92.46%. A summary of key studies on deep learning-based plant disease detection is presented in Table 2.
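The simplest form of the TL recipe described above (reuse a pretrained network, freeze it, and train only a small task-specific head on the limited target dataset) can be sketched without any deep learning framework. In this sketch the "pretrained backbone" is a stand-in: a fixed random projection followed by ReLU, used purely as an assumption so the example runs on its own; in practice it would be the feature layers of a CNN pretrained on a large public dataset. Only the softmax head is trained, by plain gradient descent, and all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# ASSUMPTION: stand-in for a frozen, pretrained backbone. A fixed random
# projection followed by ReLU plays the role of a pretrained CNN's feature
# layers so that the sketch runs without downloading any weights.
W_BACKBONE = rng.normal(size=(16, 64)) / 16.0


def backbone_features(x: np.ndarray) -> np.ndarray:
    """Frozen feature extractor: W_BACKBONE is never updated below."""
    return np.maximum(x @ W_BACKBONE, 0.0)


def train_head(feats, labels, n_classes, lr=0.05, epochs=500):
    """Train only a new softmax classification head on the frozen features."""
    W = np.zeros((feats.shape[1], n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[labels]
    for _ in range(epochs):
        logits = feats @ W + b
        logits -= logits.max(axis=1, keepdims=True)   # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / len(labels)          # d(mean cross-entropy)/d(logits)
        W -= lr * feats.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b


def predict(x, W, b):
    """Class predictions: frozen backbone followed by the trained head."""
    return np.argmax(backbone_features(x) @ W + b, axis=1)


# Deliberately small synthetic "target" dataset: 3 classes, 10 samples each.
centres = rng.normal(scale=3.0, size=(3, 16))
y_small = np.repeat(np.arange(3), 10)
x_small = centres[y_small] + rng.normal(size=(30, 16))

W, b = train_head(backbone_features(x_small), y_small, n_classes=3)
train_acc = float(np.mean(predict(x_small, W, b) == y_small))
print(f"training accuracy on the small target set: {train_acc:.2f}")
```

Freezing the backbone keeps the number of trainable parameters small, which is what limits overfitting on a tiny dataset; fine-tuning some or all backbone layers with a small learning rate is a common alternative when more target data are available.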

In plant disease identification, TL performs well, especially when image data are scarce or when training a model from scratch is too costly. By building on knowledge learned from large datasets, it saves computational resources and training time [70] and enhances the model’s generalization ability, so that plant diseases can be recognized and classified more effectively in realistic environments. It not only improves classification accuracy but also strengthens the model’s ability to handle complex backgrounds and disease features [71].

In addition, special weather conditions such as cloudy days can significantly reduce the contrast between diseased areas and healthy parts of crops, making it more difficult for image recognition algorithms to detect early-stage diseases. Several studies have explored techniques to mitigate the impact of adverse weather. For example, image enhancement methods such as contrast adjustment and color correction have been shown to improve image quality under low-light or cloudy conditions by enhancing the contrast between diseased and healthy plant parts. Furthermore, incorporating multimodal data, such as infrared and thermal imaging, has proven effective in compensating for reduced visibility caused by insufficient lighting. These approaches can enhance the robustness and accuracy of disease detection algorithms, particularly in complex environmental conditions. Future research should therefore focus on optimizing models for diverse weather conditions, further improving the applicability of crop disease detection systems in real-world scenarios. Key studies on deep learning-based plant disease detection are summarized in Table 2.
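The two enhancement operations mentioned above, contrast adjustment and color correction, can be sketched as a percentile-based contrast stretch and a gray-world white balance. These are common textbook choices assumed here for illustration, not the specific methods of the studies alluded to; the function names and the 2nd/98th percentile defaults are hypothetical.

```python
import numpy as np


def gray_world_balance(rgb: np.ndarray) -> np.ndarray:
    """Colour correction under the gray-world assumption.

    Each channel is scaled so that its mean equals the mean over all channels,
    which removes a global colour cast (e.g., a bluish tint under overcast light).
    """
    img = rgb.astype(np.float64)
    channel_means = img.reshape(-1, 3).mean(axis=0)
    gains = channel_means.mean() / np.maximum(channel_means, 1e-6)
    return np.clip(img * gains, 0, 255).astype(np.uint8)


def contrast_stretch(rgb: np.ndarray, low_pct=2.0, high_pct=98.0) -> np.ndarray:
    """Linearly map the [low_pct, high_pct] intensity percentiles to [0, 255]."""
    img = rgb.astype(np.float64)
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi <= lo:                      # flat image: nothing to stretch
        return rgb.copy()
    return np.clip((img - lo) / (hi - lo) * 255.0, 0, 255).astype(np.uint8)


# Simulated dull "cloudy day" image: values squeezed into 60..120 plus a blue cast.
rng = np.random.default_rng(1)
dull = rng.integers(60, 121, size=(32, 32, 3), dtype=np.uint8)
dull[..., 2] += 20                    # blue channel now spans 80..140 (no overflow)
enhanced = contrast_stretch(gray_world_balance(dull))
print(dull.min(), dull.max(), "->", enhanced.min(), enhanced.max())
```

Colour correction is applied before stretching so that the stretch does not amplify the colour cast; clipping the extreme percentiles keeps a few very bright or dark pixels from dominating the mapping.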

Table 2.

Research progress in image recognition for plant disease detection.


| Research Object | Year | Image Processing Task | Network Framework | Accuracy | Laboratory or Field Use | Notes |
|---|---|---|---|---|---|---|
| Banana | 2021 | Banana fungal disease detection | Artificial Neural Networks (ANN), Probabilistic Neural Networks (PNN) | 95.40% | Laboratory | Lighting, shadows, and leaf overlap can affect texture feature extraction and classification accuracy [64]. |
| Wheat | 2021 | Detection of five fungal diseases in wheat | EfficientNet-B0 | 94.20% | Field | Additional personnel are required to develop and maintain the mobile application [72]. |
| Pomegranate | 2021 | Pomegranate leaf disease identification | SVM, CNN | 98.07% | Laboratory | Disease manifestations may vary across regions; the model needs training data from a broader range of areas to improve its generalization ability [73]. |
| Tomato | 2022 | Tomato disease detection | VGG, ResNet | N/A | Field | Insufficient samples and high pattern variation cause confusion with other categories, resulting in false positives or lower average precision [61]. |
| Rice | 2022 | Rice disease detection | FRCNN, EfficientNet-B0 | 96.43% | Laboratory | The limited samples collected cannot cover all rice varieties and growth environments [62]. |
| Apple | 2022 | Apple disease detection | KNN | 99.78% | Laboratory | KNN must compute the similarity between each query image and all training images, which leads to high computational complexity and limits real-time performance [74]. |
| Rice | 2022 | Rice disease identification | CNN | 99.28% | Laboratory | Optimizing overall classification accuracy may come at the expense of precise subcategory differentiation, leading to subclassification errors [75]. |
| Tomato | 2022 | Tomato disease identification | CNN | 96.30% | Laboratory | The applicability of vision transformers to image recognition decreases after significant changes in image acquisition conditions [76]. |
| Rice | 2022 | Rice disease identification and detection | MobInc-Net | 99.21% | Field | As a lightweight network, MobileNet faces computational resource limitations when processing high-resolution images or performing real-time monitoring [77]. |
| Soybean | 2023 | Soybean leaf disease identification | ImageNet, TRNet18 | 99.53% | N/A | No experiments or results are reported for cross-crop applications, so the actual transferability is unknown [78]. |
| Corn | 2023 | Identification of nine corn diseases | VGGNet | 98.10% to 100% | Laboratory | Pre-trained models such as VGG16 perform well on large-scale datasets but may overfit when applied to smaller datasets [79]. |
| Sweet pepper | 2023 | Detection of bacterial spot disease in sweet pepper | YOLOv3 | 90.00% | Field | When multiple diseases occur on the same leaf, their features may overlap, leading to false positives or missed detections [80]. |
| Wheat | 2024 | Recognition of wheat rust | Imp-DenseNet | 98.32% | Laboratory | No specific measures for handling confounding factors are described [81]. |
| Crop leaf disease | 2024 | Detection of 19 types of crop leaf diseases | YOLOv8 | 98.00% | Laboratory | The model demonstrates real-time, high-precision detection in complex environments [63]. |

Note. The data are sourced from field environments and laboratory conditions, often under natural or artificial light. Image acquisition was performed using high-resolution cameras and UAVs, with challenges including uneven lighting, leaf occlusions, and complex backgrounds.

Table 2 highlights the experimental conditions (such as lighting environment and image acquisition method) and the choice of deep learning framework, both of which directly influence model performance. For example, combining FRCNN with EfficientNet-B0 for rice disease detection under laboratory conditions achieved an accuracy above 96%, which suggests that robust image preprocessing and feature extraction play a critical role in disease classification accuracy.

The application of IRT in plant disease detection was summarized as follows:

- Current research in plant disease detection mainly focuses on the classification and analysis of single leaves, which are typically photographed face-up against a uniform background [82]. In an actual field environment, however, disease identification should cover all parts of the plant, whose organs face many different directions. Image acquisition should therefore include multiple viewpoints and be conducted under conditions close to the natural environment to improve the model’s effectiveness in practical applications.

- Most researchers extracted regions of interest directly from the collected images and ignored occlusion, which lowered recognition accuracy and limited the model’s generalization ability [83,84]. Occlusion is caused by various factors, including the natural swaying of leaves, interference from branches or other plants, uneven external lighting, complex backgrounds, overlapping leaves, and other environmental factors. Different degrees of occlusion affect the recognition process and can lead to misidentification or missed detections. Despite significant progress in DL algorithms in recent years, accurately identifying plant diseases and pests under extreme conditions (such as dimly lit environments) remains a considerable challenge.

- In actual agricultural production, plants are often affected by several diseases at once rather than just one. However, most current research focuses on detecting individual diseases, and identifying and classifying multiple diseases simultaneously remains difficult. This limitation is particularly prominent in complex production environments, where plants may be threatened by two or more pathogens, such as fungi, bacteria, and viruses. When several diseases occur concurrently, their symptoms mix or overlap, placing higher demands on the model’s recognition capacity. More capable model structures and multimodal data fusion algorithms should be explored to address this issue. For example, integrating image recognition with other sensor data (such as temperature and humidity) could improve the accuracy of simultaneous multi-disease identification.
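The multi-disease and sensor-fusion points above can be made concrete with a small sketch. It fuses an image embedding with environmental readings (temperature, humidity) by simple concatenation (feature-level fusion) and scores each disease with an independent sigmoid, so several diseases can be flagged for the same plant; a softmax, by contrast, forces the classes to compete for a single label. The disease names, weights, and 0.5 threshold are hypothetical and hand-set for illustration only; a real model would learn `W` and `b`, for example with a per-disease binary cross-entropy loss.

```python
import numpy as np

DISEASES = ["fungal_blight", "bacterial_spot", "viral_mosaic"]   # hypothetical labels

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict_diseases(image_feats, sensor_feats, W, b, threshold=0.5):
    """Feature-level fusion followed by multi-label (one sigmoid per disease) output.

    image_feats:  (n, d_img) embedding from an image model (assumed given)
    sensor_feats: (n, d_env) e.g. [temperature, humidity], assumed standardized
    """
    fused = np.concatenate([image_feats, sensor_feats], axis=1)
    probs = sigmoid(fused @ W + b)                      # (n, n_diseases)
    return probs, probs >= threshold

# Toy inputs with hand-set (untrained) weights, for illustration only.
img = np.array([[2.0, 0.0], [0.0, 2.0]])
env = np.array([[1.0, 1.0], [0.0, 0.0]])                # [temperature, humidity]
W = np.array([[ 2.0, -1.0, 0.0],
              [-1.0,  2.0, 0.0],
              [ 0.0,  0.0, 1.5],
              [ 1.0,  0.0, 1.5]])
b = np.array([-1.0, -1.0, -2.0])

probs, present = predict_diseases(img, env, W, b)
for row in present:
    print([d for d, on in zip(DISEASES, row) if on])
# ['fungal_blight', 'viral_mosaic']
# ['bacterial_spot']
```

In this toy setup the first plant is flagged with two concurrent diseases, one of which (`viral_mosaic`) is driven entirely by the environmental inputs.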

3.2. Application of Image Recognition in Crop Identification

Information on crop planting structure is important for production management and policy-making in smart agriculture [85]. At the beginning of the 21st century, crop species identification relied primarily on traditional image processing and machine learning methods, including feature extraction and pattern recognition techniques such as edge detection, color and texture analysis, and shape matching. Specific image features, such as color histograms, texture features, and shape descriptors, were selected and computed manually. Machine learning algorithms such as SVM, decision trees, and KNN were then used to learn from and classify the extracted features. Although these methods achieved some success in specific applications, their reliance on manual feature extraction often limited their performance and accuracy. This limitation became particularly evident with large-scale and complex agricultural image data, where these methods were inefficient and inaccurate and failed to meet demanding application requirements [86]. In the second decade of the 21st century, classifying and identifying crop images with deep CNNs became increasingly popular and widely applied [87].
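The hand-crafted pipeline described above (manually designed features followed by a classical classifier) can be sketched as follows. As assumptions, the feature is a per-channel color histogram, the classifier is KNN with k = 3, and the two “crop species” are synthetic images with different base colors. Note that the KNN step compares every query with all training samples, which is the computational cost noted for KNN in Table 2.

```python
import numpy as np

def color_histogram(img, bins=8):
    """Hand-crafted feature: concatenated per-channel histograms, L1-normalized.

    img: (H, W, 3) array with values in [0, 1].
    """
    feats = [np.histogram(img[..., c], bins=bins, range=(0.0, 1.0))[0] for c in range(3)]
    h = np.concatenate(feats).astype(np.float64)
    return h / h.sum()

def knn_predict(train_feats, train_labels, query, k=3):
    """Majority vote among the k training samples closest in Euclidean distance."""
    dists = np.linalg.norm(train_feats - query, axis=1)   # distance to every training sample
    nearest = train_labels[np.argsort(dists)[:k]]
    values, counts = np.unique(nearest, return_counts=True)
    return values[np.argmax(counts)]

rng = np.random.default_rng(1)

def synthetic_crop(base_rgb):
    """Hypothetical crop image: a base color plus small Gaussian noise."""
    return np.clip(np.array(base_rgb) + 0.05 * rng.standard_normal((32, 32, 3)), 0, 1)

# Two toy "species": a yellowish crop (label 0) and a dark-green crop (label 1).
train = [synthetic_crop([0.8, 0.7, 0.2]) for _ in range(5)] + \
        [synthetic_crop([0.1, 0.5, 0.1]) for _ in range(5)]
labels = np.array([0] * 5 + [1] * 5)
X = np.stack([color_histogram(im) for im in train])

query = color_histogram(synthetic_crop([0.12, 0.48, 0.12]))
print(knn_predict(X, labels, query))   # 1 (dark-green crop)
```

Such features work when species differ clearly in color, but they degrade quickly under lighting changes and cluttered backgrounds, which is the limitation that motivated the shift to CNNs.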

3.2.1. Advances in Image Recognition for Crop Identification

In 1974 and 1980, the United States initiated the Large Area Crop Inventory Experiment (LACIE) and the Agriculture and Resources Inventory Surveys Through Aerospace Remote Sensing (AGRISTARS) programs [88], respectively. Their purpose was the global identification of major crops, growth monitoring of various grain crops, and yield estimation. These programs directly facilitated the development of more efficient and accurate crop identification algorithms. IRT for crop identification has since become crucial for improving farmland management efficiency and the precision of crop production [89]. High-definition cameras are used to capture crop images under suitable lighting conditions, and advanced ML and DL techniques, such as CNNs, are used to analyze these images and accurately identify and classify crop species [90]. IRT extracts key visual features that serve as essential parameters for determining crop species. For instance, different crop species exhibit distinct leaf shapes, fruit shapes, and color distributions, which allow them to be distinguished effectively.

3.2.2. Technologies for Crop Identification

Nunes et al. [91] developed an improved U-Net-based method for classifying and identifying tomato varieties in drone remote sensing images. The dataset was expanded using flip transformations, translation transformations, and random cropping. The U-Net structure was deepened by introducing an Atrous Spatial Pyramid Pooling (ASPP) module [92] to better capture semantic information and strengthen the model’s feature extraction. A dropout layer [93] was also added to prevent overfitting, and the method achieved an accuracy of 94.12% in the experiment. However, it required substantial computational resources for large-scale training, and further optimization was needed to improve its ability to distinguish between different crop species. Abdallah et al. [94] developed a plant species identification method based on an optimized 3D Polynomial Neural Network (3DPNN) and a Variable Overlap Time Coherent Sliding Window (VOTCSW). They generated a large, labeled image dataset with the EAGL-I system and substantially reduced computational requirements by introducing a polynomial reduction algorithm. On the Weed Seedling Images of Species Common to Manitoba, Canada (WSISCMC) dataset, the method achieved an accuracy of 99.9%. These results indicate that the method is both highly accurate in plant species identification and computationally efficient on complex datasets, showing considerable potential for dynamic or large-scale data scenarios.
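To illustrate the idea behind the ASPP module used by Nunes et al. [91], the sketch below applies the same 3 x 3 kernel at several dilation (atrous) rates in parallel and stacks the results, so each branch sees context at a different scale without adding parameters. It is a simplified single-channel NumPy sketch that assumes rates of 1, 2, and 4 and all-ones kernels chosen for readability; it is not the authors’ network, and DeepLabv3-style ASPP blocks typically also include image-level pooling and a 1 x 1 fusion convolution.

```python
import numpy as np

def atrous_conv2d(x, kernel, rate):
    """Single-channel 2D atrous (dilated) convolution with 'same' zero padding.

    A 3x3 kernel with dilation `rate` samples pixels `rate` apart, so its
    receptive field grows to (2*rate + 1)^2 without extra weights.
    Implemented as cross-correlation, as in most DL frameworks.
    """
    k = kernel.shape[0]
    pad = rate * (k // 2)
    xp = np.pad(x, pad)
    H, W = x.shape
    out = np.zeros((H, W))
    for i in range(k):
        for j in range(k):
            out += kernel[i, j] * xp[i * rate:i * rate + H, j * rate:j * rate + W]
    return out

def aspp(x, kernels, rates=(1, 2, 4)):
    """ASPP-style block (simplified): parallel atrous branches stacked on a channel axis."""
    return np.stack([atrous_conv2d(x, kern, r) for kern, r in zip(kernels, rates)], axis=0)

x = np.zeros((9, 9))
x[4, 4] = 1.0                                   # single bright pixel in the center
kernels = [np.ones((3, 3))] * 3
features = aspp(x, kernels)
print(features.shape)                           # (3, 9, 9)
print(np.argwhere(features[2] > 0).tolist())    # rate 4: responses 4 pixels apart
```

With rate 1 the bright pixel spreads to its immediate 3 x 3 neighborhood, while with rate 4 the same nine weights reach pixels four positions away, which is how ASPP gathers multi-scale context cheaply.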

Other studies have modified existing CNN architectures to improve plant species identification. To identify grape leaf images automatically, Nasiri et al. [95] modified the VGG16 architecture by adding a global average pooling layer, a dense layer, batch normalization layers, and a dropout layer, thereby enhancing the model’s ability to recognize the complex visual features of grape varieties [96]. Five-fold cross-validation was used to evaluate the uncertainty and prediction efficiency of the CNN model, and the final model achieved an average accuracy of over 99% in grape variety classification. Similarly, Liu et al. [97] developed a GoogLeNet-based model to identify grape varieties automatically. The training dataset consisted of 5091 manually collected leaf images of different grape varieties, and image enhancement and data augmentation techniques were applied to improve the model’s generalization ability. After comparing several CNN models, they tuned the learning rate, batch size, and number of training epochs to optimize the GoogLeNet model. Training was completed on a cloud server, and the model rapidly identified 21 grape varieties in complex environments with high accuracy (up to 99.91%). A summary of key studies on plant species identification using deep learning models is presented in Table 3.

Yang et al. [98] improved the deep convolutional neural network VGG16 to identify 12 peanut varieties automatically. They preprocessed scanned pod images of the 12 varieties to form a dataset of 3365 images. Several modifications were made to optimize the VGG16 network, including redesigning the F6 and F7 fully connected layers, adding a Conv6 layer and a global average pooling layer, replacing the three convolutional layers in Conv5 with depthwise separable convolutions, and adding batch normalization (BN) layers. The improved VGG16 model achieved an average accuracy of 96.7% on the peanut pod test set, 8.9% higher than the original VGG16 model and 1.6% to 12.3% higher than other classical models. To achieve efficient aquatic plant recognition, Wang et al. [99] proposed an improved YOLOv8 model (APNet-YOLOv8s). Compared with YOLOv8s, APNet-YOLOv8s improved the mean average precision (mAP50) for aquatic plant detection by 2.7% to 75.3% and increased the frames per second (FPS) by 50.2%.
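One of the modifications described for Yang et al. [98], replacing Conv5’s standard convolutions with depthwise separable convolutions, is also the core idea behind lightweight networks such as MobileNet. The sketch below counts the weights of a single layer both ways. It assumes the standard VGG16 Conv5 configuration (3 x 3 kernels, 512 input and 512 output channels) and ignores biases and BN parameters; it says nothing about the accuracy effect of the change.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k convolution mapping c_in to c_out channels (bias ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """k x k depthwise conv (one filter per input channel) plus a 1x1 pointwise conv."""
    return k * k * c_in + c_in * c_out

# A 3x3 layer with 512 input and 512 output channels, as in VGG16's Conv5 block.
std = standard_conv_params(3, 512, 512)
sep = depthwise_separable_params(3, 512, 512)
print(std, sep, round(std / sep, 1))   # 2359296 266752 8.8
```

For this layer the separable version needs roughly 8.8 times fewer weights, which is why the substitution reduces model size and computation.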

Table 3.

Research progress in image recognition for crop identification.

In Table 3, we present the research progress in crop identification using IRT, focusing on key influencing factors such as dataset size, crop diversity, and environmental conditions. These studies utilized deep learning frameworks like VGG16 and GoogLeNet, with identification accuracy ranging from 92% to over 99%. However, limitations such as the narrow range of crop species covered, small dataset sizes, and variations in lighting conditions in field environments still challenge the models’ generalization capabilities. For instance, grape variety identification achieved a high accuracy of 99.91%, but the dataset included only 21 common varieties, highlighting the necessity of expanding dataset size and geographical diversity to improve model robustness and applicability in real-world agricultural scenarios.

The application of IRT in crop identification was summarized as follows:

- With the application of CNNs, crop species identification is no longer limited to single crops: multiple crops in the same image can be classified and recognized simultaneously, even in complex environments. Moreover, the integration of multispectral and hyperspectral imaging has improved the concurrent identification of multiple crops, because spectral information at different wavelengths can be captured to distinguish crop species effectively.

- The diversity of viewpoints, growth conditions, and image quality encountered when collecting crop identification datasets deserves particular attention, because it limits the adaptability and stability of models across crop species and growing environments. Although ResNet, DenseNet, and other advanced models have performed well in specific environments, their application in complex environments is still in its infancy. Differences in viewpoint, lighting, season, and crop growth stage all change image features, making it difficult for a model to generalize to every real-world situation.

- To achieve high crop identification accuracy, DL models typically rely on ample computing resources, which limits their adaptability to real-time and large-scale field applications. In complex settings, particularly resource-limited rural areas or small farms, such computationally demanding models are difficult to deploy and use effectively. Existing image recognition models therefore need further improvement to be applicable and efficient in practical agricultural production. With current technology, one effective strategy is to adopt lightweight architectures such as MobileNet and EfficientNet, which significantly reduce computation and storage requirements while maintaining high accuracy, making them better suited to resource-constrained, complex environments. TL and data augmentation can also be applied to improve generalization with limited labeled data and to extend applicability across crop species and growth stages.
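The data augmentation mentioned above, with the flip, translation, and random-cropping operations used by Nunes et al. [91], can be sketched as follows. The flip probabilities, the maximum shift of 4 pixels, the 24 x 24 crop size, and the random stand-in image are assumptions for illustration. In training, a fresh augmented copy would typically be drawn each time an image is used, which enlarges the effective dataset without new field collection.

```python
import numpy as np

def augment(img, rng, crop=24, max_shift=4):
    """Return one randomly augmented copy of an (H, W, C) image.

    Applies, in order: random horizontal/vertical flips, a random translation
    with zero fill, and a random crop of size crop x crop.
    """
    out = img
    if rng.random() < 0.5:
        out = out[:, ::-1]                        # horizontal flip
    if rng.random() < 0.5:
        out = out[::-1, :]                        # vertical flip
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    H, W = out.shape[:2]
    shifted = np.zeros_like(out)
    # Copy the overlapping region; pixels shifted in from outside stay zero.
    shifted[max(dy, 0):H + min(dy, 0), max(dx, 0):W + min(dx, 0)] = \
        out[max(-dy, 0):H + min(-dy, 0), max(-dx, 0):W + min(-dx, 0)]
    top = rng.integers(0, H - crop + 1)
    left = rng.integers(0, W - crop + 1)
    return shifted[top:top + crop, left:left + crop]

rng = np.random.default_rng(42)
image = np.random.default_rng(0).random((32, 32, 3))    # stand-in field image
batch = np.stack([augment(image, rng) for _ in range(8)])
print(batch.shape)   # (8, 24, 24, 3)
```

Geometric transforms like these preserve the label, but they do not add new lighting conditions or crop varieties, so they complement rather than replace broader data collection.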

3.3. Application of Image Recognition in Crop Yield Prediction and Quality Assessment

Crop yield prediction and quality assessment are critical in smart agriculture, market forecasting, and food supply chain management [107]. Traditionally, crop yield prediction relied on empirical judgment and sampling, and both yield and crop quality were assessed by measuring specific physical indicators such as size, weight, or color [108]. Although widely used in practice, these methods are often inefficient, costly, and susceptible to subjective judgment. With the development of IRT, it began to be applied to crop yield prediction and quality assessment [109]. By analyzing features of crop images (such as color, shape, and texture), IRT enables automatic evaluation of crop quality and yield prediction [110]. However, manual feature extraction limited the accuracy of these early methods in complex environments. In recent years, the rapid development of DL has revolutionized crop yield prediction and quality assessment [111]. By automatically extracting and learning image features, DL methods have significantly improved the capacity and efficiency of processing large-scale agricultural image data, bringing a qualitative leap in accuracy and greatly increasing the degree of automation and applicability of prediction [112]. Nevertheless, the performance of DL models in yield prediction and quality assessment still needs to be improved across different crops, growth stages, and environmental conditions.

3.3.1. Methods for Crop Yield Forecasting and Quality Assessment

Crop yield prediction methods fall into two main categories. The first comprises traditional yield estimation methods based on empirical statistical models and crop growth simulation models. These methods depend heavily on various environmental factors, incur high data acquisition costs, and have low spatial generalization capability, making them difficult to transfer to other regions. The second category includes methods that combine image recognition with neural networks, machine learning models, and hybrid intelligent algorithms [113]. Neural networks are suited to processing high-dimensional image data and are commonly used in plant disease detection tasks. In contrast, hybrid intelligent algorithms, which integrate multiple techniques (e.g., neural networks with statistical or time-series models), excel at handling the nonlinear, multivariable relationships involved in crop yield prediction [114]. These methods have distinct advantages for different tasks: CNNs are effective for high-resolution image processing, whereas hybrid algorithms are better suited to predicting disease progression or modeling complex variables.

3.3.2. Technologies for Crop Yield Forecasting and Quality Assessment

Bawa et al. [115] proposed a cotton yield prediction method combining SVM and IRT. By analyzing high-resolution images captured by UAVs, it automatically identified and counted cotton bolls to estimate yield. On 1000 randomly selected and visually reviewed pixels, the method segmented cotton bolls from background pixels with a classification accuracy of 95%. Meanwhile, Oliveira et al. [116] used UAV remote sensing and CNNs to predict forage dry matter yield. AlexNet [117], ResNeXt50 [118], and DarkNet53 [119] were evaluated on 330 experimental plots using ten-fold cross-validation. The results indicated that the pre-trained ResNeXt50 model could accurately predict dry matter yield.
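Ten-fold cross-validation, as used by Oliveira et al. [116] on 330 plots, can be sketched as below: the plots are shuffled once, split into ten folds, and each fold is held out exactly once while a model is trained on the remaining nine. This is a generic sketch; the shuffling seed is arbitrary, the model training step is omitted, and the original study may have partitioned its plots differently (for example, with stratification).

```python
import numpy as np

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation.

    Samples are shuffled once, split into k nearly equal folds, and each fold
    serves exactly once as the held-out test set.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    folds = np.array_split(order, k)
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, test_idx

# 330 plots and 10 folds, matching the setup reported for Oliveira et al. [116].
splits = list(k_fold_indices(330, 10))
print(len(splits), [len(test) for _, test in splits][:3])   # 10 [33, 33, 33]
```

A model would be fitted on each `train_idx` and scored on the matching `test_idx`; averaging the ten scores gives a less optimistic estimate than a single train/test split.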

Similarly, several studies have focused on fruit counting and yield prediction using IRT and DL. A summary of representative studies on image recognition-based crop yield prediction is presented in Table 4. Reddy et al. [120] proposed an improved YOLOv8 model (YOLOv8 + SAM) for cotton yield prediction. Compared with YOLOv7, YOLOv8 improved mAP50 by 0.9% to 83.3%, showing higher accuracy in complex scenarios. However, it still faces challenges in multi-object detection and in handling dense or overlapping cotton bolls. Rahnemoonfar et al. [121] proposed a DL-based fruit-counting method, developing a deep CNN based on a modified Inception-ResNet architecture and testing it on natural images. The average test accuracy was 91% on real images and 93% on synthetic images. Stein et al. [122] developed a multi-sensor system that addressed occlusion through multi-view processing and achieved effective fruit identification, tracking, localization, and yield prediction in commercial mango orchards. By automatically identifying and counting fruits in images, the system estimated per-tree yield with an error of only 1.36%, even without field adjustment. Chen et al. [123] proposed a data-driven DL method that used a Dual Convolutional Network (DCN) strategy and crowdsourced labels to identify and count fruits, thereby predicting apple and orange yields. This method overcame identification challenges caused by variations in lighting, fruit occlusion, and overlap between adjacent fruits.

Dorj et al. [124] introduced a citrus fruit detection and yield prediction method that uses IRT to identify and count fruits in citrus tree images automatically. The technique converted the image color space and applied threshold segmentation to segment and count fruits. In an experiment on 84 images, the algorithm’s counts were highly consistent with manual counts, with a correlation coefficient of 0.93. Fu et al. [125] proposed a kiwifruit yield prediction system on an Android smartphone that counts fruits automatically based on IRT. They developed a fast fruit-counting algorithm that estimates fruit area in the image by selecting significant color channels and their thresholds. Tested on 100 kiwifruit images, the method achieved a recognition accuracy of 76.4% under natural conditions.
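The color-space-plus-threshold pipeline described for Dorj et al. [124] can be illustrated with a generic sketch: convert RGB to hue, keep pixels inside an “orange” hue band, and count connected regions as fruits. The 15 to 45 degree hue band, the 4-connectivity, and the synthetic image are illustrative assumptions, not the parameters of the original study; real orchard images would also need noise removal and a way to split touching fruits.

```python
import numpy as np
from collections import deque

def hue_degrees(rgb):
    """Vectorized RGB -> hue in degrees [0, 360) for an (H, W, 3) array in [0, 1]."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    mx, mn = rgb.max(axis=-1), rgb.min(axis=-1)
    delta = np.where(mx > mn, mx - mn, 1e-12)      # avoid division by zero on gray pixels
    hue = np.where(
        mx == r, ((g - b) / delta) % 6,
        np.where(mx == g, (b - r) / delta + 2, (r - g) / delta + 4),
    )
    return hue * 60.0

def count_blobs(mask):
    """Count 4-connected foreground regions with a breadth-first flood fill."""
    seen = np.zeros_like(mask, dtype=bool)
    H, W = mask.shape
    count = 0
    for y in range(H):
        for x in range(W):
            if mask[y, x] and not seen[y, x]:
                count += 1                          # new, unvisited region found
                seen[y, x] = True
                queue = deque([(y, x)])
                while queue:
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                        if 0 <= ny < H and 0 <= nx < W and mask[ny, nx] and not seen[ny, nx]:
                            seen[ny, nx] = True
                            queue.append((ny, nx))
    return count

# Synthetic canopy image: green foliage with three orange "fruits".
img = np.tile(np.array([0.1, 0.5, 0.1]), (40, 40, 1))
for cy, cx in [(8, 8), (20, 30), (32, 12)]:
    img[cy - 3:cy + 3, cx - 3:cx + 3] = (0.95, 0.55, 0.05)

hue = hue_degrees(img)
mask = (hue >= 15) & (hue <= 45)                   # hypothetical "orange" hue band
n_fruits = count_blobs(mask)
print(n_fruits)   # 3
```

Working in hue rather than raw RGB makes the threshold less sensitive to overall brightness, which is one common reason for the color-space conversion step.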

Table 4.

Research progress in image recognition for crop yield prediction.

The application of IRT for crop yield prediction and quality assessment was summarized as follows:

Combining high-resolution remote sensing images with DL has made crop yield prediction models more accurate. Methods such as CNNs can analyze crop growth conditions and incorporate environmental factors for more comprehensive prediction. However, these models suffer from overfitting, insufficient data diversity, high computational demands, and the “black box” nature of DL. To address these issues, data augmentation, transfer learning, and multimodal data fusion have been proposed to improve model generalization and efficiency. In addition, Explainable Artificial Intelligence (EAI) techniques make the outcomes of DL more transparent and provide clear explanations of model results. Future work should optimize hyperparameter tuning and the data annotation process to improve the accuracy and practicality of predictive models and advance this field.
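The EAI techniques mentioned above are not specified further here, so the sketch below shows one simple, model-agnostic example of our own choosing: occlusion sensitivity, which masks one image patch at a time and records how much the model’s output drops. The toy “model” (the mean brightness of the top-left quadrant), the 4 x 4 patch size, and the zero fill value are assumptions for illustration; with a real yield or disease model, `predict` would wrap that network’s inference call.

```python
import numpy as np

def occlusion_map(image, predict, patch=4, fill=0.0):
    """Occlusion sensitivity: drop in the model's score when each patch is masked.

    predict: any function mapping an (H, W) image to a scalar score
             (e.g., a predicted yield or a class probability).
    Larger values mark regions the prediction depends on more strongly.
    """
    H, W = image.shape
    base = predict(image)
    heat = np.zeros((H // patch, W // patch))
    for i in range(H // patch):
        for j in range(W // patch):
            occluded = image.copy()
            occluded[i * patch:(i + 1) * patch, j * patch:(j + 1) * patch] = fill
            heat[i, j] = base - predict(occluded)
    return heat

def toy_model(img):
    """Toy 'model' whose score depends only on the top-left 8 x 8 quadrant."""
    return img[:8, :8].mean()

img = np.ones((16, 16))
heat = occlusion_map(img, toy_model)
print(np.round(heat, 3))   # only the four top-left patches have nonzero values (0.25)
```

The resulting heat map correctly points to the only region the toy model uses, which is the kind of evidence EAI aims to provide for opaque DL predictors.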

3.4. Other Applications of Image Recognition in Smart Agriculture

Researchers have also applied IRT to other areas of smart agriculture and achieved significant results in the following three aspects:

- Soil testing and nutrient management: Jiang et al. [132,133] used hyperspectral imaging and multispectral cameras to analyze soil in detail and identify its chemical composition, nutrient levels, moisture content, and pH. Combined with DL, this approach enables rapid classification of soil types and detection of key nutrients (nitrogen, phosphorus, and potassium) [134,135,136]. This technology supports more precise fertilization plans and more scientific management of crop growth.

- Agricultural machinery operation quality assessment: Türköz et al. [137,138] developed a monitoring system based on CV and DL, in which cameras and sensors installed on agricultural machinery capture and analyze image data of the operation process in real time. These data can be used to assess machinery performance, such as harvesting precision, missed sections, or potential crop damage, thereby ensuring consistent and accurate operations. In practice, however, these systems remain susceptible to interference from vibration, complex lighting conditions, and changes in the field environment.

- Crop grading: CV and 3D imaging technologies are widely used to detect and classify quality characteristics of agricultural products such as fruits and vegetables, including size, color, shape, and surface defects. With deep learning models, such systems can identify and grade products non-destructively. A summary of representative applications of image recognition in other smart agriculture domains is presented in Table 5.

Table 5. Research progress in image recognition for other fields of smart agriculture.


To demonstrate the broad application potential of IRT in various agricultural tasks, Table 5 lists several supporting studies. For example, in crop irrigation management, variations in environmental and soil conditions complicate accurate prediction of water supply, highlighting the need for dynamic models that adapt in real time. Similarly, in soil type classification, spectral reflectance variations caused by moisture and organic matter content underscore the importance of integrating multispectral image data to improve classification accuracy. These cases illustrate that IRT plays a crucial role in precision agriculture by enhancing monitoring and management capabilities, despite environmental and technical challenges. They also underline the importance of continuously optimizing data acquisition methods and model designs to meet the diverse and complex demands of smart agricultural applications.

4. Challenges and Prospects

- Scarcity of Agricultural Datasets: Current agricultural image recognition datasets are drawn primarily from common crop species and therefore lack diversity [143,144]. For example, in the CropNet dataset (sourced from the U.S. Department of Agriculture, Sentinel-2 satellite imagery, and WRF-HRRR meteorological data), more than 75% of the data concern major crops such as rice, wheat, and maize, while less common crops, such as peanuts and sweet potatoes, account for less than 10%. Because of this imbalance, models tend to overfit the features of common crops and perform worse on less common ones: prediction accuracy reaches 85% for common crops but only around 60% for less common crops. Furthermore, this focus limits climate change research, which generally concentrates on crops such as rice and maize and leaves other crops underrepresented in climate adaptation studies [145]. Building agricultural image datasets also faces challenges such as complex backgrounds and variable lighting, which make accurate annotation more difficult. In addition, crops grown in complex environments, surrounded by other objects or other crop species, are harder to segment [146]. IRT used in smart agriculture must therefore adapt to different environments, which places higher demands on the related datasets. To address these challenges, advanced DL techniques such as transfer learning, GANs, model architecture optimization, physics-informed neural networks, and deep synthetic minority oversampling could be employed. Adopting these techniques can help overcome the current limitations of IRT in smart agriculture and better meet practical needs.

- Multimodal Data Fusion: Beyond addressing dataset scarcity, another promising direction for improving crop image recognition is multimodal data fusion. By combining data from different sensor types, such as visible-light, infrared, and thermal imagery, recognition systems can exploit complementary information to improve accuracy and robustness. For instance, infrared and thermal images can help detect crop disease or stress in poor lighting or overcast weather, where visible-light images may lack contrast. These complementary data provide a more holistic view of the crop environment, allowing the model to better differentiate healthy from diseased areas. Furthermore, fusion techniques such as deep learning-based multi-input models or feature-level fusion can improve performance in complex environments, such as those with occlusions or varying backgrounds. Given the dynamic nature of agricultural environments, multimodal data fusion is likely to be key to overcoming the limitations of traditional image recognition systems and to improving their adaptability, scalability, and practical use in diverse farming scenarios.

- Semi-Supervised and Unsupervised Learning: To address annotation difficulties and data scarcity, unsupervised learning can be used to train on large amounts of unlabeled visual data. By exploiting the intrinsic structure and features of the data, it builds models without annotations and thus greatly reduces dependence on annotated datasets. Semi-supervised learning combines the strengths of supervised and unsupervised learning by extending a limited amount of supervision to unannotated data. In smart agriculture, particularly in rare disease detection, collecting large-scale datasets is expensive and complex, and annotation is time-consuming for experts; semi-supervised and unsupervised learning can effectively alleviate these problems [147,148,149]. Transfer learning is another critical technique: training a general image recognition model on a large number of annotated samples and then fine-tuning it on a small, application-specific agricultural dataset can improve the model’s generalization ability and adaptability to different types of agricultural images [150]. This approach reduces reliance on large-scale annotated data and helps the model handle diverse smart agriculture scenarios.

- Application of Green AI: As AI technology develops rapidly, the concept of Green AI has emerged to make AI more energy-efficient and to reduce its carbon emissions and resource consumption [151]. Integrating Green AI with IRT involves optimizing algorithms and model structures to minimize computational resource usage and using renewable energy and low-carbon computing resources for training and inference. These approaches can promote efficient, energy-saving, and environmentally friendly applications of IRT [152].
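The oversampling idea listed under “Scarcity of Agricultural Datasets” can be illustrated with classic SMOTE, which creates synthetic samples for an under-represented class by interpolating between a sample and one of its nearest neighbors from the same class. The sketch below works on generic feature vectors; the five “rare crop” vectors, k = 3, and the seed are illustrative assumptions, and the deep oversampling variants mentioned above apply related ideas to learned representations rather than raw features.

```python
import numpy as np

def smote(minority, n_new, k=3, seed=0):
    """Generate synthetic minority samples by interpolating toward near neighbors (SMOTE).

    minority: (n, d) feature vectors of the under-represented class.
    Each new sample lies on the segment between a random minority sample and
    one of its k nearest minority neighbors.
    """
    rng = np.random.default_rng(seed)
    n = len(minority)
    dists = np.linalg.norm(minority[:, None, :] - minority[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)                  # a point is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]
    synthetic = np.empty((n_new, minority.shape[1]))
    for t in range(n_new):
        i = rng.integers(n)
        j = neighbors[i, rng.integers(k)]
        gap = rng.random()                           # position along the segment, in [0, 1)
        synthetic[t] = minority[i] + gap * (minority[j] - minority[i])
    return synthetic

# e.g., 5 feature vectors from a rare crop, oversampled with 20 synthetic ones
rare = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]])
new = smote(rare, n_new=20)
print(new.shape)   # (20, 2)
```

Because new points only fill in the space between existing minority samples, SMOTE balances class counts but cannot create genuinely new variation; it works best combined with broader data collection.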

5. Conclusions

As a pivotal analytical tool in modern agriculture, IRT has demonstrated transformative potential in vegetation monitoring, plant disease detection, and precision farm management. Our review indicates that IRT implementations can substantially improve crop quality, increase yields, and reduce operational costs, which are three critical pillars of sustainable agricultural intensification. The technological progression from conventional machine learning models (e.g., SVM and KNN) through CNN-based deep learning architectures (e.g., U-Net) to transformer-based systems with self-attention mechanisms [153,154] marks a paradigm shift in agricultural computer vision capabilities.

The emergence of the Vision Transformer (ViT) and Data-efficient Image Transformer (DeiT) architectures addresses a fundamental limitation of traditional CNNs in processing agricultural imagery: their local receptive fields, which restrict the modeling of long-range context. By modeling global dependencies through parallelized self-attention computations [155], these architectures can outperform conventional methods in complex agricultural environments characterized by heterogeneous backgrounds, interspecies variation, and dynamic environmental interference. Reported results show strong performance in critical tasks, including multi-crop identification, early-stage (subclinical) disease recognition, and phenological stage monitoring.
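The global dependency modeling described above rests on scaled dot-product self-attention, softmax(QK^T / sqrt(d_k))V, in which every image patch attends to every other patch. The following plain-Python sketch shows a single attention head. Real ViT and DeiT models add multiple heads, positional embeddings, and learned projection matrices; the identity projections and three toy patch embeddings here are assumptions chosen only to keep the example small.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product of an (n x k) and a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of attention scores."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product attention over a sequence of patch embeddings.

    Every output row is a weighted sum over all patches, which is how a ViT
    relates distant image regions within a single layer.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    scores = matmul(q, transpose(k))  # (patches x patches) similarity matrix
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v), weights


# Toy input: three 2-D patch embeddings and identity projections (assumptions for illustration).
patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, attention = self_attention(patches, identity, identity, identity)
```

Every row of `attention` is a probability distribution over all three patches, so each output mixes information from the whole image in one step. A 3x3 convolution, by contrast, only sees immediately neighbouring pixels per layer.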

Nevertheless, three fundamental challenges constrain current IRT applications:

- (1) Insufficient biodiversity representation in training datasets: Current agricultural datasets often lack diversity, covering only a limited range of crops and environments, which hinders models' ability to generalize across conditions. Additionally, many datasets fail to capture the interactions and variations among different biological entities during crop growth [156].

- (2) Scalability limitations in real-world agricultural settings: IRT systems face difficulties when deployed in large-scale, dynamic agricultural environments. Variability in lighting, weather, and terrain can severely affect the accuracy and consistency of image recognition models. Moreover, the real-time processing and high-throughput demands of these environments often exceed the capabilities of current systems, limiting their effectiveness in practical applications [157].

- (3) Inadequate multimodal data integration: Current IRT systems often rely on single-modal data, such as RGB images, which limits their ability to fully capture the complexity of agricultural environments. The integration of other modalities, such as thermal [158], hyperspectral [159], and LiDAR data [160], remains underdeveloped. Without effective multimodal data fusion, models struggle to account for environmental factors, plant health indicators, and growth stages, resulting in suboptimal performance across diverse agricultural scenarios [161].

Future research directions should prioritize:

- (1) Development of large-scale, ecologically diverse image repositories: Future research should focus on building datasets that cover a wide variety of crops, environments, and growth conditions to improve models' adaptability and performance across agricultural settings.

- (2) Implementation of lightweight transformer variants for edge computing deployment: Future research should focus on developing optimized, resource-efficient transformer models for image recognition on edge devices with limited computational power [162]. These models should maintain high performance while reducing computational overhead, enabling real-time image processing and decision-making in field applications where low latency is critical.

- (3) Multispectral data fusion techniques combining RGB, thermal, and hyperspectral inputs: To improve crop monitoring accuracy, research could focus on methods that effectively integrate spectral data from diverse sources. For instance, by combining RGB imagery, thermal imaging, and hyperspectral data, models can capture more comprehensive information about crop health, growth stages, and environmental conditions.
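Item (2) calls for transformer variants that fit on edge devices. One widely used way to shrink a trained model, which is swapped in here for illustration and is not a method prescribed by this review, is post-training int8 quantization. The sketch below applies symmetric per-tensor quantization to a hypothetical list of layer weights. Production frameworks offer more elaborate schemes, such as per-channel scales and calibrated activation ranges.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto the integer range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Approximate reconstruction of the original float weights."""
    return [v * scale for v in q]


# Hypothetical weights from one layer of a small transformer (illustrative values).
weights = [0.52, -1.27, 0.0, 0.031, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
# Each int8 value needs 1 byte instead of 4 bytes for float32: roughly a 4x smaller weight tensor.
```

The reconstruction error per weight is bounded by half the scale step, which is why accuracy often degrades only slightly. Layers with a few very large weights, however, inflate the scale and lose precision on the small weights, which motivates per-channel schemes.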

The anticipated integration of 5G-enabled edge computing with transformer-optimized IRT systems could transform precision agriculture through real-time field diagnostics, targeted agrochemical application, and autonomous cultivation management. This technological synergy is likely to accelerate the transition toward data-driven agricultural ecosystems characterized by higher production efficiency, a smaller ecological footprint, and improved climate resilience [163,164].

This comprehensive analysis provides both a methodological framework for current IRT implementations and a strategic roadmap for advancing intelligent agricultural systems. Subsequent investigations should focus on developing adaptive learning architectures capable of handling agricultural complexity while maintaining computational efficiency—crucial requirements for global scalability in smart farming initiatives.

6. Limitations and Future Research

One limitation of this study is that it is a literature-based review and includes no newly collected empirical data. This paper examines recent trends in the application of IRT across various fields of smart farming, primarily through a systematic analysis of previously published research, including both primary studies and review papers. Although we did not conduct original experiments or field investigations, the cited sources are predominantly original research studies that report first-hand data and findings. Future research could build on this foundation by incorporating primary data collection and field observations, which would offer more comprehensive and practical insights into the challenges discussed. Additionally, expanding the range of databases and research dimensions could yield a more holistic understanding of IRT applications in smart agriculture.

Author Contributions

Methodology, C.J. and Z.H.; software, F.G. and K.Y.; investigation, K.M.; data curation, K.M.; writing—original draft, K.M.; writing—review and editing, F.G., C.J. and K.M. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Youth Fund of the National Natural Science Foundation of China (Project No. 52405244) and the Applied Peak Cultivation Discipline in Anhui Province (Project No. XK-XJGF004).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Nomenclature

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| IRT | Image Recognition Technology |
| IC | Integrating Computer |
| IoT | Internet of Things |
| GPS | Global Positioning System |
| GIS | Geographic Information System |
| WSN | Wireless Sensor Networks |
| CNN | Convolutional Neural Networks |
| RNN | Recurrent Neural Networks |
| GAN | Generative Adversarial Networks |
| UAVs | Unmanned Aerial Vehicles |
| SVM | Support Vector Machines |
| DL | Deep Learning |
| CV | Computer Vision |
| KNN | K-Nearest Neighbors |
| NB | Naive Bayes |
| DWT | Discrete Wavelet Transform |
| DTCWT | Dual-Tree Complex Wavelet Transform |
| ANN | Artificial Neural Networks |
| PNN | Probabilistic Neural Networks |
| LACIE | Large Area Crop Inventory Experiment |
| ASPP | Atrous Spatial Pyramid Pooling |
| 3DPNN | 3D Polynomial Neural Network |
| BN | Batch Normalization |
| DCN | Dual Convolutional Network |
| EAI | Explainable Artificial Intelligence |
| ViT | Vision Transformer |
| DeiT | Data-efficient Image Transformer |

References

- Ghazal, S.; Munir, A.; Qureshi, W.S. Computer vision in smart agriculture and precision farming: Techniques and applications. Artif. Intell. Agric. 2024, 13, 64–83.

- Qazi, S.; Khawaja, B.A.; Farooq, Q.U. IoT-equipped and AI-enabled next generation smart agriculture: A critical review, current challenges and future trends. IEEE Access 2022, 10, 21219–21235.

- Boahen, J.; Choudhary, M. Advancements in Precision Agriculture: Integrating Computer Vision for Intelligent Soil and Crop Monitoring in the Era of Artificial Intelligence. Int. J. Sci. Res. Eng. Manag. 2024, 8, 1–5.

- Fu, X.; Ma, Q.; Yang, F.; Zhang, C.; Zhao, X.; Chang, F.; Han, L. Crop pest image recognition based on the improved ViT method. Inf. Process. Agric. 2024, 11, 249–259.

- Sharma, K.; Shivandu, S.K. Integrating artificial intelligence and Internet of Things (IoT) for enhanced crop monitoring and management in precision agriculture. Sens. Int. 2024, 5, 100292.

- Adli, H.K.; Remli, M.A.; Wan Salihin Wong, K.N.S.; Ismail, N.A.; González-Briones, A.; Corchado, J.M.; Mohamad, M.S. Recent advancements and challenges of AIoT application in smart agriculture: A review. Sensors 2023, 23, 3752.

- Raihan, A. A systematic review of Geographic Information Systems (GIS) in agriculture for evidence-based decision making and sustainability. Glob. Sustain. Res. 2024, 3, 1–24.

- Ting, Y.T.; Chan, K.Y. Optimising performances of LoRa based IoT enabled wireless sensor network for smart agriculture. J. Agric. Food Res. 2024, 16, 101093.

- Altalak, M.; Ammad Uddin, M.; Alajmi, A.; Rizg, A. Smart Agriculture Applications Using Deep Learning Technologies: A Survey. Appl. Sci. 2022, 12, 5919.

- Mendoza-Bernal, J.; González-Vidal, A.; Skarmeta, A.F. A Convolutional Neural Network approach for image-based anomaly detection in smart agriculture. Expert Syst. Appl. 2024, 247, 123210.

- Das, S.; Tariq, A.; Santos, T.; Kantareddy, S.S.; Banerjee, I. Recurrent neural networks (RNNs): Architectures, training tricks, and introduction to influential research. In Machine Learning for Brain Disorders; Humana: New York, NY, USA, 2023; pp. 117–138.

- Akkem, Y.; Biswas, S.K.; Varanasi, A. A comprehensive review of synthetic data generation in smart farming by using variational autoencoder and generative adversarial network. Eng. Appl. Artif. Intell. 2024, 131, 107881.

- Joshi, K.; Kumar, V.; Anandaram, H.; Kumar, R.; Gupta, A.; Krishna, K.H. A review approach on deep learning algorithms in computer vision. In Intelligent Systems and Applications in Computer Vision; CRC Press: Boca Raton, FL, USA, 2023; pp. 1–15.

- Banik, A.; Patil, T.; Vartak, P.; Jadhav, V. Machine learning in agriculture: A neural network approach. In Proceedings of the IEEE 2023 4th International Conference for Emerging Technology (INCET), Belgaum, India, 26–28 May 2023; pp. 1–6.

- Gong, X.; Zhang, S. A High-Precision Detection Method of Apple Leaf Diseases Using Improved Faster R-CNN. Agriculture 2023, 13, 240.

- Abbas, I.; Liu, J.; Amin, M.; Tariq, A.; Tunio, M.H. Strawberry Fungal Leaf Scorch Disease Identification in Real-Time Strawberry Field Using Deep Learning Architectures. Plants 2021, 10, 2643.

- Tyagi, N.; Raman, B.; Garg, N. Classification of Hard and Soft Wheat Species Using Hyperspectral Imaging and Machine Learning Models. In International Conference on Neural Information Processing; Springer: Singapore, 2023; pp. 565–576.

- Gutiérrez, S.; Fernández-Novales, J.; Diago, M.P.; Tardaguila, J. On-the-go hyperspectral imaging under field conditions and machine learning for the classification of grapevine varieties. Front. Plant Sci. 2018, 9, 1102.

- Ferreira, M.P.; de Almeida, D.R.A.; de Almeida Papa, D.; Minervino, J.B.S.; Veras, H.F.P.; Formighieri, A.; Ferreira, E.J.L. Individual tree detection and species classification of Amazonian palms using UAV images and deep learning. For. Ecol. Manag. 2020, 475, 118397.

- Alanazi, A.; Wahab, N.H.A.; Al-Rimy, B.A.S. Hyperspectral Imaging for Remote Sensing and Agriculture: A Comparative Study of Transformer-Based Models. In Proceedings of the 2024 IEEE 14th Symposium on Computer Applications & Industrial Electronics (ISCAIE), Penang, Malaysia, 24–25 May 2024; pp. 129–136.

- Changjie, H.; Changhui, Y.; Shilong, Q.; Yang, X.; Bin, H.; Hanping, M. Design and experiment of double disc cotton topping device based on machine vision. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2023, 54, 1–5.

- Du, P.; Bai, X.; Tan, K.; Xue, Z.; Samat, A.; Xia, J.; Liu, W. Advances of four machine learning methods for spatial data handling: A review. J. Geovisual. Spat. Anal. 2020, 4, 1–25.

- Chen, Y.; Liu, K.; Xin, Y.; Zhao, X. Soil Image Segmentation Based on Mask R-CNN. In Proceedings of the IEEE 2023 3rd International Conference on Consumer Electronics and Computer Engineering (ICCECE), Guangzhou, China, 6–8 January 2023; pp. 507–510.

- Narang, G.; Galdelli, A.; Pietrini, R.; Solfanelli, F.; Mancini, A. A Data Collection Framework for Precision Agriculture: Addressing Data Gaps and Overlapping Areas with IoT and Artificial Intelligence. In Proceedings of the 2024 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Padua, Italy, 21–23 October 2024; pp. 580–585.

- Valenzuela, J.L. Advances in postharvest preservation and quality of fruits and vegetables. Foods 2023, 12, 1830.

- Dhanya, V.G.; Subeesh, A.; Susmita, C.; Amaresh; Saji, S.J.; Dilsha, C.; Keerthi, C.; Nunavath, A.; Singh, A.N.; Kumar, S. High Throughput Phenotyping Using Hyperspectral Imaging for Seed Quality Assurance Coupled with Machine Learning Methods: Principles and Way Forward. Plant Physiol. Rep. 2024, 29, 749–768.

- Dewi, D.A.; Kurniawan, T.B.; Thinakaran, R.; Batumalay, M.; Habib, S.; Islam, M. Efficient Fruit Grading and Selection System Leveraging Computer Vision and Machine Learning. J. Appl. Data Sci. 2024, 5, 1989–2001.