Real-Time Classification of Chicken Parts in the Packaging Process Using Object Detection Models Based on Deep Learning

Abstract

1. Introduction

- The YOLOv8 and RT-DETR algorithms were applied for the first time in the field of chicken part classification.

- The detection models were evaluated and compared on an original five-class chicken parts image dataset whose images, captured in an experimental environment, contain different combinations of chicken parts.

- Seven deep learning-based object detection models (five YOLOv8 versions and two RT-DETR versions) were evaluated, and YOLOv8s achieved the best overall results on this dataset, outperforming both the RT-DETR models and the other YOLOv8 versions.

2. Literature

3. Methods

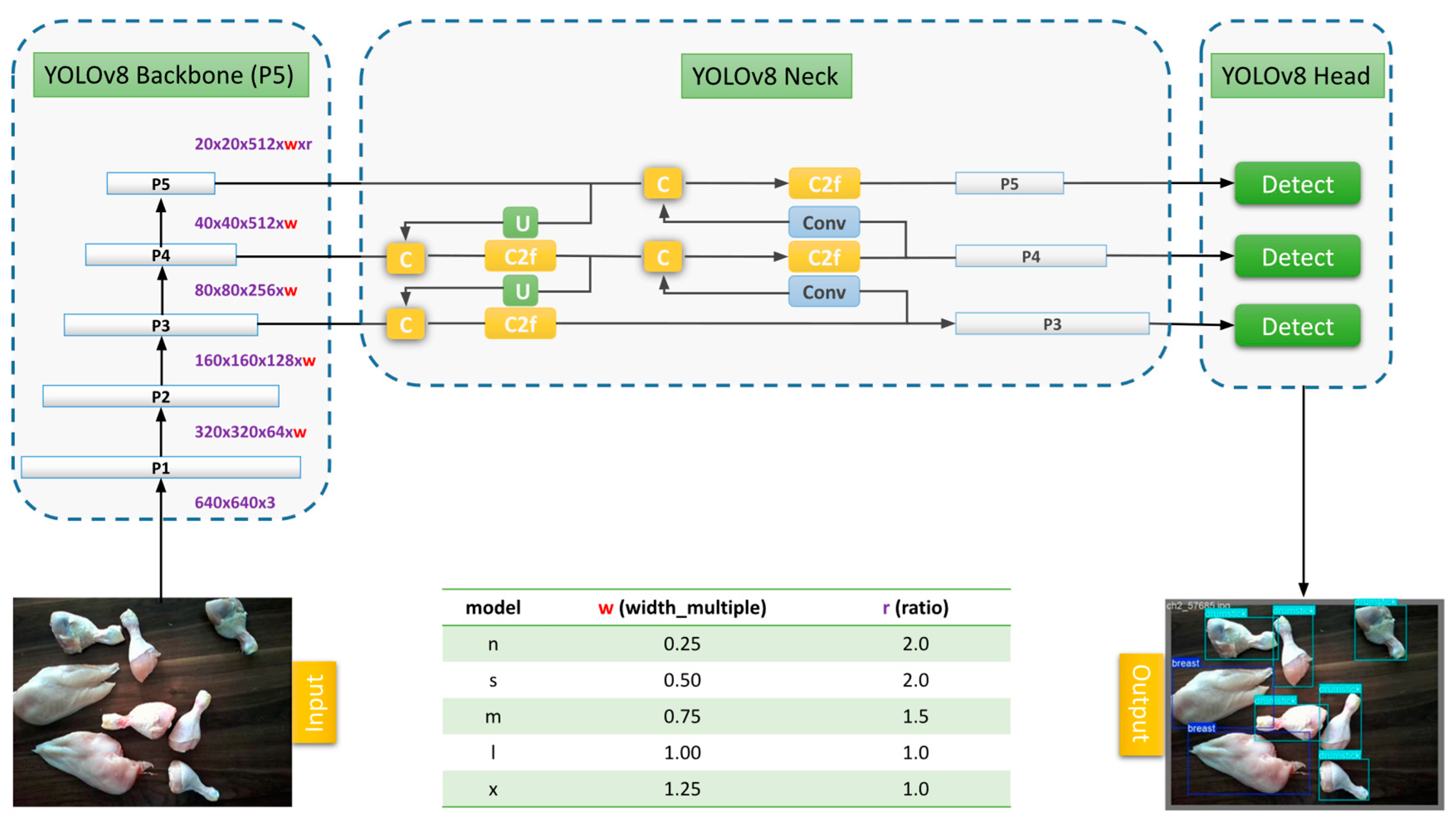

3.1. You Only Look Once Version 8 (YOLOv8)

3.2. Real-Time Detection Transformer (RT-DETR)

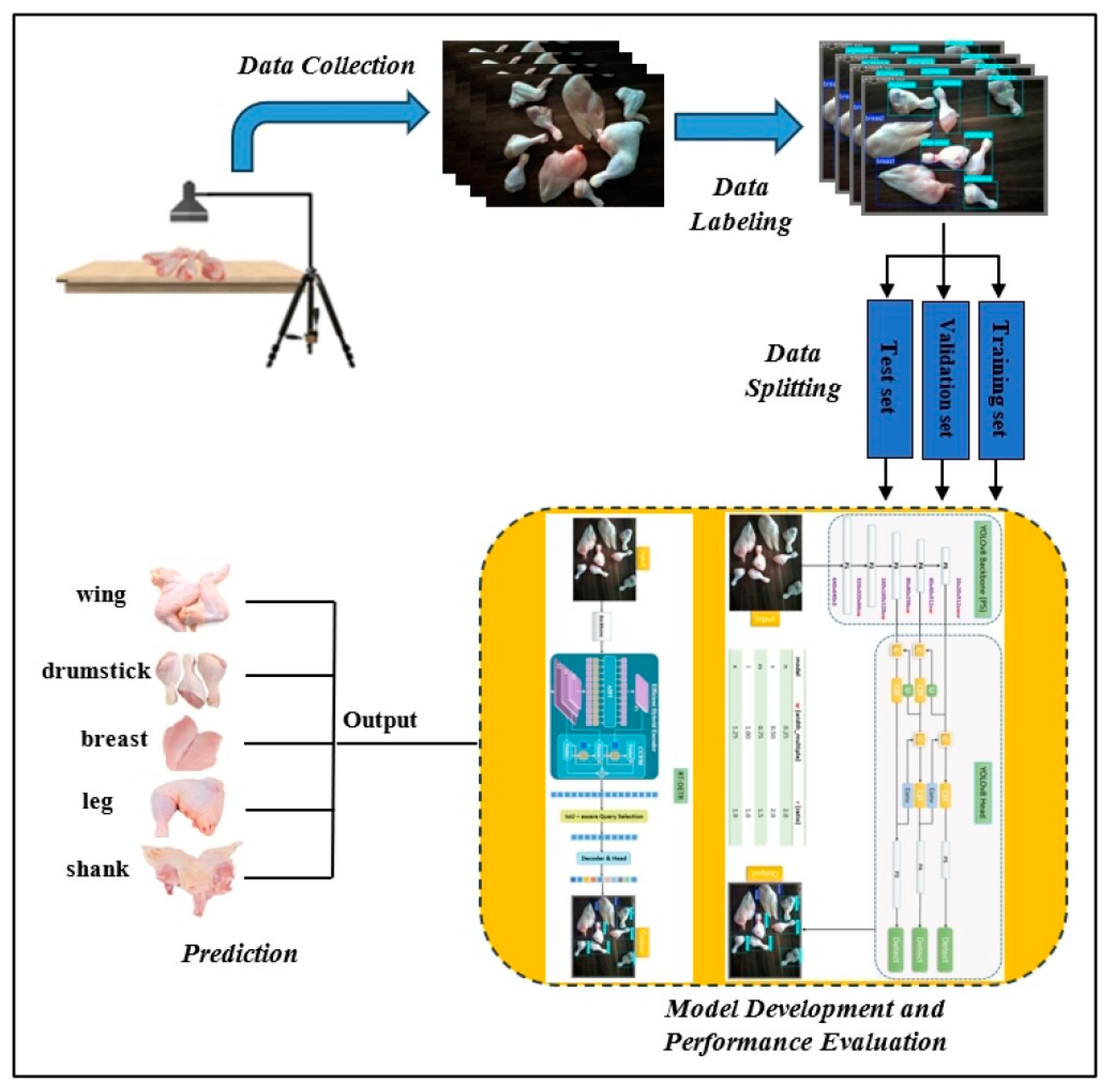

4. Methodology and Implementation

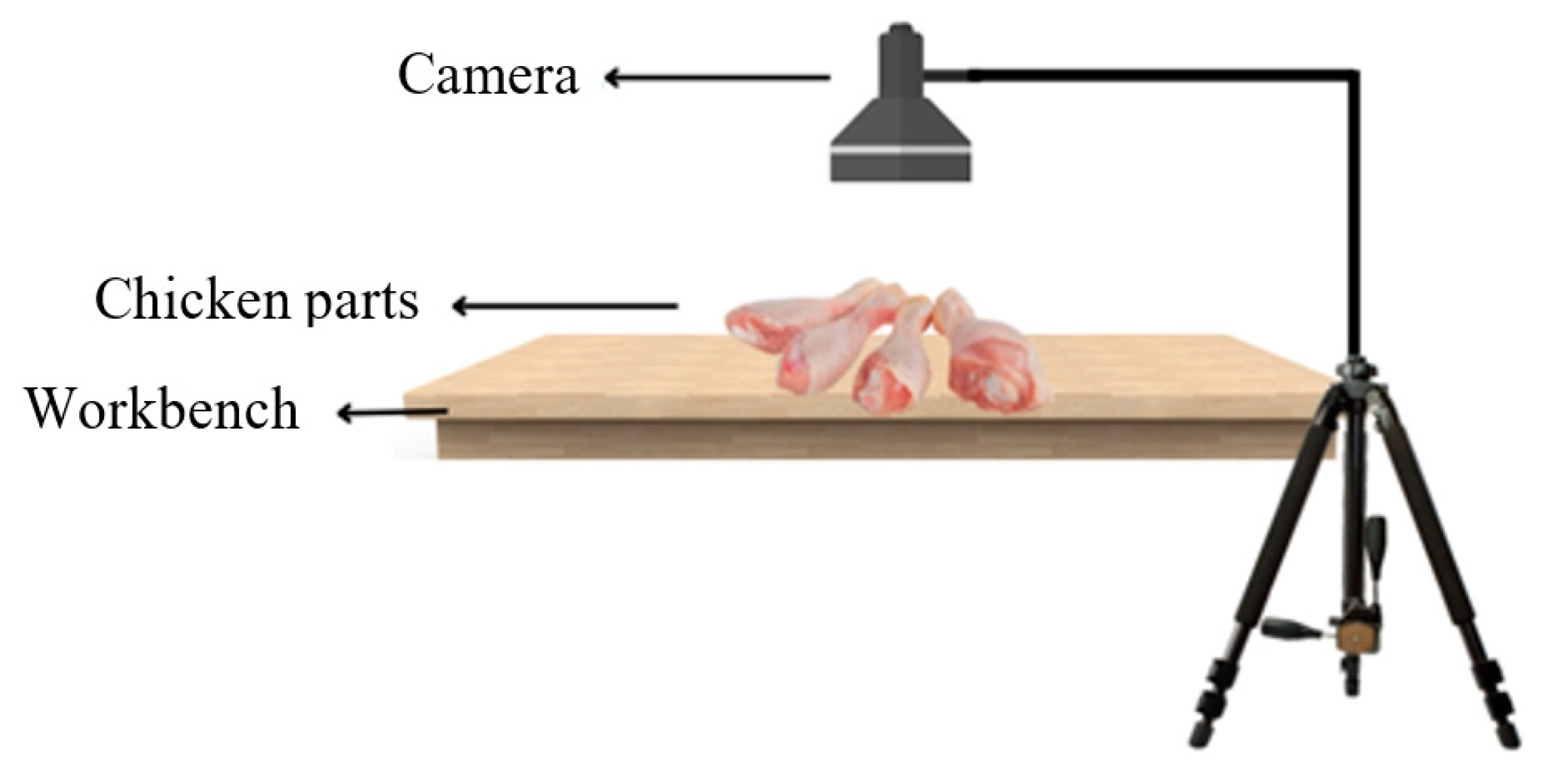

4.1. Chicken Parts Identification Dataset Collection

4.2. Data Preparation and Preprocessing

4.3. Performance Evaluation Metrics

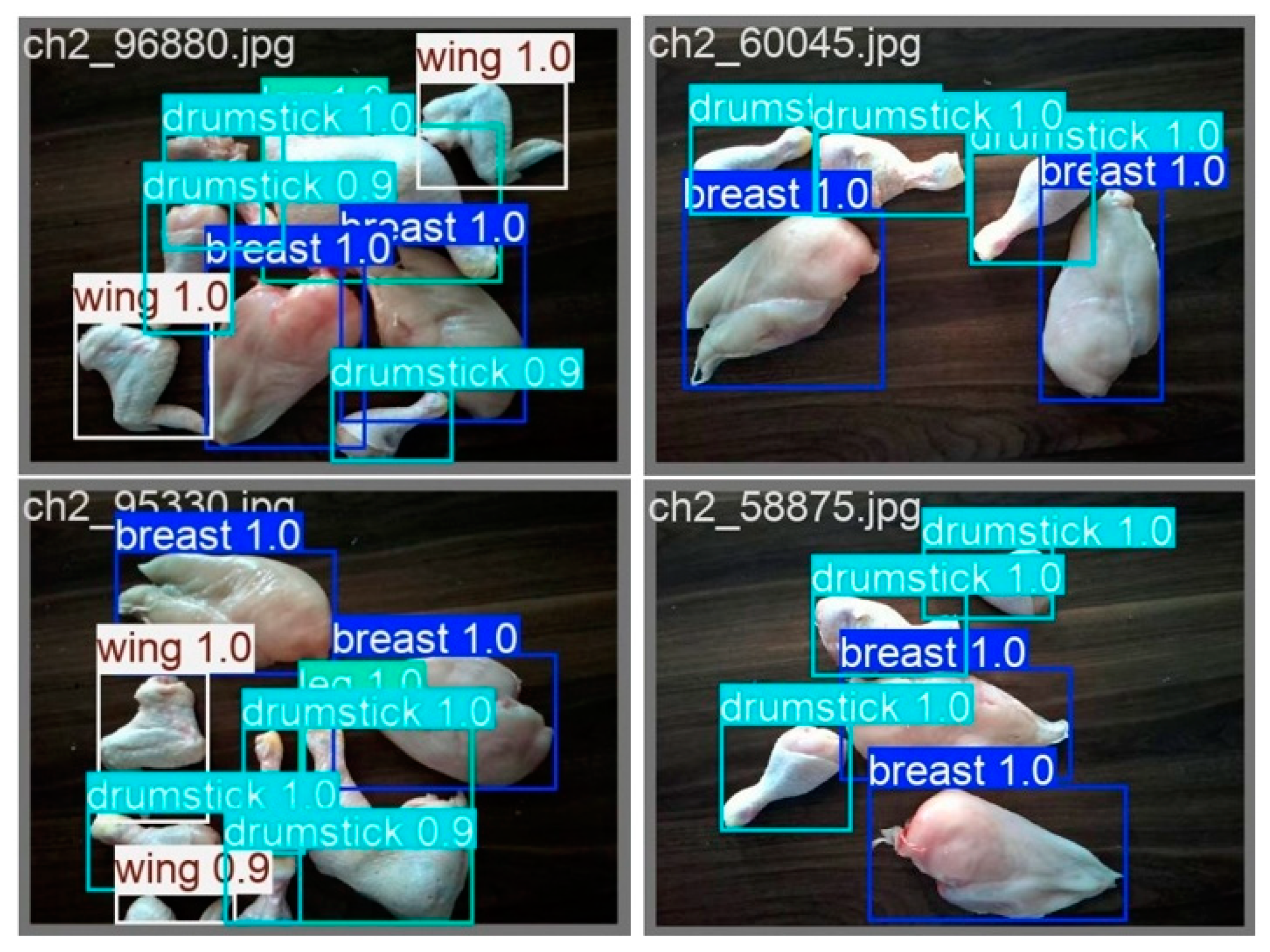
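The results below are reported as mAP@0.5 and mAP@0.5:0.95, both of which rest on the intersection over union (IoU) between a predicted box and a ground-truth box. The following is a minimal Python sketch, assuming axis-aligned boxes given as (x1, y1, x2, y2); the names `iou`, `thresholds_met` and `IOU_THRESHOLDS` are illustrative, not taken from the paper. A same-class prediction counts as a true positive (CNTP) at threshold t when IoU ≥ t; mAP@0.5 uses t = 0.5, and mAP@0.5:0.95 averages AP over t = 0.50, 0.55, ..., 0.95 (the COCO convention). Ranking detections by confidence and integrating the precision–recall curve into AP are omitted here.

```python
"""IoU between axis-aligned boxes and the IoU thresholds behind mAP@0.5:0.95."""

# A box is (x1, y1, x2, y2) with x2 > x1 and y2 > y1 (assumed pixel coordinates).
Box = tuple[float, float, float, float]

# Thresholds averaged in mAP@0.5:0.95: 0.50, 0.55, ..., 0.95 (COCO convention).
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes; 0.0 when they do not overlap."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def thresholds_met(pred: Box, truth: Box) -> list[float]:
    """IoU thresholds at which a same-class prediction would count as a true positive."""
    overlap = iou(pred, truth)
    return [t for t in IOU_THRESHOLDS if overlap >= t]


if __name__ == "__main__":
    truth = (0, 0, 10, 10)
    shifted = (1, 0, 11, 10)  # same size, shifted by 1 px: IoU = 90 / 110
    print(f"IoU = {iou(shifted, truth):.3f}")
    print(thresholds_met(shifted, truth))  # passes 0.50 ... 0.80, fails 0.85 ... 0.95
```

The example shows why mAP@0.5:0.95 is the stricter metric: a box shifted by only one pixel on a 10-pixel object still passes at IoU 0.5 but fails the three highest thresholds.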

5. Experiments and Results

5.1. Experimental Design

5.2. Experimental Results

6. Conclusions

- Reducing the need for manual intervention by automating the process of separating chicken parts,

- Minimizing errors caused by manual classification by the human eye,

- Reducing or eliminating labor waste and the waste caused by faulty product packaging,

- Increasing the degree of automation of chicken plant production processes,

- Reducing customer complaints due to faulty product packaging and increasing customer satisfaction,

- Directing the workforce to areas where it can be used more efficiently,

- Increasing the speed and efficiency of the production process and reducing production costs.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AIFI | Attention-based Intra-scale Feature Interaction |
| ANN | Artificial Neural Networks |
| AP | Average Precision |
| AUC | Area Under the Receiver Operating Characteristic Curve |
| C2F | CSP Bottleneck with 2 convolutions |
| CCD | Charge-Coupled Device |
| CCFM | CNN-based Cross-scale Feature Fusion |
| CIoU | Complete Intersection over Union |
| CNN | Convolutional Neural Network |
| CNTP | Number of chicken pieces correctly detected by the model |
| CNTN | Number of chicken pieces correctly missed |
| CNFP | Number of chicken pieces incorrectly detected |
| DETR | Detection Transformer |
| DFL | Distribution Focal Loss |
| FPN | Feature Pyramid Network |
| FPS | Frames per Second |
| GFLOPs | Giga Floating-Point Operations |
| GPU | Graphics Processing Unit |
| IOU | Intersection over Union |
| mAP | Mean Average Precision |
| MITF | Mean Inference Time per Frame |
| PAN | Path Aggregation Network |
| PR | Precision–Recall |
| R-CNN | Region-Based Convolutional Neural Networks |
| RT-DETR | Real-Time Detection Transformer |
| SPPF | Spatial Pyramid Pooling Fast |
| YOLOv4 | You Only Look Once version 4 |
| YOLOv5 | You Only Look Once version 5 |
| YOLOv5x | You Only Look Once version 5-extra large |
| YOLOv7 | You Only Look Once version 7 |
| YOLOv8 | You Only Look Once version 8 |
| YOLOv8l | You Only Look Once version 8-large |
| YOLOv8m | You Only Look Once version 8-medium |
| YOLOv8n | You Only Look Once version 8-nano |
| YOLOv8s | You Only Look Once version 8-small |
| YOLOv8x | You Only Look Once version 8-extra large |

References

- Omrak, H. Türkiye ranks 10th in the world in poultry meat production. Agric. For. J. 2021. Available online: http://www.turktarim.gov.tr/Haber/702/turkiye-kanatli-eti-uretiminde-dunyada-10--sirada#:~:text=Sekt%C3%B6r%2C%2015%20bin%20adet%20kay%C4%B1tl%C4%B1,ise%20d%C3%BCnyada%207’nci%20s%C4%B1radad%C4%B1r (accessed on 22 February 2025). (In Turkish).

- Türkiye Statistical Institute. Available online: https://data.tuik.gov.tr/Bulten/Index?p=Kumes-Hayvanciligi-Uretimi-Ocak-2024-53562 (accessed on 21 November 2024). (In Turkish)

- Statista. Global Chicken Meat Production 2012–2024. Available online: https://www.statista.com/statistics/237637/production-of-poultry-meat-worldwide-since-1990/#:~:text=This%20statistic%20depicts%20chicken%20meat,million%20metric%20tons%20by%202024 (accessed on 21 November 2024).

- Cooreman-Algoed, M.; Boone, L.; Taelman, S.E.; Van Hemelryck, S.; Brunson, A.; Dewulf, J. Impact of consumer behaviour on the environmental sustainability profile of food production and consumption chains–a case study on chicken meat. Resour. Conserv. Recycl. 2022, 178, 106089.

- Marcinkowska-Lesiak, M.; Zdanowska-Sąsiadek, Ż.; Stelmasiak, A.; Damaziak, K.; Michalczuk, M.; Poławska, E.; Wyrwisz, J.; Wierzbicka, A. Effect of packaging method and cold-storage time on chicken meat quality. CyTA-J. Food 2016, 14, 41–46.

- Teimouri, N.; Omid, M.; Mollazade, K.; Mousazadeh, H.; Alimardani, R.; Karstoft, H. On-line separation and sorting of chicken portions using a robust vision-based intelligent modelling approach. Biosyst. Eng. 2018, 167, 8–20.

- Nayyar, A.; Kumar, A. (Eds.) A Roadmap to Industry 4.0: Smart Production, Sharp Business and Sustainable Development; Springer Nature: Berlin/Heidelberg, Germany, 2019; ISBN 978-3-030-14543-9.

- Chen, Y.W.; Shiu, J.M. An implementation of YOLO-family algorithms in classifying the product quality for the acrylonitrile butadiene styrene metallization. Int. J. Adv. Manuf. Technol. 2022, 119, 8257–8269.

- Kulkarni, U.; Patil, A.; Devaranavadagi, R.; Devagiri, S.B.; Pamali, S.K.; Ujawane, R. Vision-Based Quality Control Check of Tube Shaft using DNN Architecture. In Proceedings of the ITM Web of Conferences 2023, Gujarat, India, 28–29 April 2023; EDP Sciences. Volume 53, p. 02009.

- Chetoui, M.; Akhloufi, M.A. Object detection model-based quality inspection using a deep CNN. In Proceedings of the Sixteenth International Conference on Quality Control by Artificial Vision, Albi, France, 6–8 June 2023; Volume 12749, pp. 65–72.

- Ardic, O.; Cetinel, G. Deep Learning Based Real-Time Engine Part Inspection with Collaborative Robot Application. IEEE Access 2024.

- Akgül, İ. A novel deep learning method for detecting defects in mobile phone screen surface based on machine vision. Sak. Univ. J. Sci. 2023, 27, 442–451.

- Alpdemir, M.N. Pseudo-Supervised Defect Detection Using Robust Deep Convolutional Autoencoders. Sak. Univ. J. Comput. Inf. Sci. 2022, 5, 385–403.

- Güngör, M.A. A New Gradient Based Surface Defect Detection Method for the Ceramic Tile. Sak. Univ. J. Sci. 2022, 26, 1159–1169.

- Fan, J.; Zheng, P.; Li, S. Vision-based holistic scene understanding towards proactive human–robot collaboration. Robot. Comput.-Integr. Manuf. 2022, 75, 102304.

- Zhang, R.; Lv, J.; Li, J.; Bao, J.; Zheng, P.; Peng, T. A graph-based reinforcement learning-enabled approach for adaptive human-robot collaborative assembly operations. J. Manuf. Syst. 2022, 63, 491–503.

- Li, C.; Zheng, P.; Yin, Y.; Pang, Y.M.; Huo, S. An AR-assisted Deep Reinforcement Learning-based approach towards mutual-cognitive safe human-robot interaction. Robot. Comput.-Integr. Manuf. 2023, 80, 102471.

- Banafian, N.; Fesharakifard, R.; Menhaj, M.B. Precise seam tracking in robotic welding by an improved image processing approach. Int. J. Adv. Manuf. Technol. 2021, 114, 251–270.

- Rout, A.; Deepak, B.B.V.L.; Biswal, B.B.; Mahanta, G.B. Weld seam detection, finding, and setting of process parameters for varying weld gap by the utilization of laser and vision sensor in robotic arc welding. IEEE Trans. Ind. Electron. 2021, 69, 622–632.

- Chen, C.; Chen, T.; Cai, Z.; Zeng, C.; Jin, X. A hierarchical visual model for robot automatic arc welding guidance. Ind. Robot. Int. J. Robot. Res. Appl. 2023, 50, 299–313.

- Susto, G.A.; Schirru, A.; Pampuri, S.; McLoone, S.; Beghi, A. Machine learning for predictive maintenance: A multiple classifier approach. IEEE Trans. Ind. Inform. 2014, 11, 812–820.

- Paolanti, M.; Romeo, L.; Felicetti, A.; Mancini, A.; Frontoni, E.; Loncarski, J. Machine learning approach for predictive maintenance in industry 4.0. In Proceedings of the 2018 14th IEEE/ASME International Conference on Mechatronic and Embedded Systems and Applications (MESA), Oulu, Finland, 2–4 July 2018.

- Liu, C.; Zhu, H.; Tang, D.; Nie, Q.; Zhou, T.; Wang, L.; Song, Y. Probing an intelligent predictive maintenance approach with deep learning and augmented reality for machine tools in IoT-enabled manufacturing. Robot. Comput.-Integr. Manuf. 2022, 77, 102357.

- Zhuang, L.; Xu, A.; Wang, X.L. A prognostic driven predictive maintenance framework based on Bayesian deep learning. Reliab. Eng. Syst. Saf. 2023, 234, 109181.

- Knoll, D.; Prüglmeier, M.; Reinhart, G. Predicting future inbound logistics processes using machine learning. Procedia CIRP 2016, 52, 145–150.

- Yu, X.; Liao, X.; Li, W.; Liu, X.; Tao, Z. Logistics automation control based on machine learning algorithm. Clust. Comput. 2019, 22, 14003–14011.

- Abosuliman, S.S.; Almagrabi, A.O. Computer vision assisted human computer interaction for logistics management using deep learning. Comput. Electr. Eng. 2021, 96, 107555.

- Gregory, S.; Singh, U.; Gray, J.; Hobbs, J. A computer vision pipeline for automatic large-scale inventory tracking. In Proceedings of the 2021 ACM Southeast Conference, New York, NY, USA, 15–17 April 2021; pp. 100–107.

- Denizhan, B.; Yıldırım, E.; Akkan, Ö. An Order-Picking Problem in a Medical Facility Using Genetic Algorithm. Processes 2024, 13, 22.

- Zhafran, F.; Ningrum, E.S.; Tamara, M.N.; Kusumawati, E. Computer vision system based for personal protective equipment detection, by using convolutional neural network. In Proceedings of the 2019 International Electronics Symposium (IES), Surabaya, Indonesia, 27–28 September 2019.

- Khandelwal, P.; Khandelwal, A.; Agarwal, S.; Thomas, D.; Xavier, N.; Raghuraman, A. Using computer vision to enhance safety of workforce in manufacturing in a post COVID world. arXiv 2020, arXiv:2005.05287.

- Cheng, J.P.; Wong, P.K.Y.; Luo, H.; Wang, M.; Leung, P.H. Vision-based monitoring of site safety compliance based on worker re-identification and personal protective equipment classification. Autom. Constr. 2022, 139, 104312.

- Zhang, H.; Ma, Z.; Li, X. Rs-detr: An improved remote sensing object detection model based on rt-detr. Appl. Sci. 2024, 14, 10331.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Güney, E.; Bayilmiş, C.; Çakan, B. An implementation of real-time traffic signs and road objects detection based on mobile GPU platforms. IEEE Access 2022, 10, 86191–86203.

- Talaat, F.M.; ZainEldin, H. An improved fire detection approach based on YOLO-v8 for smart cities. Neural Comput. Appl. 2023, 35, 20939–20954.

- Inui, A.; Mifune, Y.; Nishimoto, H.; Mukohara, S.; Fukuda, S.; Kato, T.; Furukawa, T.; Tanaka, S.; Kusunose, M.; Takigami, S.; et al. Detection of elbow OCD in the ultrasound image by artificial intelligence using YOLOv8. Appl. Sci. 2023, 13, 7623.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2020; pp. 213–229.

- Nguyen, D.; Hoang, V.D.; Le, V.T.L. V-DETR: Pure Transformer for End-to-End Object Detection. In Asian Conference on Intelligent Information and Database Systems; Springer Nature: Singapore, 2024; pp. 120–131.

- Zhao, X.; Song, Y. Improved ship detection with YOLOv8 enhanced with MobileViT and GSConv. Electronics 2023, 12, 4666.

- Wang, A.; Xu, Y.; Wang, H.; Wu, Z.; Wei, Z. CDE-DETR: A Real-Time End-To-End High-Resolution Remote Sensing Object Detection Method Based on RT-DETR. In Proceedings of the IGARSS 2024-2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024.

- Asmara, R.A.; Hasanah, Q.; Rahutomo, F.; Rohadi, E.; Siradjuddin, I.; Ronilaya, F.; Handayani, A.N. Chicken meat freshness identification using colors and textures feature. In Proceedings of the 2018 Joint 7th International Conference on Informatics, Electronics & Vision (ICIEV) and 2018 2nd International Conference on Imaging, Vision & Pattern Recognition (icIVPR), Kitakyushu, Japan, 25–29 June 2018.

- Putra, G.B.; Prakasa, E. Classification of chicken meat freshness using convolutional neural network algorithms. In Proceedings of the 2020 International Conference on Innovation and Intelligence for Informatics, Computing and Technologies (3ICT), Sakheer, Bahrain, 20–21 December 2020; pp. 1–6.

- Garcia, M.B.P.; Labuac, E.A.; Hortinela, C.C., IV. Chicken meat freshness classification based on vgg16 architecture. In Proceedings of the 2022 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), Kota Kinabalu, Malaysia, 13–15 September 2022; pp. 1–6.

- Nyalala, I.; Okinda, C.; Makange, N.; Korohou, T.; Chao, Q.; Nyalala, L.; Zhang, J.; Zuo, Y.; Yousaf, K.; Liu, C.; et al. On-line weight estimation of broiler carcass and cuts by a computer vision system. Poult. Sci. 2021, 100, 101474.

- You, M.; Liu, J.; Zhang, J.; Xv, M.; He, D. A novel chicken meat quality evaluation method based on color card localization and color correction. IEEE Access 2020, 8, 170093–170100.

- Salma, S.; Habib, M.; Tannouche, A.; Ounejjar, Y. Poultry Meat Classification Using MobileNetV2 Pretrained Model. Rev. D’intelligence Artif. 2023, 37, 275–280.

- Chen, Y.; Peng, X.; Cai, L.; Jiao, M.; Fu, D.; Xu, C.C.; Zhang, P. Research on automatic classification and detection of chicken parts based on deep learning algorithm. J. Food Sci. 2023, 88, 4180–4193.

- Peng, X.; Xu, C.; Zhang, P.; Fu, D.; Chen, Y.; Hu, Z. Computer vision classification detection of chicken parts based on optimized Swin-Transformer. CyTA-J. Food 2024, 22, 2347480.

- Tamang, S.; Sen, B.; Pradhan, A.; Sharma, K.; Singh, V.K. Enhancing COVID-19 safety: Exploring yolov8 object detection for accurate face mask classification. Int. J. Intell. Syst. Appl. Eng. 2023, 11, 892–897. Available online: https://ijisae.org/index.php/IJISAE/article/view/2966 (accessed on 20 February 2025).

- Bawankule, R.; Gaikwad, V.; Kulkarni, I.; Kulkarni, S.; Jadhav, A.; Ranjan, N. Visual detection of waste using YOLOv8. In Proceedings of the 2023 International Conference on Sustainable Computing and Smart Systems (ICSCSS), Coimbatore, India, 14–16 June 2023; pp. 869–873.

- Jun, E.L.T.; Tham, M.L.; Kwan, B.H. A Comparative Analysis of RT-DETR and YOLOv8 for Urban Zone Aerial Object Detection. In Proceedings of the 2024 IEEE International Conference on Automatic Control and Intelligent Systems (I2CACIS), Shah Alam, Malaysia, 29 June 2024; pp. 340–345.

- Guemas, E.; Routier, B.; Ghelfenstein-Ferreira, T.; Cordier, C.; Hartuis, S.; Marion, B.; Bertout, S.; Varlet-Marie, E.; Costa, D.; Pasquier, G. Automatic patient-level recognition of four Plasmodium species on thin blood smear by a real-time detection transformer (RT-DETR) object detection algorithm: A proof-of-concept and evaluation. Microbiol. Spectr. 2024, 12, e01440-23.

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics. 2023. Available online: https://docs.ultralytics.com/models/yolov8 (accessed on 20 February 2025).

- Yu, Z.; Wan, L.; Yousaf, K.; Lin, H.; Zhang, J.; Jiao, H.; Yan, G.; Song, Z.; Tian, F. An enhancement algorithm for head characteristics of caged chickens detection based on cyclic consistent migration neural network. Poult. Sci. 2024, 103, 103663.

- Dumitriu, A.; Tatui, F.; Miron, F.; Ionescu, R.T.; Timofte, R. Rip current segmentation: A novel benchmark and yolov8 baseline results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 1261–1271.

- Aishwarya, N.; Kumar, V. Banana ripeness classification with deep CNN on NVIDIA jetson Xavier AGX. In Proceedings of the 2023 7th International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), Kirtipur, Nepal, 11–13 October 2023; pp. 663–668.

- Soylu, E.; Soylu, T. A performance comparison of YOLOv8 models for traffic sign detection in the Robotaxi-full scale autonomous vehicle competition. Multimed. Tools Appl. 2024, 83, 25005–25035.

- Zhai, X.; Huang, Z.; Li, T.; Liu, H.; Wang, S. YOLO-Drone: An optimized YOLOv8 network for tiny UAV object detection. Electronics 2023, 12, 3664.

- Kumari, S.; Gautam, A.; Basak, S.; Saxena, N. Yolov8 based deep learning method for potholes detection. In Proceedings of the 2023 IEEE International Conference on Computer Vision and Machine Intelligence (CVMI), Gwalior, India, 10–11 December 2023; pp. 1–6.

- Pereira, G.A. Fall detection for industrial setups using yolov8 variants. arXiv 2024, arXiv:2408.04605.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. Detrs beat yolos on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- Lu, L.L.; Li, X.L.; Wu, Y.W.; Chen, B.C. Enhanced RT-DETR for Traffic Sign Detection: Small Object Precision and Lightweight Design. Research Square [Preprint]. 12 November 2024. Available online: https://www.researchsquare.com/article/rs-5351138/v1 (accessed on 20 February 2025).

- Liu, M.; Wang, H.; Du, L.; Ji, F.; Zhang, M. Bearing-detr: A lightweight deep learning model for bearing defect detection based on rt-detr. Sensors 2024, 24, 4262.

- Dai, L.; Wang, D.; Song, F.; Yang, H. Concrete Bridge Crack Detection Method Based on an Improved RT-DETR Model. In Proceedings of the 2024 3rd International Conference on Robotics, Artificial Intelligence and Intelligent Control (RAIIC), Mianyang, China, 5–7 July 2024; pp. 172–175.

- Liu, Y.; Cao, Y.; Sun, Y. Research on Rail Defect Recognition Method Based on Improved RT-DETR Model. In Proceedings of the 2024 5th International Conference on Computer Engineering and Application (ICCEA), Hangzhou, China, 12–14 April 2024; pp. 1464–1468.

- Li, X.; Cai, M.; Tan, X.; Yin, C.; Chen, W.; Liu, Z.; Wen, J.; Han, Y. An efficient transformer network for detecting multi-scale chicken in complex free-range farming environments via improved RT-DETR. Comput. Electron. Agric. 2024, 224, 109160.

- Cao, X.; Wang, H.; Wang, X.; Hu, B. DFS-DETR: Detailed-feature-sensitive detector for small object detection in aerial images using transformer. Electronics 2024, 13, 3404.

- Tang, S.; Yan, W. Utilizing RT-DETR model for fruit calorie estimation from digital images. Information 2024, 15, 469.

- Yu, C.; Chen, X. Railway rutting defects detection based on improved RT-DETR. J. Real-Time Image Process. 2024, 21, 146.

- Bayraktar, E.; Basarkan, M.E.; Celebi, N. A low-cost UAV framework towards ornamental plant detection and counting in the wild. ISPRS J. Photogramm. Remote Sens. 2020, 167, 1–11.

- Mamdouh, N.; Khattab, A. YOLO-based deep learning framework for olive fruit fly detection and counting. IEEE Access 2021, 9, 84252–84262.

- Ghorbanzadeh, O.; Crivellari, A.; Ghamisi, P.; Shahabi, H.; Blaschke, T. A comprehensive transferability evaluation of U-Net and ResU-Net for landslide detection from Sentinel-2 data (case study areas from Taiwan, China, and Japan). Sci. Rep. 2021, 11, 14629.

- Torkul, O.; Selvi, İ.H.; Şişci, M.; Diren, D.D. A New Model for Assembly Task Recognition: A Case Study of Seru Production System. IEEE Access 2024.

- Jang, W.S.; Kim, S.; Yun, P.S.; Jang, H.S.; Seong, Y.W.; Yang, H.S.; Chang, J.S. Accurate detection for dental implant and peri-implant tissue by transfer learning of faster R-CNN: A diagnostic accuracy study. BMC Oral Health 2022, 22, 591.

- Qadir, H.A.; Shin, Y.; Solhusvik, J.; Bergsland, J.; Aabakken, L.; Balasingham, I. Toward real-time polyp detection using fully CNNs for 2D Gaussian shapes prediction. Med. Image Anal. 2021, 68, 101897.

- Su, Y.; Cheng, B.; Cai, Y. Detection and Recognition of Traditional Chinese Medicine Slice Based on YOLOv8. In Proceedings of the 2023 IEEE 6th International Conference on Electronic Information and Communication Technology (ICEICT), Qingdao, China, 21–24 July 2023; pp. 214–217.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-time flying object detection with YOLOv8. arXiv 2023, arXiv:2305.09972.

- Ding, J.; Zhang, J.; Zhan, Z.; Tang, X.; Wang, X. A precision efficient method for collapsed building detection in post-earthquake UAV images based on the improved NMS algorithm and faster R-CNN. Remote Sens. 2022, 14, 663.

- Jin, M.; Zhang, J. Research on Microscale Vehicle Logo Detection Based on Real-Time DEtection TRansformer (RT-DETR). Sensors 2024, 24, 6987.

- Wu, M.; Qiu, Y.; Wang, W.; Su, X.; Cao, Y.; Bai, Y. Improved RT-DETR and its application to fruit ripeness detection. Front. Plant Sci. 2025, 16, 1423682.

- Parekh, A. Comparative Analysis of YOLOv8 and RT-DETR for Real-Time Object Detection in Advanced Driver Assistance Systems. Master’s Thesis, The University of Western Ontario, London, ON, Canada, 2025.

| Class | Training Images | Training Instances | Validation Images | Validation Instances | Test Images | Test Instances |
|---|---|---|---|---|---|---|
| All | 634 | 1639 | 136 | 400 | 137 | 363 |
| breast | 261 | 384 | 51 | 73 | 58 | 90 |
| drumstick | 303 | 696 | 74 | 198 | 61 | 134 |
| wing | 106 | 315 | 21 | 63 | 24 | 86 |
| leg | 112 | 146 | 30 | 40 | 17 | 23 |
| shank | 38 | 98 | 9 | 26 | 9 | 30 |
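The split in the dataset table can be checked with a few lines of arithmetic. The Python sketch below, with counts copied from the table (the function names are illustrative), shows that the 907 images divide roughly 70/15/15 among training, validation and test, and that the per-class instance counts add up to the "All" row. Per-class image counts are deliberately not summed: they exceed the "All" totals (820 vs. 634 for training), which indicates that a single image can contain several part types.

```python
"""Consistency check of the chicken-parts dataset split (counts from the table)."""

# Per-class instance counts: (training, validation, test).
INSTANCES = {
    "breast": (384, 73, 90),
    "drumstick": (696, 198, 134),
    "wing": (315, 63, 86),
    "leg": (146, 40, 23),
    "shank": (98, 26, 30),
}
# "All" row: number of images and instances in each split.
IMAGES = {"training": 634, "validation": 136, "test": 137}
ALL_INSTANCES = {"training": 1639, "validation": 400, "test": 363}


def split_shares(images: dict[str, int]) -> dict[str, float]:
    """Each split's share of the total image count, in percent."""
    total = sum(images.values())
    return {name: 100.0 * n / total for name, n in images.items()}


def instance_totals(instances: dict[str, tuple[int, int, int]]) -> tuple[int, ...]:
    """Column-wise sums of the per-class instance counts (training, validation, test)."""
    return tuple(sum(column) for column in zip(*instances.values()))


if __name__ == "__main__":
    print("total images:", sum(IMAGES.values()))
    for name, share in split_shares(IMAGES).items():
        print(f"{name}: {share:.1f}%")
    print("instances match 'All' row:", instance_totals(INSTANCES) == tuple(ALL_INSTANCES.values()))
```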

| Parameter | Value |
|---|---|
| Epochs | 300 |
| Batch size | 2 |
| Momentum | 0.9 |
| Input image size | 640 × 640 |
| Warmup epochs | 3 |
| Warmup momentum | 0.8 |
| Warmup bias learning rate | 0.1 |
| Patience | 100 |
| Workers | 8 |
| Optimizer | Adam |
| Weight decay | 0.0005 |
| Initial learning rate | 0.001111 |
| Final learning rate factor (fraction of the initial learning rate) | 0.01 |
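The hyperparameter table lists an initial learning rate of 0.001111 and a final learning-rate value of 0.01. Because 0.01 is larger than the initial rate, it is most plausibly a fraction of the initial rate, as with the `lrf` setting in Ultralytics, where the final rate is lr0 × lrf. The Python sketch below assumes that reading together with a linear decay over the 300 epochs, and a linear warmup of momentum from 0.8 to 0.9 over the first 3 epochs. The constants and function names are illustrative, and the schedule is an assumption rather than a statement of the authors' exact configuration.

```python
"""Learning-rate and momentum schedule implied by the hyperparameter table (sketch)."""

EPOCHS = 300
LR0 = 0.001111  # "Initial learning rate"
LRF = 0.01      # final value, ASSUMED to be a fraction of LR0 (Ultralytics-style lrf)
WARMUP_EPOCHS = 3
WARMUP_MOMENTUM, MOMENTUM = 0.8, 0.9


def linear_lr(epoch: int, epochs: int = EPOCHS, lr0: float = LR0, lrf: float = LRF) -> float:
    """Learning rate for `epoch` under linear decay from lr0 down to lr0 * lrf."""
    factor = max(1.0 - epoch / epochs, 0.0) * (1.0 - lrf) + lrf
    return lr0 * factor


def warmup_value(progress: float, start: float, end: float) -> float:
    """Linear interpolation from `start` to `end` as warmup progress goes from 0 to 1."""
    progress = min(max(progress, 0.0), 1.0)
    return start + (end - start) * progress


if __name__ == "__main__":
    for epoch in (0, 150, 300):
        print(epoch, f"{linear_lr(epoch):.3e}")
    # Momentum ramps from 0.8 to 0.9 over the 3 warmup epochs.
    for epoch in range(WARMUP_EPOCHS + 1):
        print(epoch, round(warmup_value(epoch / WARMUP_EPOCHS, WARMUP_MOMENTUM, MOMENTUM), 4))
```

Under this reading the rate ends near 1.1 × 10⁻⁵ rather than rising to 0.01. Ultralytics performs its warmup per iteration rather than per epoch and also ramps the bias learning rate from the warmup value; this sketch interpolates per epoch and leaves the bias term out for readability.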

| Model | Parameters | Layers | Number of Completed Epochs | Training Time (hours) | Training Time per Epoch (hours) | GFLOPs |
|---|---|---|---|---|---|---|
| YOLOv8n | 3,006,623 | 168 | 300 | 2.244 | 0.00748 | 8.1 |
| YOLOv8s | 11,127,519 | 168 | 300 | 4.380 | 0.0146 | 28.4 |
| YOLOv8m | 25,842,655 | 218 | 300 | 5.469 | 0.01823 | 78.7 |
| YOLOv8l | 43,610,463 | 268 | 300 | 6.494 | 0.021647 | 164.8 |
| YOLOv8x | 68,128,283 | 268 | 300 | 8.693 | 0.028977 | 257.4 |
| RT-DETR Large | 31,994,015 | 494 | 244 | 8.400 | 0.034426 | 103.5 |
| RT-DETR Extra-Large | 65,477,711 | 638 | 158 | 11.176 | 0.070734 | 222.5 |
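In the training summary, both RT-DETR models completed fewer than the 300 scheduled epochs (244 and 158). This is consistent with patience-based early stopping, using the Patience value of 100 from the hyperparameter table, although the stopping rule is not spelled out alongside these tables. The Python sketch below shows that rule in its simplest form; `early_stop_epoch` is a hypothetical helper operating on a per-epoch validation fitness curve, and the exact off-by-one convention of any particular framework may differ.

```python
"""Patience-based early stopping on a per-epoch validation fitness curve (sketch)."""


def early_stop_epoch(fitness: list[float], patience: int = 100, max_epochs: int = 300) -> int:
    """Number of epochs completed before training stops.

    Training stops once `patience` epochs have passed since the last new best
    fitness value, or when `max_epochs` (or the end of the curve) is reached.
    """
    best, best_epoch = float("-inf"), 0
    for epoch, value in enumerate(fitness[:max_epochs]):
        if value > best:
            best, best_epoch = value, epoch
        if epoch - best_epoch >= patience:
            return epoch + 1  # count the current epoch as completed
    return min(len(fitness), max_epochs)


if __name__ == "__main__":
    # Hypothetical curve: improves for 144 epochs, then plateaus.
    curve = [i / 1000 for i in range(144)] + [0.0] * 200
    print(early_stop_epoch(curve))
```

With this rule, a run whose last improvement occurs in its 144th epoch stops after 244 completed epochs, while a run that keeps improving trains for all 300. The training time per epoch in the table is simply the total training time divided by the number of completed epochs.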

| Model | Precision | Recall | F1-Score | mAP@0.5 | mAP@0.5:0.95 | MITF (ms/Image) |
|---|---|---|---|---|---|---|
| YOLOv8n | 0.9801 | 1.0 | 0.9900 | 0.9948 | 0.9720 | 6.1 |
| YOLOv8s | 0.9961 | 0.9977 | 0.9969 | 0.9950 | 0.9807 | 10.3 |
| YOLOv8m | 0.9864 | 0.9911 | 0.9888 | 0.9926 | 0.9802 | 15.2 |
| YOLOv8l | 0.9949 | 0.9925 | 0.9937 | 0.9945 | 0.9738 | 22.7 |
| YOLOv8x | 0.9884 | 0.9910 | 0.9897 | 0.9937 | 0.9742 | 32.8 |
| RT-DETR Large | 0.9908 | 1.0 | 0.9954 | 0.9938 | 0.9670 | 30.5 |
| RT-DETR Extra-Large | 0.9981 | 0.9694 | 0.9835 | 0.9888 | 0.9588 | 45.8 |
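The F1-score in the results table is the harmonic mean of precision and recall, F1 = 2PR/(P + R), and a mean inference time per frame (MITF) of m milliseconds corresponds to roughly 1000/m frames per second. The Python sketch below recomputes both from the table values; the differences from the reported F1-scores stay below 10⁻⁴, which is what rounding P and R to four decimals would produce. The FPS figures count model inference only, so end-to-end throughput on a packaging line (camera capture, pre- and post-processing) would be lower.

```python
"""Recompute F1-scores and approximate FPS from the results table."""

# model: (precision, recall, reported F1-score, MITF in ms/image), copied from the table.
RESULTS = {
    "YOLOv8n": (0.9801, 1.0, 0.9900, 6.1),
    "YOLOv8s": (0.9961, 0.9977, 0.9969, 10.3),
    "YOLOv8m": (0.9864, 0.9911, 0.9888, 15.2),
    "YOLOv8l": (0.9949, 0.9925, 0.9937, 22.7),
    "YOLOv8x": (0.9884, 0.9910, 0.9897, 32.8),
    "RT-DETR Large": (0.9908, 1.0, 0.9954, 30.5),
    "RT-DETR Extra-Large": (0.9981, 0.9694, 0.9835, 45.8),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    total = precision + recall
    return 2 * precision * recall / total if total > 0 else 0.0


def fps_from_mitf(mitf_ms: float) -> float:
    """Frames per second implied by a mean inference time per frame in milliseconds."""
    return 1000.0 / mitf_ms


if __name__ == "__main__":
    for model, (p, r, f1_reported, mitf) in RESULTS.items():
        print(f"{model:<20} F1={f1_score(p, r):.4f} (reported {f1_reported:.4f})  "
              f"~{fps_from_mitf(mitf):.1f} FPS")
```

By this measure YOLOv8s, at 10.3 ms/image, runs at about 97 FPS, while RT-DETR Extra-Large runs at about 22 FPS.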

| Study | Classes (Types of Chicken Parts) | Dataset Details | Methods | Most Successful Method | Best Results |
|---|---|---|---|---|---|
| Teimouri et al. [6] | breast, leg, filet, wing, and drumstick | 100 samples | Partial least squares regression, linear discriminant analysis, and artificial neural network | Artificial neural network | Overall accuracy: 93%, MITF: 15 ms/image |
| Chen et al. [49] | wing, leg, and breast | 600 images | YOLOv4-CSPDarknet53, YOLOv3-Darknet53, YOLOv3-MobileNetv3, SSD-MobileNetv3, and SSD-VGG16 | YOLOv4-CSPDarknet53 | mAP: 98.86%, MITF: 22.2 ms/image |
| Peng et al. [50] | wing, leg, and breast | 2000 images | Swin-Transformer, YOLOv3-Darknet53, YOLOv3-MobileNetv3, SSD-MobileNetv3, and SSD-VGG16 | Swin-Transformer | mAP: 97.21%, MITF: 19.02 ms/image |
| This Study | breast, drumstick, wing, leg, and shank | 907 images | YOLOv8 (YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l, and YOLOv8x) and RT-DETR (RT-DETR Large and RT-DETR Extra-Large) | YOLOv8s | F1-Score: 0.9969, mAP@0.5: 0.9950, mAP@0.5:0.95: 0.9807, MITF: 10.3 ms/image |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Şahin, D.; Torkul, O.; Şişci, M.; Diren, D.D.; Yılmaz, R.; Kibar, A. Real-Time Classification of Chicken Parts in the Packaging Process Using Object Detection Models Based on Deep Learning. Processes 2025, 13, 1005. https://doi.org/10.3390/pr13041005


Chicago/Turabian Style: Şahin, Dilruba, Orhan Torkul, Merve Şişci, Deniz Demircioğlu Diren, Recep Yılmaz, and Alpaslan Kibar. 2025. "Real-Time Classification of Chicken Parts in the Packaging Process Using Object Detection Models Based on Deep Learning" Processes 13, no. 4: 1005. https://doi.org/10.3390/pr13041005
