A Multi-Source Fusion-Based Material Tracking Method for Discrete–Continuous Hybrid Scenarios

Abstract

1. Introduction

- (1) Difficulties in modeling material states under complex processes: existing state–event system (SES) models have three key limitations, namely (a) they rely on fixed path assumptions and cannot adapt to “small-batch, multi-specification” production; (b) they lack hierarchical inference logic, as most adopt single-layer event-driven inference, which makes conflicts at process intersections difficult to resolve; and (c) they separate inference from error correction, so they output only static states and cannot suppress the errors that accumulate along dynamic trajectories. As a result, traditional modeling cannot cover the discrete–continuous hybrid process of special steel.

- (2) Insufficient accuracy of visual detection and tracking for similar materials under complex working conditions: existing detection algorithms struggle to extract weak features under high-temperature glare, and tracking algorithms are prone to ID switches and trajectory breakage because of fixed association weights and the weak distinguishability of similar materials. This makes it difficult to meet the high-cadence, high-reliability tracking requirements of special steel production.

- (3) Unstable decision-making under heterogeneous and conflicting multi-source information: traditional Dempster–Shafer (D-S) fusion lacks spatiotemporal alignment mechanisms and does not adequately quantify the evidence conflicts caused by temporal deviations and semantic heterogeneity. This reduces the reliability of state decisions in conflict regions and fails to ensure the continuity of full-process tracking.

- (1) Proposes an integrated semantic modeling method that combines “process-rule-driven hierarchical inference” with “trajectory inference with anchor correction,” breaking the scenario-adaptability bottleneck of traditional state modeling. First, a general SES framework decoupled from specific processes is constructed; by abstracting “material states” and “trigger events,” it provides a unified state description for multi-specification steel materials. Second, a three-layer hierarchical inference system based on Drools is designed to improve the robustness of state inference. Finally, a signal-driven trajectory inference and multi-anchor dynamic correction strategy is proposed. Its “prediction–matching–sliding-window smoothing” procedure suppresses accumulated errors and keeps trajectories continuous in non-visual areas, addressing the limitation that traditional modeling provides only static states without in-process position localization.

- (2)

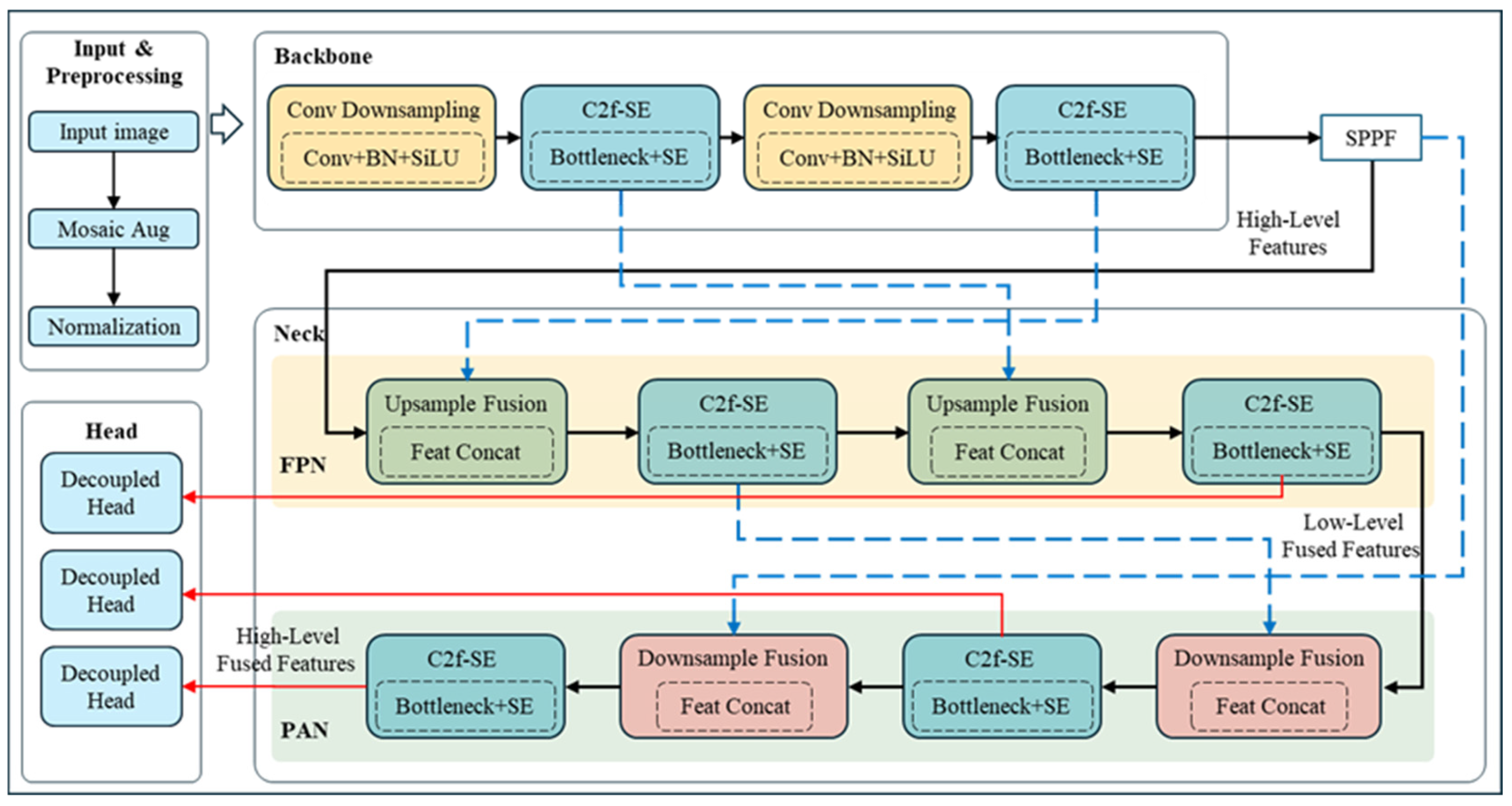

- Proposes a visual perception scheme of “YOLOv8-SE + improved DeepSORT,” solving the accuracy and stability problems of visual tracking in complex industrial scenarios: first, the squeeze-and-excitation (SE) channel attention mechanism is embedded after the C2f module of YOLOv8 to enhance the discriminability of weak features, such as steel surface details and boundary notches. Second, a hybrid loss function of the “focal loss (solving class imbalance) + CIOU loss (optimizing bounding box regression)” is adopted to improve detection accuracy in high-temperature glare and blurred scenarios. Finally, the DeepSORT framework is improved—optimizing re-ID feature extraction through cross-entropy loss and triplet loss (solving similar material confusion), dynamically adjusting the weight of motion/appearance similarity (reducing ID switches), and retaining weak matches and cross-camera spatial alignment (avoiding trajectory breakage)—thus, improving the ID retention rate and trajectory integrity.

- (3) Proposes an improved D-S evidence fusion strategy with “dynamic confidence weighting, spatiotemporal alignment, and multi-frame consistency voting” that enables reliable collaborative decision-making over multi-source data. First, a dynamically confidence-weighted basic probability assignment (BPA) is constructed that integrates historical state consistency, temporal stability, and upstream module confidence, and the discount factor is adjusted according to each modality's noise level to reduce abnormal interference. Second, a spatiotemporal alignment mechanism (temporal offset estimation plus spatial coordinate calibration) is introduced so that multi-source data are fused under a common reference. Finally, a multi-frame consistency voting module selects the optimal result by scoring branch trajectories when conflicts are high, improving fusion reliability in complex scenarios and ensuring the accuracy of full-process tracking.

2. Multi-Source Fusion Material Tracking Method for Discrete–Continuous Hybrid Scenarios

- (1) Semantic modeling layer: uses SES and the Drools rule engine to abstract equipment signals and processing actions into event streams that drive state transitions and trajectory inference, providing process priors for downstream modules;

- (2) Visual perception layer: uses YOLOv8-SE and an improved DeepSORT to perform real-time detection and cross-frame association under complex conditions, improving detection accuracy and ID stability;

- (3) Multi-source fusion layer: uses improved D-S evidence theory, with dynamic confidence, spatiotemporal alignment, and consistency voting mechanisms, to integrate visual, signal, and anchor data from multiple sources, enhancing system robustness.

2.1. Semantic Modeling Layer

2.1.1. State Modeling and Rule Inference

- (1) Atomic event rule layer L1: encodes equipment signals with Boolean logic to generate standardized events that serve as foundational drivers;

- (2) State inference layer L2: builds a finite state machine that consumes event streams to drive state transitions, embedding a time window that delays updates so that confidence can accumulate and ambiguous paths are avoided;

- (3) Advanced constraint layer L3: resolves rule conflicts through interlocking mutual exclusion, priority scheduling, and causal-chain inference, and uses backtracking to repair state drift.
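The interplay of the three layers can be illustrated with a minimal Python sketch. It is not the Drools rule base used in this work. The signal names (`entry_hmd`, `exit_hmd`, `roller_fwd`), the states, the interlock pair, the priorities, and the three-frame confirmation window are all hypothetical placeholders. L3 is reduced to priority-based resolution of mutually exclusive events; causal-chain inference and backtracking are omitted.

```python
from dataclasses import dataclass
from typing import Optional

# --- L1: atomic event rules over one snapshot of Boolean equipment signals ---
# All signal, event, and state names are hypothetical placeholders.
ATOMIC_RULES = {
    "ENTER_FURNACE": lambda s: s["entry_hmd"] and s["roller_fwd"],
    "EXIT_FURNACE": lambda s: s["exit_hmd"] and s["roller_fwd"],
}

# --- L2: finite-state-machine transitions, (state, event) -> next state ---
TRANSITIONS = {
    ("WAITING", "ENTER_FURNACE"): "HEATING",
    ("HEATING", "EXIT_FURNACE"): "DISCHARGED",
}

# --- L3: interlocked (mutually exclusive) events and their priorities ---
MUTUALLY_EXCLUSIVE = [{"ENTER_FURNACE", "EXIT_FURNACE"}]
PRIORITY = {"EXIT_FURNACE": 2, "ENTER_FURNACE": 1}


def l1_events(signals: dict) -> list:
    """Encode raw Boolean signals into standardized atomic events."""
    return [name for name, rule in ATOMIC_RULES.items() if rule(signals)]


def l3_resolve(events: list) -> list:
    """Keep only the highest-priority event from each interlocked group."""
    kept = set(events)
    for group in MUTUALLY_EXCLUSIVE:
        clash = kept & group
        if len(clash) > 1:
            winner = max(clash, key=lambda e: PRIORITY.get(e, 0))
            kept -= clash - {winner}
    return sorted(kept)


@dataclass
class MaterialStateMachine:
    state: str = "WAITING"
    window: int = 3                   # frames a transition must persist (assumed)
    candidate: Optional[str] = None   # transition target awaiting confirmation
    hits: int = 0                     # consecutive frames supporting the candidate

    def step(self, signals: dict) -> tuple:
        """Advance one frame; return (state, confidence in the pending transition)."""
        events = l3_resolve(l1_events(signals))
        targets = [TRANSITIONS[(self.state, e)] for e in events
                   if (self.state, e) in TRANSITIONS]
        target = targets[0] if targets else None
        if target is not None and target == self.candidate:
            self.hits += 1            # same evidence again: accumulate confidence
        else:
            self.candidate, self.hits = target, (1 if target else 0)
        if self.candidate is not None and self.hits >= self.window:
            self.state, self.candidate, self.hits = self.candidate, None, 0
            return self.state, 1.0    # transition committed
        return self.state, self.hits / self.window


fsm = MaterialStateMachine()
frame = {"entry_hmd": True, "exit_hmd": False, "roller_fwd": True}
for _ in range(3):
    print(fsm.step(frame))
```

In this sketch, a single-frame signal glitch resets the pending transition instead of committing it. This mirrors the role the L2 time window plays in avoiding ambiguous paths.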

2.1.2. Signal-Driven Trajectory Inference Model

2.2. Visual Perception Layer

2.2.1. YOLOv8-SE Detection Network Optimization

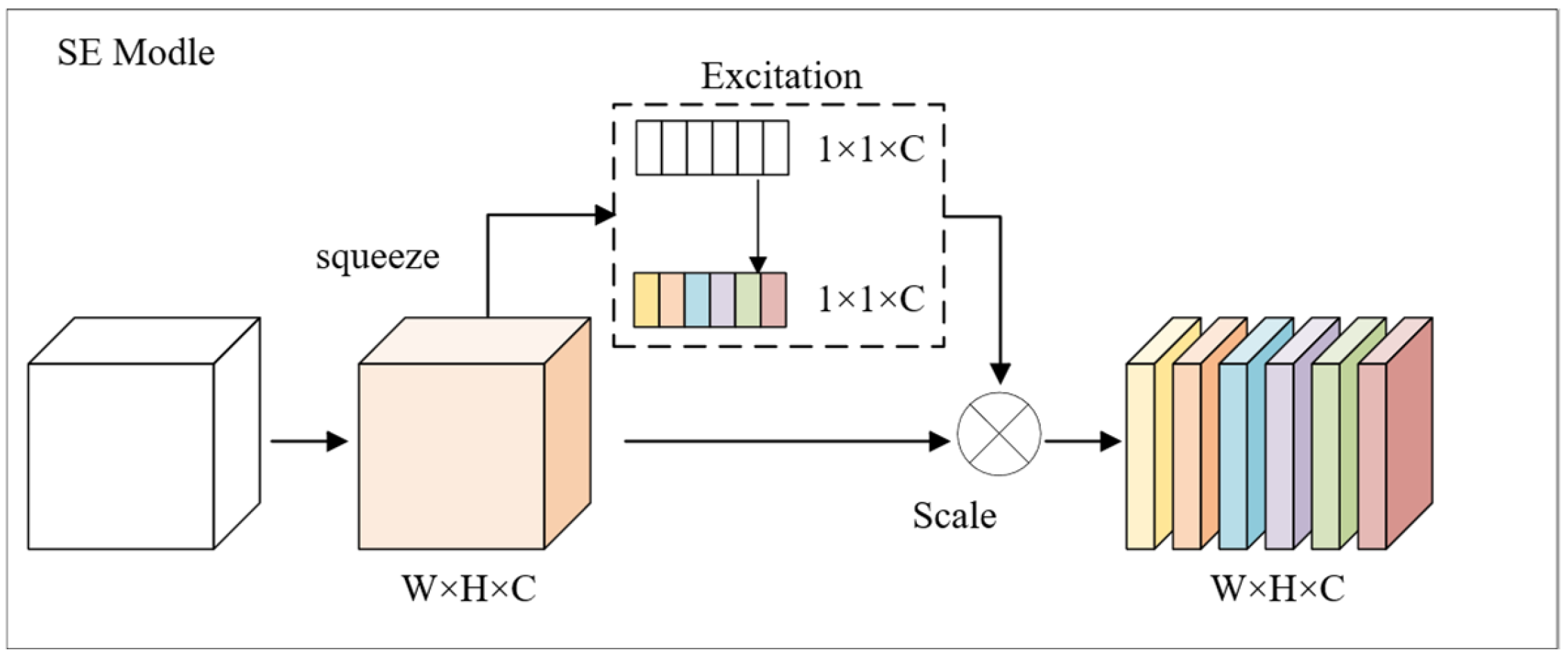

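- (1) Channel squeeze: computes channel descriptors via global average pooling to compress the spatial dimensions:

  $$z_c = \frac{1}{H \times W}\sum_{i=1}^{H}\sum_{j=1}^{W} u_c(i, j)$$

  where $u_c(i, j)$ is the pixel value of feature map $U$ at position $(i, j)$ in channel $c$, $H \times W$ is the spatial size of the feature map, and $z_c$ is the global descriptor for channel $c$.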

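- (2) Channel excitation: learns channel weights via fully connected layers to highlight key channels:

  $$\mathbf{s} = \sigma\left(W_2\, \mathrm{ReLU}\left(W_1 \mathbf{z}\right)\right)$$

  where $W_1 \in \mathbb{R}^{\frac{C}{r} \times C}$ and $W_2 \in \mathbb{R}^{C \times \frac{C}{r}}$ are fully connected layer weight matrices ($r$ is the compression ratio, set to 4), $\mathbf{z} = [z_1, \dots, z_C]$ collects the channel descriptors, ReLU(⋅) is the activation function, and σ is the Sigmoid function.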

- (3) Feature enhancement (Scale): multiplies the learned weights channel-wise with the original feature map to generate enhanced features:

  $$\tilde{u}_c = s_c \cdot u_c$$

  where $u_c$ is the $c$-th channel of the original feature map, $s_c$ is its learned weight, and $\tilde{u}_c$ is the enhanced feature channel.
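For reference, the three SE steps can be written as a short NumPy sketch that operates on a single (C, H, W) feature map. Biases in the fully connected layers are omitted, the weights are random rather than trained, and C = 8 is illustrative; only r = 4 is taken from the text. In YOLOv8, the block would be a trainable module applied to batched C2f outputs.

```python
import numpy as np


def se_block(u: np.ndarray, w1: np.ndarray, w2: np.ndarray):
    """Squeeze-and-excitation for one feature map u of shape (C, H, W).

    w1 has shape (C // r, C) and w2 has shape (C, C // r); biases are omitted.
    Returns the re-weighted map and the channel weights s.
    """
    # Squeeze: global average pooling over the spatial axes gives z with shape (C,)
    z = u.mean(axis=(1, 2))
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one weight in (0, 1) per channel
    hidden = np.maximum(w1 @ z, 0.0)
    s = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))
    # Scale: multiply every channel of the original map by its weight
    return u * s[:, None, None], s


rng = np.random.default_rng(0)
C, r = 8, 4                       # r = 4 as in the text; C = 8 is illustrative
u = rng.standard_normal((C, 16, 16))
w1 = rng.standard_normal((C // r, C))
w2 = rng.standard_normal((C, C // r))
enhanced, weights = se_block(u, w1, w2)
print(enhanced.shape, weights.round(3))
```

Because every channel weight lies strictly between 0 and 1, the block can only attenuate channels relative to one another. It never amplifies them in absolute terms, so it acts as a learned channel-wise gate.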

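- (1) The classification-related loss terms use focal loss to address class imbalance:

  $$L_{\mathrm{FL}} = -\alpha\, y\,(1-p)^{\gamma}\log p - (1-\alpha)(1-y)\,p^{\gamma}\log(1-p)$$

  where $p$ is the predicted probability, $y$ is the true label, $\alpha$ is the balance coefficient (0.25), and $\gamma$ is the difficulty weight (2).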

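- (2) The bounding-box regression loss uses CIoU loss, which integrates the center distance, overlap area, and aspect ratio of the bounding boxes:

  $$L_{\mathrm{CIoU}} = 1 - \mathrm{IoU} + \frac{\rho^{2}(\mathbf{b}, \mathbf{b}^{gt})}{c^{2}} + \alpha v, \qquad v = \frac{4}{\pi^{2}}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^{2}$$

  where $\mathbf{b}$ is the predicted bounding box center vector, $\mathbf{b}^{gt}$ is the ground-truth bounding box center vector, $\rho(\cdot)$ is the Euclidean distance, $c$ is the diagonal length of the smallest rectangle enclosing both boxes, and $v$ is the aspect-ratio consistency term ($w$, $h$ and $w^{gt}$, $h^{gt}$ are the widths and heights of the predicted and ground-truth boxes). Finally, $\alpha = v / \left((1 - \mathrm{IoU}) + v\right)$ is the trade-off coefficient of $v$ (distinct from the focal-loss $\alpha$).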
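The two loss components can be checked numerically with a plain-Python sketch for a single prediction. The clamping constant `eps`, the (x1, y1, x2, y2) box format, and the per-sample (unreduced) form are assumptions; α = 0.25 and γ = 2 follow the values stated in the text. In the CIoU function, `alpha_v` denotes the aspect-ratio trade-off weight, which is kept separate from the focal-loss α.

```python
import math


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0,
               eps: float = 1e-7) -> float:
    """Binary focal loss for a predicted probability p and a label y in {0, 1}."""
    p = min(max(p, eps), 1.0 - eps)          # clamp to avoid log(0)
    if y == 1:
        return -alpha * (1.0 - p) ** gamma * math.log(p)
    return -(1.0 - alpha) * p ** gamma * math.log(1.0 - p)


def ciou_loss(pred, gt, eps: float = 1e-9) -> float:
    """CIoU loss for two boxes given as (x1, y1, x2, y2) with x2 > x1 and y2 > y1."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph, gw, gh = px2 - px1, py2 - py1, gx2 - gx1, gy2 - gy1
    # Overlap term: intersection over union
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    iou = inter / (pw * ph + gw * gh - inter + eps)
    # Squared distance between box centres over the squared diagonal
    # of the smallest box enclosing both
    rho2 = ((px1 + px2 - gx1 - gx2) ** 2 + (py1 + py2 - gy1 - gy2) ** 2) / 4.0
    c2 = (max(px2, gx2) - min(px1, gx1)) ** 2 + (max(py2, gy2) - min(py1, gy1)) ** 2 + eps
    # Aspect-ratio consistency v and its trade-off weight alpha_v
    v = (4.0 / math.pi ** 2) * (math.atan(gw / gh) - math.atan(pw / ph)) ** 2
    alpha_v = v / ((1.0 - iou) + v + eps)
    return 1.0 - iou + rho2 / c2 + alpha_v * v


print(focal_loss(0.9, 1), focal_loss(0.6, 1))        # easy vs. harder positive
print(ciou_loss((0, 0, 1, 1), (2, 2, 3, 3)))         # disjoint boxes
```

Unlike a plain IoU loss, the center-distance term keeps a useful gradient for disjoint boxes, where IoU is zero. The focal term shrinks the loss of confidently correct predictions so that hard samples dominate training.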

2.2.2. Improved DeepSORT Tracking Algorithm

- (1) Re-ID feature extraction optimization: extracts highly discriminative re-ID feature vectors with a lightweight CNN trained with

  $$L_{\mathrm{ReID}} = L_{\mathrm{CE}} + L_{\mathrm{tri}}$$

  where $L_{\mathrm{CE}}$ is the cross-entropy loss and $L_{\mathrm{tri}}$ is the triplet loss.

- (2) Multi-dimensional similarity fusion: computes motion similarity from Kalman filter predictions and introduces dynamic weights to balance motion and appearance information.

- (3) Association optimization strategies:
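The fused association cost can be sketched in the form used by the original DeepSORT, c = λ·d_motion + (1 − λ)·d_appearance, with both gates applied first. The section does not specify how the dynamic weight is computed. The `motion_weight` function below is therefore a hypothetical schedule that shifts weight from the Kalman motion term toward appearance as a track stays unmatched. The cosine gate of 0.3 and the normalization of the squared Mahalanobis distance by the gate value are also assumptions.

```python
import math

# 95% chi-square quantile for 4 degrees of freedom, the Mahalanobis gate in DeepSORT
CHI2_GATE_4DOF = 9.4877


def cosine_distance(a, b) -> float:
    """1 - cosine similarity between two re-ID embeddings."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def motion_weight(frames_since_update: int, lam0: float = 0.5, decay: float = 0.2) -> float:
    """Hypothetical dynamic weight: trust the Kalman prediction less the longer
    a track has gone without a matched detection."""
    return lam0 * math.exp(-decay * frames_since_update)


def fused_cost(maha_sq: float, track_feat, det_feat, frames_since_update: int,
               cos_gate: float = 0.3) -> float:
    """Fused track-detection cost; math.inf marks a pair rejected by either gate."""
    d_app = cosine_distance(track_feat, det_feat)
    if maha_sq > CHI2_GATE_4DOF or d_app > cos_gate:
        return math.inf
    lam = motion_weight(frames_since_update)
    d_mot = maha_sq / CHI2_GATE_4DOF           # scale motion distance to [0, 1]
    return lam * d_mot + (1.0 - lam) * d_app


print(fused_cost(9.0, [1.0, 0.0], [0.98, 0.2], frames_since_update=0))
print(fused_cost(9.0, [1.0, 0.0], [0.98, 0.2], frames_since_update=10))
```

In a full tracker, the resulting cost matrix would be solved with the Hungarian algorithm (for example, `scipy.optimize.linear_sum_assignment`). Gated entries would first be replaced by a large finite constant.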

2.3. Multi-Source Fusion Decision Model

2.3.1. Consistency Verification and BPA Construction

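- (1) Historical consistency $C_h$: represents the consistency between historical and current states:

  $$C_h = \frac{\sum_{k=1}^{n} w_k\,\mathbb{I}\left(s_{t-k} = s_t\right)}{\sum_{k=1}^{n} w_k}$$

  where $\mathbb{I}(\cdot)$ is the indicator function, $s_{t-k}$ is the historical state of the material at moment $t-k$, and $w_k$ is the temporal decay weight.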

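- (2) Temporal stability $C_s$: measures the temporal stability of the state via entropy:

  $$C_s = 1 - \frac{E}{E_{\max}}, \qquad E = -\sum_{i=1}^{N} p_i \log p_i, \qquad E_{\max} = \log N$$

  where $E$ is the state entropy, $E_{\max}$ is the maximum entropy ($N$ is the number of states), and $p_i$ is the state probability distribution.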

- (3) Path reliability: the confidence output by upstream modules (rule confidence from state modeling or classification confidence from visual detection).
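The following minimal sketch shows how the three factors could feed a discounted BPA. The decay-weighted form of historical consistency, the normalized-entropy form of temporal stability, and the product used to merge the factors into one reliability score are assumptions. Shafer's classical discounting rule stands in for the noise-dependent discount factor, whose exact form the section does not give.

```python
import math


def historical_consistency(history, current, decay: float = 0.8) -> float:
    """Decay-weighted share of past states that agree with the current state.

    history[0] is the most recent past state and gets weight decay**0 (assumed form).
    """
    if not history:
        return 0.0
    weights = [decay ** k for k in range(len(history))]
    agree = sum(w for w, s in zip(weights, history) if s == current)
    return agree / sum(weights)


def temporal_stability(probs) -> float:
    """1 - E / E_max, where E is the Shannon entropy and E_max = log(N)."""
    if len(probs) < 2:
        return 1.0
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return 1.0 - entropy / math.log(len(probs))


def discount_bpa(bpa, frame, reliability: float):
    """Shafer discounting: keep `reliability` of each focal mass and move the
    remainder to the whole frame of discernment (total ignorance)."""
    theta = frozenset(frame)
    out = {A: reliability * m for A, m in bpa.items() if A != theta}
    out[theta] = reliability * bpa.get(theta, 0.0) + (1.0 - reliability)
    return out


# Hypothetical combination: multiply the three factors into one reliability score.
frame = {"heating", "rolling"}
raw = {frozenset({"rolling"}): 0.8, frozenset(frame): 0.2}
rel = (historical_consistency(["rolling", "rolling", "heating"], "rolling")
       * temporal_stability([0.9, 0.1])
       * 0.95)                                # upstream path reliability (illustrative)
print(discount_bpa(raw, frame, rel))
```

Discounting moves mass toward total ignorance rather than toward a competing hypothesis. As a result, unreliable evidence is softened without being turned into counter-evidence.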

2.3.2. D-S Evidence Combination and Anomaly Tolerance

- (1) Temporal alignment: estimates the optimal inter-modal time offset $\tau_m^{*}$, i.e., the offset $\tau$ at which the $m$-th modal trajectory $X_m$ best matches the reference modal trajectory $X_{\mathrm{ref}}$;

- (2) Spatial alignment: corrects spatial differences through equipment coordinate system calibration, ensuring that multi-modal position vectors are expressed in the same coordinate system.
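Temporal offset estimation can be illustrated with an exhaustive search over integer sample shifts. The section does not state which criterion is optimized. The criterion used here (mean squared difference of 1-D positions), the search range, and `min_overlap` are therefore assumptions. Spatial calibration is not shown.

```python
def estimate_offset(ref, other, max_shift: int, min_overlap: int = 3) -> int:
    """Integer offset tau (in samples) that minimizes the mean squared difference
    between ref[t] and other[t + tau] over their overlapping samples."""
    best_tau, best_err = 0, float("inf")
    for tau in range(-max_shift, max_shift + 1):
        pairs = [(ref[t], other[t + tau]) for t in range(len(ref))
                 if 0 <= t + tau < len(other)]
        if len(pairs) < min_overlap:
            continue                          # too little overlap to trust
        err = sum((a - b) ** 2 for a, b in pairs) / len(pairs)
        if err < best_err:
            best_tau, best_err = tau, err
    return best_tau


# A reference position track and a second modality that lags it by 2 samples
ref = [0.0, 1.0, 4.0, 9.0, 16.0, 25.0, 36.0, 49.0]
lagged = [-5.0, -3.0] + ref
print(estimate_offset(ref, lagged, max_shift=3))   # 2
```

Requiring a minimum overlap avoids a degenerate case at large shifts, where only a few samples overlap and can match by chance.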

- (1) Conflict detection: identifies anomalies when the conflict factor $K$ or the confidence fluctuation $\Delta c$ exceeds its threshold;

- (2) Branch path generation: generates branch paths for anomalous trajectories, recording the timestamp, position vector, and confidence of each branch;

- (3) Multi-frame voting selection: computes branch scores within a time window and selects the highest-scoring branch as the primary trajectory;

- (4) Backtracking repair and manual review: repairs inconsistent segments (e.g., position jumps) in the primary trajectory via backtracking, and triggers manual review if conflicts persist for more than 3 frames.
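Dempster's combination rule, the conflict check, and multi-frame voting can be sketched together in plain Python. Dempster's rule itself is standard, and the conflict factor K is the product mass assigned to empty intersections. The section does not give the thresholds or the exact scoring rule. The thresholds in `is_anomalous` and the mean-confidence branch score in `vote_primary` are therefore hypothetical placeholders.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Dempster's rule for two BPAs given as {frozenset: mass}.

    Returns the combined BPA and the conflict factor K.
    """
    combined, conflict = {}, 0.0
    for (a, ma), (b, mb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + ma * mb
        else:
            conflict += ma * mb               # mass on an empty intersection
    if conflict >= 1.0 - 1e-12:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {A: m / (1.0 - conflict) for A, m in combined.items()}, conflict


def is_anomalous(conflict, conf_now, conf_prev, k_th=0.7, delta_th=0.3) -> bool:
    """Flag a frame when K or the confidence fluctuation exceeds its threshold.
    The threshold values here are hypothetical placeholders."""
    return conflict > k_th or abs(conf_now - conf_prev) > delta_th


def vote_primary(branches, window: int):
    """Pick the branch whose mean confidence over the last `window` frames is highest.
    `branches` maps a branch id to its per-frame confidences (assumed scoring rule)."""
    def score(confs):
        recent = confs[-window:]
        return sum(recent) / len(recent)
    return max(branches, key=lambda b: score(branches[b]))


theta = frozenset({"A", "B"})
vision = {frozenset({"A"}): 0.9, theta: 0.1}
signal = {frozenset({"B"}): 0.9, theta: 0.1}
fused, k = dempster_combine(vision, signal)
print(round(k, 2), is_anomalous(k, 0.5, 0.5))
print(vote_primary({"b1": [0.9, 0.4, 0.5], "b2": [0.6, 0.7, 0.8]}, window=2))
```

Two nearly contradictory sources yield K = 0.81, which the check flags. Agreeing sources produce no conflict and reinforce the shared hypothesis.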

3. Experiments

3.1. Experimental Setup

3.1.1. Experimental Scenario and Problem Definition

3.1.2. Experimental Data

3.1.3. Experimental Environment

3.1.4. Verification Scheme

3.1.5. Core Parameter Selection

3.2. Evaluation Metrics

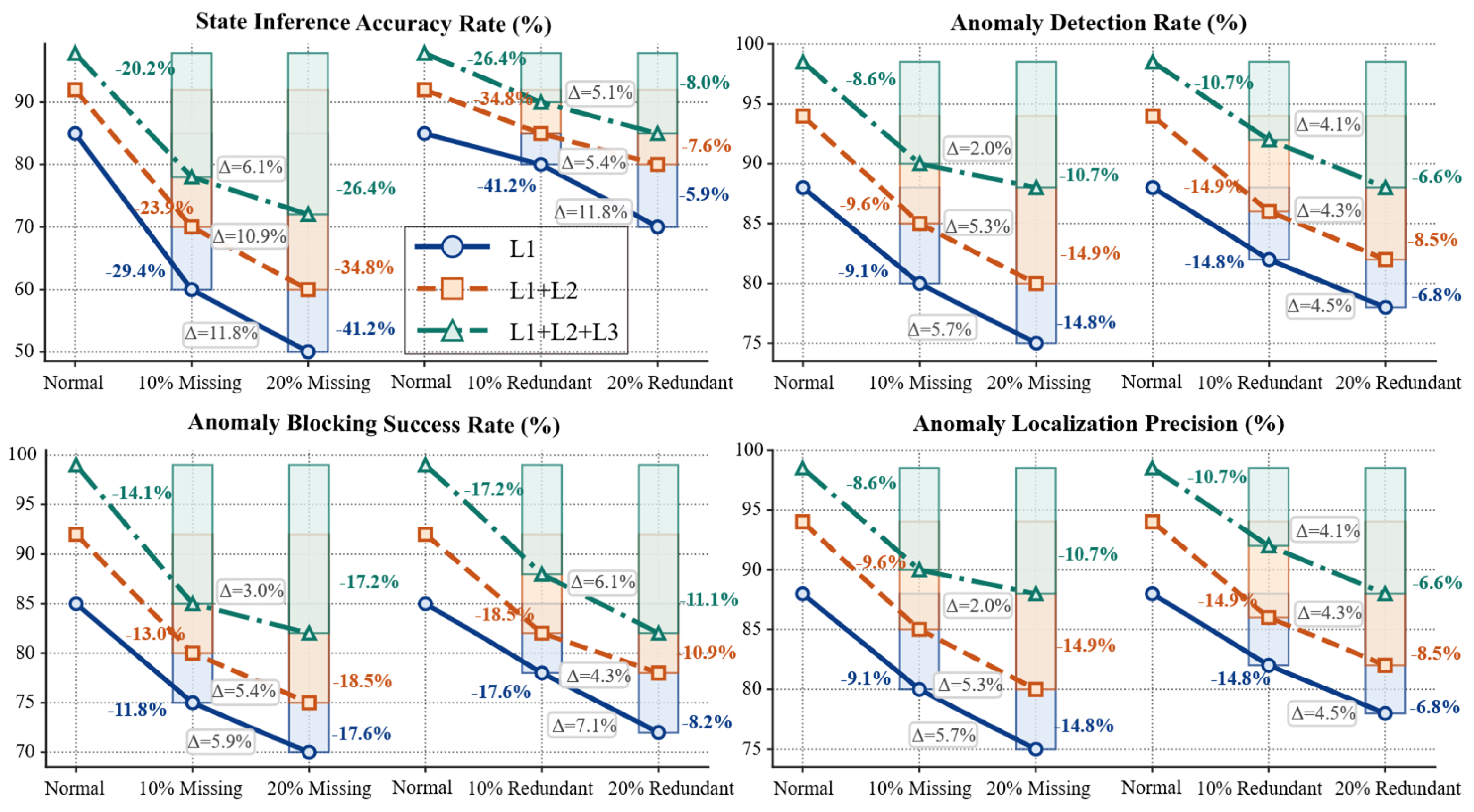

3.3. Performance Quantification of Three-Layer Rule-Based Inference

- (1) In the normal scenario without data interference, the three-layer rule model (L1 + L2 + L3) performs best: its state inference accuracy rate (SAR) reaches 97.8%, anomaly detection rate (ADR) 98.5%, anomaly blocking success rate (BSR) 99.0%, and anomaly localization precision (ALP) 98.5%, all more than 10% higher than those of the L1 model. The performance of the L1 + L2 model lies between those of L1 and L1 + L2 + L3. This indicates that the layered rule system effectively integrates process specifications, reduces semantic ambiguity, and improves inference accuracy.

- (2) When data are missing or redundant, the performance of all models decreases, but the three-layer model is markedly more robust. In the data redundancy scenario, it achieves a BSR of 88.0% and an ALP of 88.0% (each 10% higher than L1), and its performance degrades less (smaller Δ). This shows that the interlock/anti-redundancy logic and causal-chain reasoning of the advanced constraint layer (L3) effectively suppress interference and slow performance degradation.

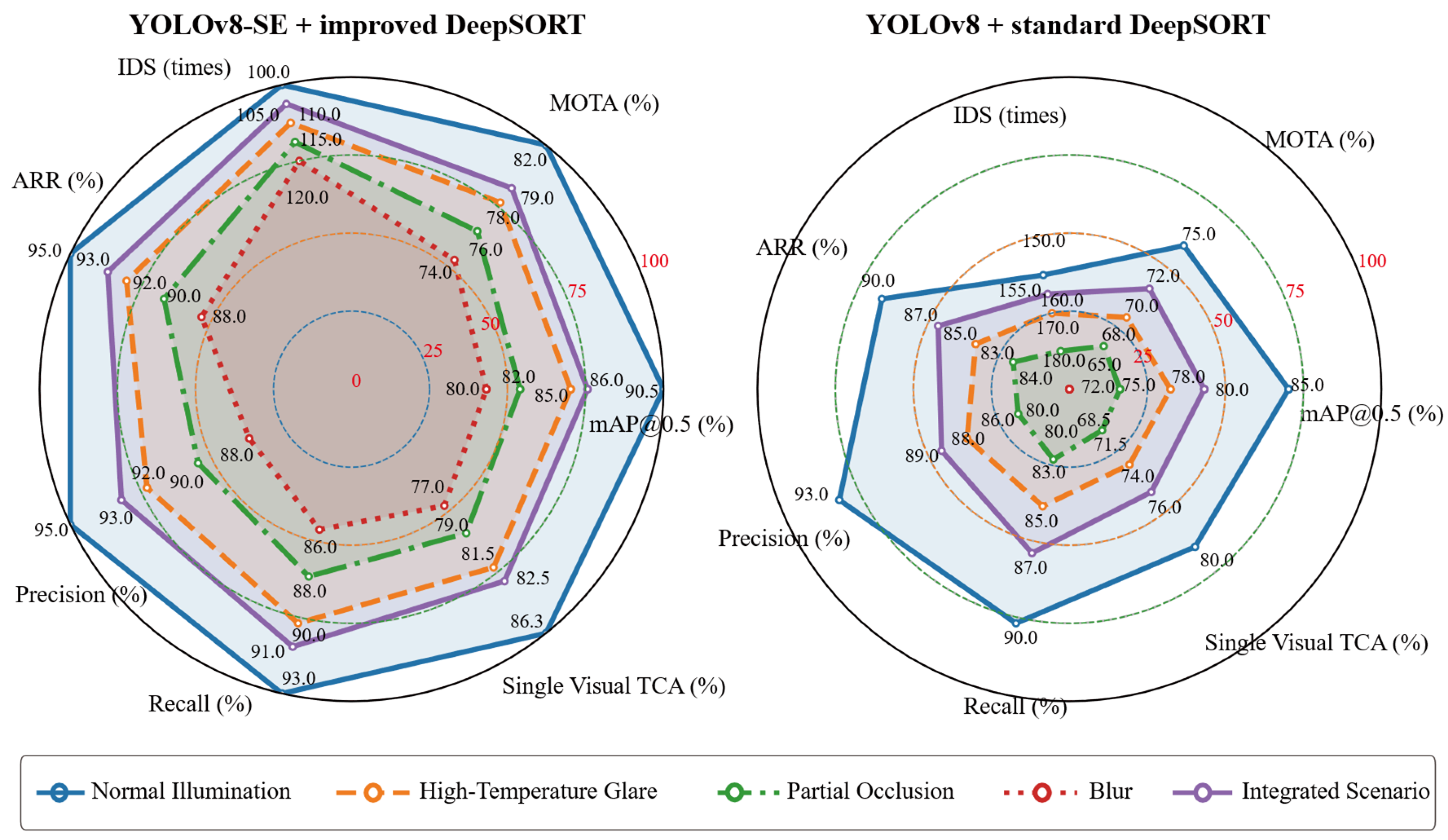

3.4. Visual Perception Performance

- (1) Min–max normalization was used to map all indicators to the [0, 100] range.

- (2) Before normalization, the number of ID switches (IDS) was reversed using the “maximum value reversal method” (reversed IDS = maximum IDS value − original IDS), so that all indicators follow the “higher value = better performance” convention.
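The normalization used for the comparison chart can be expressed in a few lines of Python. The input values in the example are illustrative, not results from this study. The convention for the case where all methods tie is an assumption.

```python
def normalize_scores(values, lower_is_better: bool = False):
    """Min-max map metric values for several methods to [0, 100].

    Metrics where lower is better (such as IDS) are first reversed as
    max(values) - value, so that higher always means better.
    """
    if lower_is_better:
        top = max(values)
        values = [top - v for v in values]
    lo, hi = min(values), max(values)
    if hi == lo:                      # all methods tie: hypothetical convention
        return [100.0] * len(values)
    return [100.0 * (v - lo) / (hi - lo) for v in values]


print(normalize_scores([10.0, 20.0, 30.0]))               # illustrative accuracy values
print(normalize_scores([12, 8, 4], lower_is_better=True))  # illustrative IDS counts
```

Reversing and then min-max scaling is equivalent to computing (max − x)/(max − min) × 100 directly. The best method therefore always maps to 100 and the worst to 0. With only a few methods compared, small absolute gaps can look large on the chart.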

- (1) The “YOLOv8-SE + improved DeepSORT” combination shows clear advantages in the normal illumination scenario: its mean average precision (mAP@0.5) reaches 90.5% (5.5% higher than the “original YOLOv8 + standard DeepSORT” combination), its multiple object tracking accuracy (MOTA) reaches 82.0% (7.0% higher than the same baseline), and IDS is reduced by 33.3%. This reflects the squeeze-and-excitation (SE) module's enhancement of key features.

- (2) YOLOv8-SE is more robust in interference scenarios: as scenario complexity increases (for example, from high-temperature glare to partial occlusion to blur), the performance of both models decreases, but YOLOv8-SE degrades less.

3.5. Multi-Source Fusion Performance

3.5.1. Comparison Between Fusion and Single-Mode Baselines

- (1) In the normal scenario without data interference, the multi-source fusion method outperforms the single-mode baselines on all indicators. For rule-based inference, SAR reaches 99.8% (2.0% higher than rule-only) and ADR reaches 99.9% (1.4% higher than rule-only), because visual data correct rule ambiguity. For visual perception, mAP@0.5 reaches 99.7% (9.2% higher than vision-only) and MOTA reaches 99.8% (17.8% higher than vision-only), because rule constraints filter out visual false detections. For overall recovery, the association recovery rate (ARR) reaches 99.0% (3–4% higher than both baselines), reflecting the advantage of bidirectional “rule-visual” verification.

- (2) The multi-source fusion method shows strong anti-interference capability when data are missing or redundant. With 20% of the data missing, the SAR of rule-only drops to 72.0% (26.4% lower than in the normal scenario), whereas the SAR of multi-source fusion remains at 96.4% (only 3.4% lower), because visual data compensate for the missing rule inputs. With 20% data redundancy, the mAP@0.5 of vision-only drops to 80.0% (10.5% lower than in the normal scenario), whereas that of multi-source fusion reaches 96.7% (only 3.0% lower), because rule constraints eliminate redundant visual noise.

- (3) In the integrated scenario with mixed interference, the multi-source fusion method keeps its core indicators above 97%: SAR = 98.2% (10.2% higher than rule-only), mAP@0.5 = 97.7% (11.7% higher than vision-only), and recall = 98.2% (9.2% higher than the baselines). This confirms its “complementary fault tolerance” in complex industrial environments: rule-based inference resolves visual ambiguity, while visual perception supplies missing rule information, forming closed-loop verification.

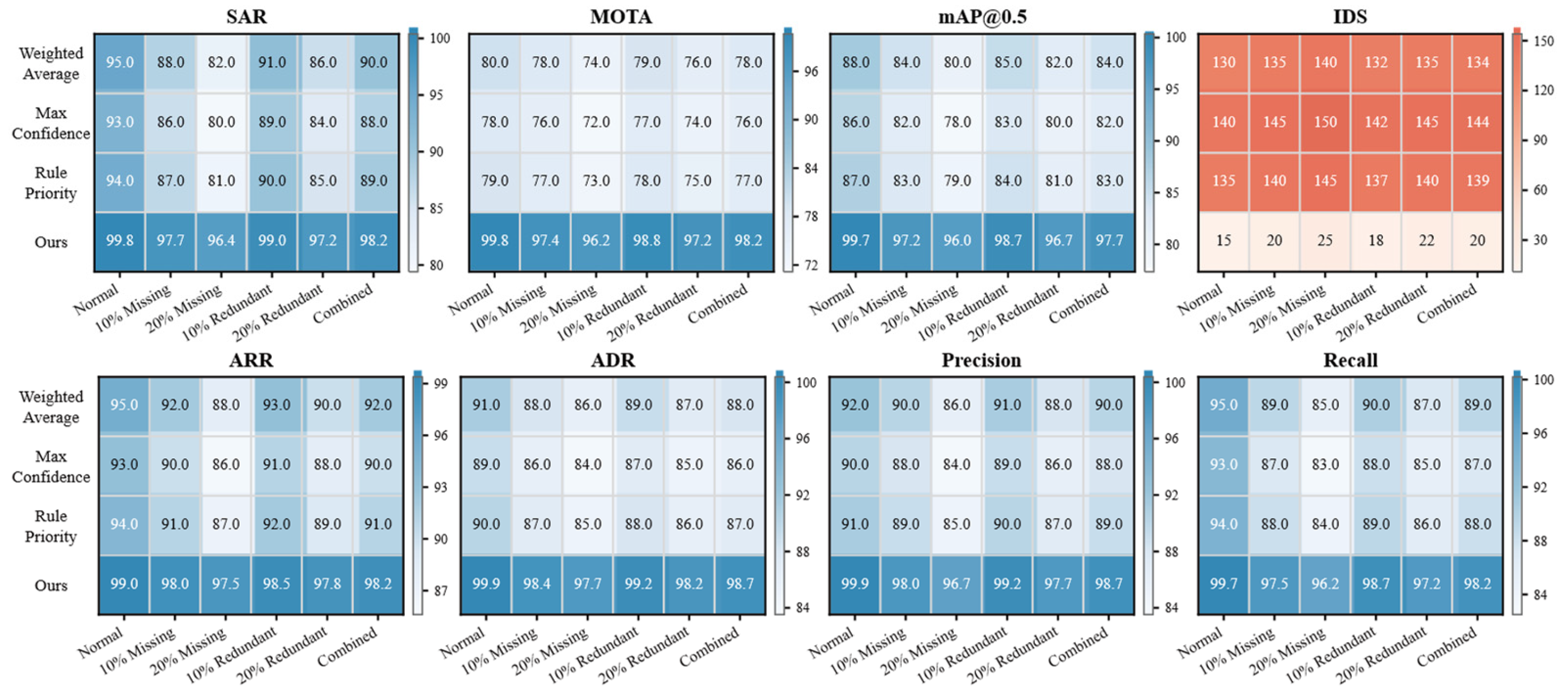

3.5.2. Comparison of Different Fusion Strategies

- (1) Our strategy achieves the best performance on all indicators. In the normal scenario, it reaches SAR = 99.8% (4.8% higher than the second-best strategy, rule priority) and ADR = 99.9% (8.9% higher than the second-best strategy, weighted average). These gains come from the “dynamic confidence weighting + spatiotemporal alignment” mechanism, which corrects rule ambiguity. With 20% data redundancy, it achieves mAP@0.5 = 96.7% (11.7% higher than max confidence) and MOTA = 97.2% (18.2% higher than weighted average), as the optimized dynamic matching strategy of DeepSORT improves ID persistence for similar materials.

- (2) Our strategy degrades markedly less than traditional strategies: from the normal scenario to the 20% missing scenario, its SAR drops by 3.4%, whereas that of the weighted average strategy drops by 13.7%. This difference stems from the “anomaly fault tolerance module” of our strategy, which suppresses the weight of interfering data through dynamic confidence allocation. It thereby avoids the sharp performance drop that traditional strategies suffer from “over-reliance on a single data source.”

4. Conclusions

- (1) To address the difficulty of state modeling (fixed path assumptions, process intersection conflicts, and the separation of inference and correction in existing SES models), the semantic modeling layer combines a process-decoupled state–event system (SES) with a Drools hierarchical rule engine and multi-anchor dynamic calibration. Experiments show a state inference accuracy (SAR) of 97.8% in the normal scenario, verifying that the three-layer reasoning system effectively resolves process intersection conflicts. In addition, multi-anchor correction reduces accumulated trajectory errors by over 25% compared with traditional static modeling, enabling reliable state inference for small-batch, multi-specification production.

- (2) To address insufficient visual tracking accuracy (weak feature extraction under high-temperature glare, frequent ID switches, and trajectory breakage under occlusion), the visual perception layer adopts YOLOv8-SE (with embedded SE channel attention) and an improved DeepSORT. Under complex working conditions (e.g., high-temperature glare and partial occlusion), this layer improves mAP@0.5 by 5.5–8.0% (reaching 90.5% in glare scenarios) and reduces ID switches (IDS) by over 30%. These results confirm that the SE module enhances weak-feature discriminability for similar materials. They also show that the dynamic matching strategy of DeepSORT preserves trajectory continuity when multiple steel pieces are conveyed in parallel.

- (3) To address unstable multi-source decision-making (temporal deviations, semantic heterogeneity, and low conflict tolerance in traditional D-S fusion), the multi-source fusion layer introduces dynamic confidence weighting, spatiotemporal alignment, and multi-frame consistency voting based on improved D-S evidence theory. In scenarios with 20% data missing, the state inference accuracy (SAR) remains at 96.4%, 9.0% higher than that of single-mode methods (rule-only: 72.0%; vision-only: 85.0%). This indicates that the spatiotemporal alignment mechanism eliminates modal deviations and the consistency voting module enhances conflict tolerance, ensuring stable decision-making under heterogeneous data interference.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Indicator Name | Abbreviation | Definition and Formula |
|---|---|---|
| State Inference Accuracy Rate | SAR | (Number of Correct States/Total Number of States) × 100% |
| Anomaly Detection Rate | ADR | (Number of Correctly Detected Anomalies/Total Number of Anomalies) × 100% |
| Anomaly Blocking Success Rate | BSR | (Number of Successfully Blocked Incorrect Updates/Number of Detected Anomalies) × 100% |
| Anomaly Localization Precision | ALP | (Number of Correctly Localized Anomalies/Number of Detected Anomalies) × 100% |
| Mean Average Precision | mAP@0.5 | Mean of Per-Class Average Precision at an Intersection over Union (IoU) Threshold of 0.5 |
| Multiple Object Tracking Accuracy | MOTA | [1 − (Missed Detections + False Detections + ID Switches)/Number of Ground-Truth Objects] × 100%, Summed over All Frames |
| Number of ID Switches | IDS | Total Number of ID Switches During the Tracking Process |
| Association Recovery Rate | ARR | (Number of Successfully Recovered Associations/Total Number of Interrupted Associations) × 100% |
| Precision | Precision | (True Positives/(True Positives + False Positives)) × 100% |
| Recall | Recall | (True Positives/(True Positives + False Negatives)) × 100% |
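Most indicators in the table share one ratio formula. MOTA follows the standard CLEAR-MOT definition, 1 − (FN + FP + IDS)/GT, with counts summed over all frames. A small Python helper makes these computations explicit. Returning 0 for an empty denominator is an assumed convention.

```python
def rate(numerator: int, denominator: int) -> float:
    """Percentage shared by SAR, ADR, BSR, ALP, ARR, precision, and recall.
    Returns 0.0 when the denominator is 0 (assumed convention)."""
    return 100.0 * numerator / denominator if denominator else 0.0


def precision_recall(tp: int, fp: int, fn: int) -> tuple:
    """Precision and recall in percent from true/false positives and false negatives."""
    return rate(tp, tp + fp), rate(tp, tp + fn)


def mota(missed, false_pos, id_switches, ground_truth) -> float:
    """CLEAR-MOT accuracy in percent from per-frame counts (equal-length lists)."""
    errors = sum(missed) + sum(false_pos) + sum(id_switches)
    return 100.0 * (1.0 - errors / sum(ground_truth))


# Illustrative counts only, not results from this study
print(rate(978, 1000), precision_recall(90, 10, 30), mota([1, 0], [0, 1], [0, 1], [10, 10]))
```

Because MOTA subtracts all error types from a single budget, it can become negative when errors outnumber ground-truth objects. In that sense, it is not a bounded percentage like the other indicators.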


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, K.; Xiao, X.; Zhang, Y.; Liu, G.; Li, X.; Zhang, F. A Multi-Source Fusion-Based Material Tracking Method for Discrete–Continuous Hybrid Scenarios. Processes 2025, 13, 3727. https://doi.org/10.3390/pr13113727
