Priority/Demand-Based Resource Management with Intelligent O-RAN for Energy-Aware Industrial Internet of Things

Abstract

1. Introduction

- We study efficient resource management in which O-RAN supports multi-vendor, scalable deployment of MEC servers and allocates resources according to task demands and service priority.

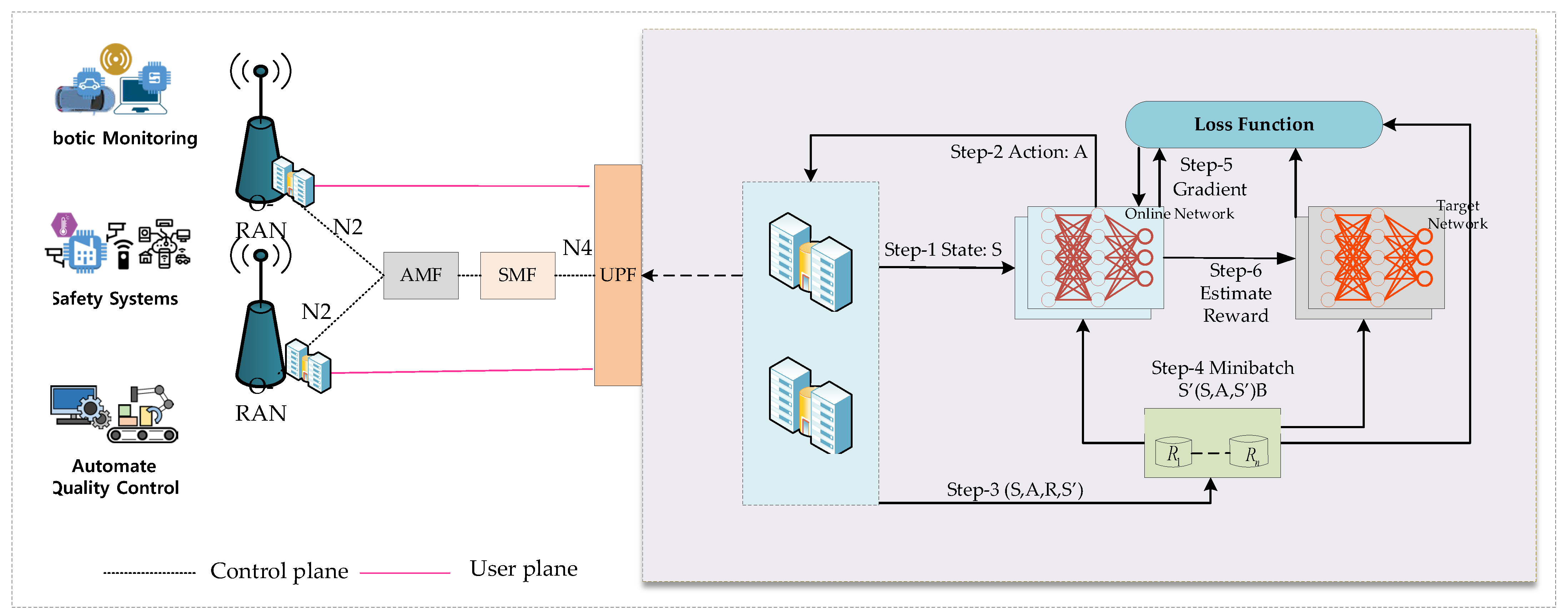

- We then formulate the resource and energy minimization problem as a Markov decision process (MDP) and design a novel distributed DRL-driven resource management policy that jointly optimizes resource allocation and priority/demand handling according to IIoT usage criteria.

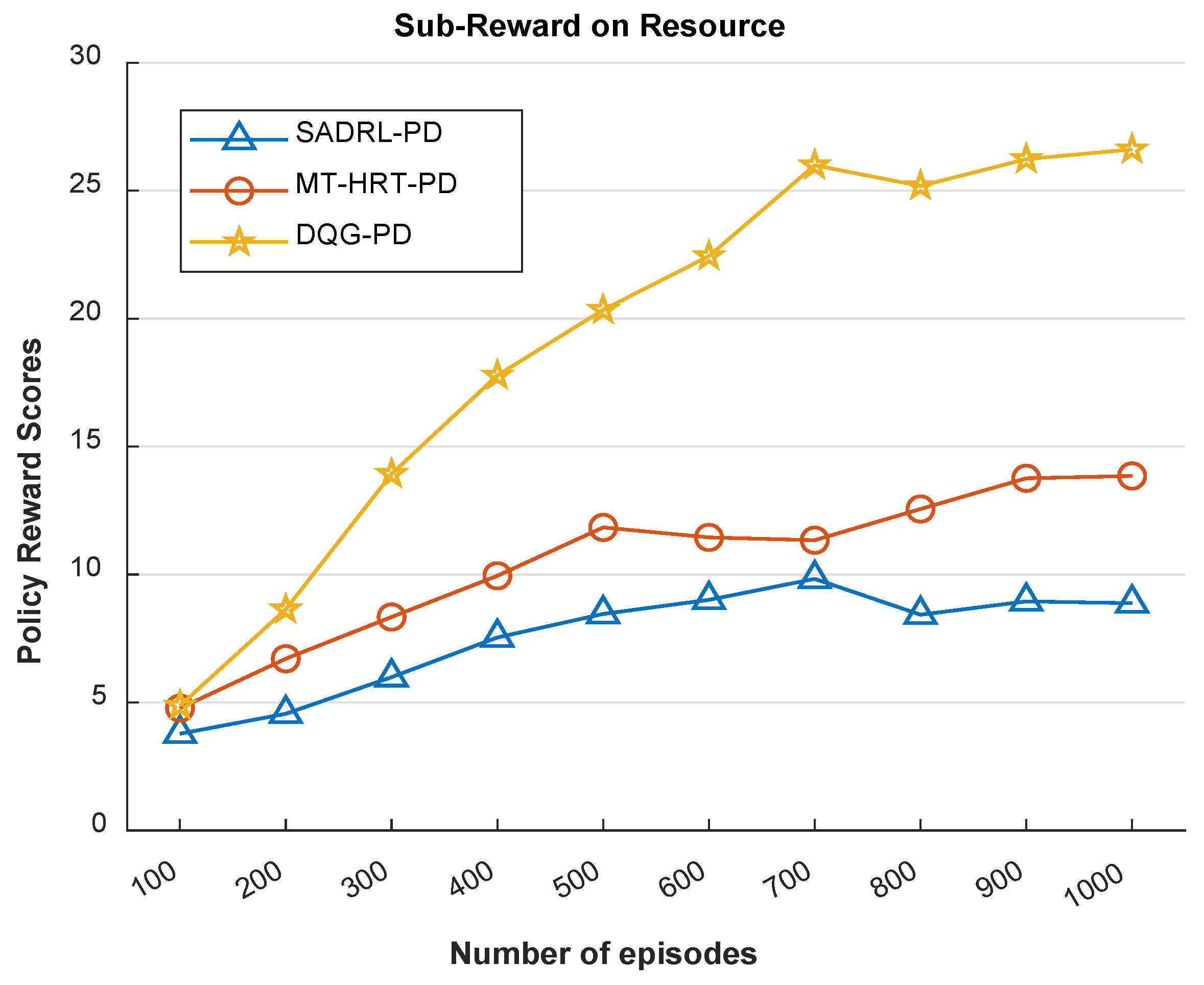

- Our proposed DQG-PD algorithm improves resource management efficiency and reduces task processing time and latency, which makes resource allocation for IIoT applications more efficient.

- We optimize network energy efficiency with a DQN approach that decouples learning into two networks (an online network and a target network), which stabilizes long-term learning while enabling rapid adaptation to immediate demands.

- Lastly, we conduct experiments showing that the proposed scheme outperforms the reference schemes.

2. Related Work

2.1. Energy Efficiency for IIoT

2.2. Optimization Approaches Based on MEC for Virtualization in Energy Utilization

2.3. DRL for MEC-Integrated O-RAN Resource Management

3. Problem Formulation and Objectives

3.1. IIoT Model and Slice Types

- QCI 3 ensures that the communication infrastructure supports the reliable and timely exchange of data critical to industrial process automation and real-time monitoring applications. Such data is therefore critical for automated control of the network environment's charging policy.

- QCI 70 ensures that mission-critical data in IIoT environments receives the highest level of service quality, characterized by ultra-reliability, low latency, high priority, enhanced security, and dedicated bandwidth.

- QCI 82 provides the resource capabilities for defining discrete automation, which involves controlling and monitoring manufacturing processes that handle individual parts or units, generally in environments such as assembly lines or robotics, where precision and real-time performance are crucial.
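The three slice types can be illustrated with a minimal sketch that orders pending tasks by their QCI profile. The priority levels and packet delay budgets are the ones listed in the paper's QCI table; the `Task` class, the task names, and the sorting rule are hypothetical, and the sketch assumes the 3GPP convention that a lower priority level value means higher scheduling priority.

```python
from dataclasses import dataclass

# Priority level and packet delay budget (PDB, in ms) per QCI, as listed in the
# paper's QCI table. Assumption: lower priority value = served first (3GPP convention).
QCI_PROFILES = {
    3: {"resource_type": "GBR", "priority": 30, "pdb_ms": 50},
    70: {"resource_type": "Non-GBR", "priority": 55, "pdb_ms": 200},
    82: {"resource_type": "Delay critical GBR", "priority": 19, "pdb_ms": 10},
}


@dataclass
class Task:
    """Hypothetical task descriptor used only for this illustration."""
    task_id: str
    qci: int
    size_mb: float


def order_by_priority(tasks):
    """Sort by QCI priority level (ascending), then by the tighter delay budget."""
    return sorted(
        tasks,
        key=lambda t: (QCI_PROFILES[t.qci]["priority"], QCI_PROFILES[t.qci]["pdb_ms"]),
    )


tasks = [
    Task("monitor", qci=3, size_mb=12.0),
    Task("safety", qci=70, size_mb=5.0),
    Task("assembly", qci=82, size_mb=20.0),
]
ordered = [t.task_id for t in order_by_priority(tasks)]
print(ordered)  # ['assembly', 'monitor', 'safety']
```

Under this ordering the discrete automation task (QCI 82) is served first because it carries the lowest priority value and the tightest delay budget.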

3.2. Designing and Formulating Network Resource Management

3.3. Communication Model

3.4. Offloading Model

3.5. Computation Model

- MEC server execution:

- Total completion time:
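As a rough illustration of how a completion time of this kind is commonly computed in MEC offloading models, the sketch below adds an uplink transmission delay to an MEC execution delay. It assumes a Shannon-capacity transmission rate and ignores the result download time; this is a standard textbook model, not necessarily the paper's exact formulation. The bandwidth, task size, and CPU frequency come from the parameter table, while the transmission power, channel gain, noise power, and cycle count are hypothetical values.

```python
import math


def transmission_rate_bps(bandwidth_hz, tx_power_w, channel_gain, noise_power_w):
    """Uplink rate under the (assumed) Shannon-capacity model."""
    snr = tx_power_w * channel_gain / noise_power_w
    return bandwidth_hz * math.log2(1.0 + snr)


def total_completion_time_s(task_bits, cpu_cycles, rate_bps, mec_cpu_hz):
    """Upload delay plus MEC execution delay; result download is ignored."""
    t_tx = task_bits / rate_bps        # time to send the task to the MEC server
    t_exec = cpu_cycles / mec_cpu_hz   # time to execute it on the MEC server
    return t_tx + t_exec


# 20 MHz bandwidth, 5 MB task, 5 GHz MEC CPU (parameter table);
# power, gain, noise, and cycle count are illustrative assumptions.
rate = transmission_rate_bps(20e6, tx_power_w=0.1, channel_gain=1e-6, noise_power_w=1e-10)
t_total = total_completion_time_s(task_bits=5 * 8e6, cpu_cycles=1e9,
                                  rate_bps=rate, mec_cpu_hz=5e9)
print(f"rate = {rate / 1e6:.1f} Mbit/s, completion time = {t_total:.3f} s")
```

Keeping the two delay terms separate makes it easy to see whether a task is limited by the radio link or by the MEC server's CPU.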

3.6. Objective Model on Resource Management

4. DQN-Based Priority/Demanding Resource Management

4.1. Markov Decision Process Elements

- (1) State space: in each time slot, each communication link and MEC computation node observes the network state from the environment. The current environment state includes the measured data transmission rate between the IIoT device and the MEC server, the status of all resources, the offloading indicator (1 if the IIoT device offloads its task to the MEC server, 0 otherwise), and the computation task model of the n-th device.

- (2) Action space: the agent makes decisions based on the current state of the environment. Its goal is to maximize resource utilization (bandwidth and computation) while minimizing the overall average service delay and the task execution time. At each time step t, the action decides whether to offload the t-th task and how much bandwidth and computation resource to allocate to it for execution on the MEC server. The action therefore comprises the channel bandwidth allocation and the offloading selection for the task, which equals 1 when the task is executed on the MEC server and 0 when it is executed locally. The agent takes an action for the tasks in each time step and receives a reward from the environment. Note that in each decision epoch, an action also affects the state in the next time slot.

- (3) Reward: RL aims to maximize the cumulative reward obtained from good actions. Our reward function is designed to reflect improvements in priority-aware resource management and energy efficiency.
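The three MDP elements can be sketched as plain data structures. The field names, the weights, and in particular the reward shape below are hypothetical illustrations under the description above (delay and energy are penalized, scaled by task priority); they are not the paper's exact definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class State:
    """Hypothetical state layout for one decision step."""
    rate_mbps: float       # data transmission rate, IIoT device to MEC server
    free_bandwidth: float  # fraction of MEC bandwidth still available (0..1)
    free_cpu: float        # fraction of MEC CPU still available (0..1)
    task_size_mb: float    # size of the pending task
    priority: int          # QCI priority level of the pending task


@dataclass(frozen=True)
class Action:
    """Hypothetical action layout for one decision step."""
    offload: int            # 1 = execute on the MEC server, 0 = execute locally
    bandwidth_share: float  # fraction of channel bandwidth given to the task
    cpu_share: float        # fraction of MEC CPU given to the task


def reward(delay_s, energy_j, deadline_s, priority_weight,
           w_delay=1.0, w_energy=1.0, miss_penalty=1.0):
    """Assumed reward: negative weighted cost, with an extra penalty on a deadline miss."""
    cost = w_delay * delay_s + w_energy * energy_j
    if delay_s > deadline_s:
        cost += miss_penalty
    return -priority_weight * cost


r_on_time = reward(delay_s=0.04, energy_j=0.5, deadline_s=0.05, priority_weight=1.0)
r_late = reward(delay_s=0.06, energy_j=0.5, deadline_s=0.05, priority_weight=1.0)
print(r_on_time, r_late)
```

With this shape, an action that meets the delay budget always earns a higher reward than one that misses it at the same energy cost, and a larger `priority_weight` amplifies the difference for high-priority slices.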

4.2. DQN-Based Solutions

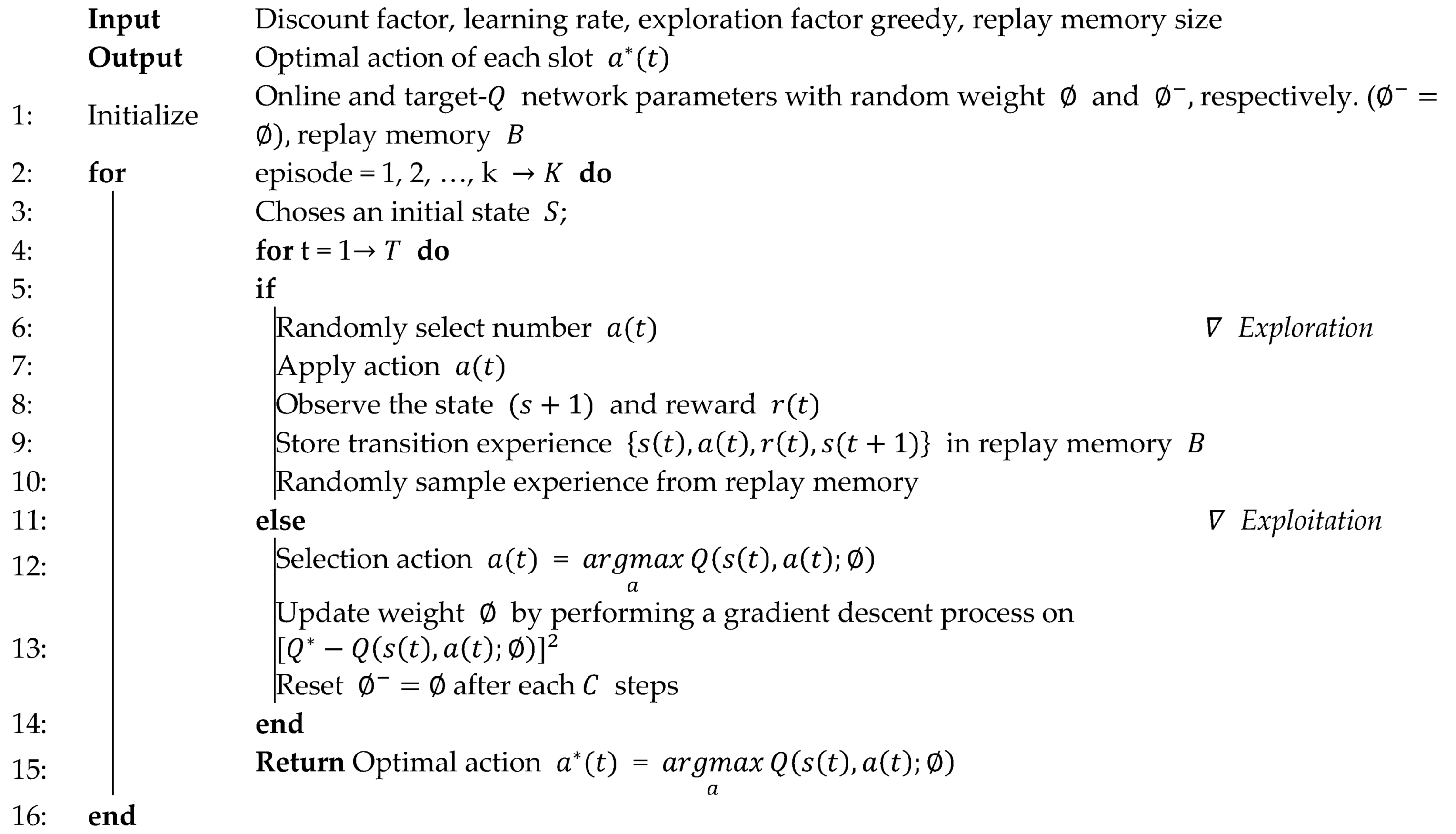

Algorithm 1: DQG-PD algorithm for priority/demand resources in the MEC server
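The interplay of the online network, the target network, and the replay buffer in a generic DQN can be sketched as follows. This is a minimal, generic DQN loop and not a reproduction of DQG-PD: it assumes a linear Q-function instead of a ReLU network to stay short, uses per-sample gradient steps, and runs on a toy environment with random states. The discount factor, learning rate, batch size, and buffer size are taken from the parameter table; `sync_every` and the toy reward are hypothetical.

```python
from collections import deque

import numpy as np

GAMMA, LR, BATCH, BUFFER = 0.95, 0.001, 32, 3000  # values from the parameter table


class LinearQ:
    """Linear Q-function Q(s) = W s + b (a simplification of a ReLU network)."""

    def __init__(self, state_dim, n_actions, rng):
        self.W = rng.normal(0.0, 0.1, size=(n_actions, state_dim))
        self.b = np.zeros(n_actions)

    def q_values(self, s):
        return self.W @ s + self.b

    def copy_from(self, other):
        self.W = other.W.copy()
        self.b = other.b.copy()


class DQNAgent:
    def __init__(self, state_dim, n_actions, sync_every=50, seed=0):
        self.rng = np.random.default_rng(seed)
        self.online = LinearQ(state_dim, n_actions, self.rng)
        self.target = LinearQ(state_dim, n_actions, self.rng)
        self.target.copy_from(self.online)   # start with identical weights
        self.buffer = deque(maxlen=BUFFER)   # replay memory
        self.n_actions = n_actions
        self.sync_every = sync_every
        self.steps = 0

    def act(self, s, epsilon):
        """Epsilon-greedy action selection using the online network."""
        if self.rng.random() < epsilon:
            return int(self.rng.integers(self.n_actions))
        return int(np.argmax(self.online.q_values(s)))

    def remember(self, s, a, r, s_next, done):
        self.buffer.append((s, a, r, s_next, done))

    def learn(self):
        if len(self.buffer) < BATCH:
            return
        idx = self.rng.choice(len(self.buffer), size=BATCH, replace=False)
        for i in idx:
            s, a, r, s_next, done = self.buffer[int(i)]
            # TD target uses the slowly updated target network for stability.
            y = r if done else r + GAMMA * np.max(self.target.q_values(s_next))
            td_error = self.online.q_values(s)[a] - y
            self.online.W[a] -= LR * td_error * s
            self.online.b[a] -= LR * td_error
        self.steps += 1
        if self.steps % self.sync_every == 0:
            self.target.copy_from(self.online)  # periodic hard sync


# Toy loop: random states, and action 1 ("offload") is always rewarded.
agent = DQNAgent(state_dim=4, n_actions=2)
env_rng = np.random.default_rng(1)
s = env_rng.random(4)
for t in range(200):
    a = agent.act(s, epsilon=0.1)
    r = 1.0 if a == 1 else 0.0
    s_next = env_rng.random(4)
    agent.remember(s, a, r, s_next, False)
    agent.learn()
    s = s_next
```

The online network is updated at every learning step, while the target network changes only every `sync_every` steps, which is what keeps the TD targets from shifting under the learner.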

5. Simulation and Discussions

5.1. Parameter Settings

5.2. Performance Evaluation

- The high acceptance ratio demonstrates the controller’s scalability and ability to effectively accommodate a larger number of service requests.

- The restoration ratio measures how well the system recovers from service failures, ensuring uninterrupted service and high availability, particularly under a high volume of incoming task requests.

- Overall, we summarize performance by the completion ratio, which demonstrates the scheme's effectiveness in completing tasks even under heavy load.
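The three ratios discussed above can be computed from a per-request log as in the sketch below. The log format and the exact definitions (accepted over total, restored over failed, completed over total) are assumptions made for illustration, since the text does not spell out the formulas.

```python
def ratio(numerator, denominator):
    """Safe division that returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def summarize(requests):
    """requests: list of dicts with boolean keys 'accepted', 'failed', 'restored', 'completed'."""
    total = len(requests)
    accepted = sum(r["accepted"] for r in requests)
    failed = sum(r["failed"] for r in requests)
    restored = sum(r["failed"] and r["restored"] for r in requests)
    completed = sum(r["completed"] for r in requests)
    return {
        "acceptance_ratio": ratio(accepted, total),
        "restoration_ratio": ratio(restored, failed),
        "completion_ratio": ratio(completed, total),
    }


# Hypothetical log of four service requests.
log = [
    {"accepted": True, "failed": False, "restored": False, "completed": True},
    {"accepted": True, "failed": True, "restored": True, "completed": True},
    {"accepted": True, "failed": True, "restored": False, "completed": False},
    {"accepted": False, "failed": False, "restored": False, "completed": False},
]
metrics = summarize(log)
print(metrics)
# {'acceptance_ratio': 0.75, 'restoration_ratio': 0.5, 'completion_ratio': 0.5}
```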

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| QCI Index | Resource Type | Priority Level | PDB | PELR | Industry Application Use Case |
|---|---|---|---|---|---|
| QCI-3 Process Automation and Monitoring | GBR | 30 | 50 ms | | Robotic monitoring |
| QCI-70 Mission Critical Data | Non-GBR | 55 | 200 ms | | Safety systems |
| QCI-82 Discrete Automation | Delay critical GBR | 19 | 10 ms | | Automated quality control |

| Symbol | Description |
|---|---|
| | Offloading decision from IIoT device to MEC: 1 if offloaded, 0 otherwise |
| | -th |
| | Data transmission rate from the n-th IIoT device to the m-th MEC server |
| | Transmission power from device n to the m-th MEC server |
| | Channel bandwidth |
| | Ground interference power consumption |
| | Processing power required by the v-th VNF |
| | Utilization of the v-th VNF |
| | Total bandwidth of the m-th MEC server |
| | Satisfaction of latency |
| | Upper bound on total resource usage relative to the capacity of each MEC server |
| | Execution of the n-th task at the MEC server |
| | Time accepted |

| Parameter | Value |
|---|---|
| Number of MEC servers | 4 |
| Number of IIoT devices | [50, 100, 150] |
| Task size | [5, 30] MB |
| Upper-bound bandwidth | 20 MHz |
| CPU frequency of MEC server | [5, 20] GHz |
| Maximum link latency | 1.5 ms |
| Number of time slots | 1000 |
| Replay memory buffer size | 3000 |
| Activation function | ReLU |
| Discount factor on reward | 0.95 |
| Learning rate | 0.001 |
| Batch size | 32 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ros, S.; Kang, S.; Song, I.; Cha, G.; Tam, P.; Kim, S. Priority/Demand-Based Resource Management with Intelligent O-RAN for Energy-Aware Industrial Internet of Things. Processes 2024, 12, 2674. https://doi.org/10.3390/pr12122674