Applying a Novel Image Recognition Curve-Fitting Control Strategy Combined with a Cloud Monitoring Technique into an Electric Self-Driving Vehicle (ESDV) to Improve Its Operation Efficiency

Abstract

1. Introduction

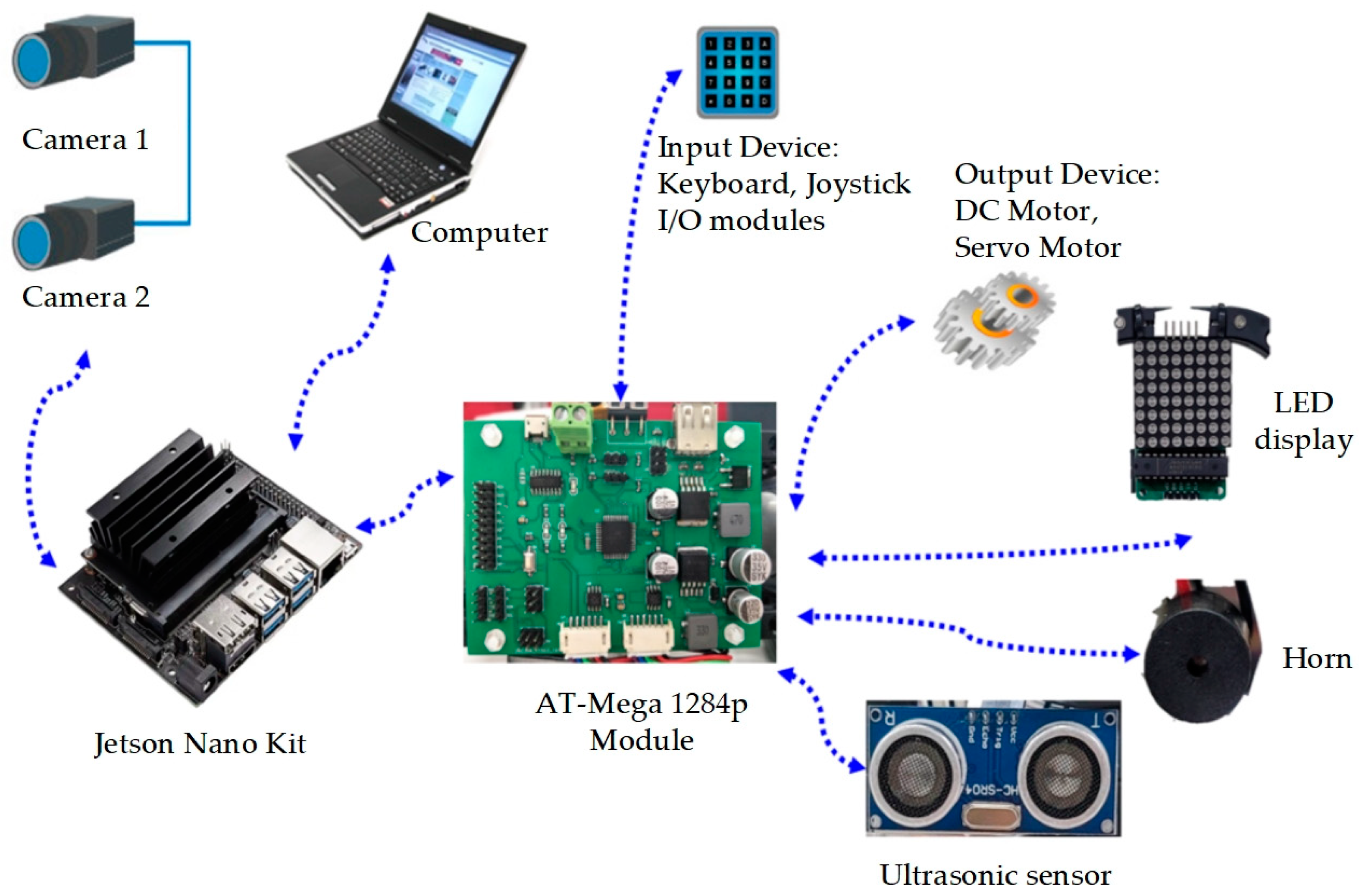

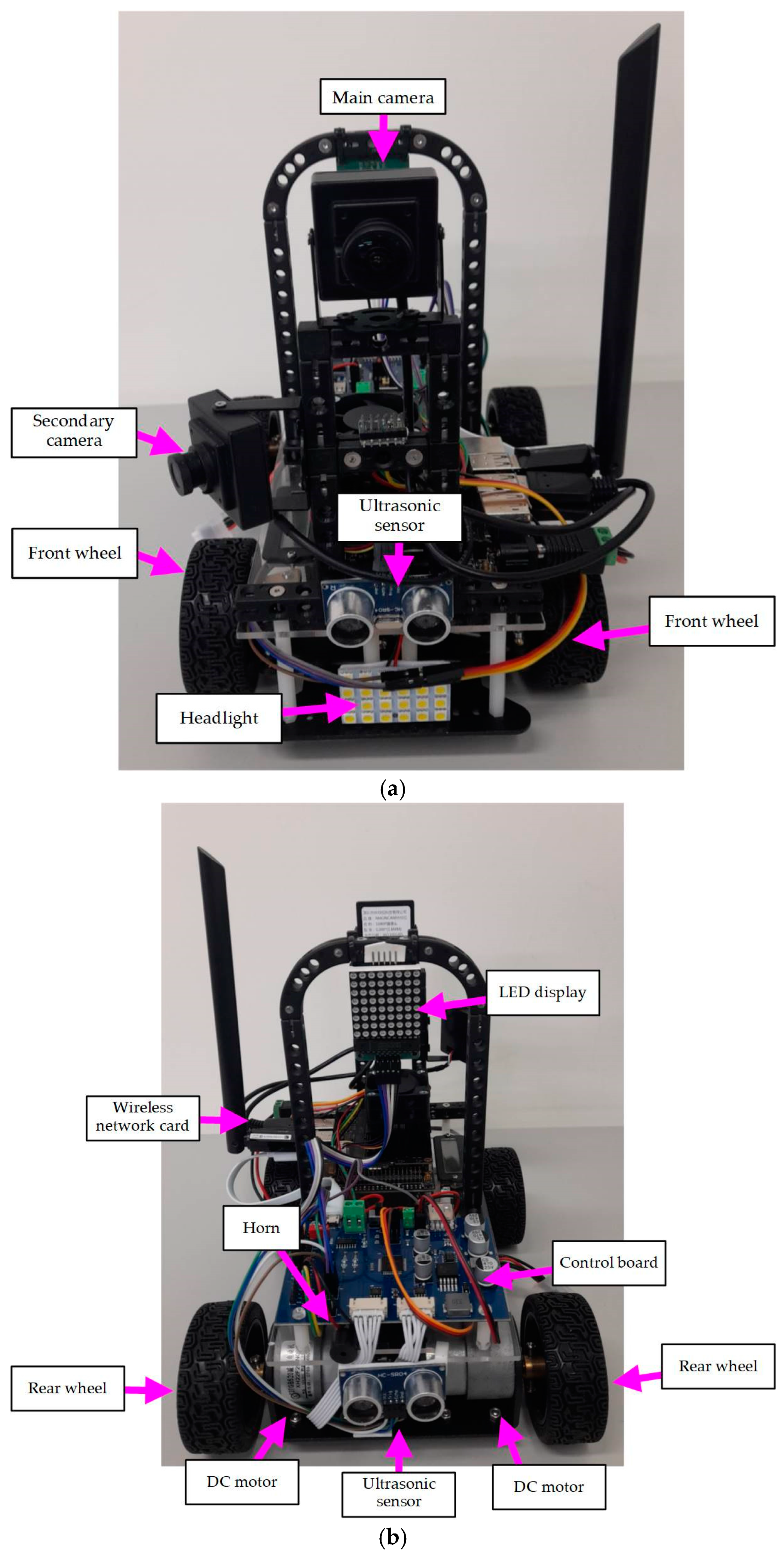

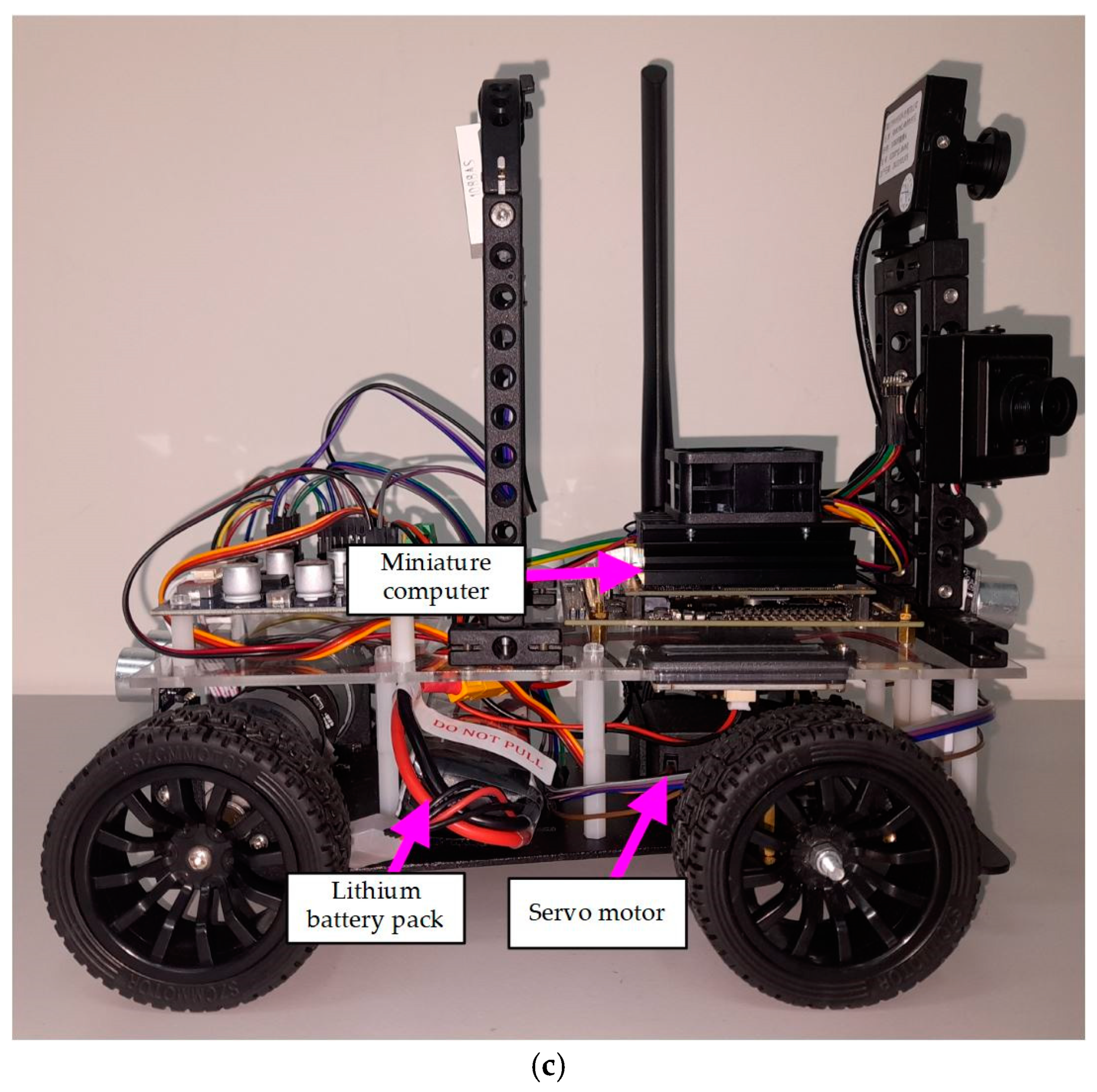

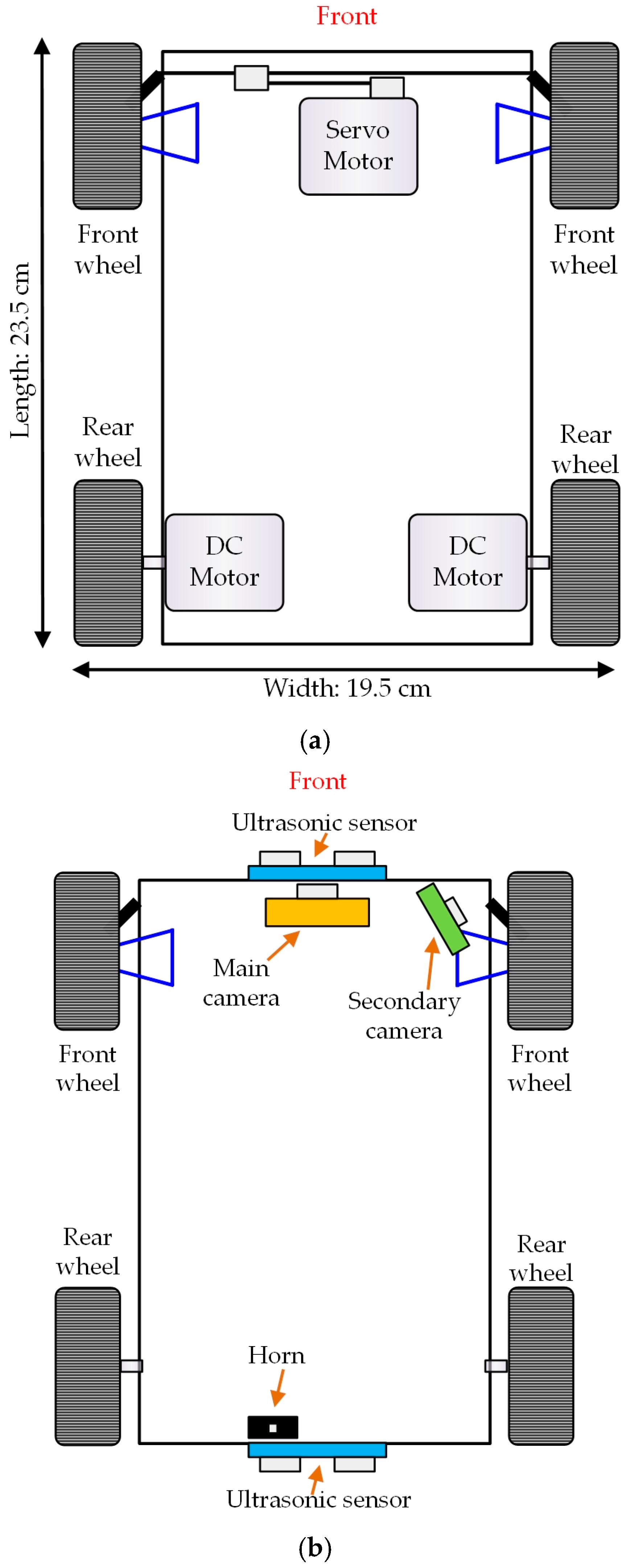

2. Overview of Electric Self-Driving Vehicle System

2.1. The Electric Self-Driving Vehicle Architecture and Component Description

2.2. Image Recognition System Description

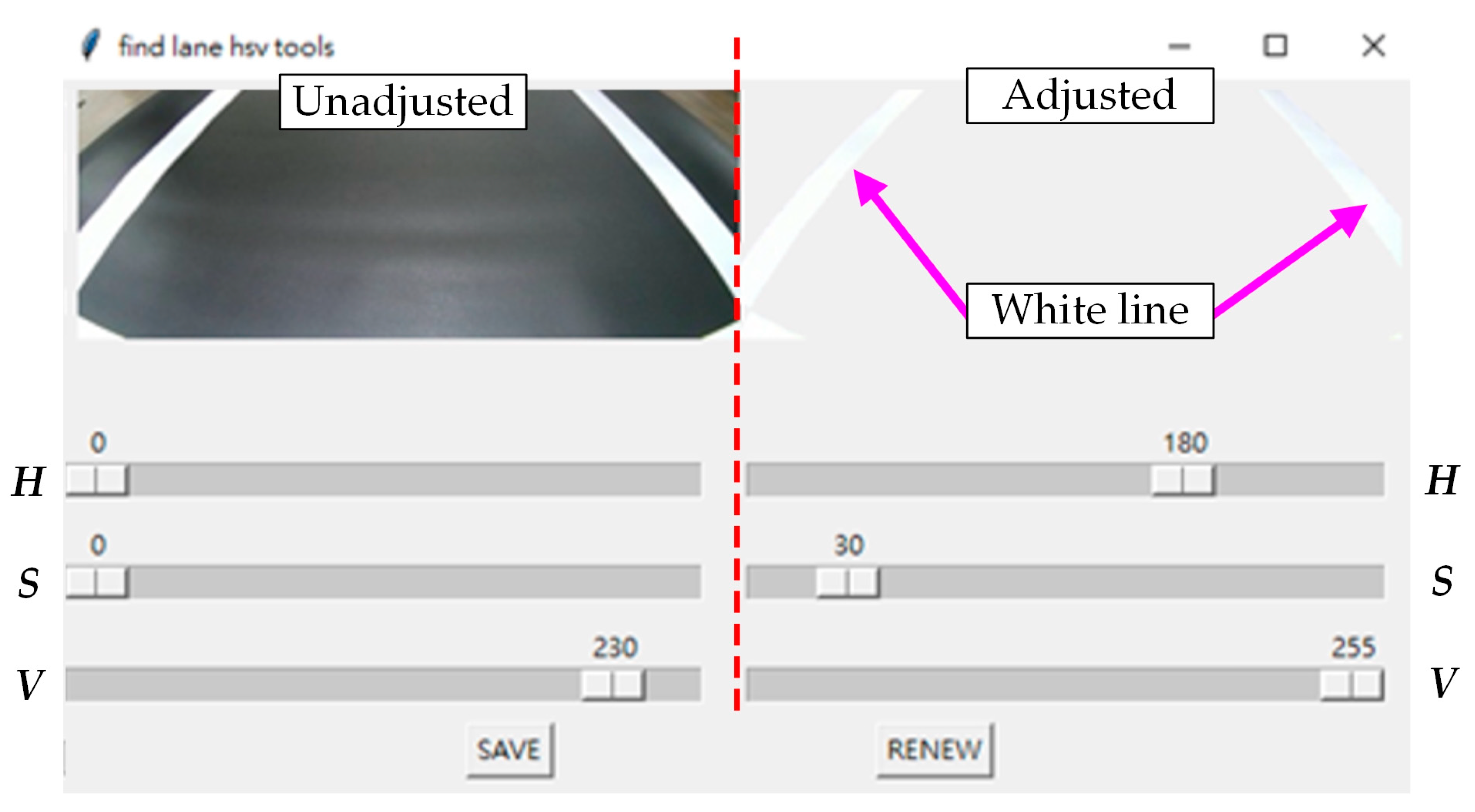

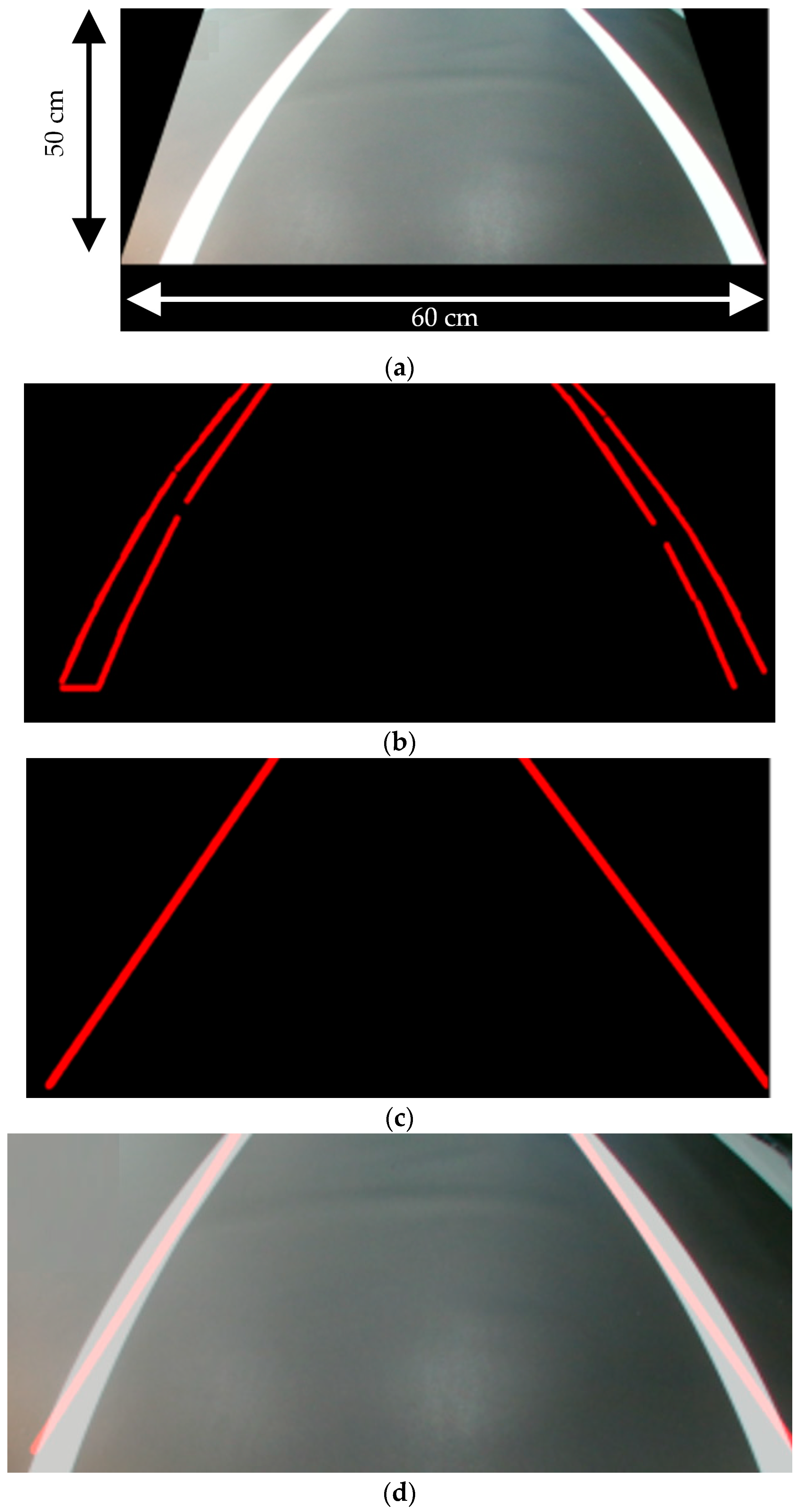

2.2.1. Image Adjustment and Lane Identification

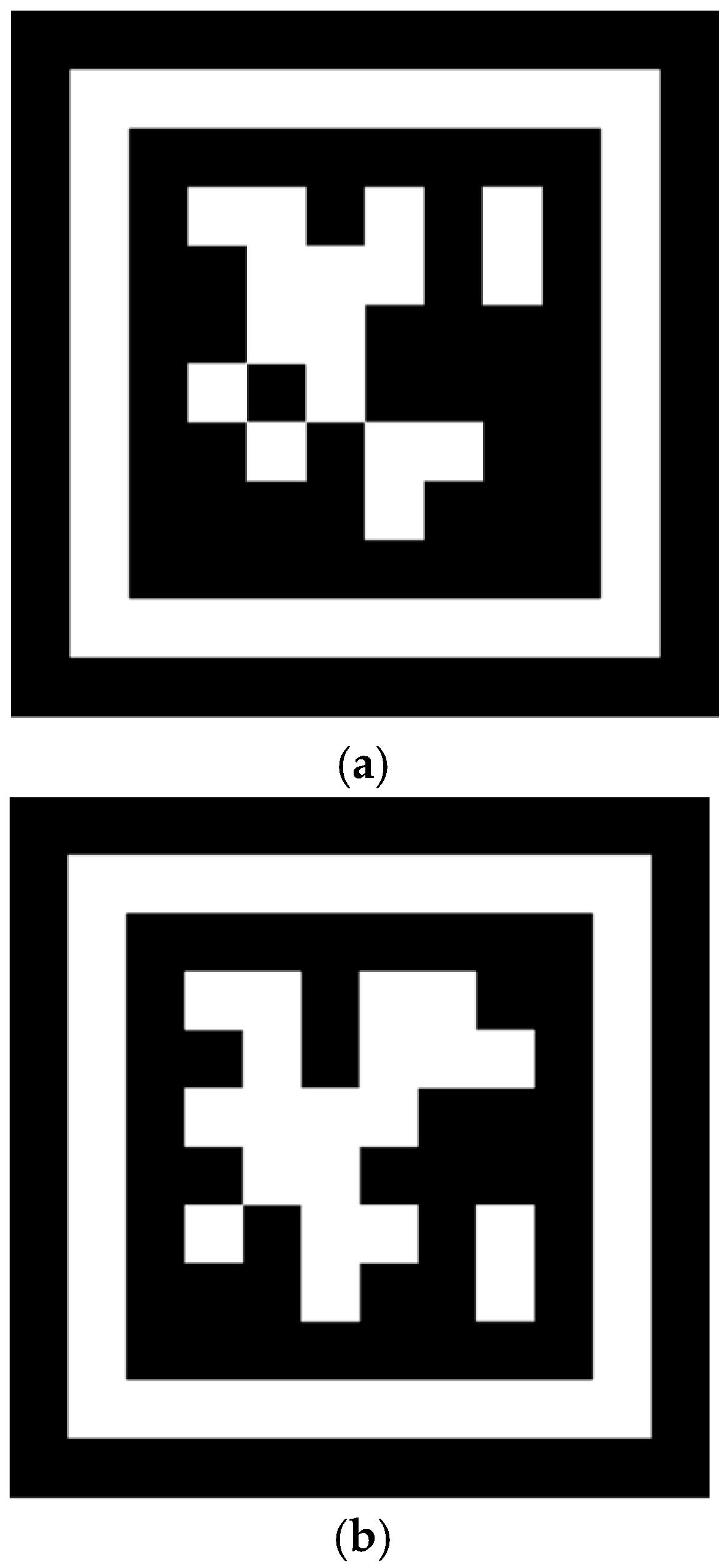

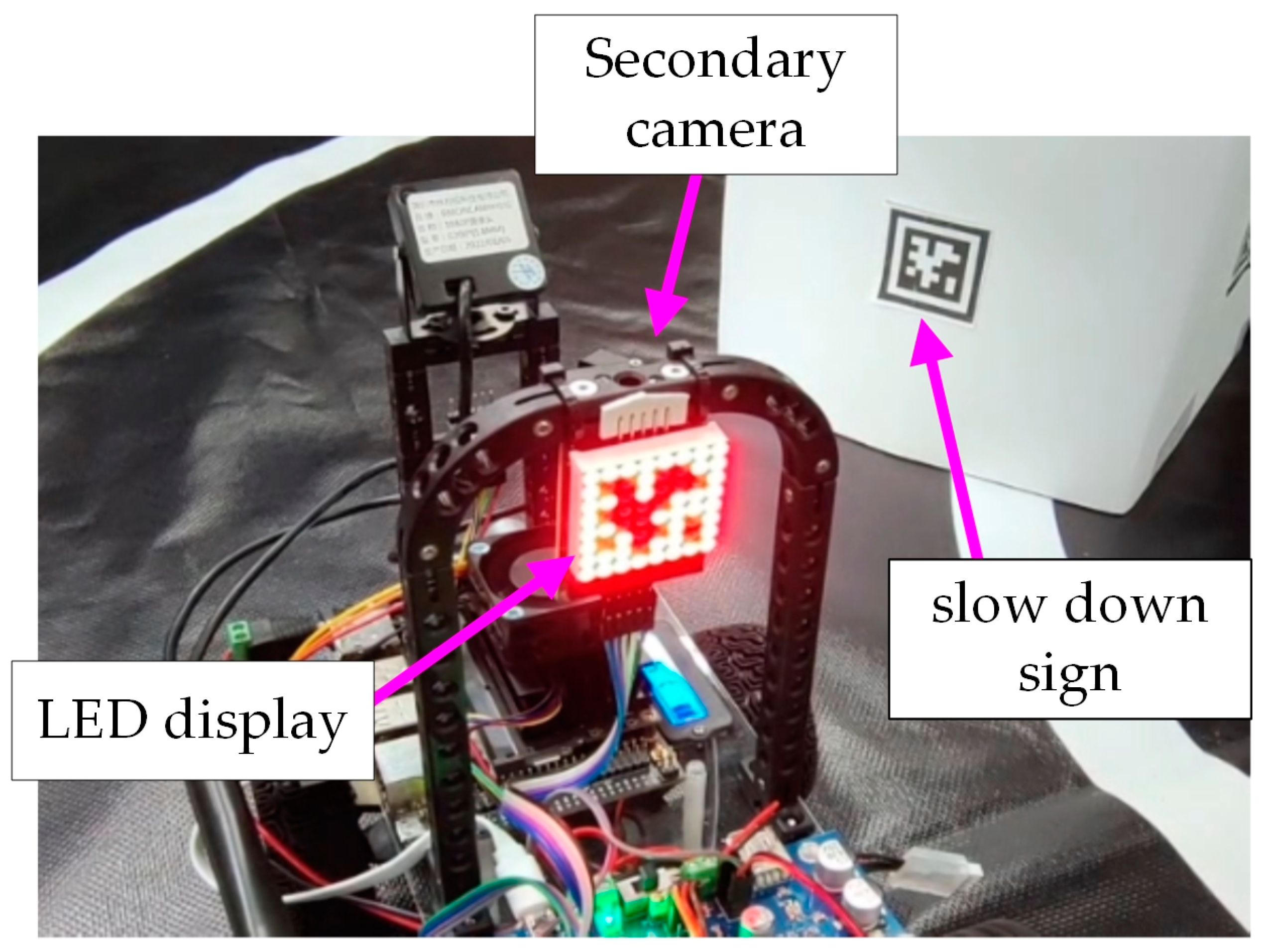

2.2.2. Sign Mark Recognition

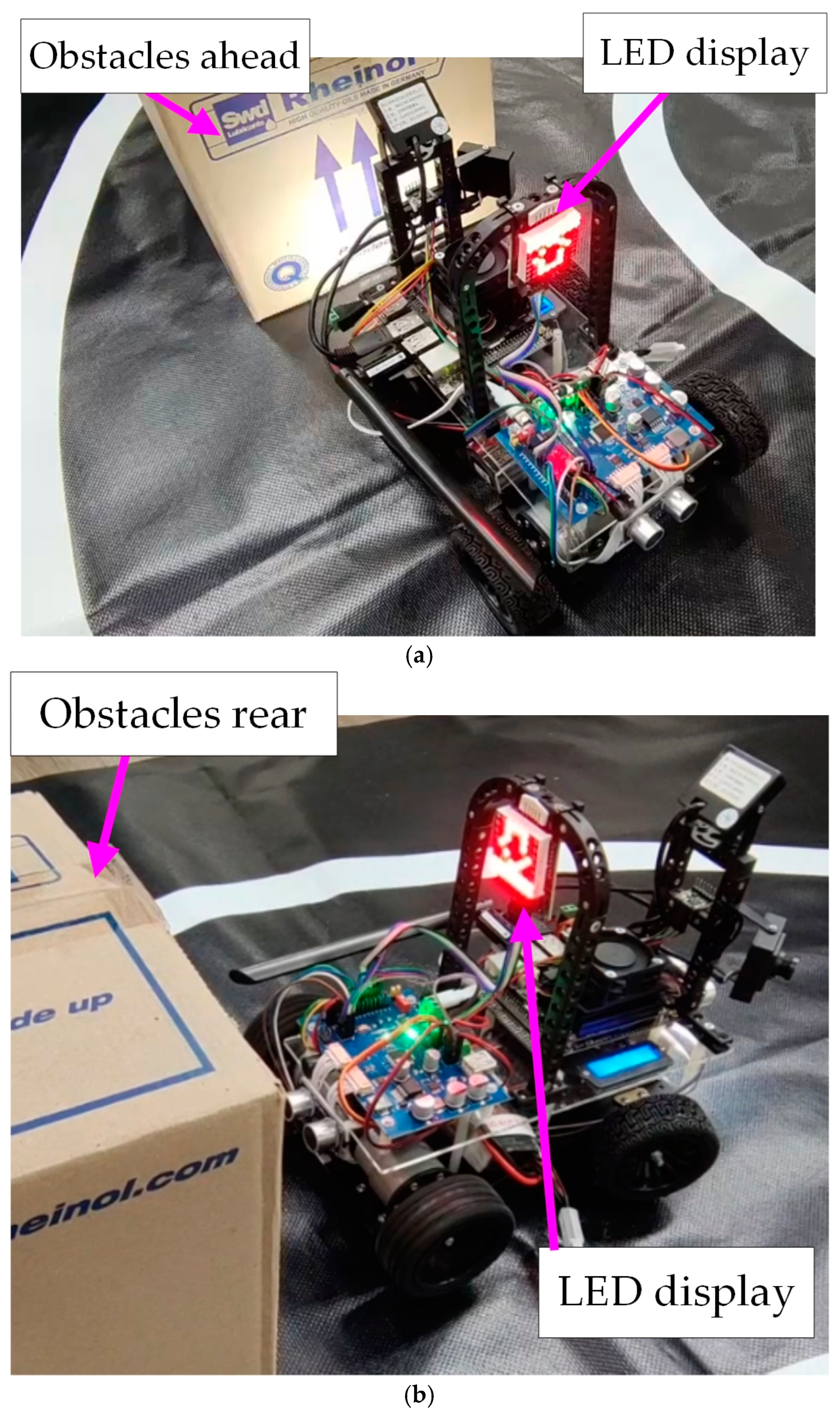

2.3. Alarm System Description

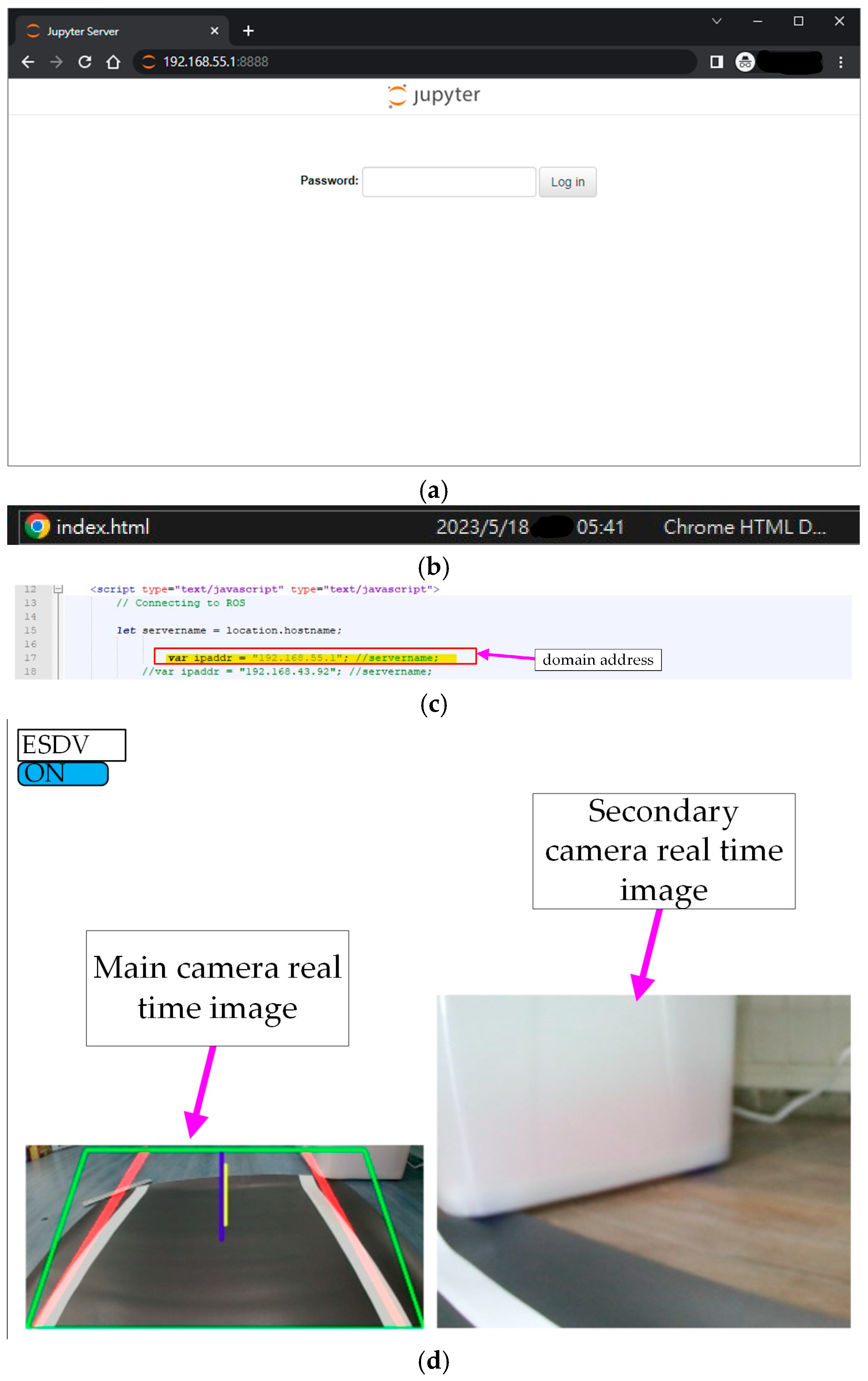

2.4. Cloud Monitoring Web System Description

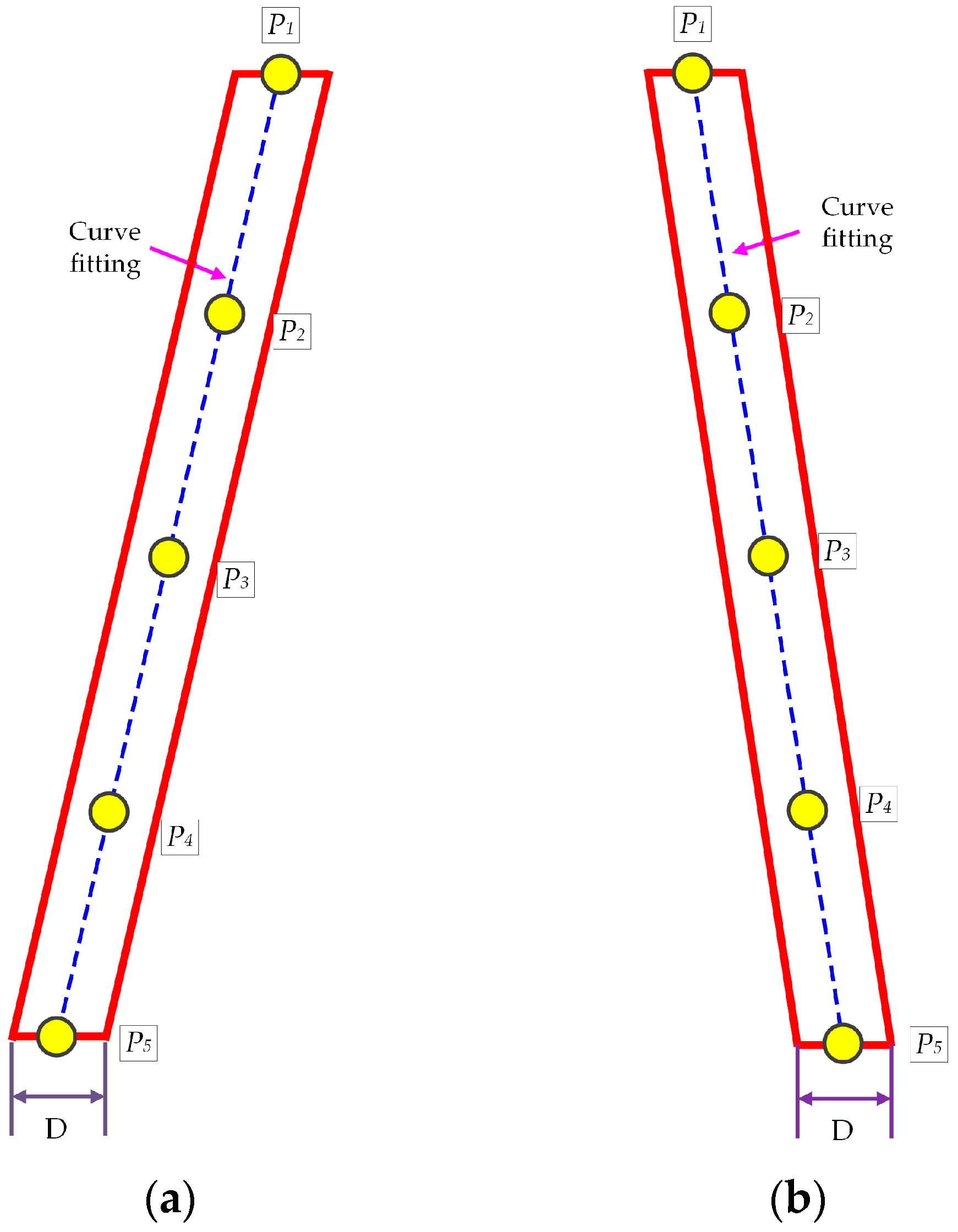

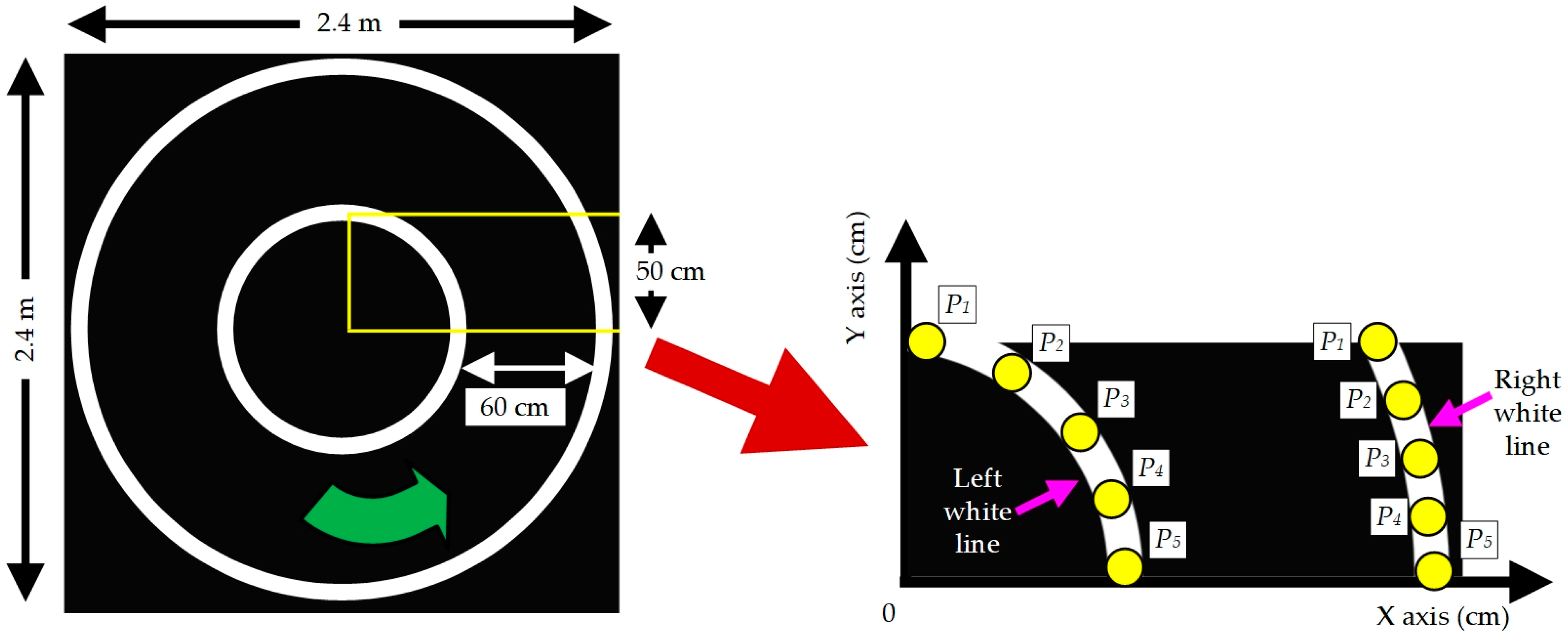

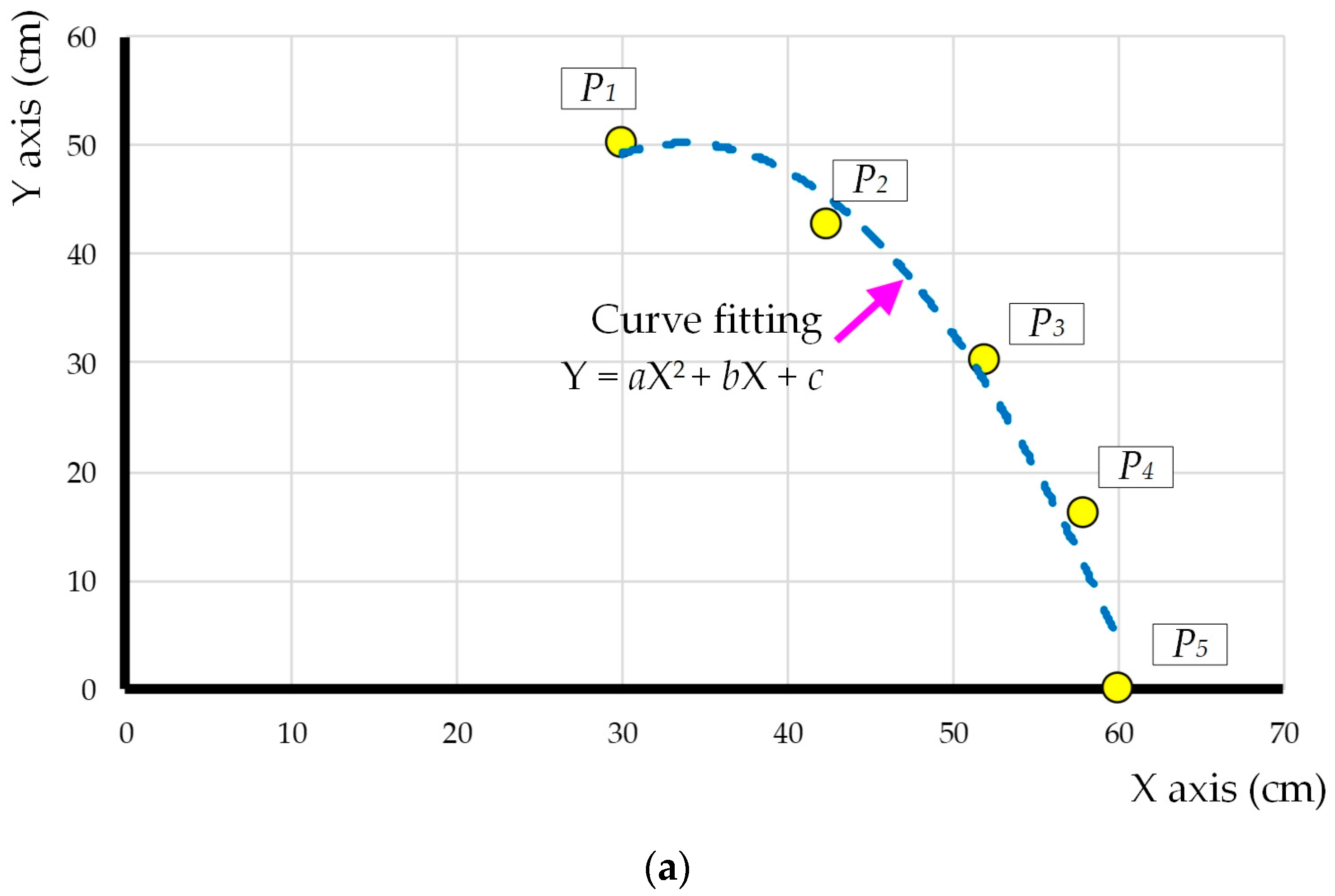

3. Proposed Image Recognition Curve-Fitting Control Technology

4. Experimental Results

5. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Perozzi, G.; Rath, J.J.; Sentouh, C.; Floris, J.; Popieul, J.-C. Lateral Shared Sliding Mode Control for Lane Keeping Assist System in Steer-by-Wire Vehicles: Theory and Experiments. IEEE Trans. Intell. Veh. 2023, 8, 3073–3082.

- Garcia-Garcia, J.A.; Arévalo, C.; Meidan, A.; Morillo-Baro, E.; Escalona, M.J. gPROFIT: A Tool to Assist the Automatic Extraction of Business Knowledge from Legacy Information Systems. IEEE Access 2021, 9, 94934–94952.

- Zhang, J.; Akinsolu, M.O.; Liu, B.; Vandenbosch, G.A.E. Automatic AI-Driven Design of Mutual Coupling Reducing Topologies for Frequency Reconfigurable Antenna Arrays. IEEE Trans. Antennas Propag. 2021, 69, 1831–1836.

- Gao, Y.; Ji, W.; Zhao, X. SOC Estimation of E-Cell Combining BP Neural Network and EKF Algorithm. Processes 2022, 10, 1721.

- Shi, D.; Li, S.; Liu, K.; Wang, Y.; Liu, R.; Guo, J. Adaptive Energy Management Strategy Based on Intelligent Prediction of Driving Cycle for Plug-In Hybrid Electric Vehicle. Processes 2022, 10, 1831.

- Zhang, Y.; Yan, S.; Yin, W.; Wu, C.; Ye, J.; Wu, Y.; Liu, L. HOMER-Based Multi-Scenario Collaborative Planning for Grid-Connected PV-Storage Microgrids with Electric Vehicles. Processes 2023, 11, 2408.

- Feng, J.; Chang, X.; Fan, Y.; Luo, W. Electric Vehicle Charging Load Prediction Model Considering Traffic Conditions and Temperature. Processes 2023, 11, 2256.

- Liu, Z.; Cai, Y.; Wang, H.; Chen, L.; Gao, H.; Jia, Y.; Li, Y. Robust Target Recognition and Tracking of Self-Driving Cars With Radar and Camera Information Fusion Under Severe Weather Conditions. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6640–6653.

- Bastos, D.; Brandão, A.; Lorences-Riesgo, A.; Monteiro, P.P.; Oliveira, A.S.R.; Pereira, D.; Olyaei, H.Z.; Drummond, M.V. Time-Frequency Range Estimation Method for Pulsed LiDAR. IEEE Trans. Veh. Technol. 2023, 72, 1429–1437.

- Soman, S.P.; Kumar, G.S.; Abubeker, K.M. Internet-of-Things-Assisted Artificial Intelligence-Enabled Drowsiness Detection Framework. IEEE Sens. Lett. 2023, 7, 6003704.

- Somerset, W.E.; Feeney, A.; Kang, L.; Li, Z.; Dixon, S. Design and Dynamics of Oil Filled Flexural Ultrasonic Transducers for Elevated Pressures. IEEE Sens. J. 2021, 22, 12673–12680.

- Zhang, J.; Xu, Z.; Wu, J.; Chen, Q.; Wang, F. Lightweight intelligent autonomous unmanned vehicle based on deep neural network in ROS system. In Proceedings of the 2022 IEEE 5th International Conference on Information Systems and Computer Aided Education (ICISCAE), Dalian, China, 23–25 September 2022; pp. 679–684.

- Das, S.; Mantri, T.; Tembhurkar, R. Trajectory Planning and Maneuver Control to Assist Lane Change. In Proceedings of the 2021 8th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 26–27 August 2021; pp. 191–197.

- Saranya, M.; Archana, N.; Reshma, J.; Sangeetha, S.; Varalakshmi, M. Object detection and lane changing for self driving car using CNN. In Proceedings of the 2022 International Conference on Communication, Computing and Internet of Things (IC3IoT), Chennai, India, 10–11 March 2022; pp. 1–7.

- Afor, M.E.; Ravulakollu, K.; Chhabra, M.; Sharma, P.; Dewingong, T.F.; Dewan, R. Automated Sign Detection in a Self-Driving Car. In Proceedings of the 2022 9th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 23–25 March 2022; pp. 272–276.

- Chaitra, P.G.; Deepthi, V.; Gautami, S.; Suraj, H.M.; Kumar, N. Convolutional Neural Network based Working Model of Self Driving Car-A Study. In Proceedings of the International Conference on Electronics and Sustainable Communication Systems (ICESC 2020), Coimbatore, India, 2–4 July 2020; pp. 645–650.

- Chen, K. The Design of Cruise control and lane-changing control for an autonomous vehicle. In Proceedings of the 2021 3rd International Academic Exchange Conference on Science and Technology Innovation (IAECST), Guangzhou, China, 10–12 December 2022; pp. 957–960.

- Kannapiran, S.; Berman, S. Go-CHART: A miniature remotely accessible self-driving car robot. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 2265–2272.

- Sai, P.G.; Chandu, S.S.; Aishwarya, S.C.; Revanth, V.; Geetha, G. Detection of Lanes and Objects Using Deep Learning Techniques. In Proceedings of the International Conference on Inventive Research in Computing Applications (ICIRCA 2022), Coimbatore, India, 21–23 September 2022; pp. 446–450.

- Yogitha; Subhashini, S.; Pandiyan, D.; Sampath, S.; Sunil, S. Review on Lane and Object Detection for Accident Prevention in Automated Cars. In Proceedings of the Second International Conference on Artificial Intelligence and Smart Energy (ICAIS-2022), Coimbatore, India, 23–25 February 2022; pp. 1794–1800.

- Nguyen, H.-H.-N.; Pham, D.-H.; Le, T.-L.; Le, M.-H. Lane Keeping and Navigation of a Self-driving RC Car Based on Image Semantic Segmentation and GPS Fusion. In Proceedings of the 2022 6th International Conference on Green Technology and Sustainable Development (GTSD), Nha Trang City, Vietnam, 29–30 July 2022; pp. 601–606.

- Lin, H.; Zeng, C.; Zhang, H.; Jiang, G. Radar Maneuvering Target Motion Parameter Estimation Based on Hough Transform and Polynomial Chirplet Transform. IEEE Access 2021, 9, 35178–35195.

- Zhao, K.; Han, Q.; Zhang, C.-B.; Xu, J.; Cheng, M.-M. Deep Hough Transform for Semantic Line Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4793–4806.

- Jue, W.; Pengwei, H.; Jianqiang, Q.; Lei, G. Confocal Ellipse Hough Transform for Polarization Compass in the Nonideal Atmosphere. IEEE Trans. Instrum. Meas. 2023, 72, 5010108.

| Item | Specifications | Quantity |
|---|---|---|
| Self-driving electric vehicle body | Dimensions: 23.5 cm (length) × 19.5 cm (width) × 23.5 cm (height) | 1 |
| Front wheel | Wheel diameter: 6.5 cm, width: 2.5 cm, type: skid tires | 2 |
| Rear wheel | Wheel diameter: 6.5 cm, width: 2.5 cm, type: skid tires | 2 |
| Miniature computer | Brand: NVIDIA, model: Jetson Nano 4 GB | 1 |
| Control board | Brand: Microchip, model: ATmega1284P | 1 |
| Main camera | Resolution: 1920 × 1080, 160° wide-angle | 1 |
| Secondary camera | Resolution: 1280 × 720, 120° wide-angle | 1 |
| DC motor | Input voltage: 12 V, rotational speed: 330 rpm | 2 |
| Lithium battery pack | Rated voltage: 11.1 V, capacity: 2.25 Ah | 1 |
| Servo motor | Input voltage: 4.8–7.2 V, torque: 9.4–13.5 kg·cm | 1 |
| Ultrasonic sensor | Sensing distance: 2–450 cm, input voltage: 5 V | 2 |
| Horn | Input voltage: 5 V, sound level: 80 dB | 1 |
| Headlight | Input voltage: 5 V, rated power: 15 W, color: white | 1 |
| LED display | LED color: red, input voltage: 5 V, dimensions: 54 mm × 22 mm | 1 |
| Wireless network card | Brand: TP-Link, model: AC1300 | 1 |

| Control Method | Method of [15] | Proposed IRCF |
|---|---|---|
| Time to run 110 m clockwise | 200 s | 185 s |
| Time to run 110 m counterclockwise | 201 s | 186 s |
| Speed | 1.97 km/h | 2.13 km/h |
| Efficacy | Medium | High |
| Running dexterity | Medium | Good |
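The speed row can be cross-checked against the run times. Taking total distance over total time for the two 110 m runs gives about 1.975 km/h for the method of [15] and 2.135 km/h for the proposed IRCF, which agree with the tabulated 1.97 km/h and 2.13 km/h when truncated to two decimals; the proposed method also shortens each run by roughly 7.5%. The short Python sketch below reproduces that arithmetic. It assumes the speed row is an average over both directions, which the table does not state outright, and the helper names are hypothetical.

```python
# Figures transcribed from the comparison table: lap distance (m) and
# measured run times (s). The averaging rule is an assumption.
DISTANCE_M = 110.0
RUN_TIMES_S = {
    "[15]": {"clockwise": 200.0, "counterclockwise": 201.0},
    "Proposed IRCF": {"clockwise": 185.0, "counterclockwise": 186.0},
}


def average_speed_kmh(distance_m: float, times_s: list[float]) -> float:
    """Average speed over several runs of the same distance, in km/h.

    Computes total distance / total time in m/s, then converts to km/h
    by multiplying by 3.6 (3600 s/h divided by 1000 m/km).
    """
    total_distance = distance_m * len(times_s)
    total_time = sum(times_s)
    return total_distance / total_time * 3.6


def time_reduction_pct(baseline_s: float, improved_s: float) -> float:
    """Relative reduction in run time versus the baseline, in percent."""
    return (baseline_s - improved_s) / baseline_s * 100.0


if __name__ == "__main__":
    for method, runs in RUN_TIMES_S.items():
        speed = average_speed_kmh(DISTANCE_M, list(runs.values()))
        print(f"{method}: {speed:.3f} km/h")
    for direction in ("clockwise", "counterclockwise"):
        pct = time_reduction_pct(RUN_TIMES_S["[15]"][direction],
                                 RUN_TIMES_S["Proposed IRCF"][direction])
        print(f"{direction}: {pct:.2f}% shorter run time")
```

Using total distance over total time (rather than averaging the two per-run speeds) weights each run by its duration; with run times this close, the two approaches differ only in the fourth decimal place.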

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, H.-D.; Lin, P.-J.; Lai, S.-X.; Lin, C.-H.; Farooqui, S.-A. Applying a Novel Image Recognition Curve-Fitting Control Strategy Combined with a Cloud Monitoring Technique into an Electric Self-Driving Vehicle (ESDV) to Improve Its Operation Efficiency. Processes 2023, 11, 2732. https://doi.org/10.3390/pr11092732

Liu H-D, Lin P-J, Lai S-X, Lin C-H, Farooqui S-A. Applying a Novel Image Recognition Curve-Fitting Control Strategy Combined with a Cloud Monitoring Technique into an Electric Self-Driving Vehicle (ESDV) to Improve Its Operation Efficiency. Processes. 2023; 11(9):2732. https://doi.org/10.3390/pr11092732

Chicago/Turabian Style: Liu, Hwa-Dong, Ping-Jui Lin, Shan-Xun Lai, Chang-Hua Lin, and Shoeb-Azam Farooqui. 2023. "Applying a Novel Image Recognition Curve-Fitting Control Strategy Combined with a Cloud Monitoring Technique into an Electric Self-Driving Vehicle (ESDV) to Improve Its Operation Efficiency" Processes 11, no. 9: 2732. https://doi.org/10.3390/pr11092732

APA Style: Liu, H.-D., Lin, P.-J., Lai, S.-X., Lin, C.-H., & Farooqui, S.-A. (2023). Applying a Novel Image Recognition Curve-Fitting Control Strategy Combined with a Cloud Monitoring Technique into an Electric Self-Driving Vehicle (ESDV) to Improve Its Operation Efficiency. Processes, 11(9), 2732. https://doi.org/10.3390/pr11092732