Machine Learning Methods in Skin Disease Recognition: A Systematic Review

Abstract

1. Introduction

2. Skin Lesion Datasets and Image Preprocessing

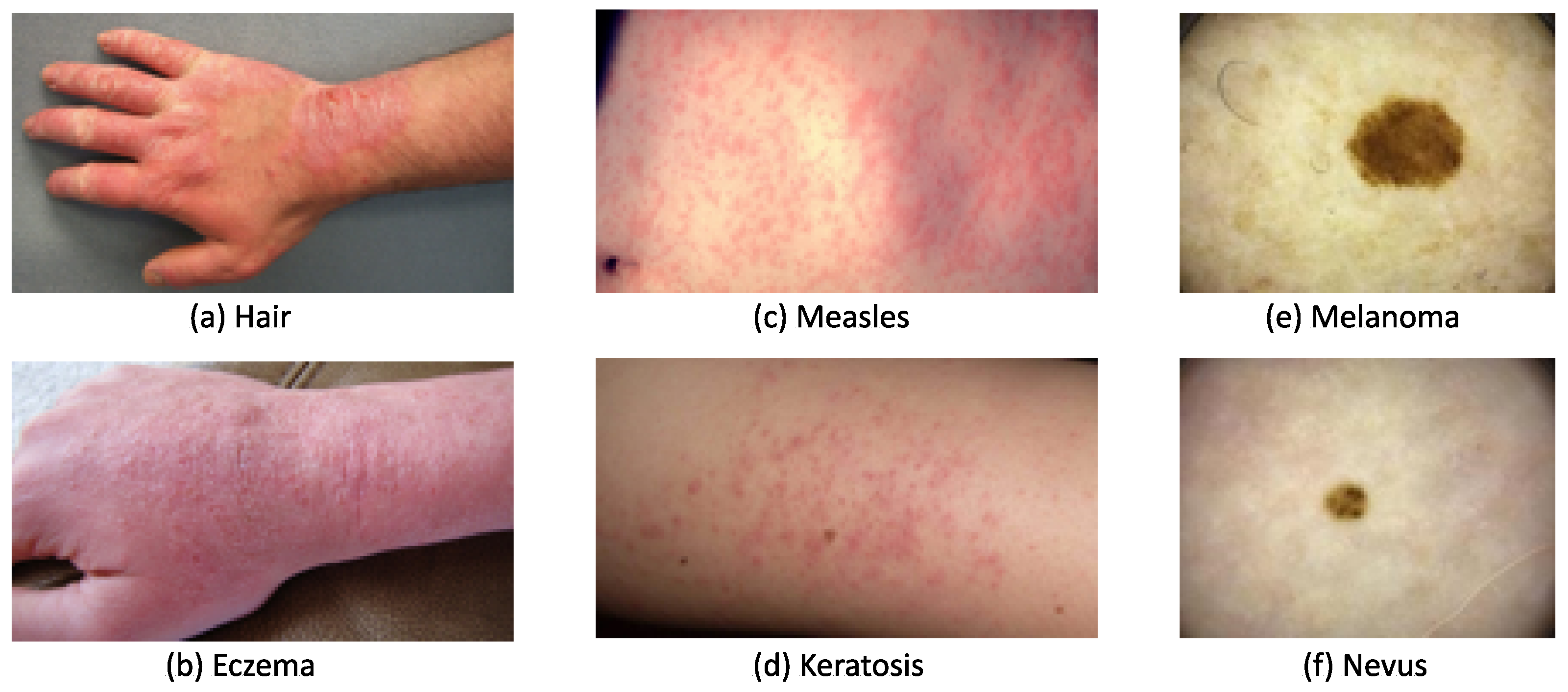

2.1. Skin Lesion Datasets

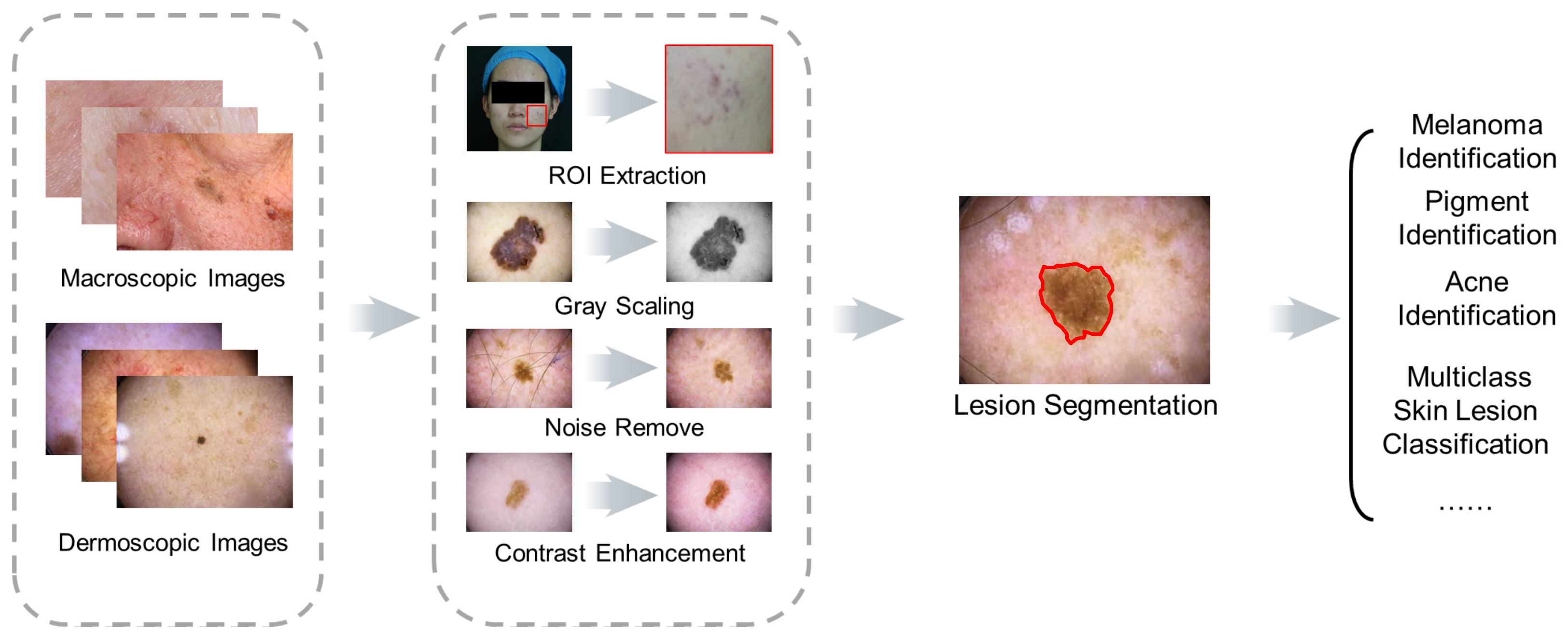

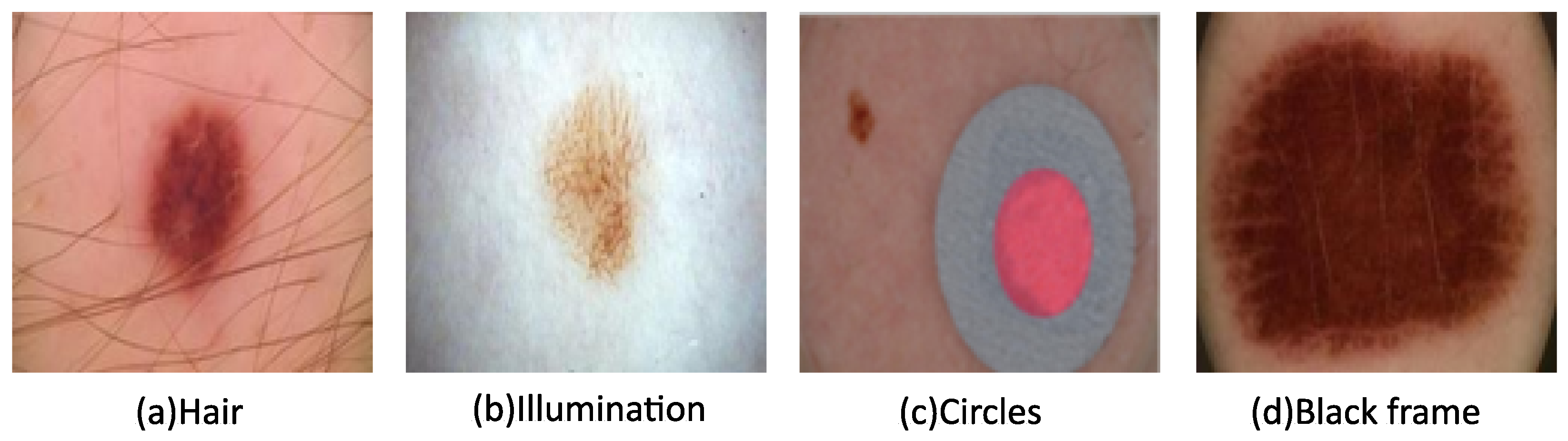

2.2. Image Preprocessing

2.3. Segmentation and Classification Evaluation Metrics

3. Skin Lesion Segmentation Methods

3.1. Traditional Segmentation Methods

3.2. DL Skin Lesion Segmentation Methods

4. Skin Lesion Classification

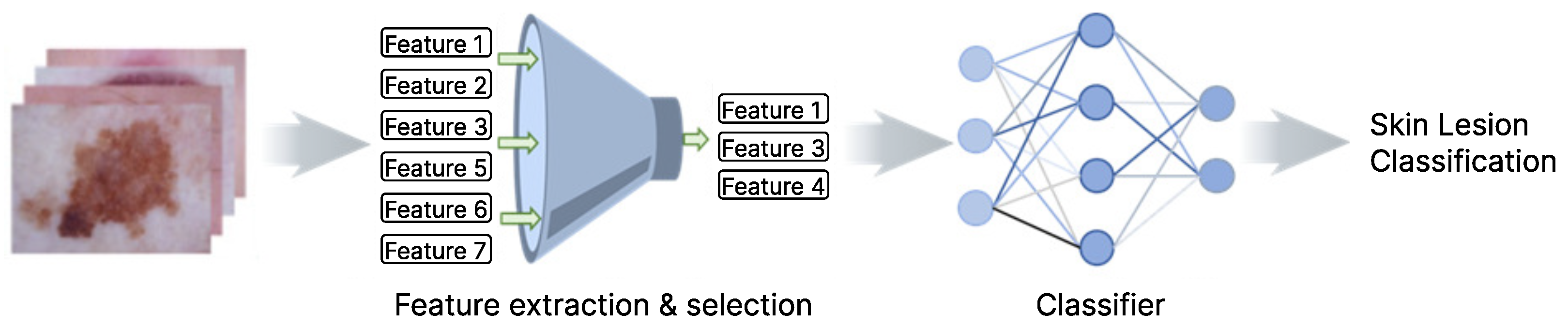

4.1. Feature Extraction and Selection

4.2. DL-Based Feature Extraction and Selection Methods

4.3. Traditional ML Models for Skin Disease Classification

4.4. Deep Learning Models for Skin Disease Classification

5. Current Status, Challenges, and Outlook

5.1. Current Research Publication Status

5.2. Challenges and Outlooks

5.2.1. Macroscopic Images with Robust Diagnosis

5.2.2. Racial and Geographical Biases in Public Datasets

5.2.3. Dataset Characteristics and DL Methods

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. ALKolifi-ALEnezi, N.S. A Method Of Skin Disease Detection Using Image Processing And Machine Learning. Procedia Comput. Sci. 2019, 163, 85–92.
2. Skin Disorders: Pictures, Causes, Symptoms, and Treatment. Available online: https://www.healthline.com/health/skin-disorders (accessed on 21 February 2023).
3. ISIC Archive. Available online: https://www.isic-archive.com/#!/topWithHeader/wideContentTop/main (accessed on 20 February 2023).
4. Sun, J.; Yao, K.; Huang, K.; Huang, D. Machine learning applications in scaffold based bioprinting. Mater. Today Proc. 2022, 70, 17–23.
5. Haenssle, H.A.; Fink, C.; Schneiderbauer, R.; Toberer, F.; Buhl, T.; Blum, A.; Kalloo, A.; Hassen, A.B.H.; Thomas, L.; Enk, A.; et al. Man against machine: Diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann. Oncol. 2018, 29, 1836–1842.
6. Rotemberg, V.; Kurtansky, N.; Betz-Stablein, B.; Caffery, L.; Chousakos, E.; Codella, N.; Combalia, M.; Dusza, S.; Guitera, P.; Gutman, D.; et al. A patient-centric dataset of images and metadata for identifying melanomas using clinical context. Sci. Data 2021, 8, 34.
7. Melanoma Skin Cancer Report. Melanoma UK. 2020. Available online: https://www.melanomauk.org.uk/2020-melanoma-skin-cancer-report (accessed on 20 February 2023).
8. Mendonça, T.; Ferreira, P.M.; Marques, J.S.; Marcal, A.R.; Rozeira, J. PH2 - A dermoscopic image database for research and benchmarking. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 5437–5440.
9. Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 2018, 5, 1–9.
10. Combalia, M.; Codella, N.C.; Rotemberg, V.; Helba, B.; Vilaplana, V.; Reiter, O.; Carrera, C.; Barreiro, A.; Halpern, A.C.; Puig, S.; et al. BCN20000: Dermoscopic lesions in the wild. arXiv 2019, arXiv:1908.02288.
11. Dermnet. Kaggle. Available online: https://www.kaggle.com/datasets/shubhamgoel27/dermnet (accessed on 20 February 2023).
12. Giotis, I.; Molders, N.; Land, S.; Biehl, M.; Jonkman, M.F.; Petkov, N. MED-NODE: A computer-assisted melanoma diagnosis system using non-dermoscopic images. Expert Syst. Appl. 2015, 42, 6578–6585.
13. Yap, J.; Yolland, W.; Tschandl, P. Multimodal skin lesion classification using deep learning. Exp. Dermatol. 2018, 27, 1261–1267.
14. Dermofit Image Library Available from The University of Edinburgh. Available online: https://licensing.edinburgh-innovations.ed.ac.uk/product/dermofit-image-library (accessed on 20 February 2023).
15. Gutman, D.; Codella, N.C.; Celebi, E.; Helba, B.; Marchetti, M.; Mishra, N.; Halpern, A. Skin lesion analysis toward melanoma detection: A challenge at the international symposium on biomedical imaging (ISBI) 2016, hosted by the international skin imaging collaboration (ISIC). arXiv 2016, arXiv:1605.01397.
16. Codella, N.C.; Gutman, D.; Celebi, M.E.; Helba, B.; Marchetti, M.A.; Dusza, S.W.; Kalloo, A.; Liopyris, K.; Mishra, N.; Kittler, H.; et al. Skin lesion analysis toward melanoma detection: A challenge at the 2017 international symposium on biomedical imaging, hosted by the international skin imaging collaboration. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 168–172.
17. Codella, N.; Rotemberg, V.; Tschandl, P.; Celebi, M.E.; Dusza, S.; Gutman, D.; Helba, B.; Kalloo, A.; Liopyris, K.; Marchetti, M.; et al. Skin lesion analysis toward melanoma detection 2018: A challenge hosted by the international skin imaging collaboration (ISIC). arXiv 2019, arXiv:1902.03368.
18. ISIC Challenge. Available online: https://challenge.isic-archive.com/landing/2019/ (accessed on 21 February 2023).
19. Kawahara, J.; Daneshvar, S.; Argenziano, G.; Hamarneh, G. Seven-point checklist and skin lesion classification using multitask multimodal neural nets. IEEE J. Biomed. Health Inform. 2019, 23, 538–546.
20. Alahmadi, M.D.; Alghamdi, W. Semi-Supervised Skin Lesion Segmentation With Coupling CNN and Transformer Features. IEEE Access 2022, 10, 122560–122569.
21. Abhishek, K.; Hamarneh, G.; Drew, M.S. Illumination-based transformations improve skin lesion segmentation in dermoscopic images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 728–729.
22. Oliveira, R.B.; Mercedes Filho, E.; Ma, Z.; Papa, J.P.; Pereira, A.S.; Tavares, J.M.R. Computational methods for the image segmentation of pigmented skin lesions: A review. Comput. Methods Programs Biomed. 2016, 131, 127–141.
23. Hameed, N.; Shabut, A.M.; Ghosh, M.K.; Hossain, M.A. Multi-class multi-level classification algorithm for skin lesions classification using machine learning techniques. Expert Syst. Appl. 2020, 141, 112961.
24. Toossi, M.T.B.; Pourreza, H.R.; Zare, H.; Sigari, M.H.; Layegh, P.; Azimi, A. An effective hair removal algorithm for dermoscopy images. Skin Res. Technol. 2013, 19, 230–235.
25. Zhang, C.; Huang, G.; Yao, K.; Leach, M.; Sun, J.; Huang, K.; Zhou, X.; Yuan, L. A Comparison of Applying Image Processing and Deep Learning in Acne Region Extraction. J. Image Graph. 2022, 10, 1–6.
26. Shetty, B.; Fernandes, R.; Rodrigues, A.P.; Chengoden, R.; Bhattacharya, S.; Lakshmanna, K. Skin lesion classification of dermoscopic images using machine learning and convolutional neural network. Sci. Rep. 2022, 12, 18134.
27. Gulzar, Y.; Khan, S.A. Skin Lesion Segmentation Based on Vision Transformers and Convolutional Neural Networks: A Comparative Study. Appl. Sci. 2022, 12, 5990.
28. Vesal, S.; Ravikumar, N.; Maier, A. SkinNet: A deep learning framework for skin lesion segmentation. In Proceedings of the 2018 IEEE Nuclear Science Symposium and Medical Imaging Conference Proceedings (NSS/MIC), Sydney, Australia, 10–17 November 2018; pp. 1–3.
29. Al-Masni, M.A.; Al-Antari, M.A.; Choi, M.T.; Han, S.M.; Kim, T.S. Skin lesion segmentation in dermoscopy images via deep full resolution convolutional networks. Comput. Methods Programs Biomed. 2018, 162, 221–231.
30. Ünver, H.M.; Ayan, E. Skin lesion segmentation in dermoscopic images with combination of YOLO and grabcut algorithm. Diagnostics 2019, 9, 72.
31. Thapar, P.; Rakhra, M.; Cazzato, G.; Hossain, M.S. A novel hybrid deep learning approach for skin lesion segmentation and classification. J. Healthc. Eng. 2022, 2022, 1709842.
32. Ali, A.R.; Li, J.; Trappenberg, T. Supervised versus unsupervised deep learning based methods for skin lesion segmentation in dermoscopy images. In Proceedings of the Advances in Artificial Intelligence: 32nd Canadian Conference on Artificial Intelligence, Canadian AI 2019, Kingston, ON, Canada, 28–31 May 2019; pp. 373–379.
33. Gupta, S.; Panwar, A.; Mishra, K. Skin disease classification using dermoscopy images through deep feature learning models and machine learning classifiers. In Proceedings of the IEEE EUROCON 2021–19th International Conference on Smart Technologies, Lviv, Ukraine, 6–8 July 2021; pp. 170–174.
34. Kassem, M.A.; Hosny, K.M.; Fouad, M.M. Skin lesions classification into eight classes for ISIC 2019 using deep convolutional neural network and transfer learning. IEEE Access 2020, 8, 114822–114832.
35. Khan, M.A.; Javed, M.Y.; Sharif, M.; Saba, T.; Rehman, A. Multi-model deep neural network based features extraction and optimal selection approach for skin lesion classification. In Proceedings of the 2019 International Conference on Computer and Information Sciences (ICCIS), Aljouf, Saudi Arabia, 3–4 April 2019; pp. 1–7.
36. Khan, M.A.; Sharif, M.; Akram, T.; Bukhari, S.A.C.; Nayak, R.S. Developed Newton-Raphson based deep features selection framework for skin lesion recognition. Pattern Recognit. Lett. 2020, 129, 293–303.
37. Nock, R.; Nielsen, F. Statistical region merging. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 1452–1458.
38. Hojjatoleslami, S.; Kittler, J. Region growing: A new approach. IEEE Trans. Image Process. 1998, 7, 1079–1084.
39. Levner, I.; Zhang, H. Classification-driven watershed segmentation. IEEE Trans. Image Process. 2007, 16, 1437–1445.
40. Maeda, J.; Kawano, A.; Yamauchi, S.; Suzuki, Y.; Marçal, A.; Mendonça, T. Perceptual image segmentation using fuzzy-based hierarchical algorithm and its application to dermoscopy images. In Proceedings of the 2008 IEEE Conference on Soft Computing in Industrial Applications, Muroran, Japan, 25–27 June 2008; pp. 66–71.
41. Devi, S.S.; Laskar, R.H.; Singh, N.H. Fuzzy C-means clustering with histogram based cluster selection for skin lesion segmentation using non-dermoscopic images. Int. J. Interact. Multimed. Artif. Intell. 2020, 6, 1–6.
42. Bhati, P.; Singhal, M. Early stage detection and classification of melanoma. In Proceedings of the 2015 Communication, Control and Intelligent Systems (CCIS), Mathura, India, 7–8 November 2015; pp. 181–185.
43. Henning, J.S.; Dusza, S.W.; Wang, S.Q.; Marghoob, A.A.; Rabinovitz, H.S.; Polsky, D.; Kopf, A.W. The CASH (color, architecture, symmetry, and homogeneity) algorithm for dermoscopy. J. Am. Acad. Dermatol. 2007, 56, 45–52.
44. Soyer, H.P.; Argenziano, G.; Zalaudek, I.; Corona, R.; Sera, F.; Talamini, R.; Barbato, F.; Baroni, A.; Cicale, L.; Di Stefani, A.; et al. Three-point checklist of dermoscopy. Dermatology 2004, 208, 27–31.
45. Argenziano, G.; Catricalà, C.; Ardigo, M.; Buccini, P.; De Simone, P.; Eibenschutz, L.; Ferrari, A.; Mariani, G.; Silipo, V.; Sperduti, I.; et al. Seven-point checklist of dermoscopy revisited. Br. J. Dermatol. 2011, 164, 785–790.
46. Oliveira, R.B.; Pereira, A.S.; Tavares, J.M.R. Computational diagnosis of skin lesions from dermoscopic images using combined features. Neural Comput. Appl. 2019, 31, 6091–6111.
47. Zakeri, A.; Hokmabadi, A. Improvement in the diagnosis of melanoma and dysplastic lesions by introducing ABCD-PDT features and a hybrid classifier. Biocybern. Biomed. Eng. 2018, 38, 456–466.
48. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 886–893.
49. Jie, S.; Hong, G.S.; Rahman, M.; Wong, Y. Feature extraction and selection in tool condition monitoring system. In Proceedings of the AI 2002: Advances in Artificial Intelligence: 15th Australian Joint Conference on Artificial Intelligence, Canberra, Australia, 2–6 December 2002; pp. 487–497.
50. Akram, T.; Lodhi, H.M.J.; Naqvi, S.R.; Naeem, S.; Alhaisoni, M.; Ali, M.; Haider, S.A.; Qadri, N.N. A multilevel features selection framework for skin lesion classification. Hum. Centric Comput. Inf. Sci. 2020, 10, 1–26.
51. Amin, J.; Sharif, A.; Gul, N.; Anjum, M.A.; Nisar, M.W.; Azam, F.; Bukhari, S.A.C. Integrated design of deep features fusion for localization and classification of skin cancer. Pattern Recognit. Lett. 2020, 131, 63–70.
52. Hegde, P.R.; Shenoy, M.M.; Shekar, B. Comparison of machine learning algorithms for skin disease classification using color and texture features. In Proceedings of the 2018 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Bangalore, India, 19–22 September 2018; pp. 1825–1828.
53. Yu, Z.; Jiang, X.; Zhou, F.; Qin, J.; Ni, D.; Chen, S.; Lei, B.; Wang, T. Melanoma recognition in dermoscopy images via aggregated deep convolutional features. IEEE Trans. Biomed. Eng. 2018, 66, 1006–1016.
54. Kassem, M.A.; Hosny, K.M.; Damaševičius, R.; Eltoukhy, M.M. Machine learning and deep learning methods for skin lesion classification and diagnosis: A systematic review. Diagnostics 2021, 11, 1390.
55. Hameed, N.; Shabut, A.; Hossain, M.A. A Computer-aided diagnosis system for classifying prominent skin lesions using machine learning. In Proceedings of the 2018 10th Computer Science and Electronic Engineering (CEEC), Essex, UK, 19–21 September 2018; pp. 186–191.
56. Yao, K.; Huang, K.; Sun, J.; Jude, C. AD-GAN: End-to-end unsupervised nuclei segmentation with aligned disentangling training. arXiv 2021, arXiv:2107.11022.
57. Yao, K.; Huang, K.; Sun, J.; Jing, L.; Huang, D.; Jude, C. Scaffold-A549: A benchmark 3D fluorescence image dataset for unsupervised nuclei segmentation. Cogn. Comput. 2021, 13, 1603–1608.
58. Yao, K.; Sun, J.; Huang, K.; Jing, L.; Liu, H.; Huang, D.; Jude, C. Analyzing cell-scaffold interaction through unsupervised 3D nuclei segmentation. Int. J. Bioprinting 2022, 8, 495.
59. Yao, K.; Huang, K.; Sun, J.; Hussain, A.; Jude, C. PointNu-Net: Simultaneous Multi-tissue Histology Nuclei Segmentation and Classification in the Clinical Wild. arXiv 2021, arXiv:2111.01557.
60. Ali, M.S.; Miah, M.S.; Haque, J.; Rahman, M.M.; Islam, M.K. An enhanced technique of skin cancer classification using deep convolutional neural network with transfer learning models. Mach. Learn. Appl. 2021, 5, 100036.
61. Shahin, A.H.; Kamal, A.; Elattar, M.A. Deep ensemble learning for skin lesion classification from dermoscopic images. In Proceedings of the 2018 9th Cairo International Biomedical Engineering Conference (CIBEC), Cairo, Egypt, 20–22 December 2018; pp. 150–153.
62. Ichim, L.; Popescu, D. Melanoma detection using an objective system based on multiple connected neural networks. IEEE Access 2020, 8, 179189–179202.
63. Patil, R.; Bellary, S. Machine learning approach in melanoma cancer stage detection. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 3285–3293.
64. Sudre, C.H.; Li, W.; Vercauteren, T.; Ourselin, S.; Jorge Cardoso, M. Generalised dice overlap as a deep learning loss function for highly unbalanced segmentations. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: Third International Workshop, DLMIA 2017, and 7th International Workshop, ML-CDS 2017, Held in Conjunction with MICCAI 2017, Québec City, QC, Canada, 14 September 2017; pp. 240–248.
65. Yeung, M.; Sala, E.; Schönlieb, C.B.; Rundo, L. Unified focal loss: Generalising dice and cross entropy-based losses to handle class imbalanced medical image segmentation. Comput. Med. Imaging Graph. 2022, 95, 102026.
66. Bertels, J.; Eelbode, T.; Berman, M.; Vandermeulen, D.; Maes, F.; Bisschops, R.; Blaschko, M.B. Optimizing the dice score and jaccard index for medical image segmentation: Theory and practice. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2019: 22nd International Conference, Shenzhen, China, 13–17 October 2019; pp. 92–100.
67. van Beers, F.; Lindström, A.; Okafor, E.; Wiering, M.A. Deep Neural Networks with Intersection over Union Loss for Binary Image Segmentation. In Proceedings of the ICPRAM, Prague, Czech Republic, 19–21 February 2019; pp. 438–445.
68. Ho, Y.; Wookey, S. The real-world-weight cross-entropy loss function: Modeling the costs of mislabeling. IEEE Access 2019, 8, 4806–4813.
69. Zhou, Q.; Shi, Y.; Xu, Z.; Qu, R.; Xu, G. Classifying melanoma skin lesions using convolutional spiking neural networks with unsupervised STDP learning rule. IEEE Access 2020, 8, 101309–101319.
70. Alsaade, F.W.; Aldhyani, T.H.; Al-Adhaileh, M.H. Developing a recognition system for diagnosing melanoma skin lesions using artificial intelligence algorithms. Comput. Math. Methods Med. 2021, 2021, 1–20.
71. Yao, K.; Su, Z.; Huang, K.; Yang, X.; Sun, J.; Hussain, A.; Coenen, F. A novel 3D unsupervised domain adaptation framework for cross-modality medical image segmentation. IEEE J. Biomed. Health Inform. 2022, 26, 4976–4986.
72. Yao, K.; Gao, P.; Yang, X.; Sun, J.; Zhang, R.; Huang, K. Outpainting by queries. In Proceedings of the Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; pp. 153–169.

| Dataset | Number of Images | Disease Categories | Labeled Images | Segmentation Mask |
|---|---|---|---|---|
| PH2 [8] | 200 | 2 | All | No |
| MED-NODE [12] | 170 | 2 | All | No |
| ISIC Archive [3] | 71,066 | 25 | All | No |
| ISIC 2016 [15] | 1279 | 2 | All | Yes |
| ISIC 2017 [16] | 2600 | 3 | All | Yes |
| ISIC 2018 [17] | 11,527 | 7 | 10,015 | Yes |
| ISIC 2019 [18] | 33,569 | 8 | 25,331 | No |
| ISIC 2020 [6] | 44,108 | 9 | 33,126 | No |
| HAM10000 [9] | 10,015 | 7 | All | No |
| BCN20000 [10] | 19,424 | 8 | All | No |
| EDRA [19] | 1011 | 10 | All | No |
| DermNet [11] | 19,500 | 23 | All | No |
| Dermofit [14] | 1300 | 10 | All | No |

| Task | DL Methods | Number of Metrics Reported (of Jac, Acc, FS, SP, SS, Dice) | Ref |
|---|---|---|---|
| Skin Lesion Segmentation | SkinNet | 3 | [28] |
| Skin Lesion Segmentation | FrCN | 3 | [29] |
| Skin Lesion Segmentation | YOLO and Grabcut Algorithm | 4 | [30] |
| Skin Lesion Segmentation and Classification | Swarm Intelligence (SI) | 6 | [31] |
| Skin Lesion Segmentation | UNet and unsupervised approach | 2 | [32] |
| Skin Lesion Segmentation | CNN and Transformer | 1 | [20] |
| Skin Lesion Classification | VGG and Inception V3 | 2 | [33] |
| Skin Lesion Classification | CNN and Transfer Learning | 4 | [34] |
| Melanoma Classification | ResNet and SVM | 1 | [35] |
| Melanoma Classification | Fast RCNN and DenseNet | 1 | [36] |
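As a rough illustration of the metric abbreviations used in the table above, the following sketch computes them for binary labels. It assumes the abbreviations stand for the Jaccard index (Jac), accuracy (Acc), F-score (FS), specificity (SP), sensitivity (SS), and Dice coefficient (Dice), with the standard confusion-matrix definitions. The function name `binary_metrics` and the toy label lists are hypothetical and are not taken from any of the reviewed studies.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute six common metrics for binary labels (1 = lesion, 0 = background).

    For segmentation, pass the flattened ground-truth and predicted masks.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    # Confusion-matrix counts.
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num: int, den: int) -> float:
        # Returning 0.0 for an empty denominator is a choice made for this sketch.
        return num / den if den else 0.0

    return {
        "Jac": ratio(tp, tp + fp + fn),             # intersection over union
        "Acc": ratio(tp + tn, tp + tn + fp + fn),   # fraction of correct labels
        "FS": ratio(2 * tp, 2 * tp + fp + fn),      # F1: harmonic mean of precision and recall
        "SP": ratio(tn, tn + fp),                   # true negative rate
        "SS": ratio(tp, tp + fn),                   # true positive rate (recall)
        "Dice": ratio(2 * tp, 2 * tp + fp + fn),    # equals F1 in the binary case
    }


if __name__ == "__main__":
    truth = [1, 1, 1, 0, 0, 0, 0, 1]  # hypothetical ground truth
    pred = [1, 1, 0, 0, 0, 1, 0, 1]   # hypothetical prediction
    for name, value in binary_metrics(truth, pred).items():
        print(f"{name}: {value:.3f}")
```

In the binary case the Dice coefficient and the F1 score coincide, and the Jaccard index follows from Dice as Jac = Dice / (2 − Dice), so a study that reports one of them implicitly fixes the other.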

| Year | 2018 | 2019 | 2020 | 2021 | 2022 |
|---|---|---|---|---|---|
| Number of Publications | 179 | 173 | 214 | 258 | 300 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, J.; Yao, K.; Huang, G.; Zhang, C.; Leach, M.; Huang, K.; Yang, X. Machine Learning Methods in Skin Disease Recognition: A Systematic Review. Processes 2023, 11, 1003. https://doi.org/10.3390/pr11041003
