Research on the Optimization of A/B Testing System Based on Dynamic Strategy Distribution

Abstract

1. Introduction

2. Related Work

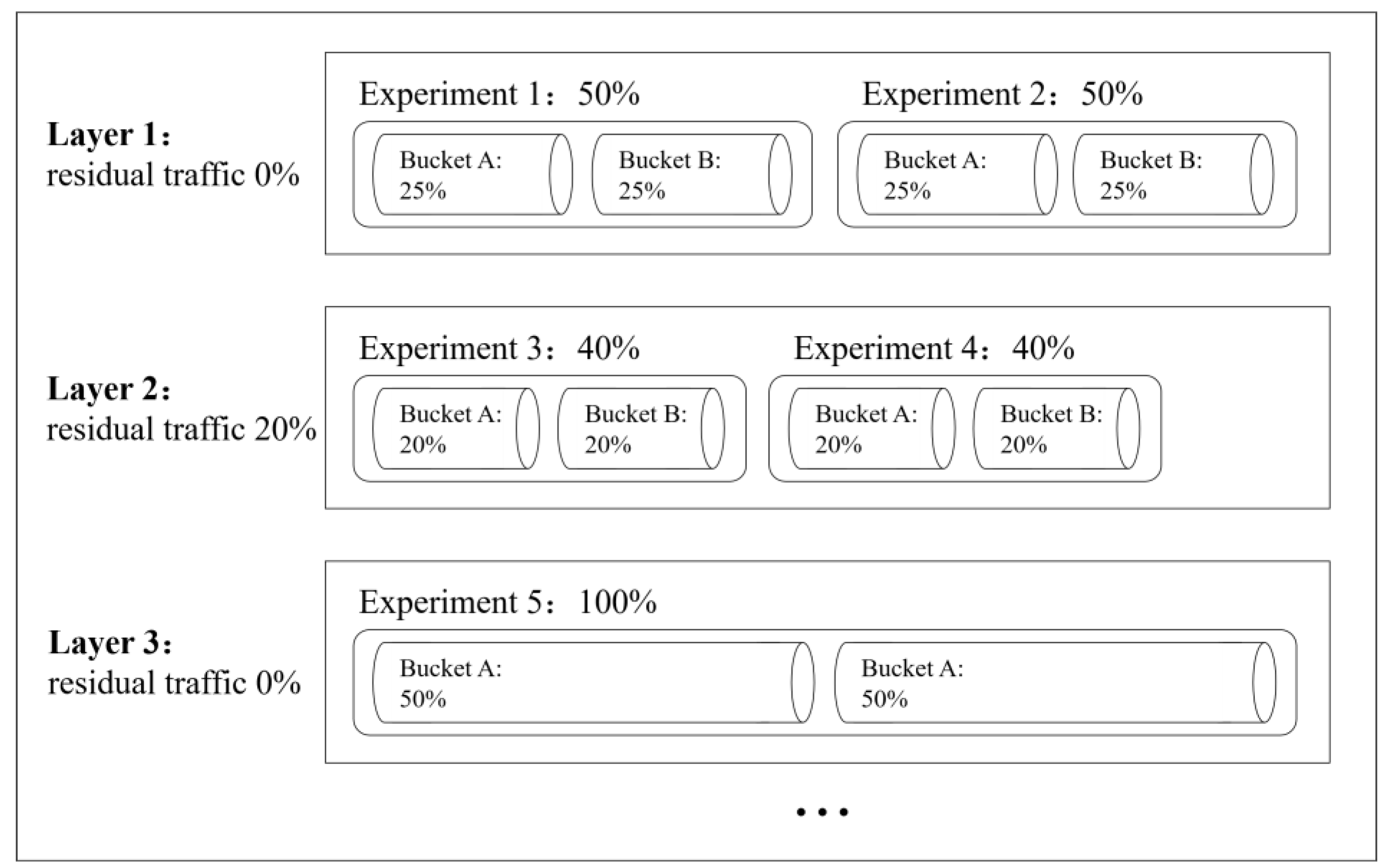

3. Dynamic Strategy Distribution

3.1. Overview

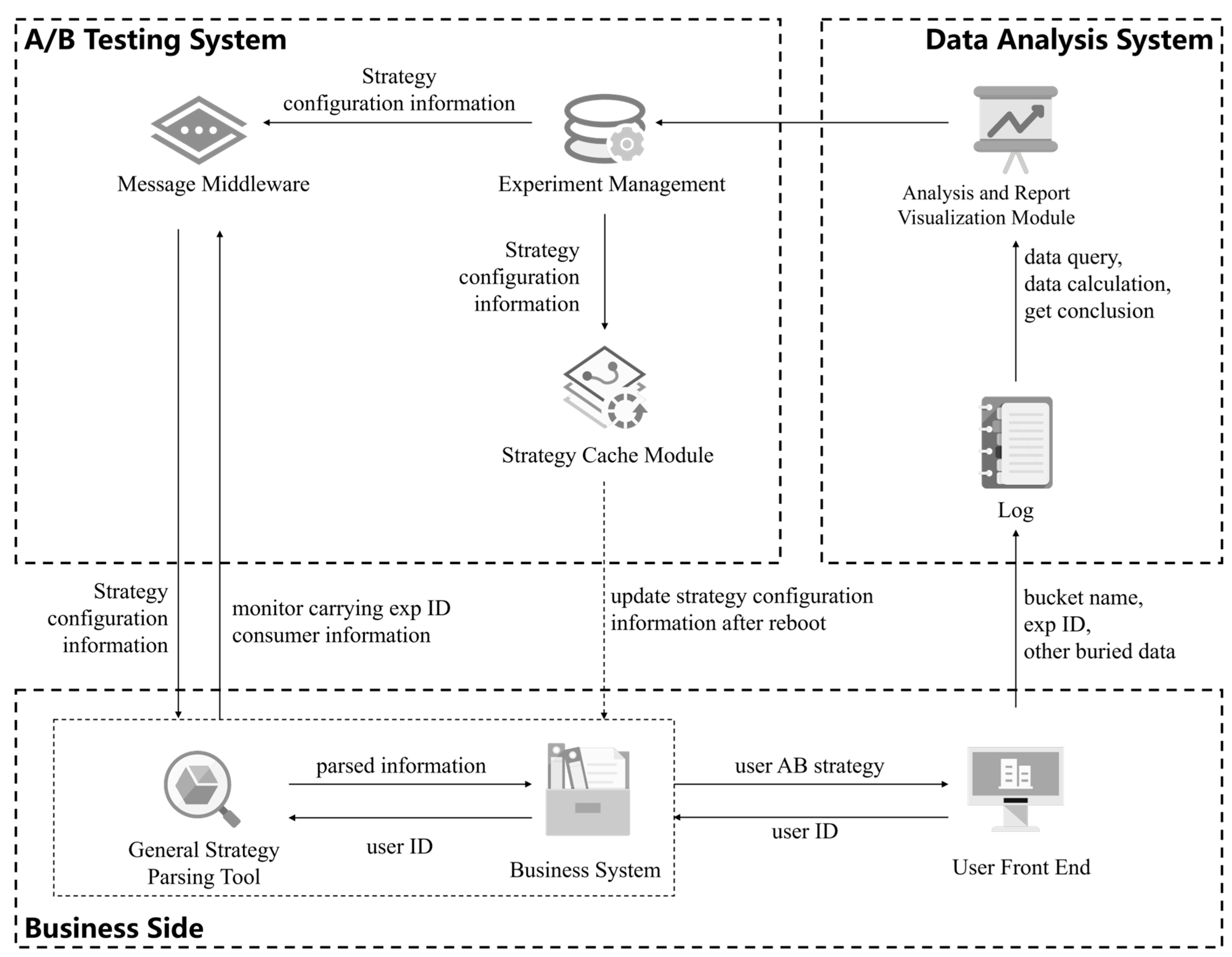

3.2. System Model

- (1)

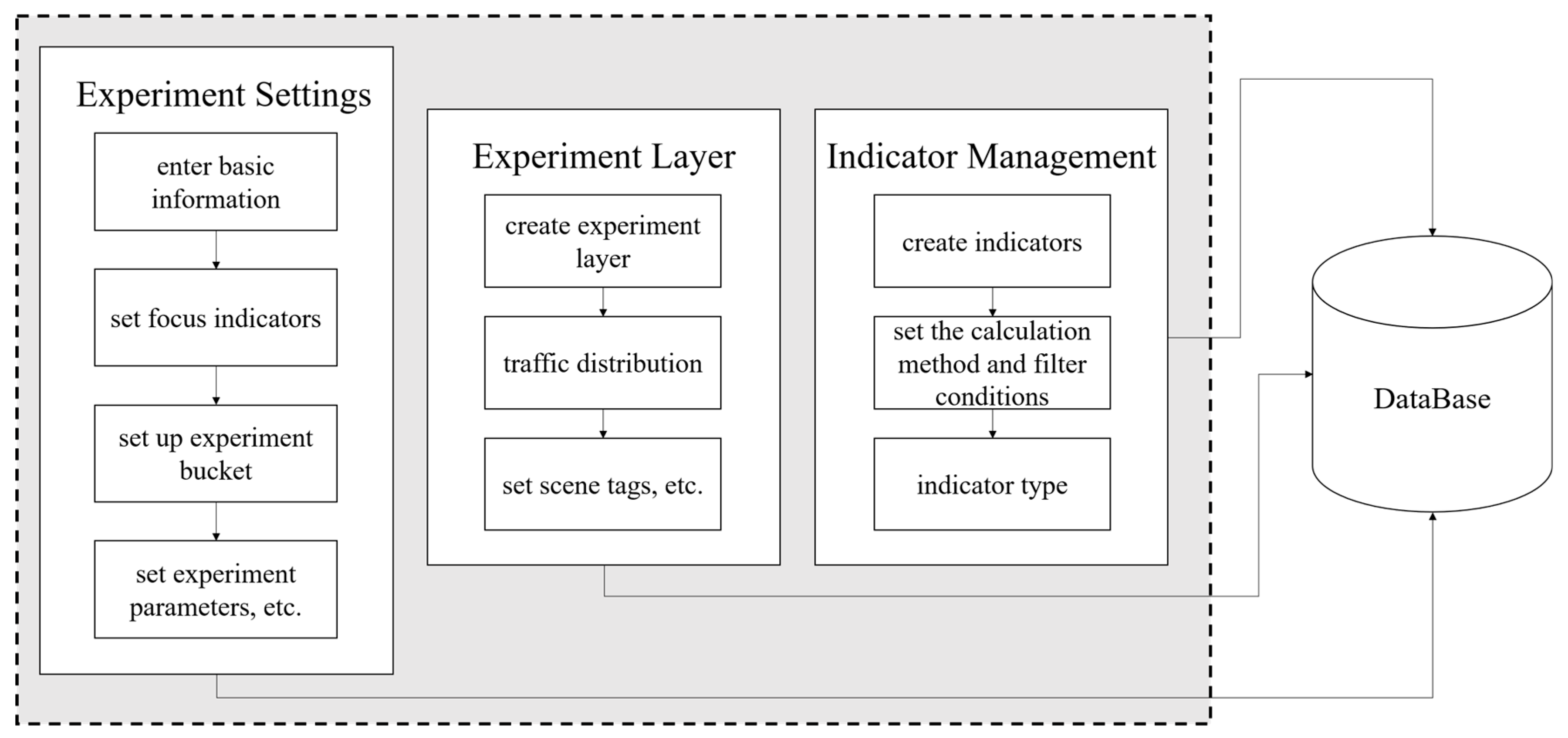

- A/B testing subsystem—experiment management module: This module is responsible for experiment strategy editing, which is used to create, query, update, and delete experiment-related information. After configuring or updating the experiment information, it will generate or update the configuration information of the experiment strategy according to the relevant information, and then send the new strategy configuration information to the strategy cache module and the message middleware at the same time, as shown in Figure 1’s “A/B testing subsystem” section.

- (2)

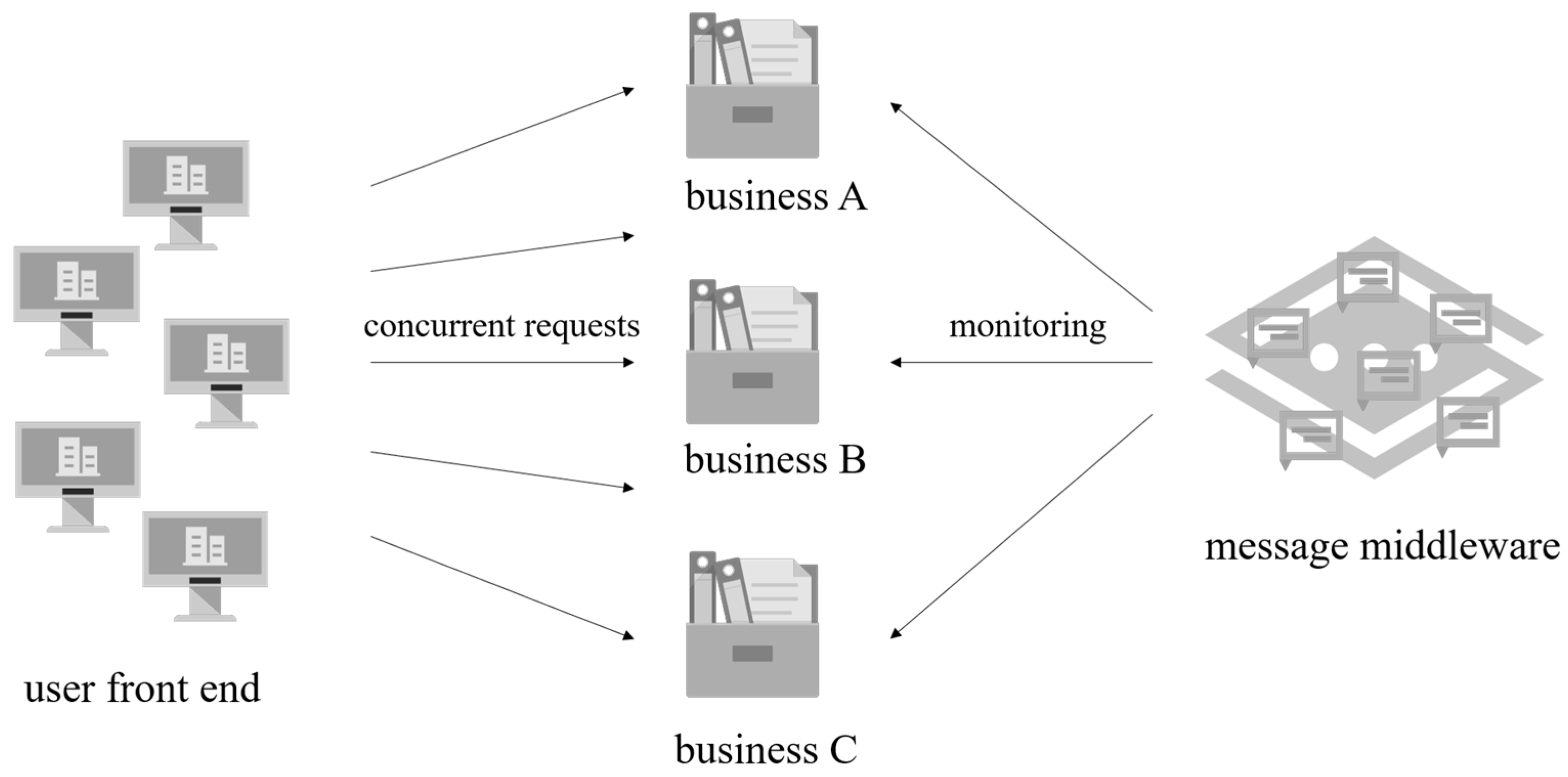

- A/B testing subsystem—message middleware: Due to its characteristics of asynchronous message delivery, the message middleware becomes the key to solving high-concurrency problems. When creating a new experiment, the strategy configuration information generated by the experiment management module will be put into the message queue. When updating an experiment, the experiment management module will push the new strategy configuration information to the message middleware. For example, when performing traffic distribution operations such as the experimental traffic expansion, deletion of buckets, and traffic push, the traffic configuration will be updated and pushed to the message queue. When updating the experimental parameters, we push the new parameter list. In this way, it can be guaranteed that the latest configuration information is cached in the message middleware. The asynchrony of the message middleware is manifested, on the one hand, in that the communication between the experiment management module and the message middleware occurs only when creating and updating, and, on the other hand, in that the business side obtains the configuration information through long-term monitoring, and only communicates with it when the monitoring configuration information changes, and the communication between the two sides is asynchronous and does not interfere with each other.

- (3) Strategy cache module (A/B testing subsystem): This module receives configuration updates in the same way as the message middleware, but its main role is to record traffic allocation and keep a backup of the configuration. When a new experiment is created, the strategy cache module answers queries from the experiment management module with the traffic already assigned to the different experiments. When a business system restarts its service, it sends a strategy access request to the strategy cache module to retrieve the experiment's current strategy configuration from the cached backup.
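The interaction among the three modules can be illustrated with a minimal in-process sketch. This is an illustration under stated assumptions, not the authors' implementation. `MessageBroker` is an in-memory stand-in for a real message middleware, `StrategyCache` stands in for the strategy cache module, and all class names, method names, and fields (such as `version` and the bucket-range layout of `traffic`) are hypothetical. The manager writes each new configuration to the cache and publishes it to the broker. A business-side client restores its configuration from the cache when it (re)starts and then applies pushed updates in a background listener thread.

```python
import copy
import queue
import threading
from dataclasses import dataclass, field


@dataclass
class StrategyConfig:
    """Hypothetical strategy configuration pushed to business systems."""
    experiment_id: str
    version: int   # assumed: increases on every create/update of the experiment
    traffic: dict  # assumed layout: group name -> list of (first_bucket, last_bucket)
    params: dict = field(default_factory=dict)


class MessageBroker:
    """In-memory stand-in for the message middleware (fan-out publish/subscribe)."""

    def __init__(self):
        self._subscribers = []
        self._lock = threading.Lock()

    def subscribe(self) -> queue.Queue:
        inbox = queue.Queue()
        with self._lock:
            self._subscribers.append(inbox)
        return inbox

    def publish(self, config: StrategyConfig) -> None:
        with self._lock:
            subscribers = list(self._subscribers)
        for inbox in subscribers:
            inbox.put(copy.deepcopy(config))  # each listener gets its own copy


class StrategyCache:
    """Stand-in for the strategy cache module: latest config per experiment."""

    def __init__(self):
        self._store = {}
        self._lock = threading.Lock()

    def put(self, config: StrategyConfig) -> None:
        with self._lock:
            self._store[config.experiment_id] = copy.deepcopy(config)

    def get(self, experiment_id: str):
        with self._lock:
            config = self._store.get(experiment_id)
        return copy.deepcopy(config) if config is not None else None


class ExperimentManager:
    """Creates/updates experiments and sends each config to cache and broker."""

    def __init__(self, cache: StrategyCache, broker: MessageBroker):
        self._cache, self._broker = cache, broker
        self._versions = {}

    def save(self, experiment_id, traffic, params=None) -> StrategyConfig:
        version = self._versions.get(experiment_id, 0) + 1
        self._versions[experiment_id] = version
        config = StrategyConfig(experiment_id, version, dict(traffic), dict(params or {}))
        self._cache.put(config)       # backup used when a business system restarts
        self._broker.publish(config)  # asynchronous push to listening business systems
        return config


class BusinessClient:
    """Business-side listener that applies pushed configs in a background thread."""

    def __init__(self, cache, broker, experiment_id):
        self.experiment_id = experiment_id
        self._changed = threading.Condition()
        self._inbox = broker.subscribe()        # subscribe first so no update is missed
        self.config = cache.get(experiment_id)  # then recover the cached backup
        threading.Thread(target=self._listen, daemon=True).start()

    def _listen(self):
        while True:
            config = self._inbox.get()  # blocks until the broker pushes a change
            if config.experiment_id != self.experiment_id:
                continue
            with self._changed:
                # Ignore stale messages (e.g., one already reflected in the cached copy).
                if self.config is None or config.version > self.config.version:
                    self.config = config
                self._changed.notify_all()

    def wait_for_version(self, version, timeout=1.0) -> bool:
        with self._changed:
            return self._changed.wait_for(
                lambda: self.config is not None and self.config.version >= version,
                timeout=timeout,
            )
```

Usage, under the same assumptions: create a `StrategyCache()` and a `MessageBroker()`, call `ExperimentManager(cache, broker).save("exp-1", {"A": [(0, 49)], "B": [(50, 99)]})`, and construct `BusinessClient(cache, broker, "exp-1")`. A later `save` with new bucket ranges reaches the running client through the broker, while a newly started client reads the latest version from the cache. Subscribing before reading the cache, combined with the version check, keeps a restarting client from missing or regressing an update that arrives between the two steps.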

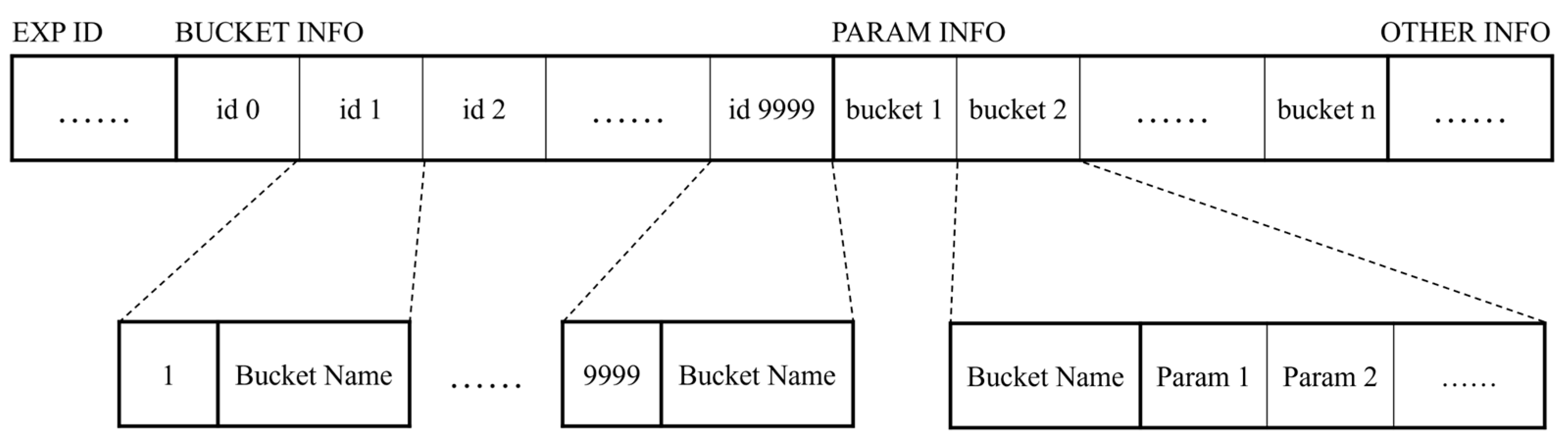

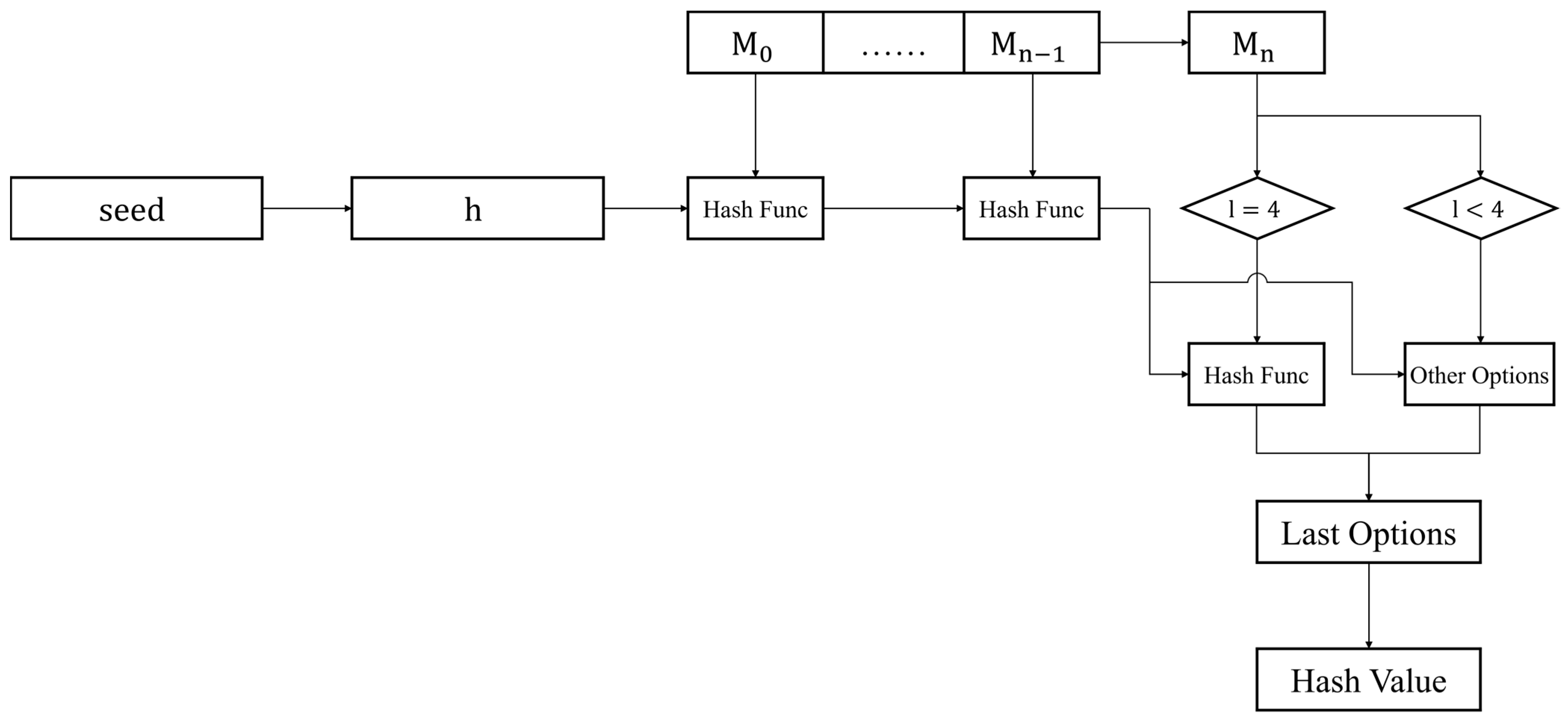
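Traffic operations such as expanding experimental traffic or deleting buckets presuppose that users are mapped to buckets. The text does not specify that mapping. A common approach, sketched here under that assumption, hashes a user identifier together with a per-layer salt into a fixed number of buckets and gives each group a set of bucket ranges. `NUM_BUCKETS`, the salt string, and the range layout are hypothetical choices, and SHA-256 is used only as a convenient stable hash.

```python
import hashlib
from typing import Dict, List, Optional, Tuple

NUM_BUCKETS = 100  # hypothetical bucket count per layer

Traffic = Dict[str, List[Tuple[int, int]]]  # group -> inclusive bucket ranges


def bucket_of(user_id: str, layer_salt: str, num_buckets: int = NUM_BUCKETS) -> int:
    """Map a user to a stable bucket in [0, num_buckets) for one layer."""
    digest = hashlib.sha256(f"{layer_salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_buckets


def assign_group(user_id: str, layer_salt: str, traffic: Traffic) -> Optional[str]:
    """Return the group whose bucket ranges contain the user, or None if not enrolled."""
    bucket = bucket_of(user_id, layer_salt)
    for group, ranges in traffic.items():
        if any(first <= bucket <= last for first, last in ranges):
            return group
    return None


# Hypothetical example: 20% of traffic, then expanded to 40% by adding new ranges.
traffic_v1: Traffic = {"A": [(0, 9)], "B": [(10, 19)]}
traffic_v2: Traffic = {"A": [(0, 9), (20, 29)], "B": [(10, 19), (30, 39)]}
```

Because the hash is deterministic, a user always lands in the same bucket. Expanding traffic by adding new ranges, rather than stretching existing ones, keeps every user already enrolled in a group in that same group. Deleting a range simply returns its users to the unenrolled pool.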

3.3. Strategy Configuration Information

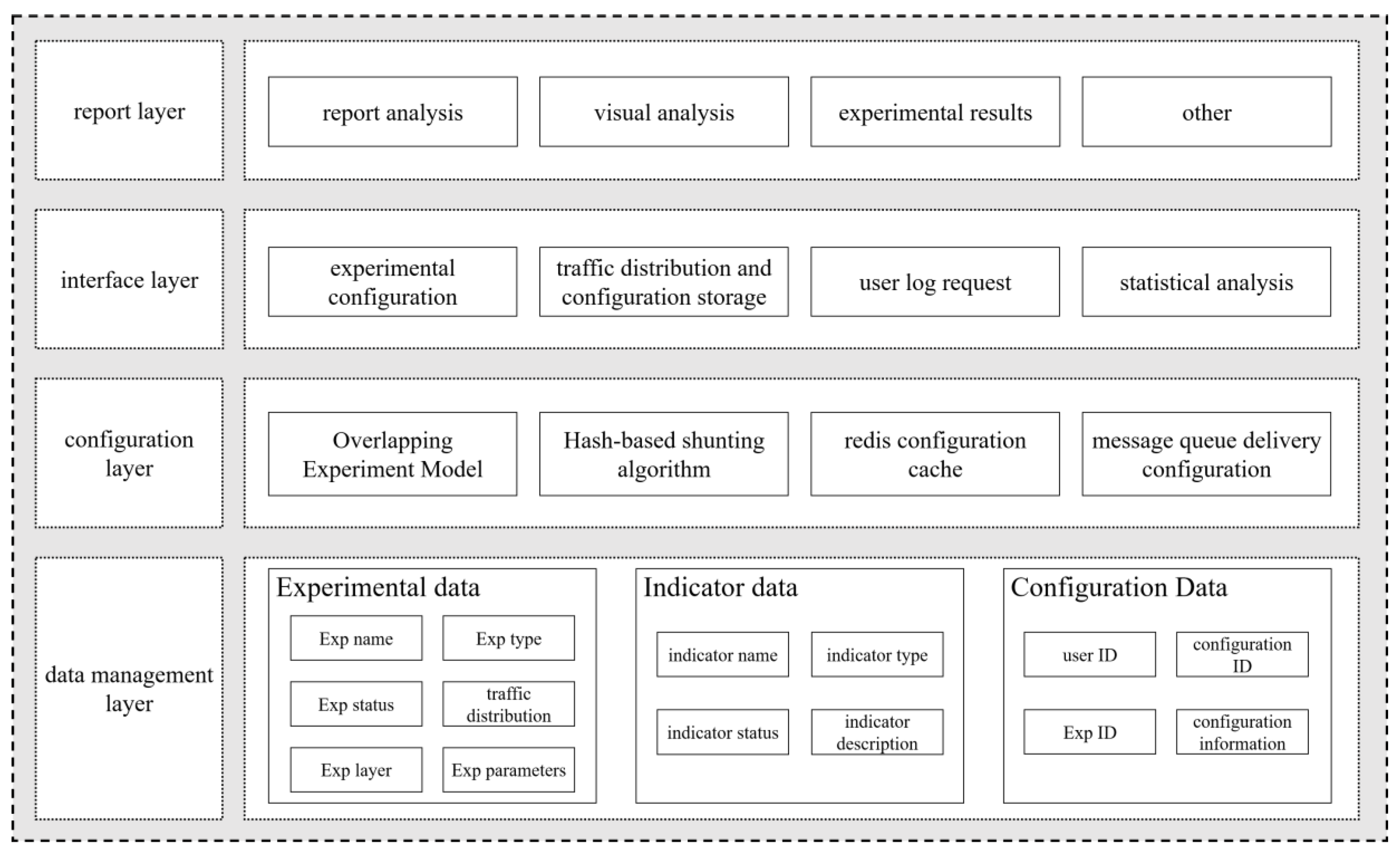

4. Configuration-Driven Traffic-Multiplexing A/B Testing System

4.1. System Structure

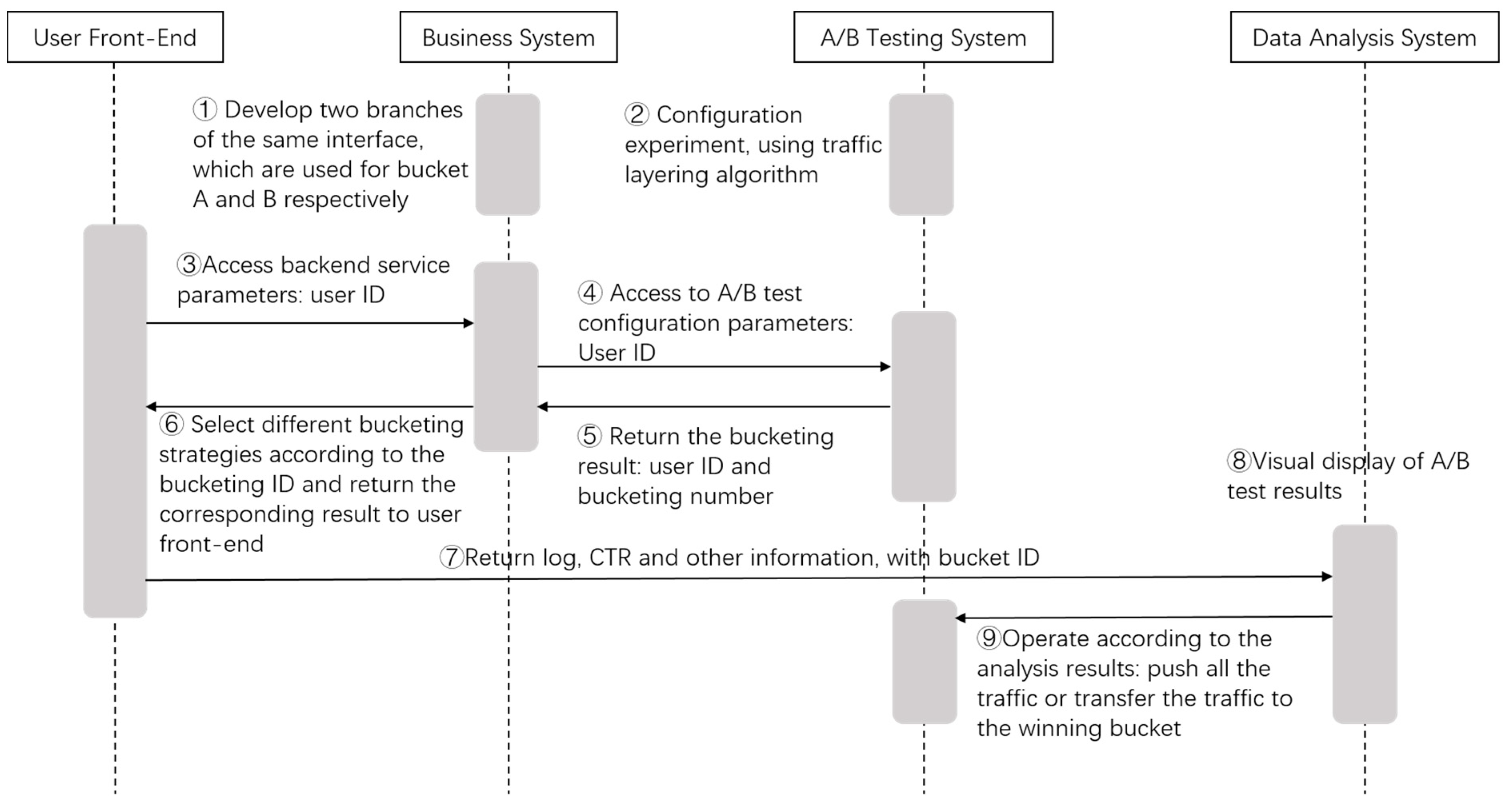

4.2. Business Process

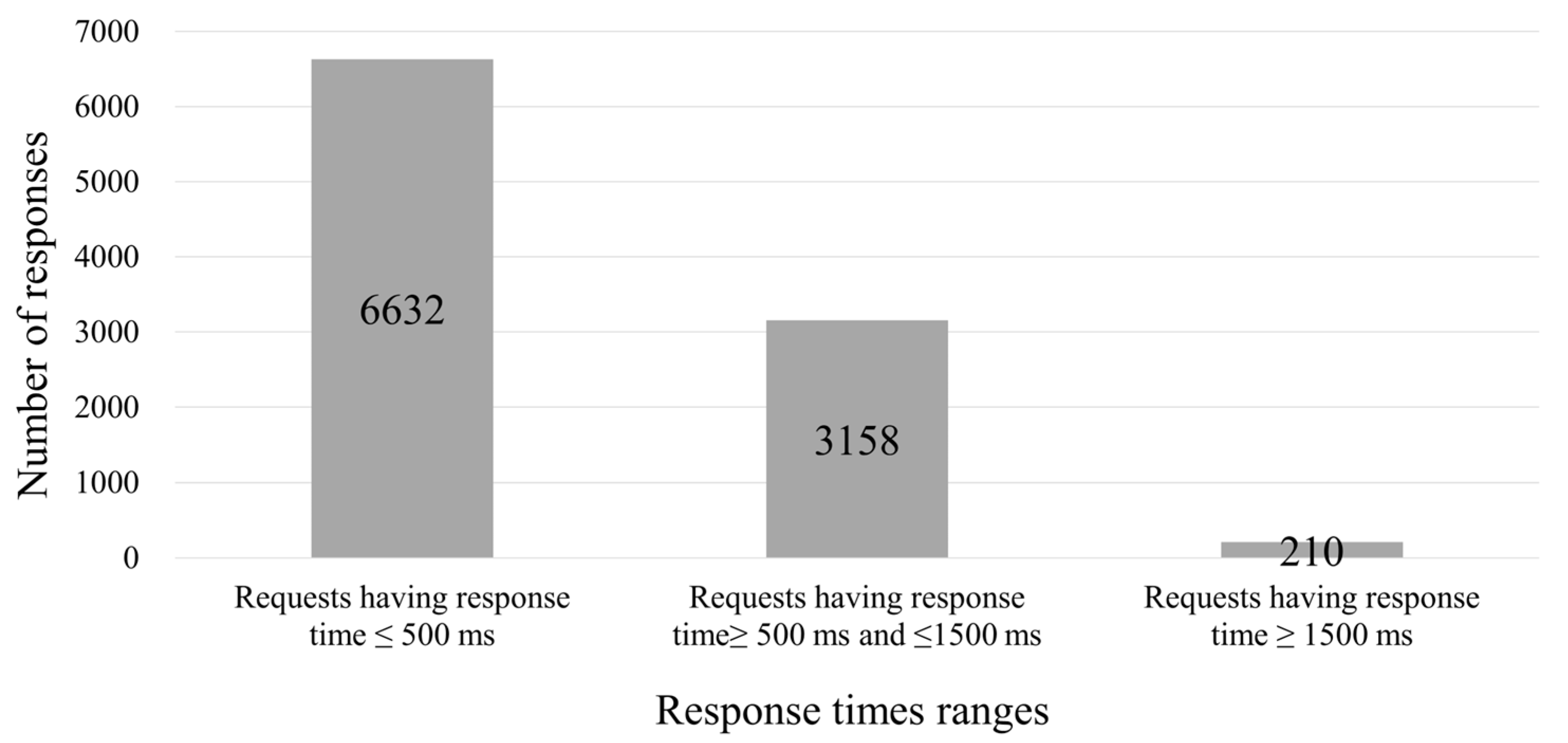

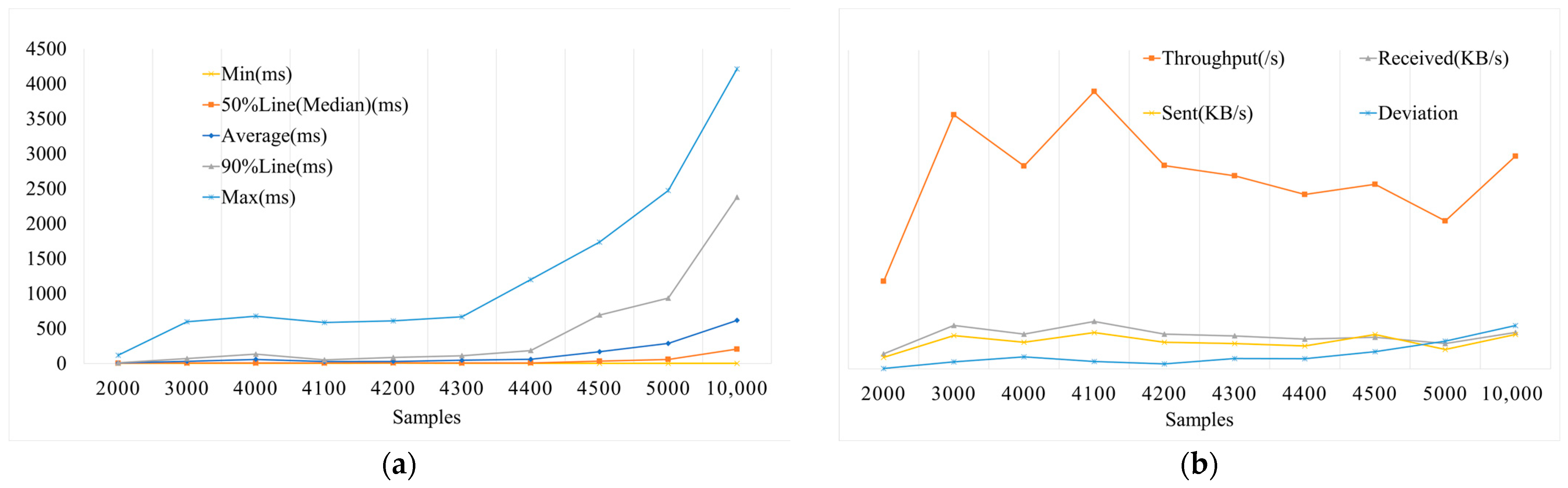

5. Experiment and Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Baier, D.; Rese, A. Increasing Conversion Rates Through Eye Tracking, TAM, A/B Tests: A Case Study. In Advanced Studies in Behaviormetrics and Data Science; Springer: Singapore, 2020; pp. 341–353.

- Kohavi, R.; Longbotham, R. Online experiments: Lessons learned. Computer 2007, 40, 103–105.

- Siroker, D.; Koomen, P. A/B Testing: The Most Powerful Way to Turn Clicks into Customers; John Wiley & Sons: Hoboken, NJ, USA, 2013.

- Tang, D.; Agarwal, A.; O’Brien, D.; Meyer, M. Overlapping Experiment Infrastructure: More, Better, Faster Experimentation. In Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Washington, DC, USA, 24–28 July 2010; pp. 17–26.

- Kohavi, R.; Longbotham, R.; Sommerfield, D.; Henne, R.M. Controlled experiments on the web: Survey and practical guide. Data Min. Knowl. Discov. 2009, 18, 140–181.

- Azevedo, E.M.; Deng, A.; Montiel Olea, J.L.; Rao, J.; Weyl, E.G. A/B testing with fat tails. J. Political Econ. 2020, 128, 46–54.

- Deng, A.; Lu, J.; Litz, J. Trustworthy analysis of online A/B tests: Pitfalls, challenges and solutions. In Proceedings of the Tenth ACM International Conference on Web Search and Data Mining; ACM: New York, NY, USA, 2017; pp. 641–649.

- Hill, D.N.; Nassif, H.; Liu, Y.; Iyer, A.; Vishwanathan, S.V.N. An Efficient Bandit Algorithm for Realtime Multivariate Optimization. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 1813–1821.

- Bojinov, I.; Chen, A.; Liu, M. The Importance of Being Causal. In Harvard Data Science Review; MIT Press: Cambridge, MA, USA, 2020; p. 2.

- Saveski, M.; Pouget-Abadie, J.; Saint-Jacques, G.; Duan, W.; Ghosh, S.; Xu, Y.; Airoldi, E.M. Detecting Network Effects: Randomizing over Randomized Experiments. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 1027–1035.

- Jiang, S.; Martin, J.; Wilson, C. Who’s the Guinea Pig? Investigating Online A/B/n Tests in-the-Wild. In Proceedings of the Conference on Fairness, Accountability, and Transparency, Atlanta, GA, USA, 29–31 January 2019; pp. 201–210.

- Johari, R.; Koomen, P.; Pekelis, L. Peeking at A/B Tests: Why It Matters, and What to Do about It. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 1517–1525.

- Claeys, E.; Gancarski, P.; Maumy-Bertrand, M.; Wassner, H. Dynamic allocation optimization in A/B tests using classification-based preprocessing. IEEE Trans. Knowl. Data Eng. 2021, 35, 335–349.

- Nandy, P.; Basu, K.; Chatterjee, S.; Tu, Y. A/B testing in dense large-scale networks: Design and inference. Adv. Neural Inf. Process. Syst. 2020, 33, 2870–2880.

- Kokkonis, G.; Psannis, K.E.; Roumeliotis, M. Network adaptive flow control algorithm for haptic data over the internet–NAFCAH. In International Conference on Genetic and Evolutionary Computing; Springer: Cham, Switzerland, 2015; pp. 93–102.

- Deng, A.; Lu, J.; Chen, S. Continuous monitoring of A/B tests without pain: Optional stopping in Bayesian testing. In Proceedings of the 2016 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Montreal, QC, Canada, 17–19 October 2016; pp. 243–252.

- King, R.; Churchill, E.F.; Tan, C. Designing with Data: Improving the User Experience with A/B Testing; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2017.

- Yamaguchi, F.; Nishi, H. Hardware-based hash functions for network applications. In Proceedings of the 2013 19th IEEE International Conference on Networks (ICON), IEEE, Singapore, 11–13 December 2013; pp. 1–6.

| Component | Configuration |
|---|---|
| CPU | AMD Ryzen 9 3900 12-Core Processor |
| GPU | NVIDIA GeForce RTX 2070 8 GB |
| RAM | 32 GB |
| Storage | 512 GB SSD + 1.8 TB HDD |

| Metric | Value |
|---|---|
| Average | 410 ms |
| 50% Line (median) | 204 ms |
| 90% Line | 1244 ms |
| 95% Line | 1336 ms |
| 99% Line | 1761 ms |
| Min | 3 ms |
| Max | 1835 ms |
| Error rate | 0.00% |
| Standard deviation | 475 ms |
| Throughput | 2335.4 requests/s |
| Received | 399.1 KB/s |
| Sent | 305.6 KB/s |
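To make the percentile rows concrete: an “N% Line” value is the response time at or below which N% of the samples fall. The sketch below computes such a summary from raw latencies using the nearest-rank percentile definition. Load-testing tools may use slightly different percentile or deviation formulas, so this is an illustration, not a reproduction of how the table was produced. The function names are hypothetical, population standard deviation is an assumption, and any latencies fed to it in examples are made up and unrelated to the measured results.

```python
import math
import statistics


def percentile_nearest_rank(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank into the sorted samples
    return ordered[rank - 1]


def summarize(latencies_ms, elapsed_s, errors=0):
    """Aggregate-report style summary of one run's response times (in ms)."""
    if not latencies_ms:
        raise ValueError("no samples")
    n = len(latencies_ms)
    return {
        "average_ms": statistics.fmean(latencies_ms),
        "median_ms": percentile_nearest_rank(latencies_ms, 50),
        "p90_ms": percentile_nearest_rank(latencies_ms, 90),
        "p95_ms": percentile_nearest_rank(latencies_ms, 95),
        "p99_ms": percentile_nearest_rank(latencies_ms, 99),
        "min_ms": min(latencies_ms),
        "max_ms": max(latencies_ms),
        "std_dev_ms": statistics.pstdev(latencies_ms),  # population std dev (assumed)
        "error_rate": errors / n,
        "throughput_per_s": n / elapsed_s,  # completed requests per second
    }
```

For an even number of samples, the nearest-rank median is the lower of the two middle values, which can differ from `statistics.median`, since the latter averages them. A gap between the average (410 ms) and the median (204 ms), as in the table, indicates a right-skewed latency distribution.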

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sheng, J.; Liu, H.; Wang, B. Research on the Optimization of A/B Testing System Based on Dynamic Strategy Distribution. Processes 2023, 11, 912. https://doi.org/10.3390/pr11030912

Sheng J, Liu H, Wang B. Research on the Optimization of A/B Testing System Based on Dynamic Strategy Distribution. Processes. 2023; 11(3):912. https://doi.org/10.3390/pr11030912

Chicago/Turabian Style: Sheng, Jinfang, Huadan Liu, and Bin Wang. 2023. "Research on the Optimization of A/B Testing System Based on Dynamic Strategy Distribution" Processes 11, no. 3: 912. https://doi.org/10.3390/pr11030912

APA Style: Sheng, J., Liu, H., & Wang, B. (2023). Research on the Optimization of A/B Testing System Based on Dynamic Strategy Distribution. Processes, 11(3), 912. https://doi.org/10.3390/pr11030912