One-Layer Real-Time Optimization Using Reinforcement Learning: A Review with Guidelines

Abstract

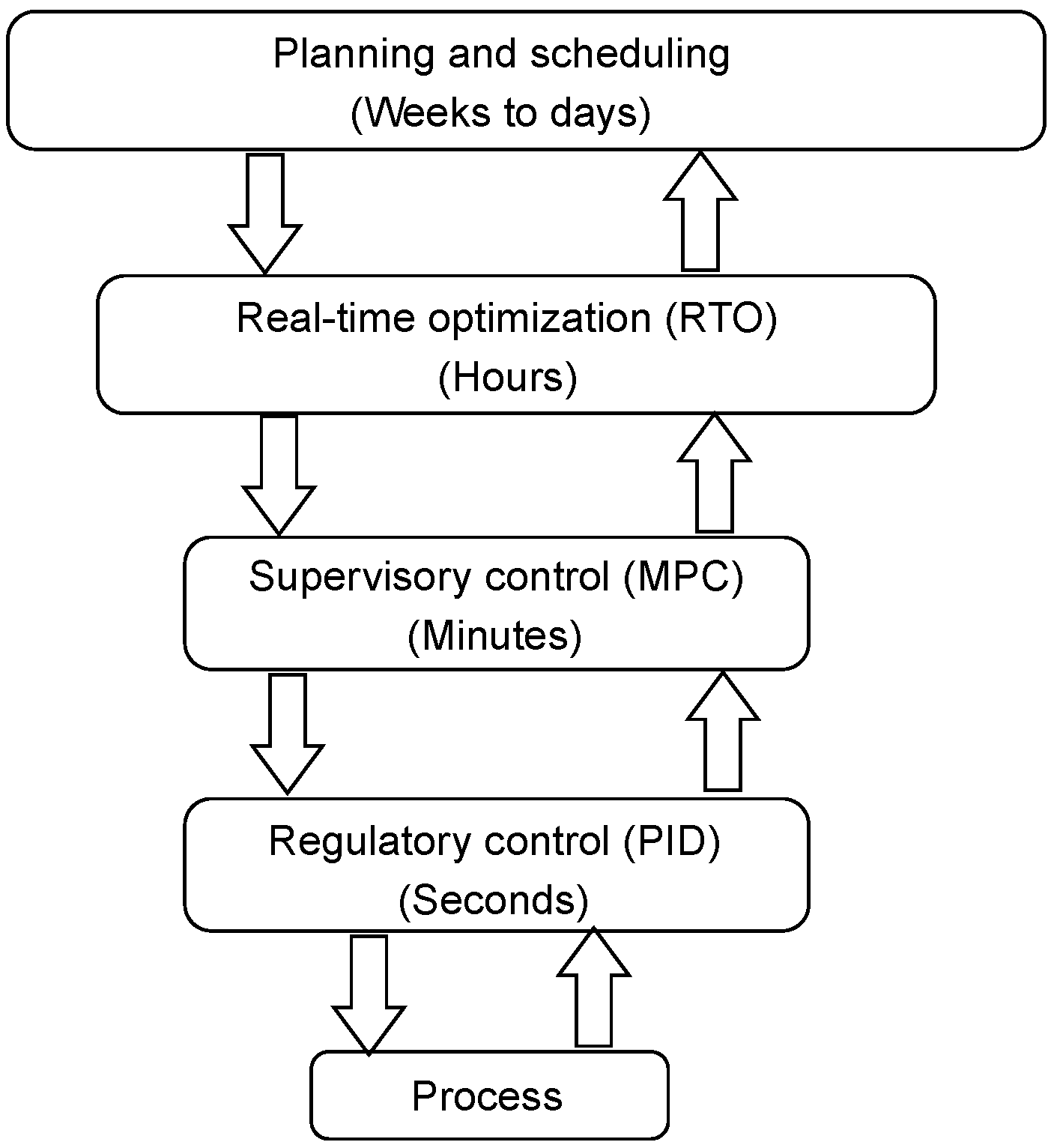

1. Introduction

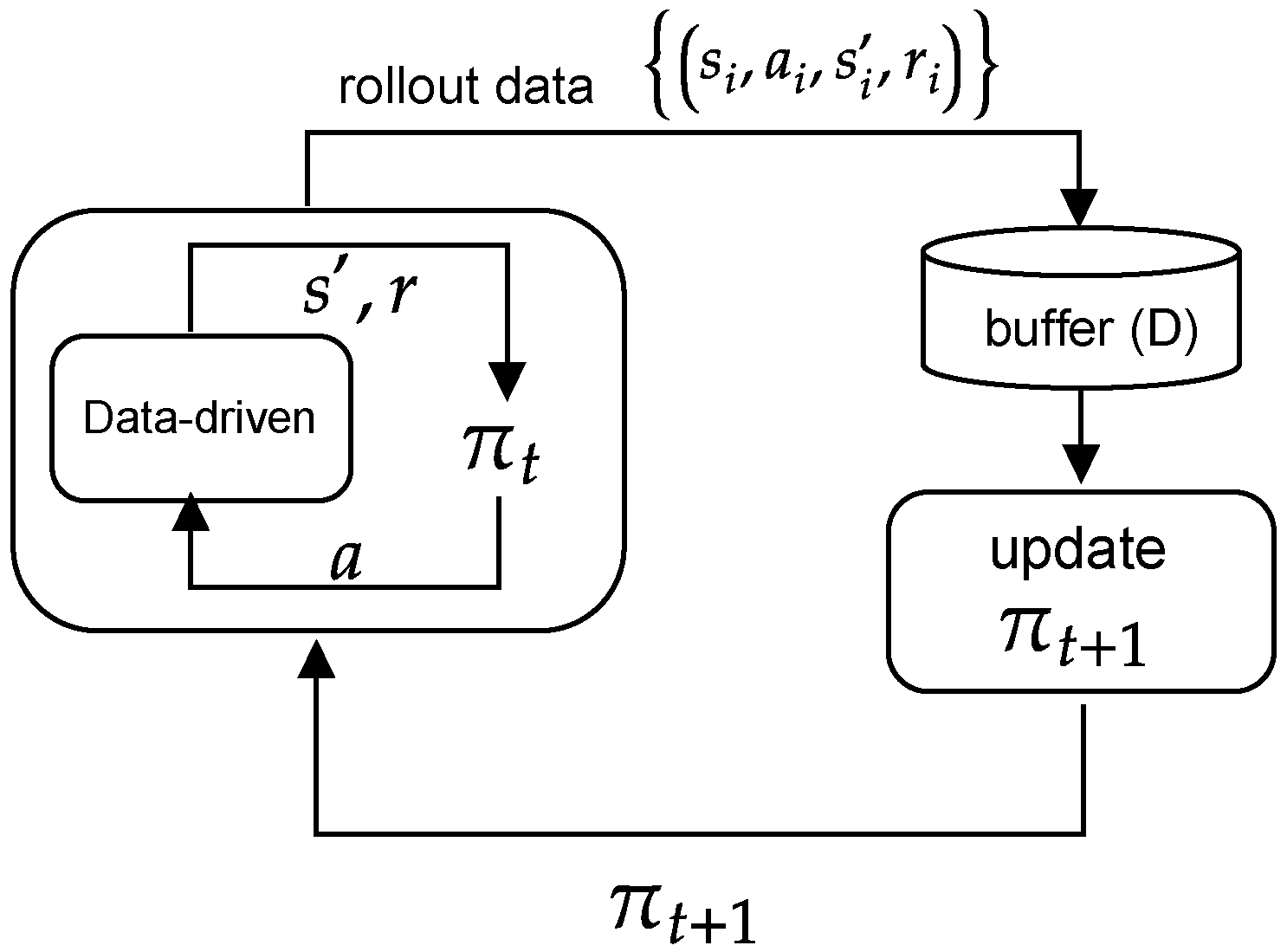

2. Reinforcement Learning

2.1. Markov Decision Process

2.2. Algorithms

2.3. Deep Reinforcement Learning

3. Applications

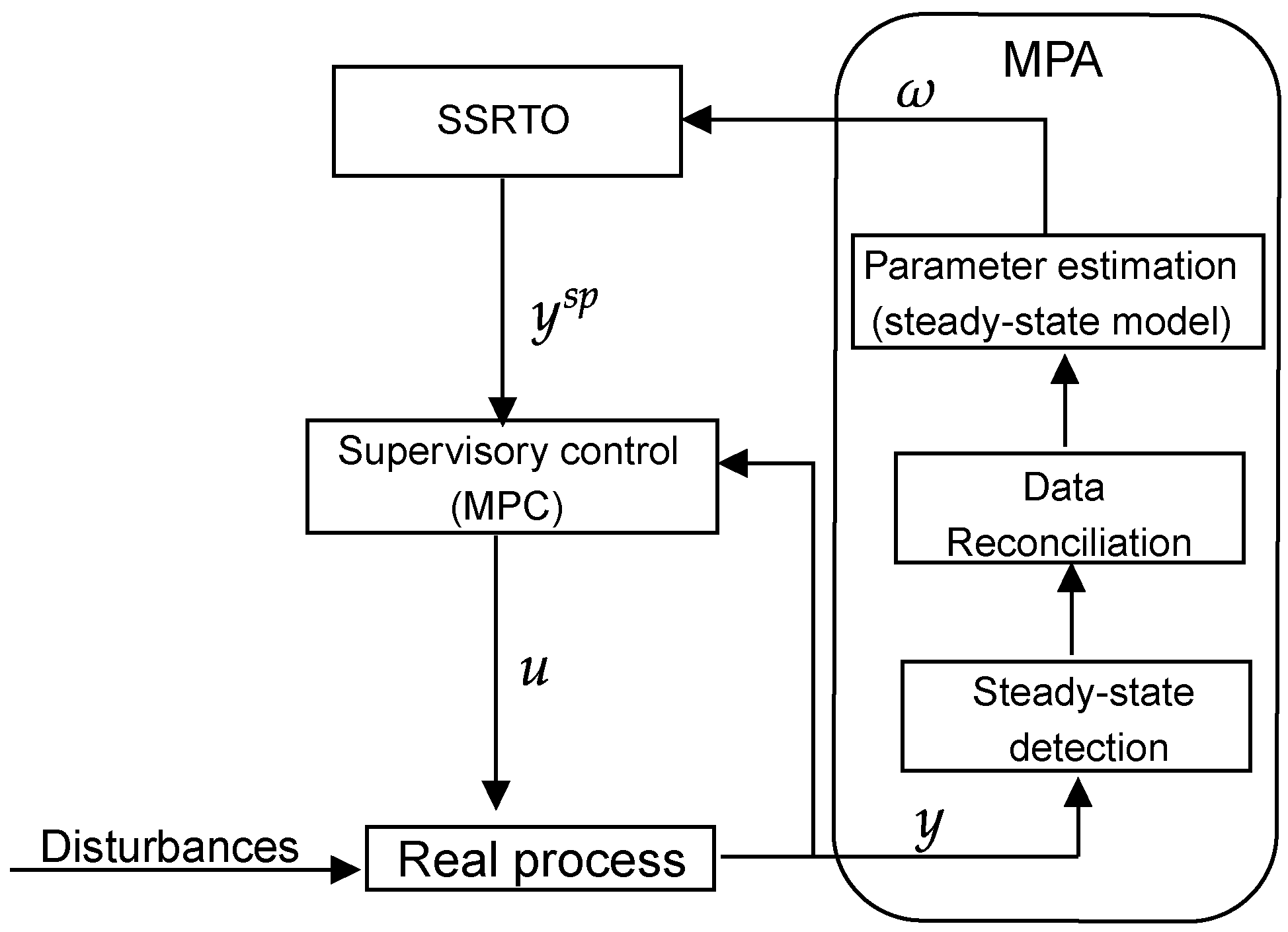

3.1. SSRTO

3.2. Supervisory Control

3.3. Regulatory Control

4. Benchmark Study of Reinforcement Learning

4.1. Offline Control Experiment

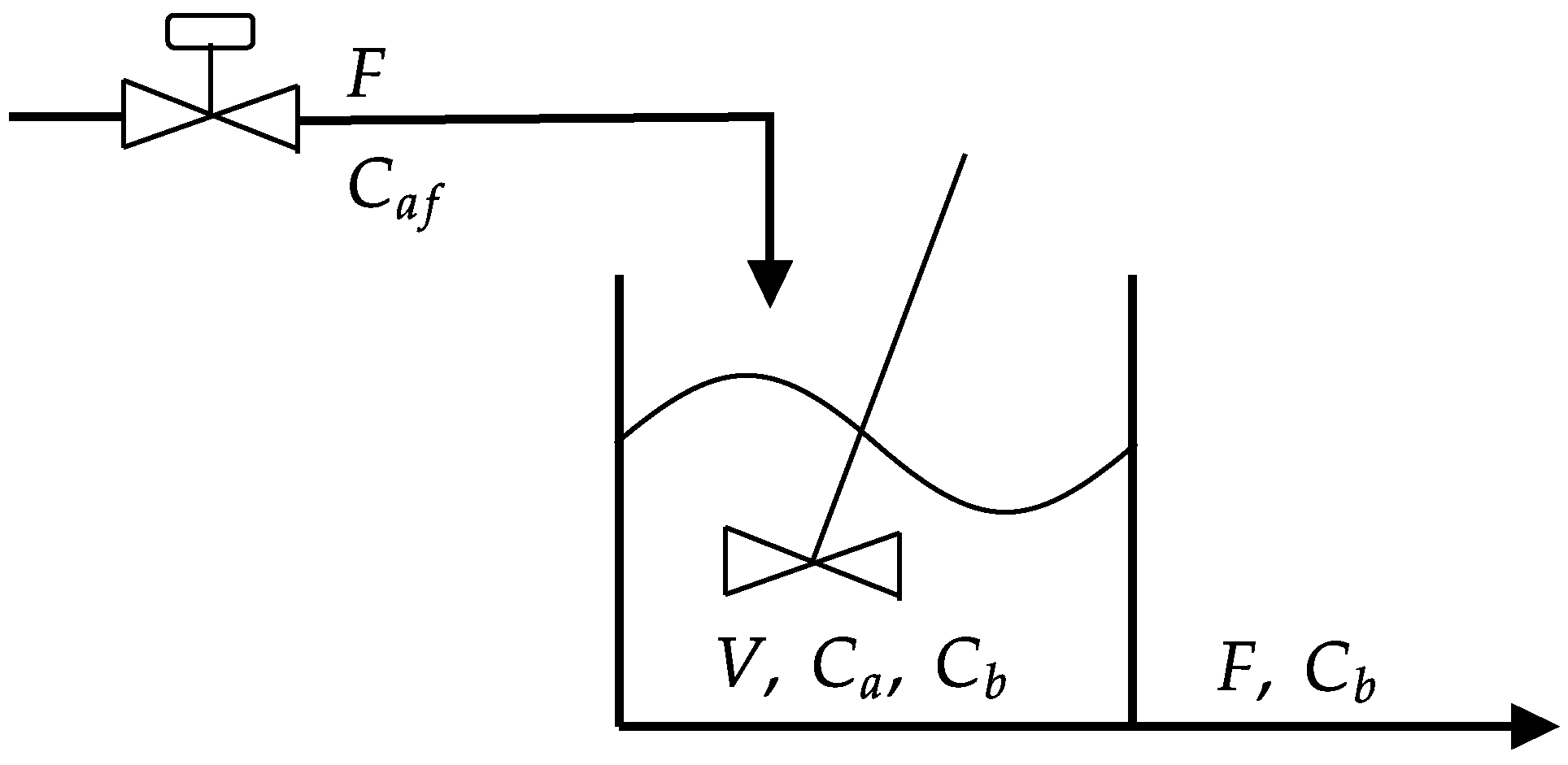

4.1.1. Dynamic Model of the CSTR

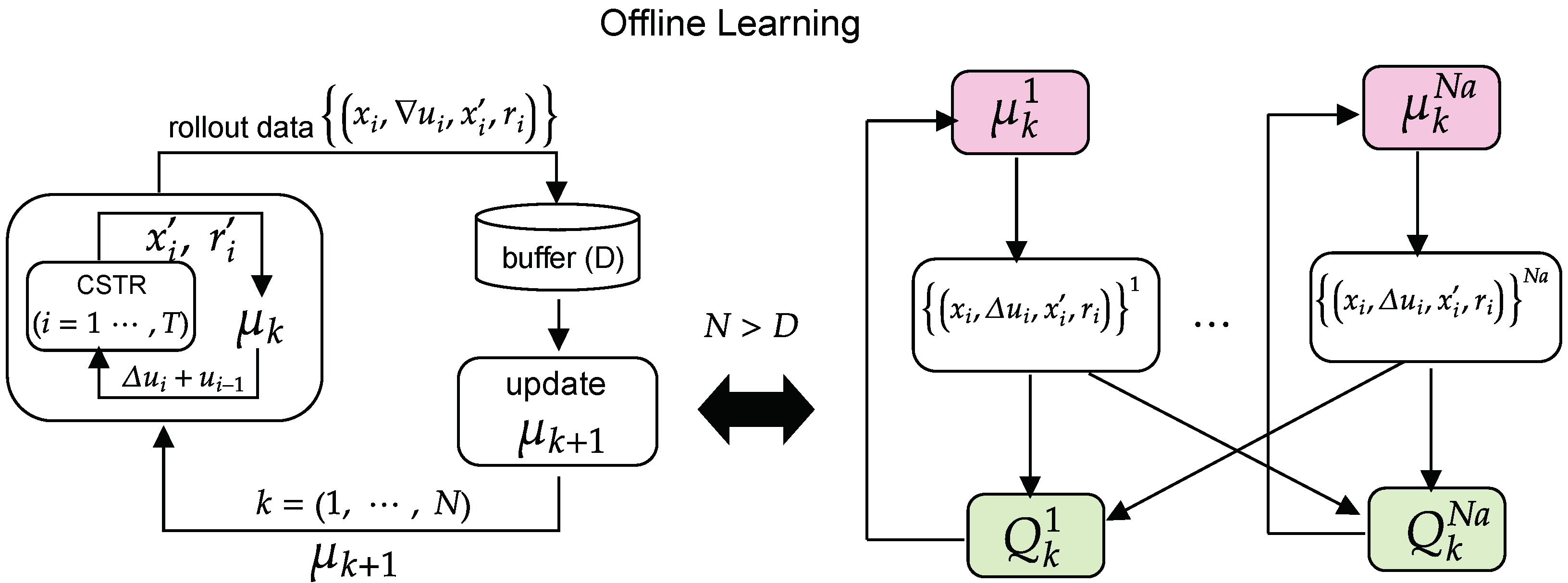

4.1.2. RL Framework

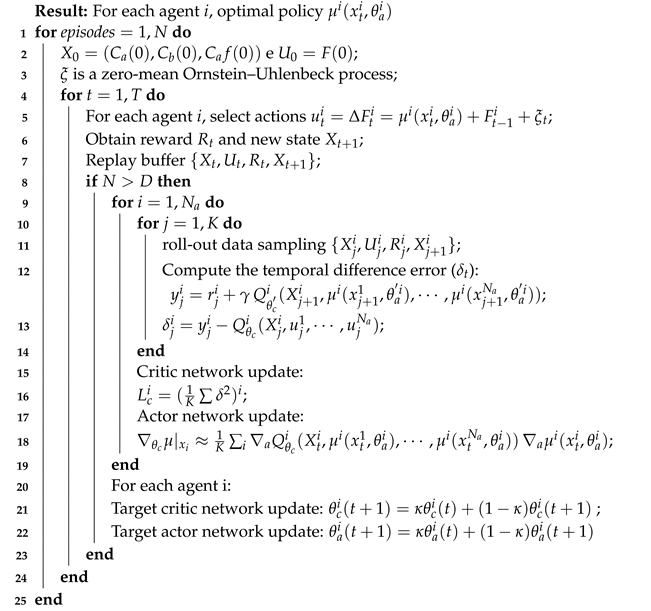

Algorithm 1: MADDPG algorithm.

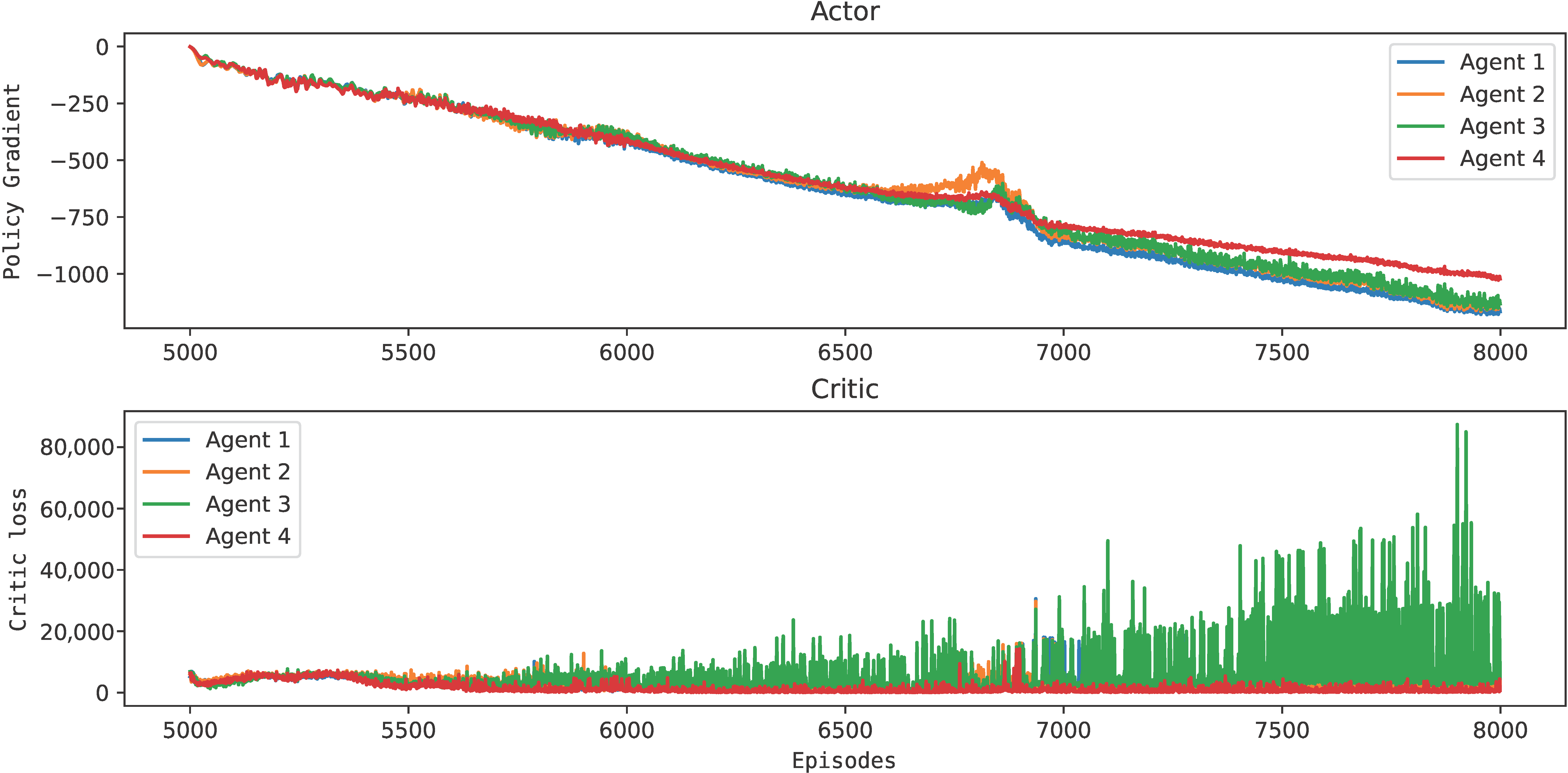

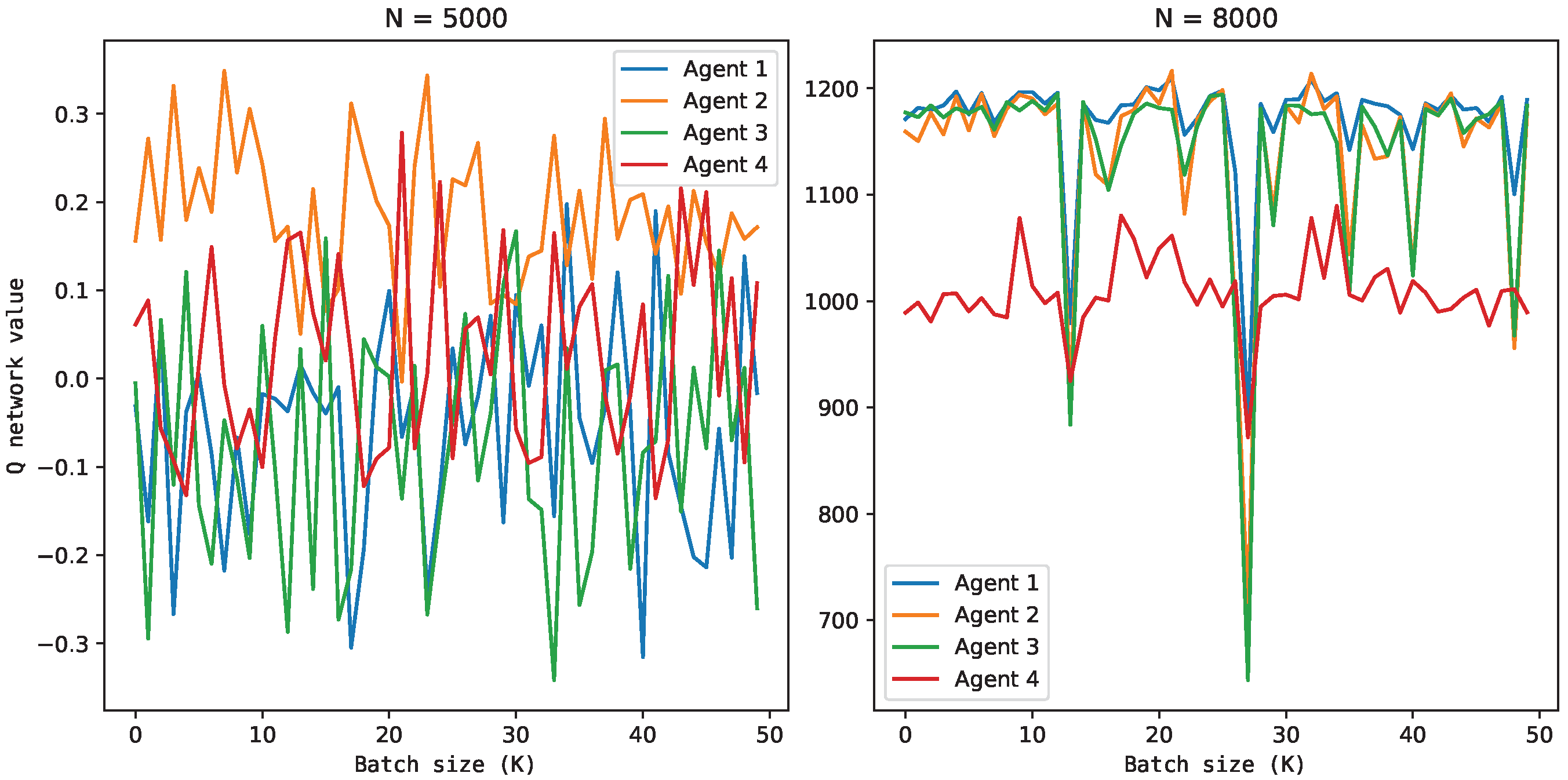

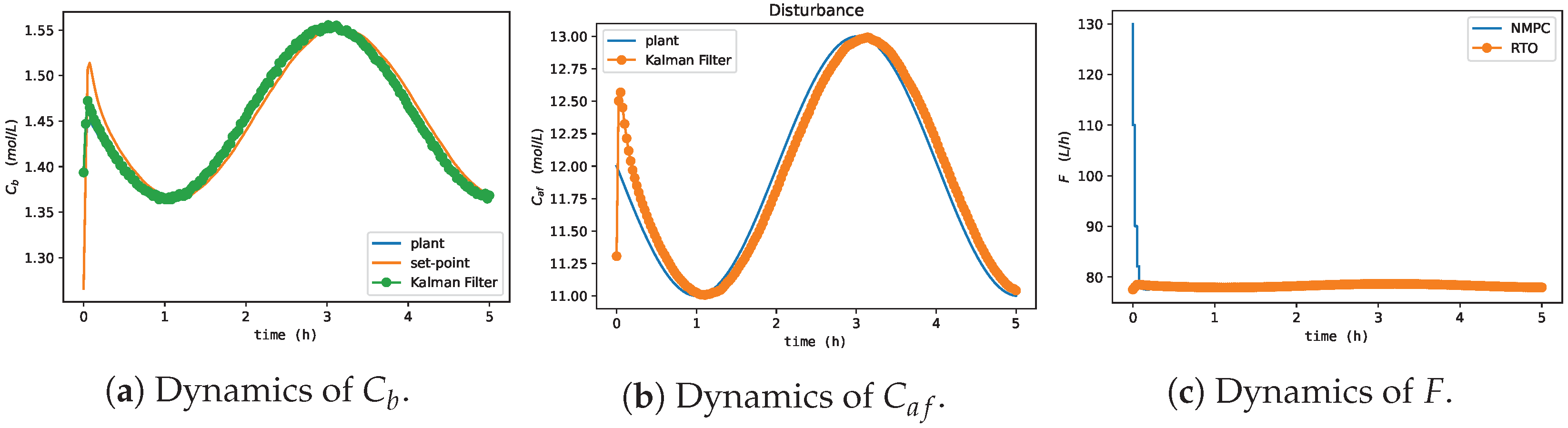
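The standard MADDPG updates, as formulated by Lowe et al. [74], are summarized below for reference. The notation is an assumption and may not match Algorithm 1. Each agent $i$ has a deterministic actor $\mu_i$ (parameters $\theta_i$) acting on its own observation $o_i$, and a centralized critic $Q_i$ (parameters $\phi_i$) that sees the joint state $x$ and the actions of all $M$ agents. Primed quantities denote target networks or next-step samples, and $k$ indexes a minibatch of $K$ transitions drawn from the replay buffer. $\gamma$ and $K$ correspond to the discount factor and batch size in the hyperparameter table, and $\tau$ is presumably the listed "time constant" of the soft target update.

```latex
\begin{aligned}
y_k &= r_{i,k} + \gamma\, Q'_{i}\big(x'_k, a'_1, \ldots, a'_M\big)\Big|_{a'_j = \mu'_j(o'_{j,k})} \\
\mathcal{L}(\phi_i) &= \frac{1}{K}\sum_{k=1}^{K}\Big(y_k - Q_{i}\big(x_k, a_{1,k}, \ldots, a_{M,k}\big)\Big)^2 \\
\nabla_{\theta_i} J(\mu_i) &\approx \frac{1}{K}\sum_{k=1}^{K} \nabla_{\theta_i}\mu_i(o_{i,k})\, \nabla_{a_i} Q_{i}\big(x_k, a_{1,k}, \ldots, a_i, \ldots, a_{M,k}\big)\Big|_{a_i = \mu_i(o_{i,k})} \\
\theta'_i &\leftarrow \tau\,\theta_i + (1-\tau)\,\theta'_i, \qquad \phi'_i \leftarrow \tau\,\phi_i + (1-\tau)\,\phi'_i
\end{aligned}
```

Each critic minimizes $\mathcal{L}(\phi_i)$, and each actor ascends $J(\mu_i)$. Because every critic conditions on the actions of all $M$ agents, its input grows with the number of agents.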

4.1.3. Validation of the Control Experiment

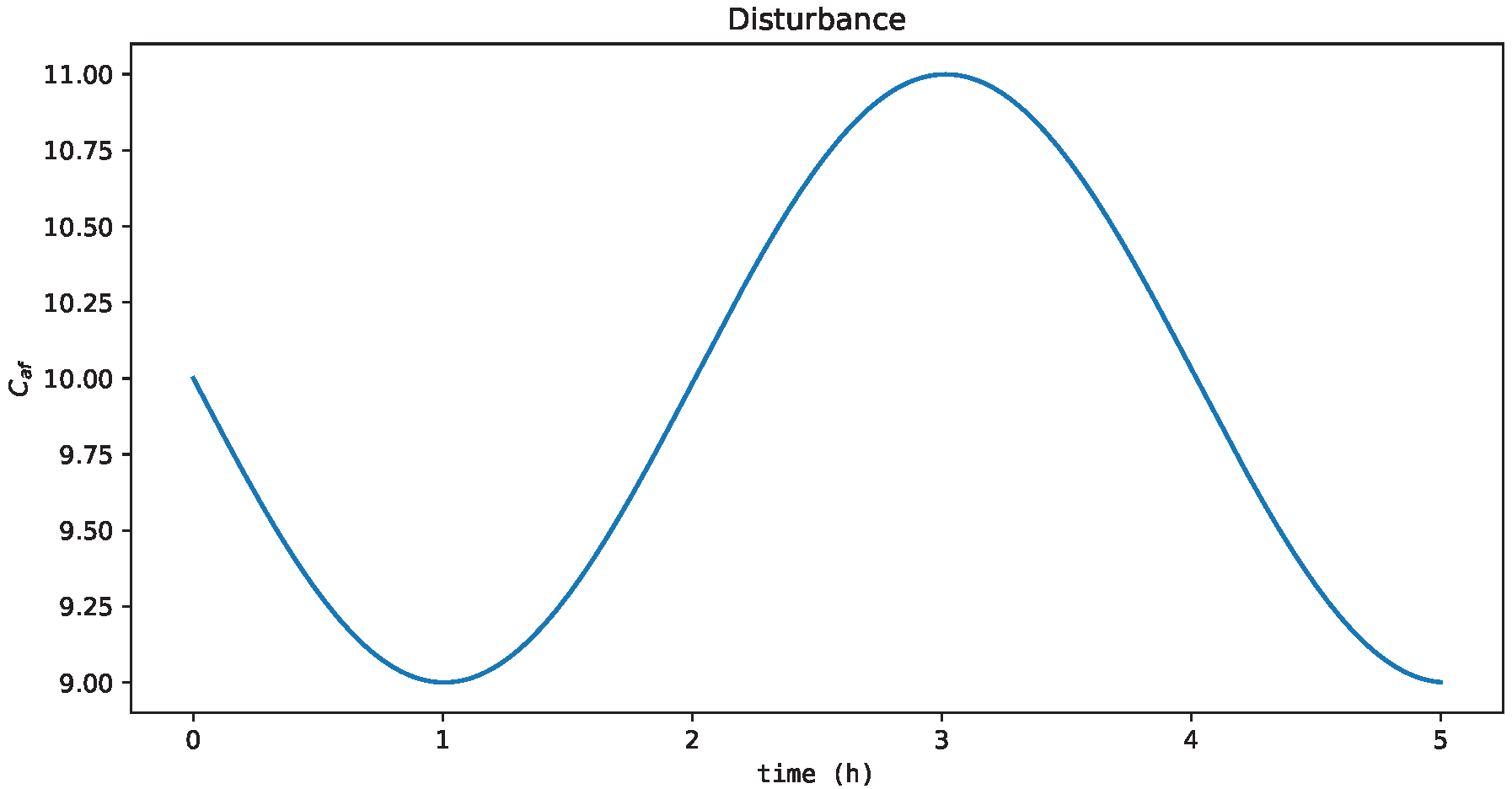

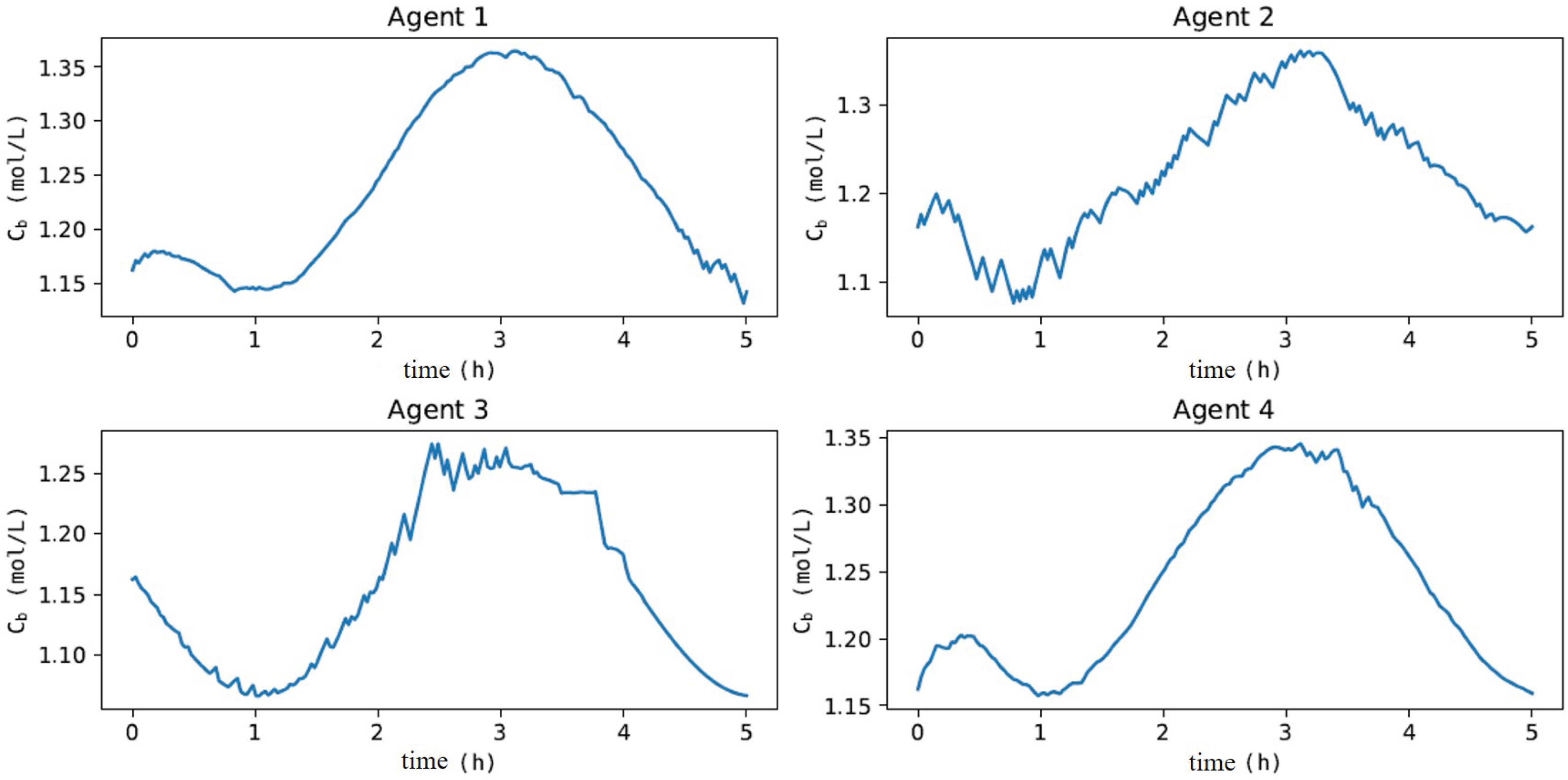

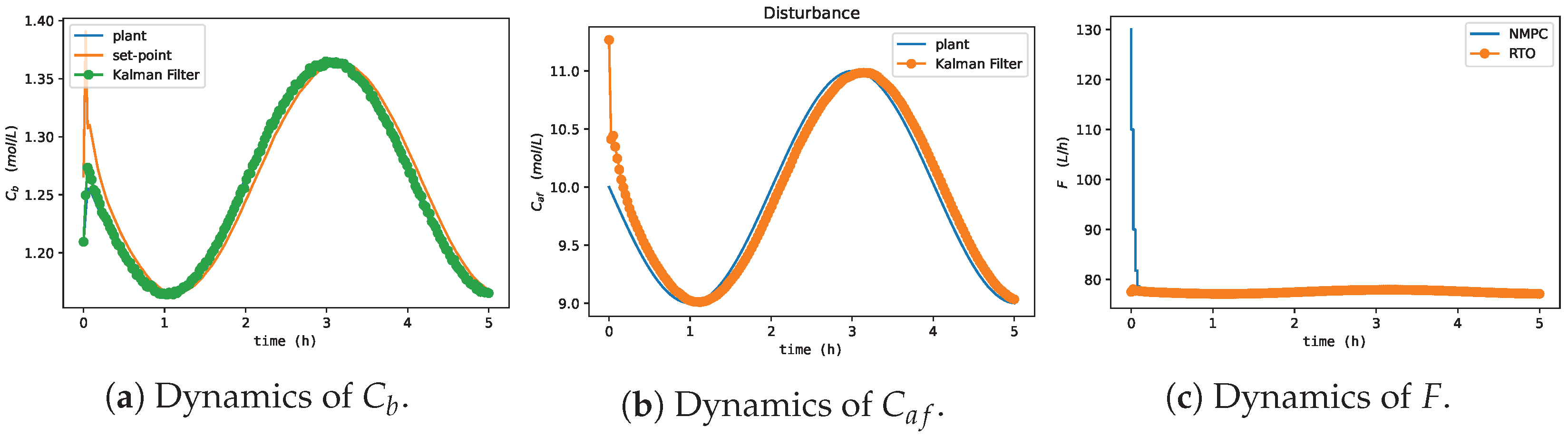

Validation for Process Condition 1

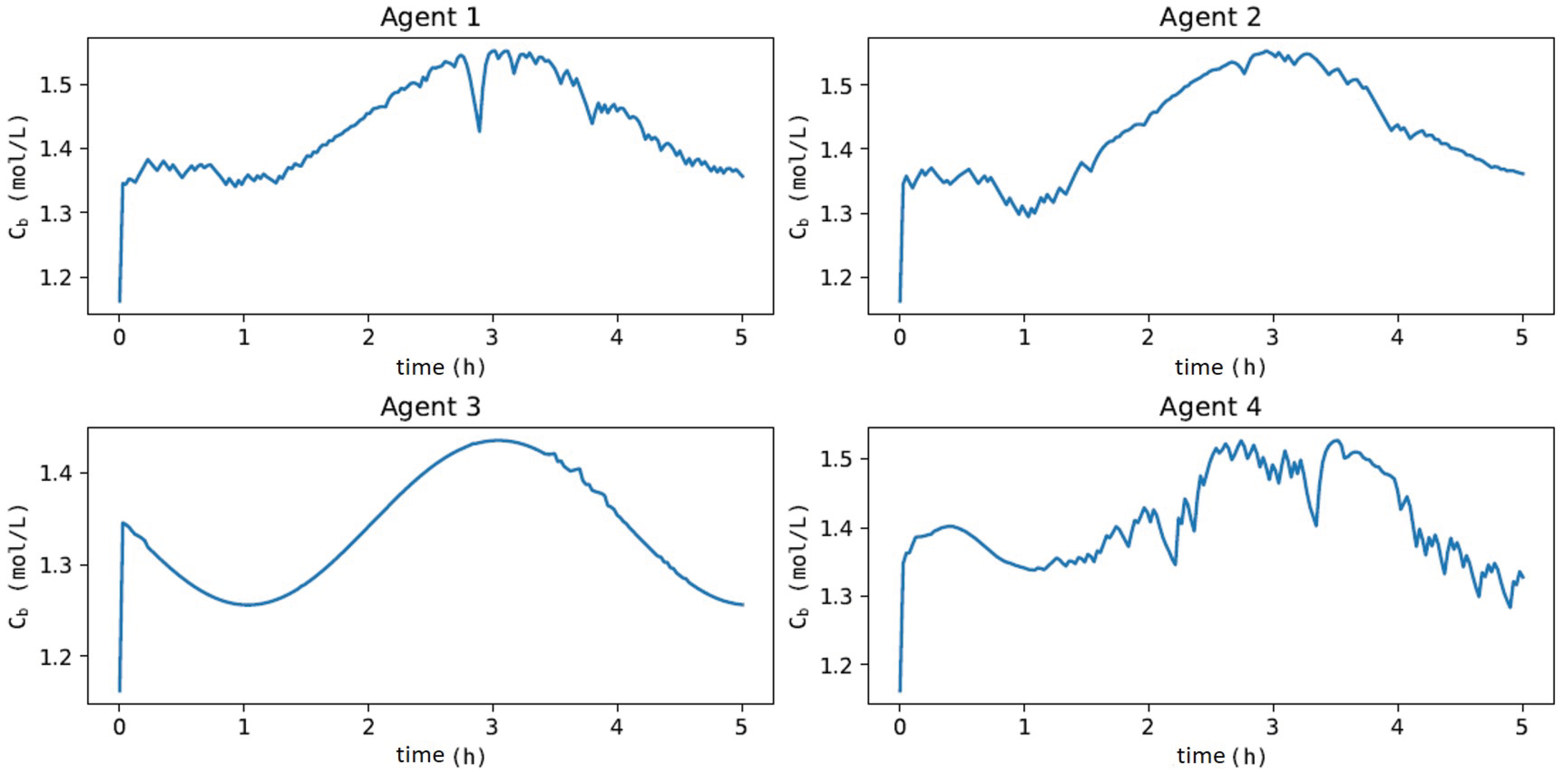

Validation for Process Condition 2

5. Conclusions

- A large number of RL applications do not consider the economic optimization of the plant;

- Almost all applications are restricted to simulation studies or to validation with bench-scale control experiments;

- There is a consensus in the literature that extensive offline training is indispensable to obtain adequate control agents regardless of the process;

- The reinforcement signal (reward) must be defined rigorously to guide the agents’ learning adequately: the agents must be penalized when the process is far from the condition considered ideal or when their actions result in impossible or infeasible state transitions;

- The benchmark study of RL confirmed the hypothesis that cooperative control agents based on the MADDPG algorithm (i.e., the one-layer approach) could be an alternative to the HRTO approach;

- Learning with cooperative control agents improved the learning rate of Agents 1 and 4 through the experiences collected with the sub-optimal policies of Agents 2 and 3;

- A parallel implementation of MADDPG is feasible;

- The benefits of collecting experiences with MADDPG depend on a reliable process simulation;

- Learning with MADDPG is fundamentally more difficult than with a single agent (DDPG), especially for large-scale processes, owing to the curse of dimensionality;

- RL algorithms that handle safety constraints must be developed to ensure control stability, and applications to small-scale processes should be investigated.
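The reward guideline above can be made concrete with a small sketch. This is a minimal illustration under stated assumptions and not the reward used in the benchmark study. The function name `shaped_reward`, the quadratic distance penalty, the box bounds that define feasibility, and the default `weight` and `infeasible_penalty` values are all hypothetical choices made for illustration.

```python
from typing import Sequence


def shaped_reward(state: Sequence[float], ideal: Sequence[float],
                  lower: Sequence[float], upper: Sequence[float],
                  economic_gain: float, weight: float = 1.0,
                  infeasible_penalty: float = 100.0) -> float:
    """Hypothetical reward: reward the economic objective, penalize the distance
    from the ideal condition, and strongly penalize infeasible states."""
    # Infeasible transition (assumed definition): a state variable leaves its admissible range
    if any(x < lo or x > hi for x, lo, hi in zip(state, lower, upper)):
        return -infeasible_penalty
    # Quadratic penalty on the distance to the condition considered ideal
    distance_sq = sum((x - x_ref) ** 2 for x, x_ref in zip(state, ideal))
    return economic_gain - weight * distance_sq


# Example with made-up numbers: one state variable bounded in [0, 1]
print(shaped_reward([0.5], [0.5], [0.0], [1.0], economic_gain=1.2))  # 1.2 (at the ideal point)
print(shaped_reward([1.5], [0.5], [0.0], [1.0], economic_gain=1.2))  # -100.0 (infeasible state)
```

A fixed, large negative reward for infeasible states is one simple way to keep the agent away from them. A graded penalty that grows with the size of the constraint violation is a common alternative when a sharp cliff in the reward makes learning unstable.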

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Skogestad, S. Control structure design for complete chemical plants. Comput. Chem. Eng. 2004, 28, 219–234.

2. Skogestad, S. Plantwide control: The search for the self-optimizing control structure. J. Process Control 2000, 10, 487–507.

3. Forbes, J.F.; Marlin, T.E. Model accuracy for economic optimizing controllers: The bias update case. Ind. Eng. Chem. Res. 1994, 33, 1919–1929.

4. Miletic, I.; Marlin, T. Results analysis for real-time optimization (RTO): Deciding when to change the plant operation. Comput. Chem. Eng. 1996, 20, S1077–S1082.

5. Mochizuki, S.; Saputelli, L.A.; Kabir, C.S.; Cramer, R.; Lochmann, M.; Reese, R.; Harms, L.; Sisk, C.; Hite, J.R.; Escorcia, A. Real time optimization: Classification and assessment. In Proceedings of the SPE Annual Technical Conference and Exhibition, Houston, TX, USA, 27–29 September 2004.

6. Bischoff, K.B.; Denn, M.M.; Seinfeld, J.H.; Stephanopoulos, G.; Chakraborty, A.; Peppas, N.; Ying, J.; Wei, J. Advances in Chemical Engineering; Elsevier: Amsterdam, The Netherlands, 2001.

7. Krishnamoorthy, D.; Skogestad, S. Real-Time Optimization as a Feedback Control Problem—A Review. Comput. Chem. Eng. 2022, 161, 107723.

8. Sequeira, S.E.; Graells, M.; Puigjaner, L. Real-time evolution for on-line optimization of continuous processes. Ind. Eng. Chem. Res. 2002, 41, 1815–1825.

9. Adetola, V.; Guay, M. Integration of real-time optimization and model predictive control. J. Process Control 2010, 20, 125–133.

10. Backx, T.; Bosgra, O.; Marquardt, W. Integration of model predictive control and optimization of processes: Enabling technology for market driven process operation. IFAC Proc. Vol. 2000, 33, 249–260.

11. Yip, W.; Marlin, T.E. The effect of model fidelity on real-time optimization performance. Comput. Chem. Eng. 2004, 28, 267–280.

12. Biegler, L.; Yang, X.; Fischer, G. Advances in sensitivity-based nonlinear model predictive control and dynamic real-time optimization. J. Process Control 2015, 30, 104–116.

13. Krishnamoorthy, D.; Foss, B.; Skogestad, S. Steady-state real-time optimization using transient measurements. Comput. Chem. Eng. 2018, 115, 34–45.

14. Matias, J.O.; Le Roux, G.A. Real-time Optimization with persistent parameter adaptation using online parameter estimation. J. Process Control 2018, 68, 195–204.

15. Matias, J.; Oliveira, J.P.; Le Roux, G.A.; Jäschke, J. Steady-state real-time optimization using transient measurements on an experimental rig. J. Process Control 2022, 115, 181–196.

16. Valluru, J.; Purohit, J.L.; Patwardhan, S.C.; Mahajani, S.M. Adaptive optimizing control of an ideal reactive distillation column. IFAC-PapersOnLine 2015, 48, 489–494.

17. Zanin, A.; de Gouvea, M.T.; Odloak, D. Industrial implementation of a real-time optimization strategy for maximizing production of LPG in a FCC unit. Comput. Chem. Eng. 2000, 24, 525–531.

18. Zanin, A.; de Gouvea, M.T.; Odloak, D. Integrating real-time optimization into the model predictive controller of the FCC system. Control Eng. Pract. 2002, 10, 819–831.

19. Ellis, M.; Durand, H.; Christofides, P.D. A tutorial review of economic model predictive control methods. J. Process Control 2014, 24, 1156–1178.

20. Mayne, D.Q. Model predictive control: Recent developments and future promise. Automatica 2014, 50, 2967–2986.

21. Wang, X.; Mahalec, V.; Qian, F. Globally optimal dynamic real time optimization without model mismatch between optimization and control layer. Comput. Chem. Eng. 2017, 104, 64–75.

22. Uc-Cetina, V.; Navarro-Guerrero, N.; Martin-Gonzalez, A.; Weber, C.; Wermter, S. Survey on reinforcement learning for language processing. Artif. Intell. Rev. 2022, 1–33.

23. Kiran, B.R.; Sobh, I.; Talpaert, V.; Mannion, P.; Al Sallab, A.A.; Yogamani, S.; Pérez, P. Deep reinforcement learning for autonomous driving: A survey. IEEE Trans. Intell. Transp. Syst. 2021, 23, 4909–4926.

24. Peng, X.B.; Andrychowicz, M.; Zaremba, W.; Abbeel, P. Sim-to-real transfer of robotic control with dynamics randomization. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 3803–3810.

25. Wulfmeier, M.; Posner, I.; Abbeel, P. Mutual alignment transfer learning. In Proceedings of the Conference on Robot Learning (PMLR), Mountain View, CA, USA, 13–15 November 2017; pp. 281–290.

26. Ma, Y.; Zhu, W.; Benton, M.G.; Romagnoli, J. Continuous control of a polymerization system with deep reinforcement learning. J. Process Control 2019, 75, 40–47.

27. Dogru, O.; Wieczorek, N.; Velswamy, K.; Ibrahim, F.; Huang, B. Online reinforcement learning for a continuous space system with experimental validation. J. Process Control 2021, 104, 86–100.

28. Mowbray, M.; Petsagkourakis, P.; Chanona, E.A.D.R.; Smith, R.; Zhang, D. Safe Chance Constrained Reinforcement Learning for Batch Process Control. arXiv 2021, arXiv:2104.11706.

29. Petsagkourakis, P.; Sandoval, I.O.; Bradford, E.; Zhang, D.; del Rio-Chanona, E.A. Reinforcement learning for batch-to-batch bioprocess optimisation. In Computer Aided Chemical Engineering; Elsevier: Amsterdam, The Netherlands, 2019; Volume 46, pp. 919–924.

30. Petsagkourakis, P.; Sandoval, I.O.; Bradford, E.; Zhang, D.; del Rio-Chanona, E.A. Reinforcement learning for batch bioprocess optimization. Comput. Chem. Eng. 2020, 133, 106649.

31. Yoo, H.; Byun, H.E.; Han, D.; Lee, J.H. Reinforcement learning for batch process control: Review and perspectives. Annu. Rev. Control 2021, 52, 108–119.

32. Faria, R.D.R.; Capron, B.D.O.; Secchi, A.R.; de Souza, M.B. Where Reinforcement Learning Meets Process Control: Review and Guidelines. Processes 2022, 10, 2311.

33. Powell, K.M.; Machalek, D.; Quah, T. Real-time optimization using reinforcement learning. Comput. Chem. Eng. 2020, 143, 107077.

34. Thorndike, E.L. Animal intelligence: An experimental study of the associative processes in animals. Psychol. Rev. Monogr. Suppl. 1898, 2, i.

35. Minsky, M. Neural nets and the brain-model problem. Unpublished Doctoral Dissertation, Princeton University, Princeton, NJ, USA, 1954.

36. Bellman, R. A Markovian decision process. J. Math. Mech. 1957, 6, 679–684.

37. Bellman, R. Dynamic Programming; Princeton University Press: Princeton, NJ, USA, 1957; Volume 95.

38. Minsky, M.; Papert, S.A. Perceptrons; MIT Press: Cambridge, MA, USA, 1969.

39. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

40. Sugiyama, M. Statistical Reinforcement Learning: Modern Machine Learning Approaches; CRC Press: Boca Raton, FL, USA, 2015.

41. Watkins, C.J.C.H. Learning from Delayed Rewards; King’s College: Cambridge, UK, 1989.

42. Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: London, UK, 2018.

43. Sutton, R.S.; Barto, A.G. Introduction to Reinforcement Learning; MIT Press: Cambridge, MA, USA, 1998; Volume 135.

44. Williams, R. Toward a Theory of Reinforcement-Learning Connectionist Systems; Technical Report NU-CCS-88-3; Northeastern University: Boston, MA, USA, 1988.

45. Hinton, G.E.; Srivastava, N.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R.R. Improving neural networks by preventing co-adaptation of feature detectors. arXiv 2012, arXiv:1207.0580.

46. Silver, D.; Lever, G.; Heess, N.; Degris, T.; Wierstra, D.; Riedmiller, M. Deterministic Policy Gradient Algorithms. In Proceedings of the 31st International Conference on Machine Learning, Beijing, China, 21–26 June 2014; Volume 32, pp. 387–395.

47. Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971.

48. Fujimoto, S.; Van Hoof, H.; Meger, D. Addressing function approximation error in actor–critic methods. arXiv 2018, arXiv:1802.09477.

49. LeCun, Y.; Touretzky, D.; Hinton, G.; Sejnowski, T. A theoretical framework for back-propagation. In Proceedings of the 1988 Connectionist Models Summer School; CMU, Morgan Kaufmann: Pittsburgh, PA, USA, 1988; Volume 1, pp. 21–28.

50. Sutton, R.S.; McAllester, D.A.; Singh, S.P.; Mansour, Y. Policy gradient methods for reinforcement learning with function approximation. Adv. Neural Inf. Process. Syst. 2000, 12, 1057–1063.

51. Sutton, R.S. Learning to predict by the methods of temporal differences. Mach. Learn. 1988, 3, 9–44.

52. Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

53. Hwangbo, S.; Sin, G. Design of control framework based on deep reinforcement learning and Monte-Carlo sampling in downstream separation. Comput. Chem. Eng. 2020, 140, 106910.

54. Yoo, H.; Kim, B.; Kim, J.W.; Lee, J.H. Reinforcement learning based optimal control of batch processes using Monte-Carlo deep deterministic policy gradient with phase segmentation. Comput. Chem. Eng. 2021, 144, 107133.

55. Oh, D.H.; Adams, D.; Vo, N.D.; Gbadago, D.Q.; Lee, C.H.; Oh, M. Actor-critic reinforcement learning to estimate the optimal operating conditions of the hydrocracking process. Comput. Chem. Eng. 2021, 149, 107280.

56. Ramanathan, P.; Mangla, K.K.; Satpathy, S. Smart controller for conical tank system using reinforcement learning algorithm. Measurement 2018, 116, 422–428.

57. Bougie, N.; Onishi, T.; Tsuruoka, Y. Data-Efficient Reinforcement Learning from Controller Guidance with Integrated Self-Supervision for Process Control. IFAC-PapersOnLine 2022, 55, 863–868.

58. Spielberg, S.; Tulsyan, A.; Lawrence, N.P.; Loewen, P.D.; Bhushan Gopaluni, R. Toward self-driving processes: A deep reinforcement learning approach to control. AIChE J. 2019, 65, e16689.

59. Lawrence, N.P.; Forbes, M.G.; Loewen, P.D.; McClement, D.G.; Backström, J.U.; Gopaluni, R.B. Deep reinforcement learning with shallow controllers: An experimental application to PID tuning. Control Eng. Pract. 2022, 121, 105046.

60. Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous methods for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1928–1937.

61. Badgwell, T.A.; Lee, J.H.; Liu, K.H. Reinforcement learning–overview of recent progress and implications for process control. In Computer Aided Chemical Engineering; Elsevier: Amsterdam, The Netherlands, 2018; Volume 44, pp. 71–85.

62. Buşoniu, L.; de Bruin, T.; Tolić, D.; Kober, J.; Palunko, I. Reinforcement learning for control: Performance, stability, and deep approximators. Annu. Rev. Control 2018, 46, 8–28.

63. Nian, R.; Liu, J.; Huang, B. A review on reinforcement learning: Introduction and applications in industrial process control. Comput. Chem. Eng. 2020, 139, 106886.

64. Mendoza, D.F.; Graciano, J.E.A.; dos Santos Liporace, F.; Le Roux, G.A.C. Assessing the reliability of different real-time optimization methodologies. Can. J. Chem. Eng. 2016, 94, 485–497.

65. Marchetti, A.G.; François, G.; Faulwasser, T.; Bonvin, D. Modifier adaptation for real-time optimization—Methods and applications. Processes 2016, 4, 55.

66. Câmara, M.M.; Quelhas, A.D.; Pinto, J.C. Performance evaluation of real industrial RTO systems. Processes 2016, 4, 44.

67. Alhazmi, K.; Albalawi, F.; Sarathy, S.M. A reinforcement learning-based economic model predictive control framework for autonomous operation of chemical reactors. Chem. Eng. J. 2022, 428, 130993.

68. Kim, J.W.; Park, B.J.; Yoo, H.; Oh, T.H.; Lee, J.H.; Lee, J.M. A model-based deep reinforcement learning method applied to finite-horizon optimal control of nonlinear control-affine system. J. Process Control 2020, 87, 166–178.

69. Oh, T.H.; Park, H.M.; Kim, J.W.; Lee, J.M. Integration of reinforcement learning and model predictive control to optimize semi-batch bioreactor. AIChE J. 2022, 68, e17658.

70. Shah, H.; Gopal, M. Model-free predictive control of nonlinear processes based on reinforcement learning. IFAC-PapersOnLine 2016, 49, 89–94.

71. Recht, B. A tour of reinforcement learning: The view from continuous control. Annu. Rev. Control Robot. Auton. Syst. 2019, 2, 253–279.

72. Kumar, V.; Nakra, B.; Mittal, A. A review on classical and fuzzy PID controllers. Int. J. Intell. Control Syst. 2011, 16, 170–181.

73. Ławryńczuk, M.; Marusak, P.M.; Tatjewski, P. Cooperation of model predictive control with steady-state economic optimisation. Control Cybern. 2008, 37, 133–158.

74. Lowe, R.; Wu, Y.; Tamar, A.; Harb, J.; Abbeel, P.; Mordatch, I. Multi-agent actor–critic for mixed cooperative-competitive environments. arXiv 2017, arXiv:1706.02275.

75. Anderson, C.W. Learning and Problem Solving with Multilayer Connectionist Systems. Ph.D. Thesis, University of Massachusetts at Amherst, Amherst, MA, USA, 1986.

76. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 8024–8035.

77. Chen, K.; Wang, H.; Valverde-Pérez, B.; Zhai, S.; Vezzaro, L.; Wang, A. Optimal control towards sustainable wastewater treatment plants based on multi-agent reinforcement learning. Chemosphere 2021, 279, 130498.

| Main Topic | Algorithm | References |
|---|---|---|
| SSRTO | Deep actor–critic | [33] |
| Supervisory control | REINFORCE | [29,30] |
| | Deep Q-learning | [53] |
| | PPO | [28] |
| | DDPG | [26] |
| | DDPG | [54] |
| | A2C | [55] |
| Regulatory control | Deep Q-learning | [56] |
| | PPO | [57] |
| | DDPG | [58] |
| | DDPG | [59] |
| | A3C | [27] |

| Hyperparameter | Value |
|---|---|
| MADDPG | |
| Discount factor | 0.99 |
| Batch size (K) | 50 |
| Buffer (D) | 5000 |
| Episodes (N) | 8000 |
| Time constant | 0.005 |
| Number of agents | 4 |
| | (0.1, 0.1, 1) |
| Actor Network | |
| Activation function | ReLU, Tanh |
| Layers | 4 |
| Neurons | (200, 150, 150, 120) |
| Critic Network | |
| Activation function | ReLU, Linear |
| Layers | 2 |
| Neurons | (250, 150) |
| DNN Training Algorithm | |
| Optimizer | Adam |
| Actor learning rate | 0.0035 |
| Critic learning rate | 0.035 |
| Learning-rate decay | 0.1 |
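The hyperparameters above can be collected into a single configuration object, together with two quick checks of what $\gamma$ and the time constant imply. This is a hedged sketch. The class name `MADDPGConfig` and the helper functions are hypothetical. Reading the "time constant" as the soft-update coefficient $\tau$ of the target networks, and placing ReLU in the hidden layers with Tanh or linear outputs, are assumptions. The (0.1, 0.1, 1) row and the learning-rate decay are left out because their meaning is not recoverable from the table.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class MADDPGConfig:
    """Hypothetical container for the tabulated MADDPG hyperparameters."""
    gamma: float = 0.99            # discount factor
    batch_size: int = 50           # K, minibatch size
    buffer_size: int = 5000        # D, replay-buffer capacity
    episodes: int = 8000           # N, training episodes
    tau: float = 0.005             # "time constant", assumed soft-update coefficient
    n_agents: int = 4
    actor_layers: Tuple[int, ...] = (200, 150, 150, 120)  # assumed ReLU hidden layers, Tanh output
    critic_layers: Tuple[int, ...] = (250, 150)           # assumed ReLU hidden layers, linear output
    actor_lr: float = 0.0035       # Adam learning rate for the actors
    critic_lr: float = 0.035       # Adam learning rate for the critics


def soft_update(target: Sequence[float], online: Sequence[float], tau: float) -> List[float]:
    """Polyak averaging: target <- tau * online + (1 - tau) * target, element-wise."""
    return [tau * w + (1.0 - tau) * w_t for w_t, w in zip(target, online)]


def effective_horizon(gamma: float) -> float:
    """Rough number of steps over which future rewards still matter: 1 / (1 - gamma)."""
    return 1.0 / (1.0 - gamma)


def target_half_life(tau: float) -> float:
    """Soft updates needed to halve the gap between target and (fixed) online weights."""
    return math.log(0.5) / math.log(1.0 - tau)


cfg = MADDPGConfig()
print(f"horizon ~ {effective_horizon(cfg.gamma):.0f} steps")
print(f"target half-life ~ {target_half_life(cfg.tau):.0f} updates")
```

With $\gamma$ = 0.99 the effective horizon is about 100 steps. With $\tau$ = 0.005 the target networks need about 138 updates to close half of the gap to the online networks, which is what makes the targets change slowly and stabilizes the critic targets.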

| Agent | Average Yield | Online Experiment Time |
|---|---|---|
| 1 | 1.2414 | 1.5 s |
| 2 | 1.2015 | 1.5 s |
| 3 | 1.1508 | 1.5 s |
| 4 | 1.2405 | 1.5 s |
| HRTO | 1.2534 | 16 s |
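The trade-off in the table can be quantified with a short calculation. This sketch only uses the tabulated values. The percentage-gap metric and the function name `relative_gap_pct` are illustrative choices, not metrics reported by the authors, and the yield units are taken to be whatever the table reports.

```python
# Values transcribed from the table above
agent_yield = {1: 1.2414, 2: 1.2015, 3: 1.1508, 4: 1.2405}
hrto_yield = 1.2534
agent_time_s, hrto_time_s = 1.5, 16.0


def relative_gap_pct(value: float, reference: float) -> float:
    """Percentage shortfall of `value` with respect to `reference`."""
    return 100.0 * (reference - value) / reference


for agent, y in agent_yield.items():
    print(f"Agent {agent}: {relative_gap_pct(y, hrto_yield):.2f}% below HRTO")
print(f"HRTO online time / agent online time = {hrto_time_s / agent_time_s:.1f}")
```

By these numbers, Agents 1 and 4 fall short of the HRTO yield by about 0.96% and 1.03%, and Agents 2 and 3 by about 4.14% and 8.19%. The online experiment time of each agent is roughly 10.7 times shorter than that of HRTO.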

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Faria, R.d.R.; Capron, B.D.O.; de Souza Jr., M.B.; Secchi, A.R. One-Layer Real-Time Optimization Using Reinforcement Learning: A Review with Guidelines. Processes 2023, 11, 123. https://doi.org/10.3390/pr11010123

Faria RdR, Capron BDO, de Souza Jr. MB, Secchi AR. One-Layer Real-Time Optimization Using Reinforcement Learning: A Review with Guidelines. Processes. 2023; 11(1):123. https://doi.org/10.3390/pr11010123

Chicago/Turabian Style: Faria, Ruan de Rezende, Bruno Didier Olivier Capron, Maurício B. de Souza Jr., and Argimiro Resende Secchi. 2023. "One-Layer Real-Time Optimization Using Reinforcement Learning: A Review with Guidelines" Processes 11, no. 1: 123. https://doi.org/10.3390/pr11010123

APA Style: Faria, R. d. R., Capron, B. D. O., de Souza Jr., M. B., & Secchi, A. R. (2023). One-Layer Real-Time Optimization Using Reinforcement Learning: A Review with Guidelines. Processes, 11(1), 123. https://doi.org/10.3390/pr11010123