Intelligent Facemask Coverage Detector in a World of Chaos

Abstract

1. Introduction

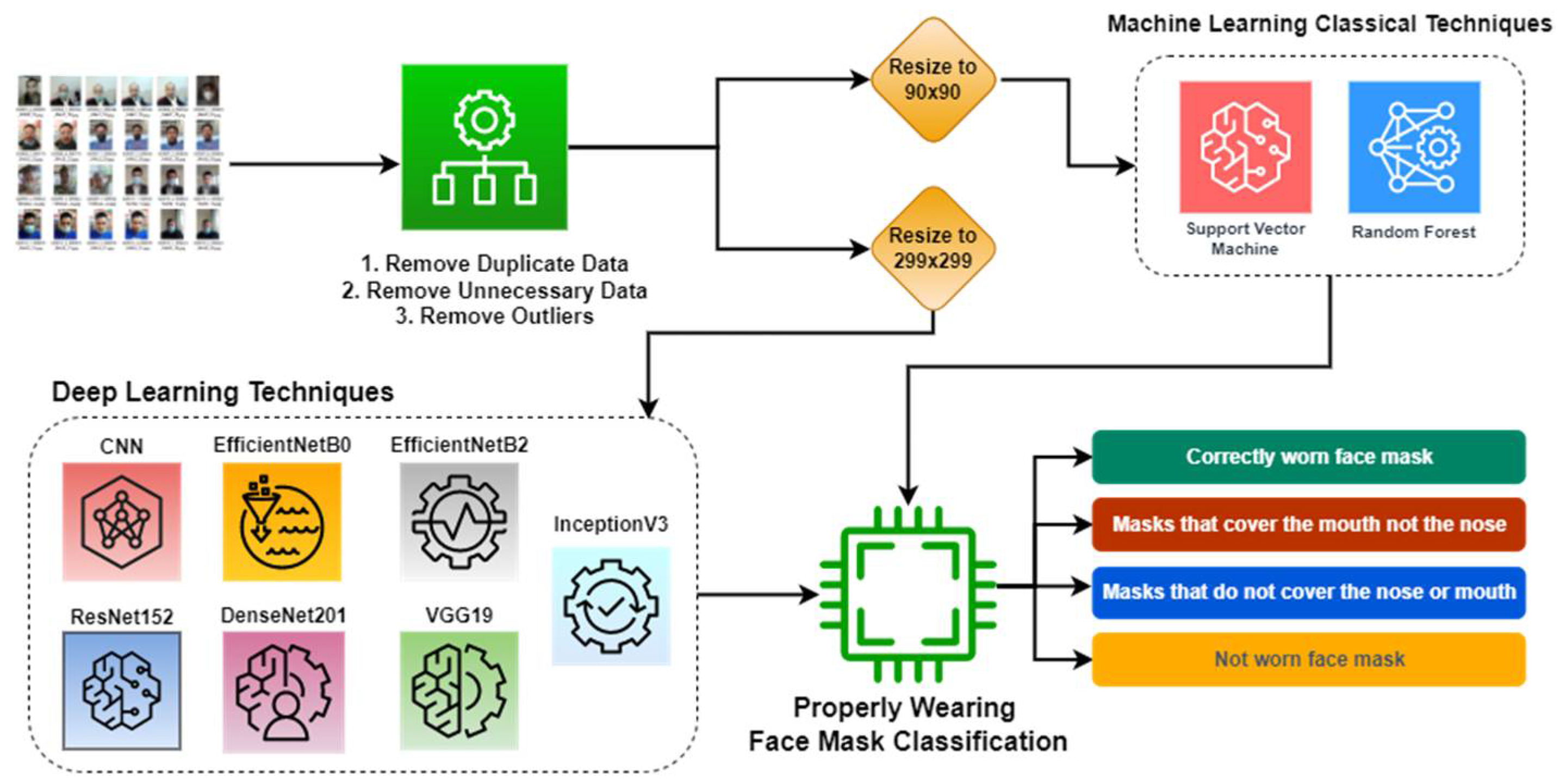

- The proposed scheme checks whether the person wears a mask or not.

- It determines how much of the face the mask covers and classifies each facial image into one of four categories: appropriately wearing a mask (covering both nose and mouth), partially wearing a mask (covering the mouth but not the nose), inappropriately wearing a mask (covering neither mouth nor nose), and not wearing a mask at all.

- The paper investigates state-of-the-art pre-trained models and compares their performance with traditional machine learning and deep learning models on facemask coverage classification.

- The study analyzes the performance of various models with several metrics, such as accuracy, F1-score, precision, and recall.

- Applying the proposed system helps verify that a mask fits and covers the essential areas, including the nose, mouth, and chin.
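
The four categories in the list above can be written down as an explicit label scheme. The sketch below is only an illustration of the class definitions, not the detection method itself (the models in this paper classify images directly). The three boolean inputs and the names `Coverage` and `coverage_label` are hypothetical.

```python
from enum import Enum


class Coverage(Enum):
    """The four classes named in the contribution list."""
    APPROPRIATE = "mask covers both nose and mouth"
    PARTIAL = "mask covers mouth but not nose"
    INAPPROPRIATE = "mask covers neither mouth nor nose"
    NO_MASK = "no mask at all"


def coverage_label(mask_present: bool, nose_covered: bool, mouth_covered: bool) -> Coverage:
    """Map three hypothetical observations to one of the four classes."""
    if not mask_present:
        return Coverage.NO_MASK
    if nose_covered and mouth_covered:
        return Coverage.APPROPRIATE
    if mouth_covered:
        return Coverage.PARTIAL
    if not nose_covered:
        return Coverage.INAPPROPRIATE
    # Nose covered with the mouth exposed is not one of the four classes
    # in the text, so this sketch refuses to guess a label for it.
    raise ValueError("nose covered but mouth exposed is not a defined class")


print(coverage_label(True, False, True).name)  # PARTIAL
```

A mask covering the nose but not the mouth has no class in this scheme, so the sketch raises an error rather than assigning one.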

2. Materials and Methods

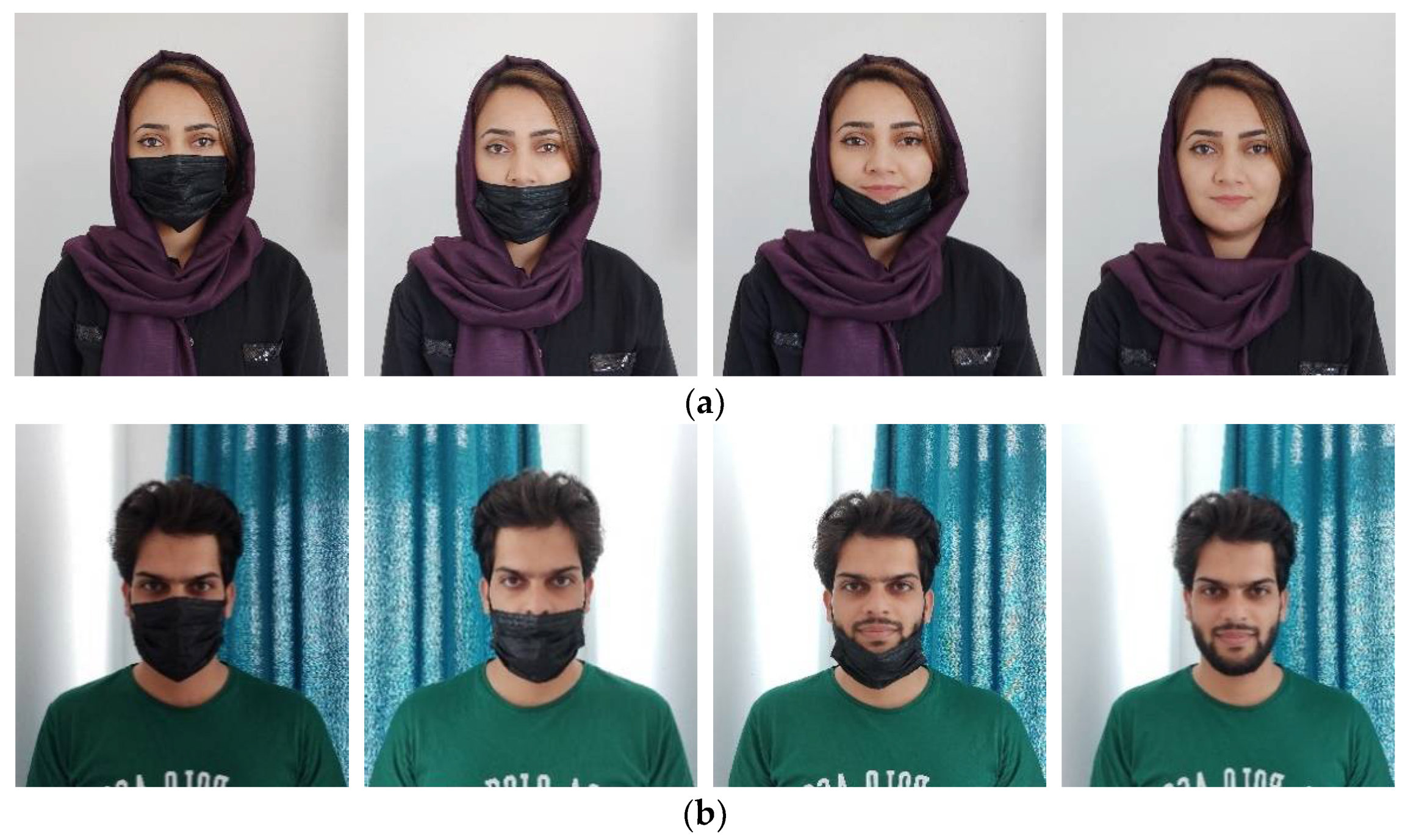

2.1. Dataset

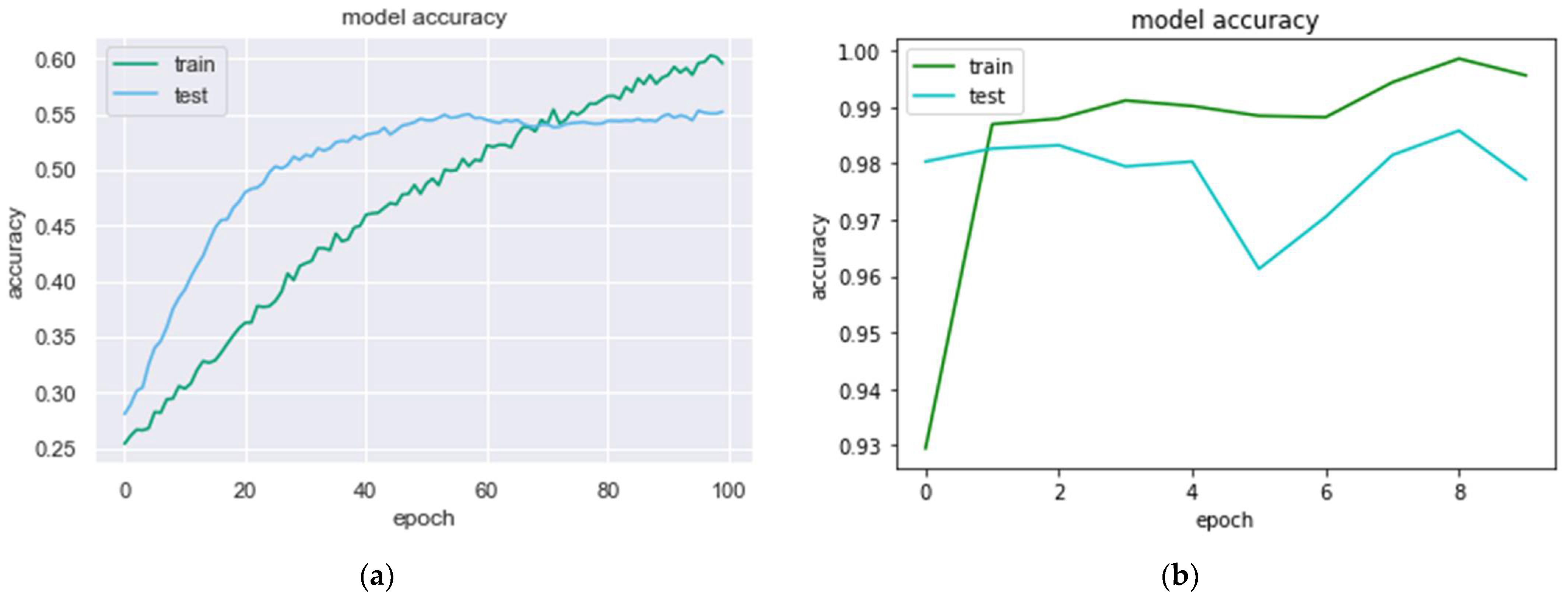

2.2. CNN Model Architecture

2.3. Machine Learning Classical Algorithms

2.3.1. Random Forest

2.3.2. Support Vector Machine

2.4. Deep Neural Network Models

2.4.1. InceptionV3

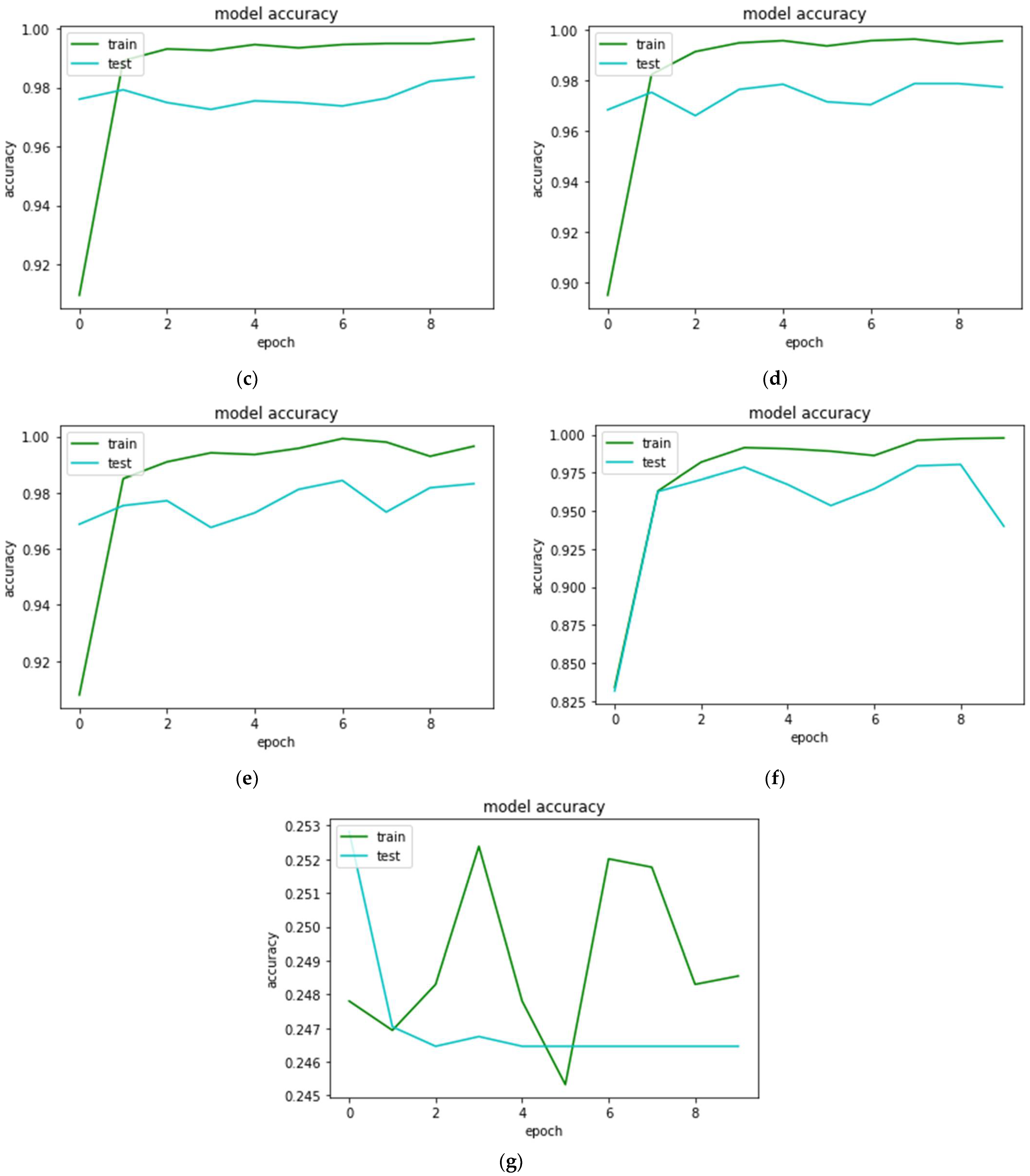

2.4.2. EfficientNetB0 and EfficientNetB2

2.4.3. DenseNet201

2.4.4. ResNet152

2.4.5. VGG19

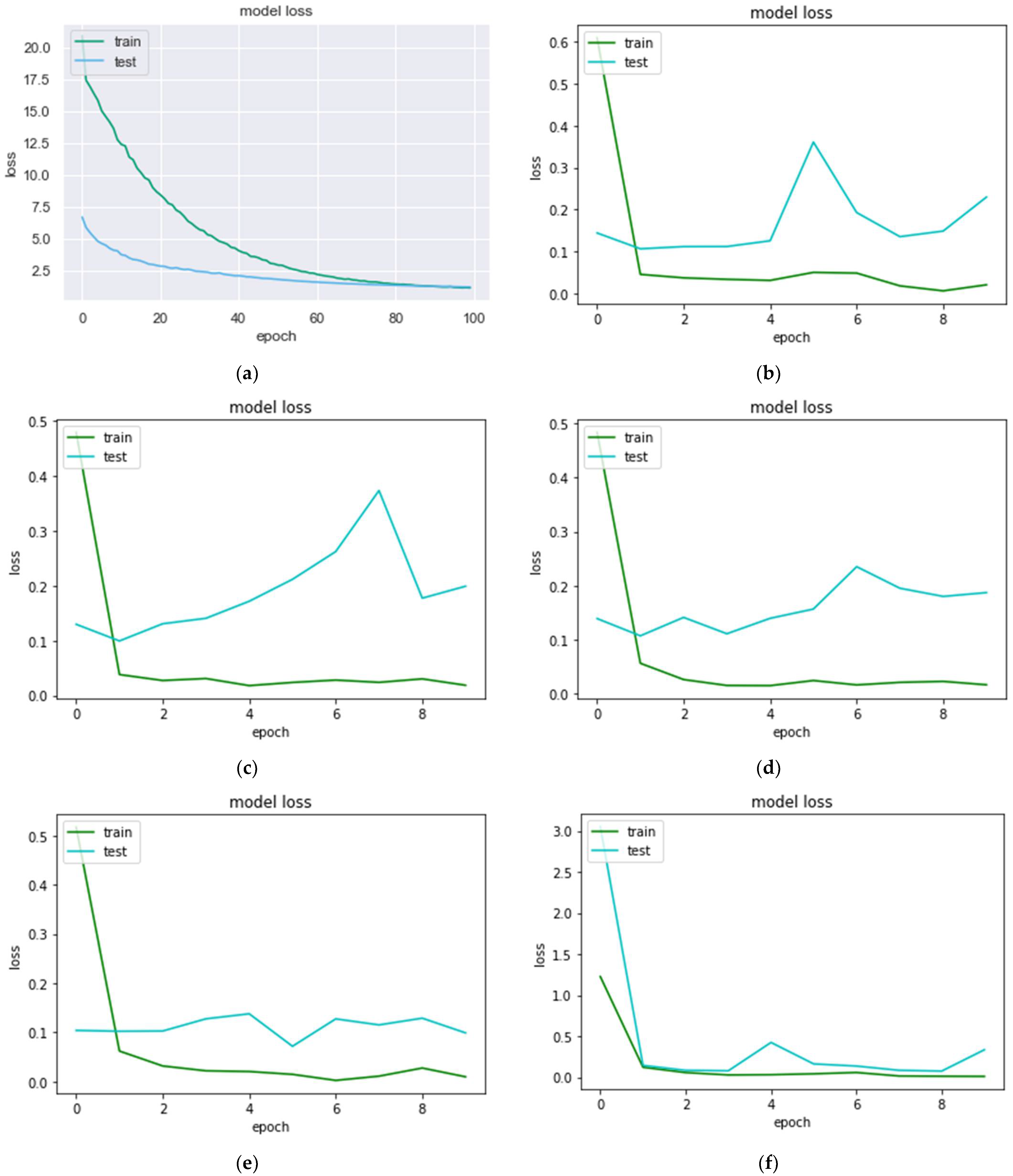

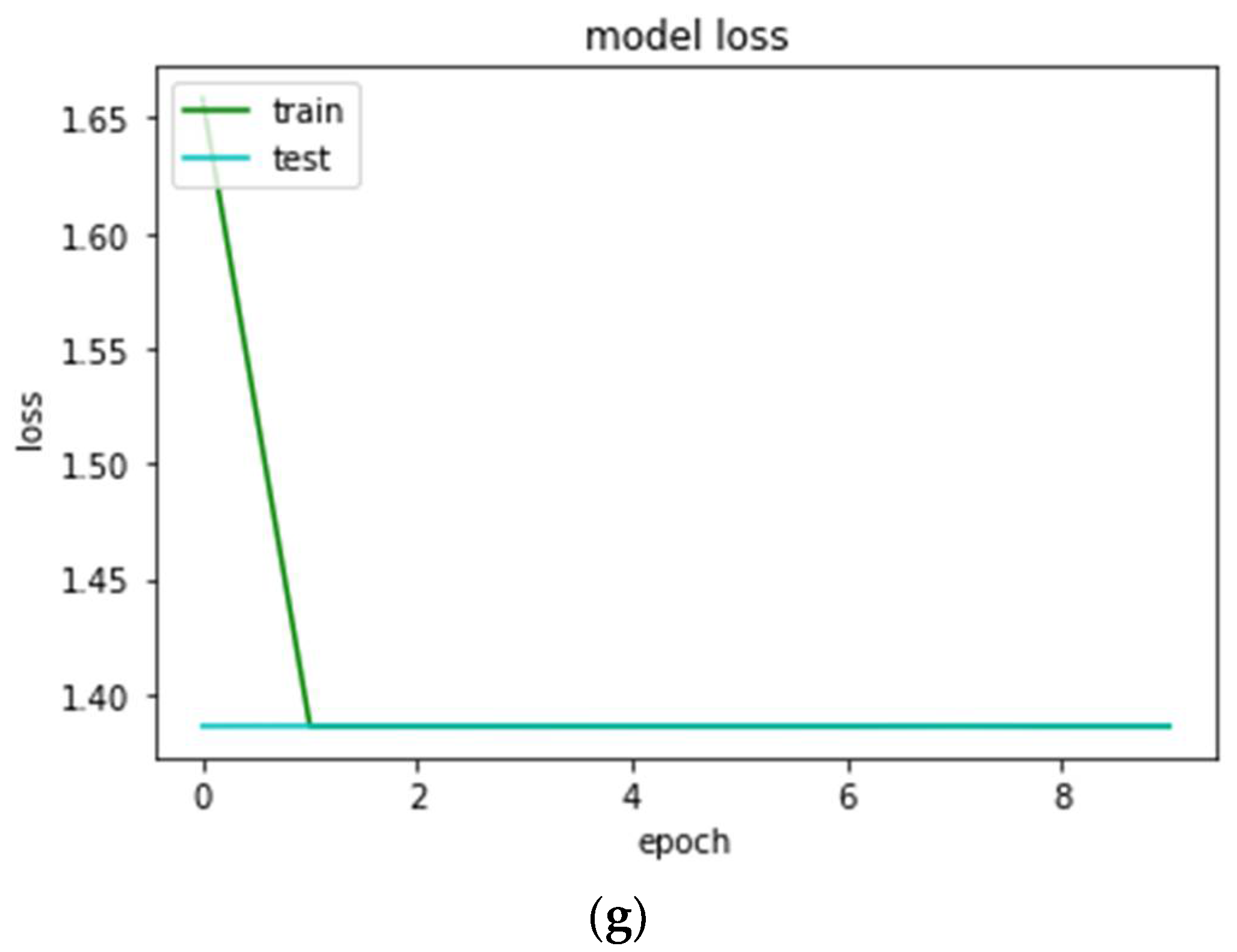

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wang, C.; Horby, P.W.; Hayden, F.G.; Gao, G.F. A novel coronavirus outbreak of global health concern. Lancet 2020, 395, 470–473.

2. Yadav, S. Deep Learning based Safe Social Distancing and Face Mask Detection in Public Areas for COVID-19 Safety Guidelines Adherence. Int. J. Res. Appl. Sci. Eng. Technol. 2020, 8, 1368–1375.

3. Rasheed, J.; Jamil, A.; Hameed, A.A.; Al-Turjman, F.; Rasheed, A. COVID-19 in the Age of Artificial Intelligence: A Comprehensive Review. Interdiscip. Sci. Comput. Life Sci. 2021, 13, 153–175.

4. Arora, D.; Garg, M.; Gupta, M. Diving deep in Deep Convolutional Neural Network. In Proceedings of the IEEE 2020 2nd International Conference on Advances in Computing, Communication Control and Networking, ICACCCN 2020, Greater Noida, India, 18–19 December 2020; pp. 749–751.

5. Rasheed, J.; Waziry, S.; Alsubai, S.; Abu-Mahfouz, A.M. An Intelligent Gender Classification System in the Era of Pandemic Chaos with Veiled Faces. Processes 2022, 10, 1427.

6. Rasheed, J.; Alimovski, E.; Rasheed, A.; Sirin, Y.; Jamil, A.; Yesiltepe, M. Effects of Glow Data Augmentation on Face Recognition System based on Deep Learning. In Proceedings of the 2020 International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Ankara, Turkey, 26–28 June 2020; pp. 1–5.

7. Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a convolutional neural network. In Proceedings of the 2017 International Conference on Engineering and Technology (ICET), Antalya, Turkey, 21–23 August 2017; pp. 1–6.

8. Rasheed, J. Analyzing the Effect of Filtering and Feature-Extraction Techniques in a Machine Learning Model for Identification of Infectious Disease Using Radiography Imaging. Symmetry 2022, 14, 1398.

9. Rasheed, J.; Shubair, R.M. Screening Lung Diseases Using Cascaded Feature Generation and Selection Strategies. Healthcare 2022, 10, 1313.

10. Song, Z.; Nguyen, K.; Nguyen, T.; Cho, C.; Gao, J. Spartan Face Mask Detection and Facial Recognition System. Healthcare 2022, 10, 87.

11. Suresh, K.; Palangappa, M.; Bhuvan, S. Face Mask Detection by using Optimistic Convolutional Neural Network. In Proceedings of the 6th International Conference on Inventive Computation Technologies, ICICT 2021, Coimbatore, India, 20–22 January 2021; pp. 1084–1089.

12. Srinivasan, S.; Singh, R.R.; Biradar, R.R.; Revathi, S. COVID-19 monitoring system using social distancing and face mask detection on surveillance video datasets. In Proceedings of the 2021 International Conference on Emerging Smart Computing and Informatics, ESCI 2021, Pune, India, 5–7 March 2021; pp. 449–455.

13. Susanto, S.; Putra, F.A.; Analia, R.; Suciningtyas, I.K.L.N. The face mask detection for preventing the spread of COVID-19 at politeknik negeri batam. In Proceedings of the ICAE 2020, 3rd International Conference on Applied Engineering, Batam, Indonesia, 7–8 October 2020; pp. 1–5.

14. Venkateswarlu, I.B.; Kakarla, J.; Prakash, S. Face mask detection using MobileNet and global pooling block. In Proceedings of the 4th IEEE Conference on Information and Communication Technology, CICT 2020, Chennai, India, 3–5 December 2020; pp. 1–4.

15. Siradjuddin, I.A.; Muntasa, A. Faster Region-based Convolutional Neural Network for Mask Face Detection. In Proceedings of the 2021 5th International Conference on Informatics and Computational Sciences (ICICoS), Semarang, Indonesia, 24–25 November 2021; pp. 282–286.

16. Xu, M.; Wang, H.; Yang, S.; Li, R. Mask wearing detection method based on SSD-Mask algorithm. In Proceedings of the 2020 International Conference on Computer Science and Management Technology, ICCSMT 2020, Shanghai, China, 20–22 November 2020; pp. 138–143.

17. Negi, A.; Kumar, K.; Chauhan, P.; Rajput, R.S. Deep neural architecture for face mask detection on simulated masked face dataset against covid-19 pandemic. In Proceedings of the IEEE 2021 International Conference on Computing, Communication, and Intelligent Systems, ICCCIS 2021, Greater Noida, India, 19–20 February 2021; pp. 595–600.

18. Nujella, R.B.P.; Sahu, S.; Prakash, S. Face-Mask Detection to Control the COVID-19 Spread Employing Deep Learning Approach. In Proceedings of the 2021 5th International Conference on Information Systems and Computer Networks (ISCON), Mathura, India, 22–23 October 2021; pp. 1–3.

19. Gowri, S. A Real-Time Face Mask Detection Using SSD and MobileNetV2. In Proceedings of the 2021 4th International Conference on Computing and Communications Technologies (ICCCT), Chennai, India, 16–17 December 2021; pp. 144–148.

20. Roman, K. 500 GB of Images with People Wearing Masks. Part 3. Kaggle, 2021. Available online: https://www.kaggle.com/datasets/tapakah68/medical-masks-p3 (accessed on 30 March 2022).

21. Sarica, A.; Cerasa, A.; Quattrone, A. Random Forest Algorithm for the Classification of Neuroimaging Data in Alzheimer’s Disease: A Systematic Review. Front. Aging Neurosci. 2017, 9, 329.

22. Thai, L.H.; Hai, T.S.; Thuy, N.T. Image Classification using Support Vector Machine and Artificial Neural Network. Int. J. Inf. Technol. Comput. Sci. 2012, 4, 32–38.

23. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

24. Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, Long Beach, CA, USA, 9–15 June 2019; Volume 2019, pp. 10691–10700.

25. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; Volume 2017, pp. 2261–2269.

26. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; Volume 2016, pp. 770–778.

27. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, Conference Track Proceedings, Online, 10 April 2015; pp. 1–14.

28. Tomás, J.; Rego, A.; Viciano-Tudela, S.; Lloret, J. Incorrect Facemask-Wearing Detection Using Convolutional Neural Networks with Transfer Learning. Healthcare 2021, 9, 1050.

29. Sertic, P.; Alahmar, A.; Akilan, T.; Javorac, M.; Gupta, Y. Intelligent Real-Time Face-Mask Detection System with Hardware Acceleration for COVID-19 Mitigation. Healthcare 2022, 10, 873.

| Study | Method |
|---|---|
| [12] | MobileNetV2 |
| [14] | MobileNet |
| [15] | Faster R-CNN |
| [16] | SSD and SSD-Mask algorithms |
| [17] | CNN and VGG16 |
| [18] | MobileNet and OpenCV |
| [19] | SSD and MobileNetV2 |

| Mask Wearing Type | No. of Images |
|---|---|
| Correctly worn masks | 2884 |
| Masks that cover the mouth but not the nose | 2884 |
| Masks that do not cover the nose or mouth | 2884 |
| Not wearing a mask | 2884 |
| Total | 11,536 |
| Training Set | 8075 |
| Test Set | 3461 |
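
The counts in the table above are consistent with a random split that holds out 30% of the 11,536 images for testing. The table gives only the counts, so the 0.3 test fraction, the rounding rule, and the seed in this minimal sketch are assumptions, and `split_indices` is a hypothetical helper.

```python
import math
import random

CLASSES = [
    "correctly worn",
    "mouth covered, nose exposed",
    "nose and mouth exposed",
    "no mask",
]
IMAGES_PER_CLASS = 2884  # from the table above


def split_indices(n_items, test_fraction, seed=0):
    """Shuffle item indices and hold out a test portion (rounded up)."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    n_test = math.ceil(n_items * test_fraction)
    return indices[n_test:], indices[:n_test]


total = IMAGES_PER_CLASS * len(CLASSES)
# Assumed 30% test fraction; it reproduces the table's counts.
train_idx, test_idx = split_indices(total, test_fraction=0.3)
print(total, len(train_idx), len(test_idx))  # 11536 8075 3461
```

A per-class (stratified) 70/30 split would not divide 2884 images evenly, which is one reason the sketch splits the pooled set instead.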

| Hyperparameter | Value |
|---|---|
| Optimizer | Adam |
| Number of epochs | 100 |
| Batch size | 120 |
| Loss | categorical_crossentropy |
| Metrics | accuracy |
| Learning rate | 0.000001 |
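
To make these settings concrete, the sketch below records them as a plain configuration, works out how many weight updates they imply, and evaluates the categorical cross-entropy loss for one sample. The table does not say which model it applies to. Pairing it with the 8,075-image training set and keeping the last partial batch are both assumptions.

```python
import math

# Values copied from the hyperparameter table above.
TRAINING_CONFIG = {
    "optimizer": "Adam",
    "epochs": 100,
    "batch_size": 120,
    "loss": "categorical_crossentropy",
    "metrics": ["accuracy"],
    "learning_rate": 1e-6,
}

TRAIN_IMAGES = 8075  # assumed: training-set size from the dataset table


def categorical_crossentropy(one_hot_target, predicted_probs, eps=1e-12):
    """Loss for one sample: -sum(t_k * log(p_k)) over the classes."""
    return -sum(
        t * math.log(max(p, eps))
        for t, p in zip(one_hot_target, predicted_probs)
    )


# Assumes the final, smaller batch of each epoch is kept.
steps_per_epoch = math.ceil(TRAIN_IMAGES / TRAINING_CONFIG["batch_size"])
total_updates = steps_per_epoch * TRAINING_CONFIG["epochs"]
print(steps_per_epoch, total_updates)  # 68 6800

# A uniform guess over four classes costs ln(4), roughly 1.386 per sample.
uniform_loss = categorical_crossentropy([1, 0, 0, 0], [0.25, 0.25, 0.25, 0.25])
```

Under these assumptions training performs 6,800 updates, each with a step size of 0.000001.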

| Hyperparameter | Value |
|---|---|
| Number of estimators | 20 |
| Criterion | entropy |
| Maximum depth | 3 |
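
The entropy criterion scores a candidate split by how much it reduces Shannon entropy (the information gain). The sketch below computes both quantities on toy labels. The label lists and the example split are illustrative assumptions, not data from the study, and the leaf bound assumes binary splits.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return -sum(
        (count / total) * math.log2(count / total)
        for count in Counter(labels).values()
    )


def information_gain(parent, left, right):
    """Entropy reduction from splitting parent into left and right."""
    weighted = (len(left) * entropy(left) + len(right) * entropy(right)) / len(parent)
    return entropy(parent) - weighted


# Toy node holding the four mask classes (labels 0..3) in equal proportion.
parent = [0, 1, 2, 3] * 5
left = [0, 1] * 5    # hypothetical split: classes 0 and 1 go left
right = [2, 3] * 5   # classes 2 and 3 go right

print(entropy(parent))                        # 2.0
print(information_gain(parent, left, right))  # 1.0

MAX_DEPTH = 3                 # from the table above
max_leaves = 2 ** MAX_DEPTH   # at most 8 leaves per tree with binary splits
```

With four balanced classes a node starts at 2 bits of entropy, and a depth-3 binary tree can make at most three successive splits along any path.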

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| CNN | 55.22 | 54.90 | 55.40 | 55.20 |
| Random Forest | 48.92 | 51.00 | 48.74 | 47.41 |
| SVM | 56.83 | 57.35 | 56.73 | 56.58 |
| InceptionV3 | 98.40 | 98.30 | 98.30 | 98.30 |
| EfficientNetB0 | 97.70 | 97.70 | 97.70 | 97.70 |
| EfficientNetB2 | 98.35 | 98.30 | 98.30 | 98.40 |
| DenseNet201 | 97.72 | 97.80 | 97.70 | 97.70 |
| ResNet152 | 93.99 | 94.60 | 94.00 | 94.00 |
| VGG19 | 24.65 | 31.20 | 25.00 | 24.60 |
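
The four metrics in the table can all be derived from a confusion matrix. The sketch below shows one common way to do so for a multi-class problem. The table does not state how the per-class scores were averaged, so macro-averaging is an assumption here, and the toy matrix is illustrative rather than taken from the study. For scale, uniform guessing among four equally sized classes gives about 25% accuracy.

```python
def macro_metrics(confusion):
    """Return (accuracy, precision, recall, f1) from a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    Precision, recall and F1 are computed per class, then macro-averaged.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    accuracy = sum(confusion[k][k] for k in range(n)) / total
    precisions, recalls, f1_scores = [], [], []
    for k in range(n):
        true_positive = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))
        actual_k = sum(confusion[k])
        precision = true_positive / predicted_k if predicted_k else 0.0
        recall = true_positive / actual_k if actual_k else 0.0
        denominator = precision + recall
        f1 = 2 * precision * recall / denominator if denominator else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)
    return accuracy, sum(precisions) / n, sum(recalls) / n, sum(f1_scores) / n


# Toy case: every image of a balanced four-class test set is assigned to class 0.
single_class_predictor = [
    [10, 0, 0, 0],
    [10, 0, 0, 0],
    [10, 0, 0, 0],
    [10, 0, 0, 0],
]
accuracy, precision, recall, f1 = macro_metrics(single_class_predictor)
print(accuracy, recall)  # 0.25 0.25
```

In the toy case accuracy and macro recall both sit at 25%, while macro precision and F1 fall lower because three classes are never predicted.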

| Study | Method | No. of Classes | Types of Detection | Result |
|---|---|---|---|---|
| [12] | MobileNetV2 | 2 | mask/no mask | 90.55% |
| [14] | MobileNet | 2 | mask/no mask | 99.0% |
| [15] | Faster R-CNN | 2 | mask/no mask | 73.0% |
| [16] | SSD and SSD-Mask algorithms | 2 | mask/no mask | 86.3% and 88.2% |
| [17] | CNN and VGG16 | 2 | mask/no mask | 97.42% and 98.97% |
| [18] | MobileNet and OpenCV | 2 | mask/no mask | 99.41% |
| [19] | SSD and MobileNetV2 | 2 | mask/no mask | 99.0% |
| [29] | ResNet50V2 | 2 | mask/no mask | 99.0% |
| [28] | VGG16 | 6 | glasses/fit side/nose out/correct/bridge/no mask | 83.4% |
| Proposed | InceptionV3 | 4 | mouth-nose-chin covered/mouth-chin covered/chin covered/no mask | 98.40% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Waziry, S.; Wardak, A.B.; Rasheed, J.; Shubair, R.M.; Yahyaoui, A. Intelligent Facemask Coverage Detector in a World of Chaos. Processes 2022, 10, 1710. https://doi.org/10.3390/pr10091710
