A New Camera Calibration Technique for Serious Distortion

Abstract

1. Introduction

2. Obtaining the Image Coordinate (u0, v0) and Distortion Coefficients k1 and k2

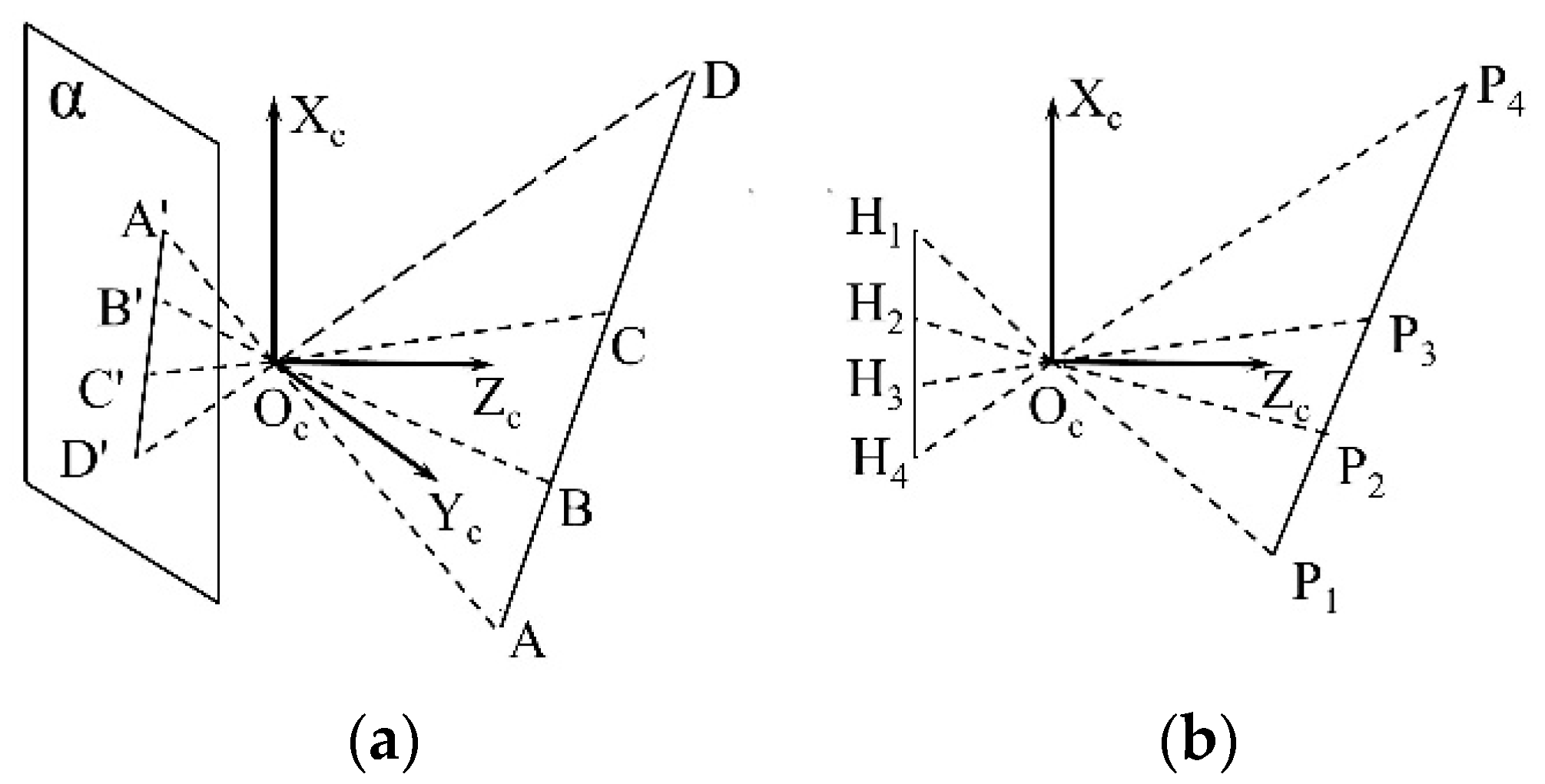

2.1. Geometrical Deduction of Imaging

2.2. Calculation of the Ideal Image Coordinates without Distortion

2.3. Solving the Coordinate (u0, v0) and Distortion Coefficients k1 and k2

3. Image Distortion Correction

4. Getting Other Parameters of Camera

5. Experiments

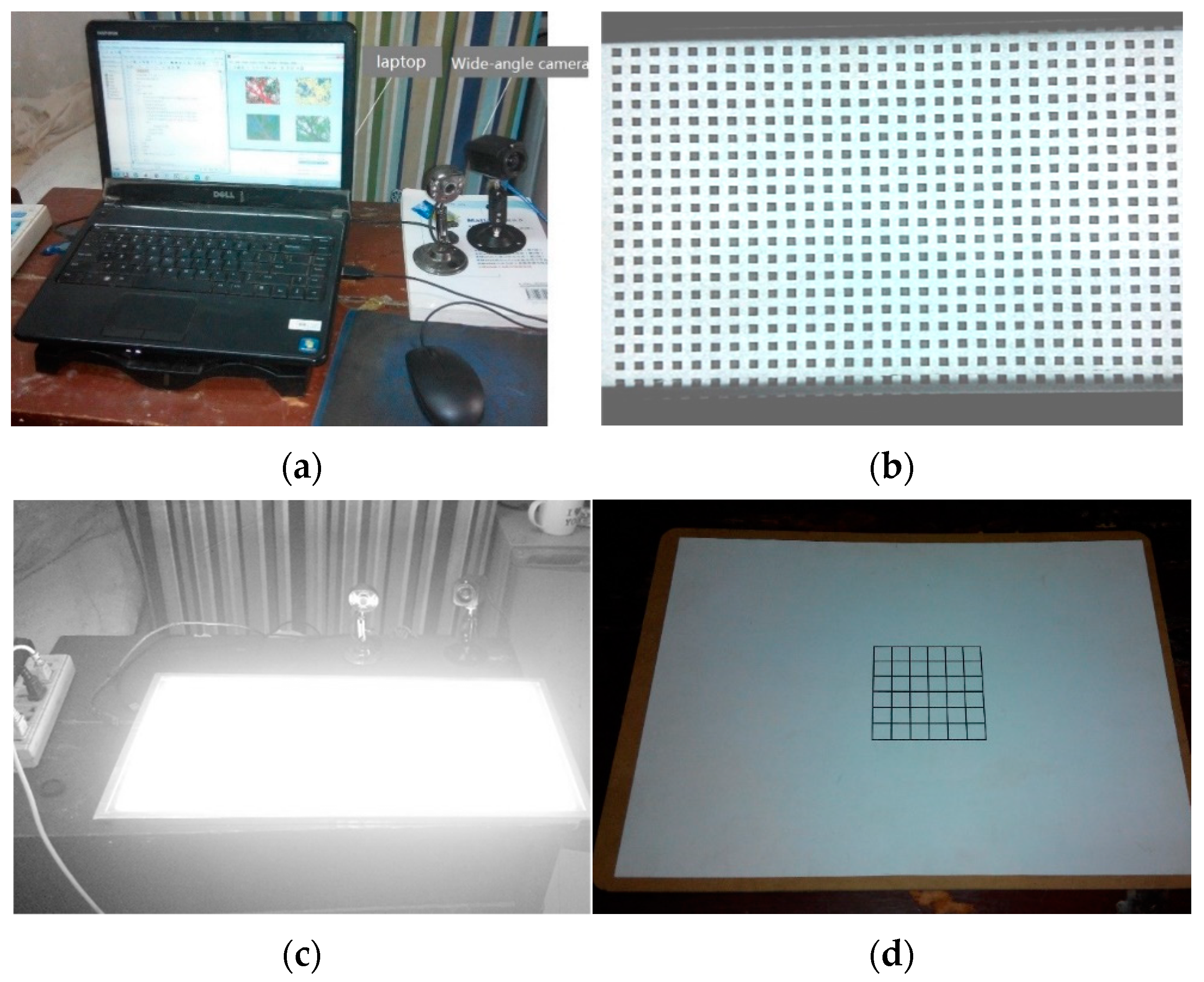

5.1. Experimental Equipment

5.2. Ultra-Wide-Angle Camera Calibration Process

- (1)

- Prepare a flat board of targets with equally spaced black squares.

- (2)

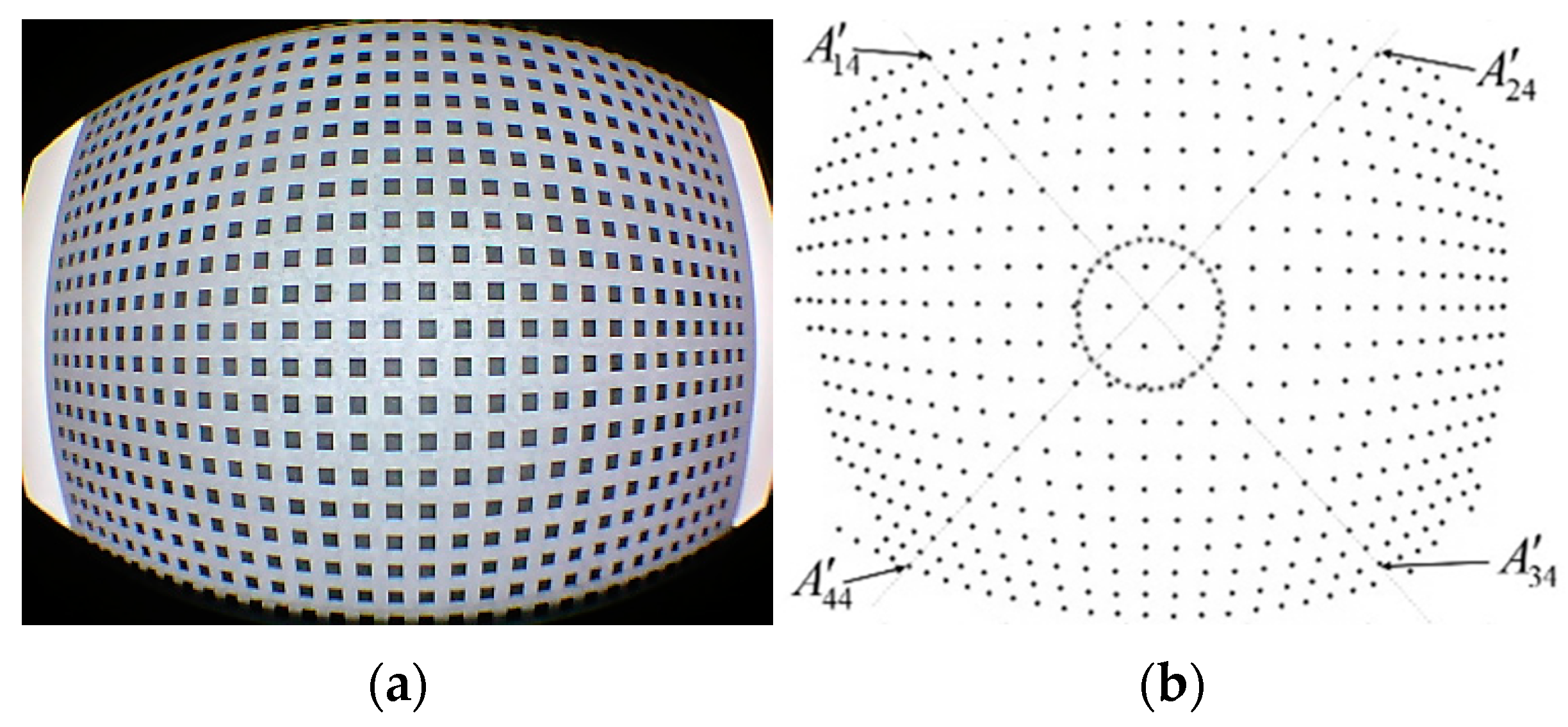

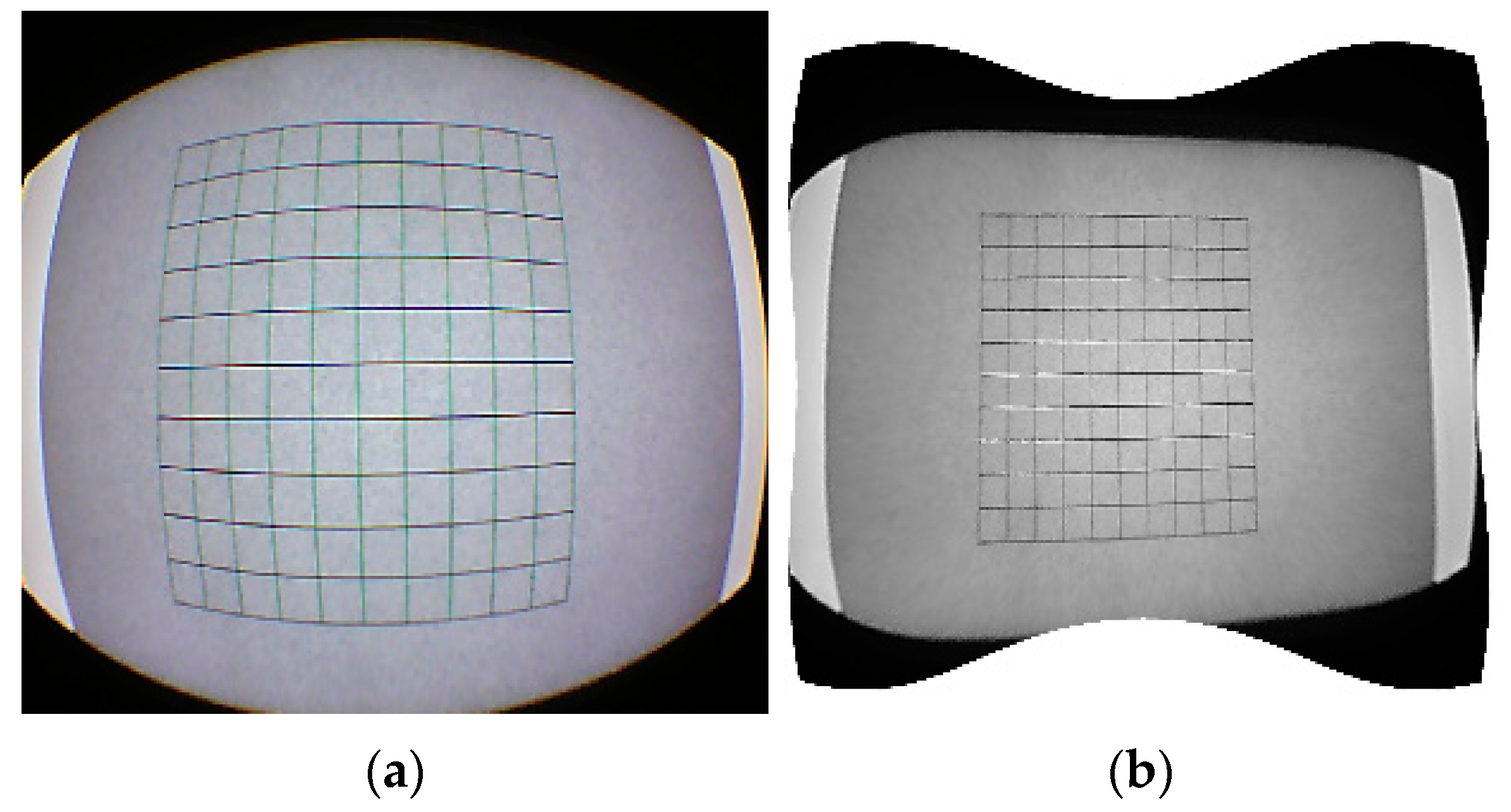

- The image of the target is captured at 1280 × 1024 pixels, as shown in Figure 4a.

- (3)

- The coordinates of the centroid of each black square in the target image were obtained using computer processing. In addition, a circular area is created with the centre of the image as the centre and 100 pixel points as the radius, and the coordinates of the centroids of all black squares within the circular area are obtained, as shown in Figure 4b.

- (4)

- The computer is used to find the combination of three points that satisfy in the same line, and to determine all other points on the same line that are far from the center of the image. Using Equation (12) to find the furthest ideal image coordinate points from the center without distortion, the image points found are shown in Figure 4b as , , and .

- (5)

- Combine the image points , , and in two according to Equation (15) to find the image coordinates (u0, v0) and the distortion coefficients k1 and k2. The values of and are then obtained according to the obtained parameters using Equation (16).

- (6)

- Using the average parameter values obtained, the image points on the line involved in the calculation are corrected according to Equation (17).

- (7)

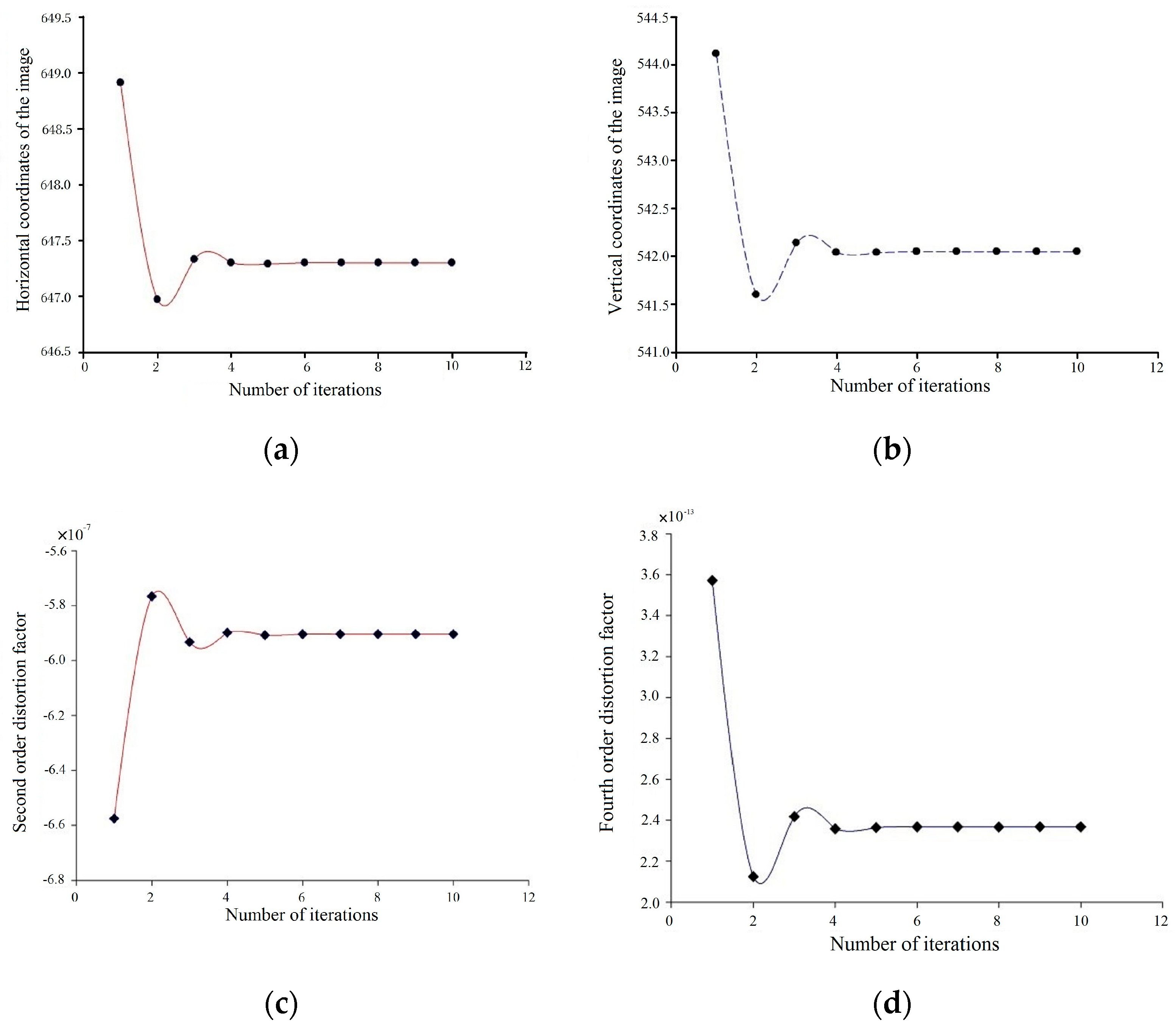

- Repeat the steps 4, 5, and 6 until the desired parameters converge.

- (8)

- The camera is calibrated by using Equations (32)–(34) to find the other parameters of the camera based on the image coordinates of the optical axis center point and the distortion coefficient obtained.

- (9)

- The distorted image is corrected using the camera parameters acquired in step 8.

- (10)

- The camera calibration parameters were verified using the Zhang Zhengyou flat calibration method [26].
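Steps 3 and 4 can be illustrated with a short Python sketch. It is not the authors' implementation: the function names, the collinearity tolerance `tol`, and the sample centroids are assumptions made for illustration, and applying the collinearity search to the centroids inside the circle is likewise an assumption. The sketch keeps the centroids within 100 pixels of the image center and then lists the triples that lie on a common line.

```python
from itertools import combinations

import numpy as np


def points_within_radius(centroids, center, radius=100.0):
    """Keep the centroids that lie inside a circle around `center` (step 3)."""
    pts = np.asarray(centroids, dtype=float)
    dist = np.linalg.norm(pts - np.asarray(center, dtype=float), axis=1)
    return pts[dist <= radius]


def collinear_triples(points, tol=0.5):
    """Return index triples (i, j, k) of points lying on one line (step 4).

    A triple counts as collinear when the triangle it spans has a height
    of at most `tol` pixels over its longest side (`tol` is hypothetical).
    """
    pts = np.asarray(points, dtype=float)
    triples = []
    for i, j, k in combinations(range(len(pts)), 3):
        p, q, r = pts[i], pts[j], pts[k]
        # Twice the triangle area, from the 2D cross product.
        area2 = abs((q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0]))
        longest = max(np.linalg.norm(q - p), np.linalg.norm(r - q), np.linalg.norm(r - p))
        if longest > 0 and area2 / longest <= tol:
            triples.append((i, j, k))
    return triples


# Made-up centroids for a 1280 x 1024 image whose center is (640, 512).
centroids = [(640, 512), (660, 512), (680, 512), (640, 532), (900, 900)]
inner = points_within_radius(centroids, center=(640, 512), radius=100)
print(len(inner), collinear_triples(inner))
```

The exhaustive search over triples grows cubically with the number of points, which stays cheap here because only the centroids near the image center are considered.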

5.3. Results of Experiments

6. Conclusions

- (1) Only one image acquisition of the target is required.

- (2) No expensive ancillary equipment is required, and the method is highly adaptable.

- (3) High calibration precision, with relative error within 1%.

- (4) Rapid calibration.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Higuchi, H.; Fujii, H.; Taniguchi, A.; Watanabe, M.; Yamashita, A.; Asama, H. 3D Measurement of Large Structure by Multiple Cameras and a Ring Laser. J. Robot. Mechatron. 2019, 31, 251–262.
2. Han, X.-F.; Laga, H.; Bennamoun, M. Image-Based 3D Object Reconstruction: State-of-the-Art and Trends in the Deep Learning Era. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1578–1604.
3. Chae, H.-W.; Choi, J.-H.; Song, J.-B. Robust and Autonomous Stereo Visual-Inertial Navigation for Non-Holonomic Mobile Robots. IEEE Trans. Veh. Technol. 2020, 69, 9613–9623.
4. Tarrit, K.; Molleda, J.; Atkinson, G.A.; Smith, M.L.; Wright, G.C.; Gaal, P. Vanishing point detection for visual surveillance systems in railway platform environments. Comput. Ind. 2018, 98, 153–164.
5. Ali, M.A.H.; Lun, A.K. A cascading fuzzy logic with image processing algorithm–based defect detection for automatic visual inspection of industrial cylindrical object’s surface. Int. J. Adv. Manuf. Technol. 2019, 102, 81–94.
6. Faugeras, O.; Toscani, G. The calibration problem for stereo. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Miami Beach, FL, USA, 22–26 June 1986; pp. 15–20.
7. Tsai, R. A versatile camera calibration technique for high-accuracy 3D machine vision metrology using off-the-shelf TV cameras and lenses. IEEE J. Robot. Autom. 1987, 3, 323–344.
8. Weng, J.; Cohen, P.; Herniou, M. Camera calibration with distortion models and accuracy evaluation. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 965–980.
9. Gao, H.; Wu, C.; Gao, L.; Li, B. An improved two-stage camera calibration method. In Proceedings of the 2006 6th World Congress on Intelligent Control and Automation, Dalian, China, 21–23 June 2006; Volume 1–12, pp. 9514–9518.
10. Ma, S. A self-calibration technique for active vision systems. IEEE Trans. Robot. Autom. 1996, 12, 114–120.
11. Yang, C.; Wang, W.; Hu, Z. An active vision based camera intrinsic parameters self-calibration technique. Chin. J. Comput. 1998, 21, 428–435.
12. Gibbs, J.A.; Pound, M.P.; French, A.P.; Wells, D.M.; Murchie, E.H.; Pridmore, T.P. Active Vision and Surface Reconstruction for 3D Plant Shoot Modelling. IEEE/ACM Trans. Comput. Biol. Bioinform. 2019, 17, 1907–1917.
13. Xu, D.; Zhang, D.; Liu, X.; Ma, L. A Calibration and 3-D Measurement Method for an Active Vision System with Symmetric Yawing Cameras. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.
14. Xu, G.; Chen, F.; Li, X.; Chen, R. Closed-loop solution method of active vision reconstruction via a 3D reference and an external camera. Appl. Opt. 2019, 58, 8092–8100.
15. Faugeras, O.; Luong, Q.; Maybank, S. Camera self-calibration: Theory and experiments. In Proceedings of the 2nd European Conference on Computer Vision, Margherita Ligure, Italy, 19–22 May 1992; pp. 321–334.
16. Maybank, S.; Faugeras, O. A theory of self-calibration of a moving camera. Int. J. Comput. Vis. 1992, 8, 123–151.
17. Yuan, C.; Liu, X.; Hong, X.; Zhang, F. Pixel-Level Extrinsic Self Calibration of High Resolution LiDAR and Camera in Targetless Environments. IEEE Robot. Autom. Lett. 2021, 6, 7517–7524.
18. Guan, B.; Yu, Y.; Su, A.; Shang, Y.; Yu, Q. Self-calibration approach to stereo cameras with radial distortion based on epipolar constraint. Appl. Opt. 2019, 58, 8511–8521.
19. Liu, S.; Peng, Y.; Sun, Z.; Wang, X. Self-calibration of projective camera based on trajectory basis. J. Comput. Sci. 2018, 31, 45–53.
20. Zhao, Y.; Li, Y. Camera self-calibration from projection silhouettes of an object in double planar mirrors. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 2017, 34, 696.
21. Führ, G.; Jung, C.R. Camera Self-Calibration Based on Nonlinear Optimization and Applications in Surveillance Systems. IEEE Trans. Circuits Syst. Video Technol. 2015, 27, 1132–1142.
22. Sun, J.; Wang, P.; Qin, Z.; Qiao, H. Effective self-calibration for camera parameters and hand-eye geometry based on two feature points motions. IEEE/CAA J. Autom. Sin. 2017, 4, 370–380.
23. El Akkad, N.; Merras, M.; Baataoui, A.; Saaidi, A.; Satori, K. Camera self-calibration having the varying parameters and based on homography of the plane at infinity. Multimed. Tools Appl. 2018, 77, 14055–14075.
24. Wan, B.; Zhang, D. Calibration method of camera intrinsic parameters with invariance of cross-ratio. Comput. Eng. 2008, 34, 261–262.
25. Wu, Y.; Zhu, H.; Hu, Z.; Wu, F. Camera calibration from the quasi-affine invariance of two parallel circles. In Proceedings of the 8th European Conference on Computer Vision, Prague, Czech Republic, 11–14 May 2004; Volume 3021, pp. 190–202.
26. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.
27. Chatterjee, C.; Roychowdhury, V. Algorithms for coplanar camera calibration. Mach. Vis. Appl. 2000, 12, 84–97.
28. Ying, X.; Peng, K.; Hou, Y.; Guan, S.; Kong, J.; Zha, H. Self-Calibration of Catadioptric Camera with Two Planar Mirrors from Silhouettes. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1206–1220.
29. Zhang, H.; Wong, K. Self-Calibration of Turntable Sequences from Silhouettes. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 5–14.
30. Biao, H.; Ming, S.; Lei, S. Vision recognition and framework extraction of loquat branch-pruning robot. J. South China Univ. Technol. Nat. Sci. 2015, 43, 114–119, 126.

| Number of Iterations | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | 53.91 | −92.53 | 1145.24 | −35.25 | 1130.92 | 1048.54 | 142.65 | 1061.38 |
| 2 | 24.83 | −123.48 | 1143.43 | −31.78 | 1116.75 | 1033.76 | 135.73 | 1070.73 |
| 3 | 31.52 | −116.36 | 1144.05 | −32.74 | 1120.17 | 1037.26 | 136.99 | 1068.96 |
| 4 | 30.25 | −117.72 | 1143.98 | −32.59 | 1119.48 | 1036.58 | 136.78 | 1069.24 |
| 5 | 30.48 | −117.50 | 1143.97 | −32.60 | 1119.58 | 1036.62 | 136.80 | 1069.21 |
| 6 | 30.45 | −117.51 | 1143.93 | −32.61 | 1119.58 | 1036.67 | 136.80 | 1069.26 |
| 7 | 30.45 | −117.51 | 1143.93 | −32.61 | 1119.58 | 1036.67 | 136.80 | 1069.26 |
| 8 | 30.45 | −117.51 | 1143.93 | −32.61 | 1119.58 | 1036.67 | 136.80 | 1069.26 |
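The convergence visible in the iteration table above can be checked mechanically. The following Python sketch is only an illustration of a stopping rule for step 7: the function name and the tolerance `tol` are assumptions, not values from the paper, and the rows are copied from the table without assigning meanings to the columns, whose headers are not recoverable here.

```python
def first_converged_iteration(history, tol=0.01):
    """Return the 1-based iteration whose values all differ from the
    previous iteration by at most `tol`, or None if none does."""
    for n in range(1, len(history)):
        current, previous = history[n], history[n - 1]
        if all(abs(a - b) <= tol for a, b in zip(current, previous)):
            return n + 1
    return None


# Rows of the iteration table (iterations 1 to 8).
history = [
    [53.91, -92.53, 1145.24, -35.25, 1130.92, 1048.54, 142.65, 1061.38],
    [24.83, -123.48, 1143.43, -31.78, 1116.75, 1033.76, 135.73, 1070.73],
    [31.52, -116.36, 1144.05, -32.74, 1120.17, 1037.26, 136.99, 1068.96],
    [30.25, -117.72, 1143.98, -32.59, 1119.48, 1036.58, 136.78, 1069.24],
    [30.48, -117.50, 1143.97, -32.60, 1119.58, 1036.62, 136.80, 1069.21],
    [30.45, -117.51, 1143.93, -32.61, 1119.58, 1036.67, 136.80, 1069.26],
    [30.45, -117.51, 1143.93, -32.61, 1119.58, 1036.67, 136.80, 1069.26],
    [30.45, -117.51, 1143.93, -32.61, 1119.58, 1036.67, 136.80, 1069.26],
]

print(first_converged_iteration(history))
```

With this tolerance the rule fires at iteration 7, the first row that repeats its predecessor to two decimal places.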

| Parameters | Algorithm in This Paper | Zhang’s Algorithm | Relative Error |
|---|---|---|---|
| u0 | 647.30 | 646.81 | 0.08% |
| v0 | 542.05 | 540.23 | 0.34% |
| k1 | −5.9035 × 10⁻⁷ | −5.9325 × 10⁻⁷ | 0.44% |
| k2 | 2.3674 × 10⁻¹³ | 2.3891 × 10⁻¹³ | 0.91% |
| kx | 2279.10 | 2283.04 | 0.17% |
| ky | 2759.30 | 2770.13 | 0.39% |
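The relative-error column can be reproduced with a one-line formula. The sketch below assumes that the error is the absolute difference divided by the value from Zhang's algorithm, taken as the reference; the paper's exact definition is not shown in this section, and the function name is hypothetical.

```python
def relative_error_percent(value, reference):
    """Relative error of `value` against `reference`, in percent."""
    return abs(value - reference) / abs(reference) * 100.0


# u0 and k2 from the comparison table: this paper's value, then Zhang's.
print(round(relative_error_percent(647.30, 646.81), 2))
print(round(relative_error_percent(2.3674e-13, 2.3891e-13), 2))
```

For these two rows the results round to the 0.08% and 0.91% listed in the table.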


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, B.; Zou, S. A New Camera Calibration Technique for Serious Distortion. Processes 2022, 10, 488. https://doi.org/10.3390/pr10030488
