Abstract

Accurate recognition of Activities of Daily Living (ADL) plays an important role in providing assistance and support to the elderly and cognitively impaired. Current knowledge-driven and ontology-based techniques model object concepts from assumptions and everyday common knowledge of object use for routine activities. Modelling activities from such information can lead to incorrect recognition of particular routine activities, resulting in possible failure to detect abnormal activity trends. Where such prior knowledge is not available, these techniques become virtually unemployable. A significant step in the recognition of activities is the accurate discovery of the object usage for specific routine activities. This paper presents a hybrid framework for the automatic consumption of sensor data and for associating object usage with routine activities using Latent Dirichlet Allocation (LDA) topic modelling. This process enables the recognition of simple activities of daily living from object usage and interactions in the home environment. The evaluation of the proposed framework on the Kasteren and Ordonez datasets shows that it yields better results compared to existing techniques.

1. Introduction

Activity recognition is an important area of research in pervasive computing due to its significance in the provision of support and assistance to the elderly, disabled and cognitively impaired. It is the process of identifying what an individual is doing, e.g., sleeping, showering, or cooking. Research efforts so far have focused on the use of video [1,2], wearable sensors [3,4] and wireless sensor networks [5,6] to monitor simple human activities. Video based activity recognition captures body images which are segmented and then classified using context based analysis. Unlike sensor based activity recognition, video based approaches suffer from the difficulty of accurately segmenting captured images, which affects the classification process, and they also raise privacy concerns.

Sensor based activity recognition uses sensors to monitor object usage from the interactions of users with objects. Typically, it follows data-driven techniques, knowledge-driven techniques, or a combination of both to identify activities. Data driven approaches use machine learning and statistical methods which involve discovering data patterns to make activity inferences. Research efforts [5,6,7] show the strengths of the approach in the learning process. In most cases, the inferences of data driven approaches are hidden or latent, so the identified activities must be expressed in a format that the end user can understand. In addition, data driven approaches suffer from an inability to integrate context aware features to enhance activity recognition.

On the other hand, knowledge driven and ontology-based methods model activities as concepts, associating them with everyday knowledge of object usage within the home environment through a knowledge engineering process. The modelling process involves associating low-level sensor data with the relevant activity to build a knowledge base of activities in relation to sensors and objects. Activities are then recognised by logical inference, subsumption reasoning, or both. In comparison to data driven techniques, knowledge driven techniques are more expressive, and their inferences are usually in a format easily understood by the end user [8]. Knowledge driven techniques in most cases depend on everyday knowledge of activities and object use to construct activity ontologies. This knowledge of object use mostly comes from assumptions, from regular everyday knowledge of which objects are used for routine activities, or even from wiki-know-how (http://www.wikihow.com) [9].

In this paper, we follow a sensor based activity recognition approach in which sensors capture object use as the result of interactions with objects in the home environment. We regard object use and interactions as atomic events that lead to particular routine activities. The same objects can be involved in different activities, and some activities have shared or similar object use, which makes the process of identifying an activity from object use complex. The approach we propose specifically follows object use as events and entries to recognise activities. This is in line with what is obtainable in real world situations and most home environments. Our motivation stems from the premise that knowledge driven activity recognition constructed from the everyday knowledge of object use may not fit certain activity situations or capture routine activities in specific home environments. If an activity model has been developed from generic or assumed knowledge of object use, the recognition model may fail because the fit between activities and objects differs between individuals and home environments. Generic ontology models have been designed and developed, as in Chen et al. [7], to emphasise re-usability and share-ability.

As a way forward, it is essential to accommodate object concepts that are specific to the routine activities with regard to the individual and the contextual environment. To provide assistance and support to the elderly and cognitively impaired, the recognition of their ADLs must be accurate and precise with regard to the object use events. In this context, the major aim of this paper is to present a framework for activity recognition that extends knowledge driven activity recognition techniques to include a process of acquiring knowledge of object use to describe the contexts of activity situations. This framework also addresses the problem where object use for routine activities has not been predefined. Thus, the challenge becomes discovering the specific object use for particular routine activities. This paper focuses on the process of recognising ADLs as a step towards the provision of support and assistance to the elderly, disabled and cognitively impaired. It also emphasises knowledge driven techniques and shows that the knowledge acquisition process can be extended beyond generic and common knowledge of object use to build an activity ontology. Given these, the work in this paper harnesses the complementary strengths of data driven and knowledge driven techniques to provide solutions to the limitations and challenges highlighted above.

In particular, this paper makes the following contributions:

- The ability to deal with streaming data and segmentation using a novel time-based windowing technique that augments sensor data with temporal properties.

- Automated activity-object use discovery in context for the likely object use for specific routine activities. We use Latent Dirichlet Allocation (LDA) topic modelling through activity-object use discovery to acquire knowledge for concept formation as part of an ontology knowledge acquisition and learning system.

- An extension of the traditional activity ontology to include the knowledge concepts acquired from the activity-object use discovery and context description, which is vital for recognition, especially where object use for routine activities has not been predefined.

- A methodology to model fine grain activity situations that combines ontology formalism with a precedence property and the 4D fluent approach, recognising that activities are a result of atomic events occurring in patterns and orders.

- The evaluation and validation of the proposed framework to show that it outperforms the current state-of-the-art knowledge based recognition techniques.

The remainder of the paper is organized as follows. Section 2 provides an overview of the related works, while Section 3 describes the proposed activity recognition approach. Section 4 provides experimental results for Kasteren and Ordonez datasets to validate the proposed framework. Finally, Section 5 concludes this paper.

2. Related Work

Activity recognition approaches can be classified into two broad categories: data driven and knowledge driven approaches. This classification is based on the methodologies adopted and on how activities are modelled and represented in the recognition process. Data driven approaches can be generative or discriminative. According to [8], the generative approach builds a complete description of the input (data) space. The resulting model induces a classification boundary which can be applied to classify observations during inference. The classification boundary is implicit, and a large amount of activity data is required to induce it. Generative classification models include Dynamic Bayes Networks (DBN) [10,11], Hidden Markov Models (HMM) [12,13], Naive Bayes (NB) [14,15], and the Latent Dirichlet Allocation (LDA) topic model [16,17]. Discriminative models, as opposed to generative models, do not allow generating samples from the joint distribution of the models [8]. Discriminative classification models include nearest neighbour [18,19], decision trees [20], support vector machines (SVMs) [21,22], conditional random fields (CRF) [23], multiple eigenspaces [24], and K-Means [18].

Data driven approaches have the advantage of handling incomplete data and managing noisy data. A major drawback associated with data driven approaches is that they lack the expressiveness to represent activities.

Topic modelling, inspired by the text and natural language processing community, has been applied to discover and recognise human activity routines by Katayoun and Gatica-Perez [16] and Huynh et al. [3]. Huynh et al. [3] applied the bag of words model of the Latent Dirichlet Allocation (LDA) to discover activities such as dinner, commuting and office work.

Whilst Katayoun and Gatica-Perez [16] discovered activity routines from mobile phone data, Huynh et al. [3] used wearable sensors attached to the body parts of the user. The activities discovered in both works were latent, lacked expressiveness and offered minimal opportunities to integrate context rich features. Our work differs significantly from Katayoun and Gatica-Perez [16] and Huynh et al. [3] in that the modelling uses an ontology activity model.

Knowledge driven ontology models follow web ontology language (OWL) theories for the specification of conceptual structures and their relationships. Ontology based activity recognition is a key area within knowledge-driven approaches. It involves the use of ontological activity modelling and representation to support activity recognition and assistance. An ontology is a formal and explicit specification of a shared conceptualization of a problem domain [25]. The vocabulary for modelling a domain is provided by specifying the objects and concepts, properties, and relationships; domain and prior knowledge are then used to predefine activity models and so define activity ontologies [8]. Latfi et al. [26] proposed an ontology framework for a telehealth smart home aimed at providing support for elderly persons suffering from loss of cognitive autonomy.

Chen et al. [8,27] proposed an ontology-based approach to activity recognition, in which they constructed context and activity ontologies for explicit domain modelling. Sensor activations over a period of time are mapped to individual contextual information and then fused to build a context at any specific time point. Subsumption reasoning was used to classify the activity ontologies, thus inferring the ongoing activity. Knowledge driven ontology models also follow web ontology language (OWL) theories for the specification of conceptual structures and their relationships [28]. OWL has been widely used for modelling human activities for recognition, which most of the time involves the description of activities by their specifications using their object and data properties [27]. In ontology modelling, domain knowledge is required to encode activity scenarios, but it also allows the use of assumptions and common sense domain knowledge to build the activity scenarios that describe the conditions that drive the derivation of the activities [29]. Recognising the activity then requires the modelled data to be fed to the ontology reasoner for classification. Chen et al. [8] and Okeyo et al. [29] followed generic activity knowledge to develop an ontology model for smart home users. Whilst these approaches, which model activities from common sense domain knowledge and its associated heuristics, are commendable, they often lead to incorrect activity recognition due to a lack of specificity in the object use and contexts describing the activity situations. The problem arises because generic models cannot capture considerations specific to the object use for routine activities, home settings and individual object usage between differing users. They also do not follow evidenced patterns of object usage and activity evolution, as they rely on generic know-how and how-tos to build ontology models.

In view of these limitations, we apply an LDA enabled activity discovery technique to discover the likely object use for specific routine activities, augmenting the generic ontology modelling process of [8]. The framework we propose in this paper also extends our previous works [30,31] by the inclusion of the ontology activity model.

3. Overview of Our Activity Recognition Approach

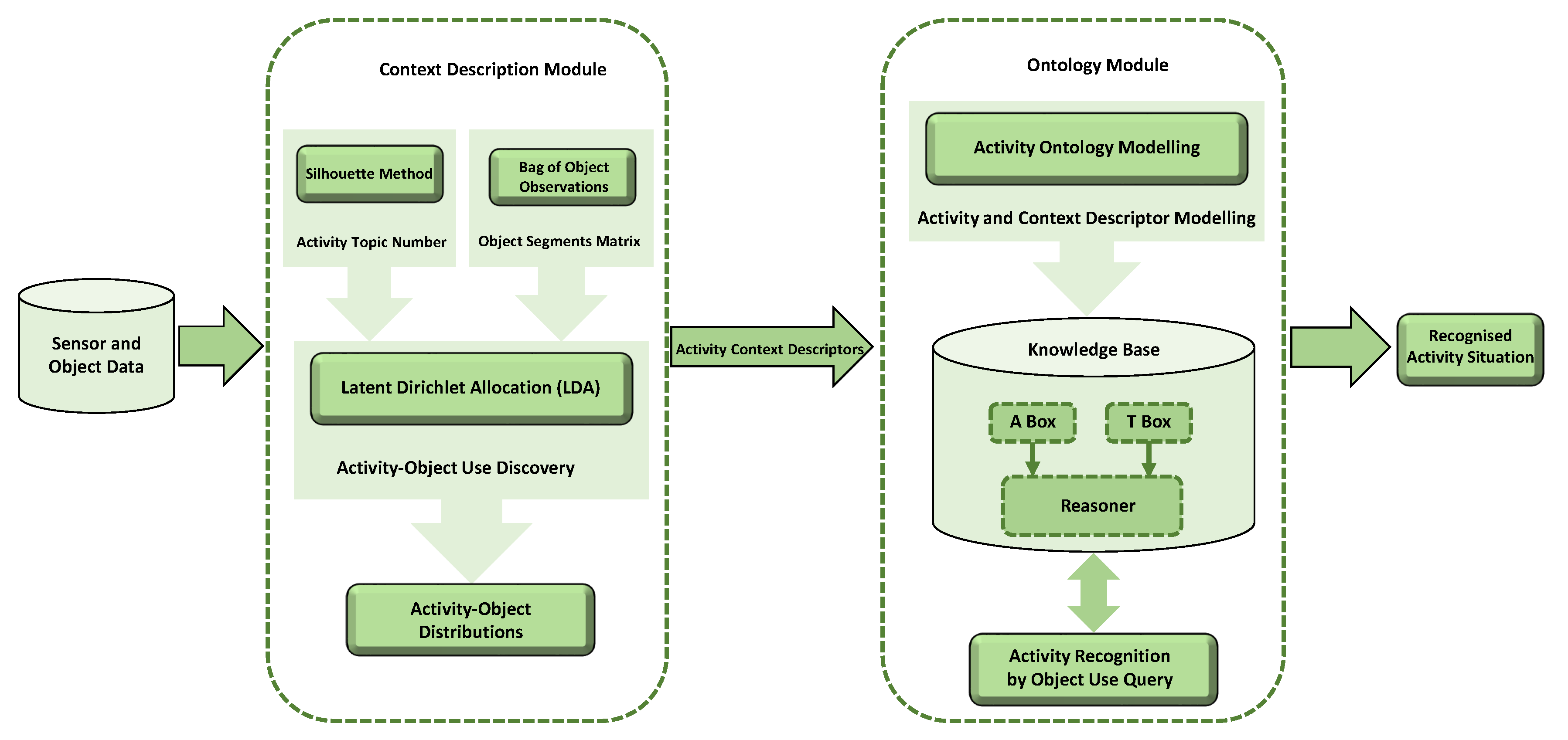

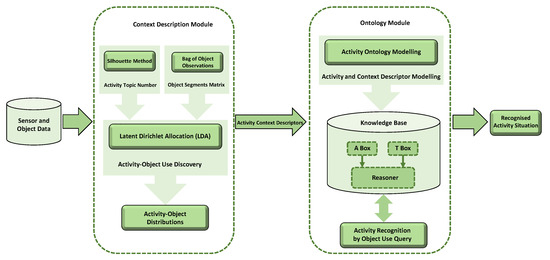

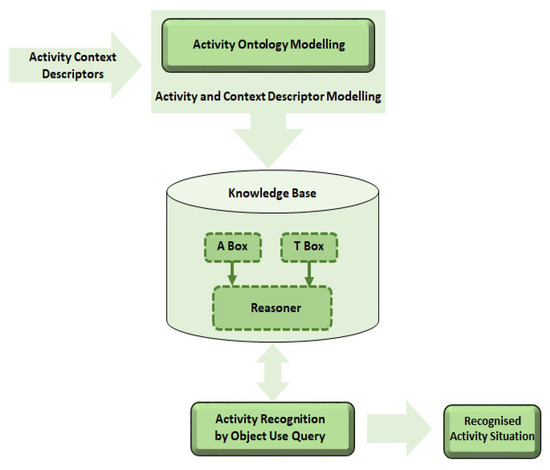

To achieve activity recognition, the proposed framework (Figure 1) supports object use as contexts of activity situations through activity-object use discovery, information fusion of activity and object concepts, activity ontology design, development and modelling, and then activity recognition.

Figure 1. An overview of the activity recognition framework.

The framework consists of two component modules: the context description module and the ontology module. In the context description module, object use for specific routine activities is discovered as activity-object use distributions and activity context descriptors using an LDA topic model. In the ontology module, an activity ontology is developed using the discovered object use for the respective activities as ontology concepts. Activity recognition is achieved by querying the activity ontology with the observed object use for the relevant activity situations. As a unified framework, the functions of these component modules are integrated to provide a seamless activity recognition platform which takes as input the sensor and object use observations captured in the home environment, representing atomic events of object interactions. We describe the component modules in detail in the following subsections.

3.1. Context Description Module

The context description module augments the traditional knowledge driven activity recognition framework [7,27,32]. Its main function is to provide the knowledge of object use for specific routine activities as activity-object use distributions and activity context descriptors. The object use for specific routine activities forms the contexts describing the specific routine activity situations, hence the name context description module. To provide the basis for activity recognition, the knowledge of object use for the respective activity concepts is required. This knowledge is necessary because activities, as high-level events, are a result of low-level tasks or atomic events of object interactions. Traditional knowledge driven activity recognition frameworks [7,9] model ontologies from generic object use assumptions or everyday knowledge of object use. However, the traditional (generic) models may not fit every home setting or environment, and this may lead to erroneous object descriptions of low-level tasks and eventually to incorrect activity recognition. To design and model activity situations ontologically, an accurate process must be employed for acquiring the knowledge of object use, or context descriptors, which describe activities or map to activities as higher level events. The context description module performs its function through a process of activity-object use discovery and an activity context description based on that discovery. We briefly describe this modular process below.

3.1.1. Activity-Object Use Discovery by Latent Dirichlet Allocation

The activity-object use discovery uses the LDA approach introduced by Blei et al. [33]. The LDA generatively classifies a corpus of documents as a multinomial distribution of latent topics. It takes advantage of the assumption that there are hidden themes or latent topics which have associations with the words contained in a corpus of documents. It requires the bag of words (documents) from a corpus of documents as input and the number of topics as a key parameter. In the context of our activity-object use discovery process, the activity topic number and the bag of sensor observations correspond to the topic number and the bag of words of the LDA, respectively. We explain below how the activity topic number and the bag of sensor observations are determined.

- Activity Topic Number: A key parameter needed by the LDA process is the topic number used to discover the likely object use. The number of activities, that is, the number of activity topics, corresponds to the LDA topic number. We determine the number of distinct activities in the dataset by applying the silhouette method through K-Means clustering. Huynh et al. [17] applied K-Means clustering to partition a sensor dataset and used the results of the clustering process to construct documents of different weights. In a slightly different way, we propose applying K-Means clustering to determine our topic number. The clustering process partitions any given dataset into clusters and, in our case, each cluster represents a candidate activity resulting from the object interactions therein. Our approach uses this process to determine the number of activity topics, which is a measure of the optimal number of clusters. An optimal number of clusters/activities is an important parameter that will maximize the recognition accuracy of the whole framework. To achieve this, we apply the concept of silhouette width, which identifies the difference between the within-cluster tightness and the separation from the rest. Theoretically, it is a measure of the quality of clusters [34]. The silhouette width $s(i)$ of an entity $i$ from $N$ can be computed from:

  $$s(i) = \frac{b(i) - a(i)}{\max\{a(i),\, b(i)\}} \qquad (1)$$

  where $a(i)$ is the average distance between $i$ and all other entities belonging to its cluster, and $b(i)$ is the minimum of the average distances between $i$ and the entities in other clusters. When normalised, the silhouette width values range between −1 and 1. If all the silhouette width values are close to 1, then the entities are well clustered. The highest mean silhouette width over different values of k then suggests the optimal number of clusters. The optimal number of clusters for the dataset is indicative of the number of activities in the dataset and of the number of activity topics.

- Bag of Object Observations: The bag of object observations we propose is analogous to the bag of words used in LDA text and document analysis. In text and document analysis, a document (bag) in a corpus of texts can be represented as a set of words with their associated frequencies, independent of their order of occurrence [35]. We follow the bag of words approach to represent discrete observations of objects or sensors in specific time windows, generated as events in the use of or interaction with home objects. In this regard, we refer to it as the bag of object observations. To bag the objects, the stream of observed sensor or object data is partitioned into segments of suitable time intervals. The objects and the partitioned segments then correspond, respectively, to the words and documents of the bag of words. If a dataset is given by D made of $x_1, \dots, x_X$ objects, D can be partitioned using suitable sliding time window intervals into $d_1, \dots, d_M$ segments similar to Equation (2):

  $$D = \{d_1, d_2, \dots, d_M\} \qquad (2)$$

  The observed objects $x_1, \dots, x_X$ in each of the segments $d_1, \dots, d_M$ are then represented with their associated frequencies $f$ to form a segment-object-frequency matrix similar to the schema given in Equation (3):

  $$\begin{array}{c|cccc} & x_1 & x_2 & \cdots & x_X \\ \hline d_1 & f_{11} & f_{12} & \cdots & f_{1X} \\ d_2 & f_{21} & f_{22} & \cdots & f_{2X} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ d_M & f_{M1} & f_{M2} & \cdots & f_{MX} \end{array} \qquad (3)$$

  We further describe the bag of object observations with Scenario 1.
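The silhouette criterion described in the Activity Topic Number item can be illustrated with a minimal sketch. It assumes Euclidean distance, and the feature vectors and candidate cluster labels are hypothetical stand-ins for what a K-Means run would return for each k; the names `silhouette_widths`, `mean_silhouette` and `candidate_labels` are ours and not part of the framework.

```python
import math

def silhouette_widths(points, labels):
    """Silhouette width (b - a) / max(a, b) for every entity."""
    clusters = {}
    for idx, label in enumerate(labels):
        clusters.setdefault(label, []).append(idx)
    widths = []
    for i, label in enumerate(labels):
        own = [j for j in clusters[label] if j != i]
        if not own or len(clusters) < 2:
            widths.append(0.0)  # convention for a singleton or a single cluster
            continue
        # a: average distance to the other entities of the same cluster
        a = sum(math.dist(points[i], points[j]) for j in own) / len(own)
        # b: smallest average distance to the entities of any other cluster
        b = min(
            sum(math.dist(points[i], points[j]) for j in members) / len(members)
            for other, members in clusters.items()
            if other != label
        )
        denom = max(a, b)
        widths.append((b - a) / denom if denom else 0.0)
    return widths

def mean_silhouette(points, labels):
    widths = silhouette_widths(points, labels)
    return sum(widths) / len(widths)

# Hypothetical 2-D feature vectors forming three tight groups.
points = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0), (20.0, 0.0), (20.0, 1.0)]
# Hypothetical labels, as a K-Means run might return for k = 2 and k = 3.
candidate_labels = {2: [0, 0, 0, 0, 1, 1], 3: [0, 0, 1, 1, 2, 2]}
scores = {k: mean_silhouette(points, labels) for k, labels in candidate_labels.items()}
best_k = max(scores, key=scores.get)  # k with the highest mean silhouette width
```

In this toy case the three-cluster labelling has the higher mean silhouette width, so `best_k` would be taken as the activity topic number.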

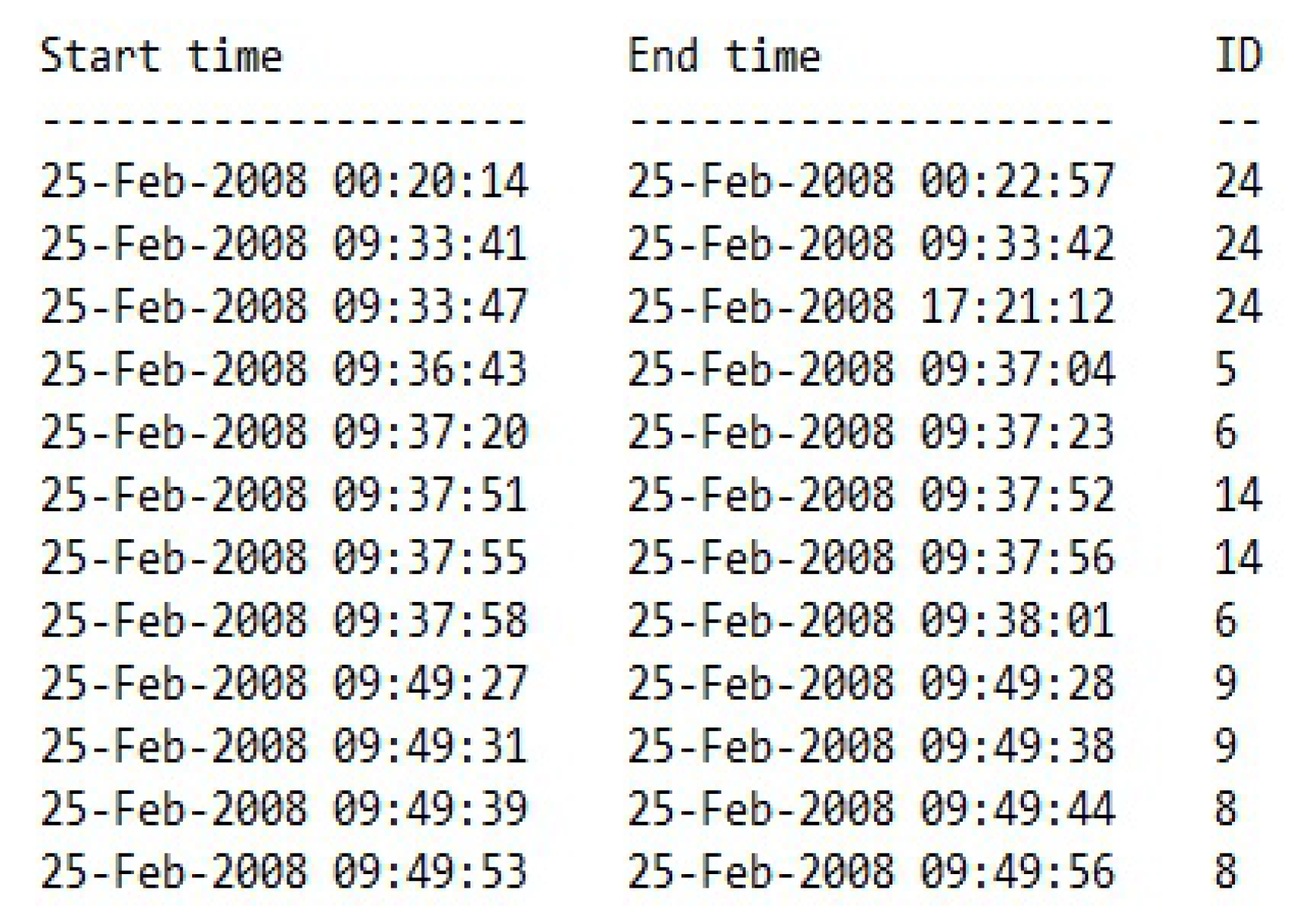

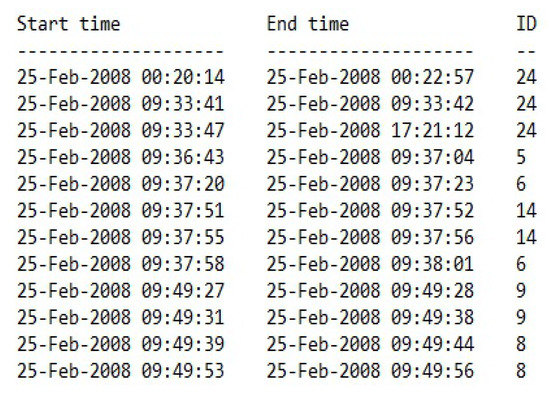

Scenario 1: We describe the formation of the bag of object observations using a part of the Kasteren House A dataset (https://sites.google.com/site/tim0306/datasets), as illustrated in Figure 2. The observed object data are partitioned into segments using a sliding window of 60 s intervals so that the objects are distributed as follows: Hall-Bedroom Door belongs to Segments 1–3; Hall-Toilet Door, Hall-Bathroom Door and ToiletFlush belong to Segment 4; Hall-Bathroom Door belongs to Segment 5; and Plates Cupboard and Fridge belong to Segment 6. The objects in each of the segments, with their associated frequencies, form a segment-object-frequency matrix representing the bag of object observations. Further, the objects are represented as aliases, e.g., Hall-Bedroom Door (BE), Hall-Toilet Door (TO), Hall-Bathroom Door (BA), ToiletFlush (TF), Fridge (FR), Plates Cupboard (PC), etc., to be encoded onto the bag of sensor observations as given in Equation (4).

Figure 2. A part of the Kasteren House A dataset with Objects/Sensors ID represented as: 24 = Hall-Bedroom Door; 5 = Hall-Toilet Door; 6 = Hall-Bathroom Door; 14 = ToiletFlush; 9 = Plates Cupboard; and 8 = Fridge.
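As a rough illustration of Scenario 1, the sketch below builds a segment-object-frequency matrix from sensor activations. The start and end times are hypothetical values chosen only to reproduce the segment membership stated in the scenario, and consecutive fixed 60 s windows are assumed in place of whatever windowing details the actual implementation uses; the function name `bag_of_object_observations` is ours.

```python
from collections import Counter

def bag_of_object_observations(events, window=60):
    """Build a segment-object-frequency matrix.

    events: list of (start_s, end_s, object_alias) sensor activations.
    An activation is counted once in every window it overlaps.
    """
    t0 = min(start for start, _, _ in events)
    segments = {}
    for start, end, obj in events:
        first = (start - t0) // window
        last = (end - t0) // window
        for seg in range(first, last + 1):
            segments.setdefault(seg, Counter())[obj] += 1
    vocab = sorted({obj for _, _, obj in events})
    matrix = [
        [segments.get(seg, Counter())[obj] for obj in vocab]
        for seg in range(max(segments) + 1)
    ]
    return vocab, matrix

# Hypothetical timings (seconds) consistent with the segments of Scenario 1.
events = [
    (0, 150, "BE"),    # Hall-Bedroom Door: Segments 1-3
    (185, 190, "TO"),  # Hall-Toilet Door: Segment 4
    (200, 205, "BA"),  # Hall-Bathroom Door: Segment 4
    (210, 215, "TF"),  # ToiletFlush: Segment 4
    (250, 255, "BA"),  # Hall-Bathroom Door: Segment 5
    (310, 315, "PC"),  # Plates Cupboard: Segment 6
    (320, 330, "FR"),  # Fridge: Segment 6
]
vocab, matrix = bag_of_object_observations(events)
for row in matrix:
    print(row)
```

Each row is one segment and each column one object alias (in sorted order), which is the document-word layout that the LDA step expects.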

The LDA takes advantage of the assumption that there are hidden themes or latent topics which have associations with the words contained in a corpus of documents. It uses the bag of words in the corpus of documents, which are generatively classified into latent themes or topics and word distributions. We apply this assumption to the activity-object pattern discovery context: latent activity topics would have associations with the features of the object data in the partitioned segments of the bag of sensor observations discussed above. The documents are presented in the form of object segments composed of co-occurring object data observations. With D composed of object segments similar to Equation (2), each segment $d_i$ would be made of objects represented as $x_{i1}, \dots, x_{in}$ from the X objects of D. The LDA places a Dirichlet prior $P(\theta \mid \alpha)$ with parameter $\alpha$ on the document-topic distributions $P(z \mid \theta)$. It assumes a Dirichlet prior distribution on the topic mixture parameters $\theta$ and $\phi$ to provide a complete generative model for the documents D. $\theta$ describes a D × Z matrix of document-specific mixture weights for the Z topics (one row per segment), each drawn from a Dirichlet($\alpha$) prior with hyperparameter $\alpha$. $\phi$ is an X × Z matrix of word-specific mixture weights over the X objects for the Z activity topics, drawn from Dirichlet($\beta$), which is a Dirichlet prior. Computing the probability of a corpus, or observed object dataset, is equivalent to finding the parameter $\alpha$ for the Dirichlet distribution and the parameter $\beta$ for the topic-word distributions $P(x \mid z, \beta)$ that maximize the likelihood of the data for the documents $d \in D$, as given in Equation (5), following Gibbs sampling for LDA parameter estimation [33]:

$$P(D \mid \alpha, \beta) = \prod_{d \in D} \int P(\theta_d \mid \alpha) \left( \prod_{n=1}^{N_d} \sum_{z_{dn}} P(z_{dn} \mid \theta_d)\, P(x_{dn} \mid z_{dn}, \beta) \right) \mathrm{d}\theta_d \qquad (5)$$

where $N_d$ is the number of object observations in segment $d$.

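Given the assumptions above, the activity-object patterns can be calculated from Equation (6):

$$P(x \mid z) = \frac{n_{x,z} + \beta}{\sum_{x'=1}^{X} n_{x',z} + X\beta} \qquad (6)$$

where $n_{x,z}$ is the number of times an object $x$ is assigned to a topic $z$.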

In the context of activity recognition, modelling activity concepts for recognition relies on the probabilistic distribution of the objects given the activity topics. The LDA topic model, $P(x \mid z)$, computes the object use distributions that are linked to specific activity topics. The object distributions for the activity topics obtained through the LDA topic model provide the activity context description needed to build an activity ontology model with regard to the specific object use. This provides the object use concepts for the respective activity concepts needed to construct the activity ontology model. If we use the activity topic number from a given dataset D and the bag of sensor observations as inputs, then the object use distributions specific to the routine activities can be determined, with results similar to Equation (7). With this, we would have discovered the activity-object use distributions as activity context descriptors for the specific routine activities. The process ends with the LDA assigning objects to specific activity topics. With regard to Scenario 1, the LDA assigns objects to the various routine activities as activity-object use distributions, e.g., ToiletFlush and Hall-Toilet Door are assigned to the activity topic Toileting.
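To make the assignment of objects to activity topics concrete, the following is a minimal sketch of a generic collapsed Gibbs sampler for LDA over object segments. It is not the implementation used in this work: the hyperparameter values, iteration count, seed and the function name `lda_gibbs` are assumptions, and the toy segments simply reuse the aliases of Scenario 1. It returns the topic-object counts and the smoothed object distribution per topic.

```python
import random

def lda_gibbs(segments, n_topics, n_objects, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Collapsed Gibbs sampling for LDA; segments are lists of object indices."""
    rng = random.Random(seed)
    n_dz = [[0] * n_topics for _ in segments]           # segment-topic counts
    n_zx = [[0] * n_objects for _ in range(n_topics)]   # topic-object counts
    n_z = [0] * n_topics                                # tokens per topic
    assign = []
    for d, seg in enumerate(segments):                  # random initial topics
        topics = [rng.randrange(n_topics) for _ in seg]
        for x, z in zip(seg, topics):
            n_dz[d][z] += 1
            n_zx[z][x] += 1
            n_z[z] += 1
        assign.append(topics)
    for _ in range(iters):
        for d, seg in enumerate(segments):
            for i, x in enumerate(seg):
                z = assign[d][i]                        # remove current assignment
                n_dz[d][z] -= 1
                n_zx[z][x] -= 1
                n_z[z] -= 1
                weights = [
                    (n_dz[d][k] + alpha) * (n_zx[k][x] + beta) / (n_z[k] + n_objects * beta)
                    for k in range(n_topics)
                ]
                z = rng.choices(range(n_topics), weights=weights)[0]
                assign[d][i] = z                        # record the resampled topic
                n_dz[d][z] += 1
                n_zx[z][x] += 1
                n_z[z] += 1
    # Smoothed estimate of P(object | topic) from the final counts.
    phi = [
        [(n_zx[k][x] + beta) / (n_z[k] + n_objects * beta) for x in range(n_objects)]
        for k in range(n_topics)
    ]
    return phi, n_zx

vocab = ["BE", "TO", "BA", "TF", "PC", "FR"]
# Segments 1-6 of Scenario 1, encoded as indices into vocab.
segments = [[0], [0], [0], [1, 2, 3], [2], [4, 5]]
phi, n_zx = lda_gibbs(segments, n_topics=3, n_objects=len(vocab))
```

With so few observations the resulting topics are not meaningful; the sketch only shows where the assignment counts and the per-topic object distributions come from.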

3.1.2. Context Descriptors for Routine Activities

The knowledge base of a knowledge driven activity recognition system depends on a set of activity and object concepts carefully encoded ontologically to represent the activity descriptions. The knowledge representation is such that, for a particular activity as a concept, there are object concepts which are used to describe the activity. In essence, the activity is specified by linking and associating it with objects as context descriptors. In some cases the activity concepts are structured hierarchically, allowing additional and more general contextual properties to encode the activity descriptions alongside the main associated object concepts. Activities, when performed, generate sensor events that result from object interactions and object usage in the home environment. Understanding the activities and how they are performed relies on breaking down the knowledge of the respective object usage for the specific routine activities. The activity-object use discovery process provides the knowledge of the objects used for the routine activities. Our aim is to use the activity-object use discovery to provide the conceptual model for annotating the routine activities with their context descriptors in an automated manner, overcoming one of the major hurdles of using knowledge-based approaches. The discovered object usage then becomes the context descriptors for the activity concepts in the activity ontology. The context descriptor specifications for the routine activities provide the link and relationship between the objects and the activities.

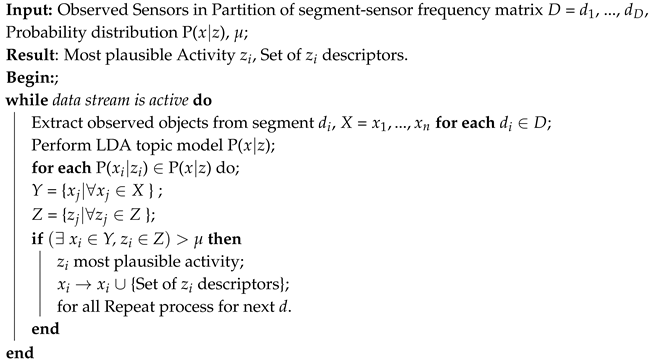

This link and relationship is carried onto the ontology layer to help provide class relationships and property assertions among the domain concepts. The process of context description utilises the object assignments to specific activity topics. It uses the number of times an object has been assigned to an activity and applies a threshold to decide whether the object is a context describing the activity. If $n_{x,z_1}, \dots, n_{x,z_K}$ are the number of times an object $x$ has been assigned to the activity topics $z_1, \dots, z_K$, then $x$ becomes a context descriptor for the activity topic $z_k$ if $n_{x,z_k}$ is greater than a threshold value $\tau$. The idea is that for an object to be a context describing an activity topic, it must have been assigned to that activity topic a number of times greater than the threshold $\tau$. We determined $\tau$ by computing the mean M of the number of object assignments to the K topics and the standard deviation $\sigma$ of the number of times an object has been allocated to an activity topic (see expression (8)). Finally, the context descriptors for the specific routine activities are obtained using Algorithm 1, which depends on the activity-object distributions from the LDA and on $\tau$.

These context descriptors for the routine activities become the knowledge acquired from the object usage and the needed information of object and activity concepts to be encoded onto the activity ontology.

Algorithm 1. Algorithm for context descriptors of activities.

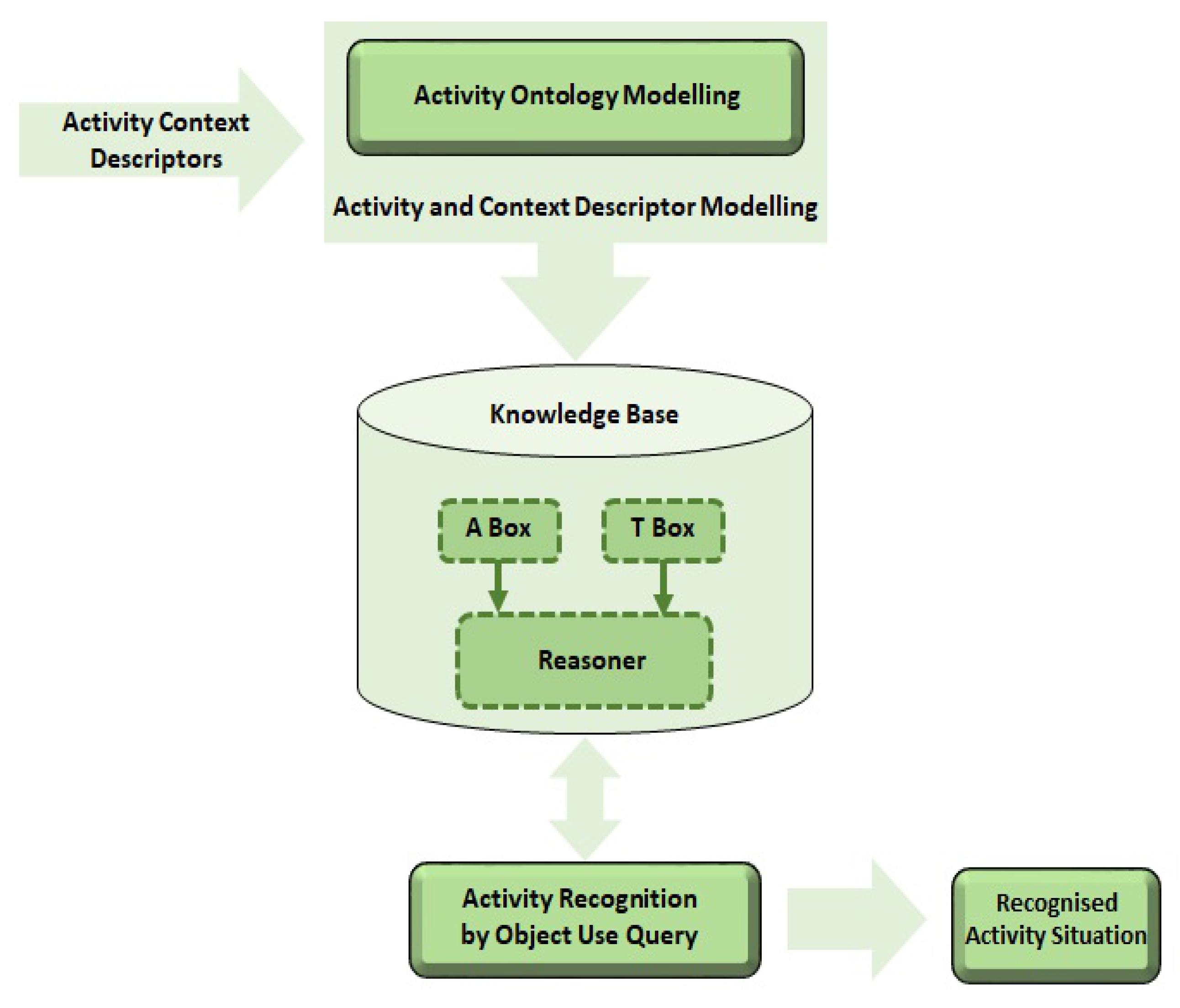
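The thresholding step can be sketched as follows. Because the exact form of expression (8) is not reproduced in the text, the combination $\tau = M + c\,\sigma$, the per-object computation of M and $\sigma$, the knob `c`, and all counts below are assumptions made purely for illustration; the function name `context_descriptors` is ours and this is not a transcription of Algorithm 1.

```python
from statistics import mean, pstdev

def context_descriptors(assignments, topics, c=1.0):
    """Select context descriptors per activity topic.

    assignments: object alias -> list of assignment counts, one per topic.
    An object describes a topic when its count exceeds tau = M + c * sigma,
    where M and sigma are computed over that object's counts (assumed rule).
    """
    descriptors = {topic: [] for topic in topics}
    for obj, counts in assignments.items():
        tau = mean(counts) + c * pstdev(counts)
        for topic, count in zip(topics, counts):
            if count > tau:
                descriptors[topic].append(obj)
    return descriptors

topics = ["Toileting", "Breakfast", "Sleeping"]
# Hypothetical topic-assignment counts for four objects.
assignments = {
    "TF": [9, 1, 2],
    "TO": [8, 0, 1],
    "FR": [0, 7, 1],
    "BE": [1, 0, 10],
}
result = context_descriptors(assignments, topics)
```

Under these assumed counts, ToiletFlush and Hall-Toilet Door are retained as descriptors of Toileting, which mirrors the example given for Scenario 1.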

3.2. Ontology Module

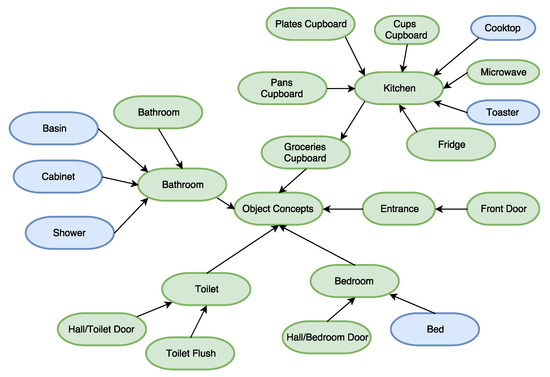

The ontology module, as illustrated in Figure 3, is composed of the knowledge base, a repository of information consisting of the modelled activity ontology concepts, data, and rules used to support activity recognition. Similar to other knowledge bases, it functions as a repository where information can be collected, organized, shared and searched. The activities and the context descriptors from the context description module are designed and developed following description logic and knowledge representation formalisms, and then added to the knowledge base. The knowledge base is made of the TBox, the ABox and the reasoner. The TBox is the terminological box made of the activity concepts and the relevant context descriptors of object use, defined and encoded as ontology concepts. The ontological design and development process gradually populates the TBox by encoding the activities and context descriptors from the context description module as ontology concepts. The ABox is the assertional box made of the instances and individuals of the concepts encoded in the TBox. They are asserted through properties that may be object or data properties. For all the terminological concepts in our TBox, instances and individuals are asserted through different properties to populate our ABox. In addition to the activity and context descriptor concepts and instances, we also added temporal concepts and instantiated them following the 4D fluent approach to allow for a realistic reflection of activity evolution and transition. In addition, based on their temporal properties, such as the usual time of occurrence, activities can be modelled as static or dynamic activities. The resultant activity ontology, created by fusing the likely object use and behavioural information from the activity context descriptions, makes activity inferencing possible.

The reasoner checks the relationships between the concepts in the TBox and the consistency of the individuals and instances in the ABox to perform activity recognition by information retrieval. The eventual results of the information retrieval are the activities or activity situations.

Figure 3. An overview of the activity ontology module.
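The retrieval step can be pictured with a deliberately simplified stand-in for the reasoner. The sketch below assumes the TBox is reduced to a mapping from activity concepts to their context descriptors and ranks activities by the Jaccard overlap with the observed objects; the overlap measure, the descriptor sets and the name `query_activity` are all assumptions, and a description logic reasoner does considerably more than this.

```python
def query_activity(observed, tbox):
    """Rank activity concepts by overlap between observed objects and descriptors."""
    scores = {}
    for activity, descriptors in tbox.items():
        union = observed | descriptors
        scores[activity] = len(observed & descriptors) / len(union) if union else 0.0
    best = max(scores, key=scores.get)
    # Report no activity when nothing overlaps at all.
    return (best if scores[best] > 0 else None), scores

# Hypothetical context descriptors per activity concept.
tbox = {
    "Toileting": {"TF", "TO"},
    "Breakfast": {"FR", "PC", "Microwave"},
}
activity, scores = query_activity({"TF", "TO", "BA"}, tbox)
```

Here the observed Hall-Toilet Door, ToiletFlush and Hall-Bathroom Door events retrieve Toileting, since two of the three observed objects are its descriptors and none belongs to Breakfast.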

The process of modelling an activity concept resulting from a set of sensors and object use requires asserting all the object concepts, together with their times, to represent the activity situation (see Table 1 and Table 2 for a list of the concepts and notations used in our analogies). If an activity situation Breakfast is the result of Microwave_On and Fridge_On at times t1 and t2 respectively, then Breakfast can be asserted with the properties hasUse and hasStartTime as hasUse(Microwave_On, hasStartTime t1), hasUse(Fridge_On, hasStartTime t2). The activity is therefore modelled as a list of the objects with their times, ordered temporally. The example of Breakfast from Microwave_On and Fridge_On at t1 and t2 can then be encoded by Equation (9).
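The Breakfast example can be mirrored in a small data-structure sketch. This is only an analogy in Python rather than an OWL encoding: the class names `ObjectUse` and `ActivitySituation` are hypothetical, and the numeric values standing in for t1 and t2 are arbitrary.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class ObjectUse:
    """One hasUse assertion together with its hasStartTime value."""
    obj: str
    start_time: float

@dataclass
class ActivitySituation:
    name: str
    uses: list = field(default_factory=list)

    def has_use(self, obj, start_time):
        """Assert an object use and keep the list ordered temporally."""
        self.uses.append(ObjectUse(obj, start_time))
        self.uses.sort(key=lambda use: use.start_time)
        return self

t1, t2 = 60.0, 120.0  # arbitrary stand-ins for the times t1 and t2
breakfast = ActivitySituation("Breakfast")
# Assertions may arrive in any order; the list stays sorted by start time.
breakfast.has_use("Fridge_On", t2).has_use("Microwave_On", t1)
ordered = [use.obj for use in breakfast.uses]
```

Keeping the list sorted by start time reflects the statement that an activity is modelled as a temporally ordered list of objects.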

Table 1. Ontology concepts used.

Table 2. Ontology notations used.

Typically, activity situations or activities in the home environment are a result of specific object use. To model activity situations accurately, it is important to extend the traditional activity ontology modelling to include the specific resources or object use for the specific routine activity. The activity context descriptors resulting from the activity-object use discovery form the resource and object use concepts to be modelled onto the activity ontology for the specific routine activities such that:

Activities: The activity topics are annotated as activity concepts analogous to the activity situations in the home environment. This represents a class collection of all types of activities, set as $Z = \{z_1, \dots, z_K\}$.

Objects: These represent a class collection of all objects as activity context descriptors in the home environment, set as $X = \{x_1, \dots, x_n\}$.

Recall that $P(x \mid z)$ computes the likely object use for each of the activity topics; an activity $z_k$ from $Z$ would then have a subset of likely objects defined as $X_k \subseteq X$. The function f maps the activity context descriptor objects $X_k$ to the activity $z_k$ as the likely object use for this activity such that:

If the function f is replaced with the object property function in hasUse, then Equation (10) becomes:

The expressions below encode the enhanced sensor or object outputs with their temporal attributes.

The activity context descriptors are then modelled as resources and objects class concepts accordingly and then added to the ABox so that:

3.2.1. Modelling Fine Grain Activity Situations

In reality, activities are the result of multiple step-wise atomic tasks or sensor events. It is important to consider this when modelling the concepts in an ontology, given that the order or precedence of events may in some cases lead to different types of activities. The role value expressed by ○ ⊑ holds the relationship of transitivity between , and [32,36]. Therefore, transitivity can be applied to a list of ontology concepts to express precedence. Given this, we introduce the property relationship hasLastObject to specify the precedence relationship between objects in use. With hasLastObject, we can extend our ontology to include the order of a list of context descriptors, especially when modelling fine grain activity situations. Given an activity Z which can specifically be achieved in the order of objects , and then , a stepwise process expressed with Equation (14) can be followed to assert and recognise this activity situation given the order and precedence of object evolution.

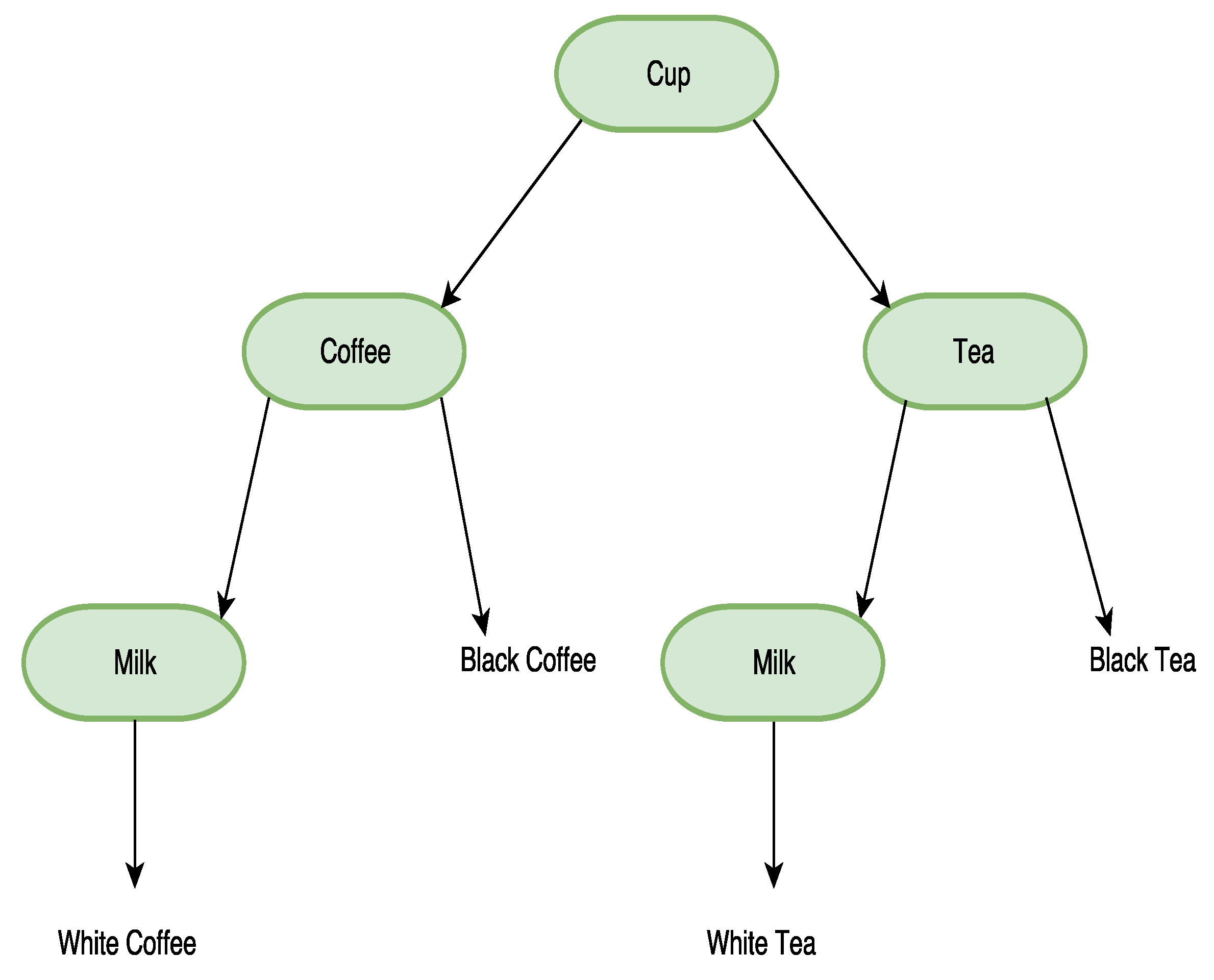

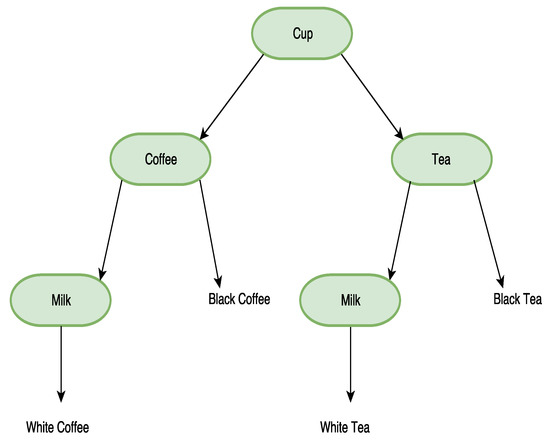

The significance of this ordering is that each of these objects, as an event, can lead to multiple activity situations; specifying the precedence refines the activity based on the context that describes it. We demonstrate this further using the scenario below, as illustrated in Figure 4.

Figure 4.

The four kitchen objects and the possible resulting activity situations.

Scenario 2: Suppose a kitchen environment has the objects Cup, Coffee, Milk and Tea. White Coffee, Black Coffee, Black Tea and White Tea are four likely activity situations that could result from combinations of these objects, based on preference. The tree illustrated in Figure 4 shows these four activity situations. The property relationship hasLastObject can be used to specify precedence, which then results in granular activities of choice. Possible orders of object use with Cup as the first object used, leading to White Coffee, Black Coffee, White Tea and Black Tea, can then be encoded as Equations (13)–(16), respectively.

White Coffee: Cup, Coffee and Milk.

Black Coffee: Cup and Coffee.

White Tea: Cup, Tea and Milk.

Black Tea: Cup and Tea.

With order and precedence applied in a stepwise fashion, all four activities remain possible if Cup is used first. Including Coffee refines the situation to White Coffee and Black Coffee. A further step including Milk refines the activity situation to White Coffee. With the transitivity property of hasLastObject, each of the four activity situations can be reached, depending on the path followed.
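The stepwise refinement in Scenario 2 can be sketched in Python as prefix matching over ordered object paths. This is only an illustration under stated assumptions: the paths are taken from the four activity definitions above, while the dictionary `ACTIVITY_PATHS` and the helpers `candidates`, `recognise` and `precedes` are hypothetical names that stand in for the hasLastObject assertions and the ontological reasoning.

```python
# Ordered object paths for the four activity situations of Scenario 2.
ACTIVITY_PATHS = {
    "White Coffee": ("Cup", "Coffee", "Milk"),
    "Black Coffee": ("Cup", "Coffee"),
    "White Tea": ("Cup", "Tea", "Milk"),
    "Black Tea": ("Cup", "Tea"),
}


def candidates(observed):
    """Activities whose ordered path starts with the observed object sequence."""
    observed = tuple(observed)
    return sorted(
        name for name, path in ACTIVITY_PATHS.items()
        if path[:len(observed)] == observed
    )


def recognise(observed):
    """Activity whose full path equals the observed sequence, or None."""
    observed = tuple(observed)
    for name, path in ACTIVITY_PATHS.items():
        if path == observed:
            return name
    return None


def precedes(activity, earlier, later):
    """Transitive precedence: True if `earlier` comes before `later` on the path."""
    path = ACTIVITY_PATHS[activity]
    return earlier in path and later in path and path.index(earlier) < path.index(later)
```

With Cup alone, `candidates(["Cup"])` keeps all four activities; adding Coffee narrows the set to the two coffee activities, and adding Milk leaves only White Coffee.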

3.2.2. Static and Dynamic Activities

In the home environment, activities are performed in different ways and at different times within the 24 h day. Some activities are performed at specific times of the day, giving them static times of occurrence (static activities); others are performed at different or varying times of the day (dynamic activities); and, in some cases, the same or similar objects may be used to perform several of these activities. We illustrate this using Breakfast, Lunch, Dinner, Toileting and Showering as examples of activities in the home environment. Breakfast, Lunch and Dinner are examples of different activity concepts which can be performed with the same or similar object interactions, given that they are food related activities. However, they are performed at specific times of the day, making them static activities. Given their similarities, they can be modelled as subclasses of the activity Make Food; however, they differ with regard to their respective temporal properties. Whilst they inherit all the properties of Make Food by subsumption, they can easily be confused in the recognition process if they are modelled in the ontology without consideration of their usual times of performance. They can only be distinguished by specifying the time intervals in which they are usually performed.

On the other hand, activities such as Toileting and Showering can be performed at any time of the day, which makes distinguishing them less dependent on their temporal properties; hence, they are dynamic activities. Dynamic activities are not constrained within any time interval. We therefore extend the ontology of activity situations to include static and dynamic activities using the 4D-fluent approach [37], which requires the temporal class concepts TimeSlice and TimeInterval to be specified using the relational properties tsTimeSliceOf and tsTimeInterval respectively. The time intervals Interval1 and Interval2 hold the temporal information of the time slices for the static and dynamic activities respectively. An instance of a TimeSlice of an activity, whether static or dynamic, is linked to the activity by the property tsTimeSliceOf, and the property tsTimeInterval then links this instance of TimeSlice with an instance of the class TimeInterval.

Modelling a Static Activity: A static activity is modelled by specifying the TimeSlice and TimeInterval class concepts together with the context descriptors for that activity. The activity concepts described above are extended so that the hasUse object property encodes the usage of the objects for the static activity, with the static activity as the domain class concept and all the object classes that describe the context descriptors as the range. We further extend this with the temporal properties: tsTimeInterval has domain TimeSlice and Resources and range TimeInterval, and captures the specific time interval of the day through Interval (a subclass of TimeInterval). The time instants of the activity are captured through tsTimeSliceOf, with domain TimeSlice and Resources and range TimeSlice. Equation (19) encodes a static activity so that Interval1 asserts the time interval of the day in which the static activity is performed using the object .

Modelling a Dynamic Activity: Similar to a static activity, a dynamic activity is modelled by specifying the TimeSlice and TimeInterval class concepts together with the context descriptors for that activity. An instance of a TimeSlice of a dynamic activity is linked by the properties tsTimeSliceOf and tsTimeInterval, which link this instance of the class TimeSlice with an instance of the class TimeInterval, which may be Interval2. Interval2 covers the full 24 h cycle of the day, as asserted by Equation (21).
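The distinction between static and dynamic activities can be sketched in Python as follows. This is an illustrative data model rather than the OWL encoding: the class names mirror the ontology concepts, but the interval boundaries (06:00 to 10:00 for Breakfast, 17:00 to 21:00 for Dinner), the object state `ToiletFlush_On` and the helper `matching` are assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class TimeInterval:
    start: time
    end: time

    def contains(self, t: time) -> bool:
        return self.start <= t <= self.end


# Plays the role of Interval2: the full 24 h cycle used for dynamic activities.
FULL_DAY = TimeInterval(time(0, 0), time(23, 59, 59))


@dataclass(frozen=True)
class Activity:
    name: str
    uses: frozenset          # context descriptors (hasUse)
    interval: TimeInterval   # Interval1 (static) or Interval2 (dynamic)

    @property
    def is_static(self) -> bool:
        return self.interval != FULL_DAY


FOOD_OBJECTS = frozenset({"Microwave_On", "Fridge_On"})
ACTIVITIES = [
    Activity("Breakfast", FOOD_OBJECTS, TimeInterval(time(6, 0), time(10, 0))),
    Activity("Dinner", FOOD_OBJECTS, TimeInterval(time(17, 0), time(21, 0))),
    Activity("Toileting", frozenset({"ToiletFlush_On"}), FULL_DAY),
]


def matching(object_state, t):
    """Names of activities that use the object and whose interval contains t."""
    return [a.name for a in ACTIVITIES
            if object_state in a.uses and a.interval.contains(t)]
```

Breakfast and Dinner share the same context descriptors here, so only the time interval separates them, whereas Toileting matches at any time of the day.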

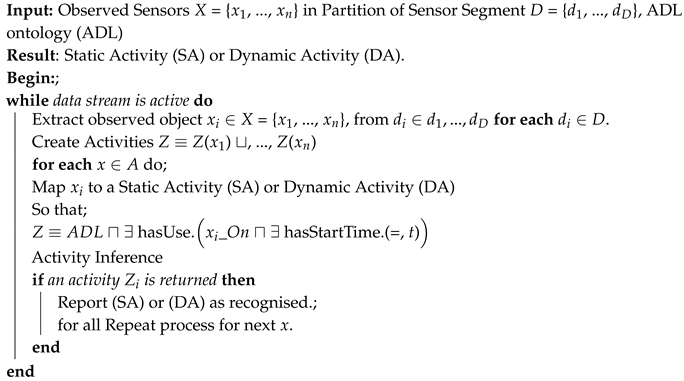

3.3. Activity Recognition by Object Use Query

The activity recognition process is enabled by Algorithm 2, which maps the observed objects to an activity situation. A comparison is made through reasoning over the ontology to retrieve the closest activity situation described by the contexts of the objects observed as sensor data. The activity recognition uses object use query-like constructs adapted from the Temporal Ontology Querying Language (TOQL) [37] on the knowledge base to retrieve activity situations that fit the requirements of the query. As an advantage, the sensor states and status of object use implemented in the activity ontology can be used in queries to reflect real situations of object usage in the home environment. A typical query comprises an SQL-like construct (SELECT–FROM–WHERE) for OWL which treats the ontology classes and properties as database tables and columns. An additional AT construct in the query compares the time interval for which a property is true with a time interval or instant.

Considering a scenario in the home environment where sensor status and outputs are captured and reported, Algorithm 2 enables the activity recognition process. The inputs are the observed sensors along their timelines as , …, from segments , …, . The process maps the sensor or object onto the ontology, applying inference rules to determine if is a context descriptor for a static or dynamic activity. If the mapping using the inference rule returns an activity, then it is reported as the activity recognised for the observed .

Algorithm 2: Activity recognition algorithm.
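The mapping step described above can be approximated with a short Python sketch. It is not a reproduction of Algorithm 2: the ontology is replaced by a hypothetical dictionary `ONTOLOGY` of context descriptors and time intervals, all object states and interval boundaries are illustrative, and the "closest activity" is assumed to be the one with the largest fraction of its descriptors observed within its interval.

```python
from datetime import time

ALL_DAY = (time(0, 0), time(23, 59, 59))

# activity -> (context descriptors, (interval start, interval end)); illustrative values
ONTOLOGY = {
    "Breakfast": ({"Microwave_On", "Fridge_On", "Cups_On"}, (time(6, 0), time(10, 0))),
    "Dinner": ({"Microwave_On", "Fridge_On", "Pans_On"}, (time(17, 0), time(21, 0))),
    "Drink": ({"Fridge_On", "Cups_On"}, ALL_DAY),
    "Showering": ({"Shower_On"}, ALL_DAY),
}


def recognise_segment(observations):
    """Return the closest activity for a segment of (object_state, time) pairs."""
    matched = {}
    for obj, t in observations:
        for name, (descriptors, (start, end)) in ONTOLOGY.items():
            # The object must be a context descriptor observed inside the interval.
            if obj in descriptors and start <= t <= end:
                matched.setdefault(name, set()).add(obj)
    if not matched:
        return None

    def coverage(name):
        # Fraction of the activity's descriptors that were observed.
        return len(matched[name]) / len(ONTOLOGY[name][0])

    # Ties are broken alphabetically because max keeps the first maximum.
    return max(sorted(matched), key=coverage)
```

In this toy model, a segment containing Fridge_On and Cups_On at 07:05 would be reported as Drink rather than Breakfast, which mirrors the kind of confusion between activities with shared object use discussed in Section 4.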

Scenario 3: Considering a real situation of object use and interaction in the home environment as represented in Table 3, the question would be: “What activity situation do these sensor outputs in Table 3 represent at the particular time?” The query construct to provide the activity situation at that particular time is given in the equation below.

Table 3.

A sample of sensor status and output.

4. Experiments and Results

To validate the framework presented in this paper, we used the Kasteren A [6] and Ordonez A [5] datasets, captured in two different home settings with similar events and activities (see Table 4 and Table 5 for an overview of the home setting descriptions and activity instances). Our choice of these datasets was driven by the fact that they contain a large number of sensor activations representing object use, with dense sensing applied. Different types of sensors (e.g., pressure sensors, and magnetic sensors tagged to home objects such as microwave, dishes, and cups) were used to capture object interactions representing the different activities. To further enhance the learning process, the activities contained therein were performed in varied ways and accurately annotated with the ground truth.

Table 4.

Home setting and description.

Table 5.

Activity instances in the Kasteren and Ordonez dataset.

We followed a four-fold cross-validation on the datasets. Our criterion for evaluation is to compare recognised activities with the ground truth provided with the dataset based on the average true positives TP, false positives FP and false negatives FN per activity. The results are then further evaluated based on precision, recall and F-score.

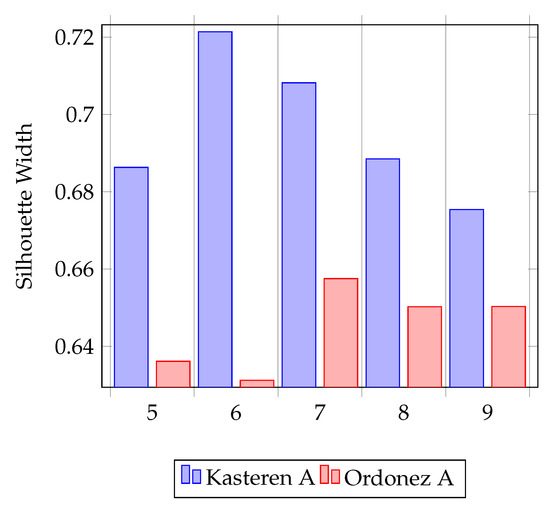
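As a minimal sketch of this evaluation step, the metrics can be computed from the per-activity counts as below. The standard definitions are assumed (precision = TP/(TP+FP), recall = TP/(TP+FN), and the F-score as the harmonic mean of the two), and the function name `precision_recall_f` is introduced here for illustration only.

```python
def precision_recall_f(tp, fp, fn):
    """Precision, recall and F-score from true/false positives and false negatives.

    Undefined ratios (zero denominators) are reported as 0.0 by convention.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f_score = 2 * precision * recall / (precision + recall)
    return precision, recall, f_score


# Example with made-up counts for a single activity
p, r, f = precision_recall_f(tp=8, fp=2, fn=2)
```

The counts in the example are invented and do not correspond to any result reported in this paper.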

4.1. Activity-Object Use Discovery and Context Description Evaluation

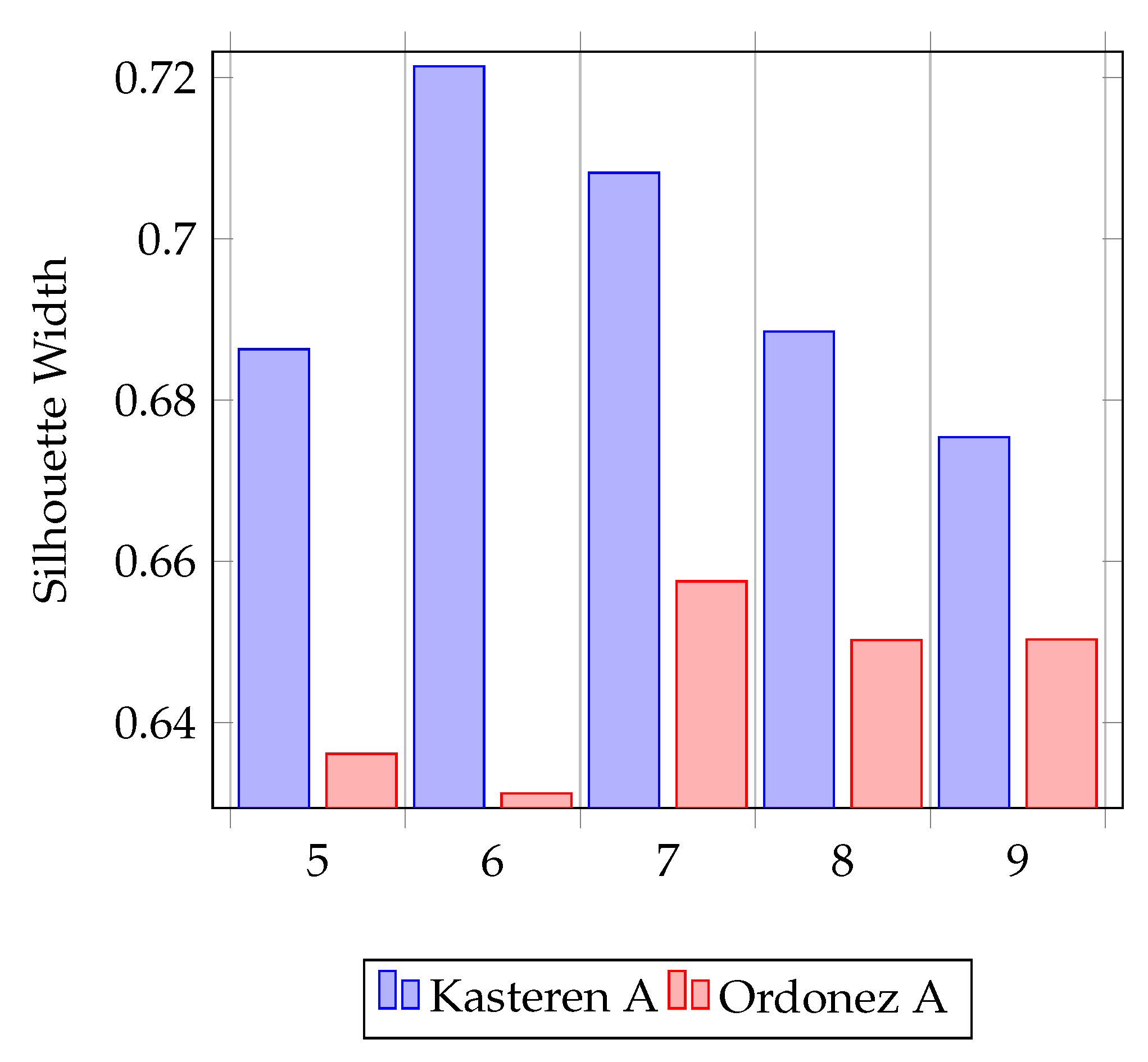

To generate the context descriptors for routine activities, we followed the context description process described in Section 3.1.1. Recall that the LDA process requires the number of activity topics and the bag of object observations. We determined the number of activity topics using the silhouette method with K-Means clustering rather than a random guess of the number of activities represented in the dataset. To do this, we first clustered the sensor data features using K-Means, varying the number of clusters from 5 to 10 as a reasonable range for the probable number of activities represented. We then performed the silhouette analysis, which measures the mean silhouette width of each of the clusters. Figure 5 summarises the silhouette width results, which indicate six and seven activity topics, respectively, for houses Kasteren A and Ordonez A.
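The selection criterion can be sketched with a small, dependency-free Python example. It computes the mean silhouette width for given cluster labels and picks the labelling with the highest value. The K-Means step itself is assumed to have been run separately; the toy points, the two candidate labellings and the function name `mean_silhouette_width` are illustrative assumptions rather than the features or code used in the experiments.

```python
from math import dist
from statistics import mean


def mean_silhouette_width(points, labels):
    """Mean silhouette width of a clustering (Euclidean distance)."""
    clusters = {}
    for p, label in zip(points, labels):
        clusters.setdefault(label, []).append(p)
    if len(clusters) < 2:
        raise ValueError("silhouette analysis needs at least two clusters")
    widths = []
    for p, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            widths.append(0.0)  # usual convention for singleton clusters
            continue
        # a: mean distance to the other members of the same cluster
        a = sum(dist(p, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(mean(dist(p, q) for q in other)
                for key, other in clusters.items() if key != label)
        widths.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return mean(widths)


# Toy feature vectors with two well separated groups
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
labellings = {
    2: [0, 0, 0, 1, 1, 1],   # labels as a 2-cluster K-Means run might return
    3: [0, 0, 1, 2, 2, 2],   # labels as a 3-cluster run might return
}
best_k = max(labellings, key=lambda k: mean_silhouette_width(points, labellings[k]))
```

For these toy points the two-cluster labelling has the larger mean silhouette width, so `best_k` is 2; in the experiments the same comparison is made over 5 to 10 clusters.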

Figure 5.

Silhouette width results for the Kasteren A and Ordonez A datasets indicative of the activity topics for the houses.

Next, we partitioned the dataset using 60-s sliding windows to construct the bag of object observations in the form of a segment-object-frequency matrix, as described in Section 3.1.1. For the LDA activity-object discovery process, we used the constructed bags of object observations as inputs together with the optimal activity topic numbers from the silhouette method, and we set the Dirichlet hyperparameters to and to 0.01 as recommended by Steyvers and Griffiths [35]. The context descriptors for the specific routine activities are obtained using Algorithm 1 above, which depends on the activity-object distributions from the LDA and . The idea is that for an object to be a context descriptor of an activity topic, it must have been assigned to that activity topic a number of times greater than the threshold . Further, we annotated some of the activity topics in line with the ground truth and matched them with their respective context descriptors, as given in Table 6 and Table 7.
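A minimal sketch of the segment-object-frequency matrix construction is given below. For simplicity it assumes non-overlapping 60-s windows and events supplied as (timestamp in seconds, object name) pairs; the function name `segment_object_matrix` and the sample events are hypothetical and are not taken from the datasets.

```python
from collections import Counter


def segment_object_matrix(events, window=60):
    """Build a segment-object-frequency matrix from (timestamp_s, object) events.

    Returns (segment indices, object vocabulary, matrix), where matrix[i][j] is
    the number of times object j was observed in segment i.
    """
    bags = {}
    for timestamp, obj in events:
        segment = int(timestamp // window)
        bags.setdefault(segment, Counter())[obj] += 1
    vocabulary = sorted({obj for _, obj in events})
    segments = sorted(bags)
    matrix = [[bags[s][obj] for obj in vocabulary] for s in segments]
    return segments, vocabulary, matrix


events = [(5, "Fridge"), (20, "Microwave"), (50, "Fridge"), (70, "Cups"), (130, "Fridge")]
segments, vocabulary, matrix = segment_object_matrix(events)
```

Each row of `matrix` is one bag of object observations and can be passed to an LDA implementation as a document-term count vector.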

Table 6.

Activity concepts and the discovered context descriptors for Kasteren House A.

Table 7.

Activity concepts and the discovered context descriptors for Ordonez House A.

We discovered six activity topics (from the seven activities in the Kasteren A ground truth) in this process, which we annotated as Leaving, Toileting, Showering, Sleeping, Make Food and Drink. Our Make Food activity in this case represents Breakfast and Dinner, which have the same or similar context descriptors and object usage. However, we are able to distinguish these activities through the ontology static activity modelling. The situation is similar for Ordonez A, with seven activities annotated as Leaving, Toileting, Showering, Sleeping, Make Food, Spare Time and Grooming. Our Make Food topic maps to the Ordonez House A activities Breakfast, Lunch and Snack.

We evaluated the outcome of this process by analysing the similarities and relatedness between the contexts describing the activities since they were discovered in an unsupervised manner. To do this, we use the Jaccard Similarity index [38] expressed as Equation (22) to measure the similarities of the context descriptors for all the activities. The Jaccard index measures similarities between sample sets A and B by the ratio of the size of their intersection to the union of the sample sets.

The resulting similarity score always lies in the interval between 0 and 1, because the size of the intersection is normalised by the size of the union of the sample sets being compared. The Jaccard index is 1 if the sample sets are equal and 0 when there are no matching elements. By applying this method, the resulting similarity indices for the context descriptors of the activity topics for Kasteren House A and Ordonez House A are presented in Table 8 and Table 9. Although there are no marked similarities between the context descriptors for the activities, we applied a threshold of greater than 0.5 to highlight similarities between the sets of context descriptors. Drink has a relatively high context similarity due to the use of Fridge, but this is still below the threshold.
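The Jaccard comparison of context descriptors can be sketched as follows. The descriptor sets are made-up examples and not the contents of Table 6 or Table 7; the helper `similar_pairs` and the convention of returning 1.0 for two empty sets are assumptions made for this illustration.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard index |A ∩ B| / |A ∪ B| of two collections treated as sets."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # assumed convention: two empty sets are identical
    return len(a & b) / len(a | b)


def similar_pairs(descriptors, threshold=0.5):
    """Pairs of activities whose descriptor sets have a Jaccard index above threshold."""
    return [(x, y) for x, y in combinations(sorted(descriptors), 2)
            if jaccard(descriptors[x], descriptors[y]) > threshold]


# Hypothetical context descriptors
descriptors = {
    "Make Food": {"Fridge", "Microwave", "Pans"},
    "Drink": {"Fridge", "Cups"},
    "Showering": {"Shower"},
}
```

With these sets, Make Food and Drink share only Fridge out of four distinct objects, giving an index of 0.25, which is below the 0.5 threshold.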

Table 8.

Jaccard similarity indices for context descriptors for Kasteren House A.

Table 9.

Jaccard similarity indices for context descriptors for Ordonez House A.

4.2. Activity Ontology and Recognition Performance

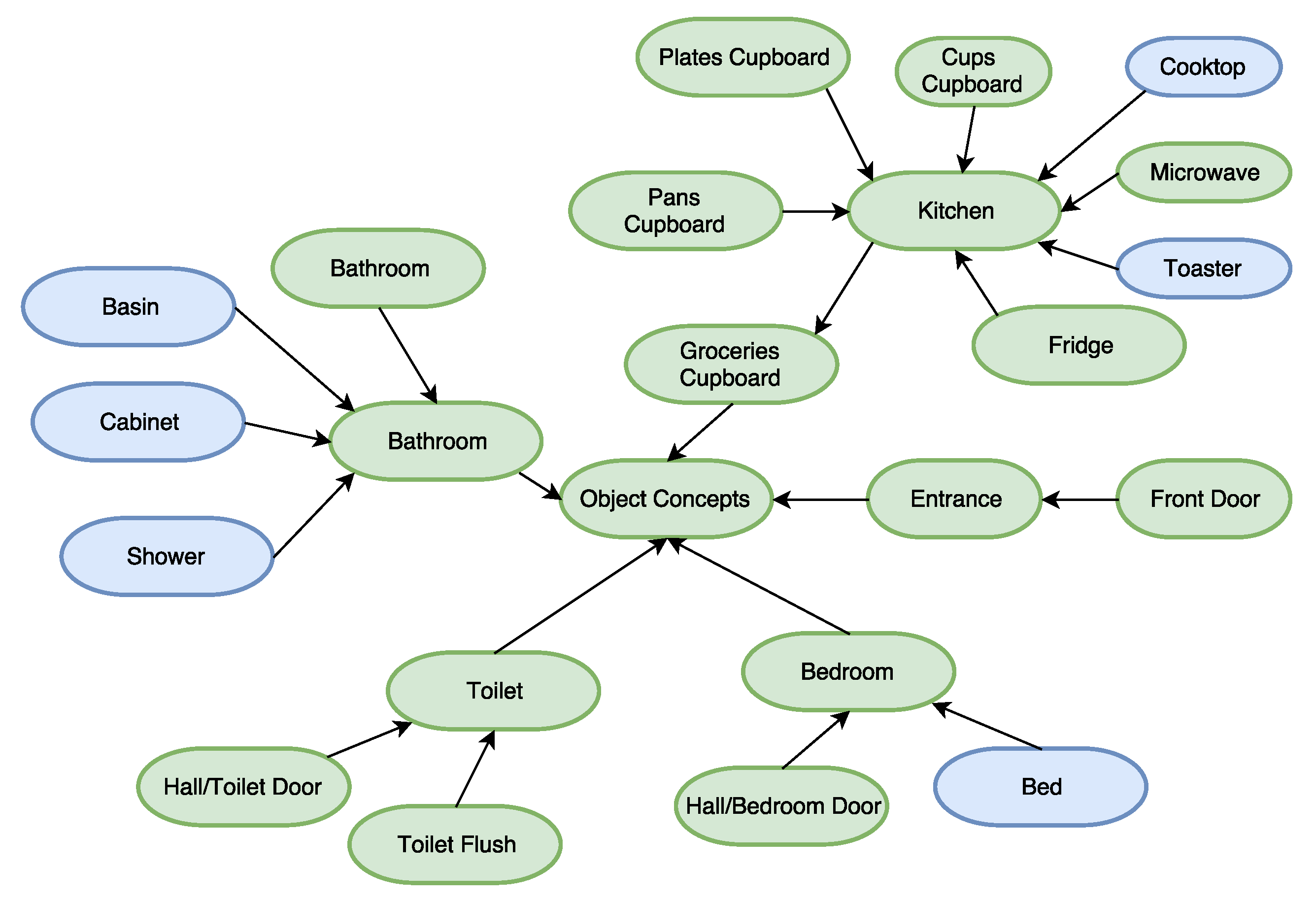

To this point, we have generated the context descriptors for the various activities for the Kasteren A and Ordonez A houses. To facilitate activity recognition and the eventual evaluation of the framework, we model the activities and the context descriptors from the previous subsection in an ontology activity model. To support a unified ontology model with common concepts that can be shared and reused across similar home environments, we developed a unified activity ontology for the Kasteren and Ordonez datasets, as illustrated in Figure 6. The green rounded rectangles represent object concepts common to both homes. The blue rounded rectangles represent Ordonez concepts that are not shared with the Kasteren concepts. This unified ontology model can also be extended and adapted further for similar homes, thus reducing the amount of time taken to construct and develop activity ontologies.

Figure 6.

Common object concepts for Kasteren and Ordonez Houses.

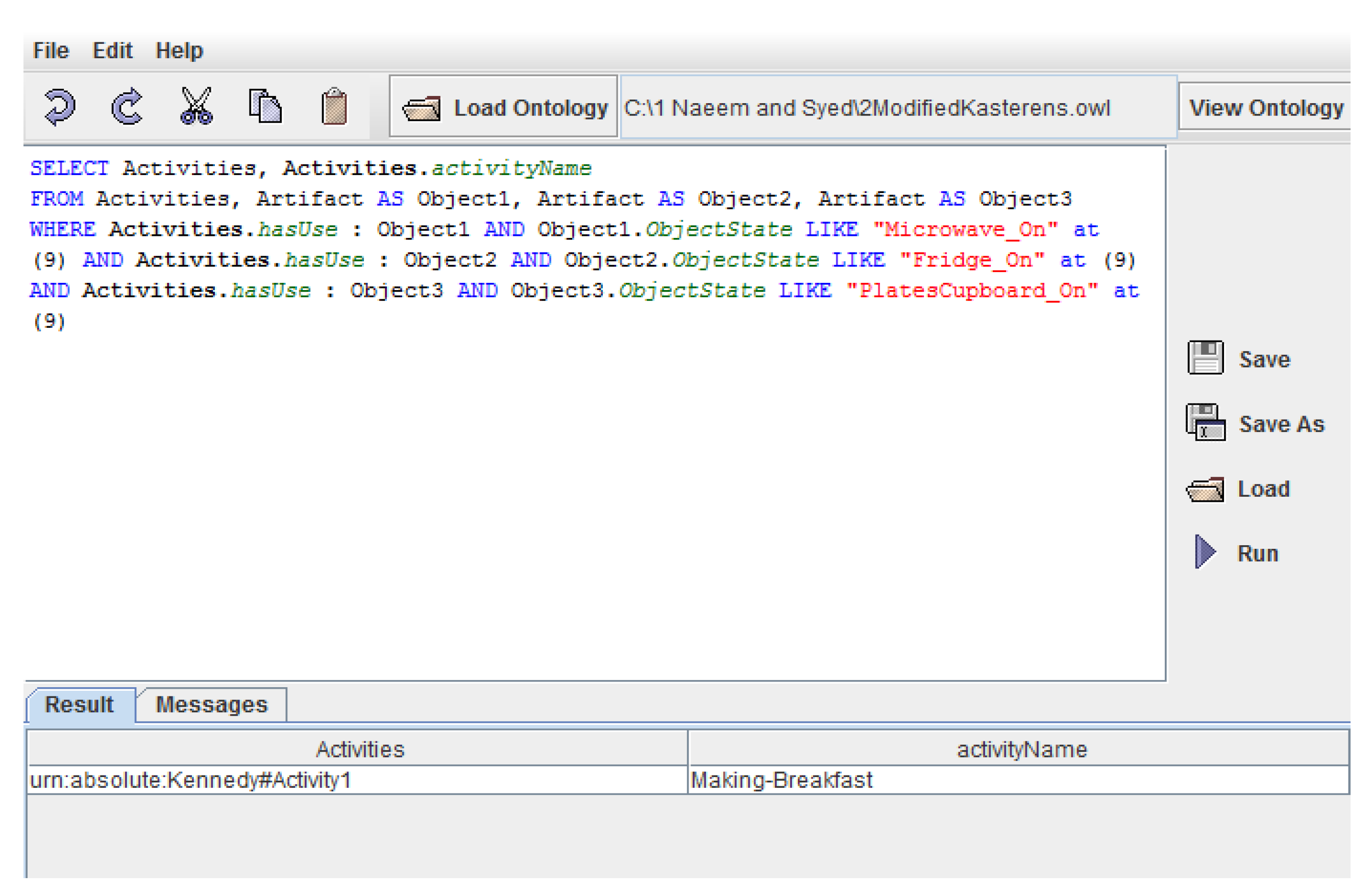

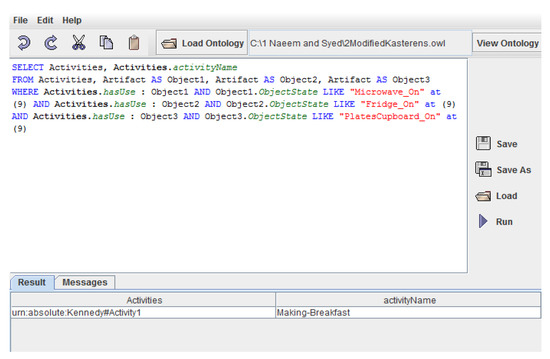

The activity concepts were modelled accordingly, with due consideration given to Make Food, which represents a group of activities. The Make Food activity, as its name implies, corresponds to a group of activities that involve making food, ranging from Breakfast to Dinner. For the Kasteren dataset, we class Breakfast and Dinner as static activities with the super class Make Food, since they share the same or similar context descriptors and are performed at specific times of the day. To further enhance shared ontology concepts and reuse, we harmonised the Kasteren and Ordonez activity concepts, as illustrated in Figure 6, onto the activity ontology to form a set of unified activity concepts. As with the object concepts, we colour coded the activity concepts in this unified set, with static and dynamic activities as super classes, so that green rounded rectangles represent common activity concepts and blue rounded rectangles represent activity concepts found in the Ordonez house but not in the Kasteren house. As part of our proposed framework, we added instances and individuals to the object concepts to make the model more expressive (the assertions used in populating the ABox), for example instantiating Microwave with Microwave_On to indicate the state of the object or sensor when in use. The ABox was further populated with assertions using object and data properties, as explained in Section 3.2, incorporating the context descriptors for the activity situations in preparation for activity recognition. The modelled activity situations or concepts are then linked to their respective context descriptors, as object states, through property assertions added to the ABox. Activity recognition is enabled by an object use query that retrieves activity situations based on observed sensor or object data. Algorithm 2 implements the recognition process.
To facilitate the execution of activity inference, the modelled activity ontology is imported into the TOQL environment [37], as illustrated in Figure 7. Object based queries are executed by mapping the observed objects and their temporal information from the dataset to the closest activity in the imported activity ontology through ontological reasoning.

Figure 7.

Activity Recognition implementation from an object based query.

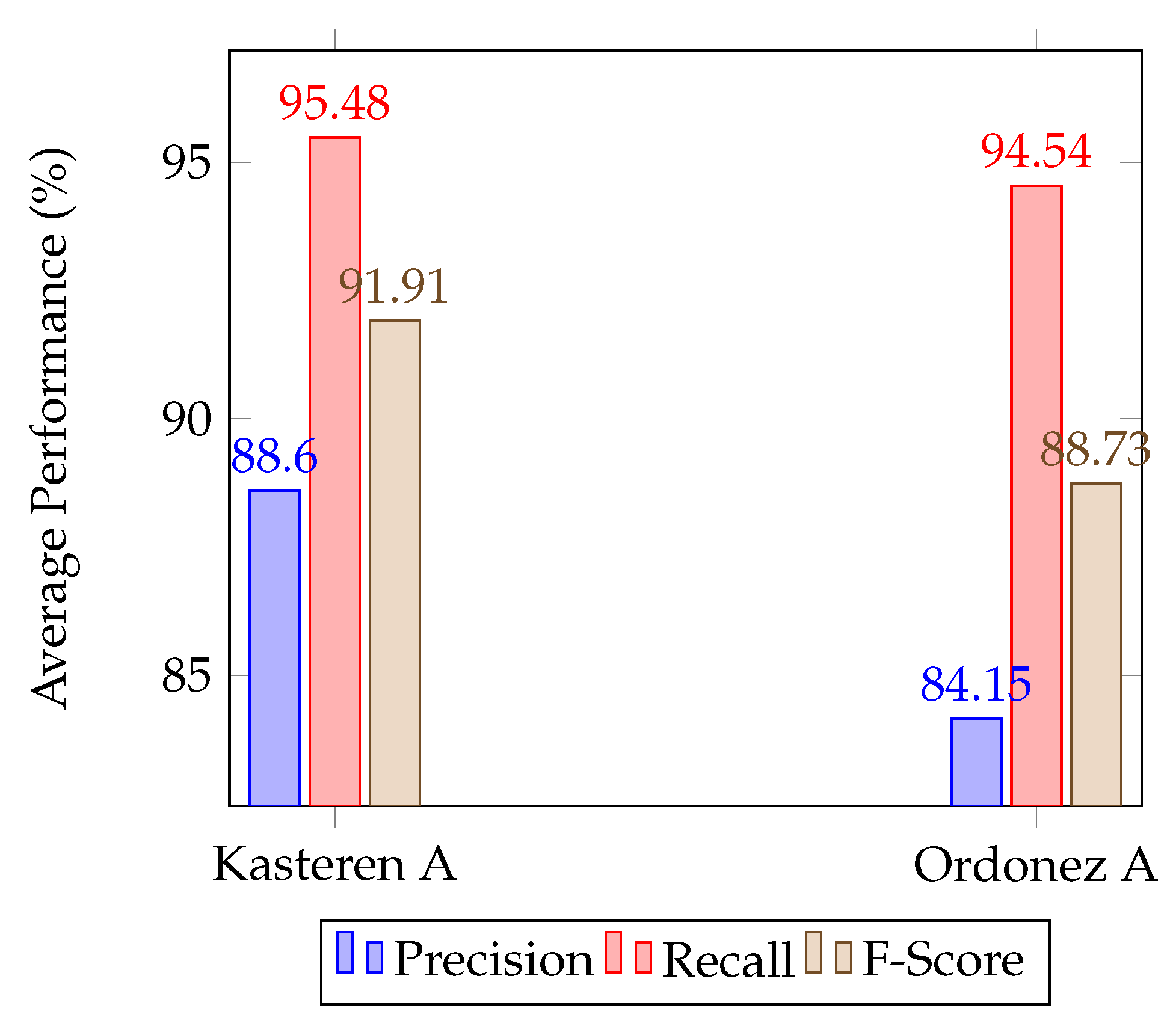

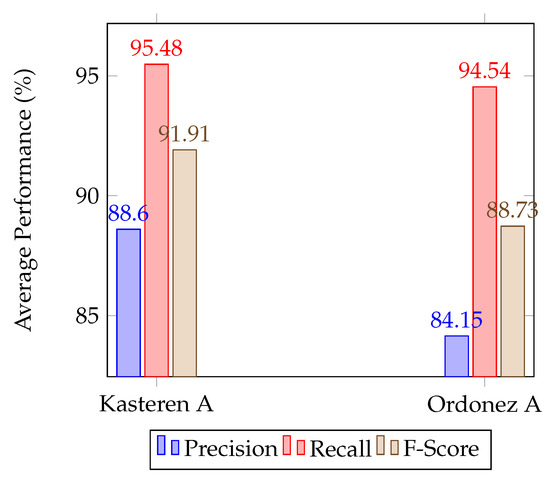

We evaluated the proposed framework by comparing the activities inferred and recognised by our framework with the ground truth. Leaving, Toileting, Sleeping and Showering were recognised with high scores for both datasets, as shown in Table 10. This performance can be attributed to the discovered object use and context descriptors for these activities: their object use was specific, with minimal false positives. The process of discovering the likely object use for routine activities ensured that these activities were associated with the objects used to perform them. Breakfast and Dinner for the Kasteren house and Breakfast and Lunch for the Ordonez house showed lower performance due to confusion arising from the same or similar object use with Drink and Snack, respectively. These activities share the same or similar object interactions, as observed in the context description process, and hence were classed under the super activity Make Food. Recall that, to further distinguish Breakfast and Dinner, they were modelled as static activities given the specific times of the day at which they are performed. To enhance their recognition, time interval properties and concepts enabled by the 4D-fluent approach were included. Nevertheless, they were often recognised concurrently, which led to high false positives in the process. The results achieved for them are still encouraging. Overall, the average precision, recall and F-Score for the datasets, as illustrated in Figure 8, show good performance.

Table 10.

Activity recognition performance for Kasteren A and Ordonez A.

Figure 8.

Average precision, recall and F-Score for Kasteren A and Ordonez A datasets.

4.3. Learning Performance of the framework

For further performance evaluation, we present the learning performance of our proposed activity recognition framework. Activities in the home environment can be performed in different ways, and the object use for an activity may differ between occurrences. A robust activity recognition model should be able to recognise activities irrespective of such variation in object use and interactions. We evaluated the learning capability at the activity level using the ground truth as the basis of evaluation. This comparison involves the number of instances of the different activities across folds at the activity level. A good model should return almost the same number of activity traces as the ground truth. The results, presented in Table 11, indicate good performance of the learning process of the framework. Leaving, Toileting, Sleeping and Showering were recognised with a high number of instances and minimal difference from the ground truth for both datasets. Breakfast, Lunch and Dinner showed lower performance due to confusion arising from the same or similar object use with Drink. Overall, the number of correctly recognised instances in comparison to the ground truth is also high.

Table 11.

Summary of correctly recognised activity instances for Kasteren and Ordonez datasets.

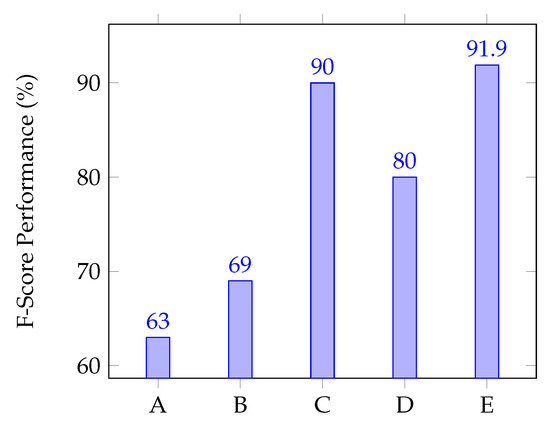

4.4. Comparison with Other Recognition Approaches

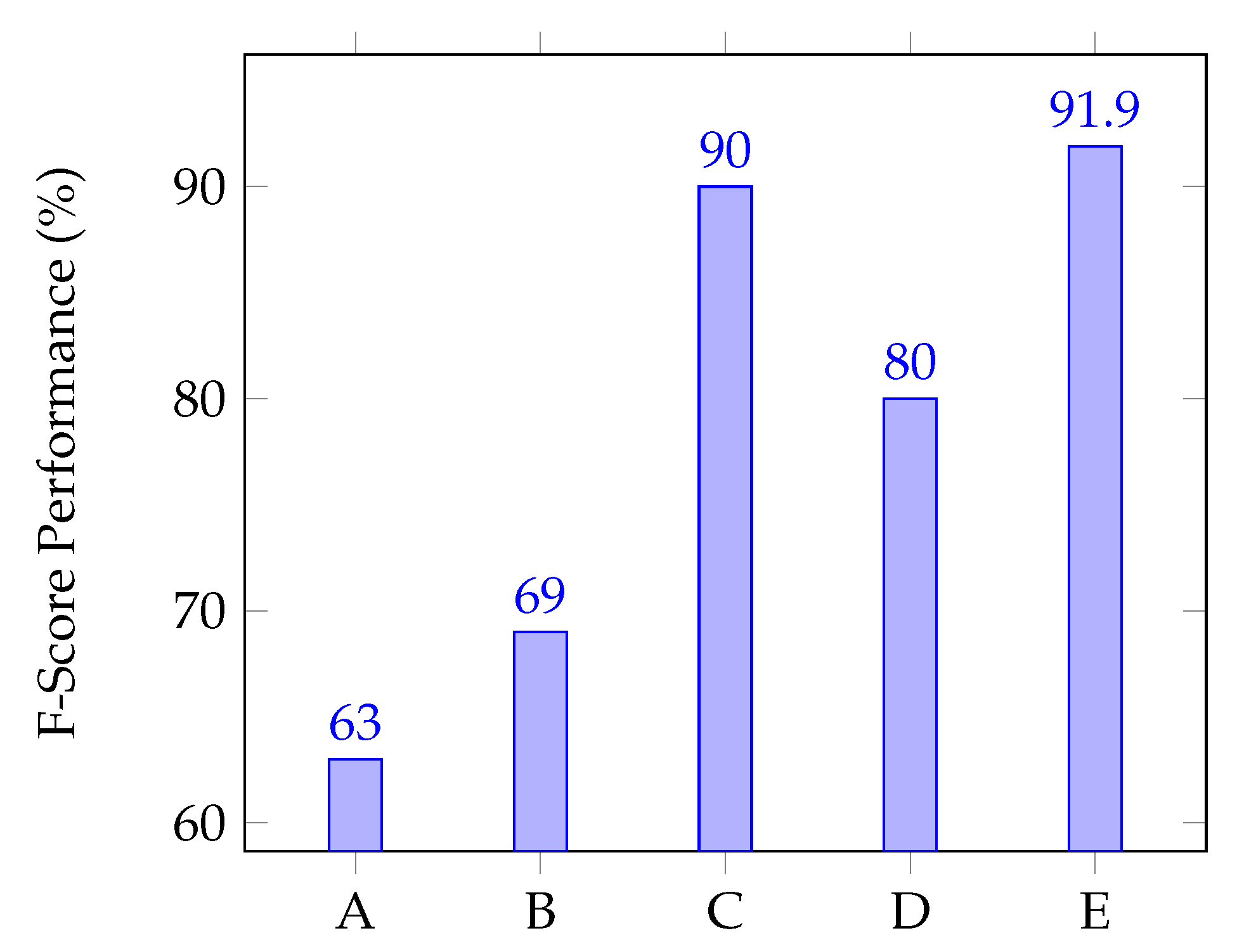

We compared the results we obtained with the results reported by Kasteren et al. [6], Ordonez et al. [5], Ye [39], and Riboni et al. [32] for the Kasteren House A dataset, as illustrated in Figure 9. The experimental methodology differs between the reported works. Kasteren et al. [6], Ordonez et al. [5] and Riboni et al. [32] all performed their evaluations using a leave-one-day-out methodology. Ye [39] used a 10-fold validation, which is similar to the four-fold cross-validation we used. Ye [39] did not use timeslices, which led to improved results, whereas we used 60-s timeslices, similar to Kasteren et al. [6], Ordonez et al. [5] and Riboni et al. [32]. Comparing our results directly with these other methodologies, our work performed better, with an F-Score of 91.9%. We assume that the weaker performance reported by Kasteren et al. [6], Ordonez et al. [5] and Riboni et al. [32] might be due to the effect of the evaluation methodology, i.e., leave one day out, which meant fewer day representations for the object data. Compared with Ye [39], we achieved a slightly higher F-Score, which suggests that our proposed recognition framework is robust.

Figure 9.

A summary of evaluations using the Kasteren House A dataset for: (A) Kasteren et al. [6]; (B) Ordonez et al. [5]; (C) Ye [39]; (D) Riboni et al. [32]; and (E) this paper.

5. Summary and Conclusions

The recognition framework proposed in this paper provides a basis for learning and recognising activities. We carried out experiments using the Kasteren and Ordonez datasets and evaluated the ability of the framework to recognise activities. In addition to the experiments, we compared our results with results published in the literature for the same dataset. Based on the experiments and evaluations, we discuss the benefits and limitations of the proposed approaches.

- Activity-object use and context description process: As part of the framework, we proposed acquiring knowledge of object use through object use discovery and activity context description. The main benefit of this process is its ability to discover unique object use as context descriptors for the activity situations. Activities such as Breakfast, Lunch and Dinner, which share the same or similar object use, are considered as activity situations that can be made distinct by modelling them as static activities in the ontology. Limitations may arise for other similar activity situations such as Drink and Snack, as we observed with the datasets.

- Performance of the activity recognition process: Experiments carried out on the datasets suggest good recognition performance for activities. Although the performance was encouraging for most activities, recognition was confused for activities sharing the same or similar object use. Notable in this case were Drink and Snack, which we modelled as dynamic activities, and Breakfast, Lunch and Dinner, which we modelled as static activities. Given the general performance illustrated in Figure 8, the activity recognition process is, on average, comparable.

- Model Learning Performance: The aim of this evaluation was to assess the learning ability of the model. From the results, the context descriptors which led to activity situations in the ground truth are similar to the context descriptors we discovered, hence the result achieved at the activity level. We obtained almost the same number of activity traces for the datasets as in the ground truth, suggesting good learning.

With the experiments, assessments and evaluations using publicly available datasets, we can say that: (i) The processes of activity-object use discovery and context description of activity situations accurately provide the object and activity concepts needed for the ontology modelling process. (ii) Modelling activities as static and dynamic activities helps to improve activity recognition, especially for activities with the same or similar object interactions. (iii) Given the results from the activity recognition process in comparison with other results published using the same datasets, we conclude that the performance is good and encouraging. The experimental and evaluation process using these datasets suggests that the features, components and the entire activity recognition process have been verified.

Future work should involve extending the ontology activity model by the consideration of more contextual features for the different types of sensing devices. It should also consider extending the temporal entities and features for progressive activities as they evolve.

Author Contributions

Isibor Kennedy Ihianle and Usman Naeem conceived the idea and designed the experiments. Isibor Kennedy Ihianle developed the approach, carried out the ontology modelling, performed the analytic calculations and performed the simulations. Isibor Kennedy Ihianle, Usman Naeem, Syed Islam and Abdel-Rahman Tawil verified the analytical methods. Usman Naeem, Syed Islam and Abdel-Rahman Tawil supervised the findings of this work. Isibor Kennedy Ihianle wrote the paper. Isibor Kennedy Ihianle, Usman Naeem, Syed Islam and Abdel-Rahman Tawil discussed the results and contributed to the final manuscript.

Conflicts of Interest

We (Isibor Kennedy Ihianle, Usman Naeem, Syed Islam and Abdel-Rahman Tawil) wish to confirm that there are no known conflicts of interest associated with this publication and any organization and there has been no significant financial support for this work that could have influenced its outcome.

References

- Akdemir, U.; Turaga, P.; Chellappa, R. An Ontology based Approach for Activity Recognition from Video. In Proceedings of the 16th ACM International Conference on Multimedia (MM, 08), Vancouver, BC, Canada, 27–31 October 2008. [Google Scholar]

- Leo, M.; Medioni, G.; Trivedi, M.; Kanade, T.; Farinella, G. Computer Vision for Assistive Technologies. Comput. Vis. Image Underst. 2017, 154, 1–15. [Google Scholar] [CrossRef]

- Huynh, D.T.G. Human Activity Recognition with Wearable Sensors. Ph.D. Thesis, Technische Universit Darmstadt, Darmstadt, Germany, 2008. [Google Scholar]

- Labrador, M.A.; Yejas, O.D.L. An ontology based approach for activity recognition from video. In Human Activity Recognition: Using Wearable Sensors and Smartphones; CRC Press: Boca Raton, FL, USA, 2013. [Google Scholar]

- Ordonez, J.; de Toledo, P.; Sanchis, A. Activity Recognition Using Hybrid Generative/Discriminative Models on Home Environments Using Binary Sensors. Sensors 2013, 13, 5460–5477. [Google Scholar] [CrossRef] [PubMed]

- Van Kasteren, T.; Englebienne, G.; Krose, B.J.A. Human Activity Recognition from Wireless Sensor Network Data: Benchmark and Software. In Activity Recognition in Pervasive Intelligent Environments; Atlantis Press: Paris, France, 2011; pp. 165–186. [Google Scholar]

- Chen, L.; Nugent, C.D.; Wang, H. A Knowledge-Driven Approach to Activity Recognition in Smart Homes. IEEE Trans. Knowl. Data Eng. 2011, 24, 961–974. [Google Scholar] [CrossRef]

- Chen, L.; Nugent, C.; Hoey, J.; Cook, D.; Yu, Z. Sensor based activity recognition. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2012, 42, 790–808. [Google Scholar] [CrossRef]

- Okeyo, G.; Chen, L.; Wang, H.; Sterritt, R. Dynamic Sensor Data Segmentation for Real-time Activity Recognition. Pervasive Mob. Comput. 2012, 10, 155–172. [Google Scholar] [CrossRef]

- Modayil, J.; Bai, T.; Kautz, H. Improving the recognition of interleaved activities. In Proceedings of the 10th International Conference onUbiquitous Computing (UbiComp 2008), Seoul, Korea, 21–24 September 2008. [Google Scholar]

- Tapia, E.M.; Intille, S.S.; Larson, K.; Haskell, W.; Wright, J.; King, A.; Friedman, R. Real-time recognition of physical activities and their intensities using wireless accelerometers and a heart rate monitor. In Proceedings of the 11th IEEE International Symposium on Wearable Computers, Boston, MA, USA, 11–13 October 2007. [Google Scholar]

- Patterson, D.J.; Liao, L.; Fox, D.; Kautz, H. Inferring High Level Behavior from Low-Level Sensors. In Proceedings of the 5th Conference on Ubiquitous Computing (UbiComp, 03), Seattle, WA, USA, 12–15 October 2003. [Google Scholar]

- Patterson, D.J.; Kautz, D.F.H.; Philipose, M. Fine-grained activity recognition by aggregating abstract object usage. In Proceedings of the 9th IEEE International Symposium on Wearable Computers, Osaka, Japan, 18–21 October 2005. [Google Scholar]

- Langley, P.; Iba, W.; Thompson, K. An analysis of bayesian classifiers. In Proceedings of the 10th National Conference on Artificial Intelligence, San Jose, CA, USA, 12–16 July 1992. [Google Scholar]

- Tapia, E.M.; Intille, S.S.; Larson, K. Activity recognition in the home using simple and ubiquitous sensor. In Proceedings of the 2nd International Conference in Pervasive Computing (PERVASIVE 2004), Vienna, Austria, 21–23 April 2004. [Google Scholar]

- Katayoun, F.; Gatica-Perez, D. Discovering routines from large-scale human locations using probabilistic topic models. ACM Trans. Intell. Syst. Technol. (TIST) 2011, 2, 3. [Google Scholar]

- Huynh, T.; Fritz, M.; Schiele, B. Discovery of activity patterns using Topic Models. In Proceedings of the 10th International Conference on Ubiquitous computing (UbiComp 08), Seoul, Korea, 21–24 September 2008. [Google Scholar]

- Huynh, T.; Blanke, U.; Schiele, B. Scalable recognition of daily activities with wearable sensors. In Proceedings of the 3rd International Conference on Location and Context Awareness (LOCA 07), Oberpfaffenhofen, Germany, 20–21 September 2007. [Google Scholar]

- Lee, S.; Mase, K. Activity and location recognition using wearable sensor. IEEE Pervasive Comput. 2002, 1, 24–32. [Google Scholar]

- Bao, L.; Intille, S.S. Activity recognition from user annotated acceleration data. In Proceedings of the 2nd International Conference Pervasive Computing (PERVASIVE, 2004), Vienna, Austria, 21–23 April 2004. [Google Scholar]

- Cao, D.; Masoud, O.T.; Boley, D.; Papanikolopoulos, N. Human motion recognition using support vector machines. Comput. Vis. Image Underst. 2009, 113, 1064–1075. [Google Scholar] [CrossRef]

- Qian, H.; Mao, Y.; Xiang, W.; Wang, Z. Recognition of human activities using svm multi-class classification. Pattern Recognit. Lett. 2010, 31, 100–111. [Google Scholar] [CrossRef]

- Liao, L.; Fox, D.; Kautz, H. Extracting places and activities from GPS traces using hierarchical conditional random fields. Int. J. Robot. Res. 2007, 26, 119–134. [Google Scholar] [CrossRef]

- Huynh, T.; Schiele, B. Unsupervised discovery of structure in activity data using multiple eigenspaces. In Proceedings of the 2nd International Workshop on Location and Context Awareness (LoCA 2006), Dublin, Ireland, 10–11 May 2006. [Google Scholar]

- Gruber, T.R. A translation approach to portable ontology specifications. Knowl. Acquis. 1993, 5, 199–220.

- Latfi, F.; Lefebvre, B.; Descheneaux, C. Ontology-Based Management of the Telehealth Smart Home, Dedicated to Elderly in Loss of Cognitive Autonomy. In Proceedings of the OWLED 2007 Workshop on OWL: Experiences and Directions, Innsbruck, Austria, 6–7 June 2007.

- Chen, L.; Nugent, C.D. Ontology-based activity recognition in intelligent pervasive environments. Int. J. Web Inf. Syst. 2009, 5, 410–430.

- Yamada, N.; Sakamoto, K.; Kunito, G.; Yamazaki, K.; Isoda, Y.; Tanaka, S. Applying Ontology and Probabilistic Model to Human Activity Recognition from Surrounding Things. IPSJ Digit. Cour. 2007, 3, 506–517.

- Okeyo, G.; Chen, L.; Wang, H.; Sterritt, R. A Hybrid Ontological and Temporal Approach for Composite Activity Modelling. In Proceedings of the IEEE 11th International Conference on Trust, Security and Privacy in Computing and Communications, Liverpool, UK, 25–27 June 2012.

- Ihianle, I.K.; Naeem, U.; Tawil, A. A Dynamic Segmentation Based Activity Discovery through Topic Modelling. In Proceedings of the IET International Conference on Technologies for Active and Assisted Living (TechAAL 2015), London, UK, 5 November 2015.

- Ihianle, I.K.; Naeem, U.; Tawil, A. Recognition of Activities of Daily Living from Topic Model. Procedia Comput. Sci. 2016, 98, 24–31.

- Riboni, D.; Pareschi, L.; Radaelli, L.; Bettini, C. Is ontology-based activity recognition really effective? In Proceedings of the IEEE International Conference on Pervasive Computing and Communications Workshops (PERCOM Workshops), Seattle, WA, USA, 21–25 March 2011.

- Blei, D.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet Allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Rousseeuw, P.J. A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 1987, 20, 53–65.

- Griffiths, T.L.; Steyvers, M. Finding scientific topics. Proc. Natl. Acad. Sci. USA 2004, 101 (Suppl. 1), 5228–5235.

- Scalmato, A.; Sgorbissa, A.; Zaccaria, R. Describing and Recognizing Patterns of Events in Smart Environments with Description Logic. IEEE Trans. Cybern. 2013, 43, 1882–1897.

- Baratis, E.; Petrakis, E.G.M.; Batsakis, S.; Maris, N.; Papadakis, N. TOQL: Temporal Ontology Querying Language. Adv. Spat. Tempor. Databases 2009, 338–354.

- Jain, A.K.; Dubes, R.C. Algorithms for Clustering Data; Prentice-Hall, Inc.: Upper Saddle River, NJ, USA, 1988.

- Ye, J. Exploiting Semantics with Situation Lattices in Pervasive Computing. Ph.D. Thesis, University College Dublin, Dublin, Ireland, 2009.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).