Abstract

Autonomous vehicles (AVs) are increasingly becoming a reality, enabled by advances in sensing technologies, intelligent control systems, and real-time data processing. For AVs to operate safely and effectively, they must maintain a reliable perception of their surroundings and internal state. However, sensor failures, whether due to noise, malfunction, or degradation, can compromise this perception and lead to incorrect localization or unsafe decisions by the autonomous control system. While modern AV systems often combine data from multiple sensors to mitigate such risks through sensor fusion techniques (e.g., Kalman filtering), the extent to which these systems remain resilient under faulty conditions remains an open question. This work presents a simulation-based fault injection framework to assess the impact of sensor failures on AVs’ behavior. The framework enables structured testing of autonomous driving software under controlled fault conditions, allowing researchers to observe how specific sensor failures affect system performance. To demonstrate its applicability, an experimental campaign was conducted using the CARLA simulator integrated with the Autoware autonomous driving stack. A multi-segment urban driving scenario was executed using a modified version of CARLA’s Scenario Runner to support Autoware-based evaluations. Faults were injected simulating LiDAR, GNSS, and IMU sensor failures in different route scenarios. The fault types considered in this study include silent sensor failures and severe noise. The results obtained by emulating sensor failures in our chosen system under test, Autoware, show that faults in LiDAR and IMU gyroscope have the most critical impact, often leading to erratic motion and collisions. In contrast, faults in GNSS and IMU accelerometers were well tolerated. This demonstrates the ability of the framework to investigate the fault-tolerance of AVs in the presence of critical sensor failures.

1. Introduction

Autonomous vehicles (AVs) are becoming more prevalent, with ongoing advancements bringing the industry closer to fully autonomous systems. As their integration into public roads accelerates, ensuring their safe operation becomes increasingly important. AVs have the potential to improve road safety [1], mobility, and environmental sustainability [2]. This transformation is driven by significant advances in sensing technologies, data processing, and intelligent control algorithms. In AV systems, sensor data serves as input for perception, localization, and decision-making processes. As such, the reliability of these sensors is critical for ensuring the safe and effective operation of autonomous vehicles.

Despite the increasing deployment of AVs in controlled environments and urban pilot programs, ensuring their safety in the face of real-world uncertainties remains a significant challenge. One particular challenge is the impact of sensor failures, which can arise due to environmental interference, hardware degradation, calibration errors, or cyber-physical attacks [3,4]. Sensor failures can lead to incorrect perception of the vehicle’s surroundings, degraded localization accuracy, or erratic control decisions, potentially resulting in catastrophic outcomes such as unsafe maneuvers or collisions.

Although the literature on autonomous vehicles extensively explores perception and control, relatively few studies have systematically simulated sensor failures in controlled environments to assess the fault tolerance of AV systems. Tools such as AVFI [5], CarFASE [6], and DriveFI [7] show promise in this regard, offering capabilities for structured fault injection, modeling sensor failures, and enabling reproducible experiments for behavior analysis. However, most published works [5,6,7,8,9,10] focus on algorithm development under ideal or mildly perturbed conditions, rather than on structured fault injection and behavior analysis of AV software stacks. This limitation is particularly significant when considered in the context of functional safety standards such as ISO 26262, which emphasize risk assessment and the validation of safety mechanisms under fault conditions [10]. Compliance with such standards requires not only theoretical resilience but also practical validation of a system’s response to failures of individual components, sensors in particular.

To address this gap, we propose a framework to assess sensor fault tolerance in AVs using simulation-based fault injection. The framework integrates CARLA, an open-source driving simulator capable of emulating sensor outputs in realistic urban environments, with Autoware, an open-source autonomous driving software stack. This integration allows for the execution of realistic urban driving scenarios under controlled conditions. A key component of this setup is the ROS bridge, which connects CARLA to Autoware by transmitting simulated sensor data. Sensor faults are injected through the ROS bridge by modifying sensor data in real time before it reaches Autoware. These include silent sensor failures (complete data loss) and noise (random deviations of sensor values) applied to LiDAR, IMU (gyroscope, accelerometer, and quaternion), and GNSS. Our contributions are summarized as follows:

- Integration of CARLA and Autoware: We established an integration that enables structured, automated testing of autonomous driving behavior under sensor failures using scenario-based simulations.

- Sensor Fault Injection Mechanism: We designed and implemented a fault injection system capable of simulating various sensor anomalies, including silent failures and noise. This mechanism targets key sensors such as LiDAR, IMU (gyroscope, accelerometer, and quaternion), and GNSS.

- Evaluation of AV System Response: We conducted a structured fault injection campaign to assess how Autoware responds under different sensor failure conditions. By injecting faults during scenario execution, we analyzed the system’s behavior and measured its ability to maintain safe and functional operation.

The remainder of this paper is structured as follows. Section 2 presents background concepts relevant to autonomous vehicles, including sensor systems, software architecture, and the foundations of sensor fusion. It also introduces the Autoware framework and the CARLA simulator. Section 3 reviews related work in AV fault injection and safety validation through simulation. Section 4 describes the simulation workflow and explains how sensor failures were implemented and executed within the test scenarios. Section 5 outlines the test conditions and fault model, followed by a description of each test run. Section 6 presents and analyzes the results. Finally, Section 7 concludes the study and discusses future work.

2. Background Concepts

To evaluate how autonomous driving systems respond to sensor faults, it is essential to understand the foundational components involved in their architecture and operation. This section introduces the key elements of autonomous vehicles, including their modular software architecture, sensor technologies, simulation environments, and the Autoware software stack. It begins by introducing different architectures of AV software, followed by a technical overview of the exteroceptive and proprioceptive sensors used in autonomous driving. The CARLA simulator and its integration with ROS are then presented as the tools used to emulate realistic driving scenarios. Finally, the Autoware system is described in terms of its architecture, sensing modules, and localization strategy. Together, these concepts provide the necessary context for understanding the simulation framework and fault injection methodology described later in this work.

2.1. Architecture of Autonomous Driving Systems

The Sense–Plan–Act (SPA) model has served as a conceptual foundation for autonomous systems, describing a sequential process of sensing, planning, and acting. While useful as a simplification, SPA is rarely used in its pure form for modern autonomous vehicles (AVs) because it struggles with real-time uncertainty, continuous adaptation, and highly dynamic road scenes. SPA’s reliance on static world models, absence of continuous feedback, and latency introduced by complete re-planning cycles make it less suited to real-time driving scenarios [11].

Modern AV software stacks adopt hybrid and reactive architectures, which blend fast feedback loops with higher-level deliberation. These designs often include multiple layers (reactive control, behavior sequencing, and planning) that work in parallel and exchange information continuously. Architectures such as Subsumption [12], Three-Tier (3T) [13], and Behavior Trees [14] are widely used in robotics, with adaptations for AVs. Additional advancements integrate Model Predictive Control (MPC) [15] for short-term re-planning, and learning-based perception–action loops for adaptive decision-making.

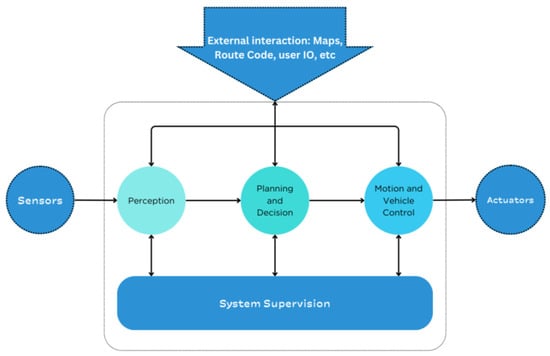

A common structure in AVs consists of Perception, Planning and Decision, Motion and Vehicle Control, and System Supervision modules [16]. These components operate in a tightly integrated loop, with frequent bidirectional data exchange and feedback paths, allowing for continuous adaptation to changing traffic conditions, sensor updates, and safety events. Figure 1 illustrates this modern modular organization.

Figure 1. Functional architecture of an autonomous driving system.

2.1.1. Perception

The Perception module is responsible for interpreting sensor data to build an understanding of the vehicle’s surroundings. It receives data from sensors such as cameras, LiDARs, radars, ultrasonic sensors, IMUs, and GNSS, often combining these to improve resilience. Using this input, the perception software detects and classifies relevant environmental features: other vehicles, pedestrians, cyclists, road signs and traffic lights, lane markings, free space, obstacles, etc. It produces a structured world model or environmental representation, which may include the 3D positions and velocities of objects, their classifications (object types), traffic light states, and road geometry [17]. In short, perception “perceives” or senses the environment and answers the question: “What is around me and where?”

Within perception, localization determines the position and orientation (pose) of the vehicle in the world. While perception builds a map of what is around the vehicle, localization answers the question “Where am I?”. The Localization module uses data from sensors such as GNSS for global position, inertial measurement units (IMU) for accelerations/rotational rates, and often uses features from perception (matching LiDAR scans or camera observations to a known map) to estimate the vehicle’s location with high accuracy. Localization operates continuously in the background, compensating for GNSS/IMU drift over time by incorporating environmental cues [18,19].

Perception also incorporates prediction, which predicts the future motion of dynamic objects in the environment. Given the current state of surrounding vehicles, pedestrians, or other moving agents (as perceived by the perception module), prediction algorithms estimate “What are they going to do next?”. This typically involves computing short-term trajectory predictions for each relevant object, for example, predicting that a vehicle in front will continue straight at a certain speed, or that a pedestrian might begin crossing the street [20].
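As an illustration of the short-term trajectory prediction described above, the following minimal sketch extrapolates an object's position under a constant-velocity assumption. This is only one of the simplest possible motion models and is not claimed to be the method used by any particular AV stack; the function name and parameters are hypothetical.

```python
from typing import List, Tuple


def predict_constant_velocity(
    position: Tuple[float, float],
    velocity: Tuple[float, float],
    horizon_s: float,
    step_s: float,
) -> List[Tuple[float, float]]:
    """Extrapolate future (x, y) positions assuming the velocity stays constant."""
    steps = int(round(horizon_s / step_s))
    x, y = position
    vx, vy = velocity
    # One predicted waypoint per time step, starting one step into the future.
    return [(x + vx * k * step_s, y + vy * k * step_s) for k in range(1, steps + 1)]


# A vehicle ahead travelling at 10 m/s along x, predicted over 2 s in 0.5 s steps.
trajectory = predict_constant_velocity((0.0, 0.0), (10.0, 0.0), horizon_s=2.0, step_s=0.5)
```

More realistic predictors account for road geometry, interactions between agents, and uncertainty, but the interface (current state in, future waypoints out) is similar.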

2.1.2. Planning and Decision

The Planning and Decision module determines the vehicle’s next actions based on the perceived environment, predicted object motion, and driving objectives. It processes data from perception and maps to decide on a safe and efficient course of action. In essence, the planning module answers the question “What should I do now?” by generating a trajectory or path that leads the vehicle toward its destination. This plan is computed with the end goal in mind and is continuously updated to adapt to the current situation, ensuring safety and compliance with traffic rules along the way [21]. In this context, the plan refers to the high-level driving decision (for example, changing lanes or stopping at an intersection), while the trajectory specifies the detailed spatiotemporal path the vehicle should follow, including position, speed, and heading over time. The trajectory is generated to respect safety margins, comfort, and traffic rules.

2.1.3. Motion and Vehicle Control

The Motion and Vehicle Control module takes the planned trajectory or target command from the planning and decision module and executes it by sending low-level control inputs to the vehicle’s actuators (throttle, brake, and steering). Its role is to ensure the vehicle acts on the plan accurately and safely [22]. Control is commonly divided into lateral control (steering) and longitudinal control (acceleration and braking). Controllers rely on feedback from localization and onboard sensors to adjust commands in real time, closing the loop between planning and physical actuation [21,22,23].

2.1.4. System Supervision

Overseeing all the above modules is the System Supervision (also referred to as the vehicle management or coordination layer). This module monitors the overall operation of the autonomous driving system to ensure it functions safely and reliably [17,24]. The key responsibilities of system supervision are:

- Health Monitoring: The supervision module continuously checks the status of both hardware and software throughout the vehicle. If any module is not operating correctly, for example, a critical sensor drops out or the planner stops producing new trajectories, the supervision layer will detect this [17].

- Fault Management and Fail-Safe Mechanisms: Upon detecting an anomaly or failure, system supervision can initiate appropriate fail-safe mechanisms. This might mean alerting the driver or a remote operator or transitioning the vehicle into a safe state (such as gradually coming to a stop) if the autonomous system can no longer operate safely. This supervisory function is crucial for meeting functional safety requirements (ISO 26262 and similar standards) in a safety-critical system [17].

- Mission and Mode Management: It can manage transitions between autonomous driving and manual control, authorize engagement of self-driving when system checks pass, and abort or override the autonomy if necessary. It may also handle route initialization and high-level mission planning or interface with fleet management (in the context of robotaxis, for example), though these functions are sometimes considered separate. Additionally, system supervision can manage the Human–Machine Interface (HMI), for example, conveying system status to passengers or requesting driver takeover when needed [17].

This supervision module allows a complex AV software stack to maintain reliability, managing everything from fault diagnostics to system-level decision-making.
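The health-monitoring responsibility listed above can be illustrated with a simple timeout-based watchdog: each sensor is expected to report within a given period, and a sensor that stays silent for longer is flagged. This is a minimal sketch under assumed names and thresholds (`SensorWatchdog`, the sensor labels, and the timeout values are hypothetical), not the mechanism of any specific supervision layer.

```python
from typing import Dict, List


class SensorWatchdog:
    """Flags sensors whose last message is older than their allowed timeout."""

    def __init__(self, timeouts: Dict[str, float]) -> None:
        self.timeouts = dict(timeouts)          # sensor name -> max silence (s)
        self.last_seen: Dict[str, float] = {}   # sensor name -> last timestamp (s)

    def heartbeat(self, sensor: str, stamp: float) -> None:
        """Record that `sensor` delivered a message at time `stamp`."""
        self.last_seen[sensor] = stamp

    def stale_sensors(self, now: float) -> List[str]:
        """Return the sensors that have never reported or have timed out."""
        stale = []
        for sensor, timeout in self.timeouts.items():
            last = self.last_seen.get(sensor)
            if last is None or now - last > timeout:
                stale.append(sensor)
        return sorted(stale)


watchdog = SensorWatchdog({"lidar": 0.2, "imu": 0.05})
watchdog.heartbeat("lidar", 1.0)
watchdog.heartbeat("imu", 1.0)
```

A supervision layer could poll `stale_sensors` periodically and trigger a fail-safe transition when a critical sensor appears in the result. A silent sensor failure of the kind studied in this work is the condition such a check is meant to catch.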

2.2. Sensors Used in AVs

Sensors used in autonomous vehicles can be classified into two categories: internal state sensors (proprioceptive sensors) and external state sensors (exteroceptive sensors) [25]. A survey of these sensor technologies, their roles, common failures, and mitigation strategies was presented in our previous work [26]. The remainder of this subsection summarizes the most relevant aspects for the fault injection study described in this article.

Proprioceptive sensors are responsible for measuring the internal state of the vehicle. This group includes systems such as inertial measurement units (IMU), inertial navigation systems (INS), and encoders. Encoders provide critical feedback on components like steering, motor position, braking, and acceleration, enabling accurate assessment of the vehicle’s position, motion, and odometry [27].

Exteroceptive sensors gather information about the external environment. These include cameras, LiDAR sensors, RADAR, global navigation satellite systems (GNSS), and ultrasonic sensors, which enable the vehicle to perceive terrain, surrounding objects, and environmental conditions.

The following subsections describe the role and give an overview of each sensor type.

2.2.1. Ultrasonic Sensors

Ultrasonic sensors estimate distances and detect nearby objects by emitting high-frequency sound waves, typically between 20 and 40 kHz [28], which are beyond the range of human hearing. These sensors calculate distance based on the time-of-flight of the emitted sound wave, measuring the duration until the echo returns after reflecting off an object. Ultrasonic sensors are highly directional, with a narrow detection beam, which makes them suitable for short-range applications. They are most commonly used as parking sensors [29] and are effective in adverse weather conditions or dusty environments [3,30]. However, their operational range is limited, generally allowing obstacle detection only up to approximately two meters [30,31].
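The time-of-flight principle can be written out as a short calculation. The sketch below assumes a speed of sound of 343 m/s (dry air at roughly 20 °C), a value not given in the text; the emitted pulse travels to the object and back, so the one-way distance is half the round-trip path.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed value for dry air at about 20 degrees Celsius


def echo_distance_m(round_trip_s: float) -> float:
    """Distance to the reflecting object from the echo's round-trip time."""
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Inverse relation: how long the echo takes for an object at `distance_m`."""
    return 2.0 * distance_m / SPEED_OF_SOUND_M_S


# An echo received 10 ms after emission corresponds to about 1.7 m.
distance = echo_distance_m(0.010)
```

Under the same assumption, an object at the two-meter limit mentioned above returns its echo after roughly 11.7 ms.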

2.2.2. RADAR: Radio Detection and Ranging

Radar sensors operate by emitting electromagnetic signals and analyzing the reflected waves. In frequency-modulated continuous-wave (FMCW) radars, measuring the time delay between the transmitted and received signals yields the range to an object, while the Doppler shift provides its relative velocity [32]. They offer a wide perception range, typically from 5 m up to 200 m [31,33], and maintain reliable performance in adverse weather conditions such as rain, fog, or low-light environments. Radars are particularly effective at detecting nearby objects surrounding the vehicle with a high degree of accuracy [3,30,31,33].
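The two relations mentioned above, range from time delay and relative velocity from Doppler shift, can be sketched as follows. The 77 GHz carrier frequency is an assumption chosen because it is common in automotive radar; it is not stated in the text, and the function names are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
CARRIER_HZ = 77e9  # assumed automotive radar carrier frequency


def range_from_delay_m(delay_s: float) -> float:
    """Target range from the round-trip delay of the reflected signal."""
    return SPEED_OF_LIGHT_M_S * delay_s / 2.0


def radial_velocity_m_s(doppler_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Relative radial velocity from the Doppler shift (two-way propagation)."""
    wavelength_m = SPEED_OF_LIGHT_M_S / carrier_hz
    return doppler_hz * wavelength_m / 2.0


target_range = range_from_delay_m(1e-6)       # 1 microsecond delay, about 150 m
target_speed = radial_velocity_m_s(5133.0)    # about 10 m/s at 77 GHz
```

In an FMCW radar the delay is not timed directly but inferred from the beat frequency between the transmitted and received chirps; the range relation itself is unchanged.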

However, radar systems can produce false positives due to signal reflections from surrounding structures. Additionally, FMCW automotive radars can experience mutual interference, particularly when many vehicles operate nearby, which has been documented experimentally and is an active area of mitigation research [34,35].

2.2.3. LiDAR: Light Detection and Ranging

LiDAR sensors estimate distance by emitting laser light and inferring range from the returned signal. Distance can be measured using time-of-flight (ToF), amplitude-modulated continuous wave (AMCW, also called phase-shift), or frequency-modulated continuous wave (FMCW) techniques: ToF relies on the round-trip time, AMCW estimates the phase shift of an intensity-modulated carrier, and FMCW uses coherent beat-frequency detection and can directly yield Doppler velocity [36,37,38,39,40]. By scanning multiple directions, LiDARs generate detailed spatial information in the form of point clouds and distance maps [41,42]. This high-resolution data allows for precise identification of objects, pedestrians, and environmental features, supporting functions such as obstacle avoidance and navigation. LiDARs operate in the near-infrared (e.g., around 905 nm or 1550 nm) and can detect objects at distances up to 300 m [41,42].
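The ToF and AMCW ranging relations can be sketched numerically. The formulas below are the standard textbook relations; the 10 MHz modulation frequency is an assumed example value, not a parameter taken from this work.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_range_m(round_trip_s: float) -> float:
    """Time-of-flight: range is half the distance light travels in the round trip."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def amcw_range_m(phase_shift_rad: float, modulation_hz: float) -> float:
    """AMCW: range from the phase shift of the intensity-modulated carrier."""
    return SPEED_OF_LIGHT_M_S * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def amcw_unambiguous_range_m(modulation_hz: float) -> float:
    """Largest range before the measured phase wraps around (2*pi)."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_hz)


far_target = tof_range_m(2e-6)                 # 2 microseconds, about 300 m
near_target = amcw_range_m(math.pi, 10e6)      # half a cycle at 10 MHz, about 7.5 m
```

The phase wrap-around is why AMCW devices trade modulation frequency against maximum range, whereas a 2 µs round trip in ToF mode already corresponds to a target at about 300 m.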

Under ideal weather conditions, LiDAR delivers superior spatial resolution compared to radar. However, its performance degrades significantly in adverse weather, such as fog, heavy rain, or snow, where light pulses are scattered or absorbed by particles in the air [30,31,42,43].

2.2.4. Camera

Cameras provide high-resolution visual data and can capture fine-grained details of the environment at distances of up to 250 m. They are widely used in autonomous vehicles for applications such as blind spot detection, lane change assistance, side view monitoring, and accident recording. When combined with deep learning algorithms, cameras enable the recognition and interpretation of traffic signs, road markings, vehicles, and pedestrians [44,45,46,47].

Despite their strengths, cameras are highly sensitive to changes in lighting and weather conditions, including rain, snow, and fog, which can significantly impair their performance. To compensate for these limitations, cameras are often used in conjunction with radar and LiDAR systems, enhancing overall perception accuracy and resilience [48].

2.2.5. GNSS

The operating principle of the GNSS relies on the receiver identifying signals from at least four satellites and calculating its distance to each one. Given that the satellite positions are known, the receiver uses trilateration to estimate its own position in global coordinates. While GNSS is a general term that encompasses multiple satellite constellations, such as the American GPS, the European Galileo, the Russian GLONASS, and the Chinese BeiDou, GPS (Global Positioning System) is often used to refer to all satellite-based positioning systems.
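To make the role of the fourth satellite concrete, the sketch below solves for the receiver position together with its clock bias from four pseudoranges using Newton iterations. It is a simplified illustration with synthetic, made-up satellite coordinates (in km) and error-free measurements; real receivers use more satellites, least-squares estimation, and atmospheric corrections.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def solve_position(sats: Sequence[Vec3], pseudoranges: Sequence[float],
                   iterations: int = 10) -> Tuple[float, float, float, float]:
    """Estimate (x, y, z, clock_bias) in km; pseudorange = distance + clock_bias."""
    x = y = z = bias = 0.0  # initial guess: Earth's centre, zero clock bias
    for _ in range(iterations):
        jacobian, residual = [], []
        for (sx, sy, sz), rho in zip(sats, pseudoranges):
            dist = math.sqrt((x - sx) ** 2 + (y - sy) ** 2 + (z - sz) ** 2)
            residual.append(rho - (dist + bias))
            jacobian.append([(x - sx) / dist, (y - sy) / dist, (z - sz) / dist, 1.0])
        dx, dy, dz, db = solve_linear(jacobian, residual)
        x, y, z, bias = x + dx, y + dy, z + dz, bias + db
    return x, y, z, bias


# Synthetic example: made-up satellite positions and a receiver on the x axis.
SATS = [(26560.0, 0.0, 0.0), (15000.0, 21000.0, 6000.0),
        (15000.0, -21000.0, 6000.0), (15000.0, 0.0, -21900.0)]
TRUE_POS, TRUE_BIAS_KM = (6371.0, 0.0, 0.0), 29.98
RANGES = [math.dist(TRUE_POS, s) + TRUE_BIAS_KM for s in SATS]
estimate = solve_position(SATS, RANGES)
```

Three satellites would suffice for a receiver with a perfect clock; the fourth measurement is what allows the unknown receiver clock bias to be estimated alongside the three position coordinates.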

GNSS signals are prone to many errors that reduce the accuracy of the system, including the following:

- Clock bias and synchronization errors, caused by residual satellite-clock error and receiver clock drift or jitter. Because the receiver’s absolute clock bias is estimated jointly with its position (x, y, z), it is the stability of the clocks, not their absolute offset, that governs positioning accuracy [49].

- Signal delays, caused by propagation through the ionosphere and troposphere.

- Multipath effect.

- Satellite orbit uncertainties.

Current vehicle positioning systems improve their accuracy by combining GNSS signals with data from other vehicle sensors (e.g., inertial measurement units (IMU), LiDARs, radars, and cameras) to produce trustworthy position information [50,51,52]. This mitigation strategy is called sensor fusion and is discussed in Section 2.2.7. GNSS is susceptible to jamming, such as when the receiver encounters interference from other radio transmission sources. GNSS receivers also suffer from spoofing, when fake GNSS signals are intentionally transmitted to feed false position information and divert the target from its intended trajectory.

2.2.6. Inertial Measurement Units

Inertial Measurement Units consist of multiple sensors, typically accelerometers, gyroscopes, and magnetometers. These sensors provide measurements of linear acceleration, angular velocity, and magnetic orientation. Based on this data, the system estimates the movement and orientation of the vehicle in three-dimensional space. The orientation is often expressed using quaternions, which offer a stable and precise representation of rotation. IMUs play a key role in detecting slippage, lateral movement, and changes in direction. When combined with other sensors such as GNSS or LiDAR, the IMU contributes to inertial guidance, a method that helps correct positioning errors and enhances the accuracy and frequency of vehicle motion estimation [31,52].
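The link between gyroscope measurements and the quaternion orientation can be illustrated by integrating angular velocity over time. The sketch below uses the Hamilton convention with quaternions ordered as (w, x, y, z) and body-frame rates; these conventions and the function names are assumptions for illustration, not details taken from a specific IMU driver.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z)


def quat_multiply(q: Quat, r: Quat) -> Quat:
    """Hamilton product q * r."""
    w1, x1, y1, z1 = q
    w2, x2, y2, z2 = r
    return (w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
            w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
            w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
            w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2)


def integrate_gyro(q: Quat, omega: Tuple[float, float, float], dt: float) -> Quat:
    """Rotate orientation q by the angular velocity omega (rad/s) applied for dt seconds."""
    wx, wy, wz = omega
    rate = math.sqrt(wx * wx + wy * wy + wz * wz)
    if rate == 0.0:
        return q
    half_angle = 0.5 * rate * dt
    scale = math.sin(half_angle) / rate
    delta = (math.cos(half_angle), wx * scale, wy * scale, wz * scale)
    return quat_multiply(q, delta)


def yaw_from_quat(q: Quat) -> float:
    """Heading (rotation about z) in radians extracted from the quaternion."""
    w, x, y, z = q
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


# Turning at 90 degrees per second for one second, sampled at 100 Hz.
orientation: Quat = (1.0, 0.0, 0.0, 0.0)
for _ in range(100):
    orientation = integrate_gyro(orientation, (0.0, 0.0, math.pi / 2.0), 0.01)
heading = yaw_from_quat(orientation)
```

Because the orientation is obtained by accumulating rate measurements, any constant gyroscope error accumulates as well: a bias of 0.01 rad/s sustained for 60 s produces a heading error of 0.6 rad unless another sensor corrects it.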

2.2.7. Sensor Fusion

Sensors vary in technology and purpose, and each type of sensor has weaknesses that are inherent to its technological capabilities. To cover these weaknesses, mitigation strategies such as sensor fusion are implemented [24]. Sensor fusion is a crucial part of autonomous driving systems [27,32], where input from multiple sensors is combined to reduce errors and overcome the limitations of individual sensors. Cameras offer high-resolution images suitable for object classification and lane detection, LiDAR excels at precise distance measurements and accurate spatial mapping, radar provides reliable distance and velocity measurements under adverse conditions, and GNSS offers global positional data. Combining information from these diverse sources allows autonomous vehicles to enhance overall perception performance and resilience, especially when individual sensors are compromised due to faults, noise, or environmental conditions [17,24,52].

Sensor fusion is crucial for ensuring AV safety, as reliance on a single sensor often leads to vulnerabilities and reduced performance under real-world conditions. Through the fusion process, redundancy across sensor modalities can be leveraged to compensate for inaccuracies or intermittent sensor failures, thereby increasing overall system resilience.

In autonomous driving stacks, such as Autoware, sensor fusion often occurs at multiple abstraction levels, depending on specific module requirements. Autoware applies Kalman filtering techniques to fuse GNSS positional data with LiDAR-based localization estimates, enhancing accuracy and reducing localization uncertainty, even under sensor noise or faults. Kalman filters are probabilistic algorithms particularly suited for sensor fusion because they efficiently handle noisy data, dynamically update estimates based on incoming measurements, and predict future system states.
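The predict and update cycle of a Kalman filter can be illustrated with a one-dimensional example. This is a deliberately minimal scalar sketch and not Autoware's localization filter, which operates on a multi-dimensional vehicle state; the variable names and numbers are illustrative assumptions.

```python
from typing import Tuple


def kalman_predict(x: float, p: float, motion: float, process_var: float) -> Tuple[float, float]:
    """Propagate the estimate x and its variance p through a known motion step."""
    return x + motion, p + process_var


def kalman_update(x: float, p: float, z: float, meas_var: float) -> Tuple[float, float]:
    """Fuse measurement z (with variance meas_var) into the estimate."""
    gain = p / (p + meas_var)  # how much the measurement is trusted
    return x + gain * (z - x), (1.0 - gain) * p


# Prior position 10 m with variance 4; a measurement of 12 m with equal variance.
x, p = kalman_update(10.0, 4.0, z=12.0, meas_var=4.0)   # -> estimate 11 m, variance 2
# The vehicle then moves 1 m; the uncertainty grows by the process variance.
x_next, p_next = kalman_predict(x, p, motion=1.0, process_var=1.0)
```

The gain shows why fusion can tolerate a noisy source: a measurement with a large variance receives a small gain and barely moves the estimate. The same mechanism offers no protection when a faulty sensor reports a small variance, which is one reason injected faults can still propagate through a fused estimate.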

The extensive literature on sensor fusion emphasizes its interdisciplinary complexity, involving classical algorithms (e.g., Kalman filtering, particle filtering, Bayesian inference) and modern deep-learning approaches [17,24,32,46,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74]. However, a detailed review of these methods falls beyond the scope of this study, as our primary goal is to assess system behavior under sensor faults rather than optimizing or developing fusion techniques.

2.3. CARLA

Autonomous driving technology relies on simulation environments to validate and enhance safety and performance before real-world deployment. These environments replicate real-life urban scenarios, integrating entities like vehicles, pedestrians, and complex infrastructure elements. The focus of these simulations is the ego vehicle, which represents the autonomous vehicle under study, interacting dynamically within the simulated environment. The ego vehicle’s behavior is managed through decision-making algorithms, forming a closed-loop system where sensor data of the ego vehicle is sent to the autonomous driving algorithm, and subsequently, the algorithm controls the ego vehicle. After evaluating several existing simulators and open-source autonomous driving stacks, we selected CARLA for its realistic urban environments, sensor simulation capabilities, and ease of integration.

CARLA (Car Learning to Act) is an open-source simulator designed for autonomous driving research and development. Developed by the Computer Vision Center (CVC) at the Universitat Autònoma de Barcelona, CARLA provides a realistic 3D environment for testing and validating autonomous driving systems under a wide range of conditions. It supports high-fidelity urban scenes, configurable weather, traffic actors, and a broad suite of sensors, making it a widely adopted tool in both academic and industrial settings. The simulation platform supports flexible setup of sensor suites and provides signals that can be used to train driving strategies, such as GNSS coordinates, speed, acceleration, and detailed data on collisions and other infractions. A wide range of environmental conditions can be specified, including weather and time of day.

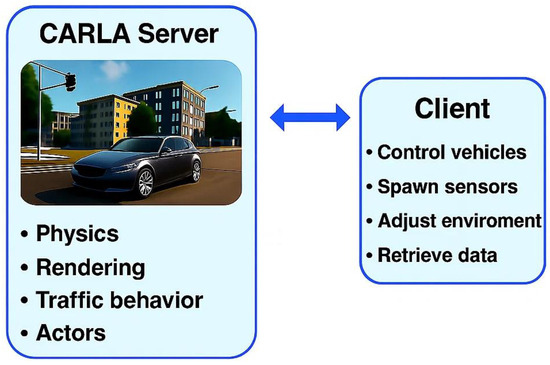

Figure 2. CARLA-Client communication using its API.

CARLA is built on Unreal Engine 4, which allows for advanced rendering and physics simulation (a new version is being built using Unreal Engine 5). The simulator operates using a client–server architecture (Figure 2) where:

- The CARLA server handles the simulation environment, including physics, rendering, traffic behavior, and all the actors in the scene.

- The client, which may use a TCP/IP API or operate through a ROS interface, communicates with the server to control vehicles, place sensors, modify environment settings, and access simulation data.

CARLA can operate in two simulation modes: asynchronous and synchronous [75]. In the default asynchronous mode, the server advances the simulation independently of the client, meaning that events and sensor data generation depend directly on the computing speed of the machines involved. This can introduce non-deterministic behavior, as faster or slower hardware affects the timing of events, potentially leading to inconsistencies across simulation runs.

The synchronous mode ensures determinism and repeatability. In this mode, the simulation advances strictly in lockstep with the client’s commands, and each simulation step only progresses when explicitly triggered by the client. Thus, simulation events occur based on virtual time, independent of how long the hardware takes to compute each step. Consequently, a slower machine will take more real-world time to complete the same number of simulation steps compared to a faster machine. However, the internal timing, precision, and order of events within the simulation remain consistent. The concept of virtual time is crucial in this context, as it ensures simulation integrity and repeatability, making synchronous mode particularly suitable for experiments where deterministic and precise timing control is required.
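As a sketch of how a client might enable synchronous mode and drive the simulation in virtual time, the helpers below rely on the `get_settings`, `apply_settings`, and `tick` methods of the CARLA world object. The helper names, the 0.05 s step, and the connection parameters in the comment are illustrative assumptions, not the configuration used in this work.

```python
def enable_synchronous_mode(world, fixed_delta_seconds: float = 0.05):
    """Switch a CARLA world to synchronous mode with a fixed virtual time step."""
    settings = world.get_settings()
    settings.synchronous_mode = True
    settings.fixed_delta_seconds = fixed_delta_seconds
    world.apply_settings(settings)
    return settings


def run_steps(world, steps: int) -> float:
    """Advance the simulation by `steps` ticks and return the elapsed virtual time (s)."""
    delta = world.get_settings().fixed_delta_seconds
    for _ in range(steps):
        world.tick()  # the server computes exactly one step per call
    return steps * delta


# Typical usage against a running server (requires the `carla` package):
#   import carla
#   client = carla.Client("localhost", 2000)
#   client.set_timeout(10.0)
#   world = client.get_world()
#   enable_synchronous_mode(world, fixed_delta_seconds=0.05)
#   virtual_seconds = run_steps(world, steps=200)   # 10 s of virtual time
```

Whether those 200 ticks take two seconds or two minutes of wall-clock time depends on the hardware, but the virtual time elapsed is the same, which is what makes runs comparable.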

2.3.1. Scenario Management and Ego-Vehicle

Scenario creation is facilitated by tools such as the Scenario Runner, which allows for the definition and control of events including intersections, pedestrian crossings, emergency stops, and vehicle overtaking. These scenarios define not only the behaviors and trajectories of multiple actors in the simulation, but also the environmental conditions (such as weather or time of day).

At the core of each scenario is the ego vehicle, representing the autonomous vehicle under test. It can be configured with a wide range of sensors including LiDAR (standard and semantic), RGB and depth cameras, IMU, GNSS, radar, and dynamic vision sensors (DVS). These sensors are placed at configurable locations on the vehicle and stream data in real-time, enabling both online and offline analysis. The ego vehicle can be controlled manually via the client interface or connected to an external autonomous driving system such as Autoware.

Scenario Runner is a Python-based framework developed specifically for CARLA that enables the execution of these driving scenarios. It provides an interface for defining scenario behavior, controlling the timing and logic of actor actions, and monitoring simulation outcomes. Scenario descriptions can be written in Python scripts or in XML format, allowing for a modular and reusable setup.

Once a scenario is launched, Scenario Runner connects to the running CARLA server using the Python API (Python 3.7). It then spawns the defined actors into the simulation world, orchestrates their behavior over time, and monitors key criteria such as collisions, route completion, traffic light compliance, and other success conditions. This enables repeatable and automated testing of AV behavior under consistent environmental and situational parameters, which is essential for evaluating performance and safety under diverse and controlled conditions.

2.3.2. CARLA—Autonomous Driving System Communication Using ROS

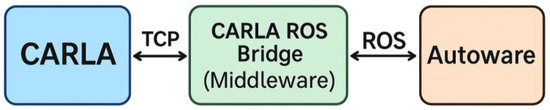

The CARLA ROS Bridge functions as a middleware interface that facilitates the integration of the CARLA simulation environment with ROS, enabling communication between the ego vehicle simulated in CARLA and external autonomous driving software frameworks.

ROS is an open-source middleware framework widely adopted in robotics research and development. It offers a modular architecture based on a publish–subscribe communication model, wherein distributed software components, referred to as nodes, exchange information through topics. ROS additionally provides comprehensive tools for sensor integration, data visualization, debugging, and simulation control. Figure 3 is a simplified diagram of the communication between CARLA and Autoware.

Figure 3. CARLA ROS bridge diagram.

Within the domain of autonomous driving, ROS links core modules such as perception, localization, planning, and control subsystems. The CARLA ROS bridge translates CARLA’s internal simulation data into standard ROS message formats and vice versa. This bidirectional interface enables the deployment of advanced driving stacks, such as Autoware, within the simulated environment. CARLA ROS bridge core functionalities include:

- Providing Sensor Data (LiDAR, Semantic LiDAR, Cameras, GNSS, Radar, IMU)

- Providing Object Data (Traffic light status, Visualization markers, Collision, Lane invasion)

- Controlling AD Agents (Steer/Throttle/Brake)

- Controlling CARLA (Play/pause simulation, Set simulation parameters)

This integration is essential for testing and validating autonomous driving algorithms, particularly under controlled fault conditions.

2.4. Autoware

Autoware is an open-source software stack for autonomous driving systems built on top of ROS. It supports both ROS 1 (Autoware.AI) and ROS 2 (Autoware.Auto/Autoware.Universe), with the newer ROS 2-based versions focusing on real-time safety, modularity, and compliance with automotive-grade standards. We selected Autoware as the autonomous driving system for testing our framework because of its modular open-source architecture, active community support, and compatibility with ROS-based simulation frameworks.

Autoware builds on ROS by integrating a curated set of perception, localization, planning and control modules tailored for autonomous driving. Compared with using ROS alone, Autoware provides a standardized interface to vehicle hardware, built-in sensor fusion pipelines (e.g., LiDAR/IMU/GNSS fusion), high-definition map support, object detection and tracking, behavior and trajectory planning, and vehicle control interfaces.

Autoware is designed to serve as a complete platform for research, prototyping, and deployment of self-driving vehicles, particularly in urban and suburban environments [76]. When integrated with CARLA, Autoware becomes a powerful tool for testing, validation, and development in a safe and reproducible virtual environment.

Autoware has been successfully deployed in various real-world autonomous driving projects, especially in Japan and Europe, including autonomous shuttles, delivery robots, and smart city infrastructure tests [77].

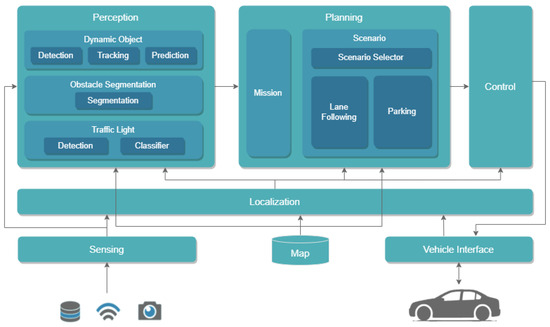

2.4.1. Architecture

Autoware provides a modular architecture (Figure 4) that includes all the essential modules required for autonomous vehicle operation, such as:

Figure 4.

Autoware Architecture [76].

- Perception: Object detection, segmentation, and tracking using data from LiDAR and cameras.

- Localization: Using GNSS, IMU, and LiDAR-based SLAM (Simultaneous Localization and Mapping) or map-matching for accurate vehicle positioning.

- Planning: Path planning, behavior planning, and route generation based on real-time map and object data.

- Control: Low-level vehicle actuation commands, such as throttle, brake, and steering, which are received by the vehicle interface responsible for executing them through actuators.

- Interface: Tools for vehicle-to-platform communication and human–machine interaction.

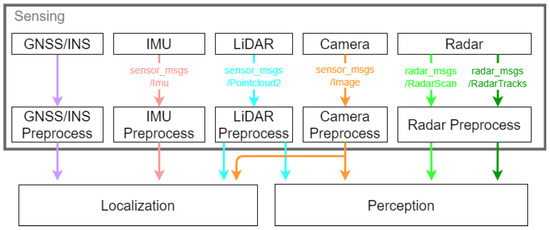

2.4.2. Sensing

The sensing module (Figure 5) applies pre-processing to the raw sensor data required by each module. It also abstracts data formats to allow the usage of sensors from different brands.

Figure 5.

Autoware sensing module.

This pre-processing includes applying filters, calibrating sensor inputs, and converting them into standardized message formats. The goal is to abstract away vendor-specific differences and provide clean, structured data for downstream modules like localization, perception, and control.

- GNSS: Provides global positioning (latitude, longitude, altitude) and orientation information. It is used primarily for global localization and navigation.

- IMU: Supplies measurements of vehicle motion, including acceleration and angular velocity. This data supports orientation tracking and is essential for sensor fusion techniques.

- LiDAR: Generates detailed 3D representations of the vehicle’s surroundings. It is used for localization (e.g., scan matching) and object detection.

- Camera: Delivers visual information for scene understanding, such as lane markings, traffic lights, and object classification.

- Radar: Detects objects by measuring distance, speed, and direction. Its robustness to adverse weather makes it valuable for tracking other road users.

Each of these sensors contributes complementary information that, once processed, supports the vehicle’s perception and decision-making systems.

2.4.3. Map

Autoware uses high-definition maps to support localization, routing, traffic rule handling, and to provide context for prediction and control. Two complementary map types are used: a vector map that encodes road semantics and a point cloud map that provides dense three-dimensional geometry.

The vector map is represented in Lanelet2 format. It contains lane geometry and topology, speed limits, turn permissions, stop lines, crosswalks, and traffic lights and signs. Behavior planning builds a lane graph from this information and triggers situation-specific modules such as lane change and intersection handling. Velocity planning reads speed limits and curvature from the map to set safe speed profiles and approach speeds.

The point cloud map provides a georeferenced three-dimensional model of the static environment, including road surface, curbs, building facades, poles, and barriers. It is used primarily for LiDAR-based localization through scan matching, for example, with NDT. Perception can also use the point cloud to remove the ground, mask static structures, and improve obstacle segmentation.

Both maps live in the fixed map frame in a local ENU coordinate system. A small projection metadata file specifies the transform from WGS84 latitude and longitude to this local frame so that GNSS positions are consistent with LiDAR and map coordinates.
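The role of the projection step can be illustrated with a minimal Python sketch. It uses a flat-earth (equirectangular) approximation around a map origin, which is only reasonable within a few kilometres of that origin; it is not the projector Autoware uses, and the function name and origin coordinates are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_378_137.0  # WGS84 semi-major axis


def wgs84_to_local_enu(lat_deg, lon_deg, origin_lat_deg, origin_lon_deg):
    """Approximate east/north offsets (metres) of a GNSS fix from a map origin.

    Flat-earth approximation for illustration only; production stacks use a
    proper map projection defined by the map's projection metadata.
    """
    lat0 = math.radians(origin_lat_deg)
    d_lat = math.radians(lat_deg - origin_lat_deg)
    d_lon = math.radians(lon_deg - origin_lon_deg)
    east = EARTH_RADIUS_M * d_lon * math.cos(lat0)
    north = EARTH_RADIUS_M * d_lat
    return east, north


# Hypothetical map origin and a fix 0.001 degrees north-east of it.
origin = (35.0, 139.0)
east, north = wgs84_to_local_enu(35.001, 139.001, *origin)
```

Once GNSS fixes are expressed in the same local frame as the point cloud and vector maps, they can be compared directly with LiDAR-based pose estimates.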

Vector maps are authored and validated with Lanelet2 tools, while point cloud maps are built from survey LiDAR or SLAM runs and downsampled for runtime use. At startup, map loader nodes publish both maps, and large point clouds can be tiled and streamed so that only the needed tiles are kept in memory.

At runtime, localization aligns live LiDAR scans to the point cloud map and may fuse the resulting pose with GNSS and IMU. Routing and behavior planning query Lanelet2 topology and regulatory elements to generate routes and make right-of-way decisions. Velocity planning enforces map-based limits and approach constraints. Perception and prediction use both geometry and semantics to aid segmentation and provide intent context. Accurate alignment between the vector map, the point cloud map, and the real scene is critical, because misalignment can degrade localization quality and lead to poor decisions downstream. In summary, the map outputs the following:

- To the sensing module, the projection information (used to convert GNSS data to the local coordinate system).

- To the localization module, the point cloud (used for LiDAR-based localization) and vector maps (used for localization methods based on road markings).

- To the perception module, the point cloud map (used for obstacle segmentation) and vector map (used for vehicle trajectory prediction).

- To the planning module, the vector map (for behavior planning).

2.4.4. Localization

The localization module estimates the vehicle pose in the map frame and provides velocity and covariance in real time. It checks that these estimates are reliable and, if they are not, generates error or warning messages to inform the error-monitoring system. The method used for localization depends on the available sensors and the characteristics of the environment.

One commonly used method involves combining 3D LiDAR with a point cloud map. This approach is particularly effective in urban settings where numerous buildings and structures provide distinctive features for alignment. It works by matching incoming LiDAR data with a pre-existing point cloud map using techniques such as scan matching or Normal Distributions Transform. However, this method performs poorly in environments where the map lacks structural features, such as rural landscapes, open highways, or tunnels. It is also sensitive to environmental changes not represented on the map, including snow, construction work, or structural alterations. Signal issues such as reflections, glass surfaces, or interference from other laser sources may further degrade accuracy.

Another supported method involves using either 3D LiDAR or a camera in combination with a vector map. This configuration performs well in environments with clearly marked lanes, such as highways. Its accuracy can be affected by degraded or obstructed lane markings and variations in road surface reflections.

GNSS-based localization is suitable for open environments with minimal obstructions, such as rural areas. While it provides absolute global positioning, it is vulnerable to signal degradation, blockage, and spoofing. IMU-based localization relies on measurements of acceleration and angular velocity to estimate pose changes through dead reckoning. It works well in smooth and flat road conditions, but it is subject to drift over time due to inherent sensor biases which can be affected by environmental conditions such as temperature.
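The drift behavior of dead reckoning can be made concrete with a small Python sketch. It integrates a planar constant-speed motion model and compares an ideal gyroscope with one that has a small constant bias; the function name, the bias value, and the 10 Hz rate are illustrative assumptions and are not taken from Autoware.

```python
import math


def dead_reckon(speed_mps, yaw_rates_rps, dt_s, gyro_bias_rps=0.0):
    """Integrate planar pose from speed and (possibly biased) yaw-rate samples."""
    x = y = yaw = 0.0
    for yaw_rate in yaw_rates_rps:
        yaw += (yaw_rate + gyro_bias_rps) * dt_s  # bias accumulates in heading
        x += speed_mps * math.cos(yaw) * dt_s
        y += speed_mps * math.sin(yaw) * dt_s
    return x, y, yaw


# 60 s of straight driving at 10 m/s, sampled at 10 Hz (assumed values).
true_rates = [0.0] * 600
ideal = dead_reckon(10.0, true_rates, 0.1)
biased = dead_reckon(10.0, true_rates, 0.1, gyro_bias_rps=0.01)
lateral_error_m = abs(biased[1] - ideal[1])
```

Even a bias of 0.01 rad/s produces a heading error that grows linearly with time and a lateral position error that grows faster still, which is why IMU-only estimates are normally corrected by an absolute source such as GNSS or LiDAR scan matching.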

The Localization module integrates data from all these sources to improve accuracy and resilience. It requires that all incoming sensor data be correctly timestamped, valid, and consistent with the vehicle’s configuration. Additionally, maps used for localization, such as point cloud or Lanelet2 formats, must closely match the real environment and be aligned if multiple sources are used. Large discrepancies between the map and the actual scene may lead to localization errors.

2.4.5. Perception

In Autoware, data from LiDAR, cameras, and radar are time-aligned with the vehicle’s pose and the map. The pipeline starts with point-cloud pre-processing (pointcloud_preprocessor), which removes noise, downsamples the cloud, crops to the area near the car, and separates ground from obstacles. Basic checks make sure timestamps and sensor calibrations are consistent, and map information (Lanelet2 plus the point-cloud map) gives extra context.

For LiDAR-based object detection, Autoware provides two options. The learning-based path, lidar_centerpoint, runs a modern 3D neural network (CenterPoint with a PointPillars backbone) and outputs oriented boxes for cars, pedestrians, and other objects. It is accurate and works well in busy scenes. The classical path, autoware_euclidean_cluster, does not use a neural network. Instead, it groups nearby points into clusters after simple filtering. It is fast, light on compute, and useful on modest hardware.
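The idea behind the classical clustering path can be sketched in a few lines of Python. This is a simplified, quadratic-time illustration of Euclidean clustering, not the autoware_euclidean_cluster implementation (which operates on filtered point clouds with spatial indexing); the function name and sample points are hypothetical.

```python
import math
from collections import deque


def euclidean_cluster(points, tolerance_m, min_size=1):
    """Group points that are chained together by gaps of at most tolerance_m."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue, members = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            # Brute-force neighbour search; real implementations use a k-d tree.
            near = [j for j in unvisited
                    if math.dist(points[i], points[j]) <= tolerance_m]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        if len(members) >= min_size:
            clusters.append(sorted(members))
    return sorted(clusters)


# Two well-separated groups of obstacle points (x, y, z in metres).
points = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.6, 0.1, 0.0),
          (5.0, 5.0, 0.0), (5.2, 5.1, 0.0)]
clusters = euclidean_cluster(points, tolerance_m=0.5)
```

The cluster tolerance is one of the parameters that trades accuracy against robustness: a larger value merges nearby objects, while a smaller one can split a single object into fragments when the point cloud is sparse or noisy.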

Perception then tracks objects over time, so they do not “blink” in and out when sensors are noisy. The multi_object_tracker combines consecutive detections (and multiple sensors when available) to keep a stable ID, position, and velocity for each object. When camera detections are enabled, autoware_bytetrack can track image-space boxes, and autoware_detection_by_tracker can feed the tracker’s estimates back to the detector to smooth things further.

Traffic light handling uses the map to narrow the camera’s search to only the signal heads that matter for the current lane. A detector (traffic_light_fine_detector) reads the light bulbs and their states, and a small association step ties them to the correct Lanelet2 signal group and stop line so planners can react at the right place.

Finally, Autoware produces a drivable-space/costmap that marks where the vehicle can safely drive. It uses the filtered LiDAR geometry, masks out known static structures with the point-cloud map, and inflates obstacles by a small safety margin. Parameters (for example, voxel size, cluster tolerances, detector confidence thresholds, and update rates) let users trade accuracy, smoothness, and runtime to match their compute budget and operating domain.

To cope with bad conditions, perception includes health checks. If a sensor drops frames or becomes unreliable (for example, because of LiDAR glare or camera saturation), the system can down-weight that source, fall back from the learned detector to clustering, tighten tracking gates, or signal “degraded perception” so the vehicle can slow down or stop.

2.4.6. Planning

The Planning module determines where and how the vehicle should move based on the current environment, mission objectives, and safety constraints. It is structured into three main submodules: mission planning, planning modules, and validation. This modular architecture allows developers to extend or replace individual modules according to specific requirements or advancements.

First, mission (route) planning computes a global route from the current position to the destination using the Lanelet2 road graph. The output is a sequence of lanelets and waypoints that downstream modules follow. If the destination changes or a road becomes unavailable, the route is recomputed. Next, behavior planning decides what maneuver to perform along that route. Depending on rules and context, it may keep lane, change lanes, stop at a stop line, yield at an intersection, slow for a crosswalk, or pull over. It uses lane topology, speed limits, right-of-way, and traffic-signal states from the map and perception. In Autoware, these behaviors are provided as modular plugins so projects can enable only what they need. Then, motion planning turns the chosen behavior into a smooth, time-stamped trajectory. It produces a path (position and heading over distance) and a velocity profile (speed, acceleration, and jerk) that respect vehicle limits and passenger comfort. For low-speed areas and tight spaces, a freespace planner (e.g., Hybrid-A*) can generate drivable paths without relying on lane centerlines. An avoidance component can also shift the path laterally to pass slow or stopped obstacles when rules allow.

A velocity planning and smoothing stage enforces speed limits, approach speeds at intersections and crosswalks, lateral acceleration bounds from curvature, and safe gaps to lead vehicles. Smoothing shapes acceleration and jerk so the trajectory is comfortable and trackable by the controllers.
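The lateral acceleration bound can be illustrated with the standard relation between speed, curvature, and lateral acceleration, a_lat = v² · κ, which gives v_max = sqrt(a_lat,max / |κ|). The following Python sketch applies it together with a map speed limit; the function name and numeric values are assumptions for illustration and do not reflect Autoware's parameters.

```python
import math


def curvature_speed_limit(curvature_1pm, max_lat_acc_mps2, speed_limit_mps):
    """Cap the map speed limit so that v^2 * |curvature| <= max_lat_acc."""
    if abs(curvature_1pm) < 1e-9:  # effectively straight road
        return speed_limit_mps
    v_curve = math.sqrt(max_lat_acc_mps2 / abs(curvature_1pm))
    return min(speed_limit_mps, v_curve)


# A 50 m radius bend (curvature 0.02 1/m) with a 2 m/s^2 comfort bound
# and a 13.9 m/s (about 50 km/h) map speed limit.
v_bend = curvature_speed_limit(0.02, 2.0, 13.9)
v_straight = curvature_speed_limit(0.0, 2.0, 13.9)
```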

Before handing the trajectory to control, safety and feasibility checks verify that it stays within drivable space, avoids collisions with the latest obstacles, and respects speed and curvature limits as well as the selected behavior (for example, stopping before the stop line). If a check fails, the planner slows down, stops, or re-plans.

Planning consumes detected objects and traffic-signal states from perception, the current pose and velocity from localization, and the global route from mission planning. It outputs a time-stamped trajectory for control to track and publishes status so supervision can react if planning degrades (for example, by requesting a slow-down or stop).

2.4.7. Control

The Control module in Autoware translates planned trajectories into low-level commands that the vehicle’s actuators (steering, throttle, and brake) can execute. Its goal is to track the target trajectory with stable lateral steering and longitudinal speed control. Autoware provides two main lateral controllers. The Pure Pursuit controller is a geometric look-ahead tracker that is robust and simple to tune, making it a common choice at low and medium speeds. The MPC Lateral controller picks the steering command by solving a small optimization problem at each time step. It uses a simple (linearized) vehicle model and a quadratic program solver, and allows explicit limits to be set on steering rate and path curvature. The module also includes practical tuning guidance (e.g., horizon length and weight settings) to help balance tracking accuracy and smoothness. Autoware’s PID Longitudinal controller combines feedforward and feedback acceleration, with options for slope compensation and delay handling. It tracks the velocity profile produced by the upstream planners and aims to deliver smooth speed regulation under typical driving conditions.
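The geometric idea behind pure pursuit can be sketched with the textbook bicycle-model formula δ = atan(2 L sin α / l_d), where L is the wheelbase, α the angle between the vehicle heading and the look-ahead point, and l_d the look-ahead distance. The Python below is a generic illustration under these assumptions, not Autoware's controller; the function name and the 2.7 m wheelbase are hypothetical.

```python
import math


def pure_pursuit_steering(pose, target, wheelbase_m):
    """Steering angle (rad) that arcs the rear axle through the look-ahead point.

    pose is (x, y, yaw) of the rear axle; target is the (x, y) look-ahead point.
    """
    x, y, yaw = pose
    dx, dy = target[0] - x, target[1] - y
    lookahead_m = math.hypot(dx, dy)
    alpha = math.atan2(dy, dx) - yaw  # bearing of target relative to heading
    return math.atan2(2.0 * wheelbase_m * math.sin(alpha), lookahead_m)


pose = (0.0, 0.0, 0.0)
steer_straight = pure_pursuit_steering(pose, (5.0, 0.0), wheelbase_m=2.7)
steer_left = pure_pursuit_steering(pose, (5.0, 5.0), wheelbase_m=2.7)
steer_right = pure_pursuit_steering(pose, (5.0, -5.0), wheelbase_m=2.7)
```

Because the command depends directly on the estimated pose and yaw, errors in localization (for example, from a noisy gyroscope) translate immediately into steering errors.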

2.4.8. Vehicle Interface

The Vehicle Interface serves as the intermediary between the control commands generated by Autoware and the vehicle’s physical actuators or, in this case, the simulated vehicle provided by CARLA. It translates high-level commands, such as steering angle, throttle percentage, brake pressure, and gear selection, into actuator-level signals that the simulation environment can interpret and execute. CARLA is responsible for simulating the dynamics and responses of the ego vehicle based on the received control inputs.

2.5. Fault Injection in AV Systems

Fault injection is a testing technique where faults or errors are deliberately introduced into a system to evaluate its behavior and fault-tolerance capabilities [78]. In the context of autonomous vehicles, fault injection can target hardware (e.g., CPU errors), software (bugs), sensor data, or communications. Our focus is on sensor fault injection, which falls under software-implemented fault injection by corrupting sensor outputs, simulating the failure of the sensors. The motivation for fault injection in autonomous vehicles lies in the need to evaluate system safety under failure conditions. While standards such as ISO 26262 emphasize the importance of analyzing and validating the system’s behavior across different fault modes, implementing these tests in real-world settings is costly, risky, and often unfeasible. Although inducing faults directly in hardware components, such as damaging sensors or interfering with signals, can be a valid fault injection approach, doing so on real vehicles poses serious safety hazards and financial implications. Simulated fault injection offers a safe, controlled, and repeatable alternative that enables researchers and engineers to evaluate fault tolerance mechanisms without endangering people or damaging equipment. By using a simulator, we can expose the autonomous driving system to rare or dangerous scenarios that would be too risky in the real world. This includes extreme sensor failures like a completely blinded camera or a wildly drifting IMU, which could be life-threatening on a real vehicle but can be studied harmlessly in simulation.

3. Related Work

The safety validation of autonomous vehicles (AVs) has become a crucial research topic due to the increasing complexity of AV systems and the critical role sensors play in ensuring their reliable operation. The existing literature has focused on developing frameworks and simulation tools to systematically assess AV resilience under sensor faults, as well as evaluating AV performance in controlled virtual environments.

This section reviews prior work organized into two main categories: fault injection frameworks for AV safety validation and simulation-based AV testing. We highlight the strengths and limitations of these studies, clarifying the unique contributions of our research.

3.1. Fault Injection in Autonomous Vehicles

Ensuring that autonomous vehicles can handle faults has led to a body of research on AV fault injection and dependability assessment. One of the early efforts is AVFI (Autonomous Vehicle Fault Injector) [5], which pioneered end-to-end fault injection in AV systems. AVFI introduced faults at various points: sensor inputs (e.g., simulating camera or LiDAR failures), internal processing (e.g., flipping bits in a neural network), and even actuator commands, all within a simulated driving environment. The tool measured domain-specific safety metrics such as mission success rate and number of traffic violations under each fault scenario. Preliminary results from AVFI showed that injecting faults could indeed cause an AV to commit traffic violations that would not otherwise occur, underlining the importance of testing AVs beyond nominal conditions. AVFI used the CARLA simulator as a basis, demonstrating CARLA’s suitability for such fault experimentation [5].

Building on this, researchers have looked for smarter ways to inject faults. DriveFI (proposed by researchers at NVIDIA in 2019) [7] is a machine learning-based fault injection engine. Instead of manually specifying faults, DriveFI employs a Bayesian optimization approach to automatically discover the most “dangerous” faults and scenarios. By testing two industry-grade AV stacks (including NVIDIA’s own and Baidu’s Apollo), DriveFI was able to find hundreds of safety-critical vulnerabilities within hours, whereas random fault injection over weeks found few or none. The types of faults considered included sensor distortions and even logic bugs, and the impact was measured in terms of accidents or near-collisions in simulation. This work highlights how broad the space of possible faults is, and the need for intelligent search methods to focus on impactful cases [7].

The CarFASE tool [6] is specifically designed to integrate with open-source driving stacks (like OpenPilot by Comma.ai) and evaluate their behavior under both accidental faults and malicious attacks. For example, CarFASE can simulate a sudden change in camera brightness to mimic sensor attacks and then observe how the driving policy reacts. The authors used CarFASE to test OpenPilot’s resilience, and one use case showed that increased brightness (simulating a camera blinding scenario) led to degraded lane-keeping performance. The platform provides a library of fault models and a campaign configurator to automate scenario runs. The emergence of CarFASE underscores the community’s interest in accessible tools for fault injection using popular simulators like CARLA.

Aside from these, other works have explored particular sensor fault scenarios. Another work [48] focused on camera failures in an AV context, defining failure modes such as blurred images, blackout, and occlusions, and testing their effect on object detection and a self-driving agent in a simulator. The results showed that certain camera failures significantly increase detection errors and can lead to collisions in the simulation, which reinforces the notion that redundancy (like having multiple cameras or additional sensors) is needed. There are also studies on injecting faults in ADS controllers or code (for instance, using fuzzing or software mutation), but those are beyond the scope of sensor-level fault injection that we emphasize.

In contrast to prior AV fault injection studies that often focus on isolated algorithms, single sensors, or open-loop data perturbations, this work delivers a closed-loop, scenario-triggered fault injection framework operating at the middleware level of a full autonomous driving software stack. The framework supports multiple sensor types (LiDAR, IMU gyroscope/accelerometer/quaternion, GNSS), and uses a structured and reproducible fault model with location, type, trigger, and duration dimensions. By running injections during complete simulated driving scenarios, we capture system-level behavior and safety outcomes, enabling consistent classification of whether a fault was tolerated or led to unsafe operation. This combination of closed-loop execution, multi-sensor scope, and reproducibility distinguishes our work from previous tools and studies. Furthermore, our framework is implemented on ROS 2, which is mostly used in university research, but it can be ported to AUTOSAR Adaptive by bridging ROS 2 topics to SOME/IP (ara::com) services. Recent work [79] proposes an integrated ROS 2–AUTOSAR architecture (ASIRA) with a ROS 2–SOME/IP bridge and demonstrates data exchange in autonomous driving scenarios, including Autoware interoperating with an Adaptive AUTOSAR simulator, providing a path to use our framework on production-oriented AV software stacks. In practice, porting requires mapping message schemas and QoS/timing semantics and implementing an AUTOSAR-native injector; we list this engineering as future work. While our experiments run on a ROS 2 stack, the approach can be directly relevant to AUTOSAR-based developments and transferable to production-oriented pipelines.

In summary, the related work shows a progression from general fault injection frameworks (AVFI) to targeted or intelligent frameworks (DriveFI, CarFASE), as well as specific investigations of individual sensor failures. Our work differentiates itself by focusing on an integrated sensor fault injection in a complete open-source AV stack (Autoware). Many prior works used either proprietary stacks or simplified models of an AV. By using Autoware, we are exercising a full production-grade autonomy stack. Additionally, while tools like DriveFI aim to find worst-case faults automatically, our approach emphasizes the analysis of representative sensor faults to understand their effects. By manually designing fault conditions, we observe how specific sensor anomalies lead to unsafe behavior in the autonomous vehicle. This complements the broader search approach by providing insight into failure mechanisms. We also make our fault injection code available for the community, similar in spirit to CarFASE but focused on ROS/Autoware users.

3.2. Simulator-Based AV Testing

Simulator platforms are crucial for safely validating autonomous driving systems. Among the most notable platforms, LGSVL [80], despite its discontinuation, offered comprehensive scenario editing and supported numerous sensors and stacks like Autoware and Apollo [81]. AirSim [82] provided strong scenario generation capabilities and sensor support but was similarly discontinued.

Other platforms, such as PreScan [83] and Pro-SiVIC [84], provide extensive simulation environments often used by industry professionals for rigorous AV testing. Pegasus [85] and SafetyPool [86] platforms focus on standardized scenario definitions to ensure safety compliance, emphasizing reproducibility and comparability across tests. MetaDrive [87], another relevant simulator, emphasizes procedural generation of scenarios and supports sensors like LiDAR and camera. It is designed for efficiency and scalability, suitable for extensive reinforcement learning applications and systematic AV behavior studies.

In this study, we selected CARLA due to its active community, open-source nature, and compatibility with Autoware, ensuring flexibility and realism in fault injection experiments. The CARLA simulator’s extensive documentation and Scenario Runner capabilities make it particularly suitable for detailed, scenario-based sensor fault testing, a crucial aspect of our experimental approach.

4. Framework Implementation

This section describes how the proposed framework was implemented to evaluate the fault tolerance of an autonomous vehicle system under different sensor failure conditions. The approach integrates the CARLA simulator with the Autoware autonomous driving stack to enable controlled, scenario-based testing with sensor fault injection. To automate test execution and ensure repeatability, we extended the CARLA Scenario Runner to define scenarios and specify fault injection events during scenario execution. We detail the simulation environment setup, the integration with Autoware, the injection of sensor faults during the simulation, and the methodology used to collect and analyze results. This structured workflow provides a reproducible foundation for assessing how sensor failures affect AV behavior.

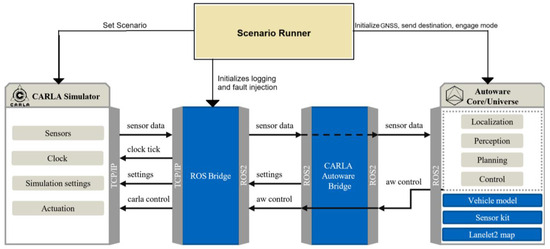

4.1. Framework Overview

Our experimental platform consists of the CARLA simulator (version 0.9.15) and Autoware.Universe (version 2019.1) as the system under test, running together in a closed loop. CARLA simulates both the ego vehicle and its environment, publishing sensor data to Autoware via the ROS bridge. Autoware then processes this data through its perception, planning, and control modules, and sends actuation commands (steering, throttle, brake) back to CARLA to drive the ego vehicle.

The primary role of the ROS bridge is to establish communication between CARLA and Autoware. This is achieved by converting and forwarding sensor data provided by CARLA (via TCP/IP) to Autoware using the necessary ROS 2 message structures. The CARLA Autoware Bridge also manages initial server settings (e.g., map and weather), the sensor configuration of the AV, and the spawning of the AV in the CARLA server. Finally, it converts control commands sent from Autoware to CARLA using a controller developed by TUMFTM [88].

To manage the scenario execution and coordinate fault injection timing, we used a modified version of Scenario Runner as an orchestration tool, allowing it to synchronize scenario runs, sensor fault injection configuration files, and data logging.

The ROS bridge was modified to support scenario-based fault injection testing. Initially, it was enhanced to log collision and lane invasion events directly. We then introduced full sensor data logging, followed by a fault injection mechanism that intercepts and alters sensor data before it reaches Autoware. These modifications also include a dedicated ROS node capable of receiving instructions from Scenario Runner. These instructions comprise (i) commands to start or stop sensor logging and (ii) the fault injection configuration provided by the user, a JSON file defining the affected sensor, failure type, triggering location (map-based), and duration. Scenario Runner also enables automated evaluation of scenario outcomes. Criteria such as collision detection, timeouts, and lane invasions are used to determine whether a test run succeeded or failed, eliminating the need for manual inspection of raw logs.

Figure 6 presents the final bridge, with the modified ROS bridge and the inclusion of the Scenario Runner.

Figure 6.

CARLA Autoware modified bridge (based on [88]).

4.2. Scenario Runner Modifications

In order to efficiently test the resilience and the behavior of autonomous driving systems under various fault conditions, it is necessary to execute a test run multiple times. Manually configuring and executing each run is time-consuming and error-prone. To address this, we extended Scenario Runner to support automated batch execution of test runs. Each test run consists of a specific scenario (from the workload) combined with a fault configuration (from the faultload).
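The pairing of workload and faultload can be sketched as a simple Cartesian product. The Python below is a hypothetical illustration of how a batch of test runs might be enumerated; the function name, the scenario names, and the fault file names are assumptions and are not taken from the modified Scenario Runner.

```python
from itertools import product


def build_test_runs(workload, faultload, repetitions=1):
    """Enumerate every (scenario, fault configuration, repetition) combination."""
    runs = []
    for scenario, fault_config, rep in product(workload, faultload, range(repetitions)):
        runs.append({
            "run_id": f"{scenario}__{fault_config}__r{rep}",
            "scenario": scenario,
            "fault_config": fault_config,
            "repetition": rep,
        })
    return runs


# Hypothetical workload (scenarios) and faultload (fault configuration files).
workload = ["route_a", "route_b"]
faultload = ["lidar_silent.json", "imu_gyro_noise.json", "gnss_noise.json"]
test_runs = build_test_runs(workload, faultload, repetitions=2)
```

Giving each run a unique identifier makes it straightforward to associate logged sensor data and safety events with the scenario and fault configuration that produced them.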

Additionally, since the ego vehicle is managed externally through the Autoware integration rather than by the CARLA Scenario Runner itself, we modified the Scenario Runner to detect and attach to a pre-existing ego vehicle within the simulation. This avoids redundant instantiation and enables compatibility with externally controlled systems.

During each test run, sensor data and safety-related events (e.g., collisions, lane invasions) are logged, enabling post-analysis of how different fault types affect the vehicle’s behavior. This automation improves reproducibility and expands test coverage while minimizing manual intervention.

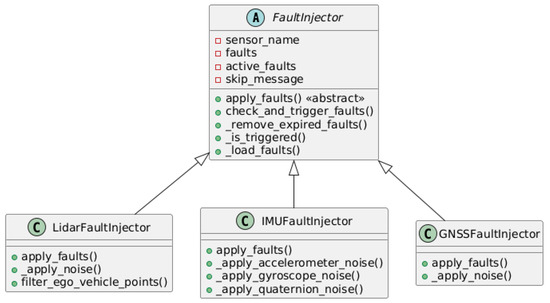

4.3. Sensor Fault Injection System Implementation

The sensor fault injection framework is modular and extensible. It is centered around a base class named FaultInjector that attaches to the ROS bridge at the point where simulator sensor messages are converted into ROS topics. At this injection point, the framework alters the sensor streams before publication, so any ROS-based autonomous driving stack can be exercised without changing its internal modules. The system under test then consumes these topics as if they originated from malfunctioning sensors. In our case, the Autoware perception and localization modules read the modified data unchanged. The class diagram is presented in Figure 7.

Figure 7.

Fault injector class diagram.

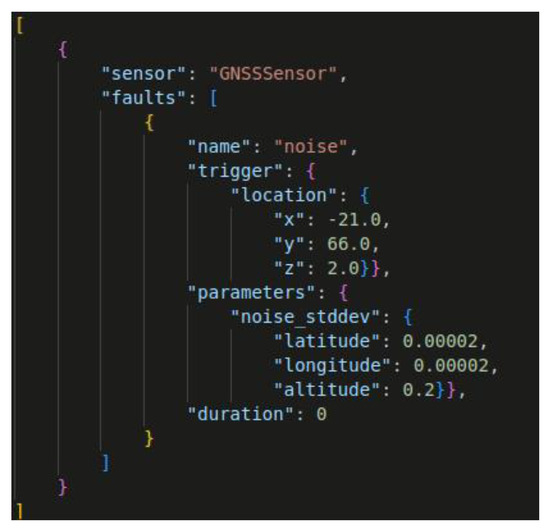

The framework was implemented in Python and integrated into the CARLA ROS bridge, which is built on ROS 2 Humble. It uses JSON configuration files, depends on CARLA’s Python API, and is compatible with Autoware.Universe.

Each sensor type, LiDAR, IMU, and GNSS, is handled by a dedicated subclass (LidarFaultInjector, IMUFaultInjector, and GNSSFaultInjector, respectively), all inheriting from the FaultInjector base class. This base class provides shared functionality, including fault management, activation logic, and the apply_faults() abstract method, which is implemented by each subclass. Although a camera sensor is included in our sensor configuration, no faults were injected into it, because it was not functional in the version of Autoware.Universe used in this work.

The FaultInjector class is tightly coupled with the Sensor class from the ROS bridge, which handles the conversion of CARLA sensor data to ROS 2 message formats. Upon initialization, FaultInjector loads a fault injection configuration file in JSON format. This configuration defines the location, type, trigger, and duration of each fault. Each time a new sensor message is received, the FaultInjector checks whether any faults should be activated, updates the list of active faults, and deactivates those whose duration has expired.
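The per-message life cycle described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the framework's code: only the names FaultInjector and apply_faults() come from the text, while `Fault`, `is_triggered`, `update`, `on_message`, and the toy subclass `SilentOnlyInjector` are hypothetical.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Fault:
    fault_type: str                     # "noise" or "silent"
    is_triggered: Callable[[], bool]    # hypothetical trigger predicate
    duration: float                     # seconds; 0 means permanent
    start_time: Optional[float] = None  # set when the fault becomes active


class FaultInjector(ABC):
    def __init__(self, faults: List[Fault]):
        self.pending = list(faults)
        self.active: List[Fault] = []

    def update(self, now: float) -> None:
        # Activate pending faults whose trigger condition is met.
        still_pending = []
        for fault in self.pending:
            if fault.is_triggered():
                fault.start_time = now
                self.active.append(fault)
            else:
                still_pending.append(fault)
        self.pending = still_pending
        # Drop active faults whose duration has expired (0 = permanent).
        self.active = [f for f in self.active
                       if f.duration == 0 or now - f.start_time < f.duration]

    def on_message(self, msg, now: float):
        self.update(now)
        return self.apply_faults(msg)

    @abstractmethod
    def apply_faults(self, msg):
        """Return the (possibly altered) message, or None to suppress it."""


class SilentOnlyInjector(FaultInjector):
    """Toy subclass: suppresses the message while a silent fault is active."""

    def apply_faults(self, msg):
        if any(f.fault_type == "silent" for f in self.active):
            return None
        return msg


injector = SilentOnlyInjector([Fault("silent", lambda: True, duration=2.0)])
outputs = [injector.on_message("scan", t) for t in (0.0, 1.0, 2.5)]
```

In this run the first two messages are suppressed and the third passes through, because the 2 s fault has expired by t = 2.5 s and is not re-armed.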

Two main fault types are supported: noise and silent failure. Noise is used to simulate degraded sensor output due to environmental or hardware-induced disturbances (e.g., electromagnetic interference, vibrations, or aging components). Silent failures represent cases in which a sensor stops sending data.

The LidarFaultInjector modifies the point cloud data by adding configurable Gaussian noise or stopping data publication to simulate a silent failure. Noise parameters (e.g., min_noise, max_noise) are defined in the JSON configuration file.

The IMUFaultInjector injects Gaussian noise independently into the gyroscope, accelerometer, and orientation quaternion data. It can also simulate silent failures for the entire IMU stream.

The GNSSFaultInjector applies noise to latitude, longitude, and altitude values and can simulate complete GNSS silent failures.

The fault injection configuration is sent by Scenario Runner as a JSON file and includes all necessary data: the sensor (“LiDAR”, “IMU”, or “GNSS”), the fault type (“noise” or “silent”), the trigger (the map-based location the ego vehicle must reach to trigger the fault), the duration (time in seconds, 0 if permanent), and other parameters (fault-specific configuration, such as the noise amplitude range). Figure 8 shows an example of a sensor fault configuration file.

Figure 8.

Sensor Fault configuration example.
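For readers who want a textual counterpart, a configuration following the fields listed above might look like the sketch below. The key names, the trigger encoding, and all values are illustrative assumptions and are not copied from Figure 8. Only the sensor names, the two fault types, the convention that a duration of 0 means permanent, and the parameter names min_noise and max_noise come from the text.

```python
import json

# Hypothetical configuration: key names and values are assumptions.
EXAMPLE_CONFIG = """
{
  "sensor": "LiDAR",
  "fault": "noise",
  "trigger": {"x": 10.0, "y": -25.0},
  "duration": 0,
  "parameters": {"min_noise": 0.02, "max_noise": 0.10}
}
"""

ALLOWED_SENSORS = {"LiDAR", "IMU", "GNSS"}
ALLOWED_FAULTS = {"noise", "silent"}


def load_fault_config(text: str) -> dict:
    """Parse a fault configuration and reject values outside the fault model."""
    cfg = json.loads(text)
    if cfg["sensor"] not in ALLOWED_SENSORS:
        raise ValueError(f"unknown sensor: {cfg['sensor']}")
    if cfg["fault"] not in ALLOWED_FAULTS:
        raise ValueError(f"unknown fault type: {cfg['fault']}")
    if cfg["duration"] < 0:
        raise ValueError("duration must be >= 0 (0 means permanent)")
    return cfg


config = load_fault_config(EXAMPLE_CONFIG)
```

Validating the file at load time makes a malformed campaign fail before the simulation starts rather than midway through a run.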

This design ensures that faults are applied in a consistent and reproducible manner relative to the scenario configuration and trigger conditions, making the system suitable for controlled experiments across multiple test runs.

The current implementation supports silent failure and severe noise for LiDAR, GNSS, and IMU. The same injection point in the ROS bridge allows additional fault models (constant bias/offset, drift as a random walk, stuck-at outputs, latency/jitter, and intermittent dropouts, among others) to be added with minimal changes, and to be applied to other sensors as the system under test and simulator interfaces permit. In this first iteration, we model “severe noise” as zero-mean Gaussian noise, which offers a simple, reproducible way to stress the AV stack without targeting a particular sensor’s physics [89]. While Gaussian noise is a useful baseline, real measurement errors are often non-Gaussian or time-correlated. For example, inertial sensors exhibit white noise plus bias instability/random walks [90], and GNSS errors in urban scenes show heavy-tailed residuals from multipath and blockage [91]. LiDAR in rain, fog, or snow [92] produces outliers and missing returns rather than purely Gaussian jitter.
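To make the additional fault models named above more concrete, the following sketch expresses four of them as generators over a scalar measurement stream. These are not part of the current implementation. The function names and parameters are hypothetical, and latency/jitter is omitted for brevity.

```python
import random
from typing import Iterable, Iterator, Optional


def constant_bias(stream: Iterable[float], offset: float) -> Iterator[float]:
    """Add a fixed offset to every sample."""
    for x in stream:
        yield x + offset


def random_walk_drift(stream: Iterable[float], step_sigma: float,
                      rng: random.Random) -> Iterator[float]:
    """Add a drift term that accumulates Gaussian steps over time."""
    drift = 0.0
    for x in stream:
        drift += rng.gauss(0.0, step_sigma)
        yield x + drift


def stuck_at(stream: Iterable[float], start_index: int) -> Iterator[float]:
    """Repeat the sample seen at start_index for the rest of the stream."""
    held = 0.0
    for i, x in enumerate(stream):
        if i == start_index:
            held = x
        yield held if i >= start_index else x


def intermittent_dropout(stream: Iterable[float], drop_prob: float,
                         rng: random.Random) -> Iterator[Optional[float]]:
    """Replace each sample by None (message not published) with probability drop_prob."""
    for x in stream:
        yield None if rng.random() < drop_prob else x


samples = [1.0, 2.0, 3.0, 4.0]
biased = list(constant_bias(samples, 0.5))
stuck = list(stuck_at(samples, 1))
drifting = list(random_walk_drift(samples, 0.1, random.Random(0)))
```

Because each model wraps a stream and yields a stream, several of them can be chained, for example a bias followed by intermittent dropouts.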

4.4. Logging and Results Collection

Scenario Runner provides mechanisms for collecting and exporting the results of each test run. Each scenario defines a set of relevant criteria by adding them to a behavior tree. This set determines what is monitored and what constitutes success or failure for that scenario; the result for each criterion is then stored in a file (txt, xml, or json). The criteria include collision (whether the ego vehicle collided), route completion (how much of the planned route was completed), running red light (whether the ego vehicle ran a red light), off-road (whether the vehicle left the drivable area), sidewalk (whether the ego vehicle drove on the sidewalk), maximum route speed (whether the vehicle stayed below a given velocity), and many more. The overall outcome is derived from the individual criteria: if all criteria “Passed”, the result is “Success”; otherwise, it is “Failure”.
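The aggregation rule amounts to a logical AND over the per-criterion results, as in the minimal sketch below. The function name, the criterion keys, and the "Failed" label are illustrative assumptions. Only the labels “Passed”, “Success”, and “Failure” come from the text.

```python
from typing import Dict


def scenario_outcome(criteria: Dict[str, str]) -> str:
    """Return "Success" only if every criterion is marked "Passed"."""
    all_passed = all(status == "Passed" for status in criteria.values())
    return "Success" if all_passed else "Failure"


clean_run = {"collision": "Passed", "route_completion": "Passed", "off_road": "Passed"}
faulty_run = {"collision": "Failed", "route_completion": "Passed", "off_road": "Passed"}

clean_result = scenario_outcome(clean_run)
faulty_result = scenario_outcome(faulty_run)
```

A single failed criterion, such as one collision, is therefore enough to mark the whole run as a failure.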

5. Experimental Setup

This section describes the simulation environment, sensor configuration, fault model, and injection methodology used to assess the impact of sensor faults on autonomous vehicle behavior.

5.1. Simulation Environment and System Under Test

We distinguish between the simulation framework and the system under test. The framework comprises CARLA, the Scenario Runner orchestration, and the modified ROS bridge, where faults are injected and logs are recorded. The system under test is the Autoware-based autonomous driving stack used as the demonstration case. The framework delivers scenarios and perturbed sensor streams but does not implement perception, planning, or control logic. All observed behaviors, therefore, reflect the system under test and its configuration.

We used Town10 from CARLA’s map library as the simulation environment. This urban scenario offers a complex road network with multiple intersections, varied curvature, and diverse traffic conditions, making it suitable for testing sensor performance and autonomous decision-making under realistic conditions. To better match real-world traffic regulations and ensure consistency with map-based planning in Autoware, stop signs were manually added to the corresponding Lanelet2 map. This adjustment was necessary to reflect road rules that were not originally encoded in the base map and to trigger more meaningful behavior in the planning module.

The system under test follows the default TUMFTM sensor suite, which includes LiDAR, GNSS, and IMU. The camera was excluded because it was not functional in the Autoware version used and the traffic light perception pipeline did not operate reliably, constantly failing to recognize traffic lights. Similar difficulties are reported in the Autoware issue tracker and CARLA–Autoware discussions. To avoid perception instability, we restricted the sensor configuration to LiDAR/GNSS/IMU for this study and left camera reintegration to future work [93,94]. No internal Autoware modules were modified, and all parameters relevant to perception, localization, planning, and control were kept constant across runs. This configuration enables closed-loop interaction between CARLA and Autoware so that sensor-level faults can be injected at the framework layer and their effects on the behavior of the system under test can be observed.

5.2. Fault Model

We defined a fault model (or failure model, from the perspective of the sensor subsystems) characterized by the following four dimensions:

- Location—the location of the fault refers to the specific sensor affected. In this case, 5 different locations were considered: LiDAR, GNSS, and the three components of the IMU (gyroscope, accelerometer, and quaternion).

- Type—the fault type includes Silent Failures, where the sensor stops transmitting data, and noise, representing random deviations beyond the sensor’s normal operating conditions. We refer to this as Severe Noise.

- Trigger—the trigger defines the position where the ego vehicle must be to start the fault injection. We defined 5 fault triggers based on the moments the ego vehicle reaches specific locations within the map of the scenario.

- Duration—the duration of the fault specifies how long the fault remains active since the trigger. We defined a permanent duration, meaning the fault remains active until the end of the test run.
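The four dimensions map naturally onto a small record type, and a location-based trigger reduces to a distance check against the ego position. The sketch below is illustrative only: the class name `FaultSpec`, the field names, the location labels, and the 2 m trigger radius are assumptions, since the text does not specify how close the vehicle must be to a trigger point.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class FaultSpec:
    location: str                    # e.g. "lidar", "gnss", "imu_gyroscope"
    fault_type: str                  # "silent" or "severe_noise"
    trigger_xy: Tuple[float, float]  # map position that starts the fault
    duration_s: float                # 0 means permanent


def trigger_reached(ego_xy: Tuple[float, float], spec: FaultSpec,
                    radius_m: float = 2.0) -> bool:
    """True once the ego vehicle is within radius_m of the trigger point."""
    return math.dist(ego_xy, spec.trigger_xy) <= radius_m


spec = FaultSpec("imu_gyroscope", "severe_noise", (10.0, -25.0), 0)
far_away = trigger_reached((0.0, 0.0), spec)
at_trigger = trigger_reached((10.5, -25.0), spec)
```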

5.3. Severe Noise per Fault Location

In this section, we describe how the Severe Noise fault type was defined for each fault location, since different sensors behave in different ways. To define appropriate values for severe noise, we first identified the expected nominal noise levels for each sensor. These nominal values represent the typical measurement variability under normal operating conditions, as specified by manufacturers or reported in the literature. While such noise is always present in real-world deployments, it does not represent a fault. Instead, it serves as a reference baseline, helping us determine what constitutes abnormal or faulty behavior. Based on these nominal values, we established the corresponding severe noise levels used in our fault model.

5.3.1. LiDAR

To emulate noise in LiDAR data, we apply Gaussian noise to each point of the LiDAR point cloud. This fault represents small, random disturbances that real LiDAR sensors experience due to electronic interference, surface reflectivity, environmental conditions (e.g., dust, rain), or inherent sensor limitations [42,95,96].

The fault is implemented by adding random noise to each coordinate (x, y, z) of every point in the cloud. The noise follows a Gaussian distribution centered on zero, so it introduces unbiased jitter around the true value. Rather than focusing on absolute noise values (e.g., ±0.006 m), we evaluate the significance of the fault based on its relative deviation from the true point location.

Coordinate deviations up to 2% of the point’s range (distance from the sensor) are considered within expected tolerance for high-quality LiDARs. This value is consistent with findings in [97], which reported measurement errors within 1–2% for commercial terrestrial laser scanners operating under controlled conditions. Deviations between 2% and 10% are interpreted as severe deviations which translate into significant measurement corruption, likely leading to misperception, missed obstacles, or map inconsistencies. This percentage-based approach ensures that the added noise is significant, no matter the distance of the objects. For example, a 1 cm deviation is negligible for an object 50 m away but might completely distort a nearby point at 0.2 m. Furthermore, LiDAR returns are more sensitive at close range due to angular resolution and time-of-flight constraints, making small absolute changes more impactful.
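The percentage-based scheme can be sketched as below. The sketch assumes that the per-coordinate standard deviation is a fixed fraction of the point's range, which is one plausible reading of the description and not necessarily how the framework parameterizes it. The function names and the label for deviations above 10% are hypothetical.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float, float]


def add_range_relative_noise(points: List[Point], fraction: float,
                             rng: random.Random) -> List[Point]:
    """Add zero-mean Gaussian noise with sigma = fraction * range (assumption)."""
    noisy = []
    for x, y, z in points:
        sigma = fraction * math.sqrt(x * x + y * y + z * z)
        noisy.append((x + rng.gauss(0.0, sigma),
                      y + rng.gauss(0.0, sigma),
                      z + rng.gauss(0.0, sigma)))
    return noisy


def classify_relative_deviation(deviation_m: float, range_m: float) -> str:
    """Compare a deviation with the 2% and 10% thresholds given in the text."""
    ratio = deviation_m / range_m
    if ratio <= 0.02:
        return "nominal"
    if ratio <= 0.10:
        return "severe"
    return "above 10%"


# The 1 cm example from the text, at 50 m and at 0.2 m.
far_point = classify_relative_deviation(0.01, 50.0)   # ratio 0.0002
near_point = classify_relative_deviation(0.01, 0.2)   # ratio 0.05

cloud = [(50.0, 0.0, 0.0), (0.2, 0.0, 0.0)]
perturbed = add_range_relative_noise(cloud, 0.05, random.Random(42))
```

The same 1 cm deviation is classified as nominal at 50 m and as severe at 0.2 m, which is the effect the relative threshold is meant to capture.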

By using this method, the simulation can capture both realistic sensor imperfections and fault-level disturbances, depending on the severity of the injected noise.

5.3.2. GNSS

To emulate positioning errors in a simulated autonomous system, we applied Gaussian noise to the GNSS readings, specifically affecting latitude, longitude, and altitude values. This type of fault replicates common disturbances encountered in real-world GNSS signals caused by atmospheric interference, satellite geometry (e.g., dilution of precision), multipath reflections, and receiver limitations [52,61,98].

A deviation of 0.00002 degrees in both latitude and longitude corresponds to approximately 2 m of horizontal error. The altitude noise was set to 0.2 m. These values align with the performance of single-frequency, civilian-grade GNSS receivers under open-sky conditions, where horizontal errors are typically under 3 m [99]. To emulate noise that exceeds nominal values, we adopted a standard deviation of 0.0002 degrees for latitude and longitude, which by the same conversion corresponds to roughly 20 m of horizontal error. For altitude, a standard deviation of 2.0 m was defined. These values simulate degraded conditions due to poor satellite visibility, urban canyons, or intentional interference, which can degrade accuracy to over 10 m.
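The sketch below shows how such noise could be applied to a single GNSS fix, together with the degree-to-metre conversion behind the nominal value. The constant of about 111.32 km per degree is an approximation. For longitude it holds only near the equator, since the length of a degree of longitude shrinks with the cosine of the latitude. The function names and the example coordinates are hypothetical.

```python
import random
from typing import Tuple

METERS_PER_DEG = 111_320.0  # approximate length of one degree of latitude


def deg_to_m(deg: float) -> float:
    """Convert a small latitude offset in degrees to metres (approximation)."""
    return deg * METERS_PER_DEG


def perturb_fix(lat: float, lon: float, alt: float,
                sigma_deg: float, sigma_alt_m: float,
                rng: random.Random) -> Tuple[float, float, float]:
    """Add independent zero-mean Gaussian noise to latitude, longitude, altitude."""
    return (lat + rng.gauss(0.0, sigma_deg),
            lon + rng.gauss(0.0, sigma_deg),
            alt + rng.gauss(0.0, sigma_alt_m))


nominal_m = deg_to_m(0.00002)  # about 2.2 m

rng = random.Random(7)
noisy_fix = perturb_fix(0.001, 0.002, 2.0, 0.0002, 2.0, rng)
```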

5.3.3. IMU

IMU faults are emulated by interfering separately with the following IMU measurements: angular velocity (gyroscope), linear acceleration (accelerometer), and orientation (quaternion). The purpose is to inject faults that are more severe than the natural variability and imperfections found in real-world IMUs due to mechanical, electronic, and environmental factors [98].

In commercial gyroscopes, bias instability and rate noise typically produce errors in the range of 0.01–0.05°/s, depending on environmental conditions and sampling rate. According to datasheet specifications, devices such as the Bosch BMI160 [100] or InvenSense MPU-6000 [101] show noise densities around 0.005–0.01°/s/√Hz. A 5% deviation threshold corresponds well to the upper bound of expected operating noise for angular velocity measurements under normal conditions. A deviation between 5 and 50% in gyroscopic output could indicate that the system is significantly misestimating rotational speed, leading to severe orientation estimation errors when fused with accelerometer and GNSS data.

Accelerometer nominal deviations are commonly expressed in µg/√Hz. For instance, the ADXL345 [102] shows typical deviation densities of ~150 µg/√Hz. For acceleration signals in the range of 1–2 m/s² (e.g., steady cruising), a 5% deviation equates to ±0.05–0.1 m/s², matching real-world fluctuations from road vibration or minor sensor inaccuracies. Noise values between 5 and 50% can seriously distort vehicle state estimation, especially when the signal-to-noise ratio is low, such as during smooth braking or slow turns.

Quaternions, derived from fused gyroscope and accelerometer data (and usually magnetometer data), are sensitive to noise in both sensors [103]. Minor noise (<5%) can be expected due to integration drift or transient instability in attitude estimation filters. In simulation, this would translate to slight deviations in yaw, pitch, or roll, typical of nominal system behavior. Quaternion errors can simulate the effects of drift caused by improper sensor fusion or misalignment in orientation. This can lead to cascading failures in localization and control if not properly corrected. A distortion between 5 and 50% in quaternion values is equivalent to a significant misestimation of heading or tilt.
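A percentage-based perturbation of the three IMU measurements can be sketched as follows. The sketch assumes that the noise standard deviation for each component is a fraction of that component's magnitude, and it renormalizes the perturbed quaternion to unit length. Neither choice is stated in the text, so both should be read as assumptions, and the function names are hypothetical.

```python
import math
import random
from typing import Tuple


def relative_noise(vec: Tuple[float, ...], fraction: float,
                   rng: random.Random) -> Tuple[float, ...]:
    """Perturb each component with sigma = fraction * |component| (assumption)."""
    return tuple(v + rng.gauss(0.0, fraction * abs(v)) for v in vec)


def noisy_quaternion(q: Tuple[float, float, float, float], fraction: float,
                     rng: random.Random) -> Tuple[float, ...]:
    """Perturb a quaternion, then renormalize to unit length (assumption)."""
    perturbed = relative_noise(q, fraction, rng)
    norm = math.sqrt(sum(c * c for c in perturbed))
    if norm == 0.0:
        return q  # degenerate case: keep the original orientation
    return tuple(c / norm for c in perturbed)


# Worked arithmetic from the text: 5% of 1-2 m/s^2 is 0.05-0.1 m/s^2.
low_sigma = 0.05 * 1.0
high_sigma = 0.05 * 2.0

rng = random.Random(3)
accel = relative_noise((1.5, 0.1, 9.81), 0.5, rng)             # 50% severe noise
quat = noisy_quaternion((0.0, 0.0, 0.3826834, 0.9238795), 0.5, rng)
quat_norm = math.sqrt(sum(c * c for c in quat))
```

Without renormalization, a perturbed quaternion no longer represents a valid rotation, so a consumer would have to normalize it anyway or risk rejecting the message.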

5.3.4. Summary of Fault Type Values per Location

This section summarizes the fault injection parameters used to emulate Severe Noise conditions in the different sensors, together with the nominal values, i.e., the noise that naturally occurs in each sensor according to its specifications. Table 1 presents a structured overview of the nominal and Severe Noise values per sensor.

Table 1.

Summary of noise values used in fault model.

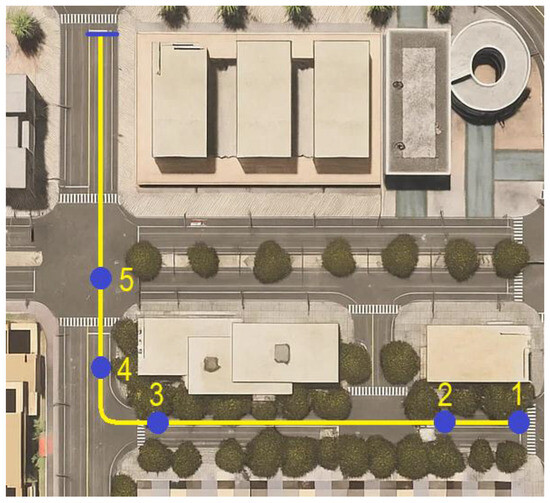

5.4. Fault Trigger

The experimental campaign is structured around a driving scenario designed in the CARLA simulator using the Town10 map. This scenario reflects a typical urban driving situation in which the autonomous system must operate safely under varying conditions. Sensor faults were introduced at strategic points to assess the vehicle’s behavior under different states of the AV and the surrounding environment. The ego vehicle used in all scenarios is a green van controlled by Autoware.Universe version 2019.1.