4.3.1. Named Entity Recognition

In this study, the accuracy of entity and relation extraction in cybersecurity data depends on two key factors: (1) the model’s ability to accurately label entity boundaries and categories, and (2) the model’s adaptability to the semantic features of the cybersecurity domain. Only when both conditions are satisfied can high precision be achieved in the final results.

To evaluate the performance of the SecureBERT_Plus-BiLSTM-Attention-CRF model in Named Entity Recognition (NER) tasks, Precision, Recall, and F1 score were used as the evaluation metrics.

Precision refers to the proportion of correctly predicted entities (True Positives, TP) out of all predicted entities (TP plus False Positives, FP), measuring the accuracy of the predictions.

Recall indicates the ratio of correctly predicted entities (TP) to the total number of true entities (TP plus False Negatives, FN), reflecting the comprehensiveness of entity identification.

F1 score is the harmonic mean of Precision and Recall, balancing both metrics and mitigating the evaluation bias in imbalanced datasets.

Moreover, Accuracy was not considered as a performance metric due to its susceptibility to bias in imbalanced data, which can lead to misleading results. In contrast, the F1 score is a more reliable measure of model performance, widely used in information extraction tasks.
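As an illustration of the three metrics described above, the following minimal sketch computes entity-level Precision, Recall, and F1 under an exact-match assumption (an entity counts as correct only when both its boundaries and its category agree with the gold annotation). The `Entity` tuple layout, the function name `entity_prf`, and the toy spans are illustrative assumptions, not taken from the study's code or data.

```python
from typing import Set, Tuple

# Assumed representation: (start token index, end token index, entity type)
Entity = Tuple[int, int, str]


def entity_prf(gold: Set[Entity], pred: Set[Entity]) -> Tuple[float, float, float]:
    """Exact-match entity-level precision, recall and F1."""
    tp = len(gold & pred)  # boundaries and category both correct
    fp = len(pred - gold)  # predicted entities that are not in the gold set
    fn = len(gold - pred)  # gold entities the model missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical toy annotation: one type error and one spurious prediction
gold = {(0, 1, "MALWARE"), (5, 6, "THREAT_ACTOR"), (9, 9, "VULNERABILITY")}
pred = {(0, 1, "MALWARE"), (5, 6, "TOOL"), (9, 9, "VULNERABILITY"), (12, 13, "TOOL")}

p, r, f1 = entity_prf(gold, pred)
print(f"P={p:.3f} R={r:.3f} F1={f1:.3f}")
```

In this toy case the span (5, 6) has correct boundaries but the wrong category, so it counts as both a false positive and a false negative, which is why boundary and category accuracy jointly determine the score.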

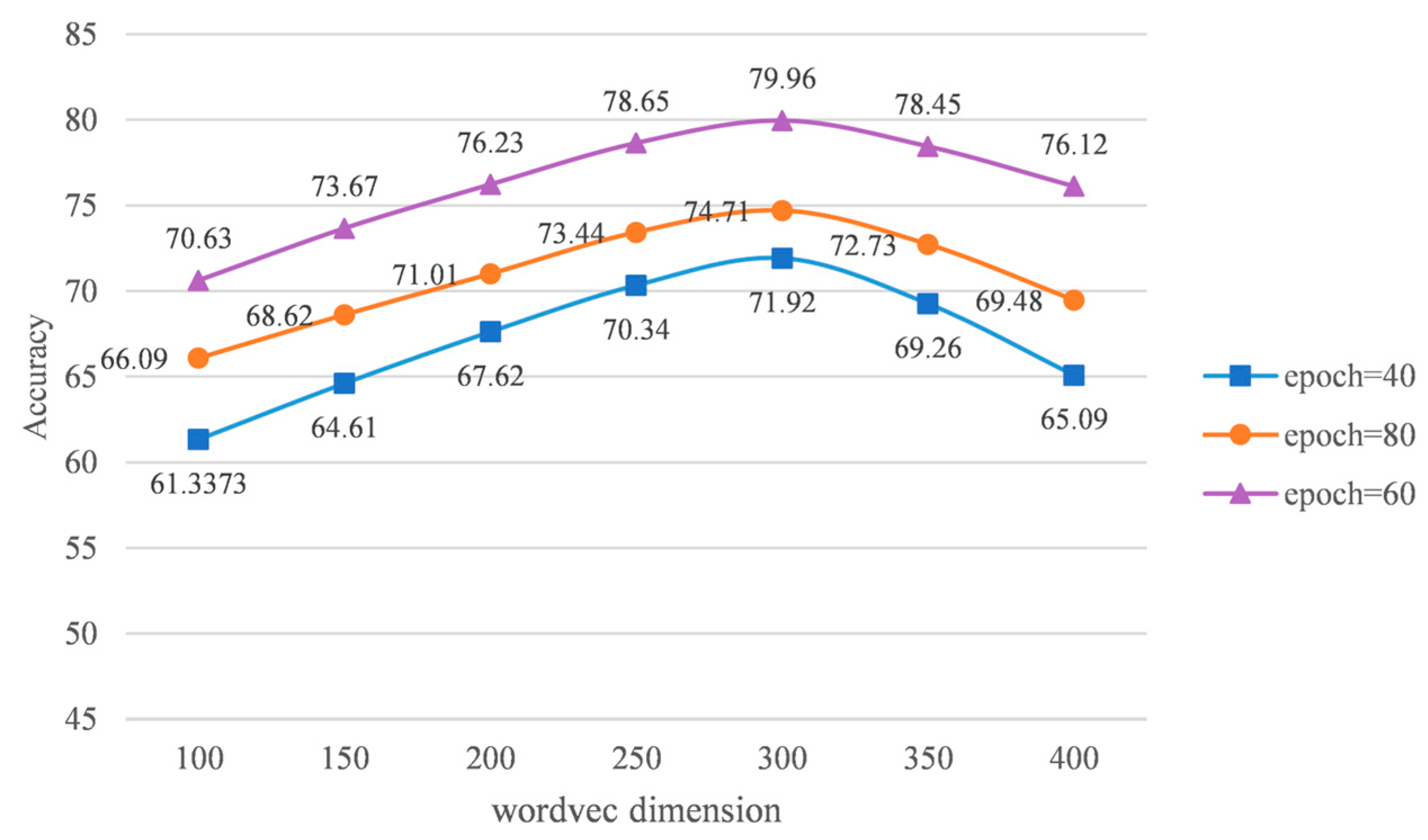

To validate the performance of the proposed SecureBERT_Plus-BiLSTM-Attention-CRF model for cybersecurity NER, nine experiments were conducted to compare and assess its effectiveness in Cyber Threat Intelligence (CTI). Experiment 1 evaluated the CNN-BiGRU-CRF model, Experiment 2 the CNN-BiLSTM-CRF model, Experiment 3 the RoBERTa-BiGRU-CRF model, Experiment 4 the RoBERTa-BiLSTM-CRF model, and Experiment 5 the SecureBERT_Plus-BiLSTM-CRF model. To evaluate component contributions, Experiment 6 assessed the SecureBERT_Plus-BiLSTM-Attention model, Experiment 7 the SecureBERT_Plus-Attention-CRF model, Experiment 8 the SecureBERT_Plus-BiLSTM-Attention-CRF model, and Experiment 9 the SecureBERT_Plus-BiLSTM-Attention-CRF model.

Table 6 displays the precision (P), recall (R), and F1 scores for the extraction of entity-relation triples, including overlapping relation triples. The results demonstrate clear performance patterns across architectures.

According to the experimental results, Experiment 9 achieved F1 scores of 0.839 in the ALL scenario and 0.803 in the OVERLAP scenario. This demonstrates that the proposed technique offers a direct and effective solution for entity and relation extraction by fully leveraging the semantic interplay between the two tasks.

Comparing Experiments 1 and 2, as well as 3 and 4, shows that BiLSTM consistently outperforms BiGRU, both in CNN-based architectures and with RoBERTa. This underscores BiLSTM’s superior capability in capturing contextual dependencies, validating its effectiveness in NER tasks. Specifically, the CNN-BiLSTM-CRF model achieved an F1 score of 0.657, a 1.7% relative improvement over the CNN-BiGRU-CRF model (0.646). Similarly, the RoBERTa-BiLSTM-CRF model reached an F1 score of 0.772, a 3.1% relative improvement over RoBERTa-BiGRU-CRF (0.749).

This gain is mainly due to BiLSTM’s memory retention mechanism, which effectively captures long-range dependencies in cybersecurity texts. Compared to BiGRU, BiLSTM excels at modeling relationships between distant tokens, making it more effective and stable when handling long entity boundaries and complex linguistic contexts.

Experiment 4 showed a 17.5% increase in F1 score over Experiment 2, highlighting the superior capabilities of BERT-based embeddings. This improvement reflects the strong generalization enabled by pre-trained language models, confirming their role in semantic modeling and cross-sentence dependency learning.

The SecureBERT_Plus model demonstrates measurable domain adaptation advantages, achieving 0.858 entity recognition precision. This represents a 4.25% improvement over the general-purpose RoBERTa model’s 0.823 precision. This improvement comes from the domain-adaptive pretraining strategy, which improves the model’s understanding of cybersecurity-specific terms and entities.
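The percentage gains quoted for the BiLSTM/BiGRU, RoBERTa/CNN, and SecureBERT_Plus/RoBERTa comparisons are consistent with relative improvements (difference divided by the baseline score) rather than absolute percentage-point differences. The short check below reproduces them from the scores stated in the text; the helper name `relative_gain` is an illustrative choice.

```python
def relative_gain(new: float, base: float) -> float:
    """Relative improvement of `new` over `base`, in percent."""
    return (new - base) / base * 100


# (new score, baseline score) pairs as quoted in the text
comparisons = {
    "CNN-BiLSTM-CRF vs CNN-BiGRU-CRF (F1)": (0.657, 0.646),
    "RoBERTa-BiLSTM-CRF vs RoBERTa-BiGRU-CRF (F1)": (0.772, 0.749),
    "RoBERTa-BiLSTM-CRF vs CNN-BiLSTM-CRF (F1)": (0.772, 0.657),
    "SecureBERT_Plus vs RoBERTa (entity precision)": (0.858, 0.823),
}

for name, (new, base) in comparisons.items():
    print(f"{name}: {relative_gain(new, base):.2f}%")
```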

The integration of Attention results in steady improvements: Experiment 6 achieved 0.775 F1 for overlapping relations, Experiment 7 reached 0.784 F1, and Experiment 9 achieved the optimal 0.803 F1. These results validate the role of Attention in resolving nested entities, such as polymorphic malware variants.

Experiment 9 outperforms Experiment 8 in F1 score and Overlap F1 by 5.9% and 4.6%, respectively. This improvement is primarily attributed to SecureBERT_Plus’s superior domain adaptation and its RoBERTa-based architecture, which enhance its ability to handle cross-sentence dependencies and complex entity interactions in CTI tasks. These capabilities are further validated by the integration of Attention and CRF. Attention resolves nested entities, such as polymorphic malware variants, while CRF ensures label sequence coherence, which is essential for maintaining logical attack progression in multi-stage scenarios.
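To make the notion of label sequence coherence concrete, the sketch below decodes a tag sequence with Viterbi search under hard BIO constraints. It is a simplified stand-in and not the model's implementation: a trained CRF layer learns real-valued transition scores, whereas this sketch only forbids invalid transitions. The tag set `LABELS`, the function names, and the emission scores are all hypothetical.

```python
from typing import Dict, List

LABELS = ["O", "B-MAL", "I-MAL"]  # hypothetical BIO tag set for a malware entity


def allowed(prev: str, cur: str) -> bool:
    """BIO rule: I-X may only follow B-X or I-X."""
    if cur.startswith("I-"):
        return prev in ("B-" + cur[2:], "I-" + cur[2:])
    return True


def viterbi(emissions: List[Dict[str, float]]) -> List[str]:
    """Highest-scoring label path that respects the BIO constraints."""
    neg_inf = float("-inf")
    # A sequence may not start with an I- tag.
    score = {l: (neg_inf if l.startswith("I-") else emissions[0][l]) for l in LABELS}
    back: List[Dict[str, str]] = []
    for em in emissions[1:]:
        new_score: Dict[str, float] = {}
        pointers: Dict[str, str] = {}
        for cur in LABELS:
            candidates = {p: score[p] for p in LABELS if allowed(p, cur)}
            best_prev = max(candidates, key=candidates.get)
            new_score[cur] = candidates[best_prev] + em[cur]
            pointers[cur] = best_prev
        score = new_score
        back.append(pointers)
    # Follow the back-pointers from the best final label.
    path = [max(score, key=score.get)]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    return path[::-1]


# Hypothetical per-token scores; the per-token argmax would start with I-MAL.
emissions = [
    {"O": 1.0, "B-MAL": 0.8, "I-MAL": 1.2},
    {"O": 0.2, "B-MAL": 0.1, "I-MAL": 1.5},
    {"O": 1.0, "B-MAL": 0.0, "I-MAL": 0.3},
]
print(viterbi(emissions))
```

Choosing the best label independently for each token would yield a sequence that opens with `I-MAL`, which is not a valid BIO sequence. Decoding over whole sequences avoids such outputs, which is the property the CRF layer contributes.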

These advancements translate directly into the core inputs for Section 4.3.3 on Knowledge Graph Construction: an entity recognition precision of 0.858 ensures node reliability, while a relation F1 score of 0.799 supports cross-sentence attack chain extraction, collectively laying a solid foundation for semantic integration and topological optimization in the final knowledge graph.

4.3.2. Evaluation of Entity Normalization

Entity normalization is a crucial step in the construction of cybersecurity knowledge graphs, aiming to enhance accuracy and query consistency by merging synonymous entities across data sources. At present, many approaches rely on static word embeddings or rule-based methods for entity alignment. However, these methods have limitations in capturing complex semantic relationships, especially with polysemy and synonymy, leading to low precision in entity aggregation.

To address these issues, the CyberKG framework uses SecureBERT_Plus to generate domain-aware contextual embeddings, combined with a Hierarchical Agglomerative Clustering (HAC)-based method for semantic clustering. This approach improves entity normalization accuracy while overcoming the limitations of traditional methods.
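The clustering step can be sketched as follows. This is a minimal pure-Python illustration of agglomerative clustering with a distance threshold, assuming average linkage and cosine distance; the linkage criterion, the threshold value, and the three-dimensional vectors (standing in for SecureBERT_Plus contextual embeddings) are assumptions made for the example, not details confirmed by the study.

```python
import math
from typing import Dict, List, Sequence


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def hac(vectors: Dict[str, Sequence[float]], threshold: float) -> List[List[str]]:
    """Average-linkage agglomerative clustering.

    Repeatedly merges the two closest clusters and stops once the closest
    pair is farther apart than `threshold`.
    """
    clusters = [[name] for name in vectors]

    def linkage(a: List[str], b: List[str]) -> float:
        total = sum(cosine_distance(vectors[x], vectors[y]) for x in a for y in b)
        return total / (len(a) * len(b))

    while len(clusters) > 1:
        dist, i, j = min(
            (linkage(clusters[i], clusters[j]), i, j)
            for i in range(len(clusters))
            for j in range(i + 1, len(clusters))
        )
        if dist > threshold:
            break
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters


# Hypothetical toy embeddings; real ones would come from the encoder.
embeddings = {
    "APT28": (0.90, 0.10, 0.00),
    "Fancy Bear": (0.85, 0.15, 0.05),
    "Sofacy": (0.80, 0.20, 0.00),
    "WannaCry": (0.00, 0.20, 0.90),
    "WCry": (0.05, 0.10, 0.95),
}
clusters = hac(embeddings, threshold=0.1)
print(clusters)
```

The threshold controls the trade-off between over-merging distinct entities and leaving aliases unmerged, which is why it is typically tuned on held-out data.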

To verify the effectiveness of the proposed entity normalization, this study uses human-annotated entity alignment pairs from the DNRTI and MalwareDB datasets as the gold standard and evaluates performance with multi-granularity metrics. The evaluation system is based on established methods in knowledge base canonicalization, and is defined as follows:

Let $C$ be the set of entity clusters generated by the normalization system, and $G$ be the set of gold standard clusters. The metrics include macro-level, micro-level, and pairwise evaluations.

First, macro-level metrics are used to evaluate the semantic completeness of clusters, including macro precision ($P_{\text{macro}}$) and macro recall ($R_{\text{macro}}$). $P_{\text{macro}}$ is the proportion of fully pure clusters among those generated by the system, i.e., clusters whose entities all belong to a single gold standard cluster. The formula is defined as

$$P_{\text{macro}}(C, G) = \frac{\left|\{\, c \in C : \exists\, g \in G,\ |c \cap g| = |c| \,\}\right|}{|C|}$$

where $|c|$ and $|g|$ represent the number of entities in a system-generated cluster $c$ and a gold standard cluster $g$, respectively, and $|c \cap g|$ is the size of their intersection.

$R_{\text{macro}}$ is the counterpart of $P_{\text{macro}}$ with the roles of $C$ and $G$ exchanged, and reflects the coverage of gold standard clusters by the system, i.e., whether the system can effectively cover all gold clusters:

$$R_{\text{macro}}(C, G) = P_{\text{macro}}(G, C)$$

Second, micro-level precision ($P_{\text{micro}}$) measures the disambiguation accuracy at the entity level. $P_{\text{micro}}$ quantifies the intersection size between generated and gold standard clusters, reflecting the reliability of local merges:

$$P_{\text{micro}}(C, G) = \frac{\sum_{c \in C} m_c}{\sum_{c \in C} |c|}$$

where $m_c$ denotes the number of correctly matched entities in cluster $c$, and $|c|$ is the total number of entities in $c$.

Finally, pairwise precision ($P_{\text{pair}}$) evaluates global consistency by verifying coreference relations between entity pairs, which addresses cross-source alias mapping issues such as “CVE-2023-1234” and “ProxyLogon”. It is calculated as follows:

$$P_{\text{pair}}(C, G) = \frac{\sum_{c \in C} h_c}{\sum_{c \in C} p_c}$$

where $h_c$ is the number of correctly merged entity pairs in cluster $c$, and $p_c = \binom{|c|}{2}$ is the total number of possible entity pairs in that cluster.
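The three precision variants described above can be sketched in a few lines. The names `C` and `G` (system and gold clusterings), the function names, and the toy clusters are illustrative choices. One assumption is made explicit in the code: the number of "correctly matched" entities in a system cluster is taken to be its largest overlap with any gold cluster, which is a common convention in knowledge base canonicalization but is not spelled out in the text. Recall is obtained by swapping the two arguments.

```python
from itertools import combinations
from typing import FrozenSet, List, Set

Cluster = FrozenSet[str]


def macro_precision(C: List[Cluster], G: List[Cluster]) -> float:
    """Fraction of clusters in C that are fully contained in some cluster of G."""
    return sum(any(c <= g for g in G) for c in C) / len(C)


def micro_precision(C: List[Cluster], G: List[Cluster]) -> float:
    """Assumption: a cluster's correct entities = its largest overlap with a gold cluster."""
    total = sum(len(c) for c in C)
    return sum(max(len(c & g) for g in G) for c in C) / total


def pairs(clusters: List[Cluster]) -> Set[FrozenSet[str]]:
    """All unordered entity pairs that share a cluster."""
    return {frozenset(p) for c in clusters for p in combinations(sorted(c), 2)}


def pairwise_precision(C: List[Cluster], G: List[Cluster]) -> float:
    """Fraction of merged pairs in C that are also merged in G."""
    sys_pairs, gold_pairs = pairs(C), pairs(G)
    # Convention chosen here: no merged pairs means nothing was merged wrongly.
    return len(sys_pairs & gold_pairs) / len(sys_pairs) if sys_pairs else 1.0


# Hypothetical toy clusterings
G = [frozenset({"APT28", "Fancy Bear", "Sofacy"}),
     frozenset({"WannaCry", "WCry"}),
     frozenset({"Emotet"})]
C = [frozenset({"APT28", "Fancy Bear"}),
     frozenset({"Sofacy", "Emotet"}),
     frozenset({"WannaCry", "WCry"})]

print("macro P/R:", macro_precision(C, G), macro_precision(G, C))
print("micro P:", micro_precision(C, G))
print("pairwise P/R:", pairwise_precision(C, G), pairwise_precision(G, C))
```

In this toy case the wrongly merged cluster {Sofacy, Emotet} lowers macro precision and pairwise precision to 2/3, while the unmerged alias Sofacy lowers pairwise recall to 1/2, showing how the metrics separate over-merging from under-merging.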

This study adopts the F-measure (F1-score) as the core evaluation metric, defined as the harmonic mean of precision and recall. The optimal HAC threshold is determined via grid search on the validation set. Entity similarity is computed using SecureBERT_Plus-generated context-aware embeddings, obtained by encoding full threat intelligence sentences to capture fine-grained cybersecurity semantics. The results are presented in Table 7.

The experimental results demonstrate that the generated entity clusters effectively capture the semantic meaning of entities and cover a large portion of the gold standard with high recall. At the macro level, the entity clusters achieve 87.6% recall and 80.9% precision (F1-score = 84.1%). This indicates that nearly 88% of the gold standard clusters are covered by the generated clusters, and about 81% of the generated clusters are semantically pure (e.g., “Fancy Bear” and “APT28” are merged as the same attack group).
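As a quick consistency check, the macro-level F1-score follows from the reported precision and recall via the harmonic mean:

$$F_1 = \frac{2 \cdot P \cdot R}{P + R} = \frac{2 \times 0.809 \times 0.876}{0.809 + 0.876} = \frac{1.417}{1.685} \approx 0.841$$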

Although micro-level precision is slightly lower, it still demonstrates the method’s strong performance in entity disambiguation, particularly when handling cross-source entities. The disambiguation of coreferential entity pairs shows clustering consistency with 80.6% recall and 56.8% precision. Because pairwise precision is considerably lower than pairwise recall, these results point to clear directions for future optimization, particularly in enhancing the accuracy of cross-source alias mappings.

Thus, it can be concluded that the proposed method meets the expected goals in improving entity disambiguation accuracy. Future work could focus on further optimizing the HAC strategy or integrating more advanced deep learning methods to improve cross-source alias handling and enhance global consistency.