1. Introduction

It is well known that for the purpose of modeling dependence in a risk management setting, the multivariate normal distribution is not flexible enough, and therefore its use can lead to a misleading assessment of risk(s). Indeed, the multivariate normal has light tails and its copula is tail-independent such that inference based on this model heavily underestimates joint extreme events. An important class of distributions that generalizes this simple model is that of

normal variance mixtures. A random vector

follows a normal variance mixture, denoted by

, if, in distribution,

where

is the

location (vector),

for

denotes the symmetric, positive semidefinite

scale (matrix) and

is a non-negative random variable independent of the random vector

(where

denotes the identity matrix); see, for example, (

McNeil et al. 2015, Section 6.2) or (

Hintz et al. 2020). Here, the random variable

W can be thought of as a shock mixing the normal

, thus allowing

to have different tail behavior and dependence structure than the special case of a multivariate normal.

The multivariate

t distribution with

degrees of freedom (dof) is also a special case of (

1), for

; a random variable (rv)

W is said to follow an inverse-gamma distribution with shape

and rate

, notation

, if

W has density

for

(here,

denotes the gamma function). If

, then

,

, so that all margins are univariate

t with the same dof

. The

t copula, which is the implicitly derived copula from

for a correlation matrix

P via Sklar’s theorem, is a widely used copula in risk management; see, e.g.,

Demarta and McNeil (

2005). It allows one to model pairwise dependencies, including tail dependence, flexibly via the correlation matrix

P. When

, all

k-dimensional margins of

are identically distributed. To overcome this limitation, one can allow different margins to have different dof. On a copula level, this leads to the notion of grouped

t copulas of

Daul et al. (

2003) and generalized

t copulas of

Luo and Shevchenko (

2010).

In this paper, we, more generally, define

grouped normal variance mixtures via the stochastic representation

where

is a

d-dimensional non-negative and comonotone random vector with

that is independent of

. Denote by

the quantile function of a random variable

W. Comonotonicity of the

implies the stochastic representation

If a

d-dimensional random vector

satisfies (

2) with

given as in (

3), we use the notation

where

for

and the inequality is understood component-wise.

As mentioned above, in the case of an (ungrouped) normal variance mixture distribution from (

1), the scalar random variable (rv)

W can be regarded as a shock affecting all components of

. In the more general setting considered in this paper where

is a vector of comonotone mixing rvs, different, perfectly dependent random variables affect different margins of

. By moving from a scalar mixing rv to a comonotone random vector, one obtains non-elliptical distributions well beyond the classical multivariate

t case, giving rise to flexible modeling of joint and marginal body and tail behaviors. The price to pay for this generalization is significant computational challenges: Not even the density of a grouped

t distribution is available in closed form.

At first glance, the definition given in (

2) does not indicate any “grouping” yet. However, Equation (

3) allows one to group components of the random vector

such that all components within a group have the same mixing distribution. More precisely, let

be split into

S sub-vectors, i.e.,

where

has dimension

for

and

. Now let each

have stochastic representation

. Hence, all univariate margins of the subvector

are identically distributed. This implies that all margins of the corresponding subvector

are of the same type.

An example is the copula derived from

in (

2) when

for

; this is the aforementioned grouped

t copula. Here, different margins of the copula follow (potentially) different

t copulas with different dof, allowing for more flexibility in modeling pairwise dependencies. A grouped

t copula with

, that is when each component has their own mixing distribution, was proposed in

Venter et al. (

2007) (therein called “individuated

t copula”) and studied in more detail in

Luo and Shevchenko (

2010) (therein called “

t copula with multiple dof”). If

, the classical

t copula with exactly one dof parameter is recovered.

For notational convenience, derivations in this paper are often done for the case

, so that the

are all different; the case

, that is when grouping is present, is merely a special case where some of the

are identical. That being said, we chose to keep the name “grouped” to refer to this class of models so as to reflect the original motivation for this type of model, e.g., as in

Daul et al. (

2003), where it is used to model the components of a portfolio in which there are subgroups representing different business sectors.

Previous work on grouped

t copulas and their corresponding distributions includes some algorithms for the tasks needed to handle these models, but were mostly focused on demonstrating the superiority of this class of models over special cases such as the multivariate normal or

t distribution. More precisely, in

Daul et al. (

2003), the grouped

t copula was introduced and applied to model an internationally diversified credit portfolio of 92 risk factors split into 8 subgroups. It was demonstrated that the grouped

t copula is superior to both the Gaussian and

t copula in regards to modeling the tail dependence present in the data.

Luo and Shevchenko (

2010) also study the grouped

t copula and, unlike in

Daul et al. (

2003), allow group sizes of 1 (corresponding to

in our definition). They provide calibration methods to fit the copula to data and furthermore study bivariate characteristics of the grouped

t copula, including symmetry properties and tail dependence.

However, to the best of our knowledge, there currently does not exist an encompassing body of work providing all algorithms and formulas required to handle these copulas and their corresponding distributions, both in terms of evaluating distributional quantities and in terms of general fitting algorithms. In particular, not even the problem of computing the distribution and density function of a grouped t copula has been addressed. Our paper fills this gap by providing a complete set of algorithms for performing the main computational tasks associated with these distributions and their associated copulas, and does so in an as automated way as possible. This is done not only for grouped t copulas, but (in many cases) for the more general grouped normal variance mixture distributions/copulas, which allow for even further flexibility in modeling the shock variables . Furthermore, we assume that the only available information about the distribution of the are the marginal quantile functions in the form of a “black box“, meaning that we can only evaluate these quantile functions but have no mathematical expression for them (so that neither the density, nor the distribution function of are available in closed form).

Our work includes the following contributions: (i) we develop an algorithm to evaluate the distribution function of a grouped NVM model. Our method only requires the user to provide a function that evaluates the quantile function of the

through a black box. As such, different mixing distributions can be studied by merely providing a quantile function without having to implement an integration routine for the model at hand; (ii) as mentioned above, the density function for a grouped

t distribution does not exist in closed form, neither does it for the more general grouped NVM case. We provide an adaptive algorithm to estimate this density function in a very general setting. The adaptive mechanism we propose ensures the estimation procedure is precise even for points that are far from the mean; (iii) to estimate Kendall’s tau and Spearman’s rho for a two-dimensional grouped NVM copula, we provide a representation as an expectation, which in turn leads to an easy-to-approximate two- or three-dimensional integral; (iv) we provide an algorithm to estimate the copula and its density associated with the grouped

t copula, and fitting algorithms to estimate the parameters of a grouped NVM copula based on a dataset. While the problem of parameter estimation was already studied in

Daul et al. (

2003) and

Luo and Shevchenko (

2010), the computation of the copula density which is required for the joint estimation of all dof parameters has not been investigated in full generality for arbitrary dimensions yet, which is a gap we fill in this paper.

The four items from the list of contributions described in the previous paragraph correspond to

Section 3,

Section 4,

Section 5 and

Section 6 of the paper.

Section 2 includes a brief presentation of the notation used, basic properties of grouped NVM distributions and a description of randomized quasi-Monte Carlo methods that are used throughout the paper since most quantities of interest require the approximation of integrals.

Section 7 provides a discussion. The proofs are given in

Section 8.

All our methods are implemented in the

R package

nvmix (

Hofert et al. (

2020)) and all numerical results are reproducible with the demo

grouped_mixtures.

3. Distribution Function

Let componentwise (entries to be interpreted as the corresponding limits). Then is the probability that the random vector falls in the hyper-rectangle spanned by the lower-left and upper-right endpoints and , respectively. If , we recover which is the (cumulative) distribution function of .

Assume wlog that

, otherwise adjust

,

accordingly. Then

where

for

. Note that the function

itself is a

d-dimensional integral for which no closed formula exists and is typically approximated via numerical methods; see, e.g.,

Genz (

1992).

Comonotonicity of the

allowed us to write

as a

-dimensional integral; had the

a different dependence structure, this convenience would be lost and the resulting integral in (

11) could be up to

-dimensional (e.g., when all

are independent).

3.1. Estimation

In

Hintz et al. (

2020), randomized quasi-Monte Carlo methods have been derived to approximate the distribution function of a normal variance mixture

from (

1). Grouped normal variance mixtures can be dealt with similarly, thanks to the comonotonicity of the mixing random variables in

.

In order to apply RQMC to the problem of estimating

, we need to transform

to an integral over the unit hypercube. To this end, we first address

. Let

be the Cholesky factor of

(a lower triangular matrix such that

). We assume that

has full rank which implies

for

.

Genz (

1992) (see also

Genz and Bretz (

1999,

2002,

2009)) uses a series of transformations, relying on

C being a lower triangular matrix, to write

where the

and

are recursively defined via

and

is

with

replaced by

for

. Note that the final integral in (

12) is

-dimensional.

Combining the representation (

12) of

with Equation (

11) yields

where

for

. The

are recursively defined by

for

and the

are

with

replaced by

for

.

Summarizing, we were able to write as an integral over the d-dimensional unit hypercube. Our algorithm to approximate consists of two steps:

First, a greedy re-ordering algorithm is applied to the inputs

,

,

. It re-orders the components

of

and

as well as the corresponding rows and columns in

in a way that the expected ranges of

in (

15) are increasing with the index

i for

. Observe that the integration variable

is present in all remaining

integrals in (

14) whose ranges are determined by the ranges of

; reordering the variables according to expected ranges therefore (in the vast majority of cases) reduces the overall variability of

g (namely,

for

). Reordering also makes the first variables “more important” than the last ones, thereby reducing the effective dimension of the integrand. This is particularly beneficial for quasi-Monte Carlo methods, as these methods are known to perform well in high-dimensional problems with low effective dimension; see, e.g.,

Caflisch et al. (

1997),

Wang and Sloan (

2005). For a detailed description of the method, see (

Hintz et al. 2020, Algorithm 3.2) (with

replaced by

and similarly for

for

to account for the generalization); similar reordering strategies have been proposed in

Gibson et al. (

1994) for calculating multivariate normal and in

Genz and Bretz (

2002) for multivariate

t probabilities.

Second, an RQMC algorithm as described in

Section 2.2 is applied to approximate the integral in (

14) with re-ordered

,

,

and

. Instead of integrating

g from (

15) directly, antithetic variates are employed so that effectively, the function

is integrated.

The algorithm to estimate just described is implemented in the function pgnvmix() of the R package nvmix.

3.2. Numerical Results

In order to assess the performance of our algorithm described in

Section 3.1, we estimate the error as a function of the number of function evaluations. Three estimators are considered. First, the “Crude MC“ estimator is constructed by sampling

and estimating

by

. The second and third estimator are based on the integrand

g from (

15), which is integrated once using MC (“g (MC)”) and once using a randomized Sobol’ sequence (“g (sobol)”). In either case, variable reordering is applied first.

We perform our experiments for an inverse-gamma mixture. As motivated in the introduction, an important special case of (grouped) normal variance mixtures is obtained when the mixing distribution is inverse-gamma. In the ungrouped case when

with

, the distribution of

is multivariate

t (notation

) with density

The distribution function of

does not admit a closed form; estimation of the latter was discussed for instance in

Genz and Bretz (

2009),

Hintz et al. (

2020),

Cao et al. (

2020). The same holds for a grouped inverse-gamma mixture model. If

for

, the random vector

follows a grouped

t distribution, denoted by

or by

for

. If

, denote by

the group sizes. In this case, we use the notation

or

for

. If

, it follows that

.

For our numerical examples to test the performance of our procedure for estimating

, assume

for a correlation matrix

P. We perform the experiment in

with

and in

with

. The following is repeated 15 times: Sample an upper limit

and a correlation matrix

P (sampled based on a random Wishart matrix via the function

rWishart() in R). Then estimate

using the three aforementioned methods using various sample sizes and estimate the error for the MC estimators based on a CLT argument and for the RQMC estimator as described in

Section 2.2.

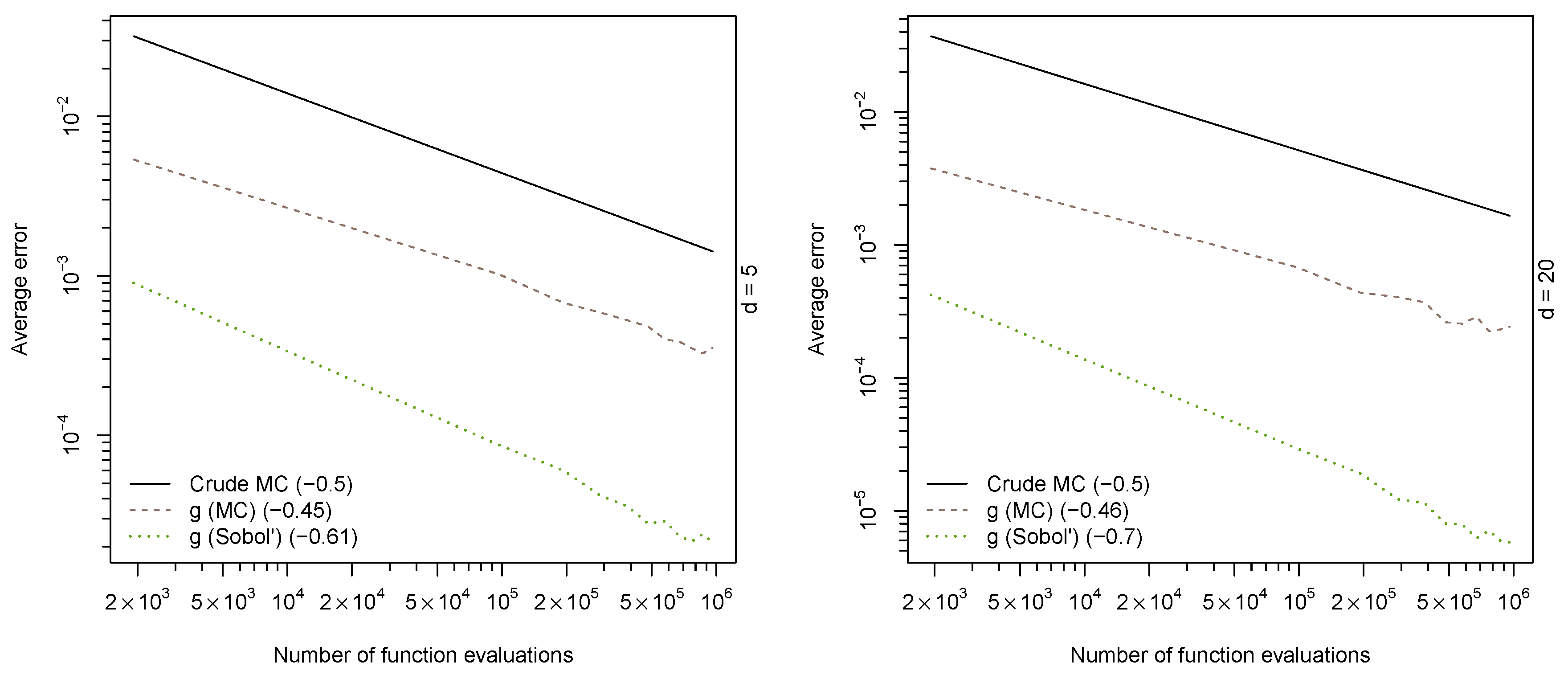

Figure 1 reports the average absolute errors for each sample size over the 15 runs.

Convergence speed as measured by the regression coefficient of where is the estimated error are displayed in the legend. As expected, the MC estimators have an overall convergence speed of ; however, the crude estimator has a much larger variance than the MC estimator based on the function g. The RQMC estimator (“g (sobol)”) not only shows much faster convergence speed than its MC counterparts, but also a smaller variance.

4. Density Function

Let us now focus on the density of

, where we assume that

has full rank in order for the density to exist. As mentioned in the introduction, the density of

is typically not available in closed form, not even in the case of a grouped

t distribution. The same conditioning argument used to derive (

11) yields that the density of

evaluated at

can be written as

where

denotes the (squared) Mahalanobis distance of

from

with respect to

and the integrand

is defined in an obvious manner. Except for some special cases (e.g., when all

are inverse-gamma with the same parameters), this integral cannot be computed explicitly, so that we rely on numerical approximation thereof.

4.1. Estimation

From (

18), we find that computing the density

of

evaluated at

requires the estimation of a univariate integral. As interest often lies in the logarithmic density (or log-density) rather than the actual density (e.g., likelihood-based methods where the log-likelihood function of a random sample is optimized over some parameter space), we directly consider the problem of estimating

for

with

h given in (

18).

Since

is expressed as an integral over

, RQMC methods to estimate

from

Section 2.2 can be applied directly to the problem in this form. If the log-density needs to be evaluated at several

, one can use the same point-sets

and therefore the same realizations of the mixing random vector

for all inputs. This avoids costly evaluations of the quantile functions

.

Estimating

via RQMC as just described works well for input

of moderate size, but deteriorates if

is far away from the mean. To see this,

Figure 2 shows the integrand

h for three different input

and three different settings for

. If

is “large”, most of the mass is contained in a small subdomain of

containing the abscissa of the maximum of

h. If an integration routine is not able to detect this peak, the density is substantially underestimated. Further complication arises as we are estimating the log-density rather than the density. Unboundedness of the natural logarithm at 0 makes estimation of

for small

challenging, both from a theoretical and a computational point of view due to finite machine precision.

In (

Hintz et al. 2020, Section 4), an adaptive RQMC algorithm is proposed to efficiently estimate the log-density of

. We generalize this method to the grouped case. The grouped case is more complicated because the distribution is not elliptical, hence the density does not only depend on

through

. Furthermore, the height of the (unique) maximum of

h in the ungrouped case can be easily computed without simulation, which helps the adaptive procedure find the relevant region; in the grouped case, the value of the maximum is usually not available. Lastly,

S (as opposed to 1) quantile evaluations are needed to obtain one function value

; from a run time perspective, evaluating these quantile functions is the most expensive part.

If

is “large”, the idea is to apply RQMC only in a relevant region

with

. More precisely, given a threshold

with

, choose

(

l for “left” and

r for “right”) with

so that

if and only if

. For instance, take

with

so that

is 10 orders smaller than

.

One can then apply RQMC (with a proper logarithm) in the region (by replacing every by ), producing an estimate for . By construction, the remaining regions do not contribute significantly to the overall integral anyway, so that a rather quick integration routine suffices here. Note that neither , nor are known explicitly. However, can be estimated from pilot-runs and can be approximated using bisections.

Summarizing, we propose the following method to estimate , , for given inputs and error tolerance .

This algorithm is implemented in the function dgnvmix(, log = TRUE) in the R package nvmix, which by default uses a relative error tolerance.

The advantage of the proposed algorithm is that only little run time is spent on estimating “easy” integrals, thanks to the pilot run in Step 1. If and (the current default in the nvmix package), this step gives 15 360 pairs . These pairs give good starting values for the bisections to find . Note that no additional quantile evaluations are needed to estimate the less important regions and .

4.2. Numerical Results

Luo and Shevchenko (

2010) are faced with almost the same integration problem when estimating the density of a bivariate grouped

t copula. They use a globally adaptive integration scheme from

Piessens et al. (

2012) to integrate

h. While this procedure works well for a range of inputs, it deteriorates for input

with large components.

Consider first

and recall that the density of

is known and given by (

17); this is useful to test our estimation procedure. As such, let

and consider the problem of evaluating the density of

at

. Some values of the corresponding integrands are shown in

Figure 2. In

Table 1, true and estimated (log-)density values are reported; once estimated using the

R function

integrate(), which is based on the QUADPACK package of

Piessens et al. (

2012) and once using

dgnvmix(), which is based on Algorithm 1. Clearly, the

integrate() integration routine is not capable of detecting the peak when input

is large, yielding substantially flawed estimates. The estimates obtained from

dgnvmix(), however, are quite close to the true values even far out in the tail.

| Algorithm 1: Adaptive RQMC Algorithm to Estimate . |

Given , , , , , estimate , , via: Compute with sample size using the same random numbers for all input , . Store all uniforms with corresponding quantile evaluations in a list . If all estimates , , meet the error tolerance , go to Step 4. Otherwise let , with be the inputs whose error estimates exceed the error tolerance. For each remaining input , , do:

- (a)

Use all pairs in to compute values of and set . If the largest value of h is obtained for the largest (smallest) u in the list , set (). - (b)

If , set and if , set . Unless already specified, use bisections to find and such that and ( ) is the smallest (largest) u such that from ( 19) with replaced by . Starting intervals for the bisections can be found from the values in . - (c)

If , approximate using a trapezoidal rule with proper logarithm and knots where are those u’s in satisfying . Call the approximation . If , set . - (d)

If , approximate using a trapezoidal rule with proper logarithm and knots where are those u’s in satisfying . Call the approximation . If , set . - (e)

Estimate via RQMC. That is, compute from ( 10) where every is replaced by . Increase n until the error tolerance is met. Then set which estimates . - (f)

Return , .

|

The preceding discussion focused on the classical multivariate

t setting, as the density is known in this case. Next, consider a grouped inverse-gamma mixture model and let

. The density

of

is not available in closed form, so that here we indeed need to rely on estimation of the latter. The following experiment is performed for

with

and for

where

(corresponding to two groups of size 5 each). First, a sample from a more heavy tailed grouped

t distribution of size 2500 is sampled (with degrees of freedom

and

, respectively) and then the log-density function of

is evaluated at the sample. The results are shown in

Figure 3.

It is clear from the plots that integrate() again gives wrong approximations to for input far out in the tail; for small input , the results from integrate() and from dgnvmix() coincide. Furthermore, it can be seen that the density function is not monotonic in the Mahalanobis distance (as grouped normal mixtures are not elliptical anymore). The plot also includes the log-density functions of an ungrouped d-dimensional t distribution with degrees of freedom 3 and 6, respectively. The log-density function of the grouped mixture with is not bounded by either; in fact, the grouped mixture shows heavier tails than both the t distribution with 3 and with 6 dof.

5. Kendall tau and Spearman rho

Two widely used measures of association are the rank correlation coefficients Spearman’s rho and Kendall’s tau . For elliptical models, one can easily compute Spearman’s rho as a function of the copula parameter which can be useful in estimating the matrix P non-parametrically. For grouped mixtures, however, this is not easily possible. In this section, integral representations for Spearman’s rho and Kendall’s tau in the general grouped NVM case are derived.

If is a random vector with continuous margins , then and , where independent of and is the linear correlation between X and Y. Both and depend only on the copula of F.

If

is elliptical and

, then

see (

Lindskog et al. 2003, Theorem 2). This formula holds only approximately for grouped normal variance mixtures. In

Daul et al. (

2003), an expression was derived for Kendall’s tau of bivariate, grouped

t copulas. Their result is easily extended to the more general grouped normal variance mixture case; see

Section 8 for the proof.

Proposition 1. Let and . Then where .

Next, we address Spearman’s rho

. For computing

, it is useful to study

. If

where

P is a correlation matrix with

and

, then

see, e.g., (

McNeil et al. 2015, Proposition 7.41). Using the same technique, we can show that this result also holds for grouped normal variance mixtures; see

Section 8 for the proof.

Proposition 2. Let and . Then Remark 1. If is a grouped elliptical distribution in the sense of (5), a very similar idea can be used to show that . Next, we derive a new expression for Spearman’s rho

for bivariate grouped normal variance mixture distributions; see

Section 8 for the proof.

Proposition 3. Let and . Thenwhere . Numerical Results

Let

. It follows from Proposition 1 that

Similarly, Proposition 3 implies that

Hence, both

and

can be expressed as integrals over the

d-dimensional unit hypercube with

so that RQMC methods as described in

Section 2.2 can be applied directly to the problem in this form to estimate

and

, respectively. This is implemented in the function

corgnvmix() (with

method = "kendall" or

method = "spearman") of the

R package

nvmix.

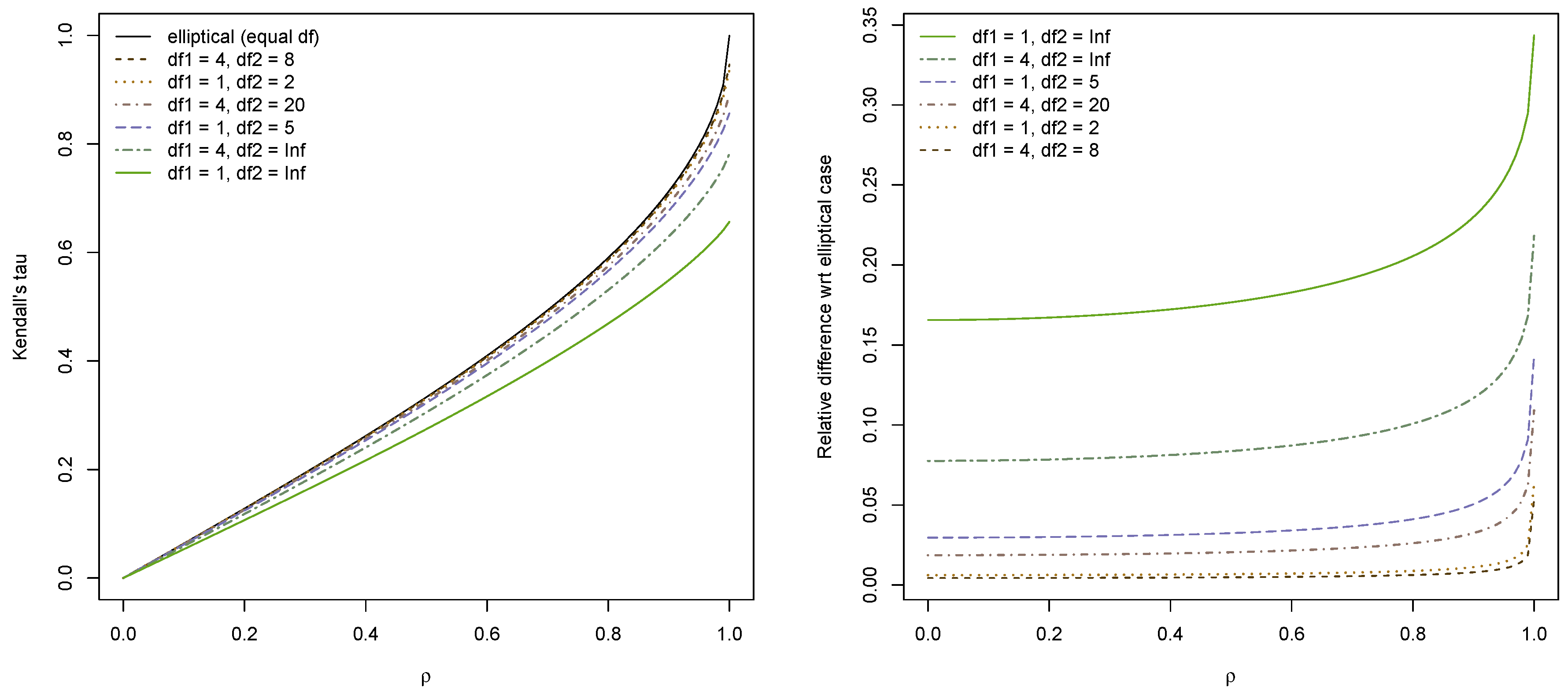

As an example, we consider three different bivariate grouped

t distributions with

and plot estimated

as a function of

in

Figure 4. The elliptical case (corresponding to equal dof) is included for comparison. When the pairwise dof are close and

is not too close to 1, the elliptical approximation is quite satisfactory. However, when the dof are further apart there is a significant difference between the estimated

and the elliptical approximation. This is highlighted in the plot on the right side, which displays the relative difference

. Intuitively, it makes sense that the approximation deteriorates when the dof are further apart, as the closer the dof, the “closer” is the model to being elliptical.

6. Copula Setting

So far, the focus of this paper was on grouped normal variance mixtures. This section addresses grouped normal variance mixture copulas, i.e., the copulas derived from via Sklar’s theorem. The first part addresses grouped NVM copulas in full generality and provides formulas for the copula, its density and the tail dependence coefficients. The second part details the important special case of inverse-gamma mixture copulas, that is copulas derived from a grouped t distribution, . The third part discusses estimation of the copula and its density whereas the fourth part answers the question of how copula parameters can be fitted to a dataset. The last part of this section includes numerical examples.

6.1. Grouped Normal Variance Mixture Copulas

Copulas provide a flexible tool for modeling dependent risks, as they allow one to model the margins separately from the dependence between the margins. Let

be a

d-dimensional random vector with continuous margins

. Consider the random vector

given by

; note that

for

. The

copula C of

F (or

) is the distribution function of the margin-free

, i.e.,

If

F is absolutely continuous and the margins

are strictly increasing and continuous, the

copula density is given by

where

f denotes the (joint) density of

F and

is the marginal density of

. For more about copulas and their applications to risk management, see, e.g.,

Embrechts et al. (

2001);

Nelsen (

2007).

Since copulas are invariant with respect to strictly increasing marginal transformations, we may wlog assume that

,

is a correlation matrix and we may consider

. We find using (

11) that the

grouped normal variance mixture copula is given by

and its density can be computed using (

18) as

where

and

denote the distribution function and density function of

for

; directly considering

also makes (

25) more robust to compute.

In the remainder of this subsection, some useful properties of gNVM copulas are derived. In particular, we study symmetry properties, rank correlation and tail dependence coefficients.

6.1.1. Radial Symmetry and Exchangeability

A

d-dimensional random vector

is radially symmetric about

if

. It is evident from (

2) that

is radially symmetric about its location vector

. In layman’s terms this implies that jointly large values of

are as likely as jointly small values of

. Radial symmetry also implies that

.

If for all permutations of , the random vector is called exchangeable. The same definition applies to copulas. If , then is in general not exchangeable unless in which case . The lack of exchangeability implies that , in general.

6.1.2. Tail Dependence Coefficients

Consider a bivariate

copula. Such copula is radially symmetric, hence the lower and upper tail dependence coefficients are equal, i.e.,

, where

for

. In the case where only the quantile functions

are available, no simple expression for

is available. In

Luo and Shevchenko (

2010),

is derived for grouped

t copulas, as will be discussed in

Section 6.2. Following the arguments used in their proof, the following lemma provides a new expression for

in the more general normal variance mixture case.

Proposition 4. The tail dependence coefficient λ for a bivariate with satisfieswhere for , 6.2. Inverse-Gamma Mixtures

If

for a positive definite correlation matrix

P, the copula of

extracted via Sklar’s theorem is the well known

t copula, denoted by

. This copula is given by

where

and

denote the distribution function and quantile function of a univariate standard

t distribution. Note that (

26) is merely the distribution function of

evaluated at the quantiles

. The copula density

is

The (upper and lower) tail dependence coefficient

of the bivariate

with

is well known to be

see (

Demarta and McNeil 2005, Propositon 1). The multivariate

t distribution being elliptical implies the formula

for Kendall’s tau.

A closed formula for Spearman’s rho is not available, but our Proposition 3 implies that

Next, consider a grouped inverse-gamma mixture model. If

, the copula of

is the grouped

t copula, denoted by

. From (

24),

and the copula density follows from (

25) as

The (lower and upper) tail dependence coefficient

of

is given by

see (

Luo and Shevchenko 2010, Equation (26)). Here,

denotes the density of a

distribution.

Finally, consider rank correlation coefficients for grouped

t copulas. No closed formula for either Kendall’s tau or Spearman’s rho exists in the grouped

t case. An exact integral representation of

for

follows from Proposition 1. No substantial simplification of (

21) therein can be achieved by considering the special case when

. In order to compute

, one can either numerically integrate (

21) (as will be discussed in the next subsection) or use the approximation

which was shown to be a “very accurate” approximation in

Daul et al. (

2003).

For Spearman’s rho, no closed formula can be derived either, not even in the ungrouped

t copula case, so that the integral in (

22) in Proposition 3 needs be computed numerically, as will be discussed in the next subsection.

The discussion in this section highlights that moving from a scalar mixing rv W (as in the classical t case) to comonotone mixing rvs (as in the grouped t case) introduces challenges from a computational point of view. While in the classical t setting, the density, Kendall’s tau and the tail dependence coefficient are available in closed form, all of these quantities need to be estimated in the more general grouped setting. Efficient estimation of these important quantities is discussed in the next subsection.

6.3. Estimation of the Copula and Its Density

Consider a

d-dimensional normal variance mixture copula

. From (

24), it follows that

where

is the distribution function of

and

is the distribution function of

for

. If the margins are known (as in the case of an inverse-gamma mixture), evaluating the copula is no harder than evaluating the distribution function of

so that the methods described in

Section 3.1 can be applied.

When the mixing rvs

are only known through their quantile functions in the form of a “black box”, one needs to estimate the marginal quantiles

of

F first. Note that

which can be estimated using RQMC. The quantile

can then be estimated by numerically solving

for

x, for instance using a bisection algorithm or Newton’s method.

The general form of gNVM copula densities was given in (

25). Again, if the margins are known, the only unknown quantity is the joint density

which can be estimated using the adaptive RQMC procedure proposed in

Section 4.1. If the margins are not available,

can be estimated as discussed above. The marginal densities

can be estimated using an adaptive RQMC algorithm similar to the one developed in

Section 4.1; see also (

Hintz et al. 2020, Section 4).

Remark 2. Estimating the copula density is the most challenging problem discussed in this paper if we assume that is only known via its marginal quantile functions. Evaluating the copula density at one requires estimation of:

the marginal quantiles , which involves estimation of and then numerical root finding, for each ,

the marginal densities evaluated at the quantiles for . This involves estimation of the density of a univariate normal variance mixture,

the joint density evaluated at the quantiles , which is another one dimensional integration problem.

It follows from Remark 2 that, while estimation of is theoretically possible with the methods proposed in this paper, the problem becomes computationally intractable for large dimensions d. If the margins are known, however, our proposed methods are efficient and accurate, as demonstrated in next subsection, where we focus on the important case of a grouped t model. Our methods to estimate the copula and the density of are implemented in the functions pgStudentcopula() and dgStudentcopula() in the R package nvmix.

6.4. Fitting Copula Parameters to a Dataset

In this subsection, we discuss estimation methods for grouped normal variance mixture copulas. Let be independent and distributed according to some distribution with as underlying copula, with and group sizes with . Furthermore, let be (a vector of) parameters of the kth mixing distribution for ; for instance, in the grouped t case, is the degrees of freedom for group k. Finally, denote by the vector consisting of all mixing parameters. Note that we assume that the group structure is given. We are interested in estimating the parameter vector and the matrix P of the underlying copula .

In

Daul et al. (

2003), this problem was discussed for the grouped

t copula where

for

. In this case, all subgroups are

t copulas and

Daul et al. (

2003) suggest estimating the dof

separately in each subgroup. Computationally, this is rather simple as the density of the ungrouped

t copula is known analytically.

Luo and Shevchenko (

2010) consider the grouped

t copula with

, so

for

. Since any univariate margin of a copula is uniformly distributed, separate estimation is not feasible. As such,

Luo and Shevchenko (

2010) suggest estimating

jointly by maximizing the copula-likelihood of the grouped mixture. In both references, the matrix

P is estimated by estimating pairwise Kendall’s tau and using the approximate identity

for

. Although we have shown in

Section 5 that in some cases, this approximation could be too crude, our assessment is that in the context of the fitting examples considered in the present section, this approximation is sufficiently accurate.

Luo and Shevchenko (

2010) also consider joint estimation of

by maximizing the corresponding copula likelihood simultaneously over all

parameters. Their numerical results in

suggest that this does not lead to a significant improvement. In large dimensions

, the optimization problem becomes intractable, however, so that the first non-parametric approach for estimating

P is likely to be preferred.

We combine the two estimation methods, applied to the general case of a grouped normal variance mixture, in Algorithm 2.

| Algorithm 2: Estimation of the Copula Parameters and of . |

Given iid , estimate and P of the underlying as follows: Estimation of P. Estimate Kendall’s tau for each pair . Use the approximate identity to find the estimates . Then combine the estimates into a correlation matrix , which may have to be modified to ensure positive definiteness. Transformation to pseudo-observations. If necessary, transform the data to pseudo-observations from the underlying copula, for instance, by setting where is the rank of among . Initial parameters. Maximize the copula log-likelihood for each subgroup k with over their respective parameters separately. That is, if (where ) denotes the sub-vector of belonging to group k, and if is defined accordingly, solve the following optimization problems:

For “groups” with , choose the initial estimate from prior/expert experience or as a hard-coded value. Joint estimation. With initial estimates , at hand, optimize the full copula likelihood to estimate ; that is,

|

The method proposed in

Daul et al. (

2003) returns the initial estimates obtained in Step 3. A potential drawback of this approach is that it fails to consider the dependence between the groups correctly. Indeed, the dependence between a component in group

and a component in group

(e.g., measured by Kendall’s tau or by the tail-dependence coefficient) is determined by both

and

. As such, these parameters should be estimated jointly.

Note that the copula density is not available in closed form, not even in the grouped

t case, so that each call of the likelihood function in (

30) requires the approximation of

n integrals. This poses numerical challenges, as the estimated likelihood function is typically “bumpy”, having many local maxima due to estimation errors.

If

is only known via its marginal quantile functions, as is the general theme of this paper, the optimization problem in (

29) and in (

30) become intractable (unless

d and

n are small) due to the numerical challenges involved in the estimation of the copula density; see also Remark 2. We leave the problem of fitting grouped normal variance mixture copulas in full generality (where the distribution of the mixing random variables

is only specified via marginal quantile functions in the form of a “black box”) for future research. Instead, we focus on the important case of a grouped

t copula. Here, the quantile functions

(of

) and the densities

are known for

, since the margins are all

t distributed. This substantially simplifies the underlying numerical procedure. Our method is implemented in the function

fitgStudentcopula() of the R package

nvmix. The numerical optimizations in Steps 3 and 4 are passed to the

R optimizer

optim() and the copula density is estimated as in

Section 6.3.

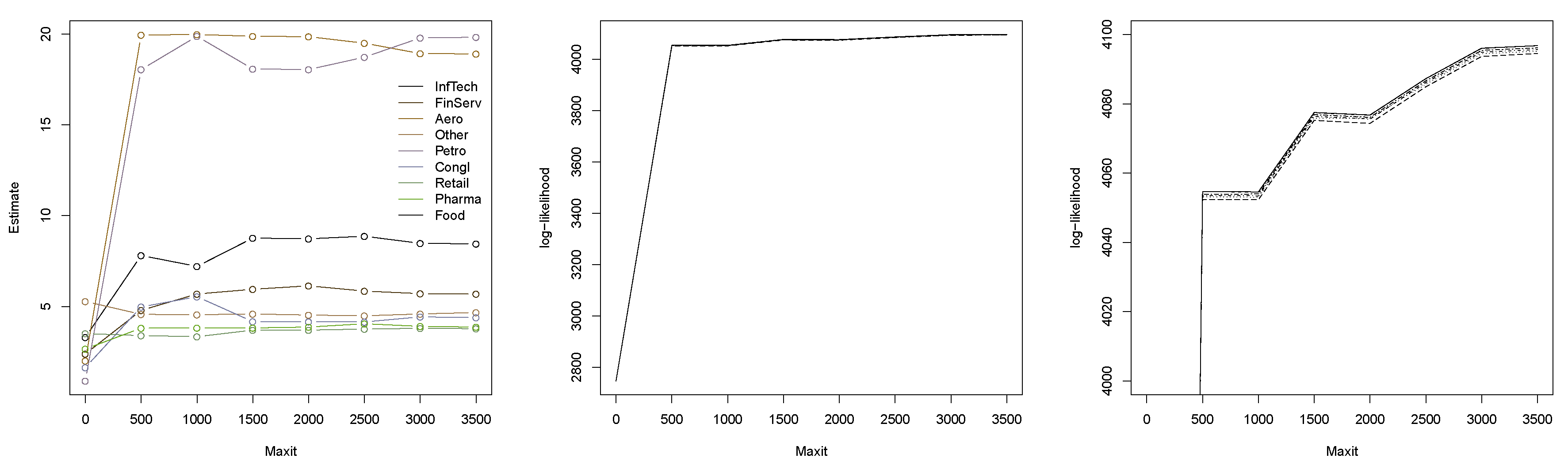

Example 1. Consider a 6-dimensional grouped t copula, with three groups of size 2 each and degrees of freedom and 7, respectively. We perform the following experiment: We sample a correlation matrix P using the R function rWishart(). Then, for each sample size , we repeat sampling 15 times, and in each case, estimate the degrees of freedom once using the method in Daul et al. (2003) (i.e., by estimating the dof in each group separately) and once using our method from the previous section. The true matrix P is used in the fitting, so that the focus is really on estimating the dof. The results are displayed in Figure 5. The estimates on the left are obtained for each group separately; on the right, the dof were estimated jointly by maximizing the full copula likelihood (with initial estimates obtained as in the left figure). Clearly, the jointly estimated parameters are much closer to their true values (which are known in this simulation study and indicated by horizontal lines), and it can be confirmed that the variance decreases with increasing sample size n. Example 2. Let us now consider the negative logarithmic returns of the constituents of the Dow Jones 30 index from 1 January 2014 to 31 December 2015 ( data points obtained from the R package qrmdata of Hofert and Hornik (2016)) and, after deGARCHing, fit a grouped t copula to the standardized residuals. We choose the natural groupings induced by the industry sectors of the 30 constituents and merge groups of size 1 so that 9 groups are left. Figure 6 displays the estimates obtained for various specifications of maxit, the maximum number of iterations for the underlying optimizer (note that the current default of optim() is as low as maxit = 500). The points for maxit = 0 correspond to the initial estimates found from separately fitting t copulas to the groups. The initial estimates differ significantly from the maximum likelihood estimates (MLEs) obtained from the joint estimation of the dof. Note also that the MLEs change with increasing maxit argument, even though they do not change drastically anymore if 1500 or more iterations are used. Note that the initial parameters result in a much more heavy tailed model than the MLEs. Figure 6 also displays the estimated log-likelihood of the parameters found by the fitting procedure. The six lines correspond to the estimated log-likelihood using six different seeds. It can be seen that estimating the dof jointly (as opposed to group-wise) yields a substantially larger log-likelihood, whereas increasing the parameter maxit (beyond a necessary minimum) only gives a minor improvement. In order to examine the impact of the different estimates on the underlying copula in terms of its tail behavior,

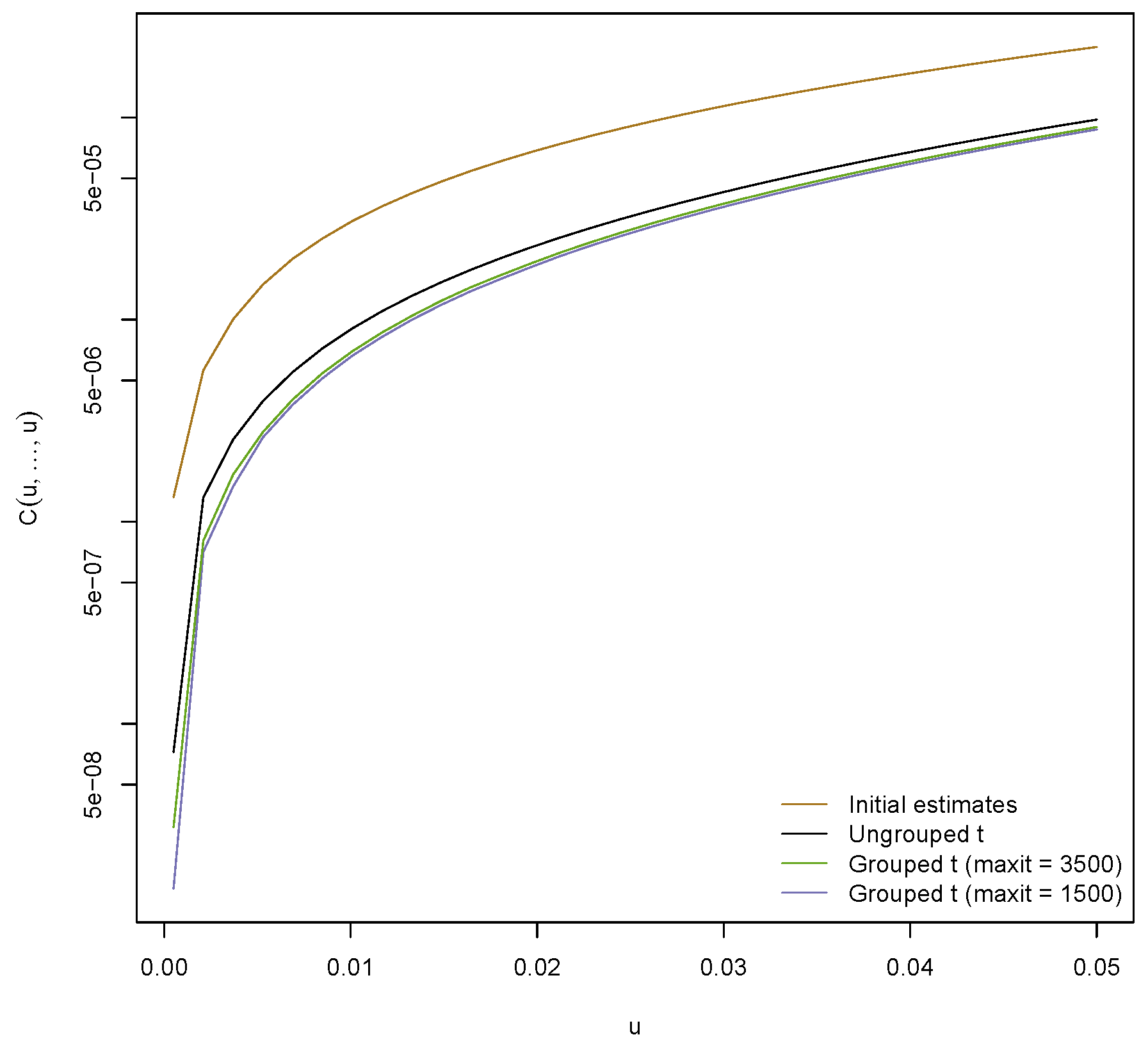

Figure 7 displays the probability

estimated using methods from

Section 6.3 as a function of

u; in a risk management context,

is the probability of a jointly large loss, hence a rare event. An absolute error tolerance of

was used to estimate the copula. The figure also includes the corresponding probability for the ungrouped

t copula, for which the dof were estimated to be 6.3.

Figure 7 indicates that the initial estimates yield the most heavy tailed model. This seems reasonable since all initial estimates for the dof range between 0.9 and 5.3 (with average 2.8). The models obtained from the MLEs exhibit the smallest tail probability, indicating that these are the least heavy tailed models considered here. This is in line with

Figure 6, which shows that the dof are substantially larger than the initial estimates. The ungrouped

t copula is more heavy tailed than the fitted grouped one (with MLEs) but less heavy tailed than the fitted grouped one with initial estimates.

This example demonstrates that it is generally advisable to estimate the dof jointly when grouped modeling is of interest, rather than group-wise as suggested in

Daul et al. (

2003). Indeed, in this particular example, the initial estimates give a model that substantially overestimates the risk of jointly large losses. As can be seen from

Figure 6, optimizing an estimated log-likelihood function is not at all trivial, in particular when many parameters are involved. Indeed, the underlying optimizer never detected convergence, which is why the user needs to carefully assess which specification of

maxit to use. We plan on exploring more elaborate optimization procedures which perform better in large dimensions for this problem in the future.

Example 3. In this example, we consider the problem of mean-variance (MV) portfolio optimization in the classical Markowitz (1952) setting. Consider d assets, and denote by and the expected return vector on the risky assets in excess of the risk free rate and the variance-covariance (VCV) matrix of asset returns in the portfolio at time t, respectively. We assume that an investor chooses the weights of the portfolio to maximize the quadratic utility function , where in what follows we assume the risk-aversion parameter . When there are no shortselling (or other) constraints, one finds the optimal as . As in Low et al. (2016), we consider relative portfolio weights, which are thus given by As such, the investor needs to estimate and . If we assume no shortselling, i.e., for , the optimization problem can be solved numerically, for instance using the R package quadprog of Turlach et al. (2019). Assume we have return data for the d assets stored in vectors , , and a sampling window . We perform an experiment similar to Low et al. (2016) and compare a historical approach with a model-based approach to estimate and . The main steps are as follows: - 1.

In each period , estimate and using the M previous return data , .

- 2.

Compute the optimal portfolio weights and the out-of-sample return .

In the historical approach, and in the first step are merely computed as the sample mean vector and sample VCV matrix of the past return data. Our model-based approach is a simplification of the approach used in Low et al. (2016). In particular, to estimate and in the first step, the following is done in each time period: - 1a.

Fit marginal models with standardized t innovations to , .

- 1b.

Extract the standardized residuals and fit a grouped t copula to the pseudo-observations thereof.

- 1c.

Sample n vectors from the fitted copula, transform the margins by applying the quantile function of the respective standardized t distribution and based on these n d-dimensional residuals, sample from the fitted giving a total of n simulated return vectors, say , .

- 1d.

Estimate and from , .

The historical and model-based approaches each produce out-of-sample returns from which we can estimate the certainty-equivalent return (CER) and the Sharpe-ratio (SR) aswhere and denote the sample mean and sample standard deviation of the out-of-sample returns; see also Tu and Zhou (2011). Note that larger, positive values of the SR and CER indicate better portfolio performance. We consider logarithmic returns of the constituents of the Dow Jones 30 index from 1 January 2013 to 31 December 2014 ( data points obtained from the R package qrmdata of Hofert and Hornik (2016)), a sampling window of days, samples to estimate and in the model-based approach, a risk-free interest rate of zero and no transaction costs. We report (in percent) the point estimates , and for the historical approach and for the model-based approach based on an ungrouped and grouped t copula in Table 2 assuming no shortselling. To limit the run time for this illustrative example, the degrees of freedom for the grouped and ungrouped t copula are estimated once and held fixed throughout all time periods . We see that the point estimates for the grouped model exceed the point estimates for the ungrouped model. 7. Discussion and Conclusions

We introduced the class of grouped normal variance mixtures and provided efficient algorithms to work with this class of distributions: Estimating the distribution function and log-density function, estimating the copula and its density, estimating Spearman’s rho and Kendall’s tau and estimating the parameters of a grouped NVM copula given a dataset. Most algorithms (and functions in the package nvmix) merely require one to provide the quantile function(s) of the mixing distributions. Due to their importance in practice, algorithms presented in this paper (and their implementation in the R package nvmix) are widely applicable in practice.

We saw that the distribution function (and hence, the copula) of grouped NVM distributions can be efficiently estimated even in high dimensions using RQMC algorithms. The density function of grouped NVM distributions is in general not available in closed form, not even for the grouped t distribution, so one relies on its estimation. Our proposed adaptive algorithm is capable of estimating the log-density even in high dimensions accurately and efficiently. Fitting grouped normal variance mixture copulas, such as the grouped t copula, to data is an important yet challenging task due to lack of a tractable density function. Thanks to our adaptive procedure for estimating the density, the parameters can be estimated jointly in the special case of a grouped t copula. As was demonstrated in the previous section, it is indeed advisable to estimate the dof jointly, as otherwise one might severely over- or underestimate the joint tails.

A computational challenge that we plan to further investigate is the optimization of the estimated log-likelihood function, which is currently slow and lacks a reliable convergence criterion that can be used for automation. Another avenue for future research is to study how one can, for a given multivariate dataset, assign the components to homogeneous groups.