1. Introduction

The aim of this paper is to investigate the elasticity of variance of variance of the S&P 500 index. As that concept is not part of the standard financial nomenclature, let us introduce the general set-up. We consider a probability space $(\Omega, \mathcal{F}, \mathbb{P})$ whose filtration is generated by two independent Brownian motions $W_1$ and $W_2$. Letting $S(t)$ denote the value of the S&P 500 index at time $t$, we model its dynamics under the real-world probability measure $\mathbb{P}$ as

$$dS(t) = \mu(t)\,S(t)\,dt + \sqrt{V(t)}\,S(t)\,dW(t), \tag{1}$$

$$dV(t) = \lambda(t)\,dt + \eta\,V(t)^{\gamma}\,dW_1(t), \tag{2}$$

with $\eta > 0$, and where we have defined the Brownian motion $W(t) = \rho\,W_1(t) + \sqrt{1 - \rho^2}\,W_2(t)$ for a $\rho \in [-1, 1]$, meaning that $d\langle W, W_1\rangle(t) = \rho\,dt$. We assume that $\mu$ and $\lambda$ are general (but suitable) adapted processes. We refer to $V$ as the (instantaneous) variance. Our main focus will be the elasticity parameter $\gamma$, which controls the level dependence of the variance of variance, $\eta^2 V(t)^{2\gamma}$, with respect to the instantaneous variance $V(t)$ itself. We will see that determining $\gamma$ is not merely an academic exercise: empirically, the parameter choice is important for model performance, including the effective hedging of options.

There is an abundance of both theoretical and empirical work on stochastic volatility in financial markets. However, as succinctly formulated by Felpel et al. (2020), “Often it is observed that a specific stochastic volatility model is chosen not for particular dynamical features, but instead for convenience and ease of implementation”. In this short paper we aim for an empirical cross-examination of models, methods, and markets. This means that each of the separate analyses may be described (or dismissed) as “quite partial”, but we believe that the sum of their parts brings to the fore some insights that were not hitherto available. In Section 2 we estimate the elasticity from a time series of realized variance, thus extending the analysis in Tegnèr and Poulsen (2018), where only pre-specified values of the elasticity were considered. We end the section with an investigation of features that are not captured by one-factor diffusion-type stochastic volatility models, long memory (long-range dependence) in particular. In Section 3 we turn our attention to option prices to investigate the two most widely used models by far: the square-root model of Heston (1993) and the log-normal SABR (stochastic-alpha-beta-rho) model of Hagan et al. (2002), as well as a simple hybrid of the two. We study in-sample calibration issues along the lines of Guillaume and Schoutens (2010) as well as prediction and hedge performance, adding to the analyses in Rebonato (2020) and Poulsen (2020).

2. Evidence from Realized Variance

In this section we present four different methods for estimating $\gamma$ from time series data, investigate their biases in a simulation experiment, and finally show the estimates on publicly available empirical data: the realized variance obtained from the Oxford-Man Institute’s “realized library”. In Tegnèr and Poulsen (2018) it is demonstrated that it is possible to discriminate between common diffusion-type stochastic volatility models when realized variance is measured at a 5-minute observation frequency, so we chose those data (denoted “rv5” in the files) among the many time series in the Oxford-Man data. Our dataset thus consists of daily observations between the 3rd of January 2000 and the 3rd of September 2019. After filtering out non-positive variance estimates and removing the day of the Flash Crash (6th of May 2010), we were left with a total of 4935 observations. We should also remark that since we focus on the $\gamma$-parameter, the estimation methods all focus solely on the variance (or volatility) process; that is, we leave out a joint estimation that also includes the index price.

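Let us now start by assuming a mean-reverting variance model of the form

$$dV(t) = \kappa\big(\theta - V(t)\big)\,dt + \eta\,V(t)^{\gamma}\,dW_1(t), \tag{3}$$

with $\kappa, \theta > 0$ being additional parameters. To estimate the parameters we can apply an Euler discretisation and then use maximum likelihood. Let $V_i = V(t_i)$, $i = 0, 1, \ldots, N$, with $t_0 < t_1 < \cdots < t_N$ denoting the observation time points. We will, for simplicity, assume the time points are equidistant with step size $\Delta = t_i - t_{i-1} = 1/252$, i.e., one trading day with 252 trading days assumed per year. We then approximate (3) by

$$\Delta V_i = \kappa\big(\theta - V_{i-1}\big)\,\Delta + \eta\,V_{i-1}^{\gamma}\,\sqrt{\Delta}\,Z_i, \qquad Z_i \sim N(0,1) \text{ i.i.d.}, \tag{4}$$

for $i = 1, \ldots, N$, where we write $\Delta X_i = X(t_i) - X(t_{i-1})$ for a general process $X$. Under the approximative model (4), the joint density of $(V_1, \ldots, V_N)$ given $V_0$ is a product of conditional densities, which are all Gaussian. To obtain maximum approximate likelihood estimates we plug in the observed time series, numerically maximize the log of this joint density (the log-likelihood function) over the model parameters $(\kappa, \theta, \eta, \gamma)$, and use the Hessian matrix of the log-likelihood at the maximum to obtain standard errors.

Another model specification is to use a mean-reverting model for the volatility process $\sigma(t) = \sqrt{V(t)}$ instead,

$$d\sigma(t) = \kappa\big(\theta - \sigma(t)\big)\,dt + \eta\,\sigma(t)^{2\gamma - 1}\,dW_1(t), \tag{5}$$

where, with slight abuse of notation, we reuse the parameter names from (3). Ito’s formula applied to the square $V = \sigma^2$ of the solution of (5) reveals that while the mean-reverting volatility and variance models are not equivalent, as the functional forms of their drifts differ, we do have

$$d\langle V\rangle(t) = 4\,\sigma(t)^2\,d\langle \sigma\rangle(t) = 4\eta^2\,V(t)^{2\gamma}\,dt, \tag{6}$$

so that $V$ again has elasticity $\gamma$ in the sense of (2), and the maximum approximate likelihood technique applied to the volatility process gives us an alternative way to estimate the elasticity.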
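As a sketch of the maximum approximate likelihood step, the Python code below maximizes the Gaussian log-likelihood implied by the Euler scheme (4), assuming the variance model takes the form $dV = \kappa(\theta - V)\,dt + \eta V^{\gamma}\,dW_1$. The function names, the Nelder–Mead optimizer, the log-parametrization of $\eta$ and the starting values are our own assumptions rather than the authors’ implementation, and the Hessian-based standard errors are not computed here.

```python
import numpy as np
from scipy.optimize import minimize


def euler_neg_loglik(params, v, dt):
    """Negative Gaussian log-likelihood of the Euler scheme for the variance model."""
    kappa, theta, log_eta, gamma = params
    eta = np.exp(log_eta)                          # optimise log(eta) so eta stays positive
    v_prev, v_next = v[:-1], v[1:]
    mean = v_prev + kappa * (theta - v_prev) * dt  # conditional mean of V_i given V_{i-1}
    sd = eta * v_prev**gamma * np.sqrt(dt)         # conditional standard deviation
    resid = (v_next - mean) / sd
    n = resid.size
    return np.sum(np.log(sd)) + 0.5 * np.sum(resid**2) + 0.5 * n * np.log(2.0 * np.pi)


def fit_euler_mle(v, dt=1.0 / 252, start=(3.0, 0.03, -0.5, 0.8)):
    """Maximum approximate likelihood estimates of (kappa, theta, eta, gamma)."""
    v = np.asarray(v, dtype=float)                 # strictly positive daily variances
    res = minimize(euler_neg_loglik, np.asarray(start, dtype=float), args=(v, dt),
                   method="Nelder-Mead",
                   options={"maxiter": 20000, "xatol": 1e-8, "fatol": 1e-8})
    kappa, theta, log_eta, gamma = res.x
    return {"kappa": kappa, "theta": theta, "eta": float(np.exp(log_eta)), "gamma": gamma}
```

The same likelihood idea carries over to the volatility model (5) by replacing the conditional mean and standard deviation with those of the discretised volatility dynamics.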

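The final two estimators we will consider are based on the concept of quadratic variation. Following Proposition 4.21 of Le Gall (2016), the quadratic variation of a continuous semimartingale $X$ over the interval $[0, t]$, here denoted $\langle X\rangle_t$, can be characterised as follows: let $0 = t_0^n < t_1^n < \cdots < t_{p_n}^n = t$, for $n \ge 1$, be an increasing sequence of partitions of $[0, t]$ satisfying $\max_{1 \le i \le p_n}\big(t_i^n - t_{i-1}^n\big) \to 0$ as $n \to \infty$. Then

$$\langle X\rangle_t = \lim_{n \to \infty} \sum_{i=1}^{p_n}\big(X(t_i^n) - X(t_{i-1}^n)\big)^2, \tag{7}$$

with convergence in probability. For the variance $V$ in the general model (2) the quadratic variation is

$$\langle V\rangle_t = \int_0^t \eta^2\,V(s)^{2\gamma}\,ds. \tag{8}$$

Combining (7) and (8) leads to the approximation $(\Delta V_i)^2 \approx \eta^2\,V_{i-1}^{2\gamma}\,\Delta$, or in logarithmic terms

$$\log\big((\Delta V_i)^2\big) \approx \log\big(\eta^2 \Delta\big) + 2\gamma\,\log V_{i-1}. \tag{9}$$

This means we can estimate $\eta$ and $\gamma$ from a linear regression of the samples $\log\big((\Delta V_i)^2\big)$ on $\log V_{i-1}$. Applying the same reasoning to the squared increments of the volatility process under (5),

$$\log\big((\Delta \sigma_i)^2\big) \approx \log\big(\eta^2 \Delta\big) + (4\gamma - 2)\,\log \sigma_{i-1}, \tag{10}$$

gives a way to estimate $\eta$ and $\gamma$ by a linear regression.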
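The regression estimators are short enough to sketch directly. The hypothetical helper below (not the authors’ code) regresses log squared daily increments on log lagged levels with `numpy.polyfit`; the slope equals $2\gamma$ for the variance series and $4\gamma - 2$ for the volatility series when the volatility diffusion coefficient is $\eta\,\sigma^{2\gamma - 1}$ (our parametrization). Two further assumptions of ours: the drift is ignored, and the intercept is shifted by $E[\log Z^2] = -c_E - \log 2 \approx -1.27$ for $Z \sim N(0,1)$, with $c_E \approx 0.5772$ the Euler–Mascheroni constant, since otherwise the implied $\eta$ would be biased downwards.

```python
import numpy as np

# E[log Z^2] for Z ~ N(0, 1): -(Euler-Mascheroni constant) - log(2), about -1.2704
LOG_CHI2_MEAN = -np.euler_gamma - np.log(2.0)


def regression_elasticity(x, dt=1.0 / 252, volatility=False):
    """Estimate (gamma, eta) from log squared increments of a daily series.

    x: daily observations of V (volatility=False) or of sigma (volatility=True).
    """
    x = np.asarray(x, dtype=float)
    dx = np.diff(x)
    keep = dx != 0.0                      # log(0) is undefined
    y = np.log(dx[keep] ** 2)             # log((Delta X_i)^2)
    z = np.log(x[:-1][keep])              # log X_{i-1}
    slope, intercept = np.polyfit(z, y, 1)
    # variance: slope = 2 * gamma;  volatility: slope = 4 * gamma - 2
    gamma = (slope + 2.0) / 4.0 if volatility else slope / 2.0
    # intercept is approximately log(eta^2 * dt) + E[log Z^2]
    eta = np.sqrt(np.exp(intercept - LOG_CHI2_MEAN) / dt)
    return gamma, eta
```

Because the residual $\log Z_i^2$ is heavily skewed, ordinary least squares is unbiased for the slope here but not efficient; the slope (and hence $\gamma$) is insensitive to the intercept correction.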

The four estimators presented all suffer from several sources of bias: discretisation bias, small-sample bias, and possible model misspecification error (if the variance has a linear drift, then the volatility does not, and vice versa). Hence, to validate the estimators we conducted a simulation experiment, whose results, shown in Table 1, we shall now briefly describe. For the experiment we assumed either the mean-reverting volatility model or the mean-reverting variance model to be the true model, and simulated 1000 sample paths with 2520 steps per year across a total of 4935 days, thus mimicking the empirical set-up. Using only daily observations, we then applied each of the four methods to estimate $\gamma$ and $\eta$ and averaged the estimates across the paths. We performed this entire experiment for different true models and different values of $\gamma$. For a realistic set-up, the remaining parameters (with one exception under (3)) were chosen by running the relevant likelihood estimation on the Oxford-Man dataset with the elasticity held fixed at its true value. The experiment shows that both regression estimators are fairly robust under the two different models as well as across different elasticities. The same is true when using maximum approximate likelihood on Equation (5). For these three estimators, the bias when estimating $\gamma$ is mostly less than 0.05. Maximum approximate likelihood estimation on Equation (3) performed much worse, with a consistent downward bias of 0.1–0.2 depending on the true modeling assumptions and the elasticity used.
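The path simulation behind such an experiment can be sketched as follows, assuming an Euler scheme on the fine grid of 10 steps per trading day (2520 per year) for the variance model (3), written as $dV = \kappa(\theta - V)\,dt + \eta V^{\gamma}\,dW_1$, with only end-of-day values stored. The function name, the seed handling and the small positive floor applied to $V$ after each step are our own assumptions; the text does not say how positivity was handled.

```python
import numpy as np


def simulate_variance_paths(kappa, theta, eta, gamma, v0, n_days, n_paths,
                            steps_per_day=10, days_per_year=252, seed=None):
    """Euler simulation of the mean-reverting variance model.

    Returns an array of shape (n_paths, n_days + 1) with end-of-day variances.
    """
    rng = np.random.default_rng(seed)
    h = 1.0 / (days_per_year * steps_per_day)   # fine time step in years
    v = np.full(n_paths, float(v0))
    daily = np.empty((n_paths, n_days + 1))
    daily[:, 0] = v
    for day in range(1, n_days + 1):
        for _ in range(steps_per_day):
            z = rng.standard_normal(n_paths)
            v = v + kappa * (theta - v) * h + eta * v**gamma * np.sqrt(h) * z
            v = np.maximum(v, 1e-10)            # assumed floor to keep V positive
        daily[:, day] = v                       # keep only end-of-day observations
    return daily
```

Each row is one simulated daily time series of length $n_{\text{days}} + 1$ to which any of the four estimators can be applied; averaging the estimates across rows gives the bias figures of the kind reported in Table 1.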

In Table 2 we show estimates on the actual dataset using each of the four methods. We include the $R^2$ statistic for the regression methods and show standard errors in parentheses. There is a high correspondence between the elasticities estimated under all methods, except when using likelihood on Equation (3), which we just saw to be problematic. We found $\gamma$-values in the range 0.91–0.97. In this range the bias is still mostly less than 0.05 and the estimated standard errors are less than 0.02, so we are quite confident that the elasticity is in that range. The estimates in Table 2 also show that instantaneous variance is characterized by a combination of strong mean reversion and high volatility of volatility (both $\kappa$ and $\eta$ are high compared to many other sources), which, if one were to think briefly beyond the realm of diffusion models, could point towards so-called rough volatility models as suggested by Gatheral et al. (2018).

We end this section by looking into some of the problems with modeling volatility by a one-dimensional diffusion process. The first is the long-memory property of volatility, which has been widely documented; see, for example, Bennedsen et al. (2016). To have a precise discussion, let us introduce some notation: consider a covariance-stationary process $X$ and pick an arbitrary time point $t$. We then define the autocorrelation function of the $X$-process at lag $h$ as

$$\rho_X(h) = \frac{\operatorname{Cov}\big(X(t), X(t+h)\big)}{\operatorname{Var}\big(X(t)\big)}. \tag{11}$$

Several slightly different definitions of long memory can be found in the literature. As an example, in Bennedsen et al. (2016) the autocorrelation function of log-volatility is assumed to decay as $\rho_{\log\sigma}(h) \sim c_1\,h^{-\beta}$ for $h \to \infty$, where $c_1 > 0$ and $\beta > 0$ are constants. The volatility process is then said to have long memory exactly if $\sum_{h=1}^{\infty}\rho_{\log\sigma}(h) = \infty$, which happens when $\beta \in (0, 1]$. In our context, with daily observations, a reasonable test for long memory is therefore to see whether the sums

$$\sum_{h=1}^{n}\hat\rho_X(h), \qquad n = 1, 2, \ldots, \tag{12}$$

converge, where $\hat\rho_X$ denotes an empirical estimate of (11).
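The running sums in (12) are simple to compute. The sketch below uses the standard sample autocorrelation (full-sample mean, common denominator $\sum_t (x_t - \bar x)^2$), which is our choice of estimator for (11); the text does not specify which estimator was used, and the function names are hypothetical.

```python
import numpy as np


def empirical_acf(x, max_lag):
    """Sample autocorrelations rho_hat(1), ..., rho_hat(max_lag) of a 1-D series."""
    x = np.asarray(x, dtype=float)
    xc = x - x.mean()
    denom = np.dot(xc, xc)
    return np.array([np.dot(xc[:-h], xc[h:]) / denom for h in range(1, max_lag + 1)])


def acf_running_sums(x, max_lag):
    """Running sums: entry n-1 holds rho_hat(1) + ... + rho_hat(n)."""
    return np.cumsum(empirical_acf(x, max_lag))
```

As a sanity check, for an AR(1) process with coefficient $\phi \in (0, 1)$ the autocorrelations are $\phi^h$ and the sums converge to $\phi/(1 - \phi)$, whereas long memory in the above sense shows up as sums that keep growing with the lag.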

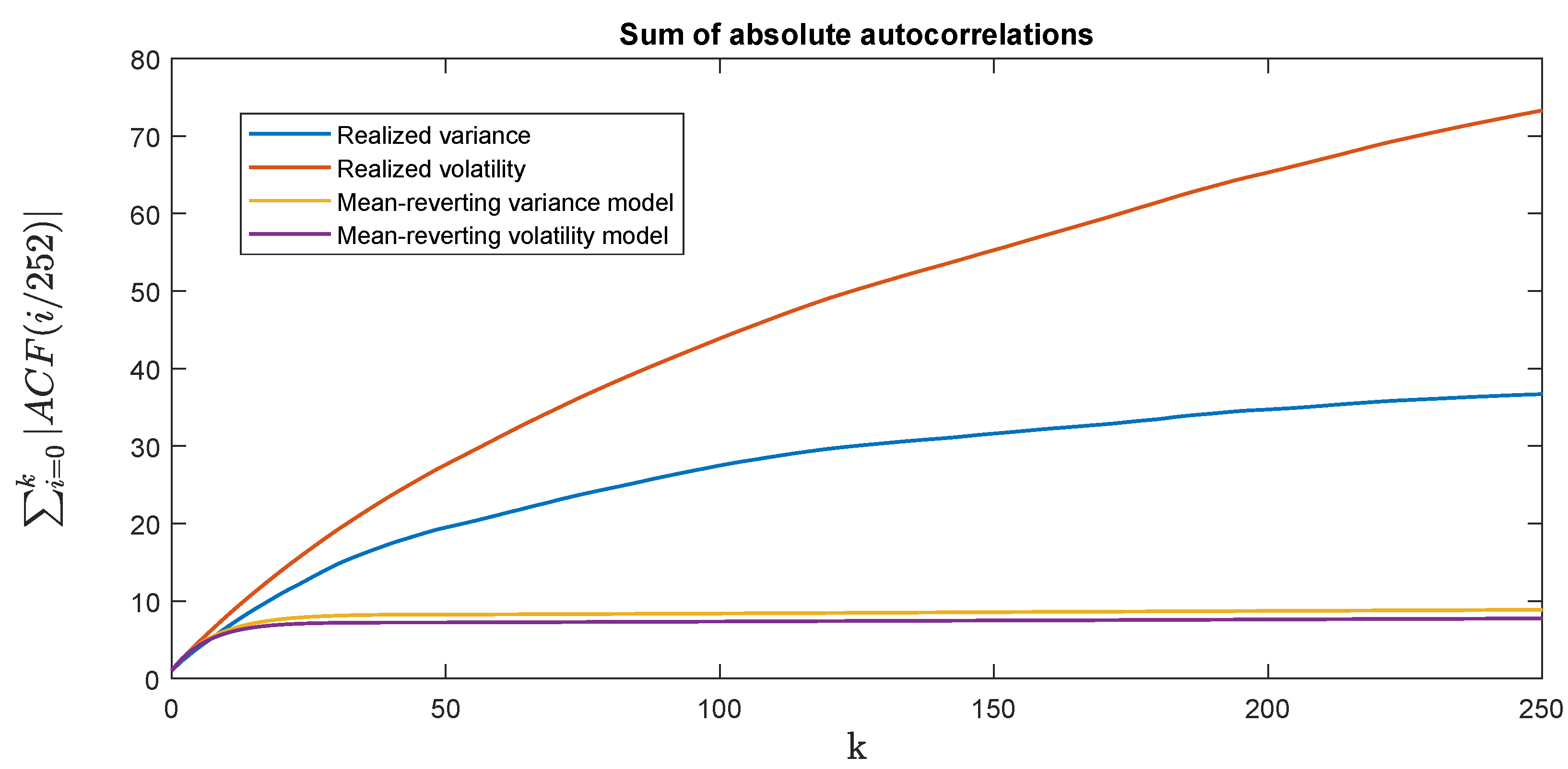

In Figure 1 we show the sums in (12) for lags up to around one year. Specifically, the blue and orange lines show the sums (12) for the realized variance and volatility, respectively, both obtained from the Oxford-Man dataset. The lines indicate that there is detectable autocorrelation even at horizons of up to one year (although it is most pronounced for realized volatility). This can then be compared to what our diffusion model specifications (3) and (5) are able to produce. To this end, we conducted a simulation experiment in which we simulated each model in the same way as in the bias experiment shown in Table 1, though this time using the estimated parameters from Table 2. For each simulated path we then computed the empirical autocorrelation function $\hat\rho_X(h)$ at various lags $h$. Repeating the simulations 10,000 times, averaging the autocorrelations, and computing the running sums in Equation (12), we get the yellow line (simulation of Equation (3)) and the purple line (simulation of Equation (5)). It is clear that while the simulated autocorrelations closely match the empirical ones for the first few lags, the fit is very poor at longer horizons, where “longer” in fact does not have to mean more than a few weeks.

To interpret the figure we once again cite Bennedsen et al. (2016), where it was assumed that the autocorrelation function of log-volatility behaves as $1 - \rho_{\log\sigma}(h) \sim c_2\,|h|^{2\alpha + 1}$ when $h \to 0$, where $c_2 > 0$ and $\alpha \in (-\tfrac{1}{2}, \tfrac{1}{2})$ are constants. Volatility is then said to be rough when $\alpha < 0$. That is, roughness is exactly related to how the autocorrelation function behaves for very short lags. With this knowledge, together with our high $\kappa$ and $\eta$ estimates, we interpret the good fits for short lags in Figure 1 as evidence that our one-factor diffusion models have attempted to mimic roughness. The model structure then does not allow us to simultaneously capture the memory in the process at medium and long lags.

An extension that would allow for both roughness and long memory would be to model $V$ (alternatively $\sigma$) as a mean-reverting stochastic Volterra equation (SVE). Specifically, one could model

$$V(t) = V(0) + \int_0^t K(t - s)\,\kappa\big(\theta - V(s)\big)\,ds + \int_0^t K(t - s)\,\eta\,V(s)^{\gamma}\,dW_1(s), \tag{13}$$

where $K$ is a kernel function that, if chosen appropriately, would allow $V$ to display both of these properties. Although an estimation of the more general Equation (13) would certainly be a worthwhile pursuit, we leave it as an open question whether or not this would change the $\gamma$-estimates. Two other examples (among several) of models that can capture long memory are Zumbach (2004) and Borland and Bouchaud (2011).

Another objection to our models is the lack of jumps in the asset price. However, as shown in Christensen et al. (2014) using high-frequency data on numerous different financial assets, the jump proportion of the total variation of the asset price is generally small compared to the volatility component, at least when market microstructure effects are properly accounted for. It is therefore quite possible that adding jumps to the asset price would only slightly change the $\gamma$-estimates. On the other hand, one could also consider jumps in the volatility process. Then again, with the current evidence in favor of rough volatility, one could hypothesize that most of the variation in the true volatility process can be explained by continuous but rough (i.e., highly irregular) movements rather than by jumps. As with the long-memory objections, we leave a proper analysis for future research.

3. Evidence from Option Prices

We now turn our attention to options, more specifically to the market for European call and put options on the S&P 500 index. In an ideal world, one would specify flexible parametric structures for the drift function in Equation (2) and for the market price of volatility risk, solve the associated pricing PDE, and then choose the parameter values that minimize the distance (suitably measured) between market data and model prices. However, genuinely efficient methods for option price calculations exist only for a few quite specific models. We shall restrict our interest to the two most common models: the square-root model of Heston (1993), which sparked a revolution in affine models and transform methods, and the SABR (stochastic-alpha-beta-rho) model of Hagan et al. (2002), which made stochastic volatility a household name in banks.

We used the End-of-Day Options Quote Data obtained from https://datashop.cboe.com. This dataset contains bid and ask quotes on SPX European options at 15:45 Eastern Time (ET) and again at the close of the market. We used the 15:45 quotes for liquidity reasons and applied a number of filters to (a) clean the data and (b) compute the zero-coupon bond and dividend yields implied by put-call parity (which are used in the subsequent analysis). Finally, we used smoothing and interpolation techniques on the mid quotes to obtain prices on a continuous set of strikes for each of the fixed expiries 1, 3, 6, 12, 18, and 30 months. The cleaned dataset contains observations on 3783 trading days between the 3rd of May 2004 and the 15th of May 2019.
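One common way to back out the implied yields for a single expiry $T$ is to use put-call parity, $C - P = S e^{-qT} - K e^{-rT}$, across strikes: regressing $C - P$ on $K$ gives slope $-e^{-rT}$ and intercept $S e^{-qT}$. The sketch below assumes this regression approach and continuously compounded yields; it is not a description of the authors’ actual filters, and the function name is hypothetical.

```python
import numpy as np


def implied_rates_from_parity(strikes, calls, puts, spot, T):
    """Estimate (r, q) for one expiry from put-call parity across strikes.

    C - P = spot * exp(-q T) - K * exp(-r T) is linear in K, so an OLS fit of
    C - P on K has slope -exp(-r T) and intercept spot * exp(-q T).
    """
    diff = np.asarray(calls, dtype=float) - np.asarray(puts, dtype=float)
    slope, intercept = np.polyfit(np.asarray(strikes, dtype=float), diff, 1)
    r = -np.log(-slope) / T              # slope = -discount factor
    q = -np.log(intercept / spot) / T    # intercept = dividend-discounted spot
    return r, q
```

With mid quotes the parity relation only holds approximately, which is one reason a least-squares fit over many strikes is preferable to using a single call-put pair.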

We will now briefly define the pricing models we use in our experiments. The models are stated under an equivalent risk-neutral probability measure $\mathbb{Q}$. Note that Girsanov’s theorem tells us that the elasticity parameter is unaffected by equivalent measure changes, since such changes only alter the drift and not the diffusion coefficient. Thus, there is no a priori conflict between $\gamma$-estimates obtained from time series observations of $V$ (“under $\mathbb{P}$”) in the previous section and the risk-neutral values affecting the option prices that we look at in this section. For simplicity we will abuse notation and write $W$ and $W_1$ to also denote two $\mathbb{Q}$-Brownian motions such that $d\langle W, W_1\rangle(t) = \rho\,dt$ for a $\rho \in [-1, 1]$. Denoting the interest rate by $r$ and the dividend yield by $q$, we then have

$$dS(t) = (r - q)\,S(t)\,dt + \sqrt{V(t)}\,S(t)\,dW(t).$$

Under the Heston model of Heston (1993), the variance process $V$ is modeled as a square-root diffusion of the form

$$dV(t) = \kappa\big(\theta - V(t)\big)\,dt + \eta\,\sqrt{V(t)}\,dW_1(t),$$

with $\kappa, \theta, \eta > 0$. The model thus has elasticity $\gamma = 1/2$, which (Feller condition issues notwithstanding) is equivalent to the volatility $\sigma = \sqrt{V}$ having the constant diffusion coefficient $\eta/2$. We compute option prices using numerical integration with the techniques from Lord and Kahl (2006). The SABR model of Hagan et al. (2002) (here with their $\beta = 1$) instead assumes that the volatility $\sigma(t) = \sqrt{V(t)}$ follows

$$d\sigma(t) = \nu\,\sigma(t)\,dW_1(t),$$

with $\nu > 0$. To compute option prices we use the approximation formula also found in Hagan et al. (2002). In elasticity terms, the log-normal SABR model has $\gamma = 1$. Finally, we consider a third, hybrid or mixture, model, where we assume that the price of any vanilla European option is the average of the prices under the Heston and SABR models. Specifying such a mixture model is exactly equivalent to specifying the marginal distributions of $S(t)$ for all $t \ge 0$, but nothing more. The mixture model allows us to incorporate a marginal distribution for $S(t)$ somewhere between those of Heston and SABR. The model is underspecified, as it does not contain information on the dynamical structure of the asset price $S$; see Piterbarg (2003) on mixture models and their pitfalls. However, because vanilla option prices under the mixture model are convex combinations of prices from the arbitrage-free Heston and SABR models, the mixture model is free from static arbitrage; it could thus at the very least be supported by a local volatility model for $S$.
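For reference, in the log-normal case ($\beta = 1$) the Hagan et al. (2002) approximation reduces to a short closed form, sketched below in Python. This is our transcription of the standard $\beta = 1$ specialisation, not code from the paper; `alpha` is Hagan et al.’s name for the current volatility $\sigma(0)$, `F` is the forward, and the function name is hypothetical. Under the mixture model, a price would then simply be the average of the Heston price and the Black price at this SABR volatility (Heston pricing is not sketched here).

```python
import numpy as np


def sabr_lognormal_vol(F, K, T, alpha, rho, nu):
    """Hagan et al. (2002) Black implied volatility for SABR with beta = 1.

    F: forward, K: strike, T: time to expiry, alpha: current volatility,
    rho: correlation with |rho| < 1, nu: volatility of volatility.
    """
    correction = 1.0 + (rho * nu * alpha / 4.0 + (2.0 - 3.0 * rho**2) * nu**2 / 24.0) * T
    z = (nu / alpha) * np.log(F / K)
    if abs(z) < 1e-12:
        return alpha * correction            # z / x(z) -> 1 at the money
    x = np.log((np.sqrt(1.0 - 2.0 * rho * z + z**2) + z - rho) / (1.0 - rho))
    return alpha * (z / x) * correction
```

With $\rho < 0$ the formula produces the familiar downward-sloping skew: low strikes get higher implied volatility than high strikes.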

As explained, our choice of pricing models has been restricted to those that are tractable. As a downside, each model has its own problems in terms of matching the empirical stylized facts of volatility. Some of these problems are related to the topic of this paper, i.e., what the elasticity of variance-of-variance should be. Specifically, in Section 2 we estimated $\gamma \in [0.91, 0.97]$, while Heston has $\gamma = 1/2$. Likewise, Tegnèr and Poulsen (2018) show that while a log-normal model fits the marginal distribution of volatility well, Heston does not. Given our topic, having differences in $\gamma$ is of course exactly as desired. In an ideal controlled experiment this would be the only difference between the models, but this is not quite the case: the Heston model has mean reversion and SABR does not. Furthermore, any inability to match empirical facts, even if shared by both models, could make other parts of the models and the results move in unexpected and hard-to-explain ways. As an example, both models suffer from the inability to match the entire term structure of autocorrelations (as shown in Section 2). With all of this taken together, it would be naive to think that we can reasonably fit and/or accurately model multiple expiries at once. In an attempt to mend some of these problems we therefore chose to model each expiry slice with a separate model. The hope is that this will help the models capture the temporal properties that are most important for modeling the particular expiry in question. Ideally this would also mean that the models differ approximately only in their elasticity. The reality is of course not so simple, but we will make our best attempt at analyzing the results despite these a priori objections.

3.1. Calibration and In-Sample Model Performance

In this subsection we perform a calibration experiment, where we calibrate the models separately on each expiry. We perform the calibration on a given expiry by minimizing the mean absolute error in Black–Scholes implied volatilities across a number $n$ of observed contracts. Let $\sigma_i^{\mathrm{mkt}}$ denote the implied volatility of the $i$’th contract as observed on the market, and let $\sigma_i^{\mathrm{mod}}(\Theta)$ denote the corresponding implied volatility for the model in question for a particular choice of parameters $\Theta$. We thus calibrate each model by minimizing

$$\frac{1}{n}\sum_{i=1}^{n}\big|\sigma_i^{\mathrm{mkt}} - \sigma_i^{\mathrm{mod}}(\Theta)\big|$$

with respect to the model parameters $\Theta$. On each day and for each expiry we aim to calibrate to 7 options: those with Black–Scholes call Deltas 0.05, 0.2, 0.3, 0.5, 0.7, 0.8, and 0.95.
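Calibrating to fixed Deltas requires mapping each Delta to a strike. A minimal sketch, assuming the Black–Scholes spot call Delta $e^{-qT}N(d_1)$ and, for simplicity, a single volatility $\sigma$ for all strikes (in practice the strike and its implied volatility have to be solved for jointly), inverts $d_1 = N^{-1}(\Delta e^{qT})$ to get $K = S\exp\big(-d_1\sigma\sqrt{T} + (r - q + \sigma^2/2)T\big)$. The function names and the Delta convention are our assumptions.

```python
import numpy as np
from scipy.stats import norm


def bs_call_delta(S, K, T, sigma, r=0.0, q=0.0):
    """Black-Scholes spot Delta of a call with continuous dividend yield q."""
    d1 = (np.log(S / K) + (r - q + 0.5 * sigma**2) * T) / (sigma * np.sqrt(T))
    return np.exp(-q * T) * norm.cdf(d1)


def strike_from_call_delta(delta, S, T, sigma, r=0.0, q=0.0):
    """Invert bs_call_delta for the strike at a given volatility."""
    d1 = norm.ppf(np.asarray(delta, dtype=float) * np.exp(q * T))  # needs delta * e^{qT} < 1
    return S * np.exp(-d1 * sigma * np.sqrt(T) + (r - q + 0.5 * sigma**2) * T)
```

Higher call Deltas map to lower strikes, so the seven Deltas above span from deep out-of-the-money calls (0.05) to deep in-the-money calls (0.95).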

When calibrating the Heston model to a single expiry slice it is difficult to separate the speed of mean-reversion parameter $\kappa$ from the volatility-of-volatility parameter $\eta$, as well as to separate the current instantaneous variance $V(0)$ from its long-term mean $\theta$; see also Guillaume and Schoutens (2010). Hence we use the following two-step procedure: first, optimize the fit to all implied volatilities (across all expiries) over $(\kappa, \theta, \eta, \rho, V(0))$; second, keep the $\kappa$-estimate from the first step fixed and then optimize each expiry slice separately under the constraint $\theta = V(0)$.

In the left-hand part of Table 3 we show average calibration errors in basis points (bps) as well as their standard deviations (in parentheses). The main observation is that, on average, SABR calibrates better for short expiries (less than 6 months) and Heston calibrates better for long expiries (more than one year). Specifically, the average error for the 1-month expiry is 28.7 bps for Heston and 11.2 bps for SABR, whereas for the 2.5-year expiry the average error is 14.2 bps for Heston and 23.6 bps for SABR.

13 To understand this result, remember (see

Romano and Touzi (

1997)) that call option prices are determined by the (risk-neutral) distribution of instantaneous variance integrated over the life-time of the option (

),

For short times to expiry (small

), the dominant feature in determining this distribution is the

-term in the dynamics of instantaneous volatility, while for longer times to expiry temporal dependence (such as mean-reversion) becomes more important. The empirical analysis in

Section 2 shows that the SABR model (with

captures the functional form of the variance of instantaneous variance better than the Heston model (that has

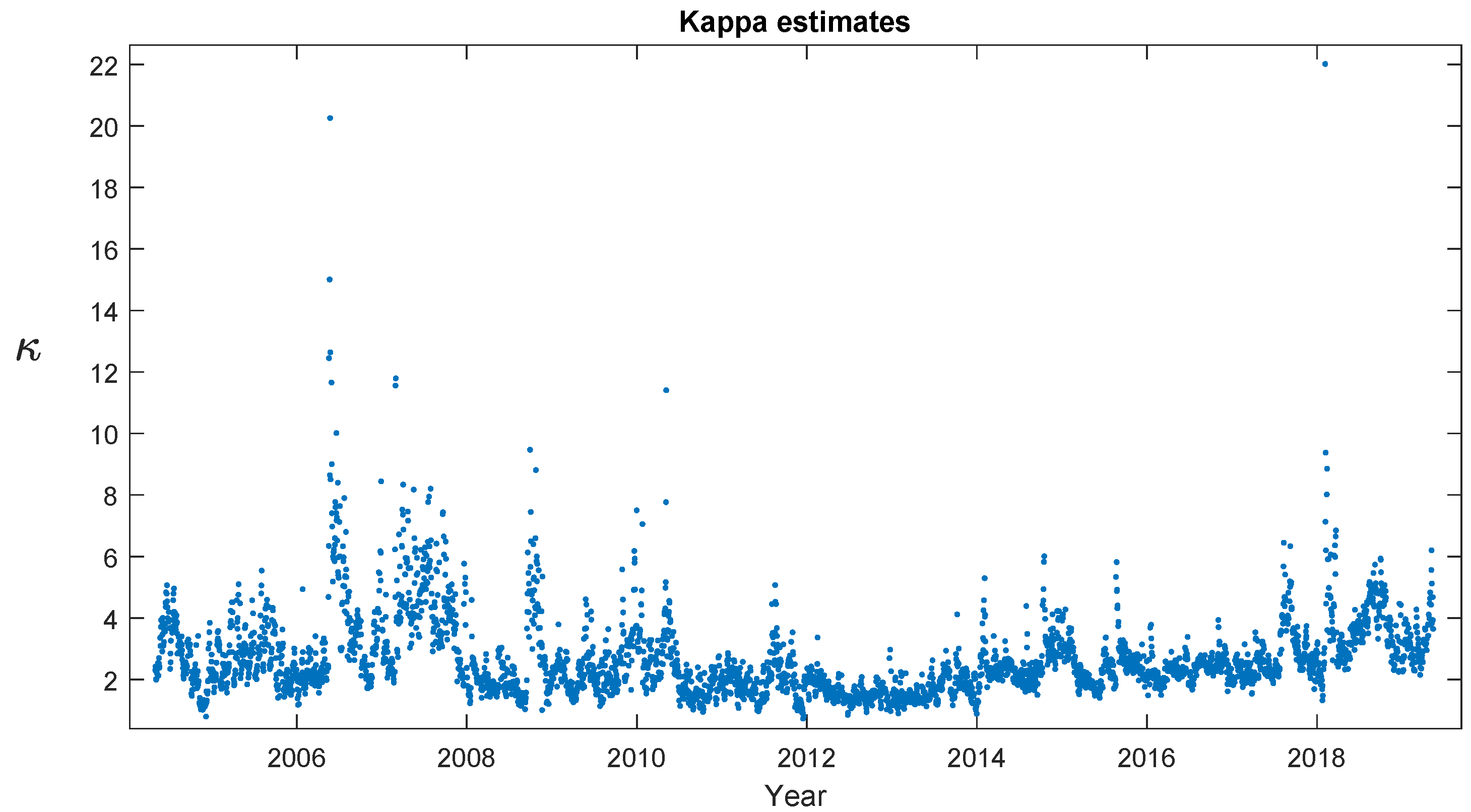

). On the other hand, that analysis also shows that instantaneous variance has a quite significant mean-reversion, which is something that the Heston model captures but the SABR model does not. As

Figure 2 shows, there is, however, a subtlety to this; the risk-neutral (

) speed of mean-reversion is considerably lower (2–4 typically) than the real-world (

) estimates (18.62 from

Table 2 is directly comparable).

It is widely documented in the literature that short-expiry at-the-money implied volatility is typically higher than realized volatility, i.e., long-term levels differ between $\mathbb{P}$ and $\mathbb{Q}$ ($\theta^{\mathbb{Q}} \neq \theta^{\mathbb{P}}$), which can be explained by investor risk aversion, as a stochastic volatility model is, in option pricing terms, incomplete. The effect of investor preferences on the speed of mean reversion is, however, less well documented. With this mean-reversion vs. elasticity reasoning in mind, a natural conjecture would be that a mixture model, a convex combination of SABR and Heston, would outperform both of these models, i.e., that there would be a benefit from model diversification. However, such an effect is far from evident in the data. With the 6-month horizon as the only exception, the errors from the mixture model fall between those of SABR and Heston, often right in the middle.

Average errors do not tell the whole story; the day-to-day variation of calibration errors (as measured by their standard deviations) is of the same order of magnitude as the averages. In the right-hand part of Table 3, we give paired comparisons of the models. On each day (and for all three combinations) we subtract one model’s calibration error from another model’s and report averages (which could be calculated from the left-hand part of the table) and standard deviations (which cannot). Let us first note that this shows that the differences in averages that we comment on are all highly statistically significant (so we have not cluttered the table with indications of this). However, we also note that the differences display a variation that is quite similar to that of the individual calibration errors themselves. Hence, when the average Heston error for 2.5-year options is 14.2 bps and that of SABR is 23.6 bps, it by no means implies that Heston calibrates around 10 bps better each and every day, as the standard deviation of the difference is 7.5 bps.

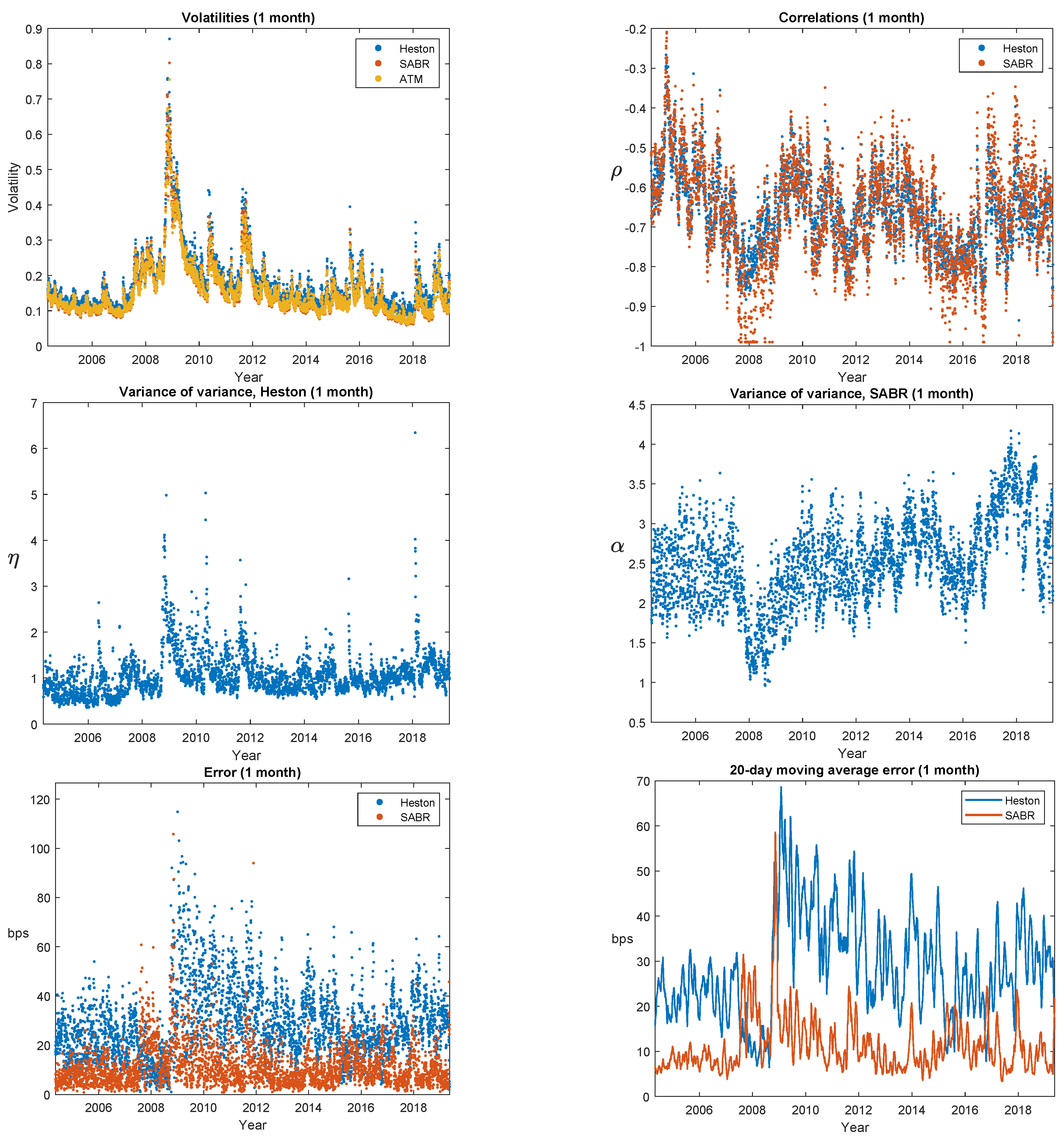

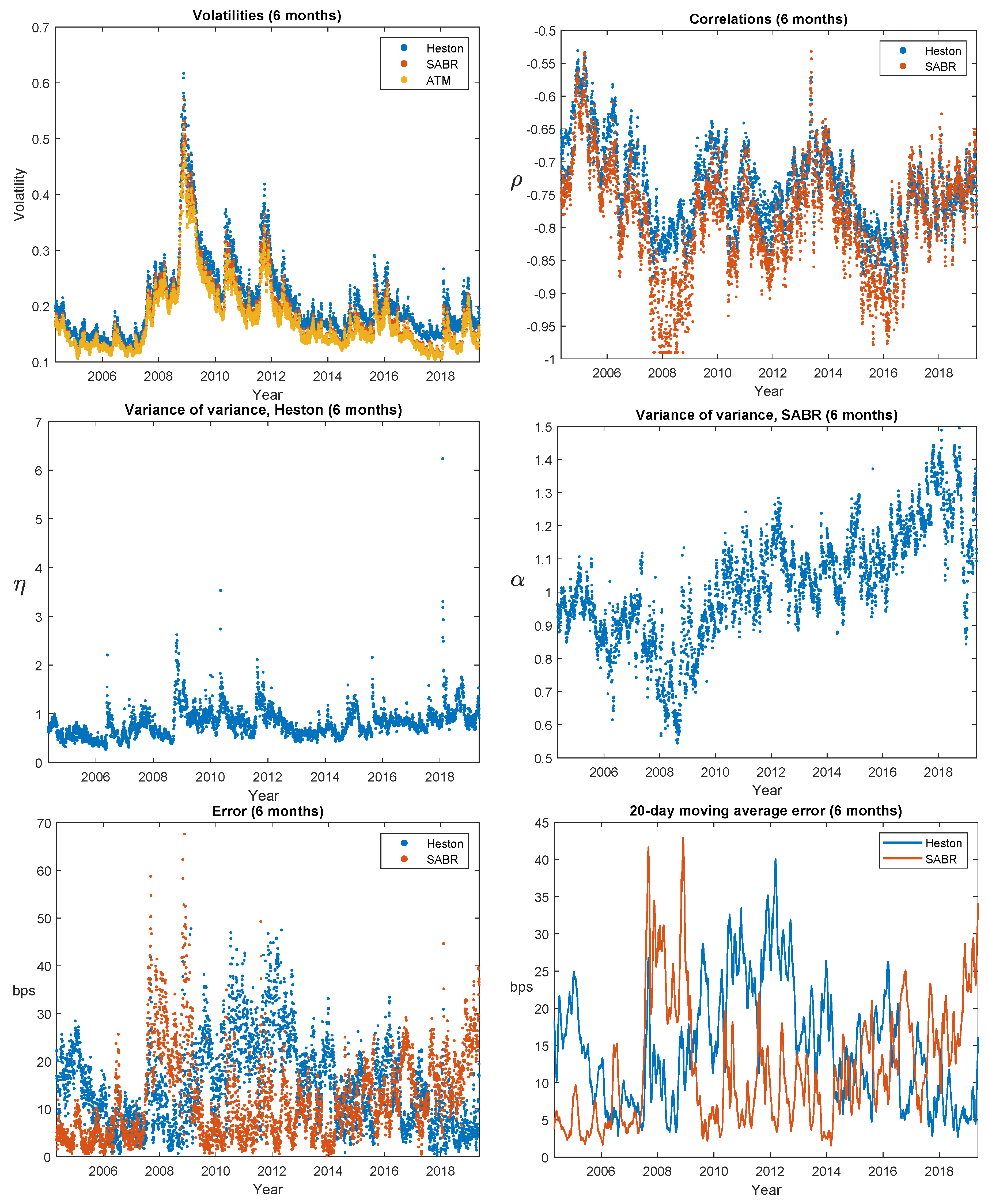

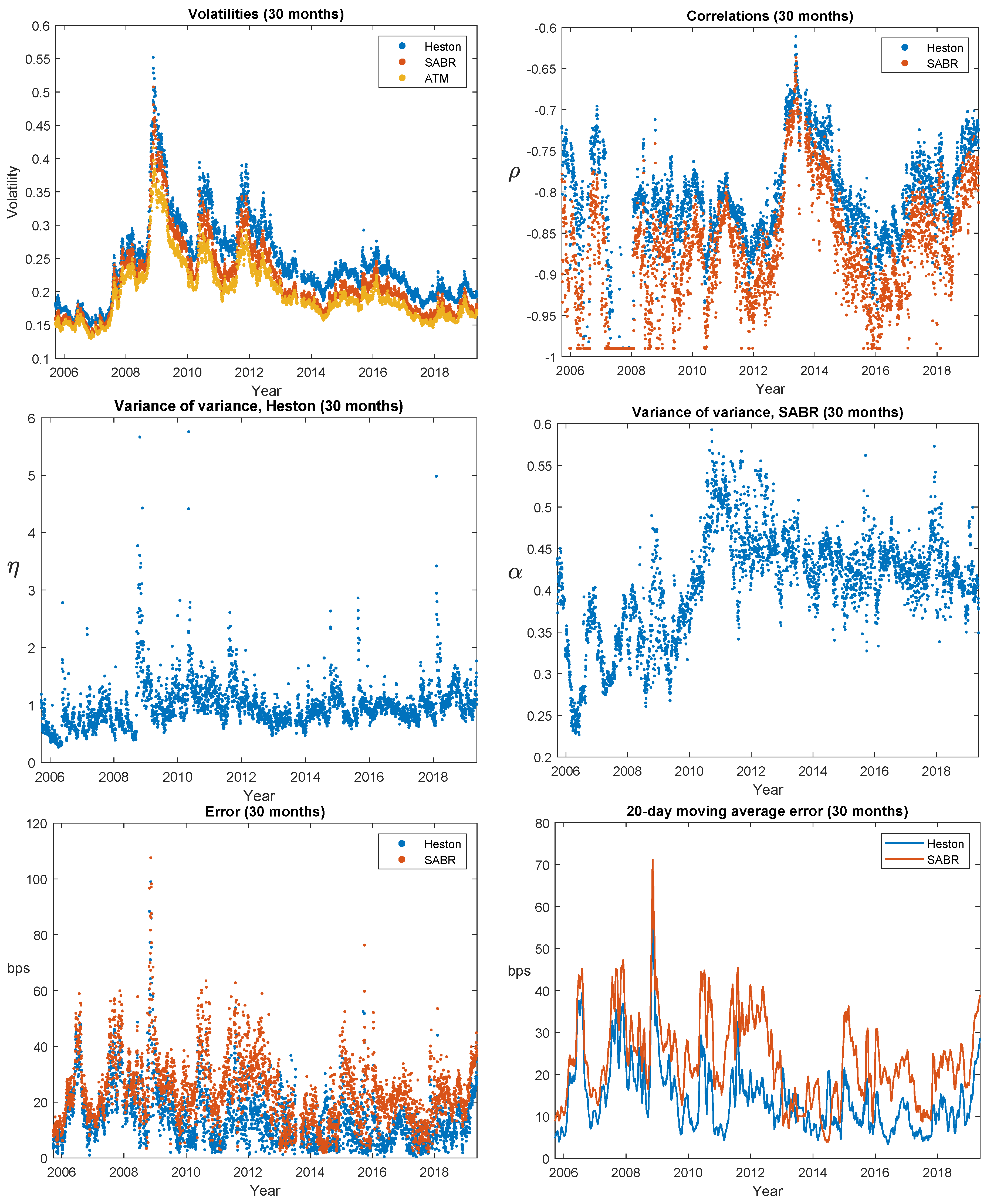

As a final in-sample investigation, let us look at the time evolution of the calibration errors and the model parameters. In Figure 3, Figure 4 and Figure 5 we show such results for 1-month, 6-month, and 2.5-year expiries, respectively. The calendar and expiry time variation that one would expect from the results in Table 3 is evident. Around the 2008–2009 financial crisis we see deterioration in model performance in various guises: Heston calibration errors for 1-month options more than double, and the model never really recovers; the crisis leads to extreme (negative) correlation for SABR. But overall, nothing off the scale happened. One thing that stands out visually is how strongly Heston’s variance-of-variance parameter $\eta$ (left-hand columns, 2nd panel) correlates with the level of volatility (left-hand columns, 1st panel); the average (across expiries) correlation is 0.53. This is consistent with our previous elasticity estimation: the Heston model’s elasticity of $\gamma = 1/2$ does not allow the variance of variance to react as strongly to changes in the variance level as empirically observed in time series ($\gamma$ around 0.91–0.97), and this manifests itself as changes in the $\eta$-estimate. For SABR, the (volatility, vol-of-vol) correlation is mildly negative (−0.28 on average), which is also consistent with that model slightly overstating the elasticity. A final note to make (based on the top left panel in Figure 3) is that even though implied at-the-money volatility converges to the instantaneous volatility $\sigma(t) = \sqrt{V(t)}$ as time to expiry goes to 0, a 1-month expiry is not sufficiently short for this asymptotic result to have kicked in.

3.2. Predictions and Hedging; Out-of-Sample Performance

Figure 3, Figure 4 and Figure 5 show that calibrated model parameters change both over calendar time and across expiries. A cynic would say, “Therefore they are not really parameters, and constant parameters are a crucial assumption in analytical work with the models, e.g., in the derivation of option price formulas. So: back to the drawing board”. Our defense against that argument is pragmatism, as formulated in the famous quote from the statistician George Box that “all models are wrong, but some are useful”. Thus we now investigate the practical usefulness of the SABR and Heston models. More specifically, we look at the quality of their predictions and at how helpful they are for constructing hedge portfolios, two aspects that are central to financial risk management. Both investigations are done in an out-of-sample fashion: the predictions or portfolios made at time $t$ use only information that is available at time $t$.

To study prediction quality, we conducted the following experiment: on each trading day we calibrated the parameters and the volatility. We then moved forward in time, updated observable market variables, such as the index price and yields, and recalibrated the volatility (which is allowed to change in the model), but not the parameters. We performed the experiment by moving 1 to 20 trading days ahead (the horizon) and considering all possible starting dates.
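For SABR, the “recalibrate the volatility but not the parameters” step can be sketched as a one-dimensional minimization of the mean absolute implied-volatility error over the current volatility only, with $\rho$ and $\nu$ held fixed. The code below assumes the $\beta = 1$ Hagan et al. approximation, a bounded scalar search via `scipy.optimize.minimize_scalar`, and the search interval `bounds`; the names and these choices are ours, not the paper’s.

```python
import numpy as np
from scipy.optimize import minimize_scalar


def sabr_vol(F, K, T, alpha, rho, nu):
    """Vectorised Hagan et al. (2002) implied volatility for SABR with beta = 1."""
    K = np.asarray(K, dtype=float)
    z = (nu / alpha) * np.log(F / K)
    atm = np.abs(z) < 1e-12
    zs = np.where(atm, 1e-12, z)                  # avoid 0/0 at the money
    x = np.log((np.sqrt(1.0 - 2.0 * rho * zs + zs**2) + zs - rho) / (1.0 - rho))
    ratio = np.where(atm, 1.0, zs / x)            # z / x(z) -> 1 at the money
    correction = 1.0 + (rho * nu * alpha / 4.0 + (2.0 - 3.0 * rho**2) * nu**2 / 24.0) * T
    return alpha * ratio * correction


def recalibrate_alpha(F, strikes, T, market_vols, rho, nu, bounds=(0.01, 2.0)):
    """Refit only the current volatility alpha; rho and nu stay at yesterday's values."""
    market_vols = np.asarray(market_vols, dtype=float)

    def mae(alpha):                               # mean absolute implied-vol error
        return np.mean(np.abs(sabr_vol(F, strikes, T, alpha, rho, nu) - market_vols))

    res = minimize_scalar(mae, bounds=bounds, method="bounded", options={"xatol": 1e-10})
    return float(res.x)
```

The prediction error at a given horizon is then the remaining mean absolute error after this one-parameter refit.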

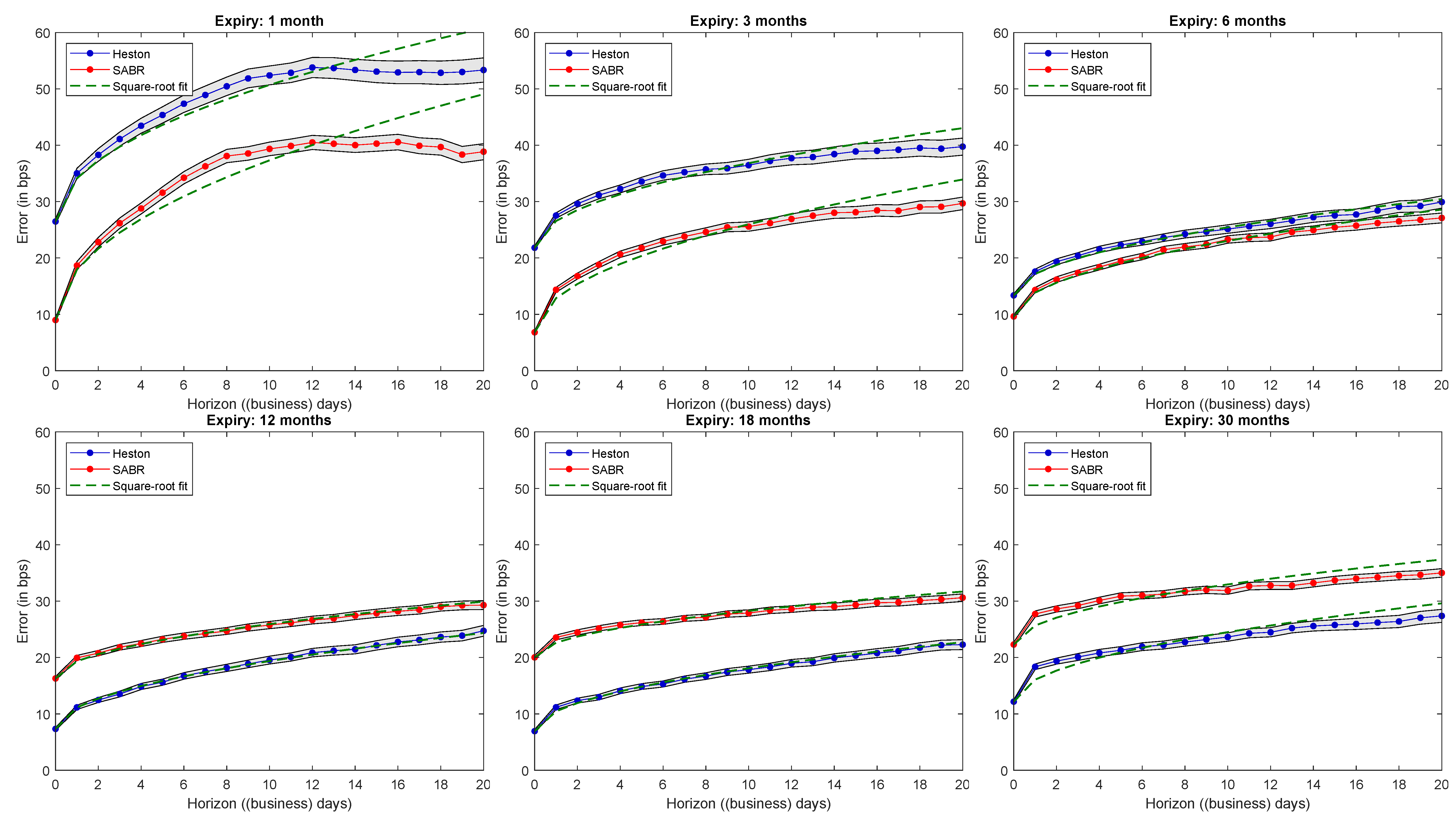

Figure 6 shows the results, more specifically average absolute errors at different horizons and for different expiries for the SABR and Heston models. The results are consistent with what we have observed so far: SABR works better for short expiries (where errors are generally larger), while Heston does better for longer expiries. Average absolute errors are well fitted by square-root functions of the horizon (which is how we would a priori expect standard-deviation-like quantities to grow with time), and the difference between the models is stable across horizons.
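A square-root fit of this kind can be sketched as a one-parameter least-squares fit $e(h) \approx c\sqrt{h}$ through the origin, whose solution is $c = \sum_h \sqrt{h}\,e(h) / \sum_h h$. Fitting without an intercept is our assumption about the functional form; the function name is hypothetical.

```python
import numpy as np


def fit_sqrt_growth(horizons, errors):
    """Least-squares fit of errors ~ c * sqrt(horizon) through the origin."""
    s = np.sqrt(np.asarray(horizons, dtype=float))
    e = np.asarray(errors, dtype=float)
    c = np.dot(s, e) / np.dot(s, s)   # closed-form OLS slope without intercept
    return c, c * s                   # coefficient and fitted values
```

Applied to average absolute errors at horizons 1 to 20 trading days, a good fit (small residuals) supports diffusive, standard-deviation-like error growth.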

Finally, we turn to the hedge performance of the models. We will attempt to hedge out-of-the-money options by trading appropriately in the underlying asset, the risk-free asset as well as an at-the-money option of the same type as the out-of-the-money option (i.e., call or put). We performed the analysis on out-of-the-money options with strikes corresponding to Black–Scholes Delta values of 0.1, 0.3, 0.7, and 0.9. Thus, if the Delta is below 0.5 we hedged a call option and otherwise hedged a put option.

The details of the experiment are as follows: on each day we sell the out-of-the-money option and form a portfolio that, according to the calibrated model, perfectly hedges this option. Let us write $h(t) = \big(h_0(t), h_S(t), h_A(t), h_O(t)\big)$ to denote the entire portfolio at time $t$, where $h_0(t)$ denotes the number of units in the risk-free asset, $h_S(t)$ the number of units in the underlying asset, $h_A(t)$ the number of units in the at-the-money option, and $h_O(t)$ the number of units in the out-of-the-money option. We set $h_O(t) = -1$, as mentioned. Let us also write $O(t)$ and $A(t)$ to denote the observed values of the out- and at-the-money option, respectively.

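For compactness of presentation, let us consider a general hedge model

$$dS(t) = (r - q)\,S(t)\,dt + \sqrt{V(t)}\,S(t)\,dW(t), \qquad dV(t) = a\big(V(t)\big)\,dt + b\big(V(t)\big)\,dW_1(t),$$

where $d\langle W, W_1\rangle(t) = \rho\,dt$ and where $a$ and $b$ are functions. We get Heston with $a(v) = \kappa(\theta - v)$, $b(v) = \eta\sqrt{v}$, and SABR with $V = \sigma^2$, $a(v) = \nu^2 v$ and $b(v) = 2\nu v$. We can write the price of the out-of-the-money option at time $t$ as $F\big(t, S(t), V(t); \Theta\big)$ for an appropriate function $F$ and a parameter vector $\Theta$ depending on model specifics; $\Theta = (\kappa, \theta, \eta, \rho)$ for Heston and $\Theta = (\nu, \rho)$ for SABR. Similarly, the price of the at-the-money option can be written as $G\big(t, S(t), V(t); \Theta\big)$ for an appropriate function $G$. To determine the perfectly replicating portfolio under the hedge model we start by computing our models’ Delta and Vega, which for the out-of-the-money option with function $F$ will be defined as $F_S = \partial F/\partial S$ and $F_V = \partial F/\partial V$, respectively.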

Our hedge will now consist of $h_A(t) = F_V/G_V$ units of the at-the-money option and $h_S(t) = F_S - h_A(t)\,G_S$ units of the underlying asset. The joint portfolio will then be kept self-financing with the risk-free asset. With these choices the associated value process will exactly be a function of the current state $\big(t, S(t), V(t)\big)$. Computing its dynamics using Ito’s formula and applying the principle of no arbitrage proves that $h$ is in fact a perfect hedge of the out-of-the-money option, assuming we are using the correct model.
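The hedge ratios above only need the partial derivatives of the two pricing functions. The sketch below approximates them by central finite differences for arbitrary pricing callables `F(t, s, v)` and `G(t, s, v)`; the step sizes, the relative spot bump and the function name are our assumptions, and the risk-free holding $h_0$ is left to the self-financing condition.

```python
def hedge_ratios(F, G, t, s, v, rel_ds=1e-4, dv=1e-6):
    """Vega-Delta hedge of a short F-option with a G-option and the underlying.

    F, G: callables (t, s, v) -> price, with v the instantaneous variance.
    Returns (units of the G-option, units of the underlying).
    """
    ds = rel_ds * s                                     # spot bump relative to the level
    f_s = (F(t, s + ds, v) - F(t, s - ds, v)) / (2.0 * ds)   # Delta of F
    f_v = (F(t, s, v + dv) - F(t, s, v - dv)) / (2.0 * dv)   # Vega of F (w.r.t. V)
    g_s = (G(t, s + ds, v) - G(t, s - ds, v)) / (2.0 * ds)
    g_v = (G(t, s, v + dv) - G(t, s, v - dv)) / (2.0 * dv)
    h_atm = f_v / g_v                 # neutralise Vega with the at-the-money option
    h_s = f_s - h_atm * g_s           # neutralise the remaining Delta with the underlying
    return h_atm, h_s
```

Using a variance bump `dv` matches the convention that Vega is taken with respect to $V$ rather than $\sigma$; for SABR one could equivalently bump $\sigma$ and apply the chain rule.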

For the mixture model the whole problem of finding a perfectly replicating portfolio is ill-defined, since the dynamical structure is unspecified. We therefore instead test a mixed portfolio that is the average of the portfolios under Heston and SABR. While this portfolio may not perfectly replicate the option it will be a valid self-financing strategy, which we can compare to the pure Heston and SABR strategies.

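Consider now discrete hedging between two trading days $t_i$ and $t_{i+1}$, with $t_{i+1} - t_i = \Delta = 1/252$ assumed for simplicity. Letting $\Pi(t)$ denote the actual value of the portfolio at time $t$, we record (with a few discrete approximations) the change in the value process from $t_i$ to $t_{i+1}$ as

$$\Delta\Pi_{i+1} = h_0(t_i)\big(B(t_{i+1}) - B(t_i)\big) + h_S(t_i)\big(S(t_{i+1}) - S(t_i) + q\,S(t_i)\,\Delta\big) + h_A(t_i)\big(A(t_{i+1}) - A(t_i)\big) - \big(O(t_{i+1}) - O(t_i)\big),$$

where $B$ is the value of the risk-free asset. We then define the (relative and discounted) hedge error $\epsilon_{i+1}$ from $\Delta\Pi_{i+1}$ and summarize the performance of each model by taking the standard deviation of $\epsilon_{i+1}$ across all trading days.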

In Table 4 we show the results across expiries, moneyness, and models. We first note that the more out-of-the-money the target option is, the more difficult it is to hedge; this is not surprising, particularly because the at-the-money option is one of the hedge instruments. Short-expiry options are more difficult to hedge; above 6 months’ expiry, standard deviations are quite stable. For low-strike options (i.e., out-of-the-money puts) the differences between models are small: at most 0.5 percentage points (the 1-month, lowest-strike case), and none of the differences are statistically significant at the 5% level. But there is an asymmetry: for high strikes (i.e., out-of-the-money calls) differences in hedge errors are statistically significant across models; SABR outperforms Heston for expiries of three months and below, but is beaten by Heston for expiries above one year, albeit by a smaller absolute margin. We also see that, except in a single statistically insignificant case (highest strike, 6-month expiry), the mixture strategy is dominated in terms of hedge performance by either Heston or SABR, i.e., there are no benefits from model “averaging” or “diversification”. Results (not reported) are qualitatively similar in a hedge experiment where Vega hedging is done only weekly.